Abstract

The primal-dual optimization algorithm developed in Chambolle and Pock (CP), 2011 is applied to various convex optimization problems of interest in computed tomography (CT) image reconstruction. This algorithm allows for rapid prototyping of optimization problems for the purpose of designing iterative image reconstruction algorithms for CT. The primal-dual algorithm is briefly summarized in the article, and its potential for prototyping is demonstrated by explicitly deriving CP algorithm instances for many optimization problems relevant to CT. An example application modeling breast CT with low-intensity X-ray illumination is presented.

I. Introduction

Optimization-based image reconstruction algorithms for CT have been investigated heavily in recent years due to their potential to allow for reduced scanning effort while maintaining or improving image quality [1], [2]. Such methods have been considered for many years, but within the past five years computational barriers have been lowered enough that iterative image reconstruction can be considered for practical application in CT [3]. The transition to practice has been taking place alongside further theoretical development, particularly with algorithms based on the sparsity-motivated ℓ1-norm [4], [5], [6], [7], [8], [9], [10], [11], [12]. Despite the recent interest in sparsity, optimization-based image reconstruction algorithm development continues to proceed along many fronts, and there is as yet no consensus on a particular optimization problem for the CT system. In fact, it is beginning to look like the optimization problems upon which the iterative image reconstruction algorithms are based will themselves be subject to design, depending on the particular properties of each scanner type and imaging task.

The possibility of tailoring optimization problems to a class of CT scanners makes the design of iterative image reconstruction algorithms a daunting task. Optimization formulations generally construct an objective function composed of a data fidelity term and possibly penalty terms discouraging unphysical behavior in the reconstructed image, and they may include hard constraints on the image. The image estimate is arrived at by extremizing the objective subject to any constraints placed on the estimate. The optimization problems for image reconstruction can take many forms depending on image representation, projection model, and objective and constraint design. On top of this, many of the optimization problems of interest are difficult to solve. A change in optimization problem formulation can mean many weeks or months of algorithm development to account for the modification.

Due to this complexity, it would be quite desirable to have an algorithmic tool to facilitate the design of optimization problems for CT image reconstruction. This tool would consist of a well-defined set of mechanical steps that generate a convergent algorithm from a specific optimization problem for CT image reconstruction. The goal of this tool would be to allow for rapid prototyping of various optimization formulations; one could design the optimization problem free of any restrictions imposed by the lack of an algorithm to solve it. The resulting algorithm might not be the most efficient solver for the particular optimization problem, but it would be guaranteed to converge to the solution.

In this article we consider convex optimization problems for CT image reconstruction, including non-smooth objectives and both unconstrained and constrained formulations. One general algorithmic tool is steepest descent or projected steepest descent [13]. Such algorithms, however, do not address non-smooth objective functions, and they have difficulty with constrained optimization, being applicable only to simple constraints such as non-negativity. Another general strategy involves some form of evolving quadratic approximation to the objective. The literature on this flavor of algorithm design is enormous, including non-linear conjugate gradient methods [13], parabolic surrogates [10], [14], and iteratively reweighted least-squares [15]. For the CT system, these strategies often require quite a bit of know-how due to the very large scale and ill-posedness of the imaging model. Once the optimization formulation is established, however, these quadratic methods provide a good option to gain in efficiency.

One of the main barriers to prototyping alternative optimization problems for CT image reconstruction is the size of the imaging model; volumes can contain millions of voxels, and the sinogram data can correspondingly consist of millions of X-ray transmission measurements. For large-scale systems there has been a resurgence of first-order methods [16], [17], [18], [19], [20], [21], and recently there have been applications of first-order methods specifically to optimization-based image reconstruction in CT [21], [22], [23]. These methods are interesting because they can be adapted to a wide range of optimization problems involving non-smooth functions, such as those involving ℓ1-based norms. In particular, the algorithm that we pursue further in this paper is a first-order primal-dual algorithm for convex problems by Chambolle and Pock [20]. This algorithm goes a long way toward the goal of optimization problem prototyping, because it covers a very general class of optimization problems that contains many optimization formulations of interest to the CT community.

For a selection of optimization problems of relevance to CT image reconstruction, we work through the details of setting up the Chambolle-Pock algorithm. We refer to these dedicated algorithms as algorithm instances. Our numerical results demonstrate that the algorithm instances achieve the solution of difficult convex optimization problems under challenging conditions in reasonable time and without parameter tuning. In Sec. II the CP methodology and algorithm are summarized; in Sec. III various optimization problems for CT image reconstruction are presented along with their corresponding CP algorithm instances; and Sec. IV shows a limited study on a breast CT simulation that demonstrates the application of the derived CP algorithm instances.

II. Summary of the generic Chambolle-Pock algorithm

The Chambolle-Pock (CP) algorithm [20] is primal-dual, meaning that it solves an optimization problem simultaneously with its dual. On the face of it, solving two problems instead of one would seem to involve extra work, but the algorithm comes with convergence guarantees, and solving both problems provides a robust, non-heuristic convergence check: the duality gap.

The CP algorithm applies to a general form of the primal minimization:

$$\min_{x \in X} \left\{ F(Kx) + G(x) \right\} \tag{1}$$

and a dual maximization:

$$\max_{y \in Y} \left\{ -F^*(y) - G^*(-K^T y) \right\} \tag{2}$$

where x and y are finite-dimensional vectors in the spaces X and Y, respectively; K is a linear transform from X to Y; G and F are convex, possibly non-smooth, functions mapping the respective X and Y spaces to non-negative real numbers; and the superscript “*” in the dual maximization problem refers to convex conjugation, defined in Eqs. (3) and (4). We note that the matrix K need not be square; X and Y will in general have different dimensions. Given a convex function H of a vector z ∈ Z, its conjugate can be computed by the Legendre transform [24], and the original function can be recovered by applying conjugation again:

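$$H^*(z) = \max_{z'} \left\{ \langle z, z' \rangle_Z - H(z') \right\} \tag{3}$$

$$H(z') = H^{**}(z') = \max_{z} \left\{ \langle z', z \rangle_Z - H^*(z) \right\} \tag{4}$$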

The notation 〈·, ·〉Z refers to the inner product in the vector space Z.

Formally, the primal and dual problems are connected in a generic saddle point optimization problem:

$$\min_{x} \max_{y} \left\{ \langle Kx, y \rangle_Y + G(x) - F^*(y) \right\} \tag{5}$$

By performing the maximization over y in Eq. (5), using Eq. (4) with Kx in the role of z′ and y in the role of z, the primal minimization Eq. (1) is derived. Similarly, performing the minimization over x in Eq. (5), using Eq. (3) and the identity 〈Kx, y〉 = 〈x, KT y〉, yields the dual maximization Eq. (2), where the T superscript denotes matrix transposition.

The minimization problem in Eq. (1), though compact, covers many minimization problems of interest to tomographic image reconstruction. Solving the dual problem, Eq. (2), simultaneously allows for assessment of algorithm convergence. For intermediate estimates x and y of the primal minimization and the dual maximization, respectively, the primal objective will be greater than or equal to the dual objective. The difference between these objectives is referred to as the duality gap, and convergence is achieved when this gap is zero. Plenty of examples of useful optimization problems for tomographic image reconstruction will be described in detail in Sec. III, but first we summarize Algorithm 1 from Ref. [20].

Algorithm 1.

Pseudocode for N-steps of the basic Chambolle-Pock algorithm. The constant L is the ℓ2-norm of the matrix K; τ and σ are positive CP algorithm parameters, which are both set to 1/L in the present application; θ ∈ [0, 1] is another CP algorithm parameter, which is set to 1; and n is the iteration index. The proximal operators proxσ and proxτ are defined in Eq. (6).

1: L ← ‖K‖₂; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0

2: initialize x₀ and y₀ to zero values

3: x̄₀ ← x₀

4: repeat

5: yₙ₊₁ ← proxσ[F*](yₙ + σKx̄ₙ)

6: xₙ₊₁ ← proxτ[G](xₙ − τKᵀyₙ₊₁)

7: x̄ₙ₊₁ ← xₙ₊₁ + θ(xₙ₊₁ − xₙ)

8: n ← n + 1

9: until n ≥ N
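Algorithm 1 translates almost line for line into code. The following is a minimal NumPy sketch, not the authors' implementation; the function name `chambolle_pock` and its callable-based interface are our own assumptions. K and its transpose are passed as functions so that matrix-free projectors could be plugged in. The demo uses a small random least-squares problem whose proximal mappings are derived in Sec. III-A.

```python
import numpy as np


def chambolle_pock(K, KT, prox_sigma_Fstar, prox_tau_G, x0, y0, L, n_iter,
                   theta=1.0):
    """Generic CP iteration (Algorithm 1) with sigma = tau = 1/L.

    K, KT: callables applying the linear map and its exact transpose.
    prox_sigma_Fstar: callable (y, sigma) returning prox_sigma[F*](y).
    prox_tau_G: callable (x, tau) returning prox_tau[G](x).
    """
    sigma = tau = 1.0 / L
    x, y = x0.copy(), y0.copy()
    x_bar = x.copy()                                       # line 3
    for _ in range(n_iter):
        y = prox_sigma_Fstar(y + sigma * K(x_bar), sigma)  # line 5: dual step
        x_new = prox_tau_G(x - tau * KT(y), tau)           # line 6: primal step
        x_bar = x_new + theta * (x_new - x)                # line 7: extrapolation
        x = x_new
    return x, y


# Demo with hypothetical data: F(y) = 0.5||y - g||^2 and G = 0 (least squares).
rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))
g = rng.standard_normal(30)
L = np.linalg.norm(A, 2)  # largest singular value of A
u, p = chambolle_pock(
    lambda x: A @ x,
    lambda y: A.T @ y,
    lambda y, s: (y - s * g) / (1.0 + s),  # prox of F*(p) = 0.5||p||^2 + <p, g>
    lambda x, t: x,                        # prox of G = 0 is the identity
    np.zeros(10), np.zeros(30), L, 5000)
```

At convergence u should agree with a direct least-squares solve, and the dual variable should settle at p = Au − g.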

A. Chambolle-Pock: Algorithm 1

The CP algorithm simultaneously solves Eqs. (1) and (2). As presented in Ref. [20], the algorithm is simple, yet extremely effective. We repeat the steps here in Listing 1 for completeness, providing the parameters that we use for all results shown below. The parameter descriptions are provided in Ref. [20], but note that with the settings specified above there are no free parameters. This is an extremely important feature for our purpose of optimization prototyping. One caveat is that, technically, the proof of convergence for the CP algorithm assumes L²στ < 1, whereas the choice σ = τ = 1/L gives L²στ = 1; in practice, however, we have never encountered a case where this choice failed to converge. We stress that in line 6 of Listing 1 the matrix KT needs to be the exact transpose of the matrix K. This point can sometimes be confusing because K for imaging applications is often intended to be an approximation to some continuous operator, such as projection or differentiation, and KT is often taken to mean an approximation to the continuous operator’s adjoint, which may or may not be the matrix transpose of K. The constant L is the ℓ2 operator norm of K, i.e., its largest singular value. Appendix A gives the details on computing L via the power method. Key to deriving the particular algorithm instances are the proximal mappings proxσ[F*] and proxτ[G] (called resolvent operators in Ref. [20]).
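As a hedged illustration of the power method referred to above (our own sketch with a hypothetical helper name, not the code of Appendix A), the estimate repeatedly applies KᵀK to a unit vector. The norm of the result approaches the largest eigenvalue of KᵀK, which is L². The test matrix is built with known singular values 5, 3, and 1, so the expected answer is 5.

```python
import numpy as np


def power_method_norm(K, KT, x0, n_iter=200):
    """Estimate ||K||_2 (largest singular value) by power iteration on K^T K."""
    x = x0 / np.linalg.norm(x0)
    s = 0.0
    for _ in range(n_iter):
        y = KT(K(x))                     # apply K^T K to the current unit vector
        s = np.sqrt(np.linalg.norm(y))   # ||K^T K x|| tends to sigma_max^2
        x = y / np.linalg.norm(y)
    return s


# Hypothetical test matrix with singular values 5, 3, 1.
rng = np.random.default_rng(1)
U, _ = np.linalg.qr(rng.standard_normal((6, 3)))
V, _ = np.linalg.qr(rng.standard_normal((3, 3)))
A = U @ np.diag([5.0, 3.0, 1.0]) @ V.T
L_est = power_method_norm(lambda x: A @ x, lambda y: A.T @ y, np.ones(3))
```

Only products with K and Kᵀ are needed, which is why the same routine applies to on-the-fly projectors and to stacked operators such as (A, ∇).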

The proximal mapping is used to generate a descent direction for the convex function H and it is obtained by the following minimization:

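$$\mathrm{prox}_\sigma[H](z) = \arg\min_{z'} \left\{ H(z') + \frac{\|z - z'\|_2^2}{2\sigma} \right\} \tag{6}$$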

This operation does admit non-smooth convex functions, but H does need to be simple enough that the above minimization can be solved in closed form. For CT applications the ability to handle non-smooth F and G allows the study of many optimization problems of recent interest, and the simplicity limitation is not that restrictive as will be seen.

B. The CP algorithm for prototyping of convex optimization problems

To prototype a particular convex optimization problem for CT image reconstruction with the CP algorithm, there are five basic steps:

1. Map the optimization problem to the generic minimization problem in Eq. (1).

2. Derive the dual maximization problem, Eq. (2), by computing the convex conjugates of F and G using the Legendre transform Eq. (3).

3. Derive the proximal mappings of F* and G using Eq. (6).

4. Substitute the results of step 3 into the generic CP algorithm in Listing 1 to obtain a CP algorithm instance.

5. Run the algorithm, monitoring the primal-dual gap for convergence.

As will be seen below, a great variety of constrained and unconstrained optimization problems can be written in the form of Eq. (1). Specifically, using the algebra of convex functions [24], namely that the sum of two convex functions is convex and that the composition of a convex function with a linear transform is convex, many interesting optimization formulations can be put in this form. We will also make use of convex functions that are not smooth, notably ℓ1-based norms and indicator functions δS(x):

$$\delta_S(x) = \begin{cases} 0 & x \in S \\ \infty & x \notin S \end{cases} \tag{7}$$

where S is a convex set. The indicator function is particularly handy for imposing constraints. In computing the convex conjugate and proximal mapping of convex functions, we make much use of the standard calculus rule for extremization, ∇f = 0, but such computations are augmented also with geometric reasoning, which may be unfamiliar. Accordingly, we have included appendices to show some of these computation steps. With this quick introduction, we are now in a position to derive various algorithm instances for CT image reconstruction from different convex optimization problems.

III. Chambolle-Pock algorithm instances for CT

For this article, we only consider optimization problems involving the linear imaging model for X-ray projection, where the data are considered as line integrals over the object’s X-ray attenuation coefficient. Generically, maintaining consistent notation with Ref. [20], the discrete-to-discrete CT system model [25] can be written as:

$$g = A u \tag{8}$$

where A is the projection matrix taking an object represented by expansion coefficients u and generating a set of line-integration values g. This model covers a multitude of expansion functions and CT configurations, including both 2D fan-beam and 3D cone-beam projection data models.

A few notes on notation are in order. In the following, we largely avoid indexing of the various vector spaces so that the equations and pseudocode listings remain brief and clear. Any of the standard algebraic operations between vectors is to be interpreted in a component-wise manner unless explicitly stated. Also, an algebraic operation between a scalar and a vector is to be distributed among all components of the vector; e.g., 1 + v adds one to all components of v. For the optimization problems below, we employ three vector spaces: I, the space of discrete images in either 2 or 3 dimensions; D, the space of CT sinograms (or projection data); and V, the space of spatial-vector-valued image arrays, V = Iᵈ, where d = 2 or 3 for 2D and 3D space, respectively. For the CT system model Eq. (8), u ∈ I and g ∈ D, but we note that the space D can also include sinograms that are not consistent with the linear system matrix A. The vector space V will be used below for forming the total variation (TV) semi-norm; an example of such a vector v ∈ V is the spatial gradient of an image u. Although the pixel representation is used, much of the following can be applied to other image expansion functions. As we will be making much use of certain indicator functions, we define two important sets, Box(a) and Ball(a), through their indicator functions:

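$$\delta_{\mathrm{Box}(a)}(x) = \begin{cases} 0 & \|x\|_\infty \le a \\ \infty & \|x\|_\infty > a \end{cases} \tag{9}$$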

and

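$$\delta_{\mathrm{Ball}(a)}(x) = \begin{cases} 0 & \|x\|_2 \le a \\ \infty & \|x\|_2 > a \end{cases} \tag{10}$$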

Recall that the ‖ · ‖∞ norm selects the largest component magnitude of the argument; thus Box(a) comprises vectors with no component larger than a in magnitude (in 2D, Box(a) is a square centered on the origin with width 2a). We also employ 0X and 1X to mean a vector from the space X with all components set to 0 and 1, respectively.
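To make the two sets concrete, here is a tiny sketch (the function names are hypothetical) that evaluates the indicator functions of Eqs. (9) and (10). The example vector (0.8, 0.8) lies in Box(1) but not in Ball(1).

```python
import numpy as np


def indicator_box(x, a):
    """delta_Box(a)(x): 0 if no component exceeds a in magnitude, else +inf."""
    return 0.0 if np.max(np.abs(x)) <= a else np.inf


def indicator_ball(x, a):
    """delta_Ball(a)(x): 0 if the Euclidean norm of x is at most a, else +inf."""
    return 0.0 if np.linalg.norm(x) <= a else np.inf


v = np.array([0.8, 0.8])
box_value = indicator_box(v, 1.0)    # 0.0: both components lie in [-1, 1]
ball_value = indicator_ball(v, 1.0)  # inf: ||v||_2 is about 1.131 > 1
```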

A. Image reconstruction by least-squares

Perhaps the simplest optimization method for performing image reconstruction is to minimize the quadratic data error function. We present this familiar case in order to gain some experience with the mechanics of deriving CP algorithm instances, and because the quadratic data error term will play a role in other optimization problems below. The primal problem of interest is:

$$\min_u \frac{1}{2}\|Au - g\|_2^2 \tag{11}$$

To derive the CP algorithm instance, we make the following mechanical associations with the primal problem Eq. (1):

$$x = u \tag{12}$$

$$K = A \tag{13}$$

$$F(y) = \frac{1}{2}\|y - g\|_2^2 \tag{14}$$

$$G(x) = 0 \tag{15}$$

Applying Eq. (3), we obtain the convex conjugates of F and G:

$$F^*(p) = \frac{1}{2}\|p\|_2^2 + \langle p, g \rangle_D \tag{16}$$

$$G^*(q) = \delta_{0_I}(q) \tag{17}$$

where p ∈ D and q ∈ I. While obtaining F* in this case involves elementary calculus for extremization of Eq. (3), finding G* needs some comment for those unfamiliar with convex analysis. Using the definition of the Legendre transform for G(x) = 0, we have:

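$$G^*(q) = \max_{x} \left\{ \langle q, x \rangle_I - 0 \right\} = \max_{x} \langle q, x \rangle_I \tag{18}$$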

There are two possibilities: (1) q = 0I, in which case the maximum value of 〈q, x〉I is 0, and (2) q ≠ 0I, in which case this inner product can increase without bound, resulting in a maximum value of ∞. Putting these two cases together yields the indicator function in Eq. (17). With F, G, and their conjugates, the optimization problem dual to Eq. (11) can be written down from Eq. (2):

$$\max_p \left\{ -\frac{1}{2}\|p\|_2^2 - \langle p, g \rangle_D - \delta_{0_I}(-A^T p) \right\} \tag{19}$$

For deriving the CP algorithm instance, it is not strictly necessary to have this dual problem, but it is useful for evaluating convergence.
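As a sanity check on Eq. (16), the Legendre transform of Eq. (3) can be evaluated by brute force on a fine grid for a single data component. This is only an illustrative sketch; the data value, grid range, and spacing are arbitrary choices. The maximizer y = p + g must fall inside the grid for the approximation to hold.

```python
import numpy as np

g = 0.7                                    # a single hypothetical data value
grid = np.linspace(-50.0, 50.0, 200001)    # candidate y values, spacing 5e-4
F_vals = 0.5 * (grid - g) ** 2             # F(y) = 0.5 (y - g)^2

ps = np.linspace(-3.0, 3.0, 13)
# F*(p) = max_y { p*y - F(y) }, approximated by the best grid point
numeric = np.array([np.max(p * grid - F_vals) for p in ps])
closed_form = 0.5 * ps ** 2 + ps * g       # Eq. (16) for one component
```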

The CP algorithm solves Eqs. (11) and (19) simultaneously. In principle, the values of the primal and dual objective functions provide a test of convergence. During the iteration, the objective of the primal problem will be greater than the objective of the dual problem, and when the solutions of the respective problems are reached, these objectives will be equal. Comparing the duality gap, i.e., the difference between the primal objective and the dual objective, with 0 thus provides a test of convergence. The presence of the indicator function in the dual problem, however, complicates this test. Due to the negative sign in front of the indicator, when its argument is not the zero vector, this term, and therefore the whole dual objective, takes the value −∞. The dual objective attains a finite, testable value only when the indicator function equals 0, i.e., when AT p = 0I. Effectively, the indicator function becomes a way to write down a constraint in the form of a convex function, in this case an equality constraint. The dual optimization problem can thus alternatively be written as a conventional constrained maximization:

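$$\max_p \left\{ -\frac{1}{2}\|p\|_2^2 - \langle p, g \rangle_D \right\} \quad \text{such that} \quad A^T p = 0_I \tag{20}$$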

The convergence check is a bit problematic, because the equality constraint will not likely be strictly satisfied in numerical computation. Instead, we introduce a conditional primal-dual gap (the difference between the primal and dual objectives ignoring the indicator function) given the estimates u′ and p′:

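$$\mathrm{cPD}(u', p') = \frac{1}{2}\|Au' - g\|_2^2 + \frac{1}{2}\|p'\|_2^2 + \langle p', g \rangle_D \tag{21}$$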

and separately monitor AT p′ to see if it is tending to 0I. Note that the conditional primal-dual gap need not be positive, but it should tend to zero.
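The conditional gap of Eq. (21) and the separate constraint check are cheap to evaluate. The sketch below (the helper name `conditional_gap_ls` is hypothetical, and a dense A is assumed) returns both. Evaluated at the exact least-squares solution with p = Au − g, the gap vanishes: Aᵀ(Au − g) = 0 implies 〈p, g〉 = −‖p‖².

```python
import numpy as np


def conditional_gap_ls(A, g, u, p):
    """Conditional primal-dual gap, Eq. (21), and the norm of A^T p."""
    primal = 0.5 * np.sum((A @ u - g) ** 2)
    dual_without_indicator = -0.5 * np.sum(p ** 2) - p @ g
    return primal - dual_without_indicator, np.linalg.norm(A.T @ p)


rng = np.random.default_rng(2)
A = rng.standard_normal((20, 8))
g = rng.standard_normal(20)
u_star = np.linalg.lstsq(A, g, rcond=None)[0]
gap, constraint = conditional_gap_ls(A, g, u_star, A @ u_star - g)
```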

To finally attain the CP algorithm instance for image reconstruction by least-squares, we derive lines 5 and 6 in Alg. 1. The proximal mapping proxσ[F*](y), y ∈ D, for this problem results from a quadratic minimization:

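$$\mathrm{prox}_\sigma[F^*](y) = \arg\min_{y'} \left\{ \frac{1}{2}\|y'\|_2^2 + \langle y', g \rangle_D + \frac{\|y - y'\|_2^2}{2\sigma} \right\} = \frac{y - \sigma g}{1 + \sigma} \tag{22}$$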

and as G(x) = 0, x ∈ I, the corresponding proximal mapping is

$$\mathrm{prox}_\tau[G](x) = x \tag{23}$$

Substituting in the arguments from the generic algorithm leads to the update steps in Listing 2. The constant L = ‖A‖2 is the largest singular value of A (see Appendix A for details on the power method). Crucial to the implementation of the CP algorithm instance is that AT be the exact transpose of A, which is a non-trivial matter for tomographic applications, because the projection matrix A is usually computed on-the-fly [26], [27], [28]. Convergence of the CP algorithm is only guaranteed when AT is the exact transpose of A, although it may be possible to extend the CP algorithm to mismatched projector/back-projector pairs by employing the analysis in Ref. [29].

Algorithm 2.

Pseudocode for N-steps of the least-squares Chambolle-Pock algorithm instance.

1: L ← ‖A‖₂; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0

2: initialize u₀ and p₀ to zero values

3: ū₀ ← u₀

4: repeat

5: pₙ₊₁ ← (pₙ + σ(Aūₙ − g))/(1 + σ)

6: uₙ₊₁ ← uₙ − τAᵀpₙ₊₁

7: ūₙ₊₁ ← uₙ₊₁ + θ(uₙ₊₁ − uₙ)

8: n ← n + 1

9: until n ≥ N

This derivation of the CP least-squares algorithm instance illustrates the method on a familiar optimization problem, and it provides a point of comparison with standard algorithms; this quadratic minimization problem can be solved straightforwardly with the basic linear conjugate gradients (CG) algorithm. Another important point for this particular algorithm instance, where limited projection data can lead to an underdetermined system, is that the CP algorithm will yield a minimizer of the objective that depends on the initial image u0. In this case, it is recommended to take advantage of the prototyping capability of the CP framework to augment the optimization problem so that it selects a unique image independent of initialization. For example, one often seeks the image closest to either 0I or a prior image, which can be formulated by adding a quadratic term, such as ε‖u‖2² or ε‖u − u_prior‖2², with a small combination coefficient ε.
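The dependence on u0 is easy to see in a small experiment. The following hedged NumPy sketch of Listing 2 generalizes line 2 to accept a starting image; the function name and problem sizes are our own choices. Here A has more columns than rows. The update u ← u − τAᵀp never changes the component of u in the null space of A, so starting from zero yields the minimum-norm solution, while a nonzero start keeps its null-space component.

```python
import numpy as np


def cp_least_squares(A, g, u0, n_iter):
    """Listing 2 with sigma = tau = 1/L and theta = 1, started from u0."""
    L = np.linalg.norm(A, 2)
    sigma = tau = 1.0 / L
    u = u0.copy()
    u_bar = u.copy()
    p = np.zeros(A.shape[0])
    for _ in range(n_iter):
        p = (p + sigma * (A @ u_bar - g)) / (1.0 + sigma)  # line 5
        u_new = u - tau * (A.T @ p)                        # line 6
        u_bar = 2.0 * u_new - u                            # line 7, theta = 1
        u = u_new
    return u, p


rng = np.random.default_rng(3)
A = rng.standard_normal((5, 12))   # underdetermined: 5 measurements, 12 pixels
g = rng.standard_normal(5)
u_zero_start, _ = cp_least_squares(A, g, np.zeros(12), 10000)
u0 = rng.standard_normal(12)
u_rand_start, _ = cp_least_squares(A, g, u0, 10000)
```

Both results fit the data exactly but differ by the null-space part of u0. This is why an added quadratic term, such as the one mentioned above, is useful for selecting a unique image.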

1) Adding in non-negativity constraints

One of the flexibilities of the CP method becomes apparent in adding bound constraints. While CG is also a flexible tool for dealing with large and small quadratic optimization problems, modifying it to include constraints, such as non-negativity, considerably complicates the CG algorithm. For CP, adding in bound constraints is simply a matter of introducing the appropriate indicator function into the primal problem:

$$\min_u \left\{ \frac{1}{2}\|Au - g\|_2^2 + \delta_P(u) \right\} \tag{24}$$

where the set P is all u with non-negative components. Again, we make the mechanical associations with the primal problem Eq. (1):

$$x = u \tag{25}$$

$$K = A \tag{26}$$

$$F(y) = \frac{1}{2}\|y - g\|_2^2 \tag{27}$$

$$G(x) = \delta_P(x) \tag{28}$$

The difference from the unconstrained problem is the function G(x). It turns out that the convex conjugate of δP (x) is:

$$G^*(q) = \delta_P^*(q) = \delta_P(-q) \tag{29}$$

See Appendix B for insight into the convex conjugates of indicator functions. Straight substitution of G* and F* into Eq. (2) yields the dual problem:

$$\max_p \left\{ -\frac{1}{2}\|p\|_2^2 - \langle p, g \rangle_D - \delta_P(A^T p) \right\} \tag{30}$$

As a result the conditional primal-dual gap is the same as before. The difference now is that the constraint checks are that AT p and u should be non-negative.

To derive the algorithm instance, we need the proximal mapping proxτ[G], which by definition is:

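$$\mathrm{prox}_\tau[G](x) = \arg\min_{x'} \left\{ \delta_P(x') + \frac{\|x - x'\|_2^2}{2\tau} \right\} \tag{31}$$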

The indicator in the objective prevents consideration of negative components of x′. The ℓ2 term can be regarded as a sum over the squared differences between components of x and x′; thus the objective is separable and can be minimized by constructing x′ such that x′i = xi when xi > 0 and x′i = 0 when xi ≤ 0. Thus this proximal mapping becomes a non-negativity thresholding on each component of x:

$$\mathrm{prox}_\tau[G](x) = \mathrm{pos}(x) \equiv \max(x, 0) \quad \text{(component-wise)} \tag{32}$$

Substituting into the generic pseudocode yields Listing 3. Again, we have L = ‖A‖2. The indicator function δP leads to the intuitive modification that non-negativity thresholding is introduced in line 6 of Listing 3. In this case the non-negativity constraint in u will be automatically satisfied by all iterates un. Upper bound constraints are equally simple to include.

Algorithm 3.

Pseudocode for N-steps of the least-squares with non-negativity constraint, CP algorithm instance.

1: L ← ‖A‖₂; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0

2: initialize u₀ and p₀ to zero values

3: ū₀ ← u₀

4: repeat

5: pₙ₊₁ ← (pₙ + σ(Aūₙ − g))/(1 + σ)

6: uₙ₊₁ ← pos(uₙ − τAᵀpₙ₊₁)

7: ūₙ₊₁ ← uₙ₊₁ + θ(uₙ₊₁ − uₙ)

8: n ← n + 1

9: until n ≥ N
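A minimal NumPy sketch of Listing 3 follows; the function name and the random test problem are hypothetical. The only change from Listing 2 is the component-wise `np.maximum(·, 0)` implementing pos(·) at line 6. The data are generated from an image with negative entries so that some non-negativity constraints are active. The result is checked against the Karush-Kuhn-Tucker conditions of non-negative least squares: u ≥ 0, gradient ≥ 0, and u·gradient = 0 component-wise.

```python
import numpy as np


def cp_nonneg_least_squares(A, g, n_iter):
    """Listing 3: least squares with u >= 0, sigma = tau = 1/L, theta = 1."""
    L = np.linalg.norm(A, 2)
    sigma = tau = 1.0 / L
    u = np.zeros(A.shape[1])
    u_bar = u.copy()
    p = np.zeros(A.shape[0])
    for _ in range(n_iter):
        p = (p + sigma * (A @ u_bar - g)) / (1.0 + sigma)  # line 5
        u_new = np.maximum(u - tau * (A.T @ p), 0.0)       # line 6: pos(.)
        u_bar = 2.0 * u_new - u                            # line 7, theta = 1
        u = u_new
    return u, p


rng = np.random.default_rng(4)
A = rng.standard_normal((30, 10))
u_true = rng.standard_normal(10)   # has negative entries, so constraints bind
g = A @ u_true
u, p = cp_nonneg_least_squares(A, g, 20000)
grad = A.T @ (A @ u - g)           # gradient of 0.5||Au - g||^2 at u
```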

B. Optimization problems based on the Total Variation (TV) semi-norm

Optimization problems with the TV semi-norm have received much attention for CT image reconstruction lately because of their potential to provide high-quality images from sparse-view sampling [8], [30], [22], [9], [31], [32], [33]. The TV semi-norm has long been known to be useful for edge-preserving regularization, and recent developments in compressive sensing have sparked even greater interest in its use. Algorithmically, the TV semi-norm is difficult to handle. Although it is convex, it is not linear, quadratic, or even everywhere differentiable, and the lack of differentiability precludes the use of standard gradient-based optimization algorithms. In this subsection we go through, in detail, the derivation of a CP algorithm instance for TV-regularized least squares. We then consider the Kullback-Leibler (KL) data divergence, which is implicitly employed by many iterative algorithms based on maximum likelihood expectation maximization (MLEM). We also consider a data-error norm based on ℓ1, which can have some advantage in reducing the impact of image discretization error, since such error generally leads to highly non-uniform error in the data domain. Finally, we derive a CP algorithm instance for constrained TV minimization, which is mathematically equivalent to the least-squares-plus-TV problem [34], but whose data-error constraint parameter has more physical meaning than the parameter used in the corresponding unconstrained minimization. While the previous CP instances solve optimization problems that can be solved efficiently by well-known algorithms, the following CP instances are new for the application of CT image reconstruction.

The optimization problem of interest is

$$\min_u \left\{ \frac{1}{2}\|Au - g\|_2^2 + \lambda \big\| |\nabla u| \big\|_1 \right\} \tag{33}$$

where the last term, the ℓ1-norm of the gradient-magnitude image, is the isotropic TV semi-norm. The spatial-vector image ∇u represents a discrete approximation to the image gradient, which lies in the vector space V, i.e., the space of spatial-vector-valued image arrays. The expression |∇u| is the gradient-magnitude image, an image array whose pixel values are the gradient magnitude at the pixel location. Thus, ∇u ∈ V and |∇u| ∈ I. Because ∇ is defined in terms of finite differencing, it is a linear transform from an image array to a vector-valued image array, the precise form of which is covered in Appendix D. This problem was not explicitly covered in Ref. [20], and we fill in the details here. For this case, matching the primal problem to Eq. (1) is not as obvious as in the previous examples. We recognize in Eq. (33) that both terms involve a linear transform; thus the whole objective function can be written in the form F(Kx) with the following assignments:

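$$x = u \tag{34}$$

$$K = \begin{pmatrix} A \\ \nabla \end{pmatrix}, \qquad Kx = \begin{pmatrix} Au \\ \nabla u \end{pmatrix} \tag{35}$$

$$F(y, z) = F_1(y) + F_2(z), \qquad F_1(y) = \frac{1}{2}\|y - g\|_2^2, \qquad F_2(z) = \lambda \big\| |z| \big\|_1 \tag{36}$$

$$G(x) = 0 \tag{37}$$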

where u ∈ I, y ∈ D, and z ∈ V. Note that F(y, z) is convex because it is the sum of two convex functions. Also the linear transform K takes an image vector x and gives a data vector y and an image gradient vector z. The transpose of K, KT = (AT, −div), will produce an image vector from a data vector y and an image gradient vector z:

$$K^T \begin{pmatrix} y \\ z \end{pmatrix} = A^T y - \mathrm{div}\, z \tag{38}$$

where we use the same convention as in Ref. [20] that −div ≡ ∇T, see Appendix D.
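Appendix D gives the precise finite-difference form used in the article. As a stand-in, the following NumPy sketch uses a common forward-difference gradient with zero difference at the last row and column. This boundary handling is an assumption and not necessarily Appendix D's exact choice. The sketch builds div so that −div = ∇ᵀ holds exactly, which the adjoint test 〈∇u, z〉V = 〈u, −div z〉I checks.

```python
import numpy as np


def grad(u):
    """Forward-difference gradient of a 2D image; returns shape (2, ny, nx)."""
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:-1, :] = u[1:, :] - u[:-1, :]   # differences along axis 0, zero on last row
    gy[:, :-1] = u[:, 1:] - u[:, :-1]   # differences along axis 1, zero on last column
    return np.stack([gx, gy])


def div(z):
    """Discrete divergence chosen so that -div is the exact transpose of grad."""
    zx, zy = z
    dx = np.zeros_like(zx)
    dy = np.zeros_like(zy)
    dx[0, :] = zx[0, :]
    dx[1:-1, :] = zx[1:-1, :] - zx[:-2, :]
    dx[-1, :] = -zx[-2, :]
    dy[:, 0] = zy[:, 0]
    dy[:, 1:-1] = zy[:, 1:-1] - zy[:, :-2]
    dy[:, -1] = -zy[:, -2]
    return dx + dy


rng = np.random.default_rng(5)
u = rng.standard_normal((6, 5))
z = rng.standard_normal((2, 6, 5))
lhs = np.sum(grad(u) * z)   # <grad u, z>_V
rhs = -np.sum(u * div(z))   # <u, -div z>_I
```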

In order to get the convex conjugate of F we need F2*. For readers unfamiliar with the Legendre transform of indicator functions, Appendix B illustrates the transform of some common cases. By definition,

$$F_2^*(q) = \max_{z} \left\{ \langle q, z \rangle_V - \lambda \big\| |z| \big\|_1 \right\} \tag{39}$$

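where q ∈ V, like z, is a vector-valued image array. There are two cases to consider: (1) the magnitude image |q| at all pixels is less than or equal to λ, i.e., |q| ∈ Box(λ), and (2) the magnitude image |q| has at least one pixel greater than λ, i.e., |q| ∉ Box(λ). It turns out that for the former case the maximization in Eq. (39) yields 0, while the latter case yields ∞. In the former case, the Cauchy-Schwarz inequality at each pixel gives 〈q, z〉V ≤ ∑|q||z| ≤ λ‖|z|‖1, so the objective is at most 0, and it equals 0 at z = 0V. In the latter case, taking z = tq at a pixel where |q| > λ (and zero elsewhere) makes the objective equal t|q|(|q| − λ), which grows without bound. Putting these two cases together, we have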

$$F_2^*(q) = \delta_{\mathrm{Box}(\lambda)}(|q|) \tag{40}$$

The conjugates of F and G are:

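$$F^*(p, q) = F_1^*(p) + F_2^*(q) = \frac{1}{2}\|p\|_2^2 + \langle p, g \rangle_D + \delta_{\mathrm{Box}(\lambda)}(|q|) \tag{41}$$

$$G^*(r) = \delta_{0_I}(r) \tag{42}$$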

where p ∈ D, q ∈ V, and r ∈ I.

The problem dual to Eq. (33) becomes:

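$$\max_{p, q} \left\{ -\frac{1}{2}\|p\|_2^2 - \langle p, g \rangle_D - \delta_{\mathrm{Box}(\lambda)}(|q|) - \delta_{0_I}(-A^T p + \mathrm{div}\, q) \right\} \tag{43}$$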

The resulting conditional primal-dual gap is

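$$\mathrm{cPD}(u', p') = \frac{1}{2}\|Au' - g\|_2^2 + \lambda \big\| |\nabla u'| \big\|_1 + \frac{1}{2}\|p'\|_2^2 + \langle p', g \rangle_D \tag{44}$$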

with additional constraints |q′| ∈ Box(λ) and AT p′ − div q′ = 0I. The final piece needed for putting together the CP algorithm instance for Eq. (33) is the proximal mapping:

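$$\mathrm{prox}_\sigma[F^*](y, z) = \left( \frac{y - \sigma g}{1 + \sigma}, \; \frac{\lambda z}{\max(\lambda 1_I, |z|)} \right) \tag{45}$$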

The proximal mapping of the data term was covered previously, and that of the TV term is explained in Appendix C. With the necessary pieces in place, the CP algorithm instance for the ℓ2²-TV objective can be written down in Listing 4. Line 6, and the corresponding expression in Eq. (45), require some explanation, because the division operation is non-standard: the numerator is in V and the denominator is in I. The effect of this line is to threshold the magnitude of the spatial vectors at each pixel in qn + σ∇ūn to the value λ: spatial vectors longer than λ have their magnitude rescaled to λ. The resulting thresholded spatial-vector image is then assigned to qn+1. Recall that 1I at line 6 is an image with all pixels set to 1. The operator |·| in this line converts a vector-valued image in V to a magnitude image in I, and the max(λ1I, ·) operation thresholds the lower bound of the magnitude image to λ pixel-wise. Operationally, the division is performed by dividing the spatial vector at each pixel of the numerator by the scalar in the corresponding pixel of the denominator. Another potential source of confusion is computing the magnitude ‖(A, ∇)‖2. The power method for doing this is covered explicitly in Appendix A. If it is desired to enforce the non-negativity constraint, the indicator δP(u) can be added to the primal objective, and the effect of this indicator is the same as for Listing 3; namely, the right-hand side of line 7 goes inside the pos(·) operator.
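The pixel-wise division in line 6 maps naturally onto array broadcasting. In this sketch (the function name is hypothetical), the vector-valued image is stored with the spatial-vector components along the first axis. The magnitude image then has one fewer axis, and dividing by it broadcasts over the components. One vector inside the λ-ball is left unchanged, and one outside it is rescaled to length λ with its direction preserved.

```python
import numpy as np


def threshold_magnitude(v, lam):
    """Line 6 of Listing 4: rescale each pixel's spatial vector to magnitude <= lam.

    v has shape (d, ny, nx); the magnitude image |v| has shape (ny, nx).
    """
    mag = np.sqrt(np.sum(v ** 2, axis=0))    # |v| in I
    return lam * v / np.maximum(lam, mag)    # per-pixel division, broadcast over d


lam = 1.0
v = np.zeros((2, 1, 2))
v[:, 0, 0] = [0.3, 0.4]   # magnitude 0.5: left unchanged
v[:, 0, 1] = [3.0, 4.0]   # magnitude 5.0: rescaled to (0.6, 0.8)
q = threshold_magnitude(v, lam)
```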

Algorithm 4.

Pseudocode for N-steps of the ℓ2²-TV CP algorithm instance.

1: L ← ‖(A, ∇)‖₂; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0

2: initialize u₀, p₀, and q₀ to zero values

3: ū₀ ← u₀

4: repeat

5: pₙ₊₁ ← (pₙ + σ(Aūₙ − g))/(1 + σ)

6: qₙ₊₁ ← λ(qₙ + σ∇ūₙ)/max(λ1I, |qₙ + σ∇ūₙ|)

7: uₙ₊₁ ← uₙ − τAᵀpₙ₊₁ + τ div qₙ₊₁

8: ūₙ₊₁ ← uₙ₊₁ + θ(uₙ₊₁ − uₙ)

9: n ← n + 1

10: until n ≥ N
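For a compact, hedged illustration of Listing 4, the sketch below works in 1D. There, ∇ is a forward-difference matrix D, the magnitude |·| is the absolute value, and −div = Dᵀ, so line 7 reads u − τAᵀp − τDᵀq. The function names, the denoising stand-in A = I, and the step-plus-noise signal are all hypothetical. A CT application would substitute a projector and its exact transpose.

```python
import numpy as np


def cp_l2_tv_1d(A, g, lam, n_iter):
    """Listing 4 for 1D signals: min_u 0.5||Au - g||^2 + lam * ||Du||_1."""
    n = A.shape[1]
    D = np.diff(np.eye(n), axis=0)             # (D u)_i = u_{i+1} - u_i
    L = np.linalg.norm(np.vstack([A, D]), 2)   # ||(A, grad)||_2
    sigma = tau = 1.0 / L
    u = np.zeros(n)
    u_bar = u.copy()
    p = np.zeros(A.shape[0])
    q = np.zeros(n - 1)
    for _ in range(n_iter):
        p = (p + sigma * (A @ u_bar - g)) / (1.0 + sigma)  # line 5
        v = q + sigma * (D @ u_bar)
        q = lam * v / np.maximum(lam, np.abs(v))           # line 6
        u_new = u - tau * (A.T @ p) - tau * (D.T @ q)      # line 7, div q = -D^T q
        u_bar = 2.0 * u_new - u                            # line 8, theta = 1
        u = u_new
    return u, p, q


def objective(A, g, lam, u):
    """Primal objective of Eq. (33) in 1D."""
    return 0.5 * np.sum((A @ u - g) ** 2) + lam * np.sum(np.abs(np.diff(u)))


rng = np.random.default_rng(6)
n = 40
A = np.eye(n)   # denoising as a toy stand-in for the projector
g = np.where(np.arange(n) < n // 2, 0.0, 1.0) + 0.1 * rng.standard_normal(n)
lam = 0.2
u, p, q = cp_l2_tv_1d(A, g, lam, 3000)
```

The resulting u should have a lower objective than either trivial candidate, the zero image or the noisy data itself.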

1) Alternate data divergences

For a number of reasons motivated by the physical model of imaging systems, it may be useful to formulate optimization problems for CT image reconstruction with alternate data-error terms. A natural extension of the quadratic data divergence is to include a diagonal weighting matrix; the corresponding CP algorithm instance can be derived easily following the steps mentioned above. As pointed out above, the CP method is not limited to quadratic objective functions, and other important convex functions can be used. We derive here three additional CP algorithm instances. For alternate data divergences, we consider the oft-used KL divergence and a less commonly used one, the ℓ1 data-error norm. For the following, we need only analyze the function F1, as everything else remains the same as for the ℓ2²-TV objective in Eq. (33).

TV plus KL data divergence

One data divergence of particular interest for tomographic image reconstruction is KL. Objectives based on KL are what is being optimized in the various forms of MLEM, and it is used often when data noise is a significant physical factor and the data are modeled as being drawn from a multivariate Poisson probability distribution [25]. For the situation where the view-sampling is also sparse, it might be of interest to combine a KL data error term with the TV semi-norm in the following primal optimization:

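$$\min_u \left\{ \sum_i \left[ Au - g + g \ln g - g \ln(Au) \right]_i + \lambda \big\| |\nabla u| \big\|_1 \right\} \tag{46}$$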

where ∑i[·]i performs summation over all components of the vector argument. This example proceeds as above except that the F1 function is different:

$$F_1(y) = \sum_i \left[ y - g + g \ln g - g \ln \mathrm{pos}(y) \right]_i + \delta_P(y) \tag{47}$$

where y ∈ D, and the function ln operates on the components of its argument. Use of the KL data divergence makes sense only with positive linear systems A, non-negative pixel values u, and non-negative data g. However, by defining the function over the whole space and using an indicator function to restrict the domain [24], a wide variety of optimization problems can be treated in a uniform manner. Accordingly, δP is introduced into the F1 objective, and the pos operator is used just so that this objective is defined in the real numbers. The derivation of F1*, though mechanical, is a little too long to be included here. We simply state the resulting conjugate function:

$$F_1^*(p) = -\sum_i \left[ g \ln \mathrm{pos}(1_D - p) \right]_i + \delta_P(1_D - p) \tag{48}$$
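The stated conjugate can be spot-checked numerically, one component at a time. This hedged sketch evaluates Eq. (3) by brute force for the single-component KL term y − g + g ln g − g ln y on y > 0, and it compares the result with −g ln(1 − p) for p < 1. The data value and grid are arbitrary choices. The maximizer y = g/(1 − p) must lie inside the grid.

```python
import numpy as np

g = 1.3                                      # one hypothetical positive data value
y = np.linspace(1e-6, 100.0, 1_000_001)      # y > 0, where the KL term is finite
F1 = y - g + g * np.log(g) - g * np.log(y)   # KL term for a single component

ps = np.linspace(-2.0, 0.8, 15)              # p < 1, where F1* is finite
numeric = np.array([np.max(p * y - F1) for p in ps])
closed_form = -g * np.log(1.0 - ps)
```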

The resulting dual problem to Eq. (46) is thus:

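$$\max_{p, q} \left\{ \sum_i \left[ g \ln \mathrm{pos}(1_D - p) \right]_i - \delta_P(1_D - p) - \delta_{\mathrm{Box}(\lambda)}(|q|) - \delta_{0_I}(-A^T p + \mathrm{div}\, q) \right\} \tag{49}$$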

To form the algorithm instance, we need the proximal mapping

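$$\mathrm{prox}_\sigma[F_1^*](y) = \frac{1}{2}\left( 1_D + y - \sqrt{(y - 1_D)^2 + 4\sigma g} \right) \tag{50}$$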

An interesting point in the derivation of proxσ[F1*], shown partially in Appendix C, is that a quadratic equation must be solved, and the domain restriction in F1* (namely 1D − p ≥ 0) is used to select the correct root, i.e., the one with the negative sign in front of the square root of the discriminant in the quadratic formula. With the new function F1, its conjugate, and the conjugate’s proximal mapping, we can write down the CP algorithm instance. Listing 5 gives the CP algorithm instance minimizing a KL plus TV semi-norm objective. The difference between this algorithm instance and the previous ℓ2²-TV case comes only at the update at line 5. This algorithm instance has the interesting property that the intermediate image estimates un can have negative values even though the converged solution will be non-negative. If it is desirable to have non-negative intermediate image estimates, the non-negativity constraint can easily be introduced by adding the indicator δP(u) to the primal objective, resulting in the addition of the pos(·) operator at line 7, as was shown in Listing 3.
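The root selection can be checked directly. This sketch (hypothetical function name and random test values) evaluates the closed form of Eq. (50) component-wise. It then verifies the first-order optimality condition of σF1*(p′) + ½(p′ − y)², namely σg/(1 − p′) + p′ − y = 0, together with p′ < 1, assuming strictly positive data g.

```python
import numpy as np


def prox_kl_conjugate(y, g, sigma):
    """Closed-form prox_sigma[F1*](y) for F1*(p) = -sum g ln(1 - p), cf. Eq. (50)."""
    return 0.5 * (1.0 + y - np.sqrt((y - 1.0) ** 2 + 4.0 * sigma * g))


rng = np.random.default_rng(7)
y = rng.uniform(-3.0, 3.0, 50)
g = rng.uniform(0.5, 2.0, 50)   # strictly positive data assumed
sigma = 0.3
p = prox_kl_conjugate(y, g, sigma)
# First-order optimality of sigma*F1*(p') + 0.5*(p' - y)^2:
#   sigma*g/(1 - p') + p' - y = 0
residual = sigma * g / (1.0 - p) + p - y
```

In Listing 5, the argument y is pₙ + σAūₙ, which gives the line-5 update.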

Algorithm 5.

Pseudocode for N steps of the KL-TV CP algorithm instance.

| 1: | L ← ‖(A, ∇)‖2; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0 |

| 2: | initialize u0, p0, and q0 to zero values |

| 3: | ū0 ← u0 |

| 4: | repeat |

| 5: | pn+1 ← ½(1D + pn + σAūn − √((pn + σAūn − 1D)² + 4σg)) |

| 6: | qn+1 ← λ(qn + σ∇ūn)/max(λ1I, |qn + σ∇ūn|) |

| 7: | un+1 ← un − τAT pn+1 + τdiv qn+1 |

| 8: | ūn+1 ← un+1 + θ(un+1 − un) |

| 9: | n ← n + 1 |

| 10: | until n ≥ N |
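The steps of Listing 5 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: A and ∇ are stored as small dense matrices, ∇ uses forward differences that vanish at the far image border (one possible discretization), ‖(A, ∇)‖2 is computed directly with `np.linalg.norm(..., 2)` instead of the power method of Appendix A, and the names `grad_matrix` and `kl_tv_cp` are hypothetical.

```python
import numpy as np


def grad_matrix(M):
    """Dense forward-difference gradient for an M x M image stored row-major.

    Returns a (2*M*M, M*M) matrix: first block Delta_s, second block Delta_t.
    Differences at the far border are zero (an assumed discretization).
    """
    n = M * M
    Ds = np.zeros((n, n))
    Dt = np.zeros((n, n))
    for i in range(M):
        for j in range(M):
            k = i * M + j
            if i < M - 1:
                Ds[k, k], Ds[k, k + M] = -1.0, 1.0
            if j < M - 1:
                Dt[k, k], Dt[k, k + 1] = -1.0, 1.0
    return np.vstack([Ds, Dt])


def kl_tv_cp(A, g, lam, M, n_iter=500):
    """Sketch of Listing 5 (KL-TV CP algorithm instance) with dense matrices."""
    n = M * M
    D = grad_matrix(M)                         # nabla; div = -D.T
    L = np.linalg.norm(np.vstack([A, D]), 2)   # ||(A, nabla)||_2, line 1
    tau = sigma = 1.0 / L
    theta = 1.0
    u = np.zeros(n)
    p = np.zeros(A.shape[0])
    q = np.zeros(2 * n)
    u_bar = u.copy()
    for _ in range(n_iter):
        # Line 5: proximal mapping of the KL conjugate, Eq. (50)
        w = p + sigma * (A @ u_bar)
        p = 0.5 * (1.0 + w - np.sqrt((w - 1.0) ** 2 + 4.0 * sigma * g))
        # Line 6: pixel-wise rescaling so that |q| <= lam
        z = q + sigma * (D @ u_bar)
        mag = np.sqrt(z[:n] ** 2 + z[n:] ** 2)
        scale = lam / np.maximum(lam, mag)
        q = z * np.concatenate([scale, scale])
        # Line 7: primal step; tau * div(q) equals -tau * D.T @ q
        u_new = u - tau * (A.T @ p) - tau * (D.T @ q)
        # Line 8: extrapolation with theta = 1
        u_bar = u_new + theta * (u_new - u)
        u = u_new
    return u, p, q


# Usage on a toy problem with a positive system matrix and positive data
rng = np.random.default_rng(1)
M = 4
A = rng.uniform(0.1, 1.0, size=(24, M * M))
g = A @ rng.uniform(1.0, 2.0, size=M * M)
u, p, q = kl_tv_cp(A, g, lam=1e-3, M=M)
```

Dense matrices keep the sketch short; for realistic image sizes A would instead be applied through a projector/back-projector pair, and ∇ and div through array differencing.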

TV plus ℓ1 data-error norm

The combination of TV semi-norm regularization and ℓ1 data-error norm has been proposed for image denoising, and it has some interesting properties for that purpose [35]. This objective is also presented in Ref. [20]. For tomography, this combination may be of interest because the ℓ1 data-error term is an example of a robust fit to the data. The idea of robust approximation is to weakly penalize data that are outliers [36]. Fitting with the commonly used quadratic error function clearly puts heavy weight on outlying measurements, which in some situations can lead to streak artifacts in the images. In particular, for tomographic image reconstruction with a pixel basis, discretization error and metal objects can lead to highly non-uniform error in the data model. Use of the ℓ1 data-error term may allow for large errors for measurements along the tangent rays to internal structures, where discretization can have a large effect. The ℓ1 data-error term also puts greater emphasis on fitting the data that lie close to the model. The primal problem of interest is:

$$\min_u \left\{ \|Au - g\|_1 + \lambda \| (|\nabla u|) \|_1 \right\} \tag{51}$$

For this objective, the function F1 is:

$$F_1(y) = \|y - g\|_1 \tag{52}$$

Computing the convex conjugate yields

$$F_1^*(p) = \langle p, g \rangle_D + \delta_{\mathrm{Box}(1)}(p) \tag{53}$$

and the resulting dual problem is:

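$$\max_{p,q} \left\{ -\langle p, g \rangle_D - \delta_{\mathrm{Box}(1)}(p) - \delta_{\mathrm{Box}(\lambda)}(|q|) - \delta_{\{0_I\}}(\operatorname{div} q - A^T p) \right\} \tag{54}$$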

The proximal mapping necessary for completing the algorithm instance is

$$\operatorname{prox}_\sigma[F_1^*](p) = \frac{p - \sigma g}{\max(1_D, |p - \sigma g|)} \tag{55}$$

where 1D is a data array with each component set to one and the max operation is performed component-wise. The corresponding pseudocode for minimizing Eq. (51) is given in Listing 6, where the only difference between this code and the previous two occurs at line 5. The ability to handle non-smooth objectives simplifies this particular problem substantially. If smoothness were required, both the ℓ1 and TV terms would need smoothing parameters, adding two unnecessary parameters to a study of image properties as a function of the optimization-problem parameters.
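A NumPy sketch of line 5 of Listing 6, i.e. Eq. (55), is given below; the function names are hypothetical. As a cross-check, the usage lines compare it with the Moreau decomposition w = proxσ[F1*](w) + σ·prox of (1/σ)F1 evaluated at w/σ, where the proximal mapping of (1/σ)‖y − g‖1 is soft-thresholding of y − g by 1/σ.

```python
import numpy as np


def prox_l1_data_conjugate(w, g, sigma):
    """Eq. (55): shift w by -sigma*g, then clip each component to [-1, 1]."""
    v = w - sigma * g
    return v / np.maximum(1.0, np.abs(v))


def soft_threshold(x, t):
    """Component-wise soft-thresholding, the proximal mapping of t*||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


# Toy values standing in for w = p_n + sigma * A u_bar_n
w = np.array([-3.0, -0.2, 0.4, 2.5])
g = np.array([1.0, 0.0, 1.0, 1.0])
sigma = 0.5
p = prox_l1_data_conjugate(w, g, sigma)   # components -1, -0.2, -0.1, 1

# Cross-check with the Moreau decomposition
moreau = w - sigma * (g + soft_threshold(w / sigma - g, 1.0 / sigma))
print(np.allclose(p, moreau))  # True
```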

Algorithm 6.

Pseudocode for N steps of the ℓ1-TV CP algorithm instance.

| 1: | L ← ‖(A, ∇)‖2; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0 |

| 2: | initialize u0, p0, and q0 to zero values |

| 3: | ū0 ← u0 |

| 4: | repeat |

| 5: | pn+1 ← (pn + σ(Aūn − g))/max(1D, |pn + σ(Aūn − g)|) |

| 6: | qn+1 ← λ(qn + σ∇ūn)/max(λ1I, |qn + σ∇ūn|) |

| 7: | un+1 ← un − τAT pn+1 + τdiv qn+1 |

| 8: | ūn+1 ← un+1 + θ(un+1 − un) |

| 9: | n ← n + 1 |

| 10: | until n ≥ N |

2) Constrained, TV-minimization

The previous three optimization problems combine a data fidelity term with a TV-penalty, and the balance of the two terms is controlled by the parameter λ. An inconvenience of such optimization problems is that it is difficult to physically interpret λ. Focusing on combining an ℓ2 data-error norm with TV, reformulating Eq. (33) as a constrained, TV-minimization leads to the following primal problem:

$$\min_u \left\{ \| (|\nabla u|) \|_1 + \delta_{\mathrm{Ball}(\varepsilon)}(Au - g) \right\} \tag{56}$$

where δBall(ε)(Au − g) is zero for ‖Au − g‖2 ≤ ε and infinite otherwise. When ε > 0, this problem is equivalent to the unconstrained optimization Eq. (33), see e.g. Ref. [34], in the sense that for each positive ε there is a corresponding λ yielding the same solution. For this constrained, TV-minimization, the function F1 is

$$F_1(y) = \delta_{\mathrm{Ball}(\varepsilon)}(y - g) \tag{57}$$

The corresponding conjugate is

$$F_1^*(p) = \langle p, g \rangle_D + \varepsilon \|p\|_2 \tag{58}$$

leading to the dual problem:

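$$\max_{p,q} \left\{ -\langle p, g \rangle_D - \varepsilon \|p\|_2 - \delta_{\mathrm{Box}(1)}(|q|) - \delta_{\{0_I\}}(\operatorname{div} q - A^T p) \right\} \tag{59}$$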

Again, for the algorithm instance we need the proximal mapping proxσ[F1*]:

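$$\operatorname{prox}_\sigma[F_1^*](p) = \frac{\max(\|p - \sigma g\|_2 - \sigma\varepsilon, 0)}{\|p - \sigma g\|_2} \, (p - \sigma g) \tag{60}$$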

The main points in deriving this proximal mapping are discussed in Appendix C; it is an example where geometric and symmetry arguments play a large role. Listing 7 shows the algorithm instance solving Eq. (56), where once again only line 5 is modified. This algorithm instance essentially achieves the same goal as Listing 4; the only difference is that the parameter ε has a direct physical interpretation as the data-error bound.
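Line 5 of Listing 7, i.e. Eq. (60), amounts to block soft-thresholding of p − σg: the whole vector is shrunk in 2-norm by σε. A hedged NumPy sketch (function names hypothetical) follows; the usage lines cross-check it against the Moreau decomposition, using the fact that the proximal mapping of F1 is the Euclidean projection onto the ball of radius ε centered at g.

```python
import numpy as np


def prox_ball_data_conjugate(w, g, sigma, eps):
    """Eq. (60): block soft-thresholding of v = w - sigma*g by sigma*eps."""
    v = w - sigma * g
    nv = np.linalg.norm(v)
    if nv <= sigma * eps:          # the whole vector is set to zero
        return np.zeros_like(v)
    return (nv - sigma * eps) / nv * v


def project_ball(z, center, radius):
    """Euclidean projection onto the ball of given radius around center."""
    d = z - center
    nd = np.linalg.norm(d)
    return z if nd <= radius else center + radius * d / nd


# Cross-check with the Moreau decomposition on toy data
rng = np.random.default_rng(0)
w, g = rng.standard_normal(6), rng.standard_normal(6)
sigma, eps = 0.4, 0.5
p = prox_ball_data_conjugate(w, g, sigma, eps)
moreau = w - sigma * project_ball(w / sigma, g, eps)
print(np.allclose(p, moreau))  # True
```

Handling the zero case explicitly avoids dividing by ‖p − σg‖2 when it vanishes.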

Algorithm 7.

Pseudocode for N steps of the ℓ2-constrained, TV-minimization CP algorithm instance.

| 1: | L ← ‖(A, ∇)‖2; τ ← 1/L; σ ← 1/L; θ ← 1; n ← 0 |

| 2: | initialize u0, p0, and q0 to zero values |

| 3: | ū0 ← u0 |

| 4: | repeat |

| 5: | pn+1 ← max(‖pn + σ(Aūn − g)‖2 − σε, 0) (pn + σ(Aūn − g))/‖pn + σ(Aūn − g)‖2 |

| 6: | qn+1 ← (qn + σ∇ūn)/max(1I, |qn + σ∇ūn|) |

| 7: | un+1 ← un − τAT pn+1 + τdiv qn+1 |

| 8: | ūn+1 ← un+1 + θ(un+1 − un) |

| 9: | n ← n + 1 |

| 10: | until n ≥ N |

IV. Demonstration of CP algorithm instances for tomographic image reconstruction

In the previous section, we derived CP algorithm instances covering many optimization problems of interest to CT image reconstruction. Not only are there the seven optimization problems, but within each case the system model/matrix A, the data g, and the optimization-problem parameters can vary. For each of this practically infinite number of optimization problems, the corresponding CP algorithm instance is guaranteed to converge [20]. The purpose of this results section is not to advocate one optimization problem over another; rather, it is to demonstrate the utility of the CP algorithm for optimization-problem prototyping. For this purpose, we present example image reconstructions that could be performed in a study investigating the impact of matching the data-divergence term to the data-noise model for image reconstruction in breast CT.

A. Sparse-view experiments for image reconstruction from simulated CT data

We briefly describe the significance of the experiments, but we point out that the main goal here is to demonstrate the CP algorithm instances. Much of the recent interest in employing the TV semi-norm in optimization problems for CT image reconstruction has been generated by compressive sensing (CS). CS seeks to relate sampling conditions on a sensing device with sparsity in the object being scanned. So far, mathematical results have been limited to various types of random sampling [37]. System matrices such as those representing CT projection fall outside of the scope of mathematical results for CS [8]. As a result, the only current option for investigating CS in CT is through numerical experiments with computer phantoms.

A next logical step for bridging theoretical results for CS to actual application is to consider physical factors in the data model. One such factor is a noise model, which can be quite important for low-dose CT applications such as breast CT. While much work has been performed on iterative image reconstruction with various noise models under conditions of full sampling, little is known about the impact of noise on sparse-view image reconstruction. In the following limited study, we set up a breast CT simulation to investigate the impact of correct modeling of data noise with the purpose of demonstrating that the CP algorithm instances can be applied to the CT system.

B. Sparse-view reconstruction with a Poisson noise model

For the following study, we employ a digital 256 × 256 breast phantom, described in Refs. [23], [38], and used in our previous study on investigating sufficient sampling conditions for TV-based CT image reconstruction [12]. The phantom models four tissue types: the background fat tissue is assigned a value of 1.0, the modeled fibro-glandular tissue takes a value of 1.1, the outer skin layer is set to 1.15, and the micro-calcifications are assigned values in the range [1.8, 2.3].

For the present case, we focus on circular, fan-beam scanning with 60 projections equally distributed over a full 360° angular range. The simulated radius of the X-ray source trajectory is 40 cm with a source-detector distance of 80 cm. The detector sampling consists of 512 bins of size 200 microns. The system matrix for the X-ray projection is computed by the line-intersection method, in which each element of A is the length of traversal of a source/detector-bin ray through an image pixel. For this phantom under ideal conditions, we have found that accurate recovery is possible with constrained, TV-minimization with as few as 50 projections. In the present study, we add Poisson noise to the data model at a level consistent with what might be expected in a typical breast CT scan. The Poisson noise model is chosen in order to investigate the impact of matching the data-error term to the noise model. For reference, the phantom is shown in Fig. 1. To give a sense of the noise level, a standard fan-beam filtered back-projection reconstruction from data with simulated Poisson noise is shown alongside the phantom.

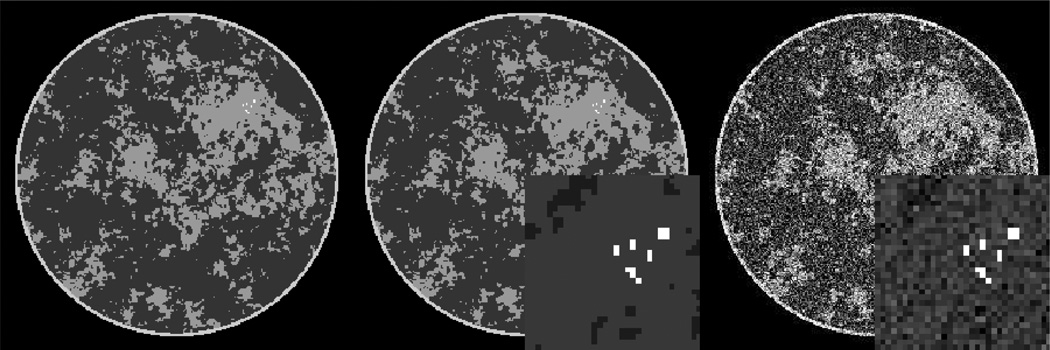

Fig. 1.

Breast phantom for CT and FBP reconstructed image for a 512-view data set with Poisson distributed noise. Left is the phantom in the gray scale window [0.95,1.15]; middle is the same phantom with a blow-up on the micro-calcification ROI displayed in the gray scale window [0.9,1.8]; and right is the FBP image reconstructed from the noisy data. The middle panel is the reference for all image reconstruction algorithm results. The FBP image is shown only to provide a sense of the noise level.

For this noise model, the maximum likelihood method prescribes minimizing the KL data divergence between the available and estimated data. To gauge the importance of selecting a maximum likelihood image, we compare the results from two optimization problems: a KL data divergence plus a TV-penalty, Eq. (46) above; and a least-squares data error norm plus a TV-penalty, Eq. (33) above. With the CP framework, these two optimization problems can be easily prototyped: the solutions to both problems can be obtained without worrying about smoothing the TV semi-norm, setting algorithm parameters, or proving convergence.

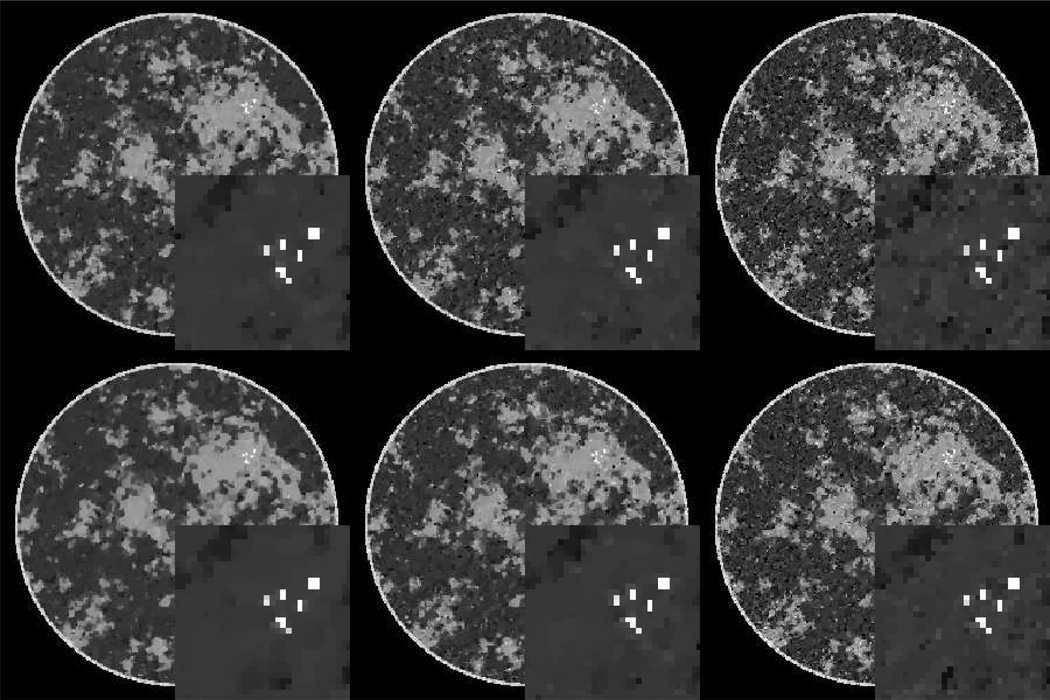

For the phantom and data conditions described above, the images for different values of the TV-penalty parameter λ are shown in Fig. 2. An ROI of the micro-calcification cluster is also shown. The overall and ROI images give an impression of two different visual tasks important for breast imaging: discerning the fibro-glandular tissue morphology and detection/classification of micro-calcifications. The images show some difference between the two optimization problems; most notably, there is a perceptible reduction in noise in the ROIs from the KL-TV images. A firm conclusion, however, awaits a more complete study with multiple noise realizations.

Fig. 2.

Images reconstructed from 60-view projection data with a Poisson distributed noise model. The top row shows images resulting from minimizing the ℓ2²-TV objective in Eq. (33) for λ = 1 × 10−4, 5 × 10−5, and 2 × 10−5, going from left to right. The bottom row shows images resulting from minimizing the KL-TV objective in Eq. (46) for the same values of λ. Note that λ does not necessarily have the same impact on each of these optimization problems. Nevertheless, we see similar trends for the chosen values of λ.

The most critical feature of the CP algorithm that we wish to promote is the rapid prototyping of a convex optimization problem for CT image reconstruction. The above study is aimed at a combination of a data divergence based on maximum likelihood estimation with a TV-penalty, which takes advantage of sparsity in the gradient magnitude of the underlying object. The CP framework facilitates the use of many other convex optimization problems, particularly those based on some form of sparsity, which often entail some form of the non-smooth ℓ1-norm. For example, in Ref. [8] we found it useful for sparse-view X-ray phase-contrast imaging to perform image reconstruction with a combination of a least-squares data fidelity term, an ℓ1-penalty promoting object sparseness, and an image TV constraint to further reduce streak artifacts from angular under-sampling. Under the CP framework, prototyping various combinations of these terms as constrained or unconstrained optimization problems becomes possible, and the corresponding derivation of CP algorithm instances follows from the steps described in Sec. II-B. Alternative convex data-fidelity terms and image constraints motivated by various physical models may also be prototyped.

As a practical matter, though, it is important to have some sense of the convergence of the CP algorithm instances. To this end, we take an in-depth look at individual runs of the KL-TV algorithm instance for CT image reconstruction.

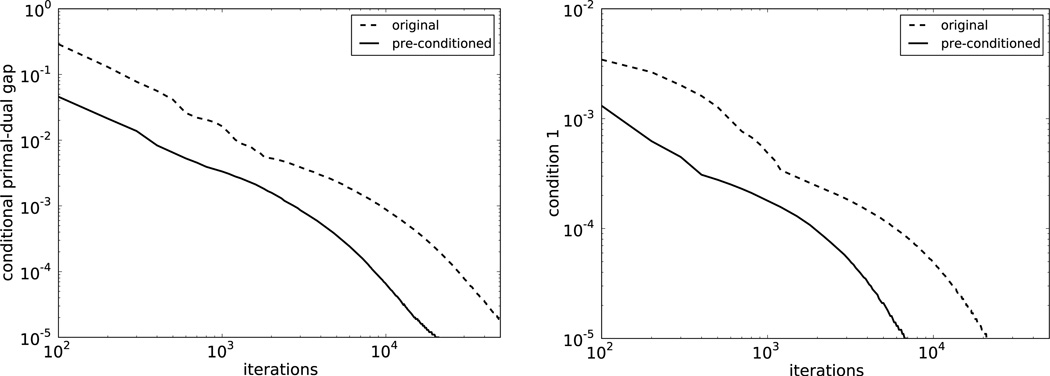

C. Iteration dependence of the CP algorithm

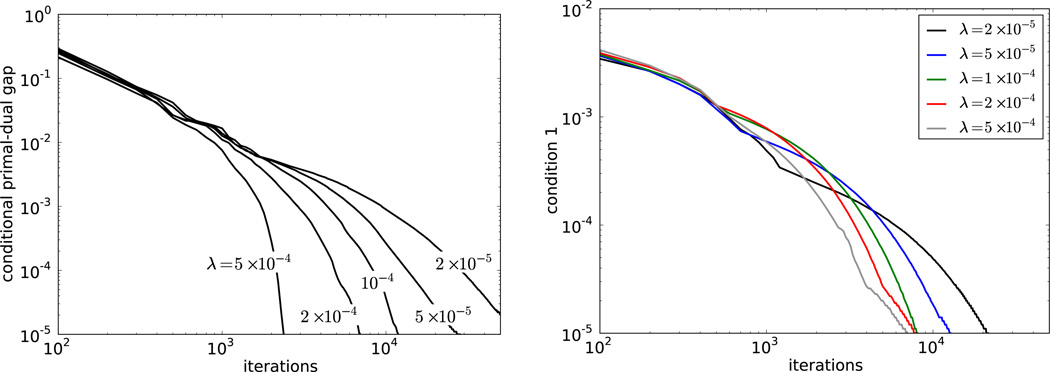

Through the methods described above, many useful algorithm instances can be derived for CT image reconstruction. It is obviously important that the resulting algorithm instance reaches the solution of the prescribed optimization problem. To illustrate the convergence of a resulting algorithm instance we focus on the TV-penalized KL data divergence, Eq. (46), and plot the conditional primal-dual gap for the different runs with varying λ in Fig. 3. Included in this figure is a plot indicating the convergence to agreement with the most challenging condition set by the indicator functions in Eq. (49). For the present results we terminated the iteration at a conditional primal-dual gap of 10−5, which appears to happen on the scale of thousands of iterations with smaller λ requiring more iterations. Interestingly, a simple pre-conditioned form of the CP algorithm was proposed in Ref. [39], which appears to perform efficiently for small λ. The pre-conditioned CP algorithm instance for this problem is reported in Appendix E.

Fig. 3.

(Left) Convergence of the partial primal-dual gap for the CP algorithm instance solving Eq. (46) for different values of λ. (Right) Plot indicating agreement with condition 1: ‖div q − AT p‖∞, the magnitude of the largest component of the argument of the last indicator function of Eq. (49). Collecting all the indicator functions of the primal, Eq. (46), and dual, Eq. (49), KL-TV optimization problems, we have four conditions to check in addition to the conditional primal-dual gap: (1) div q − AT p = 0I, (2) Au ≥ 0D, (3) p ≤ 1D, and (4) |q| ≤ λ. The agreement with condition 1 is illustrated in the plot; agreement with condition 2 has a similar dependence; condition 3 is satisfied early on in the iteration; and condition 4 is automatically enforced by the CP algorithm instance for KL-TV. Because the curves are bunched together in the condition 1 plot, they are differentiated by color.
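The quantities plotted in Fig. 3 can be computed along the lines of the sketch below. It assumes g > 0, Au > 0 and p < 1 so that all logarithms are finite, stores ∇ as a dense matrix D with div = −Dᵀ, and interprets the conditional primal-dual gap as the difference between the primal objective of Eq. (46) and the dual objective of Eq. (49) with their indicator functions dropped; the function name `kl_tv_checks` is hypothetical. The toy usage evaluates it at a point that is optimal by construction (A = I, constant image, p = q = 0), where the gap and condition 1 both vanish.

```python
import numpy as np


def kl_tv_checks(A, D, g, u, p, q, lam):
    """Conditional primal-dual gap and conditions (1)-(4) for KL-TV.

    Assumes g > 0, A @ u > 0 and p < 1 so that all logarithms are finite.
    D stacks the Delta_s and Delta_t blocks of a gradient, so div = -D.T.
    """
    n = A.shape[1]
    Au = A @ u
    grad_u = D @ u
    tv = np.sum(np.sqrt(grad_u[:n] ** 2 + grad_u[n:] ** 2))
    primal = np.sum(Au - g + g * np.log(g / Au)) + lam * tv  # Eq. (46), no indicator
    dual = np.sum(g * np.log(1.0 - p))                       # Eq. (49), no indicators
    q_mag = np.sqrt(q[:n] ** 2 + q[n:] ** 2)
    return {
        "gap": primal - dual,
        "cond1": np.max(np.abs(-D.T @ q - A.T @ p)),  # ||div q - A^T p||_inf
        "cond2": np.min(Au),                          # should be >= 0
        "cond3": np.max(p),                           # should be <= 1
        "cond4": np.max(q_mag),                       # should be <= lam
    }


# Toy problem with a known optimum: A = I, constant image, g = A u, p = q = 0
M = 3
n = M * M
D = np.zeros((2 * n, n))          # forward differences, zero at the far border
for i in range(M):
    for j in range(M):
        k = i * M + j
        if i < M - 1:
            D[k, k], D[k, k + M] = -1.0, 1.0
        if j < M - 1:
            D[n + k, k], D[n + k, k + 1] = -1.0, 1.0
A = np.eye(n)
u = 2.0 * np.ones(n)
checks = kl_tv_checks(A, D, A @ u, u, np.zeros(n), np.zeros(2 * n), lam=1e-4)
print(checks["gap"], checks["cond1"])  # both zero at this optimum
```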

V. DISCUSSION

This article has presented the application of the Chambolle-Pock algorithm to prototyping of optimization problems for CT image reconstruction. The algorithm covers many optimization problems of interest allowing for non-smooth functions. It also comes with solid convergence criteria to check the image estimates.

The use of the CP algorithm we are promoting here is for prototyping, namely when image reconstruction algorithm development is at the early stage of determining the important factors in formulating the optimization problem. As an example, we illustrated a scenario for sparse-view breast CT considering two different data-error terms. At this stage of development, it is helpful not to have to worry about algorithm parameters or about whether the algorithm will converge. After the final optimization problem is determined, the focus shifts from prototyping to efficiency.

Optimization problem prototyping for CT image reconstruction does have its limitations. For example, in the breast CT simulation presented above, a more complete conclusion requires reconstruction from multiple realizations of the data under the Poisson noise model. Additional important dimensions of the study are generation of an ensemble of breast phantoms and consideration of alternate image representations/projector models. Considering the size of CT image reconstruction systems and the huge parameter space of possible optimization problems, it is not yet realistic to completely characterize a particular CT system. But at least we are assured of solving isolated setups, and it is conceivable to perform a study along one aspect of the system, e.g., to consider multiple realizations of the random data model. Given the current state of affairs for optimization-based image reconstruction, it is crucial that simulations be as realistic as possible. There is great need for realistic phantoms and data-simulation software.

We point out that, at least in the immediate future, optimization-based image reconstruction will likely have to operate at severely truncated iteration numbers. Current clinical applications of iterative image reconstruction often use one to ten iterations, which is likely far too few to claim that the image estimate is an accurate solution to the designed optimization problem. Still, the ability to prototype an optimization problem can potentially simplify the design phase by separating optimization parameters from algorithm parameters.

Acknowledgment

The authors are grateful to Cyril Riddell and Pierre Vandergheynst for suggesting to look into the Chambolle-Pock algorithm, and to Paul Wolf for checking many of the equations. This work is part of the project CSI: Computational Science in Imaging, supported by grant 274-07-0065 from the Danish Research Council for Technology and Production Sciences. This work was supported in part by NIH R01 grants CA158446, CA120540, and EB000225. The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of the National Institutes of Health.

APPENDIX A

Computing the norm of K

The matrix norm used for the parameter L in the CP algorithm instances is the largest singular value of K. This singular value can be obtained by the standard power method specified in Listing 8. When K represents the discrete X-ray transform, our experience has been that the power method converges to numerical precision in twenty iterations or fewer. In implementing the CP algorithm instance for TV-penalized minimization, the norm of the combined linear transform ‖(A, ∇)‖2 is needed. For this case, the program is the same as Listing 8 except that KTKxn becomes ATAxn − div∇xn; recall that −div = ∇T. Furthermore, s is computed explicitly as s ← √(‖Axn+1‖2² + ‖∇xn+1‖2²).

Algorithm 8.

Pseudocode for N steps of the generic power method. The scalar s tends to ‖K‖2 as N increases.

| 1: | initialize x0 ∈ I to a non-zero image |

| 2: | n ← 0 |

| 3: | repeat |

| 4: | xn+1 ← KTKxn |

| 5: | xn+1 ← xn+1/‖xn+1‖2 |

| 6: | s ← ‖Kxn+1‖2 |

| 7: | n ← n + 1 |

| 8: | until n ≥ N |
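Listing 8 translates directly into NumPy. In the sketch below (function name hypothetical), K and KT are passed as callables so that a matrix-free projector could be substituted; the usage compares the estimate with NumPy's exact spectral norm for a small non-negative toy matrix.

```python
import numpy as np


def power_method_norm(K, KT, x0, n_iter=50):
    """Estimate ||K||_2 as in Listing 8; K and KT are callables."""
    x = x0
    s = 0.0
    for _ in range(n_iter):
        x = KT(K(x))                # line 4: apply K^T K
        x = x / np.linalg.norm(x)   # line 5: normalize
        s = np.linalg.norm(K(x))    # line 6: current estimate of ||K||_2
    return s


# Usage on a small non-negative toy matrix, compared with NumPy's exact value
rng = np.random.default_rng(0)
A = rng.uniform(0.0, 1.0, size=(40, 25))
s = power_method_norm(lambda x: A @ x, lambda y: A.T @ y, np.ones(25))
print(abs(s - np.linalg.norm(A, 2)) < 1e-8)  # True
```

For ‖(A, ∇)‖2, K could return `np.concatenate([A @ x, D @ x])` and KT could return `A.T @ y[:m] + D.T @ y[m:]`, with D a gradient matrix and m the number of rays (both hypothetical here); ‖Kx‖2 is then exactly the square root of ‖Ax‖2² + ‖∇x‖2².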

APPENDIX B

The convex conjugate of certain indicator functions of interest illustrated in one-dimension

This appendix covers the convex conjugate of a couple of indicator functions in one dimension, serving to illustrate how geometry plays a role in the computation and to provide a mental picture of the conjugate of higher-dimensional indicator functions.

Consider first the indicator δP(x), which is zero for x ≥ 0. The conjugate of this indicator is computed from:

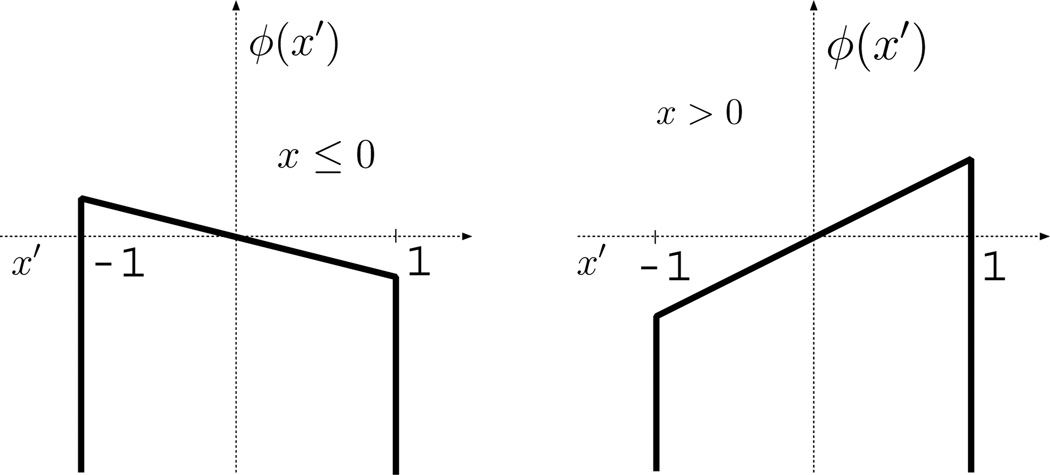

$$\delta_P^*(x) = \max_{x'} \left\{ x x' - \delta_P(x') \right\} \tag{61}$$

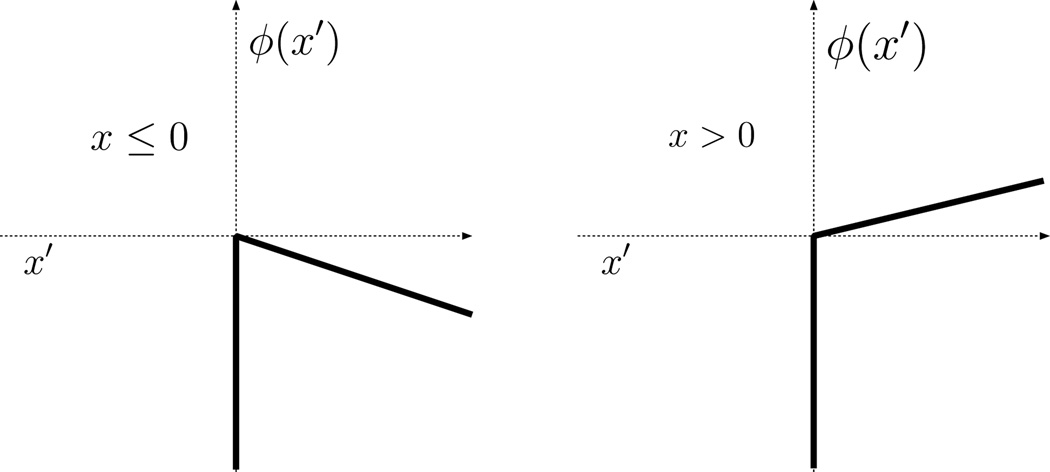

To perform this maximization, we analyze the cases x ≤ 0 and x > 0 separately. As a visual aid, we plot the objective for these two cases in Fig. 4. From this figure it is clear that when x ≤ 0, the objective’s maximum is attained at x′ = 0 and this maximum value is 0 (note that this is true even for x = 0). When x > 0, the objective can increase without bound as x′ tends to ∞, resulting in a maximum value of ∞. Putting these two cases together yields:

$$\delta_P^*(x) = \delta_P(-x).$$

Fig. 4.

Illustration of the objective function, labeled ϕ(x′), in the maximization described by Eq. (61). Shown are the two cases discussed in the text.

Generalizing this argument to multi-dimensional x yields Eq. (29).

Next we consider δBox(1)(x), which in one dimension is the same as δBall(1)(x). This function is zero only for −1 ≤ x ≤ 1. Its conjugate is computed from:

$$\delta_{\mathrm{Box}(1)}^*(x) = \max_{x'} \left\{ x x' - \delta_{\mathrm{Box}(1)}(x') \right\} \tag{62}$$

Again, we have two cases, x ≤ 0 and x > 0, illustrated in Fig. 5. In the former case the maximum value of the objective is attained at x′ = −1, and this maximum value is −x. In the latter case the maximum value is x, and it is attained at x′ = 1. Hence, we have:

$$\delta_{\mathrm{Box}(1)}^*(x) = |x|.$$

Fig. 5.

Illustration of the objective function, labeled ϕ(x′), in the maximization described by Eq. (62). Shown are the two cases discussed in the text.

For multi-dimensional x, δBox(1)(x) ≠ δBall(1)(x), and this is also reflected in the conjugates:

$$\delta_{\mathrm{Box}(1)}^*(x) = \|x\|_1, \qquad \delta_{\mathrm{Ball}(1)}^*(x) = \|x\|_2.$$

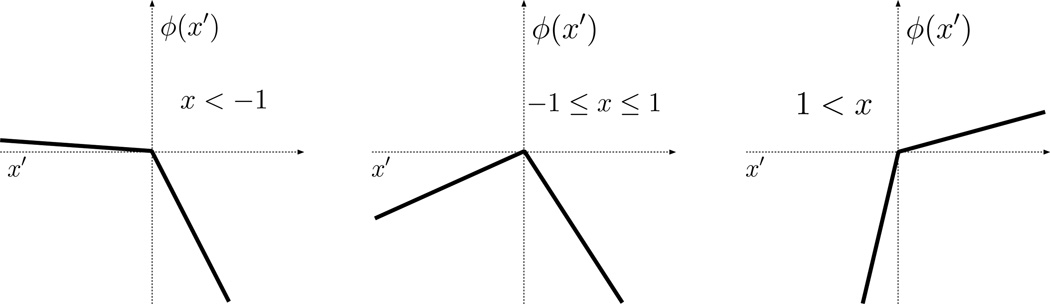

It is also interesting to verify that the conjugate of the ℓ1-norm is indeed δBox(1)(x) by showing, again in one dimension, that |x|* = δBox(1)(x). Illustrating this example helps in understanding the convex conjugate of multi-dimensional ℓ1-based semi-norms. The relevant conjugate is computed from:

$$|x|^* = \max_{x'} \left\{ x x' - |x'| \right\} \tag{63}$$

Here, we need to analyze three cases: x < −1, −1 ≤ x ≤ 1, and x > 1. The corresponding sketch is in Fig. 6. The −|x′| term in the objective makes an upside-down wedge, and the x′x term serves to tip this wedge. In the second case, the wedge is tipped but still opens downward, so that the objective is maximized at x′ = 0, attaining there the value 0. In the first and third cases, the wedge is tipped so much that part of it points upward and the objective can increase without bound, attaining the value ∞. Putting these cases together does indeed yield:

$$|x|^* = \delta_{\mathrm{Box}(1)}(x).$$

Fig. 6.

Illustration of the objective function, labeled ϕ(x′), in the maximization described by Eq. (63). Shown are the three cases discussed in the text.
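The one-dimensional conjugates of this appendix can be checked numerically by maximizing x x′ − f(x′) over a finite grid of x′ values. In the sketch below (function name hypothetical), infinite values of the indicator functions are represented by `np.inf`, and a result equal to the grid radius R = 10 signals that the true conjugate is +∞.

```python
import numpy as np


def conjugate_on_grid(f_vals, grid, x):
    """Approximate f*(x) = max over x' of {x x' - f(x')} on a finite grid.

    f_vals holds f(x') on the grid, with np.inf outside the domain of f.
    A result equal to the grid radius signals that f*(x) is +inf.
    """
    return np.max(x * grid - f_vals)


grid = np.arange(-1000, 1001) / 100.0          # x' in [-10, 10], includes 0 and +-1
delta_P = np.where(grid >= 0.0, 0.0, np.inf)   # indicator of x' >= 0
delta_box = np.where(np.abs(grid) <= 1.0, 0.0, np.inf)
abs_vals = np.abs(grid)

print(conjugate_on_grid(delta_P, grid, -1.0))    # zero: conjugate vanishes for x <= 0
print(conjugate_on_grid(delta_P, grid, 1.0))     # 10.0, the grid radius: +inf
print(conjugate_on_grid(delta_box, grid, -0.3))  # 0.3 = |x|
print(conjugate_on_grid(abs_vals, grid, 0.5))    # 0.0: x lies in Box(1)
print(conjugate_on_grid(abs_vals, grid, 2.0))    # 10.0, the grid radius: +inf
```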

APPENDIX C

Computation of important proximal mappings

This appendix fills in important steps in computing some of the proximal mappings in the text, where it is necessary to use geometrical reasoning in addition to setting the gradient of the objective to zero.

The conjugate of the TV semi-norm in Eq. (40) leads to the following proximal mapping computation:

$$\operatorname{prox}_\sigma[F^*](z) = \arg\min_{z'} \left\{ \delta_{\mathrm{Box}(\lambda)}(|z'|) + \frac{\|z - z'\|_2^2}{2\sigma} \right\},$$

where z, z′ ∈ V, and the absolute value, |·|, of a spatial-vector image in V yields an image, in I, of the spatial-vector magnitude. The quadratic term is minimized when z′ = z, but the indicator function excludes this minimizer when |z| ∉ Box(λ). To solve this problem, we write the quadratic as a sum over pixels:

$$\|z - z'\|_2^2 = \sum_i |z_i - z'_i|^2,$$

where i indexes the image pixels and each zi and z′i is a spatial-vector. The indicator function places the upper bound λ on the magnitude of each spatial-vector z′i. The proximal mapping is built pixel-by-pixel considering two cases: if |zi| ≤ λ, then proxσ[F*](z)i = zi; if |zi| > λ, then z′i is chosen to be closest to zi while respecting |z′i| ≤ λ, which leads to a scaling of the magnitude of zi and proxσ[F*](z)i = λzi/|zi|. Note that the constant σ does not enter into this calculation. Putting the cases and components together yields the second part of the proximal mapping in Eq. (45).
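The pixel-wise rule above is sketched below in NumPy, with the two components of the spatial-vector image held in separate arrays (an assumed storage layout; the function name is hypothetical).

```python
import numpy as np


def prox_tv_conjugate(z_s, z_t, lam):
    """Pixel-wise proximal mapping for the conjugate of lam * TV.

    (z_s, z_t) are the two components of a spatial-vector image. Vectors
    with magnitude above lam are rescaled to magnitude lam; the others are
    left unchanged. sigma does not appear, as noted in the text.
    """
    mag = np.sqrt(z_s ** 2 + z_t ** 2)
    scale = lam / np.maximum(lam, mag)
    return z_s * scale, z_t * scale


z_s = np.array([[3.0, 0.1]])
z_t = np.array([[4.0, 0.1]])
out_s, out_t = prox_tv_conjugate(z_s, z_t, lam=1.0)
print(out_s, out_t)  # first pixel becomes (0.6, 0.8); second is unchanged
```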

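For the KL-TV problem the proximal mapping for the data term is computed from Eq. (48):

$$\operatorname{prox}_\sigma[F_1^*](p) = \arg\min_{p'} \left\{ -\sum_i \left[ g \ln \operatorname{pos}(1_D - p') \right]_i + \delta_P(1_D - p') + \frac{\|p - p'\|_2^2}{2\sigma} \right\}.$$

We note that the objective is a smooth function of p′ ∈ D wherever 1D − p′ is positive. Accordingly, we differentiate the objective with respect to p′, ignoring the pos(·) and indicator functions and keeping in mind that we have to check that 1D − p′ is non-negative at the minimizer. Performing the differentiation and setting the result to zero yields, component-wise,

$$\frac{g}{1_D - p'} + \frac{p' - p}{\sigma} = 0 \quad\Longrightarrow\quad p'^2 - (1_D + p)\,p' + p - \sigma g = 0,$$

which is a quadratic equation in p′ with coefficients a = 1, b = −(1D + p), and c = p − σg. Substituting these coefficients into the quadratic formula yields:

$$p' = \frac{1}{2}\left( 1_D + p \pm \sqrt{(p - 1_D)^2 + 4\sigma g} \right).$$

We have two possible solutions, but applying the restriction 1D − p′ ≥ 0 selects the negative root. To see this, we evaluate 1D − p′ at both roots:

$$1_D - p' = \frac{1}{2}\left( 1_D - p \mp \sqrt{(p - 1_D)^2 + 4\sigma g} \right).$$

Using the fact that the data are non-negative, we have

$$\sqrt{(p - 1_D)^2 + 4\sigma g} \ge |1_D - p|;$$

the positive root therefore can lead to negative values of 1D − p′, while the negative root respects 1D − p′ ≥ 0 and yields Eq. (50).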

For the final computation of a proximal mapping, we look at the data term of the constrained, TV-minimization problem. From Eq. (58), the proximal mapping of interest is evaluated by:

$$\operatorname{prox}_\sigma[F_1^*](p) = \arg\min_{p'} \left\{ \frac{\|p - p'\|_2^2}{2\sigma} + \varepsilon \|p'\|_2 + \langle p', g \rangle_D \right\}.$$

Note that the first term in the objective is spherically symmetric about p and increases with distance from p, and the second term is spherically symmetric about 0D and increases with distance from 0D. If just these two terms were present, the minimum would lie on the line segment between 0D and p. The third term, however, complicates the situation a little. This term is linear in p′, and it can be combined with the first term by completing the square. Performing this manipulation and ignoring constant terms (independent of p′) yields:

$$\operatorname{prox}_\sigma[F_1^*](p) = \arg\min_{p'} \left\{ \frac{\|(p - \sigma g) - p'\|_2^2}{2\sigma} + \varepsilon \|p'\|_2 \right\}.$$

By the geometric considerations discussed above, the minimizer lies on the line segment between 0D and p − σg. Analyzing this one-dimensional minimization leads to Eq. (60).

APPENDIX D

The finite differencing form of the image gradient and divergence

In this appendix we write down the explicit forms of the finite differencing approximations of ∇ and −div in two dimensions used in this article. We use x ∈ I to represent an M × M image and xi,j to refer to the (i, j)th pixel of x. To specify the linear transform ∇, we introduce the differencing images Δsx ∈ I and Δtx ∈ I:

Using these definitions, ∇ can be written as:

With this form of ∇, its transpose −div becomes:

where the elements referred to outside the image border are set to zero: Δsx0,j = Δsxi,0 = Δtx0,j = Δtxi,0 = 0. The particular discrete form of ∇ is not that important, but it is critical that the discrete forms of −div and ∇ are transposes of each other.
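Whatever discretization is chosen, the transpose relation can be verified numerically. The sketch below assumes forward differences that vanish at the far image border, one common choice that is not necessarily the exact form used in this article, written with 0-based NumPy indexing; the function names are hypothetical. The usage lines check ⟨∇x, y⟩ = ⟨x, −div y⟩ for random x and y.

```python
import numpy as np


def grad(x):
    """Forward differences (Delta_s x, Delta_t x); zero at the far border."""
    ds = np.zeros_like(x)
    dt = np.zeros_like(x)
    ds[:-1, :] = x[1:, :] - x[:-1, :]
    dt[:, :-1] = x[:, 1:] - x[:, :-1]
    return ds, dt


def neg_div(ys, yt):
    """Transpose of grad, i.e. -div, applied to a spatial-vector image (ys, yt)."""
    out = np.zeros_like(ys)
    out[:-1, :] -= ys[:-1, :]   # -ys[i, j] for i < M - 1
    out[1:, :] += ys[:-1, :]    # +ys[i - 1, j] for i >= 1
    out[:, :-1] -= yt[:, :-1]   # -yt[i, j] for j < M - 1
    out[:, 1:] += yt[:, :-1]    # +yt[i, j - 1] for j >= 1
    return out


# Adjointness check with random images
rng = np.random.default_rng(0)
x = rng.standard_normal((5, 5))
ys, yt = rng.standard_normal((5, 5)), rng.standard_normal((5, 5))
ds, dt = grad(x)
lhs = np.sum(ds * ys) + np.sum(dt * yt)
rhs = np.sum(x * neg_div(ys, yt))
print(np.isclose(lhs, rhs))  # True
```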

APPENDIX E

Preconditioned Chambolle-Pock algorithm demonstrated on the KL-TV optimization problem

Chambolle and Pock followed their article, Ref. [20], with a pre-conditioned version of their algorithm that suits our purpose of optimization-problem prototyping while potentially improving algorithm efficiency substantially for the ℓ2²-TV and KL-TV optimization problems with small λ. The new algorithm replaces the constants σ and τ with vector quantities that are computed directly from the system matrix K, which maps a vector in space X to a vector in space Y. One form of the suggested diagonal pre-conditioners uses the following weights:

$$\Sigma = \frac{1_Y}{|K|\,1_X} \tag{64}$$

$$T = \frac{1_X}{|K^T|\,1_Y} \tag{65}$$

where Σ ∈ Y, T ∈ X, and |K| is the matrix formed by taking the absolute value of each element of K. In order to generate the CP algorithm instance incorporating pre-conditioning, the proximal mapping needs to be modified:

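$$\operatorname{prox}_\Sigma[F^*](y) = \arg\min_{y'} \left\{ F^*(y') + \frac{1}{2} \sum_j \frac{(y_j - y'_j)^2}{\Sigma_j} \right\} \tag{66}$$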

The second term in this minimization is still quadratic but no longer spherically symmetric. The difficulty in deriving the pre-conditioned CP algorithm instances is similar to that of the original algorithm. On the one hand, there is no need to find ‖K‖2, but on the other hand, deriving the proximal mapping may become more involved. For the ℓ2²-TV and KL-TV optimization problems, the proximal mapping is simple to derive, and it turns out that the mappings can be obtained by replacing σ by Σ and τ by T.
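A minimal sketch of the weights in Eqs. (64) and (65), with K stored as a dense NumPy matrix, is given below (function name hypothetical). Rows or columns of |K| that are entirely zero receive a step of zero, an assumption made here to avoid division by zero. With these weights, Pock and Chambolle show that ‖Σ^{1/2} K T^{1/2}‖2 ≤ 1, which plays the role of the bound on στ‖K‖2² in the original algorithm; the usage checks this bound on a random matrix.

```python
import numpy as np


def diagonal_preconditioners(K):
    """Sigma = 1_Y / (|K| 1_X) and T = 1_X / (|K^T| 1_Y), as in Eqs. (64)-(65).

    Entries whose row or column of |K| is all zero get a step of 0 here,
    an assumption that avoids division by zero.
    """
    absK = np.abs(K)
    row_sums = absK @ np.ones(K.shape[1])
    col_sums = absK.T @ np.ones(K.shape[0])
    Sigma = np.zeros_like(row_sums)
    T = np.zeros_like(col_sums)
    np.divide(1.0, row_sums, out=Sigma, where=row_sums > 0)
    np.divide(1.0, col_sums, out=T, where=col_sums > 0)
    return Sigma, T


rng = np.random.default_rng(0)
K = rng.standard_normal((30, 20))
Sigma, T = diagonal_preconditioners(K)
scaled = np.sqrt(Sigma)[:, None] * K * np.sqrt(T)[None, :]
print(np.linalg.norm(scaled, 2) <= 1.0 + 1e-12)  # True
```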

The gain in efficiency for small λ comes from being able to absorb this parameter into the TV term and allowing Σ to account for the mismatch between the TV and data-agreement terms. We modify the definitions of the ∇ and −div matrices from Appendix D:

$$\nabla_\lambda = \lambda \nabla$$

and

$$-\operatorname{div}_\lambda = -\lambda \operatorname{div},$$

where again the elements referred to outside the image border are set to zero: Δsx0,j = Δsxi,0 = Δtx0,j = Δtxi,0 = 0.

For a complete example, we write the pre-conditioned CP algorithm instance for KL-TV in Listing 9. To illustrate the potential gain in efficiency, we show the conditional primal-dual gap as a function of iteration number for the KL-TV problem with λ = 2 × 10−5 in Fig. 7. While we have presented the pre-conditioned CP algorithm as a patch for the small-λ case, it really provides an alternative prototyping algorithm and can be used instead of the original CP algorithm.

Algorithm 9.

Pseudocode for N steps of the KL-TV pre-conditioned CP algorithm instance.

| 1: | Σ1 ← 1D/(|A|1I); Σ2 ← 1V/(|∇λ|1I); T ← 1I/(|AT |1D + |divλ|1V) |

| 2: | θ ← 1; n ← 0 |

| 3: | initialize u0, p0, and q0 to zero values |

| 4: | ū0 ← u0 |

| 5: | repeat |

| 6: | pn+1 ← ½(1D + pn + Σ1Aūn − √((pn + Σ1Aūn − 1D)² + 4Σ1g)) |

| 7: | qn+1 ← (qn + Σ2∇λūn)/max(1I, |qn + Σ2∇λūn|) |

| 8: | un+1 ← un − TAT pn+1 + Tdivλqn+1 |

| 9: | ūn+1 ← un+1 + θ(un+1 − un) |

| 10: | n ← n + 1 |

| 11: | until n ≥ N |
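For completeness, here is a NumPy sketch of Listing 9. It is an illustration under stated assumptions, not the authors' code: A and ∇ are small dense matrices, ∇ uses forward differences that vanish at the far image border, ∇λ is taken to be λ∇ (absorbing λ into the TV term as described above), all-zero rows of |∇λ| receive a step of zero, and the names `grad_matrix` and `kl_tv_cp_precond` are hypothetical.

```python
import numpy as np


def grad_matrix(M):
    """Dense forward-difference gradient (Delta_s block, then Delta_t block)."""
    n = M * M
    D = np.zeros((2 * n, n))
    for i in range(M):
        for j in range(M):
            k = i * M + j
            if i < M - 1:
                D[k, k], D[k, k + M] = -1.0, 1.0
            if j < M - 1:
                D[n + k, k], D[n + k, k + 1] = -1.0, 1.0
    return D


def inv_or_zero(v):
    """Component-wise 1/v, with 0 wherever v is not positive."""
    out = np.zeros_like(v)
    np.divide(1.0, v, out=out, where=v > 0)
    return out


def kl_tv_cp_precond(A, g, lam, M, n_iter=500):
    """Sketch of Listing 9: pre-conditioned KL-TV CP with dense matrices."""
    n, m = M * M, A.shape[0]
    D_lam = lam * grad_matrix(M)                      # nabla_lambda; div_lambda = -D_lam.T
    S1 = inv_or_zero(np.abs(A) @ np.ones(n))          # Sigma_1, line 1
    S2 = inv_or_zero(np.abs(D_lam) @ np.ones(n))      # Sigma_2, line 1
    T = inv_or_zero(np.abs(A.T) @ np.ones(m) + np.abs(D_lam.T) @ np.ones(2 * n))
    theta = 1.0
    u, p, q = np.zeros(n), np.zeros(m), np.zeros(2 * n)
    u_bar = u.copy()
    for _ in range(n_iter):
        # Line 6: Eq. (50) with sigma replaced component-wise by Sigma_1
        w = p + S1 * (A @ u_bar)
        p = 0.5 * (1.0 + w - np.sqrt((w - 1.0) ** 2 + 4.0 * S1 * g))
        # Line 7: pixel-wise rescaling so that |q| <= 1
        z = q + S2 * (D_lam @ u_bar)
        scale = 1.0 / np.maximum(1.0, np.sqrt(z[:n] ** 2 + z[n:] ** 2))
        q = z * np.concatenate([scale, scale])
        # Line 8: primal step; T * div_lambda(q) equals -T * (D_lam.T @ q)
        u_new = u - T * (A.T @ p) - T * (D_lam.T @ q)
        u_bar = u_new + theta * (u_new - u)           # line 9
        u = u_new
    return u, p, q


# Usage on a toy problem with small lambda
rng = np.random.default_rng(1)
M = 4
A = rng.uniform(0.1, 1.0, size=(24, M * M))
g = A @ rng.uniform(1.0, 2.0, size=M * M)
u, p, q = kl_tv_cp_precond(A, g, lam=2e-5, M=M)
```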

Fig. 7.

(Left) Convergence of the partial primal-dual gap for the CP algorithm instance solving Eq. (46) for λ = 2 × 10−5 for the original and pre-conditioned CP algorithm. (Right) Plot indicating agreement with condition 1 for the KL-TV optimization problem. See Fig. 3 for explanation.

Contributor Information

Emil Y. Sidky, Email: sidky@uchicago.edu, Department of Radiology, University of Chicago, 5841 S. Maryland Ave., Chicago, IL 60637, USA.

Jakob H. Jørgensen, Email: jakj@imm.dtu.dk, Department of Informatics and Mathematical Modeling, Technical University of Denmark, Richard Petersens Plads, Building 321, 2800 Kgs. Lyngby, Denmark.

Xiaochuan Pan, Email: xpan@uchicago.edu, Department of Radiology, University of Chicago, 5841 S. Maryland Ave., Chicago, IL 60637, USA.

References

- 1.McCollough CH, Primak AN, Braun N, Kofler J, Yu L, Christner J. Strategies for reducing radiation dose in CT. Radiol. Clin. N. Am. 2009;vol. 47:27–40. doi: 10.1016/j.rcl.2008.10.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Pan X, Sidky EY, Vannier M. Why do commercial CT scanners still employ traditional, filtered back-projection for image reconstruction. Inv. Prob. 2009;vol. 25:123. doi: 10.1088/0266-5611/25/12/123009. 009–(1–36) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Ziegler A, Nielsen T, Grass M. Iterative reconstruction of a region of interest for transmission tomography. Med. Phys. 2008;vol. 35:1317–1327. doi: 10.1118/1.2870219. [DOI] [PubMed] [Google Scholar]

- 4.Li M, Yang H, Kudo H. An accurate iterative reconstruction algorithm for sparse objects: Application to 3D blood vessel reconstruction from a limited number of projections. Phy. Med. Biol. 2002;vol. 47:2599–2609. doi: 10.1088/0031-9155/47/15/303. [DOI] [PubMed] [Google Scholar]

- 5.Sidky EY, Kao C-M, Pan X. Accurate image reconstruction from few-views and limited-angle data in divergent-beam CT. J. X-ray Sci. Tech. 2006;vol. 14:119–139. [Google Scholar]

- 6.Sidky EY, Pan X. Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys. Med. Biol. 2008;vol. 53:4777–4807. doi: 10.1088/0031-9155/53/17/021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Chen GH, Tang J, Leng S. Prior image constrained compressed sensing (PICCS): a method to accurately reconstruct dynamic CT images from highly undersampled projection data sets. Med. Phys. 2008;vol. 35:660–663. doi: 10.1118/1.2836423. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Sidky EY, Anastasio MA, Pan X. Image reconstruction exploiting object sparsity in boundary-enhanced x-ray phase-contrast tomography. Opt. Express. 2010;vol. 18:10 404–10 422. doi: 10.1364/OE.18.010404. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ritschl L, Bergner F, Fleischmann C, Kachelrieß M. Improved total variation-based CT image reconstruction applied to clinical data. Phys. Med. Biol. 2011;vol. 56:1545–1562. doi: 10.1088/0031-9155/56/6/003. [DOI] [PubMed] [Google Scholar]

- 10.Defrise M, Vanhove C, Liu X. An algorithm for total variation regularization in high-dimensional linear problems. Inv. Prob. 2011;vol. 27 065002. [Google Scholar]

- 11.Ramani S, Fessler J. A splitting-based iterative algorithm for accelerated statistical X-ray CT reconstruction. IEEE Trans. Med. Imag. 2011 doi: 10.1109/TMI.2011.2175233. available online at IEEE TMI - early access. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Jørgensen JH, Sidky EY, Pan X. Analysis of discrete-to-discrete imaging models for iterative tomographic image reconstruction and compressive sensing. 2011 arxiv preprint arxiv:1109.0629 ( http://arxiv.org/abs/1109.0629). [Google Scholar]

- 13.Nocedal J, Wright S. Numerical Optimization. 2nd ed. Springer; 2006. [Google Scholar]

- 14. Erdogan H, Fessler JA. Ordered subsets algorithms for transmission tomography. Phys. Med. Biol. 1999;vol. 44:2835–2852. doi: 10.1088/0031-9155/44/11/311.

- 15. Green P. Iteratively reweighted least squares for maximum likelihood estimation, and some robust and resistant alternatives. J. Royal Stat. Soc., Ser. B. 1984;vol. 46:149–192.

- 16. Yin W, Osher S, Goldfarb D, Darbon J. Bregman iterative algorithms for ℓ1-minimization with applications to compressed sensing. SIAM J. Imag. Sci. 2008;vol. 1:143–168.

- 17. Combettes PL, Pesquet JC. A proximal decomposition method for solving convex variational inverse problems. Inv. Prob. 2008;vol. 24:065014–065027.

- 18. Beck A, Teboulle M. Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems. IEEE Trans. Imag. Proc. 2009;vol. 18:2419–2434. doi: 10.1109/TIP.2009.2028250.

- 19. Becker SR, Candès EJ, Grant M. Templates for convex cone problems with applications to sparse signal recovery. 2010. arXiv preprint arXiv:1009.2065.

- 20. Chambolle A, Pock T. A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imag. Vis. 2011;vol. 40:1–26.

- 21. Jensen TL, Jørgensen JH, Hansen PC, Jensen SH. Implementation of an optimal first-order method for strongly convex total variation regularization. 2011. Accepted to BIT; available at http://arxiv.org/abs/1105.3723.

- 22. Choi K, Wang J, Zhu L, Suh T-S, Boyd S, Xing L. Compressed sensing based cone-beam computed tomography reconstruction with a first-order method. Med. Phys. 2010;vol. 37:5113–5125. doi: 10.1118/1.3481510.

- 23. Jørgensen JH, Hansen PC, Sidky EY, Reiser IS, Pan X. Toward optimal X-ray flux utilization in breast CT; Proceedings of the 11th International Meeting on Three-Dimensional Image Reconstruction in Radiology and Nuclear Medicine; 2011. arXiv preprint arXiv:1104.1588 (http://arxiv.org/abs/1104.1588).

- 24. Rockafellar RT. Convex analysis. Princeton Univ. Press; 1970.

- 25. Barrett HH, Myers KJ. Foundations of Image Science. Hoboken, NJ: John Wiley & Sons; 2004.

- 26. Siddon RL. Fast calculation of the exact radiological path for a three-dimensional CT array. Med. Phys. 1985;vol. 12:252–255. doi: 10.1118/1.595715.

- 27. De Man B, Basu S. Distance-driven projection and backprojection in three dimensions. Phys. Med. Biol. 2004;vol. 49:2463–2475. doi: 10.1088/0031-9155/49/11/024.

- 28. Xu F, Mueller K. Real-time 3D computed tomographic reconstruction using commodity graphics hardware. Phys. Med. Biol. 2007;vol. 52:3405–3419. doi: 10.1088/0031-9155/52/12/006.

- 29. Zeng GL, Gullberg GT. Unmatched projector/backprojector pairs in an iterative reconstruction algorithm. IEEE Trans. Med. Imag. 2000;vol. 19:548–555. doi: 10.1109/42.870265.

- 30. Bian J, Siewerdsen JH, Han X, Sidky EY, Prince JL, Pelizzari CA, Pan X. Evaluation of sparse-view reconstruction from flat-panel-detector cone-beam CT. Phys. Med. Biol. 2010;vol. 55:6575–6599. doi: 10.1088/0031-9155/55/22/001.

- 31. Han X, Bian J, Eaker DR, Kline TL, Sidky EY, Ritman EL, Pan X. Algorithm-enabled low-dose micro-CT imaging. IEEE Trans. Med. Imag. 2011;vol. 30:606–620. doi: 10.1109/TMI.2010.2089695.

- 32. Xia D, Xiao X, Bian J, Han X, Sidky EY, De Carlo F, Pan X. Image reconstruction from sparse data in synchrotron-radiation-based microtomography. Rev. Sci. Inst. 2011;vol. 82:043706–043709. doi: 10.1063/1.3572263.

- 33. Sidky EY, Duchin Y, Ullberg C, Pan X. X-ray computed tomography: advances in image formation: A constrained, total-variation minimization algorithm for low-intensity X-ray CT. Med. Phys. 2011;vol. 38:S117–S125. doi: 10.1118/1.3560887.

- 34. Elad M. Sparse and redundant representations: from theory to applications in signal and image processing. Springer Verlag; 2010.

- 35. Chan TF, Esedoğlu S. Aspects of total variation regularized L1 function approximation. SIAM J. Appl. Math. 2005;vol. 65:1817–1837.

- 36. Boyd SP, Vandenberghe L. Convex optimization. Cambridge University Press; 2004.

- 37. Candès EJ, Wakin MB. An introduction to compressive sampling. IEEE Sig. Proc. Mag. 2008;vol. 25:21–30.

- 38. Reiser I, Nishikawa RM. Task-based assessment of breast tomosynthesis: Effect of acquisition parameters and quantum noise. Med. Phys. 2010;vol. 37:1591–1600. doi: 10.1118/1.3357288.

- 39. Pock T, Chambolle A. Diagonal preconditioning for first order primal-dual algorithms in convex optimization; International Conference on Computer Vision (ICCV 2011); 2011. To appear; available at URL: http://gpu4vision.icg.tugraz.at.