Abstract

In this paper, we give a short introduction to machine learning and survey its applications in radiology. We focus on six categories of applications in radiology: medical image segmentation; registration; computer-aided detection and diagnosis; brain function or activity analysis and neurological disease diagnosis from fMR images; content-based image retrieval systems for CT or MRI images; and text analysis of radiology reports using natural language processing (NLP) and natural language understanding (NLU). This survey shows that machine learning plays a key role in many radiology applications. Machine learning identifies complex patterns automatically and helps radiologists make intelligent decisions on radiology data such as conventional radiographs, CT, MRI, and PET images and radiology reports. In many applications, the performance of machine learning-based automatic detection and diagnosis systems has been shown to be comparable to that of a well-trained and experienced radiologist. In the long run, technology development in machine learning and in radiology will benefit each other. Key contributions and common characteristics of machine learning techniques in radiology are discussed. We also discuss the problem of translating machine learning applications to the radiology clinical setting, including advantages and potential barriers.

Keywords: survey, radiology, machine learning, image registration, image segmentation, computer aided detection and diagnosis, functional MRI, content-based image retrieval, computed tomography, magnetic resonance imaging

1. Introduction

Radiologic imaging is of increasing importance in patient care. Both diagnostic and therapeutic indications for radiologic imaging are expanding rapidly (Bhargavan et al., 2009). This expansion is driven by the need for faster, more accurate, more cost-effective, and less invasive treatment. Technologic advancements in radiologic imaging equipment have also fueled the utilization of imaging. These advancements include the capability to acquire images of ever higher resolution, enabling visualization of smaller anatomic structures and abnormalities. The higher resolution comes at the cost of an ever-increasing average number of images per patient. Radiologists must interpret these images, so as the number of images increases, their workload increases as well. The growing number and complexity of the images threaten to overwhelm radiologists' capacity to interpret them. In many radiology practices, automated and intelligent image analysis and understanding, such as image segmentation, registration, and computer-aided detection and diagnosis, are becoming an essential part of the workflow. In cancer prognosis and treatment, automated and intelligent algorithms also have a large market and broad acceptance, in areas such as radiation therapy planning and the automatic identification of imaging biomarkers of certain diseases from radiological images. Machine learning algorithms underpin the software that makes computer-aided diagnosis, prognosis, and treatment possible.

Radiology is a branch of medical science that uses imaging technology and radiation to diagnose and treat disease. It has benefited greatly from advances in physics, electronic engineering, and computer science. Based on different detection and imaging principles, various modalities have been developed over the past decades in diagnostic radiology. Today, the mainstream modalities widely used in hospitals and medical centers include radiography, fluoroscopy, computed tomography (CT), ultrasound, magnetic resonance imaging (MRI), and positron emission tomography (PET).

In the daily practice of radiology, medical images from different modalities are read and interpreted by radiologists, who usually must analyze and evaluate these images comprehensively in a short time. With the advances in modern medical technologies, however, the amount of imaging data is rapidly increasing. For example, CT examinations are now performed with thinner slices than in the past, so radiologists' reading and interpretation time grows with the number of CT slices.

Machine learning provides an effective way to automate the analysis and diagnosis of medical images and can potentially reduce the burden on radiologists in the practice of radiology. The applications of machine learning in radiology include medical image segmentation (e.g., brain, spine, lung, liver, kidney, colon); medical image registration (e.g., organ image registration from different modalities or time series); computer-aided detection and diagnosis systems for CT or MRI images (e.g., mammography, CT colonography, and CT lung nodule CAD); brain function or activity analysis and neurological disease diagnosis from fMR images; content-based image retrieval systems for CT or MRI images; and text analysis of radiology reports using natural language processing (NLP) and natural language understanding (NLU).

Machine learning is the study of computer algorithms which can learn complex relationships or patterns from empirical data and make accurate decisions (Bishop, 2006; Duda et al., 2000; Mitchell, 1997). It is an interdisciplinary field that has close relationships with artificial intelligence, pattern recognition, data mining, statistics, probability theory, optimization, statistical physics, and theoretical computer science. Applications of machine learning include natural language processing, medical diagnosis, bioinformatics, video surveillance, and financial data analysis.

Machine learning algorithms can be organized into different categories based on different principles. For example, depending on the utilization of labels of training samples, they can be categorized into supervised learning, semi-supervised learning, and unsupervised learning algorithms.

In supervised learning, each sample contains two parts: the input observations, or features, and the output observations, or labels (Alpaydin, 2004; Hastie et al., 2009). Usually the input observations are causes and the output observations are effects. The purpose of supervised learning is to deduce a functional relationship from training data that generalizes well to testing data. The relationship takes the form of a set of equations and numerical coefficients or weights. Classification and regression are typical supervised learning problems; reinforcement learning, which learns from reward signals rather than explicit output labels, is often treated as a separate paradigm.

In unsupervised learning, we only have one set of observations and there is no label information for each sample (Hastie et al., 2009). Usually these observations or features are caused by a set of unobserved or latent variables. The main purpose of unsupervised learning is to discover relationships between samples or reveal the latent variables behind the observations. Examples of unsupervised learning include clustering, density estimation, and blind source separation.

Semi-supervised learning falls between supervised and unsupervised learning (Chapelle et al., 2006; Zhu, 2007). It utilizes both labeled data (usually a few) and unlabeled data (usually many) during the training process. Semi-supervised learning algorithms were developed mainly because the labeling of data is very expensive or impossible in some applications. Examples of semi-supervised learning include semi-supervised classification and information recommendation systems (Christakou et al., 2005).

Machine learning has many applications in real life. It is routinely used in banking (for detecting fraudulent transactions (Dorronsoro et al., 1997)), in finance (to predict stock prices (Huang et al., 2005a)), in marketing (to reveal patterns of consumer spending (Bose and Mahapatra, 2001)), and on the Internet (as part of search engines (Basili, 2003)). In biomedicine, MYCIN was developed in the early 1970s at Stanford University; it is an expert system with about 600 rules designed to identify bacteria and recommend antibiotics (Swartout, 1985). Machine learning has also been applied to drug design (Burbidge et al., 2001). You may not be aware of machine learning, but its applications are pervasive in our daily lives.

This review is structured as follows. In Sec. 2 we give a short introduction to machine learning and related algorithms. In Sec. 3 we describe six representative applications of machine learning in radiology. In Sec. 4 we discuss key contributions and common characteristics of machine learning techniques in radiology. In Sec. 5 we cover issues in translating machine learning techniques to clinical radiology practice. In Sec. 6 we review the current research status and discuss future directions.

2. Overview of machine learning

Because machine learning is developing rapidly, it is hard to cover every aspect of it in one article. In this section we therefore give a concise introduction to its most important topics (Bishop, 2006): linear models, learning with kernels, probabilistic models, cluster analysis, and dimensionality reduction. We hope this gives readers a general idea of the content of machine learning research, what it is capable of, and its implications for other research areas and real applications. The topics introduced and their inter-relationships are shown in Fig. 1.

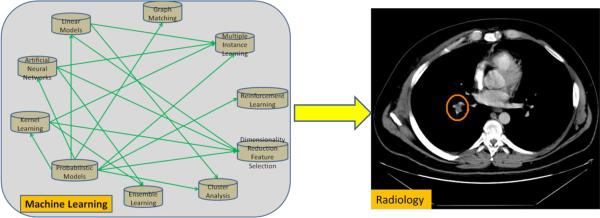

Fig. 1.

Connections between different areas of machine learning.

Among these topics, kernel learning and probabilistic models play key roles in machine learning-based radiology applications. Kernel learning often provides the best-performing classifiers for computer-aided detection in radiology (El-Naqa et al., 2002; Malley et al., 2003); probabilistic models provide a theoretical framework for medical image analysis, such as image reconstruction (Levitan and Herman, 1987), segmentation (Zhang et al., 2001), and registration (Ashburner et al., 1997; Maintz and Viergever, 1998). Besides kernel learning, linear models, artificial neural networks, and ensemble learning provide other options for handling classification and regression problems in radiology (Jerebko et al., 2003a; Yoshida and Nappi, 2001). Dimensionality reduction and feature selection are essential parts of computer-aided detection (CAD) systems in radiology (Wang et al., 2008a). Multiple instance learning addresses the common scenario in radiology CAD where a patient may have few positive instances of disease (e.g., lesions) and many false positives (Liang and Bi, 2007). Reinforcement learning is dedicated to accumulating domain experience in sequential learning (Sahba et al., 2006). Cluster analysis can be applied to medical images to identify similar lesions or meaningful findings (Chuang et al., 1999). Graph matching is employed to handle medical image registration problems (Wang et al., 2010a).

2.1 Linear models for classification and regression

Linear models assume a linear relationship between the input and the output of the model. They are perhaps the simplest methods for classification and regression and have been widely used in computer-aided classification. For example, Chan et al. employed linear discriminant analysis (LDA) in texture feature space to classify mammographic masses and normal tissue (Chan et al., 1995b). In their work on accurate, noninvasive diagnosis of human brain tumors by proton magnetic resonance spectroscopy, Preul et al. used LDA for classification in a "leave-one-out" test paradigm (Preul et al., 1996).

Given an input vector $x \in \mathbb{R}^d$ that describes the features of an object we want to classify, the decision function of a linear model is usually defined as $f(x) = w^T x + w_0$, where $w$ is the weight vector and the constant $w_0$ is called the threshold. Learning the optimal weight vector $w$ and threshold $w_0$ is the key problem in linear models. Once they are learned from training data, they can be applied to test cases to predict their labels. For two-class classification problems, Fisher proposed the following criterion to locate the optimal parameters (Fisher, 1936):

$$J(w) = \frac{w^T S_B w}{w^T S_W w},$$

where $S_B = (m_1 - m_2)(m_1 - m_2)^T$ is called the "between-class" scatter matrix ($m_i$ is the mean of the samples from class $i$, $i \in \{1, 2\}$), and $S_W = S_1 + S_2$ is called the "within-class" scatter matrix, with $S_i = \sum_{x \in D_i} (x - m_i)(x - m_i)^T$, where $D_i$ is the collection of samples from class $i$. This method is called linear discriminant analysis (LDA). The basic idea of LDA is to find an optimal projection $w$ that maximizes the distances between samples from different classes while minimizing the distances between samples from the same class. An illustration of LDA is shown in Fig. 2. Once the 2-D data are projected onto a one-dimensional line, the choice of threshold along the line affects the classification error, as depicted by the 1-D distributions in Fig. 2. For multi-class problems, the scatter matrices can be extended to the following form (Loog et al., 2001):

$$S_B = \sum_{i=1}^{K} p_i (m_i - m)(m_i - m)^T, \qquad S_W = \sum_{i=1}^{K} p_i S_i,$$

where $K$ is the number of classes, $m_i$ is the mean vector of class $i$, $S_i$ here denotes the covariance matrix of class $i$, $p_i$ is its prior probability, and $m = \sum_{i=1}^{K} p_i m_i$ is the overall mean.
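The two-class case can be made concrete with a minimal NumPy sketch, assuming the samples of each class are stored as the rows of an array. It uses the standard closed-form maximizer of Fisher's criterion, $w \propto S_W^{-1}(m_1 - m_2)$, and places the threshold $w_0$ halfway between the projected class means. The midpoint rule, the function names, and the synthetic data are illustrative assumptions, not details from the cited works.

```python
import numpy as np

def fisher_lda(X1, X2):
    """Two-class Fisher LDA.

    X1, X2: arrays of shape (n_i, d) with the samples of class 1 and class 2.
    Returns (w, w0) such that f(x) = w^T x + w0 > 0 predicts class 1.
    """
    m1, m2 = X1.mean(axis=0), X2.mean(axis=0)
    # Within-class scatter S_W = S_1 + S_2
    S_W = (X1 - m1).T @ (X1 - m1) + (X2 - m2).T @ (X2 - m2)
    # The maximizer of Fisher's criterion is proportional to S_W^{-1} (m1 - m2)
    w = np.linalg.solve(S_W, m1 - m2)
    # Threshold halfway between the projected class means (one simple choice;
    # moving it trades one type of classification error against the other)
    w0 = -0.5 * w @ (m1 + m2)
    return w, w0

def predict(X, w, w0):
    """Return label 1 where w^T x + w0 > 0, otherwise label 2."""
    return np.where(X @ w + w0 > 0, 1, 2)

# Usage on two synthetic Gaussian clouds
rng = np.random.default_rng(0)
X1 = rng.normal([0.0, 0.0], 0.5, size=(50, 2))
X2 = rng.normal([3.0, 3.0], 0.5, size=(50, 2))
w, w0 = fisher_lda(X1, X2)
```

Solving the linear system with `np.linalg.solve` avoids forming $S_W^{-1}$ explicitly, which is numerically preferable when $S_W$ is poorly conditioned.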

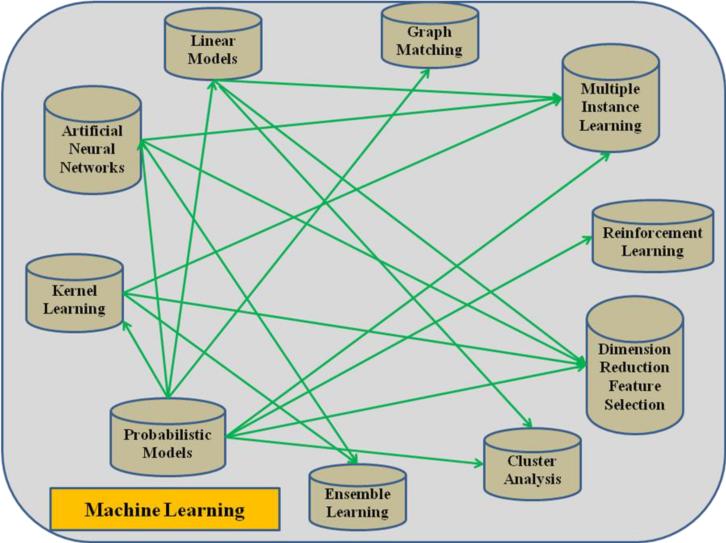

Fig. 2.

Best projection direction (purple arrow) found by LDA. Two different classes of data with "Gaussian-like" distributions are shown with different markers and ellipses. 1-D distributions of the two classes after projection are also shown along the line perpendicular to the projection direction.

Closely related to linear discriminant analysis, quadratic discriminant analysis (QDA) models each class with its own covariance matrix, so the decision boundary between two classes is a quadratic surface rather than a hyperplane (Hastie et al., 2009). This gives QDA more discriminative power than the linear separating surface learned by LDA.
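A minimal sketch of QDA under the usual Gaussian class-conditional assumption: each class gets its own prior, mean, and covariance, and a sample is assigned to the class with the largest quadratic discriminant score. The function names and the toy data (two classes with the same mean but different spreads, which no linear boundary can separate) are illustrative choices.

```python
import numpy as np

def fit_qda(X, y):
    """Estimate prior, mean, and covariance for every class label in y."""
    params = {}
    for c in np.unique(y):
        Xc = X[y == c]
        params[c] = (len(Xc) / len(X), Xc.mean(axis=0), np.cov(Xc, rowvar=False))
    return params

def predict_qda(X, params):
    """Pick argmax_c of log p_c - 0.5 log|S_c| - 0.5 (x - m_c)^T S_c^{-1} (x - m_c)."""
    classes = sorted(params)
    scores = []
    for c in classes:
        prior, mean, cov = params[c]
        diff = X - mean
        # Row-wise Mahalanobis distance (x - m)^T S^{-1} (x - m)
        maha = np.einsum("ij,ij->i", diff @ np.linalg.inv(cov), diff)
        _, logdet = np.linalg.slogdet(cov)
        scores.append(np.log(prior) - 0.5 * logdet - 0.5 * maha)
    return np.array(classes)[np.argmax(np.stack(scores, axis=1), axis=1)]

# Same mean, different spread: the boundary QDA learns is roughly a circle
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 0.3, size=(200, 2)), rng.normal(0, 3.0, size=(200, 2))])
y = np.repeat([0, 1], 200)
params = fit_qda(X, y)
```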

2.2 Artificial neural networks

Artificial neural networks (ANNs) are techniques inspired by the brain and the way it learns and processes information. ANNs are frequently used to solve classification and regression problems in real-world applications. Neural networks are composed of nodes and interconnections. Nodes usually have limited computational power. They simulate neurons by behaving like a switch, just as a neuron is activated only when sufficient neurotransmitter has accumulated. The density and complexity of the interconnections are the real source of a neural network's computational power.

Neural networks can be classified by their structures. In 1957 Rosenblatt proposed the first concrete neural network model, the perceptron (Rosenblatt, 1958). A perceptron has only one layer; in essence it is a linear classifier. In the 1960s, Bryson and Ho described multilayer neural networks and introduced the fundamental backpropagation algorithm for training them (Bryson and Ho, 1969). In theory, a three-layer neural network with enough hidden units can approximate any continuous function to arbitrary accuracy. In 1982, the Hopfield network was proposed; it has only one layer, and all neurons are fully connected with each other (Hopfield, 1982). Boltzmann machines can be seen as the stochastic, generative counterpart of Hopfield networks (Ackley et al., 1985); they are able to solve difficult combinatorial problems and learn internal representations. The self-organizing map (SOM) was introduced around the same time (Kohonen, 1982). It is distinctive in that it performs unsupervised learning. Because the final network topology learned by an SOM expresses certain characteristics of the input signal, SOMs are widely used for dimensionality reduction, visualization of high-dimensional data, and clustering. The cellular neural network (CNN) provides a parallel computing paradigm similar to human visual perception (Chua and Yang, 1988a, 1988b). In a CNN, communication is allowed only between neighboring nodes. Typical applications of CNNs include image processing, 3D surface analysis, and modeling of biological vision. Other important neural networks include radial basis function (RBF) networks (Moody and Darken, 1989), probabilistic neural networks (Specht, 1990), and cascade-correlation networks (Fahlman and Lebiere, 1991).
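Because the perceptron is a single linear threshold unit, its learning rule fits in a few lines. The sketch below is the classic mistake-driven update (add $y_i x_i$ to the weights whenever sample $i$ is misclassified), assuming labels in $\{-1, +1\}$; the logical AND data set is an illustrative linearly separable example. By the perceptron convergence theorem the loop stops after finitely many updates on separable data, whereas on non-separable data such as XOR it would never reach zero mistakes.

```python
import numpy as np

def train_perceptron(X, y, epochs=100, lr=1.0):
    """Rosenblatt's perceptron rule; labels y must be in {-1, +1}."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            # Update only when the linear threshold unit gets x_i wrong (or is undecided)
            if yi * (xi @ w + b) <= 0:
                w += lr * yi * xi
                b += lr * yi
                mistakes += 1
        if mistakes == 0:  # every training sample is on the correct side
            break
    return w, b

# Logical AND: linearly separable, so the rule converges
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])
w, b = train_perceptron(X, y)
```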

Baker et al. showed that an ANN could be used to categorize benign and malignant breast lesions based on the standardized lexicon of the Breast Imaging Reporting and Data System (BI-RADS) of the American College of Radiology (Baker et al., 1995). Tourassi et al. showed an application of ANNs to acute pulmonary embolism detection (Tourassi et al., 1993). They found that the ANN significantly outperformed the physicians involved in the study.

2.3 Learning with kernels

By applying traditional supervised and unsupervised learning methods in a feature space, kernel methods provide powerful tools for data analysis and have been successful in a number of real applications. Support vector machines (SVMs) are a set of kernel-based supervised learning methods used for classification and regression (Burges, 1998). Here a kernel is a function $k(x_i, x_j) = \langle \Phi(x_i), \Phi(x_j) \rangle$ that measures the similarity between two samples as an inner product in the feature space; evaluating it on all pairs of samples gives a matrix that encodes the similarities between samples. SVMs simultaneously minimize the empirical classification error and maximize the geometric margin on the training set, which leads to high generalization ability on new samples. For a two-class classification problem with training samples $\{(x_1, y_1), \ldots, (x_n, y_n)\}$, $y_i \in \{-1, +1\}$, the (hard-margin) optimization problem for learning a linear classifier in the feature space is

$$\min_{w, b} \; \frac{1}{2}\|w\|^2 \quad \text{subject to} \quad y_i\left(\langle w, \Phi(x_i) \rangle + b\right) \ge 1, \quad i = 1, \ldots, n,$$

where $\Phi$ is the mapping from the original space to the feature space and $\langle \cdot, \cdot \rangle$ denotes the inner product of two vectors. The matrix of inner products of the samples in feature space (after linear or nonlinear mapping) is called the kernel matrix; it describes the similarities between samples and serves as evidence when we maximize the margin between the two classes. The above problem is a convex quadratic programming (QP) problem. Its solution $(w^*, b^*)$, if it exists, is a maximal margin classifier with geometric margin $\gamma = 1/\|w^*\|_2$. Once learned from the training set, it can be applied to classify test samples. The geometric margin learned by an SVM is illustrated in Fig. 3, in which the samples on the margin are called support vectors.
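The kernel matrix can be computed directly from the inputs without ever forming $\Phi(x)$ explicitly. The sketch below shows two common kernels, linear (where $\Phi$ is the identity) and Gaussian RBF (whose feature map is infinite-dimensional), plus the geometric margin $1/\|w\|_2$ of a given weight vector. The RBF kernel and its `gamma` parameter are illustrative choices; the text does not prescribe a particular kernel, and solving the QP itself is left to a dedicated solver.

```python
import numpy as np

def linear_kernel(X):
    """K[i, j] = <x_i, x_j>: the feature map Phi is the identity."""
    return X @ X.T

def rbf_kernel(X, gamma=1.0):
    """Gaussian kernel K[i, j] = exp(-gamma * ||x_i - x_j||^2)."""
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2 * X @ X.T
    # Clip tiny negative values caused by floating-point cancellation
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))

def geometric_margin(w):
    """Margin 1 / ||w||_2 of a canonical hard-margin hyperplane."""
    return 1.0 / np.linalg.norm(w)

rng = np.random.default_rng(0)
X = rng.normal(size=(10, 3))
K = rbf_kernel(X, gamma=0.5)
```

A valid kernel matrix is symmetric and positive semidefinite, which is what makes the SVM problem in feature space convex.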

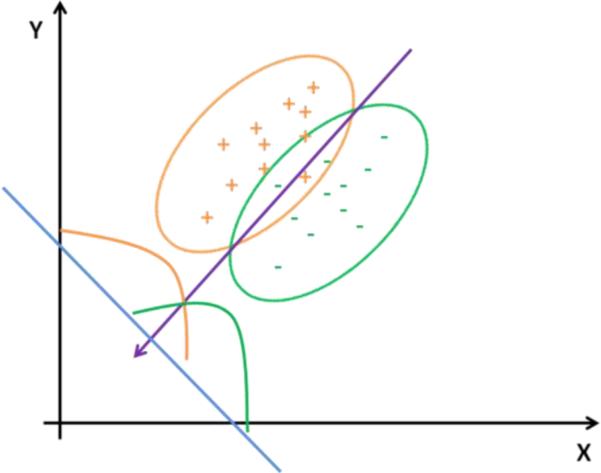

Fig. 3.

Illustration of margin learned by SVM. Black line is the best hyperplane which can separate the two classes of data with maximum margin. Support vectors are shown in circles.

In many real clinical applications, many features can be extracted with the help of modern medical test equipment, e.g., lab tests and radiology devices. Some features may not be relevant to the labels of the subjects tested. If all the features are fed to an SVM, it may be affected by irrelevant and noisy features, which can result in poor performance. In addition, with hundreds (or thousands or more) of features, doctors may also wonder which features are relevant or useful for the diagnosis of certain diseases, so that they can give better interpretations of the clinical findings. Weston et al. proposed a method of feature selection for SVMs (Weston et al., 2001), in which the best features are identified by minimizing bounds on the leave-one-out error.

In the basic SVM optimization problem introduced above, there is only one mapping function $\Phi$, which maps the input vector to the feature space. In real applications, we often have multiple information sources that describe the same object. Multiple kernel learning provides a feasible way to handle applications that involve multiple, heterogeneous data sources (Chapelle et al., 2002; Lanckriet et al., 2004; Sonnenburg et al., 2006). This multiple kernel learning problem can usually be solved by considering convex combinations of $K$ kernels,

$$K(x_i, x_j) = \sum_{k=1}^{K} \beta_k K_k(x_i, x_j), \qquad \beta_k \ge 0, \qquad \sum_{k=1}^{K} \beta_k = 1,$$

where each kernel $K_k$ uses a group of features from one information source and $x_i, x_j$ are samples.
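The convex combination itself is straightforward; the hard part of multiple kernel learning is choosing the weights $\beta_k$, which MKL methods optimize jointly with the SVM and which this sketch does not do. Here the weights are fixed by hand, and the two feature groups (standing in for, say, intensity and shape features from different sources) are hypothetical random data.

```python
import numpy as np

def combine_kernels(kernels, beta):
    """Convex combination K = sum_k beta_k K_k with beta_k >= 0 and sum_k beta_k = 1."""
    beta = np.asarray(beta, dtype=float)
    if np.any(beta < 0) or not np.isclose(beta.sum(), 1.0):
        raise ValueError("beta must be non-negative and sum to one")
    # Contract the weight vector with the stacked (K, n, n) kernel array
    return np.tensordot(beta, np.stack(kernels), axes=1)

# Two hypothetical feature groups describing the same 6 samples
rng = np.random.default_rng(0)
F1 = rng.normal(size=(6, 4))
F2 = rng.normal(size=(6, 2))
K1, K2 = F1 @ F1.T, F2 @ F2.T          # one linear kernel per information source
K = combine_kernels([K1, K2], [0.7, 0.3])
```

Because non-negative combinations of positive semidefinite matrices stay positive semidefinite, the combined matrix is still a valid kernel.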

Typical applications of kernel-based learning methods in radiology are in CAD. SVMs perform well in detection of microcalcifications on mammography CAD (El-Naqa et al., 2002; Tang et al., 2009; Wei et al., 2005b). For computed tomographic colonography (CTC), colonic polyps were detected using statistical features extracted from polyp candidates and multiple kernel learning (Wang et al., 2010c).

2.4 Learning and inference in probabilistic models

Probabilistic models provide a concise representation of complicated real-world phenomena and enable predictions of future events from present observations. For example, in radiology, dose control in clinical scanning is a critical issue: giving a patient a higher dose than needed may damage tissue or induce cancer. Mohan et al. proposed a tumor control probability (TCP) model to predict the clinical consequences of different radiation dose distributions and optimize 3-D conformal treatment plans (Mohan et al., 1992). The model can be used in simulation to predict the radiation effect on tissue for a given dose before the patient is exposed. Martel et al. estimated TCP model parameters from 3-D dose distributions of non-small cell lung cancer patients (Martel et al., 1999).

The naive Bayes classifier is based on a probabilistic model with strong (naive) independence assumptions. Despite these oversimplified assumptions, naive Bayes classifiers work well in many real-life applications (Domingos and Pazzani, 1997). Assume $C$ is a class variable that depends on $n$ input features $X_1, X_2, \ldots, X_n$. The prediction of $C$ can be described by the conditional model $p(C \mid X_1, X_2, \ldots, X_n)$. By Bayes' theorem,

$$p(C \mid X_1, \ldots, X_n) = \frac{p(C)\, p(X_1, \ldots, X_n \mid C)}{p(X_1, \ldots, X_n)},$$

where $p(C)$ is the prior probability of $C$, $p(X_1, \ldots, X_n \mid C)$ is the conditional probability of the features given $C$, and $p(X_1, \ldots, X_n)$ is the probability of the input features. Assume that each feature $X_i$ is conditionally independent of the other features given $C$, and observe that the denominator $p(X_1, \ldots, X_n)$ does not depend on $C$ and is therefore a constant once the features are given. The conditional distribution over the class variable $C$ can then be expressed as

$$p(C \mid X_1, \ldots, X_n) = \frac{1}{Z}\, p(C) \prod_{i=1}^{n} p(X_i \mid C),$$

where $Z$ is a normalization constant. This naive Bayes classifier can be trained from the relative frequencies in the training set, which give estimates of the class priors and the feature probability distributions. For a test sample, the decision rule picks the most probable hypothesis (value of $C$) under this model, which is known as the maximum a posteriori (MAP) decision rule.
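For discrete features, training by relative frequencies and predicting with the MAP rule can be sketched with the standard library alone. The sketch adds Laplace smoothing (`alpha`), which the text does not mention, so that a feature value never seen with a class does not force a zero probability; it also works in log space and drops the constant $Z$. The lesion descriptors and labels are hypothetical toy data, not taken from any cited study.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(X, y, alpha=1.0):
    """Estimate p(C) and p(X_i | C) from (Laplace-smoothed) relative frequencies.

    X: list of tuples of discrete feature values; y: list of class labels.
    """
    n = len(y)
    class_counts = Counter(y)
    priors = {c: k / n for c, k in class_counts.items()}
    n_values = [len({x[i] for x in X}) for i in range(len(X[0]))]
    counts = defaultdict(int)  # (class, feature index, value) -> count
    for x, c in zip(X, y):
        for i, v in enumerate(x):
            counts[(c, i, v)] += 1

    def likelihood(c, i, v):
        return (counts[(c, i, v)] + alpha) / (class_counts[c] + alpha * n_values[i])

    return priors, likelihood

def predict_map(x, priors, likelihood):
    """MAP rule: argmax_c log p(c) + sum_i log p(x_i | c); Z is ignored."""
    scores = {c: math.log(p) + sum(math.log(likelihood(c, i, v)) for i, v in enumerate(x))
              for c, p in priors.items()}
    return max(scores, key=scores.get)

# Hypothetical lesion descriptors: (margin, shape)
X = [("spiculated", "irregular"), ("spiculated", "irregular"), ("spiculated", "oval"),
     ("smooth", "oval"), ("smooth", "oval"), ("smooth", "irregular")]
y = ["malignant", "malignant", "malignant", "benign", "benign", "benign"]
priors, likelihood = train_naive_bayes(X, y)
```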

As an example of a naive Bayes classifier in a radiology application, Prasad et al. tackled the problem of lung parenchyma segmentation in the setting of pulmonary disease (Prasad et al., 2008). They used the curvature of the ribs for the segmentation and a naive Bayes classifier to build the model that finds the best-fitting lung boundary. The inputs of the naive Bayes classifier are features of the lung and rib curvature, and the probability of a matched curvature can be obtained from the classifier.

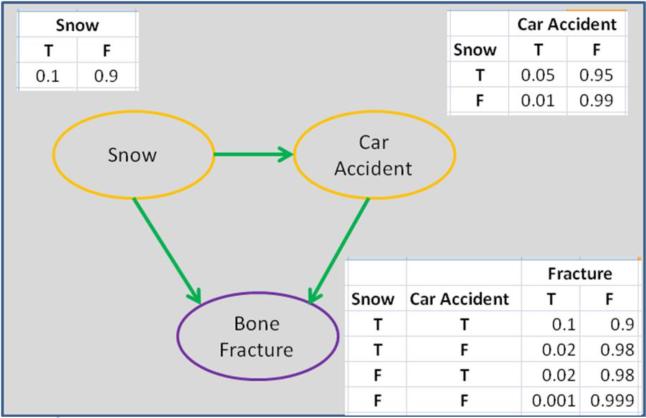

Graphical models (Jordan, 1998; Jordan et al., 1999) are perhaps the most popular probabilistic models: nodes represent random variables, and the links between nodes encode the conditional independence structure among them. Bayesian networks and Markov random fields are two typical graphical models. An illustration of a Bayesian network for bone fracture modeling is shown in Fig. 4.

Fig. 4.

Modeling of bone fractures using a Bayesian network in which the bone fracture variable is caused by the state of the weather (e.g., snowing) and car accidents on the road. Each table in the figure shows the probabilities of the corresponding variable given the states of its parent nodes (identified by arrows). Snow is an independent variable, and its a priori probabilities are shown in the adjacent table.

The Bayesian network (Heckerman, 1996), also called a belief network or directed acyclic graphical model, represents conditional independencies via a directed acyclic graph (DAG). For example, in medical diagnosis, the relationship between diseases and symptoms can be modeled by a Bayesian network, and the probability of a specific disease can be calculated from the presence of symptoms. Let $G = (V, E)$ be a DAG (where $V$ is a set of variables and $E$ encodes the causal relationships between them), and let $X = (X_v)_{v \in V}$ be a set of random variables indexed by $V$. The joint probability density function of $X$ factorizes as

$$p(x) = \prod_{v \in V} p\left(x_v \mid x_{\mathrm{pa}(v)}\right),$$

where $\mathrm{pa}(v)$ denotes the parent nodes of $v$.
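The factorization can be exercised on a network shaped like the bone fracture example. This sketch assumes the structure Snow → Accident and (Snow, Accident) → Fracture, which is one reading of the caption of Fig. 4, and every probability value is invented for illustration (they are not the values in the figure). It builds the joint distribution from the local tables and answers a diagnostic query, $P(\text{Snow} \mid \text{Fracture})$, by summing out the hidden variable.

```python
from itertools import product

# Hypothetical conditional probability tables (NOT the values shown in Fig. 4)
p_snow = {True: 0.2, False: 0.8}
p_accident_given_snow = {True: 0.3, False: 0.05}   # P(Accident=True | Snow)
p_fracture_given = {                                # P(Fracture=True | Snow, Accident)
    (True, True): 0.4, (True, False): 0.05,
    (False, True): 0.3, (False, False): 0.01,
}

def bernoulli(p_true, value):
    """Probability of a binary variable taking `value` when P(True) = p_true."""
    return p_true if value else 1.0 - p_true

def joint(snow, accident, fracture):
    """DAG factorization: p(S, A, F) = p(S) p(A | S) p(F | S, A)."""
    return (p_snow[snow]
            * bernoulli(p_accident_given_snow[snow], accident)
            * bernoulli(p_fracture_given[(snow, accident)], fracture))

def posterior_snow_given_fracture():
    """P(Snow=True | Fracture=True), summing the joint over Accident."""
    num = sum(joint(True, a, True) for a in (True, False))
    den = sum(joint(s, a, True) for s, a in product((True, False), repeat=2))
    return num / den
```

Observing a fracture raises the probability of snow above its prior of 0.2, which is the kind of evidence propagation used in diagnostic networks.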

Learning of a Bayesian network includes parameter learning and structure learning. Structure learning is more challenging than parameter learning because the network structure is unknown and the solution space is much larger than that of parameter learning. Maximum likelihood (Lecam, 1990) and expectation-maximization (EM) (Dempster et al., 1977) are widely used in parameter learning of a Bayesian network and Markov chain Monte Carlo (MCMC) provides a global search method for the learning of structure of a Bayesian network.

A Markov random field (MRF), also called a Markov network or undirected graphical model, is a graphical model in which a set of random variables is connected by undirected links. These models have the Markov property: given its direct neighbors, a random variable is conditionally independent of all other variables. MRFs have wide applications in computer vision and image processing (Held et al., 1997; Li, 1994; Panjwani and Healey, 1995; Zhang et al., 2001). For example, Lei and Sewchand applied MRFs to CT image segmentation (Lei and Sewchand, 1992): they modeled CT images with an MRF and segmented them using a Bayesian classifier.
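A common way to use an MRF prior for segmentation is to minimize an energy made of a per-pixel data term plus a smoothness term over neighboring labels. The sketch below uses Iterated Conditional Modes (ICM), a simple greedy optimizer, with a Gaussian data term and a Potts prior on the 4-neighborhood. The known class means, the shared `sigma`, and the weight `beta` are all illustrative assumptions, and this is not claimed to be the method of Lei and Sewchand.

```python
import numpy as np

def icm_segment(image, means, sigma=0.5, beta=1.0, n_iter=5):
    """MRF segmentation by Iterated Conditional Modes (ICM).

    Energy of label l at a pixel with intensity x:
    (x - mu_l)^2 / (2 sigma^2) + beta * (number of 4-neighbors with a different label).
    """
    means = np.asarray(means, dtype=float)
    # Initial labels: nearest class mean, ignoring the prior
    labels = np.abs(image[..., None] - means).argmin(axis=-1)
    rows, cols = image.shape
    for _ in range(n_iter):
        changed = 0
        for r in range(rows):
            for c in range(cols):
                nbrs = [labels[rr, cc] for rr, cc in
                        ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                        if 0 <= rr < rows and 0 <= cc < cols]
                data = (image[r, c] - means) ** 2 / (2 * sigma ** 2)
                prior = beta * np.array([sum(n != l for n in nbrs) for l in range(len(means))])
                best = int(np.argmin(data + prior))
                if best != labels[r, c]:
                    labels[r, c] = best
                    changed += 1
        if changed == 0:  # a local minimum of the energy has been reached
            break
    return labels

# Two-region image with one outlier pixel inside the dark region
image = np.zeros((7, 7))
image[:, 4:] = 1.0
image[2, 1] = 0.9
labels = icm_segment(image, means=[0.0, 1.0])
```

The outlier is initially labeled as the bright class by its intensity alone, but the Potts prior from its four dark neighbors outweighs the data term and ICM relabels it.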

2.5 Ensemble learning

Learning with an ensemble of classifiers is a very effective mechanism that has received much attention in recent years (Dietterich, 1997). Ensemble learning refers to a collection of methods that learn a target function by training a number of individual learners and combining their predictions. The Bagging (bootstrap aggregating) algorithm (Breiman, 1996) uses bootstrap samples to build base classifiers; each bootstrap sample is formed by sampling uniformly from the training set with replacement. Building multiple versions of the base classifier in this way improves accuracy when unstable learning algorithms (e.g., neural networks, decision trees) are used. The AdaBoost algorithm (Freund and Schapire, 1995) calls a given base learning algorithm repeatedly and maintains a distribution of weights over the training set in a series of rounds $t = 1, \ldots, T$. During training, the weights of incorrectly classified examples are increased so that the weak learner is forced to focus on the hard examples in the training set.
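The AdaBoost reweighting loop can be sketched with decision stumps (one-feature threshold rules) as the weak learner, which is a common but not mandatory choice. Each round fits the stump with the lowest weighted error, gives it a vote $\alpha_t = \tfrac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$, and multiplies the weight of every misclassified sample by $e^{\alpha_t}$ before renormalizing. The error clipping and the toy logical AND data are illustrative details.

```python
import numpy as np

def fit_stump(X, y, w):
    """Weighted-best decision stump: (error, feature, threshold, polarity)."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = np.where(X[:, j] > t, polarity, -polarity)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best

def stump_predict(X, j, t, polarity):
    return np.where(X[:, j] > t, polarity, -polarity)

def adaboost(X, y, rounds=10):
    """AdaBoost with decision stumps; labels y must be in {-1, +1}."""
    w = np.full(len(y), 1.0 / len(y))       # uniform initial distribution
    ensemble = []
    for _ in range(rounds):
        err, j, t, polarity = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)
        pred = stump_predict(X, j, t, polarity)
        w *= np.exp(-alpha * y * pred)        # misclassified samples (y * pred = -1) gain weight
        w /= w.sum()
        ensemble.append((alpha, j, t, polarity))
    return ensemble

def adaboost_predict(X, ensemble):
    """Sign of the alpha-weighted vote of all stumps."""
    return np.sign(sum(a * stump_predict(X, j, t, p) for a, j, t, p in ensemble))

# Logical AND: no single stump is perfect, but three boosted stumps are
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])
ensemble = adaboost(X, y, rounds=3)
```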

Exemplar applications of ensemble learning in medicine include lung cancer cell identification based on ANN ensembles (Zhou et al., 2002), colonic polyp detection using SVM ensembles (Jerebko et al., 2005), and automated classification of lung bronchovascular anatomy in CT using AdaBoost (Ochs et al., 2007).

2.6 Cluster analysis

Natural data usually show clustering properties: samples belonging to the same cluster are more similar, or closer under a certain distance metric, than samples from different clusters. Analyzing the clustering properties of the data helps us understand the nature of the data and its potential applications. Cluster analysis has broad applications in radiology, such as medical image segmentation and diagnosis. Well-known clustering algorithms include k-means clustering (Pena et al., 1999), hierarchical clustering (Hastie et al., 2009), DBSCAN (Ester et al., 1996), normalized cut (Shi and Malik, 2000), and mixtures of Gaussians (Bilmes, 1998).

The key idea of k-means clustering is to assign each sample to the cluster with the nearest center (also called the centroid, the mean of all samples belonging to the cluster). The clustering is optimized by iteratively re-assigning labels (cluster markers) and re-computing the centroids. k-means clustering has the advantages of simplicity and speed but is prone to local minima, as there is no guarantee of reaching the global minimum of the intra-cluster variance. k-means clustering assigns a hard label to each sample. Fuzzy c-means clustering, a variant of k-means clustering, incorporates fuzzy logic to express the degree to which a sample belongs to each cluster.
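The alternating assignment and update steps described above are Lloyd's algorithm. In this NumPy sketch, the initialization (one random sample, then repeatedly the sample farthest from the centroids chosen so far) is an illustrative deterministic simplification in the spirit of k-means++, chosen to reduce the chance of a poor local minimum on the toy data; it is not part of the method as described in the text.

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm; returns hard labels and centroids."""
    rng = np.random.default_rng(seed)
    # Farthest-first initialization (illustrative choice)
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d = np.linalg.norm(X[:, None, :] - np.array(centroids)[None, :, :], axis=2).min(axis=1)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids, dtype=float)
    for _ in range(n_iter):
        # Assignment step: each sample gets the label of its nearest centroid
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each centroid moves to the mean of its assigned samples
        new_centroids = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                  else centroids[j] for j in range(k)])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 0.3, size=(30, 2)), rng.normal(10, 0.3, size=(30, 2))])
labels, centroids = kmeans(X, 2)
```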

Hierarchical clustering adopts a top-down or bottom-up strategy and builds a tree structure (called a dendrogram) based on sample distances. In the top-down strategy, hierarchical clustering starts from a single root cluster and splits it successively until the desired number of clusters is obtained. Agglomerative hierarchical clustering operates in the reverse direction, merging leaves or sub-clusters step by step based on various similarity or distance measures. DBSCAN is a density-based clustering algorithm. It starts from a randomly selected, unlabeled point and expands the cluster from this initial point based on the sample density around it. All unvisited points near a point in the cluster that are density-reachable (i.e., the density around the point is higher than a certain threshold) are included in the cluster. With a spatial index, DBSCAN has an average runtime complexity of $O(n \log n)$, which is usually much faster than solving the k-means problem exactly, which takes $O(n^{dk+1} \log n)$ time, where $n$ is the number of samples, $d$ is the dimension of the samples, and $k$ is the number of clusters.
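A naive DBSCAN sketch makes the density-reachability rule explicit: a point is a core point if at least `min_pts` points (itself included) lie within distance `eps`, clusters grow only through core points, and points reachable from no core point are marked as noise (label -1). This version computes all pairwise distances, so it runs in $O(n^2)$; the $O(n \log n)$ figure requires a spatial index. The toy data are illustrative.

```python
import numpy as np

def dbscan(X, eps, min_pts):
    """Naive O(n^2) DBSCAN; returns one label per point, with -1 for noise."""
    n = len(X)
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]  # includes i itself
    is_core = np.array([len(nb) >= min_pts for nb in neighbors])
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        # Grow a new cluster from the unlabeled core point i
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster
                if is_core[j]:  # only core points extend the cluster further
                    queue.extend(neighbors[j])
        cluster += 1
    return labels

# Two tight groups and one isolated point
A = np.array([[0.0, 0.0], [0.0, 0.1], [0.1, 0.0], [0.1, 0.1]])
X = np.vstack([A, A + 5.0, [[10.0, 10.0]]])
labels = dbscan(X, eps=0.2, min_pts=3)
```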

The Gaussian mixture model (GMM) (Bilmes, 1998) is among the most statistically mature methods for clustering. Assume that we have a data set $X = \{x_1, \ldots, x_N\}$ of $N$ samples generated by $k$ components, each of which generates data from a $d$-dimensional Gaussian distribution $\mathcal{N}(\mu_i, \Sigma_i)$ with mean $\mu_i$ and covariance $\Sigma_i$. The mixture model is

$$p(x \mid \Theta) = \sum_{i=1}^{k} \alpha_i \, \mathcal{N}(x \mid \mu_i, \Sigma_i)$$

with parameters $\Theta = (\alpha_1, \ldots, \alpha_k, \mu_1, \ldots, \mu_k, \Sigma_1, \ldots, \Sigma_k)$, where $x$ represents the observed data and $\alpha_i \ge 0$, with $\sum_{i=1}^{k} \alpha_i = 1$, are the mixture coefficients. The incomplete-data log-likelihood of the mixture density for the data $X$ is

$$\log L(\Theta \mid X) = \sum_{j=1}^{N} \log \sum_{i=1}^{k} \alpha_i \, \mathcal{N}(x_j \mid \mu_i, \Sigma_i).$$

Assume that a hidden variable $z_j \in \{1, \ldots, k\}$ is attached to each observation $x_j$ to indicate which component generated it, i.e., $z_j = i$ if $x_j$ was generated by the $i$-th component. With the help of the hidden variables, the incomplete-data log-likelihood above can be optimized with the EM algorithm (Dempster et al., 1977), in which the latent variables (unobserved data) $z_1, \ldots, z_N$ are estimated in the E-step and the parameters of each Gaussian distribution are estimated in the M-step (Amari, 1995).
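The E-step and M-step can be sketched for the one-dimensional case ($d = 1$), which keeps the covariance updates scalar; the structure is the same in $d$ dimensions. The E-step computes the responsibilities $p(z_j = i \mid x_j, \Theta)$, and the M-step re-estimates $\alpha_i$, $\mu_i$, and $\sigma_i^2$ in closed form. The quantile-based initialization, the stopping tolerance, and the synthetic data are illustrative choices.

```python
import numpy as np

def gmm_em_1d(x, k=2, n_iter=200, tol=1e-8):
    """EM for a one-dimensional Gaussian mixture; returns (alpha, mu, var)."""
    mu = np.quantile(x, np.linspace(0.1, 0.9, k))  # spread-out initial means
    var = np.full(k, x.var())
    alpha = np.full(k, 1.0 / k)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: weighted component densities and responsibilities r[j, i]
        dens = alpha * np.exp(-(x[:, None] - mu) ** 2 / (2 * var)) / np.sqrt(2 * np.pi * var)
        total = dens.sum(axis=1, keepdims=True)
        r = dens / total
        # M-step: closed-form updates of the mixture parameters
        nk = r.sum(axis=0)
        alpha = nk / len(x)
        mu = (r * x[:, None]).sum(axis=0) / nk
        var = (r * (x[:, None] - mu) ** 2).sum(axis=0) / nk
        # Incomplete-data log-likelihood of the parameters used in this E-step
        ll = np.log(total).sum()
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return alpha, mu, var

rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(-3, 1, 500), rng.normal(3, 1, 500)])
alpha, mu, var = gmm_em_1d(x)
```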

Clustering analysis has many applications in medical image segmentation. For example, Chen et al. proposed a robust algorithm for 3D image segmentation by combining adaptive k-means clustering and knowledge-based morphological operations together (Chen et al., 1998). They applied the proposed method to cardiac CT volumetric images to segment the volumes of the left ventricle chambers. Yao et al. applied fuzzy clustering and deformable models to colonic polyp segmentation in CT colonography (Yao et al., 2009).

2.7 Dimensionality reduction and feature selection

With the rapid development of modern measurement and detection instruments, we are able to collect more and more data (in terms of both dimension and sample size) from real applications. For example, the achievable image resolution in radiology has increased significantly compared with ten years ago. Higher resolution means more voxels per image, which corresponds to more input features if all voxels are fed to a classifier. The increase in dimensionality (the number of voxels in radiology images or the number of features that can be extracted from the original images) is a significant obstacle to solving optimization problems, and this in turn complicates machine learning because of the optimization tasks involved in the learning stage. Dimensionality reduction addresses this problem by extracting or selecting useful information from the feature space. Classical techniques for dimensionality reduction, such as principal component analysis (PCA), are designed for data whose submanifold is embedded linearly or almost linearly in the observation space (Jolliffe, 2002). A submanifold is a subset of a manifold that is itself a manifold, with its own structure. Because many data from real applications, such as visual perception (Tenenbaum et al., 2000), have nonlinear submanifold structures, research on nonlinear dimensionality reduction (NLDR) has surged in recent years. Representative NLDR methods include local approaches such as Locally Linear Embedding (LLE) (Roweis and Saul, 2000) and Laplacian Eigenmaps (Belkin and Niyogi, 2003), and global approaches such as ISOMAP (Tenenbaum et al., 2000) and Diffusion Maps (Coifman et al., 2005a, 2005b). Local methods try to preserve the local geometry of the data in the low-dimensional space, whereas global approaches tend to give a more faithful representation of the data's global structure.
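As a baseline for the linear case, PCA can be computed from the singular value decomposition of the centered data matrix: the right singular vectors are the principal directions, and the squared singular values divided by $n - 1$ are the variances along them. The function name and the nearly collinear toy data are illustrative.

```python
import numpy as np

def pca(X, n_components):
    """PCA via SVD of the centered data (rows are samples).

    Returns the projected data, the principal directions, and the fraction
    of total variance explained by each retained component.
    """
    Xc = X - X.mean(axis=0)
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = s ** 2 / (len(X) - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return Xc @ components.T, components, ratio

# Points near a line in 3-D: one component should capture almost all variance
rng = np.random.default_rng(0)
t = np.linspace(-1, 1, 50)
X = np.outer(t, [1.0, 2.0, 2.0]) + 1e-3 * rng.normal(size=(50, 3))
Z, components, ratio = pca(X, 1)
```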
Applications of NLDR in radiology include visualizing the structure and distribution of data in a low-dimensional space (structure that cannot be observed directly in the original high-dimensional space) and classification (Wang et al., 2008b).

Like PCA, independent component analysis (ICA) looks for a linear transformation that maps the original data to a new linear space (Comon, 1994). The difference is that in ICA the transformation matrix is chosen to minimize the statistical dependence between the resulting components, whereas in PCA it is chosen to retain the components with maximal variance (energy).
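The contrast with PCA can be seen in a compact sketch of symmetric FastICA (Hyvärinen's fixed-point algorithm with a tanh nonlinearity), which is one common way to estimate ICA but not necessarily the one used in the works cited here. The data are first whitened, which is essentially PCA followed by rescaling to unit variance, and the remaining rotation is then chosen to make the components as non-Gaussian, and hence as independent, as possible. The source signals and mixing matrix are made up for the example.

```python
import numpy as np

def sym_decorrelate(W):
    """W <- (W W^T)^(-1/2) W, which makes the rows of W orthonormal."""
    s, U = np.linalg.eigh(W @ W.T)
    return (U / np.sqrt(s)) @ U.T @ W

def fast_ica(X, n_iter=200, tol=1e-8, seed=0):
    """Symmetric FastICA. X: (n_signals, n_samples). Returns (S, W) with S = W @ Z."""
    Xc = X - X.mean(axis=1, keepdims=True)
    # Whitening: Z = E diag(d^-1/2) E^T Xc has identity covariance (PCA + rescaling).
    d, E = np.linalg.eigh(np.cov(Xc))
    Z = (E / np.sqrt(d)) @ E.T @ Xc
    rng = np.random.default_rng(seed)
    W = sym_decorrelate(rng.normal(size=(Z.shape[0], Z.shape[0])))
    for _ in range(n_iter):
        G = np.tanh(W @ Z)
        # Fixed-point update: w <- E[z g(w^T z)] - E[g'(w^T z)] w, for every row at once.
        W_new = (G @ Z.T) / Z.shape[1] - (1.0 - G ** 2).mean(axis=1)[:, None] * W
        W_new = sym_decorrelate(W_new)
        # Converged when each new row is parallel (up to sign) to the old one.
        converged = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W @ Z, W

# Hypothetical sources: a square wave and Laplacian noise, linearly mixed.
t = np.linspace(0, 8, 2000)
rng = np.random.default_rng(0)
S_true = np.vstack([np.sign(np.sin(3 * t)), rng.laplace(size=t.size)])
A = np.array([[1.0, 0.5], [0.4, 1.0]])
S_est, W = fast_ica(A @ S_true)
```

ICA recovers the sources only up to permutation, sign, and scale, so any comparison with the true sources should use absolute correlations.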

By employing dimensionality reduction algorithms, we can extract useful information and build compact representations from the original data. Such benefits can also be obtained by feature selection, a machine learning technique that selects a subset of features based on various optimization criteria (Guyon and Elisseeff, 2003).

Feature selection methods can be classified into two types, filter and wrapper, depending on how feature selection is integrated with the problem to be solved (Liu and Yu, 2005). In filter methods, the best features are selected according to a specific criterion (such as the Pearson correlation or mutual information between features), and this criterion is independent of the actual problem. In wrapper methods, feature selection is embedded in the actual problem to be solved, and the optimal subset is determined during an iterative optimization based on a final criterion related to that problem. For example, in a classification task, a wrapper method tests a subset of the features on the classification problem, and the subset evolves depending on the classification results. Sequential forward selection and sequential backward selection are common wrapper methods. In these sequential methods, an objective function or criterion is defined first; then a sequential search algorithm adds features to, or removes features from, the candidate subset based on the value of the criterion.
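A wrapper method can be sketched as sequential forward selection around a simple classifier. Here the criterion is held-out accuracy of a nearest-centroid classifier, chosen only to keep the example short; the helper names and the synthetic data, in which only features 3 and 7 carry class information, are assumptions.

```python
import numpy as np

def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Criterion: accuracy of a nearest-centroid classifier on held-out data."""
    classes = np.unique(y_train)
    centroids = np.array([X_train[y_train == c].mean(axis=0) for c in classes])
    d = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return np.mean(classes[np.argmin(d, axis=1)] == y_test)

def sequential_forward_selection(X, y, n_features, criterion, split):
    """Greedily add the feature that most improves the criterion (wrapper method)."""
    train, test = split
    selected, remaining, history = [], list(range(X.shape[1])), []
    while len(selected) < n_features and remaining:
        scores = [criterion(X[train][:, selected + [j]], y[train],
                            X[test][:, selected + [j]], y[test]) for j in remaining]
        best = int(np.argmax(scores))
        selected.append(remaining.pop(best))
        history.append(scores[best])
    return selected, history

# Hypothetical data: 10 features, only features 3 and 7 depend on the class label.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=400)
X = rng.normal(size=(400, 10))
X[:, 3] += 3.0 * y
X[:, 7] -= 3.0 * y
perm = rng.permutation(400)
selected, history = sequential_forward_selection(
    X, y, n_features=3, criterion=nearest_centroid_accuracy, split=(perm[:200], perm[200:]))
```

Sequential backward selection runs the same loop in reverse, starting from all features and removing the one whose removal hurts the criterion least.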

In 2005, Peng et al. proposed a method called minimal-redundancy-maximal-relevance (mRMR) feature selection (Peng et al., 2005). In mRMR, the optimization criterion combines two factors: the relevance between features and the target classes, and the redundancy among the features themselves. Peng et al. proposed a heuristic framework that minimizes redundancy and maximizes relevance at the same time. mRMR showed better performance than traditional feature selection methods in many real applications. However, because every learning algorithm has its own assumptions and conditions, other algorithms may be superior to mRMR for a specific feature selection problem.
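A minimal sketch of the greedy mRMR search for discrete features, using the difference form of the criterion (relevance minus mean redundancy, both measured by mutual information). This follows the general idea described by Peng et al. but is not their reference implementation; the helper names and the toy features are hypothetical.

```python
import numpy as np

def mutual_information(a, b):
    """Mutual information (nats) between two discrete, non-negative integer arrays."""
    joint = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(joint, (a, b), 1)
    joint /= joint.sum()
    pa = joint.sum(axis=1, keepdims=True)
    pb = joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (pa @ pb)[nz])))

def mrmr(X, y, n_features):
    """Greedy mRMR: maximize relevance I(f; y) minus mean redundancy I(f; selected)."""
    relevance = np.array([mutual_information(X[:, j], y) for j in range(X.shape[1])])
    selected = [int(np.argmax(relevance))]
    while len(selected) < n_features:
        best_j, best_score = None, -np.inf
        for j in range(X.shape[1]):
            if j in selected:
                continue
            redundancy = np.mean([mutual_information(X[:, j], X[:, s]) for s in selected])
            if relevance[j] - redundancy > best_score:
                best_j, best_score = j, relevance[j] - redundancy
        selected.append(best_j)
    return selected

# Hypothetical binary features.
rng = np.random.default_rng(0)
n = 2000
y = rng.integers(0, 2, size=n)
f0 = y ^ (rng.random(n) < 0.10)   # strongly relevant
f1 = f0.copy()                    # exact duplicate: relevant but fully redundant
f2 = y ^ (rng.random(n) < 0.25)   # weaker, but carries independent evidence
f3 = rng.integers(0, 2, size=n)   # irrelevant
X = np.column_stack([f0, f1, f2, f3])
chosen = mrmr(X, y, n_features=2)
```

A pure relevance ranking would pick the duplicate f1 second; the redundancy term is what steers mRMR toward f2 instead.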

Applications of feature selection in radiology focus on selecting the best features for computer-aided detection and diagnosis systems. For example, Li et al. proposed an efficient feature selection algorithm based on piecewise linear network and orthonormal least square procedure for computer-aided polyp detection in CT colonography (Li et al., 2006). Mougiakakou et al. applied feature selection in conjunction with texture features and ensemble classifiers to the problem of differential diagnosis of CT focal liver lesions (Mougiakakou et al., 2007).

2.8 Reinforcement learning

Reinforcement learning studies how agents respond to changes in their environment so as to maximize long-term reward (Barto and Sutton, 1999). It has broad applications in robot control (Schaal and Atkeson, 1994) and game playing (Schraudolph et al., 1994). In reinforcement learning, the agent continuously updates her strategy through iterative interactions with the environment in order to develop an optimal strategy. Q-learning is a typical online, model-free reinforcement learning algorithm, in which the value Q of a state-action pair summarizes in a single number all the information the agent needs to determine her discounted cumulative future reward (Watkins and Dayan, 1992). Using Q-learning, an agent can develop the optimal strategy in a Markov decision process through a sequence of actions chosen according to a Boltzmann distribution strategy. Q-learning has been proven to converge to the optimal action values with probability 1, provided that all actions are repeatedly sampled in all states and the action values are represented discretely.
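The Q-learning update can be illustrated on a toy five-state chain in which moving right eventually yields a reward of 1. The sketch uses Boltzmann (softmax) action selection and the standard update Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') − Q(s, a)]; the environment, learning rate, discount factor, and temperature are all assumptions chosen for illustration.

```python
import numpy as np

def boltzmann_action(q_values, temperature, rng):
    """Sample an action with probability proportional to exp(Q / temperature)."""
    prefs = (q_values - q_values.max()) / temperature   # shift for numerical stability
    p = np.exp(prefs)
    p /= p.sum()
    return rng.choice(len(q_values), p=p)

def q_learning_chain(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     temperature=0.5, max_steps=100, seed=0):
    """Tabular Q-learning on a hypothetical chain; reaching the last state gives reward 1."""
    rng = np.random.default_rng(seed)
    Q = np.zeros((n_states, 2))          # actions: 0 = left, 1 = right
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            a = boltzmann_action(Q[s], temperature, rng)
            s_next = max(s - 1, 0) if a == 0 else s + 1
            done = s_next == goal
            r = 1.0 if done else 0.0
            # Terminal transitions have no future value.
            target = r if done else r + gamma * Q[s_next].max()
            Q[s, a] += alpha * (target - Q[s, a])
            s = s_next
            if done:
                break
    return Q

Q = q_learning_chain()
greedy_policy = np.argmax(Q[:-1], axis=1)   # learned action in each non-terminal state
```

Because the update uses max over Q(s', ·) rather than the action actually taken next, Q-learning is off-policy: the Boltzmann exploration affects which states are visited, not the values being learned.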

Reinforcement learning has potential applications in radiology. For example, current CAD systems for radiology are typically trained on a fixed training set. An alternative approach is to enlarge the training set incrementally as new patient data become available, and reinforcement learning could be used to incorporate the knowledge gained from new patients into the CAD system. Sahba et al. have demonstrated the potential of reinforcement learning for medical image segmentation (Sahba et al., 2006).

2.9 Multiple instance learning

All supervised machine learning methods introduced in previous sections are single-instance learning methods, in which each instance (sample) has its own label. In practice, however, labels may be available only for groups of instances rather than for individual instances. Methods developed to handle such cases are called multiple-instance learning (MIL), currently a hot topic in machine learning (Dietterich et al., 1997; Maron and Lozano-Pérez, 1998). In multiple-instance learning, instances are grouped into bags, where a bag is a collection of instances. The learner (the learning algorithm) knows only the labels of the bags, not the labels of the individual instances. A bag is labeled negative if all of its instances are negative, and positive if at least one of its instances is positive. For example, in a radiology CAD application, a patient can be viewed as a bag, and all detections produced by the CAD system, which include both true lesions and false positives, can be treated as its instances.

The axis-parallel rectangles (APR) method proposed by Dietterich et al. was the first MIL algorithm (Dietterich et al., 1997). The idea behind APR is simple: find an axis-parallel rectangle in the feature space that represents the target concept. The rectangle should contain at least one instance from each positive bag and no instances from any negative bag. Experiments on drug activity prediction problems indicated that the APR method is superior to traditional supervised methods based on single-instance learning, such as backpropagation neural networks and C4.5 decision trees.
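The MIL labeling rule and the APR constraints can be sketched as follows. The rectangle construction is a deliberately simplified greedy heuristic (one instance per positive bag, then a bounding box), not Dietterich et al.'s iterated-discrimination algorithm; the bags and helper names are hypothetical.

```python
import numpy as np

def bag_label(instance_labels):
    """Standard MIL assumption: a bag is positive iff at least one instance is positive."""
    return int(np.any(instance_labels))

def inside(lo, hi, X):
    """Boolean mask of the rows of X that fall inside the axis-parallel box [lo, hi]."""
    return np.all((X >= lo) & (X <= hi), axis=1)

def simple_apr(positive_bags, negative_bags):
    """Simplified greedy APR: bounding box of one chosen instance per positive bag.

    From each positive bag, pick the instance closest to the centroid of all positive
    instances. Returns (lo, hi, ok), where ok says whether the box excludes every
    negative instance, i.e. whether the APR constraints hold.
    """
    centroid = np.vstack(positive_bags).mean(axis=0)
    picks = np.array([bag[np.argmin(((bag - centroid) ** 2).sum(axis=1))]
                      for bag in positive_bags])
    lo, hi = picks.min(axis=0), picks.max(axis=0)
    ok = not any(inside(lo, hi, bag).any() for bag in negative_bags)
    return lo, hi, ok

def classify_bag(lo, hi, bag):
    # Instances inside the rectangle are treated as positive; apply the MIL bag rule.
    return bag_label(inside(lo, hi, bag))

# Hypothetical 2-D bags: each positive bag has one instance near the concept at (5, 5).
positive_bags = [np.array([[5.0, 5.1], [0.0, 9.0], [9.0, 0.0]]),
                 np.array([[4.9, 5.0], [1.0, 1.0], [8.0, 8.0]]),
                 np.array([[5.1, 4.9], [0.0, 0.0], [9.0, 9.0]])]
negative_bags = [np.array([[2.0, 2.0], [7.0, 7.0]]),
                 np.array([[5.0, 8.0], [8.0, 5.0]])]
lo, hi, ok = simple_apr(positive_bags, negative_bags)
```

In a CAD setting, each bag would be a patient and each row a candidate detection's feature vector.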

Maron and Lozano-Pérez (Maron and Lozano-Pérez, 1998) proposed another MIL algorithm, called diverse density (DD), which searches for the point in the feature space with maximum diverse density. Diverse density measures the intersection of the positive bags minus the union of the negative bags: it is high at points near which instances from many different positive bags lie and no instances from negative bags lie. This algorithm was later improved by incorporating expectation maximization (EM) to estimate which instance(s) in a bag are responsible for the assigned class label (Zhang and Goldman, 2001). As a natural extension of the classical k-nearest neighbor (k-NN) classifier, Wang and Zucker proposed citation-kNN (Wang and Zucker, 2000), in which a Hausdorff distance is used to measure the distance between bags, and both “citers” and “references” are considered in determining neighbors.
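Diverse density is often written with a noisy-or model, in which an instance at distance d from a candidate concept point t contributes probability exp(−d²). The sketch below evaluates the log diverse density at each positive instance and keeps the best, a simplification of Maron and Lozano-Pérez's gradient ascent started from those points. It also includes the minimal Hausdorff distance (the smallest pairwise instance distance between two bags), a bag-to-bag distance of the kind used in citation-kNN. Helper names, the scale parameter, and the toy bags are assumptions.

```python
import numpy as np

def instance_prob(bag, t, scale=1.0):
    # Probability that each instance "is" the concept located at t.
    return np.exp(-scale * ((bag - t) ** 2).sum(axis=1))

def log_diverse_density(t, positive_bags, negative_bags, scale=1.0):
    """Noisy-or diverse density of candidate point t, in log form."""
    log_dd = 0.0
    for bag in positive_bags:
        # At least one instance of every positive bag should be close to t.
        log_dd += np.log(1.0 - np.prod(1.0 - instance_prob(bag, t, scale)) + 1e-300)
    for bag in negative_bags:
        # No instance of any negative bag should be close to t.
        log_dd += np.log(np.prod(1.0 - instance_prob(bag, t, scale)) + 1e-300)
    return log_dd

def best_dd_point(positive_bags, negative_bags, scale=1.0):
    # Simplification: evaluate DD only at the positive instances (no gradient ascent).
    candidates = np.vstack(positive_bags)
    scores = [log_diverse_density(t, positive_bags, negative_bags, scale) for t in candidates]
    return candidates[int(np.argmax(scores))]

def minimal_hausdorff(bag_a, bag_b):
    """Minimal Hausdorff distance: the smallest distance between any two instances."""
    d = np.sqrt(((bag_a[:, None, :] - bag_b[None, :, :]) ** 2).sum(axis=2))
    return d.min()

# Hypothetical bags: each positive bag has one instance near (5, 5).
positive_bags = [np.array([[5.0, 5.1], [0.0, 9.0], [9.0, 0.0]]),
                 np.array([[4.9, 5.0], [1.0, 1.0], [8.0, 8.0]]),
                 np.array([[5.1, 4.9], [0.0, 0.0], [9.0, 9.0]])]
negative_bags = [np.array([[2.0, 2.0], [7.0, 7.0]]),
                 np.array([[5.0, 8.0], [8.0, 5.0]])]
concept = best_dd_point(positive_bags, negative_bags)
```

Working in log space avoids underflow when many bags multiply small probabilities together.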

MIL has many potential applications in radiology, particularly when we are interested in patient-level diagnoses rather than lesion-level diagnoses. For example, in CT pulmonary angiography, we may wish to know whether the patient has pulmonary emboli. The number of pulmonary emboli may be of secondary priority. MIL has been successfully applied to CT pulmonary angiography to detect pulmonary emboli (Liang and Bi, 2007). MIL has also shown promising results in CT colonography to detect colonic polyps (Fung et al., 2007).

2.10 Graph matching

Learning how to match two objects is a basic problem in computer vision and machine learning. Graph matching provides an elegant way to represent and match objects, and it can be applied to medical image registration.

In graph matching, an object is usually represented by a graph consisting of nodes and of links that connect the nodes. The nodes represent key points of the object with distinctive visual features or cues, e.g., anatomical landmarks, and the links represent the spatial neighbor relationships between nodes. Given two similar graphs, graph matching studies how to map the nodes of one graph accurately onto the nodes of the other under various considerations and constraints. The graph matching problem has interested researchers for decades, and many approaches have been developed. For example, Luo and Hancock treated the matching (assignment) matrix, which defines the correspondence between the two vertex sets, as a hidden variable and solved the problem with an expectation-maximization (EM) algorithm (Luo and Hancock, 2001). Gold and Rangarajan proposed a graduated assignment graph matching algorithm (Gold and Rangarajan, 1996), in which a control parameter gradually imposes the matching constraints during the iterative optimization. Leordeanu and Hebert employed a spectral technique that decomposes the affinity matrix Q of all possible assignments (Leordeanu and Hebert, 2005); the principal eigenvector of Q is then converted into a discrete assignment by imposing the mapping constraints required by the overall correspondence (one-to-one or one-to-many). For more about graph matching, readers are referred to the review by Conte et al. (Conte et al., 2004).
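The spectral approach of Leordeanu and Hebert can be sketched in a few lines: build an affinity matrix Q over candidate assignments, take its principal eigenvector by power iteration, and discretize it greedily under a one-to-one constraint. The pairwise-distance affinity, the Gaussian width `sigma`, and the landmark coordinates are assumptions for illustration, not the affinities used in the cited work.

```python
import numpy as np

def distance_affinity(P1, P2, sigma=0.1):
    """Affinity Q[a, b] between candidate assignments a = (i, j) and b = (k, l).

    High when the distance i-k in graph 1 matches the distance j-l in graph 2;
    conflicting pairs (same source or same target node) get zero affinity.
    """
    d1 = np.linalg.norm(P1[:, None] - P1[None, :], axis=2)
    d2 = np.linalg.norm(P2[:, None] - P2[None, :], axis=2)
    candidates = [(i, j) for i in range(len(P1)) for j in range(len(P2))]
    Q = np.zeros((len(candidates), len(candidates)))
    for a, (i, j) in enumerate(candidates):
        for b, (k, l) in enumerate(candidates):
            if i != k and j != l:
                Q[a, b] = np.exp(-((d1[i, k] - d2[j, l]) ** 2) / sigma ** 2)
    return Q, candidates

def spectral_matching(Q, candidates, n_iter=100):
    """Principal eigenvector of Q, then greedy one-to-one discretization."""
    x = np.ones(len(candidates)) / np.sqrt(len(candidates))
    for _ in range(n_iter):              # power iteration (Q is symmetric, non-negative)
        x = Q @ x
        x /= np.linalg.norm(x)
    matches, used_i, used_j = [], set(), set()
    for idx in np.argsort(-x):           # accept assignments by decreasing confidence
        i, j = candidates[idx]
        if x[idx] <= 0 or i in used_i or j in used_j:
            continue
        matches.append((i, j))
        used_i.add(i)
        used_j.add(j)
    return sorted(matches)

# Hypothetical landmarks; graph 2 is a relabeled copy of graph 1.
P1 = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 5.0], [6.0, 7.0], [9.0, 1.0]])
perm = np.array([2, 0, 4, 1, 3])
P2 = P1[perm]                            # node j of graph 2 is node perm[j] of graph 1
Q, candidates = distance_affinity(P1, P2)
matches = spectral_matching(Q, candidates)
```

The affinity uses only pairwise distances, so the match is invariant to rotation and translation of either landmark set.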

Graph matching has many applications in medical image analysis. For example, for content-based medical image retrieval, Lehmann et al. abstracted medical images by hierarchical partitioning into blobs and compared the resulting representations by graph matching (Lehmann et al., 2004). Blobs are sub-regions of an image that are usually part of an anatomical structure. Fig. 5 shows an example of hierarchical blobs and the corresponding graph representation. The authors used a database of 10,000 radiology images (CT, MRI) categorized by imaging modality, orientation, body region, and biological system. With a graph representation, computing the distance or similarity between a query image and a database entry becomes a graph matching problem. Wang et al. used graph matching to register supine and prone computed tomographic colonography scans (Wang et al., 2010a). After formulating 3D colon registration as a graph matching problem, the authors found an optimal solution by applying mean field theory to what was in essence a quadratic integer programming problem.

Fig. 5.

A hierarchical blob representation of a brain image. The right panel shows the corresponding graph constructed from the blob image. Reproduced with permission from Ref. (Lehmann et al., 2004).

2.11 Training and testing of a learning algorithm

Training and testing play important roles in the evaluation of a machine learning algorithm. Usually, an algorithm is trained on a training set and evaluated on a separate test set. A good training strategy can help find the optimal parameters for a computer-aided detection (CADe) or computer-aided diagnosis (CADx) system. Grid search is the simplest way to tune these parameters: the parameter space of the learning algorithm is divided into hypercubes of equal volume, the algorithm is evaluated at every vertex of every hypercube, and the vertex with the best performance (evaluated on the training or validation set) is selected as the optimal solution.
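Grid search can be written as an exhaustive loop over the Cartesian product of candidate parameter values. In this sketch the tuned algorithm is a k-nearest-neighbor classifier with two parameters, the number of neighbors `k` and the Minkowski order `p`, scored on a validation split; the classifier, the grid values, and the synthetic data are assumptions.

```python
import itertools
import numpy as np

def knn_predict(X_train, y_train, X_test, k, p):
    """k-NN with Minkowski distance of order p; labels are 0/1, k is odd (no ties)."""
    d = (np.abs(X_test[:, None, :] - X_train[None, :, :]) ** p).sum(axis=2) ** (1.0 / p)
    nearest = np.argsort(d, axis=1)[:, :k]
    return (y_train[nearest].mean(axis=1) > 0.5).astype(int)

def grid_search(X_tr, y_tr, X_val, y_val, grid):
    """Evaluate every vertex of the parameter grid; return the best one and all scores."""
    results = {}
    for params in itertools.product(*grid.values()):
        kwargs = dict(zip(grid.keys(), params))
        results[params] = np.mean(knn_predict(X_tr, y_tr, X_val, **kwargs) == y_val)
    best = max(results, key=results.get)
    return dict(zip(grid.keys(), best)), results

# Hypothetical data: a linearly separable two-class problem.
rng = np.random.default_rng(0)
X = rng.normal(size=(120, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
best_params, results = grid_search(X[:80], y[:80], X[80:], y[80:],
                                   grid={"k": [1, 3, 5, 7], "p": [1, 2]})
```

The number of evaluations is the product of the grid sizes, which is why grid search becomes expensive as parameters are added.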

Grid search is computationally expensive and may be infeasible for some large-scale data. A more recent approach to this difficulty is hyperparameter learning (Bengio, 2000; Duan et al., 2003), which tries to find the optimal parameters of a prior distribution based on various model selection criteria. A model selection criterion defines how the best parameters of a model are chosen. Typical strategies for hyperparameter learning include gradient-based optimization and maximum likelihood methods.

To evaluate machine learning algorithms on a particular dataset, one often partitions the dataset in different ways. Popular partitioning strategies include K-fold cross-validation, leave-one-out, and random sampling. In K-fold cross-validation, the whole dataset is partitioned into K subsets; a learning algorithm is trained on K-1 of the subsets and tested on the remaining one, and this procedure is repeated K times so that each subset is used for testing exactly once. Leave-one-out is K-fold cross-validation in which each subset consists of a single sample: each time, one sample is held aside for testing and the algorithm is trained on the others. This process is repeated N times, where N is the number of samples in the dataset, so each sample is tested exactly once. In random sampling, a training set is drawn from the data at random without replacement, and the remaining data serve as the test set. The bootstrap method (Efron and Tibshirani, 1986) can be viewed as a variant of random sampling in which the training samples are drawn with replacement.
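The partitioning strategies can be expressed as index generators that yield (train, test) pairs. For the bootstrap, the sketch tests on the out-of-bag samples, which is one common choice rather than the only bootstrap estimator; function names and defaults are hypothetical.

```python
import numpy as np

def k_fold_indices(n, k, seed=0):
    """Yield (train, test) index arrays; every sample is tested exactly once."""
    perm = np.random.default_rng(seed).permutation(n)
    folds = np.array_split(perm, k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]

def leave_one_out_indices(n):
    # Leave-one-out is K-fold cross-validation with K = N.
    yield from k_fold_indices(n, n)

def random_split_indices(n, test_fraction, n_repeats, seed=0):
    """Repeated hold-out: sample without replacement, the rest is the test set."""
    rng = np.random.default_rng(seed)
    n_test = int(round(n * test_fraction))
    for _ in range(n_repeats):
        perm = rng.permutation(n)
        yield perm[n_test:], perm[:n_test]

def bootstrap_indices(n, n_repeats, seed=0):
    """Train on n draws WITH replacement; test on the out-of-bag samples."""
    rng = np.random.default_rng(seed)
    for _ in range(n_repeats):
        train = rng.integers(0, n, size=n)
        yield train, np.setdiff1d(np.arange(n), train)

for train, test in k_fold_indices(10, 5):
    print(len(train), len(test))
```

Passing the same `seed` to every method being compared keeps the folds identical across methods, which makes their scores directly comparable.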

Different evaluation methods give different estimates of the generalization ability of a machine learning algorithm. An estimation method with low bias and low variance is ideal for estimating the final accuracy of a classifier. Theoretical studies and experimental results on real-world datasets have shown that ten-fold cross-validation is better than the more computationally expensive leave-one-out cross-validation for model selection, i.e., selecting a good classifier from a set of classifiers (Kohavi, 1995). For random sampling, a high ratio of test to training data tends to give estimates with high bias and high variance because of insufficient training samples. The advantage of random sampling is that, for small datasets, it can test a classifier thoroughly by varying the training set multiple times.

In many radiology applications, detection and diagnostic decision-making play important roles in clinical practice. Receiver operating characteristic (ROC) analysis provides a practical tool for model selection. An ROC curve shows the trade-off between sensitivity and false positive rate as the threshold on the decision variable of a binary classifier is varied (Zweig and Campbell, 1993). Cost/benefit factors, which are important in diagnostic decision-making, can be embedded directly and naturally in ROC analysis.
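An ROC curve can be computed by sorting cases by decision score and sweeping the threshold from high to low. The sketch also picks the operating point that minimizes an expected cost built from assumed misclassification costs and disease prevalence, one simple way of embedding cost/benefit factors. Function names and the toy scores are illustrative.

```python
import numpy as np

def roc_curve(scores, labels):
    """Return (fpr, tpr, thresholds) as the decision threshold is lowered."""
    order = np.argsort(-scores, kind="mergesort")
    scores, labels = scores[order], labels[order]
    # Last index of each run of tied scores, so ties form a single threshold.
    distinct = np.r_[np.nonzero(np.diff(scores))[0], len(scores) - 1]
    tps = np.cumsum(labels)[distinct]
    fps = (distinct + 1) - tps
    tpr = np.r_[0.0, tps / labels.sum()]                    # sensitivity
    fpr = np.r_[0.0, fps / (len(labels) - labels.sum())]    # 1 - specificity
    return fpr, tpr, np.r_[np.inf, scores[distinct]]

def auc(fpr, tpr):
    # Trapezoidal area under the ROC curve.
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

def min_cost_threshold(fpr, tpr, thresholds, prevalence, cost_fn, cost_fp):
    # Expected cost per case = C_FN * P(disease) * (1 - TPR) + C_FP * P(healthy) * FPR
    cost = cost_fn * prevalence * (1 - tpr) + cost_fp * (1 - prevalence) * fpr
    i = int(np.argmin(cost))
    return thresholds[i], cost[i]

scores = np.array([0.9, 0.8, 0.7, 0.6])
labels = np.array([1, 0, 1, 0])
fpr, tpr, thr = roc_curve(scores, labels)
```

Raising `cost_fn` relative to `cost_fp` moves the chosen threshold down, trading more false positives for higher sensitivity, which is the usual preference in screening.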

3. Applications of machine learning in radiology

In this section, we will introduce some typical applications of machine learning in radiology.

3.1 Medical image segmentation

Medical images contain many structures including normal structures such as organs, bones, muscles, fat, and abnormal structures such as tumors and fractures. Segmentation is the process of identifying structures, both normal and abnormal, in the images. It is fundamental to the interpretation of medical images. Learning how to segment anatomic structures is a critical part of medical image segmentation. Segmenting structures from medical images is not trivial due to the complexity and variability of the region-of-interest. Examples of problems that complicate medical image segmentation include normal anatomic variation, post-surgical anatomic variation, vague and incomplete boundaries, inadequate contrast, artifacts and noise.

The concept of a graph provides an elegant way to abstract image information that is useful for segmentation. Graph cuts are segmentation methods that are based on graphs and use flows between source and sink nodes (Greig et al., 1989). Shi and Malik proposed a graph partitioning method called “normalized cut” for image segmentation (Shi and Malik, 2000). In graph-theoretic language, given a graph G = (V, E), where V is a set of vertices and E a set of edges, the cut between two disjoint sets A and B of G is defined as $\mathrm{cut}(A,B) = \sum_{u \in A,\, v \in B} w(u,v)$, where w(u, v) is the weight of the edge connecting node u in A and node v in B. The cut measures the dissimilarity between the two subsets, and minimizing the cut value yields an optimal bipartition of the graph under this criterion. For real-world data, however, the minimum cut criterion favors cutting off small sets of isolated nodes. To solve this problem, Shi and Malik proposed the normalized cut (Ncut) measure, which penalizes partitions containing small isolated sets and gives more balanced partitions than the ordinary cut.
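Shi and Malik define Ncut(A, B) = cut(A, B)/assoc(A, V) + cut(A, B)/assoc(B, V), where assoc(A, V) is the total weight of edges from A to all nodes, and approximate its minimization with the eigenvector for the second smallest eigenvalue of (D − W)y = λDy, where D is the diagonal degree matrix. The sketch follows that recipe on a tiny fully connected graph of eight pixel intensities; the Gaussian affinity and its width σ = 20 are assumptions, and the threshold search over the eigenvector is a simple version of the splitting-point search.

```python
import numpy as np

def cut_value(W, mask):
    # cut(A, B): total weight of edges between A (mask) and B (~mask).
    return W[mask][:, ~mask].sum()

def ncut_value(W, mask):
    c = cut_value(W, mask)
    # W[mask].sum() is assoc(A, V), the total edge weight from A to all nodes.
    return c / W[mask].sum() + c / W[~mask].sum()

def ncut_bipartition(W):
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Symmetric normalized Laplacian D^(-1/2) (D - W) D^(-1/2).
    L_sym = np.eye(len(W)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(L_sym)               # eigenvalues in ascending order
    # y = D^(-1/2) z solves (D - W) y = lambda D y for the second smallest lambda.
    y = d_inv_sqrt * vecs[:, 1]
    # Try every splitting point of y and keep the split with the smallest Ncut.
    candidates = [y > t for t in np.unique(y)[:-1]]
    return min(candidates, key=lambda m: ncut_value(W, m))

# Hypothetical 1-D "image": two intensity populations.
intensities = np.array([10, 11, 10, 12, 50, 51, 49, 50], dtype=float)
W = np.exp(-((intensities[:, None] - intensities[None, :]) ** 2) / 20.0 ** 2)
mask = ncut_bipartition(W)
```

For real images the affinity usually also decays with spatial distance and is truncated to a neighborhood, which keeps W sparse.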

Graph cuts have many applications in medical image segmentation including interactive organ segmentation for 3D CT and MRI images (Boykov and Jolly, 2000), multiple sclerosis lesion segmentation in MRI (García-Lorenzo et al., 2009), segmentation of the left myocardium in four-dimensional (3D space + time) cardiac MRI data (Kedenburg et al., 2006), and lung segmentation from volumetric low-dose CT scans (Ali and Farag, 2008). Some of these applications are fully-automated.

As introduced in Sec. 2, Markov random fields (MRFs) have wide applications in medical image segmentation (Held et al., 1997; Towhidkhah et al., 2008; Zhang et al., 2001). The graph model of an MRF naturally represents the voxels of a 2D/3D medical image and their spatial relationships. By Bayes' theorem, given an observed image I, the posterior probability of a segmentation S is p(S|I) ∝ p(I|S) p(S), so it can be inferred from the prior distribution p(S) of the labels and the conditional distribution p(I|S). The model can then be solved using the maximum a posteriori (MAP) criterion. In MRF models, the parameters controlling spatial interactions strongly influence the smoothness of the segmentation results (Pham et al., 2000); in practice, one usually needs to balance smoothness against the preservation of important structural details. In addition, MRF models are usually computationally hard to solve.
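MAP estimation for an MRF can be approximated with iterated conditional modes (ICM), which repeatedly sets each pixel to the label that minimizes its local energy. The sketch assumes a Gaussian likelihood p(I|S) with known class means and a Potts prior p(S) on 4-neighbors, whose weight `beta` controls the smoothness trade-off mentioned above; ICM is one of several approximate solvers and only finds a local optimum. The image and parameters are hypothetical.

```python
import numpy as np

def mrf_energy(labels, image, means, sigma, beta):
    """-log posterior up to a constant: Gaussian data term + Potts smoothness term."""
    data = ((image - means[labels]) ** 2).sum() / (2 * sigma ** 2)
    smooth = ((labels[1:, :] != labels[:-1, :]).sum()
              + (labels[:, 1:] != labels[:, :-1]).sum())
    return data + beta * smooth

def icm_segmentation(image, means, sigma=1.0, beta=1.0, n_sweeps=10):
    """Approximate MAP labelling by iterated conditional modes (ICM)."""
    means = np.asarray(means, dtype=float)
    # Start from the per-pixel maximum-likelihood label.
    labels = np.argmin((image[..., None] - means) ** 2, axis=-1)
    H, W = image.shape
    for _ in range(n_sweeps):
        changed = 0
        for r in range(H):
            for c in range(W):
                nbrs = [labels[rr, cc] for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                        if 0 <= rr < H and 0 <= cc < W]
                # Local energy of each candidate label: data term + disagreeing neighbours.
                local = [(image[r, c] - m) ** 2 / (2 * sigma ** 2) + beta * sum(n != k for n in nbrs)
                         for k, m in enumerate(means)]
                best = int(np.argmin(local))
                if best != labels[r, c]:
                    labels[r, c] = best
                    changed += 1
        if changed == 0:
            break
    return labels

# Hypothetical noisy image of a bright square on a dark background.
rng = np.random.default_rng(0)
truth = np.zeros((20, 20), dtype=int)
truth[6:14, 6:14] = 1
means = np.array([0.0, 1.0])
image = means[truth] + rng.normal(0.0, 0.6, truth.shape)
seg = icm_segmentation(image, means, sigma=0.6, beta=1.0)
ml = np.argmin((image[..., None] - means) ** 2, axis=-1)
```

Each ICM update can only lower (or keep) the total energy, so the result is never worse than the maximum-likelihood start under this model; larger `beta` gives smoother but potentially over-smoothed labels.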

Although cluster analysis in machine learning and pattern recognition was not originally designed for medical image segmentation, many clustering algorithms can be applied directly to medical image segmentation problems because the objectives of clustering and of image segmentation overlap substantially. Examples include applying the fuzzy c-means clustering algorithm to brain MR image segmentation (Chen et al., 2007), segmenting thalamic nuclei from DTI using spectral clustering (Ziyan et al., 2006), and segmenting 3D cardiac CT datasets using random walks (Grady, 2006).
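Fuzzy c-means differs from k-means in that each voxel has a graded membership in every cluster. The sketch implements the standard alternating updates with fuzzifier m = 2 on synthetic one-dimensional intensities; the data, the random initialization, and the parameter defaults are assumptions, not the settings of Chen et al.

```python
import numpy as np

def fuzzy_c_means(X, c, m=2.0, n_iter=100, tol=1e-6, seed=0):
    """Standard fuzzy c-means. X: (n_samples, n_features). Returns (U, centers)."""
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], c))
    U /= U.sum(axis=1, keepdims=True)          # memberships of each sample sum to 1
    for _ in range(n_iter):
        Um = U ** m
        # Centers: membership-weighted means.
        centers = (Um.T @ X) / Um.sum(axis=0)[:, None]
        d = np.fmax(np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2), 1e-12)
        # u_ik = 1 / sum_j (d_ik / d_ij)^(2 / (m - 1))
        U_new = 1.0 / ((d[:, :, None] / d[:, None, :]) ** (2.0 / (m - 1))).sum(axis=2)
        converged = np.max(np.abs(U_new - U)) < tol
        U = U_new
        if converged:
            break
    return U, centers

# Hypothetical intensities from two tissue classes.
rng = np.random.default_rng(1)
X = np.concatenate([rng.normal(30, 3, 200), rng.normal(80, 3, 200)]).reshape(-1, 1)
U, centers = fuzzy_c_means(X, c=2)
hard_labels = np.argmax(U, axis=1)             # defuzzified segmentation, if needed
```

The soft memberships are useful at partial-volume voxels, where a single hard label would discard the fact that a voxel mixes two tissues.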

In medical image segmentation, flexibility is often required to adapt the segmentation to the variability of biological structures over time and across different individuals (McInerney and Terzopoulos, 1996). Deformable models can sometimes help deal with such variability. Deformable models can be contours (known as snakes or active contours) for 2D images and surfaces for 3D images. Deformable models combine elements from geometry, physics, approximation theory, and machine learning. Geometry provides a way to represent the object boundary. Constraints are imposed on the geometric representation of the object to limit the way it can evolve, and these constraints often incorporate physical principles such as force and elasticity. Approximation theory and machine learning help fit the model to the data and learn the best parameters of the model and the deformation. For detailed information on how deformable models describe object shapes in a compact and analytical way and incorporate anatomic constraints, readers are referred to a review paper on this topic (McInerney and Terzopoulos, 1996).

In many radiology images, the objects to be segmented have irregular shapes and complicated topologies. Level set-based segmentation methods provide a natural and flexible way to handle such objects (Cremers et al., 2007; Malladi et al., 1995). In the level set approach, the boundary is represented as the zero level set of a higher-dimensional function (hypersurface). By casting the segmentation problem into this higher-dimensional space, the motion of the hypersurface under the control of a speed function moves the initial boundary, and by using image information such as edges and gray values, the evolution can be stopped at the object boundary (Malladi et al., 1995). Level sets have been widely used in medical image segmentation, for example of the brain (Baillard et al., 2001; Ciofolo and Barillot, 2005; Yang et al., 2003), heart (Lin et al., 2003; Paragios, 2003; Yang et al., 2003), liver (Lee et al., 2007; Smeets et al., 2010), and colon (Franaszek et al., 2006; Konukoglu et al., 2007; Uitert and Summers, 2007).
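A level set evolution can be sketched with the first-order upwind scheme for φ_t + F|∇φ| = 0, using an edge-stopping speed F = 1/(1 + |∇I|²) so that the front slows down at strong edges. The speed function, time step, and synthetic disk image are assumptions; practical methods add curvature terms and periodic reinitialization, which are omitted here.

```python
import numpy as np

def edge_speed(image):
    # F = 1 / (1 + |grad I|^2): close to 1 in flat regions, close to 0 at strong edges.
    gy, gx = np.gradient(image.astype(float))
    return 1.0 / (1.0 + gx ** 2 + gy ** 2)

def evolve_level_set(phi, speed, dt=0.4, n_steps=100):
    """Evolve phi_t + F |grad phi| = 0 (outward motion for F >= 0), first-order upwind."""
    phi = phi.copy()
    for _ in range(n_steps):
        p = np.pad(phi, 1, mode="edge")
        dxm = p[1:-1, 1:-1] - p[1:-1, :-2]      # backward differences
        dxp = p[1:-1, 2:] - p[1:-1, 1:-1]       # forward differences
        dym = p[1:-1, 1:-1] - p[:-2, 1:-1]
        dyp = p[2:, 1:-1] - p[1:-1, 1:-1]
        # Osher-Sethian upwind gradient magnitude for an outward-moving front.
        grad = np.sqrt(np.maximum(dxm, 0) ** 2 + np.minimum(dxp, 0) ** 2 +
                       np.maximum(dym, 0) ** 2 + np.minimum(dyp, 0) ** 2)
        phi -= dt * speed * grad
    return phi

# Hypothetical image: a bright disk of radius 15; seed contour: circle of radius 3.
yy, xx = np.mgrid[0:64, 0:64]
r = np.sqrt((yy - 32) ** 2 + (xx - 32) ** 2)
image = np.where(r < 15, 100.0, 0.0)
phi0 = r - 3.0                                  # signed distance, negative inside the seed
phi = evolve_level_set(phi0, edge_speed(image))
segmented = phi < 0                             # region enclosed by the zero level set
```

Because the boundary is implicit, the same update would handle a front that splits or merges, which is the topological flexibility the text refers to.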

For a machine learning algorithm, making full use of the training set is key to its success on the test set. In deformable models and level set-based segmentation methods, training information is incorporated implicitly (through parameter learning). In contrast, active shape models (ASMs) use training shape information more explicitly: they build a shape model from training images and adapt the model to a new test image through iterative optimization (Cootes et al., 1995). Cootes et al. later extended ASMs to active appearance models (AAMs) by incorporating the appearance of objects in an image (Cootes et al., 2001). Applications of ASMs and AAMs in radiology include cardiac MR and ultrasound images (Mitchell et al., 2002), prostate segmentation (Shen et al., 2003), and segmentation of thrombus in abdominal aortic aneurysms (de Bruijne et al., 2003). More discussion of statistical shape models for 3D medical image segmentation can be found in a review paper (Heimann and Meinzer, 2009).
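The core of an ASM, the point distribution model, can be sketched as PCA on aligned landmark vectors: a shape is approximated as x ≈ x̄ + Pb, with each coefficient b_i limited to a commonly used range of ±3√λ_i. The sketch assumes the training shapes are already aligned (e.g., by Procrustes analysis) and omits the image search step; the ellipse training set and function names are hypothetical.

```python
import numpy as np

def build_shape_model(shapes, var_kept=0.98):
    """Point distribution model from pre-aligned shapes of shape (n_shapes, n_points, 2)."""
    X = shapes.reshape(len(shapes), -1)         # one landmark vector per training shape
    mean = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X - mean, rowvar=False))
    order = np.argsort(evals)[::-1]
    evals, evecs = np.clip(evals[order], 0, None), evecs[:, order]
    # Keep the smallest number of modes explaining var_kept of the total variance.
    t = int(np.searchsorted(np.cumsum(evals) / evals.sum(), var_kept) + 1)
    return mean, evecs[:, :t], evals[:t]

def fit_shape(target, mean, P, evals, limit=3.0):
    """Project a candidate shape onto the model and clamp b to +/- limit * sqrt(lambda)."""
    b = P.T @ (target.reshape(-1) - mean)
    b = np.clip(b, -limit * np.sqrt(evals), limit * np.sqrt(evals))
    return (mean + P @ b).reshape(-1, 2), b

# Hypothetical training set: 30 ellipses with random semi-axes, 20 landmarks each.
rng = np.random.default_rng(0)
theta = np.linspace(0, 2 * np.pi, 20, endpoint=False)
axes = rng.uniform(1.0, 2.0, size=(30, 2))
shapes = np.stack([np.column_stack([a * np.cos(theta), b * np.sin(theta)]) for a, b in axes])
mean, P, evals = build_shape_model(shapes)
target = np.column_stack([1.5 * np.cos(theta), 1.2 * np.sin(theta)])
fitted, coeffs = fit_shape(target, mean, P, evals)
```

Clamping the coefficients is what keeps the fitted boundary anatomically plausible when the image evidence is noisy; the training variation here has exactly two degrees of freedom, so two modes suffice.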

In recent years, joint categorization and segmentation (JCaS) has become a hot topic in computer vision (Ladicky et al., 2010; Singaraju and Vidal, 2011). In JCaS, the objects of interest in a 2D image are categorized and segmented simultaneously, and each pixel in the image is assigned an object category label. MRFs are widely used in JCaS to model the image and the corresponding segmentation. To model the local properties of each node and the interactions among nodes in the MRF, one usually defines potential functions (known as “potentials”); unary and pairwise potentials are typical choices in 2D image segmentation. Given the images, the MRF can be solved using maximum a posteriori estimation and expectation-maximization. Compared with traditional MRFs for image segmentation, JCaS incorporates higher-order potentials that encode the classification cost of statistical features extracted from objects in an image. This is usually done by encoding the output of a classifier in a potential function that can capture global or higher-order interactions among the objects of interest. Since segmentation and categorization of various organs are fundamental tasks in many radiological applications, we expect JCaS to find wide application in radiology in the near future.

3.2 Medical image registration

Image registration is another application of machine learning. During a medical examination, a patient may be scanned with different imaging modalities (Studholme et al., 1996), or with the same modality at different positions, at different times, or under different conditions (with or without contrast agents). These images are usually complementary, and in combination they may lead to a more accurate diagnosis. To integrate all the information, the first step is to align the images spatially, a procedure referred to as registration or matching (Hill et al., 2001; Lester and Arridge, 1999; Maintz and Viergever, 1998). Machine learning plays a key role in medical image registration by learning the best registration or registration parameters under different matching criteria.

Mutual information is a good measure of the interdependence of two images. Registration based on mutual information has therefore drawn much attention in recent years and serves as the basis of many medical image registration methods (Pluim et al., 2003). Given two images (2D or 3D) to be registered, let one be the reference image u and the other the test image v. The registration problem can be formulated as $T^{*} = \arg\max_{T} I(u(x), v(T(x)))$, where x are the coordinates of a voxel, T is a transformation from the coordinate system of the reference image to that of the test image, and I is the mutual information between u and v: $I(u(x), v(T(x))) \equiv h(u(x)) + h(v(T(x))) - h(u(x), v(T(x)))$. Here $h(x) = -\int p(x) \log p(x)\,dx$ and $h(x,y) = -\iint p(x,y) \log p(x,y)\,dx\,dy$ are the marginal and joint entropies, and p(x, y) is the joint probability density of x and y, which can be estimated using Parzen windowing (Pluim et al., 2003). Registration methods based on mutual information can be classified by the transformation and the optimization method they employ. For example, the transformations used include rigid (Holden et al., 2000), affine (Radau et al., 2001), perspective, and curved (Chui and Rangarajan, 2003; Meyer et al., 1999) transformations. Widely used optimization methods include gradient-based methods (Maes et al., 1999), such as steepest gradient descent, conjugate-gradient, quasi-Newton, and least-squares methods, and non-gradient-based methods, such as interpolation (Zhu and Cochoff, 2002), probability density function (pdf) estimation (Shekhar and Zagrodsky, 2002), and optimization and acceleration (Studholme et al., 1997). In addition, mutual information based on intensity alone may not be adequate; Papademetris et al. combined point features and intensity information for non-rigid registration (Papademetris et al., 2004).
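The registration criterion can be illustrated with a histogram-based MI estimate (a joint histogram rather than Parzen windowing) and an exhaustive search over integer translations, the simplest rigid transformation family. The wrap-around shifting via `np.roll`, the bin count, and the synthetic image pair are simplifications for this example.

```python
import numpy as np

def mutual_information(a, b, bins=32):
    """I(A;B) = h(A) + h(B) - h(A,B), estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    p_a, p_b = p_ab.sum(axis=1), p_ab.sum(axis=0)

    def entropy(p):
        p = p[p > 0]
        return -np.sum(p * np.log(p))

    return entropy(p_a) + entropy(p_b) - entropy(p_ab.ravel())

def register_translation(u, v, max_shift=5, bins=32):
    """Search integer translations T(x) = x + (dy, dx) maximizing I(u(x), v(T(x)))."""
    best, best_mi = (0, 0), -np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # warped[x] = v[x + (dy, dx)]; np.roll wraps around (a simplification).
            warped = np.roll(v, shift=(-dy, -dx), axis=(0, 1))
            mi = mutual_information(u, warped, bins)
            if mi > best_mi:
                best, best_mi = (dy, dx), mi
    return best, best_mi

# Hypothetical pair: v is u shifted by (3, -2) with inverted intensities,
# mimicking a modality whose contrast differs from the reference.
rng = np.random.default_rng(0)
u = rng.integers(0, 256, size=(64, 64)).astype(float)
v = 255.0 - np.roll(u, shift=(3, -2), axis=(0, 1))
shift, mi = register_translation(u, v)
```

Plain intensity differences would fail on this pair because the contrast is inverted; mutual information only requires a consistent statistical relationship between the intensities.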

Wang et al. proposed a graph matching method based on mean field theory for computed tomographic colonography (CTC) scan registration (Wang et al., 2010b). They first formulated colon registration as a graph matching problem and then proposed a matching algorithm based on mean field theory. During the iterative optimization process, one-to-one matching constraints were added to the system step by step, and prominent matching pairs found in previous iterations were used to guide subsequent mean field calculations. Graphs also provide a concise and efficient representation of medical objects.

The thin-plate spline (TPS) is an important tool for medical image registration (Bookstein, 1991). TPS can be considered a natural non-rigid extension of the affine map, obtained by minimizing a bending energy based on the second derivatives of the spatial mapping (Bookstein, 1991). Chui and Rangarajan developed an algorithm that combines TPS with robust point matching (RPM), where TPS provides the parameterization of the non-rigid spatial mapping and RPM solves the correspondence problem (Chui and Rangarajan, 2003). Work combining mutual information and TPS can be found in Meyer et al. (Meyer et al., 1997). Readers interested in brain registration may consult Klein et al. (Klein et al., 2009), who evaluated and ranked 14 nonlinear deformation algorithms for human brain MRI registration.
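A 2D TPS can be fitted by solving one linear system for the radial-basis weights w and the affine part a, with kernel U(r) = r² log r² and side conditions that keep the non-affine part orthogonal to affine maps. The sketch interpolates the landmarks exactly (no regularization by default); the landmark coordinates and function names are hypothetical.

```python
import numpy as np

def tps_kernel(r2):
    # U(r) = r^2 log r^2, with U(0) = 0.
    with np.errstate(divide="ignore", invalid="ignore"):
        k = r2 * np.log(r2)
    return np.nan_to_num(k)

def fit_tps(src, dst, reg=0.0):
    """Solve [K + reg*I, P; P^T, 0] [w; a] = [dst; 0] for a 2-D thin-plate spline."""
    n = len(src)
    r2 = ((src[:, None, :] - src[None, :, :]) ** 2).sum(axis=2)
    K = tps_kernel(r2) + reg * np.eye(n)
    P = np.hstack([np.ones((n, 1)), src])       # affine basis [1, x, y]
    A = np.zeros((n + 3, n + 3))
    A[:n, :n], A[:n, n:], A[n:, :n] = K, P, P.T
    params = np.linalg.solve(A, np.vstack([dst, np.zeros((3, 2))]))
    return params[:n], params[n:]               # non-affine weights w, affine part a

def apply_tps(points, src, w, a):
    r2 = ((points[:, None, :] - src[None, :, :]) ** 2).sum(axis=2)
    return tps_kernel(r2) @ w + a[0] + points @ a[1:]

# Hypothetical landmarks: only the central one is displaced.
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
dst = src.copy()
dst[4] += [0.1, -0.05]
w, a = fit_tps(src, dst)
warped_landmarks = apply_tps(src, src, w, a)
warped_grid = apply_tps(np.array([[0.25, 0.25], [0.75, 0.5]]), src, w, a)
```

Setting `reg` above zero turns exact interpolation into smoothing, which is useful when landmark positions are themselves noisy.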

Diffeomorphic registration is another widely used type of registration in medical image analysis. It seeks a smooth, invertible function that maps one differentiable manifold (image) to another; ideally, the composition of the mapping and its inverse should be close to the identity transform. Ashburner proposed a fast diffeomorphic registration framework called DARTEL, which uses a Levenberg-Marquardt strategy to optimize the registration problem (Ashburner, 2007). Rueckert et al. used B-splines to parameterize the deformation field in diffeomorphic registration (Rueckert et al., 2006). Vercauteren et al. studied non-parametric diffeomorphic image registration by adapting Thirion's demons algorithm to the space of diffeomorphic transformations (Vercauteren et al., 2007). Avants et al. tackled the problem using cross-correlation within the space of diffeomorphic maps and developed a symmetric registration method (Avants et al., 2008).

3.3 Computer-aided detection and diagnosis systems for CT or MRI images

To assist doctors in the interpretation of medical images, computer-aided detection (CADe) and computer-aided diagnosis (CADx) provide an effective way to reduce reading time, increase detection sensitivity, and improve diagnostic accuracy. CADe and CADx are young interdisciplinary technologies that combine elements of digital image processing, machine learning, pattern recognition, and medical domain knowledge (Doi, 2005, 2007; Kononenko, 2001; Sajda, 2006).

Lung cancer is the leading cause of cancer-related death among both men and women in the United States. According to cancer statistics, an estimated 221,130 new cases of lung cancer and 156,940 deaths occurred in the United States in 2011 (http://www.cancer.gov/cancertopics/types/lung). In current lung cancer diagnosis, computed tomography (CT) screening is a standard procedure that is superior to traditional chest radiography for detecting lung nodules (potential lung cancers) (Kaneko et al., 1996; Swensen et al., 2002).

An essential initial component of image interpretation and diagnosis is the identification of normal anatomical structures in the image. Because the lung has very complicated structures, a fully automated approach to distinguishing normal lung structures would improve the utility of a lung CAD system. Ochs et al. (Ochs et al., 2007) employed AdaBoost to train a set of ensemble classifiers. The training CT images were labeled by radiologists with the following categories: airways (trachea and bronchi to the 6th generation), major and minor lobar fissures, nodules, vessels (hilum to periphery), and normal lung parenchyma.
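AdaBoost with decision stumps, the classic weak learner, can be sketched as follows. This is a generic discrete AdaBoost, not the specific classifier configuration of Ochs et al.; the synthetic features stand in for image features, and the helper names are hypothetical.

```python
import numpy as np

def stump_predict(X, j, thr, polarity):
    return np.where(polarity * (X[:, j] - thr) >= 0, 1, -1)

def fit_stump(X, y, w):
    """Best single-feature threshold classifier under sample weights w (y in {-1, +1})."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = w[stump_predict(X, j, thr, polarity) != y].sum()
                if err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def adaboost(X, y, n_rounds=20):
    w = np.full(len(y), 1.0 / len(y))
    ensemble = []
    for _ in range(n_rounds):
        err, j, thr, pol = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)    # avoid division by zero for perfect stumps
        alpha = 0.5 * np.log((1 - err) / err)   # vote weight of this weak learner
        w *= np.exp(-alpha * y * stump_predict(X, j, thr, pol))  # up-weight mistakes
        w /= w.sum()
        ensemble.append((alpha, j, thr, pol))
    return ensemble

def adaboost_predict(ensemble, X):
    score = sum(alpha * stump_predict(X, j, thr, pol) for alpha, j, thr, pol in ensemble)
    return np.where(score >= 0, 1, -1)

# Hypothetical two-feature data with labels in {-1, +1}.
rng = np.random.default_rng(0)
X = rng.random((60, 2))
y = np.where(X[:, 0] > 0.5, 1, -1)
model = adaboost(X, y, n_rounds=5)
acc = np.mean(adaboost_predict(model, X) == y)
```

A multi-class problem such as the airway, fissure, vessel, and parenchyma labels above would need a multi-class variant or a set of one-versus-rest ensembles.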

Usually, a CAD system is designed and optimized to detect a specific disease, i.e., single-task learning. However, different diseases may share similar characteristics (for example, lung nodules and ground glass opacities), so training a classifier to perform multiple tasks may improve its performance and utility. Multi-task learning (Caruana, 1997) exploits the fact that such related problems may share the same representation. A typical example of a multi-task CAD system can be found in the work of Bi et al. (Bi et al., 2008), whose multi-task learning algorithms eliminate irrelevant features and identify discriminative features for each sub-task. They showed promising results in predicting lung cancer prognosis and in heart wall motion analysis.

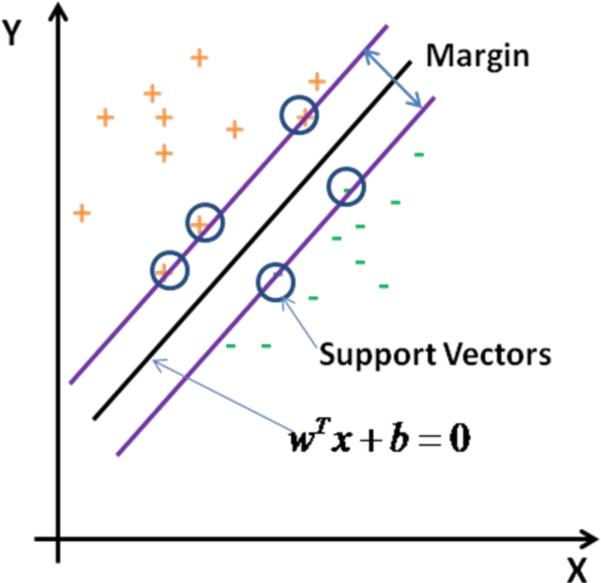

Pulmonary embolism (PE) is a blockage of the main artery of the lung or one of its branches by a substance (usually a blood clot) that has travelled to the lung through the bloodstream from another part of the body (Fig. 6). Since pulmonary embolism can be life-threatening, early diagnosis can improve the survival rate. Computed tomography angiography (CTA) provides an accurate diagnostic tool for PE when it is combined with a CADx system (Schoepf and Costello, 2004; Schoepf et al., 2007). Liang and Bi proposed a fast, effective approach to PE diagnosis (Liang and Bi, 2007). Segmentation of emboli in CTA is a very challenging task because partial volume effects around the vessel boundaries make PE voxels and vessel boundaries indistinguishable. To solve this problem, they proposed an algorithm called concentration-oriented tobogganing. They extracted 116 features from initial PE candidates and, to reduce false positives, applied multiple-instance classification to make the final diagnosis. This PE CAD system reported 80% sensitivity at 4 false positives per patient on a CTA dataset of 177 cases. Fung et al. also employed multiple-instance learning for diagnosis of PE (Fung et al., 2007). Their work focused on learning a convex hull representation of multiple instances, which enabled their algorithm to be significantly faster than existing multiple-instance learning algorithms. More information on PE CAD systems can be found in a review paper (Chan et al., 2008).

Fig. 6.

Pulmonary embolism (circled in yellow) in the artery of a 52-year-old male patient.
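Operating points such as 80% sensitivity at 4 false positives per patient come from a free-response ROC (FROC) analysis. The sketch below shows one way to compute such points when each embolus is treated as a bag of candidates, in the multiple-instance spirit described above. The `Candidate` record, the rule that a bag counts as detected when any of its candidates passes the threshold, and the function names are illustrative assumptions. This is not the scoring code of Liang and Bi, and it implements neither tobogganing nor the convex-hull method of Fung et al.

```python
from collections import namedtuple

# Hypothetical candidate record: classifier score and the ID of the true
# embolus the candidate overlaps (None if it hits no embolus).
Candidate = namedtuple("Candidate", "score pe_id")

def operating_point(candidates, n_patients, all_pe_ids, threshold):
    """Embolus-level sensitivity and false positives per patient at one threshold.

    Multiple-instance view: each embolus is a bag of candidates and counts as
    detected if ANY of its candidates scores at or above the threshold.
    """
    detected = {c.pe_id for c in candidates
                if c.pe_id is not None and c.score >= threshold}
    false_pos = sum(1 for c in candidates
                    if c.pe_id is None and c.score >= threshold)
    return len(detected) / len(all_pe_ids), false_pos / n_patients

def froc_points(candidates, n_patients, all_pe_ids):
    # Sweep the threshold over every observed score to trace the FROC curve
    return [operating_point(candidates, n_patients, all_pe_ids, t)
            for t in sorted({c.score for c in candidates}, reverse=True)]
```

Lowering the threshold can only add detections and false positives, so the points move up and to the right along the FROC curve; a reported operating point is one such (sensitivity, false positives per patient) pair.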

Colon cancer is the second leading cause of cancer-related death in the United States. Computed tomographic colonography (CTC), also known as virtual colonoscopy (VC) when a fly-through viewing mode is used, provides a less-invasive alternative to optical colonoscopy in screening patients for colonic polyps. Computer-aided polyp detection software has improved rapidly and is highly accurate (Summers, 2010; Yoshida and Dachman, 2006).

CAD systems for detecting polyps on CTC have been under investigation over the past decade. Feature extraction and classification are two critical steps in a successful CTC CAD system. CAD systems first extract multiple features from the images, such as curvature, shape index, curvedness, surface normal overlap, and texture. Summers et al. (Summers et al., 2005) employed curvature-related features to find polyp candidates. Yoshida and Nappi (Yoshida and Nappi, 2001) applied the shape index and curvedness measures to describe polyp candidates. Paik et al. (Paik et al., 2004) developed a method called surface normal overlap that can capture the shape of polyp candidates. Wang et al. (Wang et al., 2005) proposed a polyp detection method that employs geometrical, morphological, and textural features inside polyp candidates.
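The shape index and curvedness are commonly defined from the two principal curvatures k1 >= k2 of the colonic surface as SI = 1/2 - (1/pi) arctan[(k1 + k2)/(k1 - k2)] and CV = sqrt((k1^2 + k2^2)/2). The NumPy sketch below assumes the principal curvatures have already been estimated, for example from a smoothed iso-surface. The function name and the sign convention, under which a cap-like polyp has negative curvatures and therefore SI = 1, are assumptions.

```python
import numpy as np

def shape_index_curvedness(k_a, k_b):
    """Shape index (0..1) and curvedness from two principal curvatures.

    Assumed sign convention: caps such as polyps have negative curvatures,
    so cap -> 1.0, ridge -> 0.75, saddle -> 0.5, rut -> 0.25, cup -> 0.0.
    Flat points (both curvatures zero) have no defined shape and return 0.5.
    """
    k1 = np.maximum(k_a, k_b)  # ensure k1 >= k2
    k2 = np.minimum(k_a, k_b)
    # arctan2(y, x) equals arctan(y / x) here because k1 - k2 >= 0, and it
    # handles umbilic points (k1 == k2) without dividing by zero.
    si = 0.5 - np.arctan2(k1 + k2, k1 - k2) / np.pi
    cv = np.sqrt((k1 ** 2 + k2 ** 2) / 2.0)
    return si, cv
```

Shape index separates cap-like polyps from folds (ridges) and flat wall, while curvedness is scale-dependent: for a spherical cap of radius r it equals 1/r, so it helps reject candidates that are too small or too large.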

A well-functioning classifier is a critical component of a practical CTC CAD system. Example classifiers for CTC CAD systems include neural networks and binary classification trees (Jerebko et al., 2003b), committees of SVMs (Malley et al., 2003), quadratic discriminant analysis (Yoshida and Nappi, 2001), massive-training artificial neural networks (MTANNs) (Suzuki et al., 2008), and logistic regression (van Ravesteijn et al., 2010). To extract more useful information from noisy, high-dimensional features, Wang et al. introduced dimensionality reduction and multiple kernel learning to CTC CAD and showed promising results (Wang et al., 2008a; Wang et al., 2010c).
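Multiple kernel learning combines several kernels, for example one per feature group such as shape or texture, into a weighted sum. Full MKL, as used by Wang et al., learns the weights jointly with the classifier. The simpler sketch below instead sets each weight in proportion to the kernel's centered alignment with the labels and then fits a kernel ridge classifier. The alignment heuristic, the Gaussian kernels, `gamma`, and `lam` are illustrative assumptions, not details from the cited work.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    # Gaussian kernel exp(-gamma * ||a - b||^2) between the rows of A and B
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def centered_alignment(K, y):
    # Centered alignment between K and the ideal target kernel y y^T
    n = len(y)
    H = np.eye(n) - np.ones((n, n)) / n
    Kc, Yc = H @ K @ H, H @ np.outer(y, y) @ H
    return (Kc * Yc).sum() / (np.linalg.norm(Kc) * np.linalg.norm(Yc))

def alignment_weights(kernels, y):
    # Nonnegative weights proportional to alignment, normalized to sum to 1.
    # Assumes at least one kernel has positive alignment with the labels.
    a = np.array([max(centered_alignment(K, y), 0.0) for K in kernels])
    return a / a.sum()

def fit_kernel_ridge(K, y, lam=1e-2):
    # Dual coefficients of kernel ridge regression on +/-1 labels;
    # predict with np.sign(K_test_train @ alpha)
    return np.linalg.solve(K + lam * np.eye(len(y)), y)
```

With one informative and one pure-noise feature group, the informative group's kernel receives the larger weight, which is the behavior a learned MKL combination is also meant to show.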

CADe and CADx have also been widely applied to breast tumor detection and diagnosis (Cheng et al., 2003). Breast cancer is the second leading cause of cancer death in U.S. women. In the United States, the lifetime risk for breast cancer is 12.5%, with a 3% chance of death (http://www.cancer.org/Cancer/BreastCancer/index). The most widely used diagnostic and screening tool for breast cancer is mammography, which uses low-dose X-rays to image the human breast (Kerlikowske et al., 1995). For a practical breast tumor CADx system, the top priority is differentiating malignant tumors from benign tumors and normal tissue. Chan et al. analyzed texture features of microcalcifications (Chan et al., 1995a; Sahiner et al., 1998). El-Naqa et al. first showed that it is feasible to detect microcalcifications in digital mammograms using support vector machines (El-Naqa et al., 2002). Later, Wei et al. (Wei et al., 2005a) investigated several state-of-the-art machine learning methods for automated classification of clustered microcalcifications (MCs), including the support vector machine (SVM), kernel Fisher discriminant (KFD), relevance vector machine (RVM), and committee machines (ensemble averaging and AdaBoost).

3.4. Brain function or activity analysis and neurological disease diagnosis from fMR images

Brain function and activity analysis plays an important role in research on cognition, psychology, and brain disease diagnosis. Functional magnetic resonance imaging (fMRI) provides a noninvasive and effective way to assess brain activity. Because of the complexity of the human brain and the variability of brain activity, fMR images usually show complicated patterns, and interpreting them usually requires extensive computerized analysis. In recent years, machine learning algorithms have increasingly been used to decode stimuli, mental states, behaviors, and other variables of interest from fMRI (Pereira et al., 2009). After feature extraction, a classifier such as an SVM, LDA, or a neural network can be trained to differentiate brain activity patterns. Developing automatic ways to learn these patterns from fMR images is challenging because the data are extremely high-dimensional and noisy, while the number of training samples is small (often only tens).

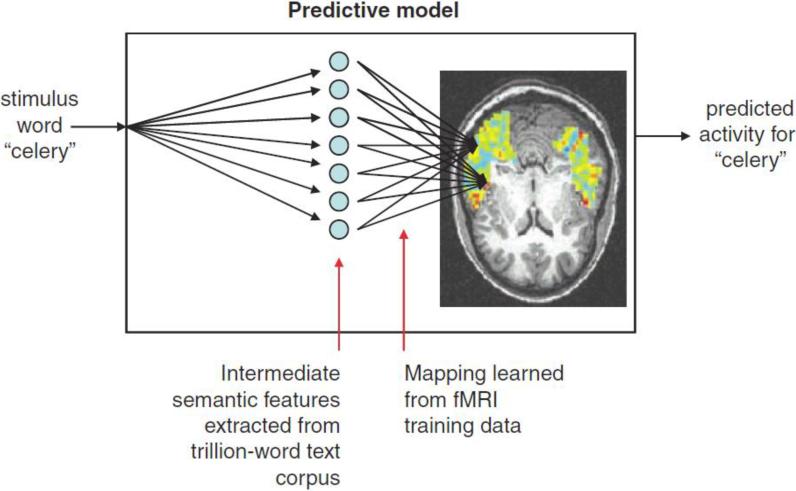

In early work, T. Mitchell et al. tried to decode cognitive states from brain images (Mitchell et al., 2004). They showed a human subject a picture or a sentence, or asked the subject to read a word describing one of several concepts (food, people, buildings, etc.). The goal was to elicit different patterns of brain activity that could be detected by fMRI. They explored several classifiers for analyzing the fMRI data, including Gaussian naïve Bayes, SVMs, and k-nearest neighbors (kNN). Their results showed that it was feasible to distinguish a variety of cognitive states of the brain using machine learning algorithms. They then conducted further research on human cognition, trying to predict brain activity in response to different stimuli (Mitchell et al., 2008). Previous research had shown that spatial patterns of neural activation are associated with thinking about different objects and concepts. In that work, they presented a computational model (Fig. 7) that can predict the neural activation patterns associated with words whose fMRI data were not used in training. A striking finding was that their model could make accurate predictions for thousands of nouns in a text corpus based only on the fMRI data of 60 nouns.
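Of the classifiers Mitchell et al. compared, Gaussian naïve Bayes is the simplest to write down: each voxel is modeled as an independent Gaussian within each cognitive state, and a scan is assigned to the state with the highest posterior probability. The NumPy sketch below is a generic implementation under that independence assumption; the class name and the variance floor are our choices, and it omits the voxel selection and preprocessing that a real fMRI study would need.

```python
import numpy as np

class GaussianNaiveBayes:
    """Gaussian naive Bayes: each voxel is an independent Gaussian per class."""

    def fit(self, X, y, var_floor=1e-6):
        self.classes_ = np.unique(y)
        self.mean_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # small floor keeps near-constant voxels from producing zero variance
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + var_floor
        self.log_prior_ = np.log(np.array([np.mean(y == c) for c in self.classes_]))
        return self

    def predict(self, X):
        # log p(c) + sum_v log N(x_v | mu_cv, var_cv), evaluated for every class;
        # intermediate array shape is (n_scans, n_classes, n_voxels)
        ll = -0.5 * (np.log(2 * np.pi * self.var_)[None, :, :]
                     + (X[:, None, :] - self.mean_[None, :, :]) ** 2
                     / self.var_[None, :, :]).sum(axis=2)
        return self.classes_[np.argmax(ll + self.log_prior_[None, :], axis=1)]
```

Because it estimates only a mean and a variance per voxel and class, this model remains usable with tens of training scans, which is one reason it is a common baseline for fMRI decoding.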

Fig. 7.

Form of the model for predicting fMRI activation for arbitrary noun stimuli. fMRI activation is predicted in a two-step process. The first step encodes the meaning of the input stimulus word in terms of intermediate semantic features whose values are extracted from a large corpus of text exhibiting typical word use. The second step predicts the fMRI image as a linear combination of the fMRI signatures associated with each of these intermediate semantic features. Reproduced with permission from Ref. (Mitchell et al., 2008)
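The second step of the model in Fig. 7 is linear, so it can be sketched as a regression: each semantic feature gets a learned fMRI signature (one weight per voxel), and the predicted image for a new word is the feature-weighted sum of those signatures. In the sketch below, the small ridge term, the function names, and the leave-two-out check (train without two words, then test whether the predicted images match the observed ones better in the correct pairing than when swapped, using cosine similarity) are illustrative assumptions rather than a reproduction of Mitchell et al.'s code. Extracting the semantic features from a text corpus (the first step) is not shown.

```python
import numpy as np

def fit_signatures(F, Y, ridge=1e-3):
    """Learn one fMRI signature per semantic feature.

    F: (n_words, n_features) semantic feature values for the training words.
    Y: (n_words, n_voxels) observed fMRI images for the same words.
    Returns C: (n_features, n_voxels) such that Y is approximated by F @ C.
    """
    k = F.shape[1]
    # ridge-regularized least squares, solved for all voxels at once
    return np.linalg.solve(F.T @ F + ridge * np.eye(k), F.T @ Y)

def predict_image(f_new, C):
    # Linear combination of the feature signatures, weighted by the word's features
    return f_new @ C

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def leave_two_out_match(F, Y, i, j):
    """Train without words i and j, then check that the predicted images
    match the observed ones better in the correct pairing than swapped."""
    keep = [k for k in range(len(F)) if k not in (i, j)]
    C = fit_signatures(F[keep], Y[keep])
    pi, pj = predict_image(F[i], C), predict_image(F[j], C)
    right = cosine(pi, Y[i]) + cosine(pj, Y[j])
    wrong = cosine(pi, Y[j]) + cosine(pj, Y[i])
    return right > wrong
```

Because the held-out words never enter `fit_signatures`, a high match rate indicates that the learned signatures generalize to words without fMRI training data, which is the property the model is designed to have.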

As a practical application of machine learning to the analysis of spatial patterns of brain activity, Davatzikos et al. proposed a new method for lie detection from fMR images (Davatzikos et al., 2005). After image acquisition and preprocessing, such as filtering and registration, they converted the original MR images into parameter estimate images (PEIs). They then subdivided the PEIs into 560 cubes (16 mm × 16 mm × 16 mm) and extracted one feature from each cube: the average PEI value for each event. In the last step, an SVM with a Gaussian kernel was employed for classification. Experimental results showed that this high-dimensional nonlinear pattern recognition method can distinguish the brain activity associated with lying from that associated with truth-telling with high accuracy.
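The cube-based feature extraction can be sketched as non-overlapping block averaging of a 3-D map. Assuming, hypothetically, 2 mm isotropic voxels, a 16 mm cube spans 8 voxels per side. The sketch below averages every complete cube in the bounding box and does not restrict cubes to brain tissue, so its feature count will generally differ from the 560 cubes reported by Davatzikos et al. The resulting vectors (one per event map) would then be passed to a Gaussian-kernel SVM, which is not shown.

```python
import numpy as np

def cube_features(volume, cube_vox):
    """Average a 3-D map over non-overlapping cubes of cube_vox^3 voxels.

    Trailing voxels that do not fill a complete cube are discarded.
    Returns a 1-D feature vector with one mean value per cube.
    """
    nx, ny, nz = (s // cube_vox for s in volume.shape)
    v = volume[:nx * cube_vox, :ny * cube_vox, :nz * cube_vox]
    # split each axis into (cube index, offset within cube), then average offsets
    blocks = v.reshape(nx, cube_vox, ny, cube_vox, nz, cube_vox)
    return blocks.mean(axis=(1, 3, 5)).ravel()
```

Averaging within cubes trades spatial resolution for robustness to residual misregistration and noise, and it also shrinks the feature vector from hundreds of thousands of voxels to a few hundred values.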

Alzheimer's disease (AD) is the most common form of dementia and progressively impairs cognition as it advances. A study showed that it is feasible to predict whether persons are in the prodromal phase of AD using structural magnetic resonance imaging (Killiany et al., 2000). Mild cognitive impairment (MCI), a prodromal phase of AD, exhibits characteristic patterns in brain MR images. Davatzikos et al. developed an automatic method to detect these patterns via high-dimensional image warping, robust feature extraction, and SVM (Davatzikos et al., 2008; Fan et al., 2005). Later, Kloppel et al. used a linear SVM to distinguish pathologically proven AD patients from cognitively normal persons using T1-weighted MR scans acquired at two centers on different scanners (Kloppel et al., 2008). They also showed that, for dementia diagnosis, SVMs performed comparably to well-trained neuroradiologists, which encourages the deployment of computerized diagnostic methods in clinical practice (Stonnington et al., 2009). Other efforts using machine learning algorithms for AD classification include linear programming boosting (Hinrichs et al., 2009a), multi-kernel learning (Hinrichs et al., 2009b), and relevance vector regression (Wang et al., 2010d).

Schizophrenia is another common brain disorder, affecting about 1% of the general population (Shenton et al., 2001). MRI provides a good opportunity to evaluate brain abnormalities in schizophrenia. Structural MRI findings in schizophrenia include ventricular enlargement and involvement of the medial temporal lobe, superior temporal gyrus, parietal lobe, and subcortical brain regions (Shenton et al., 2001). Because the MRI patterns of schizophrenia are complex and the disorder is hard to diagnose, machine learning-based methods are preferred for identifying psychiatric disorders from high-dimensional imaging data. Caan et al. applied principal component analysis and linear discriminant analysis to diffusion tensor brain images of patients with schizophrenia and healthy controls (Caan et al., 2006). Demirci et al. (Demirci et al., 2008) proposed a projection pursuit algorithm to classify schizophrenia using fMRI data. Their method also performs dimensionality reduction using ICA and PCA to find a low-dimensional embedding of the original data designed to separate the schizophrenia and healthy control groups. Kim et al. proposed a hybrid machine learning framework for schizophrenia classification (Kim et al., 2008). They first used ICA to reduce noise; a discrete dynamic Bayesian network was then used to distinguish patients with schizophrenia from healthy controls.
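Reducing dimensionality before a linear discriminant matters when there are far more voxels than subjects, because the within-class scatter matrix is otherwise singular. The sketch below applies PCA and then a two-class Fisher LDA, in the spirit of the PCA-plus-LDA pipeline of Caan et al. The number of components, the midpoint decision threshold, and the small ridge term are illustrative assumptions, and the input is any subjects-by-features matrix rather than DTI-specific measures.

```python
import numpy as np

def pca_lda_fit(X, y, n_components):
    """PCA to n_components, then a two-class Fisher discriminant in PCA space.

    X: (n_subjects, n_features); y: 0/1 labels (e.g. control / patient).
    """
    mu = X.mean(axis=0)
    # principal axes from the SVD of the centered data
    _, _, Vt = np.linalg.svd(X - mu, full_matrices=False)
    P = Vt[:n_components].T                     # (n_features, n_components)
    Z = (X - mu) @ P
    m0, m1 = Z[y == 0].mean(axis=0), Z[y == 1].mean(axis=0)
    # pooled within-class scatter in the reduced space
    D0, D1 = Z[y == 0] - m0, Z[y == 1] - m1
    Sw = D0.T @ D0 + D1.T @ D1
    w = np.linalg.solve(Sw + 1e-6 * np.eye(n_components), m1 - m0)
    threshold = w @ (m0 + m1) / 2               # midpoint between class means
    return mu, P, w, threshold

def pca_lda_predict(model, X):
    mu, P, w, threshold = model
    return ((X - mu) @ P @ w > threshold).astype(int)
```

Keeping `n_components` well below the number of training subjects keeps `Sw` invertible; choosing it by cross-validation is a natural refinement.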

In brain fMRI analysis, statistical parametric mapping (SPM) is a widely used statistical technique for testing hypotheses about whether a given brain region shows a specific effect (Friston et al., 1995). SPM is based on the general linear model and the theory of Gaussian random fields, and hypotheses are tested voxel by voxel. The resulting statistical parametric map can be viewed as a compressed summary of the original MR or PET image sequence across time points or tasks, which is very helpful for understanding brain activity. Applications of SPM in neurological disease diagnosis include Alzheimer's disease (Bookheimer et al., 2000; Scahill et al., 2002), schizophrenia (Sowell et al., 2000; Wilke et al., 2001; Wright et al., 1995), and obsessive-compulsive disorder (Kim et al., 2001; Saxena et al., 2001).
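At each voxel, the general linear model Y = X b + e is fitted, and a contrast c is tested with the statistic t = c^T b_hat / sqrt(s_hat^2 * c^T (X^T X)^(-1) c), where b_hat is the least-squares estimate and s_hat^2 is the residual variance. The NumPy sketch below computes this t-map for all voxels at once. It is a simplified illustration, not the SPM software: it assumes white noise, uses a design matrix supplied by the caller (no hemodynamic convolution), and omits the Gaussian random field correction for multiple comparisons.

```python
import numpy as np

def glm_t_map(Y, X, c):
    """Voxel-wise general linear model and t-statistics for contrast c.

    Y: (n_scans, n_voxels) time series; X: (n_scans, n_regressors) design
    matrix; c: (n_regressors,) contrast vector.
    """
    beta = np.linalg.pinv(X) @ Y                    # (n_regressors, n_voxels)
    resid = Y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (resid ** 2).sum(axis=0) / dof         # residual variance per voxel
    var_c = c @ np.linalg.pinv(X.T @ X) @ c         # c^T (X^T X)^- c
    return (c @ beta) / np.sqrt(sigma2 * var_c)
```

For a block design with a task regressor and an intercept, the contrast c = [1, 0] yields large t-values only in voxels whose signal follows the task blocks.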

3.5. Content-based image retrieval systems for CT or MRI images

Content-based image retrieval (CBIR) aims to search for digital images in large databases based on image content, such as color, shape, and texture. CBIR can help radiologists diagnose disease by retrieving images with similar features or previously confirmed cases with the same diagnosis. It can also help train radiologists by building teaching collections of similar images. CBIR is the application of computer vision to the image retrieval problem. In recent years, with the rapid development of machine learning, many machine learning algorithms have been embedded in CBIR systems to improve query accuracy and efficiency. In medicine, with the expanding role and quantity of digital imaging for diagnosis and therapy, there are many potential applications for CBIR systems (Tagare et al., 1997). For example, the Radiology Department of the University Hospital of Geneva produced 12,000 images per day in 2002 (Muller et al., 2004). Searching for target images in such a huge medical image database would be impractical without a CBIR system.

A practical and effective CBIR system has three key components: a feature extractor, a content comparator, and a query engine. For example, El-Naqa et al. (El-Naqa et al., 2004) studied content comparison for digital mammography CBIR. They proposed a two-stage supervised learning network to learn a similarity function that assigns a similarity coefficient (SC) to each pair of a query image and a database entry. In the first stage, a linear classifier identifies database entries that are sufficiently similar to the query image. In the second stage, they considered SVM regression and a general regression neural network to learn the optimal similarity function.
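A two-stage similarity learner of this kind can be sketched as follows. Stage 1 applies a linear classifier to a pair feature, here the element-wise absolute difference |query - entry|, and keeps entries scored as similar. Stage 2 ranks the survivors with a general regression neural network, which is equivalent to Nadaraya-Watson kernel regression over training pairs with known similarity coefficients. The pair feature, the linear weights (assumed to be learned elsewhere), the training pairs, and `sigma` are hypothetical, and the sketch does not reproduce how El-Naqa et al. obtained their training data.

```python
import numpy as np

def grnn_predict(train_X, train_y, X, sigma=1.0):
    """General regression neural network (Nadaraya-Watson kernel regression)."""
    d2 = ((X[:, None, :] - train_X[None, :, :]) ** 2).sum(axis=2)
    # subtract each row's minimum before exponentiating; the common factor
    # cancels in the ratio below and avoids underflow for distant queries
    w = np.exp(-(d2 - d2.min(axis=1, keepdims=True)) / (2 * sigma ** 2))
    return (w @ train_y) / w.sum(axis=1)

def two_stage_retrieve(query, database, lin_w, lin_b, pair_X, pair_sc,
                       top_k=5, sigma=1.0):
    """Stage 1: a linear classifier on |query - entry| keeps entries scored
    as similar (score > 0). Stage 2: a GRNN trained on pairs with known
    similarity coefficients ranks the survivors, most similar first.
    """
    pair = np.abs(database - query)
    keep = np.flatnonzero(pair @ lin_w + lin_b > 0)
    if keep.size == 0:
        return keep, np.array([])
    sc = grnn_predict(pair_X, pair_sc, pair[keep], sigma)
    order = np.argsort(-sc)[:top_k]
    return keep[order], sc[order]
```

The cheap linear filter limits how many entries reach the more expensive nonlinear similarity model, which matters when the database is large.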

The idea of human interaction in CBIR has been explored by Brodley et al. (Brodley et al., 1999). For medical images, global features that characterize the whole image usually help little in CBIR, because clinically useful information is often localized. Brodley et al. proposed an approach called “Physician-in-the-Loop,” in which a physician delineates the “pathology-bearing regions” (PBRs). These PBRs are often difficult to segment using fully automated techniques. The authors described their CBIR system as a synergy of human interaction, machine learning, and computer vision.

Global image descriptors based on color, texture, or shape often do not capture sufficient semantic information for medical applications, so combining global and local features is key to a successful CBIR system. Keysers et al. (Keysers et al., 2003) proposed a statistical framework for model-based image retrieval in medical applications. Their multi-step approach included image categorization based on global features, image registration (in geometry and contrast), feature extraction (using local features), feature selection, indexing (multiscale blob representation), identification (incorporating prior knowledge), and retrieval (on abstract blob level). They first used global features such as image modality, body orientation, anatomic region, and biological system to classify and register images; queries were then performed on local features extracted from the anatomic region.
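A stripped-down version of the global-then-local idea uses global labels to restrict the search and then ranks the remaining images by the distance between local feature vectors. The dictionary keys, the exact-match filter on modality and region, and the Euclidean distance are illustrative assumptions; the registration, feature selection, and blob-level indexing of Keysers et al.'s framework are not reproduced.

```python
import numpy as np

def global_then_local_query(query, database, top_k=3):
    """Two-level retrieval: restrict by global category, then rank by local features.

    Each entry is a dict with hypothetical keys:
      "modality", "region": global labels (e.g. predicted by an image classifier)
      "local": 1-D array of local features from the anatomic region
    """
    candidates = [e for e in database
                  if e["modality"] == query["modality"]
                  and e["region"] == query["region"]]
    # Euclidean distance between local feature vectors; smaller = more similar
    candidates.sort(key=lambda e: float(np.linalg.norm(e["local"] - query["local"])))
    return candidates[:top_k]
```

An image from another modality is excluded even if its local features happen to match the query closely, which is the kind of semantically wrong match that global categorization is meant to prevent.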

3.6. Text analysis of radiology reports using NLP/NLU