Summary

The role that frontal-striatal circuits play in normal behavior remains unclear. Two of the leading hypotheses suggest that these circuits are important for action selection or reinforcement learning. To examine these hypotheses we carried out an experiment in which monkeys had to select actions in two different task conditions. In the first (random) condition actions were selected on the basis of perceptual inference. In the second (fixed) condition the animals used reinforcement from previous trials to select actions.

Examination of neural activity showed that the representation of the selected action was stronger in lateral prefrontal cortex (lPFC), and occurred earlier in the lPFC than it did in the dorsal striatum (dSTR). In contrast, the representation of action values was stronger in the dSTR in both the random and fixed conditions. Thus, the dSTR contains an enriched representation of action value, but it follows frontal cortex in action selection.

Introduction

Cortical projections to the striatum form the front end of the cortical-basal ganglia-thalamo-cortical loops. The anatomy of these networks (Alexander et al., 1986; Haber et al., 2006; Middleton and Strick, 2000; Parent and Hazrati, 1995; Parthasarathy et al., 1992) and the physiology of single neuron responses in areas of frontal cortex (Averbeck and Lee, 2007; Funahashi et al., 1991; Fuster, 2008; Histed et al., 2009; Miller and Cohen, 2001; Pasupathy and Miller, 2005) and the striatum (Apicella et al., 2011; Barnes et al., 2005; Hollerman et al., 1998; Jin et al., 2009; Lauwereyns et al., 2002a; Lauwereyns et al., 2002b; Simmons et al., 2007) have been extensively studied. Yet the contributions of these networks to normal behavior are still unclear. There are numerous hypotheses, mostly specifying the striatal transformation of cortical inputs, including dimensionality reduction (Bar-Gad et al., 2003), reinforcement learning (Dayan and Daw, 2008; Doya, 2000; Frank, 2005; Parush et al., 2011), habit formation (Graybiel, 2008), motor learning (Doyon et al., 2009), sequential motor control (Berns and Sejnowski, 1998; Marsden and Obeso, 1994; Matsumoto et al., 1999), response vigor (Turner and Desmurget, 2010), action selection (Denny-Brown and Yanagisawa, 1976; Grillner et al., 2005; Hazy et al., 2007; Houk et al., 2007; Humphries et al., 2006; Mink, 1996; Redgrave et al., 1999; Rubchinsky et al., 2003; Sarvestani et al., 2011), execution of well-learned, automated motor plans (Marsden, 1982), and the trade-off between habitual and goal-directed or cognitive action planning (Daw et al., 2005b), also conceptualized as a trade-off between attention demanding cognitive and automatically executed actions (Norman and Shallice, 1986).

The action selection hypothesis, first proposed on the basis of monkey striatal lesion data (Denny-Brown and Yanagisawa, 1976), suggests that the cortex generates ensembles of possible actions and the striatum selects from among these actions (Houk et al., 2007; Humphries et al., 2006; Mink, 1996). The action selected by the striatum, via the rest of the basal ganglia (BG) circuitry, disinhibits the thalamo-cortical and brainstem networks that lead to execution of the selected action and inhibits the networks that represent competing actions. Many experimental studies and models also suggest that the striatum is important for reinforcement learning (RL) or learning from feedback (Amemori et al., 2011; Daw et al., 2011; Frank et al., 2004; Histed et al., 2009; O’Doherty et al., 2004; Pasupathy and Miller, 2005; Samejima et al., 2005; Sarvestani et al., 2011) and some theories suggest that reinforcement learning is the process by which the striatum learns to select appropriate actions (Frank, 2005). This hypothesis suggests that striatal activity tracks action values in the context of learning.

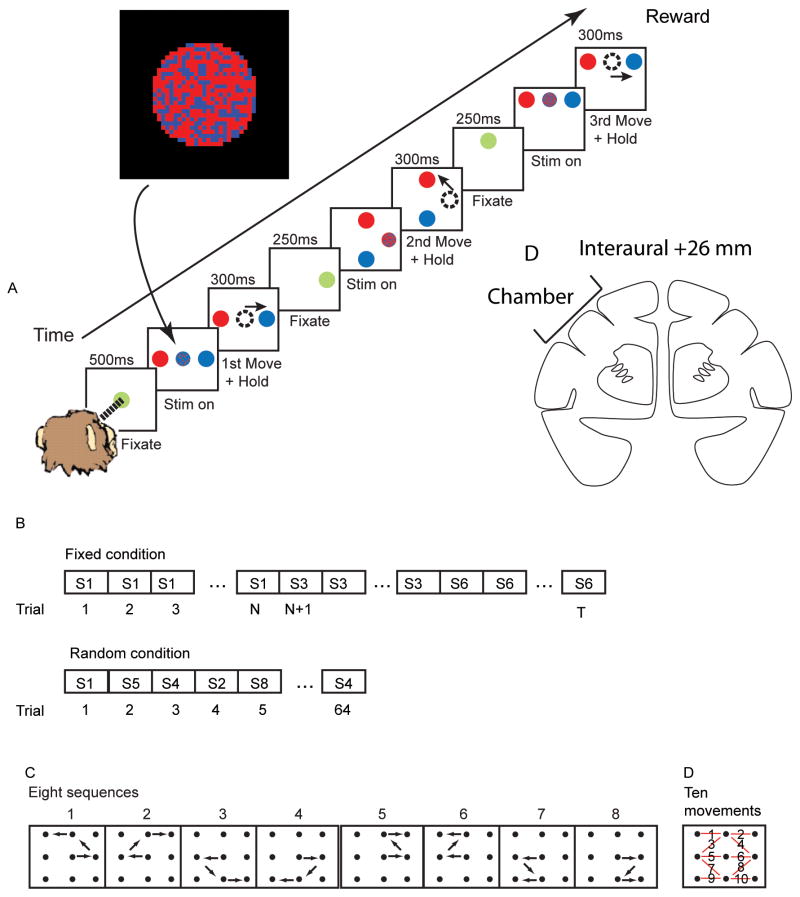

In the present study we trained animals to carry out a sequential decision making task under two conditions (Fig. 1). In both conditions the animals had to determine whether there were more red or more blue pixels in a centrally presented cue, and make a saccade to the peripheral target that matched the majority pixel color. In the first condition the correct spatial sequence of saccades changed every trial, so the only information available about saccade direction was in the central fixation cue. In the second condition the correct sequence of decisions remained fixed for blocks of eight correct trials. In this case the animal could use the information about which spatial sequence had been correct in previous trials to improve its performance. While animals carried out this task we recorded simultaneously from lateral-prefrontal cortex and the dorsal striatum (Fig. 1D). This allowed us to address three hypotheses set forth above. Specifically, we examined action selection, reinforcement learning, and the trade-off between attention-demanding and automatic behaviors in the lateral prefrontal-dorsal striatal circuit.

Fig. 1.

Task. A. Time sequence of events in task. The inset shows an example frame from the random pixel color stimulus that was presented at fixation during Stim on. The example is from a 60/40 split where 61.8% of the pixels were red. B. Trial structure of task conditions. S1, S2, etc. indicates sequence 1, sequence 2, etc. N refers to the number of trials to complete a block, which is always 8 correct trials, plus a variable number of error trials. T correspondingly varies as a function of the number of error trials. C. The eight different sequences of movements used in the two task conditions. The sequences are composed of ten possible movements. Each red line indicates one of the movements with the movement number superimposed on the line. D. Drawing of coronal section showing approximate center of chamber in stereotaxic.

Results

Behavior

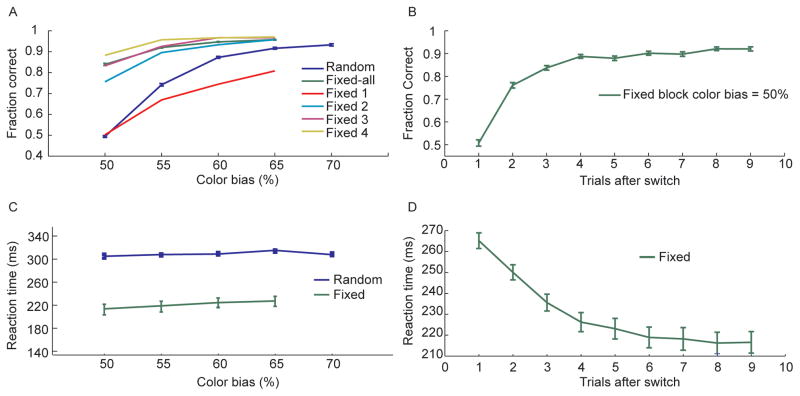

We found that in the random condition, the fraction of correct decisions improved consistently with increasing color bias (Fig. 2A) as the difficulty of determining the majority color of the stimulus decreased. In the fixed condition performance was, on average, consistently better than performance in the random condition, at each color bias (Fig. 2A). Furthermore, this improvement developed across trials after a sequence switch. Performance on the first trial after a switch to a new sequence (Fig. 2A - Fixed 1) was worse than the corresponding performance in the random condition. This reflected the animal’s reliance on their memory of which movements had been correct in the previous trial. Their performance quickly improved across trials, however, until reaching an asymptote at almost 90% correct by the 4th trial (Fig. 2A – Fixed 4), even when the color bias was equivocal, at 50%. In this case there was no information in the stimulus and the animal had to guess the correct saccade direction, in the random condition. Examination of the performance in just the 50% color bias condition as a function of trials following a sequence switch showed that performance improved until about trial 4, after which it remained consistent for the rest of the block (Fig. 2B). In the 50% condition the animal was forced to use information from previous trials to make a correct decision. This shows that the animals were able to use feedback from previous trials to improve their performance. This was further reflected in the reaction times. In the fixed condition the animals would be able to use memory of which sequence had been correct in the previous trials to preplan and execute their decision more quickly. Reaction times were consistently faster in the fixed condition at all color biases (Fig. 2C). Furthermore, this reaction time improvement increased following a sequence switch in each block (Fig. 2D).

Fig. 2.

Behavioral results. A. Fraction of correct decisions as a function of color bias for random and fixed conditions. Data for the fixed condition is shown averaged across all trials of each block (green) as well as separately for the first through fourth trials after a sequence switch (Fixed 1 – Fixed 4). B. Fraction of correct decisions in the 50% color bias condition as a function of trials after the sequence switched in the fixed condition. C. Reaction time in random and fixed conditions as a function of the color bias. D. Reaction time in the fixed condition split out by trials after switch.

Neural activity

We recorded the activity of 553 (230 in monkey 1, 323 in monkey 2) neurons in the lateral prefrontal cortex (lPFC) and 453 (210 in monkey 1, 243 in monkey 2) neurons in the dorsal striatum (dSTR), predominantly in the caudate nucleus. Neural activity was recorded simultaneously from both areas in all sessions. All reported effects were consistent in both animals, so the data were pooled. We examined activity relative to five factors: the task condition, the sequence executed in each trial, the specific movement being executed, the color bias, and learning-related action value, which was estimated using a reinforcement learning algorithm (see methods). Sequence and learning effects were less well defined in the random sets, but because of the consistent task structure we analyzed them as an internal control. We began by analyzing activity using an omnibus ANOVA, across conditions, and then split the data by task condition to examine more specific hypotheses.

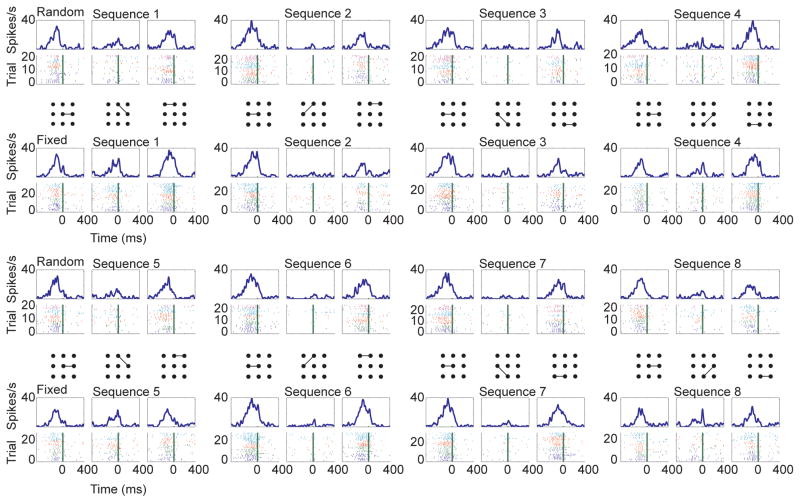

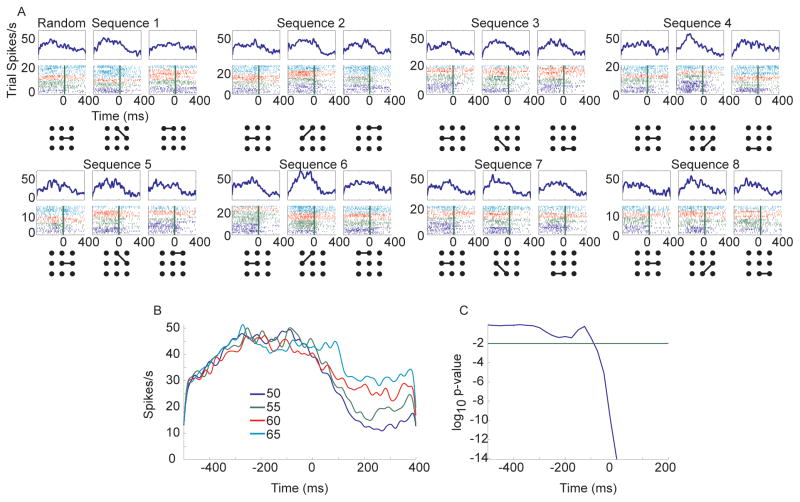

Neurons were found which were related to all variables of interest. For example, some neurons had responses which depended on the specific movement being executed, but which also depended on the task condition (Fig. 3). This lPFC neuron tended to respond strongly to the first and last movement of all sequences in both the random and the fixed condition, as has been seen in previous studies (Fujii and Graybiel, 2003). However, it also had a robust response to the second movement in sequence one and sequence five, but only in the fixed condition. We also found neurons related to the color bias. For example, in the random sets (Fig. 4A) this dSTR neuron had a very strong baseline firing rate which was additionally modulated with the color bias (Fig. 4B), an effect which became statistically significant just after movement onset (Fig. 4C).

Fig. 3.

Example lPFC neuron with movement and task condition related neural activity (p < 0.01 in ANOVA for at least one time bin for both movement and task condition). Rasters and spike density functions are aligned to movement onset (time 0 in plots). Data for each individual sequence of movements is broken out by the individual movements. Top of each panel is spike-density function, bottom is raster showing spikes in individual trials. The individual movements are indicated with the lines connecting dots. First and third rows are from the random condition, second and fourth are from the fixed condition. Only correct trials are shown for the fixed condition. Spike color indicates the color bias for the corresponding trial. Dark blue = 50%, green = 55%, red = 60%, cyan = 65% and violet = 70%.

Fig. 4.

Color bias example from dSTR. Data are aligned to movement onset (time 0 in plots). A. Raster and spike-density function data shown for the random trials only. B. Spike density functions averaged across all conditions for each color bias value for the neuron shown in A. C. P-value vs. time for the main effect of color bias. The time is the beginning of each 200 ms bin. Therefore the p-value at −200 is the bin from −200 to 0 ms.

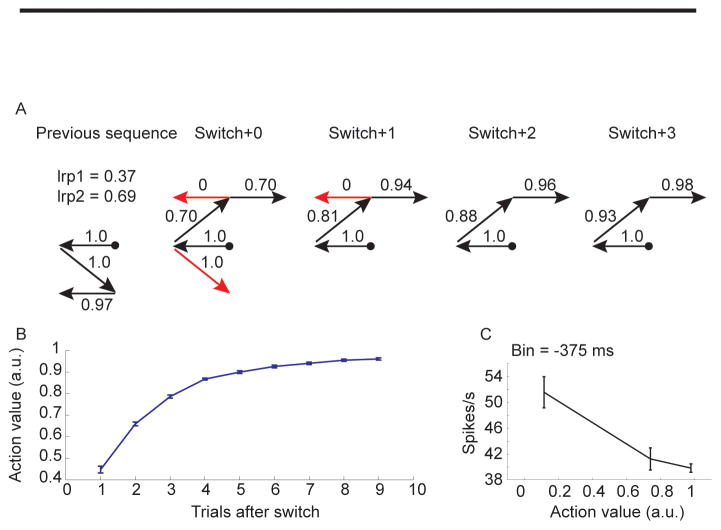

Sequence selection was modeled using a reinforcement learning algorithm (see methods). This allowed us to track the animal’s estimate of the value of each eye movement, movement by movement and trial by trial. For example, in the fixed condition, following a switch from a block in which sequence seven had been correct to a block in which sequence two was correct, the animal continued trying to execute sequence seven in the first trial and the value estimates reflected this. The first execution of the leftward movement had a high value (1.0) as this had been correct in the previous block (Fig. 5A, switch+0). After this point the animal still believed that the sequence had not switched and therefore it executed a downward movement for the second movement. As the animal would assume this was correct, this movement would also have a high value (1.0). Following this incorrect movement, the animal would have had some feedback that the upward movement was correct and so it would be executed with a moderate value (0.70). The next movement to the left, from the top center, however, had not been correct in the previous block and therefore it would be executed with a very low value (0). After receiving feedback that this was not correct the rightward saccade would have a moderately high value (0.70). In subsequent trials there were fewer errors and the values continued to increase as the animal received more feedback about each of its actions. Average action values tracked learning in a monotonic fashion (Fig. 5B), increasing with trials after switch. The responses of neurons often scaled with the value of the actions, for example decreasing with action value in this dSTR neuron (Fig. 5C), such that a movement executed under equivalent conditions in a fixed block would lead to a different response depending upon how well the sequence had been learned.
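The way value builds up with feedback in this example can be illustrated with a simple delta-rule update. This is only a sketch and not the model described in the methods: the function names, the use of a single learning rate, and its value of 0.7 are assumptions, chosen so that one rewarded update from a value of 0 gives the 0.70 quoted above.

```python
def update_value(value, reward, learning_rate=0.7):
    """Delta-rule update: move the value toward the observed outcome.

    learning_rate=0.7 is a hypothetical choice, not a fitted parameter.
    """
    return value + learning_rate * (reward - value)


def value_trajectory(initial_value, rewards, learning_rate=0.7):
    """Return the value before the first outcome and after each outcome."""
    values = [initial_value]
    for reward in rewards:
        values.append(update_value(values[-1], reward, learning_rate))
    return values


# A movement that was never correct in the previous block starts at 0
# and is then rewarded on four consecutive executions.
trajectory = value_trajectory(0.0, [1, 1, 1, 1])
print([round(v, 3) for v in trajectory])
```

Under these assumptions the value rises monotonically toward 1 with repeated positive feedback, which is the qualitative pattern shown for the average action value in Fig. 5B.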

Fig. 5.

Example action value and corresponding average effect on neural activity in dSTR neuron. A. Action values extracted from the reinforcement learning algorithm for a series of movements. Example shows one trial preceding sequence switch, and four trials after the switch. Red arrows indicate incorrect movements, black arrows indicate correct movements. Trial always started at circle. B. Average action value as a function of trials after switch shows the accumulation of value across a block. C. Average response of a single example neuron 375 ms before movement onset for different action values. The response in this neuron decreased with increasing action value.

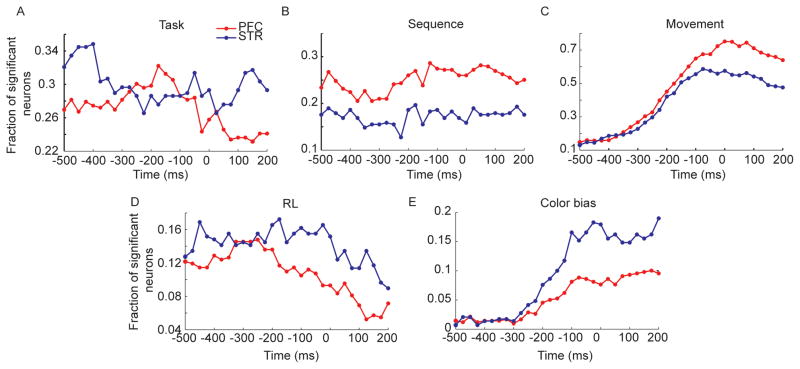

We assessed the effects of the five task factors on the responses of individual neurons using a sliding-window ANOVA aligned to movement onset for each movement of the sequence, in each trial. We found that 75.8% of the prefrontal neurons and 64.0% of the striatal neurons were significant for at least one of the five factors, in one bin of the analysis. Subsequent percentages are reported as a fraction of these task responsive neurons. Task condition (random vs. fixed) effects were present in about 30% of the single neurons in both structures and showed an idiosyncratic effect of time (Fig. 6A). Sequence effects were relatively flat across time, and were present in about 25% of lPFC neurons and 17% of striatal neurons (Fig. 6B). Movement effects evolved dynamically, peaking at about the time of movement at just over 70% in lPFC neurons and just under 60% of dSTR neurons (Fig. 6C). Movement effects were also present well in advance of the movement in about 15% of both striatal and lPFC neurons, because movements could be pre-planned in the fixed condition. The reinforcement learning effect was present in about 16% of striatal neurons and about 12% of lPFC neurons (Fig. 6D). These effects decreased following the movement. The effect of the color bias began to increase about 300 ms before the movement and peaked at the time of movement and was stronger in the dSTR than in the prefrontal cortex (Fig. 6E). There were also interactions between the various task-relevant variables (data not shown). However, our specific hypotheses involved comparisons between tasks and between areas. Therefore, we next split the data by task condition as well as by brain area and examined coding of the task-relevant variables.
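The sliding-window spike counts that feed such an analysis can be sketched as follows. The function name is hypothetical, and the 25 ms step is an assumption inferred from the bin times reported in the text (for example −200 and −175 ms); the 200 ms width and the convention of labelling each bin by its start time follow the figure captions. The ANOVA itself is not shown.

```python
def sliding_window_counts(spike_times_ms, start_ms, stop_ms,
                          width_ms=200, step_ms=25):
    """Count spikes in windows [bin_start, bin_start + width_ms).

    Spike times are relative to movement onset (time 0). Each window is
    keyed by its start time, so the key -200 covers -200 to 0 ms.
    step_ms=25 is an assumed value, not taken from the methods.
    """
    counts = {}
    bin_start = start_ms
    while bin_start <= stop_ms:
        bin_end = bin_start + width_ms
        counts[bin_start] = sum(bin_start <= t < bin_end
                                for t in spike_times_ms)
        bin_start += step_ms
    return counts


# Made-up spike times for one movement in one trial.
spikes = [-150, -50, 10, 120, 260]
counts = sliding_window_counts(spikes, start_ms=-200, stop_ms=200)
print(counts[-200], counts[0], counts[200])
```

Counts like these, collected across trials and movements, would then be the dependent variable in the ANOVA for each bin.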

Fig. 6.

Results of ANOVA taking into account all task factors. Data are aligned to movement onset before analysis (time 0 in plots). Time axis is beginning point of 200 ms bin. Thus, −200 is the bin from −200 to 0 ms. A. Fraction of significant neurons (p < 0.01 in this and all subsequent plots) vs. time for the main effect of task. All fractions are out of total number of task relevant neurons, i.e. neurons that were significant in the ANOVA for at least one factor. B. Same as A for main effect of sequence. C. Main effect of movement. D. Main effect of RL. E. Main effect of color bias.

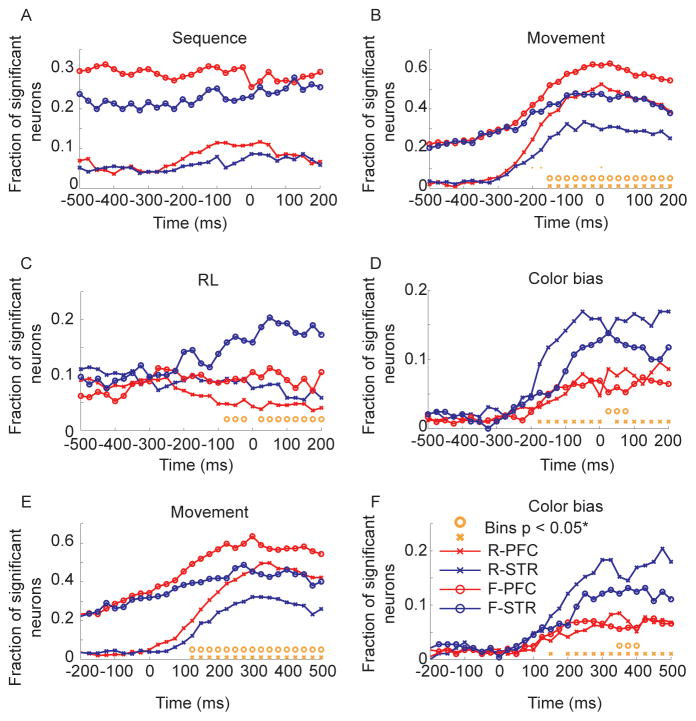

We first ran analyses with neural activity aligned to movement onset. Consistent with the structure of the task, sequence effects were much stronger in the fixed condition (Fig. 7A). There were no significant differences between the proportion of neurons representing sequence in the lPFC and the dSTR in either the fixed or random conditions. The residual sequence effects in the random condition (~5–10%) were likely due to the fact that in the random sets the animal would have partial knowledge of the sequence as the sequence developed. Correlations with RL were relatively flat in most areas, except the dSTR in the fixed condition where the representation gradually increased from about 200 ms before movement onset, reaching about 20% (Fig. 7B). The lPFC and dSTR representations diverged significantly 75 ms before the movement. The representation of movement was at chance levels in the random condition until about 275 ms before movement onset (Fig. 7C), whereas in the fixed condition it reflected the advance knowledge of the movement, being significantly above chance (p < 0.05, FDR corrected) at least 500 ms before the movement began. In both the random and the fixed condition the representation of movement was significantly stronger in prefrontal cortex than it was in the dSTR, and the representations diverged significantly 150 ms before movement onset in the random and fixed conditions. For the movement variable we also examined an interaction between region and task in the proportion of neurons significant for movement, by comparing the between-area difference in the proportion of significant neurons across the two tasks. Specifically, we examined the contrast $(p_{\mathrm{lPFC,fixed}}(t) - p_{\mathrm{dSTR,fixed}}(t)) - (p_{\mathrm{lPFC,random}}(t) - p_{\mathrm{dSTR,random}}(t))$. However, we only found three bins with significant differences, at −200, −175 and 0 ms (see orange dots in Fig. 7C at y = 0.01).
Significant effects of color bias also began to increase 175 ms before movement onset, reaching peak values just over 15% in the dSTR in the random condition (Fig. 7D). In the random conditions the lPFC and dSTR representation of color bias diverged 175 ms before movement onset and in the fixed condition they diverged, somewhat inconsistently, about 25 ms after movement onset. There were also fewer bins in which the color bias representation in the dSTR exceeded the representation in the lPFC, in the fixed relative to random conditions, consistent with the fact that this variable was less important in the fixed condition, but there was not a significant difference in the fraction of neurons representing color bias in the fixed vs. random conditions, in the dSTR (p > 0.05, FDR corrected).
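The region-by-task contrast used for the movement variable is a difference of differences in proportions, evaluated separately in each time bin. A worked instance is sketched below; the function name and the four proportions are hypothetical and are not data from the study, and the per-bin significance test is not shown.

```python
def interaction_contrast(p_lpfc_fixed, p_dstr_fixed,
                         p_lpfc_random, p_dstr_random):
    """Difference between areas in the fixed condition minus the same
    difference in the random condition, for one time bin."""
    return (p_lpfc_fixed - p_dstr_fixed) - (p_lpfc_random - p_dstr_random)


# Made-up proportions of movement-significant neurons in a single bin.
contrast = interaction_contrast(0.50, 0.40, 0.30, 0.25)
print(round(contrast, 3))
```

A contrast near zero means the lPFC advantage is the same size in both task conditions; a positive value means the advantage is larger in the fixed condition.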

Fig. 7.

ANOVA split by task for lPFC and dSTR. Analyses in panels A-D are carried out on data aligned to movement onset (time 0 in plots). Analyses in panels E-F are carried out on data aligned to target onset (time 0 in plots). Orange circles indicate significant differences, after FDR correction, between the fraction of neurons significant in lPFC vs. dSTR in fixed condition. Orange x’s are the same for the random condition. R-lPFC (random condition, lPFC); R-dSTR (random condition, dSTR); F-lPFC (fixed condition, lPFC); F-dSTR (fixed condition, dSTR). A. Effect of sequence. B. Effect of RL. C. Effect of movement. Orange dots at y = 0.1 in only this panel represent a significant interaction between task and region. D. Effect of color bias. E. Effect of movement. F. Effect of color bias.

We also examined whether the neurons in our sample that were significant for color bias had a positive or negative slope (Fig. S1A). Neurons with a positive slope would have higher firing rates for the high color bias conditions (q = 0.70) and lower firing rates for the lower color bias conditions (q = 0.50). During the time before saccade onset in the dSTR in the random condition, we found that about half of the significant neurons had a positive slope, and about half had a negative slope. This fraction dipped slightly around the time of movement onset, and then increased again. For comparison we also examined the slope of the RL effect and found that about 30% of the neurons had a positive slope in the dSTR (Fig. S1B). Therefore, most neurons in this structure decreased their firing rates with increasing action value. We also examined whether neurons tended to code both RL information and color bias information, but generally very few neurons coded for both (max = 16 neurons at 50 ms after movement onset in dSTR in the fixed condition). At this time, all 16 of these neurons had the same slope for both RL and color bias (χ² = 16, p < 0.001). Thus, these neurons coded value in a consistent way.
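The reported statistic can be reproduced under one reading of the test. The sketch below assumes a goodness-of-fit chi-square against an equal split between neurons with matching and non-matching slopes (1 degree of freedom); the text does not state which test was used, so this is an assumption, as is the function name.

```python
import math


def chi_square_equal_split(n_same, n_different):
    """Chi-square statistic and p-value against a 50/50 expected split."""
    total = n_same + n_different
    expected = total / 2
    statistic = ((n_same - expected) ** 2
                 + (n_different - expected) ** 2) / expected
    # For 1 degree of freedom the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# 16 neurons with the same slope sign for RL and color bias, 0 with opposite signs.
statistic, p_value = chi_square_equal_split(16, 0)
print(statistic, p_value < 0.001)  # prints: 16.0 True
```

With 16 of 16 neurons matching, the expected count in each category is 8, giving (8² + 8²) / 8 = 16, in agreement with the value quoted above.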

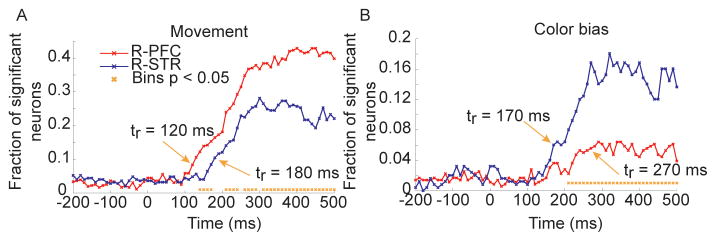

We next examined effects of movement and color bias after aligning to target onset, instead of movement onset. These two variables were examined with different alignment as they showed the strongest dynamics relative to the movement. Results were generally consistent (Fig. 7E–F) with the results from alignment to movement. Interestingly, when aligned to target onset, the representation of movements in the random condition seemed to rise slightly earlier in lPFC than it did in dSTR (Fig. 7E). To assess this in more detail we reran the same analysis using 100 ms binwidths with 10 ms shifts (Fig. 8). This analysis showed that the movement representation did increase in lPFC before it did in dSTR by about 60 ms (Fig. 8A). Specifically, the first time that the representation exceeded baseline (comparison between proportion in each bin following target onset and the average of bins preceding target onset) in lPFC was 120 ms after target onset and the first time that the representation exceeded baseline in dSTR was 180 ms after target onset. The two signals also diverged statistically significantly at about this time. The same analysis applied to color bias in the random condition showed that the dSTR representation exceeded baseline about 170 ms after target onset, whereas the lPFC representation exceeded baseline about 270 ms after target onset.
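The latency comparison can be sketched as finding the first post-onset bin in which the fraction of significant neurons exceeds its pre-onset baseline. The text does not specify the statistical criterion used for "exceeds baseline", so the threshold below (baseline mean plus two standard deviations), the function names, the 20 ms bin spacing, and the synthetic curves are all assumptions made for illustration.

```python
from statistics import mean, stdev


def first_exceed_time(bin_times_ms, fractions, n_sd=2.0):
    """Return the first bin time at or after target onset (time 0) whose
    fraction exceeds baseline mean + n_sd * SD, or None if none does.

    Baseline bins are those before target onset. The threshold rule is
    a hypothetical criterion, not the one used in the study.
    """
    baseline = [f for t, f in zip(bin_times_ms, fractions) if t < 0]
    threshold = mean(baseline) + n_sd * stdev(baseline)
    for t, f in zip(bin_times_ms, fractions):
        if t >= 0 and f > threshold:
            return t
    return None


def synthetic_fractions(bin_times_ms, rise_ms):
    """Made-up curve: a noisy low level before rise_ms, 0.20 afterwards."""
    return [0.20 if t >= rise_ms else 0.04 + 0.01 * (i % 2)
            for i, t in enumerate(bin_times_ms)]


bin_times = list(range(-100, 301, 20))
lpfc_latency = first_exceed_time(bin_times, synthetic_fractions(bin_times, 120))
dstr_latency = first_exceed_time(bin_times, synthetic_fractions(bin_times, 180))
print(lpfc_latency, dstr_latency, dstr_latency - lpfc_latency)
```

The synthetic rise times were set to 120 and 180 ms only to mirror the latencies reported for the movement representation, so the 60 ms difference here is built in rather than estimated.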

Fig. 8.

Action selection and color bias in the random condition. Fraction of significant neurons as a function of time in lPFC and dSTR in the random condition. Analysis based on data aligned to target onset, not movement onset. Orange arrow and tr indicate the time at which the fraction of significant neurons exceeds baseline (−200 to 0 ms) levels. A. Significance of movement factor in the random condition. B. Significance of color bias in the random condition.

Overall, the preceding analyses suggested that the representation of movements was stronger in lPFC, and it arose sooner in lPFC in the random condition. In contrast to this, both the color bias and RL effects in fixed blocks were stronger in the dSTR. To address this directly, we used a repeated measures generalized linear model (see methods) to examine region (lPFC vs. dSTR) by variable (in the fixed condition: movement vs. RL and in the random condition: movement vs. color bias) interactions in the fixed and random conditions across time. We found that there was a significant region by variable interaction in the fixed condition (p < 0.001) such that there was a stronger representation of movements in the lPFC and a stronger representation of RL in the dSTR. We also found a significant region by variable interaction in random condition (p < 0.001) such that there was a stronger representation of movements in lPFC and a stronger representation of color bias in the dSTR.

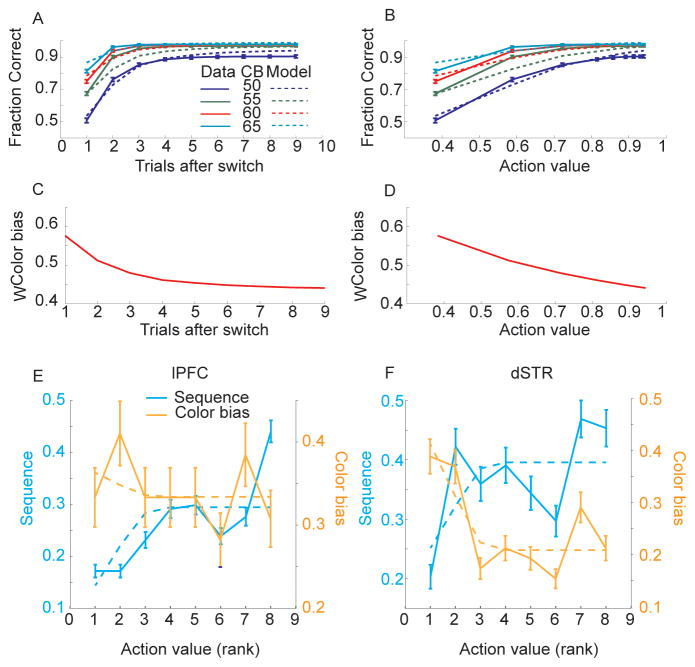

In the final analyses we considered how sequence and color bias information might be traded off during learning in the fixed blocks. Both reaction time and fraction correct analysis of the behavior in the fixed condition suggested that when the sequence switched across blocks the animals reverted to extracting information from the fixation stimulus to determine the correct direction of movement. After 3–4 trials the animals then were able to use the accumulated feedback about which sequence was correct, and execute the sequence from memory. When we examined the behavior we found that color bias and sequence information were integrated, with color bias playing a larger role in the early trials after the sequence switched when action values were small (Fig. 9A,B), and action value or learning contributing more to decisions later in the block. Both action value and color bias were used to make decisions throughout the block, however, evidenced by the impact of color bias information on decisions even at the end of the block. We used a logistic regression model to estimate the relative impact of action value and color bias on decisions. The model provided a good fit to the data (Fig. 9A, B) and both action value (p < 0.001) and color bias (p < 0.001) were significant predictors of choice. Using the coefficients derived from the model, the relative weight of color bias (WColor bias) or its complement action value (WAction value = 1 − WColor bias) on the decision process could be estimated (Fig. 9C, D).
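One way to turn logistic regression coefficients into complementary weights is sketched below. The formula for the weights is not given in this section, so the normalization used here (each predictor's absolute contribution to the logit, divided by the sum of both) is an assumption, as are the function names, the coding of color bias as q − 0.5, and the coefficient values.

```python
import math


def choice_probability(color_evidence, action_value, b0, b_color, b_value):
    """Logistic model of making the choice favored by both predictors."""
    logit = b0 + b_color * color_evidence + b_value * action_value
    return 1.0 / (1.0 + math.exp(-logit))


def relative_weights(color_evidence, action_value, b_color, b_value):
    """Return (W_color_bias, W_action_value), which sum to 1.

    Each weight is that predictor's share of the summed absolute
    contributions to the logit (an assumed definition).
    """
    color_term = abs(b_color * color_evidence)
    value_term = abs(b_value * action_value)
    total = color_term + value_term
    if total == 0:
        raise ValueError("weights are undefined when both terms are zero")
    w_color = color_term / total
    return w_color, 1.0 - w_color


# Hypothetical coefficients; color evidence coded as q - 0.5.
B_COLOR, B_VALUE = 20.0, 3.0
early = relative_weights(0.60 - 0.5, 0.2, B_COLOR, B_VALUE)  # low action value
late = relative_weights(0.60 - 0.5, 1.0, B_COLOR, B_VALUE)   # high action value
print([round(w, 3) for w in early], [round(w, 3) for w in late])
```

Under these assumptions the color bias weight falls as action value grows within a block, matching the qualitative pattern described for the behavior.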

Fig. 9.

Integration of sequence and color bias information and evolution with learning in behavioral and neural data. A. Behavior and model estimates of behavior as a function of learning and color bias (50, 55, 60, 65). Solid lines are behavior, dotted lines are model estimates. B. Same as panel A, plotted as a function of action value. C. Color bias weight derived from behavioral model. D. Same as C plotted in terms of action value. E. Fraction of neurons significant for sequence and color bias in lPFC at movement onset (bin from t = 0 to 300 ms), normalized by number of neurons significant when analysis is run without binning by RL. Solid lines are data, dashed lines are the relative behavioral weight of action value, WAction value(CB, value), or color bias, WColor bias (CB, value). Note these lines are not the same as those plotted in panel D because the x-axes differ. Panel D is linear in action value, whereas panels E and F are averages of rank action value. F. Same as panel E for dSTR.

In the next analysis we considered the change in color bias and sequence representation in neural responses in the fixed condition with learning. We assessed this in the neural responses by sorting all data from each recording session according to the RL estimate of the value of individual movements. Movements have low action value early in the block and they increase with trials in the block (Fig. 5B). Thus, action value captures how well the animals have learned the sequence. We binned all the trials by action value and ran the ANOVA model separately on the neural data in each bin, dropping RL from the model (Fig. 9E,F). Only one time bin (0–300 ms, relative to saccade onset) was analyzed. We found that the neural representation of sequence increased (fraction of significant units), and color bias decreased, as the action value increased (Fig. 9E,F). We then used estimates of the relative behavioral weight of action value information, WAction value, derived from the behavioral model to predict neural sequence information (fraction of neurons significant for sequence), and the relative behavioral weight of color bias, WColor bias, to predict the neural color bias representation (fraction of neurons significant for color bias). We found that there was a significant relationship between action value and neural sequence information in lPFC (p = 0.001) but not between behavioral color bias and neural color bias information in lPFC (p = 0.832). We also found a significant relationship between behavioral color bias weight and neural color bias information in dSTR (p = 0.010) but not between behavioral action value weight and neural sequence information (p = 0.086), although this was close to significant. Finally we compared these directly by examining the interaction between behavioral action value information and neural sequence information in lPFC and neural color bias information in the dSTR and found a significant interaction (p < 0.001). 
Therefore, as sequence information increased through learning of action values, lPFC increased the representation of sequence, and as color bias information became less relevant behaviorally, the dSTR decreased the representation of color bias.

Discussion

We recorded neural activity in lPFC and the dSTR while animals carried out a task in which they had to saccade to a peripheral target that matched the majority pixel color in a central fixation cue. In the random condition this was the only information available about saccade direction. In the fixed condition the spatial sequence of saccades remained fixed for sets of 8 correct trials and, therefore, animals could use this information to improve their decisions. Consistent with this, in the fixed condition the animals made more correct decisions and responded faster. We found neurons in both structures related to task condition, sequence, movement, reinforcement learning (RL) and color bias factors. When activity was split by task and compared between lPFC and dSTR we found that there were no significant differences in the representation of sequence information across structures. Movements were represented well in advance in both structures in the fixed condition, consistent with the fact that the animals could preplan movements in this condition. In the random condition the movement representation in lPFC preceded the movement representation in dSTR and in both random and fixed conditions more neurons in lPFC represented movements than in dSTR. In contrast to this, for both RL and color bias factors, there was a stronger representation in the dSTR. Thus, lPFC appeared to select actions, whereas dSTR appeared to represent the value of the action. Finally, we found that there was an inverse relationship in the fixed condition, between sequence and color bias representation in the dSTR, as each block evolved. This suggests that the dSTR is involved in trading off the relative importance of information in the fixation stimulus, necessary when a new sequence is being selected, and learned action value, about which sequence is correct in the current block.

Despite the list of disorders attributable to the frontal-striatal system -- for example schizophrenia, impulsive disorders, drug addiction, Parkinson’s disease, Tourette’s syndrome -- it is still not clear what these circuits contribute to normal behavior. Much of the thinking about this system is driven by the anatomy of the circuit. The frontal-striatal interaction is the front end of a larger cortical-basal ganglia-thalamo-cortical circuit (Alexander et al., 1986; Middleton and Strick, 2000; Parent and Hazrati, 1995). One of the prominent mechanistic theories of this circuit suggests that the medium spiny GABAergic projection neurons in the striatum, which constitute its only output, are divided into direct and indirect pathways (Albin et al., 1989; DeLong, 1990; Surmeier et al., 2007). The direct channel has predominantly dopamine D1 receptors and projects to the GPi and the indirect channel has predominantly dopamine D2 receptors and projects first to the GPe, which projects to the STN and then to the GPi (Surmeier et al., 1996).

Several authors have suggested that the striatum carries out action selection (Denny-Brown and Yanagisawa, 1976; Frank, 2005; Humphries et al., 2006; Mink, 1996). These ideas have often been motivated by the finding that STN projections to the GPi tend to be diffuse whereas striatal projections to the GPi tend to be focused and targeted (Parent and Hazrati, 1993). Thus, the inhibitory GPi output is increased in a diffuse way by STN input, and is decreased in a targeted way by the direct striatal input. These models suggest that mechanisms within the striatum refine or select actions and it is the focused projection of the striatum via the direct pathway into the GPi that disinhibits the selected action. Although Mink proposed that the BG are important for action selection, it was also noted that the responses of GPi neurons often did not sufficiently precede movement to actually be involved in movement initiation (Mink, 1996). This fact complicates the interpretation of the BG as an action selection circuit and leaves open the question of what the activity in this pathway is actually contributing to an ongoing movement.

As noted above, we found that more lPFC neurons than dSTR neurons in both the random and fixed conditions coded the movement. Furthermore, in the random condition movement specific activity in lPFC exceeded baseline levels about 60 ms before dSTR activity. This is generally consistent with previous studies (Crutcher and Alexander, 1990; Sul et al., 2011), although it has not been shown directly within a single experiment. If lPFC activity simply increased in a non-specific way, prior to the movement, an ANOVA for movement on spike counts would not show a significant effect. Therefore our data suggest that the action is being selected or represented in lPFC before it is being selected in the dSTR. In general, this is inconsistent with the action selection hypothesis.

A second prominent hypothesis suggests that the striatum is important for reinforcement learning (Amemori et al., 2011; Antzoulatos and Miller, 2011; Brasted and Wise, 2004; Daw et al., 2005a; Doya, 2000; Histed et al., 2009; Pasupathy and Miller, 2005; Pessiglione et al., 2006; Williams and Eskandar, 2006), which is closely related to the hypothesis that it is important for developing habits (Graybiel, 2008; Matsumoto et al., 1999). For example, studies have shown that the representation of direction in the caudate preceded in time the representation in PFC early in learning, and perhaps served as a teaching signal for the PFC (Antzoulatos and Miller, 2011; Pasupathy and Miller, 2005). This is generally consistent with our finding that the caudate had an enriched representation of value derived from the reinforcement learning algorithm in the fixed condition. The learning in Pasupathy and Miller, however, evolved over about 60 trials, whereas the selection in our task evolved over 3–4 trials. This made it difficult for us to examine changes in the relative timing of movement signals with learning, and therefore to compare our results directly.

Much of the work that suggests a role for the striatum in RL has been motivated by the strong projection of the mid-brain dopamine neurons to the striatum (Haber et al., 2000) and the finding that dopamine neurons signal reward prediction errors (Schultz, 2006). Evidence also suggests, however, that dopamine neurons can be driven by aversive events (Joshua et al., 2008; Matsumoto and Hikosaka, 2009; Seamans and Robbins, 2010), and therefore a straightforward interpretation of dopamine responses as a reward prediction error is not possible. It is still possible that striatal neurons represent action value. Although this has been shown previously (Samejima et al., 2005), similar value representations have been seen in the cortex (Barraclough et al., 2004; Kennerley and Wallis, 2009; Leon and Shadlen, 1999; Platt and Glimcher, 1999) and therefore the specific role of the striatal action value signal was unclear. As we recorded from both lPFC and the dSTR simultaneously, we were able to show that there was an enrichment of value representations in the dSTR relative to the lPFC in the same task. Interestingly, this was true in both the random and fixed task conditions. In the fixed task condition we found that activity scaled with a value estimate from a reinforcement learning algorithm, and in the random and fixed conditions the activity scaled with the color bias, which is related to the animals’ probability of advancing in the sequence, and ultimately the number of steps necessary to get the reward. This finding is consistent with a role for the dSTR in reinforcement learning, although it suggests a more general role in value representation, as the neurons represent value in both random and fixed conditions. The representation in the random condition is consistent with findings from previous studies (Ding and Gold, 2010).

One interesting question is where the action value information comes from, if not from lPFC. There are three likely candidates. One is the dopamine neurons, which have a strong projection to the striatum (Haber et al., 2000), and respond to rewards and reward prediction errors (Joshua et al., 2008; Matsumoto and Hikosaka, 2009; Schultz, 2002). One caveat to this idea, however, is that dopamine acts through metabotropic (G protein-coupled) receptors rather than ion channels (Gazi and Strange, 2002), and the dynamics of the second messenger cascades activated by dopamine receptors are apparently not fast enough to affect neural activity on rapid time scales (Lavin et al., 2005). The effects could, however, be driven by glutamate co-released from dopamine neurons (Lavin et al., 2005). A second possibility is that the value signal is carried by the substantial input from the centro-median/parafascicular (CM-PF) thalamic nuclei (Nakano et al., 1990). A majority of neurons in CM-PF respond when low-value actions are required (Matsumoto et al., 2001; Minamimoto et al., 2005). An additional possibility is that the increased value representation is coming from other areas of frontal cortex, for example dorsal anterior cingulate projections to the striatum. This area has a strong value representation (Kennerley and Wallis, 2009), and it sends projections into the striatum that slightly overlap with the lPFC projection (Haber et al., 2006). The projections from this area, however, do not appear to reach the portion of the dorsal striatum from which we recorded (Haber et al., 2006). Overall, then, the most likely candidates for a fast value-related signal in the striatum would be glutamate co-released from dopamine neurons, or the CM-PF input.

Examination of the neural representation of color bias and sequence in the fixed condition showed that they followed complementary patterns, such that sequence information increased in lPFC and color bias information decreased in dSTR as the monkeys learned within each block. The increase and decrease were significantly related to the relative behavioral weight of sequence and color information, estimated by a Bayesian behavioral model. Thus, when the sequence switched, the animals reverted to using the pixel information as they relearned the sequence, and this could be seen in both the behavioral and neural data. As they learned the sequence they transitioned to using less pixel information, which was less accurate, and more sequence information, which was more accurate. This trade-off is consistent, at a high level, with a model which has suggested that dual control systems, one in lPFC and one in the dSTR, compete for control of behavior (Daw et al., 2005b). This model suggests that the trade-off between these systems is mediated by optimal integration based on the uncertainty associated with the predictions of each system. In other words, if one system is producing uncertain estimates, it is weighted less in the decision process. Thus, our data is consistent with this aspect of the model. What is less clear from our data, however, is whether the dSTR does action selection when action values are high, and the sequences can be executed like habits. A different task structure might make this clearer.

Other groups have suggested that the BG is important for sequential motor control, or the execution of well-learned motor acts (Berns and Sejnowski, 1998; Fujii and Graybiel, 2005; Marsden and Obeso, 1994). Our animals were highly over-trained on the sequences, and therefore the actions in our task were both well-learned and sequential. We did not find that the dSTR had an enriched representation of sequences, or showed a stronger representation of actions in the fixed condition where sequence information was most prevalent, although it did have representations of both. We have found in previous work that patients with Parkinson’s disease have deficits in sequence learning (Seo et al., 2010), although the deficits in that study were specifically with respect to reinforcement learning of the sequences. Thus, we do not find evidence that the dSTR is relatively more important for the execution of over-learned motor programs. If anything there was a bias for lPFC to have an enriched representation of sequences and the increase in sequence representation was more strongly correlated with behavioral estimates of sequence weight in lPFC than in dSTR. We have consistently found in previous studies that lPFC has strong sequence representations, that are predictive of the actual sequence executed by the animal, even when the animal makes mistakes (Averbeck et al., 2002, 2003; Averbeck and Lee, 2007; Averbeck et al., 2006).

Several groups have recently proposed that the striatum (Lauwereyns et al., 2002b; Nakamura and Hikosaka, 2006), BG (Turner and Desmurget, 2010) or dopamine (Niv et al., 2007) are important for modulating response vigor, which is the rate and speed of responding. In many cases actions are more vigorous when they are directed immediately to rewards than when they must be done without a reward, or to get to a subsequent state where a reward can be obtained (Shidara et al., 1998). Thus, the fact that we find a strong value-related signal in the dSTR is consistent with this hypothesis. Also consistent with this, responding became much faster in the fixed condition as the animal selected the appropriate sequence of actions, although reaction times were relatively flat in the random condition as a function of color bias. The relationship between value and reaction time in our tasks, however, is complicated, as the animal had to carry out various computations to extract the value information, and the computations themselves are time consuming. This differs from the straightforward relationship between rewards and actions that has been used in previous tasks (Lauwereyns et al., 2002b) emphasizing a role for the striatum in modulating response vigor.

Conclusion

In summary, we have found that lPFC has an enriched representation of actions, and that in the random condition the action representation in lPFC precedes the representation in the dSTR. Furthermore, the enriched representation of movements in lPFC is complemented by an enriched representation of action values in the dSTR. The action value representation in the dSTR was stronger for reinforcement based value in the fixed condition, and for color bias in both fixed and random conditions. Finally, we also found that the dSTR and lPFC represented the extent to which sequence information (driven by reinforcement) vs. color bias information was relevant in the task. Thus, our data is generally not consistent with the hypothesis that the dSTR is important for action selection, but it is consistent with the hypotheses that the dSTR plays a role in reinforcement learning, and more generally in representing action value, perhaps in the service of response vigor.

Methods

General

Two male rhesus macaques were used in this study. Experimental procedures for the first monkey were in accordance with the United Kingdom Animals (Scientific Procedures) Act 1986. Experimental procedures for the second monkey were performed in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals and were approved by the Animal Care and Use Committee of the National Institute of Mental Health. Most procedures were equivalent except the UK animal received food pellet rewards and the US animal received juice reward, as detailed below. The recording chamber (18 mm diameter) was placed over the lateral prefrontal cortex (lPFC) in a sterile surgery using stereotaxic coordinates (AP 26, ML 17 relative to ear-bar zero, in both monkeys) derived from a structural MRI (Fig. 1D). This placed the center of the chamber near the caudal tip of the principal sulcus with the FEF in the rear of the chamber.

Behavioral task

The animals carried out an oculomotor sequential decision-making task (Fig. 1A). Each trial began when the animals acquired fixation on a green circle (Fixate). If the animal maintained fixation for 500 ms the green target was replaced by a dynamic pixelating stimulus (diameter was 1 degree of visual angle) with a varied proportion of red and blue pixels. At the same time target stimuli were presented (Stim On). The fixation circle stimulus was generated by randomly choosing the color of each pixel in the stimulus (N=518 pixels) to be blue (or red) with a probability q, which we refer to as the color bias (Fig. 1A, inset). The color of a subset (10%) of the pixels was updated on each video refresh (60 Hz). Whenever a pixel was updated its color was always selected with the same probability, q. The set of pixels that was updated was selected randomly on each refresh.
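The stimulus update rule described above can be sketched as follows. This is only an illustrative sketch, not the presentation code used in the experiment: the function names, the color labels and the rounding of 10% of 518 pixels to 52 are assumptions made for the example.

```python
import random

N_PIXELS = 518          # number of pixels in the fixation stimulus (from the text)
UPDATE_FRACTION = 0.10  # fraction of pixels redrawn on each video refresh


def draw_color(q, majority, rng):
    """Return the majority color with probability q, otherwise the other color."""
    minority = "red" if majority == "blue" else "blue"
    return majority if rng.random() < q else minority


def initial_frame(q, majority, rng):
    """Draw every pixel independently with color bias q."""
    return [draw_color(q, majority, rng) for _ in range(N_PIXELS)]


def next_frame(frame, q, majority, rng):
    """Redraw a random subset of pixels, each with the same probability q."""
    n_update = round(UPDATE_FRACTION * len(frame))  # assumption: 52 of 518 pixels
    updated = list(frame)
    for idx in rng.sample(range(len(frame)), n_update):
        updated[idx] = draw_color(q, majority, rng)
    return updated


rng = random.Random(0)
frames = [initial_frame(0.65, "blue", rng)]
for _ in range(59):  # roughly one second of frames at 60 Hz
    frames.append(next_frame(frames[-1], 0.65, "blue", rng))
```

Because every redraw uses the same q, the expected proportion of majority-colored pixels stays at q across frames while the individual pixels change.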

The animal’s task was to saccade to the target that matched the majority pixel color in the fixation stimulus. It could make its decision at any time after the target stimuli appeared. After the animal made a saccade to the peripheral target it had to maintain fixation for 300 ms to signal its decision (1st Move + Hold). If it made a saccade to the correct target, the target then turned green and the animal had to maintain fixation for an additional 250 ms (Fixate). After this 250 ms of fixation the green target was again replaced by the blue and red pixelating stimulus and two new peripheral targets were presented (Stim On). If the animal made a saccade to the wrong target it was extinguished and the animal was forced back to repeat the previous decision step with another pixelating stimulus. This was repeated until the animal made the correct choice. For each trial the animal’s task was to complete a sequence of three correct decisions at which point the animal received either a juice reward (0.1 ml) or a food pellet reward (TestDiet 5TUL 45mg) and a 2000 ms inter-trial interval began. The animal always received a reward if it reached the end of the sequence of three correct decisions, even if it made errors on the way. Furthermore, if the animal made a mistake it only had to repeat the previous decision, it was not forced back to the beginning of the sequence.

The task was carried out under two different conditions which we refer to as the fixed and random conditions. In the random condition the correct spatial sequence of decisions varied from trial to trial (Fig. 1B). In the fixed condition the correct spatial sequence of eye movements remained fixed for blocks of eight correct trials (Fig. 1B). After the animal executed eight trials without any mistakes in the fixed condition the sequence switched pseudo-randomly to a new one. Thus, in the random condition the animal had to rely on the information in the fixation stimulus to determine the correct saccade direction for each choice, whereas in the fixed condition the animal could execute the sequence from memory, except following a sequence switch. In the random condition if the animal made a mistake and had to repeat its decision, the correct direction was randomly reselected. For example, if the animal made a rightward saccade that was wrong and was forced back to repeat the decision, the rightward saccade could then be correct.

Recording sessions were randomly started with either a fixed or a random set each day and then the two conditions were interleaved. Each random set was 64 completed trials (Fig. 1B), where a trial was only counted as completed if the animal made it to the end of the sequence and received a reward. Fixed sets were 64 correct trials because the animal had to execute each of the eight sequences correctly 8 times to complete a fixed set. The total number of correct and incorrect trials in fixed sets depended upon the animal’s performance. Neural activity was analyzed if a stable isolation was maintained for a minimum of two random sets and two fixed sets.

Each trial was composed of three binary decisions and therefore there were eight possible sequences (Fig. 1C). The eight sequences were composed of ten individual movements (Fig. 1D). Each movement occurred in at least two sequences. We also used several levels of color bias, q as defined above. On most recording days in the fixed sets we used q ∈ {0.50, 0.55, 0.60, 0.65} and in random sets we used q ∈ {0.50, 0.55, 0.60, 0.65, 0.70}. The higher probability level in the random sets was included to maintain the animal’s motivation as this condition was more difficult. The color bias was selected randomly for each movement and was not held constant within a trial. Choices on the 50% color bias condition were rewarded randomly. The sequences were highly overlearned. One animal had 103 total days of training, and the other had 92 days, before chambers were implanted. The first 5–10 days of this training were devoted to basic fixation and saccade training.

In theory the stimulus had substantial information, and an optimal observer would have been able to infer the correct color 98% of the time with one frame with q=0.55, because of the large number of pixels, each of which provided an independent estimate of the majority color. In practice there are likely limitations in the ability of the animal to extract the maximum information in the stimulus.
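The single-frame figure quoted above can be checked with a short calculation. The sketch below assumes that the optimal observer simply reports the color held by the majority of the 518 pixels, with ties broken by a fair guess; for two equally likely alternatives and independent pixels this majority rule is the maximum-likelihood decision. The function names are hypothetical.

```python
import math


def log_binom_pmf(k, n, q):
    """Log of the binomial probability of k majority-colored pixels out of n."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(q) + (n - k) * math.log(1 - q))


def p_correct_single_frame(n, q):
    """Probability that a majority-vote observer reports the true majority color.

    Ties (possible when n is even) are resolved by a fair guess.
    """
    p = 0.0
    for k in range(n + 1):
        pk = math.exp(log_binom_pmf(k, n, q))
        if 2 * k > n:
            p += pk
        elif 2 * k == n:
            p += 0.5 * pk
    return p


p_single = p_correct_single_frame(518, 0.55)
print(f"P(correct | one frame, q = 0.55) = {p_single:.3f}")
```

Under these assumptions the result is close to the value stated in the text, and it rises quickly toward 1 for the larger color biases.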

Data Analysis

Neural data was analyzed by fitting an ANOVA model (see Supplemental methods for details). After running the ANOVAs we had time courses of the fraction of significant neurons (all at p < 0.01) for each area, for each task factor. Significant bin-by-bin differences between these time courses were assessed with a Gaussian approximation (Zar, 1999). We also carried out a bootstrap analysis on a subset of the data and obtained results that were highly consistent with the Gaussian approximation. The raw p-values from this analysis suffer from a multiple comparisons problem, as we applied the analysis across many time points. Therefore, we subsequently corrected for multiple comparisons using the false discovery rate (FDR) correction (Benjamini and Yekutieli, 2001). To do this we first calculated the uncorrected p-values using the Gaussian approximation. The p-values were then sorted in ascending order. The rank-ordered p-values, $P_{(k)}$, were considered significant when they were below the threshold defined by $P_{(k)} \le \frac{k}{m}\alpha$, where k is the rank of the sorted p-values, α is the FDR significance level and m is the total number of tests (time points) under consideration. An α level of 0.05 was used for these tests. Any p-values exceeding this threshold were set to 1.
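The two statistical steps described above can be illustrated with a minimal sketch. It rests on several assumptions that are not confirmed by the text: the Gaussian approximation is taken to be a pooled two-proportion z-test, the FDR threshold is taken to have the common form kα/m, and the standard step-up rule is applied (all p-values up to the largest rank that passes are retained). The function names are hypothetical.

```python
import math


def two_proportion_p_value(x1, n1, x2, n2):
    """Two-sided p-value for a difference between two proportions.

    Uses a pooled normal (Gaussian) approximation; x1 of n1 and x2 of n2
    are the counts of significant neurons in the two areas for one time bin.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0
    z = (p1 - p2) / se
    return math.erfc(abs(z) / math.sqrt(2))  # P(|Z| > |z|) for a standard normal


def fdr_correct(p_values, alpha=0.05):
    """Step-up FDR correction; p-values that do not survive are set to 1."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * alpha / m:  # assumed threshold k * alpha / m
            k_max = rank
    corrected = [1.0] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            corrected[idx] = p_values[idx]
    return corrected


# Hypothetical p-values from four time bins
corrected = fdr_correct([0.001, 0.04, 0.03, 0.5])
```

Note that the dependency-robust variant of the procedure divides the threshold by an additional harmonic-sum factor, which would make this sketch more conservative if included.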

We modeled learning after sequence switches using a reinforcement learning model (Sutton and Barto, 1998). Specifically, the value, vi of each action, i, was updated according to

$$v_i(t) = v_i(t-1) + \rho_f \left( r(t) - v_i(t-1) \right) \tag{1}$$

Rewards, r(t), for correct actions were 1 and for incorrect actions were 0. This was the case for each movement, not just the movement that led to the juice reward. The variable ρf is the learning rate parameter. We used separate values of ρf for positive (ρf=positive) and negative (ρf=negative) feedback, i.e. correct and incorrect actions. In fixed blocks the value of the chosen action and the value of the action which was not chosen were both updated, as the animal knew that the opposite action was correct if the chosen action was incorrect, and vice-versa. This is often called fictive-learning (Hayden et al., 2009). When sequences switched, actions in the sequence following the switch that were the same as actions in the sequence that preceded the switch were given the value they had before the sequence switched. In other words the values were copied into the new block. This was consistent with the fact that the animal did not know when the sequence switched and so it could not update its action values until it received feedback that the previous action was no longer correct. Actions from the previously correct sequence that were not possible in the new sequence were given a value of 0.
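The value update with fictive learning described above can be sketched as follows. This is a sketch under stated assumptions rather than the analysis code: it assumes that the unchosen action is updated toward the complementary outcome (1 − r) with the same learning rate as the chosen action, and the function and variable names are hypothetical.

```python
def update_values(values, chosen, reward, rho_pos, rho_neg):
    """Delta-rule update for the two actions available at one decision point.

    values  : dict mapping each of the two actions to its current value
    chosen  : key of the action that was taken
    reward  : 1 for a correct action, 0 for an incorrect action
    rho_pos : learning rate after positive feedback
    rho_neg : learning rate after negative feedback
    """
    rho = rho_pos if reward == 1 else rho_neg
    new_values = dict(values)
    for action, v in values.items():
        # Fictive learning (assumption): the unchosen action is treated as if
        # it had produced the opposite outcome.
        target = reward if action == chosen else 1 - reward
        new_values[action] = v + rho * (target - v)
    return new_values


values = {"left": 0.0, "right": 0.0}
values = update_values(values, "left", 1, rho_pos=0.44, rho_neg=1.0)
```

With a learning rate of 1 after negative feedback, a single error would move both values all the way to their targets, which is one way to read the fast relearning after a sequence switch.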

The learning rate parameters ρf and an additional inverse temperature parameter, β, were estimated separately for each session by minimizing the negative log-likelihood of the animals’ decisions using fminsearch in MATLAB, as we have done previously (Djamshidian et al., 2011). If β is small then the animal is less likely to pick the higher value target whereas if β is large the animal is more likely to pick the higher value target, for a fixed difference in target values. To estimate the log-likelihood we first calculated choice probabilities using:

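$$d_j(t) = \frac{e^{\beta v_j(t)}}{\sum_{i=1}^{2} e^{\beta v_i(t)}} \tag{2}$$

Here $d_j(t)$ is the probability of choosing action j at decision t. The sum is over the two actions possible at each point in the sequence. We then calculated the log likelihood (ll) of the animal’s decision sequence as:

$$ll = \sum_{t=1}^{T} \left[ c_1(t) \log d_1(t) + \left( 1 - c_1(t) \right) \log \left( 1 - d_1(t) \right) \right] \tag{3}$$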

The sum is over all decisions in a recording session, T. The variable c1(t) models the chosen action and has a value of one for action 1 and 0 for action 2. Average optimal values for β were 1.858 +/− 0.03 and 1.910 +/− 0.025 for monkeys 1 (N = 34 sessions) and 2 (N = 61 sessions), respectively. Average optimal values for ρf=positive were 0.440 +/− 0.015 and 0.359 +/− 0.008 for monkeys 1 and 2. Average optimal values for ρf=negative were 1.042 +/− 0.03 and 0.656 +/− 0.013 for monkeys 1 and 2. The value of the action that was taken, vi(t), was then correlated with neural activity in the ANOVA model.
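The choice rule and likelihood described above can be sketched in a few lines. The sketch assumes a two-action softmax choice rule with inverse temperature β, and it replaces the simplex search used in the paper with a coarse grid search over β purely for illustration. The decision data and all names are hypothetical.

```python
import math


def choice_probability(v_chosen, v_other, beta):
    """Two-action softmax: probability of choosing the action valued v_chosen."""
    return 1.0 / (1.0 + math.exp(-beta * (v_chosen - v_other)))


def log_likelihood(decisions, beta):
    """Log-likelihood of a decision sequence.

    decisions: list of (v_action1, v_action2, chose_action1) tuples, one per
    decision in the session.
    """
    ll = 0.0
    for v1, v2, chose1 in decisions:
        d1 = choice_probability(v1, v2, beta)
        ll += math.log(d1) if chose1 else math.log(1.0 - d1)
    return ll


# Hypothetical session: action 1 has the higher value and is chosen 3 of 4 times
decisions = [(1.0, 0.0, True), (1.0, 0.0, True), (1.0, 0.0, True), (1.0, 0.0, False)]

# Grid search stand-in for the simplex optimizer (illustrative only)
beta_grid = [0.1 * i for i in range(1, 51)]
best_beta = max(beta_grid, key=lambda b: log_likelihood(decisions, b))
```

In a full fit the learning rates would be optimized jointly with β, since the action values that enter the choice rule depend on them.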

Integration of action value and color bias information in fixed blocks

We modeled the integration of sequence or learned action value, and color bias information on choices in the fixed condition. We used action value as an estimate of sequence learning, because knowing the sequence entails knowing the actions. Although it is possible that some actions are known before the complete sequence, the structure of the task is such that knowing actions and sequences are highly correlated. Further, we found that the behavioral weight estimated by action value significantly predicted sequence representation in lPFC neurons (Fig. 9).

We estimated the relative influence of action value and color bias information by using logistic regression to predict the behavioral performance (fraction correct or fc) as a function of color bias (CB) and action value. Specifically, we fit the following equation:

| (4) |

Where g is the logistic transform. We then used the coefficients derived from the logistic regression model to estimate the weight given to action value and color bias:

| (5) |

For pixel color bias the weights were WColor bias(CB, value) = 1 − WAction value(CB, value). As these weights for action value and color bias are related by a linear transform, either (but not both, as they are perfectly correlated) can be used to predict the fraction of neurons significant for each factor (Fig. 9E,F). It is clear, however, in Fig. 9 that the increasing function, WAction value(CB, value), correlates with sequence in lPFC, and the decreasing function, WColor bias(CB, value), correlates with color bias in the dSTR. Values plotted in Fig. 9 are averaged across color bias levels and shown only as a function of action value. Analysis of the effect of color bias was done across levels, and therefore we need to know the average weight given to color bias, not the weight given to a specific color bias, which could not be known to the animal until after the stimulus was shown.

Supplementary Material

Acknowledgments

This work was supported by the Brain Research Trust, the Wellcome Trust and the Intramural Research Program of the National Institute of Mental Health.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Albin RL, Young AB, Penney JB. The functional anatomy of basal ganglia disorders. Trends Neurosci. 1989;12:366–375. doi: 10.1016/0166-2236(89)90074-x. [DOI] [PubMed] [Google Scholar]

- Alexander GE, DeLong MR, Strick PL. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annu Rev Neurosci. 1986;9:357–381. doi: 10.1146/annurev.ne.09.030186.002041. [DOI] [PubMed] [Google Scholar]

- Amemori K, Gibb LG, Graybiel AM. Shifting responsibly: the importance of striatal modularity to reinforcement learning in uncertain environments. Front Hum Neurosci. 2011;5:47. doi: 10.3389/fnhum.2011.00047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Antzoulatos EG, Miller EK. Differences between neural activity in prefrontal cortex and striatum during learning of novel abstract categories. Neuron. 2011;71:243–249. doi: 10.1016/j.neuron.2011.05.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Apicella P, Ravel S, Deffains M, Legallet E. The role of striatal tonically active neurons in reward prediction error signaling during instrumental task performance. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2011;31:1507–1515. doi: 10.1523/JNEUROSCI.4880-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Averbeck BB, Chafee MV, Crowe DA, Georgopoulos AP. Parallel processing of serial movements in prefrontal cortex. Proc Natl Acad Sci U S A. 2002;99:13172–13177. doi: 10.1073/pnas.162485599. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Averbeck BB, Chafee MV, Crowe DA, Georgopoulos AP. Neural activity in prefrontal cortex during copying geometrical shapes I. Single cells encode shape, sequence, and metric parameters. Exp Brain Res. 2003;150:127–141. doi: 10.1007/s00221-003-1416-6. [DOI] [PubMed] [Google Scholar]

- Averbeck BB, Lee D. Prefrontal neural correlates of memory for sequences. Journal of Neuroscience. 2007;27:2204–2211. doi: 10.1523/JNEUROSCI.4483-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Averbeck BB, Sohn JW, Lee D. Activity in prefrontal cortex during dynamic selection of action sequences. Nat Neurosci. 2006;9:276–282. doi: 10.1038/nn1634. [DOI] [PubMed] [Google Scholar]

- Bar-Gad I, Morris G, Bergman H. Information processing, dimensionality reduction and reinforcement learning in the basal ganglia. Prog Neurobiol. 2003;71:439–473. doi: 10.1016/j.pneurobio.2003.12.001. [DOI] [PubMed] [Google Scholar]

- Barnes TD, Kubota Y, Hu D, Jin DZ, Graybiel AM. Activity of striatal neurons reflects dynamic encoding and recoding of procedural memories. Nature. 2005;437:1158–1161. doi: 10.1038/nature04053. [DOI] [PubMed] [Google Scholar]

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209. [DOI] [PubMed] [Google Scholar]

- Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat. 2001;29:1165–1188. [Google Scholar]

- Berns GS, Sejnowski TJ. A computational model of how the basal ganglia produce sequences. J Cogn Neurosci. 1998;10:108–121. doi: 10.1162/089892998563815. [DOI] [PubMed] [Google Scholar]

- Brasted PJ, Wise SP. Comparison of learning-related neuronal activity in the dorsal premotor cortex and striatum. The European journal of neuroscience. 2004;19:721–740. doi: 10.1111/j.0953-816x.2003.03181.x. [DOI] [PubMed] [Google Scholar]

- Crutcher MD, Alexander GE. Movement-related neuronal activity selectively coding either direction or muscle pattern in three motor areas of the monkey. Journal of Neurophysiology. 1990;64:151–163. doi: 10.1152/jn.1990.64.1.151. [DOI] [PubMed] [Google Scholar]

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans’ choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005a;8:1704–1711. doi: 10.1038/nn1560. [DOI] [PubMed] [Google Scholar]

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nature Neuroscience. 2005b;8:1704–1711. doi: 10.1038/nn1560. [DOI] [PubMed] [Google Scholar]

- Dayan P, Daw ND. Decision theory, reinforcement learning, and the brain. Cognitive, affective & behavioral neuroscience. 2008;8:429–453. doi: 10.3758/CABN.8.4.429. [DOI] [PubMed] [Google Scholar]

- DeLong MR. Primate models of movement disorders of basal ganglia origin. Trends Neurosci. 1990;13:281–285. doi: 10.1016/0166-2236(90)90110-v. [DOI] [PubMed] [Google Scholar]

- Denny-Brown D, Yanagisawa N. The role of the basal ganglia in the initiation of movement. In: Yahr MD, editor. The Basal Ganglia. New York: Raven Press; 1976. pp. 115–149. [PubMed] [Google Scholar]

- Ding L, Gold JI. Caudate encodes multiple computations for perceptual decisions. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2010;30:15747–15759. doi: 10.1523/JNEUROSCI.2894-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Djamshidian A, O’Sullivan SS, Wittmann BC, Lees AJ, Averbeck BB. Novelty seeking behaviour in Parkinson’s disease. Neuropsychologia. 2011 doi: 10.1016/j.neuropsychologia.2011.04.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Doya K. Complementary roles of basal ganglia and cerebellum in learning and motor control. Current opinion in neurobiology. 2000;10:732–739. doi: 10.1016/s0959-4388(00)00153-7. [DOI] [PubMed] [Google Scholar]

- Doyon J, Bellec P, Amsel R, Penhune V, Monchi O, Carrier J, Lehericy S, Benali H. Contributions of the basal ganglia and functionally related brain structures to motor learning. Behavioural brain research. 2009;199:61–75. doi: 10.1016/j.bbr.2008.11.012. [DOI] [PubMed] [Google Scholar]

- Frank MJ. Dynamic dopamine modulation in the basal ganglia: a neurocomputational account of cognitive deficits in medicated and nonmedicated Parkinsonism. Journal of Cognitive Neuroscience. 2005;17:51–72. doi: 10.1162/0898929052880093. [DOI] [PubMed] [Google Scholar]

- Frank MJ, Seeberger LC, O’Reilly RC. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 2004;306:1940–1943. doi: 10.1126/science.1102941. [DOI] [PubMed] [Google Scholar]

- Fujii N, Graybiel AM. Representation of action sequence boundaries by macaque prefrontal cortical neurons. Science. 2003;301:1246–1249. doi: 10.1126/science.1086872. [DOI] [PubMed] [Google Scholar]

- Fujii N, Graybiel AM. Time-varying covariance of neural activities recorded in striatum and frontal cortex as monkeys perform sequential-saccade tasks. Proc Natl Acad Sci U S A. 2005;102:9032–9037. doi: 10.1073/pnas.0503541102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Neuronal activity related to saccadic eye movements in the monkey’s dorsolateral prefrontal cortex. J Neurophysiol. 1991;65:1464–1483. doi: 10.1152/jn.1991.65.6.1464. [DOI] [PubMed] [Google Scholar]

- Fuster J. The Prefrontal Cortex. 4. London: Academic Press; 2008. [Google Scholar]

- Gazi L, Strange PG. Dopamine receptors. In: Pangalos MN, Davies CH, editors. Understanding G Protein-coupled Receptors and their Role in the CNS. New York: Oxford University Press; 2002. pp. 264–285.

- Graybiel AM. Habits, rituals, and the evaluative brain. Annu Rev Neurosci. 2008;31:359–387. doi: 10.1146/annurev.neuro.29.051605.112851.

- Grillner S, Hellgren J, Menard A, Saitoh K, Wikstrom MA. Mechanisms for selection of basic motor programs--roles for the striatum and pallidum. Trends Neurosci. 2005;28:364–370. doi: 10.1016/j.tins.2005.05.004.

- Haber SN, Fudge JL, McFarland NR. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. J Neurosci. 2000;20:2369–2382. doi: 10.1523/JNEUROSCI.20-06-02369.2000.

- Haber SN, Kim KS, Mailly P, Calzavara R. Reward-related cortical inputs define a large striatal region in primates that interface with associative cortical connections, providing a substrate for incentive-based learning. J Neurosci. 2006;26:8368–8376. doi: 10.1523/JNEUROSCI.0271-06.2006.

- Hayden BY, Pearson JM, Platt ML. Fictive reward signals in the anterior cingulate cortex. Science. 2009;324:948–950. doi: 10.1126/science.1168488.

- Hazy TE, Frank MJ, O’Reilly RC. Towards an executive without a homunculus: computational models of the prefrontal cortex/basal ganglia system. Philos Trans R Soc Lond B Biol Sci. 2007;362:1601–1613. doi: 10.1098/rstb.2007.2055.

- Histed MH, Pasupathy A, Miller EK. Learning substrates in the primate prefrontal cortex and striatum: sustained activity related to successful actions. Neuron. 2009;63:244–253. doi: 10.1016/j.neuron.2009.06.019.

- Hollerman JR, Tremblay L, Schultz W. Influence of reward expectation on behavior-related neuronal activity in primate striatum. J Neurophysiol. 1998;80:947–963. doi: 10.1152/jn.1998.80.2.947.

- Houk JC, Bastianen C, Fansler D, Fishbach A, Fraser D, Reber PJ, Roy SA, Simo LS. Action selection and refinement in subcortical loops through basal ganglia and cerebellum. Philos Trans R Soc Lond B Biol Sci. 2007;362:1573–1583. doi: 10.1098/rstb.2007.2063.

- Humphries MD, Stewart RD, Gurney KN. A physiologically plausible model of action selection and oscillatory activity in the basal ganglia. J Neurosci. 2006;26:12921–12942. doi: 10.1523/JNEUROSCI.3486-06.2006.

- Jin DZ, Fujii N, Graybiel AM. Neural representation of time in cortico-basal ganglia circuits. Proc Natl Acad Sci U S A. 2009;106:19156–19161. doi: 10.1073/pnas.0909881106.

- Joshua M, Adler A, Mitelman R, Vaadia E, Bergman H. Midbrain dopaminergic neurons and striatal cholinergic interneurons encode the difference between reward and aversive events at different epochs of probabilistic classical conditioning trials. J Neurosci. 2008;28:11673–11684. doi: 10.1523/JNEUROSCI.3839-08.2008.

- Kennerley SW, Wallis JD. Evaluating choices by single neurons in the frontal lobe: outcome value encoded across multiple decision variables. Eur J Neurosci. 2009;29:2061–2073. doi: 10.1111/j.1460-9568.2009.06743.x.

- Lauwereyns J, Takikawa Y, Kawagoe R, Kobayashi S, Koizumi M, Coe B, Sakagami M, Hikosaka O. Feature-based anticipation of cues that predict reward in monkey caudate nucleus. Neuron. 2002a;33:463–473. doi: 10.1016/s0896-6273(02)00571-8.

- Lauwereyns J, Watanabe K, Coe B, Hikosaka O. A neural correlate of response bias in monkey caudate nucleus. Nature. 2002b;418:413–417. doi: 10.1038/nature00892.

- Lavin A, Nogueira L, Lapish CC, Wightman RM, Phillips PE, Seamans JK. Mesocortical dopamine neurons operate in distinct temporal domains using multimodal signaling. J Neurosci. 2005;25:5013–5023. doi: 10.1523/JNEUROSCI.0557-05.2005.

- Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- Marsden CD. The mysterious motor function of the basal ganglia: the Robert Wartenberg Lecture. Neurology. 1982;32:514–539. doi: 10.1212/wnl.32.5.514.

- Marsden CD, Obeso JA. The functions of the basal ganglia and the paradox of stereotaxic surgery in Parkinson’s disease. Brain. 1994;117(Pt 4):877–897. doi: 10.1093/brain/117.4.877.

- Matsumoto M, Hikosaka O. Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature. 2009;459:837–841. doi: 10.1038/nature08028.

- Matsumoto N, Hanakawa T, Maki S, Graybiel AM, Kimura M. Role of nigrostriatal dopamine system in learning to perform sequential motor tasks in a predictive manner. J Neurophysiol. 1999;82:978–998. doi: 10.1152/jn.1999.82.2.978.

- Matsumoto N, Minamimoto T, Graybiel AM, Kimura M. Neurons in the thalamic CM-Pf complex supply striatal neurons with information about behaviorally significant sensory events. J Neurophysiol. 2001;85:960–976. doi: 10.1152/jn.2001.85.2.960.

- Middleton FA, Strick PL. Basal ganglia and cerebellar loops: motor and cognitive circuits. Brain Res Brain Res Rev. 2000;31:236–250. doi: 10.1016/s0165-0173(99)00040-5.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Minamimoto T, Hori Y, Kimura M. Complementary process to response bias in the centromedian nucleus of the thalamus. Science. 2005;308:1798–1801. doi: 10.1126/science.1109154.

- Mink JW. The basal ganglia: focused selection and inhibition of competing motor programs. Prog Neurobiol. 1996;50:381–425. doi: 10.1016/s0301-0082(96)00042-1.

- Nakamura K, Hikosaka O. Role of dopamine in the primate caudate nucleus in reward modulation of saccades. J Neurosci. 2006;26:5360–5369. doi: 10.1523/JNEUROSCI.4853-05.2006.

- Nakano K, Hasegawa Y, Tokushige A, Nakagawa S, Kayahara T, Mizuno N. Topographical projections from the thalamus, subthalamic nucleus and pedunculopontine tegmental nucleus to the striatum in the Japanese monkey, Macaca fuscata. Brain Res. 1990;537:54–68. doi: 10.1016/0006-8993(90)90339-d.

- Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- Norman DA, Shallice T. Attention to Action: Willed and Automatic Control of Behavior. In: Davidson RJ, Schwartz GE, Shapiro D, editors. Consciousness and Self-Regulation. Advances in Research and Theory. New York: Plenum Press; 1986. pp. 1–18.

- O’Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- Parent A, Hazrati LN. Anatomical aspects of information processing in primate basal ganglia. Trends Neurosci. 1993;16:111–116. doi: 10.1016/0166-2236(93)90135-9.

- Parent A, Hazrati LN. Functional anatomy of the basal ganglia. I. The cortico-basal ganglia-thalamo-cortical loop. Brain Res Brain Res Rev. 1995;20:91–127. doi: 10.1016/0165-0173(94)00007-c.

- Parthasarathy HB, Schall JD, Graybiel AM. Distributed but convergent ordering of corticostriatal projections: analysis of the frontal eye field and the supplementary eye field in the macaque monkey. J Neurosci. 1992;12:4468–4488. doi: 10.1523/JNEUROSCI.12-11-04468.1992.

- Parush N, Tishby N, Bergman H. Dopaminergic Balance between Reward Maximization and Policy Complexity. Front Syst Neurosci. 2011;5:22. doi: 10.3389/fnsys.2011.00022.

- Pasupathy A, Miller EK. Different time courses of learning-related activity in the prefrontal cortex and striatum. Nature. 2005;433:873–876. doi: 10.1038/nature03287.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Redgrave P, Prescott TJ, Gurney K. The basal ganglia: a vertebrate solution to the selection problem? Neuroscience. 1999;89:1009–1023. doi: 10.1016/s0306-4522(98)00319-4.

- Rubchinsky LL, Kopell N, Sigvardt KA. Modeling facilitation and inhibition of competing motor programs in basal ganglia subthalamic nucleus-pallidal circuits. Proc Natl Acad Sci U S A. 2003;100:14427–14432. doi: 10.1073/pnas.2036283100.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Sarvestani IK, Lindahl M, Hellgren-Kotaleski J, Ekeberg O. The arbitration-extension hypothesis: a hierarchical interpretation of the functional organization of the Basal Ganglia. Front Syst Neurosci. 2011;5:13. doi: 10.3389/fnsys.2011.00013.

- Schultz W. Getting formal with dopamine and reward. Neuron. 2002;36:241–263. doi: 10.1016/s0896-6273(02)00967-4.

- Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- Seamans JK, Robbins TW. Dopamine modulation of the prefrontal cortex and cognitive function. In: Neve KA, editor. The Dopamine Receptors. New York: Humana Press; 2010. pp. 373–398.

- Seo M, Beigi M, Jahanshahi M, Averbeck BB. Effects of dopamine medication on sequence learning with stochastic feedback in Parkinson’s disease. Front Syst Neurosci. 2010;4:36. doi: 10.3389/fnsys.2010.00036.

- Shidara M, Aigner TG, Richmond BJ. Neuronal signals in the monkey ventral striatum related to progress through a predictable series of trials. J Neurosci. 1998;18:2613–2625. doi: 10.1523/JNEUROSCI.18-07-02613.1998.

- Simmons JM, Ravel S, Shidara M, Richmond BJ. A comparison of reward-contingent neuronal activity in monkey orbitofrontal cortex and ventral striatum: guiding actions toward rewards. Ann N Y Acad Sci. 2007;1121:376–394. doi: 10.1196/annals.1401.028.

- Sul JH, Jo S, Lee D, Jung MW. Role of rodent secondary motor cortex in value-based action selection. Nat Neurosci. 2011;14:1202–1208. doi: 10.1038/nn.2881.

- Surmeier DJ, Ding J, Day M, Wang Z, Shen W. D1 and D2 dopamine-receptor modulation of striatal glutamatergic signaling in striatal medium spiny neurons. Trends Neurosci. 2007;30:228–235. doi: 10.1016/j.tins.2007.03.008.

- Surmeier DJ, Song WJ, Yan Z. Coordinated expression of dopamine receptors in neostriatal medium spiny neurons. J Neurosci. 1996;16:6579–6591. doi: 10.1523/JNEUROSCI.16-20-06579.1996.

- Sutton RS, Barto AG. Reinforcement learning: an introduction. Cambridge, Mass: MIT Press; 1998.

- Turner RS, Desmurget M. Basal ganglia contributions to motor control: a vigorous tutor. Curr Opin Neurobiol. 2010;20:704–716. doi: 10.1016/j.conb.2010.08.022.

- Williams ZM, Eskandar EN. Selective enhancement of associative learning by microstimulation of the anterior caudate. Nat Neurosci. 2006;9:562–568. doi: 10.1038/nn1662.

- Zar JH. Biostatistical Analysis. Upper Saddle River: Prentice Hall; 1999.
