Abstract

Graded exams are prerequisites for admission to the medical state examination. Accordingly, the exams must be of good quality in order to allow benchmarking within the faculty and between different universities. Criteria for good quality need to be considered, namely objectivity, validity and reliability. The guidelines for the processing of exams published by the GMA are intended to help maintain those criteria. In 2008 the Department of General Medicine at the University of Munich fulfilled only 14 of 48 items. A review process, appropriate training of the staff and the introduction of the IMSm software were the main changes that helped to improve the ‘GMA-score’ to 30 fulfilled items. We regard the introduction of the IMSm system as the most forward-looking of these changes. IMSm helps to streamline the necessary workflow and improves the quality of the questions (e.g. through item analysis and the detection of cueing). Overall, we evaluate the steps to improve the exam process as very positive. We plan to engage co-workers outside the department to assist in the various review processes in the future. Furthermore, we think it might be of value to get into contact with other departments and faculties to benefit from each other’s question pools.

Keywords: Tests, General Medicine, Item Management System

Abstract (translated from the German version)

Graded exams are a prerequisite for admission to the second part of the medical licensing examination (Staatsexamen). This creates the need to design high-quality exams that allow achievement to be compared among the graduates of one faculty and also between faculties. The core quality criteria of objectivity, validity and reliability have to be observed. The criteria listed in the GMA guideline catalogue are intended to safeguard the quality of exams. Judged by the 2008 MC exam, the exam concept of the Department of General Medicine at LMU met only 14 of 48 possible criteria. A firmly scheduled review process, continuous training of the person responsible for the exam and the introduction of the exam management software IMSm were the key changes. Today, 30 of the GMA criteria are met. The introduction of IMSm in particular was regarded as pointing the way forward. The review elements built into the system make it easier to establish these processes and raise the quality of the questions (item analysis, detection of cueing and other problems during the review process). The current improvement measures are to be rated very positively in terms of quality assurance of the exams. It appears desirable to seek contact with those responsible for exams at other faculties in order to benefit from linking the respective question pools.

Background

With the implementation of a new licensing regulation for doctors in 2002 [1], the faculties have to organise graded exams to benchmark students’ course achievements. These course achievements are prerequisites for admission to the medical state examination and, since the new regulation of 2002, are printed on the certificate. Student grades can be important in applications for positions and scholarships. Recent studies showed that the preparation, structuring, conduct and correction of exams in Germany are often not ideal. There is room for improvement, and first attempts at improving the process have been noted [2], [3]. We want to present the exam process in the Department of General Medicine and evaluate its progress.

Introduction

Given the new importance of faculty-internal exams, the faculties had to find exam types and modalities that ensure meaningful and comparable grades.

In 2003 the Didaktikzentrum der Eidgenössischen Technischen Hochschule Zürich (ETHZ) first published the „Leitfaden für das Planen, Durchführen und Auswerten von Prüfungen (…)“ [4]. This guideline was meant to be a helpful checklist and includes quality criteria that serve as indicators of the quality of an exam. The main criteria are objectivity, reliability and validity, but the guideline also includes factors such as comparability, scaling and economy.

On the basis of the Swiss guideline, the GMA (Gesellschaft für Medizinische Ausbildung) and the Kompetenzzentrum Prüfungen Baden-Württemberg published the “Leitlinie für Fakultäts-interne Leistungsnachweise während des Medizinstudiums” in 2008 [5]. The authors formulate suggestions for the design of exams that balance the high requirements against the available resources, namely the number of staff required and the technical support that exists for preparing the exam. The catalogue has 48 items that are grouped into seven categories:

General structural prerequisites

Exam design

Organisational preparations for the realisation of the exam

Realisation of the exam

Correction of the exam and documentation

Publishing the results

Review process

The items are intended to help organise exams effectively and relevantly. For example, the appropriate exam design and a consensus on how to correct and grade should be settled before the exam. The catalogue emphasises the designation of a person responsible for the exam, appropriate training and an economical use of technical instruments for the correction and the review process.

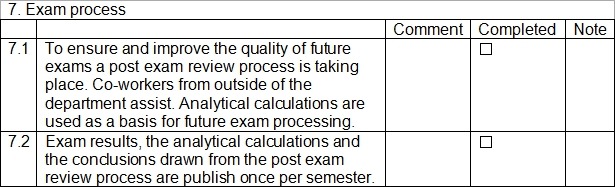

The review process encompasses a careful revision of the exam, possibly in collaboration with faculty outside the department, before and after the actual exam takes place. It includes the calculation of the quality criteria (see Figure 1).

Figure 1. GMA guidelines, Chapter 7: Review process [5].

To evaluate the progress and changes that have been made in the field of exams since the implementation of the new licensing regulation for doctors, the Deutsche Fakultätentag initiated a survey in 2007. The German faculties were asked to describe the organisation, exam processing and different review processes at their university. Professor Resch presented the first results of this survey at the Fakultätentag in 2008 [2]. One of the main results was that, although most of the exams were designed as multiple choice exams, fewer than half of the faculties used existing central infrastructures for preparation and correction. A more detailed analysis of the data was published by Möltner et al. two years later [3]. Only 40% of the universities used organised review processes. The authors pointed out that the efforts made in the field of exams were measurable but that the results were very heterogeneous.

General medicine at the University of Munich

Since the implementation of “MeCuM”, the new curriculum for medical students in Munich, the Department of General Medicine has been involved in medical education with two exams [6], [https://e-learning.mecum-online.de/Informationambu_Allg_L6_L7.PDF] and a variety of classes.

During the clinical part of the medical studies the department offers classes and lectures covering outpatient care [https://e-learning.mecum-online.de/Informationambu_Allg_L6_L7.PDF]. The course achievements are benchmarked with a multiple choice (MC) exam of 40 questions that takes place at the end of year four. This exam is the one evaluated in the following.

For many years each lecturer was responsible for creating the multiple choice (MC) questions covering their topic. The final exam consisted of only one question per lecture. Automatic versioning or export for printing was not available. The exams were corrected by a large number of assistants so that the results could be published within an adequate time. Over time a confusing and unordered collection of files accumulated. Neither a statistical analysis nor a review process was ever planned.

When the exam was described with the items published by the GMA [5], the department fulfilled only 14 of 48 possible items. An item counted as fulfilled if the exam matched the criteria described in detail for it.

Because of the new relevance that faculty exams had gained, the Department of General Medicine concluded that an evaluation of the existing exam concept was necessary.

Methods

Steps to improve the exam concept

Introduction of IMSm

To make the process more efficient, the department worked hard on introducing a software package that accompanies the whole exam process, the ItemManagementSystem für Medizin (IMSm) [7]. The software had already been tested by universities in Berlin, Heidelberg and Munich and was used by the Department of General Medicine for the first time in 2008.

The software was developed as a joint project by the universities mentioned above and ensures a high degree of standardisation and quality management [7].

How IMSm works

Users can log into a web interface that gives full control over all steps of the exam. A well-thought-out user management process allows the integration of co-workers at each of the steps individually. IMSm facilitates the co-operation between departments or faculties by giving the parties the possibility to exchange their pool of questions.

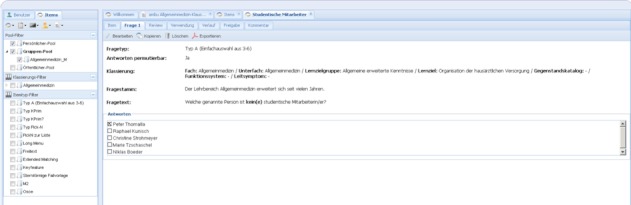

The system supports not only multiple choice questions (see Figure 2) but also cases that consist of multiple linked questions. The current version even supports OSCE exams and can thereby be used in almost every case.

Figure 2. Creation of a new multiple choice question.

Users can tag questions with categories, e.g. educational objectives, so as not to lose track of a growing database. The educational objectives are deliberately general, but the software supports department-specific objectives as well.

To generate a new exam the user only has to choose questions from the database. Questions can be edited or exchanged at every step of the creation. The software also allows the user to add, for example, a faculty logo, versioning or page numbering.
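The idea of a tagged question pool from which exams are assembled can be illustrated with a small sketch. It is not IMSm code: the class `Item`, the functions `select_items` and `make_versions`, the tags and the sample questions are all hypothetical, and we assume here that "versioning" means producing several orderings of the same questions.

```python
import random
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Item:
    """One exam question with its tags (hypothetical structure, not the IMSm schema)."""
    item_id: str
    stem: str
    tags: frozenset = field(default_factory=frozenset)


def select_items(pool, tag, count):
    """Return the first `count` items in the pool that carry the given tag."""
    matching = [item for item in pool if tag in item.tags]
    if len(matching) < count:
        raise ValueError(f"only {len(matching)} items tagged {tag!r}, need {count}")
    return matching[:count]


def make_versions(items, n_versions, seed=0):
    """Create exam versions that contain the same items in different orders."""
    rng = random.Random(seed)  # fixed seed so the versions are reproducible
    versions = []
    for _ in range(n_versions):
        order = list(items)
        rng.shuffle(order)
        versions.append(order)
    return versions


# Invented sample pool for illustration only.
pool = [
    Item("Q1", "Sample question on hypertension", frozenset({"cardiovascular"})),
    Item("Q2", "Sample question on vaccination", frozenset({"prevention"})),
    Item("Q3", "Sample question on chest pain", frozenset({"cardiovascular"})),
    Item("Q4", "Sample question on heart failure", frozenset({"cardiovascular"})),
]

exam_items = select_items(pool, "cardiovascular", 3)
versions = make_versions(exam_items, n_versions=2)
for number, version in enumerate(versions, start=1):
    print(f"Version {number}:", [item.item_id for item in version])
```

Keeping the tags on the item itself, rather than in separate files per lecturer, is what makes such a selection by educational objective a single filter step.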

Technical improvements

Introducing IMSm as a tool to facilitate exam preparation, and thereby moving from file-based maintenance to a database-backed web interface, was not the only change. IMSm can also read the answer sheets after they have been scanned.

The answer sheets are scanned centrally to ensure fast and accurate processing and publication of the results. The software also uses the scanned data to calculate statistical values. This is important for future exams, as values such as the discriminatory power can be used to improve the quality of the questions.
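To illustrate what such statistical values can look like, the following minimal sketch computes the difficulty of each question and one common measure of discriminatory power, the corrected item-total correlation. How IMSm calculates these values is not described here, so the definitions, the function names and the small response matrix are assumptions made for illustration only.

```python
from statistics import mean, pstdev


def item_difficulty(item_scores):
    """Proportion of examinees who answered the item correctly (0 to 1)."""
    return mean(item_scores)


def item_discrimination(item_scores, total_scores):
    """Corrected item-total correlation.

    Pearson correlation between the item score and the total score of the
    remaining items, so that the item does not correlate with itself.
    """
    rest = [total - item for item, total in zip(item_scores, total_scores)]
    sd_item, sd_rest = pstdev(item_scores), pstdev(rest)
    if sd_item == 0 or sd_rest == 0:
        return 0.0  # no variation, correlation is undefined; report 0
    mean_item, mean_rest = mean(item_scores), mean(rest)
    covariance = mean(
        (x - mean_item) * (y - mean_rest) for x, y in zip(item_scores, rest)
    )
    return covariance / (sd_item * sd_rest)


# Invented data: rows are students, columns are questions (1 = correct).
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 0],
]
totals = [sum(row) for row in responses]

for j in range(len(responses[0])):
    column = [row[j] for row in responses]
    print(
        f"question {j + 1}: difficulty {item_difficulty(column):.2f}, "
        f"discrimination {item_discrimination(column, totals):.2f}"
    )
```

A question with a negative discrimination value, such as the last one in this invented example, is answered correctly mainly by students with low overall scores and would be a candidate for revision in the post-exam review.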

Structural steps

The department tries to improve communication with the students by publishing the exact date, place and time of the exam as well as a detailed written catalogue of the topics that the exam will cover. To ensure quality, the co-workers responsible for the questions meet regularly to discuss the questions and their possible answers. Questions must fulfil criteria regarding their content and form. This review happens not only before but also after the exam. The department aims to reach a reliability (Cronbach's alpha) of 0.8, which is not always easy because of the small number of questions.
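For readers unfamiliar with the coefficient, Cronbach's alpha can be computed from the scored answers as k/(k-1) multiplied by (1 minus the sum of the item variances divided by the variance of the total scores), where k is the number of questions. The following minimal sketch implements this standard formula; the function name and the tiny response matrix are invented for illustration and do not come from our exam data.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a matrix with one row per student and one column per item."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("at least two items are required")
    # Variance of each item across students.
    item_variances = [pvariance([row[j] for row in responses]) for j in range(k)]
    # Variance of the students' total scores.
    total_variance = pvariance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Invented data: every student answers all three items alike,
# so the items are perfectly consistent with one another.
consistent = [
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
]
print(round(cronbach_alpha(consistent), 2))
```

The factor k/(k-1) and the ratio of variances both depend on the number of items, which is why short exams tend to yield lower values.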

Results

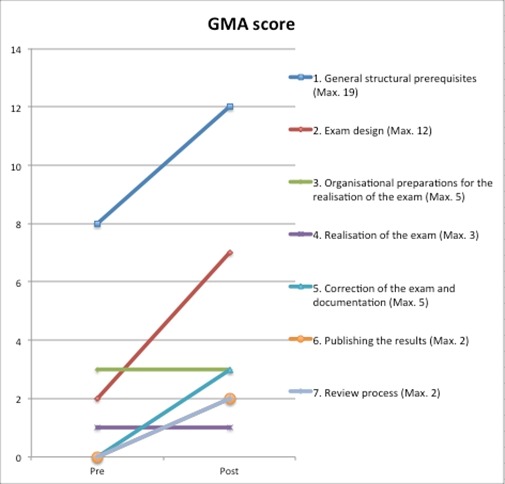

The steps undertaken to improve the exam concept of the Department of General Medicine at the University of Munich led to a substantial increase in the number of fulfilled GMA criteria (see Figure 3).

Figure 3. Fulfilled items before and after the introduction of IMSm.

The increase in fulfilled items in the category “general structural prerequisites” results mainly from the following: the introduction of a catalogue of educational objectives, formal improvements of the general process (e.g. structured seating plan and grouping), the introduction of a procedure for dealing with flawed questions, and the regular announcement of dates on which students can inspect their exams.

In the category “exam design” we achieved the greatest improvement through the introduction of a pre-review process and the use of its results, a more economical use of existing infrastructure by delegating tasks to the central examining section, and the introduction of a binding correction scheme. The overall reliability (Cronbach's alpha) was calculated to be 0.65 and did not change very much.

Figure 3 shows the positive trend towards improvements in the categories “organisational preparations for the realisation of the exam” and “realisation of the exam”. In each of these categories two items remain unimproved.

In the category “correction of the exam and documentation” we have improved on three items. These items concern the statistical analysis carried out before the results are published. In addition, a protocol is now kept that records any change made during correction.

With the introduced software and the technical support we were able to speed up the correction of the exam and are now able to publish the results within two weeks at most. As mentioned before, the students now have the chance to inspect their exams afterwards.

Within the category “review process” both items are fulfilled: a documented session reviews the exam afterwards, and any relevant issue is forwarded almost immediately to the person who submitted the question concerned.
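The gap between the observed reliability of 0.65 and the target of 0.8 can be put into perspective with the Spearman-Brown formula, a standard psychometric result that we did not use in our evaluation and add here only as an illustration. It assumes that additional questions are of comparable quality to the existing ones; the function names are our own.

```python
import math


def spearman_brown(reliability, factor):
    """Predicted reliability when the test length is multiplied by `factor`."""
    return factor * reliability / (1 + (factor - 1) * reliability)


def lengthening_factor(reliability_now, reliability_target):
    """Factor by which the test must be lengthened to reach the target reliability."""
    return (
        reliability_target * (1 - reliability_now)
        / (reliability_now * (1 - reliability_target))
    )


current_items = 40      # length of the MC exam
current_alpha = 0.65    # reliability reported above
target_alpha = 0.8      # reliability the department aims for

factor = lengthening_factor(current_alpha, target_alpha)
items_needed = math.ceil(current_items * factor)

print(f"lengthening factor: {factor:.2f}")
print(f"questions needed:   {items_needed}")
```

Under these assumptions the exam would have to be a little more than twice as long, which illustrates why the target is hard to reach with 40 questions and why improving the quality of individual questions through review is the more practical route.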

Discussion

The implementation of a new licensing regulation for doctors in 2002 has had a great impact on the relevance of exams within the faculty. Since 2002 the faculties have had to organise graded exams to benchmark students’ course achievements. The grades can be important in applications for positions or scholarships. Accordingly, the exams must be of good quality in order to allow benchmarking within the faculty and between different universities.

To assist in the creation of higher-quality exams, the Didaktikzentrum der ETHZ, followed by the Ausschuss Prüfungen der GMA and the Kompetenzzentrum Prüfungen Baden-Württemberg, published guidelines and quality criteria for the whole process. The main criteria for good quality were objectivity, reliability and validity. Emphasis was placed on transparent communication, a review process that includes reviewers from outside the department, and statistical analysis. The published guideline consists of 48 items that aim to improve the quality and design of current and future exams effectively and relevantly.

During internal discussions about possible steps to improve the exam process of our department we oriented ourselves strongly on the GMA guidelines. The main changes were the designation of one co-worker who is responsible for the entire process and the definition of educational objectives. We expect that the new review processes will safeguard quality.

Today, the department fulfils 30 out of the 48 possible items (‘GMA-score’, see Figure 3).

In the categories “general structural prerequisites” and “exam design” we focused on the implementation of a catalogue of educational objectives. This catalogue can be seen as a basis for the creation of questions, but is also helpful for the students as the lectures are geared to it.

We regard the introduction of the IMSm system as the most forward-looking step. The possibility to use technical support, such as reading the answer sheets automatically, takes a burden off the small department, whose exam work rests mainly on one 40% part-time position. The technical support allows automatic correction and the calculation of statistical values almost instantaneously. IMSm helps to streamline the necessary workflow because its well-thought-out user management allows co-workers to be integrated at each individual step, especially in the review process (e.g. detection of cueing).

There were no measurable improvements in the categories “organisational preparations for the realisation of the exam” and “realisation of the exam”. This is largely because the faculty automatically registers every student for the exam. Only a student who decides not to take part must contact the faculty.

In the future we will instruct the invigilators to document any attempts at cheating, and the department will work on a general procedure for dealing with such cases.

Overall, we evaluate the steps to improve the exam process as very positive. It has to be noted that the items used to calculate the ‘GMA-score’ do not cover all aspects of an exam, e.g. they do not cover the question of how satisfied the students were with the process. A survey on this question has not yet been performed. The very positive trends toward a better system are an encouraging sign that the process has improved.

We plan to engage co-workers outside the department to assist in the various review processes in the future. Furthermore we think it might be of value to get into contact with other departments and faculties to benefit from each other’s question pools.

Competing interests

The authors declare that they have no competing interests.

References

- 1. Bundesministerium für Gesundheit. Approbationsordnung für Ärzte vom 27. Juni 2002. Bundesgesetzbl. 2002;1:2405–2435.

- 2. Resch F. Universitäre Prüfungen im Licht der neuen ÄAppO. Tagungsband des MFT. Berlin: Medizinischer Fakultätentag; 2008.

- 3. Möltner A, Duelli R, Resch F, Schultz JH, Jünger J. Fakultätsinterne Prüfungen an den deutschen medizinischen Fakultäten. GMS Z Med Ausbild. 2010;27(3):Doc44. doi: 10.3205/zma000681. Available from: http://dx.doi.org/10.3205/zma000681.

- 4. Eugster B, Lutz L. Leitfaden für das Planen, Durchführen und Auswerten von Prüfungen an der ETHZ. Zürich: Didaktikzentrum Eidgenössische Technische Hochschule Zürich; 2004.

- 5. Gesellschaft für Medizinische Ausbildung, Kompetenzzentrum Prüfungen Baden-Württemberg, Fischer MR. Leitlinie für Fakultäts-interne Leistungsnachweise während des Medizinstudiums: Ein Positionspapier des GMA-Ausschusses Prüfungen und des Kompetenzzentrums Prüfungen Baden-Württemberg. GMS Z Med Ausbild. 2008;25(1):Doc74. Available from: http://www.egms.de/static/de/journals/zma/2008-25/zma000558.shtml.

- 6. Schelling J, Boeder N, Schelling U, Oberprieler G. Evaluation des "Blockpraktikums Allgemeinmedizin". Überblick, Auswertung und Rückschlüsse an der LMU. Z Allg Med. 2010;86:461–465.

- 7. Brass K, Hochlehnert A, Jünger J, Fischer MR, Holzer M. Studiumbegleitende Prüfungen mit einem System: ItemManagementSystem für die Medizin. GMS Z Med Ausbild. 2008;25(1):Doc37. Available from: http://www.egms.de/static/de/journals/zma/2008-25/zma000521.shtml.