Abstract

Aims: Different approaches to performance-oriented allocation of resources according to teaching quality are currently being discussed within German medical schools. The implementation of these programmes is impeded by a lack of valid criteria to measure teaching quality. An assessment of teaching quality should include structural and procedural aspects but focus on the learning outcome itself. The aim of this study was to implement a novel, outcome-based evaluation tool within the clinical phase of a medical curriculum and to compare its results with those of traditional evaluation methods.

Methods: Student self-assessments before and after completion of a teaching module were used to compute performance gains for specific learning objectives. Mean performance gains in each module were compared to student expectations before the module and data derived from a traditional evaluation tool using overall course ratings at the end of the module.

Results: A ranking of the 21 modules according to computed performance gains yielded markedly different results from module rankings based on overall course ratings. There was no significant correlation between performance gain and overall ratings. However, the latter were significantly correlated with student expectations before entering the module as well as with structural and procedural parameters (Pearson’s r 0.7-0.9).

Conclusion: Performance gain computed from comparative self-assessments adds an important new dimension to course evaluation in medical education. In contrast to overall course ratings, the novel tool is less heavily confounded by construct-irrelevant factors. Thus, it appears to be more appropriate than overall course ratings in determining teaching quality and developing algorithms to guide performance-oriented resource allocation in medical education.

Keywords: evaluation, self-assessment, performance, learning objective, congruence, clinical studies

Abstract

Aims: Different concepts for performance-oriented resource allocation in teaching (LOM, leistungsorientierte Mittelvergabe) are currently being discussed at German medical schools. Their implementation sometimes fails because valid criteria for assessing teaching quality are lacking. In addition to the structure and processes of teaching, the outcome of teaching should be at the centre of quality assessment. The aims of this study were to pilot a new, learning-objective-based evaluation system in the clinical phase of undergraduate medical education and to compare its results with data from a traditional evaluation procedure.

Methods: Using a newly developed formula, the learning-objective-based percentage learning success was calculated from student self-assessments at the beginning and end of each teaching module. Mean learning success per module was related to traditional evaluation parameters, in particular to overall ratings.

Results: Learning success calculated from comparative self-assessments and overall ratings produced markedly different rankings of the 21 clinical modules. There was no statistically significant correlation between learning success and overall ratings. However, overall ratings correlated strongly with student expectations before the start of the module and with structural and procedural parameters of teaching (Pearson’s r between 0.7 and 0.9).

Conclusion: Measuring learning gain by means of comparative student self-assessments can add an important dimension to traditional evaluation. Unlike overall student ratings, the new instrument is based on learning objectives and less dependent on the influence of construct-irrelevant parameters. With regard to developing an LOM algorithm, the new instrument is well suited to assessing teaching quality.

Introduction

Following recommendations by the German Council of Science and Humanities [1], half of all German medical schools allocated internal resources based on teaching performance in 2009. Such performance measures are also increasingly used to distribute funds among medical schools within German federal states. In North Rhine-Westphalia, the indicators used for this purpose can be attributed to three levels [2]: structural, procedural and outcome quality of teaching. When introducing their algorithm, medical school deans in North Rhine-Westphalia acknowledged the importance of structures (resources, time-tables) and processes (instructional format, examinations) but also suggested including global performance indicators such as student results in high-stakes examinations, duration of study and drop-out rates as measures of study outcome. Within the clinical phase of undergraduate medical education, however, these parameters cannot be broken down by clinical specialty and are thus of little use in guiding performance-dependent resource allocation within a particular medical school. Consequently, there is a need for quality indicators referring to structures, processes and teaching outcome that can be used to rank courses or specialties.

A survey among German medical schools carried out in 2009 showed that many schools used evaluation data (i.e. student ratings) to guide internal fund distribution [3]. Traditional evaluation forms are predominantly made up of questions regarding structural and organisational aspects of teaching as well as global ratings on six-point scales resembling those used in German schools (with 1 being the best mark). While these instruments likely allow some appraisal of structures and processes, the extent to which they actually measure outcome quality is unknown.

The definition of desired outcomes in undergraduate medical education is a matter of debate; however, it is reasonable to suggest student performance gain as a possible primary outcome variable. Ideally, an evaluation tool would provide some measure of performance gain. Such a tool has been developed at Göttingen Medical School, and its reproducibility and criterion validity have recently been established [4]. By introducing ‘learning success’ as an outcome variable, it adds to traditional evaluation methods focusing on structural and procedural aspects of teaching quality.

To establish the discriminant validity of the novel evaluation tool, this study aimed to answer the following research questions:

Is there a difference between course rankings derived from performance gain data and traditional evaluation parameters? We hypothesized that the two approaches cover different aspects of teaching. As a consequence, rankings based on structural/procedural criteria should be considerably different from rankings based on performance gain.

Is there a correlation between student expectations towards specific courses, traditional evaluation parameters obtained at the end of courses and performance gain data? We hypothesized that traditional evaluation parameters would be highly correlated with each other. Since structural and procedural aspects considerably affect teaching quality, we expected moderate correlations between the traditional and the novel evaluation tool.

Methods

Clinical curriculum and evaluation tools at Göttingen Medical School

The three-year clinical curriculum at our institution has a modular structure comprising 21 modules that last two to seven weeks each. The first clinical year covers basic practical skills, infectiology and pharmacotherapy, followed by 18 months of systematic teaching on the diagnosis and treatment of specific diseases. The final 6 months focus on differential diagnosis.

-

1.

Traditional evaluation tool: Students complete online evaluation forms (EvaSys®, Electric Paper, Lüneburg, Germany) at the end of each module. Ratings on organisational and structural aspects of teaching are obtained on six-point scales. The following statements are used for these ratings:

-

1.1.

“The implementation of interdisciplinary teaching was well done in this module.” (Interdisciplinarity)

-

1.2.

“During this module, self-directed learning was promoted.” (Self-directed learning)

-

1.3.

“Regarding my future professional life, I perceive my performance gain in this module as high.” (Subjective performance gain)

-

1.4.

“I was very satisfied with the basic structure of this module (design, instructional formats, time-tables).” (Structure)

-

1.5.

“This module should be continued unchanged.” (Continuation)

-

1.1.

-

2.

The final item of this part of the questionnaire read “Please provide an overall school mark rating of the module.”

-

3.

In order to assess correlations between student expectations before a module and their ratings at the end of a module, an additional online survey at the beginning of each module was set up specifically for this study. In this survey, students were asked to rate the following statements on a six-point scale:

-

3.1.

“I believe that this module is important for my future professional life.” (Importance)

-

3.2.

“I am looking forward to this module.” (Anticipation)

-

3.3.

“The module has a good reputation with my more advanced fellow students.” (Reputation)

-

3.1.

-

4.

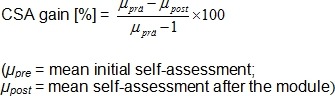

Performance gain evaluation using comparative self-assessments (CSA): The novel tool used in this study calculates performance gain for specific learning objectives on the basis of repeated student self-assessments. At the beginning of each module, students are asked in an online survey to self-rate their performance levels regarding specific learning objectives (e.g. “I can interpret an electrocardiogram.”) on a six-point scale anchored by “fully agree” (1) and “completely disagree” (6). Self-ratings were again obtained at the end of each module. The percent CSA gain for a specific learning objective was calculated by dividing the difference of mean values (pre-post) by the corrected mean of initial self-ratings across student cohorts according to the following formula (see figure 1 (Fig. 1)):

Figure 1. CSA gain [%].
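Written out, the CSA gain formula depicted in Figure 1 can be sketched as follows. This reconstruction assumes that the “corrected” initial mean is the pre-module mean minus 1, the best possible rating on the scale, so that the denominator equals the largest improvement still attainable; $\mu_{\text{pre}}$ and $\mu_{\text{post}}$ are illustrative symbols for the cohort mean self-ratings on one learning objective before and after the module:

$$
\text{CSA gain}\,[\%] = \frac{\mu_{\text{pre}} - \mu_{\text{post}}}{\mu_{\text{pre}} - 1} \times 100
$$

Under this assumption, a value of 100% would mean that the cohort mean moved all the way to the best rating (1), and 0% would mean no change between the two surveys.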

The aggregated CSA gain of a module was calculated as the mean of the CSA gains obtained for 15 specific learning objectives, which were derived from the Göttingen Catalogue of Specific Learning Objectives (http://www.med.uni-goettingen.de/de/media/G1-2_lehre/lernzielkatalog.pdf). The major difference between the evaluation item “Regarding my future professional life, I perceive my performance gain in this module as high.” and CSA gain is that subjective performance gain was obtained at a single time-point and was global in nature, whereas CSA gain was calculated from ratings obtained at two time-points and related to specific learning objectives. A recently published study demonstrated a good correlation between CSA gain and an increase in objective performance measures [4].
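The per-objective calculation and the module-level aggregation described above can be illustrated with a short Python sketch. It is not the study's implementation: it assumes that the correction in the denominator is subtraction of the scale optimum (1), it treats pre- and post-module ratings as unpaired cohort samples (consistent with anonymous data collection), and the function names and example ratings are hypothetical.

```python
from statistics import mean

SCALE_OPTIMUM = 1  # "fully agree" on the six-point scale (assumed correction term)


def csa_gain(pre_ratings, post_ratings):
    """Percent CSA gain for one learning objective (hypothetical helper).

    pre_ratings / post_ratings: cohort self-ratings on the 1-6 scale,
    collected before and after the module (not paired per student).
    """
    mu_pre = mean(pre_ratings)
    mu_post = mean(post_ratings)
    headroom = mu_pre - SCALE_OPTIMUM  # largest improvement still possible
    if headroom <= 0:
        raise ValueError("pre-module mean is already at the scale optimum")
    return (mu_pre - mu_post) / headroom * 100


def module_csa_gain(objectives):
    """Aggregated module gain: unweighted mean of per-objective CSA gains.

    objectives: iterable of (pre_ratings, post_ratings) pairs,
    one pair per learning objective.
    """
    return mean(csa_gain(pre, post) for pre, post in objectives)


# Hypothetical ratings for two learning objectives of one module
example = [([5, 5, 4, 6], [2, 3, 2, 1]), ([3, 3], [2, 2])]
print(module_csa_gain(example))  # 62.5
```

In the study, each module was aggregated over 15 learning objectives; the example uses two for brevity (gains of 75% and 50%).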

Description of the study sample

In winter term 2008/09, a total of 977 students were enrolled in clinical modules at our institution. All students were invited by e-mail to participate in online evaluations; each student automatically received three e-mails per survey containing a link to the online evaluation platform. Pre-surveys were opened three days before the beginning of a module and closed three days into the module. The same time-frame was used for post-surveys. Participation was voluntary, and all data were entered anonymously. Thus, further characterisation of the sample with regard to age and sex was not possible.

Data acquisition and analysis

Anonymous evaluation data collected during winter term 2008/09 were included in this study. When completing the post-survey, students were asked to indicate whether they had also taken part in the pre-survey. Pre-post comparisons were performed only on data from students who indicated that they had completed both the pre- and the post-survey. Since data were aggregated across student cohorts, no individual labelling of students was necessary.

In order to address the first research question, the 21 modules were ranked according to mean global ratings or mean CSA gain values (aggregated from 15 specific learning objective gains). The second research question was addressed by calculating correlations between mean values of traditional evaluation items and aggregated CSA gain per module.
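The ranking comparison for the first research question can be sketched as follows. This is an illustration under stated assumptions, not the study's procedure: overall ratings are ranked in ascending order because 1 is the best school mark, CSA gain in descending order, ties are broken by input order (the study does not state how ties were handled), and the function names and module values are hypothetical.

```python
def rank(values, higher_is_better):
    """Return 1-based ranks (1 = best); ties keep their input order."""
    # sorted() is stable, also with reverse=True, so equal values keep input order
    order = sorted(range(len(values)), key=lambda i: values[i],
                   reverse=higher_is_better)
    ranks = [0] * len(values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def rank_shifts(overall_ratings, csa_gains):
    """Absolute rank difference per module between the two indicators."""
    by_rating = rank(overall_ratings, higher_is_better=False)  # school marks: 1 is best
    by_gain = rank(csa_gains, higher_is_better=True)  # larger gain is better
    return [abs(a - b) for a, b in zip(by_rating, by_gain)]


# Hypothetical data for four modules (not taken from Table 1)
ratings = [1.8, 2.2, 3.4, 2.6]
gains = [52.0, 70.0, 63.0, 48.0]
shifts = rank_shifts(ratings, gains)
print(shifts)  # [2, 1, 2, 1]
print(sum(shift >= 2 for shift in shifts))  # 2
```

The criterion reported in the Results (a difference of at least 6 ranks) corresponds to counting `shift >= 6`; the toy example uses a threshold of 2 because it contains only four modules.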

Analyses were run with SPSS® 14.0 (Illinois, USA). Kolmogorov-Smirnov tests did not indicate significant deviations from a normal distribution. Correlations are therefore reported as Pearson’s r; squared r values indicate the proportion of variance explained.
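For readers without SPSS, Pearson’s r and the proportion of variance explained (r²) can be computed directly. The sketch below is a plain-Python illustration with hypothetical function names; p-values, which require the t distribution, are omitted (SciPy’s `scipy.stats.pearsonr` returns both r and a p-value).

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def variance_explained(r):
    """Proportion of variance explained: the square of r."""
    return r * r


# Coefficient reported for overall ratings vs. CSA gain (r = -0.42)
print(f"{variance_explained(-0.42):.0%}")  # 18%
```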

Results

Response rates

Of all 977 students enrolled in clinical modules, 573 provided a total of 51,915 ratings. Response rates in specific modules ranged from 36.7% to 75.4%.

Comparison of module rankings

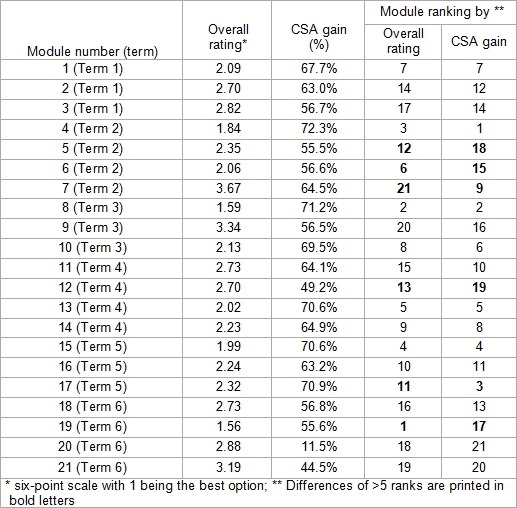

Table 1 (Tab. 1) compares overall student ratings and CSA gain values at the level of individual modules. Modules were ranked using both indicators (see the last two columns of the table). In some instances, ranks differed substantially depending on the method used: for six of the 21 modules, the two methods differed by at least 6 ranks. For example, module no. 19 received the best overall rating (1.56) despite ranking 17th on CSA gain (55.6%). Conversely, module no. 7 (“Evidence-based medicine”) received a mean overall rating of 3.67 and thus came last in the overall-rating ranking, while its mean CSA gain was 64.3% (rank 9). Notably, students had already rated this module negatively in the pre-survey (Importance: 3.64; Anticipation: 4.20; Reputation: 4.59).

Table 1. Overall ratings, mean CSA gain and rankings of all 21 clinical modules, listed in their order of appearance in the three-year clinical curriculum. CSA, comparative self-assessment. Each term lasts 6 months.

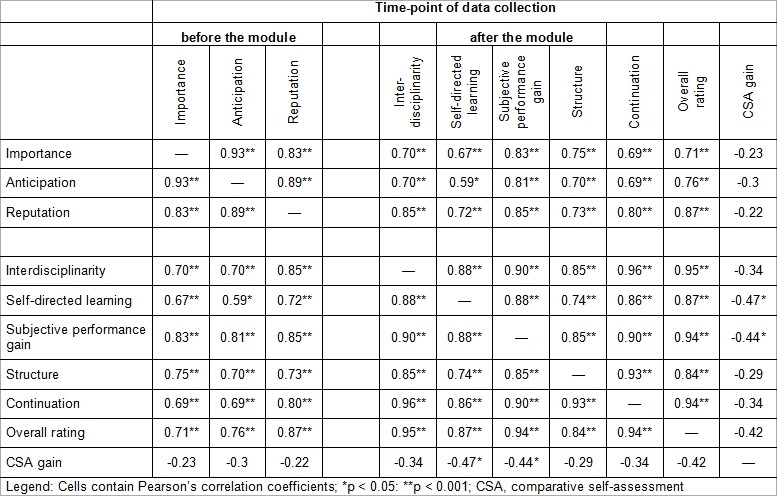

Correlations between evaluation parameters

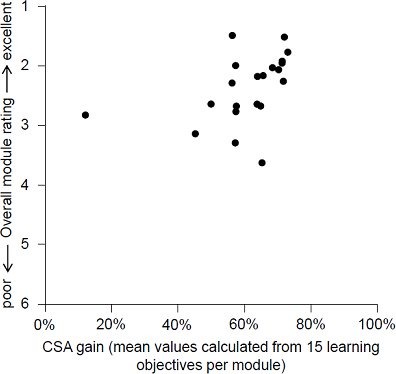

Results of correlation analyses are presented in Table 2 (Tab. 2). Student expectations before a module and ratings of organisational and structural aspects obtained after a module were strongly and positively correlated. There was also a strong correlation between overall ratings and agreement with the statement “Regarding my future professional life, I perceive my performance gain in this module as high.” (r=0.94; p<0.001). In contrast, there was no significant correlation between CSA gain and student expectations in the pre-survey. Weak correlations were observed between CSA gain and two variables included in the traditional evaluation form (maximum proportion of variance explained: 22%). CSA gain was weakly correlated with the subjective perception of having learned a lot (r=-0.44; p=0.044; proportion of variance explained 19%) and non-significantly with overall module ratings (r=-0.42; p=0.061; proportion of variance explained 18%; see Figure 2 (Fig. 2)). Because lower values denote more favourable ratings on the six-point scales, whereas higher CSA gain denotes greater learning, these negative coefficients indicate that higher CSA gain tended to coincide with more favourable ratings.

Table 2. Correlations between student ratings of the 21 modules and CSA gain (aggregated across 15 learning objectives per module).

Figure 2. Correlation between overall module ratings and CSA gain for all 21 modules of the clinical phase of medical education (r = -0.42; r² = 18%; p = 0.061). CSA, comparative self-assessment.

Discussion

Principal findings

In this study, we observed significant correlations between overall module ratings and student expectations before a module, as well as between overall ratings and retrospective ratings of curricular structure and subjective performance gain. Conversely, there was no correlation between CSA gain and student expectations, and only a weak correlation between CSA gain and subjective performance gain. Accordingly, module rankings differed depending on whether they were based on overall ratings or on CSA gain values.

Strengths and limitations

The new evaluation tool presented here facilitates a critical appraisal of performance gain by using comparative student self-assessments. It is easy to implement and addresses specific learning objectives. The data used for this study were obtained as part of our evaluation routine and comprised a large number of single ratings provided by over 500 students.

The validity of single, one-off self-assessments has repeatedly been criticised in the past [5], [6], as their accuracy can be influenced by factors that are irrelevant to the construct examined. However, Colthart et al. [7] demonstrated that the ability to self-assess can be improved by using well-defined criteria, anchored scales and feedback. Accordingly, using specific learning objectives [8] for self-assessments is a prerequisite for the new tool to function. The potential impact of individual characteristics on CSA gain values is reduced by repeatedly collecting self-assessments from the same student group, since a stable tendency to over- or underrate one’s own performance should affect pre- and post-module ratings alike.

Relation to published research and significance of findings

Teaching quality is a multi-dimensional construct including several parameters related to the process, structure, content and outcome of teaching [9]. Evaluation data provided by students contribute to the critical appraisal of teaching quality within medical schools. However, subjective ratings are influenced by various variables. As a consequence, the construct underlying student evaluation data may not be readily discernible. This particularly applies to overall course ratings, which are usually captured using a simple marking system. This study demonstrates that overall module ratings are strongly correlated with student expectations before a module as well as with retrospective ratings of curricular structure and subjective performance gain (see Table 2 (Tab. 2)). There are two possible explanations for this finding, which are not necessarily mutually exclusive. First, student ratings may feed into a construct of ‘good teaching’ that includes well-structured, interdisciplinary teaching which fosters self-directed learning and produces a considerable performance gain. Second, all seemingly different parameters used in traditional evaluation forms may feed into the same, homogeneous construct within which specific aspects cannot be differentiated. In an extreme case, this would mean measuring mere student satisfaction, which, as the correlations seen in this study indicate, is strongly associated with student expectations before a module.

In fact, previous research indicates that overall ratings are influenced not only by structural and procedural aspects [10], [11], [12], [13], [14] but also by the behaviour of faculty [15], their rapport with students [16] and a lecturer’s or a course’s reputation [17]. While the professional bearing of faculty might be attributed to a construct of ‘good teaching’, interpreting overall student ratings is complicated because the extent to which such factors contribute to the composite mark is largely unknown.

In addition to addressing structural and procedural parameters, a critical appraisal of teaching should take student learning outcome into consideration [9]. At first glance, student achievement in end-of-course assessments or high-stakes examinations might appear helpful in this regard. However, it has recently been reported that the assessments at many German medical schools do not meet international quality standards [18] and thus cannot be regarded as sufficiently valid. In addition, multiple-choice questions address only one dimension of physician training (knowledge) and do not allow conclusions to be drawn about the quality of practical training. Attributing exam results to teaching quality in specific courses or even specialties can be particularly difficult in reformed curricula. Thus, internal fund redistribution based on examination results does not seem advisable.

Practical implications

A clear definition of the construct of ‘good teaching’ and proof of the validity of the tools used to assess it are prerequisites for interpreting evaluation data. Accordingly, evaluation tools that capture specific aspects of good teaching and avoid cross-correlations between different items are desirable. As correlations between overall ratings and CSA gain were either absent or weak in this study, the novel evaluation tool described here is likely to provide additional information that traditional evaluation tools would miss. It might thus be used for a valid appraisal of teaching quality.

Research agenda

We did not assess students’ definitions of performance gain in this study; this should be done in future studies, including research into teachers’ definitions of learning outcome which may be different from students’ views.

Future use of the novel evaluation tool needs to be informed by research on the number of learning objectives that must be included per module to cover the full range of aspects addressed in a module and thus produce reliable results. In addition, potential biases arising from the online data acquisition tool [19] and from differing response rates across modules, and their influence on CSA gain data, need to be studied in more detail.

The novel tool requires data collection from identical student groups at different time-points, which may raise data protection concerns, as students should be able to complete evaluation forms anonymously. To solve this problem, the pre-/post-design could be replaced by a single data collection at the end of each module, during which students would also provide retrospective ratings of their performance levels at the beginning of the module [20]. Finally, future research needs to show to what extent the novel tool can be transferred to other medical schools and curricula.

Conclusions

Overall course ratings provided by students do not address all aspects of teaching quality and are of limited use in guiding fund redistribution within medical schools. In contrast, by providing an estimate of actual performance gain, the tool described here appears to cover an important dimension of teaching quality. Its results were at most weakly correlated with traditional global ratings, supporting its discriminant validity. Therefore, CSA gain might become an integral part of algorithms that inform performance-guided resource allocation within medical schools.

Note

The authors Raupach and Schiekirka contributed equally to this manuscript.

Competing interests

The authors declare that they have no competing interests.

References

- 1. Wissenschaftsrat. Empfehlungen zur Qualitätsverbesserung von Lehre und Studium. Berlin: Wissenschaftsrat; 2008.

- 2. Herzig S, Marschall B, Nast-Kolb D, Soboll S, Rump LC, Hilgers RD. Positionspapier der nordrhein-westfälischen Studiendekane zur hochschulvergleichenden leistungsorientierten Mittelvergabe für die Lehre. GMS Z Med Ausbild. 2007;24(2):Doc109. Available from: http://www.egms.de/static/de/journals/zma/2007-24/zma000403.shtml

- 3. Müller-Hilke B. "Ruhm und Ehre" oder LOM für Lehre? - eine qualitative Analyse von Anreizverfahren für gute Lehre an Medizinischen Fakultäten in Deutschland. GMS Z Med Ausbild. 2010;27(3):Doc43. doi: 10.3205/zma000680

- 4. Raupach T, Münscher C, Beißbarth T, Burckhardt G, Pukrop T. Towards outcome-based programme evaluation: Using student comparative self-assessments to determine teaching effectiveness. Med Teach. 2011;33(8):e446–e453. doi: 10.3109/0142159X.2011.586751

- 5. Falchikov N, Boud D. Student Self-Assessment in Higher Education: A Meta-Analysis. Rev Educ Res. 1989;59(4):395–430.

- 6. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094

- 7. Colthart I, Bagnall G, Evans A, Allbutt H, Haig A, Illing J, et al. The effectiveness of self-assessment on the identification of learner needs, learner activity, and impact on clinical practice: BEME Guide no. 10. Med Teach. 2008;30(2):124–145. doi: 10.1080/01421590701881699

- 8. Harden RM. Learning outcomes as a tool to assess progression. Med Teach. 2007;29(7):678–682. doi: 10.1080/01421590701729955

- 9. Rindermann H. Lehrevaluation an Hochschulen: Schlussfolgerungen aus Forschung und Anwendung für Hochschulunterricht und seine Evaluation. Z Evaluation. 2003;(2):233–256.

- 10. Beckman TJ, Ghosh AK, Cook DA, Erwin PJ, Mandrekar JN. How reliable are assessments of clinical teaching? A review of the published instruments. J Gen Intern Med. 2004;19(9):971–977. doi: 10.1111/j.1525-1497.2004.40066.x

- 11. Kogan JR, Shea JA. Course evaluation in medical education. Teach Teach Educ. 2007;23(3):251–264. doi: 10.1016/j.tate.2006.12.020

- 12. Marsh HW. The Influence of Student, Course, and Instructor Characteristics in Evaluations of University Teaching. Am Educ Res J. 1980;17(2):219–237.

- 13. Marsh HW. Multidimensional ratings of teaching effectiveness by students from different academic settings and their relation to student/course/instructor characteristics. J Educ Psychol. 1983;75:150–166. doi: 10.1037/0022-0663.75.1.150

- 14. McKeachie W. Student ratings: the validity of use. Am Psychol. 1997;52(11):1218–1225. doi: 10.1037/0003-066X.52.11.1218

- 15. Marsh HW, Ware JE. Effects of expressiveness, content coverage, and incentive on multidimensional student rating scales: New interpretations of the Dr. Fox effect. J Educ Psychol. 1982;74(1):126–134. doi: 10.1037/0022-0663.74.1.126

- 16. Jackson DL, Teal CR, Raines SJ, Nansel TR, Force RC, Burdsal CA. The dimensions of students' perceptions of teaching effectiveness. Educ Psychol Meas. 1999;59:580–596. doi: 10.1177/00131649921970035

- 17. Griffin BW. Instructor Reputation and Student Ratings of Instruction. Contemp Educ Psychol. 2001;26(4). doi: 10.1006/ceps.2000.1075

- 18. Möltner A, Duelli R, Resch F, Schultz JH, Jünger J. Fakultätsinterne Prüfungen an den deutschen medizinischen Fakultäten. GMS Z Med Ausbild. 2010;27(3):Doc44. doi: 10.3205/zma000681

- 19. Thompson BM, Rogers JC. Exploring the learning curve in medical education: using self-assessment as a measure of learning. Acad Med. 2008;83(10 Suppl):S86–S88. doi: 10.1097/ACM.0b013e318183e5fd

- 20. Skeff KM, Stratos GA, Bergen MR. Evaluation of a Medical Faculty Development Program. Eval Health Prof. 1992;15(3):350–366. doi: 10.1177/016327879201500307