Abstract

Background

HealthWise South Africa: Life Skills for Adolescents (HW) is an evidence-based substance use and sexual risk prevention program that emphasizes the positive use of leisure time. Since 2000, this program has evolved from pilot testing through an efficacy trial involving over 7,000 youth in the Cape Town area. From 2011 through 2015, we are undertaking a new study that expands HW to all schools in the Metro South Education District.

Objective

This paper describes a research study designed in partnership with our South African collaborators that examines three factors hypothesized to affect the quality and fidelity of HW implementation: enhanced teacher training; teacher support, structure and supervision; and enhanced school environment.

Methods

Teachers and students from 56 schools in the Cape Town area will participate in this study. Teacher observations are the primary means of collecting data on factors affecting implementation quality. These factors address the practical concerns of teachers and schools related to likelihood of use and cost-effectiveness, and are hypothesized to be “active ingredients” related to high-quality program implementation in real-world settings. An innovative factorial experimental design was chosen to enable estimation of the individual effect of each of the three factors.

Results

Because this paper describes the conceptualization of our study, results are not yet available.

Conclusions

The results of this study may have both substantive and methodological implications for advancing Type 2 translational research.

Keywords: Factorial design, Implementation quality, Prevention, Translational research

Introduction: The Need for Translational Research in South Africa

Adolescent sexual behavior and substance use are both directly tied to risk of contracting HIV. With the highest rates of HIV in the world, South Africa has an urgent need for adolescent risk reduction and health promotion. It is estimated that in 2009 5.6 million people in South Africa lived with HIV, making it the largest epidemic in the world (Joint United Nations Programme on HIV/AIDS 2010). In total, about one-quarter of HIV-infected individuals in South Africa are under the age of 25, and AIDS is responsible for 71% of all deaths in the 15–49 year age group (Dorrington et al. 2006). One national study found that 67% of South African youth aged 15–24 had engaged in penetrative sex (Pettifor et al. 2004). A review of the literature by Meintjies (2010) indicated that of the 39% of youth aged 12–22 who reported ever having sex in the 2008 National Lifestyle Study, 32% reported having sex with four or more partners in their lifetime. Meintjies also reported on another study that found that 41% of Grades 8 through 11 students who were sexually active had more than one partner in the 3 months prior to the survey.

Our previous research on adolescents in the Western Cape Province of South Africa has documented the widespread use of drugs (72% reporting lifetime use of alcohol, cigarettes, dagga, or inhalants by Grade 9; Patrick et al. 2009) and engagement in sexual behavior (Palen et al. 2006, 2009; Smith et al. 2008). This research corresponds with the findings of the national 2008 South Africa Youth Risk Behavior Survey (Reddy et al. 2010) that revealed similar rates of risk behaviors throughout the country.

The prevalence of risk is even higher among Black South African adolescents (Reddy et al. 2010; Shisana et al. 2005). For example, among youth in high school, Black adolescents are more likely to have ever had sex than Coloured (mixed-race ancestry) or White adolescents and are more likely to use no method of contraception. Correspondingly, the prevalence of HIV among youth aged 15–24 is also differentially distributed among the three racial groups (Shisana et al. 2005).

Although the statistics on sexual risk and substance use among South African adolescents are alarming, the current situation in South Africa provides unique opportunities for advancing translational research in terms of addressing gaps in the literature as well as “real-world” needs. The prevention science literature is replete with research on the development and evaluation of evidence-based interventions (EBIs) and promising programs that promote health and reduce risk under controlled conditions (e.g., Conduct Problems Prevention Research Group 2010, 2007; Furr-Holden et al. 2004; Kulis et al. 2005; Kumpfer et al. 2003; Storr et al. 2002). Additionally, the US Substance Abuse and Mental Health Services Administration (SAMHSA) houses a website that provides a guide to evidence-based programs (http://www.samhsa.gov/ebpwebguide/) and the USAID AIDSTAR-One (AIDS Support and Technical Assistance Resources) houses a website on promising practices (http://www.aidstar-one.com/).

Much less research exists on the process of how EBIs are adopted and disseminated on a wide-scale, population level (Collins et al. 2011). Some have estimated that it takes 17 years for an EBI to be put into practice (Bales and Soren 2000) and that even the most successful interventions reach only 1% of the target population (Jenson 2003). Thus, the potential for EBIs to have an impact on population-wide public health has not been realized (e.g., Woolf 2008). At the core of this type of research, called Type 2 or translational research, is the need to study and identify the processes by which EBIs are effectively adopted and sustained by community practitioners (August et al. 2004).

Clearly, there is a pressing need to disseminate EBIs known to reduce sexual risk and substance use behaviors to South African schools. However, if efficacious programs under tightly controlled conditions are prematurely disseminated without understanding whether and how they can be implemented with fidelity, these programs may be ineffective in the real world (August et al. 2004).

Currently we have a unique opportunity for advancing Type 2 translational research as we take an evidence-based substance use and sexual risk prevention program, HealthWise South Africa: Life Skills for Adolescents (HW), to scale in 56 schools in the Metro South Education District (MSED) in Cape Town, South Africa. HW showed promising positive results on sexual risk behaviors and substance use (described subsequently in more detail) and is listed on the AIDSTAR-One website as a promising practice, category 3 with good evidence and high promise (http://www.aidstar-one.com/promising_practices_database/g3ps/south_africa_healthwise_program).

The purpose of this article is to describe our current study, which is funded by the US National Institute on Drug Abuse. The main focus of this research is to study factors that affect implementation fidelity and quality as the HW curriculum is taken to scale, although we will continue to assess student outcomes. Of particular note is the innovative factorial experimental design that will be used in this study. Thus, in this paper we will first describe the background of HW, followed by a description of the current study (HWStudy2). We will then explain why a factorial experimental design was chosen and describe the design in some detail. Our intent with this article is to stimulate thought and discussion about how to sustainably take an evidence-based program to scale while preserving implementation fidelity and quality in real-world contexts.

Background on the HealthWise Studies

The HW curriculum is an evidence-based substance use and sexual risk prevention program that has systematically evolved since 2000 from pilot testing through an efficacy trial involving over 7,000 youth in the Cape Town area, conducted between 2003 and 2008. The HW efficacy trial (HWStudy1) was the first school-based, multi-year experimental study conducted in South Africa to report any positive findings on substance use and sexual risk. During this process we gained much experience regarding the implementation of HW in a particular context in South Africa. Through close collaboration with teachers and school district personnel we have been able to understand the potential key components of effective program delivery in this environment.

The theoretical basis of the HW curriculum integrates ecological systems theory (e.g., Bronfenbrenner 1995), behavioral change processes, and positive youth development and focuses on free and leisure time as an important health risk/health promotion context (see Caldwell et al. 2004 and Wegner et al. 2008 for a more complete description of theory behind HW and its evolution in the South African context). The overall goals of the HW curriculum are to reduce sexual risk behavior and substance abuse, and increase positive use and experience of free and leisure time.

The curriculum consists of 12 lessons in Grade 8, followed by six lessons in Grade 9. Each lesson requires two to three class sessions of approximately 40 min. Table 1 lists the topics covered in each lesson. In each lesson a combination of methods is used to convey content: teaching factual knowledge, practicing skill development, shaping peer norms, and encouraging self-reflection. In addition, students are encouraged to learn more about and interact with the health and recreational services available in their specific communities.

Table 1.

HealthWise lessons

| Grade 8 | Grade 9 |
|---|---|
| | |

Results from HWStudy1 suggest that HW was effective in reducing both substance use and sexual risk (Smith et al. 2008). Cigarette and alcohol use, for example, were significantly lower among those students in the HWStudy1 intervention schools. In addition, fewer males initiated sexual behavior (Tibbits et al. 2009) and males were less likely to push girls into unwanted sex (Bradley et al. 2010). Importantly, from the perspective of reducing HIV risk, both males and females reported less use of substances at sexual encounters and were less likely to report having sex with someone they had recently met (Tibbits et al. 2009). There was also increased perception of condom availability, condom skills, and conversations with partners surrounding condom use (Coffman et al. 2011).

Findings also demonstrated that leisure variables (e.g., boredom and motivation) as well as type of activity participation (e.g., spending time with friends) were associated with substance use and sexual risk. For example, students reporting higher levels of intrinsic motivation and lower levels of extrinsic motivation and amotivation had the lowest odds of past month cigarette, alcohol, and dagga (marijuana) use (Caldwell et al. 2010). HW was effective in changing levels of boredom and motivation, but the strongest effects were found for the school that had the highest degree of implementation fidelity (Caldwell et al. 2010). Spending time with friends positively predicted lifetime alcohol use while participating in hobbies and music and singing activities negatively predicted lifetime alcohol use (Tibbits et al. 2009).

HW was well received by school principals, teachers, and students. As a result, MSED selected the HW curriculum for dissemination to the 56 remaining schools in the district as part of an effort to fulfill a curricular mandate labeled Life Orientation (Department of Basic Education 2011). This mandate includes four objectives, three of which are directly addressed by HW: (1) knowledge of self in order to make informed decisions regarding health (including substance use and sexual risk), (2) the acquisition of skills to respond effectively to challenges, and (3) understanding of, and participation in, activities that promote movement and physical development.

The Current Study: HWStudy2

Although an effectiveness study may have been the next logical step after conducting an efficacy trial, teachers and administrators in the MSED wanted to use HW across all schools in the district. Therefore, we opted to focus on translational issues that would affect how well HW was implemented as intended. We are taking into consideration the four criteria August et al. (2004) suggested are important when taking an efficacy trial to scale: clients, practitioners, intervention structure, and organizational climate/culture. In particular these are important considerations when examining implementation fidelity and quality.

The main goal of HWStudy2 (study period = 2010–2015) is to test three independent variables hypothesized to affect the quality and fidelity of program implementation of HW: enhanced teacher training; enhanced teacher support, structure and supervision; and enhanced school environment. Although these independent variables were theoretically derived, in order for the implementation to be cost-effective, sustainable, and easy to use, we operationalized the factors in consultation with school district personnel to address their practical concerns. As we examine these three independent variables, we consider the practitioners, intervention structure and organizational climate/culture as suggested by August et al. (2004). A secondary goal of HWStudy2 is to further evaluate the effectiveness of HW with a more diverse population than represented in the first trial, which relates to the client consideration per August et al.

An effort of this magnitude (i.e., going to scale in 56 schools) requires that we test our data collection procedures, implementation of the experimental conditions and measures. Thus, we are now in the process of conducting the pilot phase of HWStudy2 in three schools outside the MSED but of comparable socioeconomic status.

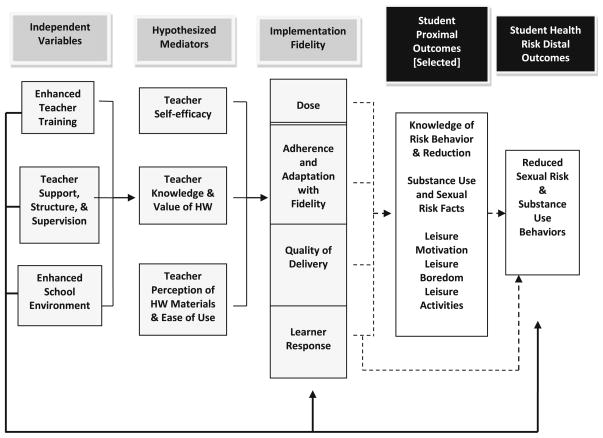

Study Model

HWStudy2 examines the processes by which each of the three independent variables may influence teacher implementation fidelity and quality (as shown in Fig. 1). We hypothesized that the three independent variables on the left would have main effects on implementation fidelity and student substance use and sexual risk outcomes, indicated by a direct, solid line from the conditions to the outcomes (at both the student and teacher levels). The relation between the independent variables (enhanced teacher training; teacher support, structure and supervision; and enhanced school environment) and the dependent variable implementation fidelity and quality is the main focus of the study. More detail on these variables is presented subsequently.

Fig. 1.

Conceptual model for primary and secondary hypotheses. Note: Interactions and control variables are not shown in the model. Primary outcomes are represented by a heavy solid line, secondary outcomes by lighter solid lines. Dashed lines represent relations that will be tested.

The hypothesized mediators of the three factors on implementation fidelity and student health distal outcomes are indicated by lighter solid lines in Fig. 1. We hypothesized that enhanced teacher training; teacher support, structure and supervision (SSS); and enhanced school environment would increase implementation fidelity by influencing teachers’ personal and collective self-efficacy, knowledge and valuing of HW, and ease of use of HW curriculum materials (e.g., Domitrovich et al. 2008; Goddard and Goddard 2001; Greenberg et al. 2005).

Interactions of the factors and control variables are not included in this model but will be examined through statistical analyses. Dashed lines represent relations that will be tested but are not a specific focus of the study since the higher fidelity/better outcomes relation has been empirically established in other studies (e.g., Durlak and DuPre 2008). These putative mediators (e.g., leisure motivation) will be addressed in post hoc analyses.

Additionally, in HWStudy2 we will assess whether the treatment factors have been delivered with fidelity. For the same reasons it is necessary to document teacher implementation fidelity and quality, it is necessary to monitor how well we implemented the treatment factors at each school. This will allow us to answer the question, “If our results are not as anticipated, can we rule out the possibility that the treatment factors were not well implemented?” Thus, we are currently pilot testing methods and measures to assess whether the treatment factors were implemented as intended (i.e., factor fidelity).

Implementation Fidelity and Quality

The central motivation for understanding factors that affect implementation fidelity and quality is that high levels of implementation fidelity and quality are associated with better outcomes. There is literature to support this contention, including our own work (Caldwell et al. 2010). Most notably, Durlak and DuPre (2008) conducted a review of the literature to examine whether implementation fidelity does influence program outcomes and determine which factors influenced implementation fidelity. Their review located five meta-analyses based on 483 studies as well as 59 individual studies. Findings from all of these studies revealed some dramatic and consistent differences in outcomes when researchers monitored and measured implementation fidelity and used these measures in their analyses. Durlak and DuPre concluded that attention to implementation fidelity could increase a program’s mean effect sizes by at least two to three times.

One of the main issues with regard to large-scale dissemination is implementation fidelity and quality of delivery, which are the main dependent variables of this study. Fidelity refers to implementation of the program as intended by the developers. Although high levels of fidelity have occurred in well-controlled studies (e.g., Mihalic et al. 2004), when programs go to scale in the “real world,” strict fidelity to implementation typically is lost (Dusenbury et al. 2003; Bumbarger and Perkins 2008). The important link between fidelity and stronger outcomes is well documented in these controlled studies (Bumbarger and Perkins 2008; Durlak and DuPre 2008; Elliott and Mihalic 2004; Fixsen et al. 2005; Greenberg et al. 2005; Rohrbach et al. 2006). Key aspects of implementation fidelity have been identified (Dane and Schneider 1998; Dusenbury et al. 2003, 2005; Rohrbach et al. 2006) and provide guidance for our work. Thus, teacher implementation fidelity and quality is being assessed in HWStudy2 by measuring the following:

Adherence to program, which refers to whether the teacher covered all core components using teaching methods suggested.

Dosage, which refers to the amount of the material covered (e.g., whether there was 100% coverage of each lesson, or whether some lessons were omitted or partially offered).

Quality of program delivery, which refers to the effectiveness of delivery of program content (e.g., whether it was delivered with enthusiasm, using an interactive style where appropriate).

Student responsiveness, which refers to the reaction of the students (e.g., whether they were engaged) and whether or not they acquired the knowledge, attitudes and skills that were addressed by the program.

Factors Hypothesized to Affect Teacher Implementation Fidelity and Quality

In collaboration with our South African partners, we identified three factors (i.e., our independent variables) that were likely to have an impact on implementation fidelity and that made conceptual and practical sense in the South African context. The first two factors, enhanced teacher training and SSS, focus on how to prepare teachers and monitor their delivery of a prevention program with fidelity. The third factor, enhanced school environment, concerns development of a school environment that enhances and supports the teachers and the HW curriculum. Three research team members will be responsible for the conduct of the factors, each assigned to only one factor (i.e., one team member handles the training factor, one handles SSS, and one handles enhanced school environment).

Enhanced Teacher Training

Training is an effective component of preparing implementers to deliver an intervention with quality and fidelity (e.g., Dusenbury et al. 2003, 2005; Ennett et al. 2003; Fagan et al. 2008; Gager and Elias 1997). Although studies examining the relationship between teacher training and fidelity have shown a positive association, the relationship is weaker than might be expected (e.g., Gottfredson and Gottfredson 2002). Thus, it is possible that in certain environments or under certain conditions, training is not the most effective method of conveying knowledge about the intervention. This is a critical question for the MSED, because teacher training is a major component of their culture, especially around topics that are sensitive.

Few studies have examined which elements of training are critical to prevention program fidelity and quality. Although there is a body of literature on professional development in general, little of it focuses on the professional development of teachers as it relates to student outcomes (Garet et al. 2001). One implementation fidelity-focused study based on interviews with teachers (as well as other key individuals) found that teachers viewed training and detailed instruction manuals as critical to implementation fidelity and quality (Dusenbury et al. 2004). Details about the aspects of training considered important, however, were lacking. Another nationally representative US study of mathematics teachers provided evidence that sustained professional development that focuses on content and provides opportunities for active learning is important (Garet et al. 2001). Garet and colleagues also found that helping teachers understand how the curriculum fits into the school’s mission (i.e., coherence) is important. Leach and Conto (1999) found that among a sample of three primary school teachers in Australia, performance feedback increased teachers’ use of techniques learned in a training workshop.

We have had considerable experience training teachers and have experimented with different forms of teacher training. Based on our experience as well as discussions with MSED, we decided that the two most practical and sustainable training methods are (a) the standard one-and-a-half day session for both 8th- and 9th-grade teachers and (b) an enhanced version (described below), which is what we recently used in HWStudy1. To date, however, the standard and enhanced training methods have not been compared as to their effect on implementation quality and fidelity. Thus, our current study will provide valuable information to help make future decisions about teacher training.

Enhanced training includes three consecutive days of training sessions prior to implementation of the curriculum in each grade. Four months after the initial training there is a two-day training session focused specifically on teaching aspects of the leisure lessons and on teaching sexuality. Important elements of the training sessions include learning delivery techniques, anticipating problems and possible solutions, learning the theory of the program and its critical components, and practice of delivery and covering content (e.g., Elliott and Mihalic 2004; Gottfredson and Gottfredson 2002; Greenberg et al. 2005). In addition, trainers provide critical, specific feedback to teachers during training (Gottfredson and Gottfredson 2002).

To assess the degree to which teacher training is delivered as intended (i.e., factor fidelity) we keep a roster of all teachers who attended the training sessions and whether they missed any part of the training. An anonymous feedback survey is administered to teachers at the end of the training, requesting their reactions to the quality of the training and the extent to which it was responsive to their needs. Trainers complete a checklist identifying those elements of the training that were covered.

Enhanced Teacher Support, Structure and Supervision

Technical assistance, teacher support or coaching on an on-going basis is important to fidelity of implementation (e.g., Fagan et al. 2008; Harshbarger et al. 2006). Research indicates that some form of supervision and feedback, even at a global level, may be important to quality of implementation (Domitrovich et al. 2008). After discussion with MSED we decided that an appropriate and sustainable path to take is to provide enhanced support to teachers.

Enhanced teacher support is aimed at monitoring and supporting the timing, frequency, duration, and mode of delivery of the HW lessons (e.g., Domitrovich et al. 2008). It is motivated by the observation that the teachers with whom we work welcome support and guidance, but need flexibility (Wegner et al. 2008). Teachers in this factor are given support as appropriate to their own school, but there are common process elements across all schools. For example, monthly goals may be an effective way for all teachers to exercise some autonomy in dealing with contingencies that arise during the school day. These goals will help teachers target the content that should be covered across the month, which may be more realistic than targeting content on a day-by-day basis. A related consideration is that HWStudy1 teachers reported a great deal of stress in having to fit in HW lessons each day, especially during particularly chaotic times. Thus, in the HWStudy2 SSS factor, weekly text messages and monthly visits from research staff provide support and encouragement to teachers and assist with problem solving as needed. Teachers in this factor are given phone numbers of support staff and encouraged to contact them (either through calling or texting) with questions or hints on program content and delivery. We also employ social networking mechanisms to provide opportunities for teachers to share experiences and provide reinforcement to each other.

To assess the degree to which SSS was delivered as intended, the research staff person assigned to this factor summarizes his interactions with teachers (e.g., number of text messages sent each week and content/topic of interactions) in a log on a monthly basis. Teachers are asked to use a brief rating scale to assess the effectiveness of enhanced support each month.

Enhanced School Environment

At the school level there are numerous things that may influence implementation fidelity. The culture or climate of the environment in which an intervention takes place can promote implementation fidelity (Bradshaw et al. 2009; Gottfredson and Gottfredson 2002; Payne et al. 2006), although it is often overlooked in the dissemination process (Adelman and Taylor 2003; Burns and Hoagwood 2005; Dusenbury et al. 2003; Greenberg et al. 2005; Rohrbach et al. 2006; Wandersman et al. 2008).

Enhancing the school environment is particularly relevant for this study because the MSED (as in all provinces of South Africa) is beginning to adopt the Health Promoting Schools Framework (HPSF), which is based on empowerment and a more comprehensive community approach to education (Department of Health 2000; National Conference on Health Promoting Schools 2006). Although we are not able to adopt a pure HPSF format, we use principles behind this framework to brand schools in this condition as a “HealthWise South Africa School.” As with SSS, each school develops a strategy to become a HW School that will best fit the local context. However, there are aspects of being a HW School that are common across all schools to assure comparability. For example, in each school a steering group of teachers and parents (or school governing board members) is formed to determine how best to promote health and well-being of students, teachers, principals, and parents using HW as an organizing framework within the broader HPSF approach. We cultivate leadership within the schools and with parents and students to promote aspects of HW on a school-wide basis. We conduct activities such as placing HW posters in school hallways and classrooms that identify and brand the school as a HW School. Teachers in HWStudy1 had asked for these types of visuals because they were proud of the program and wanted it to be more visible. Another example of an enhanced school environment activity is publishing a regular HW newsletter that describes some of the activities associated with the program and suggestions for how teachers, students and parents can become “health wise.”

To assess the degree to which the school environment is enhanced, the steering committee meets with members of the research team twice a year. At this meeting, the research staff person assigned to this factor documents all activities that occur over the semester that enhance the school environment in support of the HW curriculum.

Rationale for Choice of Innovative Factorial Design

In the current study we are keenly interested in the individual causal effects of each of the three independent variables on implementation fidelity and, ultimately, student substance use and sexual risk outcomes. In our view, the two strategies most commonly used in empirical dissemination research, namely observational research and the randomized controlled trial (RCT), would have significant shortcomings if applied to estimation of these causal effects.

In an observational study, variables hypothesized to impact the success of dissemination are allowed to vary in an uncontrolled, naturalistic manner. These variables are measured, usually longitudinally, and later their relation to intervention outcomes is assessed. This approach has the advantage of enabling examination of many different variables, and is an excellent way to generate hypotheses for further research. However, because this approach is not an RCT, it is difficult to discern the individual effects of putative causal variables on mediators or outcome variables (Shadish et al. 2002). The variables may be interrelated in complex ways and may be differentially subject to influences by unmeasured confounders. Thus, the observational approach has the disadvantage of enabling only tentative causal inference.

In an RCT, there is random assignment to experimental conditions. The conditions usually consist of a treatment arm in which several strategies to enhance and support dissemination with fidelity are implemented and a control arm in which the intervention is disseminated without the enhancement strategies. This approach enables assessment of the causal effects of the enhancement strategies as a package. However, it is not an efficient means of evaluating the effectiveness of individual enhancement strategies (Collins et al. 2005, 2007, 2009a, 2011). Many post hoc analyses of data from RCTs suffer from threats to causal inference that are similar to the ones that weaken inferences based on observational studies. Moreover, data from an RCT can be used to conduct analyses to test hypotheses about mediation effects, but it is not generally possible to address hypotheses about the effects of individual aspects of an intervention on specific mediators (Collins et al. 2011).

Recently, behavioral scientists have noticed that a factorial experiment can be an attractive alternative to an observational study or an RCT (e.g., Collins et al. 2011; Strecher et al. 2008), when the objective is to estimate the individual effects of several different aspects of an intervention. In a factorial experimental design there are two or more factors (i.e., independent variables) and the factors are crossed. This means that a set of experimental conditions is created so that across the conditions, each level of every factor appears with each level of every other factor. The classic example of this is the 2 × 2 factorial design, in which there are two factors with two levels each, resulting in four experimental conditions. Factorial experiments are not new—they go back at least to Fisher (1935)—and they have a long history in engineering and other scientific disciplines. However, to date they have seldom been applied in intervention science.
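The notion of crossing can be made concrete with a minimal sketch. The factor names `A` and `B`, the level labels, and the helper functions below are illustrative assumptions, not part of the study; the check simply encodes the stated condition that each level of every factor appears with each level of every other factor.

```python
from itertools import combinations, product

def cross(factors):
    """Build every combination of factor levels (a crossed set of conditions)."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]

def is_fully_crossed(conditions, factors):
    """True if each level of every factor co-occurs with each level of every other factor."""
    for a, b in combinations(factors, 2):
        seen = {(cond[a], cond[b]) for cond in conditions}
        if seen != set(product(factors[a], factors[b])):
            return False
    return True

# Hypothetical 2 x 2 example: two generic factors with two levels each.
factors = {"A": ["absent", "present"], "B": ["absent", "present"]}
conditions = cross(factors)

print(len(conditions))                            # 4 experimental conditions
print(is_fully_crossed(conditions, factors))      # True
print(is_fully_crossed(conditions[:-1], factors)) # False: one cell is missing
```

Dropping any one of the four cells breaks the crossing, which is why a 2 × 2 design needs all four conditions.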

For the purposes of the present study, factorial experiments offer several advantages. First, they enable estimation of the main effect of each of the three independent variables of interest (e.g., Kirk 1995). Second, unlike an RCT, a factorial design enables examination of interactions between factors. Third, a factorial experiment can be economical. Collins et al. (2009b) showed that although many behavioral scientists turn to individual experiments and single-factor (e.g., comparative treatment) approaches in order to conserve resources, in reality these designs are often much more resource-intensive and much less efficient than factorial designs. In the case of HWStudy2, in order to achieve the same level of statistical power as the factorial experiment, conducting individual experiments on each factor would have required three times as many schools, and the single-factor approach would have required twice as many (more detail about a variety of design alternatives, including an explanation of how to compute this comparison generally, can be found in Collins et al. 2009b). Fourth, because in a factorial experiment the independent variables are manipulated by the investigator and there is random assignment to experimental conditions, causal inference based on data from these experiments is usually much stronger than that based on observational studies or post hoc nonexperimental comparisons based on data from an RCT (Collins et al. 2009a; Shadish et al. 2002). Fifth, the factorial experiment enables analyses that address hypotheses about the effects of each of the three independent variables on specific mediating variables (Collins et al. 2011). For these reasons, we chose a factorial experiment for the present study.
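The school counts behind this comparison can be reproduced with a back-of-envelope calculation. The sketch below is not taken from Collins et al. (2009b). It assumes only that every design must compare 28 schools against 28 schools for each factor, as the factorial main-effect contrast does with N = 56. The function name and dictionary keys are illustrative.

```python
# Back-of-envelope school counts for three ways of studying k two-level factors.
# Assumption: each comparison needs the same number of schools per arm as the
# factorial main-effect contrast (28 vs. 28 when N = 56).

def schools_needed(k_factors: int, per_arm: int) -> dict:
    """Return the total number of schools each design needs for equal per-arm size."""
    return {
        # Every school contributes to every main effect, so one sample suffices.
        "factorial": 2 * per_arm,
        # One separate two-arm experiment per factor.
        "individual_experiments": k_factors * 2 * per_arm,
        # One shared control arm plus one treatment arm per factor.
        "single_factor": (k_factors + 1) * per_arm,
    }

counts = schools_needed(k_factors=3, per_arm=28)
ratios = {name: n / counts["factorial"] for name, n in counts.items()}
print(counts)
print(ratios)
```

Under these assumptions the individual-experiments approach needs three times as many schools as the factorial design and the single-factor approach needs twice as many, which agrees with the figures quoted above.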

Design and Method

Factorial Design

HWStudy2 is a randomized factorial experiment that manipulates the three independent variables described previously: enhanced teacher training, SSS, and enhanced school environment. Each independent variable has two levels: present and absent.

In this factorial design, school is the unit of random assignment. As Table 2 shows, there are eight experimental conditions consisting of different combinations of levels of the factors; seven schools are assigned to each condition. It is important to note that this design should not be considered an 8-arm trial in which each condition is compared in turn to a control condition. Such a design would have extremely low power with n = 7 per group. Instead, in this factorial experiment each effect is estimated based on the entire N = 56 schools. The analytic strategy compares means computed over combinations of conditions to evaluate the main effects and interactions of the factors. For example, the main effect of enhanced teacher training is assessed by comparing the mean of conditions 1–4 (28 schools) against the mean of conditions 5–8 (28 schools).
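The contrast logic can be sketched in a few lines. This is an illustration under stated assumptions, not the study's analysis code: the factor names, the `effect` function, and the condition means are hypothetical, and the means are made-up numbers rather than study data. Effects are scaled as a simple difference between the two halves of the design; other conventions divide interaction contrasts by two.

```python
from itertools import product
from statistics import fmean

FACTORS = ("training", "support", "environment")

# Conditions 1-8 in Table 2 order; +1 = component present, -1 = absent.
DESIGN = {
    number: dict(zip(FACTORS, codes))
    for number, codes in enumerate(product((1, -1), repeat=len(FACTORS)), start=1)
}

def effect(condition_means, *factors):
    """Contrast the conditions coded +1 against those coded -1.

    One factor gives a main effect; two or three factors give an interaction
    contrast, whose code is the product of the individual factor codes.
    """
    plus, minus = [], []
    for number, codes in DESIGN.items():
        sign = 1
        for name in factors:
            sign *= codes[name]
        (plus if sign > 0 else minus).append(condition_means[number])
    return fmean(plus) - fmean(minus)

# Hypothetical condition means, for illustration only (not study data).
example_means = {
    number: 0.50 + 0.10 * (codes["training"] > 0) + 0.05 * (codes["support"] > 0)
    for number, codes in DESIGN.items()
}

print(round(effect(example_means, "training"), 3))             # main effect
print(round(effect(example_means, "training", "support"), 3))  # interaction
```

Because every condition contains seven schools, averaging four condition means is the same as averaging the 28 schools on each side of the contrast, so each effect draws on all 56 schools.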

Table 2.

Experimental design. The three middle columns are the independent variables.

| Experimental condition | Enhanced teacher training | Enhanced teacher support | Enhanced school environment | No. of schools |
|---|---|---|---|---|
| 1 | Yes | Yes | Yes | 7 |
| 2 | Yes | Yes | No | 7 |
| 3 | Yes | No | Yes | 7 |
| 4 | Yes | No | No | 7 |
| 5 | No | Yes | Yes | 7 |
| 6 | No | Yes | No | 7 |
| 7 | No | No | Yes | 7 |
| 8 | No | No | No | 7 |

All 56 schools will receive basic training

One interesting difference between factorial experiments and two-arm RCTs is the proportion of experimental subjects—in this case, schools—that receive some version of a treatment. In a two-arm RCT half of the subjects are in a control group and receive essentially none of the experimental treatment (of course they will receive standard-of-care or similar, as appropriate). In contrast, in the design that we propose 7/8 of the schools receive at least one of the three elements (enhanced teacher training; enhanced SSS; and enhanced school environment). Only 1/8 of the schools receive none of these elements, and even these schools receive HW and 1.5 days of training. From the school district’s point of view this is a “better deal” than a design that would keep half of the schools in a control group. This provides an excellent basis for a sustained positive relationship between the investigators and school district personnel, which in turn helps the investigators to maintain experimental rigor.
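The proportions quoted in this paragraph follow directly from crossing three two-level factors. A minimal sketch, using illustrative variable names, counts the conditions and schools that receive at least one enhanced element:

```python
from itertools import product

SCHOOLS_PER_CONDITION = 7

# All combinations of (teacher training, teacher support, school environment).
conditions = list(product(("Yes", "No"), repeat=3))
with_any = [c for c in conditions if "Yes" in c]

share_with_any = len(with_any) / len(conditions)
schools_with_any = SCHOOLS_PER_CONDITION * len(with_any)
schools_total = SCHOOLS_PER_CONDITION * len(conditions)

print(f"{len(with_any)} of {len(conditions)} conditions ({share_with_any:.3f})")
print(f"{schools_with_any} of {schools_total} schools")
```

Only the all-"No" condition receives none of the three elements, which is why the share is 7/8 rather than the 1/2 of a two-arm trial.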

School Randomization and Planned Missingness Design

The 56 schools taking part in the study are randomized into the eight conditions using the procedure suggested by Graham et al. (1984). The assignment procedure uses principal components analysis with all available school-level variables to create a single, composite blocking variable. The 56 schools are stratified into “high” and “low” on this composite variable, and are randomly assigned to the eight conditions with the limitation that equal numbers of “high” and “low” schools are assigned to each condition. Performance of the procedure has proven to be excellent for producing pretest equivalence, even at the level of individual school-level variables involved (Graham et al. 1984).
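The assignment steps can be sketched as follows. This is not the implementation of Graham et al. (1984); it is a minimal illustration using only the Python standard library, and all function names and the input data are hypothetical. It approximates the first principal component by power iteration on the correlation matrix. Because seven schools per condition cannot be split evenly, the sketch also assumes that "equal numbers" means as close to equal as possible (three or four "high" schools per condition); the source does not say how this is handled.

```python
import random
import statistics

def composite_scores(rows, iterations=200):
    """Approximate first-principal-component score for each school."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    sds = [statistics.stdev(col) for col in columns]
    z = [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in rows]
    n, p = len(z), len(columns)
    # Correlation matrix of the standardized school-level variables.
    corr = [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(p)]
            for i in range(p)]
    # Power iteration toward the leading eigenvector (component weights).
    weights = [1.0] * p
    for _ in range(iterations):
        weights = [sum(corr[i][j] * weights[j] for j in range(p)) for i in range(p)]
        norm = sum(w * w for w in weights) ** 0.5
        weights = [w / norm for w in weights]
    return [sum(w * v for w, v in zip(weights, row)) for row in z]

def assign_conditions(rows, n_conditions=8, seed=None):
    """Median-split schools on the composite, then randomize within strata."""
    rng = random.Random(seed)
    scores = composite_scores(rows)
    ranked = sorted(range(len(rows)), key=scores.__getitem__)
    half = len(ranked) // 2
    low, high = ranked[:half], ranked[half:]
    order = list(range(1, n_conditions + 1))
    rng.shuffle(order)
    assignment = {}
    # Deal "high" schools in one condition order and "low" schools in the
    # reverse order, so that every condition ends up with the same total.
    for stratum, deal_order in ((high, order), (low, order[::-1])):
        rng.shuffle(stratum)
        for k, school in enumerate(stratum):
            assignment[school] = deal_order[k % n_conditions]
    return assignment, set(high)

# Hypothetical school-level data: 56 schools, 4 variables each.
data_rng = random.Random(1)
schools = [[data_rng.gauss(0, 1) for _ in range(4)] for _ in range(56)]
assignment, high_schools = assign_conditions(schools, seed=2)
print(sorted(assignment.items())[:5])
```

Dealing the two strata in opposite condition orders is one simple way to keep seven schools per condition while balancing the strata as closely as the odd group size allows.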

Cost-Effectiveness Analysis

While we conduct the factorial experiment, we include an assessment of costs involved to deliver each experimental condition and the potential for cost-effective intervention impact. Given the real-world setting of this study, this analysis provides very practical and useful information to MSED with regard to the costs of each of the conditions individually as well as in combination. This economic evaluation is necessary for determining the most efficient ways to allocate resources, and is especially relevant in this resource-limited environment (Drummond et al. 1997).

Sources of Data

Three sources of data are used to assess teacher implementation fidelity: daily self-report teacher logs, an annual teacher survey, and observations. Data from principals and students also help assess implementation fidelity. Although multiple sources of data provide insight into implementation fidelity, as described, the gold standard is observation (e.g., Snyder et al. 2006); other sources of data are used to triangulate and provide insight as needed.

Snyder et al. (2006) contended that explicit program content and process theories are central to determining how to measure aspects of an intervention. Well-developed, theoretically based programs should possess a core set of lessons and techniques (mechanisms of change) that are central to the effectiveness of the intervention; these should be used to measure quality as well as the impact of program adaptations (Domitrovich et al. 2008). We have identified four lessons (two in Grade 8 and two in Grade 9) as the most central to reducing risk and increasing protective factors in HW, both conceptually and empirically; these are our target lessons for observation purposes. These lessons are “Beating Boredom and Developing Interests,” “Avoiding Sexual Risk Behavior,” “Leisure Motivation,” and “Relationships and Sexual Behavior.” These lessons are also the most challenging for teachers because of their content and interactive teaching format. Our logic in choosing these lessons is that if the teachers can teach them well and with fidelity, they are likely to be able to teach the other lessons well.

Ideally we would video and audio record each lesson conducted by the teachers, but resources do not allow that luxury. Therefore, we are recording a randomly selected set of lessons in addition to the four target lessons. A research assistant is assigned to specific schools and is responsible for video and audio recording the teachers teaching the lessons. Recording the additional lessons also accustoms the teachers to the research assistant and to the video and audio equipment, thus discouraging special preparation for the target lessons of interest.

We have developed a coding system and manual similar to the work of others (Forgatch et al. 2005; Giles et al. 2008; Knutson et al. 2003). Specific codes have been developed for each of the four lessons with regard to dosage and adherence. Student response will be assessed on site by the research assistant who is doing the recording.

In addition to the observational protocol, other measures provide a secondary source of data with which to triangulate the observations. We have designed a daily teacher log that can quickly be completed after each lesson is taught. This log asks teachers questions such as how much content was covered, what was the student response, and to what extent, and how, did the teacher alter the lesson plan.

Finally, our annual teacher survey measures other individual-level factors often overlooked in implementation fidelity research, including teacher characteristics such as enthusiasm, level of stress, and prior experience (e.g., Domitrovich et al. 2008; Dusenbury et al. 2005; Han and Weiss 2005; Greenberg et al. 2005). For example, we measure teachers' perceptions of the value of HW, their understanding of the content and how comfortable they are (or expect to be) teaching it, their individual and collective self-efficacy, their general fit and appreciation within the school, and the adequacy of resources and institutional support.

Considerations and Future Directions

We believe that HWStudy2, as described in this paper, has several implications for prevention research overall. First, the study furthers Type 2 translational research on factors related to large-scale implementation fidelity of an evidence-based prevention program. Second, we will learn about large-scale, school-based dissemination in the South African context. Finally, HWStudy2 will demonstrate the advantages of using a factorial experimental design to examine which factors are related to high-quality program implementation, and the feasibility of implementing a factorial experiment in a real-world setting.

As we move forward with the complete study (i.e., not the pilot), we anticipate that we will reach approximately 14,000 youth. This large scale roll-out of HW in HWStudy2 presents a unique opportunity to understand factors critical to quality implementation of this program in a real-world setting and to contribute to the development of Type 2 translational research. This is particularly important in the South African context because HW will be delivered to students in all socio-economic conditions.

The MSED is committed to assuring high-quality education that contributes to prevention. Furthermore, MSED administrators are enthusiastic partners in this research project. This level of support is a critical component of successful large-scale dissemination of EBIs (e.g., August et al. 2004; Fagan et al. 2008; Payne et al. 2006). We have been very deliberate in our efforts to work closely with MSED on all aspects of our projects and to keep lines of communication open with administrators, principals and teachers. August et al. provided nine key lessons they learned in working with the community in going to scale. These lessons have resonated with our efforts. In particular, cultural sensitivity in program development, implementation and evaluation is critical, especially as we move into African Black contexts (as opposed to the mixed race or Coloured contexts where we have been). Another important lesson that we have learned is to expect organizational changes and challenges; this was also noted by August et al. Crises, changes in administration focus, changes in staff, and curricular changes are all par for the course. August et al. also caution that close attention must be paid to economic considerations. We have built into the study an ongoing cost-benefit analysis that began with our pilot study. If costs are prohibitive or do not fit easily within the current system, HW will not be sustainable.

Finally, we have addressed the notion of joint ownership, as advocated by August et al. (2004), since HWStudy1 began, although initially this ownership was limited to having the teachers and principals fully behind HW. Prior to HWStudy1 we conducted a pilot in which we asked teachers to deliver the curriculum as provided to them, but to note the modifications they would make in content and process. Through a series of focus groups with teachers, we learned of their concerns and suggestions and subsequently made important modifications to the curriculum. We believe this increased the willingness of the teachers and principals to work with us in HWStudy1. Since that time, we have worked hard to increase ownership of HW by the MSED through meetings and shared materials.

We know that there will be hurdles as HWStudy2 progresses, but we are convinced that we will emerge with a much better understanding of the factors that contribute to implementation fidelity and, therefore, to reducing risk among this vulnerable population. Furthermore, the answers to the practical questions in this study could serve as a model for dissemination of HW in other parts of South Africa, and for dissemination of other EBIs in other contexts.

Acknowledgments

HealthWise was supported by NIDA grant 1R01DA029084-01A1. Preparation of this article was supported by NIDA Center Grant P50 DA100075, NIDA 1R01DA029084-01A1, and F31 DA028155.

Footnotes

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Drug Abuse (NIDA) or the National Institutes of Health (NIH).

Conflict of interest: We declare that our research team has no conflict of interest with our association with the Metro South Education District (MSED). Our relationship with the district does not involve grants (other than the one secured by the U.S. National Institutes of Health/National Institute on Drug Abuse for this study which the MSED endorsed), employment, consultancy, shared ownership, or any close relationship that would influence or alter interests, financial or otherwise, that would be affected by the publication of this paper.

Ethics statement: This study has been granted human subjects approval by the institutional review boards (IRBs) at both Penn State University and the University of the Western Cape. We have followed and will follow all requirements for the protection of human subjects as stipulated by the IRB application and approval.

Contributor Information

Linda L. Caldwell, Email: llc7@psu.edu, The Pennsylvania State University, University Park, PA, USA

Edward A. Smith, The Pennsylvania State University, University Park, PA, USA

Linda M. Collins, The Pennsylvania State University, University Park, PA, USA

John W. Graham, The Pennsylvania State University, University Park, PA, USA

Mary Lai, The Pennsylvania State University, University Park, PA, USA

Lisa Wegner, The University of the Western Cape, Cape Town, South Africa

Tania Vergnani, The University of the Western Cape, Cape Town, South Africa

Catherine Matthews, The University of Cape Town, Cape Town, South Africa; South African Medical Research Council, Cape Town, South Africa

Joachim Jacobs, The University of the Western Cape, Cape Town, South Africa

References

- Adelman HS, Taylor L. On sustainability of project innovations as systemic change. Journal of Educational and Psychological Consultation. 2003;14:1–25.

- August GJ, Winters KC, Realmuto GM, Tarter R, Perry C, Hektner JM. Moving evidence-based prevention programs from basic science to practice: “Bridging the efficacy-effectiveness interface”. Substance Use and Misuse. 2004;39:2017–2053. doi: 10.1081/ja-200033240.

- Bales EA, Soren SA. Managing clinical knowledge for health care improvement. Yearbook of Medical Informatics. 2000:65–70.

- Bradley SA, Smith EA, Lai MH, Graham JW, Caldwell LL. Sexual coercion-risk in South African adolescent male youth: Risk correlates and HealthWise program impacts. In: Smith EA, editor. Sex, drugs, and deviance: Understanding prevention in South Africa; Symposium at the Society for Prevention Research annual meeting; Denver, CO. 2010. Jun, Chair.

- Bradshaw CP, Koch CW, Thornton LA, Leaf PJ. Altering school climate through school-wide positive behavioral interventions and supports: Findings from a group-randomized effectiveness trial. Prevention Science. 2009;10:100–115. doi: 10.1007/s11121-008-0114-9.

- Bronfenbrenner U. Developmental ecology through space and time: A future perspective. In: Moen P, Elder GH Jr, Luscher K, editors. Examining lives in context: Perspectives on the ecology of human development. Washington, DC: The American Psychological Association; 1995. pp. 619–647.

- Bumbarger BK, Perkins DF. After randomized trials: Issues related to dissemination of evidence-based interventions. Journal of Children’s Services. 2008;3(2):55–64.

- Burns BJ, Hoagwood K, editors. Evidence-based practices Part II: Effecting change. Child and Adolescent Psychiatric Clinics of North America. 2005;14(2):xv–xvii. doi: 10.1016/j.chc.2004.11.001.

- Caldwell LL, Baldwin CK, Walls T, Smith EA. Preliminary effects of a leisure education program to promote healthy use of free time among middle school adolescents. Journal of Leisure Research. 2004;36(3):310–335.

- Caldwell LL, Patrick M, Smith EA, Palen L, Wegner L. Influencing adolescent leisure motivation: Intervention effects of HealthWise South Africa. Journal of Leisure Research. 2010;42:203–220. doi: 10.1080/00222216.2010.11950202.

- Coffman DL, Smith EA, Flisher AJ, Caldwell LL. Effects of HealthWise South Africa on condom use self-efficacy. Prevention Science. 2011;12:162–172. doi: 10.1007/s11121-010-0196-z.

- Collins LM, Baker TB, Mermelstein RJ, Piper ME, Jorenby DE, Smith SS, et al. The multiphase optimization strategy for engineering effective tobacco use interventions. Annals of Behavioral Medicine. 2011;41:208–226. doi: 10.1007/s12160-010-9253-x.

- Collins LM, Chakraborty B, Murphy SA, Strecher V. Comparison of a phased experimental approach and a single randomized clinical trial for developing multicomponent behavioral interventions. Clinical Trials. 2009a;6:5–15. doi: 10.1177/1740774508100973.

- Collins LM, Dziak JR, Li R. Design of experiments with multiple independent variables: A resource management perspective on complete and reduced factorial designs. Psychological Methods. 2009b;14:202–224. doi: 10.1037/a0015826.

- Collins LM, Murphy SA, Nair V, Strecher V. A strategy for optimizing and evaluating behavioral interventions. Annals of Behavioral Medicine. 2005;30:65–73. doi: 10.1207/s15324796abm3001_8.

- Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): New methods for more potent e-health interventions. American Journal of Preventive Medicine. 2007;32:S112–S118. doi: 10.1016/j.amepre.2007.01.022.

- Conduct Problems Prevention Research Group. The fast track randomized controlled trial to prevent externalizing psychiatric disorders: Findings from grades 3 to 9. Journal of the American Academy of Child and Adolescent Psychiatry. 2007;46:1263–1272. doi: 10.1097/chi.0b013e31813e5d39.

- Conduct Problems Prevention Research Group. The effects of a multi-year randomized clinical trial of a universal social-emotional learning program: The role of student and school characteristics. Journal of Consulting and Clinical Psychology. 2010;78(2):156–168. doi: 10.1037/a0018607.

- Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: Are implementation efforts out of control? Clinical Psychology Review. 1998;18(1):23–45. doi: 10.1016/s0272-7358(97)00043-3.

- Department of Basic Education. Curriculum and Assessment Policy Statement (CAPS). Life Orientation Grades 7–9, Final Draft. Pretoria: Department of Basic Education; 2011.

- Department of Health. National guidelines for the development of health promoting schools/sites in South Africa. Pretoria, South Africa: Author; 2000.

- Domitrovich CE, Bradshaw CP, Poduska JM, Hoagwood K, Buckley JA, Olin S, et al. Maximizing the implementation quality of evidence-based preventive interventions in schools: A conceptual framework. Advances in School Mental Health Promotion. 2008;1(3):6–28. doi: 10.1080/1754730x.2008.9715730.

- Dorrington R, Johnson L, Bradshaw D, Daniel TJ. The demographic Impact of HIV/AIDS in South Africa: National and provincial indicators for 2006. Cape Town, South Africa: Centre for Actuarial Research, South African Medical Research Council, and Actuarial Society of South Africa; 2006.

- Drummond M, O’Brien B, Stoddart G, Torrance G. Methods for economic evaluation of health care programmes. Oxford, NY: Oxford Medical Publications; 1997.

- Durlak JA, DuPre E. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: Implications for drug abuse prevention in school settings. Health Education Research. 2003;18:237–256. doi: 10.1093/her/18.2.237.

- Dusenbury L, Brannigan R, Falco M, Lake A. An exploration of fidelity of implementation in drug abuse prevention among five professional groups. Journal of Alcohol & Drug Education. 2004;47:4–19.

- Dusenbury L, Brannigan R, Hansen WB, Walsh J, Falco M. Quality of implementation: Developing measures crucial to understanding the diffusion of preventive interventions. Health Education Research. 2005;20:308–313. doi: 10.1093/her/cyg134.

- Elliott DS, Mihalik S. Issues in disseminating and replicating effective prevention programs. Prevention Science. 2004;5:47–53. doi: 10.1023/b:prev.0000013981.28071.52.

- Ennett ST, Ringwalt CL, Thorne J, Rohrbach LA, Vincus A, Simons-Rudolph A, et al. A comparison of current practice in school-based substance use prevention programs with meta-analytic findings. Prevention Science. 2003;4:1–14. doi: 10.1023/a:1021777109369.

- Fagan AA, Hanson K, Hawkins JD, Arthur MW. Bridging science to practice: Achieving prevention program implementation fidelity in the community youth development study. American Journal of Community Psychology. 2008;41:235–249. doi: 10.1007/s10464-008-9176-x.

- Fisher RA. The design of experiments. Oxford, England: Oliver & Boyd; 1935.

- Fixsen DL, Naoom SF, Blasé KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005. (FMHI Publication #231)

- Forgatch MS, Patterson GR, DeGarmo DS. Evaluating fidelity: Predictive validity for a measure of competent adherence to the Oregon Model of Parent Management Training (PMTO) Behavior Therapy. 2005;36:3–14. doi: 10.1016/s0005-7894(05)80049-8.

- Furr-Holden CDM, Ialongo N, Anthony JC, Petras H, Kellam S. Developmentally inspired drug prevention: Middle school outcomes in a school-based randomized prevention trial. Drug and Alcohol Dependence. 2004;73:149–158. doi: 10.1016/j.drugalcdep.2003.10.002.

- Gager PJ, Elias MJ. Implementing prevention programs in high-risk environments: Application of the resiliency paradigm. American Journal of Orthopsychiatry. 1997;67(3):363–373. doi: 10.1037/h0080239.

- Garet MS, Porter AC, Desimone L, Birman BF, Yoon KS. What makes professional development effective? Results from a national sample of teachers. American Educational Research Journal. 2001;38:915–945.

- Giles S, Jackson-Newsom J, Pankratz MM, Hansen WB, Ringwalt CL, Dusenbury L. Measuring quality of delivery in a substance use prevention program. Journal of Primary Prevention. 2008;29(6):489–501. doi: 10.1007/s10935-008-0155-7.

- Goddard RD, Goddard YL. A multilevel analysis of teacher and collective efficacy. Teaching and Teacher Education. 2001;17:807–818.

- Gottfredson DC, Gottfredson GD. Quality of school-based prevention programs: Results from a national survey. Journal of Research on Crime and Delinquency. 2002;39:3–35.

- Graham JW, Flay BR, Johnson CA, Hansen WB, Collins LM. Group comparability: A multiattribute utility measurement approach to the use of random assignment with small numbers of aggregated units. Evaluation Review. 1984;8:247–260.

- Greenberg MT, Domitrovich CE, Graczyk PA, Zins JE. The study of implementation in school-based preventive interventions: Theory, research and practice. Rockville, MD: Substance Abuse and Mental Health Services Administration, Center for Substance Abuse Prevention; 2005.

- Han SS, Weiss B. Sustainability of teacher implementation of school-based mental health programs. Journal of Abnormal Child Psychology. 2005;33:665–679. doi: 10.1007/s10802-005-7646-2.

- Harshbarger C, Simmons G, Coelho H, Sloop K, Collins C. An empirical assessment of implementation, adaptation, and tailoring: The evaluation of CDC’s national diffusion of VOICES/VOCES. AIDS Education and Prevention. 2006;18(Suppl A):184–197. doi: 10.1521/aeap.2006.18.supp.184.

- Jenson PS. Commentary: The next generation is overdue. Journal of the American Academy of Adolescent Psychiatry. 2003;42:527–530. doi: 10.1097/01.CHI.0000046837.90931.A0.

- Joint United Nations Programme on HIV/AIDS (UNAIDS) UNAIDS report on the global AIDS epidemic | 2010. UNAIDS; 2010. UNAIDS/10.11E | JC195BE.

- Kirk RE. Experimental design: Procedures for the behavioral sciences. 3. Belmont, CA, US: Thomson Brooks/Cole Publishing; 1995.

- Knutson NM, Forgatch MS, Rains LA. Fidelity of implementation rating system (FIMP): The training manual for PMTO [revised] Eugene, OR: Oregon Social Learning Center; 2003.

- Kulis S, Marsiglia FF, Elek E, Dustman P, Wagstaff DA, Hecht ML. Mexican/Mexican American adolescents and keepin’ it REAL: An evidence-based substance use prevention program. Children and Schools. 2005;3:133–145. doi: 10.1093/cs/27.3.133.

- Kumpfer KL, Alvarado R, Whiteside HO. Family-based interventions for substance use and misuse prevention. Substance Use and Misuse. 2003;11–13:1759–1787. doi: 10.1081/ja-120024240.

- Leach DJ, Conto H. The additional effects of process and outcome feedback following brief in-service teacher training. Educational Psychology. 1999;19:441–462.

- Meintjies H. Orphans of the AIDS epidemic? The extent, nature and circumstances of child-lead households in South Africa. AIDS Care. 2010;22:40–49. doi: 10.1080/09540120903033029.

- Mihalic S, Irwin K, Fagan A, Ballard D, Elliott D. Successful program implementation: Lessons learned from Blueprints. Washington, DC: U.S. Department of Justice, Office of Justice Programs; 2004. Retrieved from www.ojp.usdo.gov/ojjdp.

- National Conference on Health Promoting Schools. Executive summary of conference proceedings. South Africa: University of the Western Cape; 2006.

- Palen L, Smith EA, Caldwell LL, Mathews C, Vergnani T. Transitions to substance use and sexual intercourse among South African high school students. Substance Use and Misuse. 2009;44(13):1872–1887. doi: 10.3109/10826080802544547.

- Palen L, Smith EA, Flisher AJ, Caldwell LL, Mpofu E. Substance use and sexual behavior among South African eighth grade students. Journal of Adolescent Health. 2006;39(5):761–763. doi: 10.1016/j.jadohealth.2006.04.016.

- Patrick ME, Collins LM, Smith E, Caldwell L, Flisher A, Wegner L. A prospective longitudinal model of substance use onset among South African adolescents. Substance Use & Misuse. 2009;44:647–662. doi: 10.1080/10826080902810244.

- Payne AA, Gottfredson DC, Gottfredson GD. School predictors of the intensity of implementation of school-based prevention programs. Prevention Science. 2006;7:225–237. doi: 10.1007/s11121-006-0029-2.

- Pettifor AE, Rees HV, Steffenson A, Hlongwa-Madikizela L, MacPhail C, Vermaak K, et al. HIV and sexual behavior among young South Africans: A national survey of 15–24 year olds. Johannesburg, South Africa: Reproductive Health Research Unit, University of the Witwatersrand; 2004.

- Reddy SP, Sewpaul JS, Koopman F, Funani NI, Sifunda S, Josie J, et al. Umthente Uhlaba Usamila – The South African Youth Risk Behaviour Survey 2008. Cape Town: South African Medical Research Council; 2010.

- Rohrbach LA, Grana R, Sussman S, Valente TW. Type II translation. Transporting prevention interventions from research to real-world settings. Evaluation and the Health Professions. 2006;29:302–333. doi: 10.1177/0163278706290408.

- Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. Boston, MA: Houghton, Mifflin & Co; 2002.

- Shisana O, Rehle T, Simbayi LC, Parker W, Zuma K, Bhana A, et al. South African national HIV prevalence, HIV incidence, behaviour and communication survey, 2005. Cape Town, South Africa: HSRC Press; 2005.

- Smith EA, Palen L, Caldwell LL, Graham JW, Flisher AJ, Wegner L, et al. Substance use and sexual risk prevention in Cape Town, South Africa: An evaluation of the HealthWise program. Prevention Science. 2008;9(4):311–321. doi: 10.1007/s11121-008-0103-z.

- Snyder J, Reid J, Stoolmiller M, Howe G, Brown H, Dagne G, et al. The role of behavior observation in measurement systems for randomized prevention trials. Prevention Science. 2006;7:43–56. doi: 10.1007/s11121-005-0020-3.

- Storr C, Ialongo N, Anthony J, Kellam S. A randomized prevention trial of early onset tobacco use by school and family-based interventions implemented in primary school. Drug and Alcohol Dependence. 2002;66:51–60. doi: 10.1016/s0376-8716(01)00184-3.

- Strecher VJ, McClure JB, Alexander GW, Chakraborty B, Nair VN, Konkel JM, et al. Web-based smoking cessation programs: Results of a randomized trial. American Journal of Preventive Medicine. 2008;34:373–381. doi: 10.1016/j.amepre.2007.12.024.

- Tibbits MK, Caldwell LL, Smith EA, Wegner L. The relation between profiles of leisure activity participation and substance use among South African youth. World Leisure Journal. 2009;51:150–159. doi: 10.1080/04419057.2009.9728267.

- Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, et al. Bridging the gap between prevention research and practice: The Interactive systems framework for dissemination and implementation. American Journal of Community Psychology. 2008;41:171–181. doi: 10.1007/s10464-008-9174-z.

- Wegner L, Flisher AJ, Caldwell L, Vergnani T, Smith E. HealthWise South Africa: Cultural adaptation of a school-based risk prevention programme. Health Education Research. 2008;23:1085–1096. doi: 10.1093/her/cym064.

- Woolf SH. The meaning of translational research and why it matters. JAMA. 2008;299:211–213. doi: 10.1001/jama.2007.26.