Abstract

The insula, a cortical brain region known to encode information about autonomic, visceral, and olfactory functions, has recently been shown to encode information during reward-seeking tasks in both single-neuron recording and functional magnetic resonance imaging studies. To examine this reward-related activation, we recorded from 170 single neurons in the anterior insula of 2 monkeys during a multitrial reward schedule task, in which the monkeys had to complete a schedule of 1, 2, 3, or 4 trials to earn a reward. In one block of trials, a visual cue indicated whether a reward would or would not be delivered in the current trial after the monkey successfully detected that a red spot turned green; in other blocks, the visual cue was random with respect to reward delivery. Over one-quarter of the 131 responsive neurons were activated when the current trial would be rewarded, with certainty or uncertainty, if performed correctly. These same neurons failed to respond in trials that the cue indicated were certain to be unrewarded. Another group of neurons responded when the reward was delivered, similar to results reported previously. The dynamics of population activity in the anterior insula also showed strong signals related to knowing when a reward is coming. The most parsimonious explanation is that this activity codes for a type of expected outcome, where the expectation encompasses both certain and uncertain rewards.

Keywords: reward expectation, reward seeking, single neuron

The insular cortex, a cortical brain region known to have neural activity related to somatosensory (Ibañez et al. 2010; Jones et al. 2010; Mesulam and Mufson 1982; Zhang et al. 1999), visceral (Augustine 1996; Mesulam and Mufson 1982), gustatory (Simon et al. 2006; Yaxley et al. 1990), olfactory (Ibañez et al. 2010; Mesulam and Mufson 1982), and interoceptive functions (Craig 2002, 2009), is strongly connected to brain structures related to predicting short- and long-term behavioral outcomes, such as the cingulate and orbitofrontal cortices. There is now evidence from single-neuron recording in monkeys (Asahi et al. 2006) and functional magnetic resonance imaging (fMRI) in human subjects that the insula might play a role in reward processing. The single-neuron results show that there are neurons activated by reward expectation. fMRI studies of the human insula also show insular activations related to drug craving (Filbey et al. 2009; Garavan 2010; Kilts et al. 2001; Naqvi et al. 2007; Naqvi and Bechara 2010), various emotion-laden situations (Fusar-Poli et al. 2009; Jabbi et al. 2007; Kober et al. 2008; Lamm and Singer 2010; Singer et al. 2009), and stochastic processes such as gambling (Clark et al. 2009; Li et al. 2010), uncertainty (Critchley et al. 2001; Singer et al. 2009), and risk (Kuhnen and Knutson 2005; Preuschoff et al. 2008; Xue et al. 2010).

The anterior portion of the insula, comprising its agranular and dysgranular parts, is preferentially interconnected with the orbitofrontal and anterior cingulate cortices and the ventral striatum (Augustine 1996; Chikama et al. 1997; Mesulam and Mufson 1982), brain regions that seem to be involved in predicting the outcomes of actions; this makes it of particular interest to us. We hypothesized that, because of their connections with areas important for assessing outcome, anterior insular neurons would encode information about predicted outcome.

We used a task design with a range of incentive conditions. We recorded from single neurons while monkeys performed a reward schedule task in which the monkey had to complete schedules of sequential red-green discrimination trials to obtain a reward. In the task used in this study, there are both rewarded and unrewarded trials. When the cues are valid, a visual cue indicates whether a correctly performed trial will be rewarded; for unrewarded trials, the cue makes it explicit that the monkey will need to work further to obtain a reward. Comparing these different types of trials provides a means to observe whether the responses are related to outcome. In an additional manipulation, we randomized the cue sequences so that we could also assess the activity when rewards are delivered on a stochastic schedule. This set of conditions makes it possible to infer whether the neural responses are related to the expectation of reward, whether they differ when the outcome (reward or no reward) is known without uncertainty, and what happens to neural activity during stochastic reward delivery. We found that about one-quarter of the 131 responsive neurons recorded were activated in trials with a predicted reward, whether the reward was certain (rewarded trials with a valid cue) or delivered on a stochastic schedule (a reward could occur in any trial with a random cue). The neurons did not respond in trials in which the monkey knew there would be no reward (unrewarded trials in the valid cue condition). Thus insula neurons show activity patterns related to whether or not an immediate (current-trial) reward might be expected. This finding would have been difficult to observe without distinguishing among fully predictable rewarded, fully predictable unrewarded, and stochastic conditions.

METHODS

All experiments and procedures were conducted in accordance with the guidelines for the care and use of laboratory animals as adopted by the Neuroscience Research Institute (NRI)/National Institute of Advanced Industrial Science and Technology (AIST) and were approved by the Animal Care and Use Committee of the NRI/AIST.

Multitrial reward schedule task.

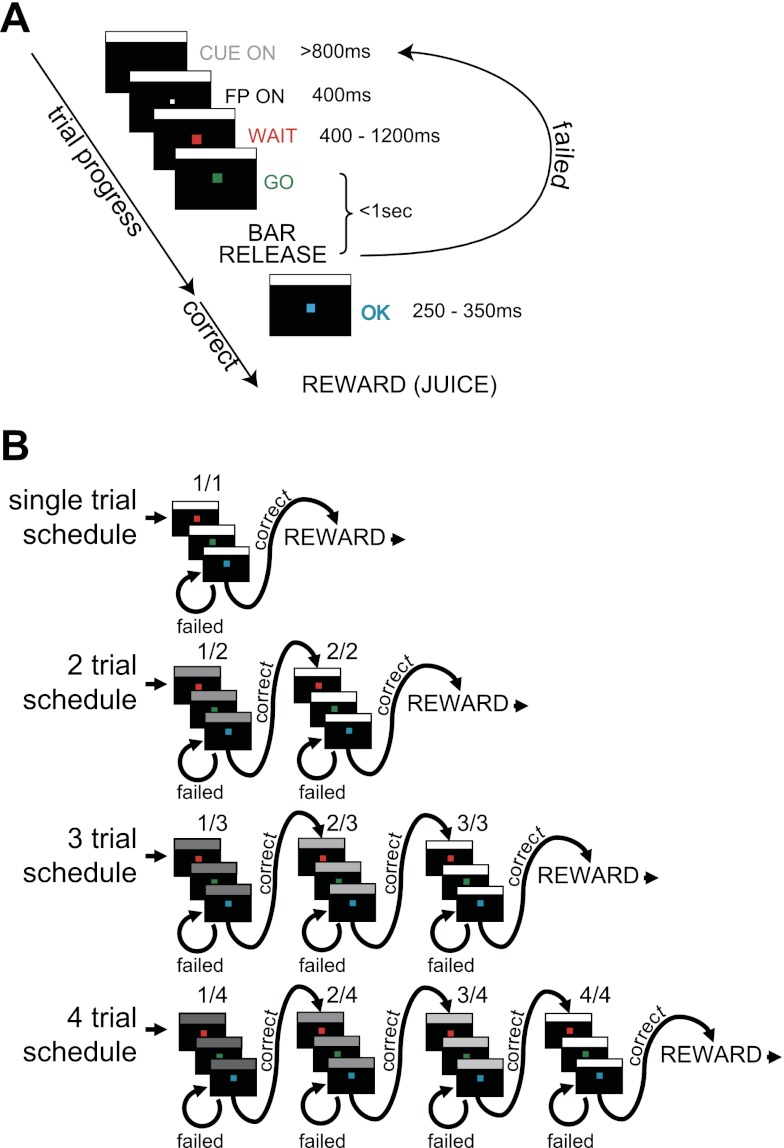

Behavioral and single-unit data were collected from two young adult (5–9 kg) monkeys (Macaca mulatta). Initially the monkeys were trained to perform a sequential red-to-green color discrimination (Fig. 1A). Each monkey squatted in a monkey chair in front of a computer video monitor. A trial began when the monkey touched a small bar on the chair. After the bar was touched, a small white fixation point (FP; 0.07 × 0.07 degree) appeared in the center of the monitor for 400 ms. A red WAIT target (0.2 × 0.2 degree) then replaced the white target for 400–1,200 ms, after which the red target changed to green (GO target). If the monkey released the bar while the green target was present (BAR event), the target color changed to blue (OK signal), indicating successful completion of the trial (Fig. 1A). During initial training, a juice reward was delivered 250–350 ms after each successful bar release. The trial was scored as an error if the monkey released the bar too early (during the red WAIT signal or earlier than 150 ms from GO onset), failed to release until the GO target disappeared, or moved its eyes further than 20 degrees from the target. During training, a white rectangle (10.5 × 0.26 degree) was presented at the top of the monitor when the bar was touched at the beginning of the trial and remained on until the trial ended; in the multitrial reward schedules (see below), this became a visual cue.
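As an illustration of the trial-scoring rules above, the following Python sketch classifies a single trial from its event times. It is a hypothetical reconstruction, not the REX control code used in the experiments: the names (`Trial`, `score_trial`), the millisecond representation, and the 1-s GO duration (taken from the Fig. 1 legend) are assumptions, and the order in which error types are checked is arbitrary.

```python
from dataclasses import dataclass
from typing import Optional

# Timing limits from the text; GO_WINDOW_MS follows the Fig. 1 legend (assumed).
MIN_RT_MS = 150       # release < 150 ms after GO onset counts as too early
GO_WINDOW_MS = 1000   # GO target assumed to stay on for 1 s
MAX_EYE_DEG = 20.0    # allowed gaze excursion from the target


@dataclass
class Trial:
    go_onset_ms: float             # time of the red-to-green change
    release_ms: Optional[float]    # bar release time; None if never released
    max_eye_deviation_deg: float   # largest gaze excursion during the trial


def score_trial(t: Trial) -> str:
    """Return 'correct' or an error label, following the rules in the text."""
    if t.max_eye_deviation_deg > MAX_EYE_DEG:
        return "error: eye movement"
    if t.release_ms is None or t.release_ms - t.go_onset_ms >= GO_WINDOW_MS:
        return "error: late"       # did not release before GO disappeared
    if t.release_ms - t.go_onset_ms < MIN_RT_MS:
        return "error: early"      # released during WAIT or < 150 ms after GO
    return "correct"
```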

Fig. 1.

Task paradigm. A: in a visual discrimination trial, the monkey had to release the bar within 1 s after the red target (WAIT) changed to green (GO) to complete the ongoing trial. B: the multitrial reward schedule task consists of schedules of 1, 2, 3, or 4 visual discrimination trials. After completion of the current schedule, a new schedule was picked at random from these 4. Successful completion of the last trial of each schedule was rewarded with a drop of juice; successful completion of any other trial activated a sham reward apparatus. As shown in B, in the valid cue condition the brightness of the visual cue increases as the schedule progresses toward the rewarded trial. In the random cue condition, the cue was chosen randomly from the set for the current schedule, thus dissociating the cue meaning from the reward schedule.

After the monkeys reached a criterion of 80% correct trials, the multitrial reward schedule task was started (Fig. 1B). In this task, the monkeys had to complete schedules of one, two, three, or four correct trials to earn a juice reward. If the monkey made an error on a trial, the same trial was repeated until done correctly (correction trials). Thus the monkey might initiate more trials than were completed correctly. After an unrewarded trial, the intertrial interval was 1,550 ms, and after a rewarded trial, it was 2,000 ms. For labeling in this report, the schedule states are abbreviated as “trial number/schedule length” (thus the 10 states are designated 1/1; 1/2, 2/2; 1/3, 2/3, 3/3; and 1/4, 2/4, 3/4, 4/4). A sham reward apparatus was activated in successful unrewarded trials (1/2, 1/3, 2/3, 1/4, 2/4, and 3/4). After successful completion of a schedule, a new schedule was chosen randomly.
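The schedule bookkeeping described above can be sketched in Python as follows. The state labels, the uniform choice among the four schedule lengths, and the `perform_trial` callback standing in for the monkey are assumptions for illustration; the ITI after error trials is not specified in the text and is not modeled.

```python
import random

SCHEDULE_LENGTHS = (1, 2, 3, 4)


def schedule_states():
    """All 10 states as (trial_number, schedule_length); (2, 3) is '2/3'."""
    return [(n, L) for L in SCHEDULE_LENGTHS for n in range(1, L + 1)]


def is_rewarded(state):
    n, L = state
    return n == L  # only the last trial of a schedule earns juice


def iti_ms(state):
    """ITI after a correct trial: 2,000 ms if rewarded, 1,550 ms otherwise."""
    return 2000 if is_rewarded(state) else 1550


def run_schedule(perform_trial, rng=random):
    """Run one randomly chosen schedule with correction trials.

    `perform_trial(state) -> bool` is a hypothetical stand-in for behavior
    (True = correct). An error repeats the same state. Returns the number
    of trials initiated, which can exceed the schedule length.
    """
    L = rng.choice(SCHEDULE_LENGTHS)  # uniform choice is an assumption
    initiated = 0
    for n in range(1, L + 1):
        while True:
            initiated += 1
            if perform_trial((n, L)):
                break  # advance only after a correct trial
    return initiated
```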

There were two task conditions defined by the cue, a valid cue condition and a random cue condition. In the valid cue condition, the cue brightened as the schedule progressed, with the brightness being proportional to the schedule state [brightness = (trial number/schedule length) × brightest pixel value]. For example, the schedule states 1/4, 2/4, 3/4, and 4/4 were indicated by the cues with 25, 50, 75, and 100% brightness, respectively. In this valid cue condition, the brightest cue (100% brightness) was presented in the final state of each schedule (1/1, 2/2, 3/3, and 4/4), indicating that if the trial was completed successfully, a reward would be delivered (Fig. 1B).
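A minimal sketch of the valid-cue brightness rule, brightness = (trial number/schedule length) × brightest pixel value. The 8-bit maximum of 255 is a hypothetical stand-in; the actual display value is not given in the text.

```python
from fractions import Fraction

BRIGHTEST_PIXEL_VALUE = 255  # hypothetical 8-bit display maximum


def valid_cue_fraction(trial_number, schedule_length):
    """Cue brightness as an exact fraction of the brightest value."""
    return Fraction(trial_number, schedule_length)


def valid_cue_pixel_value(trial_number, schedule_length,
                          brightest=BRIGHTEST_PIXEL_VALUE):
    """Pixel value for the cue in state trial_number/schedule_length."""
    return float(valid_cue_fraction(trial_number, schedule_length) * brightest)
```

Because the fraction reaches 1 only when the trial number equals the schedule length, the brightest cue coincides exactly with the rewarded states 1/1, 2/2, 3/3, and 4/4 in this condition.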

In the random cue condition, the schedules were picked as described above. However, in each trial a cue was selected at random from the set of brightness levels available for that schedule (e.g., in the 4-trial schedule, one of the cues with 25, 50, 75, and 100% brightness was selected at random trial by trial), so the cue was less predictive of reward. The same cue could be presented two or more times within schedules containing two or more trials, or a particular cue might not appear at all. The reward was delivered in the last trial of the schedule, no matter which cue had been selected by the random rule.
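The random-cue rule can be sketched the same way. The text says only that a cue was selected at random from the schedule's set; drawing independently and uniformly on each trial, and drawing once per trial position (correction trials are ignored), are assumptions.

```python
import random
from fractions import Fraction


def cue_set(schedule_length):
    """Brightness levels available for a schedule: 1/L, 2/L, ..., L/L."""
    return [Fraction(n, schedule_length) for n in range(1, schedule_length + 1)]


def random_cue_sequence(schedule_length, rng=random):
    """One cue per trial position, drawn independently and uniformly (assumed).

    Cues may repeat or be absent; reward still comes on the last trial.
    """
    levels = cue_set(schedule_length)
    return [rng.choice(levels) for _ in range(schedule_length)]
```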

The valid and random cue conditions were run in blocks that consisted of 90–286 trials. Switches between the valid and random cue conditions were done abruptly and were not signaled to the monkeys. Eye position was captured with a charge-coupled device camera operating at 30 Hz. The eye position was extracted and monitored using software adapted for the LINUX operating system (http://staff.aist.go.jp/k.matsuda/eye/index.html).

Single-unit recording.

When the monkeys achieved an overall correct rate of 80% in the valid cue condition, training was terminated and an aseptic surgical procedure was performed under ketamine-pentobarbital anesthesia to attach a recording cylinder and a head fixation post to the skull. On the basis of an MR image, the cylinder for unit recording was attached at 15 mm anterior and 22 mm lateral in Horsley-Clarke stereotaxic coordinates. Neuronal recordings began after a 1-wk recovery period.

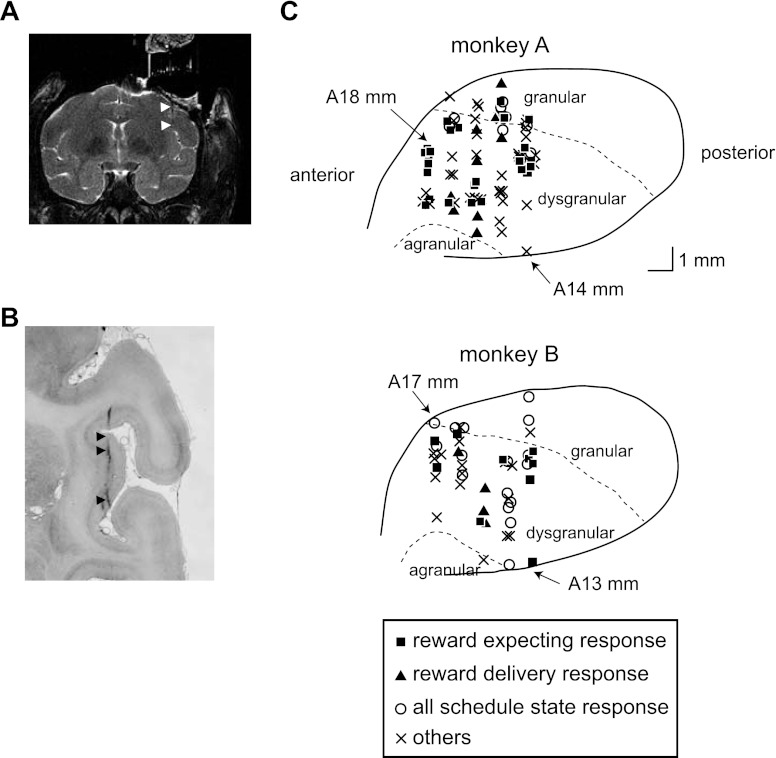

Single units were recorded using tungsten microelectrodes with impedance of 1.2–1.4 MΩ (MicroProbe, Clarksburg, MD). The electrodes were moved through a stainless steel guide tube using a hydraulic microdrive mounted on the recording cylinder (Narishige, Tokyo, Japan). To confirm that the neurons recorded were in the anterior insular cortex, in an early recording session an MR image was obtained with an electrode in place (Fig. 2) (Saunders et al. 1990). Experimental control and data collection were performed using the real-time data acquisition program REX, adapted for the QNX operating system running on a Gateway2000 personal computer (Hays et al. 1982). Single units were discriminated online according to spike shape and amplitude by calculating principal components using an IBM-compatible microcomputer (Abeles and Goldstein 1997; Gawne and Richmond 1993).
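Online discrimination by principal components is described only briefly above. As a generic illustration of the technique (not the authors' software), the sketch below estimates the first principal component of a set of spike waveforms by power iteration and projects each waveform onto it; units whose spikes differ in shape or amplitude tend to separate along such projections. The function names, the pure-Python implementation, and the use of a single component are assumptions.

```python
import math
import random


def first_principal_component(waveforms, n_iter=200, seed=0):
    """Mean waveform and unit-length first PC, found by power iteration.

    waveforms: list of equal-length lists of voltage samples.
    """
    n, d = len(waveforms), len(waveforms[0])
    mean = [sum(w[j] for w in waveforms) / n for j in range(d)]
    centered = [[w[j] - mean[j] for j in range(d)] for w in waveforms]
    # Sample covariance matrix (d x d)
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    v = [rng.random() for _ in range(d)]
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]  # renormalize each iteration
    return mean, v


def pc1_scores(waveforms, mean, v):
    """Project each mean-subtracted waveform onto the first PC."""
    return [sum((w[j] - mean[j]) * v[j] for j in range(len(v)))
            for w in waveforms]
```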

Fig. 2.

Recording site identification. A: before the recordings, an MRI of the monkey brain was taken to confirm that the attached grid could guide the electrode toward the intended recording area. The brain was penetrated with a tungsten electrode (white arrowheads). The insular cortex was located just beneath the tip of the electrode. The plane is 15 mm anterior. B: after the recordings, the sectioned tissue was stained with cresyl violet. Electrode track scars were visible within the insular cortex (black arrowheads). C: the expected positions of recorded neurons. Positions on the planes 19, 20, and 21 mm lateral (monkey A) and on the planes 17, 18, and 19 mm lateral (monkey B) were overlaid. All tracks were located in the middle to anterior half of the insular cortex. The neuronal classes (see Neuronal responses and selectivity in results) are denoted by different symbols. “Others” indicates neurons that do not fall into any class.

Neural data analysis.

To identify responsive neurons, we tested whether, at one or more time points, the spike counts within individual schedule states differed significantly from the neuron's mean activity. For this procedure, the rate in each 100-ms window was compared with the overall average rate for all trials. In a sliding window procedure, the window was moved in 20-ms steps. The ranges for the sliding window were −400 to 800, 0 to 400, 0 to 400, −200 to 200, 0 to 400, and −400 to 1,500 ms from CUE, WAIT, GO, BAR, OK, and REW, respectively (CUE, cue onset; WAIT, wait signal; GO, go signal; BAR, bar release; OK, correct signal; REW, reward apparatus activation whether rewarded or not). The average rate for all trials was computed by first counting the spikes across each whole trial, then dividing by the length of that trial, and finally averaging these per-trial rates over all trials. If the rate in three or more consecutive windows for one or more events differed significantly from the average rate (t-test, P < 0.05), the neuron was classified as responsive.
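The responsiveness criterion can be sketched as follows. Several details are not specified in the text and are assumptions here: windows are taken to fit entirely within each event's range, each window's trial-by-trial rates within a schedule state are compared with the overall average rate by a one-sample t-test, and a fixed critical value (`t_crit=2.0`, a rough stand-in for two-sided P < 0.05) replaces an exact P value.

```python
import statistics


def window_starts(range_start_ms, range_end_ms, width_ms=100, step_ms=20):
    """Start times of width_ms windows, stepped by step_ms, inside the range."""
    starts = []
    t = range_start_ms
    while t + width_ms <= range_end_ms:
        starts.append(t)
        t += step_ms
    return starts


def overall_mean_rate(trials):
    """trials: (spike_count, duration_s) per whole trial; mean per-trial rate."""
    return statistics.mean(count / duration for count, duration in trials)


def one_sample_t(values, mu):
    """One-sample t statistic of `values` against the reference rate `mu`."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    if sd == 0:
        return 0.0 if mean == mu else float("inf")
    return (mean - mu) / (sd / len(values) ** 0.5)


def is_responsive(window_rates, mu, t_crit=2.0, min_run=3):
    """window_rates: for one event and schedule state, one list of
    trial-by-trial rates (spikes/s) per window, in time order."""
    run = 0
    for rates in window_rates:
        run = run + 1 if abs(one_sample_t(rates, mu)) > t_crit else 0
        if run >= min_run:
            return True  # min_run consecutive significant windows
    return False
```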

To identify the responsive task event for each responsive neuron, the responses were aligned to each of seven events in each trial: CUE, WAIT, GO, BAR, OK, REW, and ITI (intertrial interval). The ITI was defined as the epoch between 1,000 and 1,500 ms after reward delivery onset. The event associated with the largest number of spikes was labeled as the main responsive task event. One responsive period was identified for each neuron. Among the periods showing significant differences from the average rate (identified by the sliding window procedure used to determine whether the neuron was responsive), the period closest to, or spanning, the onset of the responsive task event was designated the responsive period.
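The selection of the main responsive event and of the responsive period might look like the sketch below. How spikes were counted for each event and how distance to onset was measured are not specified; here a period's distance is taken as zero if it spans the onset and otherwise as the gap to its nearer edge. The function names are hypothetical.

```python
def main_event(spike_counts_by_event):
    """Event label with the largest spike count, e.g. {'CUE': 120, 'REW': 340}."""
    return max(spike_counts_by_event, key=spike_counts_by_event.get)


def responsive_period(periods, event_onset_ms=0.0):
    """Pick the significant (start_ms, end_ms) period that spans the event
    onset or, failing that, whose nearer edge is closest to it."""
    def distance(period):
        start, end = period
        if start <= event_onset_ms <= end:
            return 0.0
        return min(abs(start - event_onset_ms), abs(end - event_onset_ms))
    return min(periods, key=distance)
```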

For neurons that had at least one response as described above, we used analysis of variance (ANOVA) to determine whether there were effects of reward expectancy and of immediate reward history. For reward expectancy, we used a one-factor, two-level ANOVA (significance threshold P < 0.05) on the spike counts in sliding windows, where the two factor levels were rewarded (1/1, 2/2, 3/3, and 4/4) and unrewarded schedule states (1/2, 1/3, 2/3, 1/4, 2/4, and 3/4). For the reward history effect, the two factor levels were states in which the preceding trial was rewarded (first schedule states: 1/1, 1/2, 1/3, and 1/4) or not (nonfirst schedule states: 2/2, 2/3, 3/3, 2/4, 3/4, and 4/4). The windows for the analysis were 100 ms wide and stepped by 20 ms, as described above. If the activity showed significant differences in both comparisons (rewarded vs. unrewarded and first vs. nonfirst schedule states), it was categorized by whichever comparison had the larger percentage of variance explained, as calculated in the ANOVA. The time course of these effects was followed by counting the neurons showing significant differences in the two-level ANOVAs in 100-ms sliding windows aligned to each of the task events, as described above.
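A minimal sketch of the two 2-level comparisons and of the categorization by variance explained, using spike counts keyed by schedule state `(trial_number, schedule_length)`. It computes the one-way ANOVA F statistic and the proportion of variance explained (SS_between/SS_total); significance testing against P < 0.05 is omitted, and both comparisons are assumed to have already passed it. The function names are hypothetical.

```python
def anova_1way(groups):
    """One-way ANOVA on lists of spike counts.

    Returns (F, df_between, df_within, r2), where r2 = SS_between / SS_total
    is the proportion of variance explained by the grouping.
    """
    values = [x for g in groups for x in g]
    grand = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_b, df_w = len(groups) - 1, len(values) - len(groups)
    f = (ss_between / df_b) / (ss_within / df_w)
    return f, df_b, df_w, ss_between / (ss_between + ss_within)


def split_states(counts_by_state, predicate):
    """Pool spike counts into two groups according to predicate(state)."""
    yes = [c for s, cs in counts_by_state.items() if predicate(s) for c in cs]
    no = [c for s, cs in counts_by_state.items() if not predicate(s) for c in cs]
    return [yes, no]


def categorize(counts_by_state):
    """Assign a neuron significant in both comparisons to the larger r2."""
    rewarded = split_states(counts_by_state, lambda s: s[0] == s[1])
    first = split_states(counts_by_state, lambda s: s[0] == 1)
    r2_reward = anova_1way(rewarded)[3]
    r2_history = anova_1way(first)[3]
    return "reward expectation" if r2_reward >= r2_history else "reward history"
```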

To determine whether neuronal firing in the responsive period showed selectivity for reward-related events (where selectivity is defined as a significant activation pattern as determined by ANOVA), we applied ANOVAs to all of the neurons. For this analysis, there were again two data models of interest: rewarded vs. unrewarded schedule states, and trials following a rewarded trial vs. those following an unrewarded trial, as described above. In addition, a single-factor, 10-level ANOVA was applied with one level for each of the 10 schedule states. To determine whether the 2-level ANOVA was preferred over the 10-level ANOVA, the two models were compared by an F-test with adjustment for degrees of freedom, using the R function “anova” (R statistics package by Gentleman and Ihaka, R Development Core Team). The null hypothesis of the F-test for model comparison was that the variances of the errors between actual values and values expected from each model were equal (Venables and Ripley 2002). If the 2-level ANOVA did not differ significantly in explanatory power from the 10-level ANOVA, the simpler 2-level model was preferred.
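The model comparison above corresponds to the standard nested-model F-test that R's `anova` reports for two fitted linear models: F = [(RSS_reduced − RSS_full)/(df_reduced − df_full)]/(RSS_full/df_full). The sketch below computes that statistic for a 2-level model nested within the 10-level model; converting F to a P value (e.g., with an F-distribution CDF) is left out, and the function names are hypothetical.

```python
def rss(groups):
    """Residual sum of squares when each group is fit by its own mean."""
    total = 0.0
    for g in groups:
        m = sum(g) / len(g)
        total += sum((x - m) ** 2 for x in g)
    return total


def compare_nested(counts_by_state, collapse):
    """F test of a reduced model (states pooled by `collapse`) against the
    full model with one level per schedule state.

    Returns (F, df_num, df_den); a small, non-significant F favors the
    simpler reduced model.
    """
    full_groups = list(counts_by_state.values())
    pooled = {}
    for state, counts in counts_by_state.items():
        pooled.setdefault(collapse(state), []).extend(counts)
    reduced_groups = list(pooled.values())
    n = sum(len(g) for g in full_groups)
    df_full = n - len(full_groups)
    df_reduced = n - len(reduced_groups)
    df_num = df_reduced - df_full
    rss_full, rss_reduced = rss(full_groups), rss(reduced_groups)
    f = ((rss_reduced - rss_full) / df_num) / (rss_full / df_full)
    return f, df_num, df_full
```

For example, `compare_nested(counts, lambda s: s[0] == s[1])` tests the rewarded vs. unrewarded model against the 10-state model.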

For the neurons identified as coding reward influence (history and/or expectation, according to the 2-level ANOVAs), the spike density of each neuron's response was normalized. Neuron by neuron, we subtracted the minimum value of the spike density from the entire spike density function and then multiplied the result by the inverse of its peak value, so that the normalized spike density ranged from 0 to 1.
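The normalization is ordinary min-max scaling; a sketch for one neuron's spike density (a list of values sampled over time) follows. The handling of a perfectly flat density is an assumption, since that case is not discussed.

```python
def normalize_density(density):
    """Min-max normalize one neuron's spike density function to 0-1."""
    lo = min(density)
    shifted = [x - lo for x in density]  # minimum becomes 0
    peak = max(shifted)
    if peak == 0:
        return [0.0 for _ in shifted]    # flat response: nothing to scale
    return [x / peak for x in shifted]   # peak becomes 1
```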

To determine whether the responses in the random cue condition were selective for cue brightness, the trials in each schedule state were sorted by cue brightness (25, 33, 50, 66, 75, and 100%), and the firing rates were compared across trials with different cues using a single-factor, six-level ANOVA.

Localization of recording sites.

Monkeys were euthanized after recording had been completed. Histological examination showed that the recording tracks passed dorsoventrally through the insular cortex (Fig. 2B), consistent with the previously obtained MRI (Fig. 2A). All recording tracks are shown in Fig. 2C, where neuronal types are denoted by different symbols (see results). We could not identify any relationship between recording position and neuronal class.

RESULTS

Behavioral performance.

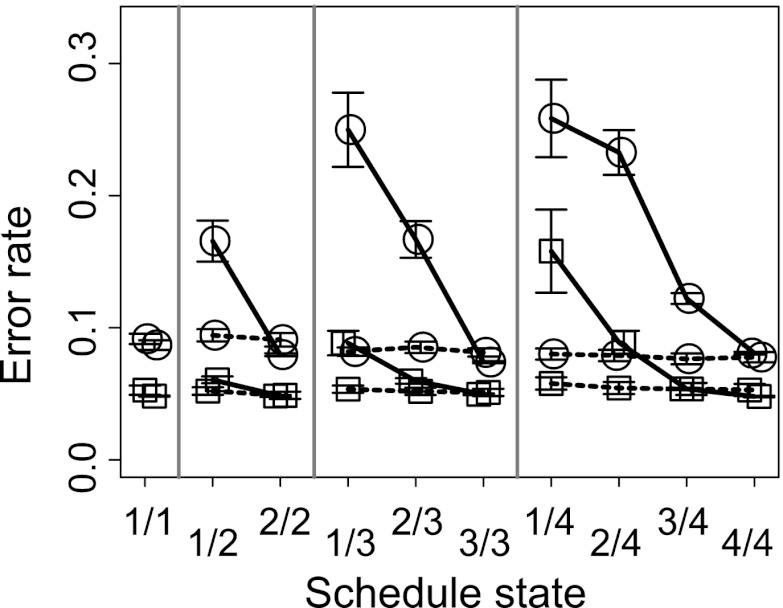

Each monkey made more errors on average, measured as session-by-session error rates, in unrewarded schedule states (1/2, 1/3, 2/3, 1/4, 2/4, and 3/4) than in rewarded schedule states (1/1, 2/2, 3/3, and 4/4) (Fig. 3; t-test, t = 8.6, df = 721.04, P ∼ 0 and t = 17.4, df = 596.8, P ∼ 0 for monkeys A and B, respectively), and the error rates were indistinguishable across rewarded schedule states (1-way, 4-level ANOVA, F3,412 = 0.943, P = 0.96 and F3,320 = 1.39, P = 0.24 for monkeys A and B, respectively) in the valid cue condition. The error rates were highest in the first schedule states (1/2, 1/3, and 1/4) and lowest in the rewarded schedule states (Fig. 3, solid lines). From the pattern of errors, we infer that the monkeys are using the visual cues to adjust their behavior; that is, they are paying attention to the cue's identity. They are less motivated in trials when they know there is no possibility for reward (La Camera and Richmond 2008). In the random cue condition, the monkeys made few errors in any schedule state whether rewarded or unrewarded (Fig. 3, dashed lines). Thus the monkeys were most strongly motivated to perform correctly when reward delivery would or might occur (La Camera and Richmond 2008). These results are similar to those reported previously with the same task (Bowman et al. 1996; Liu and Richmond 2000; Shidara et al. 1998; Shidara and Richmond 2002; Simmons and Richmond 2008; Sugase-Miyamoto and Richmond 2005).
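The fractional degrees of freedom reported above (df = 721.04 and 596.8) suggest Welch's unequal-variance t-test, which is R's default for `t.test`. As a hedged sketch of how such values arise, the function below computes Welch's t and the Welch–Satterthwaite degrees of freedom from two samples, such as session-by-session error rates in unrewarded and rewarded states.

```python
import statistics


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite df."""
    na, nb = len(a), len(b)
    se2a = statistics.variance(a) / na  # squared standard error, sample a
    se2b = statistics.variance(b) / nb  # squared standard error, sample b
    t = (statistics.mean(a) - statistics.mean(b)) / (se2a + se2b) ** 0.5
    df = (se2a + se2b) ** 2 / (se2a ** 2 / (na - 1) + se2b ** 2 / (nb - 1))
    return t, df
```

When both samples have equal size and equal variance, the df reduces to the pooled value 2(n − 1); unequal variances give the non-integer df seen in the text.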

Fig. 3.

Mean error rates in task performance. The abscissa indicates schedule states (trial number/schedule length), and the ordinate indicates error rates. Circles and squares denote monkeys A and B, respectively. In the valid cue condition, the error rates decreased as the schedule progressed toward the rewarded schedule states (solid line). In the random cue condition, the error rates were similar to or lower than those in the unrewarded schedule states of the valid cue condition (dashed line).

Although the monkeys performed well in all trials of the random cue condition, it seemed possible that performance might be biased in the first trials of the reward schedules. In the random cue condition, when the first cue of a schedule comes on, the most unpredictable situation arises when the brightest cue appears. Because the brightest cue appears in all schedules, it is not possible to infer which schedule has started; in other words, when the first cue is the brightest, the monkeys cannot anticipate whether the upcoming trial will be rewarded. When any other cue appears first in a schedule, the monkey might have learned to use the information it carries about the schedule (e.g., if either the 1/3 or the 2/3 brightness cue appears, then a 3-trial schedule must be starting). Therefore, we sorted the trials in the 1/2, 1/3, and 1/4 schedule states of the random cue condition according to whether or not the cue was the brightest. The monkeys performed significantly better in first trials with the brightest cue than in other first trials (for monkeys A and B: error rate, 1.1 vs. 2.6% and 1.2 vs. 5.2%, respectively; χ2 = 6357 and 3296, respectively, df = 3, P ∼ 0). Thus it appears that the monkeys learned to interpret the information contained in the cue in this stochastic situation.
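The schedule information carried by a first cue in the random cue condition can be made explicit by listing which schedules include each brightness level in their cue sets. This sketch follows directly from the task rules in methods; it computes set membership only and makes no assumption about how the monkeys weighted the possibilities.

```python
from fractions import Fraction

SCHEDULE_LENGTHS = (1, 2, 3, 4)


def schedules_consistent_with(cue):
    """Schedule lengths whose random-cue set contains this brightness level."""
    return [L for L in SCHEDULE_LENGTHS
            if cue in {Fraction(n, L) for n in range(1, L + 1)}]


all_levels = sorted({Fraction(n, L) for L in SCHEDULE_LENGTHS
                     for n in range(1, L + 1)})
for cue in all_levels:
    print(f"cue {cue}: schedules {schedules_consistent_with(cue)}")
```

Only the brightest cue is compatible with all four schedules; a 1/3 or 2/3 cue implies the 3-trial schedule, a 1/4 or 3/4 cue implies the 4-trial schedule, and a 1/2 cue leaves the 2- or 4-trial schedule.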

During these experiments, there was no signal indicating that the valid cue condition was about to change to the random cue condition. We investigated how quickly the monkeys noticed the condition change by comparing the error rate in the last 1/4 trial of the valid cue block with that in the second trial of the random cue block (the first trial in which it was possible for the monkey to know that the condition had changed). There were significantly fewer errors after the switch from the valid cue to the random cue condition for both monkeys (16/104 to 2/104, χ2 = 10.28, df = 1, P = 0.0014 and 16/81 to 5/81, χ2 = 5.47, df = 1, P = 0.019 for monkeys A and B, respectively), showing that the monkeys detected the block change from valid to random immediately.
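The χ2 values above can be reproduced from the reported counts with a 2 × 2 test (errors vs. correct, before vs. after the switch) using Yates' continuity correction, which is the default in R's `chisq.test` for 2 × 2 tables. The sketch below is offered as a check on the arithmetic, not as the authors' code.

```python
import math


def chi2_2x2_yates(errors_a, n_a, errors_b, n_b):
    """Yates-corrected chi-square (df = 1) for error vs. correct counts
    in two sets of trials, with its upper-tail P value."""
    table = [[errors_a, n_a - errors_a], [errors_b, n_b - errors_b]]
    row = [sum(r) for r in table]
    col = [table[0][j] + table[1][j] for j in range(2)]
    total = sum(row)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / total
            chi2 += (abs(table[i][j] - expected) - 0.5) ** 2 / expected
    # Upper tail of chi-square with 1 df equals P(|Z| > sqrt(chi2))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p
```

With `chi2_2x2_yates(16, 104, 2, 104)` and `chi2_2x2_yates(16, 81, 5, 81)`, the statistic comes out at about 10.28 and 5.47, matching the values reported for monkeys A and B.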

Neuronal responses and selectivity.

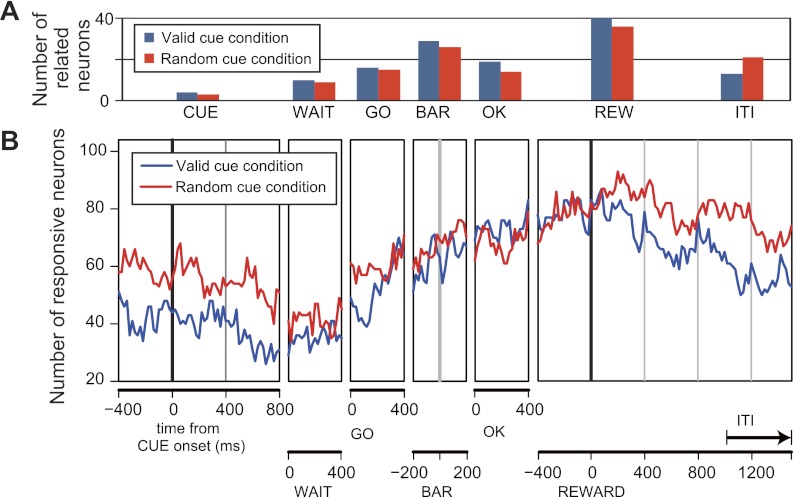

For 170 neurons (103 and 67 from monkeys A and B, respectively), single-unit isolation was maintained during both the valid and random cue conditions. A total of 131 neurons (81 and 50 from monkeys A and B, respectively) responded in relation to at least one task event (CUE, WAIT, GO, BAR, OK, REW, and ITI).

Across the population, in both the valid and random cue conditions, the strongest responses almost always occurred late in the trial (REW; Fig. 4A, χ2 = 47.6, df = 6, P ∼ 0), although many neurons did respond early in the trial (Fig. 4A). The total number of responding neurons was smallest (about 40) during the WAIT period and largest (over 70) just after the reward apparatus was activated (blue and red lines in Fig. 4B). The total number of responsive neurons decayed slowly during the ITI (Fig. 4B).

Fig. 4.

Number of responsive neurons. A: the number of event-related responses in the valid (blue bars) and random cue conditions (red bars) was plotted against task events. Abscissa labels the task events through the trial. CUE, WAIT, GO, BAR, OK, REW, and ITI correspond to the events of cue onset, wait signal, go signal, bar release, correct signal, reward apparatus activation, and intertrial interval, respectively. Ordinate indicates number of neurons. B: the number of significantly responsive neurons in every 100-ms window slid by 25 ms from the beginning to the end of trial. The firing rate of each schedule state within window was compared with the averaged activity calculated from the spike counts throughout all trials. Blue and red lines show the number of neurons in the valid and random cue conditions, respectively.

Reward expectation activity.

In the valid cue condition, 36 neurons (26 and 10 from monkeys A and B, respectively) showed a significant reward vs. no-reward difference in their responsive periods (ANOVA, see methods; Figs. 5A and 6A). The activity increased well before the rewarding event, showing that it was conditional on information provided by the cue, and ended after reward delivery. These same neurons also showed activity before the end of every schedule state in the random cue condition. Taken together, these two sets of observations suggest that the activity was associated with reward expectation (Figs. 5B and 6B).

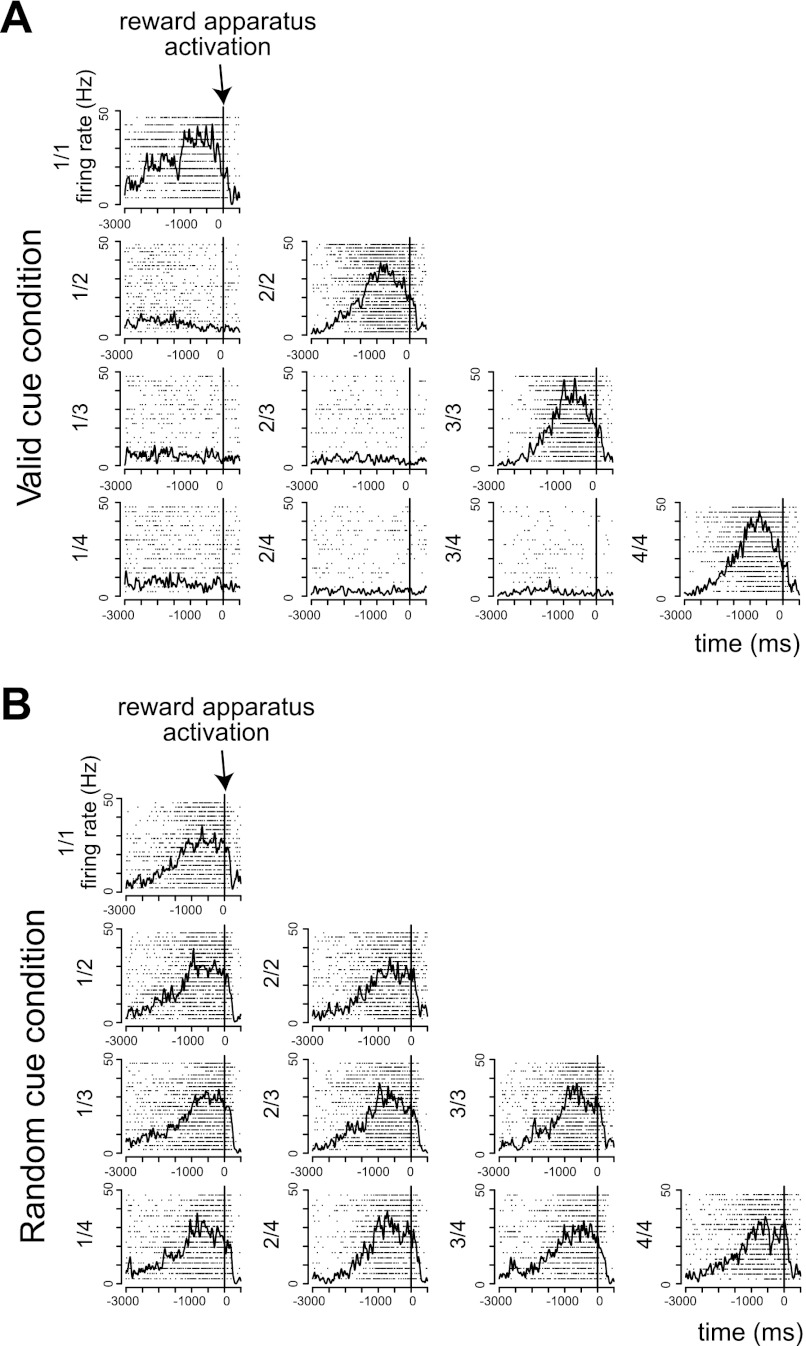

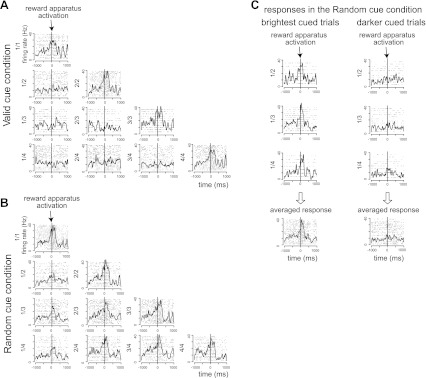

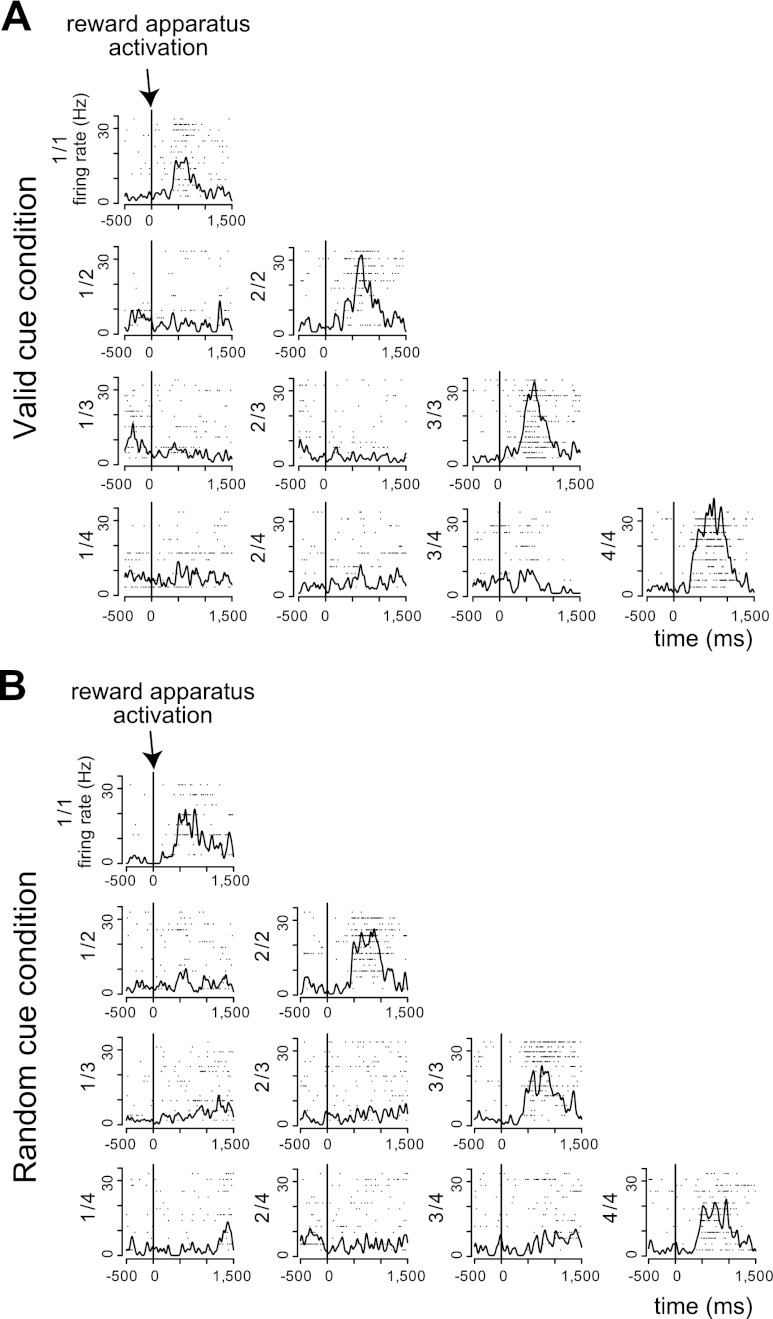

Fig. 5.

Example of reward expectation response. A: responses in the valid cue condition. B: responses in the random cue condition. Each plot shows the raster and spike density plot in each schedule state. Horizontal axes indicate the time from the activation of reward apparatus, and vertical axes indicate the firing rates. The activity of this neuron was greatest during the rewarded schedule states of the valid cue condition (1-way, 2-level ANOVA, F1,195 = 1,086.9, P = 0, r2 = 0.85). In the random cue condition, this neuron responded equally in all schedule states (1-way, 10-level ANOVA, F9,192 = 0.70, P = 0.49, r2 = 0.02).

Fig. 6.

Variance of reward expectation response. A: responses in the valid cue condition. B: responses in the random cue condition. Each plot shows the raster and spike density plot in each schedule state. Horizontal axes indicate the time from reward apparatus activation. This neuron responded in the rewarded schedule states in the valid cue condition. Although the neuron responded in all schedule states in the random cue condition, the responses in the period before reward delivery showed significant differences between first schedule states and the latter schedule states (1-way, 2-level ANOVA, F2,121 = 20.06, P = 1.25 × 10−5, r2 = 0.089). C: responses in first schedule states of the random cue condition sorted by cue brightness whether the cue was brightest or not. Left: responses for the brightest cued trials. These were indistinguishable from the responses in the rewarded schedule states of the valid cue condition (t-test, P = 0.15). Right: responses in the other, darker cued trials. These were indistinguishable from the responses for the unrewarded schedule states in the valid cue condition (t-test, P = 0.51). The cue brightness modulated the responses in the first schedule states in the random cue condition.

There appeared to be two patterns of response in the random cue condition. For 22 neurons, the activity in the random cue condition was about equal in all of the schedule states (Fig. 5B). For the other 14 neurons, the activity varied idiosyncratically across the schedule states (Fig. 6B). For these 14 neurons, we asked whether the differences in firing rate across schedule states in the random cue condition were related to the monkeys' knowledge about the schedule, as conveyed by the brightest/nonbrightest distinction in first schedule states seen in the behavior, where the brightest cue is the most uncertain condition. For this analysis, we sorted the activity in the 1/2, 1/3, and 1/4 schedule states of the random cue condition according to whether or not the cue was the brightest. Figure 6C shows that when the brightest cue appeared in the first schedule state of a multitrial schedule in the random cue condition, the activity was large and indistinguishable from the activity in the rewarded schedule states of the valid cue condition (t-test, P = 0.15). Thus, in the random cue condition, the neuronal activity is elicited when a reward is possible but not certain. When any other cue appeared, that is, a cue indicating that no reward was possible in the current trial, the activity was small and indistinguishable from the activity in the unrewarded schedule states of the valid cue condition (e.g., Fig. 6C vs. 6A, t-test, P = 0.51). It appears that when a nonbrightest cue appears as the first cue of a schedule in the random cue condition, the monkeys act as if they know that no reward is forthcoming, and these neurons seem to reflect that knowledge (a first trial with a nonbrightest cue cannot be rewarded).
This is consistent with the interpretation that the slight increase in error rates for nonbrightest first cues in the random cue condition reflects the monkey attending to the cue; that is, the monkeys behave best when they either know a reward will be forthcoming (brightest cue in the valid cue condition) or when the reward is stochastic (brightest cue as the first cue in the random cue condition, 1 in 4 chance of reward in the trial; see Behavioral performance above). It is puzzling why, in the random cue condition, some neurons seem to be sensitive to the difference between brightest-first and other cues, whereas other neurons are indifferent to cue brightness. Overall, these neurons seem to encode information about reward expectation in an all-or-none fashion, distinguishing between trials in which a reward is not possible (no response) and trials in which a reward is possible, an encoding that does not scale with the expected value.

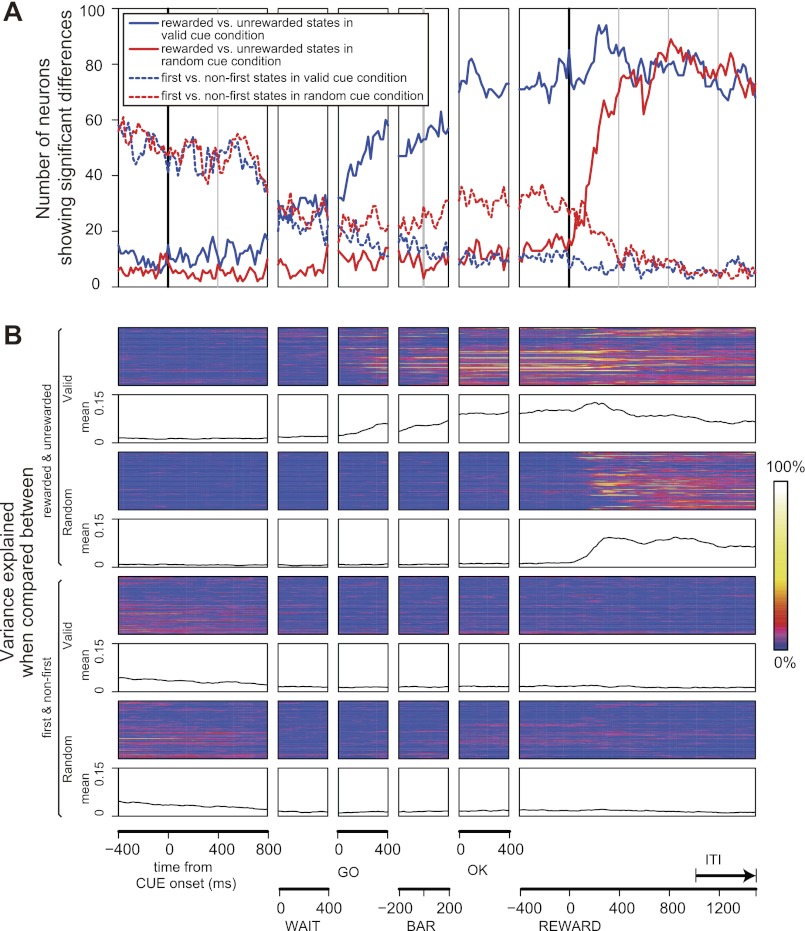

To investigate the population of responsive neurons further, for each of the 131 responsive neurons we characterized how the reward expectation signal changed over time, using a sliding-window one-way, two-level ANOVA (levels: reward, no reward; see methods). Figure 7A shows that the number of neurons with a significant signal was small at the beginning of trials, began to rise at the time of the green GO signal, and peaked at the time of the blue OK signal (solid blue line). The percentage of variance explained by this reward vs. no-reward ANOVA model was calculated throughout the trial for each neuron using the sliding windows. The dynamics of this variance analysis are represented for all 131 neurons as a color density scale in the first and third rows of Fig. 7B. The average amount of variance explained by the single-factor ANOVAs across all 131 neurons is shown in the second and fourth rows. The percentage of variance explained by the first vs. nonfirst ANOVA model is shown in the fifth and seventh rows, and the average amount of variance explained over time in the population is shown in the sixth and eighth rows of Fig. 7B. The activity distinguishing between the reward and no-reward conditions seems to carry through into the first schedule state of the next schedule (this is what the first vs. nonfirst factor detects, see methods; dashed lines in Fig. 7A and fifth to eighth rows in Fig. 7B). Overall, the population appears to be sensitive to reward contingency from the middle of the trial with the valid cues (Fig. 7A, solid blue line) but only at the reward with the random cues (Fig. 7A, solid red line), and in both cases this sensitivity seems to carry through the ITI and into the beginning of the next schedule.

Fig. 7.

A: number of neurons showing significant differences between rewarded vs. unrewarded schedule states (solid lines) and between first vs. nonfirst schedule states (dashed lines) throughout a trial. Blue and red lines are those in the valid cue and the random cue conditions, respectively. B: variance explained when comparing the spike counts between rewarded vs. unrewarded schedule states in the valid cue condition (1st and 2nd rows), rewarded vs. unrewarded schedule states in the random cue condition (3rd and 4th rows), first vs. nonfirst schedule states in the valid cue condition (5th and 6th rows), and first vs. nonfirst schedule states in the random cue condition (7th and 8th rows) throughout a trial.

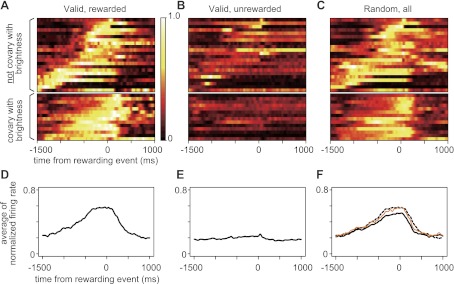

Figure 8 shows the normalized activity of the 36 neurons with significant reward expectation activity (as identified by the ANOVA analyses of firing in responsive periods) in the rewarded trials of the valid cue condition (Fig. 8A), in the unrewarded trials of the valid cue condition (Fig. 8B), and in all trials of the random cue condition (Fig. 8C), together with the averaged normalized firing rates of these 36 responses (Fig. 8, D–F). All 36 reward expectation neurons began to respond before either a certain (Fig. 8A) or a stochastic (Fig. 8C) reward, but they were never activated when it was certain that no reward would be available (Fig. 8B).

Fig. 8.

Normalized firing rate profile of reward expectation responses. A: normalized firing rates in rewarded schedule states in the valid cue condition. B: normalized firing rates in unrewarded schedule states in the valid cue condition. C: normalized firing rates in all schedule states in the random cue condition. Top panels of A–C represent the 22 responses that did not covary with the cue brightness, as illustrated in Fig. 5, whereas bottom panels of A–C represent the 14 responses that did covary with the cue brightness, as illustrated in Fig. 6. D: averaged normalized firing rate of all neurons in rewarded schedule states in the valid cue condition. E: averaged normalized firing rate of all neurons in unrewarded schedule states in the valid cue condition. F: averaged normalized firing rate of all neurons in all schedule states (black line) and in schedule states with the brightest cues (orange line) in the random cue condition, together with the superimposed (dotted) line from D.

The 14/36 reward expectation neurons that were sensitive to cue brightness in the random cue condition seemed to show weaker activity in that condition (bottom panel in Fig. 8C) than in the rewarded schedule states of the valid cue condition (bottom panel in Fig. 8A). This makes the population average slightly weaker in the random cue condition than in the rewarded schedule states of the valid cue condition (compare the dotted line, from the rewarded schedule states of the valid cue condition, with the solid black line, from the random cue condition, in Fig. 8F), because the random cue population activity includes the weaker activity in trials with darker cues. We therefore separately averaged the activity from random cue trials with the brightest cues; this average of normalized activity is overlaid as the orange line in Fig. 8F. Although this activity is still slightly weaker than the population activity in the rewarded schedule states of the valid cue condition, it is much closer to the dotted line than to the solid line.
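The population curves in Fig. 8, D–F, are averages of per-neuron normalized firing rates. The normalization actually used is described in the methods; the sketch below assumes, for illustration only, that each neuron is scaled by its peak trial-averaged rate across all conditions before averaging across neurons. The function names, condition names, and toy rates are hypothetical and are not data from this study.

```python
import numpy as np


def normalize_per_neuron(rates_by_condition):
    """Divide each neuron's firing-rate profiles by its peak rate across all conditions.

    rates_by_condition maps a condition name to an (n_neurons, n_bins) array of
    trial-averaged firing rates (spikes/s). Peak normalization is an assumption.
    """
    stacked = np.concatenate(list(rates_by_condition.values()), axis=1)
    peaks = stacked.max(axis=1, keepdims=True).astype(float)
    peaks[peaks == 0] = 1.0  # silent neurons stay at zero instead of dividing by zero
    return {name: rates / peaks for name, rates in rates_by_condition.items()}


def population_average(normalized):
    """Mean normalized profile across neurons, one curve per condition."""
    return {name: rates.mean(axis=0) for name, rates in normalized.items()}


# Toy usage with made-up rates for two neurons and three time bins.
rates = {
    "valid_rewarded": np.array([[0.0, 10.0, 20.0], [0.0, 5.0, 5.0]]),
    "random_all": np.array([[0.0, 5.0, 10.0], [0.0, 2.0, 4.0]]),
    "random_brightest": np.array([[0.0, 8.0, 16.0], [0.0, 4.0, 5.0]]),
}
population = population_average(normalize_per_neuron(rates))
```

Because the brightest-cue random trials are simply another subset of trials, they enter as one more condition, which is one way an overlay like the orange line in Fig. 8F could be produced.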

Reward delivery activity.

Thirteen neurons (9 and 4 neurons from monkeys A and B, respectively) showed significantly different responses in the rewarded and unrewarded schedule states after reward apparatus activation in both the valid and random cue conditions (Fig. 9). For these neurons, a one-way, two-level ANOVA for reward vs. no reward explained on average 0.48 and 0.40 of the variance in the valid and random cue conditions, respectively. All 13 neurons showed larger responses after the onset of reward delivery. Because most of these neurons were located within dysgranular insular cortex (illustrated in Fig. 2B), where gustation-related responses have been reported (Yaxley et al. 1990), their responses initially seemed likely to be related to the reward itself and/or to licking movements or taste. However, although the monkeys moved their mouths at the end of every trial in the random cue condition, these neuronal responses were observed only in the rewarded schedule states of that condition. We do not have the data to determine whether these neuronal activities represent taste. Nonetheless, we can conclude that these responses were related to the reward, not to the mouth movements.

Fig. 9.

Example of reward delivery response. A: responses in the valid cue condition. B: responses in the random cue condition (1-way, 2-level ANOVA, F1,131 = 63.97, P < 0.001, r² = 0.33 and F1,141 = 58.5, P < 0.001, r² = 0.29 for the valid and random cue conditions, respectively). Horizontal axes indicate the time from reward apparatus activation, and vertical axes indicate the firing rates. The responses appeared several hundred milliseconds after the onset of reward apparatus activation in either condition. These neurons appear to respond to the reward itself.
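For a one-way ANOVA, F = (SS_between/df1)/(SS_within/df2), so the fraction of variance explained can be recovered from a reported statistic as r² = F·df1/(F·df1 + df2). The short check below uses only the statistics reported in the Fig. 9 and Fig. 10 legends and shows that the reported F and r² values are mutually consistent; the helper name is hypothetical.

```python
def r_squared_from_f(f_stat, df_between, df_within):
    """Fraction of variance explained implied by a one-way ANOVA F statistic.

    From F = (SS_between / df_between) / (SS_within / df_within) it follows that
    r^2 = SS_between / (SS_between + SS_within) = F*df1 / (F*df1 + df2).
    """
    return f_stat * df_between / (f_stat * df_between + df_within)


# Statistics reported in the Fig. 9 and Fig. 10 legends.
print(round(r_squared_from_f(63.97, 1, 131), 2))   # 0.33 (valid cue, Fig. 9)
print(round(r_squared_from_f(58.5, 1, 141), 2))    # 0.29 (random cue, Fig. 9)
print(round(r_squared_from_f(1.57, 19, 379), 2))   # 0.07 (Fig. 10 example)
```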

All schedule states responses.

Another 24/131 neurons (8 and 16 neurons from monkeys A and B, respectively) showed responses in every schedule state of both the valid and random cue conditions. Some cells responded to behavioral cueing events (CUE, 2 neurons; WAIT, 3 neurons; GO, 2 neurons). A greater number of neurons (14) were responsive to the BAR event. No cells responded significantly to the OK or ITI events. The remaining four neurons responded to the REW event. Figure 10 shows an example of a neuron that responded around the time of bar release but whose activity did not differ across schedule states (1-way, 20-level ANOVA, F19,379 = 1.57, P = 0.06 in both conditions).

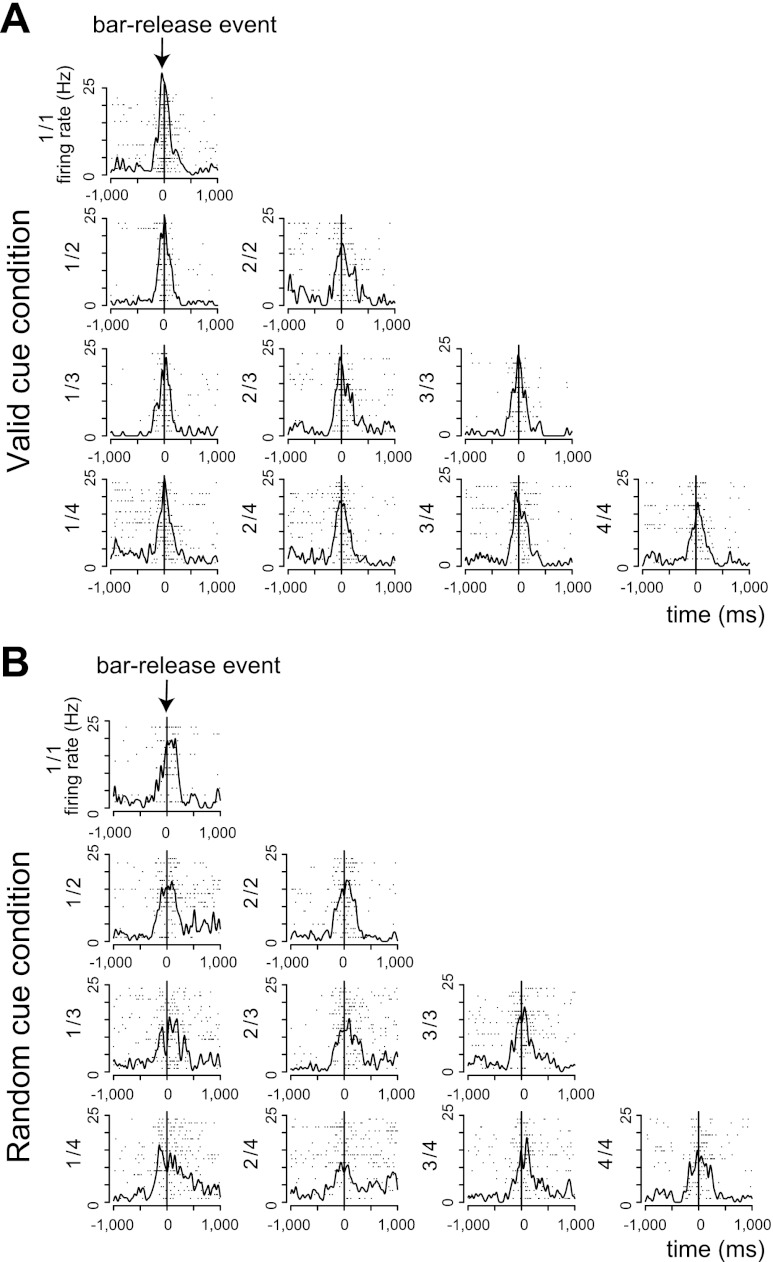

Fig. 10.

Example of all schedule state response. A: responses in the valid cue condition. B: responses in the random cue condition. Horizontal axes indicate the time from bar release, and vertical axes indicate the firing rates. This neuron showed phasic responses around the onset of bar release. This neuron did not distinguish among schedule states (1-way, 20-level ANOVA, F19,379 = 1.57, P = 0.06 in both conditions).

Others.

The remaining 58 neurons did not fall into any of the preceding three groups (Table 1). Of these, six neurons responded after the monkeys seemed to notice that the reward had not been delivered. Twenty-five neurons showed responses in idiosyncratic combinations of one or more schedule states; they were better explained by the single-factor, 10-level ANOVA than by either of the single-factor, 2-level ANOVAs (see methods). Seven neurons responded only in the valid cue condition. Another seven neurons were sensitive only to cue brightness in both the valid and random cue conditions. Eight neurons showed graded activity; that is, their firing rate changed gradually with schedule progress, as found in the anterior cingulate cortex (graded response in Shidara and Richmond 2002). Finally, five neurons responded in all trials, but their responses were depressed in the latter half of every trial.
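Deciding that a 10-level model explains a neuron "better" than a 2-level model requires penalizing the extra parameters, because both 2-level groupings (reward vs. no reward, first vs. nonfirst) are coarsenings of the 10 schedule states and so can never fit better. The criterion actually used is given in the methods; purely as an assumption, the sketch below compares the three single-factor models with the Akaike information criterion (AIC) for Gaussian cell-means models. The grouping rules follow the text (the last trial of each schedule is rewarded; first states are trial 1), but all names are hypothetical.

```python
import numpy as np

# Ten schedule states as (trial number, schedule length): 1/1, 1/2, 2/2, ..., 4/4.
STATES = [(t, n) for n in range(1, 5) for t in range(1, n + 1)]

# Candidate single-factor models, each defined by how it labels a schedule state.
GROUPINGS = {
    "reward vs. no reward": lambda t, n: t == n,     # last trial of a schedule is rewarded
    "first vs. nonfirst": lambda t, n: t == 1,
    "10-level schedule state": lambda t, n: (t, n),  # every state is its own level
}


def gaussian_aic(counts, labels):
    """AIC of a one-way cell-means Gaussian model: n*ln(RSS/n) + 2k (constants dropped)."""
    counts = np.asarray(counts, dtype=float)
    levels = set(labels)
    rss = 0.0
    for level in levels:
        mask = np.array([lab == level for lab in labels])
        group = counts[mask]
        rss += float(((group - group.mean()) ** 2).sum())
    n = len(counts)
    k = len(levels) + 1  # one mean per level plus the shared residual variance
    # Floor the RSS so that a perfect fit does not produce log(0).
    return n * np.log(max(rss, 1e-12) / n) + 2 * k


def best_grouping(counts, states):
    """Return (name of lowest-AIC grouping, all AIC scores).

    counts[i] is the spike count from one trial recorded in schedule state states[i].
    """
    scores = {}
    for name, rule in GROUPINGS.items():
        labels = [rule(t, n) for t, n in states]
        scores[name] = gaussian_aic(counts, labels)
    return min(scores, key=scores.get), scores
```

With this criterion, a neuron whose counts differ only between rewarded and unrewarded states is assigned to the 2-level reward model, whereas a neuron that fires in an isolated state such as 2/3 is assigned to the 10-level model, matching the "idiosyncratic" category.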

Table 1.

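Responsive schedule states of the 58 neurons in the “others” group. Schedule states are denoted trial/schedule length (e.g., 2/3 is the second trial of a 3-trial schedule); + marks a state in which the neuron responded.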

| Response type | No. of neurons | Valid 1/1 | Valid 1/2 | Valid 2/2 | Valid 1/3 | Valid 2/3 | Valid 3/3 | Valid 1/4 | Valid 2/4 | Valid 3/4 | Valid 4/4 | Random 1/1 | Random 1/2 | Random 2/2 | Random 1/3 | Random 2/3 | Random 3/3 | Random 1/4 | Random 2/4 | Random 3/4 | Random 4/4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| No reward | 6 | + | + | + | + | + | + | + | + | + | + | + | + | ||||||||

| Idiosyncratic responses | 3 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | ||||||

| 3 | + | + | + | + | + | + | + | + | |||||||||||||

| 2 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||

| 2 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |

| 2 | + | + | + | + | + | + | + | + | + | + | |||||||||||

| 2 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||||

| 1 | + | + | + | + | + | + | |||||||||||||||

| 1 | + | + | + | + | |||||||||||||||||

| 1 | + | + | + | + | + | ||||||||||||||||

| 1 | + | + | + | + | |||||||||||||||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | ||||||||||

| 1 | + | + | + | + | + | + | + | + | + | + | |||||||||||

| Responded only in valid cue condition | 1 | + | |||||||||||||||||||

| 1 | + | + | + | + | |||||||||||||||||

| 1 | + | + | + | ||||||||||||||||||

| 1 | + | + | |||||||||||||||||||

| 1 | + | + | + | + | + | + | + | + | + | + | |||||||||||

| 1 | + | + | + | ||||||||||||||||||

| 1 | + | + | + | + | |||||||||||||||||

| Graded responses as found in anterior cingulate | 4 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + |

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||||

| 1 | + | + | + | + | + | + | + | + | + | + | |||||||||||

| 1 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | |||||||

| Cue brightness sensitive | 7 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | ||||||

| Depressed in latter half of every trial | 5 | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + | + |

Data are the responsive schedule states of the 58 neurons placed in the “others” group because they could not be classified as reward expectation, reward delivery, or all schedule state responses.

DISCUSSION

We found four classes of responsive neurons in these experiments. Two were similar to neurons seen previously with this task in other brain regions: idiosyncratic (Liu and Richmond 2000; Shidara et al. 1998) and graded (Shidara and Richmond 2002). A third class, related to reward delivery, was similar to those reported in the past (Ohgushi et al. 2005; Yaxley et al. 1990), so we did not investigate these reward delivery neurons intensively. The last class of responsive neurons in the anterior insula was related to reward expectation. These neurons are activated in anticipation of a predicted reward, whether reward delivery is certain or stochastic, but they are not activated when it is certain that the current trial will not be rewarded, even though the trial is embedded within a schedule of trials that the monkeys know will lead to a reward.

Two groups of previous physiological findings bear on our results: single-neuron recordings in monkeys and fMRI measurements in human subjects. Asahi et al. (2006) found a large class of neurons in the insula (a proportion of responsive neurons similar to what we found) that are activated when a visual cue predicts that a reward will be forthcoming in either visually cued go or no-go trials but that are not activated in a cued, that is, predicted, unrewarded trial. The area from which they recorded included the granular part and the posterior half of the dysgranular part of the insular cortex in Macaca fuscata. This tissue at least partly overlaps our recording area (a precise comparison of their recording locations with ours is complicated by the difference in brain size between M. mulatta and M. fuscata). Their experimental situation corresponds to our valid cue condition, and their findings are consistent with ours in that condition. Our results extend theirs through our observations in the random cue condition, which reveal that these same neurons, which are activated only in rewarded trials in the valid cue condition, are activated in every trial when reward delivery is determined stochastically with respect to the individual trials.

In the domain of human functional imaging, our results in the random cue condition are consistent with several reports. These fMRI studies generally revealed that insular activation strength was related to gambling or other tasks with uncertain outcomes (Clark et al. 2009; Critchley et al. 2001; Hsu et al. 2005; Kuhnen and Knutson 2005; Li et al. 2010; Paulus et al. 2003; Preuschoff et al. 2008; Singer et al. 2009; Xue et al. 2010). Specifically, some of these studies related activation strength to gambling (Paulus et al. 2003), ambiguity (supplementary data in Hsu et al. 2005), uncertainty (Critchley et al. 2001), and/or risk (Preuschoff et al. 2008; Xue et al. 2010). However, our results do not seem explicable by reward prediction error, gambling, ambiguity, or risk, as has been proposed in some of these fMRI studies. If the reward expectation neurons were encoding reward prediction error or risk as suggested (Seymour et al. 2004; Preuschoff et al. 2008), we would not have expected to find much signal in the completely predicted rewarded trials of the valid cue condition, where the uncertainty, and therefore the reward prediction error, should have been small. In addition, at the one time when there is uncertainty in the valid cue condition, that is, at the beginning of the first trial of a schedule, these neurons do not respond. As for the interpretation that these neurons encode some correlate of risk, we did see activations in all trials of the random cue condition, where the outcome might be considered risky. The random cue condition is the closest to the risk conditions used in the functional imaging experiments, and the single-neuron activations reported here are consistent with the imaging results. However, the neurons gave equivalently strong activations in the rewarded trials of the valid cue condition, where the reward is certain and the risk is therefore zero, making it seem unlikely that risk is encoded.
Our interpretation of these activations is that they occur in trials when the monkey can expect that a reward might or will be delivered, although their timing, relatively late in trials, makes them poor candidates for instantiating the overall drive to perform from the beginning of trials or schedules.
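The argument above can be made concrete by treating reward as a 0/1 outcome delivered with probability p. Risk, taken here as the outcome variance p(1 − p), and the outcome prediction error r − p both vanish when p is 0 or 1, whereas the expected reward p is positive whenever a reward is possible. The sketch below tabulates these quantities for the trial types of the task. The probabilities are illustrative assumptions: in particular, 0.4 for random cue trials assumes that schedules of 1 to 4 trials are equally frequent and that trial position is ignored (4 of every 10 trials end in reward), and defining risk as Bernoulli variance is one common convention, not necessarily the model of the cited studies.

```python
def outcome_statistics(p_reward, rewarded):
    """Expected reward, risk, and outcome prediction error for a 0/1 reward.

    Risk is taken as the Bernoulli outcome variance p(1 - p); the prediction
    error is the delivered reward minus its expectation, evaluated at the outcome.
    """
    expected = p_reward
    risk = p_reward * (1.0 - p_reward)
    prediction_error = (1.0 if rewarded else 0.0) - p_reward
    return expected, risk, prediction_error


# Hypothetical reward probabilities per trial type (0.4 is an assumption, see text).
TRIAL_TYPES = {
    "valid cue, rewarded trial": (1.0, True),
    "valid cue, unrewarded trial": (0.0, False),
    "random cue, rewarded trial": (0.4, True),
    "random cue, unrewarded trial": (0.4, False),
}

for label, (p, rewarded) in TRIAL_TYPES.items():
    expected, risk, rpe = outcome_statistics(p, rewarded)
    print(f"{label:30s} E[r]={expected:.2f} risk={risk:.2f} RPE={rpe:+.2f}")
```

Under these assumptions, risk and prediction error are zero in the valid cue rewarded trials that evoke strong activation, whereas expected reward is positive in exactly the trial types in which the reward expectation neurons fire (valid cue rewarded trials and all random cue trials) and zero in the valid cue unrewarded trials in which they are silent.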

The reward expectation signal emphasized here is unlike those identified elsewhere using the reward schedule task. Of the brain regions from which we have recorded, the anterior insula is the only one showing a substantial number of responses in all states of the random cue condition. In other brain regions, single neurons are activated at different points (idiosyncratic patterns of schedule states) in the valid cue condition (ventral striatum and perirhinal cortex) (Bowman et al. 1996; Liu and Richmond 2000; Shidara et al. 1998), as the reward approaches (anterior cingulate) (Shidara and Richmond 2002), or at the beginning of trials or schedules (locus coeruleus and amygdala) (Bouret and Richmond 2009; Sugase-Miyamoto and Richmond 2005). Unlike the reward expectation neurons described here, these classes respond only in the valid cue condition. This again emphasizes the uniqueness of the reward expectation neurons reported here, which have robust activations in the random cue condition. Dopamine neurons have more transient responses in the reward schedule task that occur at many points through the trials (Ravel and Richmond 2006), perhaps signaling salient events (Berridge and Robinson 2003; Kapur 2003).

The dynamics of the signals that we observed suggest that the insula carries strong signals related to knowing when a reward is coming (or, in the random cue condition, has come). This signal arises because the reward expectation neurons seem to encode the difference between the expectation of a reward and the knowledge that no reward is forthcoming. It is possible that the signal we have seen in these neurons is related to arousal. However, the activity in the random cue condition changes immediately upon the discovery that no reward is forthcoming, a time when it seems reasonable to expect the monkey to be aroused (cf. Fig. 7, the responses after reward delivery in the random cue condition). Thus we do not favor this interpretation. Overall, our findings seem consistent with the view that the anterior insular cortex is a site that represents reward expectation. What is new here is that this expectation occurs when the monkey knows it will or might receive a reward as a consequence of the immediately forthcoming action. The reward expectation neurons are likely to be part of the neural circuits giving rise to reward expectation, and in that role they seem likely to be important for combining interoceptive signals for impending stimuli (Lovero et al. 2009) with the urge for addictive substances (Kilts et al. 2001; Naqvi and Bechara 2010) and trait optimism (Sharot et al. 2011).

GRANTS

The work was supported by a Grant-in-Aid for Scientific Research on Priority Areas—System Study on Higher-Order Brain Functions (17022052) from the Ministry of Education, Culture, Sports, Science, and Technology of Japan; Grant-in-Aid for Young Scientists (B) (23700701) from the Japan Society for the Promotion of Science (JSPS), 21st Century Center of Excellence Program/JSPS, and the National Institute of Advanced Industrial Science and Technology of Japan. B. J. Richmond is supported by the U.S. National Institute of Mental Health intramural program. We thank JSPS for providing support for B. J. Richmond in Japan.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

Author contributions: T.M., B.J.R., and M.S. conception and design of research; T.M. performed experiments; T.M. analyzed data; T.M., B.J.R., and M.S. interpreted results of experiments; T.M. prepared figures; T.M., B.J.R., and M.S. drafted manuscript; T.M., B.J.R., and M.S. edited and revised manuscript; T.M., B.J.R., and M.S. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Dr. Janine Simmons for very helpful discussions about this manuscript.

REFERENCES

- Abeles M, Goldstein MH. Multiple spike train analysis. Proc IEEE 65: 762–773, 1977.

- Asahi T, Uwano T, Eifuku S, Tamura R, Endo S, Ono T, Nishijo H. Neuronal responses to a delayed-response delayed-reward GO/NOGO task in the monkey posterior insular cortex. Neuroscience 143: 627–639, 2006.

- Augustine JR. Circuitry and functional aspects of the insular lobe in primates including humans. Brain Res Rev 22: 229–244, 1996.

- Berridge KC, Robinson TE. Parsing reward. Trends Neurosci 26: 507–513, 2003.

- Bouret S, Richmond BJ. Relation of locus coeruleus neurons in monkeys to Pavlovian and operant behaviors. J Neurophysiol 101: 898–911, 2009.

- Bowman EM, Aigner TG, Richmond BJ. Neural signals in the monkey ventral striatum related to motivation for juice and cocaine rewards. J Neurophysiol 75: 1061–1073, 1996.

- Chikama M, McFarland NR, Amaral DG, Haber SN. Insular cortical projections to functional regions of the striatum correlate with cortical cytoarchitectonic organization in the primate. J Neurosci 17: 9686–9705, 1997.

- Clark L, Lawrence AJ, Astley-Jones F, Gray N. Gambling near-misses enhance motivation to gamble and recruit win-related brain circuitry. Neuron 61: 481–490, 2009.

- Craig AD. How do you feel? Interoception: the sense of the physiological condition of the body. Nat Rev Neurosci 3: 655–666, 2002.

- Craig AD. How do you feel—now? The anterior insula and human awareness. Nat Rev Neurosci 10: 59–70, 2009.

- Critchley HD, Mathias CJ, Dolan RJ. Neural activity in the human brain relating to uncertainty and arousal during anticipation. Neuron 29: 537–545, 2001.

- Filbey FM, Schacht JP, Myers US, Chavez RS, Hutchison KE. Marijuana craving in the brain. Proc Natl Acad Sci USA 106: 13016–13021, 2009.

- Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34: 418–432, 2009.

- Garavan H. Insula and drug cravings. Brain Struct Funct 214: 593–601, 2010.

- Gawne TJ, Richmond BJ. How independent are the messages carried by adjacent inferior temporal cortical neurons? J Neurosci 13: 2758–2771, 1993.

- Hays AV, Richmond BJ, Optican LM. A UNIX-based multiple process system for real-time data acquisition and control. In: WESCON Conference Record, 1982. Ventura, CA: Western Periodicals, 1982, vol. 2, p. 1–10.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science 310: 1680–1683, 2005.

- Ibañez A, Gleichgerrcht E, Manes F. Clinical effects of insular damage in humans. Brain Struct Funct 214: 397–410, 2010.

- Izuma K, Saito DN, Sadato N. Processing of social and monetary rewards in the human striatum. Neuron 58: 284–294, 2008.

- Jabbi M, Swart M, Keysers C. Empathy for positive and negative emotions in the gustatory cortex. Neuroimage 34: 1744–1753, 2007.

- Jones CL, Ward J, Critchley HD. The neuropsychological impact of insular cortex lesions. J Neurol Neurosurg Psychiatry 81: 611–618, 2010.

- Kapur S. Psychosis as a state of aberrant salience: a framework linking biology, phenomenology, and pharmacology in schizophrenia. Am J Psychiatry 160: 13–23, 2003.

- Kilts CD, Schweitzer JB, Quinn CK, Gross RE, Faber TL, Muhammad F, Ely TD, Hoffman JM, Drexler KP. Neural activity related to drug craving in cocaine addiction. Arch Gen Psychiatry 58: 334–341, 2001.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. Neuroimage 42: 998–1031, 2008.

- Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron 47: 763–770, 2005.

- La Camera G, Richmond BJ. Modeling the violation of reward maximization and invariance in reinforcement schedules. PLoS Comput Biol 4: e1000131, 2008.

- Lamm C, Singer T. The role of anterior insular cortex in social emotions. Brain Struct Funct 214: 579–591, 2010.

- Li X, Lu ZL, D'Argembeau A, Ng M, Bechara A. The Iowa Gambling Task in fMRI images. Hum Brain Mapp 31: 410–423, 2010.

- Liu Z, Richmond BJ. Response differences in monkey TE and perirhinal cortex: stimulus association related to reward schedules. J Neurophysiol 83: 1677–1692, 2000.

- Lovero KL, Simmons AN, Aron JL, Paulus MP. Anterior insular cortex anticipates impending stimulus significance. Neuroimage 45: 976–983, 2009.

- Mesulam MM, Mufson EJ. Insula of the old world monkey. III: Efferent cortical output and comments on function. J Comp Neurol 212: 38–52, 1982.

- Naqvi NH, Bechara A. The insula and drug addiction: an interoceptive view of pleasure, urges, and decision-making. Brain Struct Funct 214: 435–450, 2010.

- Naqvi NH, Rudrauf D, Damasio H, Bechara A. Damage to the insula disrupts addiction to cigarette smoking. Science 315: 531–534, 2007.

- Ohgushi M, Ifuku H, Ito S, Ogawa H. Response properties of neurons to sucrose in the reward phase and the areal distribution in the monkey fronto-operculuro-insular and prefrontal cortices during a taste discrimination GO/NOGO task. Neurosci Res 51: 253–263, 2005.

- Paulus MP, Rogalsky C, Simmons A, Feinstein JS, Stein MB. Increased activation in the right insula during risk-taking decision making is related to harm avoidance and neuroticism. Neuroimage 19: 1439–1448, 2003.

- Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci 28: 2745–2752, 2008.

- Ravel S, Richmond BJ. Dopamine neuronal responses in monkeys performing visually cued reward schedules. Eur J Neurosci 24: 277–290, 2006.

- Saunders RC, Aigner TG, Frank JA. Magnetic resonance imaging of rhesus monkey brain: use for stereotactic neurosurgery. Exp Brain Res 81: 443–446, 1990.

- Seymour B, O'Doherty JP, Dayan P, Koltzenburg M, Jones AK, Dolan RJ, Friston KJ, Frackowiak RS. Temporal difference models describe higher-order learning in humans. Nature 429: 664–667, 2004.

- Sharot T, Korn CW, Dolan RJ. How unrealistic optimism is maintained in the face of reality. Nat Neurosci 14: 1475–1479, 2011.

- Shidara M, Aigner TG, Richmond BJ. Neuronal signals in the monkey ventral striatum related to progress through a predictable series of trials. J Neurosci 18: 2613–2625, 1998.

- Shidara M, Richmond BJ. Anterior cingulate: single neuronal signals related to degree of reward expectancy. Science 296: 1709–1711, 2002.

- Simmons JM, Richmond BJ. Dynamic changes in representations of preceding and upcoming reward in monkey orbitofrontal cortex. Cereb Cortex 18: 93–103, 2008.

- Simon SA, de Araujo IE, Gutierrez R, Nicolelis MA. The neural mechanisms of gustation: a distributed processing code. Nat Rev Neurosci 7: 890–901, 2006.

- Singer T, Critchley HD, Preuschoff K. A common role of insula in feelings, empathy and uncertainty. Trends Cogn Sci 13: 334–340, 2009.

- Sugase-Miyamoto Y, Richmond BJ. Neuronal signals in the monkey basolateral amygdala during reward schedules. J Neurosci 25: 11071–11083, 2005.

- Venables WN, Ripley BD. Modern applied statistics with S. New York: Springer, 2002.

- Xue G, Lu Z, Levin IP, Bechara A. The impact of prior risk experiences on subsequent risky decision-making: the role of the insula. Neuroimage 50: 709–716, 2010.

- Yaxley S, Rolls ET, Sienkiewicz ZJ. Gustatory responses of single neurons in the insula of the macaque monkey. J Neurophysiol 63: 689–700, 1990.

- Zhang ZH, Dougherty PM, Oppenheimer SM. Monkey insular cortex neurons respond to baroreceptive and somatosensory convergent inputs. Neuroscience 94: 351–360, 1999.