Abstract

Cerebral organization during sentence processing in English and in American Sign Language (ASL) was characterized by employing functional magnetic resonance imaging (fMRI) at 4 T. Effects of deafness, age of language acquisition, and bilingualism were assessed by comparing results from (i) normally hearing, monolingual, native speakers of English, (ii) congenitally, genetically deaf, native signers of ASL who learned English late and through the visual modality, and (iii) normally hearing bilinguals who were native signers of ASL and speakers of English. All groups, hearing and deaf, processing their native language, English or ASL, displayed strong and repeated activation within classical language areas of the left hemisphere. Deaf subjects reading English did not display activation in these regions. These results suggest that the early acquisition of a natural language is important in the expression of the strong bias for these areas to mediate language, independently of the form of the language. In addition, native signers, hearing and deaf, displayed extensive activation of homologous areas within the right hemisphere, indicating that the specific processing requirements of the language also in part determine the organization of the language systems of the brain.

The development and accessibility of neuroimaging techniques continue to permit detailed characterization of the neural systems active during perception and cognition in the intact human brain. Research along these lines reveals the adult human brain as a highly differentiated amalgam of systems and subsystems, each specialized to process specific and different kinds of information. A central issue is how these highly specialized systems arise in human development, the degree to which they are biologically constrained, and the extent to which they depend on and can be modified by input from the environment. Extensive research at many levels of analysis has documented that, within the domain of sensory processing, strong biases constrain development, but many aspects of sensory organization can adapt and reorganize after both increases and decreases in sensory input (1–13). For example, in humans who have sustained auditory deprivation since birth some aspects of visual processing are unchanged whereas the processing of motion is enhanced and reorganized (10, 14, 15).

It is likely that the development of the neural systems important for higher cognitive functions, including language, is also guided by strong biases but that some of these are modifiable by experience, within limits. Several lines of evidence suggest that cerebral organization for a language depends on the age of acquisition of the language, the ultimate proficiency in the language, whether an individual learned more than one language, and the degree of similarity between the languages learned (16–21). Behavioral studies suggest that early exposure to language is necessary for complete linguistic proficiency, and several different types of evidence raise the hypothesis that early exposure to a language may be necessary for the hallmark specialization of the left hemisphere for language (19, 22–24). Little is known about which aspect(s) of early language experience may be important in this development. It has been proposed that the demands of processing rapidly changing acoustic spectra are a key factor, whereas other evidence suggests that the grammatical recoding of information may be central in the differentiation of the left hemisphere for language (25–27). The effects of language structure and modality on neural development can provide evidence on these proposals.

Comparison of cerebral organization in native speakers who are and are not also native users of American Sign Language (ASL) and of native signers who are and are not also native users of English permits a unique perspective on these issues. Studies of the effects of brain damage in early learners of ASL suggest that sound-based processing of language may not be necessary for the specialization of the left hemisphere (28–30). Electrophysiological and lesion-based studies provide limited and apparently contradictory evidence on the proposal that the right hemisphere may play a role in processing ASL (28–32). Different lines of evidence suggest that areas within the right hemisphere may be included in the language system when the perception and/or production of the language depend on spatial contrasts. Additionally, several studies suggest that bilingualism is associated with a different cerebral organization than monolingualism, but there is very little evidence on the effects of sign/spoken bilingualism on the development of the language systems of the brain.

To address these issues we employed fMRI to compare cerebral organization in three groups of individuals with different language experience: (i) normally hearing, monolingual, native speakers of English who did not know any ASL; (ii) congenitally, genetically deaf individuals who learned English late and imperfectly and without auditory input, and whose native language was ASL, a language that makes use of spatial location and motion of the hands in grammatical encoding of linguistic information (33); and (iii) normally hearing, bilingual subjects who were born to deaf parents and who acquired both ASL and English as native languages (hearing native signers).

METHODS

Subjects.

All subjects were right-handed, healthy adults (see Table 1).

Table 1.

Demographic and behavioral data for the three subject groups

| | Hearing | Deaf | Hearing native signers |
|---|---|---|---|
| English exposure | Birth | School age | Birth |
| English proficiency | Native | Moderate* | Native |
| ASL exposure | None | Birth | Birth |
| ASL proficiency | None | Native | Native |
| Hearing | Normal | Profound deafness | Normal |
| Mean age | 26 | 23 | 35 |
| Handedness | Right | Right | Right |
| Performance on fMRI task, percent correct | | | |
| English: sentences | 85 | 85 | 80 |
| English: consonant strings | 52 | 56 | 55 |
| ASL: sentences | 56 | 92 | 92 |
| ASL: nonsigns | 51 | 62 | 60 |
| Numbers of subjects | | | |
| English: left hemisphere | 8 | 11 | 7 |
| English: right hemisphere | 8 | 12 | 9 |
| ASL: left hemisphere | 8 | 11 | 8 |
| ASL: right hemisphere | 8 | 12 | 10 |

*Range of errors on different subtests of Grammaticality Test of English: 6–26%.

Experimental Design/Stimulus Material.

Each population was scanned by using functional magnetic resonance imaging while processing sentences in English and in ASL. The English runs consisted of alternating blocks of simple declarative sentences (read silently) and consonant strings, all presented one word/string at a time (600 msec/item) in the center of a screen at the foot of the magnet. The ASL runs consisted of film of a native deaf signer producing sentences in ASL or nonsign gestures that were physically similar to ASL signs. The material was presented in four different runs (two of English and two of ASL—presentation counterbalanced across subjects). Each run consisted of four cycles of alternating 32-sec blocks of sentences (English or ASL) and baseline (consonant strings or nonsigns). None of the stimuli were repeated. Subjects had a practice run of ASL and of English to become familiar with the task and the nature of the stimuli.
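The block timing described above can be written out as a simple on/off reference vector. The following is a minimal sketch, not the authors' code: the 4-sec repetition time is taken from the MR Scans section, while the function name `boxcar_reference` and the assumption that a run starts with a sentence block are illustrative only.

```python
# Sketch of the run timing: 4 cycles of alternating 32-sec blocks.
# TR comes from the MR Scans section; which condition opens a run is an
# assumption made here for illustration.
TR_SEC = 4        # repetition time in seconds
BLOCK_SEC = 32    # duration of each sentence or baseline block
CYCLES = 4        # sentence + baseline cycles per run


def boxcar_reference(start_with_sentences=True):
    """Return one value per volume: 1 for sentence blocks, 0 for baseline."""
    vols_per_block = BLOCK_SEC // TR_SEC
    first, second = (1, 0) if start_with_sentences else (0, 1)
    one_cycle = [first] * vols_per_block + [second] * vols_per_block
    return one_cycle * CYCLES


reference = boxcar_reference()
print(len(reference))            # 64 volumes per run
print(len(reference) * TR_SEC)   # 256 seconds per run
```

With these values a run contains 64 volumes, which matches the 64 time points per image reported for the scans.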

Behavioral Tests.

At the end of each run, subjects answered yes/no recognition questions, indicating whether or not specific sentences and nonword/nonsign strings had been presented, to ensure attention to the experimental stimuli (see Table 1). ANOVAs were performed on the percent-correct recognition. Deaf subjects also took 10 subtests of the Grammaticality Judgment Test (34) to assess knowledge of English grammar (see Table 1).

MR Scans.

Gradient-echo echo-planar images were obtained by using a 4-T whole body MR system, fitted with a removable z-axis head gradient coil (35). Eight parasagittal slices, positioned from the lateral surface of the brain to a depth of 40 mm, were collected (TR = 4 sec, TE = 28 ms, resolution 2.5 mm × 2.5 mm × 5 mm, 64 time points per image). For each of the subjects, only one hemisphere was imaged in a given session because a 20-cm diameter transmit/receive radio-frequency surface coil was used to minimize rf interaction with the head gradient coil. The surface coil had a region of high sensitivity that was limited to a single hemisphere.

MR Analysis.

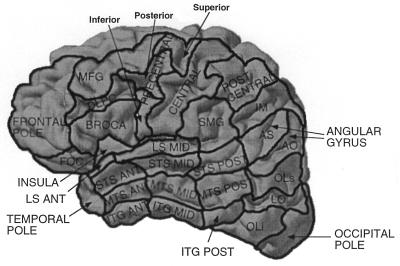

Subjects were asked to participate in two separate sessions (one for each hemisphere). However, this was not always possible, leading to the following numbers of subjects: (i) hearing, eight subjects on both left and right hemispheres; (ii) deaf, seven subjects on both left and right hemispheres, plus four subjects left hemisphere only and five subjects right hemisphere only; (iii) hearing native signers, six subjects on both left and right hemispheres, plus three subjects left hemisphere only and four subjects right hemisphere only. Between-subject analyses were performed by considering left and right hemisphere data from all three groups as a between-subject variable. Individual data sets were first checked for artifacts (runs with visible motion and/or signal loss were discarded from the analysis, resulting in the loss of the data from four hearing native signers: two left hemisphere on English, one left hemisphere on ASL, and one right hemisphere on English). A cross-correlation thresholding method was used to determine active voxels (36) (r ≥ 0.5, effective df = 35, alpha = .001). MR structural images were divided into 31 anatomical regions according to the Rademacher et al. (37) division of the lateral surface of the brain (see Fig. 3); between-subject analyses were performed on these predetermined anatomical regions. Activation measurements were made on the following two variables for each region and run: (i) the mean percent change of the activation for active voxels in a region and (ii) the mean spatial extent of the activation in the region (corrected for size of the region). In addition, a region was not considered further unless at least 30% of runs displayed activation. Multivariate analysis was used to take into account each of these different aspects of the activation. The analyses relied on Hotelling’s T² statistic (38), a natural generalization of the Student’s t-statistic, and were performed by using BMDP statistical software. In all analyses, the log transforms of the percent change and spatial extent were used as dependent variables, and data sets were used as independent variables. Activation within a region was assessed by testing the null hypothesis that the level of activation in the region was zero. Comparisons across hemispheres and/or languages were performed by entering these factors as treatments.
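The analysis steps described above can be illustrated with a small, self-contained sketch. It is not the authors' implementation (the paper used the method of ref. 36 and BMDP), and several details are assumptions made for illustration: the reference waveform is an undelayed boxcar, percent change is the mean "on" signal relative to the mean "off" signal, spatial extent is the fraction of a region's voxels that pass the threshold, and the function names are hypothetical. The last function is a one-sample Hotelling's T² for two measures tested against a zero mean, with the standard conversion to an F statistic.

```python
import math

R_THRESHOLD = 0.5  # correlation cutoff quoted in the text


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def region_measures(voxel_series, reference):
    """Return (mean percent change of active voxels, fraction of voxels active)."""
    changes = []
    for series in voxel_series:
        if pearson_r(series, reference) >= R_THRESHOLD:
            on = [v for v, r in zip(series, reference) if r == 1]
            off = [v for v, r in zip(series, reference) if r == 0]
            baseline = sum(off) / len(off)
            changes.append(100.0 * (sum(on) / len(on) - baseline) / baseline)
    if not changes:
        return 0.0, 0.0
    return sum(changes) / len(changes), len(changes) / len(voxel_series)


def hotelling_t2_vs_zero(samples):
    """One-sample Hotelling's T^2 for pairs of measures against a zero mean.

    Returns (T2, F), where F follows an F distribution with (2, n - 2)
    degrees of freedom under the null hypothesis.
    """
    n = len(samples)
    if n < 3:
        raise ValueError("need at least 3 observations for 2 variables")
    m1 = sum(s[0] for s in samples) / n
    m2 = sum(s[1] for s in samples) / n
    s11 = sum((s[0] - m1) ** 2 for s in samples) / (n - 1)
    s22 = sum((s[1] - m2) ** 2 for s in samples) / (n - 1)
    s12 = sum((s[0] - m1) * (s[1] - m2) for s in samples) / (n - 1)
    det = s11 * s22 - s12 ** 2
    if det == 0:
        raise ValueError("singular covariance matrix")
    # mean' * inverse(S) * mean, written out for the 2 x 2 case
    quad = (s22 * m1 * m1 - 2 * s12 * m1 * m2 + s11 * m2 * m2) / det
    t2 = n * quad
    f_stat = (n - 2) / (2 * (n - 1)) * t2
    return t2, f_stat


# Synthetic demonstration data (made up, not from the study).
reference = ([1] * 8 + [0] * 8) * 4                    # 64 volumes
voxels = [
    [100 + 2 * r for r in reference],                  # follows the task
    [100] * 64,                                        # flat signal
    [100 + (i % 2) for i in range(64)],                # unrelated fluctuation
]
pct, extent = region_measures(voxels, reference)
print(pct, extent)

t2, f_stat = hotelling_t2_vs_zero([(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0)])
print(t2, f_stat)
```

In the study itself the two measures were log transformed before the multivariate test, and the correlation threshold was set with an effective df of 35; neither step is reproduced in this sketch.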

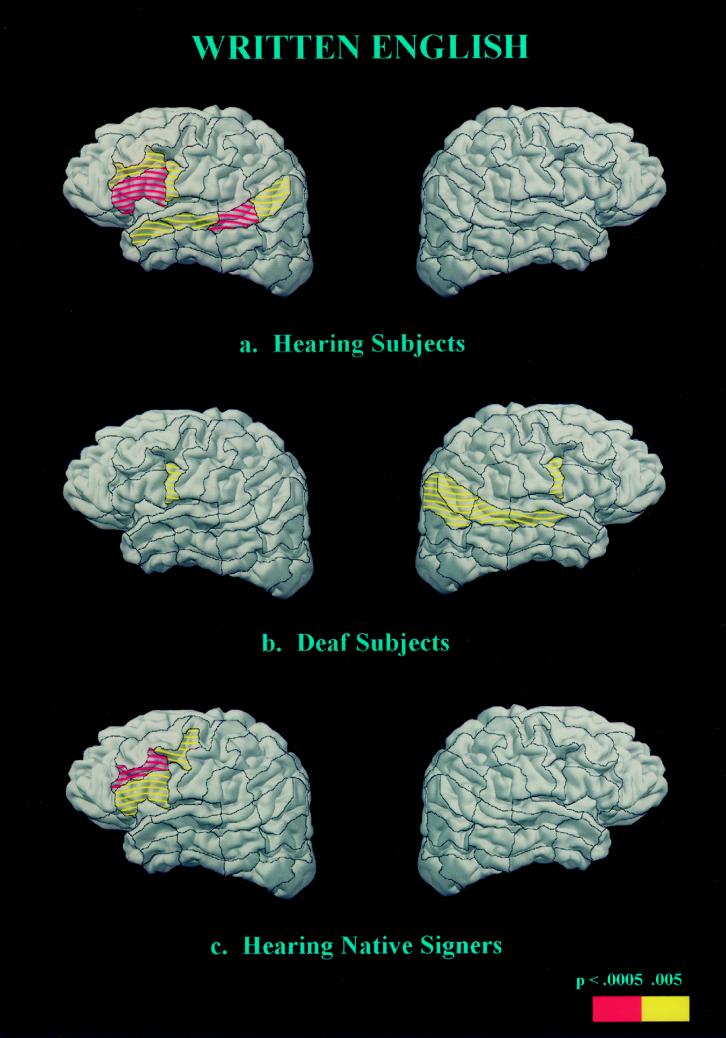

Figure 1.

Cortical areas displaying activation (P < .005) for English sentences (vs. nonwords) for each subject group.

RESULTS

The behavioral data confirmed that subjects were attending to the stimuli and were better at recognizing sentences than nonsense strings [stimulus effect, F(1,55) = 156, P < .0001]. All groups performed equally well in remembering both the (simple, declarative) English sentences and the consonant strings [group effect not significant (NS)]. Hearing subjects who did not know ASL performed at chance in recognizing ASL sentences and nonsigns, unlike the two native signer groups [group effect, F(2,55) = 41, P < .0001]. Deaf and hearing signers performed equally well on ASL stimuli (group effect NS) (Table 1).

English.

When normally hearing subjects read English sentences they displayed robust activation within the standard language areas of the left hemisphere, including inferior frontal (Broca’s) area, Wernicke’s area [superior temporal sulcus (STS) posterior], and the angular gyrus. Additionally, the dorsolateral prefrontal cortex (DLPC), inferior precentral cortex, and anterior and middle STS were active, in agreement with recent studies indicating a role for these areas in language processing and memory (39–42). Within the right hemisphere there was only weak and variable activation (less than 50% of runs), reflecting the ubiquitous left hemisphere asymmetry described by over a century of language research (Fig. 1a; Table 2). In contrast to the hearing subjects, deaf subjects did not display left hemisphere dominance when reading English (Fig. 1b; Table 2). In particular, none of the standard language structures within the left hemisphere displayed reliable and asymmetrical activation (all less than 50% of runs). In addition, unlike hearing subjects, deaf subjects displayed robust activation of middle and posterior temporal–parietal structures within the right hemisphere [see Table 2 and Fig. 1b; group (deaf/hearing) × hemisphere effect, Wernicke’s (STS post) P < .01; angular gyrus P < .01; anterior occipital sulcus P < .008]. There are several aspects of these deaf subjects’ different experiences with English that might have accounted for this departure from the pattern seen in normally hearing subjects. First, the possibility that learning ASL as a native language contributed to the activation within the right hemisphere was assessed by observing the results displayed by the bilingual, hearing native signers. As seen in Fig. 1c and Table 2, these subjects did not display reliable right hemisphere activation, but, as for the normally hearing, monolingual subjects, they displayed a clear left hemisphere lateralization of the activation and a reliable recruitment of anterior left hemisphere language areas [group (hearing/hearing native signers) all areas NS]. Posterior language areas within the left hemisphere were more weakly activated but were significantly asymmetrical (left > right, Table 2). Thus, the lack of left hemisphere asymmetry and the presence of right hemisphere activation when deaf subjects read English were probably not due to the acquisition of ASL as a first language, because the hearing native signers did not display this pattern. The results for ASL clarify the interpretation of these results.

Table 2.

Significance levels for English (sentences vs. consonant strings) for the left hemisphere (left), right hemisphere (right), and the hemisphere asymmetry (hemi) for each group studied.

| Areas (written English condition) | Hearing: Left | Hearing: Right | Hearing: Hemi | Deaf: Left | Deaf: Right | Deaf: Hemi | Hearing native signers: Left | Hearing native signers: Right | Hearing native signers: Hemi |
|---|---|---|---|---|---|---|---|---|---|
| Frontal | | | | | | | | | |
| Middle frontal gyrus | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| Frontal pole | NS | NS | NS | NS | NS | NS | NS | NS | NS |
| Frontal orbital cortex | NS | NS | .043 | NS | NS | NS | NS | NS | NS |
| Dorsolateral prefrontal cortex | .005 | NS | .024 | .038 | NS | NS | .0005 | NS | .0000 |
| Broca’s* | .0004 | .039 | .011 | .038 | .030 | NS | .0009 | NS | .0005 |
| Precentral sulcus, inf. | .002 | .048 | .038 | .003 | .004 | NS | .007 | NS | NS |
| Precentral sulcus, post. | .007 | NS | .012 | .020 | NS | NS | .004 | NS | NS |
| Precentral sulcus, sup. | .010 | NS | .011 | .007 | .046 | .041 | .020 | NS | NS |
| Central sulcus | .035 | NS | NS | NS | NS | NS | .018 | NS | .017 |
| Temporal | | | | | | | | | |
| Temporal pole | .021 | NS | NS | NS | NS | NS | NS | NS | NS |
| Superior temp. sulcus, ant. | .001 | .047 | .007 | NS | NS | NS | NS | NS | NS |
| Superior temp. sulcus, mid. | .0007 | .010 | .005 | .017 | .001 | NS | NS | NS | NS |
| Superior temp. sulcus, post. | .0000 | NS | .0001 | .019 | .0009 | NS | .019 | NS | .032 |
| Sylvian fissure, ant. | .044 | NS | NS | .013 | NS | NS | NS | NS | .015 |
| Sylvian fissure, mid. | .015 | NS | NS | .022 | NS | .030 | NS | NS | NS |
| Temporoparietal | | | | | | | | | |
| Supramarginal gyrus | .007 | NS | NS | NS | NS | NS | NS | NS | .023 |
| Angular gyrus | .003 | NS | .0002 | NS | .005 | NS | .042 | NS | .010 |
| Anterior occipital sulcus | .039 | NS | NS | .044 | .001 | NS | .028 | NS | .005 |
| Intraparietal sulcus | NS | NS | NS | .044 | .020 | NS | .044 | NS | NS |
| Postcentral sulcus | NS | NS | NS | NS | NS | NS | NS | NS | NS |

NS, not significant (P > .05). The cortical areas were defined relative to sulcal anatomy.

*Pars frontalis, opercularis, triangularis.

ASL.

Next, by observing the pattern of activation during sentence processing in ASL, we evaluated the roles of other factors that have been implicated in establishing and/or modulating language specialization within the left hemisphere, including the acquisition of an aural/oral language that requires the processing of rapid shifts of auditory temporal information, the acquisition of a natural (grammatical) language early in development, and the structure and modality of the language(s) acquired.

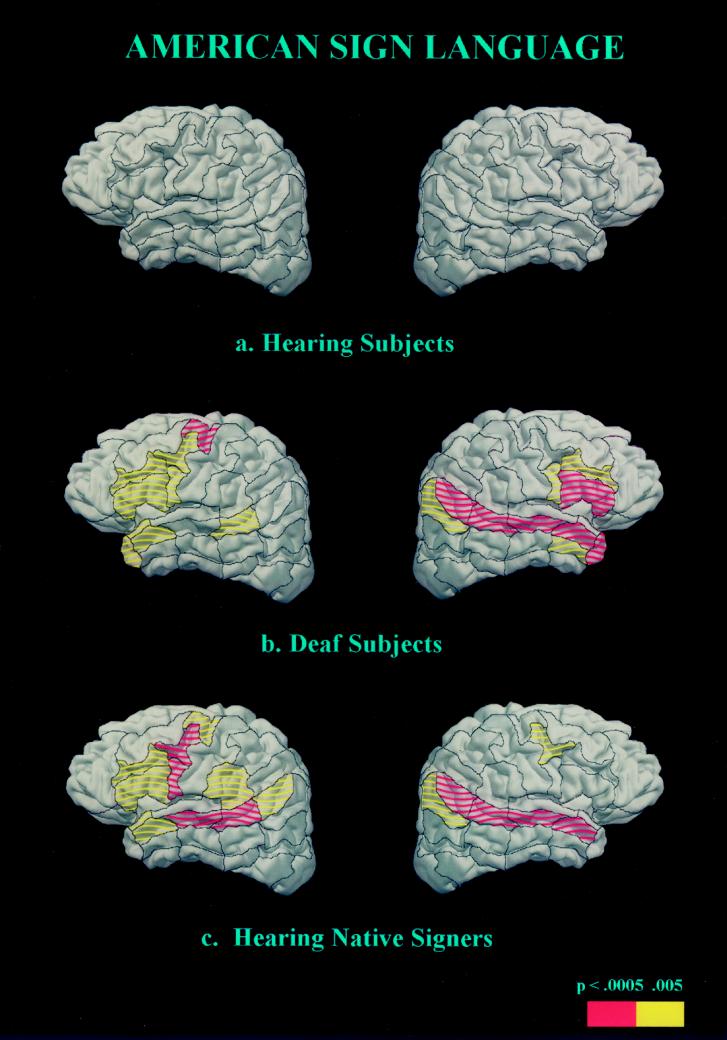

As seen in Fig. 2a and Table 3, hearing subjects who did not know ASL did not display any difference in activation between meaningful and nonmeaningful signs (consistent with their behavioral data).

Figure 2.

Cortical areas displaying activation (P < .005) for ASL sentences (vs. nonsigns) for each subject group

Table 3.

Significance levels for ASL (sentences vs. nonsigns) for the left hemisphere (left), right hemisphere (right), and the hemisphere asymmetry (hemi) for each of the groups studied

| Areas (American Sign Language condition) | Hearing: Left | Hearing: Right | Hearing: Hemi | Deaf: Left | Deaf: Right | Deaf: Hemi | Hearing native signers: Left | Hearing native signers: Right | Hearing native signers: Hemi |
|---|---|---|---|---|---|---|---|---|---|
| Frontal | | | | | | | | | |
| Middle frontal gyrus | NS | NS | NS | .013 | NS | NS | NS | NS | NS |
| Frontal pole | NS | NS | NS | NS | .016 | NS | NS | .013 | NS |
| Frontal orbital cortex | NS | NS | NS | .025 | NS | NS | .028 | .025 | NS |
| Dorsolateral prefrontal cortex | NS | NS | NS | .001 | .001 | NS | .001 | NS | NS |
| Broca’s* | NS | NS | NS | .004 | .0004 | NS | .0008 | .038 | .001 |
| Precentral sulcus, inf. | NS | NS | NS | .005 | .004 | NS | .0000 | .017 | .007 |
| Precentral sulcus, post. | NS | NS | NS | .001 | .016 | NS | .0003 | .003 | NS |
| Precentral sulcus, sup. | NS | NS | NS | .0001 | .007 | .030 | .002 | .014 | .005 |
| Central sulcus | NS | NS | NS | .033 | .031 | NS | .010 | NS | NS |
| Temporal | | | | | | | | | |
| Temporal pole | NS | NS | NS | .002 | .0001 | NS | .020 | .011 | NS |
| Superior temp. sulcus, ant. | NS | NS | NS | .004 | .0002 | NS | .002 | .0001 | NS |
| Superior temp. sulcus, mid. | NS | NS | NS | .010 | .0000 | NS | .0004 | .0000 | NS |
| Superior temp. sulcus, post. | NS | NS | NS | .002 | .0000 | NS | .0003 | .0002 | NS |
| Sylvian fissure, ant. | NS | NS | NS | .005 | .033 | NS | .004 | NS | NS |
| Sylvian fissure, mid. | NS | NS | NS | .017 | NS | NS | .044 | NS | NS |
| Temporoparietal | | | | | | | | | |
| Supramarginal gyrus | NS | NS | NS | NS | NS | NS | .001 | .044 | .006 |
| Angular gyrus | NS | NS | NS | .020 | .0002 | NS | .0006 | .0002 | NS |
| Anterior occipital sulcus | NS | NS | NS | NS | .003 | NS | .006 | .003 | NS |
| Intraparietal sulcus | NS | NS | NS | NS | NS | NS | .013 | NS | NS |
| Postcentral sulcus | NS | NS | NS | NS | .037 | NS | NS | NS | NS |

The cortical areas were defined relative to sulcal anatomy. NS, not significant (P > .05).

*Pars frontalis, opercularis, triangularis.

Would sentence processing within a language that relies on the perception of spatial layout, hand shape, and motion recruit classical language areas within the left hemisphere? As seen in Fig. 2b and Table 3, when processing ASL deaf subjects displayed significant left hemisphere activation within Broca’s area and Wernicke’s area. In addition, activation within DLPC, the inferior precentral sulcus, and anterior STS was similar to that observed in hearing subjects when processing English (Fig. 2b; Table 3). This result suggests that acquisition of a spoken language is not necessary to establish specialized language systems within the left hemisphere. Remarkably, processing of ASL sentences in deaf subjects also strongly recruited the right hemisphere, including the entire extent of the superior temporal lobe, the angular region, and inferior prefrontal cortex (see Fig. 2b and Table 3). Is this striking right hemisphere activation during ASL sentence processing attributable to auditory deprivation or to the acquisition of a language that depends on visual/spatial contrasts? As seen in Fig. 2c and Table 3, when processing ASL, hearing native signers also displayed right hemisphere activation similar to that of the deaf subjects (all group effects NS). Thus, the activation of the right hemisphere when processing sentences in ASL appears to be a consequence of the temporal coincidence between language information and visuospatial decoding. In addition, within the left hemisphere, robust activation of Broca’s area, DLPC, precentral sulcus, Wernicke’s area, and the angular gyrus also was observed in these hearing native signers (Fig. 2c; Table 3).

DISCUSSION

In summary, every subject processing their native language (i.e., hearing subjects processing English, deaf subjects processing ASL, hearing native signers processing English, and hearing native signers processing ASL) displayed significant activation within left hemisphere structures classically linked to language processing. These results imply that there are strong biological constraints that render these particular brain areas well designed to process linguistic information independently of the structure or the modality of the language. This effect was more robust for anterior than for posterior regions and suggests that language experience may more strongly influence the development of posterior language areas. Elucidation of the functional organization and the functional significance of the language-invariant areas identified by the type of neuroimaging technique used in the present study (i.e., blood oxygenation level-dependent contrast) requires further research. However, event-related brain potential (ERP) studies comparing the timing and activation patterns of sentence processing in English and ASL suggest that similar neural events with similar timing and functional organization occur within the left hemisphere of native speakers and signers (31). The ERP research, in line with the present study, also points to extensive right hemisphere activation in early learners of ASL and supports the proposal that activation within parietooccipital and anterior frontal areas of the right hemisphere may be specifically linked to the linguistic use of space. 
Although fMRI and ERP results suggest both hemispheres are active during ASL processing in neurologically intact native signers, lesion evidence suggests that the contribution of the right hemisphere may not be as central as that of the left hemisphere because lasting impairments of sign production and perception have been reported after left hemisphere lesions but fewer deficits have been reported after right hemisphere damage (28, 32). If upheld in future studies of congenitally deaf native signers who sustain neurological damage, such results would suggest that studies of the effects of lesions on behavior and neuroimaging studies of neurologically intact subjects provide different types of information. The former point to areas that may be necessary and sufficient for particular types of processing whereas the latter index areas that participate in processing in the neurologically intact individual.

Recent lesion studies and neuroimaging studies of normal adults reading English have identified additional language-relevant structures beyond Broca’s and Wernicke’s areas, including the left DLPC and the entire extent of the left superior temporal gyrus (39–46). In the present study these areas were active when normal hearing subjects read English and when hearing and deaf native signers viewed ASL. These results suggest that these newly identified language areas may, like the classical language areas, mediate language independently of the sensory modality and structure of the language.

The results showing a lack of left hemisphere asymmetry when deaf subjects read English are consistent with previous behavioral and electrophysiological studies (23, 24). Previous studies of deaf subjects and hearing bilingual subjects who perform differently on tests of English grammar suggest this effect may be linked to the age of acquisition and/or the degree to which grammatical skills have been acquired in English (19, 24). The strong right hemisphere activation in deaf subjects reading English may be interpreted in light of behavioral studies that report many deaf individuals rely on visual-form information when reading and encoding written English (47). In the present study, as in previous studies, the right hemisphere effect was variable in extent and was observed in about 70% of deaf subjects.

The hearing native signers were true bilinguals, native users of both English and ASL from infancy. Their results are striking because they displayed, within the same experimental session, a strongly left-lateralized pattern of activation for reading English but robust and repeated activation of both the left and the right hemispheres for sentence processing in ASL. The activation patterns of these subjects, although similar, were not identical to either those of the monolingual hearing subjects reading English or to the congenitally deaf subjects viewing ASL. For example, when reading English, both hearing groups displayed strong left hemisphere asymmetries over inferior frontal regions. However, the hearing native signers did not display as robust activation over temporal brain regions. It may be that anterior regions perform similar processing on language input independently of the form and structure of the language whereas posterior regions may be organized to process language primarily of one form (e.g., manual/spatial or oral/aural). Further research characterizing cerebral organization during language acquisition will contribute to an understanding of this effect. When processing ASL, both deaf and hearing native signers displayed significant activation of both left and right frontal and temporal regions. However, whereas the activations were uniformly bilateral or larger in the right hemisphere for the deaf subjects, over anterior areas they tended to be larger from the left hemisphere in the hearing native signers. This pattern suggests that the early acquisition of oral/aural language influences the organization of anterior areas for ASL.

The hearing native signers (bilinguals) displayed considerable individual differences during sentence processing of both English and ASL. These results are reminiscent of recent reports of a high degree of variability from individual to individual and area to area of language activation in hearing, speaking bilinguals who learned their second language after the age of 7 years (20, 21). Thus, these data are consistent with the proposal that in addition to individual differences in the age of acquisition, proficiency, and learning and/or biological substrates, the structure and modality of the first and second languages also determine cerebral organization in the bilingual.

In summary, classical language areas within the left hemisphere were recruited in all groups (hearing or deaf) when processing their native language (ASL or English). In contrast, deaf subjects reading English did not display activation in these areas. These results suggest that the early acquisition of a fully grammatical, natural language is important in the specialization of these systems and support the hypothesis that the delayed and/or imperfect acquisition of a language leads to an anomalous pattern of brain organization for that language. Furthermore, the activation of right hemisphere areas when hearing and deaf native signers process sentences in ASL, but not when native speakers process English, implies that the specific nature and structure of ASL result in the recruitment of the right hemisphere into the language system. This study highlights the presence of strong biases that render regions of the left hemisphere well suited to process a natural language independently of the form of the language, and reveals that the specific structural processing requirements of the language also in part determine the final form of the language systems of the brain.

Figure 3.

Anatomical regions analyzed in this study. See Tables 2 and 3.

Acknowledgments

We thank Dr. Robert Balaban of the National Heart, Lung, and Blood Institute at the National Institutes of Health for providing access to the MRI system, students and staff at Gallaudet University for participation in these studies, Drs. S. Padmanhaban and A. Prinster and C. Hutton, T. Mitchell, A. Newman, and A. Tomann for help with these studies, Dr. J. Haxby for lending us equipment, and M. Baker, C. Vande Voorde, and L. Heidenreich for help with the manuscript. This research was supported by grants from the National Institute on Deafness and Communication Disorders, the Human Frontiers Grant, and the J.S. McDonnell Foundation.

ABBREVIATIONS

- fMRI: functional magnetic resonance imaging
- ASL: American Sign Language
- ERP: event-related brain potential
- DLPC: dorsolateral prefrontal cortex
- STS: superior temporal sulcus

References

- 1.Rice C. Res Bull Am Found Blind. 1979;22:1–22. [Google Scholar]

- 2.Hyvarinen J. The Parietal Cortex of Monkey and Man. New York: Springer; 1982. [Google Scholar]

- 3.Merzenich M M, Jenkins W M. J Hand Ther. 1993;6:89–104. doi: 10.1016/s0894-1130(12)80290-0. [DOI] [PubMed] [Google Scholar]

- 4.Recanzone G H, Schreiner C E, Merzenich M M. J Neurosci. 1993;13:87–103. doi: 10.1523/JNEUROSCI.13-01-00087.1993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Uhl F, Kretschmer T, Linginger G, Goldenberg G, Lang W, Oder W, Deecke L. Electroenceph Clin Neurophys. 1994;91:249–255. doi: 10.1016/0013-4694(94)90188-0. [DOI] [PubMed] [Google Scholar]

- 6.Kujala T, Alho K, Kekoni J, Hämäläinen H, Reinikainen K, Salonen O, Standerskjöld-Nordenstam C-G, Näätänen R. Exp Brain Res. 1995;104:519–526. doi: 10.1007/BF00231986. [DOI] [PubMed] [Google Scholar]

- 7.Rauschecker J P. Trends Neurosci. 1995;18:36–43. doi: 10.1016/0166-2236(95)93948-w. [DOI] [PubMed] [Google Scholar]

- 8.Kaas J H. In: The Cognitive Neurosciences. Gazzaniga M S, editor. Cambridge, MA: MIT Press; 1995. [Google Scholar]

- 9.Karni A, Meyer G, Jezzard P, Adams M M, Turner R, Ungerleider L G. Nature (London) 1995;377:155–158. doi: 10.1038/377155a0. [DOI] [PubMed] [Google Scholar]

- 10.Neville H J. In: The Cognitive Neurosciences. Gazzaniga M S, editor. Cambridge, MA: MIT Press; 1995. pp. 219–231. [Google Scholar]

- 11.Sadato N, Pascual-Leone A, Grafman J, Ibanez V, Deiber M-P, Dold G, Hallett M. Nature (London) 1996;380:526–528. doi: 10.1038/380526a0. [DOI] [PubMed] [Google Scholar]

- 12.Röder B, Rösler F, Hennighausen E, Naecker F. Cog Brain Res. 1996;4:77–93. [PubMed] [Google Scholar]

- 13.Röder B, Teder-Salejarvi W, Sterr A, Rösler F, Hillyard S A, Neville H J. Soc Neurosci. 1997;23:1590. [Google Scholar]

- 14.Neville, H. J. & Bavelier, D. (1998) Proc. NIMH Conf. Adv. Res. Dev. Plasticity, in press.

- 15.Mitchell T V, Armstrong B A, Hillyard S A, Neville H J. Society for Neurosci. 1997;23:1585. [Google Scholar]

- 16.Newport E. Cognit Sci. 1990;14:11–28. [Google Scholar]

- 17.Mayberry R. J Speech Hearing Res. 1993;36:1258–1270. doi: 10.1044/jshr.3606.1258. [DOI] [PubMed] [Google Scholar]

- 18.Paradis M. Aspects of Bilingual Aphasia. Oxford: Pergamon; 1995. [Google Scholar]

- 19.Weber-Fox C, Neville H J. J Cognit Neurosci. 1996;8:231–256. doi: 10.1162/jocn.1996.8.3.231. [DOI] [PubMed] [Google Scholar]

- 20. Kim K H S, Relkin N R, Lee K M, Hirsch J. Nature (London) 1997;388:171–174. doi: 10.1038/40623.

- 21. Dehaene S, Dupoux E, Mehler J, Cohen L, Paulesu E, Perani D, van de Moortele P, Lehéricy S, Le Bihan D. Neuroreport. 1998; in press.

- 22. Lenneberg E. Biological Foundations of Language. New York: Wiley; 1967.

- 23. Neville H J, Kutas M, Schmidt A. Brain Lang. 1982;16:316–337. doi: 10.1016/0093-934x(82)90089-x.

- 24. Neville H J, Mills D L, Lawson D S. Cereb Cortex. 1992;2:244–258. doi: 10.1093/cercor/2.3.244.

- 25. Liberman A. In: The Neurosciences Third Study Program. Schmitt F O, Worden F G, editors. Cambridge, MA: MIT Press; 1974. pp. 43–56.

- 26. Corina D P, Vaid J, Bellugi U. Science. 1992;255:1258–1260. doi: 10.1126/science.1546327.

- 27. Tallal P, Miller S, Fitch R H. Ann N Y Acad Sci. 1993;682:27–47. doi: 10.1111/j.1749-6632.1993.tb22957.x.

- 28. Poizner H, Klima E S, Bellugi U. What the Hands Reveal about the Brain. Cambridge, MA: MIT Press; 1987.

- 29. Söderfeldt B, Rönnberg J, Risberg J. Brain Lang. 1994;46:59–68. doi: 10.1006/brln.1994.1004.

- 30. Hickok G, Bellugi U, Klima E S. Nature (London) 1996;381:699–702. doi: 10.1038/381699a0.

- 31. Neville H J, Coffey S A, Lawson D, Fischer A, Emmorey K, Bellugi U. Brain Lang. 1997;57:285–308. doi: 10.1006/brln.1997.1739.

- 32. Corina D P. In: Aphasia in Atypical Populations. Coppens P, editor. Hillsdale, NJ: Erlbaum; 1997.

- 33. Klima E S, Bellugi U. The Signs of Language. Cambridge, MA: Harvard Univ. Press; 1979.

- 34. Linebarger M C, Schwartz M F, Saffran E M. Cognition. 1983;13:361–397. doi: 10.1016/0010-0277(83)90015-x.

- 35. Turner R, Jezzard P, Wen H, Kwong K K, Le Bihan D, Zeffiro T, Balaban R S. Magn Reson Med. 1993;29:277–279. doi: 10.1002/mrm.1910290221.

- 36. Bandettini P A, Jesmanowicz A, Wong E C, Hyde J S. Magn Reson Med. 1993;30:161–173. doi: 10.1002/mrm.1910300204.

- 37. Rademacher J, Galaburda A M, Kennedy D N, Filipek P A, Caviness V S. J Cognit Neurosci. 1992;4:352–374. doi: 10.1162/jocn.1992.4.4.352.

- 38. Srivastava M S, Carter E M. An Introduction to Applied Multivariate Statistics. Amsterdam: North–Holland; 1983.

- 39. Mazoyer B, Tzourio N, Frak V, Syrota A, Murayama N, Levrier O, Salamon G, Dehaene S, Cohen L, Mehler J. J Cognit Neurosci. 1993;5:467–479. doi: 10.1162/jocn.1993.5.4.467.

- 40. Demonet J-F, Wise R, Frackowiak R S J. Human Brain Map. 1993;1:39–47.

- 41. Cohen J D, Perlstein W M, Braver T S, Nystrom L E, Noll D C, Jonides J, Smith E E. Nature (London) 1997;386:604–608. doi: 10.1038/386604a0.

- 42. Dronkers N F, Wilkins D P, Van Valin R D, Redfern B B, Jaeger J J. Brain Lang. 1994;47:461–463.

- 43. Petersen S E, Fiez J A. Annu Rev Neurosci. 1993;16:509–530. doi: 10.1146/annurev.ne.16.030193.002453.

- 44. Dronkers N F, Pinker S. In: Principles of Neural Science. 4th Ed. 1998; in press.

- 45. Nobre A C, McCarthy G. J Neurosci. 1995;15:1090–1099. doi: 10.1523/JNEUROSCI.15-02-01090.1995.

- 46. Bavelier D, Corina D P, Jezzard P, Padmanabhan S, Clark V P, Karni A, Prinster A, Braun A, Lalwani A, Rauschecker J, Turner R, Neville H J. J Cognit Neurosci. 1997;9:664–686. doi: 10.1162/jocn.1997.9.5.664.

- 47. Conrad R. Br J Educ Psychol. 1977;47:138–148. doi: 10.1111/j.2044-8279.1977.tb02339.x.

- 48. Neville H J. In: Brain Maturation and Cognitive Development: Comparative and Cross-Cultural Perspectives. Gibson K R, Petersen A C, editors. Hawthorne, NY: Aldine de Gruyter; 1991. pp. 355–380.