Abstract

The laterality difference in the occipitotemporal region between Chinese (bilaterality) and alphabetic languages (left laterality) has been attributed to their difference in visual appearance. However, these languages also differ in orthographic transparency. To disentangle the effect of orthographic transparency from visual appearance, we trained subjects to read the same artificial script either as an alphabetic (i.e., transparent orthography) or a logographic (i.e., nontransparent orthography) language. Consistent with our previous results, both types of phonological training enhanced activations in the left fusiform gyrus. More interestingly, the laterality in the fusiform gyrus (especially the posterior region) was modulated by the orthographic transparency of the artificial script (more left-lateralized activation after alphabetic training than after logographic training). These results provide an alternative account (i.e., orthographic transparency) for the laterality difference between Chinese and alphabetic languages, and may have important implications for the role of the fusiform in reading.

Keywords: Reading, Fusiform laterality, Orthographic transparency, Language learning, fMRI

1. Introduction

A longstanding question in the neurobiology of language is whether there are specific neural networks for different language systems (e.g., Chen, Xue, Mei, Chen, & Dong, 2009; Paulesu et al., 2001; Paulesu et al., 2000; Siok, Perfetti, Jin, & Tan, 2004; Tan, Laird, Li, & Fox, 2005). One way to answer this question is to use the contrast of logographic (e.g., Chinese) and alphabetic (e.g., English) languages, because of their dramatic differences in visual appearance (Bolger, Perfetti, & Schneider, 2005; Perfetti et al., 2007). Chinese characters possess a number of intricate strokes that are packed into a square shape, whereas alphabetic languages have linear combinations of letters. Based on this difference, researchers have hypothesized that, compared with alphabetic languages, reading Chinese characters might involve more visuospatial analysis, and consequently recruit more regions in the right hemisphere (Liu, Dunlap, Fiez, & Perfetti, 2007; Tan et al., 2000).

Neuroimaging studies on the processing of Chinese characters are generally consistent with this hypothesis. Specifically, within the typical reading network, previous studies have generally revealed left-lateralized frontal activations for both logographic and alphabetic languages (e.g., Bolger et al., 2005; Chee, Tan, & Thiel, 1999; Chee et al., 2000; Chen, Fu, Iversen, Smith, & Matthews, 2002; Xue, Dong, Jin, & Chen, 2004). However, in the occipitotemporal cortex, many studies on Chinese characters have reported bilateral (e.g., Liu et al., 2007; Tan et al., 2001) or even right-lateralized activations (Tan et al., 2000). The finding of greater involvement of the right occipitotemporal region in the processing of Chinese characters was further confirmed by many other studies (e.g., Bolger et al., 2005; Guo & Burgund, 2010; Kuo et al., 2001; Kuo et al., 2003; Kuo et al., 2004; Peng et al., 2004; Peng et al., 2003; Tan et al., 2005; Wang, Yang, Shu, & Zevin, 2011), although several other studies showed left-lateralized activations in the middle fusiform when comparing Chinese characters with other objects, such as an artificial script (Liu et al., 2008), faces (Bai, Shi, Jiang, He, & Weng, 2011), and common objects (Mei et al., 2010). Using a voxel-wise direct comparison method that provided higher spatial resolution, we found that the functional laterality of Chinese processing varies across different regions in the fusiform gyrus, i.e., bilaterality in the posterior fusiform cortex and left laterality in the anterior fusiform cortex (Xue et al., 2005). This is in clear contrast to the left-hemisphere dominance in the processing of alphabetic languages (Cohen et al., 2002; Price, Wise, & Frackowiak, 1996; Vigneau, Jobard, Mazoyer, & Tzourio-Mazoyer, 2005).

Currently, the prevailing explanation for this laterality difference in the occipitotemporal region is that more visuospatial analysis is needed for processing Chinese characters compared with alphabetic writings (Liu et al., 2007; Tan et al., 2000). However, in addition to visual appearance, Chinese and alphabetic languages also differ significantly in orthographic transparency (Chen et al., 2009; Perfetti et al., 2007). Alphabetic languages typically use letter-phoneme mapping, and reading in alphabetic languages can be achieved through grapheme-to-phoneme correspondence (GPC) rules, although there are variations between shallow (e.g., Italian) and deep orthographies (e.g., English). In contrast, Chinese is a nontransparent orthography because there is no letter-phoneme mapping in Chinese. Although most Chinese characters have a phonetic radical that can provide clues to the pronunciation, only a small proportion of them sound the same as their phonetic radicals. Thus, reading Chinese characters mainly relies on the association of whole characters and sounds (Liu et al., 2007; Tan et al., 2005).

Since Chinese (or other logographic languages) and alphabetic languages differ in both visual appearance and orthographic transparency, studies relying on the contrast between them have difficulties in testing whether their differences in orthographic transparency can account for the different laterality patterns in the occipitotemporal region. One way to tease apart the effect of orthographic transparency from visual appearance is to use the artificial language training paradigm, which allows researchers to manipulate the unit size of orthography-to-phonology mapping (i.e., orthographic transparency) while controlling for visual appearance (i.e., using the same set of words). In a recent ERP study, Yoncheva et al. (2010) trained two groups of subjects to read an artificial script (letter-like figures) either as an alphabetic or logographic (i.e., non-alphabetic) language for about 20 mins. ERP recordings during a reading verification task (visual-auditory matching) after training showed a left-lateralized N170 response in the alphabetic condition, but a right-lateralized response in the logographic condition. This result suggests an important role of orthographic transparency in shaping the laterality in the occipitotemporal region in reading tasks.

Due to the limited spatial resolution of ERP, however, it is unclear from Yoncheva et al. (2010) whether the subregions in the occipitotemporal cortex are differentially modulated by the script’s orthographic transparency. Several studies have suggested that the anterior and posterior parts of the occipitotemporal region are engaged in lexico-semantic and visuo-perceptual processing, respectively (Simons, Koutstaal, Prince, Wagner, & Schacter, 2003; Xue & Poldrack, 2007). Our previous studies have revealed that, although bilaterality in the middle fusiform was found for novel logographic characters, i.e., Korean Hangul (Xue, Chen, Jin, & Dong, 2006a), processing familiar logographic characters such as Chinese showed bilaterality in the posterior regions but left laterality in the middle and anterior fusiform cortex (Xue et al., 2006a; Xue et al., 2005). Consistent with this idea, one recent study has suggested that the functional asymmetry in the anterior and posterior fusiform cortex was determined by semantic and visuospatial factors, respectively (Seghier & Price, 2011).

Disentangling the effects of orthographic transparency and visual appearance on the laterality patterns would also provide important clues to the functional role of the left occipitotemporal region in reading. There are currently two prevailing perspectives. The visual word form area (VWFA) perspective (Cohen & Dehaene, 2004; Cohen et al., 2002) has proposed that the left mid-fusiform is specialized in processing abstract visual word forms. It predicts no effect of orthographic transparency on fusiform laterality if the visual forms are the same. In contrast, the interactive perspective (Price & Devlin, 2011) posits that the VWFA integrates low-level visuospatial features with higher level associations (e.g., phonology and semantics), and its activity emerges from the interaction between bottom-up sensory inputs and top-down predictions. In support of the interactive perspective, our previous artificial language training result has clearly isolated the role of phonological association in modulating the fusiform activity (Xue et al., 2006b). The present study aimed at extending this line of research by examining how different phonological access routes, as determined by orthographic transparency, would differentially modulate fusiform activity.

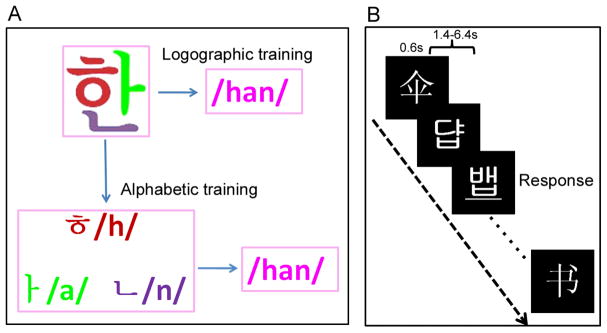

To examine the effect of orthographic transparency on occipitotemporal laterality in reading, the present study used (1) fMRI technology, with a higher spatial resolution, to examine the effect of orthographic transparency in different subregions in the occipitotemporal cortex, (2) a perceptual task (i.e., underline detection, see Fig. 1), administered both before and after training, to control potential laterality differences before training, and (3) a longer training period (eight days, one hour per day) than Yoncheva et al.’s study (20 mins) to reach a higher level of reading automaticity. The artificial language used in our study was created based on the visual forms and sounds of 60 Korean Hangul characters (see Fig. 1A for examples) because the design principle of Korean Hangul, i.e., logographic visual appearance but alphabetic orthography, is ideal for our purposes. We trained two matched groups of subjects to read the artificial language either as an alphabetic (letter-to-phoneme mapping) or a logographic (word-to-sound mapping) language. Training-related changes in neural activity were compared between the two training conditions to examine whether occipitotemporal laterality was modulated by the script’s orthographic transparency, and in which subregions such modulation effect occurred.

Fig. 1.

Experiment design and examples of the stimuli. The artificial language was created by adopting the visual forms and sounds of 60 Korean Hangul characters. Two matched groups of subjects received alphabetic and logographic training (A) for eight days (one hour per day). Before and after training, subjects were scanned when performing a perceptual task (B), in which subjects were asked to respond to the underlined words.

2. Material and Methods

2.1. Subjects

Forty-four Chinese college students (23 males; mean age = 22.04 ± 1.82 years old, with a range from 19 to 25 years) participated in this study. They were divided into two groups to receive either alphabetic or logographic training. The two groups were matched on age, gender (12 males and 10 females in the logographic group; 11 males and 11 females in the alphabetic group), nonverbal intelligence, and performance on Chinese reading tasks (see Table 1). All subjects had normal or corrected-to-normal vision, with no previous history of neurological or psychiatric disease and no previous experience with Korean, and were strongly right-handed as judged by Snyder and Harris’s handedness inventory (Snyder & Harris, 1993). Informed written consent was obtained from the subjects before the experiment. This study was approved by the IRBs of the University of California, Irvine, the National Key Laboratory of Cognitive Neuroscience and Learning at Beijing Normal University, and the University of Southern California.

Table 1.

Background characteristics of the alphabetic and logographic groups

| Variables | Logographic group | Alphabetic group | t | p |
|---|---|---|---|---|
| Age | 22.27 (1.83) | 21.82 (1.82) | 0.83 | .41 |
| Chinese word efficiency | 83.95 (12.90) | 80.55 (12.32) | 0.90 | .38 |
| Chinese word identification | 25.14 (5.63) | 24.73 (7.28) | 0.21 | .84 |
| Visual-auditory learning | 123.59 (8.98) | 124.14 (8.65) | 0.21 | .84 |
| Rapid color naming | 50.24 (9.95) | 49.25 (8.71) | 0.35 | .73 |
| Rapid object naming | 41.56 (5.42) | 44.21 (5.05) | 1.68 | .10 |
| Memory of digits | 12.64 (3.14) | 12.27 (2.95) | 0.40 | .69 |
| Raven advanced matrix | 27.95 (4.46) | 27.45 (5.52) | 0.41 | .68 |

Note: Numbers inside the parentheses represent standard deviations. The scores for the rapid color and object naming tasks are the total number of seconds taken to name all the items, and those for all other tests are the number of correct items. The Chinese word efficiency and identification tasks were designed by the authors of this study; the visual-auditory learning was a subtest of the Woodcock Reading Mastery Tests - Revised (WRMT-R); the rapid color and object naming, and memory of digits were subtests of the Comprehensive Test of Phonological Processing (CTOPP).
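As a rough consistency check on Table 1, the t values can be approximated from the reported means and standard deviations. The sketch below assumes a pooled-variance independent-samples t-test with 22 subjects per group (the group sizes given in Section 2.1). The function name `pooled_t` is hypothetical, and small discrepancies from the table are expected because the inputs are rounded.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic from summary statistics.

    Assumes equal population variances (pooled estimate); this is an
    assumption about the test used, not something stated in the table.
    Returns (absolute t value, degrees of freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return abs(mean1 - mean2) / standard_error, df


# Rapid object naming row of Table 1: logographic vs. alphabetic group.
t_value, df = pooled_t(41.56, 5.42, 22, 44.21, 5.05, 22)
print(f"t({df}) = {t_value:.2f}")
```

For the rapid object naming row this yields t of about 1.68 on 42 degrees of freedom, in line with the tabled value.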

2.2. Materials

Fig. 1 illustrates the materials and experimental design. In total, 60 Chinese words and 60 artificial language words were used in the study. The Chinese words were medium- to high-frequency words (higher than 50 per million according to the Chinese word frequency dictionary, mean = 498.50 per million) (Wang & Chang, 1985), with 2–9 strokes (mean = 5.98), and 2–3 units (mean = 2.70) according to the definition by Chen et al. (1996). The artificial language words were constructed using 22 Korean Hangul letters, including 12 consonants and 10 vowels. All the phonemes we chose are present in Chinese because of our specific focus on form-sound association but not on new phonemes. To confirm our judgment, three Chinese college students were asked to assess the ease of pronouncing the phonemes. The average scores were higher than 3 on a 5-point scale (1 = very difficult to pronounce; 5 = very easy to pronounce) for all phonemes. The artificial words were matched with Chinese words in visual complexity (mean number of units = 2.67; mean number of strokes = 6.15). All stimuli were presented in gray-scale with 151 × 151 pixels in size.

The sounds of the Chinese and artificial language words were recorded from a native Chinese female speaker and a native Korean female speaker, respectively. All the sounds were denoised and normalized to the same length (600 ms) and loudness using Audacity 1.3 (audacity.sourceforge.net).

2.3. Training procedure and behavioral task

All subjects underwent an eight-day training program (about one hour per day) on the association between visual forms and sounds of 60 artificial language words. Two training conditions (i.e., alphabetic and logographic training) were designed to examine the effect of orthographic transparency on the laterality of the fusiform cortex (see Fig. 1A). As noted before, two matched groups of subjects received the two types of training, respectively, to avoid interference between training conditions. In the logographic group, subjects were asked to memorize the association between each whole word and its pronunciation. The original correspondences between the visual forms and sounds were shuffled to avoid implicit acquisition of the grapheme-phoneme correspondence (GPC) rules. In the alphabetic group, subjects were first taught the pronunciation of the letters and then to assemble the phonology of the words from their letters. To facilitate learning of the GPC rules, 30 new words were tested at the end of each training session. For both groups, we used a combination of various learning tasks to maximize the efficiency and to ensure that subjects could acquire the respective phonology by the end of the training. The learning tasks included letter learning (memorizing the sounds of letters in the alphabetic condition), whole word learning (learning the sounds of whole words), naming, naming with feedback, fast naming (reading ten randomly selected words (from the 60 trained words) as fast as possible), and a phonological choice task (selecting the correct pronunciation for a word from four potential pronunciations). It should be noted that, except for the type of training, all other intervening variables such as time-on-task were controlled across the two groups.

After 8 days of training, a naming task was adopted to test the effect of training. For both groups, all 60 trained words were tested. For the alphabetic group, 60 new words were tested to evaluate transfer of learning. In each trial, a word was presented for 3 secs, followed by a 1 sec blank interval. Subjects were asked to read each word aloud as fast and accurately as possible. Subjects’ oral responses were recorded through a microphone connected to the computer.

2.4. fMRI task

Before and after training, subjects were scanned when performing a perceptual task (Fig. 1B) (Chen et al., 2007; Cohen et al., 2002; Xue, Chen, Jin, & Dong, 2006b) that consisted of four types of stimuli, namely Chinese words, English words, English pseudowords, and artificial words. Each type of material contained 60 items. A rapid event-related design was used, with the four types of materials pseudo-randomly mixed. Trial sequences were optimized with OPTSEQ (http://surfer.nmr.mgh.harvard.edu/optseq/) (Dale, 1999). The English materials were included to address other research questions, and were thus excluded from data analysis in this paper. Stimulus presentation and response collection were programmed using Matlab (Mathworks) with Psychtoolbox extensions (www.psychtoolbox.org) on an IBM laptop.

The fMRI task consisted of two runs. Each run lasted for 10 min 10 s. During each run, the stimuli were presented either in the visual, auditory, or audiovisual modality. The stimuli in the auditory and audiovisual modality were included to address other research questions, and were consequently excluded from data analysis in the current paper. Each trial lasted for 600 ms, followed by a blank that varied randomly from 1.4 to 6.4 sec (mean = 1.9 sec) to improve design efficiency. Subjects were asked to carefully view and/or listen to the stimuli. To ensure that subjects were awake and attentive, they were instructed to press a key whenever they noticed that the visual word was underlined. This happened 6 times per run. The task has at least two advantages: (1) it puts relatively low demand on phonological access, and consequently reduces the effect of the top-down process; (2) it can be administered both before and after training, allowing us to examine training-related neural changes. Subjects correctly responded to 10.8 ± 1.0 of 12 underlined words at the pre-training stage and 11.3 ± 1.1 at the post-training stage, suggesting that they were attentive to the stimuli during the perceptual task.

2.5. MRI data acquisition

Data were acquired with a 3.0 T Siemens MRI scanner in the MRI Center of Beijing Normal University. A single-shot T2*-weighted gradient-echo EPI sequence was used for functional imaging acquisition with the following parameters: TR/TE/θ = 2000 ms/25 ms/90°, FOV = 192 × 192 mm, matrix = 64 × 64, and slice thickness = 3 mm. Forty-one contiguous axial slices parallel to the AC-PC line were obtained to cover the whole cerebrum and part of the cerebellum. Anatomical MRI was acquired using a T1-weighted, three-dimensional, gradient-echo pulse-sequence (MPRAGE) with TR/TE/θ = 2530 ms/3.09 ms/10°, FOV = 256 × 256 mm, matrix = 256 × 256, and slice thickness = 1 mm. Two hundred and eight sagittal slices were acquired to provide a high-resolution structural image of the whole brain.

2.6. Image preprocessing and statistical analysis

Initial analysis was carried out using tools from the FMRIB’s software library (www.fmrib.ox.ac.uk/fsl) version 4.1.2. The first three volumes in each time series were automatically discarded by the scanner to allow for T1 equilibrium effects. The remaining images were then realigned to compensate for small head movements (Jenkinson & Smith, 2001). Translational movement parameters never exceeded 1 voxel in any direction for any subject or session. All data were spatially smoothed using a 5-mm full-width-half-maximum Gaussian kernel. The smoothed data were then filtered in the temporal domain using a nonlinear high-pass filter with a 60-s cutoff. A 2-step registration procedure was used whereby EPI images were first registered to the MPRAGE structural image, and then into the standard (Montreal Neurological Institute [MNI]) space, using affine transformations with FLIRT (Jenkinson & Smith, 2001) to the avg152 T1 MNI template.

At the first level, the data were modeled with the general linear model within the FILM module of FSL for each subject and each session. Events were modeled at the time of the stimulus presentation. These event onsets and their durations were convolved with a canonical hemodynamic response function (double-gamma) to generate the regressors used in the general linear model. Temporal derivatives and the 6 motion parameters were included as covariates of no interest to improve statistical sensitivity. Null events were not explicitly modeled, and therefore constituted an implicit baseline. In this analysis, the underlined words were modeled as nuisance variables to avoid the effect of other confounding factors. Three contrast images (Chinese words-baseline, artificial words-baseline, and artificial words-Chinese words) were computed for each session and for each subject.
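To make the regressor construction concrete, the following is a minimal sketch of convolving a 600 ms event with a double-gamma response and sampling it at the 2 s TR. It is not FSL's implementation: the gamma shape parameters (6 and 16) and the undershoot ratio (1/6) are conventional illustrative values assumed here, and the function names are hypothetical. Temporal derivatives and motion covariates are omitted.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (
        math.gamma(shape) * scale ** shape
    )


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Positive response minus a scaled late undershoot (assumed parameters)."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def build_regressor(onsets, duration, n_vols, tr=2.0, dt=0.1, hrf_len=32.0):
    """Convolve a boxcar of events with the HRF and sample once per volume."""
    n_fine = int(round(n_vols * tr / dt))
    stimulus = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        for i in range(start, min(stop, n_fine)):
            stimulus[i] = 1.0
    kernel = [double_gamma_hrf(k * dt) for k in range(int(round(hrf_len / dt)))]
    convolved = [0.0] * n_fine
    for i, s in enumerate(stimulus):
        if s == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k < n_fine:
                convolved[i + k] += s * h * dt
    step = int(round(tr / dt))
    return [convolved[v * step] for v in range(n_vols)]


# One hypothetical 600 ms event at 10 s, 20 volumes at TR = 2 s.
regressor = build_regressor([10.0], 0.6, 20)
print([round(v, 4) for v in regressor])
```

Under these assumed parameters the predicted response is zero up to the event onset and peaks a few seconds later, which is why null events can serve as an implicit baseline.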

A second-level model (fixed-effects model) created a cross-run contrast to examine the training effect for each subject. Training effect was calculated across the four sessions (two at the pre-training stage, and the other two at the post-training stage) for each condition and for each subject by using the contrast of post-training minus pre-training. These contrasts were then entered into the third-level analyses to compute group differences using the contrast of artificial words-Chinese words in the alphabetic group vs. that in the logographic group. Group activations were computed using a random-effects model (treating subjects as a random effect) with FLAME stage 1 only (Beckmann, Jenkinson, & Smith, 2003; Woolrich, 2008; Woolrich, Behrens, Beckmann, Jenkinson, & Smith, 2004). Unless otherwise indicated, group images were thresholded with a height threshold of z > 2.3 and a cluster probability, P < 0.05, corrected for whole-brain multiple comparisons using the Gaussian random field theory.

2.7. Region of interest analysis

The fusiform gyrus was defined as the region of interest (ROI) to assess functional laterality. Following Xue et al. (2007), we split the fusiform region into three smaller equal sized regions, namely the anterior fusiform region (MNI center: −40, −48, −18), middle fusiform region (MNI center: −40, −60, −18), and posterior fusiform region (MNI center: −40, −72, −18). It should be noted that the center of the middle fusiform region is near the VWFA region (−42, −57, −15) defined by Cohen et al. (2002). Each ROI was defined as a region of a 6 mm radius sphere around the center. The right homologue of these regions was also defined. In each ROI, we extracted parameter estimates (betas) of each event type from the fitted GLM model and averaged them across all voxels in the cluster for each subject. Percent signal changes were calculated using the following formula: [contrast image/(mean of run)] × ppheight × 100%, where ppheight is the peak height of the hemodynamic response versus the baseline level of activity [J. Mumford (2007) A Guide to Calculating Percent Change with Featquery. Unpublished Tech Report available at http://mumford.bol.ucla.edu/perchange_guide.pdf].
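The ROI geometry and the percent signal change formula above can be sketched as follows. The ROI centers, the 6 mm radius, and the formula come from the text; obtaining the right homologue by flipping the sign of the MNI x coordinate is an assumption, and all function names and the example input values are hypothetical.

```python
# Left-hemisphere ROI centers in MNI space (mm), as given in the text.
LEFT_CENTERS = {
    "anterior": (-40, -48, -18),
    "middle": (-40, -60, -18),
    "posterior": (-40, -72, -18),
}
RADIUS_MM = 6.0
COHEN_VWFA = (-42, -57, -15)  # VWFA coordinate from Cohen et al. (2002)


def right_homologue(center):
    """Mirror a left-hemisphere center across the midline (assumed: x -> -x)."""
    x, y, z = center
    return (-x, y, z)


def in_sphere(point, center, radius=RADIUS_MM):
    """True if an MNI point lies within the spherical ROI."""
    squared_distance = sum((p - c) ** 2 for p, c in zip(point, center))
    return squared_distance <= radius ** 2


def percent_signal_change(contrast_value, run_mean, ppheight):
    """[contrast image / (mean of run)] x ppheight x 100%."""
    return contrast_value / run_mean * ppheight * 100.0


print(right_homologue(LEFT_CENTERS["posterior"]))
print(in_sphere(COHEN_VWFA, LEFT_CENTERS["middle"]))
# Hypothetical inputs: contrast estimate 5.0, run mean 1000.0, ppheight 1.0.
print(percent_signal_change(5.0, 1000.0, 1.0))
```

The VWFA coordinate lies about 4.7 mm from the middle ROI center (squared distance 4 + 9 + 9 = 22), so it falls inside the 6 mm sphere, consistent with the statement that the two are near each other.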

To quantify the laterality of the three subregions in the fusiform gyrus, we calculated the laterality index (LI) using the following formula: LI = L – R, where L and R represent percent signal changes in the left and right ROI, respectively (Vigneau et al., 2005). A positive LI indicates left-hemispheric lateralization and a negative number indicates right-hemispheric lateralization. It should be noted that the percent signal changes used to calculate the LIs were extracted using the contrast between artificial words and Chinese words to control for the test-retest variability of BOLD response. As a result, the LI is not an absolute measure of laterality, but instead it is sensitive to the relative differences in laterality change between different training methods.
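A small sketch of the laterality index and its training-related change, using the sign convention stated above (positive means left-lateralized). The function names and the percent signal change values are hypothetical and serve only to illustrate the arithmetic.

```python
def laterality_index(left_psc, right_psc):
    """LI = L - R, with L and R the percent signal changes in the two ROIs."""
    return left_psc - right_psc


def li_change(pre_left, pre_right, post_left, post_right):
    """Post-training LI minus pre-training LI for one subject and one region."""
    return laterality_index(post_left, post_right) - laterality_index(
        pre_left, pre_right
    )


def direction(li_value):
    """Describe the sign of an LI or of an LI change."""
    if li_value > 0:
        return "leftward"
    if li_value < 0:
        return "rightward"
    return "none"


# Hypothetical subject: left 0.10 -> 0.30, right 0.12 -> 0.15 (percent).
change = li_change(0.10, 0.12, 0.30, 0.15)
print(direction(change))
```

Because the percent signal changes are themselves relative to Chinese words, a "leftward" change here means a leftward shift relative to that control condition, not absolute left dominance.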

3. Results

3.1. Behavioral performance after training

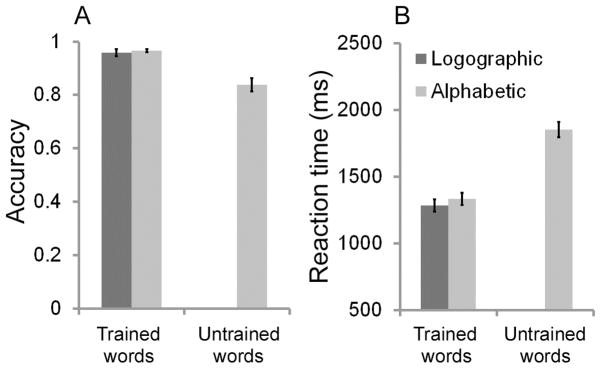

For both groups, subjects correctly named more than 95% of the trained words after training (the alphabetic group: 96.6% ± 2.7; the logographic group: 95.8% ± 6.1), suggesting that the training was effective (Fig. 2A). There was no significant between-group difference for the trained words (t(42) = 0.53, n.s.). Subjects in the alphabetic group also correctly named 83.7% of the untrained words, suggesting that they had learned the GPC rules.

Fig. 2.

Accuracies (A) and reaction times (B) of trained and untrained artificial words for the alphabetic and logographic groups. Error bars represent the standard error of the mean.

For reaction time (Fig. 2B) on the naming task, there was no significant difference between the two groups (t(42) = 0.75, n.s.), although the alphabetic group (1333.89 ms ± 216.37) named slightly more slowly than the logographic group (1285.18 ms ± 213.96) (Ellis et al., 2004; Yoncheva et al., 2010).

3.2. Training enhanced neural activities in the fusiform cortex for both groups

In this analysis, we first examined between-group differences before training. For both Chinese and artificial language words, no region exhibited significant between-group differences in neural activities, suggesting the two groups of subjects were well matched.

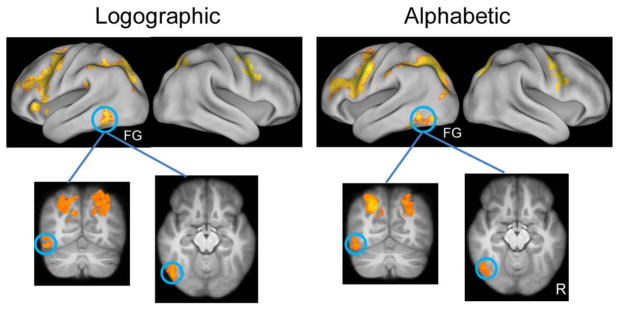

We then examined the training effect by comparing the BOLD activations at the post-training stage with those at the pre-training stage. In this analysis, data from the Chinese word condition were used as the baseline to control for test-retest variability of the BOLD response. Results showed that training significantly enhanced neural activities of the artificial language words in the left fusiform cortex for both groups (MNI center: −48, −54, −20, Z = 3.89 for the alphabetic group, and −48, −58, −14, Z = 3.60 for the logographic group, see Fig. 3 & Table 2). It should be noted that the peak coordinates of activations in the left fusiform cortex were close to the VWFA reported in previous studies (Bolger et al., 2005; Cohen & Dehaene, 2004; Cohen et al., 2002). Training-related increases in neural activation were also found in several other regions, such as the prefrontal cortex and occipitoparietal cortex (for details, see Mei et al., submitted).

Fig. 3.

Training-related changes in activations for the artificial words in the alphabetic and logographic groups. All activations were thresholded at z > 2.3 (whole-brain corrected), and rendered onto the PALS-B12 atlas (Van Essen, 2002, 2005) via average fiducial mapping using caret software (Van Essen et al., 2001). R = Right; FG = fusiform gyrus.

Table 2.

Brain regions showing training-related increases for the artificial words in the logographic and alphabetic groups

| Brain regions | Logographic x | Logographic y | Logographic z | Logographic Z | Alphabetic x | Alphabetic y | Alphabetic z | Alphabetic Z |
|---|---|---|---|---|---|---|---|---|
| Left precentral gyrus/inferior frontal gyrus | −46 | 10 | 28 | 4.27 | −48 | −2 | 36 | 5.69 |
| Right precentral gyrus/inferior frontal gyrus | 42 | −4 | 46 | 3.85 | 52 | 8 | 32 | 4.43 |
| Anterior cingulate cortex | −4 | 16 | 46 | 5.48 | −2 | 20 | 40 | 4.03 |
| Left supramarginal gyrus | −46 | −40 | 46 | 3.67 | −34 | −48 | 38 | 4.92 |
| Left superior occipital gyrus | −12 | −72 | 56 | 4.53 | −26 | −66 | 40 | 4.97 |
| Right superior occipital gyrus | 16 | −72 | 56 | 4.24 | 28 | −66 | 36 | 3.75 |
| Left inferior occipital gyrus/fusiform gyrus | −48 | −58 | −14 | 3.60 | −48 | −54 | −20 | 3.89 |

Finally, we examined between-group differences in terms of the training effect. There were no significant differences across the two groups in the bilateral fusiform cortex with a threshold of Z > 2.3 (whole-brain corrected).

3.3. Differential effects of alphabetic and logographic training on fusiform laterality

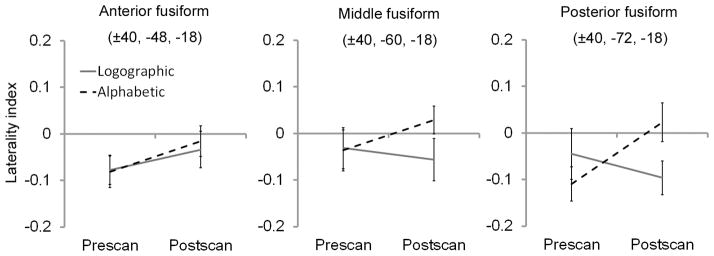

In this section, we first extracted the percent signal changes from the six pre-defined ROIs (i.e., the bilateral anterior, middle, and posterior fusiform regions) for the Chinese words to evaluate the test-retest variability of the BOLD responses. Results showed no significant differences across the two scans for the Chinese words in all six ROIs (all ps > .1), although the neural activities decreased slightly after training (Fig. S1). We then extracted the percent signal changes from the six ROIs (see Fig. S2) to examine the effect of orthographic transparency on laterality. As described in the “Methods” section, the fusiform laterality was calculated by using the neural activities in the left ROI minus those in the right homologue. We first performed a three-way (region: anterior, middle, and posterior; group: alphabetic and logographic; test: pre- and post-training) analysis of variance (ANOVA) to examine between-group differences in fusiform laterality. This analysis revealed a trend of group-by-test interaction (F(1,42) = 2.80, p = .102), and suggested that alphabetic and logographic training resulted in left- and right-lateralized activations, respectively. None of the main effects or other interactions was significant. We then performed a two-way (i.e., group and test) ANOVA for each region to examine between-group differences in the three subregions in the fusiform cortex (Fig. 4). Results showed that the fusiform laterality demonstrated gradient sensitivity to the learning method (i.e., orthographic transparency) from the anterior to posterior regions. Specifically, compared with the pre-training stage, neural activities of the artificial language words in the posterior fusiform region were more left-lateralized after alphabetic training, but more right-lateralized after logographic training (group-by-test interaction: F(1,42) = 4.92, p < .05). 
The neural activities in the middle fusiform region showed the same trend, but it was not statistically significant (group-by-test interaction: F(1,42) = 1, p = .324). In contrast, in the anterior region, both groups showed a trend of more left-lateralized activations after training, and consequently demonstrated no significant group-by-test interaction (F(1,42) = 0.10, n.s.). In the three regions, none of the main effects was significant (all ps > .1).
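For a design with one two-level between-subject factor (group) and one two-level within-subject factor (test), the group-by-test interaction F is equal to the square of an independent-samples t statistic computed on each subject's post-minus-pre change in LI, with degrees of freedom (1, N − 2). With 22 subjects per group this gives (1, 42), matching the degrees of freedom reported above. The sketch below illustrates this equivalence; the function name and the toy change scores are hypothetical and are not the study's data.

```python
import math


def interaction_f(changes_group_a, changes_group_b):
    """Group-by-test interaction F for a 2 x 2 mixed design.

    Inputs are per-subject change scores (post-training LI minus
    pre-training LI). Computes a pooled-variance t on the change scores
    and returns (F, (df1, df2)) with F = t ** 2.
    """
    n_a, n_b = len(changes_group_a), len(changes_group_b)
    mean_a = sum(changes_group_a) / n_a
    mean_b = sum(changes_group_b) / n_b
    ss_a = sum((x - mean_a) ** 2 for x in changes_group_a)
    ss_b = sum((x - mean_b) ** 2 for x in changes_group_b)
    df_error = n_a + n_b - 2
    pooled_var = (ss_a + ss_b) / df_error
    t_value = (mean_a - mean_b) / math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return t_value ** 2, (1, df_error)


# Toy change scores for three subjects per group (illustration only).
f_value, dfs = interaction_f([1.0, 2.0, 3.0], [0.0, 1.0, 2.0])
print(f_value, dfs)
```

Framing the interaction this way makes explicit that the test asks whether the two training methods shifted laterality by different amounts, independent of where each group started.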

Fig. 4.

The between-group (alphabetic and logographic) differences in fusiform laterality for the artificial words. Percent signal changes were extracted from the left and right anterior, middle, and posterior fusiform regions at pre- and post-training stages. The laterality index (vertical coordinate) was calculated by comparing the percent signal change in the left and right regions. Error bars represent the standard error of the mean.

4. Discussion

Using an artificial language training paradigm, the present study examined the effect of orthographic transparency on the laterality of the subregions in the fusiform gyrus. Consistent with one previous study (Xue et al., 2006b), we found that phonological training resulted in increased activations in the fusiform gyrus. More importantly, we found that the laterality of fusiform activation was significantly modulated by the orthographic transparency of the artificial language, with more left-lateralized activation after alphabetic training than after logographic training. This difference manifested in the posterior portion of the fusiform gyrus, decreased in the middle portion, and diminished in the anterior portion. This result provides clear evidence for the effect of orthographic transparency on fusiform laterality, and has improved our understanding of the functions of the fusiform cortex in reading.

The effect of orthographic transparency on fusiform laterality found in this study provides an alternative account to the observed difference in occipitotemporal laterality between Chinese and alphabetic languages. As discussed in the “Introduction” section, different laterality in occipitotemporal cortex between Chinese and alphabetic languages has been attributed to their difference in visual appearance (Liu et al., 2007; Tan et al., 2000). In contrast, using the artificial language training paradigm, our study showed that the functional laterality in the fusiform cortex was modulated by the scripts’ orthographic transparency (another important difference between Chinese and alphabetic languages) after controlling for visual appearance. Specifically, neural activities in the fusiform gyrus were more left-lateralized after alphabetic training, but more right-lateralized after logographic training. Consistent with our results, one previous ERP study has revealed similar dissociation in N170 laterality following alphabetic and logographic training (Yoncheva et al., 2010). These results suggest that the laterality difference between Chinese and alphabetic languages in the occipitotemporal region may be at least partially accounted for by their difference in orthographic transparency.

Our results also have important implications for understanding the functions of the fusiform gyrus. Two rival perspectives (the VWFA perspective and the interactive perspective) have been proposed regarding fusiform functions. Our study on phonological training provides evidence against the VWFA perspective (Cohen & Dehaene, 2004; Cohen et al., 2002). On the one hand, we found that phonological training enhanced neural activations in the VWFA for both groups in a passive viewing task (i.e., little effort was involved). Although our paradigm involved a combination of orthographic and phonological training, the increased activations in the VWFA were probably caused by the phonological training rather than the orthographic training, because our previous studies revealed decreased activation in the fusiform after orthographic training (Xue et al., 2006b), but increased activation after phonological training (Xue et al., 2006b). On the other hand, the laterality of fusiform activation was modulated by the script’s orthographic transparency. These two lines of evidence suggest an important role of phonology in shaping VWFA activations, and argue against the VWFA hypothesis that the mid-fusiform is specialized for processing abstract visual word forms and is thus not influenced by other linguistic features such as phonology. Instead, our results support the interactive account of VWFA function, that is, activation of the VWFA results from interactions between the processing of low-level visuospatial features (the bottom-up process) and that of higher-level associations such as phonology and semantics (the top-down process) (Price & Devlin, 2011).

From this perspective, the phonological pathway determined by orthographic transparency might shape visual processing of scripts. Specifically, part information was emphasized during learning under the alphabetic condition, whereas global information was emphasized by the whole-word learning under the logographic condition. Therefore, the alphabetic and logographic groups would adopt part- and whole-based processing strategies, respectively, during reading after training, either because of their differential instructions during training or because of differential top-down requirements determined by different phonological access routes. As a result, our observation is consistent with the interactive model of reading (Price & Devlin, 2011) as well as the hemispheric specialization view (Hellige, Laeng, & Michimata, 2010), which posits that the left and the right hemispheres are specialized for processing, respectively, high- versus low-spatial-frequency information (Kitterle & Selig, 1991), part versus whole (Robertson & Lamb, 1991), and features versus holistic information (Grill-Spector, 2001). Consistent with our results, one neuroimaging study revealed a right-fusiform advantage for the processing of faces as a whole and a left-fusiform superiority for the processing of facial features (Rossion et al., 2000). Similarly, another recent study reported that successful episodic memory encoding of faces relied on the left fusiform cortex because of the involvement of feature/part information processing (Mei et al., 2010).

We also found that laterality in the fusiform subregions was differentially modulated by the artificial language’s orthographic transparency. In particular, the effect of orthographic transparency on laterality manifested in the posterior fusiform region, but not in the anterior region. The lack of effect in the anterior fusiform region probably reflects the fact that the anterior fusiform region is mainly responsible for semantic processing (Seghier & Price, 2011; Simons et al., 2003; Xue & Poldrack, 2007), and no semantic component was included in our training. The involvement of the anterior fusiform region in semantic processing has also been confirmed by many other studies, which found greater activation in the anterior fusiform region for materials with semantics (e.g., words) than for those without semantics (e.g., pseudowords) (Herbster, Mintun, Nebes, & Becker, 1997; Mechelli et al., 2005), or for semantic tasks than for perceptual/phonological tasks (Binder, Desai, Graves, & Conant, 2009; Sharp et al., 2010).

In sum, using the artificial language training paradigm to remove the effect of visual appearance, our study clearly demonstrated that orthographic transparency affected fusiform laterality and that the effect varied across different subregions of the fusiform. These results provide an alternative account (i.e., orthographic transparency) for the differences between Chinese and alphabetic languages in fusiform laterality, and support the interactive and anterior-to-posterior distinction views on the functions of the fusiform in reading.

Acknowledgments

This work was supported by the National Science Foundation (grant numbers BCS 0823624 and BCS 0823495), the National Institutes of Health (grant number HD057884-01A2), the 111 Project (B07008), the National Natural Science Foundation of China (31130025), and the Program for New Century Excellent Talents in University (NCET-09-0234).

References

- Bai Je, Shi J, Jiang Y, He S, Weng X. Chinese and Korean Characters Engage the Same Visual Word Form Area in Proficient Early Chinese-Korean Bilinguals. PLoS ONE. 2011;6(7):e22765. doi: 10.1371/journal.pone.0022765.

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in FMRI. NeuroImage. 2003;20(2):1052–1063. doi: 10.1016/S1053-8119(03)00435-X.

- Binder JR, Desai RH, Graves WW, Conant LL. Where Is the Semantic System? A Critical Review and Meta-Analysis of 120 Functional Neuroimaging Studies. Cerebral Cortex. 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055.

- Bolger DJ, Perfetti CA, Schneider W. Cross-cultural effect on the brain revisited: universal structures plus writing system variation. Hum Brain Mapp. 2005;25(1):92–104. doi: 10.1002/hbm.20124.

- Chee MWL, Tan EW, Thiel T. Mandarin and English single word processing studied with functional magnetic resonance imaging. J Neurosci. 1999;19(8):3050–3056. doi: 10.1523/JNEUROSCI.19-08-03050.1999.

- Chee MWL, Weekes B, Lee KM, Soon CS, Schreiber A, Hoon JJ, et al. Overlap and Dissociation of Semantic Processing of Chinese Characters, English Words, and Pictures: Evidence from fMRI. NeuroImage. 2000;12(4):392–403. doi: 10.1006/nimg.2000.0631.

- Chen C, Xue G, Dong Q, Jin Z, Li T, Xue F, et al. Sex determines the neurofunctional predictors of visual word learning. Neuropsychologia. 2007;45(4):741–747. doi: 10.1016/j.neuropsychologia.2006.08.018.

- Chen C, Xue G, Mei L, Chen C, Dong Q. Cultural neurolinguistics. In: Joan YC, editor. Progress in Brain Research. Elsevier; 2009. pp. 159–171.

- Chen Y, Fu S, Iversen SD, Smith SM, Matthews PM. Testing for Dual Brain Processing Routes in Reading: A Direct Contrast of Chinese Character and Pinyin Reading Using fMRI. Journal of Cognitive Neuroscience. 2002;14(7):1088–1098. doi: 10.1162/089892902320474535.

- Chen YP, Allport DA, Marshall JC. What are the functional orthographic units in Chinese word recognition: the stroke or the stroke pattern? Quarterly Journal of Experimental Psychology. 1996;49:1024–1043.

- Cohen L, Dehaene S. Specialization within the ventral stream: the case for the visual word form area. NeuroImage. 2004;22(1):466–476. doi: 10.1016/j.neuroimage.2003.12.049.

- Cohen L, Lehericy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the Visual Word Form Area. Brain. 2002;125(5):1054–1069. doi: 10.1093/brain/awf094.

- Dale AM. Optimal experimental design for event-related fMRI. Human Brain Mapping. 1999;8(2–3):109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Ellis NC, Natsume M, Stavropoulou K, Hoxhallari L, Daal VHPV, Polyzoe N, et al. The Effects of Orthographic Depth on Learning to Read Alphabetic, Syllabic, and Logographic Scripts. Reading Research Quarterly. 2004;39(4):438–468.

- Grill-Spector K. Semantic versus perceptual priming in fusiform cortex. Trends Cogn Sci. 2001;5:227–228. doi: 10.1016/s1364-6613(00)01665-x.

- Guo Y, Burgund ED. Task effects in the mid-fusiform gyrus: A comparison of orthographic, phonological, and semantic processing of Chinese characters. Brain and Language. 2010;115(2):113–120. doi: 10.1016/j.bandl.2010.08.001.

- Hellige JB, Laeng B, Michimata C. Processing Asymmetries in the Visual System. In: Hugdahl K, Westerhausen R, editors. The Two Halves of the Brain. MIT Press; Cambridge, MA: 2010.

- Herbster AN, Mintun MA, Nebes RD, Becker JT. Regional cerebral blood flow during word and nonword reading. Human Brain Mapping. 1997;5(2):84–92. doi: 10.1002/(sici)1097-0193(1997)5:2<84::aid-hbm2>3.0.co;2-i.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Medical Image Analysis. 2001;5(2):143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Kitterle FL, Selig LM. Visual field effects in the discrimination of sine-wave gratings. Percept Psychophys. 1991;50:15–18. doi: 10.3758/bf03212201.

- Kuo WJ, Yeh TC, Duann JR, Wu YT, Ho LT, Hung D, et al. A left-lateralized network for reading Chinese words: a 3 T fMRI study. Neuroreport. 2001;12(18):3997–4001. doi: 10.1097/00001756-200112210-00029.

- Kuo WJ, Yeh TC, Lee CY, Wu YT, Chou CC, Ho LT, et al. Frequency effects of Chinese character processing in the brain: an event-related fMRI study. NeuroImage. 2003;18(3):720–730. doi: 10.1016/s1053-8119(03)00015-6.

- Kuo WJ, Yeh TC, Lee JR, Chen LF, Lee PL, Chen SS, et al. Orthographic and phonological processing of Chinese characters: an fMRI study. NeuroImage. 2004;21(4):1721–1731. doi: 10.1016/j.neuroimage.2003.12.007.

- Liu C, Zhang WT, Tang YY, Mai XQ, Chen HC, Tardif T, et al. The Visual Word Form Area: Evidence from an fMRI study of implicit processing of Chinese characters. NeuroImage. 2008;40(3):1350–1361. doi: 10.1016/j.neuroimage.2007.10.014.

- Liu Y, Dunlap S, Fiez J, Perfetti C. Evidence for neural accommodation to a writing system following learning. Hum Brain Mapp. 2007;28(11):1223–1234. doi: 10.1002/hbm.20356.

- Mechelli A, Crinion JT, Long S, Friston KJ, Ralph MAL, Patterson K, et al. Dissociating Reading Processes on the Basis of Neuronal Interactions. Journal of Cognitive Neuroscience. 2005;17(11):1753–1765. doi: 10.1162/089892905774589190.

- Mei L, Xue G, Chen C, Xue F, Zhang M, Dong Q. The “visual word form area” is involved in successful memory encoding of both words and faces. NeuroImage. 2010;52(1):371–378. doi: 10.1016/j.neuroimage.2010.03.067.

- Mei L, Xue G, Lu Z, He Q, Zhang M, Xue F, et al. The Neural Substrates Underlying Addressed and Assembled Phonologies: An Artificial Language Training Study. doi: 10.1371/journal.pone.0093548. submitted.

- Paulesu E, Démonet JF, Fazio F, McCrory E, Chanoine V, Brunswick N, et al. Dyslexia: Cultural Diversity and Biological Unity. Science. 2001;291(5511):2165–2167. doi: 10.1126/science.1057179.

- Paulesu E, McCrory E, Fazio F, Menoncello L, Brunswick N, Cappa SF, et al. A cultural effect on brain function. Nat Neurosci. 2000;3(1):91–96. doi: 10.1038/71163.

- Peng D-l, Ding G-s, Perry C, Xu D, Jin Z, Luo Q, et al. fMRI evidence for the automatic phonological activation of briefly presented words. Cognitive Brain Research. 2004;20(2):156–164. doi: 10.1016/j.cogbrainres.2004.02.006.

- Peng D-l, Xu D, Jin Z, Luo Q, Ding G-s, Perry C, et al. Neural basis of the non-attentional processing of briefly presented words. Human Brain Mapping. 2003;18(3):215–221. doi: 10.1002/hbm.10096.

- Perfetti CA, Liu Y, Fiez J, Nelson J, Bolger DJ, Tan LH. Reading in two writing systems: Accommodation and assimilation of the brain’s reading network. Bilingualism: Language and Cognition. 2007;10(02):131–146.

- Price CJ, Devlin JT. The Interactive Account of ventral occipitotemporal contributions to reading. Trends in Cognitive Sciences. 2011;15(6):246–253. doi: 10.1016/j.tics.2011.04.001.

- Price CJ, Wise RJS, Frackowiak RSJ. Demonstrating the Implicit Processing of Visually Presented Words and Pseudowords. Cereb Cortex. 1996;6(1):62–70. doi: 10.1093/cercor/6.1.62.

- Robertson LC, Lamb MR. Neuropsychological contributions to theories of part/whole organization. Cognitive Psychology. 1991;23:299–330. doi: 10.1016/0010-0285(91)90012-d.

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M, Gelder Bd, et al. Hemispheric Asymmetries for Whole-Based and Part-Based Face Processing in the Human Fusiform Gyrus. Journal of Cognitive Neuroscience. 2000;12(5):793–802. doi: 10.1162/089892900562606.

- Seghier ML, Price CJ. Explaining Left Lateralization for Words in the Ventral Occipitotemporal Cortex. The Journal of Neuroscience. 2011;31(41):14745–14753. doi: 10.1523/JNEUROSCI.2238-11.2011.

- Sharp DJ, Awad M, Warren JE, Wise RJS, Vigliocco G, Scott SK. The neural response to changing semantic and perceptual complexity during language processing. Human Brain Mapping. 2010;31(3):365–377. doi: 10.1002/hbm.20871.

- Simons JS, Koutstaal W, Prince S, Wagner AD, Schacter DL. Neural mechanisms of visual object priming: evidence for perceptual and semantic distinctions in fusiform cortex. NeuroImage. 2003;19(3):613–626. doi: 10.1016/s1053-8119(03)00096-x.

- Siok WT, Perfetti CA, Jin Z, Tan LH. Biological abnormality of impaired reading is constrained by culture. Nature. 2004;431(7004):71–76. doi: 10.1038/nature02865.

- Snyder PJ, Harris LJ. Handedness, sex, and familial sinistrality effects on spatial tasks. Cortex. 1993;29(1):115–134. doi: 10.1016/s0010-9452(13)80216-x.

- Tan LH, Laird AR, Li K, Fox PT. Neuroanatomical correlates of phonological processing of Chinese characters and alphabetic words: a meta-analysis. Hum Brain Mapp. 2005;25(1):83–91. doi: 10.1002/hbm.20134.

- Tan LH, Liu HL, Perfetti CA, Spinks JA, Fox PT, Gao JH. The Neural System Underlying Chinese Logograph Reading. NeuroImage. 2001;13(5):836–846. doi: 10.1006/nimg.2001.0749.

- Tan LH, Spinks JA, Gao JH, Liu HL, Perfetti CA, Xiong J, et al. Brain activation in the processing of Chinese characters and words: A functional MRI study. Human Brain Mapping. 2000;10(1):16–27. doi: 10.1002/(SICI)1097-0193(200005)10:1<16::AID-HBM30>3.0.CO;2-M.

- Van Essen DC. Windows on the brain: the emerging role of atlases and databases in neuroscience. Current Opinion in Neurobiology. 2002;12(5):574–579. doi: 10.1016/s0959-4388(02)00361-6.

- Van Essen DC. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. NeuroImage. 2005;28(3):635–662. doi: 10.1016/j.neuroimage.2005.06.058.

- Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH. An Integrated Software Suite for Surface-based Analyses of Cerebral Cortex. Journal of the American Medical Informatics Association. 2001;8(5):443–459. doi: 10.1136/jamia.2001.0080443.

- Vigneau M, Jobard G, Mazoyer B, Tzourio-Mazoyer N. Word and non-word reading: What role for the Visual Word Form Area? NeuroImage. 2005;27(3):694–705. doi: 10.1016/j.neuroimage.2005.04.038.

- Wang H, Chang RB. Modern Chinese frequency dictionary. Beijing Language University Press; Beijing: 1985.

- Wang X, Yang J, Shu H, Zevin JD. Left fusiform BOLD responses are inversely related to word-likeness in a one-back task. NeuroImage. 2011;55(3):1346–1356. doi: 10.1016/j.neuroimage.2010.12.062.

- Woolrich MW. Robust group analysis using outlier inference. NeuroImage. 2008;41(2):286–301. doi: 10.1016/j.neuroimage.2008.02.042.

- Woolrich MW, Behrens TEJ, Beckmann CF, Jenkinson M, Smith SM. Multilevel linear modelling for FMRI group analysis using Bayesian inference. NeuroImage. 2004;21(4):1732–1747. doi: 10.1016/j.neuroimage.2003.12.023.

- Xue G, Chen C, Jin Z, Dong Q. Cerebral Asymmetry in the Fusiform Areas Predicted the Efficiency of Learning a New Writing System. Journal of Cognitive Neuroscience. 2006a;18(6):923–931. doi: 10.1162/jocn.2006.18.6.923.

- Xue G, Chen C, Jin Z, Dong Q. Language experience shapes fusiform activation when processing a logographic artificial language: An fMRI training study. NeuroImage. 2006b;31(3):1315–1326. doi: 10.1016/j.neuroimage.2005.11.055.

- Xue G, Dong Q, Chen K, Jin Z, Chen C, Zeng Y, et al. Cerebral asymmetry in children when reading Chinese characters. Cognitive Brain Research. 2005;24(2):206–214. doi: 10.1016/j.cogbrainres.2005.01.022.

- Xue G, Dong Q, Jin Z, Chen C. Mapping of verbal working memory in nonfluent Chinese-English bilinguals with functional MRI. NeuroImage. 2004;22(1):1–10. doi: 10.1016/j.neuroimage.2004.01.013.

- Xue G, Poldrack RA. The Neural Substrates of Visual Perceptual Learning of Words: Implications for the Visual Word Form Area Hypothesis. Journal of Cognitive Neuroscience. 2007;19(10):1643–1655. doi: 10.1162/jocn.2007.19.10.1643.

- Yoncheva YN, Blau VC, Maurer U, McCandliss BD. Attentional Focus During Learning Impacts N170 ERP Responses to an Artificial Script. Developmental Neuropsychology. 2010;35(4):423–445. doi: 10.1080/87565641.2010.480918.
