Abstract

The Children’s Health Insurance Program Reauthorization Act of 2009 (CHIPRA) includes provisions for identifying standardized pediatric care quality measures. These 24 “CHIPRA measures” were designed to be evaluated by using claims data from health insurance plan populations. Such data have limited ability to evaluate population health, especially among uninsured people. The rapid expansion of data from electronic health records (EHRs) may help address this limitation by augmenting claims data in care quality assessments. We outline how to operationalize many of the CHIPRA measures for application in EHR data through a case study of a network of >40 outpatient community health centers in 2009–2010 with a single EHR. We assess the differences seen when applying the original claims-based versus adapted EHR-based specifications, using 2 CHIPRA measures (Chlamydia screening among sexually active female patients; BMI percentile documentation) as examples. Sixteen of the original CHIPRA measures could feasibly be evaluated in this dataset. Three main adaptations were necessary (specifying a visit-based population denominator, calculating some pregnancy-related factors by using EHR data, substituting for medication dispense data). Although it is feasible to adapt many of the CHIPRA measures for use in outpatient EHR data, information is gained and lost depending on how numerators and denominators are specified. We suggest first steps toward application of the CHIPRA measures in uninsured populations, and in EHR data. The results highlight the importance of considering the limitations of the original CHIPRA measures in care quality evaluations.

KEY WORDS: health care quality assessment, health care quality indicators, pediatric care quality assessment, CHIPRA measures, electronic health record data collection

Several national efforts have developed standardized measures of health care quality for use in quality assessment and reporting. Although most are measures of adults’ care, the Children’s Health Insurance Program Reauthorization Act of 2009 (CHIPRA), mainly adopted to provide health insurance coverage for an estimated 6 million children,1 includes provisions for identification of an initial core set of recommended pediatric quality measures. The Centers for Medicare and Medicaid Services and the Agency for Healthcare Research and Quality developed this set of measures.

The “CHIPRA core measures” were developed based on pediatric health care quality indicators previously used in public and private health insurance reporting on components of recommended preventive care and treatment of common morbidities.1–5 Measures were included in the initial set based on their validity, feasibility of use by Medicaid and Children’s Health Insurance Programs, and importance to health outcomes.

The measures were intended to advance evaluation of pediatric health care quality in the United States.2,6 Medicaid and states’ Children’s Health Insurance Programs are encouraged to use the recommended measures to assess the quality of services provided to the children they serve. With that application in mind, measures were primarily selected to be evaluated by using claims data from health insurance plan enrollee populations. Thus, most of the CHIPRA measures are annual benchmarks expressed as rates, with denominators specified to include people continuously enrolled in an insurance program during the measurement year.

Ten of the measures are designed to be calculated by using administrative data only; that is, claims data. Two are calculated by using claims data linked to vital records data. Eleven are “hybrid” measures, designed to be calculated by using either claims data or a combination of claims data and systematic review of medical records. The remaining measure uses data from a patient survey.7 None of the current CHIPRA measures are designed to be calculated by using automated data from an electronic health record (EHR), presumably because not all health plans currently have access to EHR data and/or have the functional ability to search EHR data.

The initial core measures have some important limitations.8–11 Health insurance claims data include only services provided during a period of coverage under any given plan. Thus, the CHIPRA measures could compare the quality of care received by people continuously enrolled in one plan, or possibly compare longitudinal data from enrollees in different plans. Claims-based measures cannot be used to evaluate care provided to uninsured or discontinuously insured people, however, limiting the measures’ usefulness in assessing care quality in a clinic if patients’ payers change, or if patients lose coverage but are still seen at the clinic.8,12–14 This is unfortunate, as such assessments would be particularly helpful in evaluating how health care reforms initiated through the Patient Protection and Affordable Care Act affect care quality.15 Further, claims data represent bills issued to insurance plans, potentially missing important care information, as not all care is consistently billed; for example, claims may be entered only if providers believe the services will be reimbursed.16,17

Data from EHRs may help to address the limitations of the claims-based CHIPRA measures. The use of EHRs in ambulatory care settings has rapidly expanded in recent years owing to federal mandates and incentives, especially in community health centers (CHCs) and other settings where uninsured children receive care.18–20 As the availability and quality of EHR data continue to improve, these data could replace or augment administrative claims data in care quality assessments. This article discusses the feasibility of applying the CHIPRA measures in EHR data from a network of outpatient CHCs; suggests how these measures could be adapted for application in outpatient EHR data (including both claims and medical record data); and assesses the differences in results seen when applying the original claims-based versus adapted EHR-based specifications, by using 2 measures as examples. This study was reviewed and approved by the Oregon Health & Science University Institutional Review Board.

Methods

Case Study: EHR Data From the OCHIN Collaborative of CHCs

To determine the feasibility of applying the CHIPRA measures in outpatient EHR data, we collaborated with OCHIN (originally Oregon Community Health Information Network, now just OCHIN), a 501(c)(3) collaboration of CHCs in more than 50 primary care member organizations from several states.21 OCHIN provides and maintains a comprehensive Epic EHR infrastructure for its member clinics. All OCHIN clinics share this EHR; patients have a single health record number linked across all sites. OCHIN clinics provide ambulatory care regardless of patients’ ability to pay. Almost all OCHIN clinic patients are from households below 200% of the federal poverty level; about half of visits are from uninsured people, and half from publicly insured people.

The OCHIN EHR includes administrative data for all member clinics, and clinical data for most of these clinics. The administrative data include appointment information and diagnostic and procedure codes (similar to billing data in insurance claims); the full EHR clinical data contains problem lists, physician notes, prescription records, laboratory results, and so forth. OCHIN has these data for both insured and uninsured patients. The OCHIN clinics’ data can be searched electronically, without manual chart review; are stored in a central repository; and are regularly checked and “cleaned” by OCHIN data staff and clinic staff.

Adapting the CHIPRA Measures to EHR Data

To assess the extent to which clinical data could be used to evaluate each of the CHIPRA measures, and how each measure could be adapted for application in this outpatient EHR dataset, we used an iterative process involving input from researchers, policy makers, informatics experts, and clinicians. We started with the 2011 CHIPRA Initial Core Set Technical Specifications Manual’s numerator and denominator specifications.7 We reviewed each measure’s specifications to determine whether the needed data points could be obtained in OCHIN’s EHR data, and how each measure could be adapted for application in the EHR data while maintaining the integrity of its original specifications.

Two Examples Comparing Original and Adapted CHIPRA Measure Specifications

For 2 CHIPRA measures, we compared the results obtained from the original, claims-based measure specifications, to the results obtained when using our “adapted” specifications, which include electronically abstracted EHR clinical data. We calculated the descriptive differences between the numerators and denominators obtained by using each method. We conducted these analyses among Oregon clinics from OCHIN’s collaborative that had the full EHR in operation before the start of the measurement year (44 clinics in 2009 and 47 clinics in 2010), so that both administrative and clinical data were available for our study population.

Example 1: Rates of Annual Chlamydia Screening Among Sexually Active Women Aged 16 to 24

This CHIPRA measure assesses the percentage of sexually active young women receiving Chlamydia screening in a given measurement year. In both the original and adapted versions of this measure, the numerator is based on the CHIPRA specifications’ list of Current Procedural Terminology (CPT) and Logical Observation Identifiers Names and Codes (LOINC) codes for Chlamydia screening. In this example, we assessed whether adapting the measure for use in EHR data could improve the accuracy of the measure’s denominator. The CHIPRA denominator for this measure is “sexually active women aged 16 to 24 who had ≤1 enrollment gap of up to 45 days during the measurement year.” We identified young women aged 16 to 24 who had ≥1 clinic visit during the measurement year, defining visits by using the CHIPRA measures’ list of “visit type” codes. We then identified which of these women were sexually active, comparing 2 identification methods: (1) the original CHIPRA specifications, based on claims data, and (2) our adapted specifications, based on EHR data.

The original claims-based specifications consider a female patient to be sexually active if, at any point in the measurement year, her claims data contain 1 of a set of CPT, Healthcare Common Procedure Coding System, International Classification of Diseases, Ninth Revision diagnosis, or LOINC codes that identify sexually transmitted infections, pregnancy tests or care, or prescriptions for contraceptives. In the “adapted” specifications, we captured data on sexual activity from the OCHIN EHR’s “social history” section, which includes a field for “Sexual Activity,” with response options “Yes,” “No,” “Not currently,” and “Not asked.” We considered a woman ever sexually active if indicated by “Yes” or “Not currently” in the social history field.

In both specifications, we considered a person sexually active if the relevant codes (original measure) or sexual activity status in the social history section (adapted measure) were ever seen in the medical record, before or including the first visit in a given measurement year; to better capture women who ever had sex and therefore should receive screening, we did not limit our capture to data only from the measurement year, as the CHIPRA measure specifies.
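The comparison of the 2 identification methods can be sketched in code. The following is a minimal illustration only, not the study's actual extraction logic: the `PatientRecord` structure and its field names are hypothetical, and the qualifying claims-type codes are assumed to have been resolved to dates upstream. It applies the lookback rule described above (any evidence dated on or before the first visit in the measurement year).

```python
from dataclasses import dataclass, field
from datetime import date

# Social history responses treated as "ever sexually active" in the adapted specification.
ACTIVE_RESPONSES = {"Yes", "Not currently"}


@dataclass
class PatientRecord:
    """Hypothetical per-patient extract; all field names are illustrative."""
    first_visit_in_year: date
    # Dates of codes for STIs, pregnancy tests or care, or contraceptive prescriptions.
    qualifying_code_dates: list = field(default_factory=list)
    # (date, response) pairs from the social history "Sexual Activity" field.
    social_history: list = field(default_factory=list)


def classify_sexual_activity(p: PatientRecord) -> str:
    """Return which specification(s) identify the patient as sexually active."""
    cutoff = p.first_visit_in_year
    by_codes = any(d <= cutoff for d in p.qualifying_code_dates)
    by_history = any(
        d <= cutoff and response in ACTIVE_RESPONSES
        for d, response in p.social_history
    )
    if by_codes and by_history:
        return "both"
    if by_codes:
        return "original_only"
    if by_history:
        return "ehr_only"
    return "neither"
```

The 4 return values correspond to the subsets reported in Table 3; a patient whose only evidence is dated after her first visit of the year falls into "neither" under this sketch.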

We calculated rates separately for women who were pregnant during the measurement year. We defined pregnancy by using the EHR, which applies a complex, validated algorithm to identify pregnancies based on data from multiple sources.

Example 2: Rates of BMI Percentile Documentation

This measure assesses the rate of documented BMI percentile among children aged 3 to 17 in a given measurement year. The original claims-based specifications of this measure limit the denominator to children (aged 3–17) who had ≤1 gap in enrollment of up to 45 days and ≥1 outpatient visit with a primary care provider or obstetrician/gynecologist in the measurement year. Similarly, the denominator in our calculations included children with ≥1 outpatient visit. In this example, we assessed how adapting the BMI measure for use in EHR data could improve the accuracy of the numerator. The numerator in the original claims-based specifications uses International Classification of Diseases, Ninth Revision codes for BMI documentation. Our adapted specifications captured data on BMI percentile as documented in the EHR’s vitals data. We then compared the rates by using each method. As suggested in the original specifications, we present data for pregnant adolescents separately.
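A minimal sketch of the numerator comparison follows, under stated assumptions. The function name and input shapes are hypothetical, and the code assumes that the relevant ICD-9 codes are the pediatric BMI percentile codes in the V85.5x range; the exact code list should be taken from the CHIPRA technical specifications.

```python
from collections import Counter

# Assumption: pediatric BMI percentile documentation is coded as ICD-9 V85.5x.
BMI_PERCENTILE_PREFIX = "V85.5"


def bmi_documentation_source(icd9_codes, vitals_bmi_percentiles):
    """Classify where BMI percentile documentation was found for one child.

    icd9_codes: diagnosis codes billed in the measurement year.
    vitals_bmi_percentiles: BMI percentile values from EHR vitals (None if blank).
    """
    in_claims = any(code.startswith(BMI_PERCENTILE_PREFIX) for code in icd9_codes)
    in_vitals = any(value is not None for value in vitals_bmi_percentiles)
    if in_claims and in_vitals:
        return "both"
    if in_claims:
        return "original_only"
    if in_vitals:
        return "adapted_only"
    return "neither"


# Illustrative (made-up) records for 3 children with >=1 visit in the year.
children = [
    (["V85.52"], [61.0]),   # coded and recorded in vitals
    (["462"], [88.5]),      # recorded in vitals only
    (["462"], [None]),      # not documented in either source
]
tally = Counter(bmi_documentation_source(codes, vitals) for codes, vitals in children)
```

Tallying the 4 categories across the visit-based denominator yields the kind of cross-classification reported for this measure.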

Results

Feasibility of Applying the CHIPRA Measures in Outpatient EHR Data

We determined that 16 of the 24 original CHIPRA measures could feasibly be evaluated in the OCHIN outpatient EHR dataset (Table 1); the rest required data not available in EHR data from primary care clinics, such as patient-reported, hospital, or dental care data (Table 2).

TABLE 1.

CHIPRA Measures Feasible in Our Outpatient EHR Data, and Suggested Adaptations Needed

| Summary of Original CHIPRA Measure | Suggested Adaptations for Application in EHR Data | Relevant Adaptation Themes<sup>a</sup> |
|---|---|---|
| 1. Timeliness of PNC | | I, II |
| N: Number who received PNC with appropriate timeliness | N: Same | |
| D: Live births to continuously enrolled women in the measurement year | D: Women with both ≥1 PNC visit, and ≥1 WCV or postpartum visit, in the measurement year | |
| 2. Frequency of PNC | | I, II |
| N: Number who received PNC with appropriate frequency | N: Same | |
| D: Live births to continuously enrolled women in the measurement year | D: Women with both ≥1 PNC visit, and ≥1 WCV or postpartum visit, in the measurement year | |
| 3. Cesarean deliveries: data from regional vital statistics records | | I, II |
| N: Number delivered by cesarean delivery | N: Same | |
| D: Live births in the measurement year | D: Women with both ≥1 PNC visit, and ≥1 WCV or postpartum visit, in the measurement year; obtain EHR data | |
| 4. Low birth weight births: data from regional vital statistics records | | I, II |
| N: Number with birth weight <2500 g, and a Medicaid/Children’s Health Insurance Programs payer | N: Number with birth weight <2500 g | |
| D: Live births in the measurement year | D: Women with both ≥1 PNC visit, and ≥1 WCV or postpartum visit, in the measurement year; obtain EHR data | |
| 5. Annual Chlamydia screening | | I, III |
| N: Number who had a Chlamydia screening in the measurement year | N: Same | |
| D: Women aged 16–24, with continuous enrollment in the measurement year, who are identified as sexually active using an algorithm that includes codes for pregnancy testing and care, STIs, and dispensed prescription contraceptive medications. (In the original measure, a person is considered sexually active if these codes are seen at any point during the measurement year. In the example presented in Table 3, a person is considered sexually active if identified as such in the medical record at any point prior to or including the first visit in the measurement year.) | D: Women aged 16–24, with ≥1 visit in the measurement year, who are identified as sexually active using an algorithm that includes codes for pregnancy testing and care, STIs, and prescribed contraceptive medications, augmented by data from the EHR social history section | |
| 6. Immunization status: 0–2 y | | I |
| N: Number who received recommended immunizations by age 2 y | N: Same | |
| D: Children with continuous enrollment in the measurement year | D: Children/adolescents with ≥1 visit in the measurement year (We do not suggest requiring a visit in the year prior, as rates of vaccination among persons who had any visit identify “missed opportunities” for vaccination.) | |
| 7. Immunization status: 11–13 y | | I |
| N: Number who received recommended immunizations by age 13 y | N: Same | |
| D: Adolescents with continuous enrollment in the measurement year | D: Children/adolescents with ≥1 visit in the measurement year (We do not suggest requiring a visit in the year prior, as rates of vaccination among persons who had any visit identify “missed opportunities” for vaccination.) | |
| 8. BMI percentile documentation | | I |
| N: Number who had BMI percentile documented (using ICD9 codes) in the measurement year | N: Same, augmented by BMI data from the EHR | |
| D: Children/teens with continuous enrollment, and ≥1 visit, in the measurement year | D: Children/teens with ≥1 visit in the measurement year | |
| 9. Well-child care: Infants | | I |
| N: Number who had appropriate number of well-child checks by 15 mo | N: Same | |
| D: Children with continuous enrollment in the measurement year | D: Children with ≥1 visit in the measurement year | |
| 10. Well-child care: Age 3–6 y | | I |
| N: Number who had appropriate number of well-child checks in years 3–6 | N: Same | |
| D: Children with continuous enrollment in the measurement year | D: Children with ≥1 visit in the measurement year, and ≥1 visit in the year prior | |
| 11. Well-child care: Age 12–21 y | | I |
| N: Number who had the appropriate number of well-child checks in years 12–21 | N: Same | |
| D: Children with continuous enrollment in the measurement year | D: Children with ≥1 visit in the measurement year, and ≥1 visit in the year prior | |
| 12. Developmental screening: Age 12–36 mo | | I |
| N: % of children who were screened for risk of developmental, behavioral, and social delays using a standardized screening tool in the first 3 years of life | N: Same | |
| D: Children with continuous enrollment in the measurement year (Although developmental screening is not reported in a standardized way in our data, this measure would be feasible with consistent reporting).29 | D: Children with ≥1 visit in the measurement year, and ≥1 visit in the year prior | |
| 13. Follow-up on ADHD medication | | III |
| N: (a) Number with ≥1 in-person follow-up visit within 30 d of dispense date; (b) no. in (a) who also had ≥2 follow-up visits within 31–300 d of dispense date | N: (a) Number with ≥1 in-person follow-up visit within 30 d of prescription date; (b) no. in (a) who also had ≥2 follow-up visits within 270 d of initial prescription | |
| D: Children age 6–12 y, continuously enrolled 120 d pre- and 30 d postambulatory dispense of an ADHD medication, who stayed on the medication ≥210 d | D: Children aged 6–12 y who were prescribed an ADHD medication, and stayed on it for ≥210 d; duration of prescription may need to be estimated | |
| 14. Annual HbA1c testing | | III |
| N: Number with documentation of date and result of most recent HbA1c test [Requires manual chart review] | N: Same [Does not require manual chart review] | |
| D: Children age 5–17 y, ≥2 visits with diabetes diagnosis over the past 2 y, and/or notation of prescribed insulin or oral hypoglycemics/antihyperglycemics for ≥12 mo | D: Children age 5–17 y, ≥2 visits with a diabetes diagnosis over the past 2 y, and/or notation of same prescribed meds as in original; duration of prescription may need to be estimated | |
| 15. Strep testing when dispensing antibiotics | | I, III; also no ED data |
| N: Number with a strep test administered in the 3 d prior to 3 d after the date of the pharyngitis diagnosis | N: Same | |
| D: Children aged 2–18 y, with continuous enrollment in the measurement year, who had an outpatient or ED visit with a diagnosis of pharyngitis, and were dispensed an antibiotic | D: Children aged 2–18 y who had an outpatient visit with a diagnosis of pharyngitis, and were issued an antibiotic prescription | |
| 16. Antimicrobials for otitis media | | None |
| N: Number not prescribed systemic antimicrobial agents | N: Same | |
| D: Patients aged 2 mo to 12 y with an OME diagnosis | D: Same | |

ADHD, attention-deficit/hyperactivity disorder; D, denominator; ED, emergency department; HbA1c, Hemoglobin A1c; ICD9, International Classification of Diseases, Ninth Revision; N, numerator; OME, otitis media with effusion; PNC, prenatal care; STI, sexually transmitted infection; WCV, well-child visit.

<sup>a</sup> Adaptation themes include (I) Defining a population denominator; (II) Calculating data on trimester at enrollment by using available EHR data; (III) Substituting for medication dispense data.

TABLE 2.

CHIPRA Measures Not Feasible in Our Outpatient EHR Data, and Explanation

| Summary of Original CHIPRA Measure | Reason Why Adaptation Not Feasible |
|---|---|
| 17. Continuously enrolled children/adolescents with access to primary care physicians | No enrollment data; cannot identify “eligible” people who receive no care |
| 18. Continuously enrolled children receiving dental preventive services | Some clinics provide dental services, but these are recorded in a different system. Other clinics do not provide these services. |
| 19. Continuously enrolled children receiving dental treatment services | Same as for measure 18 |
| 20. Average number of emergency department visits | The clinics provide outpatient care only; no hospital or emergency department data |
| 21. Asthma patients with ≥1 asthma-related emergency department visits | Same as for measure 20 |
| 22. Catheter-associated blood stream infections per line day in PICU, NICU | Same as for measure 20 |
| 23. Follow-up after hospitalization for mental illness | Same as for measure 20 |
| 24. Ratings assigned by parents of children with chronic conditions to various aspects of care, as collected in the Consumer Assessment of Healthcare Providers and Systems Medicaid version 4.0 survey (including supplemental chronic illness items) for parents of children and youth 0–18 y old | Survey measure not collected |

Adaptations Necessary to Apply the CHIPRA Measures in Outpatient EHR Data

There were 3 main adaptations necessary to apply the CHIPRA measures in the OCHIN EHR data set. We outline these as follows and include examples of their application.

I. Specifying a Population Denominator

Because our EHR data are not limited to health plan enrollees, we developed a visit-based denominator relevant to patients who were “active” at the study clinics in the measurement year. Examples are discussed here; for a complete list, see Table 1.

In the original CHIPRA prenatal (Table 1, numbers 1 and 2) and birth outcome measures (numbers 3 and 4), the denominators are live births in an enrolled population. In the EHR data, there was no direct way to identify live births. Thus, we recommend that EHR-based analyses be limited to populations of women with ≥1 prenatal care (PNC) visit and ≥1 infant/first-year well-child visit (WCV) or postpartum visit. We recognize that this specification might bias the results toward women with fewer options about where to receive care, and/or toward those who sought more care; however, including women with only a WCV or postpartum visit (and no PNC visit) could lead to even greater bias, as these women may have received PNC elsewhere. Further, including women who had only a PNC visit (and no WCV or postpartum visit) could also underestimate rates of receipt of appropriate care because some women who received a PNC visit might not have taken the pregnancy to term (and thus might not be expected to continue PNC), or might have transferred PNC, or received WCVs elsewhere.

The original specifications of the childhood preventive care measures (Table 1, numbers 6 and 7, 9–12) are also based on an enrolled population. To calculate these measures for 3- to 6- and 12- to 21-year-olds in EHR data, we suggest defining the denominator as children and youth who have ≥1 clinic visit in a given measurement year and also had ≥1 visit in the year prior, to identify “established” clinic patients. This requirement selects for regular care users, which could lead to falsely elevated performance on this measure. If children with only 1 acute visit are included, however, it could underestimate rates of appropriate WCV receipt, as patients who have only 1 visit may not be “established” at the clinic, and possibly received this care elsewhere.
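The visit-based denominator rule can be expressed compactly. The sketch below is illustrative only: the function name and the list-of-dates input are hypothetical, and it assumes the measurement year is a calendar year.

```python
from datetime import date


def in_visit_based_denominator(visit_dates, measurement_year, require_prior_year=True):
    """Return True if a patient belongs in the visit-based denominator.

    visit_dates: dates of qualifying clinic visits for one patient.
    require_prior_year: if True, also require >=1 visit in the year before the
        measurement year, to restrict the denominator to "established" patients.
    """
    visit_years = {d.year for d in visit_dates}
    if measurement_year not in visit_years:
        return False
    if require_prior_year:
        return (measurement_year - 1) in visit_years
    return True


# A child seen once in 2009 and once in 2010 counts as "established" for 2010.
established = in_visit_based_denominator([date(2009, 5, 2), date(2010, 8, 14)], 2010)
# A child with a single 2010 visit is included only if the prior-year rule is dropped.
single_visit = in_visit_based_denominator([date(2010, 8, 14)], 2010)
```

Setting `require_prior_year=False` corresponds to the looser definition suggested for the immunization measures, where any visit is treated as a potential "missed opportunity."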

II. Obtaining Data on Gestational Age and Pregnancy Outcomes By Using EHR Data

The original PNC measures (Table 1, numbers 1 and 2) account for month of pregnancy at time of enrollment. Lacking enrollment data, we suggest defining this measure by accounting for month of pregnancy at the date of the first PNC visit. The birth outcome measures (Table 1, numbers 3 and 4) are specified to be calculated by using regional vital statistics data, which include gestational age at delivery, birth weight, parity, and delivery presentation; however, such data often take time to become available. Thus, to assess current care quality, we suggest calculating these measures based on EHR data on birth weight, gestational age, pregnancy end date, delivery mode, singleton status, and parity, as recorded either at postpartum visits or the first WCV.

III. Substituting for Medication Dispense Data

Several of the original CHIPRA measures involve medication dispense data (Table 1, numbers 5, 13–15). Our EHR data include medication prescriptions, but not dispenses. Medication schedule and dosing data are available, but are currently not standardized, making it difficult to determine how long a patient remained on a medication. The medication dispense data measures can, however, be adapted to the available data. For example, the denominator for the follow-up to attention-deficit/hyperactivity disorder (ADHD) medication prescription measure requires documentation that a patient was prescribed a given ADHD medication and remained on it for ≥210 days. Without medication dispense data in the EHR, we cannot be certain that a patient received the medication, nor whether refills were authorized. Many ADHD medications cannot be issued for more than 1 month at a time, so we could estimate continuity and duration using refill date information, although this could introduce bias toward patients regularly seeking refills. In addition, patients issued prescriptions for longer than a month or those with erratic refill requests may differ from those who need regular refills. In another example, the original Hemoglobin A1c testing measure is calculated among children aged 5 to 17 with a diabetes diagnosis and/or notation of being prescribed insulin or oral hypoglycemic for ≥12 months; we suggest adapting this to children prescribed these medications in the measurement year, regardless of duration of time on the medication.
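One way to estimate time on medication from prescription dates alone is sketched below. This is an assumption-laden illustration, not the method used in the study: the function name is hypothetical, each prescription is assumed to cover 30 days, and the 15-day grace period for late refills is an arbitrary choice that an analyst would need to justify.

```python
from datetime import date, timedelta


def estimated_days_on_medication(rx_dates, days_supply=30, grace_days=15):
    """Estimate continuous days on a medication from prescription dates.

    Starts at the earliest prescription and extends coverage with each later
    prescription, stopping at the first gap longer than `grace_days` after the
    current coverage ends. Both parameters are illustrative assumptions.
    """
    if not rx_dates:
        return 0
    dates = sorted(rx_dates)
    start = dates[0]
    coverage_end = start + timedelta(days=days_supply)
    for rx in dates[1:]:
        if rx > coverage_end + timedelta(days=grace_days):
            break  # gap too long: treat the medication as discontinued
        coverage_end = max(coverage_end, rx + timedelta(days=days_supply))
    return (coverage_end - start).days


# Seven prescriptions issued every 30 days give an estimated 210 days of coverage.
monthly = [date(2009, 1, 1) + timedelta(days=30 * k) for k in range(7)]
meets_threshold = estimated_days_on_medication(monthly) >= 210
```

As noted above, an estimate of this kind favors patients who request refills regularly, so results are sensitive to the assumed days of supply and grace period.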

CHIPRA Measures Not Currently Feasible in Our Outpatient EHR Data

We cannot assess the number of children who did not receive care in our clinic network; therefore, we cannot measure access to primary care physicians. Few of our clinics offer dental services and we do not have a systematic way of documenting receipt of these services; thus, neither of the dental measures could be assessed by using the OCHIN data. Our EHR data are from outpatient visits only, and we do not now have a systematic way to capture emergency department or inpatient care use, so none of the hospital-based quality measures are feasible. Finally, 1 CHIPRA measure is based on data from the Consumer Assessment of Healthcare Providers and Systems Medicaid 4.0 survey; these self-reported data are not captured in the EHR.

Results of Comparing the Original and Adapted CHIPRA Measure Specifications: Chlamydia Screening and BMI Documentation Examples

Example 1: Annual Chlamydia Testing Among Sexually Active Female Patients

There were 8476 nonpregnant female patients aged 16 to 24 years who had ≥1 clinic visit during 2009, and 9816 in 2010 (Table 3). Among these nonpregnant patients, we identified a total of 6113 (72%) sexually active female patients in 2009 and 7166 (73%) in 2010. We identified 1999 pregnant patients in 2009 and 2098 in 2010.

TABLE 3.

Established Female Patients Aged 16 to 24, Identified as Sexually Active Using the Original CHIPRA Specifications and/or the Adapted Specifications, and Chlamydia Testing Rates, 2009–2010

| Year | Population | Not Pregnant: N Females in Specified Populations | Not Pregnant: % in Specified Population Identified as Sexually Active | Not Pregnant: N Sexually Active Who Received a Chlamydia Test | Not Pregnant: % of Sexually Active Who Received a Chlamydia Test | Pregnant: N Females in Specified Populations | Pregnant: % in Specified Population Identified as Sexually Active | Pregnant: N Sexually Active Who Received a Chlamydia Test | Pregnant: % of Sexually Active Who Received a Chlamydia Test |
|---|---|---|---|---|---|---|---|---|---|
| 2009 | Total females aged 16–24 with ≥1 visit in the measurement year | 8476 | | | | 1999 | | | |
| | Subset identified as sexually active using the original measure specifications only, not found in EHR social history section<sup>a</sup> | 2241 | 36.7 | 751 | 33.5 | 709 | 36.5 | 327 | 46.1 |
| | Subset identified as sexually active in the EHR social history section only, not found using the original measure specifications<sup>b</sup> | 486 | 8.0 | 76 | 15.6 | 12 | 0.6 | 0 | 0.0 |
| | Subset identified as sexually active both in the EHR social history and when using the original measure specifications | 3386 | 55.3 | 1696 | 50.1 | 1222 | 62.9 | 628 | 51.4 |
| | Total identified as sexually active using one or both specifications | 6113 | 100 | 2523 | 41.3 | 1943 | 100 | 955 | 49.2 |
| | Subset not identified as sexually active using either set of specifications | 2363 | NA | NA | NA | 56 | NA | NA | NA |
| 2010 | Total females aged 16–24 with ≥1 visit in the measurement year | 9816 | | | | 2098 | | | |
| | Subset identified as sexually active using the original measure specifications only, not found in EHR social history section<sup>a</sup> | 2229 | 31.1 | 724 | 32.5 | 750 | 36.4 | 327 | 43.6 |
| | Subset identified as sexually active in the EHR social history section only, not found using the original measure specifications<sup>b</sup> | 668 | 9.3 | 77 | 11.5 | 22 | 1.1 | 0 | 0.0 |
| | Subset identified as sexually active both in the EHR social history and when using the original measure specifications | 4269 | 59.6 | 1955 | 45.8 | 1289 | 62.5 | 554 | 43.0 |
| | Total identified as sexually active using one or both specifications | 7166 | 100 | 2756 | 38.5 | 2061 | 100 | 881 | 42.7 |
| | Subset not identified as sexually active using either set of specifications | 2650 | NA | NA | NA | 37 | NA | NA | NA |

Data Source: OCHIN linked CHC EHR data. NA, not applicable.

<sup>a</sup> Identified as sexually active using codes for pregnancy testing and care, STIs, and prescribed contraceptive medications, as found in claims data.

<sup>b</sup> Identified as sexually active using data from the EHR’s social history section for “Sexual Activity”: ever “Yes” or “Not currently.”

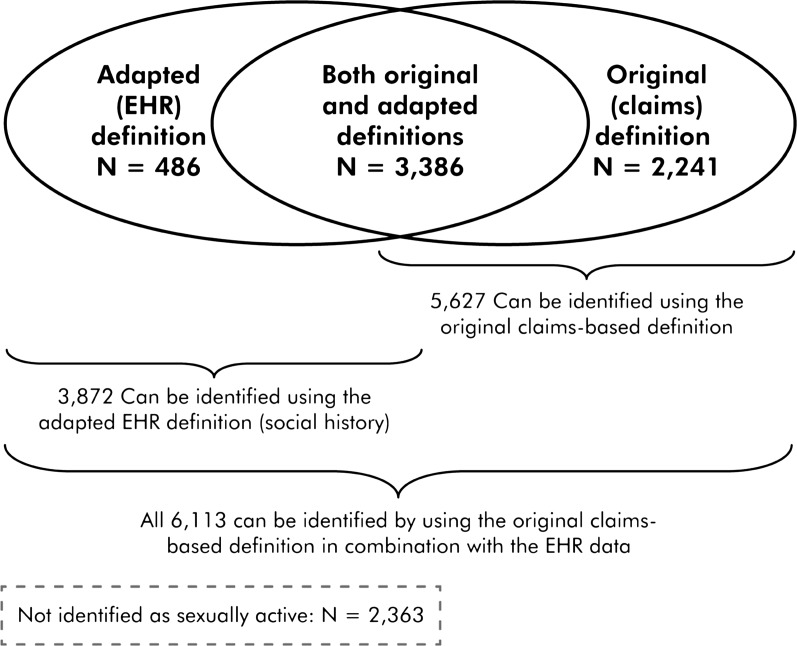

Most nonpregnant patients identified as sexually active (55% in 2009, 60% in 2010) were found by using both EHR social history documentation and the original CHIPRA measure specifications (ie, claims codes associated with oral contraceptives, pregnancy tests, or tests for sexually transmitted infections) (Table 3 and Fig 1). Thirty-seven percent of sexually active female patients in 2009, and 31% in 2010, were identified by using the original specifications only (no corresponding identification in the EHR). In contrast, 8% in 2009 and 9% in 2010 could be identified as sexually active in the EHR social history section only (no corresponding identification using the original specifications). The resulting rates of annual Chlamydia testing differed depending on how the denominator was identified. Table 3 illustrates how rates might change depending on the method of identifying sexually active women. Also of note, 56 women known to be pregnant in 2009 and 37 known to be pregnant in 2010 were not identified as sexually active by using either the original or the adapted measure specifications.
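To make the dependence on the denominator concrete, the short calculation below recombines the 2009 nonpregnant counts from Table 3. It is an arithmetic illustration only: the grouping labels are ours, and the "original specifications" denominator here is the visit-based, lookback-modified version used in this example rather than the enrollment-based CHIPRA denominator.

```python
# 2009 nonpregnant counts from Table 3: (identified as sexually active, tested).
counts_2009 = {
    "original_only": (2241, 751),
    "ehr_only": (486, 76),
    "both": (3386, 1696),
}


def screening_rate(groups):
    """Percent tested among patients in the given identification groups."""
    identified = sum(counts_2009[g][0] for g in groups)
    tested = sum(counts_2009[g][1] for g in groups)
    return round(100 * tested / identified, 1)


# Denominator = anyone identified by the original (claims-code) specifications.
rate_original = screening_rate(["original_only", "both"])            # 43.5
# Denominator = anyone identified in the EHR social history section.
rate_ehr = screening_rate(["ehr_only", "both"])                      # 45.8
# Denominator = anyone identified by one or both methods.
rate_union = screening_rate(["original_only", "ehr_only", "both"])   # 41.3
```

The union denominator reproduces the 41.3% reported in Table 3. The lower rate reflects the added patients identified only through the social history section, among whom 15.6% were tested.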

FIGURE 1.

Females aged 16 to 24 identified as sexually active in 2009, as identified by the original and adapted measure definitions.

Example 2: Annual BMI Percentile Documentation

When BMI percentile documentation was measured based on International Classification of Diseases, Ninth Revision codes, as in the original CHIPRA measure specifications (Table 4), about 1% of the population appeared to have had documented BMI. In contrast, when BMI percentile documentation was identified by using data from the EHR vitals fields, 71% to 73% of children age 3 to 17 years had their BMI percentile documented in the measurement year. In both years, BMI was significantly more likely to be found in the EHR only among persons aged 12 to 17 compared with those 11 and younger (χ2 P < .0001; results not shown).

TABLE 4.

BMI Measurement Documentations Among Children and Adolescents Aged 3 to 17 y, Identified by Using the Original CHIPRA Specifications and/or the Adapted Specifications, 2009–2010

| Year | Data Sources Where BMI Measurement Documentation Was Identified | Not Pregnant, Total, n (% of Total) | Not Pregnant, Age 3–11 y, n (% of Column Total) | Not Pregnant, Age 12–17 y, n (% of Column Total) | Not Pregnant, Female, n (% of Column Total) | Not Pregnant, Male, n (% of Column Total) | Pregnant, 12–17 y, Female, n (% of Column Total) |
|---|---|---|---|---|---|---|---|
| 2009 | Identified in the original (claims-based) measure only | 1 (0.0) | 1 (0.0) | 0 (0.0) | 0 (0.0) | 1 (0.0) | 0 (0.0) |
| 2009 | Identified in the adapted (EHR vitals data) measure only | 18 227 (70.6) | 9899 (68.8) | 8328 (72.9) | 9343 (70.2) | 8884 (71.1) | 176 (66.4) |
| 2009 | Identified in both the original and the adapted measure | 30 (0.1) | 16 (0.1) | 14 (0.1) | 17 (0.1) | 13 (0.1) | 1 (0.4) |
| 2009 | Identified in neither data source (claims or EHR) | 7546 (29.2) | 4470 (31.1) | 3076 (26.9) | 3944 (29.7) | 3602 (28.8) | 88 (33.2) |
| 2009 | Total | 25 804 (100.0) | 14 386 (100.0) | 11 418 (100.0) | 13 304 (100.0) | 12 500 (100.0) | 265 (100.0) |
| 2010 | Identified in the original (claims-based) measure only | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) |
| 2010 | Identified in the adapted (EHR vitals data) measure only | 22 743 (73.0) | 12 908 (71.7) | 9835 (74.7) | 11 496 (72.3) | 11 247 (73.6) | 232 (80.0) |
| 2010 | Identified in both the original and the adapted measure | 115 (0.4) | 58 (0.3) | 57 (0.4) | 65 (0.4) | 50 (0.3) | 0 (0.0) |
| 2010 | Identified in neither data source (claims or EHR) | 8320 (26.7) | 5044 (28.0) | 3276 (24.9) | 4330 (27.3) | 3990 (26.1) | 58 (20.0) |
| 2010 | Total | 31 178 (100.0) | 18 010 (100.0) | 13 168 (100.0) | 15 891 (100.0) | 15 287 (100.0) | 290 (100.0) |

Data Source: OCHIN linked CHC EHR.
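The contrast between the claims-based and EHR-based documentation rates can be recomputed from the Table 4 counts. The sketch below is illustrative only: the dictionary layout and function name are assumptions, and the counts are copied from the "Total" column for nonpregnant patients.

```python
# Counts copied from Table 4, "Not Pregnant, Total" column.
TABLE_4_TOTALS = {
    2009: {"original_only": 1, "adapted_only": 18227, "both": 30, "neither": 7546},
    2010: {"original_only": 0, "adapted_only": 22743, "both": 115, "neither": 8320},
}


def documentation_rates(counts):
    """Return the population size and the percent with BMI documentation
    under each specification.

    A patient counts toward the claims-based rate if identified by the
    original measure (alone or together with the adapted measure), and
    toward the EHR-based rate if identified by the adapted measure
    (alone or together with the original measure).
    """
    total = sum(counts.values())
    claims_based = counts["original_only"] + counts["both"]
    ehr_based = counts["adapted_only"] + counts["both"]
    return {
        "total": total,
        "claims_pct": round(100 * claims_based / total, 1),
        "ehr_pct": round(100 * ehr_based / total, 1),
    }


if __name__ == "__main__":
    for year, counts in TABLE_4_TOTALS.items():
        print(year, documentation_rates(counts))
```

Under these assumptions the calculation reproduces the pattern reported in the text: well under 1% of children have documentation under the claims-based specification, versus roughly 71% to 73% under the EHR-based one.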

Discussion

The CHIPRA measures are an important step toward standardizing the assessment of children’s health care quality in the United States6; however, they are designed to be evaluated by using health plan claims data, limiting their ability to assess population health care quality. Importantly, insurance claims do not include uninsured and discontinuously insured people, potentially making them “invisible” in care quality evaluations. Further, clinic-level quality evaluations based on claims alone will unfairly represent clinics serving discontinuously insured populations, as many of their patients receive services during periods without coverage.13,22,23

To measure population health care quality, including the uninsured and discontinuously insured, we assessed the feasibility of adapting the CHIPRA measures for application in EHR data, concluding that most of the measures could be adapted, with modifications. The most notable adaptation pertains to determining population denominators. Our approach to identifying an “established” patient population was to include patients with ≥1 visit in a measurement year, and in some cases a visit in the year prior. This visit-based approach differed from the original enrollment-based measure specifications. In addition, our suggested methods for adapting some of the measures’ denominators allow for either well visits or urgent outpatient visits. We made this choice on the premise that any visit can be an opportunity to deliver needed care; however, another approach would be to define denominators as persons with ≥1 “well” visits.
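The visit-based denominator described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the study's implementation: the function name, the `(patient_id, visit_date)` tuple layout, and the `require_prior_year_visit` flag are hypothetical, and the sketch treats the measurement year as a calendar year and does not distinguish well visits from urgent visits.

```python
from datetime import date


def established_patients(visits, measurement_year, require_prior_year_visit=False):
    """Return the set of patient IDs in a visit-based denominator.

    visits: iterable of (patient_id, visit_date) tuples.
    A patient is included with at least 1 visit in the measurement year;
    if require_prior_year_visit is True, a visit in the prior year is
    also required.
    """
    visits = list(visits)  # allow generators, since we scan the visits twice
    seen_in_year = {pid for pid, d in visits if d.year == measurement_year}
    if not require_prior_year_visit:
        return seen_in_year
    seen_prior_year = {pid for pid, d in visits if d.year == measurement_year - 1}
    return seen_in_year & seen_prior_year


# Hypothetical example data.
example_visits = [
    ("a", date(2009, 3, 1)),
    ("a", date(2010, 5, 2)),
    ("b", date(2010, 1, 15)),
    ("c", date(2009, 7, 7)),
]
```

With the example data, the 2010 denominator contains patients "a" and "b"; adding the prior-year requirement leaves only "a". Restricting the denominator to patients with at least 1 well visit would only require filtering `visits` by visit type before calling the function.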

We used data from a network of CHCs because these clinics serve many uninsured and discontinuously insured children, who would be missed in insurance claims data. For example, 25% of OCHIN clinics’ pediatric visits in 2009, and 18% in 2010, were self-paid, suggesting the child was uninsured. However, our suggested adaptations are relevant to any practice wanting to use EHR data to measure care quality. This approach is relevant to current policies that establish Accountable Care Organizations and similar infrastructure and that require providers to measure the quality of care delivered to populations.24 If Accountable Care Organizations are fully realized with defined populations, there may be less need for visit-based denominators; however, the current standard requires this approach for identifying a population denominator in many practices.

Claims data document services billed and associated diagnoses, which do not include all care received, as in the BMI example.17,25–27 Thus, even apparently complete claims data may yield inaccurate rates when used alone. In addition, such data are often not accessible to clinicians in a usable form. In this article, we took the next step and outlined how to operationalize many of the CHIPRA measures for use in EHR data.

Limitations to the Chlamydia Testing and BMI Documentation Examples

The examples presented in this article (Chlamydia testing and BMI percentile documentation) illustrate how quality of care measurements may vary depending on the content of the measure, the specifications, and the availability of appropriate data. For example, <1% of patients were identified as having annual BMI documentation by using claims-based specifications, versus 71% to 73% in the EHR-adapted measurement. The Chlamydia testing example demonstrated substantial variability in rates depending on how the denominator population was identified, similar to findings of Mangione-Smith et al.28

There are limitations to the “adapted” methods we used. When measuring Chlamydia testing, we were unable to access the inpatient codes that are part of the original claims-based algorithm used to identify sexually active women. Had we been able to include inpatient data, the original measure might have identified even more women as sexually active. It may have also been useful to add pregnancy to our definition of sexually active women, as we found 93 who were known to be pregnant, but were not identified as sexually active in the social history section of the EHR. In addition, in OCHIN’s network of CHCs, the sexual activity field is not populated for about 15% of patients. Different health care organizations collect and store data differently in the EHR. Thus, including additional fields to identify sexual activity in the EHR would likely have yielded a larger denominator. The data for these analyses came from up to 47 Oregon clinics, which may have different coding practices; some of the CPT codes used in the original specifications were not found in all of the clinics’ data, likely because those codes are not regularly used at those sites. For example, a CPT code from the original specifications to identify Chlamydia screening (87810) was rarely used by several of the clinics.

Limitations to Using Outpatient EHR Data

Although there are clear limitations to using claims data only, there are also important limitations to EHR data when adapting the CHIPRA measures, as discussed here. First, we could adapt only a subset of the measures because the data needed for some measures (such as data on hospital and dental care) were not available in outpatient EHR data. Claims data linked to EHR data might address this limitation for some populations, but would still exclude people receiving inpatient care during periods of uninsurance. A linked inpatient-outpatient EHR dataset may also help to address this limitation. Second, the lack of medication dispense data required the adaptation of several measures to use prescribing data; however, this adaptation may be beneficial, as prescribing data are commonly used in care quality measures because they reflect providers’ actions.27 Third, visit-based population denominator definitions may introduce bias toward care “users” who receive care at rates greater than the overall patient population. Similarly, this approach is limited to people who are seen by the clinic. When uninsured people are not seen at the clinic, it is difficult to determine whether they received care elsewhere. Fourth, similar to claims data, the completeness of EHR data is reliant on individual clinicians, and the data may not include all of the care provided. Standardization of documentation practices would assist in ensuring complete EHR data. Finally, we conducted the 2 example analyses by using data from a single EHR that is linked across multiple clinics. This kind of data resource is not, at present, commonly available, but will likely become more widely available with the expansion of EHRs. The importance of applying standardized measures of care quality in EHR data will increase with the prevalence of such data systems.

Conclusions

The clinical and policy implications of these findings are important. Care quality assessments based on claims data alone exclude the uninsured, as well as services not commonly billed (eg, BMI documentation); thus, claims-based measures may present a limited view of population care. This work is a first step toward enabling the application of the CHIPRA measures in uninsured and other CHC populations, and in EHR data. Although it is feasible to adapt many of the CHIPRA measures for use in outpatient EHR data, depending on how numerators and denominators are re-specified, some information is gained and some is lost. Immediate next steps must involve assessment of the validity of each adapted measure, similar to our validation of the 2 examples presented here. Next, the use of CHIPRA measures in EHR data should be tested in other outpatient settings. The results highlight the importance of considering the limitations of the original CHIPRA measures when they are used to evaluate care quality.

Footnotes

- ADHD: attention-deficit/hyperactivity disorder

- CHC: community health center

- CHIPRA: Children’s Health Insurance Program Reauthorization Act

- CPT: Current Procedural Terminology

- OCHIN: Oregon Community Health Information Network

- PNC: prenatal care

- WCV: well-child visit

All authors listed here have made substantive intellectual contributions to this manuscript. Dr Gold led the analysis design and overall manuscript content, and was the lead writer; Ms Angier contributed substantially to the intellectual work involved, wrote first drafts of several sections of the article, and oversaw reference management; Drs Smith and Gallia provided high-level intellectual input, and careful editing; Ms McIntire, Mr Cowburn, and Ms Tillotson were responsible for all data handling and analysis programming; Dr DeVoe contributed major editing to the manuscript and was a primary driver of the intellectual content presented here; and all authors approved this version of the manuscript for submission.

The funding agencies had no involvement in the design and conduct of the study; analysis and interpretation of the data; or preparation, review, or approval of the manuscript.

FINANCIAL DISCLOSURE: The authors have indicated they have no financial relationships relevant to this article to disclose.

FUNDING: This project was directly supported by grant 1 R01 HS018569 from the Agency for Healthcare Research and Quality, grant UB2NA20235 from the Health Resources and Services Administration, grant 1RC4LM010852 from the National Library of Medicine, National Institutes of Health, and the Oregon Health & Science University Department of Family Medicine. Funded by the National Institutes of Health (NIH).

References

- 1. Children’s Health Insurance Program Reauthorization Act of 2009. Pub L No. 111–3. 123 Stat 8. Available at: https://www.cms.gov/HealthInsReformforConsume/Downloads/CHIPRA.pdf. Accessed December 6, 2011

- 2. US Department of Health and Human Services. Medicaid and CHIP programs: initial core set of children's healthcare quality measures for voluntary use by Medicaid and CHIP programs. Fed Regist. 2009;74:68846–68849

- 3. Mangione-Smith R, Schiff J, Dougherty D. Identifying children’s health care quality measures for Medicaid and CHIP: an evidence-informed, publicly transparent expert process. Acad Pediatr. 2011;11(suppl 3):S11–S21

- 4. Dougherty D, Schiff J, Mangione-Smith R. The Children’s Health Insurance Program Reauthorization Act quality measures initiatives: moving forward to improve measurement, care, and child and adolescent outcomes. Acad Pediatr. 2011;11(suppl 3):S1–S10

- 5. Agency for Healthcare Research and Quality. Initial Core Set of Children's Healthcare Quality Measures. 2009. Available at: http://www.ahrq.gov/chip/listtable.htm. Accessed December 6, 2011

- 6. Mangione-Smith R, DeCristofaro AH, Setodji CM, et al. The quality of ambulatory care delivered to children in the United States. N Engl J Med. 2007;357(15):1515–1523

- 7. Centers for Medicare and Medicaid Services. CHIPRA Initial Core Set Technical Specifications Manual 2011. 2011

- 8. Fairbrother G, Simpson LA. Measuring and reporting quality of health care for children: CHIPRA and beyond. Acad Pediatr. 2011;11(suppl 3):S77–S84

- 9. Sternberg SB, Co JP, Homer CJ. Review of quality measures of the most integrated health care settings for children and the need for improved measures: recommendations for initial core measurement set for CHIPRA. Acad Pediatr. 2011;11(suppl 3):S49–S58, e3

- 10. Delone SE, Hess CA. Medicaid and CHIP children’s healthcare quality measures: what states use and what they want. Acad Pediatr. 2011;11(suppl 3):S68–S76

- 11. Zuvekas A, Hur R, Richmond D, Stevens D, Ayoama C, Modica C. Applying HEDIS clinical measures to community health centers: a feasibility study. J Ambul Care Manage. 1999;22(4):53–62

- 12. Kenney GM, Pelletier JE. Monitoring duration of coverage in Medicaid and CHIP to assess program performance and quality. Acad Pediatr. 2011;11(suppl 3):S34–S41

- 13. DeVoe JE, Graham A, Krois L, Smith J, Fairbrother GL. “Mind the Gap” in children’s health insurance coverage: does the length of a child’s coverage gap matter? Ambul Pediatr. 2008;8(2):129–134

- 14. Kuhlthau KA. Measures of availability of health care services for children. Acad Pediatr. 2011;11(suppl 3):S42–S48

- 15. The Patient Protection and Affordable Care Act, HR 3590, 111th Cong, 2nd Sess (2010). Available at: www.medicaid.gov/Medicaid-CHIP-Program-Information/By-Topics/Quality-of-Care/CHIPRA-Initial-Core-Set-of-Childrens-Health-Care-Quality-Measures.html. Accessed April 19, 2012

- 16. Jollis JG, Ancukiewicz M, DeLong ER, Pryor DB, Muhlbaier LH, Mark DB. Discordance of databases designed for claims payment versus clinical information systems. Implications for outcomes research. Ann Intern Med. 1993;119(8):844–850

- 17. Iezzoni LI. Assessing quality using administrative data. Ann Intern Med. 1997;127(8 pt 2):666–674

- 18. Blumenthal D. New EHR adoption statistics. Available at: www.healthit.gov/buzz-blog/ehr-case-studies/new-ehr-adoption-statistics/. Accessed November 21, 2011

- 19. Important facts about EHR adoption and EHR incentive findings. Available at: http://healthit.hhs.gov/media/important-facts-about-ehr-adoption-ehr-incentive-program-011311.pdf. Accessed November 21, 2011

- 20. American Recovery and Reinvestment Act. Pub L No. 111-5. Available at: http://frwebgate.access.gpo.gov/cgi-bin/getdoc.cgi?dbname=111_cong_bills&docid=f:h1enr pdf. Accessed November 29, 2011

- 21. Gold R. The Guide to Conducting Research in the OCHIN Practice Management Data. Portland, OR: Kaiser Permanente Center for Health Research; 2007

- 22. Cummings JR, Lavarreda SA, Rice T, Brown ER. The effects of varying periods of uninsurance on children’s access to health care. Pediatrics. 2009;123(3). Available at: www.pediatrics.org/cgi/content/full/123/3/e411

- 23. Olson LM, Tang SF, Newacheck PW. Children in the United States with discontinuous health insurance coverage. N Engl J Med. 2005;353(4):382–391

- 24. Department of Health and Human Services Centers for Medicare & Medicaid Services. Medicare Program; Medicare Shared Savings Program: Accountable Care Organizations. Federal Register. 2011;76(212):67802–67990

- 25. Lawthers AG, McCarthy EP, Davis RB, Peterson LE, Palmer RH, Iezzoni LI. Identification of in-hospital complications from claims data. Is it valid? Med Care. 2000;38(8):785–795

- 26. Iezzoni LI. Using administrative data to study persons with disabilities. Milbank Q. 2002;80(2):347–379

- 27. Mangione-Smith R, Elliott MN, Wong L, McDonald L, Roski J. Measuring the quality of care for group A streptococcal pharyngitis in 5 US health plans. Arch Pediatr Adolesc Med. 2005;159(5):491–497

- 28. Mangione-Smith R, McGlynn EA, Hiatt L. Screening for chlamydia in adolescents and young women. Arch Pediatr Adolesc Med. 2000;154(11):1108–1113

- 29. Jensen RE, Chan KS, Weiner JP, Fowles JB, Neale SM. Implementing electronic health record-based quality measures for developmental screening. Pediatrics. 2009;124(4). Available at: www.pediatrics.org/cgi/content/full/124/4/e648