Abstract

Objectives

Measurement of outcomes has become increasingly important to assess the benefit of audiologic rehabilitation, including hearing aids, in adults. Data from questionnaires, however, are based on retrospective recall of events and experiences, and often can be inaccurate. Questionnaires also do not capture the daily variation that typically occurs in relevant events and experiences. Clinical researchers in a variety of fields have turned to a methodology known as ecological momentary assessment (EMA) to assess quotidian experiences associated with health problems. The objective of this study was to determine the feasibility of using EMA to obtain real-time responses from hearing aid users describing their experiences with challenging hearing situations.

Design

This study required three phases: (1) develop EMA methodology to assess hearing difficulties experienced by hearing aid users; (2) utilize focus groups to refine the methodology; and (3) test the methodology with 24 hearing aid users. Phase 3 participants carried a personal digital assistant (PDA) 12 hr per day for 2 wk. The PDA alerted participants to respond to questions four times a day. Each assessment started with a question to determine if a hearing problem was experienced since the last alert. If “yes,” then up to 23 questions (depending on contingent response branching) obtained details about the situation. If “no,” then up to 11 questions obtained information that would help to explain why hearing was not a problem. Each participant completed the Hearing Handicap Inventory for the Elderly (HHIE) both before and after the 2-wk EMA testing period to evaluate for “reactivity” (exacerbation of self-perceived hearing problems that could result from the repeated assessments).

Results

Participants responded to the alerts with a 77% compliance rate, providing a total of 991 completed momentary assessments (mean = 43.1 per participant). A substantial amount of data was obtained with the methodology. Notably, participants reported a “hearing problem situation since the last alert” 37.6% of the time (372 responses). The most common problem situation involved “face-to-face conversation” (53.8% of the time). The next most common problem situation was “telephone conversation” (17.2%) followed by “TV, radio, iPod, etc.” (15.3%), “environmental sounds” (9.7%), and “movies, lecture, etc.” (4.0%). Comparison of pre- and post-EMA mean HHIE scores revealed no significant difference (p>.05), indicating that reactivity did not occur for this group. It should be noted, however, that 37.5% of participants reported a greater sense of awareness regarding their hearing loss and use of hearing aids.

Conclusions

Results showed participants were compliant, gave positive feedback, and did not demonstrate reactivity based on pre- and post-HHIE scores. We conclude that EMA methodology is feasible with patients who use hearing aids and could potentially inform hearing healthcare (HHC) services. The next step is to develop and evaluate EMA protocols that provide detailed daily patient information to audiologists at each stage of HHC. The advantages of such an approach would be to obtain real-life outcome measures, and to determine within- and between-day variability in outcomes and associated factors. Such information currently is not available from patients who seek and use HHC services.

Keywords: hearing disorders, hearing aids, rehabilitation, experience sampling

INTRODUCTION

Although understanding of the clinical and basic science foundations of hearing disorders has increased dramatically in the past decade, an observation common to rehabilitative auditory research is that those treated for hearing problems represent only a fraction of the total population with symptomatic hearing problems. For instance, three of every four people with hearing loss (and six of every 10 with moderate-to-severe hearing loss) do not use hearing aids (Kochkin 2009). Further, many patients who wear hearing aids are dissatisfied with their performance. Kochkin (2010) surveyed over 3,000 owners of hearing aids that were less than 4 years old to determine ratings of satisfaction with the hearing aids. Overall results indicated that about 55% of all owners were either “satisfied” or “very satisfied” with their hearing aids. Another 23% were “somewhat satisfied,” which the author noted was “hardly a strong endorsement for hearing aids” (p. 22). The survey also indicated over 12% did not use their hearing aids. Audiologists also implement other rehabilitative interventions via individual and group counseling, including the teaching of communication strategies and coping skills, to assist their patients in adapting to hearing loss (Boothroyd 2007; Prendergast & Kelley 2002), but again with varying results (Chisolm et al. 2004).

The factors that cause individuals with hearing loss to seek or not seek clinical care, or to be satisfied or dissatisfied with treatment, are the focus of considerable study (see Knudsen et al. 2010 for a review). Investigators have examined a range of rehabilitation outcomes in search of evidence-based principles for making optimal decisions about clinical intervention (e.g., Bentler 2005; Cox 2005). Objective outcome measures are routinely used in the controlled settings of soundproof suites, focusing on whether technological features of hearing aids and other devices meet engineering criteria and enhance speech discrimination or reduce tinnitus distress (Noble 2000). Researchers have also examined the role of subjective and real-world outcome measures in the development and implementation of effective audiologic rehabilitation (Compton-Conley et al. 2004; Gagne 2000; Gatehouse 1994; Kricos 2000; Wong et al. 2003).

Research in the bio-behavioral and cognitive sciences has shown that the recall of events and behaviors, as well as the frequency, intensity, and duration of symptoms, is subject to both random and systematic inaccuracies (Robinson & Clore 2002; Stone et al. 1999). Also, recent symptoms and events are typically recalled more accurately and influence summary appraisals (e.g., the past month) more than distant symptoms and events, suggesting that there is a recency effect in recall (Kahneman et al. 1993). Further, patients reconstruct their memories of events and the symptoms that may be associated with these events in a manner that seems to be logical and “tells a good story” but may not reflect what actually occurred (e.g., Brown & Moskowitz 1997). Among hearing aid users, recent findings suggest that clinical measures and self-report outcomes often yield discrepant results (Saunders et al. 2005).

In an effort to provide a more accurate picture of the real-life experience associated with health problems, clinical researchers in a variety of fields have turned to a methodology known as experience sampling or ecological momentary assessment (EMA). Experience sampling refers to the generic practice of obtaining field or real-time records of behaviors, situations, and affective assessments and has traditionally focused on the use of paper diaries or questionnaires. EMA refers to the more specific use of technological adjuncts in the field of experience sampling. A common tool for EMA is a standard personal digital assistant (PDA), which is programmed to signal patients with an audible or vibratory alert as they go about their day-to-day lives. This alert serves as a prompt to answer a series of questions using a standard PDA stylus or touch screen. Assessments can be programmed to occur randomly within specific time windows, at fixed time intervals, or the person may be instructed to respond if a specific event is experienced such as a listening situation characterized by an adverse acoustical environment or frequent miscommunication. By asking individuals to provide reports of their symptoms and experiences close to the time of occurrence, recall and report bias are substantially reduced. Further, real-time assessment of symptoms and experiences permits the examination of diurnal or other forms of variation in symptoms or response to treatment, as well as the temporal association of symptoms with specific life events or experiences.

Because researchers in auditory rehabilitation often administer questionnaires that require retrospective recall of hearing instrument performance, human communication factors, emotional responses, or situational circumstances when an auditory problem is experienced, EMA methods may be an especially useful tool for studying the impact of hearing difficulties on the course of day-to-day life. EMA offers the ability to collect data that more closely represent what “really” happened in the course of day-to-day events, whereas appraisals provided by retrospective interviews or questionnaires provide a view through a lens tinted by an individual’s ability to recall, summarize, and evaluate their experiences. Further, with the rapid advance of wireless and data-logging technologies for hearing aids and other auditory assistive devices, as well as the proliferation of smartphones, tablet computers, and other devices, there may soon be many opportunities to integrate real-time EMA data with other parallel data streams (e.g., hearing aid usage time, noise exposure).

The purpose of this article is to present data from a study conducted to determine the feasibility of using EMA to evaluate hearing difficulties encountered among hearing aid users. It should be noted that this study comprised one arm of a two-arm study. The other arm evaluated the feasibility of using EMA methodology with patients who experience bothersome tinnitus (Henry et al. 2011). Any overlap between arms is mentioned in the next section.

METHODS

This study involved three phases conducted over 2 years. For Phase 1: (a) a personal digital assistant (PDA) was identified that would be capable of implementing EMA for the stated purpose; (b) a prototype EMA assessment battery and sampling schedule were developed; and (c) initial PDA programming was completed. For Phase 2, focus groups were conducted to refine the EMA protocol. Phase 3 involved recruiting and testing research participants to implement the EMA protocol. This study was approved by the Institutional Review Boards at Oregon Health & Science University and the Portland Veterans Affairs Medical Center (PVAMC). For Phases 2 and 3 all participants signed informed consent prior to their enrollment in the study.

Phase 1

EMA Devices

Numerous PDAs were examined to identify one that would perform the EMA protocols for both arms of the study. We selected the Palm Pilot Model Tungsten (Palm, Inc., Sunnyvale, California) because it is capable of running EMA software (CERTAS®, Personal Improvement Computer Systems, Inc., Reston, Virginia). Fourteen PDAs were purchased, which were used for both study arms. Individual licenses for each participant were purchased from CERTAS®.

Sampling Protocol

Developing the sampling protocol involved making decisions with respect to: (a) daily sampling schedule; (b) number of items for each assessment; (c) whether responses should reflect the time period since the previous survey or the moment of each response; and (d) content and formatting of survey items.

Daily sampling schedule

Most EMA studies use a sampling density of between four and eight assessments per day (Beal & Weiss 2003; Morren et al. 2009). We decided to administer the assessment four times per day to minimize response burden and the potential for reactivity (which could cause participants to become more impacted by their hearing difficulties) (Stone & Shiffman 2002). Participants would be prompted across a 2-wk period—a time frame that is commonly used with EMA studies (Stone et al. 2003).

The testing period was limited to 12 hr each day – 8:00 AM to 8:00 PM. Participants were alerted four times during the 12-hr period as follows: The first alert occurred at a random time within a window of the first 15 min of the test day. Subsequent alerts were programmed to occur randomly within a window from 150 to 180 min after a previous alert. Each alert initiated a 20-min response window. If the participant did not respond to the first alert, then a “reminder alert” was triggered every 5 min for 20 min. If no response was obtained, then the next alert would occur 150 to 180 min after the end of the previous response window. This sampling schedule typically resulted in four assessments per day. However, based on the timing of the alerts and how quickly a participant responded, a fifth assessment was occasionally generated prior to the end of the 12-hr testing period.
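The alert schedule described above can be sketched as a short simulation. This is a minimal illustration and not the CERTAS® implementation: the function name `simulate_day`, the use of whole minutes, and the assumption that the response window closes as soon as the participant answers (or after 20 min if the alert is missed) are ours. Reminder alerts are not modeled.

```python
import random

DAY_START = 8 * 60        # 8:00 AM, in minutes after midnight
DAY_END = 20 * 60         # 8:00 PM
FIRST_WINDOW = 15         # first alert falls within the first 15 min
GAP_RANGE = (150, 180)    # minutes between a closed window and the next alert
RESPONSE_WINDOW = 20      # minutes allowed for a response


def simulate_day(response_delay, rng):
    """Return a list of (alert_minute, responded) tuples for one test day.

    response_delay(i) gives the minutes the participant takes to answer
    alert i, or None if the alert is missed entirely (hypothetical input).
    """
    alerts = []
    t = DAY_START + rng.randint(0, FIRST_WINDOW)
    while t < DAY_END:
        delay = response_delay(len(alerts))
        responded = delay is not None and delay <= RESPONSE_WINDOW
        alerts.append((t, responded))
        # Assumption: the window closes at the response, or after 20 min.
        window_end = t + (delay if responded else RESPONSE_WINDOW)
        t = window_end + rng.randint(*GAP_RANGE)
    return alerts


if __name__ == "__main__":
    day = simulate_day(lambda i: 2, random.Random(1))
    for minute, responded in day:
        print(f"{minute // 60:02d}:{minute % 60:02d}", "answered" if responded else "missed")
```

Under these assumptions every simulated day yields four or five alerts, which is in line with the occasional fifth assessment noted above.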

Number of survey items for each momentary assessment

Maximizing compliance with repeated assessments requires that each assessment be limited to 3 min or less (Stone & Shiffman 2002). We decided to limit the number of survey items responded to during each assessment to 24. Many of these items were contingent upon previous responses; thus, it was unlikely that participants would respond to all 24 questions. The time of responding for a single alert was not expected to exceed 1–2 min on average. Indeed, logging data from the PDA showed that the average total time for participants to respond to the items was approximately 1 min (range: <1 min to 8 min).

Time period considered for each momentary assessment

For EMA testing, participants can be asked to respond to questions with respect to their experiences since the last alert, or with respect to the present moment. Each approach has its advantages and disadvantages (Beal & Weiss 2003). Responses that reflect the period of time since the last assessment are subject to memory biases. Describing the immediate experience eliminates those biases, but does not take into account all of the experiences since the previous assessment. When describing difficult listening situations, participants would not necessarily experience listening difficulties at the moment of responding. We also reasoned that being in the midst of a difficult listening situation (e.g., a conversation at work) would directly impede participants’ ability to enter EMA data. It was therefore decided that the most relevant data would come from surveying experiences since the previous assessment. After each alert, participants were instructed on the PDA screen to respond to the questions about their experiences since the previous alert. Individual questions were worded similarly.

Content and formatting of EMA survey items

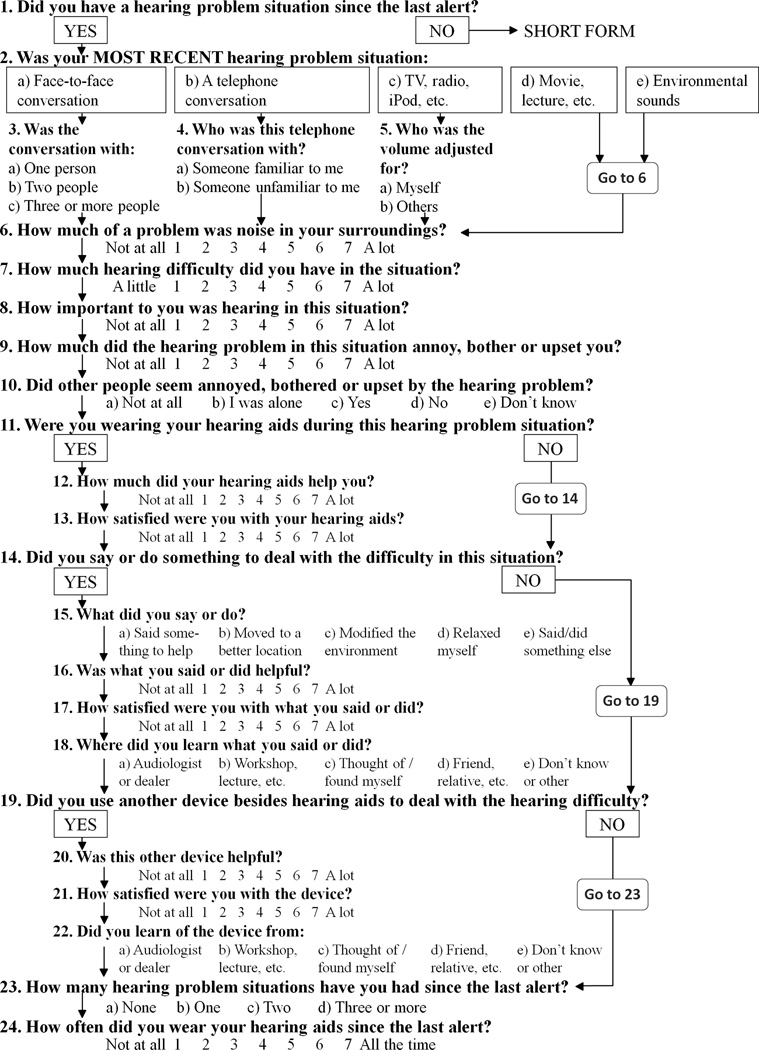

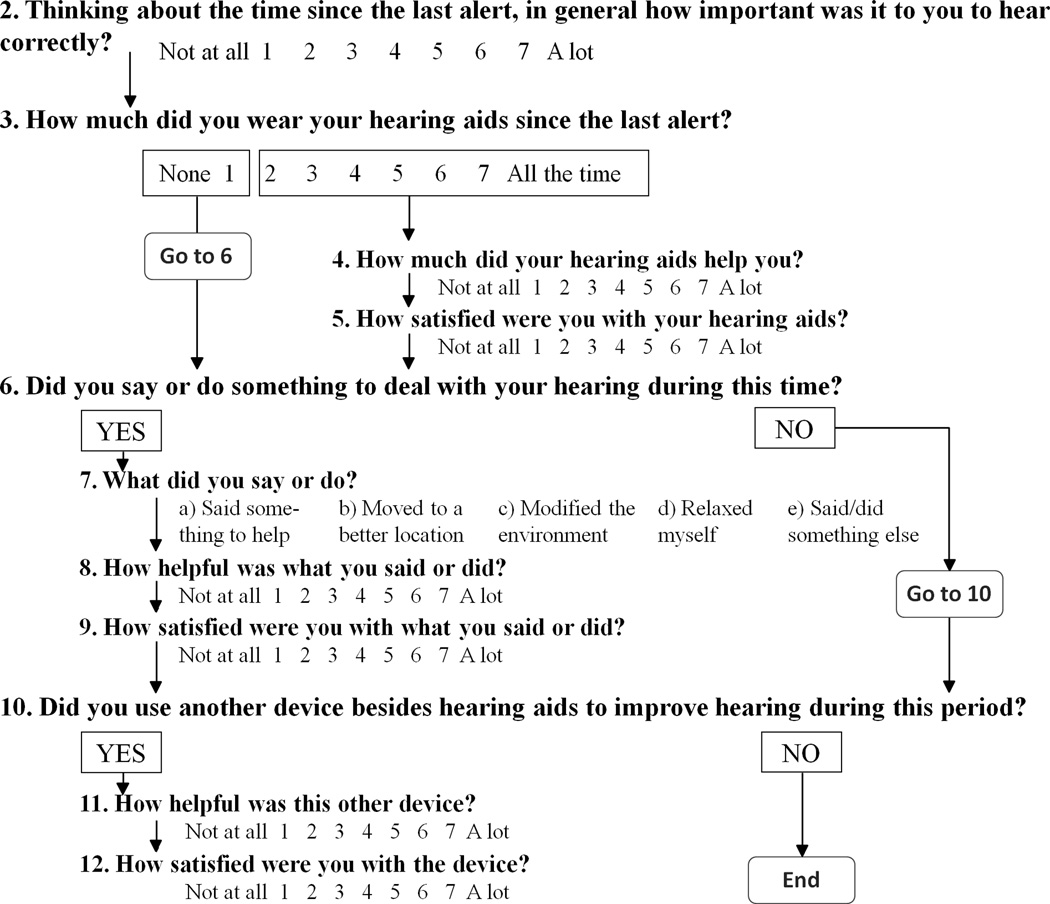

The research team developed the EMA survey items, some of which were adapted from the Glasgow Hearing Aid Benefit Profile (GHABP) (Gatehouse 1999) and the International Outcome Inventory-Alternative Interventions (IOI-AI) (Noble 2002). The overall purpose of each assessment was to determine if the participant experienced a hearing problem situation, and, if so, to specify the details of the situation. A “hearing problem situation” is operationally defined as a personally salient situation in which a person experiences communication difficulties as a result of hearing loss. The study focused on hearing problem situations to collect data that would potentially be useful to audiologists. To provide tailored auditory rehabilitation for their patients, audiologists would need to know the frequency and ratings of different hearing problem situations experienced by a patient. Furthermore, since EMA methodology is specifically designed to capture real-time records of behaviors, situations, and affective reactions, focusing on hearing problem situations offers the ability to gain a variety of perspectives with respect to each hearing problem situation experienced by a patient. Figure 1 (“long form”) shows a flowchart that displays the individual questions, response items, and the branching algorithm. In addition, a separate set of questions (“short form”—see Fig. 2) was developed for use when participants reported not having a hearing problem situation. Although the wording of the questions on the short form was slightly different, each question was designed to be comparable to a question on the long form. For both sets of questions, many of the response choices utilized a 7-point Likert-type scale, anchored in most instances by “not at all” and “a lot.”

Figure 1.

Flowchart for the “long form” version of the PDA questionnaire.

Figure 2.

Flowchart for the “short form” version of the PDA questionnaire.
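The first branching step of the long form can be illustrated with a small lookup. This sketch covers only questions 1 to 5, using the branches reported in Table 2; the function name `branched_follow_up` is hypothetical, and the remaining branches shown in Figure 1 are not reproduced here.

```python
# Follow-up question triggered by the question 2 response (per Table 2).
FOLLOW_UP = {
    "Face-to-face conversation": 3,   # "Was the conversation with:"
    "Telephone conversation": 4,      # "Who was this telephone conversation with?"
    "TV, radio, iPod, etc.": 5,       # "Who was the volume adjusted for?"
}


def branched_follow_up(had_problem, situation=None):
    """Return the branched follow-up question number, or None if there is none.

    had_problem is the answer to question 1; a "no" routes the participant
    to the short form, so no long-form follow-up applies.
    """
    if not had_problem:
        return None
    return FOLLOW_UP.get(situation)


print(branched_follow_up(True, "Telephone conversation"))
```

Situations without a branched follow-up in Table 2 ("Movie, lecture, etc." and "Environmental sounds") return `None` in this sketch.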

Phase 2

After selection, purchase, and programming of the PDAs, two focus groups were conducted (Powell et al. 1996; Vogt et al. 2004). The groups, lasting about 2 hr each, were designed to obtain feedback from hearing aid users concerning the EMA protocol that was developed during Phase 1 for use in Phase 3.

Focus group participants were recruited from previous participants of research at the National Center for Rehabilitative Auditory Research (NCRAR). The first group included five male participants (mean age 57.0 yr; SD = 10.3). The second group included four male participants (mean age 63.3 yr; SD = 3.9). All participants were Veterans. Authors MBT and JAI were co-facilitators of the groups and two research assistants attended. Each participant received $15.

The PDAs, programmed with the survey items (Figs. 1 & 2), were demonstrated to the group. Different aspects of the EMA protocol were discussed, including: the response period after each alert; whether audio alerts were audible (vibratory alerts were not possible with the PDAs used in this study); issues pertaining to carrying or wearing the PDA (including which of a variety of cases or holsters was preferred); the number of questions for each assessment; the sampling density (four alerts per day); and the time period for testing (8:00 AM to 8:00 PM). Each focus group was audio-recorded, and a research assistant transcribed and then summarized the recordings with respect to the most relevant and useful information (Krueger 1998).

Focus group participants generally agreed with the proposed EMA protocol. The research team was particularly concerned about the inability of the PDA to produce vibratory alerts (the study was originally conceived with vibratory alerts as a key component). Participants in both focus groups, however, expressed confidence that the PDA audible signal was loud enough to be heard in most situations. The participants expressed concern that the momentary assessments were oriented toward hearing problems rather than communication successes associated with use of hearing aids. That concern led us to develop the short form, which asked questions designed to identify factors that contributed to positive listening experiences (see Fig. 2).

Phase 3

Phase 3 participants were recruited by contacting previous NCRAR research participants, in addition to the use of recruitment flyers posted in various locations at the PVAMC. The primary inclusion criterion was the regular use of hearing aids. Candidates who engaged in activities making it difficult to respond to the EMA protocol (e.g., night workers, driving more than 2 hours per day) were excluded. The protocol involved wearing the PDA and responding to the alerts four times per day for 2 wk. Participants attended a research appointment at the beginning (orientation session) and end (follow-up session) of the 2-wk EMA testing period. Given the sampling schedule and the overall feasibility goals of the study, we developed a remuneration schedule that would optimize compliance. EMA studies place a premium on obtaining high levels of compliance because missing data have the potential to bias the obtained sample of behavior and experience (Shiffman et al. 2008). Therefore, EMA researchers recommend a combination of monetary incentives and other procedures (e.g., thorough participant training of the sampling protocol) to maximize compliance (Morren et al. 2009; Scollon et al. 2003; Stone & Shiffman 2002). In our study, each participant received $100 plus $1 per completed assessment.

Twenty-four participants were enrolled. They were, on average, 60.2 yr of age (SD = 10.0; range 42–78), and predominantly male (70.8%) and Veterans (66.7%). Fewer than half were married (45.8%) and half were retired (50.0%). The majority had some college or post-high school vocational training (45.8%) or possessed a college or graduate degree (45.8%). Table 1 provides further characteristics of the participants.

Table 1.

Characteristics of participants (N = 24).

| Characteristics | n | % |
|---|---|---|
| Marital Status | | |
| Married living with spouse | 11 | 45.8 |
| Separated | 1 | 4.2 |
| Divorced | 7 | 29.2 |
| Never married | 5 | 20.8 |
| Employment Status | | |
| Full Time | 5 | 20.8 |
| Part Time | 4 | 16.7 |
| Unemployed | 1 | 4.2 |
| Retired | 12 | 50.0 |
| Looking for work | 1 | 4.2 |
| Other | 1 | 4.2 |
| Veteran Status | | |
| Veteran | 16 | 66.7 |
| Nonveteran | 8 | 33.3 |
| Education | | |
| High School Diploma/G.E.D | 2 | 8.3 |
| Some college/post-high school vocational | 11 | 45.8 |
| Four-year college | 5 | 20.8 |
| Post-college education | 1 | 4.2 |
| Graduate degree | 5 | 20.8 |

Orientation Session

At the initial appointment (lasting up to 1 hr), participants: (a) completed informed consent; (b) filled out questionnaires (e.g., demographic information, use of non-hearing aid technology); (c) completed the Hearing Handicap Inventory for the Elderly (HHIE) (Ventry & Weinstein 1982); (d) received a detailed explanation of a “hearing problem situation” and were given examples of the types of hearing problem situations that would elicit a “yes” response to EMA question 1 (Fig. 1); (e) reviewed the individual items comprising the EMA questionnaire to ensure understanding of each item; (f) received instructions regarding use of the PDA to perform the EMA protocol (e.g., demonstration of alerting sound, explanation of how to use the stylus pen to tap on-screen buttons to select responses); and (g) completed one assessment using the PDA based on a familiar hearing problem situation. The EMA testing period began immediately after the orientation session.

Follow-up Session

After the 2-wk EMA testing period, participants returned the PDA, completed the HHIE, participated in an interview, and were debriefed. The semi-structured interview consisted of questions designed specifically to obtain participants’ opinions regarding their use of the PDA and the momentary assessment questions. Questions focused on: whether they felt differently about their hearing loss and use of hearing aids as a result of participating in the study; whether using the PDA interfered significantly with their daily activities; if they experienced reactions from other people; their ability to hear the alerts; if they felt that they had responded to all or most of the assessments; and reasons why they missed any alerts. In addition, participants were shown their responses and asked to comment on their level of compliance.

Data Analysis

Descriptive analyses of the Phase 3 EMA data were conducted to evaluate group responses to each item. Also, the proportion of responses for each participant indicating a hearing problem situation per day in the study was calculated. These proportions were summarized across days in the study and within time blocks throughout the day to examine patterns of both within- and between-day responses. For these analyses, the daily testing period was partitioned into four time blocks: 8:00 AM – 11:00 AM, 11:00 AM – 2:00 PM, 2:00 PM – 5:00 PM, and 5:00 PM – 8:00 PM.
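As an illustration of the time-block partition, the following sketch assigns a response time to one of the four blocks. The half-open boundary convention (a response at exactly 11:00 AM falls in the second block) and the function name `time_block` are assumptions; the source does not state how boundary times were handled.

```python
from datetime import time

# The four daily time blocks used in the analyses.
BLOCKS = [
    ("8:00 AM - 11:00 AM", time(8, 0), time(11, 0)),
    ("11:00 AM - 2:00 PM", time(11, 0), time(14, 0)),
    ("2:00 PM - 5:00 PM", time(14, 0), time(17, 0)),
    ("5:00 PM - 8:00 PM", time(17, 0), time(20, 0)),
]


def time_block(t):
    """Return the label of the block containing t, or None if outside 8 AM to 8 PM.

    Assumption: each block includes its start time and excludes its end time.
    """
    for label, start, end in BLOCKS:
        if start <= t < end:
            return label
    return None


print(time_block(time(13, 45)))
```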

To assess whether responding to the EMA assessments affected participants’ self-perceived effects of hearing impairment, a reactivity analysis was conducted using data from the HHIE (Ventry & Weinstein 1982). The HHIE comprises 25 questions with a response format of “yes,” “sometimes,” and “no.” For scoring, a “yes” response is assigned 4 points, “sometimes” is assigned 2 points, and “no” is assigned 0 points. The HHIE index score thus can range from 0 to 100 points, with higher scores representing greater perceived effects of hearing impairment. Pre- and post-EMA data from the HHIE were compared to determine if the EMA assessments affected participants’ self-perceived handicap in both emotional and situational contexts related to their hearing impairment.
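The HHIE scoring rule described above can be written out as a short function. This is a sketch of the total index score only; the function name `hhie_score` and the input format (a list of 25 response strings) are assumptions made for illustration.

```python
# Points assigned to each HHIE response option.
HHIE_POINTS = {"yes": 4, "sometimes": 2, "no": 0}
HHIE_ITEMS = 25


def hhie_score(responses):
    """Return the HHIE index score (0 to 100) for 25 item responses."""
    if len(responses) != HHIE_ITEMS:
        raise ValueError(f"expected {HHIE_ITEMS} responses, got {len(responses)}")
    return sum(HHIE_POINTS[r.strip().lower()] for r in responses)


# Hypothetical response pattern: 10 "yes", 5 "sometimes", 10 "no".
example = ["yes"] * 10 + ["sometimes"] * 5 + ["no"] * 10
print(hhie_score(example))  # 10*4 + 5*2 + 10*0 = 50
```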

RESULTS

Descriptive Analyses

The EMA testing period was intended to last 14 days. As a result of scheduling, some participants responded to the PDA for more or less than 14 days (M = 14.7, SD = 0.9, range = 13–17). One participant lost the device during the testing period resulting in missing data. Averaging across responses within the 14-day period, participants responded to 77% of the alerts (M = 43.1, SD = 12.0), resulting in a total of 991 momentary assessments.
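As a rough check on the compliance figure, the mean number of completed assessments can be compared with the number of scheduled alerts. The denominator used here (four alerts per day over 14 days, ignoring occasional fifth alerts) is an assumption; the source does not spell out how the rate was computed.

```python
ALERTS_PER_DAY = 4      # scheduled sampling density
TEST_DAYS = 14          # intended testing period
MEAN_COMPLETED = 43.1   # mean completed assessments reported above


def compliance_rate(completed, alerts_per_day=ALERTS_PER_DAY, days=TEST_DAYS):
    """Fraction of scheduled alerts that were answered."""
    return completed / (alerts_per_day * days)


rate = compliance_rate(MEAN_COMPLETED)
print(f"{rate:.1%}")  # 43.1 / 56, about 77%
```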

Responses When a Hearing Problem Situation Occurred

(Please see Fig. 1, which shows the long form questions referred to in this section.) Based on the total number of responses, 372 (37.6%) responses indicated the experience of a hearing problem situation since the last alert (question 1). Table 2 displays the data regarding the types of hearing problems reported in response to question 2 (face-to-face conversation; telephone conversation; TV, radio, iPod, etc.; movie, lecture, etc.; environmental sounds) and follow-up questions (3–5). Table 2 depicts how group data can be obtained with this technique. For example, 53.8% of the responses indicated that the reported hearing problem situation was “face-to-face conversation.” The next most common problem was “telephone conversation” (17.2%) followed by “TV, radio, iPod, etc.” (15.3%), “environmental sounds” (9.7%), and “movie, lecture, etc.” (4.0%).

Table 2.

Summary of responses to questions 2–5 on the PDA “long form” survey. These questions were asked when a hearing problem situation was reported to occur “since the last alert” (i.e., response of “Yes” to Question 1).

| Question 2: What was your most recent hearing problem situation? | Number of Responses (%) | Branched follow-up question (if applicable) | Response | Number of Responses (%) |
|---|---|---|---|---|
| Face-to-face conversation | 200 (53.8%) | → Question 3: Was the conversation with: | One person | 132 (66.0%) |
| | | | Two people | 24 (12.0%) |
| | | | Three or more people | 44 (22.0%) |
| | | | Subtotal: | 200 |
| Telephone conversation | 64 (17.2%) | → Question 4: Who was this telephone conversation with? | Someone familiar to me | 52 (81.3%) |
| | | | Someone unfamiliar to me | 12 (18.7%) |
| | | | Subtotal: | 64 |
| TV, radio, iPod, etc. | 57 (15.3%) | → Question 5: Who was the volume adjusted for? | Myself | 43 (75.4%) |
| | | | Others | 14 (24.6%) |
| | | | Subtotal: | 57 |
| Movie, lecture, etc. | 15 (4.0%) | | | |
| Environmental sounds | 36 (9.7%) | | | |
| Total: | 372 | | | |

Note. Arrows in this table indicate branching follow-up questions.

Table 2 also shows responses to branching questions that followed question 2. For example, question 3 (“Was the conversation with:”) was asked only if participants reported a problem with “face-to-face conversation.” If such a problem was reported, the data indicate that the conversation was with “one person” 66.0% of the time, with “two people” 12.0% of the time, and with “three or more people” 22.0% of the time. Similarly, question 4 provided further details if a participant reported a problem with “telephone conversation” and question 5 provided details with respect to a problem with “TV, radio, iPod, etc.” Appendix A (see Table, Supplemental Digital Content 1) shows comparable data for questions 6–24.
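The question 2 percentages can be reproduced directly from the response counts in Table 2. The sketch below is only an arithmetic check; the variable names are ours.

```python
# Response counts for question 2, taken from Table 2.
counts = {
    "Face-to-face conversation": 200,
    "Telephone conversation": 64,
    "TV, radio, iPod, etc.": 57,
    "Movie, lecture, etc.": 15,
    "Environmental sounds": 36,
}

total = sum(counts.values())  # 372 problem-situation responses

# Percentage of problem-situation responses per category, to one decimal.
percentages = {name: round(100 * n / total, 1) for name, n in counts.items()}

for name, pct in percentages.items():
    print(f"{name}: {pct}%")
```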

Within- and Between-Day Analyses

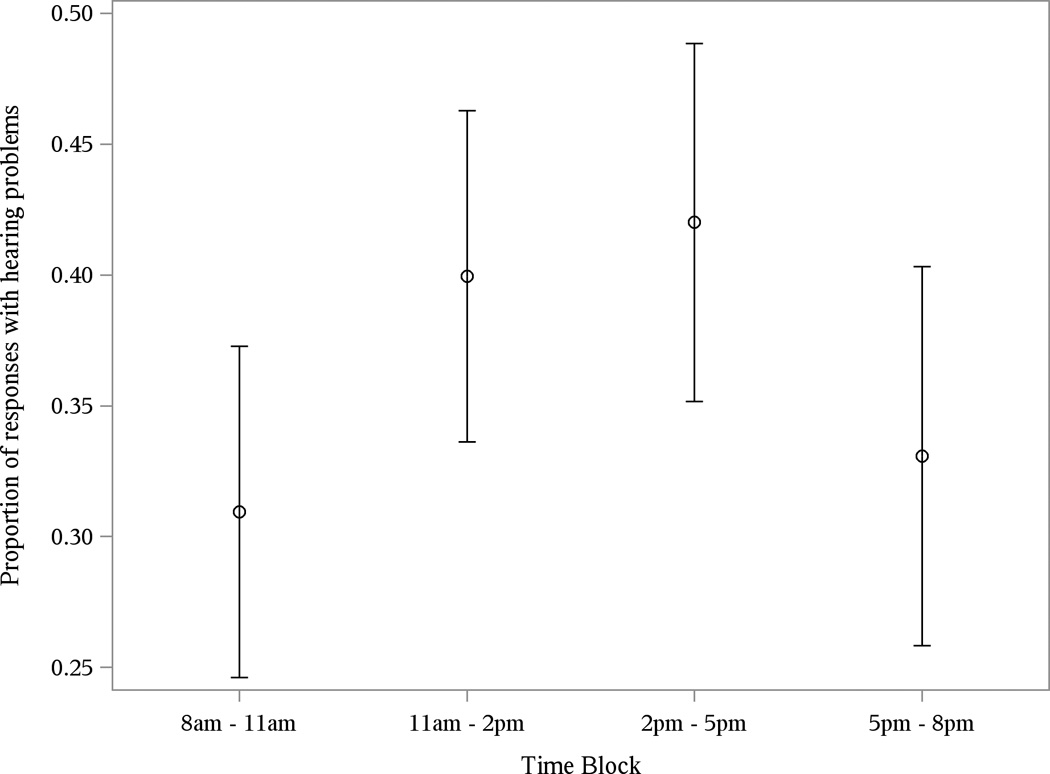

A graphical analysis was conducted to examine within- and between-day effects of hearing loss. To examine within-day effects, four time blocks were created: 8:00 AM – 11:00 AM, 11:00 AM – 2:00 PM, 2:00 PM – 5:00 PM, and 5:00 PM – 8:00 PM. Next, the proportion of responses indicating a hearing problem situation within each time block was calculated for each participant. The mean of these proportions across all participants was then calculated for each time block. The results are presented in Figure 3.

Figure 3.

Mean proportion of responses indicating a hearing problem situation by time block. This figure shows within-day variability of the proportion of responses where problems were reported. The bars indicate the standard error of the mean.
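The two-step summary behind this figure (per-participant proportions first, then the mean and standard error across participants) might be computed as follows. The record format, the function name `block_summary`, and the example data are hypothetical; the study data are not reproduced here.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev


def block_summary(records):
    """Summarize (participant_id, block_label, had_problem) records.

    Returns {block_label: (mean_proportion, standard_error)}.
    """
    # Step 1: count problem responses and total responses per participant and block.
    counts = defaultdict(lambda: [0, 0])
    for pid, block, had_problem in records:
        counts[(pid, block)][0] += int(had_problem)
        counts[(pid, block)][1] += 1

    # Step 2: collect each participant's proportion within each block.
    proportions = defaultdict(list)
    for (pid, block), (problems, total) in counts.items():
        proportions[block].append(problems / total)

    # Step 3: mean and standard error of the mean across participants.
    summary = {}
    for block, props in proportions.items():
        sem = stdev(props) / sqrt(len(props)) if len(props) > 1 else float("nan")
        summary[block] = (mean(props), sem)
    return summary


# Hypothetical records for three participants in one time block.
records = [
    ("P1", "8-11", True), ("P1", "8-11", False),
    ("P2", "8-11", False), ("P2", "8-11", False),
    ("P3", "8-11", True),
]
print(block_summary(records))
```

Averaging per-participant proportions, rather than pooling all responses, keeps participants who responded more often from dominating the group mean.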

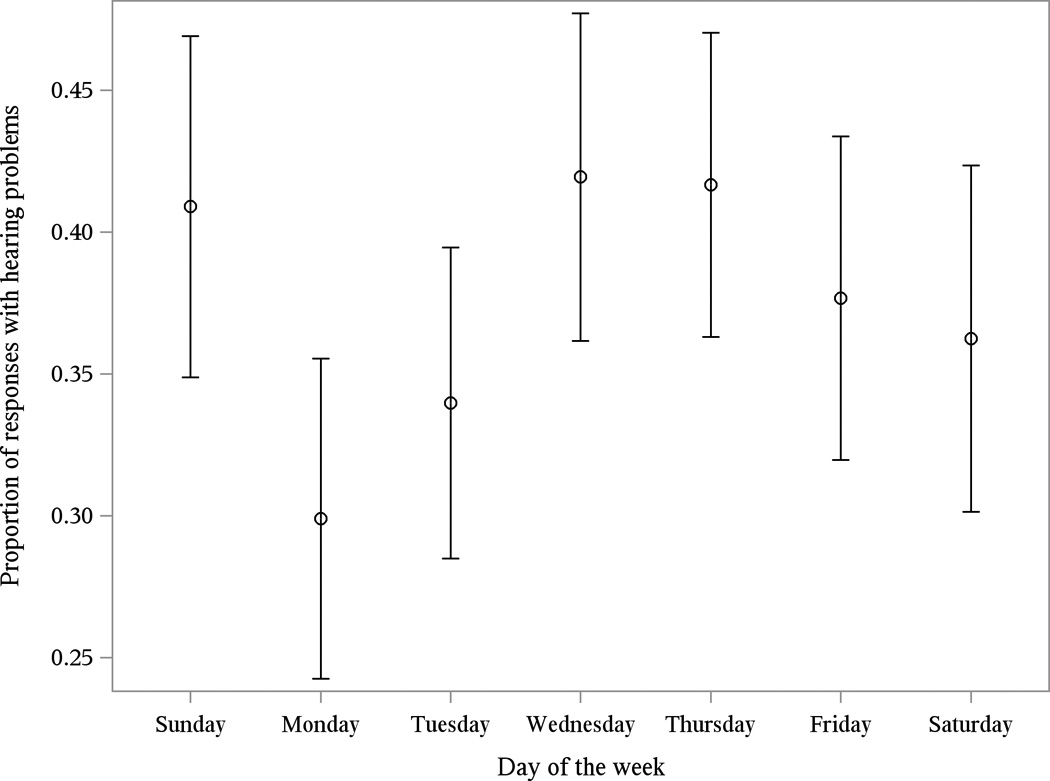

To examine patterns of hearing problem situations over the course of a week, the proportion of assessments indicating a hearing problem situation per day in the study for each participant was calculated. Subsequently, the mean proportion of assessments that indicated a hearing problem for each day of the week for all participants was calculated. Results are presented in Figure 4. In addition, individual data are presented in Appendix B (see Figure, Supplemental Digital Content 2). These plots show the total responses and hearing problem situations per day for each participant in the study.

Figure 4.

Mean proportion of responses indicating a hearing problem situation per day of week, showing between-day variability of the proportion of responses where problems were reported. The bars indicate the standard error of the mean.

Reactivity Analysis

To assess reactivity, mean HHIE scores were compared between the pre- and post-EMA assessments. The mean HHIE score at the orientation session was 41.4 (SD = 23.4) indicating a “moderate handicap.” The mean HHIE score assessed during the debriefing session was 42.7 (SD = 23.6) also indicating a “moderate handicap.” A paired samples t-test was conducted to determine if HHIE scores from the orientation and debriefing sessions were significantly different. The difference in means was 1.4 units, which was not significant, t(21) = 0.73, p = 0.48.
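For readers unfamiliar with the paired-samples t-test, the statistic is the mean of the within-participant differences divided by its standard error, with n − 1 degrees of freedom. The sketch below uses made-up scores purely for illustration; it is not the analysis code used in the study and does not compute the p value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (mean difference, t statistic, degrees of freedom)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = mean(diffs)
    se = stdev(diffs) / sqrt(n)   # standard error of the mean difference
    return mean_diff, mean_diff / se, n - 1


# Hypothetical pre- and post-EMA HHIE scores for four participants.
pre = [10, 20, 30, 40]
post = [12, 22, 29, 45]
mean_diff, t_stat, df = paired_t(pre, post)
print(mean_diff, round(t_stat, 3), df)
```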

Follow-up Session Interview Data

As part of the follow-up session, a semi-structured interview protocol was used to elicit feedback from participants regarding their general experience of participating in the study. When asked whether they believed that using the PDA and completing the assessments changed their feelings about their hearing loss and hearing aids, 15 (62.5%) participants reported no change and nine (37.5%) reported a change. The nine who reported a change discussed having a greater sense of awareness regarding their hearing loss and use of hearing aids. Of these nine, six (66.7%) perceived that the changes were positive and three (33.3%) perceived both positive and negative changes. It is important to note that not one of these nine participants reported a solely negative change. Furthermore, follow-up statistical analyses of these nine participants indicated no significant change in pre- and post-HHIE scores, t(7) = −0.34, p = 0.75. The three participants who expressed both positive and negative changes tended to discuss positive aspects associated with their increased awareness of their hearing loss and their use of hearing aids. For example, one participant who reported greater awareness of his hearing impairment said that the change was “a call to action on my part. To correct the hearing aid issue and do something else to improve my hearing.” Another participant said, “I can see both positive and negative. Awareness of how much I was wearing my hearing aids and situations in which I should have been using my hearing aids.”

Participants were asked if they believed that using the PDA and completing the assessments changed the things they did during the day or the activities they engaged in. Of the 22 participants who responded to this question, 17 (77.3%) stated that they did not change their normal routine. However, five (22.7%) participants reported changes in their normal routine. A few indicated changing their normal routine so that they could hear and respond to the PDA. For example, one participant said, “I didn’t go shopping as much as I usually do because it was hard to carry the PDA in my purse and I had to look at it pretty good. I think it needs to be louder.” One participant said that he had to tell others what he was doing when responding to the PDA. Another participant described using hearing aids more often as a result of being in the study, saying, “Normally, I wouldn’t wear the hearing aids but I did just to hear the PDA alerts.”

We asked participants if they experienced reactions from other people during the EMA testing period. Overall, 22 (91.7%) participants reported that they did not experience reactions from other people. Most participants reported that people were generally not aware of their use of the PDA. For example, one participant said, “I did not notice that. Nobody raised any objections over me taking the time out to use the PDA.” Even though most of the participants reported not experiencing reactions from people, the majority of the participants commented on other people’s positive interest in the study or conveyed various positive interactions. For example, one participant said, “I think some people were interested. I pulled the PDA out in a couple of meetings and it raised awareness of some coworkers.”

Participants were also asked whether the PDA alerts were easy or hard to hear. Of the 24 participants, 14 (58.3%) reported that the alerts were hard to hear, five (20.8%) reported that the alerts were easy to hear, and five (20.8%) reported that the alerts were both easy and hard to hear. Participants who found the alerts hard to hear discussed the difficulty of hearing them in loud or noisy environments, the lack of a vibrating option or of a way to raise the volume of the PDA, and not being able to hear the alerts without using their hearing aids. Among those who reported both easy and hard to hear, many described situational or environmental noise that affected their ability to hear the PDA alerts. For example, one participant said, “It depended on where the device was. If I had it on me, it was easy to hear. But if it wasn’t, it was hard to hear. Like in the car, it was hard to hear.”

Lastly, the participants provided estimates of their response rate and reasons why they may have missed an alert. On average, participants estimated that they had responded to 82% (SD = 12.5) of the PDA alerts. As reported earlier, the actual average response rate was 77% (M = 43.1 completed assessments per participant, SD = 12.0). Participants described a range of reasons why they had missed alerts. These included turning off the PDA alarm because of their involvement in specific activities (e.g., yoga, meetings), missing AM alerts because they were sleeping in or showering, and not hearing the PDA alert because of the activity or setting they were in (e.g., mowing the lawn, noisy environments).

DISCUSSION

The overall objective of this study was to examine the feasibility of using EMA methodology among hearing aid users. Feasibility was indeed demonstrated based on implementation of the EMA device and protocol, reliable results obtained from participants, and lack of reactivity. Specific objectives such as developing questions, designing the sampling protocol, selecting PDA devices, and device programming were all successfully achieved.

Phase 3 participants provided a total of 991 completed momentary assessments (M = 43.1 responses per participant). Response compliance was good (77%), indicating that the sampling protocol and schedule were not overly burdensome to participants. Based on participants’ reports during the follow-up session, compliance was reduced by an occasional inability to hear the alerts. Even so, the rate compares favorably with other methods: research indicates that compliance with manual diary-keeping can be as low as 11% (Stone & Shiffman 2002).
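The relation between the mean number of completed assessments and the compliance rate can be cross-checked with simple arithmetic. The sketch below assumes that each participant was scheduled for 56 alerts (four alerts per day for 14 days, as described in the study design). The function name and the per-participant denominator are illustrative assumptions, not the authors' analysis code.

```python
def compliance_rate(completed: float, alerts_per_day: int = 4, days: int = 14) -> float:
    """Return completed assessments as a fraction of scheduled alerts."""
    scheduled = alerts_per_day * days  # 4 x 14 = 56 alerts per participant (assumed)
    return completed / scheduled


# Mean of 43.1 completed assessments per participant, as reported in the text.
rate = compliance_rate(43.1)
print(f"{rate:.1%}")  # prints 77.0%
```

Under this assumption, 43.1 of 56 scheduled alerts corresponds to the 77% compliance reported above.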

Analyses of pre- and post-HHIE scores indicated that responding to the EMA assessments did not exacerbate participants’ self-perceived hearing handicap. Although there has been some concern that EMA may be subject to so-called “reactive” contamination—i.e., rating a symptom or clinical problem may increase attentiveness and experienced distress—research to date has shown that EMA is generally free of such effects (e.g., Cruise et al. 1996). Our own EMA feasibility studies—the current study with hearing aid users and the study among participants with bothersome tinnitus (Henry et al. 2011)—did not indicate reactivity based on pre- and post-survey measures. However, in this study follow-up session results suggested that several participants experienced greater awareness of their hearing in general. While nine participants reported a greater awareness of their hearing and five of these nine made changes to their normal routines as a result of EMA, their perception of their emotional and social/situational consequences associated with hearing impairment did not increase over the 2-wk period as measured by the HHIE. In other words, some participants experienced more awareness of their hearing in general as they responded to the EMA but it did not increase their level of distress associated with their hearing impairment.
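A pre/post comparison of this kind is commonly run as a paired t test, which is the assumption made in the following minimal sketch. The function name is hypothetical and the HHIE scores are invented for illustration; they are not study data.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, degrees of freedom) for a paired t test on post - pre."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs) / se, n - 1


# Hypothetical HHIE totals for five participants (not study data).
pre_scores = [30, 42, 18, 26, 50]
post_scores = [28, 44, 17, 24, 52]
t, df = paired_t(pre_scores, post_scores)
print(f"t({df}) = {t:.2f}")
```

A t value close to zero, as in this toy example, would indicate that the mean score changed little between the two administrations.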

The data obtained using EMA methodology can be examined in aggregate or individually. The particular approach used to analyze the data depends on the purpose of asking real-time questions in this manner. In general, group data, as shown in Table 2, Appendix A, and Figures 3 and 4, would be useful primarily for research purposes. Group data reveal patterns of hearing difficulties (and successes), which can be useful to evaluate, e.g., different parameters of hearing aids and their utilization. Individual data, as shown in Appendix B, have the potential to reveal important information that could be used clinically to evaluate and improve a patient’s hearing health care.

Research Application of EMA Data

The descriptive analyses of group data shown in Table 2 and Appendix A reveal the research potential for using EMA as a tool to evaluate hearing aid benefit. For example, the Table 2 data indicate that participants experienced higher percentages of hearing problems in situations involving two-way communication. These frequencies of hearing problem situations likely reflect: (a) how often these types of situations arise in everyday life; and (b) the types of situations in which hearing problems are most common.

Analyses of within- and between-day effects of hearing loss revealed diurnal patterns of hearing problem situations (Fig. 3). Hearing problem situations peaked during two time blocks: 11:00 AM – 2:00 PM and 2:00 PM – 5:00 PM. Participants thus experienced a higher proportion of hearing problem situations during the afternoon than during morning or evening time blocks. A possible explanation for this trend is that participants were more likely to interact with other people during afternoon hours, which might suggest a greater need for hearing aids during the afternoon. Furthermore, a pattern was evident when hearing problem situations were examined over the course of a week (Fig. 4): hearing problem situations tended to increase during mid-week, taper off as the weekend approached, and peak again on Sunday. It is again possible that participants tended to interact more with people during periods of greater hearing problem situations. The reduction toward the end of the week and the increase on Sunday are more difficult to interpret. We can conjecture that participants had fewer social activities as the weekend approached but on Sunday experienced greater social interaction by attending church-related events. These results summarize only a selection of the data collected in this study. Many more of these types of analyses were conducted, which describe situations both when participants had a hearing problem and when they did not. Please refer to Appendix A (see Table, Supplemental Digital Content 1) to see mean data obtained with respect to hearing problem situations for questions 6 through 24 (obtained from the long form questionnaire shown in Fig. 1).
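Diurnal patterns such as those in Fig. 3 come from grouping momentary responses by time of day. The sketch below shows one way to compute the proportion of "hearing problem" responses per time block. Only the 11:00 AM – 2:00 PM and 2:00 PM – 5:00 PM blocks are named in the text; the other block boundaries, the record layout, and the sample records are assumptions made for illustration and are not the study's data or code.

```python
from collections import defaultdict

# Block start hours (24-hr clock). Only 11-14 and 14-17 are named in the text;
# the 8-11 and 17-20 blocks are assumed for illustration.
BLOCK_STARTS = [8, 11, 14, 17]
BLOCK_HOURS = 3


def time_block(hour: int) -> str:
    """Map an hour of day to its block label, e.g. 12 -> '11-14'."""
    for start in BLOCK_STARTS:
        if start <= hour < start + BLOCK_HOURS:
            return f"{start}-{start + BLOCK_HOURS}"
    raise ValueError(f"hour {hour} is outside the sampling window")


def problem_proportions(responses):
    """responses: iterable of (hour, had_problem) pairs -> {block: proportion}."""
    counts = defaultdict(lambda: [0, 0])  # block -> [problem count, total count]
    for hour, had_problem in responses:
        tally = counts[time_block(hour)]
        tally[0] += int(had_problem)
        tally[1] += 1
    return {block: yes / total for block, (yes, total) in counts.items()}


# Hypothetical responses: (hour of alert, hearing problem since last alert?)
sample = [(9, False), (12, True), (13, False), (15, True), (16, True), (18, False)]
print(problem_proportions(sample))
```

The same grouping could be applied to day of the week instead of hour of day to produce a weekly pattern like the one in Fig. 4.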

The ability to collect such detailed real-time data describing hearing abilities in various random situations has potential to significantly enhance clinical research evaluating the benefits of hearing aids. It was not possible until recently to intrude in a person’s life at random times throughout each day and electronically obtain detailed information concerning hearing situations and abilities. Hearing aid research could utilize EMA data to more accurately determine the benefit of different hearing aids, models, settings, and other parameters relevant to the listening experience. EMA also could be used to assess patients who are being evaluated to determine their need for hearing aids. No other form of evaluation can provide such detailed and comprehensive data on groups of research participants.

Clinical Application of EMA Data

Appendix B (see Figure, Supplemental Digital Content 2) shows EMA traces graphed individually for each participant. These kinds of individualized graphs can be plotted for any of the questions that were asked during the assessment, or for any other question that could be asked. The amount of data potentially obtained from an individual is vast, which may seem cumbersome and unusable in the clinical environment. However, it is possible to distill individual EMA data into a format revealing the most salient information that would be useful to a clinician. Appendix C (see Figure, Supplemental Digital Content 3) shows an example of what an EMA data summary sheet could look like. Such a summary sheet could be generated by downloading the data from an EMA device into a computer program. This kind of distilled EMA data could be of significant benefit to clinicians to determine the real-life effectiveness of hearing aids.

EMA summary data could be used by clinicians not only to tailor the evaluation and counseling to patients’ specific needs but also to optimize and capitalize on situations in which patients report higher levels of satisfaction. Future EMA studies aimed at evaluating hearing aid efficacy should consider the acclimatization period when designing the duration of the EMA testing period. Research suggests that self reports conducted too early in the fitting process may provide misleading information because users have not had enough time to fully realize the benefits of the new hearing aids (Humes & Humes 2004; Kuk et al. 2003; Vestergaard 2006). EMA has the potential to elucidate the time course of acclimatization in a way that is not possible using standard evaluation methods.

Although all of our participants wore hearing aids, the technique could be used with patients who have hearing loss but have not received hearing aids. The EMA methodology could be incorporated into hearing aid fitting assessments, which could assist in obtaining details of unaided hearing difficulties. As an example, EMA data could reveal that a repeated situation causing hearing difficulty is talking on mobile phones. As a result, specific hearing aid models offering compatibility with mobile phones could be selected and settings could be programmed to better enhance listening while using mobile phones.

Using a similar approach, EMA methods could be used to explore outcomes of individual or group auditory rehabilitation training. The potential also exists to provide intervention via the same device that performs EMA (e.g., tablet computer, smart phone). That is, if patients are experiencing certain difficulties, the device could provide them with recommendations for alleviating the difficulties. In effect, EMA has the potential to implement a form of auditory rehabilitation in addition to conducting assessments.

Limitations of this Study

The response compliance of 77% reveals that for 23% of the alerts no response was obtained. Understanding the circumstances involved in a non-response would be interesting and informative. However, the software program (CERTAS) used in this study does not register a time stamp for non-responses. This is partly based on the programming involved in creating the random alert schedule. Future studies should consider data logging of non-responses.
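Logging non-responses mainly requires that every scheduled alert be recorded with its time stamp, whether or not the participant answers. The following sketch illustrates the idea with a randomly generated daily schedule. It does not reflect how CERTAS works; the class, function, and field names are hypothetical, the date is arbitrary, and drawing one alert per equal slice of the 12-hr window is an assumed scheduling scheme.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class AlertRecord:
    scheduled_at: datetime
    responded: bool = False  # stays False for a non-response


def daily_schedule(day_start: datetime, n_alerts: int = 4, window_hours: int = 12,
                   rng: Optional[random.Random] = None) -> List[AlertRecord]:
    """Draw one random alert time inside each equal slice of the daily window."""
    rng = rng or random.Random()
    slice_minutes = window_hours * 60 // n_alerts
    records = []
    for i in range(n_alerts):
        offset = i * slice_minutes + rng.randrange(slice_minutes)
        records.append(AlertRecord(day_start + timedelta(minutes=offset)))
    return records


# Every alert is logged up front, so unanswered ones keep their time stamps.
log = daily_schedule(datetime(2011, 1, 3, 8, 0), rng=random.Random(0))
log[0].responded = True
log[2].responded = True
missed = [r.scheduled_at for r in log if not r.responded]
print(f"{len(missed)} non-responses logged at:", [t.strftime("%H:%M") for t in missed])
```

With such a log, missed alerts could be analyzed by time of day in the same way as completed assessments.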

Although this study showed no reactivity based on pre- and post-EMA testing, the potential for reactivity cannot be ruled out. A more complete analysis of potential reactive effects would require the comparison of several groups under different conditions of EMA use, including different numbers of alerts each day, and a group that did not perform the EMA protocol. Such studies are necessary to more definitively address this concern.

Several features of the research design limit the ability to generalize our findings. First, the data were not collected from a nationally representative sample. The participants in this pilot study were a convenience sample of mostly older male Veterans, so the findings regarding the hearing difficulties that hearing aid users experienced may not generalize to the larger population. However, given that this was a pilot study with the objective of assessing the feasibility of using EMA with hearing aid users, convenience sampling was an appropriate method for obtaining basic data and trends. Also, it was not, at this pilot project stage, our goal to generate data that could be widely generalized to fundamental clinical issues. This would more properly be the focus of a follow-up study, which would involve (for example) reasonable and appropriate control groups or conditions that would include participants completing or not completing EMA assessments. To increase the generalizability of findings, future research should include a representative mix of males and females, represent different age ranges with respect to patients who suffer from hearing loss and use hearing aids, and also sample from non-Veteran populations.

Remuneration is a common strategy used to enhance compliance in EMA studies (Morren et al. 2009). Without remuneration in a clinical sample, it is possible that the compliance would be lower and data obtained would be biased and incomplete. However, compliance in a clinical sample may not necessarily be lower without monetary incentives as there are other advantages associated with using EMA methods in a treatment context. For example, patients are likely motivated to devote energy to assessment, there are usually clear target behaviors or experiences to report on, and these behaviors or experiences are influenced by the patients’ presenting problem, the nature of the pathology, or the clinical formulation of the case (Shiffman et al. 2008). Furthermore, in a review of EMA studies on clinical assessment, the authors posited that compliance would be similar if not higher in everyday clinical practice because EMA studies in general report high compliance and their own research suggests that compliance rates are largely influenced by the interest that researchers show to the patients involved in the clinical assessment (Ebner-Priemer & Trull 2009). However, until relevant clinical studies are conducted using EMA, it remains unknown whether these advantages over other methods are compelling enough to merit widespread use in clinical practice (Piasecki et al. 2007).

Another limitation of this study was that we used a PDA device that did not have vibratory capabilities. This issue was considered by the researchers and discussed with participants in Phase 2 of the study. During the focus groups, the alarm was demonstrated with the PDA in a large, relatively quiet room. In this environment, all focus group participants reported that the audible alert was loud enough to be heard. However, during the follow-up sessions more than half (58.3%) of the participants reported that the alerts were hard for them to hear over the 2-wk testing period. This greater difficulty in hearing the alarm was likely a result of greater background noise in the participants’ day-to-day environments. Future studies among participants with auditory impairments should use PDAs (or other handheld computers) that have both auditory and vibratory alerting capabilities. Also, as technology advances, PDAs may be able to offer other alerting possibilities (e.g., text alerts, email) and could incorporate multiple methods of data entry (e.g., audio recording, touch screen) that could circumvent fixed responses and allow participants to more uniquely report on their affect, behaviors, and situations.

It is important to note that EMA produces a complex data structure that presents challenges to traditional forms of analysis. In this pilot study, the goal was to assess the feasibility of using EMA methodology with hearing aid users. Accordingly, various analyses examined aggregated forms of data to make comparisons between key variables of interest (e.g., type of hearing problems when use of hearing aids was reported). However, EMA data are generally hierarchical in structure because participants are measured repeatedly at various time points throughout a day over many days in a study period. Traditional forms of analysis in many cases should not be used because most time-series data tend to show serial autocorrelation, which violates the assumption of independence underlying various parametric statistical methods. In contrast, multilevel modeling (or hierarchical linear modeling) may be best suited for EMA data because this approach takes into account the dependencies that exist in the data (Beal & Weiss 2003; Schwartz & Stone 1998). The use of multilevel modeling in future research would allow researchers to examine the effects of both between- and within-person factors on an outcome.
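One preparatory step often used before fitting a multilevel model is to split each repeated measure into a between-person part (the person's own mean) and a within-person part (the momentary deviation from that mean). The sketch below illustrates only this decomposition, not a full multilevel model. The function name, participant labels, and ratings are invented for illustration.

```python
from statistics import mean


def decompose(ratings_by_person):
    """Split repeated ratings into between- and within-person components.

    ratings_by_person: {person_id: [rating, ...]}
    Returns {person_id: (person_mean, [rating - person_mean, ...])}.
    """
    result = {}
    for person, ratings in ratings_by_person.items():
        person_mean = mean(ratings)  # between-person component
        deviations = [r - person_mean for r in ratings]  # within-person component
        result[person] = (person_mean, deviations)
    return result


# Hypothetical momentary ratings (e.g., listening difficulty on a 1-5 scale).
data = {"P01": [2, 3, 4], "P02": [5, 5, 4, 2]}
for person, (between, within) in decompose(data).items():
    print(person, between, within)
```

In a multilevel analysis, the person means would typically enter as between-person predictors and the deviations as within-person predictors, with the repeated assessments nested within participants.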

Conclusion

This pilot study has demonstrated the feasibility of using EMA methodology to evaluate outcomes of hearing aid use. Further study is needed to determine if this technique can be used in the clinical setting with patients, both before and after receiving hearing aids. For this study, reactivity was not observed, thus indicating that the protocol that we designed and used was appropriate to avoid overly burdening patients with these procedures. We plan to conduct a follow-up study, which should answer remaining questions and potentially develop the technique for clinical application.

The use of questionnaires to measure audiologic rehabilitation outcomes can often be inaccurate because of retrospective recall of events and experiences. Ecological momentary assessment (EMA) is a methodological approach that can alleviate biases because participants are asked about immediate experiences in their natural environment. The objective of this feasibility study was to examine the applications of EMA to the evaluation of hearing difficulties encountered by hearing aid users. Twenty-four participants carried a personal digital assistant (PDA) that was programmed to perform EMA four times per day for 14 days. Results indicate that EMA methodology is feasible with hearing aid users.

ACKNOWLEDGMENTS

We thank Erin Conner for her work as a research assistant during the first half of this project. The contents of this report do not represent the views of the Department of Veterans Affairs or the United States Government.

Sources of support:

Research Grant from the National Institute on Deafness and Other Communication Disorders (R03DC009012)

Research Career Scientist Award from VA Rehabilitation Research and Development Service for JAH (F7070S)

Rehabilitation Research Disability Supplement Award from VA Rehabilitation Research and Development Service for MBT (C3128I)

Administrative support and facilities provided by:

VA National Center for Rehabilitative Auditory Research, Portland, OR

Oregon Health & Science University, Portland, OR

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- Beal DJ, Weiss HM. Methods of ecological momentary assessment in organizational research. Organizational Research Methods. 2003;6(4):440–464.

- Bentler RA. Effectiveness of directional microphones and noise reduction schemes in hearing aids: a systematic review of the evidence. J Am Acad Audiol. 2005;16(7):473–484. doi: 10.3766/jaaa.16.7.7.

- Boothroyd A. Adult aural rehabilitation: what is it and does it work? Trends Amplif. 2007;11(2):63–71. doi: 10.1177/1084713807301073.

- Brown KW, Moskowitz DS. Does unhappiness make you sick? The role of affect and neuroticism in the experience of common physical symptoms. J Pers Soc Psychol. 1997;72(4):907–917. doi: 10.1037//0022-3514.72.4.907.

- Chisolm TH, Abrams HB, McArdle R. Short- and long-term outcomes of adult audiological rehabilitation. Ear Hear. 2004;25(5):464–477. doi: 10.1097/01.aud.0000145114.24651.4e.

- Compton-Conley CL, Neuman AC, Killion MC, Levitt H. Performance of directional microphones for hearing aids: real-world versus simulation. J Am Acad Audiol. 2004;15(6):440–455. doi: 10.3766/jaaa.15.6.5.

- Cox RM. Evidence-based practice in provision of amplification. J Am Acad Audiol. 2005;16(7):419–438. doi: 10.3766/jaaa.16.7.3.

- Cruise CE, Broderick J, Porter L, Kaell A, Stone AA. Reactive effects of diary self-assessment in chronic pain patients. Pain. 1996;67(2–3):253–258. doi: 10.1016/0304-3959(96)03125-9.

- Ebner-Priemer UW, Trull TJ. Ecological momentary assessment of mood disorders and mood dysregulation. Psychol Assess. 2009;21(4):463–475. doi: 10.1037/a0017075.

- Gagne JP. What is treatment evaluation research? What is its relationship to the goals of audiological rehabilitation? Who are the stakeholders of this type of research? Ear Hear. 2000;21(4 Suppl):60S–73S. doi: 10.1097/00003446-200008001-00008.

- Gatehouse S. Components and determinants of hearing aid benefit. Ear Hear. 1994;15(1):30–49. doi: 10.1097/00003446-199402000-00005.

- Gatehouse S. Glasgow Hearing Aid Benefit Profile: Derivation and validation of a client-centered outcome measure for hearing aid services. Journal of the American Academy of Audiology. 1999;10:80–103.

- Henry JA, Galvez G, Turbin MB, Thielman E, McMillan G, Istvan J. Pilot study to evaluate ecological momentary assessment of tinnitus. Ear Hear (Epub ahead of print) 2011. doi: 10.1097/AUD.0b013e31822f6740.

- Humes L, Humes L. Factors affecting long-term hearing aid success. Seminars in Hearing. 2004;25(1):63–72.

- Kahneman D, Fredrickson BL, Schreiber CA. When more pain is preferred to less: Adding a better end. Psychological Science. 1993;4:401–405.

- Knudsen LV, Oberg M, Nielsen C, Naylor G, Kramer SE. Factors influencing help seeking, hearing aid uptake, hearing aid use and satisfaction with hearing aids: a review of the literature. Trends Amplif. 2010;14(3):127–154. doi: 10.1177/1084713810385712.

- Kochkin S. MarkeTrak VIII: 25-year trends in the hearing health market. Hearing Review. 2009;62(10):12–31.

- Kochkin S. MarkeTrak VIII: Consumer satisfaction with hearing aids is slowly increasing. The Hearing Journal. 2010;63(1):19–32.

- Kricos PB. The influence of nonaudiological variables on audiological rehabilitation outcomes. Ear Hear. 2000;21(4 Suppl):7S–14S. doi: 10.1097/00003446-200008001-00003.

- Krueger RA. Analyzing and reporting focus group results. Thousand Oaks, CA: Sage; 1998.

- Kuk FK, Potts L, Valente M, Lee L, Piccirillo J. Evidence of acclimatization in persons with severe-to-profound hearing loss. J Am Acad Audiol. 2003;14(2):84–99. doi: 10.3766/jaaa.14.2.4.

- Morren M, van Dulmen S, Ouwerkerk J, Bensing J. Compliance with momentary pain measurement using electronic diaries: a systematic review. Eur J Pain. 2009;13(4):354–365. doi: 10.1016/j.ejpain.2008.05.010.

- Noble W. Self-reports about tinnitus and about cochlear implants. Ear Hear. 2000;21(4 Suppl):50S–59S. doi: 10.1097/00003446-200008001-00007.

- Noble W. Extending the IOI to significant others and to non-hearing-aid-based interventions. Int J Audiol. 2002;41(1):27–29. doi: 10.3109/14992020209101308.

- Piasecki TM, Hufford MR, Solhan M, Trull TJ. Assessing clients in their natural environments with electronic diaries: rationale, benefits, limitations, and barriers. Psychol Assess. 2007;19(1):25–43. doi: 10.1037/1040-3590.19.1.25.

- Powell RA, Single HM, Lloyd KR. Focus groups in mental health research: Enhancing the validity of user and provider questionnaires. Intern J Soc Psychiat. 1996;42:193–206. doi: 10.1177/002076409604200303.

- Prendergast SG, Kelley LA. Aural rehab services: Survey reports who offers which ones and how often, and by whom. The Hearing Journal. 2002;55(9):30–35.

- Robinson MD, Clore GL. Belief and feeling: evidence for an accessibility model of emotional self-report. Psychol Bull. 2002;128(6):934–960. doi: 10.1037/0033-2909.128.6.934.

- Saunders GH, Chisolm TH, Abrams HB. Measuring hearing aid outcomes--not as easy as it seems. J Rehabil Res Dev. 2005;42(4 Suppl 2):157–168. doi: 10.1682/jrrd.2005.01.0001.

- Schwartz JE, Stone AA. Strategies for analyzing ecological momentary assessment data. Health Psychol. 1998;17(1):6–16. doi: 10.1037//0278-6133.17.1.6.

- Scollon CN, Kim-Prieto C, Diener E. Experience sampling: Promises and pitfalls, strengths and weaknesses. Journal of Happiness Studies. 2003;4:5–34.

- Shiffman S, Stone AA, Hufford MR. Ecological momentary assessment. Annu Rev Clin Psychol. 2008;4:1–32. doi: 10.1146/annurev.clinpsy.3.022806.091415.

- Stone AA, Broderick JE, Schwartz JE, Shiffman S, Litcher-Kelly L, Calvanese P. Intensive momentary reporting of pain with an electronic diary: reactivity, compliance, and patient satisfaction. Pain. 2003;104(1–2):343–351. doi: 10.1016/s0304-3959(03)00040-x.

- Stone AA, Shiffman S. Capturing momentary, self-report data: a proposal for reporting guidelines. Ann Behav Med. 2002;24(3):236–243. doi: 10.1207/S15324796ABM2403_09.

- Stone AA, Shiffman SS, DeVries MW. Ecological momentary assessment. In: Kahneman D, Diener E, Schwarz N, editors. Well-being: The foundations of hedonic psychology. New York: Russell Sage Foundation; 1999.

- Ventry IM, Weinstein BE. The hearing handicap inventory for the elderly: a new tool. Ear Hear. 1982;3(3):128–134. doi: 10.1097/00003446-198205000-00006.

- Vestergaard MD. Self-report outcome in new hearing-aid users: Longitudinal trends and relationships between subjective measures of benefit and satisfaction. Int J Audiol. 2006;45(7):382–392. doi: 10.1080/14992020600690977.

- Vogt DS, King DW, King LA. Focus groups in psychological assessment: enhancing content validity by consulting members of the target population. Psychol Assess. 2004;16(3):231–243. doi: 10.1037/1040-3590.16.3.231.

- Wong LL, Hickson L, McPherson B. Hearing aid satisfaction: what does research from the past 20 years say? Trends Amplif. 2003;7(4):117–161. doi: 10.1177/108471380300700402.
