Abstract

Background

Since 2007, New York City's primary care information project has assisted over 3000 providers to adopt and use a prevention-oriented electronic health record (EHR). Participating practices were taught to re-adjust their workflows to use the EHR built-in population health monitoring tools, including automated quality measures, patient registries and a clinical decision support system. Practices received a comprehensive suite of technical assistance, which included quality improvement, EHR customization and configuration, privacy and security training, and revenue cycle optimization. These services were aimed at helping providers understand how to use their EHR to track and improve the quality of care delivered to patients.

Materials and Methods

Retrospective electronic chart reviews of 4081 patient records across 57 practices were analyzed to determine the validity of EHR-derived quality measures and documented preventive services.

Results

Results from this study show that workflow and documentation habits have a profound impact on EHR-derived quality measures. Compared with the manual review of electronic charts, EHR-derived measures can undercount practice performance, with a disproportionately negative impact on the number of patients captured as receiving a clinical preventive service or meeting a recommended treatment goal.

Conclusion

This study provides a cautionary note about using EHR-derived measurement for public reporting of provider performance or for payment.

Keywords: EHR-derived quality measures, electronic health records, electronic medical records, extension center, meaningful use measures, New York City, primary care, primary care information project (PCIP)

The American Recovery and Reinvestment Act of 2009 authorized US$19 billion in funding for the deployment and meaningful use of electronic health records (EHR), and introduced a national framework for the adoption of health information technology.1 The Centers for Medicare & Medicaid Services (CMS) has offered eligible providers financial incentives for demonstrating meaningful use of EHR and reporting on the quality of care.2 Starting with stage 1 of meaningful use, CMS calls for the submission of provider-level quality measures, initially by attestation and then through electronic submission, starting as early as 2012. Many stakeholders, including payers, independent physician associations, and consumers, have a vested interest in accessing and utilizing EHR-derived quality measures for purposes of accountability or rankings. However, quality measures derived from EHR have yet to be validated as representative of provider performance for incentives or comparative purposes.

Unlike most claims-based quality measurement, measures derived from EHR can incorporate clinical findings, allowing for the tracking of intermediate outcomes such as blood pressure and body mass index. However, documentation habits by providers can vary, and the necessary data entered into the EHR may not be interpreted or recognized by standard EHR software programming. This may lead to undercounting the patients eligible for a preventive service (eg, diagnosis of ischemic cardiovascular disease) or receiving a recommended treatment (eg, screening or medication) or meeting a recommended target (eg, control of blood pressure to less than 140/90 mm Hg).

Formed in 2005, the New York City Primary Care Information Project (PCIP) has assisted over 3000 providers to adopt and use a prevention-oriented EHR as a means to improve the delivery of primary care. Nearly 40% of the participating providers are operating in small (fewer than 10 providers), physician-owned practices. PCIP selected an EHR vendor through a competitive process and co-developed prevention-oriented functionality and population health monitoring tools, including automated quality reporting and a clinical decision support system (CDSS). The quality reporting tool displays by measure, for each eligible patient, whether the practice has or has not met the recommended preventive service. In addition, at the point of care, the CDSS function displays the preventive services a patient is eligible for and has not yet received, allowing the provider to take action (eg, order a mammogram, adjust medications, discuss smoking cessation aids) during the visit.

Providers were trained by both the EHR vendor's training staff and practice consultants employed by PCIP, who provided on-site technical assistance. Providers were taught to re-adjust the practice's workflows to document diagnoses and key preventive services in structured fields that are searchable and capable of generating the quality measures and preventive service reminders. Providers were also shown how to view their EHR calculated quality measures both within the EHR and through monthly reports generated by PCIP staff and emailed to individual providers. In addition, efforts were made to create alignment between payment and improved quality of care by informing providers of the various available incentive programmes as well as launching PCIP designed programmes. Through these synergistic changes: (1) prevention-oriented EHR; (2) practice workflow redesign; and (3) payment that rewards prevention, PCIP worked with primary care providers to prioritize prevention and facilitate management of chronic disease.3

This report provides an assessment of the validity of quality measures derived from information entered into the EHR and describes the issues contributing to variations in the results of automated EHR quality measurement.

Methods

Practice selection

A subset of 82 practices enrolled in a pilot rewards and recognition programme were invited to participate in the data validation study, as they had all implemented the eClinicalWorks EHR software before January 2009 and received technical assistance through the PCIP programme, and had a majority of their patient panel recorded in the EHR. Practices were required to have a minimum of 200 electronic patient records with a diagnosis of diabetes, hypertension, dyslipidemia, or ischemic cardiovascular disease. All 82 practices received a software upgrade between February and August 2009 to implement automated quality measurement reporting and the CDSS functionality. Participating practices signed a letter of consent allowing independent medical reviewers to conduct abstraction of the EHR and received an honorarium of US$500 for completing the study. This study was approved by the Department of Health and Mental Hygiene institutional review board no 09-067.

Electronic chart reviews

Medical reviewers randomly sampled 120 electronic patient charts per practice for established patients between 18 and 75 years of age, with at least one office visit since the practice implemented the EHR. For this study, data from the manual review of the electronic chart (e-chart) were analyzed if the patient had an office visit during the 6-month period after the activation date of the quality measurement reporting tool and implementation of the CDSS.

For each e-chart, reviewers abstracted the patient's age and gender, along with vitals, diagnoses, medications, laboratory results, diagnostic images, vaccinations, and receipt of or referral to counselling for the most recent visit. Depending on the data element, reviewers were instructed to search in multiple locations of the EHR: problem list, medical history, social history, progress notes (chief complaint, history of present illness, assessment), procedures, diagnostic images, vitals, and laboratory tests. Each reviewer was trained and tested by a standardized approach to ensure interrater reliability. If it was uncertain whether documentation would meet the quality measure criteria, a senior reviewer and PCIP staff determined whether to include or exclude the observation.

Analytical methods

For each patient, each data element was coded based on whether it was documented in a structured field recognized by the existing EHR software for automated quality measurement (1=location recognized and 0=not recognized for quality measurement reporting). In addition, two sets of numerator and denominator counts were generated for each of the 11 quality measures. The first set of counts included only those patients whose information was documented in structured fields recognized by the existing software (EHR automated). The second set of counts incorporated all information about patients documented in the EHR (e-chart review). Data element coding and all counts were calculated using Microsoft Access structured query language.
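The two sets of counts can be illustrated with a small sketch. The study computed them in Microsoft Access SQL; the Python below is only an illustration of the same idea, and the location names, the `Element` record, and the toy patients are assumptions made for the example rather than the study's actual schema or data.

```python
from dataclasses import dataclass

# Hypothetical location names; the study's actual field list is not given here.
RECOGNIZED_LOCATIONS = {"problem_list", "vitals", "medications", "laboratory_tests"}


@dataclass
class Element:
    """One abstracted data element for one patient."""
    patient_id: int
    role: str        # "D" (denominator criterion) or "N" (numerator criterion)
    location: str    # where in the chart the reviewer found it

    @property
    def recognized(self) -> int:
        # 1 = location recognized for automated measurement, 0 = not recognized
        return 1 if self.location in RECOGNIZED_LOCATIONS else 0


def counts(elements, recognized_only):
    """Return (numerator, denominator) patient counts for a single measure."""
    usable = [e for e in elements if e.recognized or not recognized_only]
    denominator = {e.patient_id for e in usable if e.role == "D"}
    # A patient counts toward the numerator only if also in the denominator.
    numerator = {e.patient_id for e in usable if e.role == "N"} & denominator
    return len(numerator), len(denominator)


# Toy data for a diabetes measure: diagnosis (D) and A1c result (N).
elements = [
    Element(1, "D", "problem_list"), Element(1, "N", "laboratory_tests"),
    Element(2, "D", "problem_list"), Element(2, "N", "scanned_docs"),
    Element(3, "D", "medical_history"), Element(3, "N", "laboratory_tests"),
]

ehr_automated = counts(elements, recognized_only=True)
echart_review = counts(elements, recognized_only=False)
print("EHR automated (N, D):", ehr_automated)
print("e-chart review (N, D):", echart_review)
```

In this toy example the automated count sees one of two patients meeting the measure, whereas the e-chart review sees three of three, because one diagnosis and one test result sit in locations the query does not read.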

Simple frequencies and descriptive statistics were generated to determine data element documentation patterns and estimate population-level numerators and denominators for each quality measure. Wilcoxon non-parametric tests were used to compare practice-level numerators and denominators calculated for each measure.
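The text does not state which Wilcoxon variant was used, and the tests themselves were run in SAS. As a rough sketch only, assuming a paired (signed-rank) comparison of each practice's EHR-automated count against its e-chart count, the test statistic could be computed as follows. The function name and the six practice counts are invented for illustration, and no p-value is computed.

```python
def signed_rank(x, y):
    """Return (W_plus, W_minus) for paired samples x and y.

    Zero differences are dropped and tied absolute differences share
    their average rank, the usual convention for this test.
    """
    diffs = [b - a for a, b in zip(x, y) if b != a]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over the run of equal absolute differences.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


# Made-up numerator counts for six practices (not study data).
ehr_query = [2, 0, 5, 1, 3, 4]
echart = [4, 3, 5, 2, 2, 9]
w_plus, w_minus = signed_rank(ehr_query, echart)
print(w_plus, w_minus)
```

A large imbalance between the two rank sums indicates that one method tends to give systematically higher counts than the other across practices.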

All descriptive statistics and tests were conducted using SAS V.9.2 analytical software. Practice distribution of documentation and calculated proportions of elements documented in various locations of the electronic chart were generated in MS Excel and MS Access.

Results

Of the 82 practices eligible for the study, 57 practices agreed to allow PCIP to conduct the e-chart review. Based on self-reported information and practice registries, practices that agreed to participate in the study had a higher average percentage of Medicaid insured patients (42.7% vs 30.7%), and a higher number of patients with diabetes (209.9 vs 110.0) and hypertension (477.8 vs 353.2) (table 1).

Table 1.

Characteristics of practices and patient charts reviewed

| Characteristic | Participating in chart review (N=57), mean (min−max) | Not participating in chart review (N=28), mean (min−max) |
| --- | --- | --- |
| Practice characteristics | | |
| No of providers per practice | 2.9 (1−24) | 2.4 (1−13) |
| No of full-time equivalent | 1.7 (0.7−9.2) | 1.9 (1.0−10.8) |
| Percentage of patients with Medicaid insurance or uninsured | 42.7 (0.0−90.0) | 30.7 (2.0−81.0) |
| Estimated population* | | |
| Patients >18 years of age with at least one office visit in the past year | 1936.2 (0−9117) | 1905.5 (12−9723) |
| Diabetes | 209.9 (3−1480) | 110.0 (1−450) |
| Hypertension | 477.8 (2−2460) | 353.2 (1−1121) |
| Months using EHR by 1 July 2009 | 14.3 (7.5−39.1) | 13.7 (3.7−22.4) |
| Patient characteristics of charts reviewed | Mean (min−max) | Not applicable |
| Medical charts reviewed (total 4081) | 71.6 (19−111) | – |
| %Female | 59.4 (35.0−80.7) | – |
| Patient age, years | 48.1 (32.8−61.5) | – |
| Percentage of records with diagnoses | | |
| Diabetes | 17.6 (0.0−48.1) | – |
| Ischemic cardiovascular disease | 7.3 (0.0−39.0) | – |
| Hypertension | 41.2 (8.2−87.0) | – |
| Dyslipidemia | 36.0 (3.3−85.4) | – |
| Current smokers | 10.3 (1.4−37.9) | – |

*From automated reporting from the electronic health record (EHR), separate from electronic chart reviews.

A total of 4081 e-charts were available for this analysis. An additional 2759 e-charts were reviewed, but were excluded from the analysis because the patients did not have a qualifying office visit during the 6-month study period. More than half of the final study sample of patients were women (59.4%), and the average patient age was 48.1 years. Participating practices varied in their distribution of patients with diagnoses of diabetes, ischemic cardiovascular disease, hypertension, dyslipidemia and patients who were current smokers (table 1). The majority (89.9%) of participating providers were primary care providers (ie, internal medicine, family medicine, pediatrics, obstetrics/gynecology). Non-primary care providers specialized in cardiology, pulmonology, endocrinology, allergy, gastroenterology, or did not specify a specialty (data not shown).

We looked across the 11 clinical quality measures to assess where information was documented. The presence of data recognized for automated quality measurement varied widely, ranging from 10.7% to 99.9% (table 2). Measure components relying on vitals, vaccinations, and medications had the highest proportion of information documented in structured fields recognized by the automated quality measures. The majority of diagnoses for chronic conditions such as diabetes (≥91.4% across measures), hypertension (89.3%), ischemic cardiovascular disease (≥78.8% across measures) and dyslipidemia (75.1%) were documented in the problem list, a structured field used for automated quality measurement. Patient diagnoses not recognized for inclusion in the measure were recorded in the medical history, assessment, chief complaint, or history of present illness, sections that typically allow for free-text entries.

Table 2.

Frequency of clinical information and locations in electronic charts for quality measurement

| Name of measure and brief description | D or N | Data element (no of eligible charts) | Recognized for quality measurement: location | Recognized: no | Recognized: % | Not recognized for quality measurement: location | Not recognized: no | Not recognized: % |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Screening measures (patients for the denominator are identified by their age and gender) | | | | | | | | |
| Breast cancer screening | | | | | | | | |
| Female patients ≥40 years of age who received a mammogram in the past 2 years | N | Diagnostic order and result for mammogram (543) | Procedures | 29 | 10.7 | Scanned patient docs | 270 | 48.7 |
| | | | | | | Diagnostic imaging | 204 | 36.8 |
| | | | | | | Other | 10 | 3.6 |
| BMI | | | | | | | | |
| Patients ≥18 years of age who have a BMI measured in the past 2 years | N | BMI documented (3122) | Vitals | 3116 | 99.8 | Medical history | 2 | 0.1 |
| | | | | | | Other | 4 | 0.1 |
| Influenza vaccine | | | | | | | | |
| Patients >50 years of age who received a flu shot in the past year | N | Influenza vaccination documented (480) | Immunizations | 475 | 99.0 | Chief complaint/HPI | 3 | 0.6 |
| | | | | | | Procedures | 2 | 0.4 |
| Smoking status | | | | | | | | |
| Smoking status updated annually in patients ≥18 years of age | N | Smoking status documented (3357) | Smart form | 1796 | 53.4 | Social history | 1530 | 45.5 |
| | | | | | | Other | 26 | 0.8 |
| | | | | | | Medical history | 5 | 0.2 |
| Intervention measures | | | | | | | | |
| Antithrombotic therapy | | | | | | | | |
| Patients ≥18 years of age with a diagnosis of IVD or ≥40 years of age with a diagnosis of diabetes taking aspirin or another antithrombotic therapy | D | Diabetes diagnosis and age ≥40 years (680) | Problem list | 623 | 91.6 | Medical history | 42 | 6.2 |
| | | | | | | Assessment | 11 | 1.6 |
| | | | | | | Chief complaint/HPI | 4 | 0.6 |
| | D | IVD diagnosis (303) | Problem list | 250 | 82.5 | Medical history | 45 | 14.9 |
| | | | | | | Assessment | 7 | 2.3 |
| | | | | | | Chief complaint/HPI | 1 | 0.3 |
| | N | Appropriate medication prescribed (759) | Medications | 757 | 99.7 | Plan/treatment | 2 | 0.3 |
| BP control | | | | | | | | |
| Patients 18–75 years of age with a diagnosis of hypertension, with or without IVD, with a recorded systolic BP <140 mm Hg and diastolic BP <90 mm Hg in the past 12 months (<130 mm Hg and <80 mm Hg in patients with hypertension and DM) | D | Hypertension diagnosis (1676) | Problem list | 1497 | 89.3 | Medical history | 157 | 9.4 |
| | | | | | | Assessment | 11 | 0.7 |
| | | | | | | Chief complaint/HPI | 11 | 0.7 |
| | | Comorbid diagnoses | | | | | | |
| | D | Diabetes (551) | Problem list | 512 | 92.9 | Medical history | 28 | 5.1 |
| | | | | | | Assessment | 10 | 1.8 |
| | | | | | | HPI | 1 | 0.2 |
| | D | IVD (118) | Problem list | 93 | 78.8 | Medical history | 24 | 20.3 |
| | | | | | | Assessment | 1 | 0.9 |
| | N | BP documented (3868) | Vitals | 3866 | 99.9 | Medical history | 2 | 0.1 |
| Cholesterol screening and control | D | Dyslipidemia diagnosis (1468) | Problem list | 1112 | 75.1 | Medical history | 269 | 18.2 |
| | | | | | | Assessment | 86 | 5.8 |
| | | | | | | Chief complaint/HPI | 12 | 0.8 |
| High risk | | | | | | | | |
| Screening: Patients 18–75 years of age with a diagnosis of dyslipidemia and DM or IVD with a measured LDL in the past year | | Comorbid diagnoses | | | | | | |
| | D | Diabetes (515) | Problem list | 477 | 92.6 | Medical history | 30 | 5.8 |
| | | | | | | Assessment | 6 | 1.2 |
| | | | | | | Chief complaint/HPI | 2 | 0.4 |
| Control: Screened patients with LDL <100 mg/dl | D | IVD (211) | Problem list | 182 | 86.3 | Medical history | 27 | 12.8 |
| | | | | | | Assessment | 1 | 0.5 |
| | | | | | | Chief complaint/HPI | 1 | 0.5 |
| General population | | | | | | | | |
| Screening: Men ≥35 and women ≥45 years of age with no DM or IVD with a measured total cholesterol or LDL in the past 5 years | N | LDL cholesterol test result (2425) | Laboratory tests | 1294 | 53.4 | Scanned patient docs | 1106 | 45.6 |
| | | | | | | Other | 24 | 1.0 |
| | | | | | | Medical history | 1 | 0.0 |
| Control: Screened patients with total cholesterol <240 mg/dl or LDL <160 mg/dl | N | Total cholesterol test result (2545) | Laboratory tests | 1383 | 54.3 | Scanned patient docs | 1137 | 44.7 |
| | | | | | | Other | 24 | 0.9 |
| | | | | | | Medical history | 1 | 0.0 |
| Hemoglobin A1c screening and control | | | | | | | | |
| Screening: Patients 18–75 years of age with a diagnosis of DM with a documented hemoglobin A1c test within the past 6 months | D | Diabetes diagnosis (740) | Problem list | 676 | 91.4 | Medical history | 48 | 6.5 |
| | | | | | | Assessment | 12 | 1.6 |
| | | | | | | Chief complaint/HPI | 4 | 0.5 |
| Control: Screened patients with hemoglobin A1c <7% | N | Hemoglobin A1c test result (642) | Laboratory tests | 294 | 63.0 | Scanned patient docs | 171 | 36.6 |
| | | | | | | Other | 2 | 0.4 |
| Smoking cessation intervention | | | | | | | | |
| Current smokers who received cessation interventions or counselling in the past 12 months | D | Documented current smokers (409) | Smart form | 243 | 59.4 | Social history | 161 | 39.4 |
| | | | | | | Other | 3 | 0.7 |
| | | | | | | Assessment | 2 | 0.5 |
| | N | Smoking cessation intervention (129) | Smart form | 85 | 64.9 | Other | 33 | 25.2 |
| | | | | | | Medications | 8 | 6.1 |
| | | | | | | Scanned patient docs | 3 | 2.3 |

BMI, body mass index; BP, blood pressure; DM, diabetes mellitus; HPI, history of present illness; IVD, ischemic cardiovascular disease; LDL, low-density lipoprotein.

Diagnostic orders or results for mammogram had the lowest proportion (10.7%) of data recorded in structured fields recognized for automated quality measurement. The majority of the information for breast cancer screening was found in scanned patient documents and diagnostic imaging; neither source is amenable to automated electronic queries.

Just over half of the information for measures that require a laboratory test result, such as control of hemoglobin A1c and cholesterol, was documented in structured fields recognized for automated quality measurement (range 53.4–63.0%). Similarly, only about half of the information regarding patient smoking status (53.4%) was recognized for automated quality measurement.

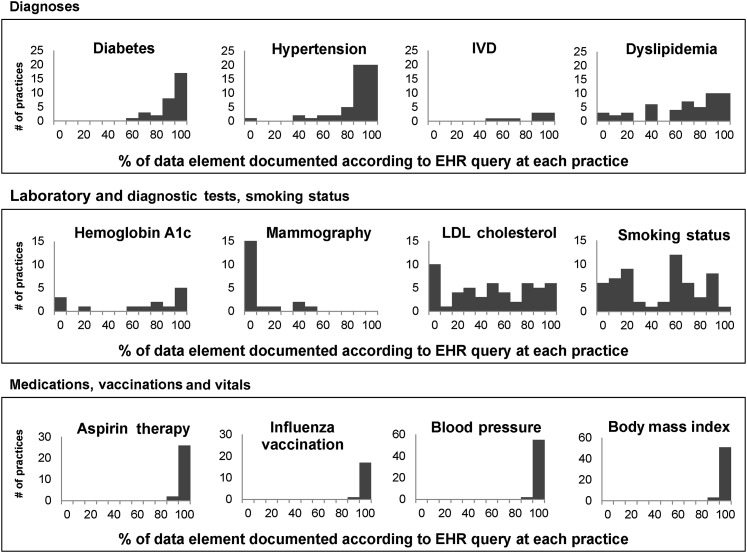

With the exception of medications, vaccinations, and blood pressure readings, practices varied substantially in where they chose to document the data elements required for automated quality measurement (figure 1).

Figure 1.

Distribution of documentation and data elements recognized for quality measurement across 57 practices. EHR, electronic health record; IVD, ischemic cardiovascular disease; LDL, low-density lipoprotein.

In estimating the denominator loss due to unrecognizable documentation, the average practice missed half of the eligible patients for three of the 11 quality measures—hemoglobin A1c control, cholesterol control, and smoking cessation intervention (table 3). No statistically significant differences were observed between the e-chart and EHR automated quality measurement scores in the number of patients captured for the denominator for the remaining eight measures. Current EHR reporting would underreport practice numerators for six of the 11 measures—hemoglobin A1c control, hemoglobin A1c screening, breast cancer screening, cholesterol control, cholesterol screening, and smoking status recorded.

Table 3.

Comparison of numerator and denominator counts for EHR automated quality measurement and electronic chart review (n=57 practices)

| Measure | Denominator, EHR query: mean | Denominator, EHR query: SD | Denominator, e-chart review: mean | Denominator, e-chart review: SD | Numerator, EHR query: mean | Numerator, EHR query: SD | Numerator, e-chart review: mean | Numerator, e-chart review: SD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| A1c control | 4.9 | 5.2 | 8.2** | 6.1 | 1.8 | 2.4 | 2.7* | 2.6 |
| A1c screening | 11.8 | 8.6 | 12.9 | 8.6 | 4.9 | 5.2 | 8.2** | 6.1 |
| Antithrombotic therapy | 13.1 | 10.3 | 14.7 | 10.6 | 7.5 | 7.8 | 8.2 | 7.8 |
| Body mass index | 71.4 | 18.1 | 71.4 | 18.1 | 54.6 | 23.2 | 54.8 | 23.2 |
| Blood pressure control | 25.7 | 14.4 | 29.3 | 14.4 | 14.1 | 9.6 | 16.2 | 10.0 |
| Breast cancer screening | 28.7 | 9.9 | 28.7 | 9.9 | 1.0 | 2.3 | 9.5** | 8.2 |
| Cholesterol control | 15.7 | 14.3 | 29.1** | 15.7 | 13.0 | 12.5 | 23.8** | 13.5 |
| Cholesterol screening | 41.7 | 15.2 | 44.2 | 15.8 | 15.7 | 14.3 | 29.1** | 15.7 |
| Influenza vaccination | 34.4 | 15.1 | 34.4 | 15.1 | 8.1 | 8.7 | 8.3 | 8.9 |
| Smoking cessation intervention | 4.3 | 3.8 | 7.2** | 4.8 | 1.5 | 2.7 | 2.3 | 3.5 |
| Smoking status recorded | 71.5 | 18.1 | 71.5 | 18.1 | 31.5 | 24.0 | 59.5** | 18.9 |

*p<0.01 **p<0.001.

EHR, electronic health record.
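As a quick arithmetic check on the denominator figures in table 3, the relative shortfall of the EHR query against the e-chart review can be computed from the reported practice-level means. This is a ratio of means, so it is only an approximation and need not equal the average of each practice's own shortfall; the function name is an assumption made for the example.

```python
# Practice-level means from table 3: (EHR query, e-chart review).
denominator_means = {
    "A1c control": (4.9, 8.2),
    "Cholesterol control": (15.7, 29.1),
    "Smoking cessation intervention": (4.3, 7.2),
}


def relative_loss(ehr_mean, echart_mean):
    """Share of the e-chart count that the EHR query did not capture."""
    return 1 - ehr_mean / echart_mean


losses = {name: round(100 * relative_loss(*pair), 1)
          for name, pair in denominator_means.items()}
for name, pct in losses.items():
    print(f"{name}: {pct}% of eligible patients not captured")
```

On these means the shortfall is roughly 40% to 46% for the three measures with a statistically significant denominator difference.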

Discussion

As the nation continues to drive EHR adoption through significant infusions of funding for health information technology infrastructure and support, and payment reform carries the promise of improved quality at lower cost, it is important and timely to assess the validity of EHR-derived clinical quality measures.

Data from this study suggest that clinical diagnoses for the majority of patients are documented in structured fields needed for automated quality reporting and point-of-care reminders, and that there is not a significant amount of ‘denominator loss’ when using EHR-derived measurement versus e-chart reviews. However, EHR-derived quality measurement can result in significant numerator loss, resulting in underestimates of the delivery of clinical preventive services.

It is important to understand the impact of workflows and documentation habits on EHR-derived quality measures. In this study, the majority of practices correctly documented diagnoses of hypertension and diabetes over 80% of the time, but rates of appropriate documentation for dyslipidemia and ischemic cardiovascular disease were substantially lower. Providers may be more likely to overlook chronic diseases that are documented elsewhere in the chart, a finding we commonly see in the case of obesity and active smoking. Anecdotally, providers have reported to us that they limit the number of assessments assigned to a patient at any given visit due to the historical limitation related to paper claims (some payers limit the number of assessments that can be reported in paper claims to four or fewer), which may have the unintended consequence of ‘underdocumentation’.

For laboratory tests, the presence or absence of laboratory interfaces and of the appropriate Logical Observation Identifiers Names and Codes (LOINC) creates significant variation in practice scores. Even if a practice has an electronic interface, reference laboratories do not consistently provide EHR vendors with compendiums that have LOINC codes for each test, and therefore some test orders and results remain undetected by EHR software. For practices with no electronic laboratory interface, the quality measures that rely on laboratory data can only be addressed by manually entering the results into structured fields, which few providers do.
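A minimal sketch of why uncoded results go undetected, assuming a measure query that matches laboratory results by code. The record layout and the three example results are hypothetical; 4548-4 is a LOINC code for hemoglobin A1c, used here as a one-item stand-in for whatever code list a real measure would use.

```python
# LOINC 4548-4 is a hemoglobin A1c code; a real measure would use a value set.
A1C_LOINC_CODES = {"4548-4"}

# Hypothetical lab result records as an interface might deliver them.
results = [
    {"patient_id": 1, "name": "Hemoglobin A1c", "loinc": "4548-4", "value": 6.8},
    {"patient_id": 2, "name": "HbA1c", "loinc": None, "value": 7.4},
    {"patient_id": 3, "name": "A1C (scanned fax)", "loinc": None, "value": None},
]


def detected_by_query(result):
    """A code-based query only sees results carrying a code it knows."""
    return result["loinc"] in A1C_LOINC_CODES


detected = [r["patient_id"] for r in results if detected_by_query(r)]
missed = [r["patient_id"] for r in results if not detected_by_query(r)]
print("detected:", detected)
print("missed:", missed)
```

Patient 2 has a usable numeric result, but without a code the query cannot tell that it is an A1c test, so the patient drops out of the automated count.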

In addition, for quality measures that rely on tests or procedures performed in a different office setting, the difficulty of getting the results back, and in structured form, makes it challenging to satisfy those measures. In this study, the mammography quality measure was only satisfied by the presence of a structured test result, yet most mammography results come back in the form of faxed results, necessitating an additional step by the practice to codify the results in structured form. Eventually, developments in natural language processing may help convert unstructured text results into their structured counterparts needed to satisfy quality measures and trigger clinical decision support.

Finally, some quality measures may be impacted by incorrect or imprecise logic used to code the measure. For instance, with mammography rates, the relatively low practice performance seen in this study is largely attributable to a specific flaw in the design of the EHR's quality measure. For over a year, this particular measure would only allow a mammogram result to satisfy the measure if the results were structured and the test was ordered as a ‘procedure’, and not a ‘diagnostic image’, the latter being the more consonant with provider preference.
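The effect of this kind of measure logic can be sketched as follows. The order records, field names, and the relaxed rule are all hypothetical; the source does not describe how the vendor's logic was implemented or later changed.

```python
from datetime import date, timedelta

# Hypothetical order records; "order_type" mirrors the two ways a
# mammogram could be ordered in the EHR described above.
orders = [
    {"patient_id": 1, "test": "mammogram", "order_type": "procedure",
     "structured_result": True, "date": date(2009, 6, 1)},
    {"patient_id": 2, "test": "mammogram", "order_type": "diagnostic_image",
     "structured_result": True, "date": date(2009, 3, 15)},
    {"patient_id": 3, "test": "mammogram", "order_type": "diagnostic_image",
     "structured_result": False, "date": date(2009, 5, 20)},
]


def satisfies(order, as_of, accepted_types):
    """True if the order counts as a mammogram in the 2 years before as_of."""
    recent = as_of - timedelta(days=730) <= order["date"] <= as_of
    return (order["test"] == "mammogram" and recent
            and order["structured_result"]
            and order["order_type"] in accepted_types)


as_of = date(2009, 12, 31)
# Rule as described in the text: only "procedure" orders are accepted.
flawed = {o["patient_id"] for o in orders
          if satisfies(o, as_of, {"procedure"})}
# Hypothetical relaxed rule that also accepts "diagnostic image" orders.
relaxed = {o["patient_id"] for o in orders
           if satisfies(o, as_of, {"procedure", "diagnostic_image"})}
print(sorted(flawed), sorted(relaxed))
```

Under the relaxed rule patient 2 is counted, while patient 3 is still missed because the result is not structured, which corresponds to the separate faxed-results problem discussed earlier.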

Providers uniformly scored well on documentation of aspirin therapy, influenza vaccination and blood pressure, probably due to the relative lack of options in the EHR to document these data in fields other than the designated locations. For some other measures, such as smoking status, documentation can vary widely provider to provider, including a variety of notations (eg, ‘+ smoker’, ‘+ cig’, ‘2 ppd’, ‘smoker’, and ‘+ tobacco’) and locations (eg, smart form, preventive medicine, social history). This variability in documentation preference has been shown to lead to significant variations in quality measurement depending on which fields are chosen and how much granularity is provided. The bimodal distribution of practice documentation for smoking status is probably due to the difference in whether practices used the structured fields in the smart form to satisfy the quality measure.
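To make the notation problem concrete, the sketch below flags the free-text variants quoted above with a regular expression. It is an illustration of how brittle such matching is, not a method used in the study; the pattern and function name are assumptions, and negation is handled only to the extent shown.

```python
import re

# Illustrative pattern covering the notations quoted above; a real
# free-text classifier would also need negation handling ("non-smoker").
SMOKER_PATTERN = re.compile(
    r"\+\s*(smoker|cig|tobacco)\b"   # "+ smoker", "+ cig", "+ tobacco"
    r"|\b\d+(\.\d+)?\s*ppd\b"        # "2 ppd" (packs per day)
    r"|^\s*smoker\s*$",              # bare "smoker"
    re.IGNORECASE,
)


def looks_like_current_smoker(note):
    """Heuristic flag for a social-history free-text entry."""
    return SMOKER_PATTERN.search(note) is not None


notes = ["+ smoker", "+ cig", "2 ppd", "smoker", "+ tobacco",
         "non-smoker", "denies tobacco"]
flags = [looks_like_current_smoker(n) for n in notes]
print(list(zip(notes, flags)))
```

Each new abbreviation a provider invents requires another pattern, which is one reason a structured field is easier to query than free text.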

This study has several limitations. Several practices declined to participate in the chart review; the majority of these stated that they lacked sufficient physical space for the chart reviewers to conduct their reviews. Practices electing not to participate differed from participating practices in some population characteristics, including a lower average percentage of Medicaid-insured or uninsured patients and fewer patients with diabetes and hypertension (table 1). This study also limited its chart reviews to practices that were using eClinicalWorks; therefore, the findings may not be generalizable to other EHR. In addition, the study focused on available documentation in the electronic record, and we did not conduct an audit of whether providers or practice staff actually delivered the services recorded. Separate studies will need to be conducted to ascertain whether information recorded in the EHR reflects the actual care delivered.

Finally, this study did not assess how practice characteristics, interventions by PCIP, or the use of specific EHR functionality may have impacted differences in documentation variation. These studies are being conducted separately.

Until recently, quality measures were largely derived from manual paper chart reviews, secondary analysis of claims databases, or patient surveys, as opposed to being calculated from EHR derived data. This was driven in large part by the historically low rates of EHR use nationwide and the ensuing lack of data availability, quality, and comparability. Studies comparing claims data with clinical data have noted significant disparities between the two sources,4 5 yet claims-based quality measurement has continued to be the dominant form of large-scale quality analysis because no other data sources have been readily available either as a complement or replacement. In some measures, such as breast cancer screening, the use of administrative or claims data may still be more reliable until health information exchanges are established, more broadly adopted, and can integrate multiple data sources to establish more comprehensive measures on recommended care delivery and health outcomes.

EHR offer new potential for performance measurement given that most commercially available EHR use standard dictionaries to capture information in coded forms, such as ICD for problem list and SNOMED for medications. Using these codified data, EHR can help identify patient populations and calculate a significant number of quality measures that leverage data available in the EHR.6 These measures can range from adherence to clinical guidelines to assessments of rates of clinical preventive services to rates of screening. However, EHR-derived quality measurement has limitations due to several factors, most notably variations in EHR content, structure and data format, as well as local data capture and extraction procedures.7 8

Several steps can be taken to mitigate the variability of EHR documentation. As part of PCIP's programme, providers are trained on proper documentation techniques during their initial EHR training, and then quality improvement specialists reinforce their use, but ultimately there are no mechanisms to force providers to document in a particular location in the chart. Providers need regular prompts, training and feedback to alter their documentation habits. Studies have shown that clinical decision support can help improve the quality and accuracy of documentation.9 Another way to mitigate the variability of documentation would be to include claims data to populate the EHR, thereby providing a more robust and complete profile of the patient. In addition, standards need to be developed for what needs to be documented in the various medical record components, such as a clinical encounter note or a care plan document. Much work is being done to standardize the output of EHR for use in health information exchange (eg, the continuity of care document), but few efforts are aimed at standardizing what data inputs should go into the EHR.

More studies are needed to assess the validity of EHR-derived quality measures and to ascertain which measures are best calculated using claims or administrative data or a combination of data sources. If provider-specific quality measurements are to be reported and made public, as is the plan for the meaningful use quality measures, further analysis is needed to understand the limitations of these data, particularly if they are prone to underestimation of true provider performance.

Acknowledgments

The authors would like to acknowledge Dr Farzad Mostashari for his initial concept and design of PCIP as well as his vision for the prevention-oriented EHR tools deployed in this project. They would also like to thank the participating practices and the Island Peer Review Organization staff for their dedication and many hours spent conducting chart reviews. They also wish to thank the PCIP staff for their tireless dedication to improving health.

Footnotes

Funding: This study was supported by the Agency for Healthcare Research and Quality (grant nos R18HS17059 and 17294). The funder played no role in the study design, in the collection, analysis and interpretation of data, in the writing of the report or in the decision to submit the paper for publication.

Competing interests: None.

Ethics approval: This study was approved by the Department of Health and Mental Hygiene institutional review board no 09-067.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Blumenthal D. Promoting use of health IT: why be a meaningful user? Md Med 2010;11:18–19.

2. CMS Office of Public Affairs. Fact Sheet Details for Medicare and Medicaid Health Information Technology: Title IV of the American Recovery and Reinvestment Act. 2009. http://www.cms.hhs.gov/apps/media/press/factsheet.asp?Counter=3466&intNumPerPage=10&checkDate=&checkKey=&srchType=1&numDays=3500&srchOpt=0&srchData=&keywordType=All&chkNewsType=6&intPage=&showAll=&pYear=&year=&desc=&cboOrder=date (accessed 18 Aug 2011).

3. Frieden TR, Mostashari F. Health care as if health mattered. JAMA 2008;299:950–2.

4. Jollis JG, Ancukiewicz M, DeLong ER, et al. Discordance of databases designed for claims payment versus clinical information systems. Implications for outcomes research. Ann Intern Med 1993;119:844–50.

5. Tang PC, Ralston M, Arrigotti MF, et al. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. J Am Med Inform Assoc 2007;14:10–15.

6. Roski J, McClellan M. Measuring health care performance now, not tomorrow: essential steps to support effective health reform. Health Aff (Millwood) 2011;30:682–9.

7. Kahn M, Ranade D. The impact of electronic medical records data sources on an adverse drug event quality measure. J Am Med Inform Assoc 2010;17:185–91.

8. Chan K, Fowles J, Weiner J. Electronic health records and the reliability and validity of quality measures: a review of the literature. Med Care Res Rev 2010;67:503–27.

9. Galanter WL, Hier DB, Jao C, et al. Computerized physician order entry of medications and clinical decision support can improve problem list documentation compliance. Int J Med Inform 2010;79:332–8.