Abstract

To investigate how hippocampal neurons code behaviorally salient stimuli, we recorded from neurons in the CA1 region of the hippocampus in rats while they learned to associate the presence of a sound with water reward. Rats learned to alternate between two reward ports at which, in 50% of the trials, a sound was presented, followed by water reward after a 3-s delay. Sound at the water port predicted subsequent reward delivery in 100% of the trials, and the absence of sound predicted reward omission. During this task, 40% of recorded neurons fired differently according to which of the two reward ports the rat was visiting. Smaller fractions of neurons showed onset responses to sound/nosepoke (19%) and to reward delivery (24%). When the sounds were played during passive wakefulness, 8% of neurons responded with short-latency onset responses; 25% of neurons responded to sounds played during sleep. During sleep, short-latency responses in the hippocampus were intermingled with long-lasting responses, which in the current experiment could last for 1–2 s. Based on the current findings and the results of previous experiments, we describe two types of hippocampal neuronal responses to sounds: sound-onset responses with very short latency, and longer-lasting sound-specific responses that are likely to be present when the animal is actively engaged in the task.

Keywords: auditory, sensory, stimulus, sleep, space, location, hippocampus

Introduction

The hippocampus is located at the top of the cortical hierarchy (Felleman and van Essen, 1991; Burwell et al., 1995; Burwell and Amaral, 1998; Vertes, 2006; van Strien et al., 2009) and is necessary for the formation of new episodic memories (Scoville and Milner, 1957; Aggleton and Brown, 1999; Eacott and Norman, 2004; Cipolotti and Bird, 2006). It is also necessary for the formation of spatial memory and for navigation (Morris et al., 1982). Over the last 40 years of research on the firing properties of hippocampal neurons, knowledge of the spatial variables that drive hippocampal firing has become extensive, both in its phenomenology and in its details (O'Keefe, 1976; O'Keefe and Nadel, 1978; Muller and Kubie, 1987; Kubie et al., 1990; O'Keefe and Recce, 1993; Wilson and McNaughton, 1993; O'Keefe and Burgess, 1996; Wood et al., 1999; Jensen and Lisman, 2000; Anderson and Jeffery, 2003; Wills et al., 2005; Leutgeb et al., 2005a,b; Colgin et al., 2008). Non-spatial variables are also represented by hippocampal neurons, and studies using various sensory modalities have demonstrated that hippocampal neurons respond to sounds (Berger et al., 1976; Christian and Deadwyler, 1986; Sakurai, 2002; Moita et al., 2003; Takahashi and Sakurai, 2009; Itskov et al., 2012), textures (Itskov et al., 2011), odors (Wood et al., 1999; Wiebe and Staubli, 1999, 2001; Komorowski et al., 2009), and gustatory cues (Ho et al., 2011). The majority of place-unrelated sensory responses in the hippocampus have been demonstrated in animals actively engaged in a behavioral task. We recently developed a categorization task (Itskov et al., 2011, 2012) that allowed us to demonstrate that hippocampal neurons discriminate between sounds. However, in those experiments hippocampal neurons did not respond to the sounds when they were played to an animal that was not engaged in the discrimination task.
These recordings were performed in highly overtrained animals, and it is possible that some features of the neuronal responses were absent because the same stimuli had been repeated over many thousands of trials. In the current experiment, we characterized the responses of hippocampal neurons to behaviorally relevant sounds played to rats during sleep and during awake passive listening. In the behavioral task, the sounds cued the upcoming release of a reward; outside the task, the sounds were unrelated to the animal's behavior.

Materials and methods

Ethics statement

All experiments were conducted in accordance with standards for the care and use of animals in research outlined in European Directive 2010/63/EU, and were supervised by a consulting veterinarian.

Subjects

Six male rats weighing about 350 g were housed individually and maintained on a 14 h/10 h light/dark cycle. Animals were placed on a water-restricted diet 1 day before the beginning of the experiments. To ensure that the animals did not become dehydrated as a consequence of water restriction, they were allowed to continue the behavioral testing until satiation and were given ad lib access to drinking water for 1 h after the end of each recording session. The animals' body weight and general health were monitored throughout the experiments. Of the six animals trained to perform the behavioral task, four were implanted with microdrives for chronic recordings. Neuronal data suitable for analysis were collected from two animals.

Stimuli

The stimuli were short sounds that rats perceive easily. Artificial vowels were chosen as a more “naturalistic” class of stimuli than pure tones. Artificial vowels are a simplified version of the vocalization sounds used by many species of mammals, and have been used in studies of the ascending auditory pathway from the auditory nerve (Cariani and Delgutte, 1996; Holmberg and Hemmert, 2004) to the auditory cortex (Bizley et al., 2009, 2010). The spectra of natural vowels are characterized by “formant” peaks, which result from resonances in the vocal tract of the vocalizing animal (Schnupp et al., 2011). Formant peaks therefore carry information about both the size and the configuration of the vocal tract, and human listeners readily categorize vowels according to vowel type (e.g., /a/ vs. /o/; Peterson and Barney, 1952) as well as according to speaker type (e.g., male vs. female voice) or speaker identity (Gelfer and Mikos, 2005). Many species of animals, including rats (Eriksson and Villa, 2006), chinchillas (Burdick and Miller, 1975), cats (Dewson, 1964), monkeys (Kuhl, 1991), and many bird species (Kluender et al., 1987; Dooling and Brown, 1990), readily learn to discriminate synthetic vowels.

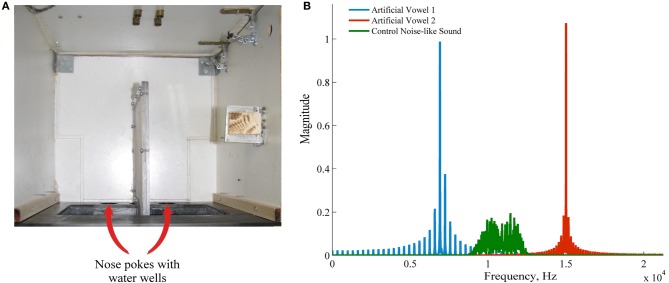

The stimuli were generated from binary click trains with a fundamental frequency of 330 Hz and a duration of 100 ms, which were bandpass filtered with a bandwidth equal to 1/50th of the formant frequency using Malcolm Slaney's Auditory Toolbox. Formant center frequencies were 6.912 and 15.008 kHz, respectively. The spectra of the stimuli are shown in Figure 1B. The formant frequencies chosen here lie within the hearing range of both rats and humans (Heffner et al., 1994). As demonstrated in our previous experiments (Itskov et al., 2012), rats can perceive and discriminate similar artificial vowels. A third, control sound, used in passive recordings as a novel stimulus, was a naturalistic noise-like sound (see its spectrum in Figure 1B). The stimuli were ramped on and off (supplementary audio files 1–2) and were presented at a sound level of 70 dB SPL from a speaker located above the experimental apparatus.
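The synthesis recipe above (click train at F0, formant-wise bandpass filtering with bandwidth = fc/50) can be sketched in pure Python as follows. This is not the Auditory Toolbox code that was actually used: the formant filter here is swapped for a standard second-order bandpass from the RBJ audio-EQ cookbook with Q = fc/bandwidth = 50, the 96 kHz sampling rate is a hypothetical choice (it only needs to exceed twice the highest formant), and onset/offset ramps and level calibration are omitted. The text does not say whether 6.912 and 15.008 kHz are two formants of one stimulus or one formant per vowel; the default below sums two parallel formant filters, which is an assumption, and passing a single frequency gives the other reading.

```python
import cmath
import math

FS_HZ = 96000  # hypothetical sampling rate; must exceed 2 x 15.008 kHz


def click_train(f0_hz, dur_s, fs_hz=FS_HZ):
    """Binary click train: a unit impulse at the start of every F0 period."""
    n = int(round(dur_s * fs_hz))
    x = [0.0] * n
    k = 0
    # Integer arithmetic keeps click positions free of floating-point drift.
    while (k * fs_hz) // f0_hz < n:
        x[(k * fs_hz) // f0_hz] = 1.0
        k += 1
    return x


def bandpass_coeffs(fc_hz, fs_hz=FS_HZ, q=50.0):
    """Second-order bandpass (RBJ cookbook, 0 dB peak gain); Q = fc / bandwidth."""
    w0 = 2.0 * math.pi * fc_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a


def biquad(b, a, x):
    """Direct-form I difference equation for one second-order section."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        y.append(yn)
        x1, x2, y1, y2 = xn, x1, yn, y1
    return y


def gain(b, a, f_hz, fs_hz=FS_HZ):
    """Magnitude of the filter's frequency response at f_hz."""
    z1 = cmath.exp(-2j * math.pi * f_hz / fs_hz)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return abs(num / den)


def make_vowel(formants_hz=(6912.0, 15008.0), f0_hz=330, dur_s=0.1, fs_hz=FS_HZ):
    """Click train passed through parallel formant filters, summed, peak-normalized."""
    src = click_train(f0_hz, dur_s, fs_hz)
    out = [0.0] * len(src)
    for fc in formants_hz:
        b, a = bandpass_coeffs(fc, fs_hz, q=50.0)  # bandwidth = fc / 50
        for i, v in enumerate(biquad(b, a, src)):
            out[i] += v
    peak = max(abs(v) for v in out)
    return [v / peak for v in out]
```

With these settings a 100 ms stimulus contains 33 clicks and 9600 samples; `gain` can be used to check that each formant filter passes its center frequency at unit gain while strongly attenuating frequencies an octave away.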

Figure 1.

Apparatus and stimuli. (A) Experimental apparatus, view from the top. Nose pokes are marked with arrows. A water well was positioned behind each nose poke. (B) Frequency spectra of the two artificial sounds used in the behavioral task (blue and red) and of the control noise-like sound used in the passive listening experiments.

Sounds were presented through a Visaton FRS 8 speaker, which has a flat frequency response (<±2 dB) between 200 Hz and 10 kHz. The speaker was driven through a standard PC sound card controlled by LabVIEW (National Instruments, Austin, TX, USA).

Apparatus

The arena for the behavioral task consisted of a dimly lit, sound-attenuated box (35 × 30 × 40 cm). One of the walls had two round holes (called “nose pokes” throughout the text; see Figure 1A). A water well was placed behind each nose poke. Infrared sensors at the edge of the nose pokes monitored the rat's entries into and exits from the holes. Outside the context of the behavioral task, passive sound exposure in the awake rat took place in an unfamiliar dark plastic bin (33 × 22 × 35 cm), which differed from the training environment in geometry, floor and wall texture, lighting, and smell. For recordings during sleep we used a tall box (26 × 20 × 60 cm) lined with thick cotton tissue. The light sensors, sound, and water delivery were controlled by a custom-written LabVIEW script operating a National Instruments card (National Instruments Corporation, Austin, TX, USA).

Surgery and electrophysiological recordings

The rats were implanted with chronic recording electrodes. They were anesthetized with a mixture of Zoletil (30 mg/kg) and Xylazine (5 mg/kg) delivered i.p. A craniotomy was made above the left dorsal hippocampus, centered 3.0 mm posterior to bregma and 2.5 mm lateral to the midline. A Neuralynx “Harlan 12” microdrive loaded with 12 tetrodes (25 μm diameter Pt/Ir wire, California Fine Wire) was mounted over the craniotomy. Tetrodes were loaded perpendicular to the brain surface and individually advanced in small steps of 40–80 μm until they reached the CA1 area, as indicated by the amplitude and shape of sharp-wave ripples. After surgery, animals were given an antibiotic (Baytril; 5 mg/kg delivered through the water bottle) and the analgesic carprofen (Rimadyl; 2.5 mg/kg, subcutaneous injection) for postoperative analgesia and prophylaxis against infection, and were allowed to recover for 7–10 days before training started.

After recovery from electrode implantation, the animals were trained while neuronal activity was recorded from the tetrode array using TDT data acquisition equipment (RZ2, Tucker-Davis Technologies, Alachua, FL, USA). We usually recorded 1–4 single units per tetrode. Spikes were presorted automatically with KlustaKwik (Harris et al., 2000), using the waveform energy on each of the four channels of the tetrode as coordinates in a four-dimensional feature space. The result of the automatic clustering was inspected visually after importing the data into MClust (kindly provided by A. D. Redish). Each single unit was recorded only in one session.
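The four-dimensional feature space described above can be illustrated with a minimal sketch that turns each tetrode spike into one energy value per channel. Energy is taken here as the L2 norm of each channel's waveform; this is one common definition and an assumption, since MClust and KlustaKwik pipelines may normalize it differently. The resulting rows are what a clustering step would receive; the clustering itself is not shown.

```python
import math


def energy_features(spike):
    """Return one energy value per tetrode channel.

    spike: four sequences of voltage samples (one per channel).
    Energy here is the L2 norm of each channel's waveform (assumed definition).
    """
    return [math.sqrt(sum(v * v for v in channel)) for channel in spike]


def feature_matrix(spikes):
    """Stack per-spike 4-D energy vectors into an (n_spikes x 4) list of rows."""
    return [energy_features(s) for s in spikes]
```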

Behavioral task

The task was designed to allow us to examine neuronal responses to behaviorally relevant auditory stimuli. We took into account the richness of location-related signals in the hippocampus, which are known to influence sensory responses recorded at different spatial locations (Itskov et al., 2012). The animal initiated each trial by poking its snout into a nose poke. During the recording sessions (referred to as “lottery”; see below), sounds were presented with 50% probability on a given nose-poke entry. Trials with and without sound presentation were compared, ensuring that the animal was in exactly the same position and motivational state in both types of trials.

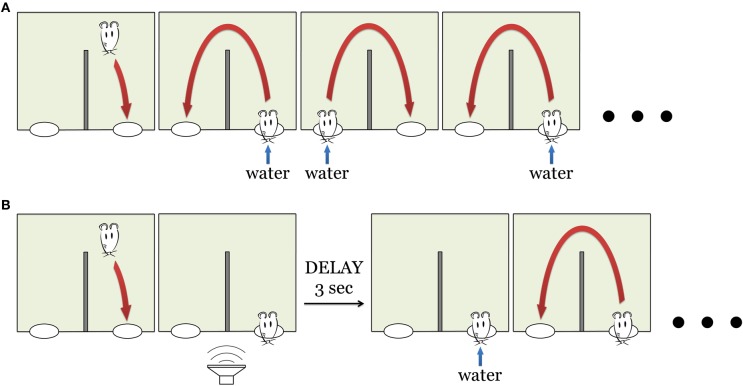

Pre-training

The day before behavioral testing, animals were placed on a water-restricted dry food diet. To accustom the animals to the set-up and the task, they were given four pre-training sessions with 100% reward probability (Figure 2A). During these four sessions the rats learned to alternate between two water spouts, collecting a reward at each site: after receiving water at one spout, the rat had to go to the second spout to receive a reward, and then back to the first spout. Attempts to collect water from the same spout twice were neither rewarded nor punished. In the first session, water was delivered immediately upon the nose poke; in each subsequent session the delay was increased by 1 s, so that by the fourth pre-training session the delay was 3 s. At this point, the rat was introduced to the main “lottery” task (see below). The rats typically started to perform the alternation task at the end of the first or the beginning of the second session. By the end of the fourth session, rats reached stable performance of ~300 trials per session (range 206–510, mean 309). No sounds were played during pre-training.

Figure 2.

Behavioral task. (A) Pre-training. The animal had to collect water reward by continuously alternating between the two nose pokes (right and left). During the first pre-training session, water was delivered instantly upon nose-poke entry. The delay between the nose poke and water delivery increased during subsequent sessions (see Materials and Methods). (B) “Lottery” task: water was now delivered in only 50% of the trials. The sequence of pictures outlines a “lucky” trial at one of the nose pokes. On “unlucky” trials there was neither sound nor reward, and the animal proceeded to the opposite nose poke (see Materials and Methods).

“Lottery”

On the fifth day of training, the reinforcement schedule was changed to increase the salience of the reward and, especially, to make the rat attend to novel sounds associated with reward. Water was now delivered on only 50% of nose pokes (not 100%, as before; Figure 2B). The rat still had to alternate between the right and left nose pokes and wait at least 3 s for water delivery. Whether the current trial would be “lucky” or “unlucky” was determined randomly by the software. On “lucky” trials, a sound was played immediately upon entry into the nose poke, predicting the upcoming reward. Two sounds were used in the behavioral task (two artificial vowels, Figure 1B), each associated with only one of the reward spouts.
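The trial logic of the “lottery” schedule can be summarized in a small sketch. The port-to-vowel labels are hypothetical, and treating a repeated poke at the same port as neither rewarded nor punished (as in pre-training) is an assumption, since the text states that rule only for pre-training; the 50% lucky probability and the 3 s reward delay are taken from the text.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels: which vowel is tied to which port is not specified.
SOUND_FOR_PORT = {"left": "vowel_A", "right": "vowel_B"}
REWARD_DELAY_S = 3.0
P_LUCKY = 0.5


@dataclass
class Trial:
    port: str
    counted: bool                  # False if the rat repeated the previous port
    lucky: bool
    sound: Optional[str]           # played at nose-poke entry on lucky trials
    reward_at_s: Optional[float]   # time of water delivery after entry


def lottery_trial(port, previous_port, rng):
    """Resolve one nose-poke entry under the 'lottery' schedule."""
    if port == previous_port:
        # Assumed to follow the pre-training rule: neither rewarded nor punished.
        return Trial(port, False, False, None, None)
    if rng.random() < P_LUCKY:
        # Lucky: port-specific sound at entry, water after the fixed delay.
        return Trial(port, True, True, SOUND_FOR_PORT[port], REWARD_DELAY_S)
    # Unlucky: no sound and no water; the rat should move to the other port.
    return Trial(port, True, False, None, None)
```

Passing a seeded `random.Random` instance as `rng` makes a simulated session reproducible.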

This task was designed so that we could examine neuronal responses to sound presentation in awake behaving animals. Importantly, on “unlucky” trials the animals decreased their waiting time over the course of the session, suggesting that the sound became associated with the water reward and that the absence of sound became a cue to go to the alternate nose poke (see Results).

Passive listening

To record hippocampal responses to sounds in a context unrelated to the behavioral task, we placed the rat in a novel environment 2–3 h after completion of the behavioral session. Three types of sound stimuli (the familiar vowel associated with the right well, the familiar vowel associated with the left well, and a novel noise-like sound) were presented pseudo-randomly, so that no sound was repeated more than two times in a row. The inter-stimulus interval varied randomly from 25 to 30 s. The sounds were presented irrespective of the animal's actions and location. Each stimulus type was presented 43 times on average. The long inter-stimulus interval was intended to reduce the animal's adaptation to stimulus repetition (Ulanovsky et al., 2004).
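A presentation schedule satisfying the two constraints above (no sound more than two times in a row; inter-stimulus interval between 25 and 30 s) can be generated as sketched below. The sound labels are hypothetical, and drawing each ISI uniformly from 25 to 30 s and each sound uniformly among the allowed ones are assumptions; the text specifies only the constraints, not the sampling procedure.

```python
import random

SOUNDS = ("vowel_right", "vowel_left", "novel_noise")  # hypothetical labels


def passive_schedule(n_trials, rng, max_run=2, isi_range_s=(25.0, 30.0)):
    """Return (onset_s, sound) pairs with no sound repeated more than max_run times in a row."""
    schedule, t = [], 0.0
    for _ in range(n_trials):
        allowed = list(SOUNDS)
        recent = [s for _, s in schedule[-max_run:]]
        if len(recent) == max_run and len(set(recent)) == 1:
            allowed.remove(recent[0])  # forbid a third consecutive repeat
        t += rng.uniform(*isi_range_s)  # ISI drawn uniformly from 25 to 30 s
        schedule.append((t, rng.choice(allowed)))
    return schedule
```

For example, `passive_schedule(129, random.Random(1))` gives roughly 43 presentations per sound type, in line with the average reported for the awake session.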

Passive sound presentation during sleep started 5 min after the animal had fallen asleep, as determined by total immobility. The animal was monitored continuously via a remote video camera. As in the session in which the rat remained awake in a novel environment, three types of sound stimuli (the familiar vowel associated with the right spout, the familiar vowel associated with the left spout, and a novel noise-like sound) were presented pseudo-randomly, so that no sound was repeated more than two times in a row. The inter-stimulus interval varied randomly from 25 to 30 s. Each stimulus type was presented 49 times on average.

To ensure the proper timing of the responses, we recorded a copy of the signal sent to the loudspeaker along with the neuronal signals.

Statistical procedures

Firing rates of hippocampal neurons typically vary over the course of a single trial, since the neurons tend to respond to different aspects of the task, and the timing and latency of the response can vary across individual cells. Taking this into account, we quantified the responses to sounds with a method intended to (1) consider multiple time points, (2) provide a single measure of the statistical significance of the effect, and (3) accommodate variability in the timescale of the responses, which is fast in some cases (on the order of tens of milliseconds; Brankack and Buzsaki, 1986) and slow (hundreds of milliseconds) in others.

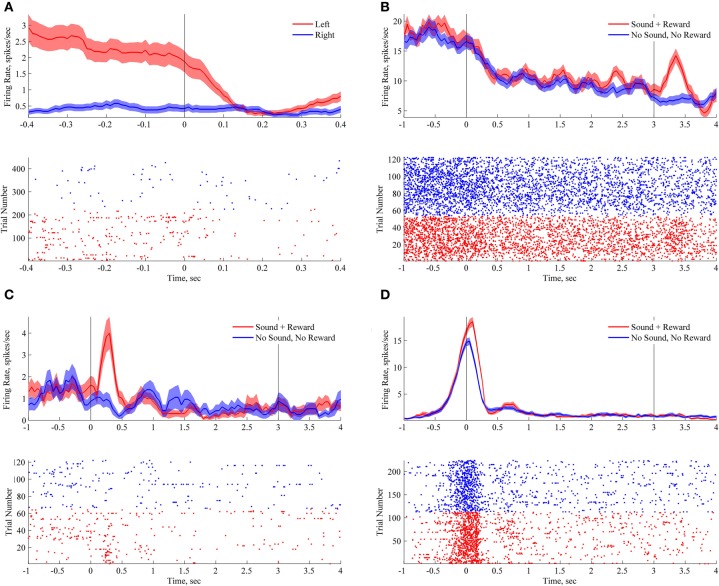

Statistical significance of neuronal responses in the behavioral task

To quantify firing-rate changes related to the animal's location in space, we measured firing rates in non-overlapping 50 ms bins during the approach to the nose pokes (see Figure 3A). Firing rates in each time bin were compared between the two nose pokes using the Wilcoxon rank-sum test for two independent samples (Siegel and Castellan, 1988), and the Benjamini–Hochberg false discovery rate correction was used to correct for multiple comparisons (FDR; Benjamini and Hochberg, 1995). A neuron was considered to distinguish between the two nose pokes, and therefore to be a “location-encoding” neuron, if any of the time bins showed a corrected p-value smaller than 0.05.
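The bin-by-bin test with FDR correction described above can be sketched in pure Python. The rank-sum p-value below uses the tie-corrected normal approximation rather than an exact test, which is a simplification (library equivalents include `scipy.stats.ranksums` and `scipy.stats.mannwhitneyu`). Each trial is assumed to be a list of 50 ms bin firing rates aligned to nose-poke entry; with the 400 ms pre-entry window mentioned in the Results, that is 8 bins per trial.

```python
import math


def average_ranks(values):
    """1-based ranks with ties given their average rank; also returns tie-group sizes."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks, ties, i = [0.0] * len(values), [], 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        ties.append(j - i + 1)
        i = j + 1
    return ranks, ties


def rank_sum_p(x, y):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation, tie-corrected)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    ranks, ties = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean = n1 * (n + 1) / 2
    var = n1 * n2 / 12 * ((n + 1) - sum(t ** 3 - t for t in ties) / (n * (n - 1)))
    if var <= 0:
        return 1.0  # all values tied: no evidence of a difference
    z = (w - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2))


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values (step-up, monotone)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted, running = [0.0] * m, 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running = min(running, pvals[i] * m / rank)
        adjusted[i] = running
    return adjusted


def is_location_encoding(left_trials, right_trials, alpha=0.05):
    """Each trial is a list of 50 ms bin firing rates aligned to nose-poke entry."""
    n_bins = len(left_trials[0])
    p = [rank_sum_p([tr[b] for tr in left_trials], [tr[b] for tr in right_trials])
         for b in range(n_bins)]
    return min(bh_adjust(p)) < alpha
```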

Figure 3.

Encoding of spatial and sensory features of the task. Each panel with a peri-stimulus histogram and a raster plot shows the responses of an individual neuron. In each plot, zero time corresponds to the nose poke, which on “lucky” trials triggered a sound. In panels B–D, the line at 3 s corresponds to reward delivery on “lucky” trials. On unlucky trials the animal always stayed in the nose poke for at least 3 s (see Results). (A) Location-responsive neuron. (B) Responses of a reward-responsive neuron at the left nosepoke. This neuron responded upon water delivery (at 3 s). (C) Responses of a sound-responsive neuron at the right nosepoke. This neuron responded to sound presentation. (D) Responses of a sound-responsive neuron at the right nosepoke. This neuron responded to the nosepoke, but fired at a higher rate when the sound was presented. All of the neurons shown had very similar robust responses at the opposite nosepoke, but with lower peak rates (data not shown). Bin size for the peri-stimulus histograms is 200 ms with 190 ms overlap.

To quantify a neuron's responses to sound presentation and water reward delivery during the behavioral task, we compared firing rates on “lucky” trials with firing rates on trials in which no sound was played and no water was delivered. To take into account the variability of the responses (e.g., fast transient or sustained responses), we used four a priori defined bin sizes (50 ms, 500 ms, 1 s, and 2 s). For the 50 ms bins, we measured the firing rate in six non-overlapping bins (0–300 ms). The Wilcoxon rank-sum test for two independent samples was used to compare firing rates, bin by bin, on “lucky” versus unrewarded trials. Benjamini–Hochberg FDR correction was used to correct for testing multiple time points (n = 6 for the 50 ms bins) and multiple bin sizes (50 ms, 500 ms, 1 s, and 2 s; Benjamini and Hochberg, 1995). A neuron was considered a “sound- or reward-encoding” neuron if any of the time bins showed a corrected p-value smaller than 0.05.
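The per-trial binning that feeds these bin-by-bin comparisons can be sketched as follows: for one trial, spike times are converted into firing rates in six 50 ms bins plus one 500 ms, one 1 s, and one 2 s bin, all starting at nose-poke entry. Collecting these rates across “lucky” and unrewarded trials gives the two samples compared by the rank-sum test. The function and parameter names are hypothetical, and how the resulting p-values are grouped for the FDR step is not shown.

```python
# (bin width in s, number of consecutive bins after onset), as listed in the text
BIN_SCHEME = ((0.05, 6), (0.5, 1), (1.0, 1), (2.0, 1))


def rates_after_onset(spike_times_s, onset_s, scheme=BIN_SCHEME):
    """Firing rates (spikes/s) in each post-onset bin of one trial, keyed by bin width."""
    rates = {}
    for width, n_bins in scheme:
        counts = [0] * n_bins
        for t in spike_times_s:
            rel = t - onset_s
            if 0.0 <= rel < width * n_bins:
                k = min(int(rel / width), n_bins - 1)  # guard against rounding at the edge
                counts[k] += 1
        rates[width] = [c / width for c in counts]
    return rates
```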

Statistical significance of sound-evoked responses in passive listening and during sleep

To quantify sound-evoked responses during passive presentation, we compared the post-stimulus firing rate with the baseline. To take into account the variability of responses, we again used four a priori defined bin sizes (50 ms, 500 ms, 1 s, and 2 s). For each analysis, the baseline firing rate was defined as the firing rate in the window immediately preceding sound onset, with a baseline window equal in length to the bin used to measure evoked activity (50 ms, 500 ms, 1 s, or 2 s).

For the smallest bin size, firing rates were calculated in 50 ms non-overlapping bins over the first 300 ms after sound onset, yielding six time points. The firing rates in each of these bins were compared with the firing rates in the 50 ms baseline (sign test for dependent measurements; Siegel and Castellan, 1988). This yielded six tests per neuron, which were corrected for multiple comparisons using FDR. Response latency was defined as the time of the first bin after sound onset with activity significantly different from baseline.
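The 50 ms stage of this analysis (six paired sign tests against the 50 ms baseline, FDR correction, then the first significant bin as the latency) can be sketched as below. The sign test is the exact two-sided binomial version with zero differences dropped, a standard convention assumed here. Reporting the latency as the (start, end) window of the first significant bin is a presentational choice; the text's phrasing (“less than 50 ms”) is consistent with reading off the bin's upper edge.

```python
import math


def sign_test_p(baseline, evoked):
    """Two-sided exact sign test for paired samples; zero differences are dropped."""
    diffs = [e - b for b, e in zip(baseline, evoked) if e != b]
    n = len(diffs)
    if n == 0:
        return 1.0
    k = min(sum(d > 0 for d in diffs), sum(d < 0 for d in diffs))
    return min(1.0, 2 * sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n)


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values (step-up, monotone)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted, running = [0.0] * m, 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running = min(running, pvals[i] * m / rank)
        adjusted[i] = running
    return adjusted


def onset_latency(baseline, post_bins, alpha=0.05, width_s=0.05):
    """baseline: per-trial rates in the 50 ms before onset.
    post_bins: six lists of per-trial rates, one per 50 ms bin after onset.
    Returns (smallest FDR-corrected p, (start_s, end_s) of first significant bin or None).
    """
    q = bh_adjust([sign_test_p(baseline, b) for b in post_bins])
    for k, qk in enumerate(q):
        if qk < alpha:
            return min(q), (k * width_s, (k + 1) * width_s)
    return min(q), None
```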

For the 500 ms bins, we compared the mean firing rate in the 500 ms baseline with the mean firing rate in the 500 ms post-stimulus interval; the 1 s and 2 s bins were treated in the same way, using 1 s and 2 s baseline and post-stimulus intervals, respectively. This yielded four p-values for each neuron: one for the 50 ms bins (already corrected for comparing multiple bins) and one each for the 500 ms, 1 s, and 2 s bins. These four p-values were corrected for multiple testing using FDR. A neuron was considered sound-responsive if any of the time bins showed a corrected p-value smaller than 0.05.
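The second stage of the procedure, measuring matched baseline and post-stimulus windows and pooling the four per-neuron p-values in one more FDR step, might look like the sketch below. Taking the 50 ms entry to be the smallest of the six already-corrected values is an assumption about what “already corrected for comparing multiple bins” means; the per-window paired test on `window_rate` values (a sign test across trials) is not repeated here.

```python
def window_rate(spike_times_s, onset_s, start_s, stop_s):
    """Mean firing rate (spikes/s) in [onset + start, onset + stop)."""
    n = sum(1 for t in spike_times_s if onset_s + start_s <= t < onset_s + stop_s)
    return n / (stop_s - start_s)


def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values (step-up, monotone)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted, running = [0.0] * m, 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running = min(running, pvals[i] * m / rank)
        adjusted[i] = running
    return adjusted


def is_sound_responsive(p50_corrected, p500, p1000, p2000, alpha=0.05):
    """Combine the four per-neuron p-values with a second FDR step.

    p50_corrected is assumed to be the smallest FDR-adjusted p of the six 50 ms bins.
    """
    q = bh_adjust([p50_corrected, p500, p1000, p2000])
    return min(q) < alpha
```

For a 500 ms analysis, for instance, the baseline of one trial would be `window_rate(spikes, onset, -0.5, 0.0)` and the evoked rate `window_rate(spikes, onset, 0.0, 0.5)`.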

For the purposes of illustration, the firing rate was calculated in a 50 ms wide window sliding in steps of 25 ms along the whole duration of the trial (see Figures 3–4).
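The sliding-window rate used for illustration can be sketched as follows, assuming each trial's spike times have already been aligned so that 0 is sound onset (or nose-poke entry). The rate in each window is the spike count pooled over trials divided by the number of trials and the window length; the window and step are parameters, so other smoothing settings can be produced with the same function.

```python
def sliding_rate(spike_times_per_trial, t_start, t_stop, window_s=0.05, step_s=0.025):
    """Trial-averaged firing rate (spikes/s) in windows [lo, lo + window_s) stepped by step_s.

    Returns (window centers, rates). Spike times are relative to the alignment event.
    """
    n_steps = int(round((t_stop - t_start - window_s) / step_s)) + 1
    n_trials = len(spike_times_per_trial)
    centers, rates = [], []
    for i in range(n_steps):
        lo = t_start + i * step_s
        hi = lo + window_s
        count = sum(1 for trial in spike_times_per_trial for t in trial if lo <= t < hi)
        centers.append(lo + window_s / 2)
        rates.append(count / (n_trials * window_s))
    return centers, rates
```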

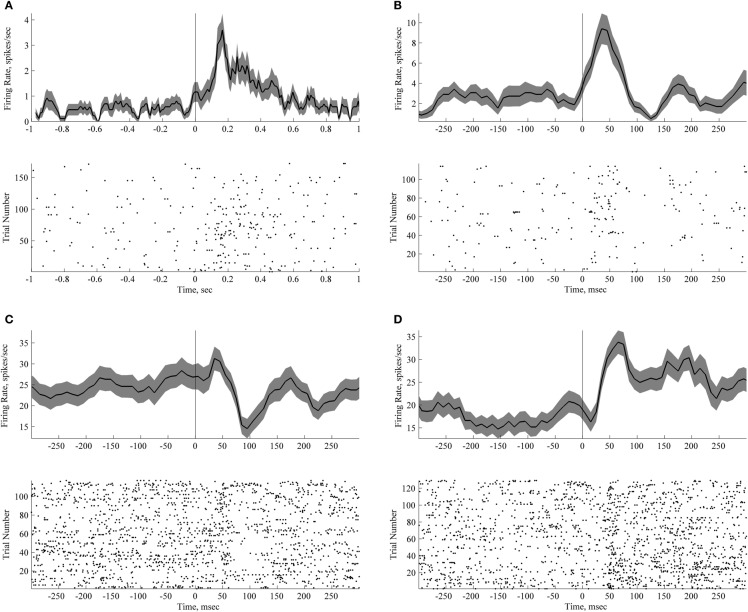

Figure 4.

Short-lasting neuronal responses to passive sound presentation during quiet wakefulness and sleep. Each panel with a peri-stimulus histogram and a raster plot shows the responses of an individual neuron. Zero time corresponds to the time of sound presentation. Trials with the three different sounds (vowel 1, vowel 2, and the novel noise-like sound) are pooled together. (A) Neuron responsive to sound presentation during passive listening. (B–D) Neurons responsive to sound presentation during sleep. Bin size for the peri-stimulus histograms is 50 ms with 40 ms overlap.

Histology

After the recording experiments were concluded, the animals were given an intraperitoneal overdose of the anesthetic urethane and transcardially perfused with 4% paraformaldehyde solution. Brains were sectioned in the coronal plane and stained with cresyl violet. Electrode tracks were localized to the CA1 field on serial sections.

Results

Behavior

This study set out to test how neurons in rat hippocampus represent auditory stimuli in the context of a behavioral task, during passive exposure and during sleep. Familiar sounds that during the training preceded water reward were played in the novel context (new enclosure) or during sleep.

Rats were given four pre-training sessions, during which they learned to alternate between two water spouts to obtain water reward (see Figure 2A and Materials and Methods). In the main experimental session (“lottery”; Figure 2B), the reward contingencies were changed: water was now given on only 50% of nosepoke entries. On these “lucky” trials, one of the sounds was played immediately upon nosepoke entry. Distinct sounds were used for each of the two nose pokes (see Figure 1B and Materials and Methods).

Neuronal representation of the behavioral task

In awake rats, hippocampal neurons exhibit strong spatial selectivity: they encode the animal's position, head direction, and other spatial variables (O'Keefe and Dostrovsky, 1971; reviewed in Moser et al., 2008). Consistent with the existing literature, the neurons recorded in this task were significantly sensitive to the animal's location in space. Forty-one percent of tested neurons (24 of 58) discriminated between the right and left nosepokes (see Figure 3A; p < 0.05, corrected, rank-sum test on firing rates in the 400 ms before the nose pokes; see Materials and Methods).

On “lucky” trials (50% of trials, selected randomly), the rats heard a sound upon entering the nosepoke and received water reward after a 3 s delay. Two different sounds were used at the two different locations (nose pokes). On “unlucky” trials (50% of trials), the rat heard no sound and received no water reward (see Figure 2B and Materials and Methods). The rats kept their snouts still in the nosepoke while waiting during the 3 s between nosepoke entry/sound onset and water delivery. The average waiting time on no-sound, no-reward trials was 5.09 ± 0.19 s (SEM), and waiting time decreased over the course of the session (p < 0.001, paired-sample t-test). To examine responses to sound and reward, we aligned neuronal activity to nosepoke entry and compared the firing rates observed in the two types of trials, separately for the two water wells. The responses are illustrated in Figures 3B–D; the line at 3 s denotes reward delivery. Of the 58 cells recorded in the task, 11 responded in the first 300 ms after sound presentation (19%; p < 0.05, rank-sum tests in 50 ms windows, corrected for testing six time points and four different bin sizes; see Materials and Methods). Fourteen (24%) responded upon reward delivery (Figure 3B). No cells responded to both sound and reward.

Passive sound exposure

The aim of this experiment was to characterize the representation of sound identity during passive listening. We played three different sounds to the animals outside the context of the behavioral task, in a novel box. Two of the sounds were the familiar artificial vowels associated with water at the two nosepokes, and the third was a dissimilar, novel bandpass-filtered noise stimulus. Our intention was to compare the responses to familiar, behaviorally relevant sounds with the responses to a novel, behaviorally irrelevant one. The animals were placed in a novel context 24 h after they performed the “lottery” behavioral task. Sounds were triggered with no relation to the animal's posture or movement, in a random sequence lasting approximately 50 min, with an inter-stimulus interval ranging randomly from 25 to 30 s. The rats did not respond to the sounds with any overt behavior.

Three of the 25 neurons recorded during awake passive conditions were classified as sound-sensitive (see Materials and Methods; corrected p-values were 0.0029, 0.0000016, and 0.01). The responses are illustrated in Figure 4A. Response latencies to passive sound presentation were less than 150 ms in two cases and less than 50 ms in the third (see Figure 4A). Two of the neurons significantly increased their firing rate in response to the sound, and one decreased it. It would be interesting to know whether the neurons that responded to sounds were also responsive to events occurring within the task, such as sounds, reward, or locations. Although we were able to keep the same neurons across recording sessions, as evidenced by the neuronal waveforms, autocorrelations, and firing rates, the number of recorded neurons does not allow us to make conclusive statements about single-neuron properties across time and context. None of the three sound-responsive neurons discriminated between the different sounds; that is, their responses to the different sounds were identical.

Auditory responses during sleep

During sleep sessions, the rats were placed in a small familiar box in which they soon curled up and fell asleep. The animals' activity was monitored remotely via a webcam placed above the box. Five minutes after an animal curled up and fell asleep, the sound stimuli were turned on. The experimenter monitored the animal's sleep status online throughout, and sound presentation did not cause the animals to wake up. The sounds were presented for as long as the animals were asleep (117–180 times, 49 presentations per sound type on average) and were turned off when they awoke. Only trials in which the sounds did not cause any visible behavioral response from the sleeping animal were analyzed further.

The responses to sounds during sleep are illustrated in Figures 4B–D and Figure 5. Twenty-four percent of recorded neurons (8 of 33) exhibited significant sound-evoked responses. Across neurons, latency ranged from 50 to 150 ms. In five cases the firing rate decreased after sound presentation; in three cases it increased.

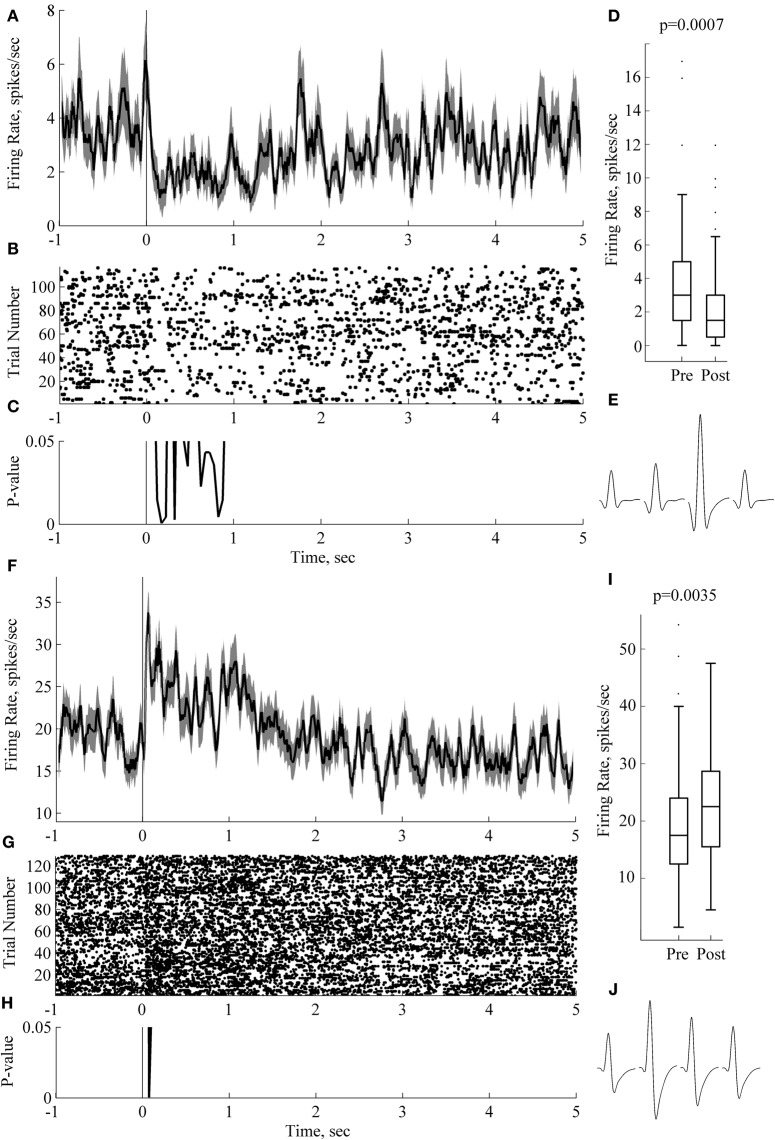

Figure 5.

Sound-evoked responses during sleep can last for over 1 s. Panels (A–E) and (F–J) depict the responses and the results of statistical analysis for two individual neurons recorded from two rats. Panels (A, F) show peri-stimulus time histograms (PSTHs; 0 ms corresponds to sound onset; trials with the three sounds are pooled together); (B, G) show raster plots on the same time scale as the PSTHs. Panels (C, H) show p-values (sign test for dependent measures, 50 ms windows). Box plots in panels (D, I) compare the 2 s baseline and post-stimulus periods. On each box, the central mark is the median, the edges of the box are the 25th and 75th percentiles, and the whiskers extend to the most extreme data points not considered outliers; outliers are plotted individually as dots. p-values are indicated in the inset (paired-sample sign test). Panels (E, J) depict average waveforms on each of the four channels of the tetrode for the corresponding neuron. Spike width (at 25% of maximum spike amplitude) and firing rate for the neuron in panels (A–E) are 0.2 ms and 1.8 Hz, respectively; for the neuron in panels (F–J), 0.2 ms and 1.8 Hz, respectively. Bin size for panels (A, C, F, H) is 50 ms with 40 ms overlap.

Figure 5 illustrates neurons with significant differences between baseline and post-stimulus activity lasting more than 1 s, even when considered in short 50 ms bins (e.g., black line in Figure 5C). In some cases, an initial time-locked response was followed by a long, sustained response (Figures 5A–D).

In five cases we observed significant differences between the mean firing rate in the 2 s post-stimulus window and the baseline (p < 0.05, corrected). The neuron in Figure 5 (panels F–J) illustrates such a case: a short-latency onset response was followed by a sustained, long-lasting response (Figure 5F). Panel I plots the average firing rate in 2 s pre- and post-stimulus windows; the p-value is indicated in the inset (p = 0.0035, sign test for dependent measures). Both neurons depicted in this figure had narrow spikes (Figures 5E,J), 0.2 ms when measured at 25% of spike amplitude. Both are putative interneurons, given their narrow spike width and the absence of a 3–4 ms peak in the autocorrelation plot (data not shown). Unfortunately, the limited size of our dataset does not allow us to draw further conclusions on this issue.
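Spike width at 25% of the peak amplitude, as used above to flag putative interneurons, can be measured as sketched below: find the largest-magnitude sample, walk outward until the waveform falls below 25% of that peak, and linearly interpolate the two crossing points. Using a single channel (e.g., the tetrode channel with the largest spike) and linear interpolation are assumptions; `fs_hz` must be the sampling rate of the stored waveforms.

```python
def spike_width_ms(waveform, fs_hz, frac=0.25):
    """Width of the main spike peak at frac x peak amplitude, in ms (linear interpolation)."""
    peak_i = max(range(len(waveform)), key=lambda i: abs(waveform[i]))
    sign = 1.0 if waveform[peak_i] >= 0 else -1.0
    s = [sign * v for v in waveform]  # flip so the main peak is positive
    level = frac * s[peak_i]
    # Walk left to the last sample at or above the level, then interpolate the crossing.
    i = peak_i
    while i > 0 and s[i - 1] >= level:
        i -= 1
    left = float(i) if i == 0 else (i - 1) + (level - s[i - 1]) / (s[i] - s[i - 1])
    # Walk right in the same way.
    j = peak_i
    while j < len(s) - 1 and s[j + 1] >= level:
        j += 1
    right = float(j) if j == len(s) - 1 else j + (s[j] - level) / (s[j] - s[j + 1])
    return (right - left) / fs_hz * 1000.0
```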

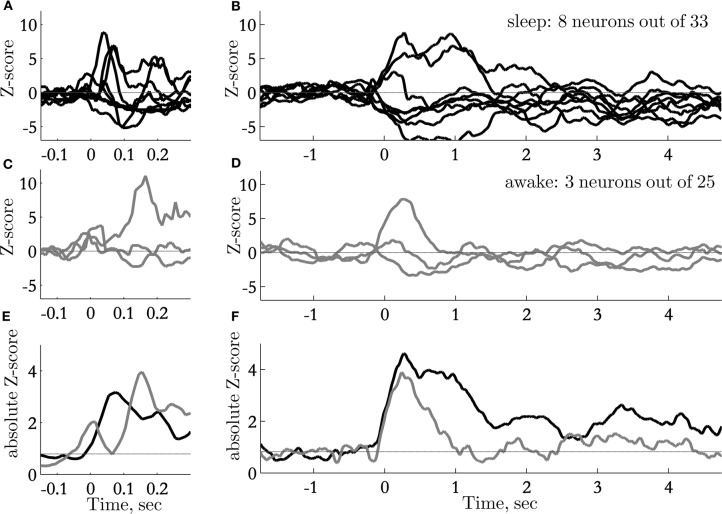

Long-lasting responses to sounds were unique to recordings made during sleep (Figure 6). Responses to sounds during sleep were on average almost twice as long as responses to sounds presented during passive wakefulness (Figure 6F), and they appeared slightly earlier (Figure 6E).

Figure 6.

Time course of sound-evoked responses: transient and sustained responses. (A) Normalized PSTHs of neurons with significant responses on a fast time scale (window size 50 ms), recorded during sleep; (B) normalized PSTHs of neurons with significant responses on a slow time scale (window size 500 ms), recorded during sleep; (C) normalized PSTHs of neurons with significant responses on a fast time scale (window size 50 ms), recorded during awake passive listening; (D) normalized PSTHs of neurons with significant responses on a slow time scale (window size 500 ms), recorded during passive listening. Of 25 neurons, only three showed small but significant responses; (E, F) absolute values of normalized PSTHs (pooled data from neurons that significantly increased or decreased their firing rate). The black trace represents the average of neurons recorded during sleep (n = 8 of 33); the gray trace is the mean activity of neurons responsive during awake conditions (n = 3 of 25).

Most cells did not discriminate between the different sounds. Comparing responses to the three sounds (two artificial vowels and the novel noise-like sound), we found only one neuron with significant sound identity-dependent responses (p = 0.0101, Kruskal–Wallis test).
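For exactly three stimulus groups, the Kruskal–Wallis test reduces to a short computation, because the chi-square approximation with 2 degrees of freedom has survival function exp(-H/2). The sketch below computes the tie-corrected H from pooled ranks; the inputs would be a neuron's post-stimulus firing rates split by sound. It uses the usual asymptotic approximation, and `scipy.stats.kruskal` provides a general library version.

```python
import math


def average_ranks(values):
    """1-based ranks with ties given their average rank; also returns tie-group sizes."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks, ties, i = [0.0] * len(values), [], 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        ties.append(j - i + 1)
        i = j + 1
    return ranks, ties


def kruskal_wallis_3(groups):
    """Tie-corrected Kruskal-Wallis H and p for exactly three groups.

    With three groups, H is referred to a chi-square distribution with 2 degrees
    of freedom, whose survival function is exp(-H / 2).
    """
    if len(groups) != 3:
        raise ValueError("this shortcut is valid only for three groups")
    values = [v for g in groups for v in g]
    n = len(values)
    ranks, ties = average_ranks(values)
    h, start = 0.0, 0
    for g in groups:
        r = sum(ranks[start:start + len(g)])  # rank sum of this group
        h += r * r / len(g)
        start += len(g)
    h = max(12.0 / (n * (n + 1)) * h - 3.0 * (n + 1), 0.0)  # clamp rounding noise
    correction = 1.0 - sum(t ** 3 - t for t in ties) / (n ** 3 - n)
    if correction <= 0:
        return 0.0, 1.0  # every value tied
    h /= correction
    return h, math.exp(-h / 2.0)
```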

Taken together, these results indicate that a subset of hippocampal neurons showed sound-evoked responses during awake passive listening, and that one quarter of recorded neurons showed reliable sound-evoked responses during sleep. Short-latency responses (less than 50 ms) were observed alongside long-lasting sustained activity.

Discussion

In this study we show that a significant subset of hippocampal neurons responds to sounds presented passively to a rat during both quiet wakefulness and sleep. During sleep, the responses were characterized by a sharp, short-latency onset and, in some cases, by long-lasting sustained activity. These responses were as strong for the sounds associated with water reward as for the novel sounds that had never been played to the animal before.

The absence of neuronal responses to sound stimuli outside the categorization task (Itskov et al., 2012) seems at first glance inconsistent with the results of the current experiment, in which hippocampal neurons responded to sound stimuli presented during passive wakefulness. We think that this discrepancy may be explained by differences in the behavioral task and experimental conditions. In the former study, highly familiar sounds (used during 4–5 weeks of training) were played in a familiar context after the animal had quenched its thirst and was sitting quietly in the apparatus. In the present study, the animals were thirsty during passive presentation and were placed in a completely novel, dim environment, which could have facilitated attention to the auditory stimuli. Under these conditions we observed strong sound-evoked responses during passive listening.

Thus, three differences could explain the discrepancy between these two results: the motivational state of the animal (thirsty/satiated), the familiarity of the stimuli, and the familiarity of the context.

Short-latency responses to sounds in the hippocampus have been described previously (Brankack and Buzsaki, 1986). That study reported evoked potentials of extremely short latency, with the first component around 27 ms after click onset, and neuronal responses of similar latency (see their Figure 5). Several reports have demonstrated that stimulus-elicited responses appear in hippocampal neurons around 80 ms after stimulus onset (Christian and Deadwyler, 1986; Moita et al., 2003). Probably even more important is the similarity between our behavioral paradigm and those used by these authors: the use of naive animals (Moita et al., 2003) or the lack of overtraining due to fast learning of a simple behavioral task (Christian and Deadwyler, 1986). Unfortunately, response specificity to individual sound stimuli cannot be compared across these studies, since the authors did not consider this feature in their experimental design. In addition, a study that used tactile stimuli in anesthetized and in awake, naive, passively stimulated rats (Pereira et al., 2007) found similar short-latency responses to trigeminal nerve shock. In over-trained, behaving animals, tactile responses have longer latencies (Itskov et al., 2011). Furthermore, stimulus-specific responses to behaviorally relevant sensory stimuli can persist for at least several seconds after the stimulus has disappeared, as demonstrated in a tactile-guided task (Itskov et al., 2011).

Based on our observations, we suggest that there might be two types of hippocampal neuronal responses to sensory stimuli:

Short-latency responses (latencies from ~27 ms to tens of milliseconds). These responses are absent in over-trained and/or satiated animals, suggesting that they might disappear when the animal expects the stimuli.

Long-latency responses. These responses are likely to be evoked by behaviorally relevant stimuli. This second type of hippocampal response is likely to encode object identity; for example, such responses are specific to particular behaviorally relevant sounds (Itskov et al., 2012) and textures (Itskov et al., 2011). We posit that these responses are associated with the behavioral “meaning” of the sound.

The evoked responses in the CA1 region may be generated directly by perforant path terminals originating in the entorhinal cortex, or polysynaptically via the Schaffer collaterals. The origin of this short-latency signal in the entorhinal cortex is not known. Previous work suggests that it is unlikely to be of cortical origin, because bilateral ablation of the somatosensory cortex had no effect on the amplitude or the depth distribution of tooth-pulp-evoked responses in the hippocampus (Brankack and Buzsaki, 1986).

The long-latency responses might be routed to the hippocampus through the same entorhinal pathway, or perhaps through the medial prefrontal cortex and thalamus, whose contributions remain, to the best of our knowledge, unexplored. Long-latency responses are likely to originate from higher-level cortices that perform more elaborate “object-related” rather than sensory-feature processing. Stimulus-specific representations can persist in the hippocampus for at least several seconds after the offset of a behaviorally relevant stimulus (Itskov et al., 2011). This memory trace could be maintained by sustained activity, as previously described in the entorhinal cortex (Egorov et al., 2002), or by other cellular or network mechanisms in higher-order associative cortices or in the hippocampus itself.

Hippocampal responses during sleep

It has recently been demonstrated that re-exposure to olfactory and auditory stimuli during sleep can enhance memory for specific events associated with those stimuli (Rasch et al., 2007; Rudoy et al., 2009). Rasch and co-authors (2007) cued new memories in humans during sleep with an odor that had been presented as context during prior learning, and showed that this manipulation enhanced subjects' performance. Re-exposure to the odor during slow-wave sleep improved the retention of hippocampus-dependent declarative memories but not of hippocampus-independent procedural memories. Re-exposure was ineffective during rapid eye movement sleep or wakefulness, or when the odor had been omitted during prior learning. Consistent with these findings, functional magnetic resonance imaging revealed significant hippocampal activation in response to odor re-exposure during slow-wave sleep. Rudoy and co-authors (2009) trained participants to associate each of 50 unique object images with a location on a computer screen before a nap. Each object was paired with its characteristic sound (e.g., a cat with a meow and a kettle with a whistle). Presenting the sounds during sleep resulted in better memory performance. Interestingly, the memory enhancement was specific to the cued objects and did not generalize to objects whose corresponding sounds were not played during sleep. Average electroencephalogram (EEG) amplitudes measured over the interval from 600 to 1000 ms after sound onset were larger when there was less forgetting.

In the present study we investigated hippocampal responses to re-exposure to behaviorally relevant sounds during wakefulness and sleep. We found short-latency responses to sound presentation during sleep, as well as long-lasting (more than 1 s) sustained responses. The latter finding might be related to the sustained EEG response that Rudoy et al. (2009) found after presenting auditory stimuli to humans during sleep. Rasch et al. (2007) reported an increased BOLD signal in the hippocampus after presentation of the odors. Our results suggest that, in addition to the previously shown local field potential changes and short-latency onset responses, long-lasting changes in neuronal activity contribute to the stimulus-evoked responses observed in previous studies. Further studies are needed to clarify how exactly the presentation of relevant sensory cues during sleep facilitates hippocampus-dependent memory.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are grateful to Fabrizio Manzino and Marco Gigante for technical support and to Francesca Pulecchi for help with histological procedures. This work was supported by the Human Frontier Science Program (contract RG0041/2009-C), the European Research Council Advanced grant CONCEPT, the European Union (contracts BIOTACT-21590 and CORONET), and the Compagnia San Paolo.

References

- Aggleton J. P., Brown M. W. (1999). Episodic memory, amnesia, and the hippocampal-anterior thalamic axis. Behav. Brain Sci. 22, 425–444; discussion 444–489.

- Anderson M. I., Jeffery K. J. (2003). Heterogeneous modulation of place cell firing by changes in context. J. Neurosci. 23, 8827–8835.

- Benjamini Y., Hochberg Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B 57, 289–300.

- Berger T. W., Alger B., Thompson R. F. (1976). Neuronal substrate of classical conditioning in the hippocampus. Science 192, 483–485. 10.1126/science.1257783

- Bizley J. K., Walker K. M., King A. J., Schnupp J. W. (2010). Neural ensemble codes for stimulus periodicity in auditory cortex. J. Neurosci. 30, 5078–5091. 10.1523/JNEUROSCI.5475-09.2010

- Bizley J. K., Walker K. M., Silverman B. W., King A. J., Schnupp J. W. (2009). Interdependent encoding of pitch, timbre, and spatial location in auditory cortex. J. Neurosci. 29, 2064–2075. 10.1523/JNEUROSCI.4755-08.2009

- Brankack J., Buzsaki G. (1986). Hippocampal responses evoked by tooth pulp and acoustic stimulation: depth profiles and effect of behavior. Brain Res. 378, 303–314. 10.1016/0006-8993(86)90933-9

- Burdick C. K., Miller J. D. (1975). Speech perception by the chinchilla: discrimination of sustained. J. Acoust. Soc. Am. 58, 415–427. 10.1121/1.380686

- Burwell R. D., Amaral D. G. (1998). Cortical afferents of the perirhinal, postrhinal, and entorhinal cortices of the rat. J. Comp. Neurol. 398, 179–205.

- Burwell R. D., Witter M. P., Amaral D. G. (1995). Perirhinal and postrhinal cortices of the rat: a review of the neuroanatomical literature and comparison with findings from the monkey brain. Hippocampus 5, 390–408. 10.1002/hipo.450050503

- Cariani P. A., Delgutte B. (1996). Neural correlates of the pitch of complex tones. I. Pitch and pitch salience. J. Neurophysiol. 76, 1698–1716.

- Christian E. P., Deadwyler S. A. (1986). Behavioral functions and hippocampal cell types: evidence for two nonoverlapping populations in the rat. J. Neurophysiol. 55, 331–348.

- Cipolotti L., Bird C. M. (2006). Amnesia and the hippocampus. Curr. Opin. Neurol. 19, 593–598. 10.1097/01.wco.0000247608.42320.f9

- Colgin L. L., Moser E. I., Moser M. B. (2008). Understanding memory through hippocampal remapping. Trends Neurosci. 31, 469–477. 10.1016/j.tins.2008.06.008

- Dewson J. H., 3rd. (1964). Speech sound discrimination by cats. Science 144, 555–556. 10.1126/science.144.3618.555

- Dooling R. J., Brown S. D. (1990). Speech perception by budgerigars (Melopsittacus undulatus): spoken vowels. Percept. Psychophys. 47, 568–574.

- Eacott M. J., Norman G. (2004). Integrated memory for object, place, and context in rats: a possible model of episodic-like memory? J. Neurosci. 24, 1948–1953. 10.1523/JNEUROSCI.2975-03.2004

- Egorov A. V., Hamam B. N., Fransen E., Hasselmo M. E., Alonso A. A. (2002). Graded persistent activity in entorhinal cortex neurons. Nature 420, 173–178. 10.1038/nature01171

- Eriksson J. L., Villa A. E. (2006). Learning of auditory equivalence classes for vowels by rats. Behav. Processes 73, 348–359. 10.1016/j.beproc.2006.08.005

- Felleman D. J., van Essen D. C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1–47.

- Gelfer M. P., Mikos V. A. (2005). The relative contributions of speaking fundamental frequency and formant frequencies to gender identification based on isolated vowels. J. Voice 19, 544–554. 10.1016/j.jvoice.2004.10.006

- Harris K. D., Henze D. A., Csicsvari J., Hirase H., Buzsaki G. (2000). Accuracy of tetrode spike separation as determined by simultaneous intracellular and extracellular measurements. J. Neurophysiol. 84, 401–414.

- Heffner H. E., Heffner R. S., Contos C., Ott T. (1994). Audiogram of the hooded Norway rat. Hear. Res. 73, 244–247.

- Ho A. S., Hori E., Nguyen P. H., Urakawa S., Kondoh T., Torii K., Ono T., Nishijo H. (2011). Hippocampal neuronal responses during signaled licking of gustatory stimuli in different contexts. Hippocampus 21, 502–519. 10.1002/hipo.20766

- Holmberg M., Hemmert W. (2004). “Auditory information processing with nerve-action potentials,” in 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing (Montreal, QC, Canada), IV-193–IV-196.

- Itskov P. M., Vinnik E., Diamond M. E. (2011). Hippocampal representation of touch-guided behavior in rats: persistent and independent traces of stimulus and reward location. PLoS ONE 6:e16462. 10.1371/journal.pone.0016462

- Itskov P. M., Vinnik E., Honey C., Schnupp J. W., Diamond M. E. (2012). Sound sensitivity of neurons in rat hippocampus during performance of a sound-guided task. J. Neurophysiol. 107, 1822–1834. 10.1152/jn.00404.2011

- Jensen O., Lisman J. E. (2000). Position reconstruction from an ensemble of hippocampal place cells: contribution of theta phase coding. J. Neurophysiol. 83, 2602–2609.

- Kluender K. R., Diehl R. L., Killeen P. R. (1987). Japanese quail can learn phonetic categories. Science 237, 1195–1197. 10.1126/science.3629235

- Komorowski R. W., Manns J. R., Eichenbaum H. (2009). Robust conjunctive item-place coding by hippocampal neurons parallels learning what happens where. J. Neurosci. 29, 9918–9929. 10.1523/JNEUROSCI.1378-09.2009

- Kubie J. L., Muller R. U., Bostock E. (1990). Spatial firing properties of hippocampal theta cells. J. Neurosci. 10, 1110–1123.

- Kuhl P. K. (1991). Human adults and human infants show a “perceptual magnet effect” for the prototypes of speech categories, monkeys do not. Percept. Psychophys. 50, 93–107.

- Leutgeb J. K., Leutgeb S., Treves A., Meyer R., Barnes C. A., McNaughton B. L., Moser M. B., Moser E. I. (2005a). Progressive transformation of hippocampal neuronal representations in “morphed” environments. Neuron 48, 345–358. 10.1016/j.neuron.2005.09.007

- Leutgeb S., Leutgeb J. K., Barnes C. A., Moser E. I., McNaughton B. L., Moser M. B. (2005b). Independent codes for spatial and episodic memory in hippocampal neuronal ensembles. Science 309, 619–623. 10.1126/science.1114037

- Moita M. A., Rosis S., Zhou Y., LeDoux J. E., Blair H. T. (2003). Hippocampal place cells acquire location-specific responses to the conditioned stimulus during auditory fear conditioning. Neuron 37, 485–497. 10.1016/S0896-6273(03)00033-3

- Morris R., Garrud P., Rawlins J., O'Keefe J. (1982). Place navigation impaired in rats with hippocampal lesions. Nature 297, 681–683.

- Moser E. I., Kropff E., Moser M. B. (2008). Place cells, grid cells, and the brain's spatial representation system. Annu. Rev. Neurosci. 31, 69–89. 10.1146/annurev.neuro.31.061307.090723

- Muller R. U., Kubie J. L. (1987). The effects of changes in the environment on the spatial firing of hippocampal complex-spike cells. J. Neurosci. 7, 1951–1968.

- O'Keefe J. (1976). Place units in the hippocampus of the freely moving rat. Exp. Neurol. 51, 78–109. 10.1016/0014-4886(76)90055-8

- O'Keefe J., Burgess N. (1996). Geometric determinants of the place fields of hippocampal neurons. Nature 381, 425–428. 10.1038/381425a0

- O'Keefe J., Dostrovsky J. (1971). The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 34, 171–175. 10.1016/0006-8993(71)90358-1

- O'Keefe J., Nadel L. (1978). The Hippocampus as a Cognitive Map. Oxford: Clarendon Press.

- O'Keefe J., Recce M. L. (1993). Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 3, 317–330. 10.1002/hipo.450030307

- Pereira A., Ribeiro S., Wiest M., Moore L. C., Pantoja J., Lin S. C., Nicolelis M. A. (2007). Processing of tactile information by the hippocampus. Proc. Natl. Acad. Sci. U.S.A. 104, 18286–18291. 10.1073/pnas.0708611104

- Peterson G. E., Barney H. L. (1952). Control methods used in a study of the vowels. J. Acoust. Soc. Am. 24, 175.

- Rasch B., Buchel C., Gais S., Born J. (2007). Odor cues during slow-wave sleep prompt declarative memory consolidation. Science 315, 1426–1429. 10.1126/science.1138581

- Rudoy J. D., Voss J. L., Westerberg C. E., Paller K. A. (2009). Strengthening individual memories by reactivating them during sleep. Science 326, 1079. 10.1126/science.1179013

- Sakurai Y. (2002). Coding of auditory temporal and pitch information by hippocampal individual cells and cell assemblies in the rat. Neuroscience 115, 1153–1163. 10.1016/S0306-4522(02)00509-2

- Schnupp J., Nelken I., King A. (2011). Auditory Neuroscience: Making Sense of Sound. Cambridge, MA: MIT Press.

- Scoville W. B., Milner B. (1957). Loss of recent memory after bilateral hippocampal lesions. J. Neurol. Neurosurg. Psychiatry 20, 11–21.

- Siegel S., Castellan N. J. (1988). Nonparametric Statistics for the Behavioral Sciences. New York, NY: McGraw-Hill.

- Takahashi S., Sakurai Y. (2009). Sub-millisecond firing synchrony of closely neighboring pyramidal neurons in hippocampal CA1 of rats during delayed non-matching to sample task. Front. Neural Circuits 3:9. 10.3389/neuro.04.009.2009

- Ulanovsky N., Las L., Farkas D., Nelken I. (2004). Multiple time scales of adaptation in auditory cortex neurons. J. Neurosci. 24, 10440–10453. 10.1523/JNEUROSCI.1905-04.2004

- van Strien N. M., Cappaert N. L., Witter M. P. (2009). The anatomy of memory: an interactive overview of the parahippocampal-hippocampal network. Nat. Rev. Neurosci. 10, 272–282. 10.1038/nrn2614

- Vertes R. P. (2006). Interactions among the medial prefrontal cortex, hippocampus and midline thalamus in emotional and cognitive processing in the rat. Neuroscience 142, 1–20. 10.1016/j.neuroscience.2006.06.027

- Wiebe S. P., Staubli U. V. (1999). Dynamic filtering of recognition memory codes in the hippocampus. J. Neurosci. 19, 10562–10574.

- Wiebe S. P., Staubli U. V. (2001). Recognition memory correlates of hippocampal theta cells. J. Neurosci. 21, 3955–3967.

- Wills T. J., Lever C., Cacucci F., Burgess N., O'Keefe J. (2005). Attractor dynamics in the hippocampal representation of the local environment. Science 308, 873. 10.1126/science.1108905

- Wilson M. A., McNaughton B. L. (1993). Dynamics of the hippocampal ensemble code for space. Science 261, 1055–1058. 10.1126/science.8351520

- Wood E. R., Dudchenko P. A., Eichenbaum H. (1999). The global record of memory in hippocampal neuronal activity. Nature 397, 613–616. 10.1038/17605