Summary

Economists and cognitive psychologists have long known that prior rewards bias decision making in favor of options with high expected value. Accordingly, value modulates the activity of sensorimotor neurons involved in initiating movements towards one of two competing decision alternatives. However, little is known about how value influences the acquisition and representation of incoming sensory information, or about the neural mechanisms that track the relative value of each available stimulus to guide behavior. Here, fMRI revealed value-related modulations throughout spatially selective areas of the human visual system (including V1) in the absence of overt saccadic responses. These modulations are primarily associated with the reward history of each stimulus and not with self-reported estimates of stimulus value. Finally, subregions of frontal and parietal cortex represent the differential value of competing alternatives and may provide signals to bias spatially-selective visual areas in favor of more valuable stimuli.

Moment-to-moment commerce with the world requires efficiently acquiring and evaluating incoming sensory information in order to guide adaptive motor interactions with the environment. While a good deal of research has focused on understanding how the quality of sensory information affects this decision process (Gold and Shadlen, 2007; Shadlen and Newsome, 2001), non-sensory factors – such as the prior reward history of a stimulus – also exert a strong influence on behavior and on neural activity in cortical areas that guide motor responses. For example, Platt and Glimcher (1999) recorded from oculomotor neurons in the lateral intraparietal area (LIP) during a saccadic decision making task in which a monkey had to choose between a red and a green stimulus; although the spatial position of each stimulus was unpredictable from trial to trial, the reward associated with each alternative was known in advance (e.g. 0.1ml juice reward paired with the green target, and 0.2ml paired with the red target). Even when sensory and motor factors were perfectly controlled, the firing rate of LIP neurons scaled with the value of the stimulus in their response field (see also, Dorris and Glimcher, 2004; Glimcher, 2003; Sugrue et al., 2004). Similar value-related signals have been recorded from oculomotor neurons in the superior colliculus (Basso and Wurtz, 1997, 1998; Dorris and Munoz, 1998; Ikeda and Hikosaka, 2003), supplementary eye fields (Amador et al., 2000), posterior cingulate cortex (PCC, McCoy et al., 2003), and dorsolateral prefrontal cortex (DLPFC, Barraclough et al., 2004; Leon and Shadlen, 1999).

While these studies reveal that value influences activity in a distributed network of oculomotor areas, many questions remain unaddressed. First, neurons in most previous studies were specifically selected for their saccadic response properties, so the influence of value on neural activity in other areas of the visual system is not well understood (although see Haenny et al., 1988; Haenny and Schiller, 1988; Shuler and Bear, 2006). Second, it is unclear if reward-related modulations are driven primarily by the reward history of each available item, or if modulations are instead driven by the observer's overt assessment of reward probability (henceforth referred to as subjective value). Finally, little work has been done to examine the control mechanisms that are involved in biasing spatially selective areas of visual cortex in favor of more valuable stimuli.

To address these questions, I employed human observers, functional magnetic resonance imaging (fMRI), and a paradigm that required selecting one of two spatially separated targets that varied in value across the course of the experimental session. In order to measure value-related modulations within spatially selective areas of visual cortex in the absence of saccadic responses, observers maintained central fixation throughout the task and made manual button-press responses to indicate their choices. To separately assess the influence of prior rewards and subjective-value, the ‘value’ of each choice was estimated using a quantitative model based solely on reward history and also by acquiring subjective ratings from observers on a trial-by-trial basis. Finally, a whole-brain group analysis identified regions of frontal and parietal cortex in which activation levels increased monotonically as the differential value between the two alternatives increased. These signals are consistent with control regions that act to bias activation levels within spatially selective areas of the visual system so that more valuable stimuli win representation at the expense of less valuable alternatives.

Results

In the behavioral task, 14 human observers tried to maximize the amount of reward they earned by choosing one of two visually presented colored alternatives (a ‘red’ choice and a ‘green’ choice, see Figure 1a, task based on Sugrue et al., 2004; Corrado et al., 2005). Rewards were assigned to each color in an independent and stochastic manner, with the average red/green reward ratio changing unpredictably after every two blocks of trials (selected from the set {1:1, 1:3, 3:1}). Once a reward was assigned to a particular color, the reward remained available until that color was selected; therefore, the probability of earning a reward increased as a function of the time since that color was last chosen. In addition, rewards were never given on trials in which an observer switched between alternatives (referred to as a change over delay, or COD, see Corrado et al., 2005; Sugrue et al., 2004). Unsignaled changes in the reward ratio and reward persistence encouraged exploratory choices, and observers eventually selected each color roughly in proportion to the reward earned from that alternative, consistent with Herrnstein's matching law (de Villiers and Herrnstein, 1976; Glimcher, 2005; Herrnstein, 1961). Observers did tend to select the red stimulus slightly more often than the green stimulus, although the deviation was modest and only significant when the red/green reward ratio was 1:3 (leftmost bar in Figure 1b, t(13)=2.57, p<.01). Since eye movements can modulate activity in nearly all visual areas, observers in the present study indicated their choices via manual button press responses so that value-related modulations could be assessed in the absence of saccades (all observers were instructed and trained to maintain central fixation throughout the task, which was verified using eye tracking during scanning in 6 observers, see Supplemental Figures 1-4).
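The reward schedule described above (independent stochastic assignment, persistence of an assigned reward until its color is chosen, and the changeover delay) can be sketched in a few lines of Python. This is only an illustrative sketch, not the code used in the experiment: the function name `simulate_schedule`, the per-trial assignment probabilities `p_red` and `p_green`, and the decision to leave an assigned reward in place on a switch trial are all assumptions made for the example.

```python
import random


def simulate_schedule(choices, p_red, p_green, seed=0):
    """Return a 0/1 reward outcome for each choice in a 'red'/'green' sequence.

    p_red and p_green are hypothetical per-trial probabilities that a new
    reward is assigned to each color (e.g. in a 1:3 or 3:1 ratio).
    """
    rng = random.Random(seed)
    assigned = {"red": False, "green": False}
    p_assign = {"red": p_red, "green": p_green}
    outcomes = []
    previous = None
    for choice in choices:
        # Rewards are assigned to each color independently; once assigned,
        # a reward stays available until that color is selected.
        for color in assigned:
            if not assigned[color] and rng.random() < p_assign[color]:
                assigned[color] = True
        is_switch = previous is not None and choice != previous
        if is_switch:
            # Changeover delay (COD): switch trials are never rewarded.
            # Assumption: the assigned reward is left in place.
            rewarded = 0
        else:
            rewarded = int(assigned[choice])
            assigned[choice] = False  # reward collected (or none was there)
        outcomes.append(rewarded)
        previous = choice
    return outcomes


if __name__ == "__main__":
    sequence = ["red", "red", "green", "green", "red", "red"]
    print(simulate_schedule(sequence, p_red=0.3, p_green=0.1))
```

Because an unclaimed reward persists, the chance that a color pays off grows with the time since it was last chosen, which is what encourages occasional exploratory choices of the leaner alternative.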

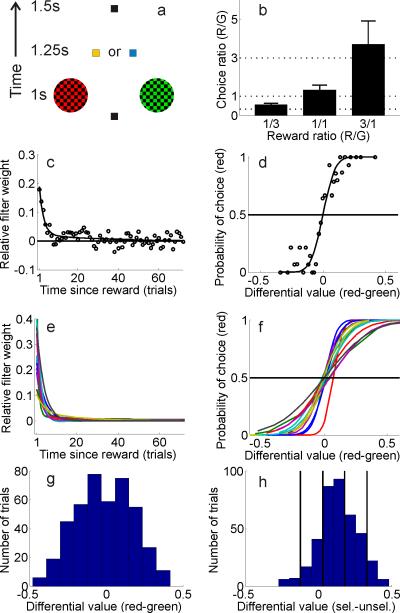

Figure 1. Behavioral paradigm and model for estimating value.

(a) Schematic of trial sequence in behavioral task; 1.25s after the observer chose the red or green stimulus, the fixation point changed to yellow to indicate a reward, or blue to indicate no reward (see text for more details). (b) Mean red/green choice ratios across all observers for scans with a 1:3, 1:1, or 3:1 average red/green reward ratio (indicated by dotted lines). (c) Filter (open circles) and exponential approximation (solid line) relating reward history and choice for a single observer. (d) Relationship between differential value of the red and green stimuli and the probability of choosing red for the same single observer (solid line is best fitting cumulative normal). (e) Best fitting exponential filter relating reward history and choice for each of the 14 observers (as in panel c). (f) Best fitting cumulative normal relating differential value and choice for each of the 14 observers (as in panel d, see also Supplemental Table 1). (g) Sample distribution of differential value for the same single observer shown in panels (c,d). (h) Same data as in panel (g), but resorted so that differential value is now defined as the value of the unselected stimulus subtracted from the value of the selected stimulus, collapsed across red and green alternatives. The solid vertical lines show the boundaries for sorting trials into 5 bins based on differential value for this observer.

The first step in examining the influence of value in spatially-selective regions of visual cortex was to use reward history to estimate the value of each stimulus on a trial-by-trial basis. Intuitively, the stimulus that is associated with the most rewards per unit time should be assigned a higher value and should be selected more often. Thus, in a stationary environment where average reward ratios are fixed, an observer should perfectly integrate all past rewards to compute the value of each stimulus. On the other hand, if reward ratios change rapidly (say every few trials) an observer should only consider rewards earned in the very recent past. In the present experiment where reward ratios changed every 144 trials (every 2 blocks or ‘scans’, with each scan containing 72 trials), the optimal strategy falls somewhere in between these two extremes.

To estimate how previous rewards influenced the choice behavior of each observer, a filter was analytically derived to describe the relationship between reward history and choices (see Corrado et al., 2005). This filter approximates the average reward history of the selected stimulus and thus describes the relationship between a reward earned n trials in the past and choice on the current trial (although see Supplemental Experimental Procedures section describing the model for important additional details). The filter derived for each subject was well approximated by either a weighted sum of two exponential functions (11/14 observers) or a single exponential function (3/14 observers), indicating that recent rewards influenced choices more than distant rewards (Figures 1c,e, Supplemental Table 1). Using an exponential function as an approximation was simply a way to characterize the shape of the filters derived for each subject. However, the general analysis approach is capable in principle of recreating arbitrarily shaped functions, so no strong a priori assumptions about shape were imposed (Corrado et al., 2005).

Given a function that describes how past rewards influence choice, the value of each stimulus was estimated on a trial-by-trial basis by convolving an observer's exponential filter with a vector describing the reward history of each stimulus (a vector of 0's and 1's marking unrewarded and rewarded trials, respectively). Thus, if a choice to the red stimulus was rewarded on the previous trial, then red would be assigned a relatively high value on the current trial. Conversely, if red was last rewarded approximately 5 or more trials in the past, then its value on the current trial would be relatively low (mean estimated filter weights across subjects were significantly greater than zero for previous 5 choices, all t's(13)>3.57, all p's<.01). Since the goal of the task was to maximize rewards obtained over the course of the experimental session, observers should logically choose the more valuable of the two stimuli on each trial (where ‘value’ in this case is determined solely by recent rewards). Accordingly, behavioral choices are strongly predicted by the differential value of the red and green stimuli on each trial (Figures 1d,f, Corrado et al., 2005).
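The value computation described above can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions rather than the model actually fitted here (see Corrado et al., 2005 and the Supplemental Experimental Procedures for that): the function names, the time constants `tau_fast` and `tau_slow`, the mixing weight `weight_fast`, and the normalization of the filter to unit sum are all hypothetical choices made for the example.

```python
import math


def exponential_filter(n_lags, tau_fast, tau_slow=None, weight_fast=1.0):
    """Filter weights for rewards earned 1..n_lags trials in the past.

    With tau_slow=None this is a single exponential; otherwise it is a
    weighted sum of two exponentials. Weights are normalized to sum to 1
    (an assumption made here for convenience).
    """
    weights = []
    for lag in range(1, n_lags + 1):
        w = weight_fast * math.exp(-lag / tau_fast)
        if tau_slow is not None:
            w += (1.0 - weight_fast) * math.exp(-lag / tau_slow)
        weights.append(w)
    total = sum(weights)
    return [w / total for w in weights]


def stimulus_value(reward_history, weights):
    """Convolve a 0/1 reward history with the filter.

    The value on trial t depends only on trials before t:
    value[t] = sum over k of weights[k - 1] * reward_history[t - k].
    """
    values = []
    for t in range(len(reward_history)):
        v = 0.0
        for k, w in enumerate(weights, start=1):
            if t - k < 0:
                break
            v += w * reward_history[t - k]
        values.append(v)
    return values


def differential_value(red_rewards, green_rewards, weights):
    """Trial-by-trial value of red minus value of green."""
    red = stimulus_value(red_rewards, weights)
    green = stimulus_value(green_rewards, weights)
    return [r - g for r, g in zip(red, green)]


if __name__ == "__main__":
    w = exponential_filter(n_lags=10, tau_fast=2.0, tau_slow=8.0, weight_fast=0.7)
    red = [1, 0, 0, 1, 0, 0, 0, 0]    # 1 = red choice rewarded on that trial
    green = [0, 0, 1, 0, 0, 0, 0, 0]  # 1 = green choice rewarded on that trial
    print(differential_value(red, green, w))
```

In this sketch a reward earned on the immediately preceding trial contributes the largest weight, and its contribution decays as the reward recedes into the past, which mirrors the qualitative shape of the filters in Figures 1c,e.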

To evaluate the predictive power of the model, data from five out of six scans were used to estimate the cumulative normal distribution that best approximates the relationship between differential value and choice probabilities (see e.g. solid line in Figure 1f, Supplemental Experimental Procedures). The height of the cumulative normal at a given differential value was then used to estimate the probability of choosing red on each trial in the sixth scan; when the estimate was greater than .5, the model guessed that the observer selected red, otherwise the model guessed that the observer selected green. This procedure was iterated across all six unique permutations of holding one scan out, and on average the model predicted observers' choices on 79% (±0.2% S.E.M.) of the trials. This predictive accuracy is reasonably high given the inherently stochastic nature of human decision making, and is comparable to estimates derived using highly trained non-human primates (Corrado et al., 2005, see also Supplemental Experimental Procedures for an additional metric of model performance and Daw et al., 2006; Lau and Glimcher, 2005; Lee, 2006; Samejima et al., 2005; Seo and Lee, 2007 for other approaches to modeling stimulus value).
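The hold-one-scan-out procedure can be sketched as follows. The fitting method is an assumption: a coarse grid-search maximum-likelihood fit stands in for whatever optimizer was actually used (the Supplemental Experimental Procedures describe the real fit), and the function names and candidate grids `mus` and `sigmas` are hypothetical.

```python
import math


def cumulative_normal(x, mu, sigma):
    """Probability of choosing red at differential value x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def fit_cumulative_normal(diff_values, chose_red, mus, sigmas):
    """Grid-search maximum-likelihood fit (an illustrative stand-in)."""
    best = None
    for mu in mus:
        for sigma in sigmas:
            log_lik = 0.0
            for x, c in zip(diff_values, chose_red):
                p = cumulative_normal(x, mu, sigma)
                p = min(max(p, 1e-9), 1.0 - 1e-9)  # avoid log(0)
                log_lik += math.log(p if c else 1.0 - p)
            if best is None or log_lik > best[0]:
                best = (log_lik, mu, sigma)
    return best[1], best[2]


def leave_one_scan_out_accuracy(scans, mus, sigmas):
    """scans: list of (diff_values, chose_red) pairs, one pair per scan.

    For each scan, fit on the remaining scans, then guess 'red' whenever
    the fitted curve exceeds .5 and 'green' otherwise.
    """
    accuracies = []
    for held_out in range(len(scans)):
        train_x, train_c = [], []
        for i, (x, c) in enumerate(scans):
            if i != held_out:
                train_x.extend(x)
                train_c.extend(c)
        mu, sigma = fit_cumulative_normal(train_x, train_c, mus, sigmas)
        test_x, test_c = scans[held_out]
        hits = sum(
            (cumulative_normal(x, mu, sigma) > 0.5) == bool(c)
            for x, c in zip(test_x, test_c)
        )
        accuracies.append(hits / len(test_x))
    return sum(accuracies) / len(accuracies)
```

With six scans per observer, `leave_one_scan_out_accuracy` iterates over the six ways of holding one scan out and returns the mean proportion of correctly predicted choices.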

Spatially selective areas in left visual cortex receive sensory input primarily from the right visual field and areas in right visual cortex receive sensory input primarily from the left visual field. This contralateral stimulus-to-cortex mapping permitted a measurement of the blood oxygenation level dependent (BOLD) response evoked separately by each stimulus on every trial because the two stimuli projected to visual areas in opposite cortical hemispheres. Spatially selective areas in early visual cortex (occipital areas V1, V2v, V3v, and hV4) were identified using standard retinotopic mapping procedures (Engel et al., 1994; Sereno et al., 1995) and independent functional localizer scans were used to find the most selective voxel in each of these areas that responded to the region of space occupied by each stimulus in the main experimental task (see Supplemental Experimental Procedures). Spatially selective regions of the intraparietal sulcus (IPS, the putative homolog of monkey LIP) and frontal eye fields (FEF) were identified using a delayed-saccade task in which observers made eye movements to a remembered spatial location (Supplemental Figures 5,6, and Supplemental Table 2, Saygin and Sereno, 2008; Sereno et al., 2001).

Trials were first sorted into 5 equally spaced bins based on the differential value between the selected and unselected stimuli, collapsed across color (see Figures 1g,h). Then, the amplitude of the evoked BOLD response within each value bin was estimated within visual areas contralateral to the selected stimulus as well as contralateral to the unselected stimulus (using a general linear model, or GLM). Responses assigned to like conditions were averaged across hemispheres since no significant differences were observed between left and right visual areas. Overall, selected stimuli evoked larger BOLD responses than unselected stimuli (Figure 2a-g, repeated measures ANOVA comparing magnitude of response evoked by selected and unselected stimuli, collapsed across differential value levels and visual areas: F(1,13)=56.7, p<.001). However, the difference between responses evoked by selected and unselected stimuli increased as differential value increased (repeated measures ANOVA comparing responses evoked by selected vs. unselected stimuli at each differential value level, collapsed across visual areas: F(4,52)=7.5, p<.001). The qualitative pattern shown in Figure 2g was observed in most visual areas, with the exception of V3v (three-way repeated-measures ANOVA with visual area, selected vs. unselected stimuli, and differential value as factors did not approach significance F(20,260)=1.0, p=0.44). In addition, the interaction shown in Figure 2g was still significant when ‘switch’ trials were removed from the data set, confirming that the pattern was not simply driven by low-value choices that never earned a reward (switch trials were never rewarded due to the COD, F(4,52)=3.35, p<.025). Finally, the interaction shown in Figure 2 is unlikely to have been driven by the lateralized manual button-press responses that were required (see Supplemental Results).
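The sorting step at the start of this analysis can be sketched in Python. The sketch assumes that "equally spaced" means equal-width bins spanning each observer's observed range of differential value; the function names are hypothetical, and the value inputs stand for per-trial estimates from a reward-history model.

```python
def selected_minus_unselected(red_value, green_value, chose_red):
    """Differential value re-expressed relative to the choice.

    For each trial, returns the value of the selected stimulus minus the
    value of the unselected stimulus, collapsing across color.
    """
    return [
        (r - g) if c else (g - r)
        for r, g, c in zip(red_value, green_value, chose_red)
    ]


def equal_width_bins(values, n_bins=5):
    """Assign each value a bin index 0..n_bins-1 using equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    labels = []
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        labels.append(min(idx, n_bins - 1))  # the maximum falls in the top bin
    return labels


if __name__ == "__main__":
    red = [0.6, 0.1, 0.3, 0.5]
    green = [0.2, 0.4, 0.3, 0.1]
    chose_red = [1, 0, 1, 0]
    dv = selected_minus_unselected(red, green, chose_red)
    print(equal_width_bins(dv))
```

Trials sharing a bin index would then be grouped into one condition per bin when estimating response amplitudes contralateral to the selected and unselected stimuli.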

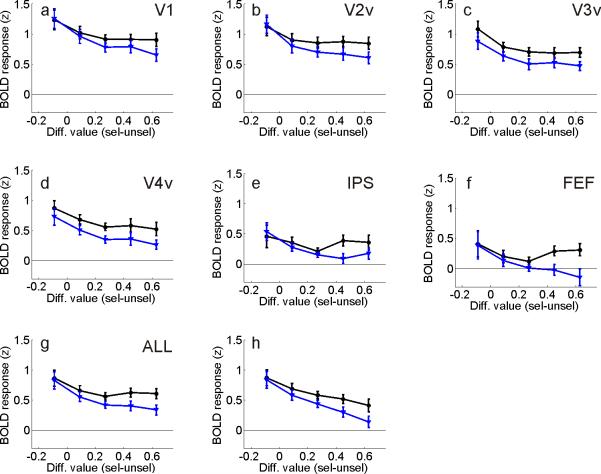

Figure 2. Influence of differential value in spatially selective regions of visual cortex.

(a-f) Influence of differential value on BOLD responses in each identified subregion of visual cortex and mean across all visual areas (g). Overall responses evoked by selected stimuli (black lines) and unselected stimuli (blue lines) diverged as differential value increased. (h) Same as panel (g) after including a regressor marking the onset time of all earned rewards.

When present, rewards were signaled 1.25s after the offset of the stimulus display; therefore, it is possible that the value-related modulations reported in Figure 2 were influenced by signals that occurred after the experience of a reward. To test this account, an additional GLM was performed that included a regressor to explicitly account for variance in the BOLD signal related to the presentation of rewards. Just as in Figure 2g, the difference between responses evoked by selected and unselected stimuli increased as differential value increased, demonstrating that the value-related modulations were not caused solely by a bias in the number of rewards received in each differential value bin (Figure 2h, F(4,52)=7.9, p<.001; the same general pattern was observed across the visual hierarchy as the three-way interaction with visual area, selected vs. unselected stimuli, and differential value as factors did not approach significance even when the reward regressor was included, F(20,260)=1.1, p=0.38).

As shown in Figure 2g, the overall amplitude of the BOLD responses tended to decrease slightly as the differential value between selected and unselected stimuli increased (repeated measures ANOVA for main effect of differential value after collapsing across selected and unselected stimuli, F(4,52)=6.6, p<.001). A speculative explanation for this general decrease in activation levels holds that observers spent longer making their decisions when the two alternatives were of approximately equal value (low differential value, leftmost points in Figure 2g) compared to when one alternative was far more valuable than the other (high differential value, rightmost points in Figure 2g). If true, then the longer decision times may have contributed to larger overall BOLD responses due to time-on-task effects (D'Esposito et al., 1997). Ideally, decision time could be indirectly inferred by examining response times (RTs); however, RTs were not meaningful in the main experiment because observers were required to respond after the offset of the stimuli. Therefore, in a separate behavioral study we tested for a relationship between decision time and differential value: stimuli were presented for only 100ms and observers (n=5) had to respond as quickly as possible. RTs systematically decreased as differential value increased, consistent with the ‘decision time’ explanation (mean RTs decreased from 611ms to 518ms, see Supplemental Figure 7, F(4,16)=5.9, p<.005). However, these RT differences cannot account for the interaction between differential value and the magnitude of the response evoked by selected and unselected stimuli since BOLD responses were measured for selected and unselected stimuli on the same trials (thus decision times were equated between corresponding points along the black and blue lines in Figure 2g).

Reward history or subjective value?

At least two accounts of the value-related modulations reported in Figure 2 can be considered. First, rewards may influence activation levels via a mechanism that integrates the prior reward history of each stimulus over a time scale that is appropriate to guide behavior. Second, value-based modulations might be driven by overt estimates of the probability of earning a reward for making a particular choice (referred to here simply as subjective-value). To distinguish these two accounts, observers were asked to report the subjective value of the selected stimulus on a trial-by-trial basis [on a scale ranging from 1 (low value – unlikely to yield a reward) to 3 (high value – likely to yield a reward)]. These subjective-value ratings were collected in the scanner at the same time that the observers selected the red or the green alternative (e.g. pressing button ‘3’ with your left hand would indicate that you are selecting the left stimulus and that you subjectively rate your choice as ‘high value’). Overall, there was a significant correlation between these subjective ratings and stimulus-value as estimated using the quantitative model described above that only took into account reward history (which will henceforth be referred to exclusively as differential value to distinguish the model-based metric from the subjective-value ratings, Figure 3a, one-way repeated measures ANOVA: F(2,26)=21.2, p<0.001). However, this relationship was driven primarily by a tendency to rate ‘switch’ trials – which never yielded a reward because of the COD – as having a low subjective value. There was no difference between the differential value levels associated with subjective value ratings of ‘2’ and ‘3’ (t(13)=0.31, p=0.76). 
Consistent with this observation, removing switch trials from the data set substantially attenuated the relationship between subjective and differential value (Figure 3b, although the relationship was still significant, one-way repeated measures ANOVA: F(2,26)=4.02, p<0.05; additional two-way repeated measures ANOVA comparing magnitude of relationship between subjective and differential value with and without switch trials: F(2,26)=7.51, p<0.005, revealing significant difference between panels 3a, 3b). Thus, observers seemed to be aware of the fact that switch trials never produced a reward, but otherwise subjective value ratings were not strongly correlated with differential value, indicating some degree of independence in these measures.

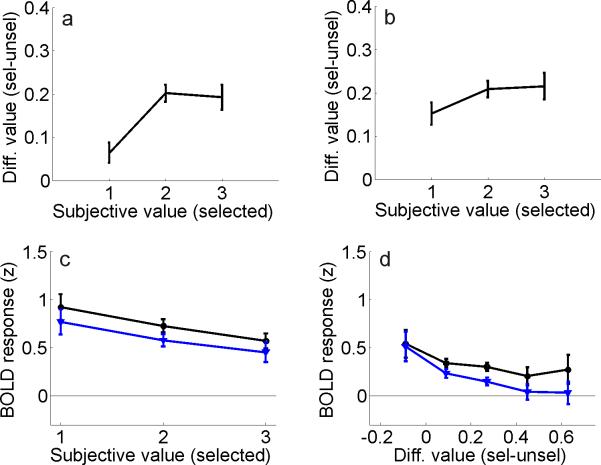

Figure 3. Influence of subjective value in spatially selective regions of visual cortex.

(a) Relationship between differential value and the reported subjective-value of the selected stimulus (where 1 indicates low subjective value, and 3 indicates high subjective value). (b) Same function as in panel (a), but with switch trials removed from the analysis. (c) Same data as in Figure 2g, but re-sorted to show activation levels evoked by selected (black) and unselected (blue) stimuli as a function of subjective value ratings (averaged across all visual areas, See Supplemental Figure 8 for data from individual visual areas). The difference between responses evoked by selected and unselected stimuli did not vary as the subjective value of the selected stimulus increased. (d) BOLD responses sorted by differential value (as in Figure 2g), but computed based only on data from scans with a 1:1 red/green reward ratio. Error bars reflect ±S.E.M. across observers.

If the modulations reported in Figure 2 were driven by subjective estimates of value, then higher subjective value ratings should be associated with relatively large BOLD responses. On the other hand, if the modulations in Figure 2 were driven primarily by the reward history of each item – and not by an overt representation of perceived value – then there should be little correspondence between relative cortical activation levels and subjective-value ratings. As shown in Figure 3c, selected stimuli did evoke larger overall responses than unselected stimuli, demonstrating sensitivity to modulations of the BOLD response induced by selection (repeated measures ANOVA testing main effect of selected vs. unselected stimuli, collapsed across differential value levels and visual areas: F(1,13)=40.3, p<0.001; note that these are the same data shown in Figure 2g, just sorted differently, so the larger responses associated with selected stimuli are fully expected). However, the difference between responses evoked by selected and unselected stimuli did not increase as the subjective-value of the selected stimulus increased, as would be expected if estimates of subjective value were driving the modulations shown in Figure 2 (see Figure 3c, two-way repeated measures ANOVA comparing responses evoked by selected and unselected stimuli at each subjective value level: F(2,26)=0.08, p=0.92, see also Supplemental Figure 8 for data from each visual area). A reanalysis of the data using a GLM with an additional regressor marking the onset time of all rewards (as in Figure 2h) did not alter this null result (F(2,26)=0.1, p=0.91).

The failure to find a relationship between subjective-value and relative activation levels suggests that trial-by-trial changes in the perceived probability of earning a reward were not driving the modulations reported in Figure 2. However, it is possible that observers generated a global estimate of subjective value on a scan-by-scan basis, and that this sustained valuation biased cortical activation in favor of the choice alternative that was more likely to be rewarded over the course of an entire scan. For example, observers might place more value on the red stimulus during scans in which the red stimulus was 3 times more likely to yield a reward than the green stimulus. In turn, such sustained biases in subjective value may have contributed to the graded modulations shown in Figure 2 if high differential trials were primarily pulled from scans in which one stimulus was rewarded more frequently than the other (i.e. scans with a red/green reward ratio of 3:1 or 1:3 as opposed to scans with a reward ratio of 1:1). To evaluate this possibility, observers were asked to report which color was more valuable at the end of each scan (using a two-alternative forced choice procedure). The more valuable color was reported with a high degree of accuracy following scans in which there was a strong bias in the reward ratio (mean correct guess rate ±S.E.M.: 89% ±5% on 3:1 and 1:3 red/green reward ratio scans). Thus, observers were generally aware of the global reward ratios that were in effect on a scan-by-scan basis.

To determine if this awareness of global reward ratios was responsible for generating the modulations shown in Figure 2, the influence of differential value on visual cortex was re-evaluated using only scans in which the average reward ratio was 1:1 and sustained biases in subjective value should be minimized. Regions of interest in IPS and FEF were excluded from this analysis because they were only identified in one hemisphere for many observers (see Supplemental Table 2); therefore, there was not enough data to estimate a reliable response from these regions when considering only scans with a 1:1 reward-ratio (which reduced the size of the data set by 2/3). However, reasonably robust estimates were available for all observers from areas V1-hV4 after collapsing across corresponding regions in the left and right hemisphere; the difference between responses evoked by selected and unselected stimuli increased as differential value increased, just as in Figure 2g (Figure 3d, repeated measures ANOVA comparing responses evoked by selected vs. unselected stimuli at each differential value level: F(4,52)=2.93, p<0.05). The observation of value-based modulations even when the global probability of reward for each alternative was fixed and equated suggests that sustained biases in subjective-value that may have occurred on a scan-by-scan basis cannot account for the modulations shown in Figure 2.

Representing differential value outside of spatially-selective visual cortex

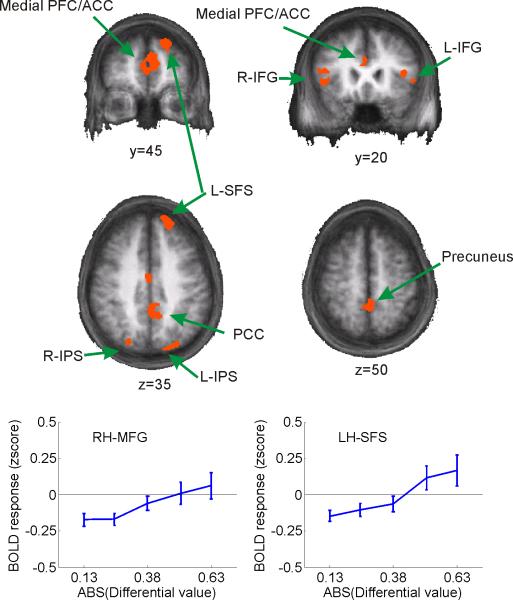

The spatially-selective visual areas described in the previous section – by definition – represent stimuli in one circumscribed region of the visual scene (although they may also represent many different visual features of stimuli from within that circumscribed region, even in higher-order areas like IPS, e.g. Sereno and Maunsell, 1998; Toth and Assad, 2002). Since spatial position is independent of differential value in this task, and since the spatial position of each stimulus is unknown at the start of each trial, it stands to reason that modulations in spatially-selective regions of visual cortex are driven by biasing signals that are generated elsewhere. In the context of the present task, a putative control region should exhibit weak biasing signals when differential value is low and spatially selective areas of visual cortex represent each alternative equally (see Figure 2g). In contrast, strong biasing signals should be observed when differential value is high and relative activation levels in spatially-selective areas of visual cortex favor the more valuable alternative (for similar logic in the domain of perceptual decision making, see e.g. Heekeren et al., 2004; Mazurek et al., 2003). A whole-brain random effects group analysis was therefore used to identify regions in which activation levels increased as the absolute value of the differential value between the stimuli increased. To help ensure that these activation patterns reflected the influence of prior rewards as opposed to the actual presentation of a reward, an additional regressor was included that marked the onset time of all rewards (the same approach that was used to generate data in Figure 2h). Figure 4 shows the locus of each activation, including bilateral regions of inferior and middle frontal gyrus (IFG/MFG), left superior frontal sulcus (SFS), medial frontal cortex (MFC), posterior cingulate cortex (PCC), and bilateral intraparietal sulcus/inferior parietal lobe (IPS/IPL, see Table 1).
The identified regions of left and right IPS were just lateral and inferior to the average location of the spatially-selective regions of IPS identified using the saccadic localizer task (see Table 1 and Supplemental Table 2). In addition, none of these regions showed a positive response to the presentation of a reward (all p's>0.78) or to the spatial position of the selected stimulus (all p's>0.24 for main effect of spatial location).

Figure 4. Whole-brain analysis revealing representations of differential value outside of spatially-selective regions of visual cortex.

Regions showing a monotonic rise in BOLD activation level with increasing differential value between selected and unselected stimuli (see Table 1). Note that the x-axis on the line plots represents the absolute value of the differential value. Line plots shown only to provide a graphical representation of the monotonic rise of BOLD activation levels; no additional inferences should be drawn based on these plots as the statistical contrast used to identify the areas predetermined the shape of the functions and the size of the error bars (which reflect ±S.E.M. across observers).

Table 1.

Coordinates and volume of regions showing increased activation with increased differential value. T-value refers to the significance of the linear trend relating the amplitude of the BOLD response with increasing differential value (see Figure 4, coordinates from the atlas of Talairach and Tournoux, 1988).

| Region | t(13) | Mean X | Mean Y | Mean Z | Std X | Std Y | Std Z | Size (mL) |
|---|---|---|---|---|---|---|---|---|
| RH IFG | 3.42* | 40 | 1.4 | -7.8 | 7.4 | 4.2 | 3.9 | 0.92 |
| RH MFG | 3.38* | 43 | 21 | 9.3 | 3.3 | 1.9 | 6 | 0.62 |
| RH IPS-Anterior | 3.36* | 40 | -66 | 23 | 3.7 | 5.1 | 2.8 | 1.48 |
| RH IPS-Posterior | 3.43* | 21 | -77 | 36 | 2.1 | 2.6 | 4 | 0.51 |
| LH MFG | 3.64** | -41 | 20 | 9.7 | 4 | 2.9 | 4.3 | 0.58 |
| LH SFS | 3.62** | -17 | 50 | 32 | 3.1 | 5.5 | 6.8 | 1.81 |
| LH IPS | 3.69** | -27 | -70 | 31 | 6.5 | 8.7 | 4.3 | 1.25 |
| PCC-Inferior | 3.68** | 2 | -50 | 11 | 8.6 | 6.7 | 6.4 | 4.44 |
| PCC-Dorsal | 3.59** | -2.3 | -36 | 40 | 4 | 12 | 6.4 | 4.76 |
| Medial Frontal | 3.62** | -2.3 | 42 | 15 | 5.7 | 10 | 6 | 7.08 |

None of these regions exhibited a significant amplitude increase related to the presentation of a reward.

\*p<.01.

\*\*p<.005.

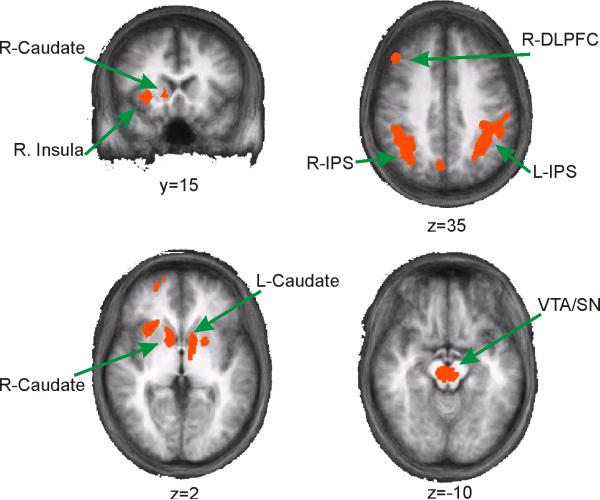

A whole-brain contrast was also performed to identify regions that responded more to a central cue signaling the presentation of a reward compared to a cue that signaled no-reward (Figure 5 and Table 2). Replicating previous studies, the caudate nucleus and a region of the midbrain encompassing the ventral tegmental area (VTA) and substantia nigra (SN) were sensitive to the presentation of rewards (e.g. D'Ardenne et al., 2008; Haruno et al., 2004). However, the VTA/SN activation encompassed a wide swath in the midbrain, so it is not possible to differentiate the exact source(s) of the activation (see Figure 5). In addition, regions of the right insula, right DLPFC, and large areas of bilateral IPS were sensitive to the presentation of a reward. None of these regions exhibited significant sensitivity to changes in differential value (all p's > 0.18) or to the spatial position of the selected stimulus (all p's > 0.34).

Figure 5.

Whole-brain analysis revealing regions outside of spatially-selective regions of visual cortex that respond more on rewarded trials compared to non-rewarded trials (see Table 2).

Table 2.

Coordinates and volume of regions showing heightened responses following the presentation of a reward (see Figure 5, coordinates from the atlas of Talairach and Tournoux, 1988).

| Region | t(13) | Mean X | Mean Y | Mean Z | Std X | Std Y | Std Z | Size (mL) |
|---|---|---|---|---|---|---|---|---|
| RH-IPS | 4.26** | 37 | -50 | 40 | 6.3 | 7.9 | 6.2 | 8.20 |
| RH-DLPFC | 3.70** | 41 | 26 | 31 | 3 | 3.2 | 5.3 | 1.22 |
| RH-Insula | 3.94** | 26 | 17 | 0.79 | 3.4 | 3.4 | 2.8 | 1.08 |
| RH-SFS | 3.43* | 23 | 49 | 5.1 | 4 | 5.1 | 5.5 | 0.99 |
| RH-Caudate | 3.72** | 10 | 7.5 | 2.9 | 2 | 4.6 | 3.1 | 1.01 |
| LH-IPS | 4 1** | -39 | -45 | 40 | 7.4 | 9.4 | 5.6 | 9.50 |
| LH-Caudate | 3.75** | -10 | 1.6 | 2.2 | 2.6 | 5.9 | 2.3 | 0.99 |
| LH-Putamen | 3.53** | -20 | 4.3 | -1.4 | 1.8 | 2.5 | 2.8 | 0.46 |
| Precun | 3.75** | 4.1 | -67 | 32 | 2.6 | 2.6 | 2.1 | 0.63 |

None of these regions exhibited a significant linear increase in BOLD amplitude with differential value.

*p<.01.

**p<.005.

Discussion

Several recent reports have documented value-based modulations within areas of the visual system that are thought to play a role in transforming incoming sensory information into an appropriate motor response (e.g. Dorris and Glimcher, 2004; Glimcher, 2003; Krawczyk et al., 2007; Platt and Glimcher, 1999; Sugrue et al., 2004, 2005). The present data show that value also influences activation levels within early regions of visual cortex that are thought to play a role in representing low-level stimulus features (e.g. V1), even in the absence of saccadic responses (see also Shuler and Bear, 2006). In addition, these modulations appear to be influenced primarily by prior rewards as opposed to biases in the subjective value of the selected stimulus that occurred on either a trial-by-trial or a scan-by-scan basis. This result raises the intriguing possibility that the value-based modulations across the visual hierarchy operate largely via an implicit representation that is not necessarily accessible to the observer. Finally, whereas regions of the midbrain and dorsal striatum signal the presentation of rewards, activation levels in regions of IFG/MFG, left SFS, medial frontal cortex, PCC, and parietal cortex increase monotonically as differential value increases. This sensitivity to differential value makes the latter areas suitable candidates for issuing biasing signals so that valuable stimuli compete effectively for representation in the visual system.

Recently, Pleger and coworkers demonstrated that primary somatosensory cortex is also modulated by reward-related factors, suggesting that the present observations are not unique to the visual system (Pleger et al., 2008, see also Pantoja et al., 2007 for a related study in rats). However, the modulations reported by Pleger et al. were tied specifically to the presentation of the reward, as opposed to the processing of incoming somatosensory information during the decision making process. Thus, their results are more consistent with a delayed ‘teaching signal’ that modifies synaptic efficacy in somatosensory cortex to facilitate future perceptual discriminations. In contrast, the present data suggest that the prior reward history of a stimulus modulates the processing of incoming sensory information in the visual domain (although the modulations reported here likely reflect relatively fast reentrant processing). This apparent discrepancy raises the possibility that value has differential effects on activation levels in visual and somatosensory cortices. However, it is also possible that the observed differences are related to the perceptual task that was employed: Pleger et al. (2008) used a very difficult tactile discrimination task that may have benefited substantially from reentrant learning signals. In contrast, the present task was relatively trivial from a perceptual standpoint, thus minimizing the need for perceptual learning. Future studies that directly manipulate perceptual difficulty and that employ converging methodologies with improved temporal resolution such as EEG/MEG will likely be required to resolve this issue.

As shown in Figure 2, the differential value of the choice alternatives as determined by prior rewards biases activation levels in spatially selective regions of the visual system even in the absence of saccadic responses. Similar spatially-selective biasing effects have been reported in response to cues instructing shifts of spatial attention (Gandhi et al., 1999; Hopfinger et al., 2000; Kastner et al., 1998; Serences and Yantis, 2007; Silver et al., 2005) or to stimuli that are highly salient by virtue of their emotional or social importance (Keil et al., 2005; Padmala and Pessoa, 2008; Pourtois and Vuilleumier, 2006; Sabatinelli et al., 2005; Sugase et al., 1999; Vuilleumier, 2005). Thus, stimulus ‘value’ as defined in economic terms is but one of several known factors that bias activation levels within the regions of visual cortex that represent incoming sensory information from the visual field.

Control mechanisms of value-based modulations

Decision making involves predicting and experiencing the outcome of a particular behavior, and then using the outcome to update future expectations; a complex network of subcortical and cortical brain structures is thought to support different component operations of this process. First, dopaminergic neurons in the midbrain (e.g. VTA and SN) signal the difference between predicted and experienced rewards in a relatively stimulus-independent manner; behavior should be modified when there is a large discrepancy between expected and actual rewards, and should remain unchanged when expectations are met (Hollerman and Schultz, 1998; Schultz et al., 1997). Accordingly, dopaminergic neurons in the VTA and SN are more active following an unexpected or infrequent reward and thus serve to signal that a shift in behavioral strategy might be appropriate in order to maximize the utility of future choices. These dopaminergic neurons send projections to the caudate nucleus, where many neurons anticipate rewards and/or represent the specific behavioral response that immediately preceded the presentation of a reward (i.e. the direction of a rewarded saccade, Hikosaka, 2007; Lau and Glimcher, 2007; Lauwereyns et al., 2002; Schultz et al., 1998). In turn, the caudate nucleus has extensive cortical projections (in part through the thalamus) to oculomotor circuits (e.g. LIP) as well as to regions of frontal cortex, where neurons respond selectively and rapidly to the value of a stimulus (Leh et al., 2007; Leon and Shadlen, 1999; Schilman et al., 2008; Wallis and Miller, 2003). Together, this midbrain-striatal-cortical network is thought to signal with increasing specificity the presence and precise nature of a rewarding stimulus as well as the action(s) that led up to the reward (Hikosaka, 2007; Schultz, 1998).

As shown in Figure 5, many of the regions implicated in this reward signaling circuit were found to respond more following rewarded trials compared to unrewarded trials, including the VTA/SN region, caudate nucleus, IPS (LIP), and DLPFC. This general pattern of activation is consistent with the model outlined above in which midbrain neurons signal the presentation of a reward, and striatal/cortical centers then act to utilize the reward signal to appropriately modify future behavior. Note that the region of the caudate nucleus identified here was insensitive to the spatial position of the selected stimulus, even though recent studies demonstrate that many caudate neurons encode the specific saccade vector associated with a reward (e.g. Lau and Glimcher, 2007). However, the present task did not employ saccadic responses, and BOLD fMRI substantially blurs spatial selectivity within small cortical areas by averaging signals from many neurons. Thus, future studies will be needed to more precisely characterize the role that the caudate nucleus plays in signaling response-reward pairings in the absence of saccades.

The whole brain analysis also revealed regions of frontal and parietal cortex in which activation levels increased as the differential value between the alternatives increased (Figure 4); many of these regions have been previously implicated in the anticipation and tracking of rewards (e.g. left SFS, medial frontal cortex, and PCC, Glascher et al., 2008; Haruno et al., 2004; Kable and Glimcher, 2007; Knutson and Cooper, 2005; Knutson et al., 2005; Knutson et al., 2000; Krawczyk et al., 2007; O'Doherty, 2004). The monotonic rise in activation levels with increasing differential value is an appropriate neural signature of regions involved in generating biasing signals that modulate spatially selective visual areas in favor of more valuable stimuli (e.g. V1-hV4, IPS and FEF). For example, these regions may integrate reward signals from the caudate nucleus and connected cortical centers that refine the reward signals generated by midbrain dopaminergic neurons (e.g. Hollerman and Schultz, 1998; Schultz et al., 1998). Thus, if a stimulus is rewarded several times over a relatively short period of time, then it is adaptive to update the decision rule governing choice and to issue strong biasing signals to spatially selective visual areas in order to ensure that the more valuable alternative competes effectively for representation in the visual system.

One region in particular – the left SFS – has been previously implicated in decision making. Heekeren and coworkers used fMRI and human observers to demonstrate that a similar region (or more generally: the DLPFC) was sensitive to both the differential and absolute amount of sensory evidence favoring one choice alternative over another during perceptual decision making tasks (Heekeren et al., 2004; Heekeren et al., 2006). In addition, this decision-related activity is largely independent of the motor effector being used to make a response (Heekeren et al., 2006), and DLPFC neurons track stimulus value and participate in holding information online during working memory delay periods (Barraclough et al., 2004; Fecteau et al., 2007; Fuster, 1995; Gilbert and Fiez, 2004; Goldman-Rakic, 1987; Heekeren et al., 2004; Heekeren et al., 2006; Ichihara-Takeda and Funahashi, 2006; Kim and Shadlen, 1999; Krawczyk et al., 2007; Leon and Shadlen, 1999; Miller and Cohen, 2001; Tanaka et al., 2006; Tsujimoto and Sawaguchi, 2004, 2005; Wallis and Miller, 2003). The present study extends the generality of these results by showing that the left SFS/DLPFC represents the differential value of two stimuli that are primarily distinguished by their respective reward histories. This representation of differential value was not influenced by the spatial position of the selected stimulus and is observed even though the present study employed manual button press responses (see also Heekeren et al., 2006). Thus, the data support the notion that the left SFS plays a domain general role in indexing the relevant variable during decision making, whether it is sensory evidence or a top-down factor like differential value.
However, since fMRI is relatively coarse when it comes to distinguishing fine-grained activation changes related to making saccadic or manual responses in one direction or another, the present data do not rule out a role for this region in more precisely specifying the exact response that should be made in an effort to optimize rewards.

An important question for future research concerns the degree to which the neural systems that mediate spatially-selective responses associated with reward are dissociable from the systems that mediate modulations associated with attention and emotional salience. For instance, converging evidence strongly implicates subregions of parietal cortex and the frontal eye field (FEF) in attentional control: fMRI studies reveal frontoparietal activation concurrent with shifts of attention (reviewed in Corbetta and Shulman, 2002; Kastner and Ungerleider, 2000; Serences and Yantis, 2006), damage to these regions can lead to attentional deficits on the contralesional side of space (neglect, Heilman and Valenstein, 1979; Mesulam, 1981), and microstimulation and transcranial magnetic stimulation (TMS) can bias the locus of spatial attention as well as the firing rates of neurons in early visual areas (Moore and Armstrong, 2003; Moore et al., 2003; Moore and Fallah, 2004; Ruff et al., 2008; Ruff et al., 2006). In contrast to this frontoparietal attentional control network, the presentation of emotionally salient stimuli strongly activates the amygdala (reviewed in Phelps and LeDoux, 2005), damage to the amygdala attenuates emotional modulations but not attentional modulations (Vuilleumier et al., 2004), damage to parietal cortex that causes attentional neglect can spare emotional modulations of visual cortex (Vuilleumier et al., 2002), and emotionally salient stimuli that are ignored (unattended) can still evoke a relatively large cortical response (Vuilleumier et al., 2001, although see also Pessoa et al., 2002). The combined evidence therefore suggests that the neural mechanisms mediating attention- and emotion-based modulations of early visual cortex are at least partially dissociable (Phelps et al., 2006; reviewed in Vuilleumier and Driver, 2007).

Although no explicit comparison was carried out in the present experiment, many of the regions depicted in Figure 4 have also been implicated in attentional control. In particular, similar areas of parietal cortex are activated during voluntary shifts of attention between spatial locations, features, and objects (reviewed in Corbetta and Shulman, 2002; Serences and Yantis, 2006), and similar regions of IFG/MFG have been associated with attention shifts following the unexpected presentation of a behaviorally relevant stimulus (Downar et al., 2001; Kincade et al., 2005; Serences et al., 2005). These results raise the possibility that overlapping neural mechanisms play a role in orienting to stimuli based on either attentional demands or on stimulus value as determined by recently earned rewards. Alternatively, spatial orienting in general may recruit a common set of neural mechanisms to bias the representation of incoming sensory information. To clarify this issue, future studies should test the link between neural mechanisms mediating attention and value by directly comparing the two types of orienting within the same experimental setting to determine the extent to which the ‘control’ systems overlap. Ideally these studies might employ neuroimaging and TMS/lesion methodologies to more precisely establish causal roles for each region in issuing biasing signals to spatially selective regions of visual cortex (see e.g. Ruff et al., 2008; Ruff et al., 2006; Vuilleumier et al., 2004 for examples of such approaches).

Experimental Procedures

Observers

Fifteen neurologically intact right-handed observers (nine females), ranging in age from 22 to 28 years, participated in the study. All observers gave written informed consent in accord with the human subjects Institutional Review Board at the University of California, Irvine. Data from one observer (female) were discarded due to technical difficulties collecting button press responses in the scanner. Each observer was trained for two 1-hour sessions outside the scanner and then participated in a single 1.5-hour scanning session. Compensation was $10/hour for training and $20/hour for scanning. Observers could also earn a monetary bonus based on behavioral performance during the scanning session (see below for details).

Main experimental task

Observers maintained gaze on a small square fixation point (0.4° visual angle on a side) that was continuously visible in the center of the screen throughout each scan. At the start of each trial, contrast reversing checkerboards (8Hz) were presented within circular apertures (4° radius) for 1s on each side of fixation, centered 10° to the left and right and 5° above the center of the screen. One of the checkerboard stimuli was comprised of green and black squares, and the other red and black squares; the spatial position (left or right of fixation) of each colored stimulus varied in a pseudo-random order across trials with the constraint that each color appeared an equal number of times in each position over the course of a single block of trials. Observers held two MR compatible keypads, one in each hand, and each keypad contained three buttons. Following the offset of the checkerboards, observers pressed one of the three buttons with the hand corresponding to the spatial position of the selected color. Thus, when the right stimulus was selected, observers pressed the rightmost of the three buttons held in their right hand to signal a high-value choice, the middle button to indicate a medium-value choice, and the inner button to indicate a low-value choice; a complementary pattern was used to indicate subjective value when the left stimulus was selected. The observers were required to respond within 1.25s following the offset of the stimuli. Following the response window, the central fixation point turned yellow for 0.25s if the choice was rewarded or blue for 0.25s if the choice was not rewarded. The next trial began 1.25s later, yielding a trial duration of 3.75s. There were 96 trials in each block, but one-quarter were ‘null’ trials on which no stimulus was presented to aid in the estimation of the event-locked hemodynamic response function (Dale, 1999). 
The sequence of real and null trials on each run was selected from a set of the 50 most statistically efficient pseudo-random sequences as determined using Monte-Carlo simulations (Dale, 1999).
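The trial timing described above can be checked with a little arithmetic. The following is a minimal sketch, not the authors' presentation code; the constant and function names are illustrative. It reproduces the stated 3.75 s trial duration and shows that 96 trials occupy 360 s of each block.

```python
# Trial timing as described in the text (all durations in seconds).
STIMULUS = 1.0          # checkerboards on screen
RESPONSE_WINDOW = 1.25  # time allowed for the button press
FEEDBACK = 0.25         # fixation turns yellow (reward) or blue (no reward)
INTERTRIAL = 1.25       # delay before the next trial begins

TRIALS_PER_BLOCK = 96
NULL_FRACTION = 0.25    # one-quarter of trials present no stimulus

trial_duration = STIMULUS + RESPONSE_WINDOW + FEEDBACK + INTERTRIAL
null_trials = int(TRIALS_PER_BLOCK * NULL_FRACTION)
stimulus_trials = TRIALS_PER_BLOCK - null_trials
block_duration = TRIALS_PER_BLOCK * trial_duration


def event_onsets(trial_index):
    """Return the onset time of each phase for a zero-based trial index."""
    start = trial_index * trial_duration
    return {
        "stimulus": start,
        "response_window": start + STIMULUS,
        "feedback": start + STIMULUS + RESPONSE_WINDOW,
        "next_trial": start + trial_duration,
    }
```

Under these numbers each block contains 72 stimulus trials and 24 null trials. Each scan is reported to last 372 s, so 12 s of every scan fall outside the 360 s of trials; the text does not say how that time was distributed.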

Reward structure and payment scheme

On average, a reward was assigned to a stimulus on 33% of the trials; this average reward rate was distributed in a red/green ratio of either 1:3, 1:1, or 3:1 (expressed in percentage of trials, these ratios were approximately: 8%:25%, 16%:16%, or 25%:8%). Although all observers experienced the same reward ratios over the course of the experiment, the order of presentation was randomly determined for each observer with the constraint that the same ratio was used on two consecutive blocks of trials (e.g. observer #1 might experience red/green reward ratios of {1:1, 1:1, 3:1, 3:1, 1:3, 1:3} over the course of the 6 scans that were run during an experimental session). Rewards were assigned to each color in an independent and stochastic manner on each trial, so the actual number of rewards assigned to each color deviated to some extent on a given block. Once a reward was assigned to a color, the reward remained available until that option was selected. As a result, the probability that selecting a given color would yield a reward increased as a function of the time since that option was last chosen. This ‘baiting’ scheme was adopted to ensure that observers would not simply pick the color with the highest perceived reward probability on every single trial (i.e. to encourage exploration). Finally, a reward was never given on trials where the subject switched from one alternative to the other. While not strictly necessary to observe matching behavior (Lau and Glimcher, 2005), this changeover delay (COD) was introduced to discourage ‘win-stay, lose-switch’ strategies (Corrado et al., 2005; Sugrue et al., 2004). As a result, trials following a switch were not considered further in the analysis because they were not free choices. At the end of the scanning session, observers were given $0.10 for every reward they earned up to a maximum of $10; eight participants earned the maximum (mean total income: $10.85) and the remaining six earned an average of $9.60. Overall, observers obtained 69%±1.4% (mean ±S.E.M.) of all available rewards (where 100% is unachievable since both stimuli were assigned a reward on some trials, and only one of the stimuli could be selected). See the section ‘Model relating reward history to choice behavior’ in Supplemental Experimental Procedures for full details of the model used to estimate value based on previous rewards.
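The baiting scheme and changeover delay can be illustrated with a short simulation. This is a sketch under stated assumptions rather than the authors' task code: the function names are hypothetical, and the text does not say what happens to a baited reward on a switch trial, so the sketch assumes it simply stays in place until the next eligible choice.

```python
import random


def simulate_block(choices, p_reward, rng):
    """Simulate baited rewards with a changeover delay (COD).

    choices  -- sequence of 'red'/'green' selections, one per trial
    p_reward -- dict mapping each color to its per-trial assignment probability
    rng      -- random.Random instance (seed it for reproducibility)
    Returns a list of booleans: whether each choice was rewarded.
    """
    baited = {"red": False, "green": False}
    previous = None
    outcomes = []
    for choice in choices:
        # Rewards are assigned independently to each color on every trial;
        # a color that is already baited simply stays baited.
        for color in baited:
            if rng.random() < p_reward[color]:
                baited[color] = True
        switched = previous is not None and choice != previous
        # COD: a switch trial is never rewarded. ASSUMPTION: the bait is
        # left in place on a switch trial (the source does not specify).
        rewarded = baited[choice] and not switched
        if rewarded:
            baited[choice] = False
        outcomes.append(rewarded)
        previous = choice
    return outcomes


def bait_probability(p, trials_since_collected):
    """Probability that a color is baited after n independent assignment draws."""
    return 1.0 - (1.0 - p) ** trials_since_collected
```

For example, with a per-trial assignment probability of 0.25, `bait_probability` gives 0.25 after one trial and 0.4375 after two, which is the sense in which the chance of a reward grows with the time since an option was last chosen.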

fMRI Data Acquisition and Analysis

MRI scanning was carried out on a Philips Intera 3-Tesla scanner equipped with an 8-channel head coil at University of California, Irvine. Anatomical images were acquired using an MPRAGE T1-weighted sequence that yielded images with a 1 × 1 × 1 mm resolution. Whole brain echo planar functional images (EPI) were acquired in 35 transverse slices (TR = 2000 ms, TE = 30 ms, flip angle = 70°, image matrix = 64 × 64, FOV = 240 mm, slice thickness = 3 mm, 1 mm gap, SENSE factor = 1.5).

Data analysis was performed using BrainVoyager QX (v 1.91; Brain Innovation, Maastricht, The Netherlands) and custom timeseries analysis routines written in Matlab (version 7.1; The MathWorks, Natick, Massachusetts). Data from the main experiment were collected in 6 scans per subject, with each scan lasting 372s; each functional localizer scan lasted for 300s (see Supplemental Experimental Procedures). EPI images were slice-time corrected, motion-corrected (both within and between scans) and high pass filtered (3 cycles/run) to remove low frequency temporal components from the timeseries. The estimated motion parameters were then used to estimate and remove motion induced artifacts in the timeseries of each voxel using a general linear model. All EPI and anatomical images were transformed into the standardized atlas space of Talairach and Tournoux (1988) to enable the whole-brain group analysis described below.

The magnitude of the evoked BOLD response in each region of interest (ROI) in visual cortex (see section on functional localizers in Supplemental Experimental Procedures) was estimated using a GLM and a design matrix that modeled the response to each event type as the scalar multiplier (beta weight) of a canonical double-gamma function (as implemented in BrainVoyager: time to peak 5s, undershoot ratio 6, time to undershoot peak 16s). This approach to estimating the magnitude of the response evoked by selected and unselected stimuli in each value bin was chosen to correspond to the method used for estimating the influence of differential value in the whole-brain analysis described below (see Figures 4,5). However, qualitatively similar and statistically significant results were also obtained using a finite impulse response function (FIR) approach to estimate the event-related response in each category on a timepoint-by-timepoint basis (e.g. Dale, 1999).
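To make the beta-weight idea concrete, the sketch below builds a double-gamma response from the three stated parameters and fits a single regressor by least squares. It is an illustration only. The parameterization (unit-scale gamma densities whose modes sit at the stated peak times) is an assumption and may not match BrainVoyager's exact formula. Events are treated as impulses, and all function names are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0."""
    if t <= 0:
        return 0.0
    log_pdf = ((shape - 1) * math.log(t) - t / scale
               - math.lgamma(shape) - shape * math.log(scale))
    return math.exp(log_pdf)


def double_gamma_hrf(t, peak=5.0, undershoot_peak=16.0, ratio=6.0):
    """Positive gamma response minus a scaled, later gamma undershoot."""
    # ASSUMPTION: with unit scale a gamma density peaks at shape - 1, so
    # shape = peak + 1 puts each component's mode at the requested time.
    response = gamma_pdf(t, peak + 1.0)
    undershoot = gamma_pdf(t, undershoot_peak + 1.0)
    return response - undershoot / ratio


def make_regressor(onsets, n_volumes, tr=2.0):
    """Predicted BOLD time course, sampled once per TR, for impulse events."""
    return [sum(double_gamma_hrf(v * tr - onset) for onset in onsets)
            for v in range(n_volumes)]


def beta_weight(regressor, signal):
    """Least-squares scale factor for a single regressor (no intercept)."""
    numerator = sum(x * y for x, y in zip(regressor, signal))
    denominator = sum(x * x for x in regressor)
    return numerator / denominator
```

A full analysis would fit all event types jointly, together with nuisance terms, in one design matrix. The single-regressor case is shown only because it makes the meaning of a beta weight explicit: the factor by which the canonical response must be scaled to best match the measured time course.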

Whole-brain analysis of representation of differential value

To examine the representations of differential value outside of spatially selective regions of occipital, parietal (IPS), and frontal cortex (FEF), a whole brain random effects group analysis was carried out after applying a 4mm FWHM spatial smoothing kernel to the functional data from each observer. A GLM was defined with canonical HRFs (same double-gamma shape described above) marking the onset time of trials belonging to each of five bins based on the absolute value of the differential value, and a separate regressor was included that marked the onset time of all rewards to account for variance in the BOLD signal induced by the actual presentation of a reward (see e.g. Figure 2h and Figure 5). A balanced contrast that weighted each regressor with respect to its corresponding differential value bin (contrast coefficients of [-2, -1, 0, 1, 2]) was used to find voxels that showed an increasing response amplitude with increasing differential value. The mean position of the activations is reported in Table 1 and all coordinates correspond to the atlas of Talairach and Tournoux (1988). The single voxel statistical threshold was set to t(13)=3.05, p<0.01 and a minimum cluster size of 16 edge-contiguous voxels (0.432mL) was adopted to correct for multiple comparisons, yielding a map-wise false positive probability of p<0.05 (as computed based on all voxels included in the GLM using the AFNI program AlphaSim, written by Robert Cox). However, all areas except the left putamen also passed a more stringent cluster threshold of 19 voxels which corresponds to a map-wise false positive probability of p<0.01.
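The linear-trend contrast and the random-effects test can be sketched in a few lines. This is a simplified illustration for a single voxel, not the BrainVoyager pipeline, and the function names are hypothetical. The 3 mm isotropic voxel size is inferred from the stated equivalence of 16 voxels and 0.432 mL and is not given explicitly in the text.

```python
import math

# Balanced weights for the five differential-value bins (sum to zero).
CONTRAST = [-2, -1, 0, 1, 2]


def linear_trend(betas):
    """Contrast value for one observer's five per-bin beta weights."""
    return sum(c * b for c, b in zip(CONTRAST, betas))


def one_sample_t(values):
    """Return (t, df) testing whether the mean of `values` differs from zero."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean / math.sqrt(variance / n), n - 1


def cluster_volume_ml(n_voxels, voxel_mm=3.0):
    """Cluster volume in mL (1 mL = 1000 mm^3); voxel size is an inferred value."""
    return n_voxels * voxel_mm ** 3 / 1000.0
```

In a random-effects analysis each observer contributes one contrast value per voxel, so with 14 observers `one_sample_t` returns 13 degrees of freedom, matching the t(13) reported in the tables. Because the weights sum to zero, a voxel that responds equally in all five bins yields a contrast of zero regardless of its overall response amplitude.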

Supplementary Material

Acknowledgements

Thanks to Gregory Corrado, Leo Sugrue and Michael D. Lee for technical advice, and the staff at the John Tu and Thomas Yuen Center for Functional Onco Imaging for assistance with data collection.

References

- Amador N, Schlag-Rey M, Schlag J. Reward-predicting and reward-detecting neuronal activity in the primate supplementary eye field. J Neurophysiol. 2000;84:2166–2170. doi: 10.1152/jn.2000.84.4.2166.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Basso MA, Wurtz RH. Modulation of neuronal activity by target uncertainty. Nature. 1997;389:66–69. doi: 10.1038/37975.

- Basso MA, Wurtz RH. Modulation of neuronal activity in superior colliculus by changes in target probability. J Neurosci. 1998;18:7519–7534. doi: 10.1523/JNEUROSCI.18-18-07519.1998.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Corrado GS, Sugrue LP, Seung HS, Newsome WT. Linear-Nonlinear-Poisson models of primate choice dynamics. Journal of the experimental analysis of behavior. 2005;84:581–617. doi: 10.1901/jeab.2005.23-05.

- Dale AM. Optimal experimental design for event-related fMRI. Hum Brain Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- D'Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605.

- D'Esposito M, Zarahn E, Aguirre GK, Shin RK, Auerbach P, Detre JA. The effect of pacing of experimental stimuli on observed functional MRI activity. Neuroimage. 1997;6:113–121. doi: 10.1006/nimg.1997.0281.

- Daw ND, O'Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- de Villiers PA, Herrnstein RJ. Toward a law of response strength. Psychological Bulletin. 1976;83:1131–1153.

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44:365–378. doi: 10.1016/j.neuron.2004.09.009.

- Dorris MC, Munoz DP. Saccadic probability influences motor preparation signals and time to saccadic initiation. J Neurosci. 1998;18:7015–7026. doi: 10.1523/JNEUROSCI.18-17-07015.1998.

- Downar J, Crawley AP, Mikulis DJ, Davis KD. The effect of task relevance on the cortical response to changes in visual and auditory stimuli: an event-related fMRI study. Neuroimage. 2001;14:1256–1267. doi: 10.1006/nimg.2001.0946.

- Engel SA, Rumelhart DE, Wandell BA, Lee AT, Glover GH, Chichilnisky EJ, Shadlen MN. fMRI of human visual cortex. Nature. 1994;369:525. doi: 10.1038/369525a0.

- Fecteau S, Knoch D, Fregni F, Sultani N, Boggio P, Pascual-Leone A. Diminishing risk-taking behavior by modulating activity in the prefrontal cortex: a direct current stimulation study. J Neurosci. 2007;27:12500–12505. doi: 10.1523/JNEUROSCI.3283-07.2007.

- Fuster J. Memory in the Cerebral Cortex: An Empirical Approach to Neural Networks in the Human and Nonhuman Primate. MIT Press; Cambridge, MA: 1995.

- Gandhi SP, Heeger DJ, Boynton GM. Spatial attention affects brain activity in human primary visual cortex. Proc Natl Acad Sci U S A. 1999;96:3314–3319. doi: 10.1073/pnas.96.6.3314.

- Gilbert AM, Fiez JA. Integrating rewards and cognition in the frontal cortex. Cogn Affect Behav Neurosci. 2004;4:540–552. doi: 10.3758/cabn.4.4.540.

- Glascher J, Hampton AN, O'Doherty JP. Determining a Role for Ventromedial Prefrontal Cortex in Encoding Action-Based Value Signals During Reward-Related Decision Making. Cereb Cortex. 2008 doi: 10.1093/cercor/bhn098.

- Glimcher PW. The neurobiology of visual-saccadic decision making. Annu Rev Neurosci. 2003;26:133–179. doi: 10.1146/annurev.neuro.26.010302.081134.

- Glimcher PW. Indeterminacy in brain and behavior. Annual review of psychology. 2005;56:25–56. doi: 10.1146/annurev.psych.55.090902.141429.

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Goldman-Rakic PS. Circuitry of primate prefrontal cortex and regulation of behavior by representational memory. In: Plum F, editor. Handbook of Physiology. American Physiological Society; Bethesda, MD: 1987.

- Haenny PE, Maunsell JH, Schiller PH. State dependent activity in monkey visual cortex. II. Retinal and extraretinal factors in V4. Exp Brain Res. 1988;69:245–259. doi: 10.1007/BF00247570.

- Haenny PE, Schiller PH. State dependent activity in monkey visual cortex. I. Single cell activity in V1 and V4 on visual tasks. Exp Brain Res. 1988;69:225–244. doi: 10.1007/BF00247569.

- Haruno M, Kuroda T, Doya K, Toyama K, Kimura M, Samejima K, Imamizu H, Kawato M. A neural correlate of reward-based behavioral learning in caudate nucleus: a functional magnetic resonance imaging study of a stochastic decision task. J Neurosci. 2004;24:1660–1665. doi: 10.1523/JNEUROSCI.3417-03.2004.

- Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG. A general mechanism for perceptual decision-making in the human brain. Nature. 2004;431:859–862. doi: 10.1038/nature02966.

- Heekeren HR, Marrett S, Ruff DA, Bandettini PA, Ungerleider LG. Involvement of human left dorsolateral prefrontal cortex in perceptual decision making is independent of response modality. Proc Natl Acad Sci U S A. 2006;103:10023–10028. doi: 10.1073/pnas.0603949103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heilman KM, Valenstein E. Mechanisms underlying hemispatial neglect. Ann Neurol. 1979;5:166–170. doi: 10.1002/ana.410050210. [DOI] [PubMed] [Google Scholar]

- Herrnstein RJ. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the experimental analysis of behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hikosaka O. Basal ganglia mechanisms of reward-oriented eye movement. Ann N Y Acad Sci. 2007;1104:229–249. doi: 10.1196/annals.1390.012. [DOI] [PubMed] [Google Scholar]

- Hollerman JR, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nat Neurosci. 1998;1:304–309. doi: 10.1038/1124. [DOI] [PubMed] [Google Scholar]

- Hopfinger JB, Buonocore MH, Mangun GR. The neural mechanisms of top-down attentional control. Nat Neurosci. 2000;3:284–291. doi: 10.1038/72999. [DOI] [PubMed] [Google Scholar]

- Ichihara-Takeda S, Funahashi S. Reward-period activity in primate dorsolateral prefrontal and orbitofrontal neurons is affected by reward schedules. J Cogn Neurosci. 2006;18:212–226. doi: 10.1162/089892906775783679. [DOI] [PubMed] [Google Scholar]

- Ikeda T, Hikosaka O. Reward-dependent gain and bias of visual responses in primate superior colliculus. Neuron. 2003;39:693–700. doi: 10.1016/s0896-6273(03)00464-1. [DOI] [PubMed] [Google Scholar]

- Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- Kastner S, De Weerd P, Desimone R, Ungerleider LG. Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science. 1998;282:108–111. doi: 10.1126/science.282.5386.108.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annu Rev Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Keil A, Moratti S, Sabatinelli D, Bradley MM, Lang PJ. Additive effects of emotional content and spatial selective attention on electrocortical facilitation. Cereb Cortex. 2005;15:1187–1197. doi: 10.1093/cercor/bhi001.

- Kim JN, Shadlen MN. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nat Neurosci. 1999;2:176–185. doi: 10.1038/5739.

- Kincade JM, Abrams RA, Astafiev SV, Shulman GL, Corbetta M. An event-related functional magnetic resonance imaging study of voluntary and stimulus-driven orienting of attention. J Neurosci. 2005;25:4593–4604. doi: 10.1523/JNEUROSCI.0236-05.2005.

- Knutson B, Cooper JC. Functional magnetic resonance imaging of reward prediction. Curr Opin Neurol. 2005;18:411–417. doi: 10.1097/01.wco.0000173463.24758.f6.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- Knutson B, Westdorp A, Kaiser E, Hommer D. FMRI visualization of brain activity during a monetary incentive delay task. Neuroimage. 2000;12:20–27. doi: 10.1006/nimg.2000.0593.

- Krawczyk DC, Gazzaley A, D'Esposito M. Reward modulation of prefrontal and visual association cortex during an incentive working memory task. Brain Res. 2007;1141:168–177. doi: 10.1016/j.brainres.2007.01.052.

- Lau B, Glimcher PW. Dynamic response-by-response models of matching behavior in rhesus monkeys. J Exp Anal Behav. 2005;84:555–579. doi: 10.1901/jeab.2005.110-04.

- Lau B, Glimcher PW. Action and outcome encoding in the primate caudate nucleus. J Neurosci. 2007;27:14502–14514. doi: 10.1523/JNEUROSCI.3060-07.2007.

- Lauwereyns J, Takikawa Y, Kawagoe R, Kobayashi S, Koizumi M, Coe B, Sakagami M, Hikosaka O. Feature-based anticipation of cues that predict reward in monkey caudate nucleus. Neuron. 2002;33:463–473. doi: 10.1016/s0896-6273(02)00571-8.

- Lee D. Neural basis of quasi-rational decision making. Curr Opin Neurobiol. 2006;16:191–198. doi: 10.1016/j.conb.2006.02.001.

- Leh SE, Ptito A, Chakravarty MM, Strafella AP. Fronto-striatal connections in the human brain: a probabilistic diffusion tractography study. Neurosci Lett. 2007;419:113–118. doi: 10.1016/j.neulet.2007.04.049.

- Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- Mazurek ME, Roitman JD, Ditterich J, Shadlen MN. A role for neural integrators in perceptual decision making. Cereb Cortex. 2003;13:1257–1269. doi: 10.1093/cercor/bhg097.

- McCoy AN, Crowley JC, Haghighian G, Dean HL, Platt ML. Saccade reward signals in posterior cingulate cortex. Neuron. 2003;40:1031–1040. doi: 10.1016/s0896-6273(03)00719-0.

- Mesulam MM. A cortical network for directed attention and unilateral neglect. Ann Neurol. 1981;10:309–325. doi: 10.1002/ana.410100402.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Moore T, Armstrong KM. Selective gating of visual signals by microstimulation of frontal cortex. Nature. 2003;421:370–373. doi: 10.1038/nature01341.

- Moore T, Armstrong KM, Fallah M. Visuomotor origins of covert spatial attention. Neuron. 2003;40:671–683. doi: 10.1016/s0896-6273(03)00716-5.

- Moore T, Fallah M. Microstimulation of the frontal eye field and its effects on covert spatial attention. J Neurophysiol. 2004;91:152–162. doi: 10.1152/jn.00741.2002.

- O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016.

- Padmala S, Pessoa L. Affective learning enhances visual detection and responses in primary visual cortex. J Neurosci. 2008;28:6202–6210. doi: 10.1523/JNEUROSCI.1233-08.2008.

- Pantoja J, Ribeiro S, Wiest M, Soares E, Gervasoni D, Lemos NA, Nicolelis MA. Neuronal activity in the primary somatosensory thalamocortical loop is modulated by reward contingency during tactile discrimination. J Neurosci. 2007;27:10608–10620. doi: 10.1523/JNEUROSCI.5279-06.2007.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proc Natl Acad Sci U S A. 2002;99:11458–11463. doi: 10.1073/pnas.172403899.

- Phelps EA, LeDoux JE. Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron. 2005;48:175–187. doi: 10.1016/j.neuron.2005.09.025.

- Phelps EA, Ling S, Carrasco M. Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychol Sci. 2006;17:292–299. doi: 10.1111/j.1467-9280.2006.01701.x.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Pleger B, Blankenburg F, Ruff CC, Driver J, Dolan RJ. Reward facilitates tactile judgments and modulates hemodynamic responses in human primary somatosensory cortex. J Neurosci. 2008;28:8161–8168. doi: 10.1523/JNEUROSCI.1093-08.2008.

- Pourtois G, Vuilleumier P. Dynamics of emotional effects on spatial attention in the human visual cortex. Prog Brain Res. 2006;156:67–91. doi: 10.1016/S0079-6123(06)56004-2.

- Ruff CC, Bestmann S, Blankenburg F, Bjoertomt O, Josephs O, Weiskopf N, Deichmann R, Driver J. Distinct causal influences of parietal versus frontal areas on human visual cortex: evidence from concurrent TMS-fMRI. Cereb Cortex. 2008;18:817–827. doi: 10.1093/cercor/bhm128.

- Ruff CC, Blankenburg F, Bjoertomt O, Bestmann S, Freeman E, Haynes JD, Rees G, Josephs O, Deichmann R, Driver J. Concurrent TMS-fMRI and psychophysics reveal frontal influences on human retinotopic visual cortex. Curr Biol. 2006;16:1479–1488. doi: 10.1016/j.cub.2006.06.057.

- Sabatinelli D, Bradley MM, Fitzsimmons JR, Lang PJ. Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. Neuroimage. 2005;24:1265–1270. doi: 10.1016/j.neuroimage.2004.12.015.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Saygin AP, Sereno MI. Retinotopy and attention in human occipital, temporal, parietal, and frontal cortex. Cereb Cortex. 2008. doi: 10.1093/cercor/bhm242.

- Schilman EA, Uylings HB, Galis-de Graaf Y, Joel D, Groenewegen HJ. The orbital cortex in rats topographically projects to central parts of the caudate-putamen complex. Neurosci Lett. 2008;432:40–45. doi: 10.1016/j.neulet.2007.12.024.

- Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Schultz W, Tremblay L, Hollerman JR. Reward prediction in primate basal ganglia and frontal cortex. Neuropharmacology. 1998;37:421–429. doi: 10.1016/s0028-3908(98)00071-9.

- Seo H, Lee D. Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. J Neurosci. 2007;27:8366–8377. doi: 10.1523/JNEUROSCI.2369-07.2007.

- Serences JT, Shomstein S, Leber AB, Golay X, Egeth HE, Yantis S. Coordination of voluntary and stimulus-driven attentional control in human cortex. Psychol Sci. 2005;16:114–122. doi: 10.1111/j.0956-7976.2005.00791.x.

- Serences JT, Yantis S. Selective visual attention and perceptual coherence. Trends Cogn Sci. 2006;10:38–45. doi: 10.1016/j.tics.2005.11.008.

- Serences JT, Yantis S. Spatially selective representations of voluntary and stimulus-driven attentional priority in human occipital, parietal, and frontal cortex. Cereb Cortex. 2007;17:284–293. doi: 10.1093/cercor/bhj146.

- Sereno AB, Maunsell JH. Shape selectivity in primate lateral intraparietal cortex. Nature. 1998;395:500–503. doi: 10.1038/26752.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376.

- Sereno MI, Pitzalis S, Martinez A. Mapping of contralateral space in retinotopic coordinates by a parietal cortical area in humans. Science. 2001;294:1350–1354. doi: 10.1126/science.1063695.

- Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J Neurophysiol. 2001;86:1916–1936. doi: 10.1152/jn.2001.86.4.1916.

- Shuler MG, Bear MF. Reward timing in the primary visual cortex. Science. 2006;311:1606–1609. doi: 10.1126/science.1123513.

- Silver MA, Ress D, Heeger DJ. Topographic maps of visual spatial attention in human parietal cortex. J Neurophysiol. 2005;94:1358–1371. doi: 10.1152/jn.01316.2004.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

- Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- Sugrue LP, Corrado GS, Newsome WT. Choosing the greater of two goods: neural currencies for valuation and decision making. Nat Rev Neurosci. 2005;6:363–375. doi: 10.1038/nrn1666.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Thieme; New York: 1988.

- Tanaka SC, Samejima K, Okada G, Ueda K, Okamoto Y, Yamawaki S, Doya K. Brain mechanism of reward prediction under predictable and unpredictable environmental dynamics. Neural Netw. 2006;19:1233–1241. doi: 10.1016/j.neunet.2006.05.039.

- Toth LJ, Assad JA. Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature. 2002;415:165–168. doi: 10.1038/415165a.

- Tsujimoto S, Sawaguchi T. Neuronal representation of response-outcome in the primate prefrontal cortex. Cereb Cortex. 2004;14:47–55. doi: 10.1093/cercor/bhg090.

- Tsujimoto S, Sawaguchi T. Neuronal activity representing temporal prediction of reward in the primate prefrontal cortex. J Neurophysiol. 2005;93:3687–3692. doi: 10.1152/jn.01149.2004.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends Cogn Sci. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Armony JL, Clarke K, Husain M, Driver J, Dolan RJ. Neural response to emotional faces with and without awareness: event-related fMRI in a parietal patient with visual extinction and spatial neglect. Neuropsychologia. 2002;40:2156–2166. doi: 10.1016/s0028-3932(02)00045-3.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Driver J. Modulation of visual processing by attention and emotion: windows on causal interactions between human brain regions. Philos Trans R Soc Lond B Biol Sci. 2007;362:837–855. doi: 10.1098/rstb.2007.2092.