Abstract

This article presents a model of how a build-up of interruptions can shift the dynamics of the emergency department (ED) from an adaptive, self-regulating system into a fragile, crisis-prone one. Drawing on case studies of organizational disasters and insights from the theory of high-reliability organizations, the authors use computer simulations to show how the accumulation of small interruptions could have disproportionately large effects in the ED. In the face of a mounting workload created by interruptions, EDs, like other organizational systems, have tipping points, thresholds beyond which a vicious cycle can lead rather quickly to the collapse of normal operating routines and in the extreme to a crisis of organizational paralysis. The authors discuss some possible implications for emergency medicine, emphasizing the potential threat from routine, non-novel demands on EDs and raising the concern that EDs are operating closer to the precipitous edge of crisis as ED crowding exacerbates the problem.

Major disasters are of interest to the emergency medicine (EM) community because they generate demand for EM services, but disaster and crisis may be of interest to EM for another reason: lessons from the study of disasters and crisis may be useful to the organization and practice of EM. Studies in the organizational theory literature examining the phenomenon of disasters, crises, and accidents, drawing on rich case studies and in-depth analysis,1–5 have yielded three significant insights that suggest there may be important parallels in EM. First, major disasters often do not have proportionately large causes. Rather, minor, everyday events can lead to major disasters, what Perrow calls “normal accidents.”5 Second, the likelihood of triggering chain reactions and a cascade of malfunctions and breakdowns greatly increases as the delivery system becomes increasingly sophisticated and interconnected with other systems. Such cascades lead to disproportionate and occasionally disastrous effects. Third, interruptions to ongoing activities or plans are associated with or implicated in the evolution of crisis.2,3,5–8 In particular, Rudolph and Repenning7 establish how the sheer quantity of interruptions to established routines and expectations plays a significant role in precipitating disasters.

Emergency departments (EDs) are sophisticated and interconnected with other systems. ED crowding and overcrowding, major challenges to the field that continue despite years of efforts to address the problem,9–12 are likely getting worse.13,14 Crowding occasions tighter coupling of the systems5 and will likely make sophistication and interconnectedness even more salient features of the ED. EDs have also been characterized as “interrupt-driven.”15 Several studies have reported that emergency physicians (EPs) are interrupted an average of approximately 10 times per hour,15–17 and nurses are interrupted even more often.16 Interruptions contribute to medical error18 and have been implicated in increasing stress and reducing efficiency among physicians.19 However, the role of interruptions in precipitating organizational crises in the ED has not been explored.

While it is tempting to invoke proportional logic (the more interruptions there are, the worse things get), the dynamics of tightly coupled systems are more complex than this simple logic would suggest.2,3,5,7,8 To understand the dynamic interplay among quantities of small events, we present a theory that takes into account the state of the environment surrounding the ED, the ED’s capacity to respond, and the manner in which the system adjusts to cope with varying demand. Our approach draws on simulation and analysis of a system dynamics model that examines the interconnections between the quantity of small events and organizational crises; the model was developed by Rudolph and Repenning7 to understand the dynamics of disasters. We begin with two short case studies of disasters, then present the model and analyses, and conclude with a discussion of some implications for EM.

CASE STUDIES

We offer two short case studies as examples. The focal case for developing the theory represented here is Weick’s3 report and analysis of the Tenerife (Canary Islands) air disaster of March 27, 1977, an account that chronicles how a series of small interruptions combined to produce a major disaster. On that day, the Las Palmas airport was unexpectedly closed due to a terrorist bomb attack, and two Boeing 747s, one operated by KLM and the other by Pan Am, were diverted to Tenerife. The diversion resulted in a series of small interruptions to plans and standard procedures. The KLM crew had strict duty time constraints, but the diversion interrupted the plan to return to Amsterdam within those limits. Plans to leave the airfield were interrupted by a cloud drifting 3000 feet down the runway. The runways at Tenerife were not designed for 747s, so their narrow widths interrupted protocols for normal maneuvering. Transmissions from the control tower were both garbled and nonstandard, interrupting the usual preflight communications patterns and protocols. As the situation progressed, the KLM crew communicated less and less clearly, and eventually the KLM captain, directly violating standard procedure, cleared himself for takeoff. He began accelerating the plane in an effort to outrace a cloud floating toward him at the other end of the runway. Meanwhile, the Pan Am aircraft had missed its parking turnoff due to the limited visibility and was parked at the other end of the runway, obscured by the very cloud the KLM pilot was trying to outrun. The KLM plane collided with the Pan Am plane, killing 583 people in one of the worst accidents in aviation history.

Our second example is the tragedy of Iran Air 655, a passenger jet that was mistakenly shot down by the AEGIS-system guided-missile cruiser USS Vincennes on July 3, 1988, resulting in the deaths of all 290 people on board. According to various accounts of the event,20–24 a continuing stream of interruptions to ongoing processes and tasks occurred in the moments before the fatal shoot-down. As Iran Air 655 approached, it was an unknown aircraft that interrupted the team’s attention to ongoing gun battles. The combat information center team had to determine a course of action in just 7 minutes, given the trajectory of the unknown approaching aircraft. Noise levels were high, and information about the several ongoing gunboat battles came in bursts, interrupting attempts to deal with the incoming aircraft. One of the forward guns jammed, causing yet more interruptions to the ongoing battle maneuvers. Crew member communications were occurring over several channels simultaneously, often resulting in different messages coming into left and right ears. Periodic changes in communication channels added another layer of interruptions.

MODELING THE FEEDBACK STRUCTURE OF DISASTER AND CRISIS

Motivated by the observation that small events can cause large crises, Rudolph and Repenning7 developed a system dynamics model drawing on source data from several case studies of large-scale disasters such as those of Tenerife and the USS Vincennes. Drawing primarily on Weick’s theoretical analysis3 as the initial source data, the model formulations translate the text-based constructs and theoretical relationships into the system dynamics language of stocks, flows, and feedback. Their paper develops the causal feedback structure of the model, explains the mathematical formulations, and provides access to the fully documented model. Here, we provide a summary of the model, highlighting some key features, and focus attention on the results of simulation analyses that may have some bearing on managerial practices in the ED.

The original model7 grounded in source data from disasters is based on several key assumptions that are likely quite consistent with most ED contexts. First, the model assumes the organization faces a continuing, possibly varying, stream of non-novel interruptions. The definition of an interruption follows Mandler’s: any unanticipated event, external to the individual, that temporarily or permanently prevents completion of some organized action, thought sequence, or plan.25 Second, the model focuses on interruptions for which the organization has an appropriate response within its existing repertoire, that is, the interruptions are non-novel interruptions. Resolving a non-novel interruption still requires significant cognitive effort,26 first to shift to an active mode of cognition and then to execute the three processes of attention to determine which interruptions are considered, activation to trigger the necessary knowledge, and strategic processes to prioritize goals and allocate resources.1 However, the difficulty of resolving an interruption can vary widely. For example, the stream of interruptions an EP faces might include a question from a nurse to confirm a medication order, which could be readily and quickly handled, as well as a request to read an electrocardiogram (ECG), which might be more time-consuming and cognitively taxing. The model captures this variability in the time required to resolve different interruptions by interpreting the unit in the flow of interruptions not as the raw number of interruptions, but as the number of component mental steps needed to resolve interruptions. So, in the above example, reading an ECG may constitute many interruption units, whereas answering a simple question might be interpreted as only a few units of interruption. The model also assumes that resolving pending interruptions is required for ongoing survival of both the physical and social functioning of the organization. 
Finally, the model does not include the primary task being interrupted, but instead focuses on organizational performance as a function of the ability to resolve interruptions.

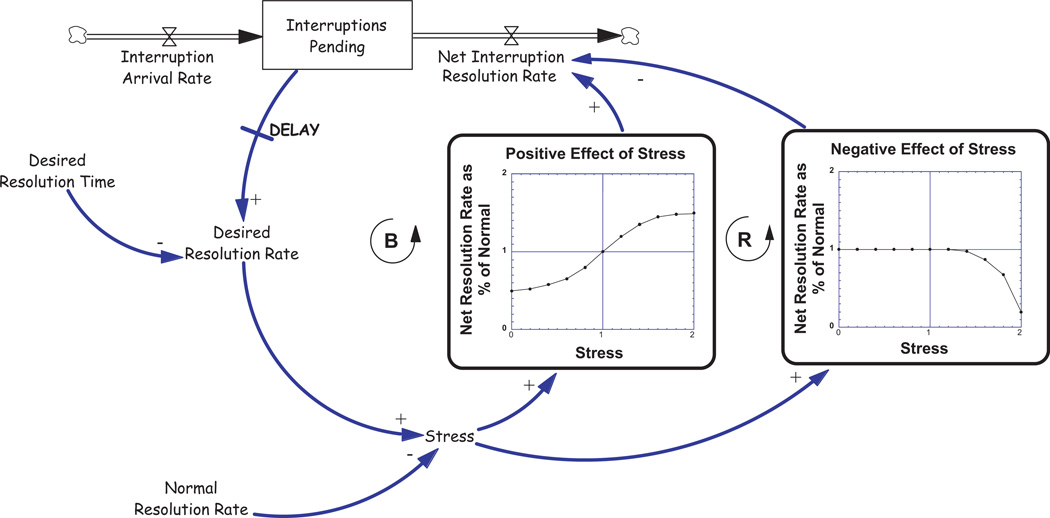

The model synthesizes three main ideas, each of which when taken alone is a relatively uncontested statement, but which when taken together form the basis for some important and somewhat counterintuitive dynamics. The first of these ideas is that interruptions are not processed instantaneously, but instead accumulate while pending resolution. Figure 1 is a stock and flow and feedback diagram using the standard icons of system dynamics to represent the relevant system structure.27,28 At the top of the diagram, we see the stream of incoming interruptions represented as the interruption arrival rate and depicted with a pipe and valve icon, signaling a flow variable. Interruptions accumulate in the stock of interruptions pending, represented with a rectangle, until they are resolved as depicted by the outflow net interruption resolution rate. Thus, the stock of interruptions pending is increased by the interruption arrival rate and decreased by the net interruption resolution rate. The dynamics of stocks are often conceptualized as akin to the dynamics of the level of water in a bathtub.29–31 The stock of interruptions pending will increase any time the interruption arrival rate exceeds the net interruption resolution rate, just as the level of water in a bathtub increases any time the inflow from the water spigot exceeds the outflow through the drain of the bathtub. Examples of accumulated unresolved interruptions in the ED include requests to come visit a patient, alerts that new test results are available, and pages that signal incoming stroke alert patients. To resolve each of these interruptions requires cognitive and physical effort that will take time and energy. Because interruptions may arrive at a rate that is greater than that at which they are resolved, unresolved interruptions can accumulate.

Figure 1.

A model of disaster dynamics. Reprinted from “Disaster Dynamics: Understanding the Role of Quantity in Organizational Collapse,” by Rudolph and Repenning, published in Administrative Science Quarterly (Vol. 47, 2002) by permission of Administrative Science Quarterly.
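The bathtub logic of the stock of interruptions pending can be illustrated with a minimal numerical sketch. The Python below is not part of the Rudolph and Repenning model; the function name `integrate_stock`, the Euler time step, and the arrival and resolution rates are hypothetical choices made only to show that the stock rises whenever the inflow exceeds the outflow.

```python
from typing import Callable, List


def integrate_stock(
    initial_stock: float,
    inflow: Callable[[float], float],
    outflow: Callable[[float], float],
    minutes: float,
    dt: float = 0.01,
) -> List[float]:
    """Euler-integrate d(stock)/dt = inflow(t) - outflow(t); return the trajectory."""
    steps = int(round(minutes / dt))
    stock = initial_stock
    history = [stock]
    for i in range(steps):
        t = i * dt
        stock += (inflow(t) - outflow(t)) * dt
        stock = max(stock, 0.0)  # pending interruptions cannot go negative
        history.append(stock)
    return history


# Hypothetical rates: 3 interruption units/min arrive, 1 unit/min is resolved.
trajectory = integrate_stock(5.0, lambda t: 3.0, lambda t: 1.0, minutes=10.0)
print(f"pending after 10 min: {trajectory[-1]:.2f}")
```

With a net inflow of 2 units per minute, the stock climbs steadily from its starting value of 5; swapping the two rates drains it instead, exactly as a bathtub empties when the drain outpaces the spigot.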

The second main idea underlying the causal structure of the model is that accumulating interruptions increase the levels of stress on the individuals in the organization. Unresolved interruptions do not disappear, but instead continue to wait for resolution. Mental bookkeeping becomes more challenging, raising the risks of losing situational awareness or committing fixation errors.32 Moreover, the time pressure these accumulations generate may decrease access to inert knowledge, increase errors, or trigger a maladaptive problem-solving routine.1,33–35 This notion is an important conceptual step that distinguishes the present work from many traditional studies of stress and performance that consider stress as an independent variable, exogenously manipulated or viewed as imposed by forces outside the system under study. Rather, given that accumulating interruptions cause increases in stress, the level of stress at any given time is largely determined by the organization’s past performance. Stress and performance become part of a feedback system in which stress affects the current performance and the consequences of past performance, as captured by the stock of interruptions pending, affect stress. In Figure 1, stress is modeled as the mismatch between the desired resolution rate, which depends on interruptions pending and a desired resolution time, and the normal resolution rate, which reflects a sense of performance under typical unstressed conditions. As pending interruptions increase, so does the desired resolution rate, increasing the model variable stress as well.

The third main idea asserts that the influence of stress on performance is captured by an inverted U-shaped relationship, as is posited by the Yerkes Dodson law.36 When stress is low, small increases result in an increase in performance, but the favorable effects of stress on performance continue only up to a point, after which further increases cause performance to deteriorate.37–39 The Yerkes Dodson law, based on experiments applying varying intensities of electrical shocks to mice running through a maze, has a long history in psychological research and has been used to describe the effects of anxiety, arousal, drive, motivation, activation, and reward and punishment on performance, problem solving, coping, and memory.39–41 Figure 1 shows the Yerkes Dodson curve separated into its upward- and downward-sloping components. The insert labeled positive effect of stress describes the upward-sloping portion of the Yerkes Dodson curve. The causal arrow from the variable stress to the positive effect of stress indicates that as stress increases, so too does the effect of stress, and the causal arrow from the positive effect of stress to the net interruption resolution rate indicates that as this effect increases, so does the net interruption resolution rate. This portion of the model operationalizes the idea that increasing stress increases performance (i.e., interruption resolution rate). Eventually, the peak of the Yerkes Dodson curve is reached, and increasing stress further causes performance to deteriorate. The insert labeled negative effect of stress describes the downward-sloping portion of the Yerkes Dodson curve, and the causal arrow from negative effect of stress to the net interruption resolution rate indicates that as this effect increases, the interruption resolution rate decreases.
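To make the inverted-U relationship concrete, the sketch below computes stress from the stock of interruptions pending and passes it through an illustrative performance curve. Several choices are assumptions rather than the published formulation: stress is taken as the ratio of the desired to the normal resolution rate, the curve has the form x·exp(1 − x), and the names `rising_factor`, `falling_factor`, `r_max`, and `s_peak` are hypothetical.

```python
import math


def stress(pending: float, desired_resolution_time: float, normal_rate: float) -> float:
    """Stress as desired resolution rate relative to the normal rate (assumed ratio form)."""
    desired_rate = pending / desired_resolution_time
    return desired_rate / normal_rate


def rising_factor(s: float, s_peak: float) -> float:
    """Hypothetical upward-sloping component: effort grows with stress."""
    return s / s_peak


def falling_factor(s: float, s_peak: float) -> float:
    """Hypothetical downward-sloping component: a multiplier that shrinks as stress grows."""
    return math.exp(1.0 - s / s_peak)


def resolution_rate(s: float, r_max: float = 2.0, s_peak: float = 2.0) -> float:
    """Inverted-U performance curve; equals r_max when s == s_peak."""
    return r_max * rising_factor(s, s_peak) * falling_factor(s, s_peak)


for s in (0.5, 1.0, 2.0, 4.0, 8.0):
    print(f"stress={s:4.1f}  resolution rate={resolution_rate(s):.3f}")
```

Splitting the curve into two multiplicative factors mirrors the way Figure 1 separates the upward- and downward-sloping components, although the actual model may combine them differently.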

The links among interruptions pending, the desired resolution rate, stress, the effects of stress, and the net interruption resolution rate form feedback loops that govern the organization’s response to variations in the interruption arrival rate. Figure 1 shows two feedback loops corresponding to the two regions of the Yerkes Dodson curve. To understand the diagram, consider a scenario in which an ED experiences a surge in interruptions compared to the baseline levels during normal operating conditions. The surge constitutes a rapid increase in the interruption arrival rate. These interruptions are not processed and resolved instantaneously, but instead accumulate in the stock of interruptions pending. As the stock of interruptions pending increases, the ED practitioners notice and interpret these interruptions and consequently experience an increase in their desired resolution rate for these pending interruptions. As the desired resolution rate rises above the normal resolution rate, stress (as it is modeled here) increases. Practitioners get more focused, experience autonomic arousal, and ratchet up their performance as in the upward-sloping portion of the Yerkes Dodson curve. In Figure 1, increasing stress causes an increase in the positive effect of stress, which then in turn causes an increase in the interruption resolution rate. With a higher interruption resolution rate the stock of interruptions pending decreases. Beginning with an increase in interruptions pending and tracing the process around to the resulting decrease in interruptions pending, we see that the response represented by this feedback loop offsets, or balances out, the initial increase. We call this a balancing feedback loop and label it in Figure 1 with the letter B for balancing. Balancing feedback loops act to correct deviations, regulate performance, or move systems toward their target conditions. 
Common examples of balancing feedback loops in other contexts include thermostatic control of room temperature, homeostasis, and eating in response to pangs of hunger. Balancing loops bring stability to systems. In the context of this example, the stress-induced enhancement in performance is a stabilizing response that helps the ED to restore normal operating conditions in the wake of the surge of interruptions.

The system response changes considerably when the stress level rises enough to trigger the downward-sloping portion of the Yerkes Dodson curve. Consider an ED experiencing larger or longer-lasting surges than described above, such that the stock of interruptions pending accumulates to a level high enough to push the ED into the region where the negative effects of stress dominate. The increase in stress that results from increases in interruptions pending now causes a decline in the interruption resolution rate, and as the interruption arrival rate continues, the stock of interruptions pending grows still further. Beginning with the increase in interruptions pending and tracing around the feedback loop, we have an increase in the desired resolution rate that causes an increase in stress, which in turn causes more negative effects of stress, reducing the interruption resolution rate and resulting in further increases in the stock of interruptions pending. The response represented by this feedback loop amplifies or reinforces the original increase. We call this a reinforcing feedback loop and label it with the letter R in Figure 1. Reinforcing feedback loops generate growth or decline at increasing rates of change, and they generate instability in systems. Common examples of reinforcing feedback loops include compounding interest, population growth, and the contagious spread of infectious disease. In the context of Figure 1, when stress levels increase, at first the balancing loop determines the overall behavior of the system, but when stress becomes high enough, the reinforcing loop begins to determine the system’s behavior. This transition is a shift in loop dominance and is key to understanding the dynamics of quantity-induced crises. We turn next to simulation experiments to highlight these important dynamics.
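The shift in loop dominance can be read off the slope of the resolution rate with respect to the stock of interruptions pending. The following sketch assumes the same illustrative inverted-U curve used for exposition here (not the published equations), with hypothetical parameters `r_max`, `s_peak`, and `pending_ref`; it labels the regime B when more pending interruptions raise the resolution rate and R when they lower it.

```python
import math


def resolution_rate(pending, r_max=2.0, s_peak=2.0, pending_ref=10.0):
    """Assumed curve: stress = pending / pending_ref, performance = inverted U in stress."""
    x = (pending / pending_ref) / s_peak
    return r_max * x * math.exp(1.0 - x)


def dominant_loop(pending, eps=1e-4):
    """Return 'B' (balancing) or 'R' (reinforcing) from a central-difference slope."""
    slope = (resolution_rate(pending + eps) - resolution_rate(pending - eps)) / (2.0 * eps)
    # A positive slope means extra pending work speeds up resolution, which
    # offsets the increase; a non-positive slope means it slows resolution,
    # which amplifies the increase.
    return "B" if slope > 0 else "R"


for pending in (5.0, 15.0, 25.0, 40.0):
    print(f"pending={pending:5.1f}  dominant loop={dominant_loop(pending)}")
```

With these hypothetical parameters the curve peaks at 20 pending units, so stocks below that level fall in the balancing regime and stocks above it fall in the reinforcing regime.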

SIMULATING THE DYNAMICS OF DISASTER AND CRISIS

We present several simulation experiments using the mathematical model associated with the diagram in Figure 1. Model equations translate the causal logic described into simple algebraic equations. The resulting model is a set of nonlinear integral equations, one for each of the variables shown in the diagram. The full model description and equations are available elsewhere7 or from the authors on request. The experiments we show here begin with the system in equilibrium, which occurs when the interruption arrival rate equals the interruption resolution rate. Starting from these conditions, representing a steady-state or baseline condition, allows for controlled experiments when we perturb the system using a test input.

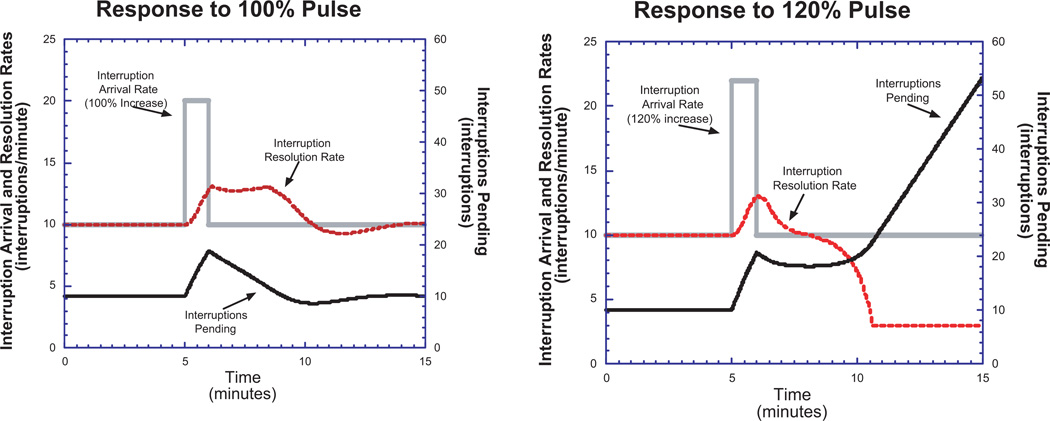

The first experiment tests the system’s response to a temporary, one-time surge in the interruption arrival rate. The left panel of Figure 2 displays the results when the interruption arrival rate is increased by 100% for 1 minute, after which it returns to its original baseline value. The surge in arrivals causes a sharp increase in the stock of interruptions pending, as seen in the solid dark line. The accumulation of interruptions pending increases stress levels, which in turn stimulates an increase in the interruption resolution rate, as seen in the dotted line. By the time the burst in arrivals has subsided, the stock of interruptions pending has accumulated to well above its baseline level. Stress remains somewhat elevated, and interruption resolutions continue at a rate above the rate of interruption arrivals, so the stock of interruptions pending begins to fall and eventually returns to its baseline level. The system returns to its steady state. The balancing feedback between stress and performance dominates behavior, regulating the resolution rate and enabling the system to recover from the surge in interruption arrivals.

Figure 2.

Response to a temporary surge in interruption arrivals. Reprinted from “Disaster Dynamics: Understanding the Role of Quantity in Organizational Collapse,” by Rudolph and Repenning, published in Administrative Science Quarterly (Vol. 47, 2002) by permission of Administrative Science Quarterly.

Next, consider the system’s response to a slightly larger surge in the interruption arrival rate. The right panel of Figure 2 displays the results when the interruption arrival rate is increased by 120% for 1 minute. As before, the increase in interruption arrivals causes a sharp increase in the stock of interruptions pending, increasing stress levels, which in turn increases the interruption resolution rate. However, the stock of interruptions pending grows to somewhat higher levels than in the previous scenario, causing stress to build still further. The negative effects of stress begin to take hold, and the reinforcing feedback loop begins to dominate the benign balancing feedback loop responsible for the adaptive response in the previous scenario. The negative effects of stress cause the interruption resolution rate to fall, and once this rate drops below the interruption arrival rate, the stock of interruptions pending begins to grow. The larger increase in interruption arrivals has pushed the system into the maladaptive region of the Yerkes Dodson curve, and the feedback between stress and performance has become a vicious cycle, propelling the system into a state of collapse.
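The two pulse experiments can be imitated with a toy one-stock simulation. This is a sketch under stated assumptions, not a reproduction of the published model: the inverted-U curve, the parameters `R_MAX`, `S_PEAK`, and `PENDING_REF`, the baseline arrival rate, and the pulse sizes are all hypothetical, so the 100% and 120% surges reported above will not map onto this toy system. The pulses below are deliberately far apart so that the qualitative contrast (recovery versus collapse) is unambiguous.

```python
import math

R_MAX, S_PEAK, PENDING_REF = 2.0, 2.0, 10.0  # hypothetical curve parameters
BASELINE_ARRIVALS = 1.0                       # interruption units per minute


def resolution_rate(pending: float) -> float:
    """Assumed inverted-U curve in stress, where stress = pending / PENDING_REF."""
    x = (pending / PENDING_REF) / S_PEAK
    return R_MAX * x * math.exp(1.0 - x)


def stable_equilibrium(arrivals: float) -> float:
    """Bisection on the upward-sloping branch for resolution_rate(P) == arrivals."""
    lo, hi = 0.0, S_PEAK * PENDING_REF
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if resolution_rate(mid) < arrivals:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def simulate_pulse(pulse_factor, pulse_minutes, total_minutes=300.0, dt=0.01):
    """Start in equilibrium, multiply arrivals by pulse_factor for a while, return final stock."""
    pending = stable_equilibrium(BASELINE_ARRIVALS)
    pulse_start = 10.0
    for i in range(int(round(total_minutes / dt))):
        t = i * dt
        in_pulse = pulse_start <= t < pulse_start + pulse_minutes
        arrivals = BASELINE_ARRIVALS * (pulse_factor if in_pulse else 1.0)
        pending += (arrivals - resolution_rate(pending)) * dt
        pending = max(pending, 0.0)
    return pending


baseline = stable_equilibrium(BASELINE_ARRIVALS)
small = simulate_pulse(pulse_factor=1.5, pulse_minutes=10.0)
large = simulate_pulse(pulse_factor=6.0, pulse_minutes=30.0)
print(f"baseline stock: {baseline:.2f}")
print(f"after small surge: {small:.2f}")
print(f"after large surge: {large:.2f}")
```

In this toy system the small surge is absorbed and the stock returns to baseline, while the large surge leaves the stock growing long after arrivals have returned to normal. Bisecting on `pulse_factor` between the two values would locate the threshold at which a marginally larger surge flips the outcome.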

These experiments highlight several important features of the system’s dynamics. First, the system has two qualitatively different modes of behavior, an adaptive response as in the first scenario and a catastrophic response as in the second scenario. In systems such as this one where loop dominance can shift, proportional logic, such as “more resources in, more performance out,” is not well-suited to understanding the complexities of the dynamic behavior. Second, a small change in the size of the surge in interruptions can precipitate a profoundly different outcome—indeed, the difference between adaptation and crisis. Once the system crosses a critical threshold, or tipping point, the behavior is dominated by a reinforcing loop acting as a vicious cycle that dooms the system to catastrophic collapse. Third, these experiments demonstrate that the consequences of a temporary surge in the rate of interruption arrivals can linger far beyond the short period of the transient increase.

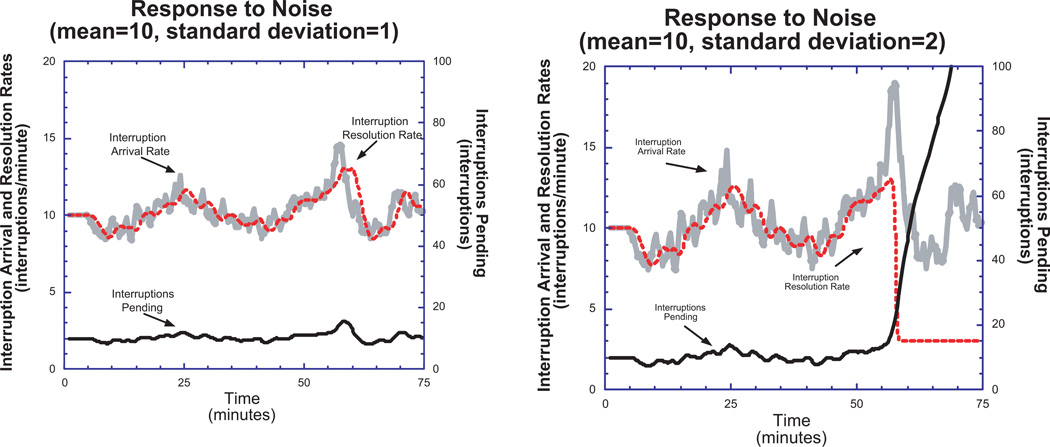

The next set of experiments explores how variability affects system performance. In Figure 3, we show two simulations in which the interruption arrival rate is now a noisy stream varying around a constant mean. The two simulations are identical except that the standard deviation of the arrival rate in the right panel is twice that in the left panel. The results in the left panel show that the system easily accommodates the variation in the interruption arrival rate. The system never crosses the tipping point, despite some significant increases in the arrival rates, so the balancing feedback loop dominates behavior. The system response is adaptive and resilient. Contrast these results with those shown in the right panel where the standard deviation is larger. Here the system responds well for approximately the first 50 minutes, but then a large increase in interruption arrivals pushes the system past the tipping point. The negative effects of stress are activated, and the interruption resolution rate drops below the arrival rate. Interruptions pending and stress grow, reducing performance, leading to further increases in interruptions pending and stress. The vicious cycle of the destabilizing reinforcing loop has been triggered. Monte Carlo analysis (not presented here),7 based on thousands of simulations using different standard deviations of the noise stream, confirms the important point of these experiments: greater variability increases the likelihood that the system will cross the tipping point threshold. Note also that once the system is overloaded beyond the tipping point, performance deteriorates quite rapidly.

Figure 3.

Response to variability in the stream of interruption arrivals. Reprinted from “Disaster Dynamics: Understanding the Role of Quantity in Organizational Collapse,” by Rudolph and Repenning, published in Administrative Science Quarterly (Vol. 47, 2002) by permission of Administrative Science Quarterly.
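A rough Monte Carlo experiment in the same spirit can be sketched as follows. Everything here is an assumption made for illustration: the inverted-U curve and its parameters, the Gaussian arrival noise redrawn once per minute and truncated at zero, the 120-minute horizon, the run count, and the rule that a run counts as a collapse as soon as the stock exceeds the tipping point. The published analysis used its own model and thousands of runs.

```python
import math
import random

R_MAX, S_PEAK, PENDING_REF = 2.0, 2.0, 10.0  # hypothetical curve parameters
MEAN_ARRIVALS = 1.8                           # hypothetical mean, fairly close to R_MAX
PEAK = S_PEAK * PENDING_REF                   # stock level at which performance peaks


def resolution_rate(pending):
    x = (pending / PENDING_REF) / S_PEAK
    return R_MAX * x * math.exp(1.0 - x)


def equilibrium(arrivals, lo, hi, rising):
    """Bisection for resolution_rate(P) == arrivals on one branch of the curve."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        below = resolution_rate(mid) < arrivals
        if below == rising:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


STABLE = equilibrium(MEAN_ARRIVALS, 0.0, PEAK, rising=True)
TIPPING = equilibrium(MEAN_ARRIVALS, PEAK, 50.0 * PEAK, rising=False)


def run_crosses_tipping(std, rng, minutes=120, dt=0.05):
    """Simulate one noisy run; report whether the stock ever exceeds TIPPING."""
    pending = STABLE
    steps_per_minute = int(round(1.0 / dt))
    for _ in range(minutes):
        # Truncating at zero slightly raises the effective mean when std is large.
        arrivals = max(0.0, rng.gauss(MEAN_ARRIVALS, std))
        for _ in range(steps_per_minute):
            pending += (arrivals - resolution_rate(pending)) * dt
            pending = max(pending, 0.0)
            if pending > TIPPING:
                return True
    return False


def collapse_fraction(std, runs=200, seed=0):
    """Share of runs that cross the tipping point for a given noise level."""
    rng = random.Random(seed)
    return sum(run_crosses_tipping(std, rng) for _ in range(runs)) / runs


for std in (1.0, 2.0):
    print(f"std={std:.1f}  fraction of runs crossing the tipping point: {collapse_fraction(std):.3f}")
```

Because a noisy system can briefly exceed the tipping point and then be rescued by a lull in arrivals, counting the first crossing as collapse is a simplification; a stricter criterion would track whether the stock keeps growing afterward.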

The main finding of this analysis is that organizations can have tipping points beyond which their response to interruptions fundamentally changes. Below the tipping point threshold, systems facing ongoing streams of interruption are resilient, readily restoring stability in response to modest changes in the number of interruptions. However, once the threshold is crossed, system performance rapidly deteriorates, leading to crisis conditions. The existence of such tipping points inevitably raises the question of just how much additional interruption the system can tolerate before crossing into crisis mode. Figure 4 helps explain the existence of tipping points and the margin of tolerance beyond which the systems lose their resilience.

Figure 4.

Location of the tipping point under two scenarios for baseline system utilization. Adapted from Rudolph and Repenning.7

Consider first the left panel of Figure 4. The inverted U-shape of the Yerkes Dodson curve maps the relationship between stress on the horizontal axis and performance as represented by the interruption resolution rate on the vertical axis. Note that in the upward-sloping region of the curve, the balancing loop dominates the system’s behavior, whereas in the downward-sloping region, the reinforcing loop dominates. The horizontal line in the diagram depicts the interruption arrival rate and intersects the curve for the resolution rate at two points highlighted with black dots. These two points are both equilibria, because the inflow to the stock (the interruption arrival rate) equals the outflow (the interruption resolution rate). The intersection on the left side of the curve corresponds to the starting conditions in which the inflow equals the outflow in the simulation experiments above. Whenever the interruption resolution rate curve is above the horizontal line, the outflow from the stock exceeds the inflow (just as the rate of water draining from a bathtub exceeds the rate of water flowing into the bathtub) and interruptions pending will be decreasing (just as the level of water in the bathtub will be decreasing). Conversely, whenever the interruption resolution rate curve is below the horizontal line for the interruption arrival rate, the stock of interruptions pending will be increasing. Thus, the arrows in Figure 4 show the direction of the trajectory when the system is not in equilibrium. Beginning at the equilibrium on the left side of the graph, we can see that following a small shift to the right (corresponding to an increase in interruptions and thus an increase in stress), the system’s response brings the resolution rate above the arrival rate, thus reducing the stock of interruptions pending, reducing stress, and restoring the system to its original equilibrium, just as we saw in the first simulation experiment. This intersection occurs in the upward-sloping region of the Yerkes Dodson curve, where the balancing loop is dominant. The equilibrium is a stable one, meaning that the system counteracts small perturbations and returns to equilibrium.

The equilibrium on the right side of the curve occurs in the downward-sloping portion of the Yerkes Dodson curve, a region where the reinforcing loop dominates. This is an unstable equilibrium, because the system amplifies a small perturbation, moving farther away from the equilibrium. In particular, note the direction of the arrows. Once the system reaches the unstable equilibrium on the right, any further increase in stress pushes the system into the region where the resolution rate is less than the arrival rate. The stock of interruptions pending increases, further increasing stress and further reducing the resolution rate. The reinforcing loop dominates the system behavior, just as we saw in the second simulation of Figure 2 where the system was propelled into crisis. The unstable equilibrium on the right is also the tipping point, the threshold that distinguishes the adaptive mode of system response from the catastrophic mode. The farther right this tipping point is relative to normal baseline conditions, the more additional interruptions the system can tolerate before plunging into crisis. To highlight the relationship between the tipping point and normal baseline conditions, we add to Figure 4 a notation for this span and label it “flex” capacity.

The right panel of Figure 4 displays a different scenario. System capability as indicated by the Yerkes Dodson curve is the same, but the interruption arrival rate of baseline conditions is higher, closer to the peak of system performance capability. Consequently, flex capacity now is considerably less. This system is operating under normal conditions much closer to the tipping point, and we would expect that it would take a much smaller surge in interruption arrivals to push this system into the catastrophic crisis beyond the tipping point. We offer the two scenarios in Figure 4 to suggest that the state of EDs across the United States over the past several decades has evolved from a situation more akin to the left panel toward a situation more akin to the right panel. The continuing increase in arrivals that our nation’s EDs have experienced42 constitutes a pushing up of the horizontal line in Figure 4. The fact that chronic overcrowding conditions have not resulted in more catastrophic crises suggests that ED practitioners have often successfully functioned near the peak of the curve. However, the resilient and adaptive ability of our EDs to accommodate these increasing stresses on the system is potentially misleading if we take seriously the implications of the right panel of Figure 4. Our nation’s EDs may be much closer to the tipping point than we realize.
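The geometry of Figure 4 can be approximated numerically by intersecting a horizontal arrival-rate line with an inverted-U curve. The sketch below assumes an illustrative curve of the form x·exp(1 − x) with hypothetical parameters `R_MAX` and `S_PEAK`, and it measures flex capacity as the distance in stress units between the stable equilibrium and the tipping point. The two arrival rates stand in for the left and right panels and are not values taken from the published model.

```python
import math

R_MAX = 2.0   # hypothetical peak resolution rate (interruption units per minute)
S_PEAK = 2.0  # hypothetical stress level at which performance peaks


def resolution_rate(stress: float) -> float:
    """Assumed inverted-U curve; the published model uses its own formulation."""
    x = stress / S_PEAK
    return R_MAX * x * math.exp(1.0 - x)


def bisect(f, lo: float, hi: float, iterations: int = 100) -> float:
    """Root of f on [lo, hi], assuming f changes sign exactly once there."""
    sign_lo = f(lo) > 0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if (f(mid) > 0) == sign_lo:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def equilibria(arrival_rate: float):
    """Return (stable equilibrium, tipping point) as stress levels."""
    if not 0.0 < arrival_rate < R_MAX:
        raise ValueError("two equilibria exist only for 0 < arrival_rate < R_MAX")

    def gap(s):
        return resolution_rate(s) - arrival_rate

    stable = bisect(gap, 0.0, S_PEAK)              # upward-sloping branch
    tipping = bisect(gap, S_PEAK, 100.0 * S_PEAK)  # downward-sloping branch
    return stable, tipping


def flex_capacity(arrival_rate: float) -> float:
    """Span between the stable equilibrium and the tipping point."""
    stable, tipping = equilibria(arrival_rate)
    return tipping - stable


for label, arrivals in (("lower baseline", 1.0), ("higher baseline", 1.8)):
    stable, tipping = equilibria(arrivals)
    print(f"{label}: stable={stable:.2f}, tipping={tipping:.2f}, flex={tipping - stable:.2f}")
```

Raising the arrival-rate line moves the stable equilibrium to the right and the tipping point to the left at the same time, which is why flex capacity shrinks from both ends as baseline utilization approaches the peak of the curve.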

IMPLICATIONS FOR EMERGENCY MEDICINE

The model and analyses presented here draw on data from the study of large-scale disasters, so a major limitation of this work is that its applications to EM are speculative. Nevertheless, there are compelling parallels between the feedback structure of the disaster dynamics posited here and the sociotechnical environments of our EDs. Interruptions, which figure prominently in the quantity-induced crises we have been discussing, are a quintessential feature of the practice of EM.15

We conclude in this section with some observations about how the lessons from the study of disaster might apply to EM. First and foremost, EDs, like the organizations modeled in our analysis, are sophisticated and interconnected systems that face an unpredictable stream of demands for their services. The major finding of the above analysis is that such systems are prone to tipping. As long as mounting stress on the individuals, groups, or the system as a whole eventually leads to degradation of performance, the system will have a tipping point. Once the ED crosses a critical threshold of some combination of census, workload, confusion, access block, pending radiologic and laboratory results, and accumulated interruptions, the reinforcing feedback dynamics of the vicious cycle can lead rather quickly to the collapse of normal operating routines and in the extreme to a crisis of organizational paralysis. One account of such a fundamental shift in the operating regime of an ED describes the status beyond the tipping point as one of “freefall.”43

The analysis here underscores the importance of quantity rather than novelty as an antecedent of crisis. For EM, the message is that increasing volumes of otherwise routine patient arrivals and commonplace interruptions in the ED may be a greater threat than is often appreciated. EDs have well-developed protocols for responding to mass casualty incidents, and providers train to manage and deliver care effectively when they occur. These protocols often trigger a flexing up of resources that brings additional providers and ancillary personnel to the trauma bays or other sites. EDs can use these protocols to address the challenges of mass casualty incidents because such incidents are readily identified. A quantity-induced overload, on the other hand, carries no such special designation and gives no clear signal that it might be occurring. More attention is needed to the identification, prevention, and management of scenarios based on a high quantity of non-novel interruptions in the ED.

A second important implication for EM is that practitioners may be lulled into a false sense of capability by their experiences in the precrisis mode. The curvilinear relationship of performance and stress suggests that practitioners and departments have some latent ability to ratchet up their performance when conditions require. The experiences of providers in successfully managing overload conditions confirm their belief in their ability to handle such trying conditions, when in fact they could be operating dangerously close to the tipping point. Such lessons learned may breed an artificial sense that the system is safely distant from a crisis beyond the tipping point, especially when coupled with overconfidence bias,44 which may be even more extreme among ED practitioners who are attracted to the intensity of such demanding situations. However, tipping points are not only unexpected, but also trigger a highly nonlinear response that once started is extremely challenging to stop.

Third, managing an impending crisis presents a challenge that is more difficult and subtle than most theoretical analyses and practical wisdom acknowledge. The ability to recognize an impending crisis likely declines as pending interruptions accumulate and consume cognitive resources. Moreover, even when people recognize an impending crisis, attempts to fashion a response may actually make things worse. One response advocated by some organizational theorists and in studies of error prevention is to enlarge the repertoire of responses by stepping back, reframing, and engaging in higher-order evaluation such as double-loop learning.45,46 Such responses may be useful and adaptive when the novelty of the situation is the challenge, but they can be counterproductive when the crisis is driven by non-novel quantity. Under such conditions, unquestioned adherence to preexisting routines may be the best response to the accumulation of pending interruptions. Worse, many real-world crises combine elements of both novelty and quantity (e.g., Weick’s description of the Mann Gulch fire47), so striking a balance between confident adherence to learned responses and shifting gears to explore novel alternatives becomes critical to adaptively meeting the challenge.22,31

Finally, at a higher level of analysis, we note that the nation’s EM system seems to be moving toward increasingly risky baseline conditions. The chronic overcrowding of our nation’s EDs has become somewhat commonplace, with the likely implication that many EDs have severely limited flex capacity to tolerate upticks in routine demand for services. Pushing our nation’s EDs to see how far they can stretch is a form of hill-climbing search. In a hill-climbing search for the peak, we start climbing (i.e., increasing the value of some variable), check whether our most recent step has been upward, and if so continue climbing. If not, we stop or go back a notch. Such a method works well in many situations, such as titrating the dose of a drug until the desired response is achieved. However, hill climbing is a potentially disastrous approach in a landscape prone to tipping, because the consequences of crossing the threshold are often irreversible. The Institute of Medicine characterized our EM system as “At the Breaking Point.”42 We need to avoid succumbing to the trap of using hill climbing as the means to discover that breaking point.
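
A minimal sketch may help show why going back a notch can fail in a system with a tipping point. The code below is an illustration under stated assumptions, not a model of any real ED: `resolution_rate` is a hypothetical inverted-U curve with made-up parameters `R_MAX` and `S_PEAK`, stress is taken to be proportional to the stock of pending interruptions, and the step size and observation window in `hill_climb` are arbitrary. The climber raises the arrival rate one step at a time, lets the system run, and keeps climbing while observed throughput improves. When throughput falls, it restores the previous arrival rate.

```python
import math

# Hypothetical parameters for illustration; not estimates from any ED.
R_MAX = 10.0   # peak resolution rate (interruptions per unit time)
S_PEAK = 20.0  # stock of pending interruptions at which performance peaks


def resolution_rate(pending):
    """Assumed inverted-U curve; stress is taken to be pending / S_PEAK."""
    x = pending / S_PEAK
    return R_MAX * x * math.exp(1.0 - x)


def run(pending, arrival, window=60.0, dt=0.01):
    """Let the stock evolve for one observation window (Euler integration)."""
    for _ in range(int(window / dt)):
        pending = max(pending + dt * (arrival - resolution_rate(pending)), 0.0)
    return pending


def hill_climb(start_arrival=3.0, step=2.0, max_steps=20):
    """Raise arrivals while throughput improves; back off one notch when it drops.

    Returns a list of (arrival rate, pending stock, throughput) observations.
    """
    arrival = start_arrival
    pending = run(0.0, arrival)
    best = resolution_rate(pending)
    history = [(arrival, pending, best)]
    for _ in range(max_steps):
        pending = run(pending, arrival + step)  # try the next notch up
        throughput = resolution_rate(pending)
        history.append((arrival + step, pending, throughput))
        if throughput <= best:
            # Throughput fell, so return to the previous arrival rate.
            pending = run(pending, arrival)
            history.append((arrival, pending, resolution_rate(pending)))
            break
        arrival += step
        best = throughput
    return history


history = hill_climb()
for arrival, pending, throughput in history:
    print(f"arrival={arrival:5.1f}  pending={pending:8.1f}  throughput={throughput:6.2f}")
```

In this sketch the final step restores an arrival rate that the system had previously handled, yet the backlog keeps growing, because the stock of pending interruptions has already moved beyond the tipping point for that arrival rate.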

Acknowledgments

Funding for this conference was made possible (in part) by 1R13HS020139-01 from the Agency for Healthcare Research and Quality (AHRQ). The views expressed in written conference materials or publications and by speakers and moderators do not necessarily reflect the official policies of the Department of Health and Human Services, nor does mention of trade names, commercial practices, or organizations imply endorsement by the U.S. Government. This issue of Academic Emergency Medicine is funded by the Robert Wood Johnson Foundation.

Footnotes

The authors have no potential conflicts of interest to disclose.

References

- 1. Cook RI, Woods DD. Operating at the sharp end: the complexity of human error. In: Bogner MS, editor. Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum; 1994. pp. 255–310.

- 2. Vaughan D. The Challenger Launch Decision: Risky Technology, Culture, and Deviance at NASA. Chicago, IL: University of Chicago Press; 1996.

- 3. Weick KE. The Vulnerable System: An Analysis of the Tenerife Air Disaster. In: Roberts KH, editor. New Challenges to Understanding Organizations. New York, NY: MacMillan; 1993. pp. 173–198.

- 4. Shrivastava P, Mitroff I, Miller D, Miglani A. Understanding industrial crises. J Manag Stud. 1988;25:285–303.

- 5. Perrow C. Normal Accidents: Living with High Risk Systems. New York, NY: Basic Books; 1984.

- 6. Staw BM, Sandelands LE, Dutton JE. Threat-rigidity effects in organizational behavior: a multi-level analysis. Admin Sci Q. 1981;26:501–524.

- 7. Rudolph JW, Repenning NR. Disaster dynamics: understanding the role of stress and interruptions in organizational collapse. Admin Sci Q. 2002;47:1–30.

- 8. Reason J. Managing the Risks of Organizational Accidents. Aldershot, England: Ashgate Publishing Limited; 1997.

- 9. Derlet RW, Richards JR. Overcrowding in the nation’s emergency departments: complex causes and disturbing effects. Ann Emerg Med. 2000;35:63–68. doi: 10.1016/s0196-0644(00)70105-3.

- 10. Goldberg C. Emergency crews worry as hospitals say, ‘No vacancy.’ New York Times. Available at: http://www.nytimes.com/2000/12/17/us/emergency-crews-worry-as-hospitals-say-no-vacancy.html. Accessed Sep 18, 2011.

- 11. Kellermann AL. Déjà vu. Ann Emerg Med. 2000;35:83–85. doi: 10.1016/s0196-0644(00)70110-7.

- 12. Zwemer FL. Emergency department overcrowding. Ann Emerg Med. 2000;36:279. doi: 10.1067/mem.2000.109261.

- 13. U.S. General Accounting Office. Hospital Emergency Departments: Crowded Conditions Vary Among Hospitals and Communities. Washington, DC: US General Accounting Office; 2003. p. 71.

- 14. Kellermann AL. Crisis in the emergency department. N Engl J Med. 2006;355:1300–1303. doi: 10.1056/NEJMp068194.

- 15. Chisholm CD, Collison EK, Nelson DR, Cordell WH. Emergency department workplace interruptions: are emergency physicians “interrupt-driven” and “multitasking”? Acad Emerg Med. 2000;7:1239–1243. doi: 10.1111/j.1553-2712.2000.tb00469.x.

- 16. Brixey JJ, Robinson DJ, Turley JP, Zhang J. The roles of MDs and RNs as initiators and recipients of interruptions in workflow. Int J Med Informat. 2010;79:e109–e115. doi: 10.1016/j.ijmedinf.2008.08.007.

- 17. Chisholm CD, Dornfeld AM, Nelson DR, Cordell WH. Work interrupted: a comparison of workplace interruptions in emergency departments and primary care offices. Ann Emerg Med. 2001;38:146–151. doi: 10.1067/mem.2001.115440.

- 18. Kohn L, Corrigan J, Donaldson M, editors. To Err is Human: Building a Safer Health System. Washington, DC: National Academies Press; 1999.

- 19. Cooper C, Rout U, Faragher B. Mental health, job satisfaction, and job stress among general practitioners. BMJ. 1989;298:366–370. doi: 10.1136/bmj.298.6670.366.

- 20. Barry J. Sea of lies. Newsweek. Available at: http://www.thedailybeast.com/newsweek/1992/07/12/sea-of-lies.html. Accessed Sep 18, 2011.

- 21. Klein G. Sources of Power. Cambridge, MA: MIT Press; 1998.

- 22. Roberts NC, Dotterway KA. The Vincennes incident: another player on the stage? Def Anal. 1995;11:31–45.

- 23. U.S. Senate Committee on Armed Services. Investigation into the Downing of an Iranian Airliner by the USS Vincennes. Washington, DC: U.S. Government Printing Office; 1988.

- 24. Collyer SC, Malecki GS. Tactical decision making under stress: history and overview. In: Cannon-Bowers JA, Salas E, editors. Making Decisions Under Stress: Implications for Individual and Team Training. Washington, DC: American Psychological Association; 1998. pp. 3–15.

- 25. Mandler G. Stress and thought processes. In: Goldberger L, Breznitz S, editors. Handbook of Stress. New York, NY: Free Press; 1982. pp. 88–164.

- 26. Louis MR, Sutton RI. Switching cognitive gears: from habits of mind to active thinking. Human Relations. 1991;44:55–76.

- 27. Forrester JW. Industrial Dynamics. Cambridge, MA: Productivity Press; 1961.

- 28. Sterman JD. Business Dynamics: Systems Thinking and Modeling for a Complex World. Chicago, IL: Irwin-McGraw Hill; 2000.

- 29. Kunzig R. The carbon bathtub. Nat Geograph. 2009;216:26–29.

- 30. Booth-Sweeney L, Sterman JD. Bathtub dynamics: initial results of a systems thinking inventory. Sys Dynam Rev. 2000;16:249–286.

- 31. Boulding KE. The consumption concept in economic theory. Am Econ Rev. 1945;35:1–14.

- 32. De Keyser V, Woods DD. Fixation errors: failures to revise situation assessment in dynamic and risky systems. In: Colombo G, de Bustamante AS, editors. Systems Reliability Assessment. Amsterdam: Kluwer; 1990. pp. 231–251.

- 33. Gentner D, Stevens AL. Mental Models. Hillsdale, NJ: Lawrence Erlbaum; 1983.

- 34. Rudolph J, Morrison JB, Carroll J. Confidence, Error, and Ingenuity in Diagnostic Problem Solving: Clarifying the Role of Exploration and Exploitation. In: Solomon GT, editor. Best Paper Proceedings of the Sixty-Seventh Annual Meeting of the Academy of Management; Philadelphia, PA. 2007.

- 35. Raufaste E, Eyrolle H, Marine C. Pertinence generation in radiological diagnosis: spreading activation and the nature of expertise. Cognitive Sci. 1998;22:517–546.

- 36. Yerkes RM, Dodson JD. The relation of strength of stimulus to rapidity of habit formation. J Comp Neurolog Psychology. 1908;18:459–482.

- 37. Sullivan SE, Bhagat RS. Organizational stress, job satisfaction and job performance: where do we go from here? J Manage. 1992;18:353.

- 38. Coles MG. Physiological activity and detection: the effects of attentional requirements and the prediction of performance. Biol Psychol. 1974;2:113–125. doi: 10.1016/0301-0511(74)90019-2.

- 39. Miller JG. Living Systems. New York, NY: McGraw-Hill; 1978.

- 40. Teigen KH. Yerkes-Dodson: a law for all seasons. Theory Psych. 1994;4:525–547.

- 41. Fisher S. Stress and Strategy. London: Lawrence Erlbaum; 1986.

- 42. Institute of Medicine. Hospital-Based Emergency Care: At the Breaking Point. Washington, DC: National Academies Press; 2006.

- 43. Wears RL, Perry SJ. Free fall - a case study of resilience, its degradation, and recovery, in an emergency department. In: Hollnagel E, Rigaud E, editors. 2nd International Symposium on Resilience Engineering; Mines Paris Les Presses; Juan-les-Pins, France. 2006. pp. 325–332.

- 44. Plous S. The Psychology of Judgment and Decision Making. New York, NY: McGraw-Hill; 1993.

- 45. Weick KE, Sutcliffe KM, Obstfeld D. Organizing for high reliability: processes of collective mindfulness. In: Sutton RI, Staw BM, editors. Research in Organizational Behavior. Stamford, CT: JAI Press; 1999. pp. 81–123.

- 46. Argyris C, Schon DA. Theory in Practice: Increasing Professional Effectiveness. London: Jossey-Bass; 1974.