Abstract

Objective:

To evaluate the effect of simulation-based mastery learning (SBML) on internal medicine residents' lumbar puncture (LP) skills, to assess the LP skills that neurology residents acquired through traditional clinical education, and to compare the results of SBML with those of traditional clinical education.

Methods:

This study used a pretest-posttest design with a comparison group. Fifty-eight postgraduate year (PGY) 1 internal medicine residents received an SBML intervention in LP. Residents completed a baseline skill assessment (pretest) using a 21-item LP checklist. After a 3-hour session featuring deliberate practice and feedback, residents completed a posttest and were required to meet or exceed a minimum passing score (MPS) set by an expert panel. Simulator-trained residents' pretest and posttest scores were compared to assess the impact of the intervention. Thirty-six PGY2, 3, and 4 neurology residents from 3 medical centers completed the same simulated LP assessment without SBML. SBML posttest scores were compared with neurology residents' baseline scores.

Results:

PGY1 internal medicine residents' mean checklist scores improved from 46.3% to 95.7% after SBML (p < 0.001), and all met the MPS at the final posttest. The performance of traditionally trained neurology residents was significantly lower than that of simulator-trained residents (mean 65.4%, p < 0.001), and only 6% met the MPS.

Conclusions:

Residents who completed SBML showed significant improvement in LP procedural skills. Few neurology residents were competent to perform a simulated LP despite clinical experience with the procedure.

Lumbar puncture (LP) is commonly performed by physicians-in-training who often learn vicariously by observing procedures performed by peers. This method leads to uneven skill acquisition and trainee discomfort.1

The American Board of Psychiatry and Neurology,2 the American Academy of Neurology,3 and the Accreditation Council for Graduate Medical Education4 have no formal policies to ensure the competence of neurology residents in performing LP. The American Board of Internal Medicine does not require procedural competence in LP but advises the use of simulation training before procedures are performed on patients.5 Despite this, many residents will ultimately practice in a setting where competence in LP is required.

Simulation technology increases procedural skill by providing the opportunity for deliberate practice in a safe environment.6 Researchers at Northwestern University use simulation-based education to train medical residents to mastery skill levels in procedures such as central venous catheter insertion,7–9 thoracentesis,10 and advanced cardiac life support.11 Mastery learning is a stringent form of competency-based education that requires trainees to acquire clinical skill measured against a fixed achievement standard.12 In mastery learning, educational practice time varies but results are uniform. This approach improves patient care outcomes13,14 and is more effective than clinical training alone.15

The current study had 3 aims: first, to evaluate the impact of a simulation-based mastery learning (SBML) intervention on internal medicine residents' LP skills; second, to assess the LP skills that neurology residents acquired through traditional clinical education; and third, to compare SBML with traditional clinical education for LP skill acquisition.

METHODS

Design.

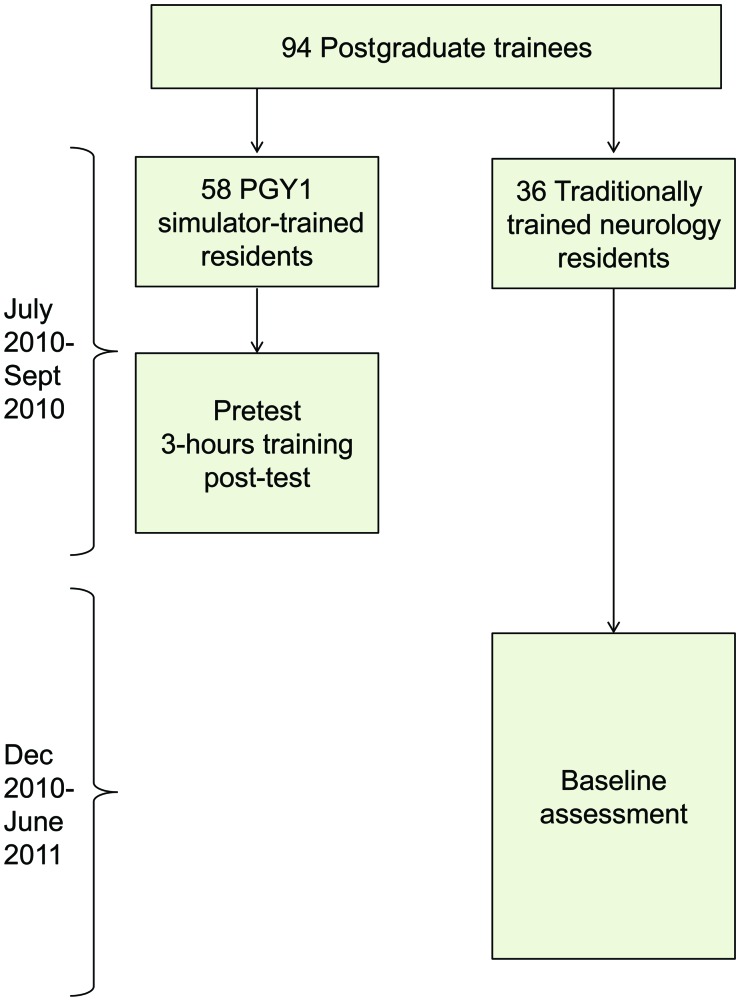

This study of an SBML intervention in LP performance used a pretest-posttest design with a comparison group.16 Postgraduate year (PGY) 1 internal medicine residents who received the intervention were compared with PGY2–4 neurology residents who received clinical training alone. Internal medicine residents in the intervention (simulator-trained) group underwent a pretest in LP clinical skills using a simulator, completed an SBML educational intervention, and then completed a skills posttest. PGY2–4 neurology residents (traditionally trained) completed an LP clinical skills examination (baseline test) and served as the comparison group (figure 1). Traditional training was defined as conventional clinical neurology residency education at the participating institutions.3,4

Figure 1. Flow diagram of study design.

PGY = postgraduate year.

Setting and participants.

Intervention group participants were all PGY1 internal medicine residents (n = 58) at Northwestern University from July–September 2010. PGY2–4 neurology residents (n = 49) at 3 university-affiliated academic centers in Chicago, IL, were eligible to serve as the traditionally trained comparison group. Neurology residents from Northwestern University were not eligible to participate in the study because they previously received simulation-based education in LP. Participating neurology residents underwent baseline testing from December 2010 to June 2011.

Standard protocol approvals, registrations, and patient consents.

The Northwestern University Institutional Review Board approved the study, and all participants provided informed consent before participating.

Procedure.

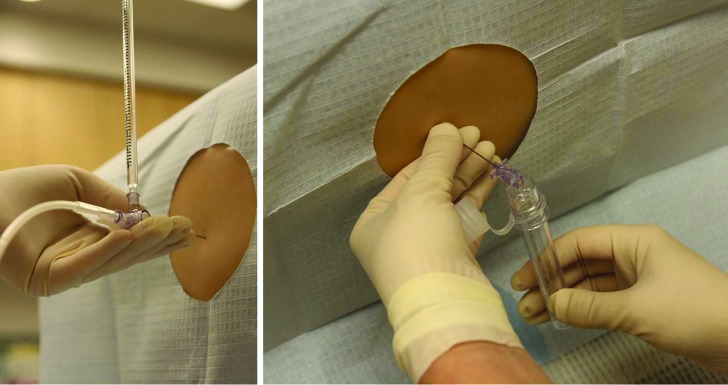

This study was conducted using an LP simulator from Kyoto Kagaku (Torrance, CA),17 which costs approximately $2,000. This LP simulator represents a patient's lower torso and closely resembles lumbar spinal anatomy. The skin and tissues provide lifelike resistance to a spinal needle and allow trainees to measure an opening pressure and collect CSF (figure 2).

Figure 2. Lumbar puncture (LP) simulator.

Left: Trainee measuring an opening pressure on the LP simulator. Right: Trainee collecting spinal fluid from the LP simulator.

Simulator-trained PGY1 internal medicine residents underwent a clinical skills examination using a 21-item LP performance checklist (pretest). Subsequently, they completed a 3-hour education session featuring the New England Journal of Medicine video on LP,18 an interactive LP demonstration, and deliberate practice with directed feedback.19 All participants were required to complete the educational program regardless of pretest performance. A senior faculty member (J.H.B.) with expertise in SBML and LP supervised all sessions. Immediately after the educational intervention, simulator-trained residents were required to meet or exceed a minimum passing score (MPS) on a clinical skills examination (posttest) using the checklist. Residents who did not achieve the MPS engaged in more deliberate practice and were retested until the MPS was reached—the key feature of mastery learning.12 Traditionally trained PGY2–4 neurology residents completed a baseline test in LP using the simulator and 21-item checklist. All skills examinations were video recorded. Residents were blind to individual items on the checklist during testing and training sessions.
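The retest rule described above (posttest, then further deliberate practice and retesting until the MPS is met) can be sketched as a simple loop. This is a minimal illustration, not the study's protocol: `mastery_cycle`, the caller-supplied `test` and `practice` callables, and the `max_attempts` safety cap are hypothetical names introduced here, and scores are assumed to be checklist percentages.

```python
from typing import Callable, List

MPS = 85.0  # minimum passing score (%), the value reported in the Results


def mastery_cycle(test: Callable[[int], float],
                  practice: Callable[[int], None],
                  mps: float = MPS,
                  max_attempts: int = 10) -> List[float]:
    """Hypothetical sketch of the mastery-learning retest rule.

    test(attempt)     -> checklist score (%) on posttest number `attempt`
    practice(attempt) -> additional deliberate practice with feedback
    Returns every posttest score, ending with the first one >= mps.
    """
    scores: List[float] = []
    for attempt in range(1, max_attempts + 1):
        score = test(attempt)
        scores.append(score)
        if score >= mps:      # "meet or exceed" the MPS: training ends
            return scores
        practice(attempt)     # otherwise practice more, then retest
    # Safety cap for this sketch only; mastery learning lets practice time vary.
    raise RuntimeError("MPS not reached within max_attempts")


# Usage with made-up scores: fails the first posttest, passes the retest.
scripted = {1: 80.9, 2: 90.5}
print(mastery_cycle(lambda a: scripted[a], lambda a: None))  # [80.9, 90.5]
```

The fixed outcome (reaching the MPS) and the variable number of loop iterations mirror the mastery-learning principle that practice time varies while results are uniform.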

Measurement.

We developed the 21-item LP checklist using relevant sources18,20 and rigorous step-by-step methods.7–11,21,22 Each skill or action was listed in order and given equal weight using a dichotomous scoring system (done correctly or not done correctly). The checklist was designed by 1 author with expertise in LP (J.H.B.) and reviewed for completeness and accuracy by 3 authors with expertise in LP, simulation-based education, and checklist design (T.S., E.R.C., D.B.W.). We pilot-tested the checklist with 5 medical educators who were not study subjects to estimate reliability and face validity. The final checklist reflected consensus among the authors.
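Because each of the 21 items is scored dichotomously and weighted equally, a checklist score is simply the percentage of items done correctly. The sketch below (function names are hypothetical) also shows an arithmetic consequence of the 85% MPS reported in the Results: 17 of 21 items is 80.95%, so a resident must perform at least 18 items (85.71%) correctly to pass.

```python
N_ITEMS = 21  # items on the LP checklist


def checklist_score(items):
    """Percent of checklist items done correctly; each item carries equal weight.

    `items` is a sequence of 21 booleans (True = done correctly).
    """
    if len(items) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items, got {len(items)}")
    return 100.0 * sum(items) / N_ITEMS


def min_items_to_pass(mps, n_items=N_ITEMS):
    """Smallest number of correct items whose percentage meets or exceeds mps."""
    return next(k for k in range(n_items + 1) if 100.0 * k / n_items >= mps)


print(round(checklist_score([True] * 17 + [False] * 4), 2))  # 80.95
print(min_items_to_pass(85.0))                               # 18
```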

A multidisciplinary panel of 10 clinical experts determined the MPS using the Angoff (item-based) and Hofstee (group-based) standard setting methods.23,24 The panel was composed of Northwestern University faculty members board certified in neurology (n = 2), anesthesiology (n = 2), emergency medicine (n = 3), and internal medicine (n = 3). Each panelist received instruction in standard setting and used the Angoff and Hofstee methods to assign pass/fail standards. The mean of the Angoff and Hofstee scores was used as the final MPS.24
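The way the two standard-setting methods combine can be sketched as follows. The sketch assumes the textbook form of each method, since the panel's worksheets are not described here. In the Angoff method, each judge estimates the probability that a borderline resident performs each item correctly. In the Hofstee method, the judges' averaged acceptable ranges for the cut score (`k_min` to `k_max`) and the failure rate (`f_min` to `f_max`) define a line, and the cut score is where that line intersects the observed failure-rate curve. All function names and example numbers are hypothetical.

```python
from statistics import mean


def angoff_cut(ratings):
    """ratings[j][i]: judge j's probability (0-1) that a borderline resident
    does checklist item i correctly. Returns the panel's cut score in percent."""
    return 100.0 * mean(mean(judge) for judge in ratings)


def hofstee_cut(scores, k_min, k_max, f_min, f_max, steps=1000):
    """Hofstee compromise: scan cut scores c from k_min to k_max (percent,
    k_max > k_min) and return the first c at which the observed fail rate
    (share of scores below c) reaches the line from (k_min, f_max) to
    (k_max, f_min). Fail rates are fractions; inputs are judge averages."""
    for s in range(steps + 1):
        c = k_min + (k_max - k_min) * s / steps
        fail_rate = sum(x < c for x in scores) / len(scores)
        line = f_max + (f_min - f_max) * (c - k_min) / (k_max - k_min)
        if fail_rate >= line:
            return c
    return k_max  # curves do not cross inside the range: use the upper bound


def final_mps(angoff, hofstee):
    """Final MPS: mean of the Angoff and Hofstee cut scores."""
    return (angoff + hofstee) / 2


# Hypothetical inputs: 2 judges x 3 items, and 5 observed scores (%).
a = angoff_cut([[0.8, 0.9, 1.0], [0.9, 0.9, 0.9]])
h = hofstee_cut([60, 70, 80, 90, 95], k_min=80, k_max=95, f_min=0.0, f_max=0.5)
print(round(a, 3), round(h, 3), round(final_mps(a, h), 3))
```

Scanning a fine grid keeps the Hofstee step transparent: the observed fail rate can only rise as the cut increases while the judges' line falls, so the first grid point where the fail rate reaches the line approximates their intersection.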

A second rater rescored a 50% random sample of video recorded clinical skills examinations using the 21-item checklist to assess inter-rater reliability. This examiner was blind to examinee group assignment, pre/posttest status, and the aims of the study.
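A sketch of the rescoring sample and the item-level agreement calculation follows. It assumes that each rater's scores are stored as booleans (True = item done correctly) for the same set of rescored examinations. This section does not state which kappa variant underlies the κn reported in the Results. The code therefore shows Cohen's kappa alongside the free-marginal kappa of Brennan and Prediger (the latter is an assumption about the κn notation), because the two can diverge sharply on items that nearly every resident performs correctly. Function names and example data are hypothetical.

```python
import random
from statistics import mean


def sample_for_rescoring(exam_ids, fraction=0.5, seed=None):
    """Random sample of recorded examinations for a second, blinded rater."""
    rng = random.Random(seed)
    return rng.sample(list(exam_ids), k=round(len(exam_ids) * fraction))


def cohen_kappa(r1, r2):
    """Cohen's kappa for two raters' dichotomous scores on one checklist item."""
    n = len(r1)
    p_o = sum(a == b for a, b in zip(r1, r2)) / n  # observed agreement
    p1, p2 = sum(r1) / n, sum(r2) / n              # each rater's "correct" rate
    p_e = p1 * p2 + (1 - p1) * (1 - p2)            # chance agreement
    # p_e == 1 only when both raters give one identical constant score.
    return 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)


def free_marginal_kappa(r1, r2, categories=2):
    """Brennan-Prediger kappa: chance agreement fixed at 1/categories."""
    p_o = sum(a == b for a, b in zip(r1, r2)) / len(r1)
    return (p_o - 1 / categories) / (1 - 1 / categories)


def mean_item_kappa(rater1, rater2, kappa=cohen_kappa):
    """rater1[e][i]: rater 1's score for examination e, item i. Mean over items."""
    n_items = len(rater1[0])
    return mean(kappa([row[i] for row in rater1], [row[i] for row in rater2])
                for i in range(n_items))


# One item scored on 4 examinations: raters agree on 3 of 4.
r1, r2 = [True, True, True, False], [True, True, True, True]
print(cohen_kappa(r1, r2), free_marginal_kappa(r1, r2))  # 0.0 0.5
```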

Participants provided demographic data including age, gender, training program affiliation, and year of training. Each resident assessed procedural confidence on a self-rating scale (0 = not confident to 100 = very confident) and provided information about experience performing LP (number of procedures performed in actual clinical care).

Primary outcome measures were performance of simulator-trained and traditionally trained residents on the clinical skills examinations. Secondary outcome measures were correlations between clinical skills examination performance and resident demographics including year of training, self-confidence, and prior LP experience.

Data analysis.

Checklist score reliability was estimated by calculating interrater reliability as the mean kappa coefficient across all checklist items. Internal medicine residents' mean pretest and posttest checklist scores were compared using a paired t test. Mean internal medicine posttest scores were compared with mean neurology baseline scores using an independent-samples t test. χ² tests were used to compare performance on individual checklist items between the 2 groups. Multiple linear regression was used to evaluate between-group differences in posttest (internal medicine) and baseline test (neurology) scores while controlling for age, gender, year of training, self-confidence, and LP procedure experience. Multiple linear regression was also used to assess relationships within groups. In simulator-trained internal medicine residents, we measured associations between posttest clinical skills examination performance and age, gender, reported self-confidence, LP procedure experience, and pretest performance. In traditionally trained neurology residents, we measured associations between baseline clinical skills examination performance and age, gender, year of training, institution affiliation, reported self-confidence, and LP procedure experience.
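The two t tests and the item-level χ² comparison can be sketched with the Python standard library. This is a minimal illustration under stated assumptions. It computes test statistics and degrees of freedom only; p-values require the t or χ² distribution, for example from `scipy.stats.ttest_rel`, `scipy.stats.ttest_ind`, or `scipy.stats.chi2_contingency`. It assumes the pooled-variance (Student) form of the independent t test. The χ² shown is the uncorrected Pearson statistic for a 2 × 2 table; this section does not state whether a continuity correction was applied. Function names and data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired t statistic for the same residents' pretest and posttest scores."""
    d = [b - a for a, b in zip(pre, post)]
    n = len(d)
    return mean(d) / (stdev(d) / sqrt(n)), n - 1  # (t, df)


def independent_t(x, y):
    """Student's t with pooled variance for two independent groups."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * stdev(x) ** 2 + (ny - 1) * stdev(y) ** 2) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, nx + ny - 2


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for a 2 x 2 table:
    rows = groups, columns = item done correctly / not done correctly."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Hypothetical data only.
print(paired_t([40, 50, 45], [90, 95, 100]))
print(independent_t([1, 2, 3], [4, 5, 6]))
print(chi2_2x2(10, 0, 0, 10))  # 20.0
```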

RESULTS

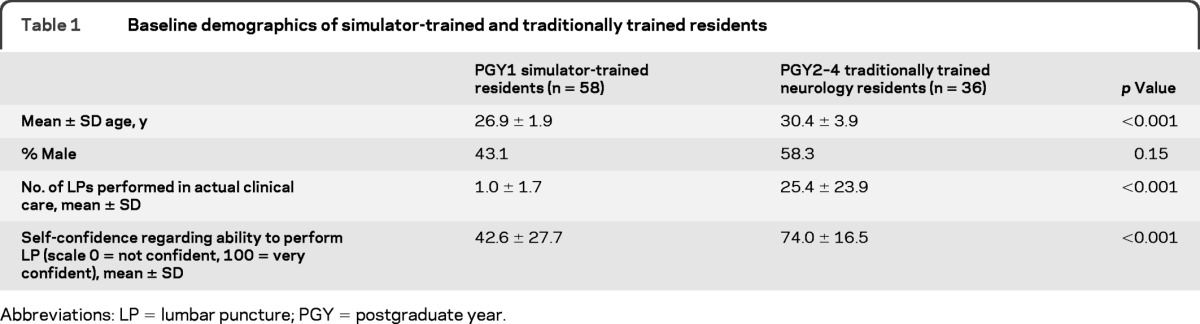

All 58 internal medicine residents participated in the study and completed the entire protocol. Thirty-six of 49 (73%) eligible neurology residents participated and completed the entire protocol; the remaining 13 (27%) did not participate because of scheduling difficulties. Fourteen PGY2, 11 PGY3, and 11 PGY4 neurology residents enrolled in the study. Table 1 shows baseline demographics of all 94 residents.

Table 1.

Baseline demographics of simulator-trained and traditionally trained residents

Abbreviations: LP = lumbar puncture; PGY = postgraduate year.

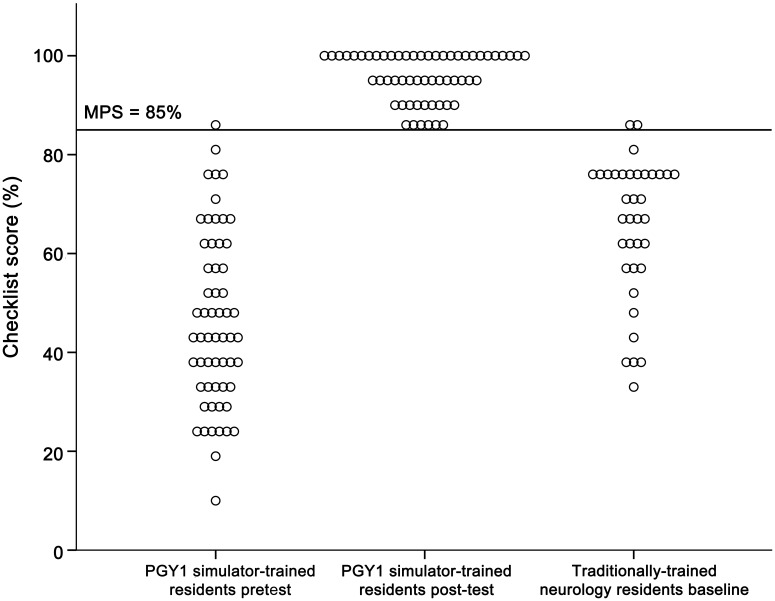

Interrater reliability was high across the 21 checklist items (mean κn = 0.75). The expert panel set the MPS for the clinical skills examination at 85%. One of 58 (2%) PGY1 internal medicine residents met the MPS at pretest. Fifty-five of 58 (95%) met the MPS at posttest after the 3-hour intervention. The 3 PGY1 residents who did not meet the MPS at the immediate posttest reached it within 1 hour of further training. In the traditionally trained group of PGY2–4 neurology residents, 2 of 36 (6%) met or exceeded the MPS at baseline testing. Figure 3 shows internal medicine and neurology residents' clinical skills examination scores.

Figure 3. Clinical skills examination (checklist) performance.

Clinical skills examination (checklist) pretest and final posttest performance of 58 first-year simulator-trained internal medicine residents and baseline performance of 36 traditionally trained neurology residents. Three internal medicine residents did not meet the minimum passing score (MPS) at the initial posttest. PGY = postgraduate year.

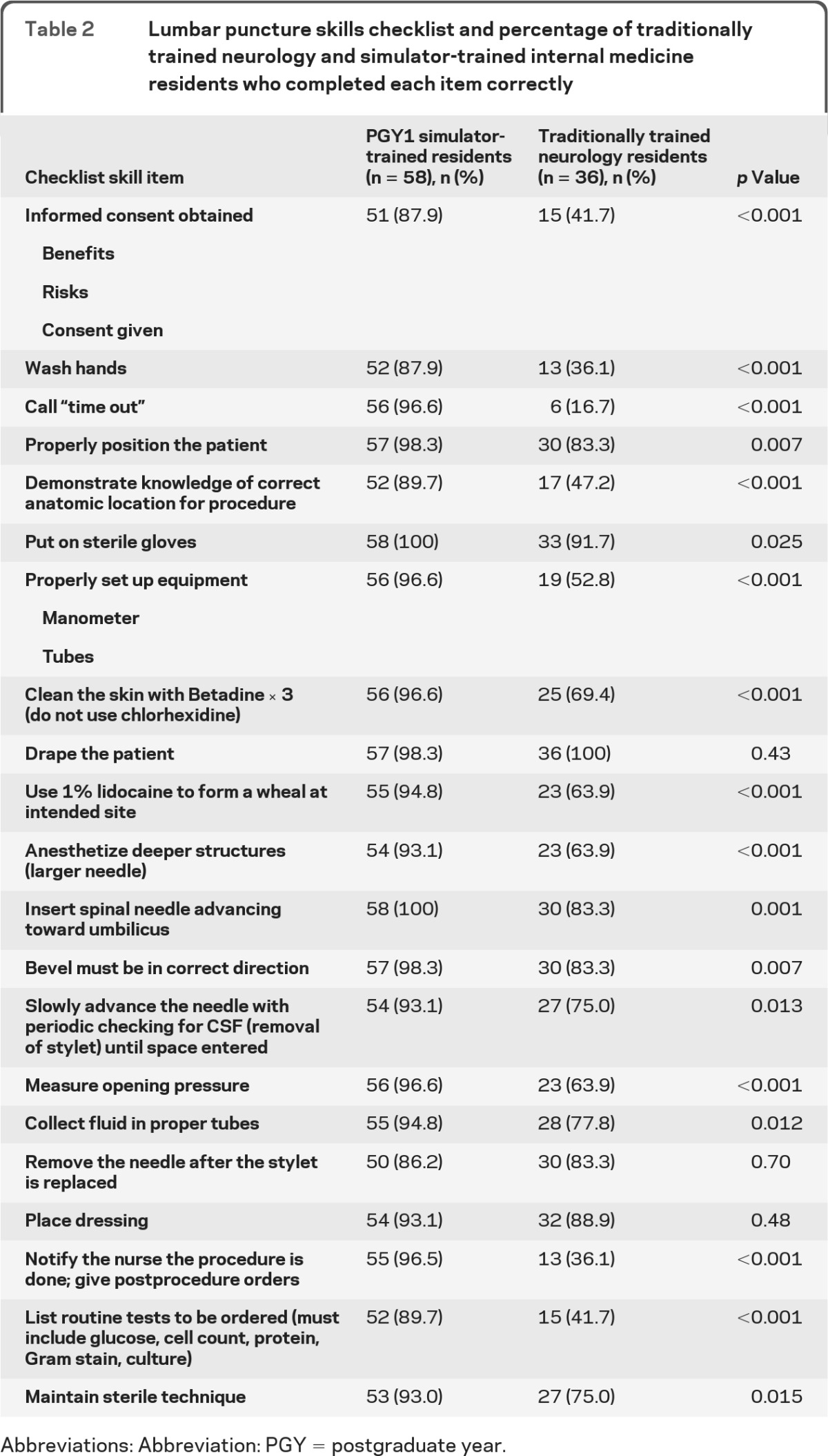

Mean checklist scores of simulator-trained residents increased from 46.3% (SD = 17.4) at pretest to 95.7% (SD = 4.9) at posttest, a 107% relative gain (p < 0.001). Traditionally trained neurology residents scored a mean of 65.4% (SD = 14.2) on the checklist. Posttest scores of simulator-trained internal medicine residents were 46% higher than scores of traditionally trained neurology residents (p < 0.001). Results remained significant after controlling for age, gender, year of training, self-confidence, and LP procedural experience (p < 0.001). At the individual item level, simulator-trained residents performed significantly better than traditionally trained residents on 18 of the 21 checklist items (table 2).
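The 107% and 46% figures follow directly from the reported means, and the reported SDs allow a rough consistency check on the between-group comparison. The sketch below assumes a pooled-variance Student t test computed from summary statistics. That form and the function name are assumptions for illustration, not the authors' analysis code.

```python
from math import sqrt


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's t (pooled variance) from group means, SDs, and sizes."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / sqrt(pooled_var * (1 / n1 + 1 / n2)), n1 + n2 - 2


pre, post, neuro = 46.3, 95.7, 65.4  # mean checklist scores (%) from the Results

gain = 100 * (post - pre) / pre           # relative pretest-to-posttest gain
advantage = 100 * (post - neuro) / neuro  # posttest vs neurology baseline

t, df = pooled_t_from_summary(95.7, 4.9, 58, 65.4, 14.2, 36)
print(round(gain), round(advantage), round(t, 1), df)  # 107 46 14.9 92
```

Under this assumed form of the test, t is about 14.9 on 92 degrees of freedom, which is consistent with the reported p < 0.001.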

Table 2.

Lumbar puncture skills checklist and percentage of traditionally trained neurology and simulator-trained internal medicine residents who completed each item correctly

Abbreviation: PGY = postgraduate year.

Within-group regression analyses showed no relationship between clinical skills examination performance and age, gender, year of training, institution affiliation, reported self-confidence, or LP procedural experience.

DISCUSSION

SBML featuring deliberate practice and feedback produced a large and consistent skill improvement and nearly eliminated performance variability among residents. By contrast, neurology residents from 3 Chicago academic institutions demonstrated poor procedural skill despite standard residency training and substantial experience with actual LPs. Surprisingly, more than half of neurology residents did not correctly identify the anatomic location for an LP and were unable to list routine tests ordered on CSF. These results illustrate the limitations of traditional clinical training and suggest a role for simulation training during neurology residency.

This study contributes to knowledge about procedural skill acquisition among medical trainees. There was no association between LP procedural skill and resident demographic variables such as age, gender, institution, and year of training. Within groups, self-confidence and the number of LPs performed in actual clinical care also did not correlate with measured procedural skill. This is consistent with studies of other medical procedures in which trainee clinical experience did not predict procedural skill.7–10,21 In an earlier study of 501 LPs, a surrogate for procedural skill (incidence of post-LP headache) was not related to the number of years in practice or the training level of the clinician who performed the procedure.25 This consistent finding is a reminder that clinical experience alone is not a proxy for skill26 and that procedural competence should be assessed only with reliable methods involving simulation or clinical observation.

A recent article called for additional educational research in neurology.27 Our study evaluated simulation-based mastery learning for resident education using rigorous assessment standards. Earlier studies used simulation to evaluate the LP skills of internal medicine,28 emergency medicine,29 and pediatric residents,30 but did not impose mastery standards. Use of the mastery model improves clinical care in advanced cardiac life support13 and central venous catheter insertion.14 Further study is required on the effect of SBML on neurology resident education.

This study has several limitations. First, the study enrolled a relatively small group of trainees, although they represent multiple training programs. Second, we used the LP model for both education and testing, potentially confounding the improvement in posttest scores. However, this does not diminish the pronounced impact simulation-based training had on LP skills. Third, only 36 of 49 eligible neurology residents (73%) at the 3 institutions participated, and neurology residents did not complete simulation-based education. We have no reason to believe our results would be different if the entire cohort of neurology residents had participated in the educational intervention. Fourth, posttesting occurred immediately after training, potentially enhancing recall of the procedure. Further study is required to address long-term retention of LP skills. We previously showed that simulation-based education for central venous catheter insertion and advanced cardiac life support is associated with significant skills retention 1 year after training.31,32 Finally, with respect to translational science goals,33 we have not yet linked improved LP skills in the simulated environment to improved clinical health care delivery practices and better patient outcomes.

This study demonstrates that simulation-based education boosts LP skills beyond traditional training methods. Few neurology residents in our study were competent to perform a simulated LP despite substantial clinical experience with the procedure. Residents who completed simulation-based education displayed better procedural skills despite significantly less clinical experience with the procedure. In light of these results, we believe a procedural standard should be set and documented for all residents prior to performing an LP in actual clinical care.

Supplementary Material

ACKNOWLEDGMENT

The authors thank the Northwestern University internal medicine residents and participating Chicago neurology residency programs for their dedication to education and patient care and Drs. Douglas Vaughan and Mark Williams for their support and encouragement of this work.

GLOSSARY

- LP

lumbar puncture

- MPS

minimum passing score

- PGY

postgraduate year

- SBML

simulation-based mastery learning

Footnotes

Editorial, page 115

AUTHOR CONTRIBUTIONS

J. Barsuk was involved in study concept and design, collecting data, statistical analysis and interpretation of data, study supervision, and drafting and revising the manuscript. E. Cohen was involved in study concept and design, collecting data, statistical analysis and interpretation of data, study supervision, and drafting and revising the manuscript. T. Caprio was involved in collecting and interpreting study data, and revising the manuscript. W. McGaghie was involved in study concept and design, statistical analysis and interpretation of data, study supervision, and revising the manuscript. T. Simuni was involved in study concept and design, interpretation of data, and revising the manuscript. D. Wayne was involved in study concept and design, statistical analysis and interpretation of data, study supervision, obtaining funding, and revising the manuscript.

DISCLOSURE

The authors report no disclosures relevant to the manuscript. Go to Neurology.org for full disclosures.

REFERENCES

- 1. Huang GC, Smith CC, Gordon CE, et al. Beyond the comfort zone: residents assess their comfort performing inpatient medical procedures. Am J Med 2006;119:71.

- 2. American Board of Psychiatry and Neurology. Available at: http://www.abpn.com/neuro.html. Accessed July 24, 2011.

- 3. American Academy of Neurology. Available at: http://www.aan.com/go/about/sections/curricula. Accessed July 28, 2011.

- 4. Accreditation Council for Graduate Medical Education. Neurology Program Requirements. Available at: http://www.acgme.org/acWebsite/RRC_180/180_prIndex.asp. Accessed July 28, 2011.

- 5. American Board of Internal Medicine. Available at: http://www.abim.org/certification/policies/imss/im.aspx. Accessed August 10, 2011.

- 6. Issenberg SB, McGaghie WC, Hart IR, et al. Simulation technology for health care professional skills training and assessment. JAMA 1999;282:861–866.

- 7. Barsuk JH, Ahya SN, Cohen ER, McGaghie WC, Wayne DB. Mastery learning of temporary hemodialysis catheter insertion by nephrology fellows using simulation technology and deliberate practice. Am J Kidney Dis 2009;54:70–76.

- 8. Barsuk JH, McGaghie WC, Cohen ER, Balachandran JS, Wayne DB. Use of simulation-based mastery learning to improve the quality of central venous catheter placement in a medical intensive care unit. J Hosp Med 2009;4:397–403.

- 9. Barsuk JH, McGaghie WC, Cohen ER, O'Leary KJ, Wayne DB. Simulation-based mastery learning reduces complications during central venous catheter insertion in a medical intensive care unit. Crit Care Med 2009;37:2697–2701.

- 10. Wayne DB, Barsuk JH, O'Leary KJ, Fudala MJ, McGaghie WC. Mastery learning of thoracentesis skills by internal medicine residents using simulation technology and deliberate practice. J Hosp Med 2008;3:48–54.

- 11. Wayne DB, Butter J, Siddall VJ, et al. Mastery learning of advanced cardiac life support skills by internal medicine residents using simulation technology and deliberate practice. J Gen Intern Med 2006;21:251–256.

- 12. McGaghie WC, Miller GE, Sajid A, Telder TV. Competency-based Curriculum Development in Medical Education: An Introduction. Public Health Paper No. 68. Geneva: World Health Organization; 1978.

- 13. Wayne DB, Didwania A, Feinglass J, Fudala MJ, Barsuk JH, McGaghie WC. Simulation-based education improves quality of care during cardiac arrest team responses at an academic teaching hospital: a case-control study. Chest 2008;133:56–61.

- 14. Barsuk JH, Cohen ER, Feinglass J, McGaghie WC, Wayne DB. Use of simulation-based education to reduce catheter-related bloodstream infections. Arch Intern Med 2009;169:1420–1423.

- 15. McGaghie WC, Issenberg B, Cohen ER, Wayne DB. Simulation-based medical education with deliberate practice yields better results than traditional clinical education: a meta-analytic comparative review of the evidence. Acad Med 2011;86:706–711.

- 16. Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton Mifflin; 2002.

- 17. Kyoto Kagaku. Lumbar Puncture Simulator. Available at: http://www.kyotokagaku.com/products/detail01/m43b.html. Accessed July 28, 2011.

- 18. Ellenby MS, Tegtmeyer K, Lai S, Braner DA. Videos in clinical medicine: lumbar puncture. N Engl J Med 2006;355:e12.

- 19. Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med 2004;79(suppl 10):S70–S81.

- 20. Fishman RA. Cerebrospinal Fluid in Diseases of the Nervous System, 2nd ed. Philadelphia: W.B. Saunders Company; 1992.

- 21. Wayne DB, Butter J, Siddall VJ, et al. Simulation-based training of internal medicine residents in advanced cardiac life support protocols: a randomized trial. Teach Learn Med 2005;17:202–208.

- 22. Stufflebeam DL. The Checklist Development Checklist. Available at: http://www.wmich.edu/evalctr/archive_checklists/guidelines.htm. Accessed July 28, 2011.

- 23. Downing SM, Tekian A, Yudkowsky R. Procedures for establishing defensible absolute passing scores on examinations in health professions education. Teach Learn Med 2006;18:50–57.

- 24. Wayne DB, Barsuk JH, Cohen E, McGaghie WC. Do baseline data influence standard setting for a clinical skills examination? Acad Med 2007;82(suppl 10):S105–S108.

- 25. Kuntz KM, Kokmen E, Stevens JC, Miller P, Offord KP, Ho MM. Post-lumbar puncture headaches: experience in 501 consecutive procedures. Neurology 1992;42:1884–1887.

- 26. Choudhry NK, Fletcher RH, Soumerai SB. Systematic review: the relationship between clinical experience and quality of health care. Ann Intern Med 2005;142:260–273.

- 27. Stern BJ, Lowenstein DH, Schuh LA. Neurology education research. Neurology 2008;70:876–883.

- 28. Lenchus J, Issenberg SB, Murphy D, et al. A blended approach to invasive bedside procedural instruction. Med Teach 2011;33:116–123.

- 29. Conroy SM, Bond WF, Pheasant KS, Ceccacci N. Competence and retention in performance of the lumbar puncture procedure in a task trainer model. Simul Healthc 2010;5:133–138.

- 30. Kessler DO, Auerbach M, Pusic M, Tunik MG, Foltin JC. A randomized trial of simulation-based deliberate practice for infant lumbar puncture skills. Simul Healthc 2011;6:197–203.

- 31. Barsuk JH, Cohen ER, McGaghie WC, Wayne DB. Long-term retention of central venous catheter insertion skills after simulation-based mastery learning. Acad Med 2010;85(suppl 10):S9–S12.

- 32. Wayne DB, Siddall VJ, Butter J, et al. A longitudinal study of internal medicine residents' retention of advanced cardiac life support skills. Acad Med 2006;81(suppl 10):S9–S12.

- 33. McGaghie WC, Draycott TJ, Dunn WF, Lopez CM, Stefanidis D. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc 2011;6(suppl):S42–S47.
