Abstract

Research suggests that the exercise of control is desirable and adaptive, but the precise mechanisms underlying the value of control are not well understood. The current study characterizes the affective experience of personal control by examining the neural substrates recruited when anticipating the opportunity for choice, the means by which individuals exercise control. In an experimental paradigm that probed the value of choice, participants reported liking cues that predicted a future choice opportunity more than cues that predicted no choice. Anticipation of choice itself was associated with increased activity in corticostriatal regions involved in affective and motivational processes, particularly the ventral striatum. This study provides the first direct examination of the affective value of having the opportunity to choose. These findings have important implications for understanding the role of perception of control, and choice itself, in self-regulatory processes.

Keywords: fMRI, striatum, anticipation, choice, perceived control, reward

INTRODUCTION

Belief in personal control is highly adaptive, for its presence or absence can have a significant impact on the regulation of cognition, emotion, and even physical health (Bandura, Caprara, Barbaranelli, Gerbino, & Pastorelli, 2003; Ryan & Deci, 2006; Shapiro, Schwartz, & Astin, 1996). Individuals exercise control over their environment by making choices – ranging from basic perceptual decisions to complex and emotionally salient decisions. Converging evidence suggests that choice is desirable (for a review, see Leotti, Iyengar, & Ochsner, 2010). For example, animals and humans demonstrate a preference for choice over non-choice, even when that choice confers no additional reward (Bown, Read, & Summers, 2003; Suzuki, 1997, 1999). The fact that choice is desirable under such conditions suggests that choice, itself, has a positive affective component that increases the value of the choice option relative to the no-choice option. However, this hypothesis has not been directly tested experimentally, and as a result, the neural mechanisms underlying the value of choice and control are not well understood.

The goal of the present study was to examine the affective experience of perceiving control, as it is exercised through choice behavior. We used fMRI to identify the neural substrates recruited during the anticipation of future choice opportunity. If choice is rewarding, we would expect the anticipation of choice (relative to no-choice) to modulate activity in corticostriatal systems implicated in motivated behavior and reward processing (Delgado, 2007; Haber & Knutson, 2010; Montague & Berns, 2002; O’Doherty, 2004; Rangel, Camerer, & Montague, 2008; Robbins & Everitt, 1996).

Our hypothesis is motivated by findings from previous neuroimaging studies that indirectly suggest that the exercise of personal control may be particularly motivating and rewarding. For example, rewards that are instrumentally delivered activate reward-related circuitry to a greater extent than rewards that are passively received (Arana et al., 2003; Bjork & Hommer, 2007; O’Doherty, Critchley, Deichmann, & Dolan, 2003; O’Doherty et al., 2004; Tricomi, Delgado, & Fiez, 2004; Zink, Pagnoni, Martin-Skurski, Chappelow, & Berns, 2004), and simply choosing an item (as opposed to rejecting an item) increases its subjective rating and recruits reward-related circuitry, such as the striatum (Sharot, De Martino, & Dolan, 2009). While such results help promote the idea that control is an important component of the valuation process, the studies were not specifically designed to examine the affective value of the opportunity for choice, and thus the contributions of cognitive and affective processes involved in decision-making cannot be teased apart. The current study builds and extends upon these findings to characterize the affective components of choice and perceived control, which is an important contribution to our understanding of why the perception of control is so adaptive in diverse spheres of psychosocial functioning.

Here, we examine whether the mere anticipation of personal involvement, through choice, will recruit reward-related brain circuitry, particularly the striatum, suggesting that choice is valuable in and of itself. The experimental paradigm involved the opportunity to choose between two keys (choice condition), which could lead to a potential monetary gain, or acceptance of a computer-selected key, which led to similar outcomes (no-choice condition). Symbolic cues signaled upcoming Choice vs. No-choice trial types. Participants indicated how much they liked or disliked these symbolic cues, and fMRI analyses focused on BOLD activity in response to the cue during the anticipation of choice. Since Choice and No-choice trial types were matched in expected value, we interpreted any differences in behavioral ratings and BOLD activity between conditions as the affective valuation of choice itself.

MATERIALS & METHODS

Participants

Eighteen healthy right-handed individuals from the Rutgers University – Newark campus were included in the final sample (10 females, median age=21; see Supplement). Participants gave informed consent according to the Institutional Review Boards of Rutgers University and the University of Medicine and Dentistry of New Jersey.

Procedure

A simple choice paradigm was used to examine the affective experience of anticipating choice. On each trial, participants were presented with a selection of two keys (represented by a blue and a yellow rectangle displayed on the screen). When participants chose either key, they received feedback that they gained $0, $50, or $100 (each outcome occurring 33% of the time). Participants were not informed of reward probabilities in advance. On some trials, participants could freely choose between the keys (Choice condition) and on other trials, participants were forced to accept a computer-selected key (No-choice condition). Participants were informed that their goal was to earn as many experimental dollars as possible, which would be translated into a monetary bonus at the end of the experiment. Because both keys had the same average value, all subjects earned the same range of experimental dollars, which was translated into a $5 bonus, independent of specific choices. Data collection and stimulus presentation were done with E-prime-2.0 (PST, Pittsburgh, PA).
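The claim that both keys had the same average value can be checked with a short calculation. The sketch below is illustrative only: it assumes the three outcomes are exactly equiprobable (the text gives 33% each), and the names `OUTCOMES` and `expected_value` are hypothetical, not part of the original task code.

```python
from fractions import Fraction

# Outcomes in experimental dollars. Assumption: each occurs with
# probability exactly 1/3, for either key and in both conditions.
OUTCOMES = (0, 50, 100)

def expected_value(outcomes):
    """Mean payoff when every listed outcome is equally likely."""
    p = Fraction(1, len(outcomes))
    return sum(p * x for x in outcomes)

# Both keys draw from the same distribution, so the expected value is the
# same whether the participant or the computer selects the key.
ev_choice = expected_value(OUTCOMES)
ev_no_choice = expected_value(OUTCOMES)
print(ev_choice, ev_no_choice)  # 50 50
```

Under this assumption, any difference between conditions cannot be attributed to a difference in expected monetary payoff.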

Choice Task

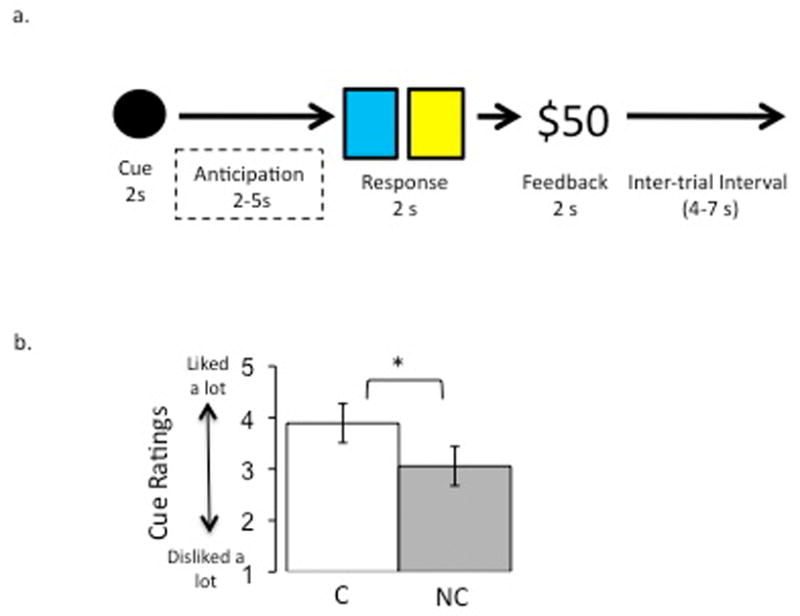

The trial structure of this task is outlined in Figure 1A. At the start of each trial, a symbolic cue, which explicitly indicated whether or not the subject would have a choice on the trial, was presented centrally for 2s. Following the cue, there was a randomly jittered inter-stimulus interval (2-5s) marking the anticipation phase. Participants were then presented with the response phase (presented for 2s): either a choice scenario, in which they indicated the location (left or right) of the key they wished to choose (either the yellow or the blue key), or a no-choice scenario, in which they indicated the location of the one available key (blue or yellow) selected by the computer. Responses were recorded on each trial. Immediately after the response phase, the monetary outcome ($0, $50, or $100) was presented for 2s during an outcome phase. The reward outcome was followed by a randomly jittered inter-trial interval (4-7s). Participants experienced the same expected values for all trial types, ensuring that experienced rewards and perceived success were controlled. The position of the blue and yellow keys (left vs. right) varied across trials to avoid any confounding effects of motor response preparation during the anticipation phase.

Figure 1. Examining the anticipation of choice.

(a) Trial Structure: Symbolic shape (e.g. circle) informs participant about upcoming trial type (choice vs. no-choice). During a response phase (2s), participants choose between a yellow and blue key (choice condition) or respond to the location of the computer-selected key (no-choice condition). After the response phase, monetary outcome ($0, $50, or $100) is displayed for 2s. (b) Subjective behavioral ratings of cues illustrate that participants reported liking cues predicting choice (C) significantly more than cues predicting no-choice (NC).

There were four types of symbolic cues presented (e.g. circle, triangle). Each cue marked the beginning of a new trial and indicated which one of four trial types would occur. Associations between cues and trial types were learned explicitly prior to the scanning session. The two most important cue types were (1) Choice: cue signaled that participants would have the opportunity to choose between both colored keys; and (2) No-Choice: participants were forced to accept the computer-selected key (one colored key was presented with an unavailable gray key). These two cues served as the main conditions of interest.

In addition, two other cues were included that served as experimental controls: (3) a non-informative cue (No-Info) and (4) a predictable no-choice cue (Predictable). On No-Info trials, participants had no information about whether they would have a choice or not (this cue was followed equally often by choice and no-choice scenarios). The purpose of this cue was to provide an expectation-free condition against which anticipation of choice and no-choice cues could be compared, and which would elicit uncertainty (of choice availability) during the anticipation phase. Conversely, the Predictable cue indicated that participants would have no choice between keys, but they knew ahead of time which key the computer had selected (blue or yellow). This cue provided an experimental control for potential anticipatory differences between choice and no-choice conditions due to differences in predictability of outcomes (i.e. key selection). For the choice condition, the participant chose which key (blue or yellow) would be selected, whereas, on no-choice trials, the key that would be selected by the computer was unknown. Thus, the Predictable cue provides a controlled amount of information about the upcoming key selection, which may be important if participants develop preferences for one key over the other (see Supplement). Each of the four trial types occurred thirty times throughout the task and trial order was randomized within four separate functional runs.
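The design described above (four cue types, thirty trials each, jittered intervals, four runs) can be sketched as a trial schedule. This is a minimal illustration, not the E-Prime implementation used in the study. Several details are assumptions because the text does not specify them: runs are taken to be of equal length (30 trials), jitter is drawn uniformly, and the full trial list is shuffled before being split into runs. All names (`build_schedule`, `isi_s`, `iti_s`) are hypothetical.

```python
import random

CUES = ("Choice", "No-Choice", "No-Info", "Predictable")
TRIALS_PER_CUE = 30
N_RUNS = 4  # assumption: trials are split evenly, 30 per run

def build_schedule(seed=0):
    """Return a list of runs; each run is a list of trial dicts."""
    rng = random.Random(seed)
    trials = []
    for cue in CUES:
        for i in range(TRIALS_PER_CUE):
            if cue == "Choice":
                has_choice = True
            elif cue == "No-Info":
                # Half of the No-Info trials lead to a choice, half do not.
                has_choice = i < TRIALS_PER_CUE // 2
            else:
                # No-Choice and Predictable trials never offer a choice.
                has_choice = False
            trials.append({"cue": cue, "has_choice": has_choice})
    rng.shuffle(trials)

    per_run = len(trials) // N_RUNS
    runs = []
    for r in range(N_RUNS):
        run = trials[r * per_run:(r + 1) * per_run]
        for trial in run:
            # Assumption: uniform jitter within the reported ranges.
            trial["isi_s"] = rng.uniform(2.0, 5.0)  # anticipation phase
            trial["iti_s"] = rng.uniform(4.0, 7.0)  # inter-trial interval
        runs.append(run)
    return runs

runs = build_schedule(seed=1)
print(len(runs), [len(run) for run in runs])
```

The anticipation phase analyzed in the fMRI data corresponds to the `isi_s` interval that follows each 2 s cue.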

Immediately following the scanning session, participants were asked to rate how much they liked/disliked each of the trial types on a scale from 1 (disliked a lot) to 5 (liked a lot). A rating of 3 indicated that the trial was neither liked nor disliked (neutral rating).

Choice Preference Task

After the choice task (in the scanner), participants also performed a choice preference task (outside of the scanner) based on an experimental design previously tested across species (Suzuki, 1997, 1999). This task was included to provide an independent measure of choice desirability, based on participant behavior, rather than self-reports of choice preference. On a given trial, the participant could select either the white key (Path A) or the black key (Path B). Selection of the black key led to another choice (striped vs. dotted keys) and selection of the white key always led to a single option (striped or dotted key presented on left or right). Each path (black vs. white key, and striped vs. dotted key) was associated with the same probability of reward ($0, $1, or $5). Participants instrumentally learned these associations in an initial learning block of 100 trials. On each trial, participants chose between Path A or Path B, and then made either a choice (Path B) or responded to the location of the forced-choice option (Path A), before receiving feedback on the trial’s outcome (monetary reward). In the next block of trials (n= 50), participants were instructed to strategically choose the keys that they believed would win the most money. All trial timing and reward feedback was identical to the first block. In this second block, we assessed preference for the path that leads to subsequent choice (Path B) over the path that leads to no-choice (Path A). If choice itself does not confer any additional value, then participants should choose the black key (Path B) only 50% of the time. Alternately, if choice is desirable, then subjects will choose the black key a significantly greater proportion of the time relative to the white key.
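The logic of the test block can be sketched as follows: compute each participant's proportion of Path B selections, then compare the group mean against the 50% expected under indifference. The code is a minimal sketch using only the Python standard library; the function names and the example proportions are hypothetical and are not the study's data. A p-value would additionally require the t distribution with the returned degrees of freedom.

```python
import math
import statistics

def path_b_proportion(choices):
    """Fraction of trials on which the choice-leading key (Path B) was picked."""
    return sum(1 for c in choices if c == "B") / len(choices)

def one_sample_t(values, null_mean):
    """t statistic and degrees of freedom for H0: mean(values) == null_mean."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - null_mean) / standard_error, n - 1

# Hypothetical per-participant proportions of Path B selections.
proportions = [0.58, 0.72, 0.50, 0.66, 0.64]
t_stat, df = one_sample_t(proportions, null_mean=0.5)
print(f"t({df}) = {t_stat:.2f}")
```

A positive t statistic indicates that, on average, the path leading to a subsequent choice was selected more often than chance.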

fMRI Data Acquisition and Analyses

Imaging data were collected on a 3T Siemens Allegra head-only scanner at University Heights Center for Advanced Imaging and analyses were performed using Brain Voyager software (v1.9; Brain Innovation, The Netherlands). We focused on two main analyses to identify regions of interest (ROIs; see Supplementary Materials for additional details). First, we examined activity related to anticipation of choice in regions that have previously been shown to respond to the anticipation of reward (Knutson, Taylor, Kaufman, Peterson, & Glover, 2005), including the midbrain, bilateral ventral striatum (VS) and orbitofrontal cortex (OFC). Second, we conducted a whole-brain analysis to identify all regions showing main effects of cue type (i.e. not limiting results to our reward anticipation regions defined a priori).

RESULTS

Behavioral Results

Participants demonstrated a preference for choice trials over no-choice trials. Specifically, they rated cues predicting future choice opportunity (M= 3.9, SD= 0.9) significantly higher than cues predicting future no-choice (M= 3.1, SD= 1.1; t(17)= 2.14, p< .05; see Figure 1B). Additionally, participants’ ratings of choice cues were significantly higher than the neutral score of 3 (t(17)= 4.89, p= .0006), but ratings of no-choice cues were not significantly different from the neutral score (t(17)= 0.212, p= 0.83). This finding suggests that choice cues were liked more than no-choice cues.
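The within-subject comparison of cue ratings corresponds to a paired t-test, which is a one-sample test on the per-participant rating differences. The sketch below shows the computation with the Python standard library. The ratings are hypothetical values on the 1 to 5 scale, not the study's data, and `paired_t` is an illustrative name.

```python
import math
import statistics

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    if len(a) != len(b):
        raise ValueError("paired samples must have the same length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1

# Hypothetical liking ratings (1 = disliked a lot, 5 = liked a lot),
# one pair per participant.
choice_ratings = [4, 5, 3, 4, 4, 3]
no_choice_ratings = [3, 4, 3, 2, 3, 3]
t_stat, df = paired_t(choice_ratings, no_choice_ratings)
print(f"t({df}) = {t_stat:.2f}")
```

With 18 participants, as in the study, the same computation yields 17 degrees of freedom, matching the t(17) values reported above.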

During the response phase of each trial on the choice task, response times (RTs) were collected and examined for each of the main cue types to determine if other factors (e.g., response preparation, attentional demands) differed for choice and no-choice conditions. There were no significant differences between RTs following Choice and No-Choice cues (p> .05). These results suggest that any anticipatory differences in BOLD activity may not reflect differences in factors such as response preparation or attentional demands, but rather likely reflect processes related to goal-directed behavior.

As another measure of choice desirability, we conducted a choice preference task outside the scanner (n=17; one participant withdrew due to time constraints). Participants selected options that led to future choice significantly more than options that led to no choice, despite equivalent rewards. On average, participants chose the path that led to subsequent choice 64% of the time, which was significantly different from 50%, or chance (t(16)= 3.98, p< .001). Combined with the direct evidence from subjective cue ratings, this indirect evidence of a preference for choice supports the notion that, within this cohort, choice opportunity was perceived as inherently valuable.

Neuroimaging Results

Analysis 1 – ROI Analysis

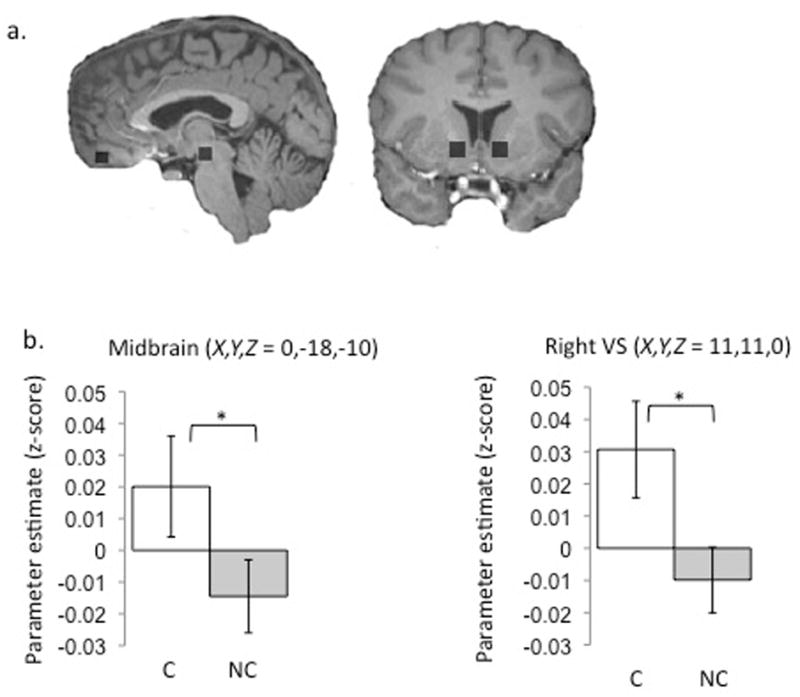

To probe the value associated with the anticipation of choice in an unbiased manner, we extracted parameter estimates (mean beta) for the four cue types from ROIs defined independently in a previous study about monetary reward anticipation (Knutson et al., 2005). Betas associated with the Choice cues were significantly greater than those for the No-choice cues in the midbrain (t(17)= 3.2, p= .005), left VS (t(17)= 2.4, p= .03), and right VS (t(17)= 3.4, p= .004; Figure 2b). In the bilateral OFC, BOLD activity was greater when anticipating Choice relative to No-choice, but this difference was not significant. There were no significant differences between betas extracted for the choice condition and for either of the control conditions in any of the ROIs, with one exception in the right VS where activity in response to the choice cue was greater than activity in response to the predictable cue (see Supplementary Table 1 for details).

Figure 2. Greater activity in reward regions when anticipating choice.

(a) A priori ROIs involved in anticipation of reward magnitude (Knutson et al., 2005) in the bilateral ventral striatum (VS), orbitofrontal cortex, and midbrain. (b) Bar plots show that the BOLD response in the midbrain (left side) and right VS (right side) is greater when anticipating choice vs. no-choice (error bars are ±S.E.M.).

Analysis 2 – Whole-brain Analysis for Main Effects of Cue Type during Anticipation Phase

We performed a one-way repeated measures ANOVA of BOLD activity during the anticipation phase with the four different cue types (Choice, No-Choice, No-Info, Predictable). This analysis allowed us to explore main effects of cue type without limiting the search to putative reward regions while also including all experimental conditions.
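For readers unfamiliar with the test, the F statistic of a one-way repeated-measures ANOVA can be computed by partitioning the total sum of squares into condition, subject, and residual components. The sketch below does this for a single set of values (for example, one voxel or one region); it is a generic illustration in plain Python, not the Brain Voyager procedure, and the data and the name `rm_anova_f` are hypothetical.

```python
def rm_anova_f(data):
    """One-way repeated-measures ANOVA.

    data: one row per subject, one column per condition.
    Returns (F, df_condition, df_error).
    """
    n = len(data)      # number of subjects
    k = len(data[0])   # number of conditions
    grand = sum(sum(row) for row in data) / (n * k)

    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual: variance left after removing condition and subject effects.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error

# Hypothetical parameter estimates: rows are subjects; columns are
# Choice, No-Choice, No-Info, Predictable.
betas = [
    [0.9, 0.2, 0.6, 0.5],
    [0.7, 0.1, 0.3, 0.4],
    [1.1, 0.5, 0.8, 0.6],
    [0.6, 0.3, 0.5, 0.2],
]
f_value, df_cond, df_error = rm_anova_f(betas)
print(f"F({df_cond}, {df_error}) = {f_value:.2f}")
```

With four cue types and 18 participants, the corresponding degrees of freedom would be 3 and 51.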

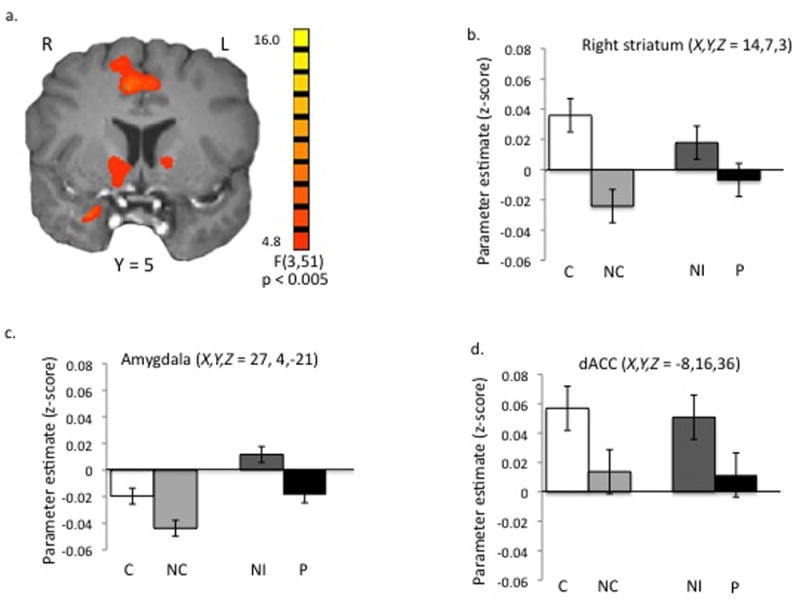

Main effects of cue type were observed in regions previously implicated in affective and motivational processes (Table 1), including the right striatum (extending both ventrally and dorsally), left caudate, dorsal anterior cingulate cortex (dACC), right inferior frontal cortex (IFC), and amygdala (Figure 3). As Figure 3B illustrates, we found that Choice recruits the right striatum to the greatest extent, with the No-Info and Predictable cues eliciting intermediary activity relative to Choice and No-Choice cues. In addition to the striatum, main effects of cue type were also observed in other regions involved in affective and motivational processing, such as the dACC and amygdala. Cue-related differences in activity in the ventral amygdala region (Figure 3C) may reflect effects of perceived uncertainty (Hsu, Bhatt, Adolphs, Tranel, & Camerer, 2005; Sarinopoulos et al., 2009; Whalen, 2007), given that this effect was driven primarily by greater activity for the ambiguous No-Info cue. In the dACC (Figure 3D), activity was greater for the two cues predicting certain (Choice) and possible choice (No-Info) relative to the cues predicting no-choice (No-Choice and Predictable), potentially reflecting the motivational salience of future choice opportunity when effortful decision-making is anticipated (Rushworth, Walton, Kennerley, & Bannerman, 2004; Sanfey, Loewenstein, McClure, & Cohen, 2006).

Table 1.

Main Effects of Cue Type during Anticipation Phase

| Region (R/L) | X | Y | Z | BA | Voxels (1 mm³) | F-value |
|---|---|---|---|---|---|---|
| dorsal ACC | -8 | 16 | 36 | 32 | 645 | 7.33 |
| dorsal ACC | 1 | 10 | 42 | 32 | 824 | 8.98 |
| IFC/insula (R) | 38 | 13 | 9 | 13 | 531 | 7.51 |
| caudate (L) | -13 | 1 | 6 | | 486 | 7.14 |
| caudate (R) | 14 | 7 | 3 | | 736 | 6.40 |
| amygdala | 27 | 4 | -21 | | 345 | 8.46 |
| fusiform gyrus (R) | 26 | -41 | -15 | 20 | 568 | 6.78 |
| cuneus | -7 | -74 | 27 | 18 | 3678 | 9.94 |
| occipital cortex (R) | 32 | -89 | 3 | 18 | 1896 | 8.86 |
| occipital cortex (L) | -28 | -98 | -3 | 18 | 3307 | 11.75 |

Note: Talairach coordinates (X,Y,Z) are reported. Abbreviations: BA = Brodmann Area; ACC = anterior cingulate cortex, IFC = inferior frontal cortex, VS = ventral striatum.

Figure 3. Neural activity of predictive cues during anticipation phase.

(a) Main effects of cue type observed in bilateral striatum, amygdala, and dorsal ACC (dACC). (b) Parameter estimates within the right VS for Choice (C) and No-Choice (NC), No-Info (NI), and Predictable (P) cues, (c) parameter estimates within the dACC and (d) amygdala. Error bars are ±S.E.M.

DISCUSSION

In summary, we observed behavioral evidence that choice is desirable, and furthermore, we found that anticipation of choice opportunity was associated with increased activity in a network of brain regions assumed to be involved in reward processing. Collectively, the findings suggest that simply having the opportunity to choose may be inherently valuable in some situations. These results provide empirical evidence supporting the hypothesis that the need for control – and choice – is biologically motivated (Leotti et al., 2010). Choice is the means by which individuals exercise control over the environment, and the perception of control seems to be critical for an individual’s well-being (Bandura et al., 2003; Ryan & Deci, 2006; Shapiro et al., 1996). If individuals did not believe they could exercise control over their environments, there would be little motivation to thrive. If the need for control is biologically motivated, and choice is a vehicle for exercising control, then it makes sense that we would find choice opportunity rewarding, and that the anticipation of choice would engage affective and motivational brain circuitry that promotes behavior adaptive for survival. Thus, the findings of the current study are critical for understanding the neural substrates of the affective experience of choice, which may be an important aspect of emotion regulation.

Our behavioral findings provide both direct (subjective ratings) and indirect (decision-making) evidence that choice is preferred over non-choice. These findings are consistent with previous studies demonstrating, through indirect measures, that choice is desirable for both animals and humans (Bown et al., 2003; Suzuki, 1997, 1999). In support of the behavioral results, BOLD activity in reward anticipation ROIs, including the VS and midbrain, was significantly greater for the choice relative to the no-choice condition. Furthermore, in the whole-brain analysis, the anticipation of choice opportunity recruited corticostriatal circuitry previously linked to reward processing (Delgado, 2007; Knutson, Adams, Fong, & Hommer, 2001; Knutson et al., 2005; O’Doherty, 2004), suggesting that the anticipation of choice signals within this circuitry may reflect greater expectation/prediction of potential rewards. Because the actual rewards did not vary for the choice and no-choice condition, differences observed between conditions may reflect differences in anticipated reward associated with the exercise of control through choice.

Greater choice-related activity was also observed in the dorsal striatum, consistent with the literature demonstrating that this region is highly responsive to action-outcome contingencies (e.g., Bjork & Hommer, 2007; O’Doherty et al., 2004; Tricomi et al., 2004; see Supplement for further discussion). Additionally, recruitment of the dACC, as well as the IFC, may reflect adaptive updating of reward information, which is important for strategic control over behavior (Botvinick, Cohen, & Carter, 2004; Sanfey et al., 2006), and may be even more imperative when anticipating trials on which the participant has control (O’Doherty et al., 2003). Interestingly, our exploratory analysis did not reveal significant main effects of cue types in other reward regions that have been shown to respond to reward under choice conditions in previous studies, including the orbitofrontal cortex (Arana et al., 2003; O’Doherty et al., 2003). These discrepancies may be explained by differences in experimental paradigms which may influence processes related to reward and decision-making (see Supplement for additional discussion).

In the striatum bilaterally, we observed the greatest activity for the Choice cue, the lowest activity for the No Choice cue, and intermediary parameter estimates for No-Info and Predictable cues. One interpretation of these results is that striatal activity reflects the value of each of these cues, where Choice has the highest value and No-choice has the lowest value, with such signal being important for learning and goal-directed behavior. This may be because choice is perceived as appetitive, representing personal control. Alternately, it may be that No-Choice is perceived as aversive, as BOLD signals in the human striatum have been shown to decrease upon receipt of a negative stimulus such as a monetary loss (Delgado, Nystrom, Fissell, Noll, & Fiez, 2000; Seymour, Daw, Dayan, Singer, & Dolan, 2007; Tom, Fox, Trepel, & Poldrack, 2007).

Merely having an opportunity to choose is known to elicit an increased perception of personal control (Langer, 1975; Langer & Rodin, 1976). As a result, differences in cue-related activity may reflect the value associated with each of the cues based on variations in perceived control or reward associated with experiencing control. Because both uncertainty and predictability may contribute to the perception of control (Thompson, 1999), we included experimental control conditions to address these potential influences. These control conditions, though somewhat limited, nonetheless provide important information about the uncertainty of choice (no info condition) and the predictability of outcomes (predictable condition). Our findings suggest that uncertainty of choice opportunity modulated activity in the striatum, where activity increased with increasing probability of choice opportunity (Choice > No-Info > No-choice). This graded response is consistent with prediction of choice, which occurred on 50% of No-Info trials, as compared to 100% of Choice trials, and 0% of No-choice trials. Increases in the perceived probability for choice may have led to concurrent increases in BOLD signals in the striatum, in a similar fashion to studies demonstrating increased activity in this region as a function of probability of reward (Knutson et al., 2005; Tobler, O’Doherty, Dolan, & Schultz, 2007; Yacubian et al., 2006). In contrast, the Predictable cue did not elicit a discernable change in striatal activity, suggesting that choice-related activity in this region was not driven by the predictability of the outcome. The Predictable cue also allowed us to rule out the possibility that choice anticipation is influenced by anticipation of a specific choice (i.e. blue or yellow; see Supplement). 
Nonetheless, because the choice condition was not statistically different from the control conditions in most of the reward ROIs (see Supplement), we cannot conclude definitively that the affective experience of choice is free from the influence of uncertainty and predictability. Future research designed to specifically address the roles of uncertainty and predictability will be paramount to the accurate characterization of the affective experience of choice and control.

In the current experiment, increased perception of control in the choice condition is only one possible reason that participants may find choice rewarding. They may also prefer choice because it is more engaging (and less boring) than the other experimental conditions, or perhaps because they perceive differences between the key options. There were, however, no actual differences in expected value between the keys for any of the participants, nor were there any differences in BOLD signals when probing the whole brain for activity during the Predictable cues when participants were anticipating their reported preferred color (i.e. “blue key was better than yellow key”; see Supplement) relative to the non-preferred color. One issue that merits exploration in future investigations is the idea that trial-by-trial fluctuations in experienced rewards may induce temporary changes in key preference, which in turn create perceived advantages for choice opportunity. Each of these possibilities may explain why choice opportunity may be inherently valuable, and perhaps desirable, and lead to an increased response in reward-related regions such as the ventral striatum (see Supplement for additional analyses and discussion).

Though previous research has suggested that personal involvement in decision-making may modulate activity in similar brain networks (Arana et al., 2003; Bjork & Hommer, 2007; O’Doherty et al., 2003; O’Doherty et al., 2004; Sharot et al., 2009; Tricomi et al., 2004; Zink et al., 2004), this study is one of the first to directly demonstrate that simply anticipating choice recruits affective brain circuitry, suggesting that having an opportunity to choose may be valuable in and of itself. Whereas most of the decision-making literature has focused on understanding the value of specific choices as they relate to specific consequences, here, we argue that the opportunity to choose is inherently rewarding, independent of the outcomes. The findings specifically suggest that choice, or the opportunity for choice, is associated with a positive value signal, above and beyond what is observed when anticipating potential reward in the absence of choice. Nonetheless, additional work is needed to determine how other reward-related processes, such as fluctuations in learning, may influence this signal. Characterization of the affective properties of choice presented in this study may provide the foundation for understanding how the presence or absence of choice can influence self-regulation ability and may contribute to maladaptive control-seeking behavior.

Acknowledgments

This work was supported by grants from NIMH (MH-084041) and NIDA (F32-DA027308). We are grateful to Michael Niznikiewicz for assistance and Nathaniel Daw, Susan Ravizza, and Elizabeth Tricomi for discussion.

References

- Arana FS, Parkinson JA, Hinton E, Holland AJ, Owen AM, Roberts AC. Dissociable contributions of the human amygdala and orbitofrontal cortex to incentive motivation and goal selection. J Neurosci. 2003;23(29):9632–9638. doi: 10.1523/JNEUROSCI.23-29-09632.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bandura A, Caprara GV, Barbaranelli C, Gerbino M, Pastorelli C. Role of affective self-regulatory efficacy in diverse spheres of psychosocial functioning. Child Dev. 2003;74(3):769–782. doi: 10.1111/1467-8624.00567. [DOI] [PubMed] [Google Scholar]

- Bjork JM, Hommer DW. Anticipating instrumentally obtained and passively-received rewards: a factorial fMRI investigation. Behav Brain Res. 2007;177(1):165–170. doi: 10.1016/j.bbr.2006.10.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Botvinick MM, Cohen JD, Carter CS. Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn Sci. 2004;8(12):539–546. doi: 10.1016/j.tics.2004.10.003. [DOI] [PubMed] [Google Scholar]

- Bown NJ, Read D, Summers B. The lure of choice. Journal of Behavioral Decision Making. 2003;16:297–308. [Google Scholar]

- Delgado MR. Reward-related responses in the human striatum. Ann N Y Acad Sci. 2007;1104:70–88. doi: 10.1196/annals.1390.002. [DOI] [PubMed] [Google Scholar]

- Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol. 2000;84(6):3072–3077. doi: 10.1152/jn.2000.84.6.3072. [DOI] [PubMed] [Google Scholar]

- Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35(1):4–26. doi: 10.1038/npp.2009.129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310(5754):1680–1683. doi: 10.1126/science.1115327. [DOI] [PubMed] [Google Scholar]

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci. 2001;21(16):RC159. doi: 10.1523/JNEUROSCI.21-16-j0002.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25(19):4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Langer EJ. The Illusion of Control. Journal of Personality and Social Psychology. 1975;32(2):311–328. [Google Scholar]

- Langer EJ, Rodin J. The effects of choice and enhanced personal responsibility for the aged: a field experiment in an institutional setting. J Pers Soc Psychol. 1976;34(2):191–198. doi: 10.1037//0022-3514.34.2.191. [DOI] [PubMed] [Google Scholar]

- Leotti LA, Iyengar SS, Ochsner KN. Born to Choose: The Origins and Value of the Need for Control. Trends in Cognitive Sciences. 2010;14(10):457–463. doi: 10.1016/j.tics.2010.08.001.

- Montague P, Berns G. Neural economics and the biological substrates of valuation. Neuron. 2002;36(2):265. doi: 10.1016/s0896-6273(02)00974-1.

- O’Doherty J, Critchley H, Deichmann R, Dolan RJ. Dissociating valence of outcome from behavioral control in human orbital and ventral prefrontal cortices. J Neurosci. 2003;23(21):7931–7939. doi: 10.1523/JNEUROSCI.23-21-07931.2003.

- O’Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14(6):769–776. doi: 10.1016/j.conb.2004.10.016.

- O’Doherty JP, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304(5669):452–454. doi: 10.1126/science.1094285.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9(7):545–556. doi: 10.1038/nrn2357.

- Robbins TW, Everitt BJ. Neurobehavioural mechanisms of reward and motivation. Curr Opin Neurobiol. 1996;6(2):228–236. doi: 10.1016/s0959-4388(96)80077-8.

- Rushworth MF, Walton ME, Kennerley SW, Bannerman DM. Action sets and decisions in the medial frontal cortex. Trends Cogn Sci. 2004;8(9):410–417. doi: 10.1016/j.tics.2004.07.009.

- Ryan RM, Deci EL. Self-regulation and the problem of human autonomy: does psychology need choice, self-determination, and will? J Pers. 2006;74(6):1557–1585. doi: 10.1111/j.1467-6494.2006.00420.x.

- Sanfey AG, Loewenstein G, McClure SM, Cohen JD. Neuroeconomics: cross-currents in research on decision-making. Trends Cogn Sci. 2006;10(3):108–116. doi: 10.1016/j.tics.2006.01.009.

- Sarinopoulos I, Grupe DW, Mackiewicz KL, Herrington JD, Lor M, Steege EE, et al. Uncertainty during anticipation modulates neural responses to aversion in human insula and amygdala. Cereb Cortex. 2009;20(4):929–940. doi: 10.1093/cercor/bhp155.

- Seymour B, Daw N, Dayan P, Singer T, Dolan R. Differential encoding of losses and gains in the human striatum. J Neurosci. 2007;27(18):4826–4831. doi: 10.1523/JNEUROSCI.0400-07.2007.

- Shapiro DH, Jr, Schwartz CE, Astin JA. Controlling ourselves, controlling our world. Psychology’s role in understanding positive and negative consequences of seeking and gaining control. Am Psychol. 1996;51(12):1213–1230. doi: 10.1037//0003-066x.51.12.1213.

- Sharot T, De Martino B, Dolan RJ. How choice reveals and shapes expected hedonic outcome. J Neurosci. 2009;29(12):3760–3765. doi: 10.1523/JNEUROSCI.4972-08.2009.

- Suzuki S. Effects of number of alternatives on choice in humans. Behavioural Processes. 1997;39:205–214. doi: 10.1016/s0376-6357(96)00049-6.

- Suzuki S. Selection of forced- and free-choice by monkeys. Percept Mot Skills. 1999;88:242–250.

- Thompson S. Illusions of Control: How We Overestimate Our Personal Influence. Current Directions in Psychological Science. 1999;8(6):187–190.

- Tobler PN, O’Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol. 2007;97(2):1621–1632. doi: 10.1152/jn.00745.2006.

- Tom SM, Fox CR, Trepel C, Poldrack RA. The neural basis of loss aversion in decision-making under risk. Science. 2007;315(5811):515–518. doi: 10.1126/science.1134239.

- Tricomi EM, Delgado MR, Fiez JA. Modulation of caudate activity by action contingency. Neuron. 2004;41(2):281–292. doi: 10.1016/s0896-6273(03)00848-1.

- Whalen PJ. The uncertainty of it all. Trends Cogn Sci. 2007;11(12):499–500. doi: 10.1016/j.tics.2007.08.016.

- Yacubian J, Glascher J, Schroeder K, Sommer T, Braus DF, Buchel C. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci. 2006;26(37):9530–9537. doi: 10.1523/JNEUROSCI.2915-06.2006.

- Zink CF, Pagnoni G, Martin-Skurski ME, Chappelow JC, Berns GS. Human striatal responses to monetary reward depend on saliency. Neuron. 2004;42(3):509–517. doi: 10.1016/s0896-6273(04)00183-7.