Abstract

The current work examined contributions of emotion-resembling facial cues to impression formation. Common facial cues make people look male or female and emotional, and we derive personality inferences from these same cues. We first conducted a Pilot Study to assess these effects. We found that neutral female faces were rated as more submissive, affiliative, naïve, honest, cooperative, babyish, fearful, and happy, and as less angry, than neutral male faces. In our Primary Study, we then “warped” these same neutral faces over their corresponding anger and fear displays so that the resultant facial appearance cues structurally resembled emotion while retaining a neutral visage (e.g., no wrinkles, furrows, or creases). The gender effects found in the Pilot Study were replicated in the Primary Study, suggesting clear stereotype-driven impressions. Critically, ratings of the neutral-over-fear versus neutral-over-anger warps also revealed a profile similar to the gender-based ratings, pointing to perceptually driven impressions directly attributable to emotion overgeneralization.

Keywords: Impression formation, gender stereotypes, emotion overgeneralization, facial appearance, emotional expression

When we encounter others for the first time, we often make spontaneous inferences regarding their character (Gilbert, Pelham, & Krull, 1988; Van Overwalle, Drenth, & Marsman, 1999). Aspects of appearance such as facial maturity and attractiveness (Zebrowitz, 1997), and cues related to age, race, and sex (Bargh, Chen, & Burrows, 1996), profoundly impact our impressions. We attribute personality, inner thoughts, and beliefs to others based simply on facial appearance, in what appears to be an effortless and nonreflective manner. Yet these impressions are often nondiagnostic of people’s actual traits and states, such as their tendency toward sociability, responsibility, caring, happiness, and aggression (see Said, Sebe, & Todorov, 2009). This raises the question of why we form such impressions in the first place, and why we do so with such high agreement. The current work addresses this question by examining the role of emotion overgeneralization in impression formation (i.e., the tendency to generalize from emotion-resembling facial appearance when making stable trait inferences; see Zebrowitz, 1997).

The face contains an overlapping array of morphological and movement-related features. It is perhaps not surprising, then, that appearance and expression often share physical properties that give rise to similar social perceptions. Indeed, low versus high eyebrows (a particularly salient cue differentiating male and female faces; Campbell, Wallace, & Benson, 1996) on otherwise non-expressive human faces yield both dominant versus submissive perceptions and anger versus fear impressions, respectively (Keating, Mazur, & Segall, 1977; Laser & Mathie, 1982). Similarly, hypermature faces with low brows, thin lips, and angular features, which are more characteristic of male faces, directly resemble the facial features associated with anger expressions. Conversely, “babyish” faces with high brows, full lips, and round features, which are more characteristic of female faces, closely resemble fear and surprise expressions (Marsh, Adams, & Kleck, 2005; see also Zebrowitz, 1997). In turn, anger expressions have been found to give rise to inferences of high dominance and low affiliation, whereas fear expressions have been found to give rise to perceptions of submissiveness and high affiliation (Hess, Blairy, & Kleck, 2000; Knutson, 1996); again, these patterns are tied to common gender-stereotypic expectations (Hess, Adams, & Kleck, 2004, 2005). The goal of the current study, therefore, was to examine the extent to which facial features associated with anger and fear contribute to impression formation when embedded in otherwise emotionally neutral male and female faces, and to explore their potential contribution to gender-typical impressions.

The Gender-Emotion Confound

Features such as nose shape and size, eye size, and mouth size all convey gender-relevant information, even when viewed separately from the face as a whole (Brown & Perrett, 1993). Much of what differentiates male and female appearance has also been linked to features associated with facial maturity and dominance (Keating, 1985; Zebrowitz, 1997). For example, a square jaw, thin lips, and heavy, low-set eyebrows are features commonly associated with men, whereas rounded features, full lips, and high eyebrows are commonly associated with women (Keating, 1985; Keating et al., 1977). Men are also, on average, perceived as more dominant, and women as more sociable (Hess et al., 2005; Zebrowitz, 1997). As such, differences in facial dominance/maturity are highly associated with expectations regarding gender-typical appearance and impressions (Friedman & Zebrowitz, 1992).

It has also been found that highly mature faces (e.g., low brows, angular features) tend to be rated as more likely to express anger, whereas babyish faces (e.g., high brows, rounded features) are rated as more likely to express fear, sadness, and joy (Adams & Kleck, 2002), a pattern highly consistent with typical gender-emotion expectations (Adams, Hess, Kleck, & Walbott, 2004). Expressive faces, in turn, elicit the same personality trait inferences that are generally found as a function of variation in stable facial maturity cues. In one study, for instance, ratings of fearful compared to angry eyes (including raised versus lowered brows) yielded greater attributions of femininity, youthfulness, dependence, honesty, and naivety, thus replicating patterns previously found for both babyish versus mature faces and female versus male stereotypes (Marsh et al., 2005). The confounded nature of facial cues across gender and emotion judgments suggests that emotion overgeneralization may play a role in generating gender-related impressions.

Although emotion overgeneralization effects have yet to be subjected to intensive scientific inquiry, recent research lends credence to the idea that this mechanism plays a role in gender-linked impression formation. In one study, for example, a connectionist model was trained to detect overt emotional displays. Once trained, it erroneously, though systematically, categorized neutral male and female faces into different emotion categories: male faces were relatively more often categorized as angry, and female faces as surprised (Zebrowitz, Kikuchi, & Fellous, 2010). What is particularly striking about this study is that these effects were based purely on facial metric data and thus were necessarily free from any cultural learning or stereotype-driven associations. Across a number of additional studies, a similar link between the physical properties of male and female faces and expression has become increasingly apparent (Becker, Kenrick, Neuberg, Blackwell, & Smith, 2007; see also Hess et al., 2004, 2005). In one study (Hess, Adams, Grammer, & Kleck, 2009), both happy and fearful expressions biased perception of otherwise androgynous faces toward female classification, whereas anger expressions biased perception toward male classification. In the same study, sadness appeared relatively unconfounded with the physical properties underlying gender perception.

Clearly, pervasive gender-emotion stereotypes exist as well (see Brody & Hall, 1993; Fischer, 1993, for reviews). Women are expected to experience and express more fear, sadness, and joy, whereas men are expected to experience and express more anger (Birnbaum, 1983; Briton & Hall, 1995; Fabes & Martin, 1991). Consequently, gender cues provide fertile ground for examining emotion overgeneralization effects. Beyond evidence for such strong stereotypes, the emerging literature reviewed above raises a new question: What roles do gender-emotion stereotypes, emotion-resembling facial appearance cues, and the interplay between the two play in first impressions?

The Current Work

The central thesis of the current work is that most faces cannot aptly be described as emotionally “neutral,” even when completely devoid of overt muscle movement related to expression. As such, it stands to reason that our first impressions of others are, at least in part, based on the perceived emotional tone of a face. Differences in expression-resembling appearance, such as those associated with male versus female faces, may therefore systematically drive differences in person perception. Accordingly, the hypotheses driving the current work were threefold. First, based on prior evidence for pervasive gender stereotypes, we predicted that in our initial Pilot Study male and female neutral faces would give rise to common gender-stereotypical patterns of emotion and person perception. In this regard, the Pilot Study was run to establish that the stimuli we selected for the Primary Study represented typical gender exemplars. Second, we hypothesized that when these same faces were warped over their corresponding anger or fear expressions (see Footnote 1), neutral-over-anger warps would yield a more stereotypically male pattern of impressions, whereas neutral-over-fear warps would yield a more stereotypically female pattern. This prediction rests on the assumption that the pattern of effects we predicted for the Pilot Study would be at least partially due to emotion overgeneralization. Third, we hypothesized that warping faces in this manner, which effectively reduces the variation naturally present in gendered facial cues, would attenuate, or in some cases even reverse, the gender-typical pattern of impressions (see Adams et al., 2004; Friedman & Zebrowitz, 1992; Hess et al., 2005). We assumed that equating male and female faces on the very features that drive emotion overgeneralization would likewise control for the original source of variation in impression formation.

Pilot Study

The purpose of this study was to confirm that the neutral male and female facial stimuli selected for the current work yield typical, well-documented gender-stereotypic impressions.

Method

Participants

Seventeen undergraduates (12 women, 5 men; mean age = 20.12, SD = 2.89) were recruited to participate in this study in exchange for partial course credit.

Stimuli and Design

Eight female and eight male faces depicting neutral expressions were selected from the Pictures of Facial Affect (Ekman & Friesen, 1976) and the Montreal Set of Facial Displays (Beaupré, Cheung, & Hess, 2000). All of the faces depicted individuals of Caucasian descent and were presented in grayscale.

Procedure

Participants viewed the faces on a computer screen using E-Prime 2.0 software (Psychology Software Tools, Inc.). Faces were presented as 3.1 × 4.5 inch images in the center of the monitor, and participants entered their responses using a keyboard. Participants rated each face on characteristics associated with 1) physical appearance: age, attractive/unattractive, gender prototypicality, and youthful/mature; 2) emotionality: anger, fear, sadness, and joy; and 3) person perception scales previously found to be related to gender stereotypes but not necessarily to emotion per se: dominant/submissive, unfriendly/friendly, cooperative/competitive, naïve/shrewd, honest/deceitful, and rational/intuitive. Age ratings were open-ended: participants entered the exact age they thought the person in the picture was. For emotionality ratings, participants indicated how frequently they believed the person depicted experienced each emotion in everyday life, from 1 = infrequently to 7 = frequently. For gender prototypicality, the faces were rated for how typical-looking they were for people of that gender, from 1 = not at all typical to 7 = very typical. Person perception ratings were all made on 7-point scales anchored with the labels indicated above.

Results

Four of the rating scales (youthful/mature, cooperative/competitive, naïve/shrewd, honest/deceitful) were reverse-coded so that higher ratings indicated more stereotypically female than male impressions. One additional scale (attractive/unattractive) was reverse-coded so that higher scores indicated greater attractiveness. All but one of the fourteen scales yielded moderate to high inter-rater reliabilities across participants: youthful/mature (Cronbach’s α = .955), age (α = .979), gender prototypicality (α = .850), attractiveness (α = .844), cooperative (α = .920), naïve (α = .880), honest (α = .905), submissive (α = .920), affiliative (α = .949), anger (α = .920), fear (α = .895), sad (α = .634), and joy (α = .898). Intuitive/rational, however, showed relatively low reliability (Cronbach’s α = .340).

Next, a series of paired-sample t-tests was run comparing ratings of male and female faces on each of the fourteen rating scales (see Table 1). In terms of physical appearance, male faces were rated as older and more gender prototypical, whereas female faces were rated as more attractive and babyish. In terms of social and emotion perception, and in accordance with common gender stereotypes, female faces were rated significantly higher than male faces in perceived affiliativeness, submissiveness, cooperation, naivety, honesty, and fearfulness, and lower in perceived anger. Female faces were also rated as marginally more joyful (p < .01, but not surviving the Bonferroni correction). Differences for sadness and intuitiveness, however, did not approach significance. All comparisons reported above were significant after applying a Bonferroni correction for multiple comparisons (α = .0035), except where otherwise noted.
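The reverse-coding and reliability steps described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: it assumes responses on a 1 to 7 scale, and it assumes Cronbach’s α was computed with participants treated as “items” and faces as cases, which is how we read “inter-rater reliability across participants.” The function names and toy ratings are hypothetical.

```python
from statistics import variance


def reverse_code(score, scale_min=1, scale_max=7):
    """Flip a Likert response so that 1 <-> 7, 2 <-> 6, and so on."""
    return scale_min + scale_max - score


def cronbach_alpha(ratings):
    """Cronbach's alpha with raters treated as 'items' and faces as cases.

    ratings: one row per face, one column per rater (all rows the same length).
    """
    k = len(ratings[0])                         # number of raters
    per_rater = list(zip(*ratings))             # each rater's scores across faces
    sum_rater_var = sum(variance(col) for col in per_rater)
    face_totals = [sum(row) for row in ratings]  # summed score for each face
    # alpha = k/(k-1) * (1 - sum of item variances / variance of totals)
    return (k / (k - 1)) * (1 - sum_rater_var / variance(face_totals))
```

Reversing a 1 to 7 response is simply 8 − x, so a rating of 2 on honest/deceitful becomes 6 (closer to the honest anchor). When every rater gives identical ratings, α equals 1; disagreement among raters pulls it down.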

Table 1.

Direct t-test comparisons as a function of gender of face, Pilot Study

| Rating | Female faces, M (SD) | Male faces, M (SD) | t | r² |
|---|---|---|---|---|
| *Physical appearance ratings* | | | | |
| Age | 29.92 (2.95) | **35.76** (3.86) | −7.338*** | .771 |
| Attractiveness | **3.72** (0.67) | 2.71 (0.99) | 3.493*** | .433 |
| Gender prototypicality | 4.14 (0.96) | **5.45** (0.66) | −5.53*** | .667 |
| Babyishness | **3.76** (0.89) | 2.80 (0.72) | 6.965*** | .752 |
| *Emotionality ratings* | | | | |
| Anger | 3.49 (0.57) | **4.68** (0.63) | −5.991*** | .692 |
| Fear | **3.82** (0.74) | 2.47 (0.94) | 4.478*** | .556 |
| Joy | **4.33** (0.58) | 3.49 (0.77) | 3.161** | .384 |
| Sadness | **3.91** (0.70) | 3.61 (0.90) | 1.415 | .111 |
| *Person perception ratings* | | | | |
| Submissive | **4.50** (0.52) | 3.21 (0.65) | 5.920*** | .687 |
| Affiliative | **4.36** (0.59) | 3.21 (0.64) | 5.712*** | .671 |
| Naïve | **3.98** (0.61) | 3.18 (0.56) | 3.874*** | .484 |
| Honest | **4.55** (0.79) | 3.40 (0.81) | 5.409*** | .646 |
| Cooperative | **4.52** (0.62) | 3.34 (0.55) | 5.291*** | .636 |
| Intuitive | **3.94** (0.71) | 3.82 (0.93) | 0.400 | .010 |

Note. df = 16. \*p < .05. \*\*p < .01. \*\*\*p < .001 (i.e., surviving Bonferroni correction for multiple comparisons). Higher means are presented in bold for ease of pattern interpretation.
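The r² column in these tables appears to follow the standard conversion from a t statistic to an effect size, r² = t² / (t² + df); for example, the Age row of Table 1 (t = −7.338, df = 16) gives r² ≈ .771. The sketch below is our illustration rather than the authors' code: it computes a paired-samples t, that conversion, and a Bonferroni threshold. Assuming the family-wise α of .05 was divided across the fourteen scales (our assumption about how the threshold was derived), the per-test threshold is .05/14 ≈ .0036, slightly above the reported .0035. Function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Paired-samples t statistic and its degrees of freedom.

    first[i] and second[i] are the same participant's mean ratings in the
    two conditions (e.g., female faces vs. male faces).
    """
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference / its standard error
    return t, n - 1


def r_squared_from_t(t, df):
    """Proportion of variance explained: r^2 = t^2 / (t^2 + df)."""
    return t ** 2 / (t ** 2 + df)


def bonferroni_alpha(family_alpha, n_tests):
    """Per-test significance threshold under a Bonferroni correction."""
    return family_alpha / n_tests
```

For instance, `r_squared_from_t(2.781, 25)` rounds to .236, the value reported for intuitiveness in the warp comparisons.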

In sum, these results largely confirmed our expectation that this stimulus set of expressively neutral male and female faces would elicit the common gender-stereotypic pattern of social impressions.

Primary Study

To examine the influence of emotion-resembling cues present in otherwise “neutral” faces, we created stimuli in which appearance cues were manipulated to resemble the structural characteristics of anger and fear expressions (e.g., low versus high brows, thin versus full lips). To do this, we used a morphing algorithm to average across the structural properties of a neutral and an expressive face while retaining the textural properties of the original neutral face (i.e., no bulging, wrinkles, furrows, etc., that are characteristic of overt expression). Doing so allowed us to directly examine the impact of such emotion-resembling cues on impression formation. We predicted that neutral-over-fear versus neutral-over-anger warps would generate a pattern of responses similar to that found in the Pilot Study for female versus male faces, respectively. We further predicted that when faces were manipulated in this way, so that male and female faces now shared the same emotion-resembling cues, typical gender-stereotypic patterns of impressions would be attenuated.

Method

Participants

Twenty-six undergraduates were recruited to participate in this study (20 women, 6 men, mean age = 20.08, SD = 2.76) in exchange for partial course credit.

Stimuli and Design

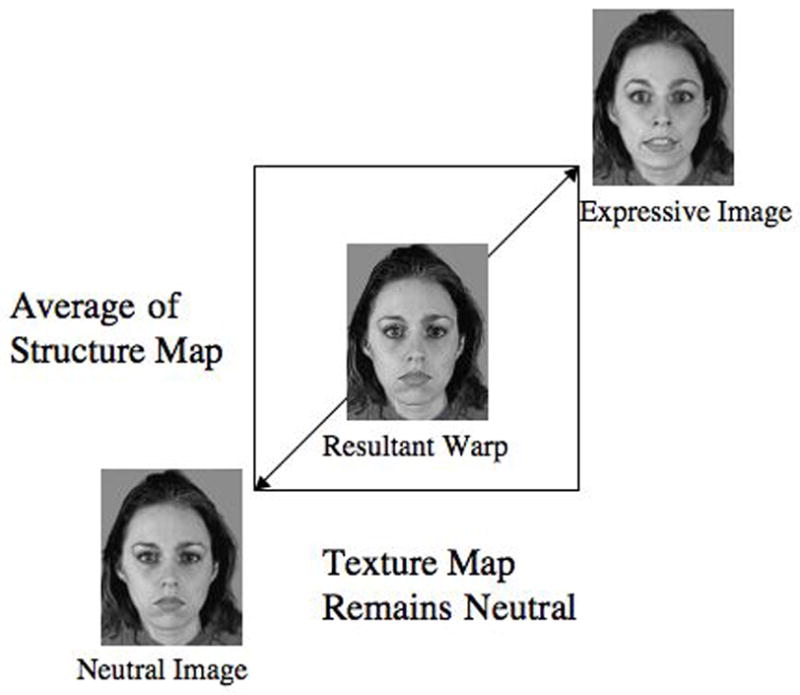

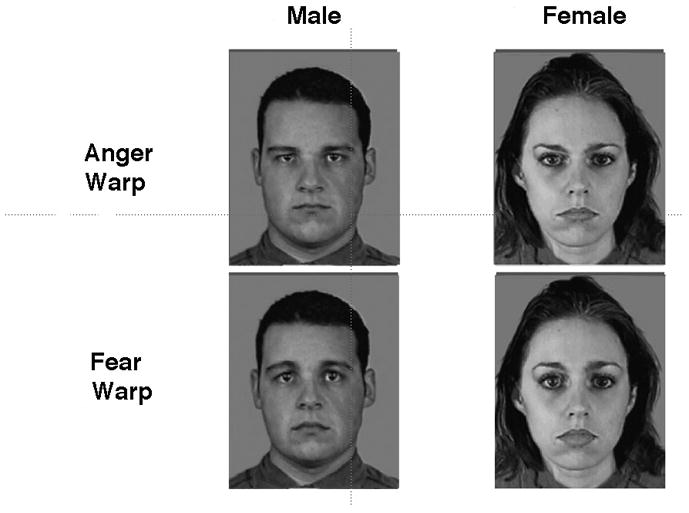

Emotion warps were created using the same eight male and eight female faces rated in the Pilot Study. To manipulate these faces to resemble emotional expressions, we used a morphing program (Morph 2.5™) to generate a 50/50 average of the structural map of a neutral face and that of its corresponding anger or fear expression, while keeping the neutral texture map constant (see Figure 1). This manipulation produced stimuli that were not overtly expressive (no wrinkles, furrows, or creases) but that did contain facial appearance features associated with emotional expressions (e.g., fuller lips, larger eyes, heightened brows; see Figure 2). For each exemplar face, one neutral-over-anger warp and one neutral-over-fear warp were created, but participants saw only one version of each exemplar. Thus, two rating booklets were created and counterbalanced with regard to how neutral-over-anger and neutral-over-fear warps were distributed across participants, with an equal number of participants rating each version of a face. If one booklet included the anger warp of a particular exemplar, the other included the corresponding fear warp, and vice versa. Each booklet included an equal number of anger/fear warps and male/female stimuli, resulting in a 2 (emotion warp) × 2 (face gender) within-subjects factorial design.
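The counterbalancing scheme just described can be sketched as follows. This is an illustrative assumption, not the authors' procedure: the text does not say which exemplars went to which booklet, so the sketch simply alternates within each gender (even-indexed exemplars appear as anger warps in booklet A, odd-indexed ones as fear warps, and booklet B receives the opposite warp). The exemplar IDs and the function name are hypothetical.

```python
def make_booklets(male_faces, female_faces):
    """Build two counterbalanced booklets from lists of exemplar IDs.

    Each entry is (face_id, gender, warp). Every face appears once per
    booklet, and in the opposite warp in the other booklet. An even number
    of exemplars per gender gives equal anger/fear counts in each cell.
    """
    booklet_a, booklet_b = [], []
    for gender, faces in (("male", male_faces), ("female", female_faces)):
        for index, face in enumerate(faces):
            # Hypothetical assignment rule: alternate warps within gender.
            warp_a = "anger" if index % 2 == 0 else "fear"
            warp_b = "fear" if warp_a == "anger" else "anger"
            booklet_a.append((face, gender, warp_a))
            booklet_b.append((face, gender, warp_b))
    return booklet_a, booklet_b
```

With eight male and eight female exemplars, each booklet contains 16 faces, four in each warp × gender cell, and each face is seen as an anger warp by half the participants and as a fear warp by the other half.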

Figure 1.

Example of the stimulus warping manipulation used in the Primary Study, in which a neutral image is averaged with its corresponding fear expression to generate a 50/50 average of the two images’ structural components while holding the neutral image’s texture map constant. The resultant image structurally resembles the fear expression but is not overtly expressive.
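The structural half of this manipulation can be sketched as a weighted average of corresponding landmark coordinates. This is a minimal sketch under stated assumptions: each face is described by a dictionary of named (x, y) landmarks, and the landmark names and coordinates are hypothetical; Morph 2.5’s internal algorithm is not described in the text. The second step, resampling the neutral image’s pixels (its texture) onto the blended landmark positions, typically done with a mesh-based warp, is not shown.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]


def blend_shape(neutral: Dict[str, Point],
                expressive: Dict[str, Point],
                weight: float = 0.5) -> Dict[str, Point]:
    """Return target landmark positions as a weighted average of two shapes.

    weight=0.5 gives the 50/50 structural average; weight=0.0 keeps the
    neutral shape. Only geometry is blended; texture is left untouched.
    """
    if neutral.keys() != expressive.keys():
        raise ValueError("both faces need the same set of landmarks")
    blended = {}
    for name, (nx, ny) in neutral.items():
        ex, ey = expressive[name]
        blended[name] = ((1 - weight) * nx + weight * ex,
                         (1 - weight) * ny + weight * ey)
    return blended
```

For a hypothetical inner-brow landmark at (120, 95) on the neutral face and (120, 85) on the fear face (image y grows downward, so the fear brow sits higher), the 50/50 target is (120, 90): a brow raised halfway toward the fear position, which the texture step would then render without fear-related wrinkles.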

Figure 2.

Examples of male and female neutral facial images warped over their corresponding anger and fear expressions.

Procedure

Participants were run as a group. Each participant was randomly assigned one of the two booklets and rated the stimuli on the same fourteen rating scales as in the Pilot Study. As in the Pilot Study, they were instructed to examine the appearance of each face in the booklet, to estimate the person’s age, and to rate the face on each of the remaining scales using a number between 1 and 7.

Results

In this study, all scales showed moderate to high inter-rater reliability: youthful/mature (Cronbach’s α = .955), age (α = .979), gender prototypicality (α = .850), attractiveness (α = .920), cooperative (α = .920), naïve (α = .774), honest (α = .819), submissive (α = .892), affiliative (α = .916), anger (α = .902), fear (α = .905), sad (α = .781), joy (α = .858), and intuitive/rational (α = .790).

As in the Pilot Study, responses on the same five rating scales were reverse-coded. Mean responses were then calculated for each participant in each of the four cells formed by crossing emotion warp and face gender. These data were submitted to a 2 (warp: neutral-over-anger/neutral-over-fear) × 2 (face gender: male/female) repeated-measures MANOVA with the fourteen impression measures as dependent variables. Overall, there was a main effect of emotion warp, F(14, 12) = 15.145, p < .001, and a main effect of face gender, F(14, 12) = 5.105, p = .004. There was, however, no significant interaction between warp type and face gender, F(14, 12) = 1.094, p = .443.
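The per-participant cell means and the contrasts behind each effect in this 2 × 2 within-subjects design can be sketched for a single rating scale as follows. Because each factor has only two levels, the multivariate test of each effect is equivalent to a one-sample Hotelling’s T² on the corresponding difference scores across the fourteen scales, and its exact F has (p, n − p) degrees of freedom; with n = 26 participants and p = 14 measures this gives (14, 12), matching the F(14, 12) reported above. The data layout, condition labels, and function names are hypothetical, and the T² statistic itself (which requires inverting the 14 × 14 covariance matrix of difference scores) is not computed here.

```python
from collections import defaultdict
from statistics import mean


def cell_means(ratings):
    """Average one scale's ratings within each participant x warp x gender cell.

    ratings: iterable of (participant, warp, gender, score) tuples, where
    warp is "anger" or "fear" and gender is "male" or "female".
    """
    cells = defaultdict(list)
    for participant, warp, gender, score in ratings:
        cells[(participant, warp, gender)].append(score)
    return {cell: mean(scores) for cell, scores in cells.items()}


def effect_scores(means, participant):
    """Warp, gender, and interaction contrasts for one participant on one scale."""
    def m(warp, gender):
        return means[(participant, warp, gender)]

    # Main effect of warp: fear-warp average minus anger-warp average.
    warp_effect = ((m("fear", "female") + m("fear", "male")) / 2
                   - (m("anger", "female") + m("anger", "male")) / 2)
    # Main effect of face gender: female average minus male average.
    gender_effect = ((m("fear", "female") + m("anger", "female")) / 2
                     - (m("fear", "male") + m("anger", "male")) / 2)
    # Interaction: does the fear-minus-anger difference depend on face gender?
    interaction = ((m("fear", "female") - m("anger", "female"))
                   - (m("fear", "male") - m("anger", "male")))
    return warp_effect, gender_effect, interaction


def hotelling_f_df(n_participants, n_measures):
    """Numerator and denominator df of the exact F for a one-sample Hotelling's T^2."""
    return n_measures, n_participants - n_measures
```

A non-significant interaction, as reported here, corresponds to interaction scores whose mean vector across the fourteen scales does not reliably differ from zero.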

Separate univariate analyses (see Table 2 for paired-sample t-test comparisons) confirmed our hypothesis that emotion warps would yield patterns of impression formation similar to those found in the Pilot Study as a function of face gender. That is, neutral-over-fear warps were rated higher than neutral-over-anger warps on affiliativeness, submissiveness, cooperation, naivety, intuitiveness, honesty, fearfulness, sadness, and joy, whereas neutral-over-anger warps were rated higher on anger. In terms of physical appearance, no differences were apparent as a function of emotion warp for perceived age, gender prototypicality, or attractiveness. Fear-warped faces, however, were rated as more babyish than anger-warped faces.

Table 2.

Direct t-test comparisons as a function of expressive warp, Primary Study

| Rating | Fear warps, M (SD) | Anger warps, M (SD) | t | r² |
|---|---|---|---|---|
| *Physical appearance ratings* | | | | |
| Age | 32.09 (4.34) | **32.17** (4.13) | −0.156 | .001 |
| Attractiveness | **3.88** (0.73) | 3.77 (0.77) | 0.534 | .011 |
| Gender prototypicality | **5.07** (0.74) | 4.84 (0.72) | 2.358 | .182 |
| Babyishness | **3.96** (0.66) | 3.71 (0.66) | 2.398* | .187 |
| *Emotionality ratings* | | | | |
| Anger | 3.54 (0.60) | **4.70** (0.71) | −7.834*** | .711 |
| Fear | **4.11** (0.53) | 3.30 (0.57) | 6.420*** | .622 |
| Joy | **4.05** (0.52) | 3.50 (0.53) | 3.279*** | .301 |
| Sadness | **4.17** (0.50) | 3.74 (0.64) | 2.513* | .202 |
| *Person perception ratings* | | | | |
| Submissive | **4.27** (0.45) | 3.15 (0.67) | 7.034*** | .664 |
| Affiliative | **4.26** (0.58) | 3.46 (0.53) | 5.468*** | .545 |
| Naïve | **4.10** (0.55) | 3.33 (0.54) | 4.692*** | .468 |
| Honest | **4.44** (0.55) | 3.57 (0.47) | 6.343*** | .617 |
| Cooperative | **4.45** (0.39) | 3.29 (0.63) | 7.306*** | .681 |
| Intuitive | **4.10** (0.52) | 3.60 (0.57) | 2.781* | .236 |

Note. df = 25. \*p < .05. \*\*p < .01. \*\*\*p < .001. Higher means are presented in bold for ease of pattern interpretation.

Interestingly, ratings of the emotion-warped faces also replicated the pattern of gender-driven effects found in the Pilot Study with unwarped faces. Specifically, female faces were rated higher than male faces on affiliativeness, submissiveness, cooperation, naivety, intuitiveness, honesty, babyishness, fearfulness, sadness, and joy, and were rated as younger, more attractive, and less angry (see Table 3). Inspection of effect sizes across the Pilot and Primary Studies offered no evidence for the predicted attenuation of gender-typical effects in emotion-warped faces.

Table 3.

Direct t-test comparisons as a function of gender of face, Primary Study

| Rating | Female faces, M (SD) | Male faces, M (SD) | t | r² |
|---|---|---|---|---|
| *Physical appearance ratings* | | | | |
| Age | 28.11 (3.04) | **36.15** (5.43) | 12.284*** | .858 |
| Attractiveness | **4.14** (0.76) | 3.51 (0.67) | 3.576*** | .338 |
| Gender prototypicality | 4.61 (0.90) | **5.30** (0.68) | −3.488** | .327 |
| Babyishness | **4.25** (0.80) | 3.42 (0.56) | 6.945*** | .659 |
| *Emotionality ratings* | | | | |
| Anger | 3.64 (0.64) | **4.60** (0.72) | −6.142*** | .601 |
| Fear | **4.28** (0.58) | 3.13 (0.66) | 6.816*** | .650 |
| Joy | **3.97** (0.41) | 3.58 (0.46) | 3.199** | .290 |
| Sadness | **4.25** (0.61) | 3.66 (0.62) | 3.127** | .281 |
| *Person perception ratings* | | | | |
| Submissive | **4.13** (0.48) | 3.30 (0.51) | 7.257*** | .678 |
| Affiliative | **4.18** (0.54) | 3.54 (0.50) | 5.297*** | .529 |
| Naïve | **4.03** (0.49) | 3.40 (0.65) | 3.437*** | .321 |
| Honest | **4.23** (0.49) | 3.79 (0.54) | 3.130** | .282 |
| Cooperative | **4.27** (0.54) | 3.47 (0.49) | 4.850*** | .485 |
| Intuitive | **4.20** (0.60) | 3.50 (0.59) | 3.568*** | .337 |

Note. df = 25. \*p < .05. \*\*p < .01. \*\*\*p < .001. Higher means are presented in bold for ease of pattern interpretation.

General Discussion

The current work examined the impact of emotion overgeneralization on impression formation. First, we confirmed that the neutral female faces selected for this study were perceived in a gender-stereotypic manner relative to the neutral male faces: they were rated as more babyish, submissive, affiliative, naïve, honest, cooperative, fearful, and joyful, and as less angry. Surprisingly, we did not find gender differences in the Pilot Study for ratings of intuitive/rational or for ratings of sadness, though both gave rise to significant gender-typical differences in the Primary Study.

Critically, in the Primary Study, when we warped these same faces over their corresponding anger and fear expressions in order to directly examine the influence of perceptually driven emotion overgeneralization on trait impressions, we found a profile similar to that found in the Pilot Study as a function of gender. Neutral faces warped over anger expressions were rated higher on perceived anger, and neutral faces warped over fear expressions were rated as more fearful, joyful, sad, babyish, submissive, affiliative, naïve, honest, cooperative, and intuitive. Because these effects were consistent across male and female faces, with no evidence of interactions, they suggest a purely perceptually driven influence on impression formation. As predicted, then, the influence of emotion warping on trait impressions closely paralleled the gender-based pattern from the Pilot Study, which provides indirect evidence that emotion overgeneralization may contribute to gender-typical perceptions, even along attributes not typically associated with emotion.

Given the known confounded nature of gender-related facial appearance and expression, we expected that the gender-typical effects found in our Pilot Study would be at least partially due to direct emotion overgeneralization, that is, perceptually rather than stereotypically triggered. This expectation is consistent with previous research showing that the female visage is in some ways perceptually similar to the fearful expression, whereas the male visage is perceptually similar to anger (Hess et al., 2009). In this way, women and men may signal information consistent with gender-emotion expectations even without displaying an overt expression. An important consideration here is that gender differences in facial appearance are themselves part of the cultural stereotype: people expect women to have more submissive, babyfaced features and men to have more dominant, mature features, and these appearance cues in turn happen to directly resemble fear and anger expressions (Marsh et al., 2005; Zebrowitz et al., 2010). Further, gender-incongruent facial appearance has been found to override traditional gender stereotypes, even on core dimensions of social perception such as power and sociability (Friedman & Zebrowitz, 1992).

Based on these considerations, we predicted that the gender-typical effects found in the Pilot Study would be attenuated, or in some cases even reversed (see Adams et al., 2004; Hess et al., 2005), in the Primary Study, where male and female faces were warped over anger and fear expressions. Instead, we found the same gender-typical pattern of effects as in the Pilot Study, with no evidence of attenuation due to the warping procedure. Further, the lack of an interaction between face gender and warp condition suggests that top-down, stereotype-driven impressions and bottom-up, perceptually triggered impressions made robust and independent contributions to impression formation, at least in the context of the current study.

The contribution of learned gender stereotypes to impression formation is well established, and thus this finding is not particularly surprising. The impact of emotion-resembling facial appearance cues, however, represents a novel contribution to our understanding of impression formation, one that is consistent with theoretical claims associated with the concept of emotion overgeneralization (Zebrowitz, 1997). Our findings raise an additional question: How does emotion overgeneralization yield such systematic effects on impression formation, even on traits not typically associated with emotionality per se? To address this question, it is useful first to consider how overt emotional expressions yield similarly consistent effects on impression formation. One recent approach to this question is the Reverse Engineering Model (Hareli & Hess, 2010), which implicates appraisal theory as a primary mechanism by which observed facial expressions can inform stable personality inferences about others. This account suggests that people use the appraisals associated with specific emotions to reconstruct others’ underlying motives, intents, and personal dispositions, from which they then derive stable impressions. Insofar as appraisals associated with emotions can be automatic and nonreflective, this process offers a plausible mechanism by which emotion-resembling features in an otherwise neutral face can likewise drive person perception.

Although relatively stable, facial appearance does change over time due to aging, lifestyle, and environmental factors. The impact of these changes on impression formation and emotion recognition remains relatively uncharted. Early work by Malatesta, Fiore, and Messina (1987) suggests that morphological changes in the face due to aging can be misinterpreted as emotional cues because they resemble aspects of various expressions. Drooping of the eyelids or of the corners of the mouth, for example, might be misinterpreted as sadness. More recently, Hess, Adams, Simard, Stevenson, and Kleck (2012) demonstrated that advanced aging of the face can degrade the clarity of specific emotional expressions. Because emotional communication between younger and older adults can critically affect the quality of life of the latter, particularly in health care settings, future research on how changes in facial appearance shape emotion and social perception, including the emotional messages communicated by neutral faces, could have clear applied significance.

In summary, we found direct evidence that emotion-resembling features of the face can drive social impressions, likely due to overgeneralization of highly adaptive perceptual processes. Once an overgeneralization is perceptually triggered, a cascade of social-cognitive processes arguably unfolds and guides our impressions of and responses to others as we navigate our social world. That we did not find an interaction between stimulus gender and facial appearance suggests that these influences represent independent contributions to impression formation, one stereotype-driven and the other perceptually triggered. Future work examining the interplay of perceptually and stereotypically triggered impressions promises to advance our understanding of the complex nature of impression formation. For now, the evidence indicates that the impressions we form of others are likely driven, in large part, by emotion-resembling cues in facial appearance. This insight helps explain both why our impressions of others are so often nondiagnostic of how they truly are, and why such impressions are nonetheless so widely shared.

Acknowledgments

This research was supported in part by a National Institute on Aging grant (Award # 1 R01 AG035028-01) to REK, UH, and RBA, Jr.

Footnotes

Warping employs an algorithm for averaging across the structural maps of images while holding the textural map of one image constant. In this case, we held the textural map of the neutral facial image constant while averaging across the structural map of its corresponding expressive image after applying carefully aligned landmarks around the eyes, mouth etc. This procedure therefore yielded changes in the relative size and position of facial features without producing the bulging, furrows, wrinkles, crinkles, and changes in contrast that are often apparent during overt displays of emotion.

Contributor Information

Reginald B. Adams, Jr., The Pennsylvania State University

Anthony J. Nelson, The Pennsylvania State University

José A. Soto, The Pennsylvania State University

Ursula Hess, Humboldt-University, Berlin

Robert E. Kleck, Dartmouth College

References

- Adams RB, Jr, Kleck RE. Differences in perceived emotional disposition based on static facial structure. Poster presented at the annual meeting of the Society for Personality and Social Psychology; Savannah, GA; January 2002.

- Bargh JA, Chen M, Burrows L. Automaticity of social behavior: Direct effects of trait construct and stereotype activation on action. Journal of Personality and Social Psychology. 1996;71:230–244. doi: 10.1037//0022-3514.71.2.230.

- Beaupré MG, Cheung N, Hess U. The Montreal Set of Facial Displays of Emotion. Unpublished stimulus set; 2000.

- Becker DV, Kenrick DT, Neuberg SL, Blackwell KC, Smith DM. The confounded nature of angry men and happy women. Journal of Personality and Social Psychology. 2007;92:179–190. doi: 10.1037/0022-3514.92.2.179.

- Birnbaum DW. Preschoolers’ stereotypes about sex differences in emotionality: A reaffirmation. Journal of Genetic Psychology. 1983;143:139–140.

- Briton NJ, Hall JA. Beliefs about female and male nonverbal communication. Sex Roles. 1995;32:79–90.

- Brody LR, Hall JA. Gender and emotion. In: Lewis M, Haviland JM, editors. Handbook of emotions. New York: Guilford Press; 1993. pp. 447–460.

- Brown E, Perrett DI. What gives a face its gender? Perception. 1993;22:829–840. doi: 10.1068/p220829.

- Campbell R, Wallace S, Benson PJ. Real men don’t look down: Direction of gaze affects sex decisions on faces. Visual Cognition. 1996;3:393–412.

- Ekman P, Friesen WV. The facial action coding system. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Fabes RA, Martin CL. Gender and age stereotypes of emotionality. Personality and Social Psychology Bulletin. 1991;17:532–540.

- Fischer A. Sex differences in emotionality: Fact or stereotype? Feminism & Psychology. 1993;3:303–318.

- Friedman H, Zebrowitz LA. The contribution of typical sex differences in facial maturity to sex role stereotypes. Personality and Social Psychology Bulletin. 1992;18:430–438.

- Gilbert DT, Pelham BW, Krull DS. On cognitive busyness: When person perceivers meet persons perceived. Journal of Personality and Social Psychology. 1988;54:733–740.

- Hareli S, Hess U. What emotional reactions can tell us about the nature of others: An appraisal perspective on person perception. Cognition and Emotion. 2010;24:128–140.

- Hess U, Adams RB, Jr, Kleck RE. Dominance, gender and emotion expression. Emotion. 2004;4:378–388. doi: 10.1037/1528-3542.4.4.378.

- Hess U, Adams RB, Jr, Kleck RE. Who may frown and who should smile? Dominance, affiliation, and the display of happiness and anger. Cognition and Emotion. 2005;19:515–536.

- Hess U, Adams RB, Jr, Grammer K, Kleck RE. Sex and emotion expression: Are angry women more like men? Journal of Vision. 2009;9:1–8. doi: 10.1167/9.12.19.

- Hess U, Adams RB, Jr, Simard A, Stevenson MT, Kleck RE. Smiling and sad wrinkles: Age-related changes in the face and the perception of emotions and intentions. Under review.

- Hess U, Blairy S, Kleck RE. The influence of expression intensity, gender, and ethnicity on judgments of dominance and affiliation. Journal of Nonverbal Behavior. 2000;24:265–283.

- Keating CF. Gender and the physiognomy of dominance and attractiveness. Social Psychology Quarterly. 1985;48:61–70.

- Keating CF, Mazur A, Segall MH. Facial gestures which influence the perception of status. Social Psychology Quarterly. 1977;40:374–378.

- Knutson B. Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior. 1996;20:165–182.

- Laser PS, Mathie VA. Face facts: An unbidden role for features in communication. Journal of Nonverbal Behavior. 1982;7:3–19.

- Malatesta CZ, Fiore MJ, Messina JJ. Affect, personality, and facial expressive characteristics of older people. Psychology and Aging. 1987;2:64–69. doi: 10.1037//0882-7974.2.1.64.

- Marsh AA, Adams RB, Jr, Kleck RE. Why do fear and anger look the way they do? Form and social function in facial expressions. Submitted for publication; 2005.

- Said CP, Sebe N, Todorov A. Structural resemblance to emotional expressions predicts evaluation of emotionally neutral faces. Emotion. 2009;9:260–264. doi: 10.1037/a0014681.

- Van Overwalle F, Drenth T, Marsman G. Spontaneous trait inferences: Are they linked to the actor or to the action? Personality and Social Psychology Bulletin. 1999;25:450–462.

- Zebrowitz L. Reading faces: Window to the soul? Boulder: Westview Press; 1997.

- Zebrowitz L, Kikuchi M, Fellous JM. Facial resemblance to emotions: Group differences, impression effects, and race stereotypes. Journal of Personality and Social Psychology. 2010;98:175–189. doi: 10.1037/a0017990.