Abstract

Context:

Neurocognitive testing is a recommended component in a concussion assessment. Clinicians should be aware of age and practice effects on these measures to ensure appropriate understanding of results.

Objective:

To assess age and practice effects on computerized and paper-and-pencil neurocognitive testing batteries in collegiate and high school athletes.

Design:

Cohort study.

Setting:

Classroom and laboratory.

Patients or Other Participants:

Participants consisted of 20 collegiate student-athletes (age = 20.00 ± 0.79 years) and 20 high school student-athletes (age = 16.00 ± 0.86 years).

Main Outcome Measure(s):

Hopkins Verbal Learning Test scores, Brief Visual-Spatial Memory Test scores, Trail Making Test B total time, Symbol Digit Modalities Test score, Stroop Test total score, and 5 composite scores from the Immediate Post-Concussion Assessment and Cognitive Testing (ImPACT) served as outcome measures. Mixed-model analyses of variance were used to examine each measure.

Results:

Collegiate student-athletes performed better than high school student-athletes on ImPACT processing speed composite score (F1,38 = 5.03, P = .031) at all time points. No other age effects were observed. The Trail Making Test B total time (F2,66 = 73.43, P < .001), Stroop Test total score (F2,76 = 96.85, P < .001), and ImPACT processing speed composite score (F2,76 = 5.81, P = .005) improved in test sessions 2 and 3 compared with test session 1. Intraclass correlation coefficient calculations demonstrated values ranging from 0.12 to 0.72.

Conclusions:

An athlete's neurocognitive performance may vary across sessions. It is important for clinicians to know the reliability and precision of these tests in order to properly interpret test scores.

Keywords: concussions, traumatic brain injuries, serial testing

Key Points

An athlete's neurocognitive test performance may vary across serial testing sessions. To properly interpret score variations, the clinician must know the reliability and precision of these tests.

With practice effects, the greatest improvement in test scores occurs between the first and second administrations of a neurocognitive test.

Baseline measures of processing speed may need to be reassessed as an athlete ages.

Concussion is a serious injury that occurs at all levels of sport and can affect the cognitive, physical, and behavioral abilities of an athlete.1–8 Therefore, it is important that these injuries be properly evaluated and managed. Currently, a comprehensive evaluation is recommended, in which clinical attributes, symptoms, neurocognitive performance, and balance are assessed.9–11 This multifaceted clinical model is more than 90% sensitive in identifying concussion;12 however, when any of these measures is used in isolation, the sensitivity often drops to less than 60%.12 One important component of this evaluation is the neurocognitive assessment. This can be performed using either traditional paper-and-pencil neurocognitive tests or computerized neurocognitive tests. For reasons including ease of administration, reduced testing time, and availability of the tests to sports medicine clinicians, computerized neurocognitive tests have gained considerable popularity in sports medicine settings. Despite their widespread use, the psychometric properties, practice effects, and age effects of neurocognitive testing are not well understood in the athletic population.

The vast majority of people participating in contact and collision sports are under 19 years of age,13 and an earlier study14 suggested that high school athletes may be more at risk for concussion than college athletes. Given the number of athletes participating across many age levels, clinicians should be mindful of any age effects in the interpretation of both baseline and postinjury data. Furthermore, high school athletes may recover at a slower rate than do collegiate athletes after a concussion.15

Performance on many neurocognitive tests may improve with prior exposure to the testing stimuli and procedures, even in the absence of any actual recovery by the patient.16 This false improvement arises from 2 factors: the athlete has already learned the procedures involved in taking the test, and he or she already knows the specific content of the tests.17 Improvement in test performance due to practice effects may inflate neurocognitive test scores, which can mimic neurocognitive recovery and may lead to returning an athlete to competition prematurely. One example of such practice effects is processing speed on the Immediate Post-Concussion Assessment and Cognitive Testing (ImPACT).18 Conversely, lack of improvement with serial assessments on neurocognitive tests can imply continued concussive impairment.19

Serial (repeated) testing is often used to track an athlete's neurocognitive recovery over time.2,4,19 The consistency of an individual's performance on these measures must be carefully considered when interpreting postinjury serial neurocognitive testing. Reliability relates to consistency of measurement on the same task at different time intervals.20,21 Reliability is a property of tests that can be established by various statistical methods: test-retest, alternate forms, or split-half or internal consistency. It is examined and estimated through the systematic and ongoing evaluation of different kinds of reliability evidence, applied in different clinical and nonclinical contexts, to numerous groups. Efforts have been made to address these psychometric issues in recent studies.19,22,23 One recent investigation22 suggested that 3 of the most commonly used computerized tests had only low to moderate test-retest reliability across test sessions; however, the interval between test sessions varied widely. In addition, participants in this study were asked to complete 3 computerized testing batteries during each of the 3 testing sessions, which may not be clinically applicable.

These psychometric factors should not be dismissed. Rather, they should be viewed as practical and essential considerations when using cognitive testing to make concussion management and return-to-play decisions. Therefore, the purpose of our study was to examine age-group differences (collegiate versus high school) on neurocognitive performance in healthy athletes with no history of concussion in the last 5 years. Two additional aims were to compare the consistency (reliability) of responses across all participants and practice effects between the age groups.

METHODS

Participants

Forty healthy and active volunteers participated in this study. Participants consisted of 20 National Collegiate Athletic Association Division I student-athletes (age = 20.00 ± 0.79 years) and 20 student-athletes (age = 16.00 ± 0.86 years) from 2 high schools. Each age group contained 10 males and 10 females. Participants were classified as healthy if they had no history of diagnosed concussion within the last 5 years and no known neurologic, psychiatric, or psychological conditions that would affect cognition. They were classified as active athletes if they engaged in athletics 3 or more days per week. Individuals 18 years of age were excluded in order to maintain a clear separation between the high school and collegiate groups. Effort level for each participant's scores was evaluated by assessing the ImPACT impulse control composite score: a score greater than 30 constituted an invalid test. All participants included in the study met the criteria for valid tests.
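The inclusion rules and the ImPACT effort check above are simple enough to express as predicates. The sketch below is only an illustration of those stated rules, not the study's screening procedure; the `Volunteer` record and both function names are hypothetical, and treating a concussion exactly 5 years ago as "not recent" is our assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Volunteer:
    # Hypothetical record layout; the study does not describe how data were stored.
    age_years: int
    years_since_concussion: Optional[float]  # None = no diagnosed concussion
    athletic_days_per_week: int
    has_cognitive_condition: bool  # neurologic, psychiatric, or psychological


def is_eligible(v: Volunteer) -> bool:
    """Inclusion rules paraphrased from the Participants section."""
    recent_concussion = (v.years_since_concussion is not None
                         and v.years_since_concussion < 5)
    healthy = not recent_concussion and not v.has_cognitive_condition
    active = v.athletic_days_per_week >= 3
    return healthy and active and v.age_years != 18  # 18-year-olds excluded


def impact_test_is_valid(impulse_control_composite: float) -> bool:
    """Effort check used here: an impulse control composite above 30 is invalid."""
    return impulse_control_composite <= 30
```

For example, `is_eligible(Volunteer(16, None, 5, False))` is `True`, whereas an otherwise identical 18-year-old is excluded.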

Instrumentation

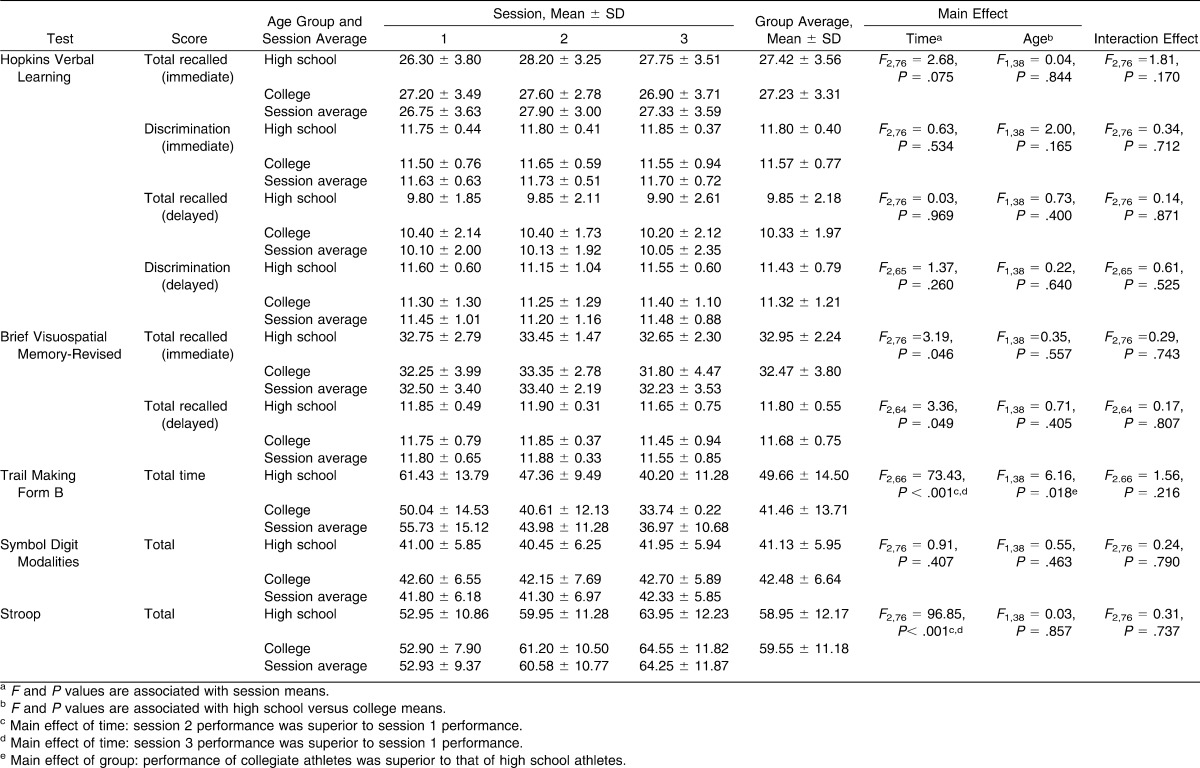

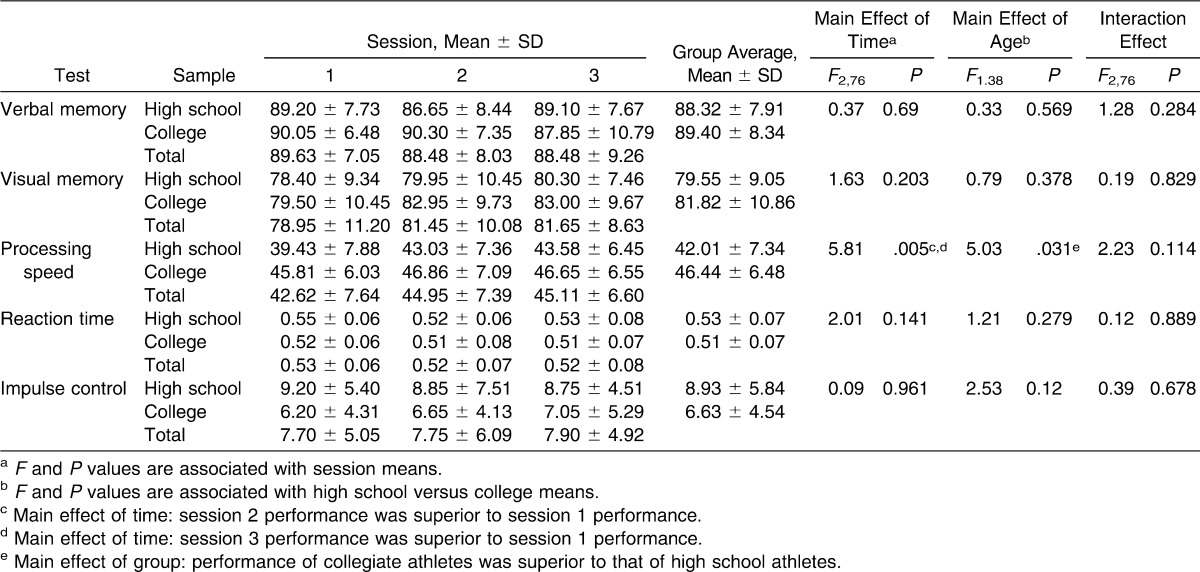

Participants were tested on both a computer-based test battery and a traditional paper-and-pencil–based test battery to assess neurocognitive performance during 3 test sessions. Outcome measures for each test are presented in Tables 1 and 2. The paper-and-pencil battery was designed to include tests theoretically measuring cognitive domains similar to those assessed in the computerized battery.

Table 1.

Main and Interaction Effects for Paper-and-Pencil Neuropsychological Test Scores

Table 2.

Main Effects and Interaction Effects for the ImPACT Composite Scores

The computer-based test battery used was the ImPACT (version 3; ImPACT Applications, Inc, Pittsburgh, PA). This computerized neurocognitive testing program assesses a number of cognitive processes, including visual and verbal memory, attention, working memory, processing speed, reaction time, impulse control, and response inhibition. The 6 subtests are Word Memory, Design Memory, X's and O's, Symbol Match, Color Match, and Three Letters. Five composite scores are provided in the clinical report: verbal memory, visual memory, processing speed, reaction time, and impulse control. A self-reported postconcussion symptom scale (PCSS) is also included in the ImPACT program, but we did not analyze symptom scores in this study. Reported reliability of the ImPACT composite scores, expressed as intraclass correlation coefficient (ICC) values, ranges from 0.23 to 0.46 for verbal memory, 0.32 to 0.65 for visual memory, 0.38 to 0.75 for processing speed, 0.39 to 0.68 for reaction time, and 0.15 to 0.54 for impulse control.22,24

Paper-and-Pencil Battery

Hopkins Verbal Learning Test–Revised (HVLT-R)25

This test is a measure of verbal learning and memory in which the clinician reads 12 words aloud to the athlete. The athlete then attempts to immediately recall as many of the words as possible in any order. This process is repeated 2 more times for a total of 3 free-recall trials. The other paper-and-pencil tests described below are then completed. At the end of the traditional neurocognitive test battery, the athlete is asked to complete a delayed trial in which he or she tries to free recall as many words as possible from the original list. Lastly, a discrimination trial is completed, in which the clinician reads 24 words aloud to the athlete: the 12 words from the original list, for which a response of yes is expected, and 12 distractor words (6 semantically related and 6 semantically unrelated), for which a response of no is expected. Three alternate forms (A, B, and C) were used to reduce learning effects across testing sessions. Reliability for the HVLT-R is lower than for some of the other measures included in the study and ranges from 0.36 to 0.49.26
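The discrimination trial is conventionally summarized as a recognition discrimination index: hits (original-list words answered yes) minus false positives (distractors answered yes). The paper does not spell out its scoring formula, so the sketch below assumes that conventional definition; the function name and the toy words are hypothetical and are not taken from an actual HVLT-R form.

```python
from typing import Iterable, Set


def discrimination_index(endorsed: Iterable[str], targets: Set[str],
                         distractors: Set[str]) -> int:
    """Recognition hits minus false positives (range -12 to 12 for 12 + 12 words)."""
    said_yes = set(endorsed)
    hits = len(said_yes & targets)                 # "yes" to an original-list word
    false_positives = len(said_yes & distractors)  # "yes" to a distractor word
    return hits - false_positives


# Toy example with made-up words (not an actual HVLT-R form):
targets = {"lion", "tent", "pearl"}
distractors = {"tiger", "cabin", "spoon"}
print(discrimination_index(["lion", "tent", "tiger"], targets, distractors))  # 1
```

Subtracting false positives keeps an athlete who answers yes to every word from earning a perfect score.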

Brief Visuospatial Memory Test–Revised (BVMT-R)27

This test assesses the participant's visual-spatial memory with 3 learning trials and a delayed free-recall trial. Participants must learn and reproduce 6 abstract designs arranged in 2 columns and 3 rows. Three alternate forms were used (1, 2, and 3) to reduce learning effects across testing sessions. The BVMT-R is moderately to highly reliable, with values ranging from 0.73 to 0.91.28

Trail Making Test Form B (TMT-B; Trails B)29

This test assesses the participant's visual scanning, attention, mental flexibility, and visual-motor speed. The TMT-B requires the participant to draw a continuous line connecting circles in ascending order, alternating between numbers (1 through 13) and letters (A through L). The score is the time, in seconds, required to complete the test. Only 1 form was used for this test. In most previously published traditional neuropsychology literature, the Trail Making Test Form A (Trails A) is completed before Trails B; however, Trails B is more sensitive than Trails A for cognitive flexibility,30 brain dysfunction, and sport concussions.2 Because of these previous findings regarding sport-related concussion,2 we omitted Trails A from our testing battery and included only TMT-B, as other authors2 have done. Reliability of the TMT-B is moderate to high, ranging from 0.65 to 0.85.31,32
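The alternation rule can be made concrete by generating the target sequence. The sketch assumes the standard 25-circle form (numbers 1 through 13 and letters A through L); the counts are parameters, and both function names are hypothetical. It checks only sequencing; the study's actual score was completion time.

```python
import string
from typing import List, Optional


def trails_b_sequence(n_numbers: int = 13, n_letters: int = 12) -> List[str]:
    """Correct Trails B order: 1, A, 2, B, ... alternating numbers and letters."""
    letters = string.ascii_uppercase[:n_letters]
    seq: List[str] = []
    for i in range(n_numbers):
        seq.append(str(i + 1))
        if i < len(letters):
            seq.append(letters[i])
    return seq


def first_sequencing_error(attempt: List[str]) -> Optional[int]:
    """Index of the first circle connected out of order, or None if none so far."""
    for i, (got, expected) in enumerate(zip(attempt, trails_b_sequence())):
        if got != expected:
            return i
    return None


print(" ".join(trails_b_sequence()[:6]))  # 1 A 2 B 3 C
```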

Symbol Digit Modalities Test (SDMT)33

This test assesses psychomotor speed, visual short-term memory, attention, and concentration. Participants are asked to fill in a series of empty boxes underneath symbols with the corresponding number, using a key on top of the test form to identify which number goes with each symbol. The score was calculated as the number of correct responses in 60 seconds (abbreviated from the typical 90 seconds to shorten overall testing time). Three alternate forms were used (A, B, and C) to reduce learning effects across test sessions. Reliability ranges from 0.82 to 0.87.32,34
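Scoring reduces to counting boxes filled with the digit that the key assigns to their symbol before time is called (here, after 60 seconds). The sketch below is a hypothetical illustration of that rule. Symbols are represented by placeholder strings, and unreached boxes are recorded as `None`.

```python
from typing import Dict, List, Optional


def sdmt_score(key: Dict[str, int], symbols: List[str],
               answers: List[Optional[int]]) -> int:
    """Number of correct digits written before the examiner called time.

    key     -- symbol -> digit pairs printed at the top of the form
    symbols -- the symbols in the test boxes, in order
    answers -- digit written under each box when time expired; None if not reached
    """
    return sum(1 for sym, ans in zip(symbols, answers)
               if ans is not None and key[sym] == ans)
```

For example, with `key = {"a": 1, "b": 2}`, the symbols `["a", "b", "a", "b"]`, and the answers `[1, 1, 1, None]`, the score is 2.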

Stroop Test35

This test assesses speed of processing and cognitive flexibility. A participant is given a page with columns of color names (red, green, and blue), which are printed in different font colors, and asked to say the name of the font color in which each word is printed, ignoring the word that was spelled out. For example, if the printed word “red” appears in blue color font, the answer should be “blue.” The person is given 45 seconds to correctly identify the font color of as many words as possible. We did not administer the word reading and color naming subtests. Only the color-word subtest was given, and only 1 form was used for this test. Reliability ranges from 0.54 to 0.60.36,37
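The interference in the color-word subtest comes from the mismatch between the printed word and its ink color. The sketch below assumes that every item on the color-word page is incongruent (word and ink always differ). It builds such items and scores responses by the ink color, as in the "red" printed in blue example. Both function names are hypothetical.

```python
import random
from typing import List, Tuple

COLORS = ("red", "green", "blue")


def make_incongruent_items(n: int, seed: int = 0) -> List[Tuple[str, str]]:
    """(word, ink) pairs in which the printed word never matches its ink color."""
    rng = random.Random(seed)
    items = []
    for _ in range(n):
        word = rng.choice(COLORS)
        ink = rng.choice([c for c in COLORS if c != word])
        items.append((word, ink))
    return items


def score_color_word(items: List[Tuple[str, str]], responses: List[str]) -> int:
    """Count responses that name the ink color rather than read the word."""
    return sum(1 for (_word, ink), said in zip(items, responses) if said == ink)
```

Because every item is incongruent in this sketch, an athlete who reads the words instead of naming the ink scores 0.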

Procedures

High school participants reported to a classroom at their respective high schools, and collegiate participants reported to a sports medicine research laboratory. A single certified athletic trainer, trained in the administration of neurocognitive testing, administered all tests. No more than 2 participants were tested at the same time. All participants reported to their respective testing site for a total of 3 visits, with at least 24 hours but no more than 72 hours between visits (average time between sessions 1 and 2 = 1.8 ± 0.61 days and between sessions 2 and 3 = 1.6 ± 0.59 days). Each testing session lasted approximately 1 hour. During each session, all participants completed both the ImPACT and the traditional battery of 5 paper-and-pencil tests in counterbalanced order. The battery (computerized or traditional) with which the first participant began was chosen at random (ie, coin flip). Each subsequent participant began with the battery that the previous participant had not started with, and each participant kept the same battery order for all 3 test sessions.
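The counterbalancing scheme is simple alternation after a single random draw. The sketch below models the coin flip with `random.Random.choice`. The function and battery labels are hypothetical. Each tuple gives one participant's battery order, which in this design was reused in all 3 sessions.

```python
import random
from typing import List, Optional, Tuple

BATTERIES = ("computerized", "paper-and-pencil")


def assign_orders(n_participants: int,
                  rng: Optional[random.Random] = None) -> List[Tuple[str, str]]:
    """Random start for the first participant, then alternate starting battery."""
    if rng is None:
        rng = random.Random()
    start = rng.choice(BATTERIES)  # the "coin flip" for participant 1
    orders = []
    for _ in range(n_participants):
        other = BATTERIES[1] if start == BATTERIES[0] else BATTERIES[0]
        orders.append((start, other))
        start = other  # next participant begins with the opposite battery
    return orders
```

With an even number of participants, alternation guarantees that exactly half begin with each battery.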

The rate of testing (how fast the individual completed the test) was determined by the participant for both the ImPACT and paper-and-pencil test batteries. Upon completion of 1 test, the participant confirmed that he or she was ready to proceed to the next test and continued until all tests for that battery were completed. Upon completion of 1 test battery, the participant began the remaining test battery after a 5-minute rest period. The test session was concluded once both test batteries were completed.

Data Analysis

One 2 × 3 mixed-model analysis of variance (age × time) was calculated for each of the 14 outcome measures. For each outcome measure, analysis of variance was conducted to examine the main effects for group (age) and test time (practice effects) to determine differences between collegiate and high school athletes for the ImPACT and paper-and-pencil neurocognitive test scores. Interaction effects were analyzed to examine the joint effects of age and test time (practice) for each outcome measure.
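The study ran these analyses in SPSS. As an illustration only (this is not the authors' code), the sketch below computes the F ratios for a group × session mixed design from sums of squares. It assumes complete data and applies no sphericity correction. With 40 athletes in 2 groups tested 3 times, its error degrees of freedom are 38 (between subjects) and 76 (within subjects). These match the F1,38 and F2,76 reported in the Results; reported values that differ from these are not reproduced by this sketch.

```python
from typing import Dict, List, Tuple


def mixed_anova(data: Dict[str, List[List[float]]]) -> Dict[str, Tuple[float, int, int]]:
    """Two-way mixed ANOVA: one between factor (group), one within factor (session).

    data maps each group label to a list of subjects; each subject is a list of
    k scores, one per test session. Assumes complete data for every subject.
    Returns {effect: (F, df_effect, df_error)} with no sphericity correction.
    """
    groups = list(data.values())
    subjects = [s for g in groups for s in g]
    G, N, k = len(groups), len(subjects), len(subjects[0])
    grand = sum(sum(s) for s in subjects) / (N * k)

    def sq(x: float) -> float:
        return (x - grand) ** 2

    ss_total = sum(sq(y) for s in subjects for y in s)
    ss_subjects = k * sum(sq(sum(s) / k) for s in subjects)
    ss_group = k * sum(len(g) * sq(sum(map(sum, g)) / (len(g) * k)) for g in groups)
    ss_time = N * sum(sq(sum(s[t] for s in subjects) / N) for t in range(k))
    ss_cells = sum(len(g) * sq(sum(s[t] for s in g) / len(g))
                   for g in groups for t in range(k))
    ss_gxt = ss_cells - ss_group - ss_time

    # Error terms: subjects within groups (between) and the residual (within).
    ss_err_b = ss_subjects - ss_group
    ss_err_w = ss_total - ss_subjects - ss_time - ss_gxt

    df_g, df_t, df_gt = G - 1, k - 1, (G - 1) * (k - 1)
    df_err_b, df_err_w = N - G, (N - G) * (k - 1)
    ms_err_b, ms_err_w = ss_err_b / df_err_b, ss_err_w / df_err_w
    return {
        "group": ((ss_group / df_g) / ms_err_b, df_g, df_err_b),
        "time": ((ss_time / df_t) / ms_err_w, df_t, df_err_w),
        "group x time": ((ss_gxt / df_gt) / ms_err_w, df_gt, df_err_w),
    }
```

In this sketch, the age main effect is tested against between-subject variability. The session and interaction effects are tested against within-subject residual variability.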

An ICC [2,1] with standard error of measurement (SEM) was calculated to determine the consistency of the athletes' performance across serial neurocognitive tests for each of the 14 clinically relevant outcome measures. Pearson bivariate correlations were used to examine correlation of these measures across time in the combined sample. The change scores within each group (college and high school) were compared with the reliable change indices (RCIs) previously published for each ImPACT composite measure.19 These RCIs allow researchers and clinicians to determine whether a change across test sessions is meaningful.
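ICC [2,1] is the Shrout and Fleiss two-way random-effects, absolute-agreement, single-measure coefficient. In the sketch below, rows are athletes and columns are test sessions. Note that the SEM has more than one convention. Here we assume the SD of all scores pooled across sessions; the paper does not say which SD was used. Neither function reproduces SPSS output exactly, and both names are hypothetical.

```python
import math
from typing import List


def icc_2_1(scores: List[List[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    scores: one row per athlete, one column per test session (complete data).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(r) / k for r in scores]
    col_means = [sum(r[j] for r in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between athletes
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_total = sum((x - grand) ** 2 for r in scores for x in r)
    ss_err = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def sem(scores: List[List[float]], icc: float) -> float:
    """Standard error of measurement: pooled SD of all scores times sqrt(1 - ICC)."""
    flat = [x for r in scores for x in r]
    mean = sum(flat) / len(flat)
    sd = math.sqrt(sum((x - mean) ** 2 for x in flat) / (len(flat) - 1))
    return sd * math.sqrt(1 - icc)
```

Because the session term (`msc`) enters the denominator, a systematic practice effect across sessions lowers ICC [2,1] even when athletes keep their rank order.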

To analyze the data, we used SPSS (version 16.0; SPSS Inc, Chicago, IL). Mean scores and standard deviations were calculated for each outcome measure. An a priori α level of significance was set at .05 for all analyses. Because our 9 paper-and-pencil outcome measures may be related, we adjusted our level of significance to .0056 for all analyses related to the paper-and-pencil testing battery. The .05 level was applied for the ImPACT composite score measures. We calculated that 20 participants per group would be needed for an effect size of 0.80 and power of 0.80.
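The adjusted threshold of .0056 matches a Bonferroni correction across the 9 paper-and-pencil outcome measures. The text does not name the method, so this is our reading of it:

```latex
\alpha_{\text{adj}} = \frac{\alpha}{m} = \frac{.05}{9} \approx .0056
```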

RESULTS

Effects of Age and Practice

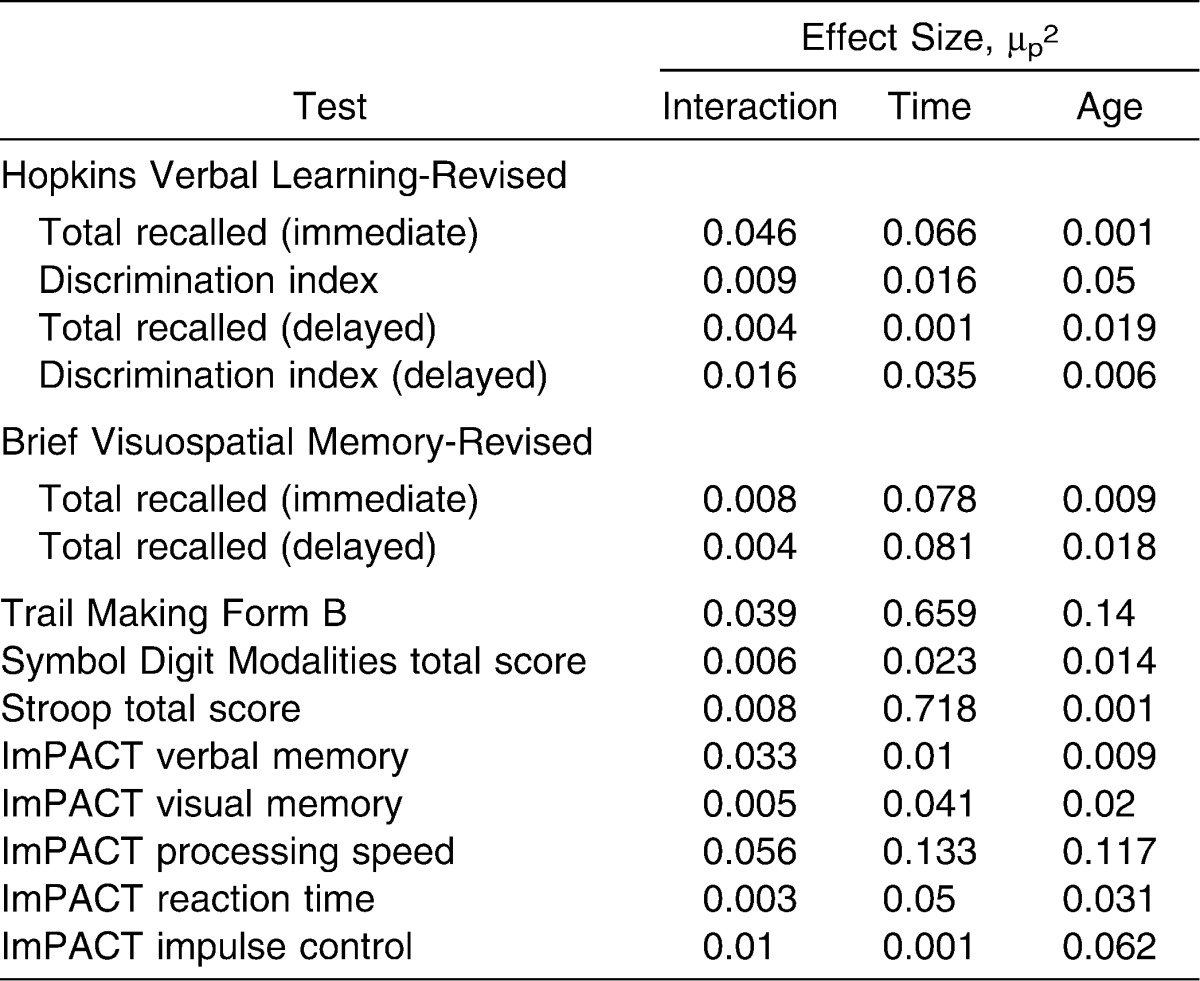

No significant interaction effects were noted for the computerized or paper-and-pencil batteries (Tables 1 and 2). No statistical differences were observed for the effect of age on any of the paper-and-pencil outcome measures. A main effect of age was observed for ImPACT processing speed score (F1,38 = 5.03, P = .031) whereby college students performed better than did high school students. Effect sizes for the main effects and interaction effect related to each outcome measure are shown in Table 3.

Table 3.

Effect Sizes for Outcome Measures

A main effect of test session was seen for TMT-B total time (F2,66 = 73.43, P < .001), Stroop Test total score (F2,76 = 96.85, P < .001), and ImPACT processing speed composite score (F2,76 = 5.81, P = .005). For each of these measures, averages for test sessions 2 and 3 were significantly better than for session 1 (Tables 1 and 2). TMT-B performance improved by 22% from session 1 to session 2 and by more than 30% from session 1 to session 3. The ImPACT processing speed scores improved by more than 5% from session 1 to session 2 and from session 1 to session 3. Performance on the Stroop Test improved by 14% from session 1 to session 2 and by 21% from session 1 to session 3. No differences were seen between sessions 2 and 3, suggesting that test scores had stabilized by this point. Effect sizes for all measures are presented in Table 3.
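Percentage improvement depends on the direction of scoring. For TMT-B, a lower time is better. For the Stroop Test and ImPACT processing speed, a higher score is better. The sketch below signs the change so that a positive value always means improvement. We assume that the percentages were computed from session means relative to the earlier session. The function name and the input values are hypothetical and are not taken from the study's tables.

```python
def percent_improvement(before: float, after: float,
                        lower_is_better: bool = False) -> float:
    """Percent change relative to the earlier session; positive = improvement."""
    change = (before - after) if lower_is_better else (after - before)
    return 100.0 * change / before


# Hypothetical session means, for illustration only:
print(round(percent_improvement(60.0, 46.8, lower_is_better=True), 1))  # 22.0
print(round(percent_improvement(40.0, 45.6), 1))  # 14.0
```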

Reliability and Precision

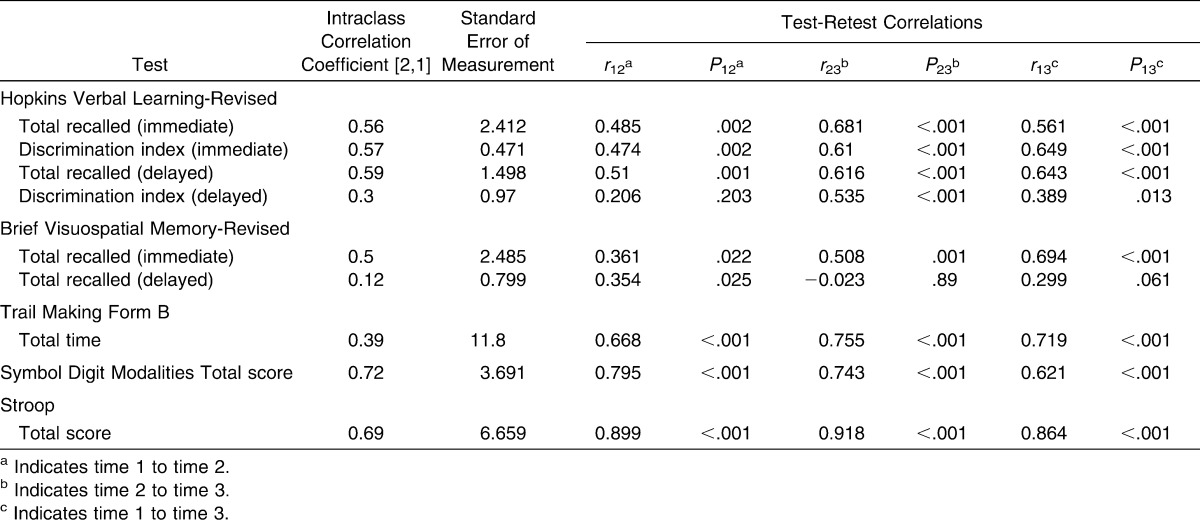

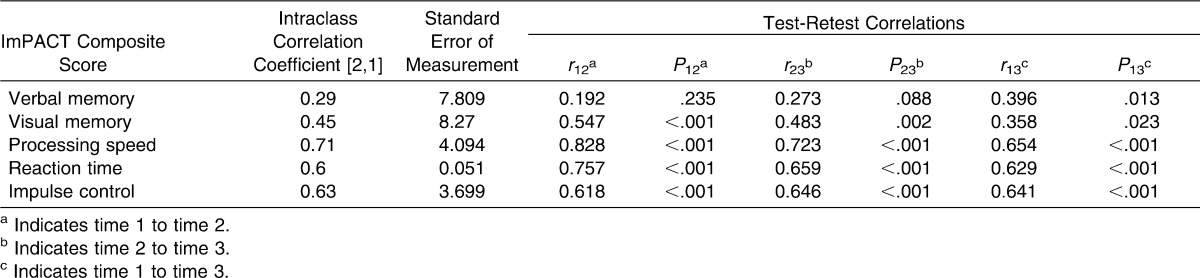

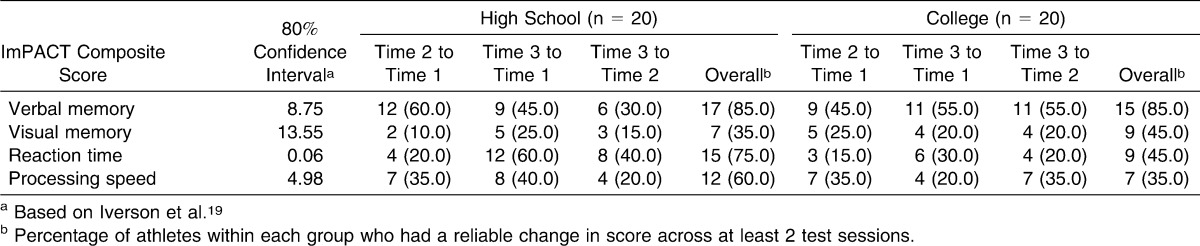

The ICC values ranged from 0.12 to 0.72. The 3 lowest values were for BVMT-R total delayed recall (ICC [2,1] = 0.12), ImPACT verbal memory composite score (ICC [2,1] = 0.29), and HVLT-R delayed discrimination index (ICC [2,1] = 0.30). The 3 highest values were for SDMT total score (ICC [2,1] = 0.72), ImPACT processing speed composite score (ICC [2,1] = 0.71), and Stroop total score (ICC [2,1] = 0.69). Test-retest correlations ranged from low to high depending on the outcome. Lists of ICCs, SEMs, and test-retest correlations for all variables are provided in Tables 4 and 5. Table 6 compares our data with previously published reliable change indices19. It shows the percentage of athletes in each group whose performance changed reliably across test sessions (ie, at a clinically meaningful level).

Table 4.

Reliability and Precision of Paper-and-Pencil Neuropsychological Test Scores

Table 5.

Reliability and Precision of ImPACT Composite Scores

Table 6.

Athletes with Reliable Change (Percent Improvement or Decline) Across Test Sessions on ImPACT Composite Scores

DISCUSSION

Overall, our most clinically relevant findings concern the variability in performance on measures included in both the computerized and paper-and-pencil testing across sessions. These findings were most noticeable between test sessions 1 and 2. These results highlight the need to understand this variability and to control for as many factors as possible to produce more stable results across serial testing sessions.

Age and Practice Effects

One purpose of our study was to determine if age affects neurocognitive test performance. Of all the tests we used, age-related differences were found only for ImPACT processing speed composite scores, with collegiate athletes performing better than high school athletes across all 3 test sessions. This result supports the finding of Iverson et al38 that adolescents (ages 13–18 years) displayed age effects for processing speed. Hunt and Ferrara39 also observed age-related differences among high school students on the TMT-B and suggested that measures of processing speed may differ by age group. These differences presumably reflect underlying neuromaturational processes, that is, ongoing brain development between adolescence and early adulthood. This interpretation is consistent with the changes in cognitive maturity and decline described in the current literature.40,41

Clinicians should be aware of this difference between age groups when evaluating an athlete's performance on processing-speed measures. Most importantly, these findings suggest that a baseline score (at least on these types of measures) for a young athlete should be reassessed once he or she reaches college. Future researchers should continue to monitor the effects of age and study a larger spectrum of age groups, including athletes at the high school, college, and even professional levels.

In addition, practice effects were similar across the collegiate and high school athletes, with the most drastic improvements occurring between test sessions 1 and 2. Overall, both groups improved on the TMT-B, Stroop Test, and ImPACT processing speed composite score. These results reflect those in similar studies19,23 and indicate that some orientation to a task may be needed to obtain a stable baseline measure. Similarly, in a different patient population, previous authors42 suggested that dual baseline testing (obtaining a second baseline measurement) may be most useful for obtaining a stabilized baseline score on some measures of cognitive function. These findings may also indicate that individuals who undergo postinjury tests on 2 days close together, and who have not taken a baseline test for several months or years, may exhibit practice effects between those sessions. Conversely, the lack of a learning effect after multiple postinjury test sessions may itself indicate deficits,43 although few investigators have examined this point empirically. Furthermore, although the previous literature has identified practice effects on many measures of neurocognitive function, a variety of factors may cause variability in performance. Therefore, apparent practice effects should be interpreted with caution.44 The greatest practice effects were seen on the 2 paper-and-pencil neurocognitive tests that had only a single form each: TMT-B and the Stroop Test. Both the high school and collegiate groups had significantly lower Trails B completion times in sessions after the initial test. Because Trails B is scored by the time to task completion, a lower time reflects better performance. Performance in correct color-word naming on the Stroop Test also improved. These results highlight the false improvement that can occur with an athlete's repeated exposure to the same test form, which may result in premature return to play.

Among the paper-and-pencil tests with alternate (and presumably equivalent) forms, observed practice effects were minimal and statistically nonsignificant. In particular, no significant practice effects were found on the BVMT-R, HVLT-R, or SDMT. However, although alternate forms can factor out the content practice effect, they do not factor out the procedural practice effect from identical test instructions.17 On the ImPACT, practice effects on the processing speed composite were similar to those reported by Iverson et al in 2003.19

Reliability and Consistency

In sports medicine, neurocognitive testing is used to identify cognitive deficits and track an athlete's improvement over time. Consistency of the athlete's performance and stability of the actual measure are difficult to differentiate. Regardless, performance consistency during serial neurocognitive testing is critical for an accurate evaluation. The reliabilities across testing sessions within our sample ranged from low to moderate, indicating a need for further investigation into the stability and consistency of these measures over time. In a previous study45 using an alternate computerized battery, the Automated Neuropsychological Assessment Metrics, an aggregated score from all outcomes displayed high reliability, but the reliabilities of the individual module scores were similar to those we observed. Two previous groups22,46 examined the reliability of ImPACT scores and found similar results; however, these authors assessed reliability over a longer timeframe.

A few observations may help to explain the range of reliability measures. The HVLT-R discrimination index (delayed) and the BVMT-R total recall (delayed) showed high ceiling effects for absolute scores, with little to no variability across test sessions. The ICC expresses between-athlete variance as a proportion of total variance, so this restricted range may have depressed the ICC values for these measures. Although no ceiling effect was seen for TMT-B total time, ImPACT verbal memory composite score, or ImPACT visual memory composite score, reliability measures for these outcomes were also low. Another factor that appeared to affect reliability was the time limit: in our study, tests with a set time limit for completion yielded higher ICC values than did tests with no time limit. The SDMT, Stroop Test, and ImPACT processing speed composite had set time limits for completion and had the highest reliability values. A known endpoint may increase the test taker's motivation, producing a more accurate representation of the individual's highest potential from one test to the next. To further examine stability over time, we compared our results with the reliable change indices proposed for the ImPACT19 (Table 6). This method accounts for a test's psychometric properties as well as an individual's performance, which may be useful given the variability in these measures. It has also been suggested as a useful tool for understanding what represents clinical change after a concussive injury.19,47,48 More than 35% of both our high school and collegiate athletes performed significantly better or worse across at least 2 sessions on all composite measures of the ImPACT. This indicates that performance may differ across testing time points, specifically when compared with the initial test session, and may reflect familiarity with the task and learning effects.
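A reliable change index divides an observed retest change by the standard error of the difference, which is estimated from a reference sample's SDs and test-retest correlation. The sketch below is a generic Jacobson and Truax style formulation with an optional practice adjustment. It is not necessarily the exact procedure behind the published ImPACT RCIs. The confidence level (`z = 1.645`, a two-tailed 90% interval) and the `practice` term are assumptions, and the function name is hypothetical.

```python
import math


def reliable_change(x1: float, x2: float, sd1: float, sd2: float, r12: float,
                    z: float = 1.645, practice: float = 0.0) -> str:
    """Classify a retest change as a reliable increase, decrease, or neither.

    sd1, sd2 -- SDs at test and retest in a reference (normative) sample
    r12      -- test-retest correlation in that sample
    practice -- expected mean change from practice alone (0 = no adjustment)
    """
    sem1 = sd1 * math.sqrt(1 - r12)
    sem2 = sd2 * math.sqrt(1 - r12)
    s_diff = math.sqrt(sem1 ** 2 + sem2 ** 2)  # standard error of the difference
    rci = (x2 - x1 - practice) / s_diff
    if rci > z:
        return "reliable increase"
    if rci < -z:
        return "reliable decrease"
    return "no reliable change"
```

The output reports direction rather than improvement because, for measures such as reaction time, a reliable increase represents a decline.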

Test reliability and precision should be carefully considered by the clinician conducting serial neurocognitive testing in an athletic population. Based on ICC values and test-retest correlations, measures such as the HVLT-R total recalled (immediate and delayed), Stroop Test, and SDMT total score may be more appropriate for serial neurocognitive testing than the TMT-B total time, ImPACT verbal memory composite score, or ImPACT visual memory composite score. Variability is likely to occur across any serial neurocognitive tests, but these ICC and SEM values may give the clinician a better understanding of how much variability to expect from one test to the next. Future researchers should continue to explore the consistency of athletes' performance across serial neurocognitive tests. Increased duration of serial neurocognitive testing may provide a more accurate measure of each athlete's performance over time. In addition, alternative analyses that account for high ceiling effects may be ideal.

Limitations

As with any study, ours is not without limitations. Only a short interval was allowed between test sessions, whereas the interval between baseline and the initial postinjury session is often longer. In addition, we used only a few of the available paper-and-pencil tests and 1 computerized test battery, which may limit our findings to these particular batteries. Lastly, the study had a relatively small sample size. However, given the effect sizes observed in the study, any differences that we did not detect were most likely not clinically meaningful.

CONCLUSIONS

Outcomes of this study warrant attention from clinicians who are tasked with caring for athletes at risk of sport-related concussion. We demonstrated that athletes' neurocognitive test performances may vary across serial testing sessions. It is important for the clinician to know the reliability and precision of these tests in order to properly interpret the variations in test scores. In some cases, the variability across serial neurocognitive testing is due to practice effects.

In the presence of a practice effect, the clinician can expect the greatest improvement in test scores to occur between the first and second administrations of a neurocognitive test. The clinician must be able to differentiate between a learning effect and neurocognitive recovery so as to make an accurate decision about whether the concussed athlete has recovered and is ready to return to competition. In addition, this finding of low to moderate reliability further illustrates the need for trained neuropsychologists to assist in the interpretation of neurocognitive testing results because many factors can influence the variability and accuracy of these scores.

This study also illustrated that for tests of processing speed, age-related differences exist between high school and collegiate athletes, with a much higher percentage of high school athletes showing improvements on reaction time and processing speed variables across test sessions. Therefore, at a minimum, baseline measures of processing speed may need to be reassessed as an athlete ages to ensure the most accurate representation of proper cognitive function due to continued brain development, among other factors. Accurate baseline assessments are important because depressed baseline levels may lead to faulty interpretation of postinjury results and possible premature return to play.

REFERENCES

- 1.Collins MW, Lovell MR, Iverson GL, Cantu RC, Maroon JC, Field M. Cumulative effects of concussion in high school athletes. Neurosurgery. 2002;51(5):1175–1181. doi: 10.1097/00006123-200211000-00011. [DOI] [PubMed] [Google Scholar]

- 2.Guskiewicz KM, Ross SE, Marshall SW. Postural stability and neuropsychological deficits after concussion in collegiate athletes. J Athl Train. 2001;36(3):263–273. [PMC free article] [PubMed] [Google Scholar]

- 3.Iverson GL, Brooks BL, Collins MW, Lovell MR. Tracking neuropsychological recovery following concussion in sport. Brain Inj. 2006;20(3):245–252. doi: 10.1080/02699050500487910. [DOI] [PubMed] [Google Scholar]

- 4.Lovell MR, Collins MW, Iverson GL, et al. Recovery from mild concussion in high school athletes. J Neurosurg. 2003;98(2):296–301. doi: 10.3171/jns.2003.98.2.0296. [DOI] [PubMed] [Google Scholar]

- 5.Lovell MR, Collins MW, Iverson GL, Johnston KM, Bradley JP. Grade 1 or “ding” concussions in high school athletes. Am J Sports Med. 2004;32(1):47–54. doi: 10.1177/0363546503260723. [DOI] [PubMed] [Google Scholar]

- 6.Lincoln AE, Caswell SV, Almquist JL, Dunn RE, Norris JB, Hinton RY. Trends in concussion incidence in high school sports: a prospective 11-year study. Am J Sports Med. 2011;39(5):958–963. doi: 10.1177/0363546510392326. [DOI] [PubMed] [Google Scholar]

- 7.Daneshvar DH, Nowinski CJ, McKee AC, Cantu RC. The epidemiology of sport-related concussion. Clin Sports Med. 2011;30(1):1–17, vii. doi: 10.1016/j.csm.2010.08.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bakhos LL, Lockhart GR, Myers R, Linakis JG. Emergency department visits for concussion in young child athletes. Pediatrics. 2010;126(3):e550–e556. doi: 10.1542/peds.2009-3101. [DOI] [PubMed] [Google Scholar]

- 9.Guskiewicz KM, Bruce SL, Cantu RC, et al. National Athletic Trainers' Association position statement: management of sport-related concussion. J Athl Train. 2004;39(3):280–297. [PMC free article] [PubMed] [Google Scholar]

- 10. McCrory P, Meeuwisse W, Johnston K, et al. Consensus statement on concussion in sport: the 3rd International Conference on Concussion in Sport held in Zurich, November 2008. Br J Sports Med. 2009;43(suppl 1):i76–i90. doi: 10.1136/bjsm.2009.058248.

- 11. McCrory P, Meeuwisse W, Johnston K, et al. Consensus statement on concussion in sport: 3rd International Conference on Concussion in Sport held in Zurich, November 2008. Clin J Sport Med. 2009;19(3):185–200. doi: 10.1097/JSM.0b013e3181a501db.

- 12. Broglio SP, Macciocchi SN, Ferrara MS. Sensitivity of the concussion assessment battery. Neurosurgery. 2007;60(6):1050–1058. doi: 10.1227/01.NEU.0000255479.90999.C0.

- 13. Buzzini SR, Guskiewicz KM. Sport-related concussion in the young athlete. Curr Opin Pediatr. 2006;18(4):376–382. doi: 10.1097/01.mop.0000236385.26284.ec.

- 14. Guskiewicz KM, Weaver NL, Padua DA, Garrett WE Jr. Epidemiology of concussion in collegiate and high school football players. Am J Sports Med. 2000;28(5):643–650. doi: 10.1177/03635465000280050401.

- 15. Field M, Collins MW, Lovell MR, Maroon J. Does age play a role in recovery from sports-related concussion? A comparison of high school and collegiate athletes. J Pediatr. 2003;142(5):546–553. doi: 10.1067/mpd.2003.190.

- 16. Collie A, Maruff P, Darby DG, McStephen M. The effects of practice on the cognitive test performance of neurologically normal individuals assessed at brief test-retest intervals. J Int Neuropsychol Soc. 2003;9(3):419–428. doi: 10.1017/S1355617703930074.

- 17. Rosenbaum AM, Arnett PA, Bailey CM, Echemendia RJ. Neuropsychological assessment of sports-related concussion: measuring clinically significant change. In: Slobounov S, Sebastianelli W, editors. Foundations of Sport-Related Brain Injuries. New York, NY: Springer; 2006. pp. 137–169.

- 18. Iverson GL, Lovell MR, Collins MW. Interpreting change on ImPACT following sport concussion. Clin Neuropsychol. 2003;17(4):460–467. doi: 10.1076/clin.17.4.460.27934.

- 19. Duff K, Beglinger LJ, Schultz SK, et al. Practice effects in the prediction of long-term cognitive outcome in three patient samples: a novel prognostic index. Arch Clin Neuropsychol. 2007;22(1):15–24. doi: 10.1016/j.acn.2006.08.013.

- 20. Franzen MD. Reliability and Validity in Neuropsychological Assessment. New York, NY: Plenum; 1989.

- 21. Mitrushina MN, Boone KB, D'Elia L. Handbook of Normative Data for Neuropsychological Assessment. New York, NY: Oxford University Press; 1999.

- 22. Broglio SP, Ferrara MS, Macciocchi SN, Baumgartner TA, Elliott R. Test-retest reliability of computerized concussion assessment programs. J Athl Train. 2007;42(4):509–514.

- 23. Iverson GL, Lovell MR, Collins MW. Validity of ImPACT for measuring processing speed following sports-related concussion. J Clin Exp Neuropsychol. 2005;27(6):683–689. doi: 10.1081/13803390490918435.

- 24. Schatz P. Long-term test-retest reliability of baseline cognitive assessments using ImPACT. Am J Sports Med. 2010;38(1):47–53. doi: 10.1177/0363546509343805.

- 25. Brandt J, Benedict RHB. Hopkins Verbal Learning Test-Revised: Professional Manual. Odessa, FL: Psychological Assessment Resources; 2001.

- 26. Woods SP, Scott JC, Conover E, et al. Test-retest reliability of component process variables within the Hopkins Verbal Learning Test-Revised. Assessment. 2005;12(1):96–100. doi: 10.1177/1073191104270342.

- 27. Benedict RHB. Brief Visuospatial Memory Test-Revised: Professional Manual. Odessa, FL: Psychological Assessment Resources; 1997.

- 28. Benedict RHB. Effects of using same- versus alternate-form memory tests during short-interval repeated assessments in multiple sclerosis. J Int Neuropsychol Soc. 2005;11(6):727–736. doi: 10.1017/S1355617705050782.

- 29. Reitan RM. Trail Making Test: Manual for Administration and Scoring. Tucson, AZ: Reitan Neuropsychology Laboratory; 1992.

- 30. Kortte KB, Horner MD, Windham WK. The Trail Making Test, Part B: cognitive flexibility or ability to maintain set? Appl Neuropsychol. 2002;9(2):106–109. doi: 10.1207/S15324826AN0902_5.

- 31. Giovagnoli AR, Del Pesce M, Mascheroni S, Simoncelli M, Laiacona M, Capitani E. Trail Making Test: normative values from 287 normal adult controls. Ital J Neurol Sci. 1996;17(4):305–309. doi: 10.1007/BF01997792.

- 32. Valovich McLeod TC, Barr WB, McCrea M, Guskiewicz KM. Psychometric and measurement properties of concussion assessment tools in youth sports. J Athl Train. 2006;41(4):399–408.

- 33. Smith A. Symbol Digit Modalities Test Manual. Los Angeles, CA: Western Psychological Services; 1972.

- 34. Benedict RH, Duquin JA, Jurgensen S, et al. Repeated assessment of neuropsychological deficits in multiple sclerosis using the Symbol Digit Modalities Test and the MS Neuropsychological Screening Questionnaire. Mult Scler. 2008;14(7):940–946. doi: 10.1177/1352458508090923.

- 35. Trenerry MR, DeBoe J, Leber WR. Stroop Neuropsychological Screening Test: Manual. Odessa, FL: Psychological Assessment Resources; 1989.

- 36. Lemay S, Bedard MA, Rouleau I, Tremblay PL. Practice effect and test-retest reliability of attentional and executive tests in middle-aged to elderly subjects. Clin Neuropsychol. 2004;18(2):284–302. doi: 10.1080/13854040490501718.

- 37. Franzen MD, Tishelman AC, Sharp BH, Friedman AG. An investigation of the test-retest reliability of the Stroop Color-Word Test across two intervals. Arch Clin Neuropsychol. 1987;2(3):265–272.

- 38. Iverson GL, Lovell MR, Collins MW. Immediate Post-Concussion Assessment and Cognitive Test (ImPACT): normative data. Version 2.0. 2003. http://www.impacttest.com/ArticlesPage_images/Articles_Docs/7ImPACTNormativeDataversion%202.pdf. Accessed May 2, 2007.

- 39. Hunt TN, Ferrara MS. Age-related differences in neuropsychological testing among high school athletes. J Athl Train. 2009;44(4):405–409. doi: 10.4085/1062-6050-44.4.405.

- 40. Salthouse TA. Decomposing age correlations on neuropsychological and cognitive variables. J Int Neuropsychol Soc. 2009;15(5):650–661. doi: 10.1017/S1355617709990385.

- 41. Salthouse TA. When does age-related cognitive decline begin? Neurobiol Aging. 2009;30(4):507–514. doi: 10.1016/j.neurobiolaging.2008.09.023.

- 42. Duff K, Westervelt HJ, McCaffrey RJ, Haase RF. Practice effects, test-retest stability, and dual baseline assessments with the California Verbal Learning Test in an HIV sample. Arch Clin Neuropsychol. 2001;16(5):461–476.

- 43. Bleiberg J, Cernich AN, Cameron K, et al. Duration of cognitive impairment after sports concussion. Neurosurgery. 2004;54(5):1073–1080. doi: 10.1227/01.neu.0000118820.33396.6a.

- 44. McCaffrey RJ, Ortega A, Orsillo SM, Nelles WB, Haase RF. Practice effects in repeated neuropsychological assessments. Clin Neuropsychol. 1992;6(1):32–42.

- 45. Segalowitz SJ, Mahaney P, Santesso DL, MacGregor L, Dywan J, Willer B. Retest reliability in adolescents of a computerized neuropsychological battery used to assess recovery from concussion. NeuroRehabilitation. 2007;22(3):243–251.

- 46. Schatz P. Long-term test-retest reliability of baseline cognitive assessments using ImPACT. Am J Sports Med. 2010;38(1):47–53. doi: 10.1177/0363546509343805.

- 47. Parsons TD, Notebaert AJ, Shields EW, Guskiewicz KM. Application of reliable change indices to computerized neuropsychological measures of concussion. Int J Neurosci. 2009;119(4):492–507. doi: 10.1080/00207450802330876.

- 48. Hinton-Bayre AD, Geffen GM, Geffen LB, McFarland KA, Friis P. Concussion in contact sports: reliable change indices of impairment and recovery. J Clin Exp Neuropsychol. 1999;21(1):70–86. doi: 10.1076/jcen.21.1.70.945.