Abstract

Objective

Inadequate participant recruitment is a major problem facing clinical research. Recent studies have demonstrated that electronic health record (EHR)-based, point-of-care clinical trial alerts (CTAs) can improve participant recruitment to certain clinical research studies. Despite their promise, much remains to be learned about the use of CTAs. Our objective was to study whether repeated exposure to such alerts leads to declining user responsiveness and, if so, to characterize the extent of that decline in order to better inform future CTA deployments.

Methods

During a 36-week study period, we systematically documented the response patterns of 178 physician users randomized to receive CTAs for an ongoing clinical trial. Data were collected on: (1) response rates to the CTA; and (2) referral rates per physician, per time unit. Variables of interest were offset by the log of the total number of alerts received by that physician during that time period, in a Poisson regression.

Results

Response rates demonstrated a significant downward trend across time, with response rates decreasing by 2.7% for each advancing time period, significantly different from zero (flat) (p<0.0001). Even after 36 weeks, response rates remained in the 30%–40% range. Subgroup analyses revealed differences between community-based versus university-based physicians (p=0.0489).

Discussion

CTA responsiveness declined gradually over prolonged exposure, although it remained reasonably high even after 36 weeks of exposure. There were also notable differences between community-based versus university-based users.

Conclusions

These findings add to the limited literature on this form of EHR-based alert fatigue and should help inform future tailoring, deployment, and further study of CTAs.

Keywords: Clinical decision support, clinical trials, clinical research, medical informatics, qualitative/ethnographic field study, enhancing the conduct of biological/clinical research and trials, classical experimental and quasi-experimental study methods (lab and field), developing/using clinical decision support (other than diagnostic) and guideline systems

Background and significance

Clinical trials are essential to the advancement of medicine, and research participant recruitment is critical to successful trial conduct. Unfortunately, difficulties achieving recruitment goals are common, and failure to meet such goals can impede the development and evaluation of new medical therapies.1 2 It is well recognized that physicians often play a vital role in the recruitment of participants for certain trials. However, barriers including time constraints, unfamiliarity with available trials, and difficulty referring patients to trials, often make it challenging to recruit during routine practice.3–6 Consequently, most clinicians do not engage in traditional recruitment activities and recruitment rates suffer.4 6

The increasing availability of electronic health records (EHRs) presents an opportunity to address the issue of inadequate recruitment for clinical trials by leveraging the information and decision support resources often built into such systems. Indeed, recent studies of EHR-based, point-of-care clinical trial alerts (CTAs) have demonstrated that they have the potential to improve recruitment rates when applied to clinical trials.7–9 Despite their promise and the fact that they have been well tolerated in recent studies, CTAs, like any point-of-care alert, have the potential for misuse, and further study is needed to better understand and inform their appropriate, widespread use.10 One important but poorly understood aspect is the performance of such alerts, particularly clinician responsiveness to alerts over time and the implications of changes in responsiveness for alert design and deployment decisions.

It is well recognized that when clinicians are exposed to too many clinical decision support (CDS) alerts they may eventually stop responding to them. This phenomenon is often called alert fatigue.11 12 While definitions vary and empirical evidence as to its cause is limited, alert fatigue is generally thought to result from one or more distinct but closely related factors. One such factor is declining clinician responsiveness to alerts as the number of simultaneous alerts increases. This is thought to be related to issues such as alert irrelevance and cognitive overload, and has also been referred to as ‘alert overload.’13 14 A second factor relates to declining clinician responsiveness to a particular type of alert as the clinician is repeatedly exposed to that alert over a period of time, gradually becoming ‘fatigued’ or desensitized to it.15 Few studies have explored this latter issue of ‘fatigue’ due to repeated exposure to alerts over time,16 and it is this latter aspect of alert fatigue that motivated and was the focus of the current study.

Although the purpose of CTAs is to provide decision support for trial recruitment rather than for clinical care, we hypothesized that this phenomenon of alert fatigue over time would be a factor in the usage patterns of CTAs, as also noted for CDS alerts, and would present itself as a gradual reduction in response rates over time. As the nature and extent of this issue and the overall performance characteristics of CTAs are not fully understood, the current study was performed among physician subjects as a planned part of a recently conducted randomized controlled intervention study of a CTA.17

Methods

We performed a cluster-randomized controlled study of a CTA intervention across three health system environments that share a common, commercial ambulatory EHR (GE Centricity EMR). The study of the CTA and the clinical trial to which it was applied were approved by our institutional review board. The associated clinical trial, which involved patients with insulin resistance after a recent stroke event, was registered at ClinicalTrials.gov (NCT00091949).

Subjects involved in this 36-week study of the CTA intervention in 2009 (the first, pre-cross-over phase of a larger study on the impact of this CTA intervention) included 178 physicians who were randomly divided into equal groups within their specialties (ie, neurologists, n=26; family medicine physicians, n=35; general internists, n=46; internal medicine-pediatrics specialists, n=8; and internal medicine house staff, n=63). All neurologists were university-based practitioners, while the other generalist physicians practiced either in university-based or community-based settings. Prior to CTA activation, all physicians were encouraged via traditional means (eg, discussion at staff meetings, email blasts, flyers) to recruit patients to the trial.

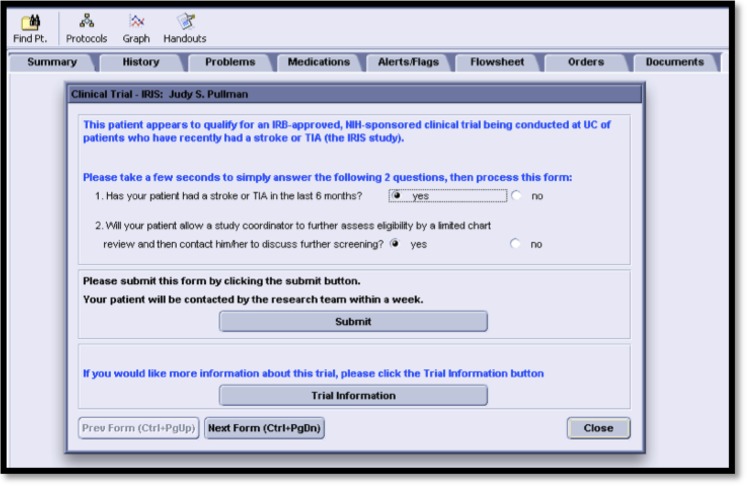

Upon CTA activation, intervention physicians seeing eligible patients were presented with on-screen CTAs that suggested they consider and discuss trial recruitment with the patient, and click an on-screen button to send a secure referral message to the trial coordinator if appropriate (figure 1). The physicians involved in this study did not receive any incentives for their participation in these recruitment efforts. It is worth noting that, for the phase of the underlying CTA intervention study during which this analysis of responses took place, the intervention was associated with a significant 20-fold increase in referrals (p<0.0002) and a ninefold increase in enrollments (p<0.006).17 The design of the CTA intervention itself has also been reported previously and involved minimal novel programming, as the built-in tools and resources of the particular EHR system were used.18 This approach was similar to the one employed with another popular EHR system, on which we have previously reported.19

Figure 1.

Screen shot of the clinical trial alert used in the randomized controlled trial that was the basis for the current study.

Additional data were collected during the same period by directly querying the EHR-fed enterprise data warehouse for the two types of events of interest: (1) responses that indicated interaction with the CTA (ie, taking action by responding to at least one of the questions posed in the alert); and (2) flags (referrals) sent to the study coordinator via positive responses to both questions followed by processing of the CTA. In addition, the total number of alerts triggered was extracted to serve as the denominator for calculating (1) the response rate and (2) the referral rate per physician, per time unit.
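The rate calculation described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the extraction code used in the study: the record layout and the field names `physician_id`, `week`, `responded`, and `referred` are assumptions introduced for the example, as is the 0-based week index.

```python
from collections import defaultdict


def rates_per_physician_period(records, period_weeks=2):
    """Compute response and referral rates per physician, per time period.

    `records` is an iterable of dicts with hypothetical keys:
    'physician_id', 'week' (0-based study week), 'responded', 'referred'.
    The number of alerts triggered is the denominator for both rates.
    """
    totals = defaultdict(lambda: {"alerts": 0, "responses": 0, "referrals": 0})
    for rec in records:
        period = rec["week"] // period_weeks  # weeks 0-1 -> period 0, and so on
        cell = totals[(rec["physician_id"], period)]
        cell["alerts"] += 1
        cell["responses"] += int(rec["responded"])
        cell["referrals"] += int(rec["referred"])

    return {
        key: {
            "alerts": c["alerts"],
            "response_rate": c["responses"] / c["alerts"],
            "referral_rate": c["referrals"] / c["alerts"],
        }
        for key, c in sorted(totals.items())
    }


# Synthetic records for illustration only (not study data).
example = [
    {"physician_id": "A", "week": 0, "responded": True, "referred": True},
    {"physician_id": "A", "week": 1, "responded": True, "referred": False},
    {"physician_id": "A", "week": 2, "responded": False, "referred": False},
]
print(rates_per_physician_period(example))
```

With 2-week periods, a 36-week study yields period indices 0 through 17, that is, 18 periods.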

In our analyses, the dependent variables ‘responses’ and ‘referrals’ were offset by the log of the total number of alerts received by that physician during the same period, in a Poisson regression. Correlations across time were modeled as a ‘spatial power’ process (equivalent to a first-order autoregressive, or AR(1), process). While CTAs could have triggered more than once for a given patient–physician pair if the initial CTA was ignored and the patient returned during the study period while still eligible for the alert, any such subsequent alerts were not included in our analyses. We used 2-week time periods for our analyses, for a total of 18 time periods over this 36-week study. Results are presented in numeric and graphical form along with the relevant p values to indicate the significance of the findings.
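To make the role of the offset concrete, the following is a minimal sketch of a Poisson regression with a log(alerts) offset and a single linear time trend, fitted by Newton-Raphson in plain Python. It is a simplification under stated assumptions: it ignores the within-physician correlation structure (the ‘spatial power’ process) used in the actual analysis, it pools data into one series, and the function name `fit_poisson_trend` and the synthetic inputs are hypothetical.

```python
import math


def fit_poisson_trend(periods, counts, alerts, iterations=50, tol=1e-10):
    """Fit log(E[count]) = log(alerts) + b0 + b1 * period.

    Returns (b0, b1). exp(b0) is the modeled event rate per alert at
    period 0, and exp(b1) is the rate ratio per advancing period.
    Observations are treated as independent (no AR(1) structure).
    """
    b0 = math.log(sum(counts) / sum(alerts))  # start from the pooled rate
    b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for t, y, n in zip(periods, counts, alerts):
            mu = n * math.exp(b0 + b1 * t)  # the offset enters as the multiplier n
            g0 += y - mu                    # score with respect to b0
            g1 += (y - mu) * t              # score with respect to b1
            h00 += mu                       # Fisher information entries
            h01 += mu * t
            h11 += mu * t * t
        det = h00 * h11 - h01 * h01
        d0 = (h11 * g0 - h01 * g1) / det    # Newton step: information^-1 * score
        d1 = (h00 * g1 - h01 * g0) / det
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < tol:
            break
    return b0, b1


# Synthetic, noise-free illustration (not study data): a 50% starting rate
# that falls by 2.7% (relative) in each of 18 two-week periods.
periods = list(range(18))
alerts = [40 + 3 * t for t in periods]
counts = [n * 0.5 * 0.973 ** t for n, t in zip(alerts, periods)]
b0, b1 = fit_poisson_trend(periods, counts, alerts)
print(f"baseline rate: {math.exp(b0):.3f}, rate ratio per period: {math.exp(b1):.3f}")
```

Because of the log link, a coefficient estimated this way describes a relative (multiplicative) change in the rate per period, and the offset ensures that periods with more alerts are not mistaken for periods with higher responsiveness.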

Results

During the 36-week CTA exposure period, 915 total alerts were triggered for 178 physicians in the intervention arm of the associated randomized controlled study of the CTA. Eight alerts were discarded because they represented second alerts for patients who had earlier received an alert during the study period that was not responded to, leaving 907 eligible alerts for analysis.
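The exclusion of repeat alerts can be illustrated with a short Python sketch. It assumes a hypothetical alert log in which each record carries `physician_id`, `patient_id`, and a sortable `timestamp`; these field names are not taken from the study's data warehouse.

```python
def first_alerts_only(alerts):
    """Keep only the first alert for each patient-physician pair.

    `alerts` is an iterable of dicts with hypothetical keys
    'physician_id', 'patient_id', and 'timestamp'. Later alerts for a
    pair that has already been alerted are dropped.
    """
    seen = set()
    kept = []
    for alert in sorted(alerts, key=lambda a: a["timestamp"]):
        pair = (alert["physician_id"], alert["patient_id"])
        if pair in seen:
            continue  # a subsequent alert for the same pair is excluded
        seen.add(pair)
        kept.append(alert)
    return kept


# Synthetic log for illustration only (not study data).
log = [
    {"physician_id": "A", "patient_id": 1, "timestamp": 5},
    {"physician_id": "A", "patient_id": 1, "timestamp": 9},  # repeat, dropped
    {"physician_id": "B", "patient_id": 1, "timestamp": 7},
]
print(len(first_alerts_only(log)))
```

Applied to the counts reported above, this kind of filter corresponds to the step from 915 triggered alerts to 907 eligible alerts (915 − 8 = 907).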

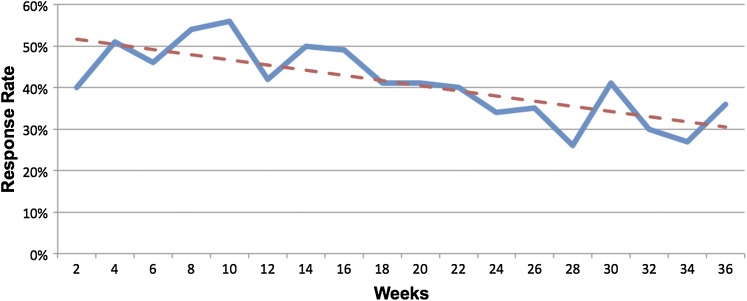

During the initial time period, the response rate to CTAs was about 50% among all users but dropped significantly over time by 2.7% for each advancing 2-week period, and that trend was significantly different from zero (flat) (p<0.0001; figure 2). Notably, there was still a 35% response rate at the 36th week of exposure.

Figure 2.

Physician response rates to clinical trial alerts (CTAs) are plotted at 2-week intervals over the 36-week study. The solid line tracks response rates at each time point. The dashed line represents the linear regression line through each time point. Response rates declined at a rate of 2.7% per 2-week time period (p<0.0001).
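The text does not state explicitly whether the 2.7% decline per period is relative or absolute. The sketch below, which is an illustration rather than part of the original analysis, projects the starting rate of about 50% across the 18 periods under both readings. The function name `project_rate` is hypothetical, and the projection assumes a perfectly constant decline with no period-to-period fluctuation.

```python
def project_rate(start_rate, decline_per_period, n_periods, multiplicative=True):
    """Project a rate from the first period to the last of `n_periods`.

    multiplicative=True  : each period's rate is (1 - decline) times the previous one,
                           which matches a log-link (Poisson) model.
    multiplicative=False : the rate drops by `decline` percentage points per period.
    """
    steps = n_periods - 1  # number of period-to-period transitions
    if multiplicative:
        return start_rate * (1 - decline_per_period) ** steps
    return max(0.0, start_rate - decline_per_period * steps)


relative = project_rate(0.50, 0.027, 18, multiplicative=True)
absolute = project_rate(0.50, 0.027, 18, multiplicative=False)
print(f"relative reading: {relative:.1%}")
print(f"absolute reading: {absolute:.1%}")
```

Under the relative reading the projected final rate is roughly 31%, which is in line with the 30%–40% range reported at the end of the study, whereas the absolute reading would imply a final rate of roughly 4%. This suggests, though it does not prove, that the reported decline is best read as a relative change per period.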

Subgroup analyses of response rate changes over time revealed no significant differences between subspecialists (neurologists in this case) and generalists, with both groups trending downward to a similar extent, whether or not community generalists were included in the analyses. However, there was a greater response rate drop-off among all community-based providers compared with all university-based physicians (p=0.0489). Further, that difference in drop-off was stronger when university-based subspecialists (neurologists) were removed and the analyses were restricted to generalists in both types of settings (p=0.0146).

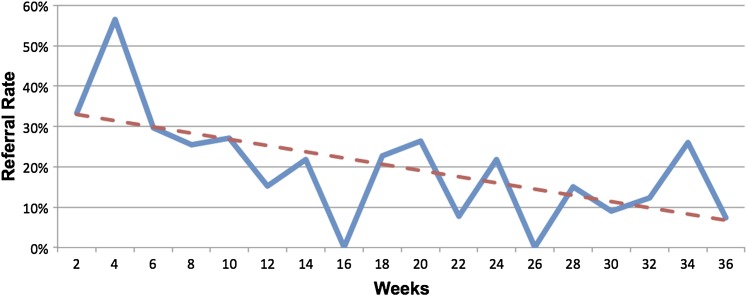

Referral rates started at about 33% and, although they fluctuated, declined to about 9% by the end of the study period. Of note, generalists made fewer referrals than subspecialists (p<0.0001), and community-based physicians made fewer referrals than university-based physicians (p=0.006). The decline in referral rates over time was more pronounced than the decline in response rates noted above. Specifically, there was a significant 4.9% decrease in referral rates per time period (p=0.0294) (figure 3).

Figure 3.

Physician-generated referral rates using clinical trial alerts (CTAs) are plotted at 2-week intervals over the 36-week study. The solid line tracks referral rates at each time point. The dashed line represents the linear regression line through each time point. Referral rates declined at a rate of 4.9% per 2-week time period (p=0.0294).

While absolute referral rates differed between groups, subgroup analyses of physician-generated CTA referral rates revealed no significant differences in rate declines between: (1) subspecialists versus generalists; (2) community-based versus university-based physicians; and (3) community-based versus university-based generalists only (ie, excluding neurologists).

Discussion

The use of EHR-based CTAs has been demonstrated to increase participant recruitment rates to clinical trials, and is a promising approach for overcoming the major problem of inadequate and slow participant recruitment.7–9 17 Because such an approach will necessarily be employed in the context of complex and varied clinical care environments, information on the performance characteristics and response patterns among different groups of potential end-users is needed to inform its application and use.

These findings add to our understanding of how such alerts for clinical trials operate in real-world implementations by demonstrating empirically how and to what extent the rates of responses to such alerts decline across a variety of settings and end-users. Notably, they reveal, as hypothesized, that responses to point-of-care CTAs decline over time, although not as severely as anticipated, at least with regard to response rates.

Indeed, overall response rates to this series of alerts were initially high at 50% and remained reasonably high at 35% even after 36 weeks of exposure, compared to CDS alerts, which tend to have 4%–51% response rates.12 While the fall in response rate suggests alert fatigue over time, the fact that a substantial proportion of the alerts were still being responded to at 36 weeks suggests that such a duration of use may still provide benefit. However, the finding that referral rates declined more quickly and more precipitously over time than response rates suggests there might be a point after which use of a CTA might not be worth even the minimal disruption it causes.10 In addition, the differences seen between community-based and university-based physicians suggest that future CTA deployments should be tailored to a particular setting (ie, shorter in community-based settings and longer in university-based settings) in order to maximize benefit while avoiding excess fatigue. Additionally, as noted with some CDS alerts, tailoring of the alert's operating characteristics (eg, increasing specificity such that it triggers less often) might also affect response patterns and ultimately effectiveness, particularly in practice settings or specialties where response rates fall more rapidly.13 20

While the design of this study does not allow for definitive determination of the reasons for the declines noted, the difference between the response rate decline (2.7% per time period) and the referral rate decline (4.9% per time period) might reflect the fact that the act of CTA referral requires more effort than a simple response, and therefore causes more fatigability over time. However, this difference could also suggest the presence of other factors such as the possibility that declines in referrals reflect a drop in the available pool of eligible or interested candidates rather than alert fatigue. However, the population of potentially eligible participants (ie, patients with a recent stroke) remained relatively constant during the study, making this less likely. Nevertheless, it is probable that the reasons for the declines were multi-factorial, reflecting the combined influence of alert fatigue and other factors. Additional studies, including qualitative studies to assess physician-user perceptions, are ongoing and should help clarify other reasons for the declines noted.

It would be useful to compare these physician response patterns over time, and the apparent alert fatigue, with those seen when similar CDS approaches are employed for clinical use. Unfortunately, data on such changes over time in CDS response rates appear to be lacking in the published literature. As noted above, many studies offer circumstantial evidence of this aspect of alert fatigue: some report less than ideal average rates of response to CDS interventions,11 12 some comment on the common behavior of overriding alerts,20 and still others address changes that can increase average response rates by improving the usability or appropriateness of alerts.13 However, although this form of alert fatigue over time undoubtedly exists, there has been surprisingly little empirical evidence of it, or data to characterize the nature of the phenomenon. Our study appears to be among the first to empirically demonstrate this aspect of alert fatigue by tracking changes in clinician response to alerts over time. Therefore, we believe it has implications beyond recruitment using CTAs, and that such an approach to measuring responses over time can help advance understanding of alert fatigue in general. We also believe that the methodology employed here could be used to evaluate and refine the design and application of decision support alerts in the future.

Although the randomized study design and multi-user, multi-environment setting strengthen these findings and advance our understanding of CTA usage, this study has some limitations. These findings were derived from a single study of CTAs employed in a single trial of patients with recent stroke. Whether these findings would differ if the CTA were applied to another type of trial or in different settings remains to be determined. Also, while the CTA approach has been demonstrated to be effective using multiple EHR platforms,7–9 17 this study employed a single EHR and these findings might differ with the use of another EHR. Furthermore, this alert was employed in a setting where other alerts were rarely triggered. Another factor possibly impacting response rates over time is the threshold setting (ie, sensitivity vs specificity) for a given alert. Whether the findings of this study would differ if there were multiple or more frequent alerts is not known but is possible given that multiple simultaneous alerts are a commonly cited factor leading to alert fatigue as noted above, and should be studied.

Conclusion

Physician response rates to CTAs started and remained relatively high even after a period of use, although they gradually but significantly declined over time. While overall referral rates were lower among generalists than subspecialists, the rate of decline in CTA responses differed significantly only between university-based and community-based physicians, and not between generalists and subspecialists; declines in referral rates did not differ significantly between any of the subgroups examined. These data also suggest that alert fatigue over time is likely a factor that must be taken into account when CTAs are employed.

While it is currently unclear how the nature and degree of alert fatigue for CTAs compares to that of other types of CDS alerts, this study has implications for the implementation and management of such alerts. The methodology used here also appears to have implications for studies into the relative impact of alert fatigue across a range of decision support alert interventions. Overall, these findings offer much-needed empirical data about the performance characteristics of CTAs, data that should help inform the tailoring and application of CTAs in real-world environments in order to overcome the major research challenge of improving and accelerating participant recruitment.

Acknowledgments

Preliminary findings from this study were presented in abstract form at the 2011 AMIA Joint Summits on Translational Science. Special thanks go to our collaborators on the associated intervention study: Drs Mark Eckman, Philip Payne, Nancy Elder, Sian Cotton, and Emily Patterson, and Ms. Ruth Wise.

Footnotes

Contributors: PJE contributed to the conception, design, and acquisition and interpretation of data, and drafted and revised the manuscript. AL contributed to the design, interpretation of data, and critical revisions to the manuscript. Both authors approved the final version to be published.

Funding: This project was supported by a grant from the National Library of Medicine of the National Institutes of Health, R01-LM009533.

Competing interests: None.

Ethics approval: Ethics approval was granted by the University of Cincinnati Institutional Review Board.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Nathan DG, Wilson JD. Clinical research and the NIH: a report card. N Engl J Med 2003;349:1860–5.

- 2. Campbell EG, Weissman JS, Moy E, et al. Status of clinical research in academic health centers: views from the research leadership. JAMA 2001;286:800–6.

- 3. Mansour EG. Barriers to clinical trials. Part III: knowledge and attitudes of health care providers. Cancer 1994;74(9 Suppl):2672–5.

- 4. Siminoff LA, Zhang A, Colabianchi N, et al. Factors that predict the referral of breast cancer patients onto clinical trials by their surgeons and medical oncologists. J Clin Oncol 2000;18:1203–11.

- 5. Somkin CP, Altschuler A, Ackerson L, et al. Organizational barriers to physician participation in cancer clinical trials. Am J Manag Care 2005;11:413–21.

- 6. Winn RJ. Obstacles to the accrual of patients to clinical trials in the community setting. Semin Oncol 1994;21(4 Suppl 7):112–17.

- 7. Embi PJ, Jain A, Clark J, et al. Effect of a clinical trial alert system on physician participation in trial recruitment. Arch Intern Med 2005;165:2272–7.

- 8. Rollman BL, Fischer GS, Zhu F, et al. Comparison of electronic physician prompts versus waitroom case-finding on clinical trial enrollment. J Gen Intern Med 2008;23:447–50.

- 9. Grundmeier RW, Swietlik M, Bell LM. Research subject enrollment by primary care pediatricians using an electronic health record. AMIA Annu Symp Proc 2007:289–93.

- 10. Embi PJ, Jain A, Harris CM. Physicians' perceptions of an electronic health record-based clinical trial alert approach to subject recruitment: a survey. BMC Med Inform Decis Mak 2008;8:13.

- 11. Ash JS, Sittig DF, Campbell EM, et al. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc 2007:26–30.

- 12. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47.

- 13. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006;13:5–11.

- 14. Horsky J, Zhang J, Patel VL. To err is not entirely human: complex technology and user cognition. J Biomed Inform 2005;38:264–6.

- 15. Cash JJ. Alert fatigue. Am J Health Syst Pharm 2009;66:2098–101.

- 16. Shah A. Alert Fatigue. 2011. http://clinfowiki.org/wiki/index.php/Alert_fatigue (accessed 15 Jan 2012).

- 17. Embi PJ, Eckman MH, Payne PR, et al. EHR-based clinical trial alert effects on recruitment to a neurology trial across settings: interim analysis of a randomized controlled study. AMIA Summits Transl Sci Proc; March 2010. San Francisco, CA, 2010.

- 18. Embi PJ, Lieberman MI, Ricciardi TN. Early Development of a Clinical Trial Alert System in an EHR Used in Small Practices: Toward Generalizability. Phoenix, AZ: AMIA Spring Congress, 2006.

- 19. Embi PJ, Jain A, Clark J, et al. Development of an electronic health record-based Clinical Trial Alert system to enhance recruitment at the point of care. AMIA Annu Symp Proc 2005:231–5.

- 20. Weingart SN, Toth M, Sands DZ, et al. Physicians' decisions to override computerized drug alerts in primary care. Arch Intern Med 2003;163:2625–31.