Abstract

Many aberration detection algorithms are used in infectious disease surveillance systems to assist in the early detection of potential outbreaks. In this study, we explored a novel approach to adjusting aberration detection algorithms to account for the seasonality inherent in some surveillance data. Using surveillance data for hand-foot-and-mouth disease in Shandong province, China, we evaluated seasonally adjusted alerting thresholds with three aberration detection methods (C1, C2, and C3). We found that the optimal thresholds of C1, C2, and C3 differed between the epidemic and non-epidemic seasons of hand-foot-and-mouth disease. Applying seasonally adjusted thresholds improved outbreak detection: it maintained the same sensitivity and timeliness while nearly halving the false alarm rate during the non-epidemic season. Our preliminary findings suggest a general approach to improving aberration detection for outbreaks of infectious diseases with seasonally variable incidence.

Keywords: Surveillance, outbreaks, signal detection, algorithms, seasonal variation, detection, simulation of complex systems (at all levels: molecules to work groups to organizations), monitoring the health of populations, detecting disease outbreaks and biological threats, machine learning, predictive modeling, statistical learning, privacy technology, decision modeling, education, public health informatics

Introduction

Aberration detection algorithms are used in surveillance systems to detect potential outbreaks and to facilitate rapid response [1–6]. However, many infectious diseases are seasonal, and this seasonality affects the performance of aberration detection algorithms [1,7]. Some algorithms use several years of historical data to model the effect of seasonality on disease incidence [2,5,6]. There is, however, no general approach to adjusting algorithms for seasonality, especially when historical data are limited.

The objective of our study was to evaluate a new way of adjusting aberration detection algorithms for the seasonality inherent in some surveillance data. Our approach is to identify separate optimal alerting thresholds for the epidemic and non-epidemic seasons. We report results from an evaluation of this approach using data on hand-foot-and-mouth disease (HFMD) cases and outbreaks in Shandong province, China.

Methods

We evaluated our seasonal adjustment approach using the C1, C2, and C3 aberration detection algorithms, which were developed by the US Centers for Disease Control and Prevention [6]. The three algorithms require little historical baseline data and are based on a positive one-sided cumulative sum calculation. Each algorithm estimates the expected value on a given day as the mean of the observed values over a 7-day baseline. It then measures how far the current observation exceeds this mean, in units of the baseline standard deviation. For the C1 algorithm, the baseline is the past 7 days (ie, t-1 to t-7). For the C2 and C3 algorithms, the baseline is separated from the current day by a 2-day lag (ie, t-3 to t-9). The C3 algorithm additionally sums C2-based statistics over the current day and the 2 preceding days. All three algorithms are described in detail elsewhere [6,8]. The commonly used threshold for C1 and C2 is 2.0 [6,8]. Because alerting thresholds affect detection performance [4,5], we considered 20 threshold values for C1, C2, and C3 (from 0.2 to 4.0 in steps of 0.2). From these, we determined the optimal threshold during the epidemic and non-epidemic periods. All algorithms and analyses were implemented in R [9].
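The statistics described above can be sketched as follows. This is a minimal Python illustration of the commonly described EARS formulation (baseline mean and sample standard deviation), not the authors' R implementation. Two details are assumptions not stated in this paper. First, C3 is taken as the sum of max(0, C2 − 1) over days t-2 to t. Second, the hypothetical `sd_floor` parameter guards against a zero baseline standard deviation. The function names are illustrative.

```python
import statistics
from typing import Callable, List, Optional, Sequence

# Candidate thresholds considered in the study: 0.2, 0.4, ..., 4.0 (20 values).
THRESHOLDS = [round(0.2 * k, 1) for k in range(1, 21)]


def ears_stat(counts: Sequence[float], t: int, lag: int,
              sd_floor: float = 0.5) -> Optional[float]:
    """Standardized excess of counts[t] over a 7-day baseline.

    lag=0 -> baseline t-1..t-7 (C1); lag=2 -> baseline t-3..t-9 (C2/C3).
    Returns None when day t does not yet have a full baseline.
    """
    start = t - lag - 7
    if start < 0:
        return None
    baseline = counts[start:t - lag]
    mean = statistics.mean(baseline)
    # sd_floor is a hypothetical guard against dividing by a zero standard deviation.
    sd = max(statistics.stdev(baseline), sd_floor)
    return (counts[t] - mean) / sd


def c1(counts: Sequence[float], t: int) -> Optional[float]:
    return ears_stat(counts, t, lag=0)


def c2(counts: Sequence[float], t: int) -> Optional[float]:
    return ears_stat(counts, t, lag=2)


def c3(counts: Sequence[float], t: int) -> Optional[float]:
    # Assumed form: sum of max(0, C2 - 1) over days t-2, t-1 and t.
    values = [c2(counts, i) for i in range(t - 2, t + 1)]
    if any(v is None for v in values):
        return None
    return sum(max(0.0, v - 1.0) for v in values)


def signal_days(counts: Sequence[float],
                stat: Callable[[Sequence[float], int], Optional[float]],
                threshold: float) -> List[int]:
    """Indices of days on which the statistic exceeds the threshold."""
    days = []
    for t in range(len(counts)):
        value = stat(counts, t)
        if value is not None and value > threshold:
            days.append(t)
    return days
```

For one township's daily series, `signal_days(daily_counts, c2, 2.2)` would return the days flagged by C2 at a threshold of 2.2. Looping over `THRESHOLDS` gives the threshold grid examined in the study.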

Since May 2008, HFMD has been a notifiable infectious disease in China. We used reports of HFMD from Shandong province, China, for 2009. These data included time series of cases reported in all 1886 townships of the province. The epidemic season was identified in consultation with epidemiologists and by considering the epidemiological characteristics of incident HFMD cases. Reported HFMD outbreaks in Shandong province in 2009 served as the reference standard for algorithm evaluation, because all of these outbreaks had been verified through field investigation by local public health departments [10]. As in previous research [4,8,11], we defined the start and end of an outbreak as the first and last dates on which cases associated with the outbreak were reported. An outbreak was considered detected when the algorithm triggered a signal during the outbreak.
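The outbreak window and the detection rule above can be expressed in a few lines. This is an illustrative Python sketch. The `Outbreak` record, its `township` field, and the assumption that signals are matched to outbreaks by township are hypothetical; the paper does not describe its data layout.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable


@dataclass(frozen=True)
class Outbreak:
    township: str  # hypothetical identifier for the reporting township
    start: date    # first report date of an outbreak-associated case
    end: date      # last report date of an outbreak-associated case


def outbreak_from_cases(township: str, report_dates: Iterable[date]) -> Outbreak:
    """Outbreak window spanning the first and last report dates of its cases."""
    dates = sorted(report_dates)
    return Outbreak(township, dates[0], dates[-1])


def is_detected(outbreak: Outbreak, signal_dates: Iterable[date]) -> bool:
    """True if any signal for the outbreak's township falls inside the window."""
    return any(outbreak.start <= d <= outbreak.end for d in signal_dates)
```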

We evaluated the algorithms in terms of sensitivity, false alarm rate, and time to detection [1,8,12]. Sensitivity was defined as the number of outbreaks flagged divided by the total number of reported outbreaks. The false alarm rate was defined as the number of non-outbreak days flagged divided by the total number of non-outbreak days. Time to detection was defined as the median number of days from the beginning of each outbreak to the first day on which the outbreak was flagged [4,11]. If the algorithm flagged the first day of an outbreak, the detection time for that outbreak was zero [4]. If an outbreak was not detected, its detection time was set to the total duration of the outbreak, so that the median could be calculated across all outbreaks [11]. Time to detection is therefore an integrated index that reflects both the timeliness and the sensitivity of an algorithm [4,11]. The optimal threshold for an algorithm was the one that gave the shortest time to detection. When several thresholds gave the same time to detection, the one with the lowest false alarm rate was chosen [4]. We used the Student t test to examine whether, during the non-epidemic season, false alarm rates differed significantly between the thresholds estimated for that season and those estimated for the entire year.
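The evaluation metrics and the threshold-selection rule can be sketched as follows. This Python illustration rests on several assumptions. The per-township dictionary layout is hypothetical. Non-outbreak days are counted per township (ie, over township-days). The duration assigned to an undetected outbreak is counted inclusively of its first and last days, since the paper does not specify how duration was counted.

```python
import statistics
from typing import Dict, List, Set, Tuple

Outbreak = Tuple[int, int]  # (first day, last day) as inclusive day indices


def first_signal_delay(outbreak: Outbreak, signals: Set[int]) -> Tuple[int, bool]:
    """Return (detection time in days, detected?) for one outbreak."""
    start, end = outbreak
    hits = [day for day in signals if start <= day <= end]
    if hits:
        return min(hits) - start, True  # 0 when the first outbreak day is flagged
    # Undetected: use the outbreak duration (counted inclusively here; an assumption).
    return end - start + 1, False


def evaluate(townships: List[Dict]) -> Dict[str, float]:
    """Sensitivity, false alarm rate and median time to detection.

    Each township is a dict (hypothetical layout) with keys
    'n_days' (int), 'signals' (set of flagged day indices) and
    'outbreaks' (list of Outbreak tuples).
    """
    delays: List[int] = []
    n_detected = n_outbreaks = false_alarms = non_outbreak_days = 0
    for township in townships:
        outbreak_days: Set[int] = set()
        for outbreak in township["outbreaks"]:
            outbreak_days.update(range(outbreak[0], outbreak[1] + 1))
            delay, detected = first_signal_delay(outbreak, township["signals"])
            delays.append(delay)
            n_detected += int(detected)
            n_outbreaks += 1
        non_outbreak_days += township["n_days"] - len(outbreak_days)
        false_alarms += len(township["signals"] - outbreak_days)
    return {
        "sensitivity": n_detected / n_outbreaks,
        "false_alarm_rate": false_alarms / non_outbreak_days,
        "time_to_detection": statistics.median(delays),
    }


def pick_optimal(results: Dict[float, Dict[str, float]]) -> float:
    """Shortest time to detection; ties broken by the lowest false alarm rate."""
    return min(results, key=lambda th: (results[th]["time_to_detection"],
                                        results[th]["false_alarm_rate"]))
```

In use, `evaluate` would be run once per candidate threshold and season. The results, keyed by threshold, would then be passed to `pick_optimal`. Ordering the key as (time to detection, false alarm rate) encodes the tie-breaking rule described above.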

Results

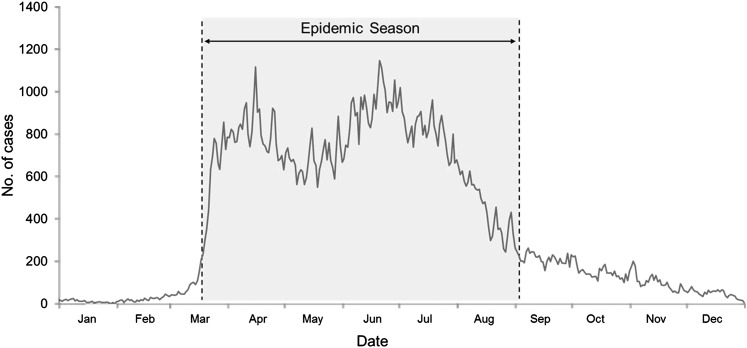

In 2009, 138 593 cases and 108 outbreaks of HFMD were reported in Shandong province. For this study, the epidemic season was defined as the period from March 20, 2009 to September 2, 2009 (167 days), during which the daily number of cases exceeded 200. The remaining days of the year were defined as the non-epidemic season (198 days) (figure 1). The epidemic season accounted for 120 853 cases (87.2%) and 97 outbreaks (89.8%).

Figure 1.

The hand-foot-and-mouth disease epidemic in 2009 in Shandong province, China. The epidemic season is the period from March 20, 2009 to September 2, 2009.
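The season split can be checked with standard-library date arithmetic. The short Python sketch below labels days by season, treating both boundary dates as inclusive. It reproduces the 167/198-day split and the 87.2% and 89.8% epidemic-season shares from the reported counts. The `season` helper is purely illustrative.

```python
from datetime import date

# Season boundaries reported in the Results (both dates inclusive).
EPIDEMIC_START = date(2009, 3, 20)
EPIDEMIC_END = date(2009, 9, 2)


def season(day: date) -> str:
    """Label a 2009 calendar day as epidemic or non-epidemic season."""
    return "epidemic" if EPIDEMIC_START <= day <= EPIDEMIC_END else "non-epidemic"


epidemic_days = (EPIDEMIC_END - EPIDEMIC_START).days + 1
year_days = (date(2009, 12, 31) - date(2009, 1, 1)).days + 1
non_epidemic_days = year_days - epidemic_days

# Shares of the 2009 totals that fall in the epidemic season.
case_share = 120_853 / 138_593
outbreak_share = 97 / 108

print(epidemic_days, non_epidemic_days)          # 167 198
print(f"{case_share:.1%} {outbreak_share:.1%}")  # 87.2% 89.8%
```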

When data for the whole year were considered, the optimal thresholds for C1, C2, and C3 were 0.2, 0.6, and 0.6, respectively. With these thresholds, the sensitivity during the epidemic season was 95.88% and the time to detection was 1 day for all three algorithms. C2 had the lowest false alarm rate (1.61%) and the fewest signals (5850) (table 1). During the non-epidemic season, the sensitivity of all three algorithms was 100% and the time to detection was 4 days. C1, C2, and C3 had false alarm rates of 0.13%, 0.12%, and 0.12%, respectively.

Table 1.

The number of signals, sensitivity, time to detection, and false alarm rates for the C1, C2, and C3 algorithms by epidemic and non-epidemic season, based on the optimal thresholds identified using data for the whole year

| Period | Algorithm | Optimal threshold | No. of signals | Sensitivity (%) | Time to detection (days) | False alarm rate (%) |
| --- | --- | --- | --- | --- | --- | --- |
| Epidemic season* | C1 | 0.2 | 6614 | 95.88 | 1.0 | 1.83 |
| | C2 | 0.6 | 5850 | 95.88 | 1.0 | 1.61 |
| | C3 | 0.6 | 6000 | 95.88 | 1.0 | 1.65 |
| Non-epidemic season* | C1 | 0.2 | 491 | 100 | 4.0 | 0.13 |
| | C2 | 0.6 | 456 | 100 | 4.0 | 0.12 |
| | C3 | 0.6 | 463 | 100 | 4.0 | 0.12 |

*The period from March 20, 2009 to September 2, 2009 was defined as the epidemic season (167 days). The rest of the year was defined as the non-epidemic season (198 days).

When data from the epidemic and non-epidemic seasons were considered separately, the optimal thresholds for C1, C2, and C3 during the epidemic season were 0.2, 0.6, and 0.6, respectively. These were the same thresholds as those identified from data for the whole year (table 2). For the non-epidemic season, the optimal threshold for all three algorithms was 2.2. Although this threshold was higher than those used during the epidemic season, the time to detection and sensitivity remained at 4 days and 100%, respectively. The false alarm rates (0.07% for C1 and C2, 0.08% for C3) were significantly lower than with the thresholds estimated for the entire year (C1: p<0.001; C2: p<0.001; C3: p<0.05). The number of signals also decreased substantially, from 491, 456, and 463 to 278, 295, and 328 for C1, C2, and C3, respectively.
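The size of the non-epidemic-season improvement can be checked with simple arithmetic on the values in Tables 1 and 2. The published rates are rounded to two decimals, so the relative reductions computed in this Python sketch are approximate. The variable names are illustrative.

```python
# Non-epidemic season values from Tables 1 and 2: (false alarm rate %, No. of signals).
WHOLE_YEAR = {"C1": (0.13, 491), "C2": (0.12, 456), "C3": (0.12, 463)}
SEASONAL = {"C1": (0.07, 278), "C2": (0.07, 295), "C3": (0.08, 328)}


def relative_reduction(before: float, after: float) -> float:
    """Fractional decrease from `before` to `after`."""
    return 1.0 - after / before


reductions = {
    alg: (relative_reduction(WHOLE_YEAR[alg][0], SEASONAL[alg][0]),
          relative_reduction(WHOLE_YEAR[alg][1], SEASONAL[alg][1]))
    for alg in WHOLE_YEAR
}

for alg, (far_drop, signal_drop) in reductions.items():
    print(f"{alg}: false alarm rate -{far_drop:.0%}, signals -{signal_drop:.0%}")
```

With these rounded inputs, the false alarm rate falls by roughly 46%, 42%, and 33% for C1, C2, and C3. The number of signals falls by roughly 43%, 35%, and 29%, respectively.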

Table 2.

The number of signals, sensitivity, time to detection, and false alarm rates for the C1, C2, and C3 algorithms by epidemic and non-epidemic season, based on the optimal thresholds identified separately for the epidemic and non-epidemic seasons

| Period | Algorithm | Optimal threshold | No. of signals | Sensitivity (%) | Time to detection (days) | False alarm rate (%) |
| --- | --- | --- | --- | --- | --- | --- |
| Epidemic season* | C1 | 0.2 | 6614 | 95.88 | 1.0 | 1.83 |
| | C2 | 0.6 | 5850 | 95.88 | 1.0 | 1.61 |
| | C3 | 0.6 | 6000 | 95.88 | 1.0 | 1.65 |
| Non-epidemic season* | C1 | 2.2 | 278 | 100 | 4.0 | 0.07 |
| | C2 | 2.2 | 295 | 100 | 4.0 | 0.07 |
| | C3 | 2.2 | 328 | 100 | 4.0 | 0.08 |

*The period from March 20, 2009 to September 2, 2009 was defined as the epidemic season (167 days). The rest of the year was defined as the non-epidemic season (198 days).

Discussion

In configuring aberration detection algorithms, surveillance analysts aim to minimize the false alarm rate while maintaining timely and sensitive detection. Our study showed that, for an infectious disease with seasonal variation, the optimal thresholds of the C1, C2, and C3 aberration detection algorithms differed between the epidemic and non-epidemic seasons. Using alerting thresholds optimized for each season significantly reduced the false alarm rate during the non-epidemic season without any loss of timeliness or sensitivity.

For diseases with marked seasonality, the numbers of cases and outbreaks differ greatly between the epidemic and non-epidemic seasons. The scale of outbreaks and the characteristics of outbreak-related cases may also differ between seasons. Together, these factors help explain why the optimal alerting threshold of an algorithm would differ between the epidemic and non-epidemic seasons.

In our study, C1, C2, and C3 were evaluated against a large number of reported HFMD cases and outbreaks. With optimal thresholds, they showed high sensitivity (>95%), a low false alarm rate (<2%), and a short time to detection (1 day in the epidemic season, 4 days in the non-epidemic season). We therefore believe these three methods can be used with seasonally adjusted alert thresholds to detect outbreaks of infectious diseases when as little as 1 year of historical surveillance data is available.

The use of epidemiologically confirmed outbreaks as the reference standard was a strength of our study. It is likely that not all outbreaks were reported. Even so, real data reflect the true characteristics of cases and outbreaks, such as epidemic trends, seasonal cycles, day-of-the-week variation, and holiday effects. When real data are available, we believe that using them for evaluation yields a more realistic reference standard than simulated outbreaks.

One limitation of this study is that the optimal thresholds were neither cross-validated nor evaluated prospectively with data from outside the study period. Because case data were available for only 1 year, we used the real outbreaks from the same period both to identify and to validate the optimal thresholds, an approach also adopted by other researchers [4,5]. The optimal algorithms and thresholds can be refined further through cross-validation as more data become available.

The definition of the epidemic and non-epidemic seasons may be another limitation of the study. Epidemic periods were identified through consultation with local epidemiologists and review of cases, without taking historical patterns of the disease into account. We used this approach because historical data were limited. However, we expect that epidemiologists draw on their experience with historical outbreaks when identifying the timing of an epidemic in a given year.

Because the optimal algorithms and thresholds identified in our study are based on a retrospective analysis of data from one specific region, these thresholds should not be expected to give optimal performance in other regions. Our findings do, however, suggest a general approach to improving the performance of outbreak detection methods. This approach is especially relevant for infectious diseases with seasonal variation and when historical data are limited, a situation often encountered in public health practice [8,11,13].

Acknowledgments

We thank Mr Liu Jizeng and Mr Liu Wenhua for assisting with data collection and collation.

Footnotes

Funding: This study was supported by a grant from the National Key Science and Technology Project on Infectious Disease Surveillance Technique Platform of China (2009ZX10004-201) and the Research of Early Detection and Automatic Warning Technology of Infectious Disease Outbreaks in China (2006BAK01A13, 2008BAI56B02).

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Moore AW, Wagner MM, Aryel RM. Handbook of Biosurveillance. Burlington: Elsevier, 2006:217–33.

2. Hutwagner LC, Maloney EK, Bean NH, et al. Using laboratory-based surveillance data for prevention: an algorithm for detecting Salmonella outbreaks. Emerg Infect Dis 1997;3:395–400.

3. Widdowson MA, Bosman A, Straten E, et al. Automated, laboratory-based system using the internet for disease outbreak detection, the Netherlands. Emerg Infect Dis 2003;9:1046–52.

4. Wang X, Zeng D, Seale H, et al. Comparing early outbreak detection algorithms based on their optimized parameter values. J Biomed Inform 2010;43:97–103.

5. Buckeridge DL, Okhmatovskaia A, Tu S, et al. Understanding detection performance in public health surveillance: modeling aberrancy-detection algorithms. J Am Med Inform Assoc 2008;15:760–9.

6. Hutwagner L, Thompson W, Seeman GM, et al. The bioterrorism preparedness and response Early Aberration Reporting System (EARS). J Urban Health 2003;80:i89–96.

7. Shmueli G, Burkom H. Statistical challenges facing early outbreak detection in biosurveillance. Technometrics 2010;52:39–51.

8. Hutwagner L, Browne T, Seeman GM, et al. Comparing aberration detection methods with simulated data. Emerg Infect Dis 2005;11:314–16.

9. R Development Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing, 2008. ISBN 3-900051-07-0. http://www.R-project.org/

10. Chinese Centers for Disease Control and Prevention. Guideline of Hand-Foot-Mouth Disease Control and Prevention, 2009. http://www.chinacdc.cn/n272442/n272530/n3479265/n347930831860.html (accessed 20 Nov 2010).

11. Watkins RE, Eagleson S, Veenendaal B, et al. Applying cusum-based methods for the detection of outbreaks of Ross River virus disease in Western Australia. BMC Med Inform Decis Mak 2008;8:37.

12. Watkins RE, Eagleson S, Hall RG, et al. Approaches to the evaluation of outbreak detection methods. BMC Public Health 2006;6:263.

13. Straetemans M, Altmann D, Eckmanns T, et al. Automatic outbreak detection algorithm versus electronic reporting system. Emerg Infect Dis 2008;14:1610–12.