Abstract

Objective

We evaluate the effects of the Nursing Home Quality Initiative (NHQI), which introduced quality measures to the Centers for Medicare and Medicaid Services' Nursing Home Compare website, on facility performance and consumer demand for services.

Data Sources

The nursing home Minimum Data Set facility reports from 1999 to 2005 merged with facility-level data from the On-Line Survey, Certification, and Reporting System.

Study Design

We rely on the staggered rollout of the report cards across pilot and nonpilot states to examine the effect of report cards on market share and quality of care. We also exploit differences in nursing home market competition at baseline to identify the impacts of the new information on nursing home quality.

Results

The introduction of the NHQI was generally unrelated to facility quality and consumer demand. However, nursing homes facing greater competition improved their quality more than facilities in less competitive markets.

Conclusions

The lack of competition in many nursing home markets may help to explain why the NHQI report card effort had a minimal effect on nursing home quality. With the introduction of market-based reforms such as report cards, this result suggests policy makers must also consider market structure in efforts to improve nursing home performance.

Keywords: Quality of care, nursing homes, report cards, competition

It is well known that the lack of available consumer information about product quality can lead to poor market outcomes (Akerlof 1970). If the quality of a good is difficult to assess, consumers and sellers may have difficulty agreeing on a price. Furthermore, if consumers have difficulty assessing quality, then it diminishes the incentive for firms to invest in improving quality. Asymmetric information about quality is present in health care markets (Arrow 1963), and the lack of quality information in the nursing home industry is thought to be particularly acute (Hirth 1999; Chou 2002). Although nursing home care is fairly nontechnical in nature, monitoring of care can often be difficult, and the learning period may be nontrivial relative to the length of stay in some instances. The patient is often neither the decision maker nor able to easily evaluate quality or communicate concerns to family members and staff.

Since the late 1980s, there has been increasing interest in providing useful information to consumers regarding the quality of care across the medical care sector. This interest has given rise to several public and private provider health plan report cards, including nursing home report card initiatives. However, the overall welfare implications of these report card efforts are unclear. The issuance of report cards may change the incentives of a nursing home to invest in quality, but it is uncertain what the net impact of the change in incentives will be on the quality of care. On the one hand, report cards may empower consumers to make more informed choices and increase quality competition among providers. However, report cards may also increase market power on the part of providers, which may ultimately decrease quality of care (Miller 2006). Failure to account for the underlying market structure in constructing quality policy initiatives may doom these initiatives to irrelevancy. This paper examines the introduction of nursing home report cards by the Centers for Medicare and Medicaid Services (CMS) on nursing home quality and the demand for nursing home care.

BACKGROUND

Conceptual Framework

The underlying economic theory that more quality information improves consumer welfare dates back to Akerlof (1970). In his model, the presence of asymmetric information leads the market to fail in the sense that welfare-improving trades between suppliers and consumers fail to occur. Akerlof also shows that there exists a price premium in the presence of asymmetric information. Prices are higher because of these informational asymmetries. Akerlof compared a world with asymmetric information to one with perfect information. Levin (2001) explores the impact of a reduction in the asymmetry of information and shows that an increase in the buyer's information improves the opportunities for trade and hence welfare. Thus, the proposition that more information can lead to better market outcomes has some theoretical support.

The theoretical relationship between information, competition, and quality is complex. Gaynor (2006) concludes that the relationship between quality and competition is theoretically ambiguous in settings where prices are set by providers. When prices are administratively set and greater than marginal cost, however, greater competition will increase the quality of health care. Miller (2006) nonetheless notes that administratively set prices may lead to a counterintuitive effect of report cards on quality. Specifically, Miller shows that when there are relatively few competitors and health care providers treat heterogeneous populations with different and often administered prices, changing the information structure may not lead to improved quality. Indeed, greater quality information can reduce performance if there is large cost heterogeneity across nursing home residents, administered pricing, and greater quality responsiveness among the high-cost residents (Miller 2006). Quality information is expected to positively impact provider behavior when the appropriate market incentives are in place. Specifically, the impact of a reduction in informational asymmetries on a market depends upon two important environmental variables: the marginal profit that a facility receives from increasing the number of residents it admits and the facility elasticity of demand for quality, which is a function of the market structure (i.e., competitiveness) (Scanlon et al. 2008). Toward the first factor, most nursing homes receive the majority of their revenues from Medicaid and Medicare enrollees, and thus have limited control over their marginal profits. Toward the second factor, report cards are more likely to improve quality when the providers in a market are more homogeneous (i.e., providers have similar cost structures and market share). Thus, we hypothesize that the introduction of report cards will have the largest positive impact on quality of care in more competitive markets.

Introduction of Nursing Home Report Cards

In October 1998, CMS introduced a web-based nursing home report card initiative, “Nursing Home Compare” (http://www.medicare.gov/NHCompare), in order to improve consumer information. In addition to information on facility characteristics (e.g., size, ownership status) and location, Nursing Home Compare reports data on various dimensions of quality. The initial report cards introduced in 1998 included only reports of deficiencies, but CMS has increasingly expanded the quality information available on the website. Information on professional and nurse aide staffing was introduced on the website in June 2000.

The Nursing Home Quality Initiative (NHQI) in 2002 introduced quality indicators (QIs) to the Nursing Home Compare website. CMS piloted the NHQI in six states—Colorado, Florida, Maryland, Ohio, Rhode Island, and Washington—beginning April 24 and then introduced the NHQI nationally on November 12. Ten QIs in total, seven chronic care measures and three postacute measures, were released nationally as part of the NHQI. In January 2004, several additional QIs were introduced on the website. In December 2008, the website added a new “five-star” reporting system, which will be described in more detail in the final section of the paper. The exact timing and nature of the quality report cards as they relate to long-stay nursing home residents are summarized in Table 1.

Table 1.

Summary of Nursing Home Compare Changes for Long-Stay Measures

| Timing | Change |
|---|---|
| October 1998 | Launch of Nursing Home Compare; website contains facility characteristics (e.g., ownership) and health-related deficiencies |
| June 2000 | Nurse staffing and nurse aide data added to Nursing Home Compare website |
| April 2002 | Nursing Home Quality Initiative (NHQI) launched in six pilot states adding quality indicators measuring the percentage of residents with the following: loss of ability in basic daily activities; infections; pain; pressure sores (high risk); pressure sores (low risk); physical restraints; and excessive weight loss |
| November 2002 | NHQI launched nationally (using all the pilot measures except the weight loss measure) |
| January 2004 | Quality measures added to the NHQI include the percentage of residents who had the following characteristics: spent most of their time in bed or in a chair; ability to move about in and around their room got worse; have become more depressed or anxious; lose control of their bowels or bladder (low risk); have/had a catheter inserted and left in their bladder |
| November 2004 | Weight loss measure added to the Nursing Home Compare website |
| December 2008 | Nursing Home Compare adds a five-star rating system with an overall star rating and then specific star ratings based on the facility's inspection, staffing, and quality of care |

In addition to the release of QIs on the Nursing Home Compare website, CMS embarked on a media campaign aimed to increase public awareness of the website as a principal source of nursing home quality information. In the six pilot states, CMS ran both newspaper and television advertisements. In November 2002 for the national NHQI rollout, CMS placed advertisements in 71 newspapers across all 50 states and also promoted Nursing Home Compare in national television advertisements. The state Quality Improvement Organizations were also given the responsibility to promote awareness and use of Nursing Home Compare, and to provide assistance to nursing homes that seek to improve performance in the publicly reported quality dimensions. Finally, under the NHQI, the State Offices of the Long-Term Care Ombudsman were to assist residents, family members, concerned citizens, and others with the use of quality measures in nursing home selection.

Although our results will evaluate whether the Nursing Home Compare report card effort influenced quality and market share, a necessary “first stage” is that consumers and providers use Nursing Home Compare. Among consumers, Nursing Home Compare's popularity increased greatly following the national rollout of the NHQI. Before the media campaign and launch of the quality measures, the Nursing Home Compare website received fewer than 100,000 visits per month, but in November 2002, Nursing Home Compare received about 400,000 visits (Office of Inspector General 2004). Similarly, CMS data indicated a much larger growth in the number of website searches and calls to 1-800-MEDICARE in the six pilot states relative to the nonpilot states in the immediate time period around the April 24, 2002 release of the QI data in the pilot states (Rollow 2002).

On the provider side, a CMS-sponsored survey of nursing homes in the NHQI pilot states indicated that 88 percent of facilities had familiarity with Nursing Home Compare (KPMG Consulting 2003). In response to the quality report cards, 46 percent of facilities said that they changed their unit-level clinical process, and 78 percent said that they have changed, or plan to change, their quality improvement activities. Similarly, in a four-state survey of nursing home administrators, Castle (2005) found that 90 percent of the administrators had examined the Nursing Home Compare website, 51 percent stated that they would be using the information for quality improvement in the future, and 33 percent stated that they were using Nursing Home Compare information in quality-improvement initiatives.

Previous Report Card Literature

Public initiatives to report health care provider information date back at least to the 1980s, when Medicare reported mortality rates for hospitals (Mennemeyer, Morrisey, and Howard 1997). Over the intervening decades, several different efforts by public and private agencies have attempted to objectively measure and report the quality of care provided by hospitals, physicians, and health plans. These efforts have met with mixed success in terms of altering consumer behavior or improving performance (Epstein 1998; Marshall et al. 2000; Mukamel and Mushlin 2001; Schauffler and Mordavsky 2001; Dafny and Dranove 2005; Werner and Asch 2005; Jin and Sorenson 2006; Kolstad 2009).

Research has begun to examine the implications of the introduction of the Nursing Home Compare website. In examining the initial report card effort (before the NHQI), Stevenson (2006) found little effect of reported staffing and deficiencies on facility occupancy rates. Zinn et al. (2005) examined trends in postacute and long-stay quality measures following the national release of the NHQI. Certain measures exhibited improvement, while others showed little change. Werner et al. (2009a) found that both reported and unreported measures of postacute quality generally improved following the introduction of Nursing Home Compare in 2002. Interestingly, the improvement largely occurred among those facilities that were strong at baseline, with low-scoring facilities experiencing no change or a worsening of their unreported quality of care. In related work, Werner et al. (2009b) used the 15 percent of small nursing homes that were not subject to public reporting as a contemporaneous control and found that the introduction of the federal report card effort improved two out of four postacute measures of nursing home quality when examining mean impacts over a 3-year window.

Mukamel et al. (2007) surveyed a random sample of roughly 700 nursing home administrators to examine their initial reaction to Nursing Home Compare. A majority of facilities (69 percent) reported reviewing their quality scores regularly, and many reported having taken specific actions to improve quality. Facilities with poor quality scores were more likely to take action following publication of the report card. In a follow-up study, the same team linked the actions taken by the nursing home administrators in response to Nursing Home Compare with five reported quality measures using a pre/post study design (Mukamel et al. 2008). Two of the five measures showed improvement following publication on the website, and several specific actions were found to be associated with these improvements.

Our Contribution

Collectively, these previous studies show a modest response to the introduction of Nursing Home Compare. The current study offers two contributions to the nursing home report card literature. First, rather than relying on simple pre–post differences, we identify the overall effect of Nursing Home Compare based on the differential timing of the introduction of the report card in the six pilot states relative to the rest of the country. It is well known that a pre/post identification strategy will lead to misleading inferences if the econometric model excludes any time-varying factors (e.g., payment rates, wages, demand) that affect quality. Unlike many of the earlier studies, our strategy has the advantage that the treatment group is plausibly quasi-random and the control group of facilities is likely “unaffected” by Nursing Home Compare.

The potential challenges associated with this approach include the assumption that nursing homes in the pilot states are similar to nursing homes in the nonpilot states, and the assumption that nursing homes in nonpilot states did not anticipate the release of these measures. Moreover, the relatively short 7-month period between the NHQI pilot and the full rollout may not provide sufficient time to observe a differential response across pilot and nonpilot states. We discuss these trade-offs with our approach in more detail below.

Second, this paper examines whether the response to Nursing Home Compare varies based on the competitiveness of the local market. One potential explanation for the modest findings in previous studies is that the nursing home market may not be very competitive in many parts of the country, leaving facilities with little incentive to improve quality. Our work examines the role of market concentration in mediating nursing homes' responses to the Nursing Home Compare report cards.

METHODS

Data

We utilized two sources of nursing home data in this study. First, we obtained quality information from the Minimum Data Set (MDS). The MDS is designed to assess resident functional, cognitive, and affective levels. The MDS has demonstrated good reliability and validity in measuring nursing home quality at the resident level (Morris et al. 1997). Nursing homes have been required to submit these data electronically since June 1998. MDS QIs were developed from the MDS as part of the nursing home case mix and quality demonstration. MDS QIs are facility-level indicators for use by state surveyors to monitor changes in residents' health status and care outcomes and to identify potential problem areas at particular facilities. Some of the 24 MDS QIs are stratified by risk, whereas others are not. MDS QIs have shown good reliability in identifying potential quality problems (Zimmerman et al. 1995; Karon, Sainfort, and Zimmerman 1999). The quality data currently available on the CMS Nursing Home Compare website are derived from the MDS, and a strong similarity exists between the MDS QIs and the information available on the website.1

For this study, we accessed the MDS facility reports submitted by the facilities to CMS. These facility-level data are reported monthly and provide the proportion of residents in the numerator and denominator for the QIs. Because all residents are surveyed once per quarter, we aggregated the monthly QI data up to the quarter level. Thus, we have facility-level QI data across 25 quarters (first quarter of 1999 through the first quarter of 2005). With an average of 15,553 nursing homes surveyed per quarter, our dataset contains 388,813 facility-quarters in total.
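The quarterly aggregation step can be illustrated with a minimal sketch. The text does not say how the monthly reports were combined, so this example assumes that monthly numerators and denominators are pooled within each facility-quarter before the proportion is taken. The function names, the tuple layout, and the sample counts are all hypothetical.

```python
from collections import defaultdict


def quarter_of(month):
    """Map a calendar month (1-12) to its quarter (1-4)."""
    return (month - 1) // 3 + 1


def aggregate_to_quarters(monthly_rows):
    """Pool monthly QI counts into facility-quarter proportions.

    monthly_rows: iterable of (facility_id, year, month, numerator, denominator).
    Returns {(facility_id, year, quarter): proportion}.
    Assumption: counts are summed within the quarter (not averaged as rates).
    """
    totals = defaultdict(lambda: [0, 0])
    for facility_id, year, month, numerator, denominator in monthly_rows:
        key = (facility_id, year, quarter_of(month))
        totals[key][0] += numerator
        totals[key][1] += denominator
    # Skip facility-quarters with no residents in the denominator.
    return {key: num / den for key, (num, den) in totals.items() if den > 0}


# Illustrative counts only; these are not values from the MDS.
rows = [
    ("A", 1999, 1, 4, 40),
    ("A", 1999, 2, 6, 40),
    ("A", 1999, 3, 5, 45),
    ("A", 1999, 4, 3, 30),
]
quarterly = aggregate_to_quarters(rows)
```

Averaging the three monthly rates instead would weight months equally regardless of census, which is a different design choice from the pooling assumed here.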

We analyze the following five measures introduced as part of the NHQI in 2002: loss of ability in basic daily activities, infections, pressure sores (high risk), pressure sores (low risk), and physical restraints. We exclude the three postacute measures (delirium, pressure ulcers, pain) and one long-stay measure (pain) because we are not able to create these measures in the MDS QI/QM reports for the pre-NHQI period. We exclude the weight loss measure because it was introduced in the pilot states, but ultimately not released in the national NHQI rollout in November 2002 (see Table 1).

The second source of nursing home data is the On-Line Survey, Certification and Reporting (OSCAR) system. The OSCAR system contains information from state surveys of all federally certified Medicaid (nursing facilities) and Medicare (skilled nursing care) homes in the United States. Certified nursing homes represent almost 96 percent of all facilities nationwide (Strahan 1997). Collected and maintained by the CMS, the OSCAR data include information about whether nursing homes are in compliance with federal regulatory requirements. Every facility is required to have an initial survey to verify compliance. Thereafter, states are required to survey each facility no less often than every 15 months, and the average is about 12 months (Harrington et al. 1999).

Using the OSCAR, we also formulated two market-based measures. For each facility in our sample, we define a nursing home market to be the circle with a 25 km radius centered on the facility. Our market-based measures take into account joint ownership of facilities within markets for the 100 largest chain-owned facilities. That is, any facilities with common ownership within a geographic area are not considered to be in competition with one another. The first measure, market share, is used as a dependent variable in our regressions (N = 84,661). In order to take account of skewness in this measure, we use the natural logarithm of nursing home market share. For the MDS quality regressions, we also construct a measure of market competition using a Herfindahl–Hirschman index (HHI). This index is constructed by summing the squared market shares of all facilities in a market at baseline (1999). The index ranges from 0 to 1, with higher values signifying a higher concentration of facilities (i.e., less competition). In order to test the robustness of our results, we examined the impact of calculating the market share or HHI measures using the county as the geographic market boundary, or calculating the market share or HHI based on particular payer types (private, Medicaid, Medicare). Our findings are insensitive to these alternate measures. We also obtained a series of control measures from the OSCAR including ownership status (for-profit, nonprofit, government), chain membership, hospital-based status, bed size, and the average activities of daily living score.
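The two market measures described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the procedure actually applied to the OSCAR data: shares are assumed to be shares of residents within a single market, commonly owned facilities are assumed to be pooled into one competitor, and the function names, facility labels, and counts are hypothetical.

```python
import math
from collections import defaultdict


def competitor_shares(residents_by_facility, owner_by_facility=None):
    """Return each competitor's share of residents in one market.

    Facilities listed in owner_by_facility are pooled under their owner,
    so commonly owned facilities do not count as competing with each other.
    """
    owner_by_facility = owner_by_facility or {}
    residents_by_competitor = defaultdict(float)
    for facility, residents in residents_by_facility.items():
        competitor = owner_by_facility.get(facility, facility)
        residents_by_competitor[competitor] += residents
    total = sum(residents_by_competitor.values())
    return {c: n / total for c, n in residents_by_competitor.items()}


def hhi(shares):
    """Herfindahl-Hirschman index: the sum of squared market shares."""
    return sum(share ** 2 for share in shares.values())


# Hypothetical market with four facilities; A and B belong to the same chain.
residents = {"A": 50, "B": 30, "C": 10, "D": 10}
owners = {"A": "chain_1", "B": "chain_1"}

independent = competitor_shares(residents)
pooled = competitor_shares(residents, owners)

hhi_independent = hhi(independent)  # 0.25 + 0.09 + 0.01 + 0.01
hhi_pooled = hhi(pooled)            # 0.64 + 0.01 + 0.01
log_share_chain = math.log(pooled["chain_1"])  # log market share, as in the demand model
```

Pooling the chain raises the index in this example, which shows why ignoring common ownership would overstate competition in markets dominated by a single chain.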

In sum, our nursing home data span the period 1999 through the first quarter of 2005. Thus, our analysis period stretches from well before the introduction of the NHQI report cards through their introduction and maturation. Tables 2a and 2b summarize the two primary data files: Table 2a presents the summary statistics for the OSCAR-based analysis of market share, and Table 2b presents the summary statistics for the MDS-based analysis of quality.

Table 2a.

Summary Statistics, 1999–2004: Nursing Home Market Share Model (N = 84,661)

| Variable | Mean | SD |
|---|---|---|
| Government owned | 0.053 | 0.224 |
| Nonprofit | 0.28 | 0.45 |
| For-profit | 0.67 | 0.47 |
| Chain member | 0.56 | 0.50 |
| Hospital based | 0.089 | 0.285 |
| Average ADL score | 3.83 | 0.59 |
| Beds: 0–49 | 0.12 | 0.33 |
| Beds: 50–99 | 0.35 | 0.48 |
| Beds: 100–149 | 0.33 | 0.47 |
| Beds: 150–199 | 0.12 | 0.33 |
| Beds: 200+ | 0.082 | 0.275 |
| Market share | 0.15 | 0.16 |

Notes. The unit of analysis is the roughly annual OSCAR survey for the period 1999–2004.

OSCAR, On-Line Survey, Certification, and Reporting.

Table 2b.

Summary Statistics, 1999–2005 1st Quarter: Quality Indicator Models (N = 388,813)

| Variable | Mean | SD |
|---|---|---|
| Herfindahl–Hirschman Index in 1999 | 0.22 | 0.23 |
| Government owned | 0.063 | 0.24 |
| Nonprofit | 0.28 | 0.45 |
| For-profit | 0.65 | 0.48 |
| Chain member | 0.54 | 0.50 |
| Hospital based | 0.12 | 0.32 |
| Average ADL score | 3.81 | 0.61 |
| Beds: 0–49 | 0.16 | 0.37 |
| Beds: 50–99 | 0.35 | 0.48 |
| Beds: 100–149 | 0.31 | 0.46 |
| Beds: 150–199 | 0.11 | 0.31 |
| Beds: 200+ | 0.078 | 0.27 |
| Urinary tract infection | 0.089 | 0.082 |
| Activities of daily living loss | 0.17 | 0.11 |
| Physical restraint | 0.092 | 0.10 |
| Pressure ulcer (high risk) | 0.16 | 0.12 |
| Pressure ulcer (low risk) | 0.036 | 0.073 |

Notes. The unit of analysis is the facility quarter. We have 25 quarters of data with an average of 15,553 nursing homes in any given quarter.

Empirical Strategy

A key issue in studying report cards is the construction of a valid comparison group that is “unaffected” by the report card initiative. Many analyses of nursing home reports are identified off of a pre–post difference, which leaves open the possibility that the findings are an artifact of some other unmeasured factor. A key source of variation in the introduction of Nursing Home Compare is the six-state NHQI pilot, which was introduced 6 months ahead of the national rollout in 2002. As such, we focus our analyses around the measures introduced as part of the NHQI rather than the measures introduced nationally in other years. We analyze the effect of the introduction of the NHQI on the demand for nursing home care and the overall quality of care.

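In order to investigate the impact of Nursing Home Compare (NHC) on the demand for nursing home care, we estimate the following differences-in-differences (DD) equation:

$$\ln(\text{share})_{ist} = \beta_1 \text{NHC}_{st} + \beta_2 (\text{High}_i \times \text{NHC}_{st}) + X_{ist}\delta + \eta_i + \lambda_t + \varepsilon_{ist} \tag{1}$$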

where $\ln(\text{share})_{ist}$ is the logarithm of the nursing home market share (defined by the 25 km fixed radius about the nursing home) for nursing home $i$ in state $s$ at time $t$, $\text{High}_i$ is an indicator of whether the facility ranked in the top quartile at baseline based on a specific report card measure (e.g., urinary tract infections), $\text{NHC}_{st}$ is a dummy variable corresponding to the rollout of Nursing Home Compare across the pilot and nonpilot states, $X_{ist}$ is a set of time-varying characteristics, $\eta_i$ and $\lambda_t$ are nursing home and time fixed effects, and $\varepsilon_{ist}$ is the error term. Importantly, the main effect of the time-invariant High indicator is captured by the facility dummies. We also estimate a similar model that replaces the dummy variable High with a variable Low, indicating whether the facility ranked in the bottom quartile at baseline on the specific report card measure. A potential limitation of the traditional DD model is that it does not account for unobserved factors (e.g., demographic shifts) that are correlated with the early report card adoption within states. Thus, we also introduced state-specific linear time trend variables ($t \cdot v_s$) as a means of addressing omitted variable bias. In order to account for potential “ceiling effects” at high occupancy facilities, we ran this model both for all facilities and for the subset of facilities with below-median occupancy at baseline. The parameters for equation (1) are generated by running a separate regression for each QI. This strategy is similar to the one used by Dafny and Dranove (2005) to estimate the impact of the release of Medicare HMO quality information to Medicare beneficiaries.

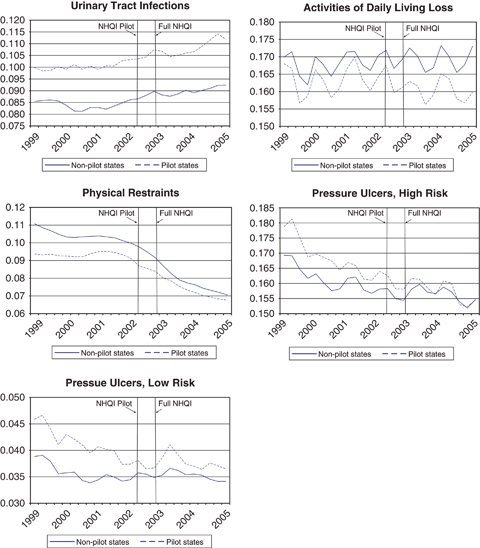

Although the DD approach has the advantage of comparing outcomes across treatment and control facilities, this approach must meet the identifying assumptions that nursing homes in the pilot states are similar to nursing homes in nonpilot states, and the assumption that nursing homes in nonpilot states did not anticipate the release of these measures, especially given the 6-month period between the NHQI pilot and the full rollout. Toward the first assumption, observables look relatively similar across nursing homes in the pilot and nonpilot states (results available upon request), and the pre-NHQI trends in quality look similar across pilot and nonpilot states (see Figure 1). In our analyses of the OSCAR, we also gain an additional source of identification in that the calendar year timing of the OSCAR survey introduces additional variation outside the control of the participating facilities.2 Toward the second assumption, we did not observe a major shift in the quality trend in the nonpilot states during the 6-month pilot NHQI (see Figure 1). A related concern is the relatively short response period between the pilot and the national rollout of Nursing Home Compare. Across a number of studies, nursing homes have been shown to respond relatively quickly to federal and state policy changes (Grabowski 2008). For example, Konetzka et al. (2004) use the fiscal year timing of the Medicare skilled nursing facility Prospective Payment System to help identify changes in staffing and deficiencies. Regardless, as suggested by Figure 1, any response lag present in the pilot states will also be present in the nonpilot states.

Figure 1.

Trends in Nursing Home Compare Quality Measures across Nursing Home Quality Initiative (NHQI) Pilot and Nonpilot States (1999–2005q1)

We next estimate the following DD equation to examine the effect of the NHQI on quality of care:

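$$Y_{ist} = \beta_1 \text{NHC}_{st} + X_{ist}\delta + \eta_i + \lambda_t + t \cdot v_s + \varepsilon_{ist} \tag{2}$$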

where $Y_{ist}$ is the quality outcome of interest in nursing home $i$ in state $s$ at time $t$, $X_{ist}$ is a set of time-varying characteristics, $\eta_i$ and $\lambda_t$ are nursing home and time fixed effects, $t \cdot v_s$ are state-specific linear time trends, and $\varepsilon_{ist}$ is the error term. Once again, this DD model is identified off of the staggered introduction of the NHQI within the six pilot states. That is, we compare the early adoption of the report cards in the six pilot (treatment) states relative to the later adopting “control” states.
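The logic of the pilot versus nonpilot comparison can be illustrated with the simplest two-group, two-period version of a DD estimate. This sketch is not the fixed-effects regression estimated in the paper: it omits covariates, facility and time fixed effects, and state trends, and the function name and all numbers are hypothetical. Here "post" stands for the window in which only the pilot states had the new quality measures.

```python
from statistics import mean


def did_estimate(observations):
    """Two-by-two differences-in-differences from cell means.

    observations: iterable of (is_pilot, is_post, outcome) tuples.
    Returns (pilot post - pilot pre) - (nonpilot post - nonpilot pre).
    """
    cells = {(group, period): [] for group in (0, 1) for period in (0, 1)}
    for is_pilot, is_post, outcome in observations:
        cells[(int(is_pilot), int(is_post))].append(outcome)
    cell_mean = {key: mean(values) for key, values in cells.items()}
    pilot_change = cell_mean[(1, 1)] - cell_mean[(1, 0)]
    nonpilot_change = cell_mean[(0, 1)] - cell_mean[(0, 0)]
    return pilot_change - nonpilot_change


# Illustrative QI proportions only; these are not estimates from the data.
sample = [
    (1, 0, 0.10), (1, 0, 0.12),  # pilot states, before the pilot launch
    (1, 1, 0.08), (1, 1, 0.10),  # pilot states, during the pilot window
    (0, 0, 0.11), (0, 0, 0.13),  # nonpilot states, before
    (0, 1, 0.10), (0, 1, 0.12),  # nonpilot states, during the pilot window
]
effect = did_estimate(sample)
```

In this made-up sample the QI falls by 0.02 in pilot states and by 0.01 in nonpilot states, so the DD estimate attributes a decline of 0.01 to the report cards; the common decline is differenced out as a shared time trend.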

Our final model investigates whether the magnitude of the informational impact of report cards is influenced by the amount of competition a facility faces in its local market. The idea is that facilities in more competitive areas have a greater incentive to increase quality in response to report cards or otherwise lose market share, while facilities in less competitive markets face fewer repercussions of not responding to a poor quality report. A similar identification strategy has been used to examine the effect of national Medicare payment changes across high- and low-Medicare providers (e.g., Konetzka et al. 2004; Acemoglu and Finkelstein 2008). The basic model specification is as follows:

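$$Y_{ist} = \beta_1 \text{NHC}_{st} + \beta_2 (\text{NHC}_{st} \times \text{HHI}_i) + X_{ist}\delta + \eta_i + \lambda_t + t \cdot v_s + \varepsilon_{ist} \tag{3}$$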

where the model is identical to equation (2) with the exception of the introduction of the interaction term $\text{NHC}_{st} \times \text{HHI}_i$.3 Specifically, we interact the NHC variable with the HHI at baseline given concerns that a contemporaneous measure would be endogenous. The time-invariant, baseline HHI measure is not affected by either future policy changes or contemporaneous changes in quality. The results are robust to using a 1-year lagged HHI measure instead. Given the lack of within-facility variation in the HHI over time (within-facility SD = 0.046), we opted to use the baseline measure.
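As a reading aid, the competition interaction can be written out as the implied report card effect for a facility with a given baseline concentration. The coefficient labels $\beta_1$ (on the report card dummy) and $\beta_2$ (on its interaction with baseline HHI) are notation assumed here for illustration. Holding the other terms fixed, switching the report card dummy from 0 to 1 changes the transformed QI by

$$E[Y_{ist} \mid \text{NHC}_{st} = 1] - E[Y_{ist} \mid \text{NHC}_{st} = 0] = \beta_1 + \beta_2\,\text{HHI}_i$$

so $\beta_1$ is the effect as the HHI approaches 0 (a highly competitive market) and $\beta_1 + \beta_2$ is the effect for a monopolist (an HHI of 1). At the sample mean HHI of 0.22 reported in Table 2b, the implied effect is $\beta_1 + 0.22\,\beta_2$. Because the QIs analyzed here measure adverse outcomes, lower values of $Y$ indicate better quality; the hypothesis that facilities in more competitive markets improve more therefore corresponds to $\beta_2 > 0$.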

The nursing home demand model (equation [1]) is estimated via least squares with the standard errors clustered at the market level. Because the QIs are represented as a percentage of residents in equations (2) and (3), we use the logit transformation, so dependent variables are of the form

$$\ln\left(\frac{P_i}{1 - P_i}\right)$$

where $P_i$ represents the proportion of residents of nursing home $i$ with the condition measured by the QI. Because the logit transformation is undefined when the proportion equals either zero or one, zero values were recoded as 0.0001 and values of one were recoded as 0.9999. In all our quality models, we cluster our standard error estimates at the level of the facility.
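The transformation and the recoding of boundary values can be sketched as follows. The recoding constants come from the text; the function name and the `eps` parameter are hypothetical, and only exact zeros and ones are recoded, as described above.

```python
import math


def logit_qi(proportion, eps=0.0001):
    """Logit of a QI proportion, recoding 0 as eps and 1 as 1 - eps."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie in [0, 1]")
    if proportion == 0.0:
        proportion = eps        # the logit is undefined at exactly zero
    elif proportion == 1.0:
        proportion = 1.0 - eps  # and at exactly one
    return math.log(proportion / (1.0 - proportion))


at_half = logit_qi(0.5)  # the log of odds of one, i.e. zero
at_zero = logit_qi(0.0)  # finite, thanks to the recoding
at_one = logit_qi(1.0)   # mirror image of at_zero
```

The choice of 0.0001 matters at the boundaries: a facility-quarter with no affected residents is mapped to a logit of roughly -9.2, far from the bulk of the distribution, so results may be worth checking against alternative recoding values.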

RESULTS

First, we estimate the impact of the interaction between the baseline high- and low-quality indicators and Nursing Home Compare on the logarithm of each facility's share of market residents (see Table 3). Nursing homes in the bottom quartile of quality (high values of the QI measures) saw very little change in market share following the introduction of Nursing Home Compare. The estimates for these low-quality facilities (presented in the top panel of Table 3) are small in magnitude and predominantly insignificant. The ADL loss result is statistically significant at the 10 percent level, but it indicates a (wrong-signed) positive effect of poor quality on resident share. The estimates also suggest that nursing homes in the top quartile of the quality distribution did not experience a substantial change in market share after Nursing Home Compare. These estimates are all positive, but only one is statistically significant at the 5 percent level (pressure ulcers for low-risk residents). Taken together, we cannot conclude that the adoption of the NHQI report card had a meaningful impact on patient demand.

Table 3.

The Impact of Nursing Home Compare on Logarithm of Share of Residents, Conditional on Quality Measure at Baseline

| Quality Indicator | Coefficient | SE | N |
|---|---|---|---|
| Bottom quartile quality (high values of the indicator) | | | |
| Urinary tract infection | −0.0001 | 0.004 | 84,655 |
| Activities of daily living loss | 0.009* | 0.005 | 83,757 |
| Physical restraints | −0.0001 | 0.005 | 84,654 |
| Ulcers, high risk | 0.006 | 0.005 | 83,667 |
| Ulcers, low risk | 0.003 | 0.005 | 83,332 |
| Top quartile quality (low values of the indicator) | | | |
| Urinary tract infection | 0.007 | 0.006 | 84,655 |
| Activities of daily living loss | 0.002 | 0.005 | 83,757 |
| Physical restraints | 0.008 | 0.006 | 84,654 |
| Ulcers, high risk | 0.009 | 0.005 | 83,667 |
| Ulcers, low risk | 0.010** | 0.005 | 83,332 |

Notes. The unit of analysis is the roughly annual OSCAR survey for the period 1999–2004. The coefficient of interest is the interaction of the high/low MDS quality indicator at baseline with an indicator for the Nursing Home Quality Initiative in April or November of 2002 (depending on state). SEs are adjusted for correlation within markets. Analysis includes state-specific trends to control for unobserved demographic changes.

*Significant at the 10% level.

**Significant at the 5% level.

MDS, Minimum Data Set; OSCAR, On-Line Survey, Certification, and Reporting.
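Because the dependent variable in Table 3 is the logarithm of market share, a coefficient β corresponds to a proportional change of exp(β) − 1. The short Python sketch below applies that conversion to two coefficients copied from Table 3. The function name is hypothetical, and the calculation is a standard reading of log-outcome coefficients rather than something reported in the paper.

```python
import math

def pct_change_from_log_coef(beta):
    """Percent change in the outcome implied by a coefficient on a log outcome."""
    return 100.0 * (math.exp(beta) - 1.0)

# Coefficients copied from Table 3 (interaction terms on log market share).
table3_examples = {
    "ADL loss, bottom quartile": 0.009,
    "Ulcers, low risk, top quartile": 0.010,
}

for label, beta in table3_examples.items():
    pct = pct_change_from_log_coef(beta)
    print(f"{label}: {pct:.2f}% relative change in market share")
```

Even the statistically significant estimates imply a relative change in market share of roughly 1 percent, which is consistent with the description of the effects as small in magnitude.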

To account for potential ceiling effects in occupancy at high-quality nursing homes, we reran the analysis including only facilities with below-median occupancy at baseline (see Table 4). The idea is that at lower occupancy facilities, residents can respond to a positive quality report by filling an empty bed. Once again, the ADL loss measure indicates a (wrong-signed) positive effect of poor quality on resident share. However, two quality measures (urinary tract infections and low-risk pressure ulcers) suggest a positive and statistically significant relationship between better quality at baseline and facility market share.

Table 4.

The Impact of Nursing Home Compare on Logarithm of Share of Residents, Conditional on Quality Measure at Baseline: Includes Only Those Facilities with below Median Occupancy at Baseline

| Quality Indicator | Coefficient | SE | N |
|---|---|---|---|
| Bottom quartile quality (high values of the indicator) | | | |
| Urinary tract infection | 0.004 | 0.009 | 34,851 |
| Activities of daily living loss | 0.023** | 0.010 | 34,142 |
| Physical restraints | −0.005 | 0.009 | 34,857 |
| Ulcers, high risk | 0.004 | 0.009 | 34,235 |
| Ulcers, low risk | 0.010 | 0.009 | 33,818 |
| Top quartile quality (low values of the indicator) | | | |
| Urinary tract infection | 0.018* | 0.011 | 34,851 |
| Activities of daily living loss | −0.002 | 0.009 | 34,142 |
| Physical restraints | 0.015 | 0.010 | 34,857 |
| Ulcers, high risk | 0.018 | 0.012 | 34,235 |
| Ulcers, low risk | 0.022** | 0.009 | 33,818 |

Notes. The unit of analysis is the roughly annual OSCAR survey for the period 1999–2004. The coefficient of interest is the interaction of the high/low MDS quality indicator at baseline with an indicator for the Nursing Home Quality Initiative in April or November of 2002 (depending on state). SEs are adjusted for correlation within markets. Analysis includes state-specific trends to control for unobserved demographic changes.

*Significant at the 10% level.

**Significant at the 5% level.

MDS, Minimum Data Set; OSCAR, On-Line Survey, Certification, and Reporting.

We next turn to the analyses of nursing home report cards and quality of care. In the unadjusted trends (see Figure 1), a differential quality effect is not present across the pilot and nonpilot states in the period following the NHQI pilot and national rollout. In the regression results (see Table 5), the model is explicitly identified off this staggered rollout of the NHQI report cards across the pilot and nonpilot states. The estimated coefficients on all of the report card measures are statistically insignificant, and three of the five are even wrong-signed (i.e., positive). That is, we find no statistical evidence here that the adoption of nursing home report cards affects the overall quality of care. The estimated effects are substantively insignificant as well, and given the small standard errors we can rule out meaningful effects of report cards.

Table 5.

The Impact of Nursing Home Compare on Quality Indicators

| Outcome | Coefficient | SE | N |
|---|---|---|---|
| Urinary tract infection | 0.013 | 0.017 | 369,907 |
| Activities of daily living loss | 0.002 | 0.018 | 367,998 |
| Physical restraints | 0.015 | 0.021 | 369,913 |
| Pressure ulcers, high risk | −0.012 | 0.020 | 366,338 |
| Pressure ulcers, low risk | −0.028 | 0.033 | 364,597 |

Notes. The unit of analysis is the facility quarter. The coefficient of interest is a dummy variable for the introduction of the Nursing Home Quality Initiative in April or November of 2002 (depending on state). SEs are adjusted for correlation within facilities.
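One way to see why none of the Table 5 estimates is statistically significant is to form approximate 95 percent confidence intervals as the coefficient plus or minus 1.96 standard errors. The Python sketch below does this with the values copied from Table 5. The normal critical value of 1.96 and the helper name `conf_interval` are assumptions made for illustration, not details taken from the paper.

```python
# Coefficients and standard errors copied from Table 5.
table5 = {
    "Urinary tract infection": (0.013, 0.017),
    "Activities of daily living loss": (0.002, 0.018),
    "Physical restraints": (0.015, 0.021),
    "Pressure ulcers, high risk": (-0.012, 0.020),
    "Pressure ulcers, low risk": (-0.028, 0.033),
}

Z_95 = 1.96  # normal approximation; an assumption, not stated in the paper

def conf_interval(coef, se, z=Z_95):
    """Return the (lower, upper) bounds of an approximate confidence interval."""
    return coef - z * se, coef + z * se

intervals = {name: conf_interval(c, s) for name, (c, s) in table5.items()}

for name, (low, high) in intervals.items():
    covers_zero = low < 0.0 < high
    print(f"{name}: [{low:.3f}, {high:.3f}] covers zero: {covers_zero}")
```

Under this approximation every interval contains zero, and the interval widths give a sense of how large an effect on the logit scale the data could still accommodate.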

We next evaluate the role of competition in mediating the relationship between report cards and nursing home quality. For each quality measure, Table 6 presents the estimated coefficient on the interaction of the HHI measure and the timing of the NHQI implementation within the state. Two of the five estimated coefficients are positive and statistically significant (p<.1). The outcomes are pressure ulcers for high-risk residents and pressure ulcers for low-risk residents. The estimated coefficient for one of the outcomes, ADL loss, is positive but not statistically significant. Finally, the estimated coefficients for the urinary tract infections and physical restraints models are negative but not statistically significant at conventional levels.

Table 6.

The Impact of Nursing Home Compare on Quality Indicators by the Competitiveness of the Local Market

| Outcome | Coefficient | SE | N |
|---|---|---|---|
| Urinary tract infection | −0.040 | 0.029 | 369,907 |
| Activities of daily living loss | 0.036 | 0.024 | 367,998 |
| Physical restraints | −0.022 | 0.051 | 369,913 |
| Pressure ulcers, high risk | 0.062* | 0.034 | 366,338 |
| Pressure ulcers, low risk | 0.217** | 0.048 | 364,597 |

Notes. The unit of analysis is the facility quarter. The primary coefficient of interest is the interaction of the Herfindahl–Hirschman Index (HHI) at baseline with an indicator for the Nursing Home Quality Initiative in April or November of 2002 (depending on state). SEs are adjusted for correlation within facilities. To interpret the magnitude, the average HHI at baseline was 0.22.

*Significant at the 10% level.

**Significant at the 1% level.

Taken together, the estimates presented in Table 6 suggest that the report card efforts under the NHQI did change the incentives for nursing homes to provide quality care. Nursing homes in more competitive markets responded to the Nursing Home Compare information by increasing their quality (for two of the measures) relative to nursing homes with greater market power. The magnitude of impact implied by the coefficients is meaningfully large. A difference in HHI between 0.5 and 0.2—equivalent to a market going from two equally sized facilities to five equally sized facilities—generates changes in the expected values of the outcomes that are 15 percent (pressure ulcers—high risk) and 89 percent (pressure ulcers—low risk) of a standard deviation for those outcomes.
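The HHI arithmetic behind this comparison can be checked directly: with n equally sized facilities each holds a share of 1/n, so the index equals 1/n. The Python sketch below computes the two HHI values and the implied difference in the transformed outcome for the two significant coefficients in Table 6. The function name `hhi` and the facility sizes are hypothetical, and the sketch assumes the outcomes are on the logit scale described in the methods.

```python
def hhi(sizes):
    """Herfindahl-Hirschman Index on a 0-1 scale: sum of squared market shares."""
    total = sum(sizes)
    return sum((s / total) ** 2 for s in sizes)

# Hypothetical markets; only the equality of sizes matters, not the numbers.
hhi_two = hhi([100, 100])    # two equally sized facilities
hhi_five = hhi([100] * 5)    # five equally sized facilities
delta_hhi = hhi_two - hhi_five

# Interaction coefficients (baseline HHI x post-NHQI) copied from Table 6.
coefs = {
    "Pressure ulcers, high risk": 0.062,
    "Pressure ulcers, low risk": 0.217,
}

print(f"HHI, two facilities: {hhi_two:.2f}; five facilities: {hhi_five:.2f}")
for outcome, beta in coefs.items():
    # Difference in the logit-scale outcome associated with the HHI gap.
    print(f"{outcome}: {beta * delta_hhi:.4f} logit units")
```

Expressing these differences as shares of a standard deviation, as the text does, additionally requires the standard deviation of each transformed outcome, which is not reported in this section.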

CONCLUSION

Ultimately, the policy goal of health care report cards is to improve the quality of care. Previous research has found that nursing home report cards generally have a modest effect on nursing home performance. Similarly, we found very little evidence to suggest that the staggered introduction of the NHQI report card measures led to increased patient demand or better long-stay quality. However, we found that nursing homes located in more competitive markets improved their reported quality more than facilities in less competitive markets.

The idea that market-oriented solutions such as report cards work best in more competitive markets is somewhat intuitive. Indeed, Nursing Home Compare was designed with the goal of harnessing “market forces to encourage poorly performing homes to improve quality or face the loss of revenue” (U.S. General Accounting Office 2002, p. 3). In the 1970s and 1980s, the nursing home market was not very competitive due to certificate-of-need regulations, which impeded new entry and created an “excess demand” for services in many local markets (Scanlon 1980). However, occupancy rates have greatly declined over the past two decades due to the emergence of assisted living and the growth of Medicaid home- and community-based services. Our results support more recent studies suggesting “markets matter” in nursing home care (Grabowski 2008).

In spite of the growing role of competition in the nursing home sector, considerable variation still exists in the degree of competition across local markets. If public reporting is less effective for facilities with greater market power, report cards alone may not be sufficient to encourage improved nursing home performance in certain parts of the country. In the absence of other initiatives, report cards may even magnify the disparities in care across facilities (Miller 2006). If only better informed, higher socioeconomic residents use report cards in the more competitive markets, then report cards have the potential to widen the gulf between the “haves” and “have nots” in the nursing home sector. From a policy perspective, report cards may be best utilized as one of several initiatives to encourage improved performance across the full distribution of facilities.

A key feature of this paper relative to some of the previous studies in the literature is our analysis of the long-stay nursing home report card measures. As background, long-stay nursing home care is often paid for by Medicaid, while short-stay care is typically paid by Medicare. Although some specialization exists across facilities, the majority of nursing homes care for both short-stay and long-stay residents. Most of the research documenting poor nursing home quality has been among the long-stay population (Institute of Medicine 2001). To the extent previous research has found an effect of Nursing Home Compare, it has largely been among the short-stay quality measures (e.g., Werner et al. 2009b). Given that Medicare residents are associated with higher profit margins relative to Medicaid residents (Troyer 2002; Medicare Payment Advisory Commission 2005), the greater responsiveness to the short-stay quality report card measures is not surprising.

Recent developments with the Nursing Home Compare website have blurred the distinction between long-stay and short-stay quality measures in the report cards. Since December 2008, the Nursing Home Compare website has reported four new composite quality measures for each nursing home: an overall five-star rating along with specific five-star ratings for inspections (deficiencies), staffing, and the MDS-based QIs. In constructing the MDS-based score, the short-stay and long-stay quality measures are combined. This development has simplified the presentation of information on the website for consumers. Under the previous system, nursing home consumers may not have been able to interpret multiple, often conflicting, quality measures within a facility's report card. However, the shift to the five-star system may mask or distort the heterogeneity of facilities in their reported quality, especially between short-stay and long-stay residents. Importantly, Nursing Home Compare will still allow consumers to link on the website to the specific short-stay and long-stay QIs, but in practice, consumers may choose to focus only on the global five-star ratings. Moving forward, it will be important to determine whether the gains to consumers from simplifying the quality report cards outweigh the potential costs of presenting homogeneous quality information to heterogeneous consumers. Ultimately, the five-star system may have positive implications for long-stay quality due to positive spillovers from facilities' responsiveness to short-stay consumers, but negative implications for short-stay quality due to the lack of responsiveness to long-stay consumers.

Several potential limitations exist with our analysis. First, we acknowledge that the results generated in Tables 3–5 are based on the assumptions that the pilot and nonpilot states were relatively similar and nursing homes in the control group (nonpilot states) were unaffected by the NHQI pilot. Second, it is possible that the NHQI mattered for other types of facilities beyond those in more competitive markets. We did not find large differences across facilities in terms of their share of private-pay residents (see Table SA1) or their occupancy at baseline (see Table 4), but it is possible that other attributes may explain facility responsiveness to nursing home quality. Finally, the media attention around the NHQI makes it somewhat unique relative to the introduction and use of the typical nursing home report card. Thus, the results generated here may be somewhat different relative to other initiatives.

With the recent implementation of the five-star initiative and the evolving nature of competition in the long-term care sector, future work needs to continue to monitor the role of Nursing Home Compare in influencing quality of care. However, this paper suggests quality report cards alone may not improve nursing home performance.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: David Grabowski was supported in part by a National Institute on Aging career development award (no. K01 AG024403).

Disclosures: Prior versions of this paper were presented at the biennial conference of the American Society of Health Economists in Madison, WI, in June 2006; the Annual Meeting of the Gerontological Society of America in National Harbor, MD, in November 2008; and the AcademyHealth Annual Research Meeting in Washington, DC, in June 2008.

Disclaimers: No funding source played a role in the design or conduct of the study, the collection, analysis, or interpretation of data, or the preparation, review, or approval of this manuscript.

NOTES

Technically, the MDS quality information published on the Nursing Home Compare website consists of quality “measures” (QMs) derived as part of a later effort. The QMs and the quality indicators have a number of common quality dimensions (e.g., pressure ulcers, physical restraints). Although they are constructed with slightly different input variables from the MDS, they result in very similar estimates for the specific quality dimensions. Importantly, CMS cannot construct the QMs as part of the facility reports for the pre-Nursing Home Compare period.

In the pilot states, 1,306 (or 52.7 percent) of the 2,476 OSCAR surveys conducted during 2002 were administered during the May–October pilot period.

The main effect of the time invariant HHI indicator is captured by the facility dummies.

SUPPORTING INFORMATION

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.

Table SA1: The Impact of Nursing Home Compare on Quality Indicators, Conditional on Private-Pay Share at Baseline.


REFERENCES

- Acemoglu D, Finkelstein A. “Input and Technology Choices in Regulated Industries: Evidence from the Health Care Sector”. Journal of Political Economy. 2008;116(5):837–80.

- Akerlof GA. “The Market for Lemons: Quality Uncertainty and the Market Mechanism”. Quarterly Journal of Economics. 1970;84(3):488–500.

- Arrow KJ. “Uncertainty and the Welfare Economics of Medical Care”. American Economic Review. 1963;53(5):941–73.

- Castle NG. “Nursing Home Administrators' Opinions of the Nursing Home Compare Web Site”. Gerontologist. 2005;45(3):299–308. doi: 10.1093/geront/45.3.299.

- Chou SY. “Asymmetric Information, Ownership and Quality of Care: An Empirical Analysis of Nursing Homes”. Journal of Health Economics. 2002;21(2):293–311. doi: 10.1016/s0167-6296(01)00123-0.

- Dafny L, Dranove D. Do Report Cards Tell Consumers Anything They Don't Already Know? The Case of Medicare HMOs. Cambridge, MA: National Bureau of Economic Research Inc; 2005. NBER Working Papers: 11420.

- Epstein AM. “Rolling Down the Runway: The Challenges Ahead for Quality Report Cards”. Journal of American Medical Association. 1998;279(21):1691–6. doi: 10.1001/jama.279.21.1691.

- Gaynor M. What Do We Know about Competition and Quality in Health Care Markets? Cambridge, MA: National Bureau of Economic Research Inc; 2006. NBER Working Papers: 12301.

- Grabowski DC. “The Market for Long-Term Care Services”. Inquiry. 2008;45(1):58–74. doi: 10.5034/inquiryjrnl_45.01.58.

- Harrington C, Swan JH, Wellin V, Clemena W, Bedney B, Carillo H. 1998 State Data Book on Long Term Care Program and Market Characteristics. San Francisco, CA: Department of Social and Behavioral Sciences, University of California; 1999.

- Hirth RA. “Consumer Information and Competition between Nonprofit and For-Profit Nursing Homes”. Journal of Health Economics. 1999;18(2):219–40. doi: 10.1016/s0167-6296(98)00035-6.

- Institute of Medicine. Improving the Quality of Long-Term Care. Washington, DC: National Academy Press; 2001.

- Jin GZ, Sorensen AT. “Information and Consumer Choice: The Value of Publicized Health Plan Ratings”. Journal of Health Economics. 2006;25(2):248–75. doi: 10.1016/j.jhealeco.2005.06.002.

- Karon SL, Sainfort F, Zimmerman DR. “Stability of Nursing Home Quality Indicators over Time”. Medical Care. 1999;37(6):570–9. doi: 10.1097/00005650-199906000-00006.

- Kolstad JT. Information and Quality when Motivation is Intrinsic: Evidence from Surgeon Report Cards. Philadelphia, PA: University of Pennsylvania; 2009. Working Paper.

- Konetzka RT, Yi D, Norton EC, Kilpatrick KE. “Effects of Medicare Payment Changes on Nursing Home Staffing and Deficiencies”. Health Services Research. 2004;39(3):463–88. doi: 10.1111/j.1475-6773.2004.00240.x.

- KPMG Consulting. Evaluation of the Nursing Home Quality Initiative Pilot Project: Final Report Submitted to Centers for Medicare & Medicaid Services. McLean, VA: KPMG Consulting; 2003.

- Levin J. “Information and the Market for Lemons”. Rand Journal of Economics. 2001;32(4):657–66.

- Marshall MN, Shekelle PG, Leatherman S, Brook RH. “The Public Release of Performance Data: What Do We Expect to Gain? A Review of the Evidence”. Journal of American Medical Association. 2000;283(14):1866–74. doi: 10.1001/jama.283.14.1866.

- Medicare Payment Advisory Commission. Report to the Congress: Medicare Payment Policy. Washington, DC: Medicare Payment Advisory Commission; 2005.

- Mennemeyer ST, Morrisey MA, Howard LZ. “Death and Reputation: How Consumers Acted upon HCFA Mortality Information”. Inquiry. 1997;34(2):117–28.

- Miller N. Report Cards, Incentives, and Quality Competition in Health Care. Cambridge, MA: 2006. Working Paper.

- Morris JN, Nonemaker S, Murphy K, Hawes C, Fries BE, Mor V, Phillips C. “A Commitment to Change: Revision of HCFA's RAI”. Journal of the American Geriatrics Society. 1997;45(8):1011–6. doi: 10.1111/j.1532-5415.1997.tb02974.x.

- Mukamel DB, Mushlin AI. “The Impact of Quality Report Cards on Choice of Physicians, Hospitals, and HMOs: A Midcourse Evaluation”. Joint Commission Journal on Quality Improvement. 2001;27(1):20–7. doi: 10.1016/s1070-3241(01)27003-5.

- Mukamel DB, Spector WD, Zinn JS, Huang L, Weimer DL, Dozier A. “Nursing Homes' Response to the Nursing Home Compare Report Card”. Journals of Gerontology. Series B, Psychological Sciences and Social Sciences. 2007;62(4):S218–25. doi: 10.1093/geronb/62.4.s218.

- Mukamel DB, Weimer DL, Spector WD, Ladd H, Zinn JS. “Publication of Quality Report Cards and Trends in Reported Quality Measures in Nursing Homes”. Health Services Research. 2008;43(4):1244–62. doi: 10.1111/j.1475-6773.2007.00829.x.

- Office of Inspector General. Inspection Results on Nursing Home Compare: Completeness and Accuracy. Washington, DC: Office of the Inspector General; 2004.

- Rollow W. 2002. “Improving Quality of Care for Medicare Beneficiaries: 7th SOW and the Future of QI” [accessed on May 25, 2006]. Available at http://www.lhcr.org/html/providers/Presentations/Rollow.ppt.

- Scanlon DP, Swaminathan S, Lee W, Chernew M. “Does Competition Improve Health Care Quality?”. Health Services Research. 2008;43(6):1931–51. doi: 10.1111/j.1475-6773.2008.00899.x.

- Scanlon WJ. “A Theory of the Nursing Home Market”. Inquiry. 1980;17(1):25–41.

- Schauffler HH, Mordavsky JK. “Consumer Reports in Health Care: Do They Make a Difference?”. Annual Review of Public Health. 2001;22:69–89. doi: 10.1146/annurev.publhealth.22.1.69.

- Stevenson DG. “Is a Public Reporting Approach Appropriate for Nursing Home Care?”. Journal of Health Politics, Policy and Law. 2006;31(4):773–810. doi: 10.1215/03616878-2006-003.

- Strahan GW. An Overview of Nursing Homes and Their Current Residents: Data From the 1995 National Nursing Home Survey. Rockville, MD: National Center for Health Statistics, Centers for Disease Control and Prevention, U.S. Department of Health and Human Services; 1997.

- Troyer JL. “Cross-Subsidization in Nursing Homes: Explaining Rate Differentials among Payer Types”. Southern Economic Journal. 2002;68(4):750–73.

- U.S. General Accounting Office. Nursing Homes: Public Reporting of Quality Indicators Has Merit, But National Implementation Is Premature. Washington, DC: U.S. General Accounting Office; 2002.

- Werner RM, Asch DA. “The Unintended Consequences of Publicly Reporting Quality Information”. Journal of American Medical Association. 2005;293(10):1239–44. doi: 10.1001/jama.293.10.1239.

- Werner RM, Konetzka RT, Kruse GB. “Impact of Public Reporting on Unreported Quality of Care”. Health Services Research. 2009a;44(2, part 1):379–98. doi: 10.1111/j.1475-6773.2008.00915.x.

- Werner RM, Konetzka RT, Stuart EA, Norton EC, Polsky D, Park J. “Impact of Public Reporting on Quality of Postacute Care”. Health Services Research. 2009b;44(4):1169–87. doi: 10.1111/j.1475-6773.2009.00967.x.

- Zimmerman DR, Karon SL, Arling G, Clark BR, Collins T, Ross R, Sainfort F. “Development and Testing of Nursing Home Quality Indicators”. Health Care Financing Review. 1995;16(4):107–27.

- Zinn J, Spector W, Hsieh L, Mukamel DB. “Do Trends in the Reporting of Quality Measures on the Nursing Home Compare Web Site Differ by Nursing Home Characteristics?”. Gerontologist. 2005;45(6):720–30. doi: 10.1093/geront/45.6.720.
