Abstract

To better understand the reward circuitry in the human brain, we conducted activation likelihood estimation (ALE) and parametric voxel-based meta-analyses (PVM) on 142 neuroimaging studies that examined brain activation in reward-related tasks in healthy adults. We identified several core brain areas involved in reward-related decision making, including the nucleus accumbens (NAcc), caudate, putamen, thalamus, orbitofrontal cortex (OFC), bilateral anterior insula, anterior (ACC) and posterior (PCC) cingulate cortex, as well as cognitive control regions in the inferior parietal lobule and prefrontal cortex (PFC). The NAcc was commonly activated by both positive and negative rewards across various stages of reward processing (e.g., anticipation, outcome, and evaluation). In addition, the medial OFC and PCC preferentially responded to positive rewards, whereas the ACC, bilateral anterior insula, and lateral PFC selectively responded to negative rewards. Reward anticipation activated the ACC, bilateral anterior insula, and brain stem, whereas reward outcome elicited significantly greater activation in the NAcc, medial OFC, and amygdala. Neurobiological theories of reward-related decision making should therefore take into account distributed and interrelated representations of reward valuation and valence assessment.

Keywords: meta-analysis, reward, nucleus accumbens, orbitofrontal cortex, anterior cingulate cortex, anterior insula

1. Introduction

People face countless reward-related decision making opportunities every day. Our physical, mental, and socioeconomic well-being critically depends on the consequences of the choices we make. It is thus crucial to understand what underlies the normal functioning of reward-related decision making. Studying this normal functioning also helps us better understand the behavioral and mental disorders that arise when it is disrupted, such as depression (Drevets, 2001), substance abuse (Bechara, 2005; Garavan and Stout, 2005; Volkow et al., 2003), and eating disorders (Kringelbach et al., 2003; Volkow and Wise, 2005).

Functional neuroimaging research on reward has become a rapidly growing field, with dozens of relevant articles appearing in the PubMed database every month. On the one hand, this is exciting because the mounting results are paramount to formalizing behavioral and neural mechanisms of reward-related decision making (Fellows, 2004; Trepel et al., 2005). On the other hand, the heterogeneity of the results, together with occasionally opposing patterns, makes it difficult to obtain a clear picture of the reward circuitry in the human brain. This mixture of results partly reflects the diverse experimental paradigms developed by various research groups to address different aspects of reward-related decision making, such as the distinction between reward anticipation and outcome (Breiter et al., 2001; Knutson et al., 2001b; McClure et al., 2003; Rogers et al., 2004), valuation of positive and negative rewards (Liu et al., 2007; Nieuwenhuis et al., 2005; O’Doherty et al., 2003a; O’Doherty et al., 2001; Ullsperger and von Cramon, 2003), and assessment of risk (Bach et al., 2009; d’Acremont and Bossaerts, 2008; Hsu et al., 2009; Huettel, 2006).

Therefore, it is crucial to pool existing studies and examine the core reward networks in the human brain, using both data-driven and theory-driven approaches to test what is common to, and what distinguishes, different aspects of reward-related decision making. To achieve this goal, we employed and compared two coordinate-based meta-analysis (CBMA) methods (Salimi-Khorshidi et al., 2009), activation likelihood estimation (ALE) (Laird et al., 2005; Turkeltaub et al., 2002) and parametric voxel-based meta-analysis (PVM) (Costafreda et al., 2009), to reveal the concordance across a large number of neuroimaging studies on reward-related decision making. We anticipated that the ventral striatum and orbitofrontal cortex (OFC), two major dopaminergic projection areas associated with reward processing, would be consistently activated.

In addition, from a theory-driven perspective, we aimed to determine whether distinct brain networks are responsible for processing positive and negative reward information, and whether distinct networks are preferentially involved in different stages of reward processing, such as reward anticipation, outcome monitoring, and decision evaluation. Decision making involves encoding and representing the alternative options and comparing the values or utilities associated with them. Across these processes, decision making is usually associated with positive or negative valence, arising either from the outcomes or from emotional responses toward the choices made. Positive reward valence refers to the positive subjective states we experience (e.g., happiness or satisfaction) when the outcome is positive (e.g., winning a lottery) or better than we anticipate (e.g., losing less value than projected). Negative reward valence refers to the negative feelings we experience (e.g., frustration or regret) when the outcome is negative (e.g., losing a gamble) or worse than we expect (e.g., a stock's value rising less than projected). Although previous studies have attempted to distinguish reward networks that are sensitive to positive or negative information (Kringelbach, 2005; Liu et al., 2007), as well as those involved in reward anticipation or outcome (Knutson et al., 2003; Ramnani et al., 2004), empirical results have been mixed. We aimed to extract consistent patterns by pooling over a large number of studies examining these distinctions.

2. Methods

2.1 Literature search and organization

2.1.1 Study identification

Two independent researchers conducted a thorough search of the literature for fMRI studies examining reward-based decision making in humans. The online citation index PubMed was searched (through June 2009) with the terms “fMRI”, “reward”, and “decision” by the first researcher, and with “reward decision making task”, “fMRI”, and “human” by the second researcher. These initial search results were merged to yield a total of 182 articles. Another 90 articles were identified from a third researcher's reference database, accumulated through June 2009, using “reward” and “MRI” as filtering criteria. We also searched the BrainMap database using Sleuth, with “reward task” and “fMRI” as search terms, and found 59 articles. All of these articles were pooled into a single database, and redundant entries were eliminated. We then applied several exclusion criteria to eliminate articles not directly relevant to the current study: 1) studies not reporting original empirical data (e.g., review articles); 2) studies that did not report results in a standard stereotactic coordinate space (either Talairach or Montreal Neurological Institute, MNI); 3) studies using tasks unrelated to reward or value-based decision making; 4) studies of structural brain analyses (e.g., voxel-based morphometry or diffusion tensor imaging); 5) studies based purely on region of interest (ROI) analysis (e.g., using anatomical masks or coordinates from other studies); and 6) studies of special populations whose brain function may deviate from that of healthy adults (e.g., children, aging adults, or substance-dependent individuals), although coordinates reported in these studies for a healthy adult group alone were included. Variability in how subjects reported decisions during the tasks (e.g., verbally or by nonverbal button press) was accepted. This resulted in 142 articles in the final database (listed in the Appendix).
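The pooling and screening steps above amount to a deduplicating union of the search results followed by a filter. The sketch below illustrates this under stated assumptions: each article is a hypothetical `Article` record keyed by PubMed ID, and the six exclusion criteria are represented as hypothetical boolean fields. In the study itself these judgments were made manually by the researchers, so the code only shows the logic, not an actual screening tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    """Hypothetical screening record; field names are illustrative only."""
    pmid: str
    title: str
    is_review: bool = False             # criterion 1: no original data
    reports_coordinates: bool = True    # criterion 2: Talairach or MNI peaks
    reward_task: bool = True            # criterion 3: reward/value-based task
    structural_only: bool = False       # criterion 4: e.g., VBM or DTI
    roi_only: bool = False              # criterion 5: purely ROI-based
    healthy_adults: bool = True         # criterion 6: (separable) healthy adults


def merge_sources(*sources):
    """Pool several search results, keeping the first copy of each PMID."""
    pooled = {}
    for source in sources:
        for article in source:
            pooled.setdefault(article.pmid, article)
    return list(pooled.values())


def passes_exclusion(article):
    """True if the article survives all six exclusion criteria."""
    return (not article.is_review
            and article.reports_coordinates
            and article.reward_task
            and not article.structural_only
            and not article.roi_only
            and article.healthy_adults)


def screen(*sources):
    return [a for a in merge_sources(*sources) if passes_exclusion(a)]


# Toy usage with made-up records standing in for the three searches.
pubmed_a = [Article("1", "Study A"), Article("2", "Review B", is_review=True)]
pubmed_b = [Article("1", "Study A"), Article("3", "ROI study C", roi_only=True)]
brainmap = [Article("4", "Study D")]
print([a.pmid for a in screen(pubmed_a, pubmed_b, brainmap)])  # ['1', '4']
```

Keying on PubMed ID is an assumption made for the example; records without a shared identifier would need a fuzzier match (e.g., on normalized titles).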

During the data extraction stage, studies were grouped by spatial normalization scheme, following the coordinate transformations implemented in the GingerALE toolbox (http://brainmap.org, Research Imaging Center of the University of Texas Health Science Center, San Antonio, Texas): MNI coordinates reported using FSL, MNI coordinates reported using SPM, MNI coordinates reported using other programs, MNI coordinates converted into Talairach space using the Brett method, and coordinates normalized to a native Talairach template. Coordinate lists in Talairach space were converted into MNI space according to their original normalization schemes. For the Brett-Talairach list, we converted the coordinates back into MNI space using Brett's reverse transformation (i.e., tal2mni) (Brett et al., 2002). For the native Talairach list, we used BrainMap's Talairach-to-MNI transformation (i.e., tal2icbm_other). A master list of all studies was created by combining all coordinates in MNI space in preparation for the ALE meta-analyses in GingerALE.
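The Brett conversion is a piecewise affine transform, which makes it easy to sketch. The NumPy code below assumes the matrices of Brett's widely circulated mni2tal/tal2mni MATLAB scripts (zooms of 0.99, 0.97 and 0.92 above the anterior commissure, or 0.84 below it, followed by a 0.05 rad pitch about the x axis). It is not GingerALE's own implementation, and BrainMap's tal2icbm_other transform is not reproduced here.

```python
import numpy as np


def brett_affine(z_zoom):
    """4x4 affine of Brett's mni2tal: zooms first, then a 0.05 rad pitch about x.

    Intended to match SPM's spm_matrix([0 0 0 0.05 0 0 0.99 0.97 z_zoom]).
    """
    c, s = np.cos(0.05), np.sin(0.05)
    pitch = np.array([[1.0, 0.0, 0.0, 0.0],
                      [0.0, c, s, 0.0],
                      [0.0, -s, c, 0.0],
                      [0.0, 0.0, 0.0, 1.0]])
    zoom = np.diag([0.99, 0.97, z_zoom, 1.0])
    return pitch @ zoom


UPPER = brett_affine(0.92)  # points at or above the AC plane (z >= 0)
LOWER = brett_affine(0.84)  # points below the AC plane (z < 0)


def _apply(points, upper, lower):
    """Apply `upper` or `lower` to each (x, y, z) row depending on the sign of z."""
    pts = np.atleast_2d(np.asarray(points, dtype=float))
    homog = np.column_stack([pts, np.ones(len(pts))])
    below = pts[:, 2] < 0
    out = np.empty_like(homog)
    out[~below] = homog[~below] @ upper.T
    out[below] = homog[below] @ lower.T
    return out[:, :3]


def mni2tal(points):
    return _apply(points, UPPER, LOWER)


def tal2mni(points):
    # The reverse transform chooses the branch from the sign of the Talairach z.
    return _apply(points, np.linalg.inv(UPPER), np.linalg.inv(LOWER))


tal = mni2tal([[12, 10, -6], [40, 32, 32]])
print(np.round(tal2mni(tal), 6))  # recovers the original MNI coordinates
```

Because the branch is chosen by the sign of z in the input space, a round trip is exact except for points lying very close to the AC plane, where the two spaces can disagree on the sign.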

2.1.2 Experiment categorization

To test hypotheses regarding the common and distinct reward pathways recruited by different aspects of reward-related decision making, we categorized coordinates according to two classifications: reward valence and decision stage. We adopted the term “experiments”, as used by the BrainMap database, to refer to individual regressors or contrasts typically reported in fMRI studies. For reward valence, we organized the experiments into positive and negative rewards. For decision stage, we separated the experiments into reward anticipation, outcome, and evaluation. Coordinates in the master list that fit these categories were put into sub-lists; those that were difficult to interpret or not clearly defined were omitted. Below we list some examples assigned to each category.

The following contrasts were classified as processing of positive rewards: those in which subjects won money or points (Elliott et al., 2000)(reward during run of success); avoided losing money or points (Kim et al., 2006)(direct comparison between avoidance of an averse outcome and reward receipt); won the larger of two sums of money or points (Knutson et al., 2001a)(large vs. small reward anticipation); lost the smaller of two sums of money or points (Ernst et al., 2005)(no-win $0.50 > no-win $4); received encouraging words or graphics on the screen (Zalla et al., 2000)(increase for “win”); received a sweet taste in their mouths (O’Doherty et al., 2002)(glucose > neutral taste); positively evaluated the choice (Liu et al., 2007)(right > wrong); or received any other type of positive reward as a result of successful completion of the task.

Experiments classified as negative rewards included those in which subjects lost money or points (Elliott et al., 2000)(penalty during run of failure); did not win money or points (Ernst et al., 2005)(dissatisfaction of no-win); won the smaller of two sums of money or points (Knutson et al., 2001a)($1 vs. $50 reward); lost the larger of two sums of money or points (Knutson et al., 2001a)(large vs. small punishment anticipation); negatively evaluated the choice (Liu et al., 2007)(wrong > right); or received any other negative reward, such as an aversive (salty) taste in their mouths (O’Doherty et al., 2002)(salt > neutral taste) or discouraging words or images (Zalla et al., 2000)(increase for “lose” and decrease for “win”).

Reward anticipation was defined as the time period when the subject was pondering potential options before making a decision. For example, placing a bet and expecting to win money on that bet would be classified as anticipation (Cohen and Ranganath, 2005)(high-risk vs. low-risk decision). Reward outcome/delivery was classified as the period when the subject received feedback on the chosen option, such as a screen with the words “win x$” or “lose x$” (Bjork et al., 2004)(gain vs. non-gain outcome). When the feedback influenced the subject’s decision and behavior in a subsequent trial or was used as a learning signal, the contrast was classified as reward evaluation. For example, a risky decision that is rewarded in the initial trial may lead a subject to take another, perhaps bigger, risk in the next trial (Cohen and Ranganath, 2005)(low-risk rewards followed by high-risk vs. low-risk decisions). Loss aversion, the tendency for people to strongly prefer avoiding losses to acquiring gains, is another example of evaluation (Tom et al., 2007)(relation between lambda and neural loss aversion).

2.2 Activation likelihood estimation (ALE)

The ALE algorithm used here follows Eickhoff et al. (2009). ALE models each activation focus as a 3D Gaussian probability distribution centered at the reported coordinates, and then calculates the overlap of these distributions across different experiments (ALE treats each contrast in a study as a separate experiment). The spatial uncertainty associated with each focus is estimated from the number of subjects in the study: a larger sample produces more reliable activation patterns and localization, so its coordinates are convolved with a tighter Gaussian kernel. The convergence of activation patterns across experiments is calculated by taking the union of these modeled activation maps. A null distribution representing ALE scores generated by random spatial overlap across studies is estimated through a permutation procedure. Finally, the ALE map computed from the actual activation coordinates is tested against this null distribution, producing a statistical map of p values for the ALE scores. The nonparametric p values are then transformed into z scores and thresholded at a corrected p<0.05 together with a minimum cluster size (Section 2.2.1).
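The modeled-activation and union steps can be illustrated on a toy grid. This is a minimal sketch under several assumptions, not GingerALE's code: the kernel constants (5.7 mm and 11.6 mm) are assumed values for a sample-size-dependent FWHM of the form described by Eickhoff et al. (2009); the grid is a small cube without a gray-matter mask; and foci within one experiment are combined by a probabilistic union (later GingerALE releases use the voxelwise maximum instead).

```python
import numpy as np


def kernel_fwhm(n_subjects, template_fwhm=5.7, subject_fwhm=11.6):
    """Assumed sample-size-dependent FWHM (mm): larger samples get tighter kernels."""
    return np.sqrt(template_fwhm**2 + subject_fwhm**2 / n_subjects)


def modeled_activation(foci, n_subjects, grid, voxel_size=2.0):
    """Modeled activation (MA) map of one experiment: union over its foci."""
    sigma = kernel_fwhm(n_subjects) / np.sqrt(8 * np.log(2))
    scale = voxel_size**3 / ((2 * np.pi) ** 1.5 * sigma**3)
    ma = np.zeros(grid.shape[:-1])
    for focus in np.asarray(foci, dtype=float):
        d2 = np.sum((grid - focus) ** 2, axis=-1)
        # Gaussian density x voxel volume ~ probability the focus lies in the voxel
        p = scale * np.exp(-d2 / (2 * sigma**2))
        ma = 1 - (1 - ma) * (1 - p)
    return ma


def ale_map(experiments, grid):
    """ALE score = probabilistic union of the MA maps of all experiments."""
    ale = np.zeros(grid.shape[:-1])
    for foci, n_subjects in experiments:
        ale = 1 - (1 - ale) * (1 - modeled_activation(foci, n_subjects, grid))
    return ale


# Toy 2-mm grid (no gray-matter mask) and two made-up experiments.
coords = np.arange(-20, 21, 2)
grid = np.stack(np.meshgrid(coords, coords, coords, indexing="ij"), axis=-1).astype(float)
experiments = [([[12, 10, -6]], 20), ([[12, 10, -6], [-10, 8, -4]], 15)]
ale = ale_map(experiments, grid)
peak = grid[np.unravel_index(ale.argmax(), ale.shape)]
print(peak)  # the focus shared by both experiments
```

The union 1 − ∏(1 − MA) rewards convergence: a voxel near foci from several experiments scores higher than one near a single experiment's focus, which is exactly the property the permutation null is designed to test.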

Six different ALE analyses were conducted using GingerALE 2.0 (Eickhoff et al., 2009), one for the main analysis of all studies, and one for each of the five sub-lists characterizing brain activation by positive or negative rewards as well as anticipation, outcome, and evaluation. Two subtraction ALE analyses were conducted using GingerALE 1.2 (Turkeltaub et al., 2002), one for the contrast between positive and negative rewards, and the other for the contrast between anticipation and outcome.

2.2.1 Main analysis of all studies

All 142 studies were included in the main analysis, which comprised 5214 foci from 655 experiments (contrasts). We used the algorithm implemented in GingerALE 2.0, which models each focus according to its spatial uncertainty, estimated from inter-subject and inter-experiment variability. The estimation was constrained to a gray matter mask, and above-chance clustering was assessed with experiments as a random-effects factor rather than with a fixed-effects analysis of foci (Eickhoff et al., 2009). The resulting ALE map was thresholded using the false discovery rate (FDR) method at p<0.05 with a minimum cluster size of 60 voxels of 2×2×2 mm (a total of 480 mm³) to protect against false positives arising from multiple comparisons.
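The two-part threshold (voxelwise FDR, then a cluster-extent criterion) can be sketched as follows. This assumes the standard Benjamini-Hochberg procedure and face-connected clusters (the default of `scipy.ndimage.label`); the FDR variant and cluster connectivity used internally by GingerALE may differ. The p-to-z conversion mentioned in Section 2.2 is included as a one-line helper.

```python
import numpy as np
from scipy import ndimage, stats


def p_to_z(p):
    """Convert one-sided p values to z scores."""
    return stats.norm.isf(p)


def fdr_cutoff(p_values, q=0.05):
    """Benjamini-Hochberg cutoff: largest sorted p(i) with p(i) <= q * i / m."""
    p = np.sort(np.ravel(p_values))
    m = p.size
    passed = p <= q * np.arange(1, m + 1) / m
    return p[passed].max() if passed.any() else None


def threshold_map(p_map, q=0.05, min_cluster=60):
    """Voxels that survive FDR and lie in clusters of >= min_cluster voxels.

    With 2 x 2 x 2 mm voxels, 60 voxels correspond to 60 * 8 = 480 mm^3.
    """
    cutoff = fdr_cutoff(p_map, q)
    if cutoff is None:
        return np.zeros(p_map.shape, dtype=bool)
    labels, _ = ndimage.label(p_map <= cutoff)  # face-connected clusters
    sizes = np.bincount(labels.ravel())
    keep = sizes >= min_cluster
    keep[0] = False  # label 0 is the sub-threshold background
    return keep[labels]


# Toy map: a 64-voxel and an 8-voxel blob of tiny p values in a null background.
p_map = np.ones((10, 10, 10))
p_map[0:4, 0:4, 0:4] = 1e-6
p_map[7:9, 7:9, 7:9] = 1e-6
mask = threshold_map(p_map)
print(mask.sum())  # 64: the small blob fails the cluster-size criterion
```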

2.2.2 Individual analyses of sub-lists

Five further ALE analyses were conducted on the sub-lists that categorize experiments into positive and negative rewards, as well as reward anticipation, reward delivery (outcome), and choice evaluation. The positive reward analysis included 2167 foci from 283 experiments, and the negative reward analysis included 935 foci from 140 experiments. The anticipation, outcome, and choice evaluation analyses included 1553 foci (185 experiments), 1977 foci (253 experiments), and 520 foci (97 experiments), respectively. We applied the same analysis and thresholding approaches as in the main analysis.

2.2.3 Subtraction analyses

We were also interested in identifying brain areas that were selectively or preferentially activated by positive versus negative rewards, and by reward anticipation versus reward delivery. GingerALE 1.2 was used to conduct these two analyses. ALE maps were smoothed with a Gaussian kernel with a FWHM of 10 mm. A permutation test with 10000 simulations of randomly distributed foci was run to determine the statistical significance of the ALE maps. To correct for multiple comparisons, the resulting ALE maps were thresholded using the FDR method with p<0.05 and a minimum cluster size of 60 voxels.
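The idea behind the subtraction test (compare the observed ALE difference with differences obtained when the same numbers of foci are scattered at random) can be sketched on a toy grid. This is a simplified illustration, not GingerALE 1.2's implementation: it assumes a fixed 10 mm FWHM kernel with all foci pooled by union, random foci drawn uniformly from voxel centres, and a voxelwise one-sided test of A > B; the exact null construction in GingerALE 1.2 may differ.

```python
import numpy as np


def fixed_kernel_ale(foci, grid, fwhm=10.0, voxel_size=2.0):
    """ALE with one fixed Gaussian kernel: union over all foci."""
    sigma = fwhm / np.sqrt(8 * np.log(2))
    scale = voxel_size**3 / ((2 * np.pi) ** 1.5 * sigma**3)
    not_active = np.ones(grid.shape[:-1])
    for focus in np.asarray(foci, dtype=float):
        d2 = np.sum((grid - focus) ** 2, axis=-1)
        not_active *= 1 - scale * np.exp(-d2 / (2 * sigma**2))
    return 1 - not_active


def subtraction_test(foci_a, foci_b, grid, n_perm=1000, seed=0):
    """One-sided voxelwise test of ALE(A) - ALE(B) against randomly placed foci."""
    rng = np.random.default_rng(seed)
    observed = fixed_kernel_ale(foci_a, grid) - fixed_kernel_ale(foci_b, grid)
    voxels = grid.reshape(-1, 3)  # random foci are drawn from voxel centres
    exceed = np.zeros(observed.shape)
    for _ in range(n_perm):
        rand_a = voxels[rng.integers(len(voxels), size=len(foci_a))]
        rand_b = voxels[rng.integers(len(voxels), size=len(foci_b))]
        null = fixed_kernel_ale(rand_a, grid) - fixed_kernel_ale(rand_b, grid)
        exceed += null >= observed
    return observed, (exceed + 1) / (n_perm + 1)


coords = np.arange(-10, 11, 2)
grid = np.stack(np.meshgrid(coords, coords, coords, indexing="ij"), axis=-1).astype(float)
foci_pos = [[0, 0, 0]] * 5          # hypothetical "positive" foci
foci_neg = [[8, 8, 8]]              # hypothetical "negative" focus
diff, p = subtraction_test(foci_pos, foci_neg, grid, n_perm=200)
print(p[5, 5, 5])  # small: the central difference is rarely matched by chance
```

The reverse contrast (B > A) is obtained by swapping the two foci lists; the resulting p maps would then go through the same FDR and cluster-size thresholding as the other analyses.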

2.3 Parametric voxel-based meta-analysis (PVM)

We also analyzed the same coordinate lists using a second meta-analysis approach, PVM. Whereas ALE treats different contrasts within a study as distinct experiments, PVM pools the peaks from all contrasts within a study and creates a single coordinate map for that study (Costafreda et al., 2009). The random-effects factor in PVM is therefore the study, rather than the individual experiment/contrast as in ALE. This further reduces estimation bias caused by studies with multiple contrasts that report similar activation patterns. As with ALE, we conducted six PVM analyses, one for the main analysis of all studies and one for each of the five sub-lists characterizing brain activation by different aspects of reward processing, using R code (R statistical software, http://www.R-project.org) from a previous study (Costafreda et al., 2009). Two additional PVM analyses were conducted with the same code base to compare positive with negative rewards and reward anticipation with outcome.
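The difference in the unit of analysis between ALE and PVM comes down to how peaks are grouped before modeling. The sketch below uses a hypothetical peak table (the study and contrast labels are made up) to show that one study with two contrasts counts as two experiments in an ALE-style grouping but as a single unit in a PVM-style grouping.

```python
from collections import defaultdict

# Hypothetical peak table: (study label, contrast label, x, y, z) in MNI mm.
rows = [
    ("Study1", "win > lose", 12, 10, -6),
    ("Study1", "win > neutral", 14, 10, -8),
    ("Study1", "win > neutral", 0, 54, -8),
    ("Study2", "gain anticipation", 12, 8, -4),
]


def group_peaks(rows, unit):
    """Group peaks per experiment (ALE-style) or per study (PVM-style)."""
    if unit not in ("experiment", "study"):
        raise ValueError("unit must be 'experiment' or 'study'")
    groups = defaultdict(list)
    for study, contrast, x, y, z in rows:
        key = (study, contrast) if unit == "experiment" else study
        groups[key].append((x, y, z))
    return dict(groups)


by_experiment = group_peaks(rows, "experiment")  # 3 units
by_study = group_peaks(rows, "study")            # 2 units
print(len(by_experiment), len(by_study))         # 3 2
```

Here Study1's two nearby striatal peaks from different contrasts would contribute twice to convergence under experiment-level grouping, but only once under study-level grouping.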

2.3.1 Main analysis of all studies

The 5214 MNI coordinates from the same 142 studies used in the ALE analysis were written into a text table, with each study identified by a unique label. Computations on the peak map were constrained within a mask in MNI space. The peak map was first smoothed with a uniform kernel (ρ = 10 mm) to generate the summary map, which represents the number of studies reporting an activation peak within a 10 mm radius of each voxel. Next, a random-effects PVM analysis was run to estimate the statistical significance associated with each voxel in the summary map. The number of studies in the summary map was converted into the proportion of studies that reported concordant activation. We used the same threshold as in the ALE analysis to identify significant clusters in the proportion map (FDR p<0.05 and a minimum cluster size of 60 voxels).
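The summary and proportion maps described above can be sketched directly: a uniform kernel of radius 10 mm marks whether a study has any peak near a voxel, and the per-voxel count is divided by the number of studies. This Python sketch only covers that counting step, under the assumption of a toy cubic grid; it does not reproduce the R code or the random-effects significance test of Costafreda et al. (2009).

```python
import numpy as np


def pvm_proportion_map(study_peaks, grid, radius=10.0):
    """Proportion of studies with at least one peak within `radius` mm of each voxel.

    study_peaks: dict of study label -> sequence of (x, y, z) MNI peaks.
    grid: array of shape (..., 3) holding voxel-centre coordinates in mm.
    """
    count = np.zeros(grid.shape[:-1], dtype=int)
    for peaks in study_peaks.values():
        peaks = np.asarray(peaks, dtype=float)
        # distances from every voxel to every peak of this study: shape (..., k)
        dist = np.linalg.norm(grid[..., None, :] - peaks, axis=-1)
        # uniform kernel: a study counts once, however many of its peaks are nearby
        count += (dist <= radius).any(axis=-1)
    return count / len(study_peaks)


coords = np.arange(-20, 21, 2)
grid = np.stack(np.meshgrid(coords, coords, coords, indexing="ij"), axis=-1).astype(float)
studies = {
    "A": [[12, 10, -6], [14, 10, -8]],  # two nearby peaks from one study
    "B": [[12, 8, -4]],
    "C": [[-40, 0, 0]],                 # far from every voxel of this toy grid
}
prop = pvm_proportion_map(studies, grid)
print(prop[16, 15, 7])  # voxel (12, 10, -6): 2 of 3 studies
```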

2.3.2 Individual analyses of sub-lists

Five further PVM analyses were conducted on the sub-lists for positive and negative rewards, as well as reward anticipation, outcome, and evaluation. The positive reward analysis included 2167 foci from 111 studies, whereas the negative reward analysis included 935 foci from 67 studies. The anticipation, outcome, and choice evaluation analyses included 1553 foci (65 studies), 1977 foci (86 studies), and 520 foci (39 studies), respectively. We applied the same analysis and thresholding approaches as in the main analysis.

2.3.3 Comparison analyses

We also conducted two PVM analyses to compare activation patterns between positive and negative rewards and between reward anticipation and outcome. The two peak maps in each comparison (e.g., one for positive and one for negative rewards) were first smoothed with a uniform kernel (ρ = 10 mm) to generate summary maps, each representing the number of studies with an activation peak within a 10 mm radius. The two summary maps were then entered into Fisher's exact test to estimate the odds ratio and its p value at each voxel within the MNI mask. Because Fisher's exact test was not developed specifically for fMRI data and is empirically less sensitive than the other methods, we applied a relatively lenient threshold for the direct-comparison PVM analyses: an uncorrected p<0.01 combined with a minimum cluster size of 60 voxels (Xiong et al., 1995) to control Type I error across multiple comparisons.
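The voxelwise comparison amounts to one 2×2 contingency table per voxel: studies with versus without a nearby peak, in set A versus set B. The sketch below assumes the summary maps are already available as integer count arrays and uses SciPy's `fisher_exact` (two-sided by default); it is an illustration in Python, not the R code actually used, and the subsequent p<0.01 and 60-voxel cluster threshold is not repeated here.

```python
import numpy as np
from scipy.stats import fisher_exact


def compare_summary_maps(count_a, n_a, count_b, n_b):
    """Voxelwise Fisher's exact test on study counts from two summary maps.

    count_a, count_b: studies in each set with a peak within 10 mm of the voxel.
    n_a, n_b: total numbers of studies in each set.
    Returns (odds ratio map, two-sided p value map); untouched voxels get NaN / 1.
    """
    odds = np.full(count_a.shape, np.nan)
    pvals = np.ones(count_a.shape)
    for idx in np.ndindex(count_a.shape):
        a, b = int(count_a[idx]), int(count_b[idx])
        if a == 0 and b == 0:
            continue  # no study from either set contributes to this voxel
        table = [[a, n_a - a], [b, n_b - b]]
        odds[idx], pvals[idx] = fisher_exact(table)
    return odds, pvals


# Toy 2 x 2 "maps" for two sets of 10 studies each.
count_pos = np.array([[9, 1], [0, 5]])
count_neg = np.array([[1, 1], [0, 5]])
odds, p = compare_summary_maps(count_pos, 10, count_neg, 10)
print(odds[0, 0], p[0, 0] < 0.01)  # 81.0 True
```

SciPy reports the sample odds ratio (a·d)/(b·c), which here is (9·9)/(1·1) = 81; an odds ratio above 1 indicates concordance that is more frequent in the first set of studies.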

3. Results

3.1 ALE results

The all-inclusive analysis of 142 studies showed significant activation of a large cluster that encompassed the bilateral nucleus accumbens (NAcc), pallidum, anterior insula, lateral/medial OFC, anterior cingulate cortex (ACC), supplementary motor area (SMA), lateral prefrontal cortex (PFC), right amygdala, left hippocampus, thalamus, and brain stem (Figure 1A). Other smaller clusters included the right middle frontal gyrus and left middle/inferior frontal gyrus, bilateral inferior/superior parietal lobule, and posterior cingulate cortex (PCC) (Table 1).

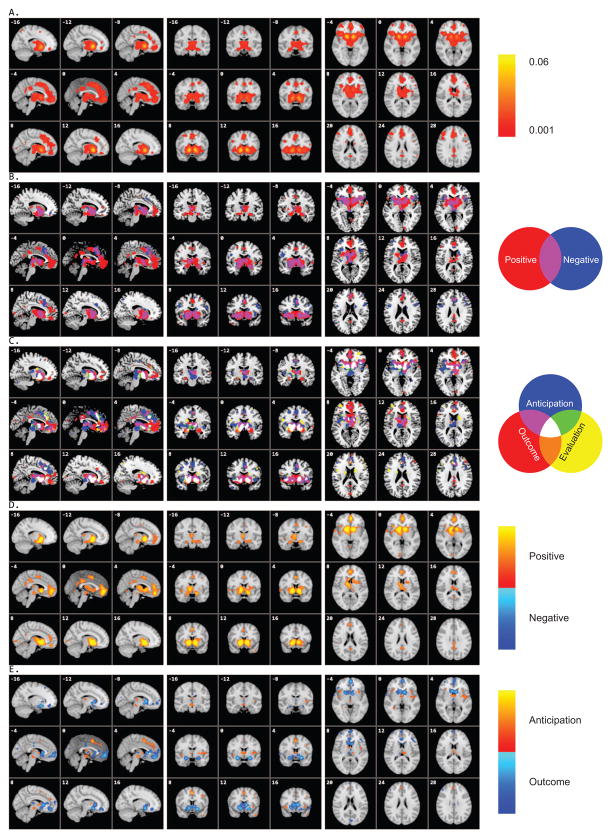

Figure 1.

Concordance of brain activation from the ALE analyses. A. Core network activated by all contrasts/experiments. B. Overlay of brain areas separately involved in positive versus negative reward processing. C. Overlay of brain areas individually activated by the different reward processing stages (anticipation, outcome, and evaluation). D. Direct contrast of brain activation between positive and negative reward processing. E. Direct contrast of brain activation between reward anticipation and outcome.

Table 1.

Brain areas commonly activated by all studies from the ALE analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Region | L/R | x | y | z | ALE (×10⁻³) | Size |
|---|---|---|---|---|---|---|
| Nucleus Accumbens | R | 12 | 10 | −6 | 59 | 19461 |
| Pallidum | L | −10 | 8 | −4 | 56 | |
| Insula | R | 36 | 20 | −6 | 23 | |
| Insula | L | −32 | 20 | −4 | 21 | |
| Dorsomedial Frontal Cortex | L | 0 | 24 | 40 | 19 | |
| Medial Orbitofrontal Cortex | L | 0 | 54 | −8 | 19 | |
| Amygdala | R | 24 | −2 | −16 | 15 | |
| Thalamus | R | 4 | −14 | 8 | 15 | |
| Thalamus | L | −6 | −16 | 8 | 15 | |
| Supplementary Motor Area | | 0 | 8 | 48 | 14 | |
| Brain Stem | R | 8 | −18 | −10 | 14 | |
| Anterior Cingulate Cortex | R | 2 | 44 | 20 | 12 | |
| Supplementary Motor Area | L | −2 | −8 | 50 | 11 | |
| Brain Stem | L | −6 | −18 | −10 | 11 | |
| Anterior Cingulate Cortex | | 0 | 44 | 10 | 11 | |
| Brain Stem | L | −4 | −24 | −4 | 10 | |
| Middle Frontal Gyrus | L | −24 | 2 | 52 | 9 | |
| Insula | L | −38 | −4 | 6 | 9 | |
| Mid-Orbitofrontal Cortex | R | 24 | 40 | −14 | 9 | |
| Mid-Orbitofrontal Cortex | L | −16 | 42 | −14 | 9 | |
| Middle Frontal Gyrus | R | 40 | 32 | 32 | 10 | 739 |
| Middle Frontal Gyrus | R | 44 | 16 | 30 | 8 | |
| Inferior Parietal Lobule | L | −28 | −56 | 48 | 11 | 598 |
| Superior Parietal Lobule | L | −24 | −68 | 56 | 10 | |
| Angular Gyrus | R | 28 | −58 | 50 | 10 | 475 |
| Angular Gyrus | R | 44 | −52 | 50 | 8 | |
| Posterior Cingulate Cortex | L | 0 | −32 | 32 | 12 | 425 |
| Frontal Pole | L | −36 | 50 | 10 | 9 | 337 |
| Lateral Orbitofrontal Cortex | L | −46 | 42 | −4 | 7 | |
| Lateral Orbitofrontal Cortex | L | −42 | 52 | −6 | 7 | |
| Middle Frontal Gyrus | R | 30 | 4 | 50 | 7 | 210 |
| Posterior Cingulate Cortex | R | 2 | −50 | 26 | 8 | 205 |
| Middle Frontal Gyrus | L | −44 | 28 | 30 | 7 | 139 |
| Superior Frontal Gyrus | L | −22 | 30 | 48 | 9 | 129 |

Positive rewards activated a subset of the above-mentioned networks, including the bilateral pallidum, anterior insula, thalamus, brain stem, medial OFC, ACC, SMA, PCC, and other frontal and parietal areas (Figure 1B and Table 2; see also Supplementary Materials, Figure S1A). Negative rewards activated the bilateral NAcc, caudate, pallidum, anterior insula, amygdala, thalamus, brain stem, rostral ACC, dorsomedial PFC, lateral OFC, and right middle and inferior frontal gyri (Figure 1B and Table 2; see also Supplementary Materials, Figure S1B). In the direct contrast, positive rewards elicited significantly greater activation than negative rewards in the bilateral NAcc, anterior insula, medial OFC, hippocampus, left putamen, and thalamus (Figure 1D and Table 4). No region showed greater activation for negative than for positive rewards.

Table 2.

Brain areas activated by positive or negative rewards from the ALE analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Region | L/R | x | y | z | ALE (×10⁻³) | Size |
|---|---|---|---|---|---|---|
| Positive | | | | | | |
| Pallidum | R | 12 | 8 | −4 | 35 | 9254 |
| Pallidum | L | −12 | 8 | −4 | 33 | |
| Insula | R | 36 | 20 | −2 | 10 | |
| Insula | L | −32 | 18 | −4 | 8 | |
| Thalamus | R | 4 | −14 | 8 | 7 | |
| Thalamus | L | −10 | −22 | 12 | 5 | |
| Hippocampus | L | −30 | −20 | −18 | 6 | |
| Brain Stem | L | −4 | −18 | −12 | 5 | |
| Hippocampus | L | −24 | −14 | −12 | 5 | |
| Mid-Orbitofrontal Cortex | L | −28 | 28 | −12 | 5 | |
| Inferior Frontal Gyrus | L | −52 | 18 | 0 | 4 | |
| Medial Orbitofrontal Cortex | L | −2 | 54 | −6 | 10 | 3483 |
| Medial Orbitofrontal Cortex | R | 2 | 48 | −14 | 10 | |
| Supplementary Motor Area | R | 2 | 8 | 48 | 8 | |
| Pregenual Cingulate Cortex | R | 4 | 42 | 18 | 7 | |
| Posterior Cingulate Cortex | L | 0 | −30 | 32 | 6 | 292 |
| Inferior Parietal Lobule | L | −30 | −60 | 48 | 5 | 222 |
| Posterior Cingulate Cortex | R | 2 | −50 | 26 | 4 | 166 |
| Negative | | | | | | |
| Pallidum | L | −10 | 6 | −2 | 9 | 5705 |
| Nucleus Accumbens | L | −16 | 12 | −10 | 8 | |
| Nucleus Accumbens | R | 12 | 10 | −8 | 7 | |
| Insula | R | 36 | 20 | −6 | 6 | |
| Caudate | R | 10 | 6 | 4 | 5 | |
| Insula | L | −28 | 24 | −8 | 5 | |
| Amygdala | R | 26 | 0 | −18 | 5 | |
| Anterior Cingulate Cortex | R | 6 | 24 | 34 | 7 | 1102 |
| Inferior Frontal Gyrus | R | 52 | 10 | 22 | 3 | 195 |
| Precentral Gyrus | L | −48 | 4 | 26 | 4 | 189 |
| Middle Frontal Gyrus | R | 44 | 28 | 36 | 2 | 125 |
| Mid-Orbitofrontal Cortex | L | −18 | 44 | −12 | 3 | 98 |
| Frontal Pole | L | −36 | 50 | 10 | 4 | 91 |

Table 4.

Brain areas differentially activated by positive and negative rewards from the ALE subtraction analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Region | L/R | x | y | z | ALE (×10⁻³) | Size |
|---|---|---|---|---|---|---|
| Positive > Negative | | | | | | |
| Nucleus Accumbens | R | 12 | 8 | −4 | 177 | 5951 |
| Nucleus Accumbens | L | −10 | 10 | −4 | 157 | |
| Putamen | L | −24 | 4 | 6 | 38 | |
| Brain Stem | L | −4 | −18 | −14 | 37 | |
| Hippocampus | L | −30 | −20 | −18 | 36 | |
| Hippocampus | L | −24 | −14 | −12 | 35 | |
| Insula | R | 42 | −4 | −4 | 32 | |
| Thalamus | L | −10 | −24 | 12 | 32 | |
| Insula | L | −30 | 18 | −2 | 30 | |
| Hippocampus | R | 20 | −22 | −10 | 27 | |
| Medial Orbitofrontal Cortex | | 0 | 48 | −12 | 72 | 1855 |
| Pregenual Cingulate Cortex | R | 2 | 46 | 8 | 52 | |
| Pregenual Cingulate Cortex | R | 4 | 44 | 16 | 45 | |
| Supplementary Motor Area | R | 2 | 6 | 48 | 49 | 419 |
| Posterior Cingulate Cortex | | 0 | −32 | 32 | 43 | 361 |
| Insula | R | 36 | 22 | 0 | 38 | 207 |
| Posterior Cingulate Cortex | | 0 | −50 | 26 | 30 | 181 |
| Inferior Parietal Lobule | L | −30 | −62 | 48 | 33 | 149 |
| Frontal Pole | R | 30 | 46 | −10 | 30 | 145 |
| Positive < Negative | | | | | | |
| None | | | | | | |

Different reward processing stages shared similar activation patterns within the above-mentioned core networks, including the bilateral NAcc, anterior insula, thalamus, medial OFC, ACC, and dorsomedial PFC (Figure 1C and Table 3; see also Supplementary Materials, Figures S1C–E). Compared with reward outcome, reward anticipation elicited greater activation in the bilateral anterior insula, ACC, SMA, left inferior parietal lobule, and middle frontal gyrus (Figure 1E and Table 5). Outcome-preferential activation included the bilateral NAcc, caudate, right amygdala, and medial/lateral OFC (Table 5).

Table 3.

Brain areas activated by anticipation, outcome, and evaluation from the ALE analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Region | L/R | x | y | z | ALE (×10⁻³) | Size |
|---|---|---|---|---|---|---|
| Anticipation | | | | | | |
| Nucleus Accumbens | R | 12 | 10 | −4 | 20 | 7960 |
| Nucleus Accumbens | L | −12 | 10 | −6 | 20 | |
| Insula | R | 38 | 20 | −8 | 10 | |
| Insula | L | −32 | 18 | −6 | 8 | |
| Thalamus | R | 4 | −12 | 12 | 6 | |
| Thalamus | L | −10 | −22 | 12 | 6 | |
| Brain Stem | R | 8 | −18 | −10 | 6 | |
| Brain Stem | L | −4 | −24 | −6 | 5 | |
| Putamen | R | 24 | 4 | 0 | 5 | |
| Supplementary Motor Area | R | 2 | 8 | 48 | 8 | 2258 |
| Anterior Cingulate Cortex | L | 2 | 24 | 40 | 7 | |
| Anterior Cingulate Cortex | R | 4 | 38 | 38 | 5 | |
| Anterior Cingulate Cortex | R | 2 | 28 | 34 | 7 | |
| Supplementary Motor Area | L | −2 | −6 | 50 | 4 | |
| Medial Orbitofrontal Cortex | L | −2 | 50 | −16 | 5 | 450 |
| Inferior Parietal Lobule | L | −28 | −58 | 50 | 5 | 327 |
| Middle Frontal Gyrus | R | 40 | 28 | 34 | 4 | 192 |
| Superior Parietal Lobule | R | 34 | −52 | 52 | 3 | 131 |
| Middle Frontal Gyrus | L | −26 | 4 | 52 | 4 | 119 |
| Precentral Gyrus | L | −44 | 6 | 30 | 3 | 95 |
| Posterior Cingulate Cortex | L | 0 | −30 | 32 | 4 | 94 |
| Outcome | | | | | | |
| Nucleus Accumbens | R | 12 | 10 | −6 | 27 | 11322 |
| Nucleus Accumbens | L | −10 | 8 | −4 | 26 | |
| Medial Orbitofrontal Cortex | L | −2 | 56 | −6 | 10 | |
| Medial Orbitofrontal Cortex | R | 2 | 48 | −14 | 9 | |
| Amygdala | R | 26 | 0 | −16 | 10 | |
| Insula | R | 36 | 22 | −8 | 9 | |
| Insula | L | −28 | 24 | −8 | 7 | |
| Thalamus | R | 4 | −16 | 6 | 9 | |
| Anterior Cingulate Cortex | R | 8 | 24 | 32 | 7 | |
| Supplementary Motor Area | R | 4 | 22 | 52 | 6 | |
| Frontal Pole | L | −18 | 40 | −16 | 6 | |
| Posterior Cingulate Cortex | | 0 | −22 | 32 | 5 | 345 |
| Superior Frontal Gyrus | L | −24 | 30 | 48 | 5 | 150 |
| Supplementary Motor Area | R | 2 | −6 | 50 | 4 | 147 |
| Inferior Frontal Gyrus | L | −54 | 18 | 16 | 3 | 113 |
| Occipital Pole | L | −32 | −94 | −12 | 5 | 111 |
| Middle Frontal Gyrus | R | 44 | 36 | 28 | 4 | 110 |
| Evaluation | | | | | | |
| Pallidum | L | −10 | 4 | −4 | 7 | 2846 |
| Putamen | L | −26 | 6 | −8 | 5 | |
| Nucleus Accumbens | R | 10 | 10 | −10 | 5 | |
| Nucleus Accumbens | L | −16 | 4 | −14 | 5 | |
| Dorsomedial Frontal Cortex | L | −2 | 24 | 42 | 3 | 585 |
| Anterior Cingulate Cortex | R | 6 | 26 | 34 | 3 | |
| Anterior Cingulate Cortex | L | −2 | 32 | 30 | 3 | |
| Lateral Orbitofrontal Cortex | R | 30 | 30 | −16 | 3 | 363 |
| Insula | R | 38 | 18 | −4 | 2 | |
| Caudate | R | 20 | 4 | 18 | 2 | 202 |
| Frontal Pole | L | −36 | 50 | 10 | 4 | 137 |
| Frontal Pole | R | 32 | 54 | −4 | 2 | 132 |
| Precentral Gyrus | L | −48 | 4 | 24 | 3 | 100 |

Table 5.

Brain areas differentially activated by anticipation and outcome from the ALE subtraction analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Region | L/R | x | y | z | ALE (×10⁻³) | Size |
|---|---|---|---|---|---|---|
| Anticipation > Outcome | | | | | | |
| Supplementary Motor Area | L | 2 | 8 | 50 | 52 | 545 |
| Anterior Cingulate Cortex | R | 4 | 40 | 36 | 30 | |
| Anterior Cingulate Cortex | R | 4 | 22 | 40 | 29 | |
| Anterior Cingulate Cortex | R | 6 | 46 | 24 | 24 | |
| Anterior Cingulate Cortex | R | 2 | 30 | 32 | 21 | |
| Brain Stem | R | 6 | −18 | −10 | 34 | 275 |
| Brain Stem | L | −6 | −24 | −12 | 23 | |
| Insula | L | −42 | −6 | 4 | 32 | 229 |
| Pallidum | L | −22 | −4 | 2 | 19 | |
| Insula | R | 40 | 16 | −6 | 31 | 150 |
| Insula | R | 34 | 26 | 2 | 25 | |
| Thalamus | R | 6 | 0 | 4 | 37 | 143 |
| Thalamus | L | −10 | −22 | 12 | 27 | 136 |
| Inferior Parietal Lobule | L | −28 | −60 | 50 | 31 | 113 |
| Middle Frontal Gyrus | L | −44 | 18 | 36 | 22 | 99 |
| Anticipation < Outcome | | | | | | |
| Nucleus Accumbens | L | −18 | 8 | −14 | 69 | 4491 |
| Amygdala | R | 26 | 0 | −16 | 61 | |
| Nucleus Accumbens | R | 14 | 10 | −12 | 57 | |
| Caudate | L | −8 | 14 | 2 | 56 | |
| Medial Orbitofrontal Cortex | L | −2 | 56 | −6 | 54 | |
| Caudate | R | 8 | 20 | 2 | 52 | |
| Medial Orbitofrontal Cortex | R | 4 | 48 | −14 | 50 | |
| Nucleus Accumbens | L | −8 | 8 | −4 | 48 | |
| Pregenual Cingulate Cortex | R | 4 | 34 | 10 | 34 | |
| Mid-Orbitofrontal Cortex | L | −18 | 40 | −16 | 33 | |
| Lateral Orbitofrontal Cortex | L | −40 | 44 | −16 | 28 | |
| Medial Superior Frontal Cortex | R | 4 | 62 | 14 | 27 | |
| Medial Orbitofrontal Cortex | L | −10 | 42 | −8 | 27 | |
| Occipital Pole | L | −30 | −94 | −14 | 34 | 175 |
| Inferior Frontal Gyrus | L | −38 | 34 | 12 | 25 | 113 |
| Lateral Orbitofrontal Cortex | L | −50 | 24 | −14 | 26 | 111 |
| Frontal Pole | R | 46 | 34 | −6 | 22 | 110 |

3.2 PVM results

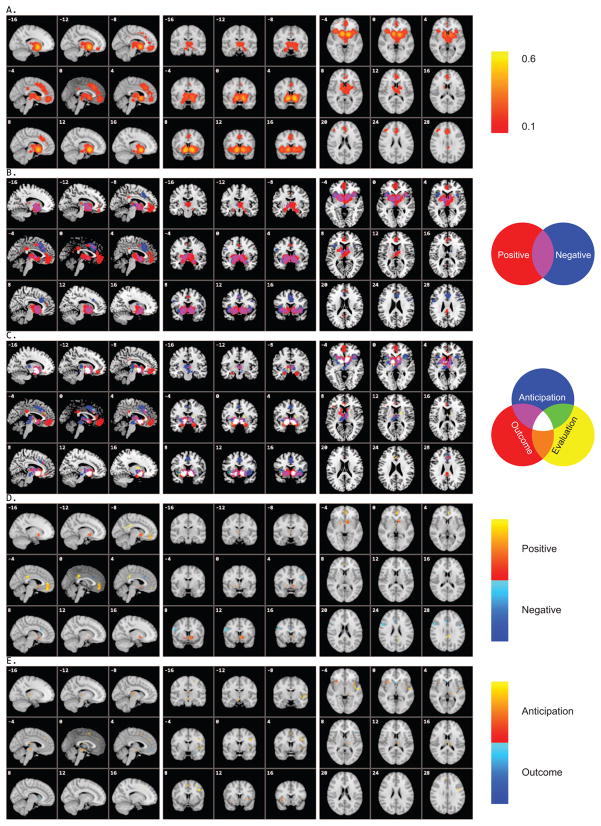

The main analysis of all 142 studies showed significant activation in the bilateral NAcc, anterior insula, lateral/medial OFC, ACC, PCC, inferior parietal lobule, and middle frontal gyrus (Figure 2A and Table 6).
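Tables 5 to 8 report maps thresholded at FDR p<0.05. The text does not specify which false discovery rate procedure the ALE and PVM software apply, so the sketch below assumes the standard Benjamini-Hochberg step-up rule. The p-values are hypothetical, and the 60-voxel cluster-size filter is not modelled.

```python
def bh_threshold(p_values, q=0.05):
    """Benjamini-Hochberg step-up rule (assumed FDR procedure).

    Returns the largest p-value p_(k) satisfying p_(k) <= (k / m) * q,
    or None if no test survives at FDR level q.
    """
    m = len(p_values)
    threshold = None
    for k, p in enumerate(sorted(p_values), start=1):
        if p <= k / m * q:
            threshold = p  # keep the largest qualifying rank
    return threshold


def bh_reject(p_values, q=0.05):
    """Flag each test (e.g. each voxel) declared significant under BH."""
    t = bh_threshold(p_values, q)
    if t is None:
        return [False] * len(p_values)
    return [p <= t for p in p_values]


# Hypothetical voxel-wise p-values (not from the study).
pvals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(bh_threshold(pvals), bh_reject(pvals))
```

With these ten values only the two smallest survive: 0.039 exceeds its rank-3 bound of 0.015, and no later p-value meets its own bound.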

Figure 2.

Concordance of brain activation from the PVM analyses. A. Core network activated by all contrasts/experiments. B. Overlay of brain areas separately involved in positive versus negative reward processing. C. Overlay of brain areas individually activated by the different reward processing stages (anticipation, outcome, and evaluation). D. Direct contrast of brain activation between positive and negative reward processing. E. Direct contrast of brain activation between reward anticipation and outcome.

Table 6.

Brain areas commonly activated by all studies from the PVM analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Label | L/R | x | y | z | PVM (×10⁻²) | Size |
|---|---|---|---|---|---|---|
| Nucleus Accumbens | R | 12 | 8 | −10 | 54 | 9216 |
| Putamen | L | −16 | 4 | −10 | 54 | |
| Nucleus Accumbens | L | −8 | 6 | −6 | 51 | |
| Insula | R | 38 | 20 | −2 | 32 | |
| Insula | R | 30 | 18 | −8 | 31 | |
| Anterior Cingulate Cortex | L | 2 | 22 | 36 | 30 | 3032 |
| Medial Orbitofrontal Cortex | L | −4 | 50 | −10 | 27 | |
| Medial Orbitofrontal Cortex | L | −8 | 42 | −18 | 25 | |
| Anterior Cingulate Cortex | L | 0 | 34 | 28 | 24 | |
| Medial Orbitofrontal Cortex | L | −6 | 38 | −12 | 24 | |
| Middle Frontal Gyrus | R | 36 | 36 | 30 | 20 | 288 |
| Middle Frontal Gyrus | R | 44 | 34 | 22 | 20 | |
| Middle Frontal Gyrus | R | 40 | 22 | 34 | 17 | |
| Middle Frontal Gyrus | R | 48 | 38 | 16 | 15 | |
| Inferior Parietal Lobule | L | −36 | −58 | 48 | 20 | 155 |
| Superior Parietal Lobule | L | −26 | −66 | 50 | 18 | |
| Posterior Cingulate Cortex | L | −2 | −34 | 30 | 19 | 114 |
| Lateral Orbitofrontal Cortex | R | 34 | 50 | −6 | 21 | 63 |
| Lateral Orbitofrontal Cortex | R | 30 | 52 | 0 | 17 | |

Positive rewards activated the bilateral NAcc, pallidum, putamen, thalamus, medial OFC, pregenual cingulate cortex, SMA, and PCC (Figure 2B and Table 7; see also Supplementary Materials, Figure S2A). Negative rewards activated the bilateral NAcc and anterior insula, as well as the pallidum, ACC, SMA, and middle/inferior frontal gyrus (Figure 2B and Table 7; see also Supplementary Materials, Figure S2B). A direct contrast between positive and negative rewards revealed greater activation for positive rewards in the NAcc, pallidum, medial OFC, and PCC, and greater activation for negative rewards in the ACC and middle/inferior frontal gyrus (Figure 2D and Table 9).

Table 7.

Brain areas activated by positive or negative rewards from the PVM analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Label | L/R | x | y | z | PVM (×10⁻²) | Size |
|---|---|---|---|---|---|---|
| Positive | | | | | | |
| Nucleus Accumbens | L | −16 | 8 | −8 | 46 | 6609 |
| Nucleus Accumbens | R | 14 | 10 | −10 | 46 | |
| Putamen | L | −18 | 4 | −14 | 41 | |
| Thalamus | R | 10 | −8 | 6 | 23 | |
| Thalamus | R | 6 | −12 | 2 | 21 | |
| Medial Orbitofrontal Cortex | L | −4 | 50 | −10 | 23 | 1521 |
| Pregenual Cingulate Cortex | L | −6 | 42 | −2 | 19 | |
| Medial Orbitofrontal Cortex | L | −8 | 40 | −18 | 19 | |
| Pregenual Cingulate Cortex | L | 2 | 38 | 10 | 18 | |
| Medial Orbitofrontal Cortex | L | −4 | 58 | −2 | 17 | |
| Middle Cingulate Cortex | L | 0 | 2 | 40 | 15 | 343 |
| Supplementary Motor Area | L | −2 | 18 | 42 | 14 | |
| Supplementary Motor Area | L | −8 | −4 | 44 | 12 | |
| Middle Cingulate Cortex | L | −2 | 10 | 34 | 12 | |
| Posterior Cingulate Cortex | L | −4 | −32 | 32 | 16 | 243 |
| Posterior Cingulate Cortex | L | −2 | −46 | 30 | 14 | |
| Mid-Orbitofrontal Cortex | R | 24 | 40 | −14 | 14 | 65 |
| Mid-Orbitofrontal Cortex | R | 16 | 38 | −18 | 13 | |
| Negative | | | | | | |
| Nucleus Accumbens | L | −18 | 4 | −10 | 31 | 4891 |
| Nucleus Accumbens | R | 12 | 2 | −10 | 27 | |
| Nucleus Accumbens | R | 14 | 8 | −14 | 27 | |
| Pallidum | L | −16 | 0 | −2 | 25 | |
| Insula | R | 36 | 20 | −10 | 25 | |
| Insula | L | −32 | 20 | −2 | 22 | |
| Anterior Cingulate Cortex | R | 2 | 20 | 36 | 27 | 1166 |
| Anterior Cingulate Cortex | | 0 | 22 | 28 | 25 | |
| Supplementary Motor Area | L | 2 | 12 | 50 | 16 | |
| Anterior Cingulate Cortex | L | 0 | 36 | 26 | 15 | |
| Middle Frontal Gyrus | R | 42 | 26 | 28 | 16 | 139 |
| Middle Frontal Gyrus | R | 44 | 18 | 30 | 15 | |
| Inferior Frontal Gyrus | R | 50 | 6 | 26 | 15 | |
| Inferior Frontal Gyrus | L | −52 | 4 | 26 | 15 | 82 |

Table 9.

Brain areas differentially activated by positive and negative rewards from the PVM Fisher odds ratio analysis (voxel p<0.01 and a minimum cluster size of 60 voxels).

| Label | L/R | x | y | z | OR | Size |
|---|---|---|---|---|---|---|
| Positive > Negative | | | | | | |
| Medial Orbitofrontal Cortex | L | −12 | 48 | −22 | 0.001 | 371 |
| Medial Orbitofrontal Cortex | L | −4 | 38 | −22 | 0.097 | |
| Medial Orbitofrontal Cortex | L | −6 | 52 | −12 | 0.202 | |
| Medial Orbitofrontal Cortex | L | −8 | 42 | −18 | 0.202 | |
| Medial Orbitofrontal Cortex | L | −6 | 48 | −6 | 0.244 | |
| Nucleus Accumbens | L | −6 | 4 | −10 | 0.352 | 192 |
| Pallidum | L | −12 | 4 | −2 | 0.409 | |
| Posterior Cingulate Cortex | R | 4 | −30 | 38 | 0.001 | 129 |
| Posterior Cingulate Cortex | R | 6 | −34 | 30 | 0.001 | |
| Posterior Cingulate Cortex | L | −8 | −38 | 34 | 0.097 | |
| Posterior Cingulate Cortex | L | −2 | −46 | 30 | 0.097 | |
| Posterior Cingulate Cortex | L | −4 | −34 | 28 | 0.097 | |
| Pallidum | R | 16 | 2 | −2 | 0.336 | 60 |
| Nucleus Accumbens | R | 8 | 12 | −8 | 0.409 | |
| Positive < Negative | | | | | | |
| Middle Frontal Gyrus | R | 46 | 18 | 32 | 1724.2 | 149 |
| Inferior Frontal Gyrus | R | 52 | 12 | 24 | 1724.2 | |
| Middle Frontal Gyrus | R | 50 | 24 | 32 | 1093.5 | |
| Inferior Frontal Gyrus | R | 60 | 12 | 16 | 896.9 | |
| Precentral Gyrus | R | 50 | 6 | 26 | 19.0 | |
| Anterior Cingulate Cortex | R | 8 | 24 | 24 | 7.8 | 65 |
| Anterior Cingulate Cortex | R | 6 | 16 | 30 | 5.2 | |
| Anterior Cingulate Cortex | L | −4 | 20 | 28 | 4.2 | |
| Anterior Cingulate Cortex | R | 4 | 24 | 34 | 3.4 | |

Different reward processing stages activated the NAcc and ACC similarly, whereas they differentially recruited other brain areas such as the medial OFC, anterior insula, and amygdala (Figure 2C and Table 8; see also Supplementary Materials, Figure S2C–E). Compared with reward outcome, reward anticipation elicited significantly greater activation in the bilateral anterior insula, thalamus, precentral gyrus, and inferior parietal lobule (Figure 2E and Table 10). No brain area showed greater activation by reward outcome than by anticipation.

Table 8.

Brain areas activated by anticipation, outcome, and evaluation from the PVM analysis (FDR p<0.05 and a minimum cluster size of 60 voxels).

| Label | L/R | x | y | z | PVM (×10⁻²) | Size |
|---|---|---|---|---|---|---|
| Anticipation | | | | | | |
| Nucleus Accumbens | R | 12 | 2 | −4 | 46 | 5623 |
| Nucleus Accumbens | L | −16 | 4 | −10 | 46 | |
| Insula | R | 34 | 20 | −6 | 40 | |
| Thalamus | L | −8 | −20 | 8 | 29 | |
| Thalamus | R | 8 | −6 | 6 | 29 | |
| Thalamus | L | −2 | −16 | 6 | 26 | |
| Anterior Cingulate Cortex | | 0 | 20 | 42 | 28 | 1003 |
| Anterior Cingulate Cortex | R | 2 | 30 | 34 | 25 | |
| Supplementary Motor Area | L | −4 | 4 | 50 | 20 | |
| Supplementary Motor Area | L | 0 | 0 | 46 | 20 | |
| Supplementary Motor Area | L | −8 | −4 | 44 | 18 | |
| Outcome | | | | | | |
| Nucleus Accumbens | R | 12 | 12 | −6 | 51 | 5288 |
| Nucleus Accumbens | R | 14 | 8 | −12 | 48 | |
| Nucleus Accumbens | L | −16 | 8 | −10 | 45 | |
| Nucleus Accumbens | L | −10 | 8 | −4 | 43 | |
| Amygdala | L | −18 | 0 | −18 | 29 | |
| Medial Orbitofrontal Cortex | | 0 | 44 | −10 | 27 | 1254 |
| Medial Orbitofrontal Cortex | L | −8 | 46 | −12 | 23 | |
| Medial Orbitofrontal Cortex | L | −8 | 38 | −16 | 23 | |
| Medial Orbitofrontal Cortex | L | −2 | 60 | −6 | 21 | |
| Anterior Cingulate Cortex | R | 2 | 24 | 30 | 21 | 234 |
| Anterior Cingulate Cortex | R | 2 | 18 | 38 | 17 | |
| Supplementary Motor Area | L | −4 | 16 | 46 | 15 | |
| Posterior Cingulate Cortex | L | −2 | −34 | 30 | 20 | 210 |
| Posterior Cingulate Cortex | R | 2 | −46 | 24 | 15 | |
| Evaluation | | | | | | |
| Nucleus Accumbens | L | −20 | 6 | −12 | 38 | 1796 |
| Nucleus Accumbens | R | 12 | 2 | −10 | 36 | |
| Amygdala | L | −12 | 0 | −14 | 33 | |
| Pallidum | L | −12 | 2 | −2 | 28 | |
| Anterior Cingulate Cortex | L | 0 | 26 | 24 | 23 | 115 |
| Anterior Cingulate Cortex | L | 2 | 22 | 38 | 20 | |
| Anterior Cingulate Cortex | L | 0 | 36 | 26 | 20 | |
| Anterior Cingulate Cortex | L | −6 | 32 | 22 | 18 | |

Table 10.

Brain areas differentially activated by anticipation and outcome from the PVM Fisher odds ratio analysis (voxel p<0.01 and a minimum cluster size of 60 voxels).

| Label | L/R | x | y | z | OR | Size |
|---|---|---|---|---|---|---|
| Anticipation > Outcome | | | | | | |
| Superior Temporal Gyrus | L | −58 | −6 | 0 | 0.149 | 143 |
| Heschl Gyrus | L | −50 | −10 | 0 | 0.149 | |
| Insula | L | −40 | −2 | −2 | 0.149 | |
| Rolandic Operculum | L | −46 | −4 | 6 | 0.179 | |
| Insula | L | −34 | 6 | −4 | 0.217 | |
| Angular Gyrus | L | −36 | −68 | 42 | 0.149 | 117 |
| Angular Gyrus | L | −42 | −56 | 36 | 0.149 | |
| Inferior Parietal Lobule | L | −32 | −62 | 46 | 0.179 | |
| Angular Gyrus | L | −42 | −66 | 38 | 0.179 | |
| Insula | R | 38 | 22 | 4 | 0.307 | 116 |
| Insula | R | 28 | 24 | −6 | 0.307 | |
| Insula | R | 36 | 20 | −4 | 0.357 | |
| Precentral Gyrus | L | −42 | 2 | 38 | 0.001 | 106 |
| Precentral Gyrus | L | −48 | 0 | 30 | 0.149 | |
| Brain Stem | | 0 | −24 | −10 | 0.273 | 79 |
| Thalamus | L | −4 | −16 | 14 | 0.217 | 78 |
| Thalamus | L | −8 | −18 | 8 | 0.270 | |
| Thalamus | L | −10 | −24 | 4 | 0.273 | |
| Anticipation < Outcome | | | | | | |
| None | | | | | | |

3.3 Comparison of ALE and PVM results

The current study also showed that, although the ALE and PVM methods treat coordinate-based data differently and use distinct estimation algorithms, the two approaches yielded very similar results for a single list of coordinates (Figures 1A–C and 2A–C, Table 11; see also Figures S1 and S2 in the Supplementary Materials). By design, the improved ALE algorithm implemented in GingerALE 2.0 treats experiments (or contrasts) as the random-effects factor, which substantially reduces the bias toward experiments that report more loci. Different studies, however, include different numbers of experiments/contrasts. The results of GingerALE 2.0 may therefore still be biased toward studies that report more contrasts, potentially overestimating cross-study concordance. Users can, however, pool the coordinates from different contrasts so that GingerALE 2.0 treats each study as a single experiment. This is what PVM implements: it pools coordinates from all contrasts within a study into a single activation map, thereby weighting all studies equally when estimating activation overlap across studies.
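The weighting issue can be made concrete with a toy example. The sketch below computes an ALE-style score as the union of per-experiment modelled activation (MA) maps, ALE(v) = 1 − ∏ᵢ (1 − MAᵢ(v)), on a hypothetical one-dimensional voxel grid, once treating each contrast as an experiment and once pooling contrasts by study. The kernel width, peak probability, the choice to combine foci within an experiment by taking the maximum, and all coordinates are illustrative assumptions; this is not GingerALE or PVM code, and PVM itself does not compute ALE scores.

```python
import math

# Hypothetical 1-D "brain": voxel centres every 2 mm from 0 to 40 mm.
VOXELS = [2.0 * i for i in range(21)]
SIGMA_MM = 4.0  # assumed kernel width (illustrative, not a GingerALE setting)
PEAK = 0.5      # assumed peak modelled-activation probability (illustrative)


def modelled_activation(foci):
    """Modelled activation (MA) map for one experiment.

    Each focus contributes a Gaussian blob; foci from the same experiment
    are combined by the voxel-wise maximum (an assumption of this sketch).
    """
    return [
        max(PEAK * math.exp(-((v - f) ** 2) / (2 * SIGMA_MM ** 2)) for f in foci)
        for v in VOXELS
    ]


def ale(experiments):
    """ALE(v) = 1 - prod_i (1 - MA_i(v)), the union across experiments."""
    ma_maps = [modelled_activation(foci) for foci in experiments]
    ale_map = []
    for values in zip(*ma_maps):
        prod = 1.0
        for ma in values:
            prod *= 1.0 - ma
        ale_map.append(1.0 - prod)
    return ale_map


# Hypothetical studies: study "A" reports five contrasts near 10 mm;
# studies "B" and "C" each report a single contrast at 30 mm.
studies = {
    "A": [[10.0], [10.0], [12.0], [10.0], [8.0]],
    "B": [[30.0]],
    "C": [[30.0]],
}

# Per-contrast weighting: every contrast is treated as its own experiment.
per_contrast = [foci for contrasts in studies.values() for foci in contrasts]
# Per-study pooling: all foci of a study are merged into one experiment.
per_study = [[f for foci in contrasts for f in foci] for contrasts in studies.values()]

ale_contrast = ale(per_contrast)
ale_study = ale(per_study)
i10, i30 = VOXELS.index(10.0), VOXELS.index(30.0)
print(f"per-contrast: ALE(10 mm)={ale_contrast[i10]:.3f}, ALE(30 mm)={ale_contrast[i30]:.3f}")
print(f"per-study:    ALE(10 mm)={ale_study[i10]:.3f}, ALE(30 mm)={ale_study[i30]:.3f}")
```

Under per-contrast weighting, the single study with five contrasts near 10 mm produces a higher score there (about 0.96) than the two independent studies at 30 mm (about 0.75); pooling contrasts by study reverses that ordering (about 0.50 versus 0.75).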

Table 11.

Summary of ALE and PVM results on key regions of interest.

| | VS | aINS | mOFC | lOFC | AMY | ACC | IPL | dlPFC | dmPFC |
|---|---|---|---|---|---|---|---|---|---|
| Overall | ALE, PVM | ALE, PVM | ALE, PVM | ALE, PVM | ALE | ALE, PVM | ALE, PVM | ALE, PVM | ALE |
| Positive | ALE, PVM | ALE | ALE, PVM | ALE | ALE | ||||
| Negative | ALE, PVM | ALE, PVM | ALE | ALE, PVM | ALE, PVM | ||||
| Anticipation | ALE, PVM | ALE, PVM | ALE | ALE, PVM | ALE | ALE | |||
| Outcome | ALE, PVM | ALE | ALE, PVM | ALE, PVM | ALE, PVM | ALE | |||
| Evaluation | ALE, PVM | ALE | ALE | ALE, PVM | ALE, PVM | ALE | |||
| Positive>Negative | ALE, PVM | ALE | ALE, PVM | ALE | |||||
| Positive<Negative | PVM | PVM | |||||||
| Anticipation>Outcome | ALE, PVM | ALE | ALE, PVM | ALE | |||||
| Anticipation<Outcome | ALE | ALE | ALE | ALE | ALE |

VS - ventral striatum; aINS - anterior insula; mOFC - medial orbitofrontal cortex; lOFC - lateral orbitofrontal cortex; AMY - amygdala; ACC - anterior cingulate cortex; IPL - inferior parietal lobule; dlPFC - dorsolateral prefrontal cortex; dmPFC - dorsomedial prefrontal cortex

In contrast, when two lists of coordinates were compared, the ALE and PVM approaches diverged considerably (Table 11), reflecting their different sensitivity to within-study and cross-study convergence. Because the improved ALE algorithm has not been implemented for subtraction analysis, we used an earlier version, GingerALE 1.2, which treats the coordinates as the random-effects factor and experiments as a fixed-effects variable. Differences between the two lists in both the number of coordinates and the number of experiments may therefore affect the subtraction results. The subtractive ALE analysis was biased toward the list with more experiments (Figure 1D/E). Positive reward studies (2167 foci from 283 experiments) clearly outnumbered negative reward studies (935 foci from 140 experiments). The difference between reward anticipation (1553 foci from 185 experiments) and outcome (1977 foci from 253 experiments) was smaller, but it may also have biased the results toward the outcome phase. On the other hand, the Fisher test that PVM uses to estimate odds ratios and assign voxels to one of the two lists appeared less sensitive in detecting activation differences between the lists (Figure 2D/E).
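The PVM comparisons in Tables 9 and 10 rest on a voxel-wise Fisher test with an odds ratio. As a minimal sketch under stated assumptions, the code below runs a two-sided Fisher exact test on a 2 × 2 table of study counts at one voxel (list 1 versus list 2, reporting versus not reporting activation). The counts are hypothetical; the row/column layout and the direction of the odds ratio (OR > 1 favours list 1) are choices of this sketch and need not match the orientation used in Tables 9 and 10; and PVM's own rule for deciding whether a study "reports" a voxel is not reproduced. SciPy's `scipy.stats.fisher_exact` provides the same two-sided test for readers who prefer a library call.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]].

    Rows are the two coordinate lists; columns count studies that do / do not
    report activation at the voxel. Returns (odds_ratio, p_value); the odds
    ratio is the sample estimate (a*d)/(b*c), so OR > 1 favours list 1.
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Two-sided p: sum over all tables no more probable than the observed one.
    # The small relative tolerance keeps floating-point ties from being dropped.
    p_value = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))

    if b * c > 0:
        odds_ratio = (a * d) / (b * c)
    elif a * d > 0:
        odds_ratio = float("inf")  # a zero off-diagonal cell
    else:
        odds_ratio = float("nan")  # undefined: 0/0
    return odds_ratio, min(1.0, p_value)


# Hypothetical voxel: 20 of 60 studies in list 1 report it, versus 2 of 40 in list 2.
odds_ratio, p = fisher_exact_2x2(20, 40, 2, 38)
print(f"OR = {odds_ratio:.2f}, two-sided p = {p:.2g}")
```

Because the test conditions on all four margins, lists of a few dozen studies need fairly lopsided counts before p drops below 0.01, which is one possible reading of the lower sensitivity noted above.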

4. Discussion

We constantly make decisions in everyday life. Some decisions involve outcomes with no apparent positive or negative value, whereas others have a substantial impact on the valence of the results and on our emotional responses to the choices we make. We may feel happy and satisfied when the outcome is positive or our expectation is fulfilled, or frustrated when the outcome is negative or worse than we anticipated. Moreover, many decisions must be made without advance knowledge of their consequences. We therefore need to predict future rewards and to evaluate both the value of a reward and the risks involved in pursuing it, including the risk of being penalized. This requires us to evaluate our choices on the basis of prediction errors and to use these signals to guide learning and future behavior. Many neuroimaging studies have examined reward-related decision making. However, given the complex and heterogeneous psychological processes involved in value-based decision making, it is no trivial task to identify the neural networks that subserve the representation and processing of reward-related information. The number of empirical studies in neuroeconomics has grown rapidly, yet it has so far been difficult to see how these studies converge to delineate the reward circuitry in the human brain. In the current meta-analysis, we have shown concordance across a large number of studies and revealed common and distinct patterns of brain activation associated with different aspects of reward processing. Taking a data-driven approach, we pooled all coordinates from the different contrasts/experiments of 142 studies and observed a core reward network consisting of the NAcc, lateral/medial OFC, ACC, anterior insula, dorsomedial PFC, and lateral frontoparietal areas. A recent meta-analysis focusing on risk assessment in decision making reported a similar reward circuitry (Mohr et al., 2010). In addition, taking a theory-driven perspective, we contrasted the neural networks involved in positive and negative valence across the anticipation and outcome stages of reward processing, and elucidated distinct neural substrates subserving valence-related assessment as well as their preferential involvement in anticipation or outcome.

4.1 Core reward areas: NAcc and OFC

The NAcc and OFC have long been regarded as major players in reward processing because they are the main projection areas of two distinct dopaminergic pathways, the mesolimbic and mesocortical pathways, respectively. However, it remains unknown how dopamine neurons differentially modulate activity in these limbic and cortical areas. Previous studies have tried to differentiate the roles of these two structures in terms of temporal stages, associating the NAcc with reward anticipation and the medial OFC with reward receipt (Knutson et al., 2001b; Knutson et al., 2003; Ramnani et al., 2004). Results from other studies have questioned such a distinction (Breiter et al., 2001; Delgado et al., 2005; Rogers et al., 2004). Many studies have also suggested that the NAcc is responsible for detecting prediction errors, a crucial signal in incentive learning and reward association (McClure et al., 2003; O’Doherty et al., 2003b; Pagnoni et al., 2002). The NAcc has also been found to show a biphasic response: its activity decreases below baseline in response to negative prediction errors (Knutson et al., 2001b; McClure et al., 2003; O’Doherty et al., 2003b). Although the OFC usually displays activity patterns similar to those of the NAcc, previous neuroimaging studies in humans have suggested that the OFC converts a variety of stimuli into a common currency in terms of their reward values (Arana et al., 2003; Cox et al., 2005; Elliott et al., 2010; FitzGerald et al., 2009; Gottfried et al., 2003; Kringelbach et al., 2003; O’Doherty et al., 2001; Plassmann et al., 2007). These findings parallel those obtained from single-cell recording and lesion studies in animals (Schoenbaum and Roesch, 2005; Schoenbaum et al., 2009; Schoenbaum et al., 2003; Schultz et al., 2000; Tremblay and Schultz, 1999, 2000; Wallis, 2007).
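The prediction-error account referred to above can be illustrated with a simple delta-rule (Rescorla-Wagner-style) learner. This is a generic textbook model, not the model used in any of the cited studies, and the learning rate and reward schedule are hypothetical. It reproduces the pattern against which the biphasic NAcc findings are interpreted: a large positive error when reward is unexpected, an error near zero once reward is predicted, and a negative error when an expected reward is omitted. Whether NAcc activity tracks this quantity is the hypothesis of the cited work; the sketch does not test it.

```python
def rescorla_wagner(rewards, alpha=0.2, v0=0.0):
    """Delta-rule learning of a single cue's value.

    Before each trial the expected value is V; the prediction error is
    delta = r - V, and V is then moved toward r by alpha * delta.
    Returns the per-trial expected values and prediction errors.
    """
    v = v0
    values, errors = [], []
    for r in rewards:
        delta = r - v
        values.append(v)
        errors.append(delta)
        v += alpha * delta
    return values, errors


# Hypothetical schedule: reward (1.0) on 20 trials, then omitted (0.0).
rewards = [1.0] * 20 + [0.0]
values, errors = rescorla_wagner(rewards)
print(f"first trial PE: {errors[0]:+.3f}")   # large positive: reward unexpected
print(f"trial 20 PE:    {errors[19]:+.3f}")  # near zero: reward now predicted
print(f"omission PE:    {errors[20]:+.3f}")  # negative: expected reward withheld
```

With a learning rate of 0.2, the expected value after n rewarded trials is 1 − 0.8ⁿ, so the omission error on trial 21 is about −0.99.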

Our overall analyses showed that the NAcc and OFC were engaged in reward processing in general (Figure 1A and Figure 2A). Activation in the NAcc largely overlapped across different stages, whereas the medial OFC was more tuned to reward receipt (Figure 1C/E and Figure 2C). These findings suggest that the NAcc may track both positive and negative reward signals and use them to modulate the learning of reward associations, whereas the OFC mostly monitors and evaluates reward outcomes. Further investigation is needed to better differentiate the roles of the NAcc and OFC in reward-related decision making (Frank and Claus, 2006; Hare et al., 2008).

4.2 Valence-related assessment

In addition to converting various reward options into a common currency and representing their reward values, distinct brain regions in the reward circuitry may separately encode the positive and negative valence of rewards. Direct comparisons across reward valence revealed that both the NAcc and medial OFC were more active in response to positive than to negative rewards (Figure 1B/D and Figure 2B/D). In contrast, the anterior insular cortex was involved in processing negative reward information (Figure 1B and Figure 2B). These results support the medial-lateral distinction for positive versus negative rewards (Kringelbach, 2005; Kringelbach and Rolls, 2004) and are consistent with what we observed in our previous study using a reward task (Liu et al., 2007). Subregions of the ACC responded selectively to positive or negative rewards: the pregenual and rostral ACC, adjacent to the medial OFC, were activated by positive rewards, whereas the caudal ACC responded to negative rewards (Figure 1B and Figure 2B). The ALE and PVM meta-analyses also revealed that the PCC was consistently activated by positive rewards (Figure 1B and Figure 2B).

Interestingly, the separate networks encoding positive and negative valence resemble the distinction between two anti-correlated networks, the default-mode network and the task-related network (Fox et al., 2005; Raichle et al., 2001; Raichle and Snyder, 2007). Recent meta-analyses found that the default-mode network mainly involves medial prefrontal regions (including the medial OFC) and the medial posterior cortex (including the PCC and precuneus), whereas the task-related network includes the ACC, insula, and lateral frontoparietal regions (Laird et al., 2009; Toro et al., 2008). Activation of the medial OFC and PCC by positive rewards mirrored the default-mode network commonly observed during the resting state, whereas activation of the ACC, insula, and lateral prefrontal cortex by negative rewards paralleled the task-related network. This intrinsic functional organization of the brain has been found to influence reward-related and risky decision making and to account for individual differences in risk-taking traits (Cox et al., 2010).

4.3 Anticipation versus outcome

The bilateral anterior insula, ACC/SMA, inferior parietal lobule, and brain stem were more consistently activated during anticipation than during the outcome phase (Figure 1C/E and Figure 2C/E). The anterior insula and ACC have previously been implicated in interoception, emotion, and empathy (Craig, 2002, 2009; Gu et al., 2010; Phan et al., 2002), as well as in risk and uncertainty assessment (Critchley et al., 2001; Kuhnen and Knutson, 2005; Paulus et al., 2003), consistent with a role in anticipation. A recent meta-analysis showed that the anterior insula is consistently involved in risk processing, especially in anticipation of loss (Mohr et al., 2010). Like the OFC, the parietal lobule has been associated with the valuation of different options (Sugrue et al., 2005), numerical representation (Cohen Kadosh et al., 2005; Hubbard et al., 2005), and information integration (Gold and Shadlen, 2007; Yang and Shadlen, 2007). The parietal lobule may therefore be recruited during the anticipation stage of reward processing to plan and prepare an informed action (Andersen and Cui, 2009; Lau et al., 2004a; Lau et al., 2004b).

On the other hand, the ventral striatum, medial OFC, and amygdala showed preferential activation during reward outcome compared with the anticipation stage (Figure 1C/E and Figure 2C). These patterns are consistent with previous findings by our group and others (Breiter et al., 2001; Delgado et al., 2005; Liu et al., 2007; Rogers et al., 2004) and argue against a functional dissociation between the ventral striatum and medial OFC in terms of their respective roles in reward anticipation and reward outcome (Knutson et al., 2001a; Knutson et al., 2001b; Knutson et al., 2003).

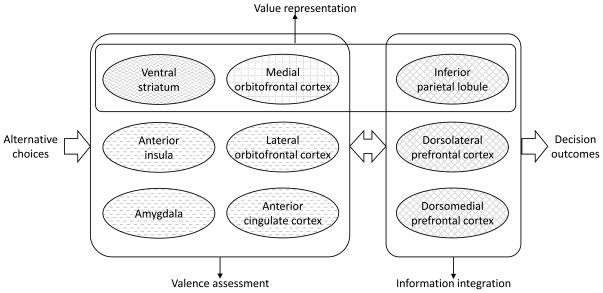

4.4 A schematic illustration of reward processing

Based on the common and distinct networks involved in various aspects of reward decision making, we propose a schematic illustration that summarizes the distributed representations of valuation and valence in reward processing (Figure 3). We tentatively group brain regions by their roles in different processes, although each region may serve multiple functions and interact with other brain areas in far more complex ways. When we face alternative choices, each with distinctive characteristics such as magnitude and probability, these properties need to be converted into comparable value-based information, a “common currency”. Beyond comparing the values of the alternatives, we also compare factual with projected values (e.g., the prediction error signal), as well as with the fictive values associated with the unchosen option. The ventral striatum and medial OFC have been implicated in this value-based representation. The inferior parietal lobule has also been found to be involved in representing and comparing numerical information. In addition, value-based decision making inevitably leads to evaluation of the choices, based on the valence of the outcomes and the associated emotional responses. While the ventral striatum and medial OFC are also involved in detecting positive reward valence, the lateral OFC, anterior insula, ACC, and amygdala are mostly implicated in processing negative reward valence, most likely because of their evaluative roles in negative emotional responses. Because risk is usually associated with negative affect, the anterior insula and ACC are also involved in reward anticipation during risky decisions, especially in uncertainty-averse responses in anticipation of loss. Finally, the frontoparietal regions integrate and act upon these signals to produce optimal decisions (e.g., win-stay-loss-switch).
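The final step names win-stay-loss-switch (more commonly called win-stay/lose-shift) as an example of acting on integrated outcome signals. The sketch below implements only that behavioural rule on a hypothetical two-armed bandit; the payoff probabilities, trial count, and seed are assumptions, and nothing in it models the brain regions of Figure 3. For two arms paying off with probabilities 0.8 and 0.2, the rule spends four times as long on the better arm in the long run, giving an expected win rate of 0.8 × 0.8 + 0.2 × 0.2 = 0.68, against 0.5 for random choice; the simulated rate should land close to that value.

```python
import random


def wsls_next(prev_choice, prev_win, n_options, rng):
    """Win-stay/lose-shift: repeat a rewarded choice, otherwise switch.

    With more than two options, 'shift' picks uniformly among the others.
    """
    if prev_win:
        return prev_choice
    return rng.choice([o for o in range(n_options) if o != prev_choice])


def simulate_wsls(p_reward, n_trials=10_000, seed=1):
    """Play WSLS on a bandit whose arm i pays off with probability p_reward[i].

    Returns the overall win rate and how often each arm was chosen.
    """
    rng = random.Random(seed)
    choice = rng.randrange(len(p_reward))
    wins = 0
    counts = [0] * len(p_reward)
    for _ in range(n_trials):
        counts[choice] += 1
        win = rng.random() < p_reward[choice]
        wins += win
        choice = wsls_next(choice, win, len(p_reward), rng)
    return wins / n_trials, counts


# Hypothetical two-armed task: arm 0 pays off 80% of the time, arm 1 20%.
win_rate, counts = simulate_wsls([0.8, 0.2])
print(f"win rate {win_rate:.3f}, choices per arm {counts}")
```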

Figure 3.

Schematic framework illustrating the roles of core brain areas involved in different aspects of reward-related decision making. The grid pattern denotes the medial orbitofrontal cortex encoding positive valence; the dash pattern denotes the anterior insula, lateral orbitofrontal cortex, anterior cingulate cortex, and amygdala encoding negative valence; the wave pattern denotes the ventral striatum encoding both positive and negative valence; the diamond pattern denotes the frontoparietal network involved in information integration.

4.5 Caveats

Two methodological caveats should be noted. The first concerns reporting bias across studies. Purely ROI-based studies were excluded from the current study. Still, other studies singled out or placed more emphasis on a priori regions by reporting more coordinates or contrasts related to those regions, which could bias the results toward confirming these “hotspots”. Second, caution is warranted regarding the conceptual distinctions among different aspects of reward processing. We classified the various contrasts into categories of theoretical interest. However, in real-life decisions and in many experimental tasks, these aspects are not clearly separable. For example, evaluation of the previous choice and reward outcome may intermingle with upcoming reward anticipation and decision making. Because there is no clear boundary between the stages of reward processing, our classification remains open to discussion. Nonetheless, such a hypothesis-driven approach is much needed (Caspers et al., 2010; Mohr et al., 2010; Richlan et al., 2009), as it complements the data-driven nature of meta-analysis. Many factors related to reward decision making, such as risk assessment and types of reward (e.g., primary vs. secondary, monetary vs. social), call for additional meta-analyses.

Research Highlights.

- We conducted two sets of coordinate-based meta-analyses on 142 fMRI studies of reward.
- The core reward circuitry included the nucleus accumbens, insula, orbitofrontal, cingulate, and frontoparietal regions.
- The nucleus accumbens was activated by both positive and negative rewards across various reward processing stages.
- Other regions showed preferential responses to positive or negative rewards, or during anticipation or outcome.

Supplementary Material

Acknowledgments

This study was supported by the Hundred-Talent Project of the Chinese Academy of Sciences, a NARSAD Young Investigator Award (XL), and NIH Grant R21MH083164 (JF). The authors thank the development team of BrainMap and Sergi G. Costafreda for providing excellent tools for this study.

Appendix

List of articles included in the meta-analyses of the current study.

- 1.Abler B, Walter H, Erk S, Kammerer H, Spitzer M. Prediction error as a linear function of reward probability is coded in human nucleus accumbens. Neuroimage. 2006;31:790–795. doi: 10.1016/j.neuroimage.2006.01.001. [DOI] [PubMed] [Google Scholar]

- 2.Adcock RA, Thangavel A, Whitfield-Gabrieli S, Knutson B, Gabrieli JD. Reward-motivated learning: mesolimbic activation precedes memory formation. Neuron. 2006;50:507–517. doi: 10.1016/j.neuron.2006.03.036. [DOI] [PubMed] [Google Scholar]

- 3.Akitsuki Y, Sugiura M, Watanabe J, Yamashita K, Sassa Y, Awata S, Matsuoka H, Maeda Y, Matsue Y, Fukuda H, Kawashima R. Context-dependent cortical activation in response to financial reward and penalty: an event-related fMRI study. Neuroimage. 2003;19:1674–1685. doi: 10.1016/s1053-8119(03)00250-7. [DOI] [PubMed] [Google Scholar]

- 4.Ballard K, Knutson B. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage. 2009;45:143–150. doi: 10.1016/j.neuroimage.2008.11.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10:1214–1221. doi: 10.1038/nn1954. [DOI] [PubMed] [Google Scholar]

- 6.Bjork JM, Hommer DW. Anticipating instrumentally obtained and passively-received rewards: a factorial fMRI investigation. Behavioural Brain Research. 2007;177:165–170. doi: 10.1016/j.bbr.2006.10.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Bjork JM, Knutson B, Fong GW, Caggiano DM, Bennett SM, Hommer DW. Incentive-elicited brain activation in adolescents: similarities and differences from young adults. Journal of Neuroscience. 2004;24:1793–1802. doi: 10.1523/JNEUROSCI.4862-03.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bjork JM, Momenan R, Smith AR, Hommer DW. Reduced posterior mesofrontal cortex activation by risky rewards in substance-dependent patients. Drug and Alcohol Dependence. 2008;95:115–128. doi: 10.1016/j.drugalcdep.2007.12.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bjork JM, Smith AR, Danube CL, Hommer DW. Developmental differences in posterior mesofrontal cortex recruitment by risky rewards. Journal of Neuroscience. 2007;27:4839–4849. doi: 10.1523/JNEUROSCI.5469-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Blair K, Marsh AA, Morton J, Vythilingam M, Jones M, Mondillo K, Pine DC, Drevets WC, Blair JR. Choosing the lesser of two evils, the better of two goods: specifying the roles of ventromedial prefrontal cortex and dorsal anterior cingulate in object choice. Journal of Neuroscience. 2006;26:11379–11386. doi: 10.1523/JNEUROSCI.1640-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8. [DOI] [PubMed] [Google Scholar]

- 12.Budhani S, Marsh AA, Pine DS, Blair RJ. Neural correlates of response reversal: considering acquisition. Neuroimage. 2007;34:1754–1765. doi: 10.1016/j.neuroimage.2006.08.060. [DOI] [PubMed] [Google Scholar]

- 13.Bush G, Vogt BA, Holmes J, Dale AM, Greve D, Jenike MA, Rosen BR. Dorsal anterior cingulate cortex: a role in reward-based decision making. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:523–528. doi: 10.1073/pnas.012470999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Camara E, Rodriguez-Fornells A, Munte TF. Functional connectivity of reward processing in the brain. Frontiers in Human Neuroscience. 2008;2:19. doi: 10.3389/neuro.09.019.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Campbell-Meiklejohn DK, Woolrich MW, Passingham RE, Rogers RD. Knowing when to stop: the brain mechanisms of chasing losses. Biological Psychiatry. 2008;63:293–300. doi: 10.1016/j.biopsych.2007.05.014. [DOI] [PubMed] [Google Scholar]

- 16.Chiu PH, Lohrenz TM, Montague PR. Smokers’ brains compute, but ignore, a fictive error signal in a sequential investment task. Nature Neuroscience. 2008;11:514–520. doi: 10.1038/nn2067. [DOI] [PubMed] [Google Scholar]

- 17. Clithero JA, Carter RM, Huettel SA. Local pattern classification differentiates processes of economic valuation. Neuroimage. 2009;45:1329–1338. doi: 10.1016/j.neuroimage.2008.12.074.

- 18. Cohen MX. Individual differences and the neural representations of reward expectation and reward prediction error. Social Cognitive and Affective Neuroscience. 2007;2:20–30. doi: 10.1093/scan/nsl021.

- 19. Cohen MX, Elger CE, Weber B. Amygdala tractography predicts functional connectivity and learning during feedback-guided decision-making. Neuroimage. 2008;39:1396–1407. doi: 10.1016/j.neuroimage.2007.10.004.

- 20. Cohen MX, Heller AS, Ranganath C. Functional connectivity with anterior cingulate and orbitofrontal cortices during decision-making. Brain Research Cognitive Brain Research. 2005a;23:61–70. doi: 10.1016/j.cogbrainres.2005.01.010.

- 21. Cohen MX, Ranganath C. Behavioral and neural predictors of upcoming decisions. Cognitive, Affective, & Behavioral Neuroscience. 2005;5:117–126. doi: 10.3758/cabn.5.2.117.

- 22. Cohen MX, Young J, Baek JM, Kessler C, Ranganath C. Individual differences in extraversion and dopamine genetics predict neural reward responses. Brain Research Cognitive Brain Research. 2005b;25:851–861. doi: 10.1016/j.cogbrainres.2005.09.018.

- 23. Cooper JC, Knutson B. Valence and salience contribute to nucleus accumbens activation. Neuroimage. 2008;39:538–547. doi: 10.1016/j.neuroimage.2007.08.009.

- 24. Coricelli G, Critchley HD, Joffily M, O’Doherty JP, Sirigu A, Dolan RJ. Regret and its avoidance: a neuroimaging study of choice behavior. Nature Neuroscience. 2005;8:1255–1262. doi: 10.1038/nn1514.

- 25. Cox SM, Andrade A, Johnsrude IS. Learning to like: a role for human orbitofrontal cortex in conditioned reward. Journal of Neuroscience. 2005;25:2733–2740. doi: 10.1523/JNEUROSCI.3360-04.2005.

- 26. Critchley HD, Mathias CJ, Dolan RJ. Neural activity in the human brain relating to uncertainty and arousal during anticipation. Neuron. 2001;29:537–545. doi: 10.1016/s0896-6273(01)00225-2.

- 27. D’Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605.

- 28. Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- 29. Delgado MR, Locke HM, Stenger VA, Fiez JA. Dorsal striatum responses to reward and punishment: effects of valence and magnitude manipulations. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:27–38. doi: 10.3758/cabn.3.1.27.

- 30. Delgado MR, Miller MM, Inati S, Phelps EA. An fMRI study of reward-related probability learning. Neuroimage. 2005;24:862–873. doi: 10.1016/j.neuroimage.2004.10.002.

- 31. Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. Journal of Neurophysiology. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072.

- 32. Delgado MR, Schotter A, Ozbay EY, Phelps EA. Understanding overbidding: using the neural circuitry of reward to design economic auctions. Science. 2008;321:1849–1852. doi: 10.1126/science.1158860.

- 33. Dillon DG, Holmes AJ, Jahn AL, Bogdan R, Wald LL, Pizzagalli DA. Dissociation of neural regions associated with anticipatory versus consummatory phases of incentive processing. Psychophysiology. 2008;45:36–49. doi: 10.1111/j.1469-8986.2007.00594.x.

- 34. Elliott R, Agnew Z, Deakin JF. Medial orbitofrontal cortex codes relative rather than absolute value of financial rewards in humans. European Journal of Neuroscience. 2008;27:2213–2218. doi: 10.1111/j.1460-9568.2008.06202.x.

- 35. Elliott R, Agnew Z, Deakin JF. Hedonic and Informational Functions of the Human Orbitofrontal Cortex. Cerebral Cortex. 2009 doi: 10.1093/cercor/bhp092.

- 36. Elliott R, Friston KJ, Dolan RJ. Dissociable neural responses in human reward systems. Journal of Neuroscience. 2000;20:6159–6165. doi: 10.1523/JNEUROSCI.20-16-06159.2000.

- 37. Elliott R, Newman JL, Longe OA, Deakin JF. Differential response patterns in the striatum and orbitofrontal cortex to financial reward in humans: a parametric functional magnetic resonance imaging study. Journal of Neuroscience. 2003;23:303–307. doi: 10.1523/JNEUROSCI.23-01-00303.2003.

- 38. Elliott R, Newman JL, Longe OA, William Deakin JF. Instrumental responding for rewards is associated with enhanced neuronal response in subcortical reward systems. Neuroimage. 2004;21:984–990. doi: 10.1016/j.neuroimage.2003.10.010.

- 39. Ernst M, Nelson EE, Jazbec S, McClure EB, Monk CS, Leibenluft E, Blair J, Pine DS. Amygdala and nucleus accumbens in responses to receipt and omission of gains in adults and adolescents. Neuroimage. 2005;25:1279–1291. doi: 10.1016/j.neuroimage.2004.12.038.

- 40. Ernst M, Nelson EE, McClure EB, Monk CS, Munson S, Eshel N, Zarahn E, Leibenluft E, Zametkin A, Towbin K, Blair J, Charney D, Pine DS. Choice selection and reward anticipation: an fMRI study. Neuropsychologia. 2004;42:1585–1597. doi: 10.1016/j.neuropsychologia.2004.05.011.

- 41. Fujiwara J, Tobler PN, Taira M, Iijima T, Tsutsui K. A parametric relief signal in human ventrolateral prefrontal cortex. Neuroimage. 2009a;44:1163–1170. doi: 10.1016/j.neuroimage.2008.09.050.

- 42. Fujiwara J, Tobler PN, Taira M, Iijima T, Tsutsui K. Segregated and integrated coding of reward and punishment in the cingulate cortex. Journal of Neurophysiology. 2009b;101:3284–3293. doi: 10.1152/jn.90909.2008.

- 43. Fukui H, Murai T, Fukuyama H, Hayashi T, Hanakawa T. Functional activity related to risk anticipation during performance of the Iowa Gambling Task. Neuroimage. 2005;24:253–259. doi: 10.1016/j.neuroimage.2004.08.028.

- 44. Glascher J, Hampton AN, O’Doherty JP. Determining a role for ventromedial prefrontal cortex in encoding action-based value signals during reward-related decision making. Cerebral Cortex. 2009;19:483–495. doi: 10.1093/cercor/bhn098.

- 45. Gottfried JA, O’Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- 46. Hare TA, O’Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. Journal of Neuroscience. 2008;28:5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

- 47. Hariri AR, Brown SM, Williamson DE, Flory JD, de Wit H, Manuck SB. Preference for immediate over delayed rewards is associated with magnitude of ventral striatal activity. Journal of Neuroscience. 2006;26:13213–13217. doi: 10.1523/JNEUROSCI.3446-06.2006.

- 48. Haruno M, Kawato M. Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus-action-reward association learning. Journal of Neurophysiology. 2006;95:948–959. doi: 10.1152/jn.00382.2005.

- 49. Haruno M, Kuroda T, Doya K, Toyama K, Kimura M, Samejima K, Imamizu H, Kawato M. A neural correlate of reward-based behavioral learning in caudate nucleus: a functional magnetic resonance imaging study of a stochastic decision task. Journal of Neuroscience. 2004;24:1660–1665. doi: 10.1523/JNEUROSCI.3417-03.2004.

- 50. Heekeren HR, Wartenburger I, Marschner A, Mell T, Villringer A, Reischies FM. Role of ventral striatum in reward-based decision making. Neuroreport. 2007;18:951–955. doi: 10.1097/WNR.0b013e3281532bd7.

- 51. Hewig J, Straube T, Trippe RH, Kretschmer N, Hecht H, Coles MG, Miltner WH. Decision-making under Risk: An fMRI Study. Journal of Cognitive Neuroscience. 2008 doi: 10.1162/jocn.2009.21112.

- 52. Hommer DW, Knutson B, Fong GW, Bennett S, Adams CM, Varnera JL. Amygdalar recruitment during anticipation of monetary rewards: an event-related fMRI study. Annals of the New York Academy of Sciences. 2003;985:476–478. doi: 10.1111/j.1749-6632.2003.tb07103.x.

- 53. Hsu M, Krajbich I, Zhao C, Camerer CF. Neural response to reward anticipation under risk is nonlinear in probabilities. Journal of Neuroscience. 2009;29:2231–2237. doi: 10.1523/JNEUROSCI.5296-08.2009.

- 54. Huettel SA. Behavioral, but not reward, risk modulates activation of prefrontal, parietal, and insular cortices. Cognitive, Affective, & Behavioral Neuroscience. 2006;6:141–151. doi: 10.3758/cabn.6.2.141.

- 55. Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- 56. Ino T, Nakai R, Azuma T, Kimura T, Fukuyama H. Differential activation of the striatum for decision making and outcomes in a monetary task with gain and loss. Cortex. 2009 doi: 10.1016/j.cortex.2009.02.022.

- 57. Izuma K, Saito DN, Sadato N. Processing of social and monetary rewards in the human striatum. Neuron. 2008;58:284–294. doi: 10.1016/j.neuron.2008.03.020.

- 58. Izuma K, Saito DN, Sadato N. Processing of the Incentive for Social Approval in the Ventral Striatum during Charitable Donation. Journal of Cognitive Neuroscience. 2009 doi: 10.1162/jocn.2009.21228.

- 59. Juckel G, Schlagenhauf F, Koslowski M, Wustenberg T, Villringer A, Knutson B, Wrase J, Heinz A. Dysfunction of ventral striatal reward prediction in schizophrenia. Neuroimage. 2006;29:409–416. doi: 10.1016/j.neuroimage.2005.07.051.

- 60. Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nature Neuroscience. 2007;10:1625–1633. doi: 10.1038/nn2007.

- 61. Kahnt T, Park SQ, Cohen MX, Beck A, Heinz A, Wrase J. Dorsal Striatal-midbrain Connectivity in Humans Predicts How Reinforcements Are Used to Guide Decisions. Journal of Cognitive Neuroscience. 2009;21:1332–1345. doi: 10.1162/jocn.2009.21092.

- 62. Kim H, Shimojo S, O’Doherty JP. Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biology. 2006;4:e233. doi: 10.1371/journal.pbio.0040233.

- 63. Kirsch P, Schienle A, Stark R, Sammer G, Blecker C, Walter B, Ott U, Burkart J, Vaitl D. Anticipation of reward in a nonaversive differential conditioning paradigm and the brain reward system: an event-related fMRI study. Neuroimage. 2003;20:1086–1095. doi: 10.1016/S1053-8119(03)00381-1.

- 64. Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. Journal of Neuroscience. 2001a;21:RC159. doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- 65. Knutson B, Fong GW, Adams CM, Varner JL, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport. 2001b;12:3683–3687. doi: 10.1097/00001756-200112040-00016.

- 66. Knutson B, Fong GW, Bennett SM, Adams CM, Hommer D. A region of mesial prefrontal cortex tracks monetarily rewarding outcomes: characterization with rapid event-related fMRI. Neuroimage. 2003;18:263–272. doi: 10.1016/s1053-8119(02)00057-5.

- 67. Knutson B, Rick S, Wimmer GE, Prelec D, Loewenstein G. Neural predictors of purchases. Neuron. 2007;53:147–156. doi: 10.1016/j.neuron.2006.11.010.

- 68. Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. Journal of Neuroscience. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.