Abstract

This paper describes the development of a cognitive prosthesis designed to restore the ability to form new long-term memories, an ability typically lost after damage to the hippocampus. The animal model used is delayed nonmatch-to-sample (DNMS) behavior in the rat, and the “core” of the prosthesis is a biomimetic multi-input/multi-output (MIMO) nonlinear model that predicts the spatio-temporal spike train output of hippocampus (CA1) from spatio-temporal spike train inputs recorded presynaptically to CA1 (e.g., CA3). We demonstrate that the MIMO model can predict CA1-coded memories with high accuracy on a single-trial basis and in real time. When hippocampal CA1 function is blocked and long-term memory formation is lost, successful DNMS behavior is also abolished. However, when MIMO model predictions are used to reinstate CA1 memory-related activity, by driving spatio-temporal electrical stimulation of hippocampal output to mimic the patterns of activity observed in control conditions, successful DNMS behavior is restored. We also outline a very-large-scale integration (VLSI) design for a hardware implementation of a 16-input, 16-output MIMO model, together with the spike sorting, amplification, and other functions needed for a complete system that, when coupled with electrode arrays recording extracellularly from populations of hippocampal neurons, can serve as a cognitive prosthesis in behaving animals.

Index Terms: Hippocampus, multi-input/multi-output (MIMO) nonlinear model, neural prosthesis, spatio-temporal coding

I. Introduction

Damage to the hippocampus and surrounding regions of the medial temporal lobe can result in a permanent loss of the ability to form new long-term memories [1]–[4]. Because the hippocampus is involved in the creation but not the storage of new memories, long-term memories formed before the damage often remain intact, as does short-term memory. The anterograde amnesia so characteristic of hippocampal system damage can result from stroke or epilepsy, and is one of the defining features of dementia and Alzheimer’s disease. There is no known treatment for these debilitating cognitive deficits. Impairments in temporal lobe function can result both from primary, or invasive (e.g., “projectile”), wounds to the brain and from secondary, or indirect, damage, such as occurs in traumatic brain injury. Memory loss related to temporal-lobe damage is a particularly acute problem for military personnel in war zones, where over 50% of injuries sustained in combat are the result of explosive munitions, and 50%–60% of those surviving blasts sustain significant brain injury [5], [6], most often to the temporal and prefrontal lobes. Rehabilitation can rescue some of these lost faculties, but moderate to severe damage often leaves substantial, long-lasting cognitive disorders [7]. Damage-induced loss of fundamental memory and organizational thought processes severely limits patients’ overall functional capacity and degree of independence, and imposes a low ceiling on their quality of life. Given the severity of the consequences of cognitive impairments induced by temporal and/or prefrontal lobe damage, and the relative intransigence of these deficits to current treatments, we believe that solving the problem of severe memory deficits requires a radical new approach in the form of a cognitive prosthesis that communicates bi-directionally with the functioning brain.

One of the obstacles to designing restorative treatments for amnesia is the limited knowledge of the nature of neural processing in brain areas responsible for higher cognitive function. This paper outlines a plan for addressing this fundamental issue by replacing damaged tissue with microelectronics that mimic the functions of the original biological circuitry. The proposed microelectronic systems do not simply stimulate cells electrically to raise or lower their average firing rates. Instead, the proposed prosthesis incorporates mathematical models that, for the first time, replicate the fine-tuned spatio-temporal coding of information by the hippocampal memory system, thus allowing memories of specific items or experienced events to be formed and stored in the brain.

Our approach is based on several assumptions about how information is coded in the brain, and on the relationship between cognitive function and the electrophysiological activity of populations of neurons [8]. For example, we assume that the majority of information in the brain is coded in terms of spatio-temporal patterns of activity. Neurons communicate with each other using all-or-none action potentials, or “spikes.” Because spikes are of nearly equivalent amplitude, information can be transmitted only through variation in the sequence of inter-spike intervals, or temporal pattern. Populations of neurons are distributed in space, so information transmitted by neural systems can be coded only through variation in the spatio-temporal pattern of spikes.

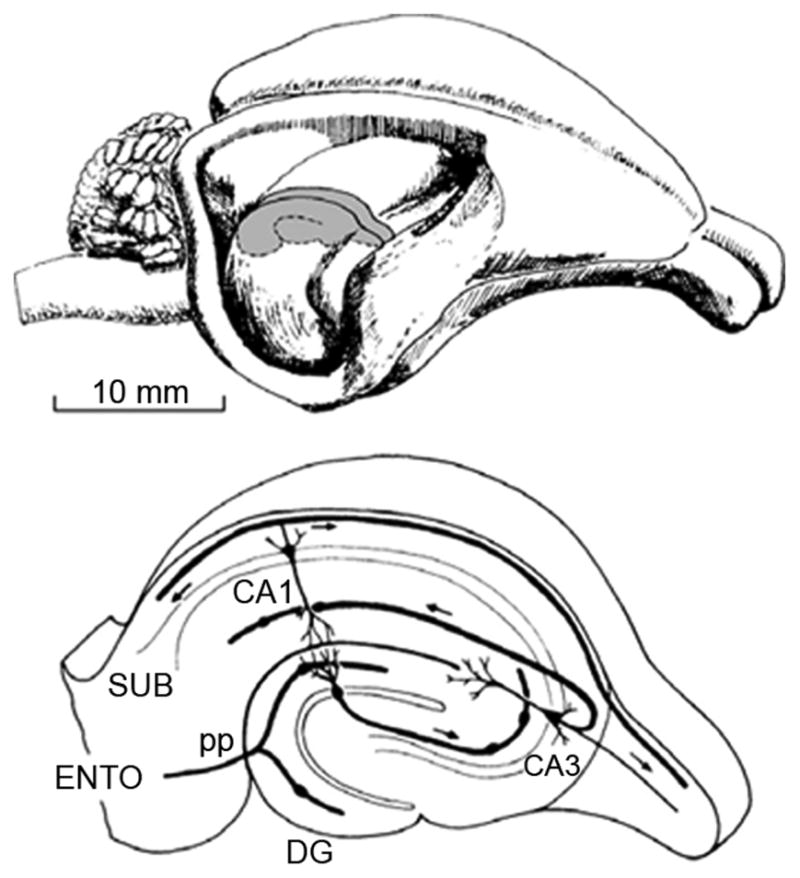

The essential signal processing capability of a neuron derives from its capacity to transform input sequences of inter-spike intervals into different output sequences of inter-spike intervals. In all brain areas, the resulting input/output transformations are strongly nonlinear (Fig. 1), owing to the nonlinear dynamics inherent in the cellular/molecular mechanisms of neurons and synapses. The nonlinearities of neural mechanisms go far beyond the step-function (“hard”) nonlinearity of the action potential threshold. All voltage-dependent conductances are, by definition, nonlinear, with a variety of characteristic slopes, inflection points (orders), activation voltages, inactivation properties, etc., resulting in sources of nonlinearity that are large in number and varied in function. Thus, information in the brain is coded in terms of spatio-temporal patterns of activity, and the functionality of any given brain system can be understood and quantified in terms of the nonlinear transformation that the system performs on input spatio-temporal patterns. For example, the conversion of short-term memory into long-term memory must be equivalent to the cascade of nonlinear transformations comprising the internal circuitry of the hippocampus: as spatio-temporal patterns of activity propagate through the hippocampus, the series of nonlinear transformations resulting from synaptic transmission through its intrinsic cell layers gradually converges on the representations required for long-term storage (Fig. 2: the circuit from entorhinal cortex (ENTO) to dentate gyrus (DG) to CA3 to CA1). Why a particular end-point of spatio-temporal representations, or sequence of spatio-temporal representations, is required for long-term memory is not understood; this remains a goal for the future. At present we can speak only to the spatio-temporal patterns observed and to the nonlinearities of the neural processes responsible for those patterns.
Given this information, however, we are constructing multi-circuit, biomimetic systems in the form of our proposed memory prosthesis [9]–[12], that are intended to circumvent the damaged hippocampal tissue and to re-establish the ensemble, or “population” coding of spike trains performed by a normal population of hippocampal neurons (Fig. 3). If our conceptualization of the problem outlined here and implemented in the following experiments and modeling is correct, then we should be able to re-establish long-term memory function in a stimulus-specific manner using the proposed hippocampal prosthesis.

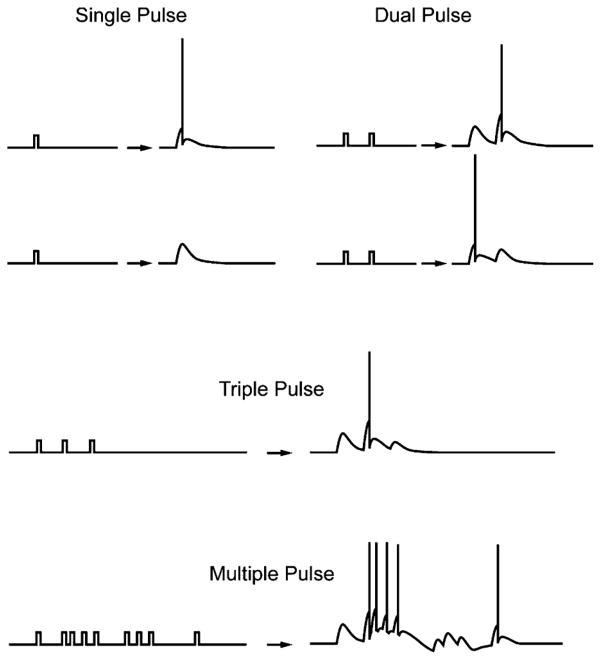

Fig. 1.

Nonlinearity of input–output relations in the brain. Single pulse: with intensity set near threshold, single pulses may or may not elicit an action potential (AP). Dual pulses: if the first pulse of the pair does not elicit an AP, then presynaptic facilitation (residual Ca2+ in the presynaptic terminal) and/or NMDA receptor activation causes the second pulse to elicit an AP; if the first pulse does elicit an AP, then the response to the second pulse is suppressed (possibly owing to GABAergic feedback) and only an EPSP is elicited. Triplets can cause an EPSP-AP-EPSP sequence predictable from the dual-pulse response. Bursts of APs can generate Ca2+ influx, triggering hyperpolarizations that block subsequent APs. The main point is that simple “additivity” of responses is almost never observed: two inputs do not cause two outputs that simply add together. There is almost always a nonadditivity caused by underlying mechanisms.

Fig. 2.

Top: Drawing of the rabbit brain, with the neocortex removed to expose the hippocampus. The shaded portion represents a section transverse to the longitudinal axis that is shown in the bottom panel. Bottom: Internal circuitry of the hippocampus, i.e., the “tri-synaptic pathway,” indicating the entorhinal cortex (ENTO), the perforant path (pp) input to the dentate gyrus (DG), the CA3 pyramidal cell layer, and the CA1 pyramidal cell layer. CA1 pyramids project to the subiculum (SUB), and the subiculum projects to cortical and subcortical sites, ultimately for long-term memory storage.

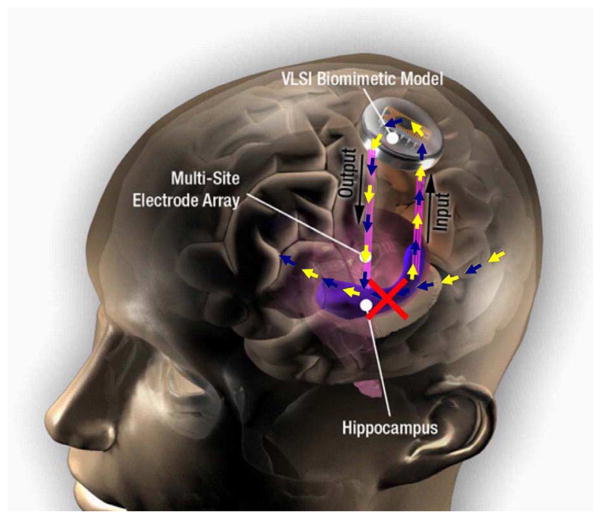

Fig. 3.

Conceptual representation of a hippocampal prosthesis for the human. The biomimetic MIMO model implemented in VLSI performs the same nonlinear transformation as a damaged portion of hippocampus (red “X”). The VLSI device is connected “up-stream” and “down-stream” from the damaged hippocampal area through multi-site electrode arrays.

In this paper, we will demonstrate success in exactly the above goal, namely, re-establishing a capability for long-term memory in behaving animals by substituting a model-predicted code, delivered by electrical stimulation through an array of electrodes, for spatio-temporal hippocampal activity normally generated endogenously. Here we will show that we can recover memory function lost after pharmacological blockade of hippocampal output. In a companion paper [35], we will show that delivering the same model-predicted code to electrode-implanted control animals with a normally functioning hippocampus substantially enhances the animals’ memory capacity above control levels. With respect to the multi-input, multi-output (MIMO) nonlinear dynamic modeling used to predict hippocampal spatio-temporal activity, we will introduce major advances in the procedures for estimating the parameters of such models, through methods derived from two new concepts: the generalized Laguerre–Volterra model (GLVM), which combines the generalized linear model with the Laguerre expansion of the Volterra model, and the sparse Volterra model (sVM), which is a reduced form of the Volterra model containing only a statistically selected subset of all its possible coefficients. Finally, we will provide our first outline of the very-large-scale integration (VLSI) design for a programmable MIMO model-based prosthetic system for bi-directional communication with the brain.

II. Methods

The hippocampus is a complex structure consisting of three main subsystems: the dentate gyrus (DG), the CA3 subfield, and the CA1 subfield (see Fig. 2). Excitatory, glutamatergic synapses connect the subsystems consecutively into a cascade, the “trisynaptic pathway.” However, we have shown that excitatory projections (perforant path, or pp) from the entorhinal cortex (ENTO) not only excite granule cells of the dentate gyrus but also directly excite pyramidal cells of CA3, so a “feedforward” component must be considered in addition to the cascade [13], [14], although this architectural feature of the system is not relevant to the model developed here. Extracellular action potential (“spike”) recordings of single hippocampal neurons were made simultaneously from the CA3 and CA1 cell populations while animals performed a delayed nonmatch-to-sample (DNMS) memory task (see [35] for full descriptions of animals, behavioral training, electrophysiological recording, etc.); these recordings constituted the data from which the hippocampal model was derived. CA3 activity was “driving,” or causing, CA1 cell spikes.

A. MIMO Nonlinear Dynamic Model of Neural Population Activities

1) Model Configuration

The MIMO nonlinear dynamic model is decomposed into a series of multi-input, single-output (MISO) models, one for each output neuron (Fig. 4). Each MISO model has a physiologically plausible structure containing the following major components [15]–[18]: 1) a feedforward block K that transforms the input spike trains x into a continuous hidden variable u, which can be interpreted as the synaptic potential; 2) a feedback block H that transforms the preceding output spikes into a continuous hidden variable a, which can be interpreted as the spike-triggered after-potential; 3) a noise term ε that captures the system uncertainty caused by both intrinsic neuronal noise and unobserved inputs; 4) an adder generating a continuous hidden variable w, which can be interpreted as the prethreshold potential; and 5) a threshold that generates an output spike when w reaches θ. The model can be expressed by the following equations:

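$$ w(t) = u(t) + a(t) + \varepsilon(t) \tag{1} $$

$$ y(t) = \begin{cases} 0, & w(t) < \theta \\ 1, & w(t) \ge \theta \end{cases} \tag{2} $$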

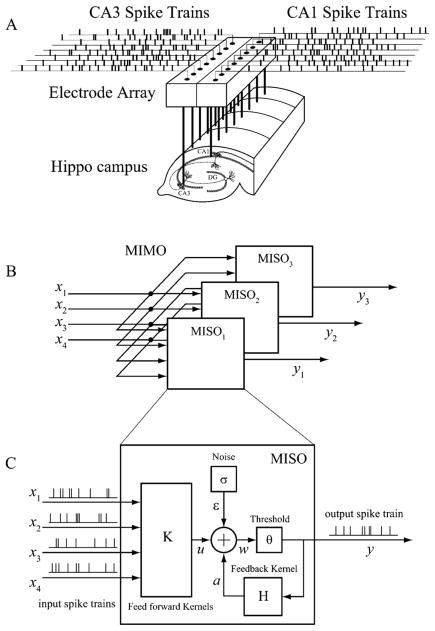

Fig. 4.

MIMO model for hippocampal CA3-CA1 population dynamics. (A) Schematic diagram of spike train propagation from hippocampal CA3 region to hippocampal CA1 region. (B) MIMO model as a series of MISO models. (C) Structure of a MISO model.

K takes the form of a MISO Volterra model, in which u is expressed in terms of the inputs x by means of a Volterra series; in the second-order case,

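$$
\begin{aligned}
u(t) = k_0 &+ \sum_{n=1}^{N}\sum_{\tau=0}^{M_k} k_1^{(n)}(\tau)\,x_n(t-\tau) \\
&+ \sum_{n=1}^{N}\sum_{\tau_1=0}^{M_k}\sum_{\tau_2=0}^{M_k} k_{2s}^{(n)}(\tau_1,\tau_2)\,x_n(t-\tau_1)\,x_n(t-\tau_2) \\
&+ \sum_{n_1=2}^{N}\sum_{n_2=1}^{n_1-1}\sum_{\tau_1=0}^{M_k}\sum_{\tau_2=0}^{M_k} k_{2x}^{(n_1,n_2)}(\tau_1,\tau_2)\,x_{n_1}(t-\tau_1)\,x_{n_2}(t-\tau_2)
\end{aligned}
\tag{3}
$$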

In K, the zeroth-order kernel $k_0$ is the value of $u$ when the input is absent. The first-order kernels $k_1^{(n)}$ describe the linear relation between the $n$th input $x_n$ and $u$ as functions of the time interval $\tau$ between the present time and a past time. The second-order self-kernels $k_{2s}^{(n)}$ describe the second-order nonlinear relation between the $n$th input $x_n$ and $u$. The second-order cross-kernels $k_{2x}^{(n_1,n_2)}$ describe the second-order nonlinear interactions between each unique pair of inputs ($x_{n_1}$ and $x_{n_2}$) as they affect $u$. $N$ is the number of inputs, and $M_k$ denotes the memory length of the feedforward process.
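To make the direct (unexpanded) form of (3) concrete, the following Python sketch evaluates u(t) for binary spike trains from given kernels. It is an illustration under our own assumptions, not the authors’ implementation: the function name `volterra_u`, the array layout (kernels sampled at lags 0 to M − 1, i.e., M = M_k + 1), and the dictionary of cross-kernels keyed by (n1, n2) with n1 > n2 are hypothetical choices.

```python
import numpy as np

def volterra_u(x, k0, k1, k2s, k2x):
    """Second-order MISO Volterra series of (3) for binary spike trains.

    x   : (N, T) binary input spike trains
    k0  : zeroth-order kernel (scalar)
    k1  : (N, M) first-order kernels, k1[n, tau] for tau = 0..M-1
    k2s : (N, M, M) second-order self-kernels
    k2x : dict {(n1, n2): (M, M) array} of cross-kernels, n1 > n2
    """
    N, T = x.shape
    M = k1.shape[1]
    # lagged[n, tau, t] = x_n(t - tau), taken as zero before the record starts
    lagged = np.zeros((N, M, T))
    for tau in range(M):
        lagged[:, tau, tau:] = x[:, :T - tau]
    u = np.full(T, float(k0))
    # first-order term: sum over n and tau of k1 * x_n(t - tau)
    u += np.einsum("nm,nmt->t", k1, lagged)
    # self-kernel term: sum over n, tau1, tau2
    u += np.einsum("nab,nat,nbt->t", k2s, lagged, lagged)
    # cross-kernel terms for each listed pair of inputs
    for (n1, n2), k in k2x.items():
        u += np.einsum("ab,at,bt->t", k, lagged[n1], lagged[n2])
    return u
```

Because spikes are binary, x_n(t − τ)² = x_n(t − τ), so the diagonal of each self-kernel acts as an extra first-order contribution whenever a single spike occurs.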

Similarly, H takes the form of a first-order single-input, single-output Volterra model as in

$$ a(t) = \sum_{\tau=1}^{M_h} h(\tau)\,y(t-\tau) \tag{4} $$

$h$ is the feedback kernel, and $M_h$ is the memory length of the feedback process. The noise term $\varepsilon$ is modeled as Gaussian white noise with standard deviation $\sigma$.

To reduce the total number of model coefficients to be estimated, the Laguerre expansion of Volterra kernels (LEV) is employed [19]–[22]. Using LEV, both $k$ and $h$ are expanded with orthonormal Laguerre basis functions $b$. The input and output spike trains $x$ and $y$ are convolved with $b$:

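$$ v_j^{(n)}(t) = \sum_{\tau=0}^{M_k} b_j(\tau)\,x_n(t-\tau) \tag{5} $$

$$ v_j^{(h)}(t) = \sum_{\tau=1}^{M_h} b_j(\tau)\,y(t-\tau) \tag{6} $$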

The feedforward and feedback dynamics in (3) and (4) are rewritten as

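$$
\begin{aligned}
u(t) = c_0 &+ \sum_{n=1}^{N}\sum_{j=1}^{L} c_1^{(n)}(j)\,v_j^{(n)}(t) \\
&+ \sum_{n=1}^{N}\sum_{j_1=1}^{L}\sum_{j_2=1}^{j_1} c_{2s}^{(n)}(j_1,j_2)\,v_{j_1}^{(n)}(t)\,v_{j_2}^{(n)}(t) \\
&+ \sum_{n_1=2}^{N}\sum_{n_2=1}^{n_1-1}\sum_{j_1=1}^{L}\sum_{j_2=1}^{L} c_{2x}^{(n_1,n_2)}(j_1,j_2)\,v_{j_1}^{(n_1)}(t)\,v_{j_2}^{(n_2)}(t)
\end{aligned}
\tag{7}
$$

$$ a(t) = \sum_{j=1}^{L} c_h(j)\,v_j^{(h)}(t) \tag{8} $$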

$c_0$, $c_1^{(n)}$, $c_{2s}^{(n)}$, $c_{2x}^{(n_1,n_2)}$, and $c_h$ are the sought Laguerre expansion coefficients of the kernel functions $k_0$, $k_1^{(n)}$, $k_{2s}^{(n)}$, $k_{2x}^{(n_1,n_2)}$, and $h$, respectively ($c_0 = k_0$). Since the kernels are typically smooth functions, the number of basis functions $L$ can be made much smaller than the memory lengths $M_k$ and $M_h$, which greatly reduces the total number of model coefficients to be estimated.
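The Laguerre filtering step in (5)–(6) can be sketched as follows. We assume the closed-form discrete orthonormal Laguerre functions commonly used with the Laguerre expansion technique, parameterized by a decay constant 0 < α < 1; the function names, the 0-based basis index j (the equations above count j from 1), and the values of α, L, and M in the example are hypothetical, not those used in this study.

```python
import math
import numpy as np

def laguerre_basis(L, M, alpha):
    """Discrete orthonormal Laguerre functions b_j(m), j = 0..L-1, m = 0..M-1."""
    b = np.zeros((L, M))
    for j in range(L):
        for m in range(M):
            s = sum((-1) ** k * math.comb(m, k) * math.comb(j, k)
                    * alpha ** (j - k) * (1 - alpha) ** k
                    for k in range(j + 1))
            b[j, m] = alpha ** ((m - j) / 2) * math.sqrt(1 - alpha) * s
    return b

def laguerre_project(x, b):
    """v[j, t] = sum_tau b_j(tau) * x(t - tau): causal filtering of a spike train."""
    T = len(x)
    return np.array([np.convolve(x, bj)[:T] for bj in b])

# Illustrative values only: L = 3 basis functions over 400 lags, alpha = 0.5.
b = laguerre_basis(3, 400, 0.5)
```

A first-order kernel is then recovered from its L coefficients as `c @ b`, so a kernel spanning hundreds of lags is described by only L numbers. For the feedback term (6), whose sum starts at τ = 1, the output train would be delayed by one bin before filtering.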

2) Coefficients Estimation

With recorded input and output spike trains x and y, the negative log-likelihood function NLL can be expressed as [16], [17]

$$ \mathrm{NLL} = -\sum_{t=1}^{T} \ln P\big(y(t) \mid x, k, h, \sigma, \theta\big) \tag{9} $$

where T is the data length, and P is the probability of generating the recorded output y

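$$ P\big(y(t) \mid x, k, h, \sigma, \theta\big) = \begin{cases} \mathrm{Prob}\big(w(t) < \theta \mid x, k, h, \sigma, \theta\big), & y(t) = 0 \\ \mathrm{Prob}\big(w(t) \ge \theta \mid x, k, h, \sigma, \theta\big), & y(t) = 1 \end{cases} \tag{10} $$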

Since ε is assumed to be Gaussian, the conditional firing probability intensity function $P_f$ at time $t$ [the conditional probability of generating a spike, i.e., $\mathrm{Prob}(w \ge \theta \mid x, k, h, \sigma, \theta)$ in (10)] can be calculated with the Gaussian error function (the integral of the Gaussian function). The model coefficients $c$ can then be estimated by minimizing the negative log-likelihood function NLL:

$$ \hat{c} = \arg\min_{c}\, \mathrm{NLL}(c) \tag{11} $$
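Because w(t) = u(t) + a(t) + ε(t), with ε(t) zero-mean Gaussian of standard deviation σ, the spike probability in (10) has a closed form in terms of the error function. The following worked step is our own derivation from the definitions above (Φ denotes the standard normal cumulative distribution function):

$$
P_f(t) = \mathrm{Prob}\big(u(t) + a(t) + \varepsilon(t) \ge \theta\big)
= 1 - \Phi\!\left(\frac{\theta - u(t) - a(t)}{\sigma}\right)
= \frac{1}{2} - \frac{1}{2}\,\mathrm{erf}\!\left(\frac{\theta - u(t) - a(t)}{\sqrt{2}\,\sigma}\right)
$$

$$
\mathrm{NLL} = -\sum_{t=1}^{T}\Big[\,y(t)\ln P_f(t) + \big(1 - y(t)\big)\ln\big(1 - P_f(t)\big)\Big]
$$

With θ = σ = 1, the first line reduces to P_f(t) = Φ(u(t) + a(t) − 1), i.e., a probit model whose linear predictor is u(t) + a(t) − 1.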

As shown previously [16], [18], this model is mathematically equivalent to a generalized linear model (GLM) with a probit link function (the inverse cumulative distribution function of the normal distribution). Given this equivalence, the model coefficients c are estimated using the iteratively reweighted least-squares method, the standard algorithm for fitting GLMs [23], [24]. For the same reason, this model can be termed a generalized Laguerre–Volterra model (GLVM). Since u, a, and ε are dimensionless variables, both θ and σ can be set to unity without loss of generality, so that only c is estimated; σ is restored afterwards, while θ remains at unity. The final coefficients ĉ and σ̂ can be obtained from the estimated Laguerre expansion coefficients c̃ with a simple normalization/conversion procedure [16], [18].
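Because the GLVM is a probit GLM, its coefficients can be fitted with a generic IRLS routine. The sketch below is a minimal, textbook Fisher-scoring IRLS for a probit model written with NumPy, not the authors’ code; `fit_probit_irls` is a hypothetical name. In the GLVM setting, the columns of `X` would hold a constant, the Laguerre-filtered inputs v_j^{(n)}(t), their products, and the feedback terms v_j^{(h)}(t). With θ = σ = 1, the fitted intercept absorbs the threshold (it corresponds to c0 − θ); this is our reading of the normalization described above rather than a detail stated in [16], [18].

```python
import math
import numpy as np

def norm_cdf(z):
    # Standard normal CDF via the error function: 0.5 * (1 + erf(z / sqrt(2)))
    return 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))

def norm_pdf(z):
    return np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)

def fit_probit_irls(X, y, n_iter=50, tol=1e-8):
    """Fit P(y = 1 | X) = Phi(X @ beta) by iteratively reweighted least squares."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        eta = X @ beta                                   # linear predictor
        mu = np.clip(norm_cdf(eta), 1e-10, 1 - 1e-10)    # fitted spike probability
        dmu = np.maximum(norm_pdf(eta), 1e-10)           # d mu / d eta
        w = dmu ** 2 / (mu * (1.0 - mu))                 # IRLS weights
        z = eta + (y - mu) / dmu                         # working response
        XtW = X.T * w
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)     # weighted least squares
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta
```

Data generated by thresholding a linear predictor plus unit Gaussian noise at zero, exactly the structure of (1)–(2), should yield estimates close to the generating coefficients.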

3) Statistical Model Selection of the MIMO Model

Solving (11) yields the maximum likelihood estimates of the model coefficients. In practice, however, model complexity often needs to be reduced beyond what the LEV procedure achieves. For example, neurons in brain regions such as hippocampal CA3 and CA1 are sparsely connected. The full Volterra kernel model described in (3) and (7) does not contain sparsity and thus is neither the most efficient nor the most interpretable way of representing such a system. Furthermore, the number of coefficients to be estimated in a full Volterra kernel model grows rapidly with the number of inputs and the model order, even with LEV. Estimation of such a model can easily suffer from overfitting: the model coefficients tend to fit the noise instead of the signal in the system.

To solve this problem, we introduce the concept of a sparse Volterra model (sVM). An sVM is defined as a Volterra model that contains only the subset of its possible coefficients corresponding to its significant inputs and the significant terms of those inputs [25]. Similarly, a generalized Laguerre–Volterra model with sparsity can be termed a sparse GLVM (sGLVM). An sGLVM (or sVM) can be estimated by statistically selecting an optimal subset of model coefficients based on an error measure (e.g., out-of-sample likelihood). In this study, we use a forward stepwise model selection method to estimate the sGLVMs [16], [18].
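A greedy forward stepwise search of this kind can be sketched in a few lines. In this hypothetical sketch, each candidate stands for a block of coefficients (e.g., one kernel), and `score` is a user-supplied function returning the out-of-sample log-likelihood of a model fitted with the given terms; the candidate structure and stopping rule of [16], [18] may differ.

```python
from typing import Callable, FrozenSet, Hashable, Iterable, List

def forward_stepwise(candidates: Iterable[Hashable],
                     score: Callable[[FrozenSet[Hashable]], float]) -> List[Hashable]:
    """Greedy forward selection of model terms.

    candidates : hypothetical term labels, e.g. ("k1", 6) or ("k2x", 6, 9)
    score      : out-of-sample log-likelihood of a model fitted with the
                 given set of terms (higher is better)
    """
    remaining = sorted(set(candidates), key=repr)   # deterministic order
    selected: List[Hashable] = []
    best = score(frozenset())                       # baseline: zeroth-order model
    while remaining:
        trials = [(score(frozenset(selected) | {c}), c) for c in remaining]
        new_best, choice = max(trials, key=lambda sc: sc[0])
        if new_best <= best:                        # no remaining term improves the fit
            break
        selected.append(choice)
        remaining.remove(choice)
        best = new_best
    return selected
```

Stopping as soon as no single addition improves the out-of-sample score is what keeps the selected model sparse; because the search is greedy, it is not guaranteed to find the globally best subset.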

4) Kernel Reconstruction and Interpretation

With the Laguerre basis functions and the estimated coefficients, the feedforward and feedback kernels are reconstructed and normalized [16], [18]. The normalized Volterra kernels provide an intuitive representation of the system’s nonlinear input–output dynamics [16], [18]. The single-pulse and paired-pulse response functions ($r_1$ and $r_2$) of each input can be derived as

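$$ r_1^{(n)}(\tau) = k_1^{(n)}(\tau) + k_{2s}^{(n)}(\tau,\tau) \tag{12} $$

$$ r_2^{(n)}(\tau_1,\tau_2) = 2\,k_{2s}^{(n)}(\tau_1,\tau_2) \tag{13} $$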

$r_1^{(n)}(\tau)$ is the response in $u$ elicited by a single spike (i.e., a single “EPSP”) from the $n$th input neuron; $r_2^{(n)}(\tau_1,\tau_2)$ describes the joint nonlinear effect of a pair of spikes from the $n$th input neuron in addition to the summation of their first-order responses (i.e., the paired-pulse facilitation/depression function). $k_{2x}^{(n_1,n_2)}(\tau_1,\tau_2)$ represents the joint nonlinear effect of a pair of spikes (i.e., a paired-pulse facilitation/depression function) with one spike from neuron $n_1$ and one spike from neuron $n_2$. $h$ represents the output spike-triggered after-potential $a$.
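For binary spike inputs, these response functions follow directly from (3): since x_n(t − τ) ∈ {0, 1}, each term of the series is either switched on or off. Keeping only the spikes indicated, all other inputs silent, and assuming symmetric self-kernels, a short derivation (ours) gives:

$$
\begin{aligned}
&\text{one spike from } n \text{ at lag } \tau: && u(t) - k_0 = k_1^{(n)}(\tau) + k_{2s}^{(n)}(\tau,\tau) \\
&\text{two spikes from } n \text{ at lags } \tau_1 \ne \tau_2: && u(t) - k_0 = \big[k_1^{(n)}(\tau_1) + k_{2s}^{(n)}(\tau_1,\tau_1)\big] + \big[k_1^{(n)}(\tau_2) + k_{2s}^{(n)}(\tau_2,\tau_2)\big] + 2\,k_{2s}^{(n)}(\tau_1,\tau_2) \\
&\text{one spike each from } n_1 > n_2: && u(t) - k_0 = \big[k_1^{(n_1)}(\tau_1) + k_{2s}^{(n_1)}(\tau_1,\tau_1)\big] + \big[k_1^{(n_2)}(\tau_2) + k_{2s}^{(n_2)}(\tau_2,\tau_2)\big] + k_{2x}^{(n_1,n_2)}(\tau_1,\tau_2)
\end{aligned}
$$

The bracketed terms are single-spike responses, and the remaining terms (2k_{2s} and k_{2x}) are exactly the nonadditive components that make the response to a spike pair differ from the sum of two single-spike responses, as illustrated qualitatively in Fig. 1.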

5) Model Validation and Prediction

Owing to the stochastic nature of the system, model goodness-of-fit is evaluated with an out-of-sample Kolmogorov–Smirnov (KS) test based on the time-rescaling theorem [26]. This method directly evaluates the continuous firing probability intensity predicted by the model against the recorded output spike train. According to the time-rescaling theorem, an accurate model should generate a conditional firing intensity function Pf that rescales the recorded output spike train into a Poisson process with unit rate. After a further variable conversion, the rescaled inter-spike intervals should be independent uniform random variables on the interval (0, 1). The model goodness-of-fit can then be assessed with a KS test, in which the rescaled intervals are ordered from smallest to largest and plotted against the cumulative distribution function of the uniform density. If the model is accurate, all points should lie close to the 45° line of the KS plot. Confidence bounds (e.g., 95%) can be used to determine statistical significance.
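The time-rescaling KS procedure can be sketched as below. This is an illustration, not the authors’ code: `ks_time_rescaling` is a hypothetical name, the per-bin integrated intensity −ln(1 − P_f(t)) is one common discrete-time approximation (it carries a small bias when P_f is large), and the 95% bound 1.36/√n is the standard large-sample KS value.

```python
import math
import numpy as np

def ks_time_rescaling(pf, y):
    """Time-rescaling KS statistic for a discrete-time spike train.

    pf : per-bin spike probabilities P_f(t) predicted by the model
    y  : recorded binary output spike train of the same length
    Returns (D, bound95): the maximal distance between the KS plot and the
    45-degree line, and the large-sample 95% bound 1.36 / sqrt(n_spikes).
    """
    pf = np.clip(np.asarray(pf, dtype=float), 0.0, 1.0 - 1e-12)
    spikes = np.flatnonzero(y)
    # Integrated intensity per bin (discrete-time approximation).
    cum = np.cumsum(-np.log1p(-pf))
    taus = np.diff(np.concatenate(([0.0], cum[spikes])))  # rescaled intervals
    z = np.sort(1.0 - np.exp(-taus))      # ~ Uniform(0, 1) if the model is accurate
    n = len(z)
    b = (np.arange(1, n + 1) - 0.5) / n   # uniform CDF quantiles (KS-plot x-axis)
    return float(np.max(np.abs(z - b))), 1.36 / math.sqrt(n)
```

Applied to the same spike train, a model with the correct firing probability yields a small D, whereas a model that overestimates the rate pushes the rescaled intervals toward 1 and yields a large D.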

Given the estimated model, output spike trains are predicted recursively [16], [18]. First, the synaptic potential u is calculated from the input spike trains x and the estimated feedforward kernels; this forms the deterministic part of the prethreshold potential w. Then, a Gaussian random sequence with the estimated noise standard deviation is generated and added to u to obtain w, which introduces the stochastic component into w. At time t, if w reaches the threshold (θ = 1), a spike is generated, and the feedback component a is added to the future values of w. The calculation then repeats for time t + 1 with the updated w until the end of the trace is reached.
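The recursive prediction loop can be sketched as follows, assuming u has already been computed from the inputs and feedforward kernels. The function name `predict_spikes` and the convention that `h[0]` acts one time step after a spike (matching the sum from τ = 1 in the feedback term) are our assumptions.

```python
import numpy as np

def predict_spikes(u, h, sigma, theta=1.0, rng=None):
    """Recursively predict one output spike train from the model.

    u     : (T,) synaptic potential computed from the inputs and feedforward kernels
    h     : (M_h,) feedback kernel; h[0] is applied one time step after a spike
    sigma : standard deviation of the Gaussian noise term
    """
    rng = np.random.default_rng() if rng is None else rng
    T = len(u)
    # Deterministic part plus Gaussian noise gives the prethreshold potential w.
    w = np.asarray(u, dtype=float) + rng.normal(0.0, sigma, size=T)
    y = np.zeros(T, dtype=int)
    for t in range(T):
        if w[t] >= theta:                       # threshold crossing -> spike
            y[t] = 1
            end = min(T, t + 1 + len(h))        # add the after-potential a
            w[t + 1:end] += h[: end - t - 1]    # to future values of w
    return y
```

With a constant suprathreshold u and a negative (refractory-like) feedback kernel, the loop produces regularly spaced spikes; with realistic noise, repeated runs give different but statistically similar trains, which is why predicted and actual patterns agree in salient features rather than spike for spike.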

B. VLSI Implementation of the MIMO Model

Our design methodology for mixed-signal systems on a chip (MS-SoC) uses conventional VLSI design practices for both the digital and the analog circuitry.

The primary digital circuitry for spike detection and sorting and for the MIMO computation unit was designed in VHDL and synthesized using a standard cell library. No special clock gating or custom cells were needed to lower power in the current design. Some specialized circuitry, such as the divide-by-32 circuit in the analog RF section, was custom designed as a differential circuit to reduce phase noise in the phase-locked loop and to meet the overall power budget.

The analog circuitry was entirely custom and used several unique designs, but the key design paradigm was to keep the “building blocks” simple and robust. Standard techniques of symmetry and ratioing were exploited to minimize the effects of process variation and layout on the design’s performance. Extensive SPICE simulations were used to ensure that the design was “centered” and had adequate tolerance in all corner cases.

III. Results

A. Nonlinear Dynamic MIMO Models of the Hippocampal CA3-CA1 Pathway

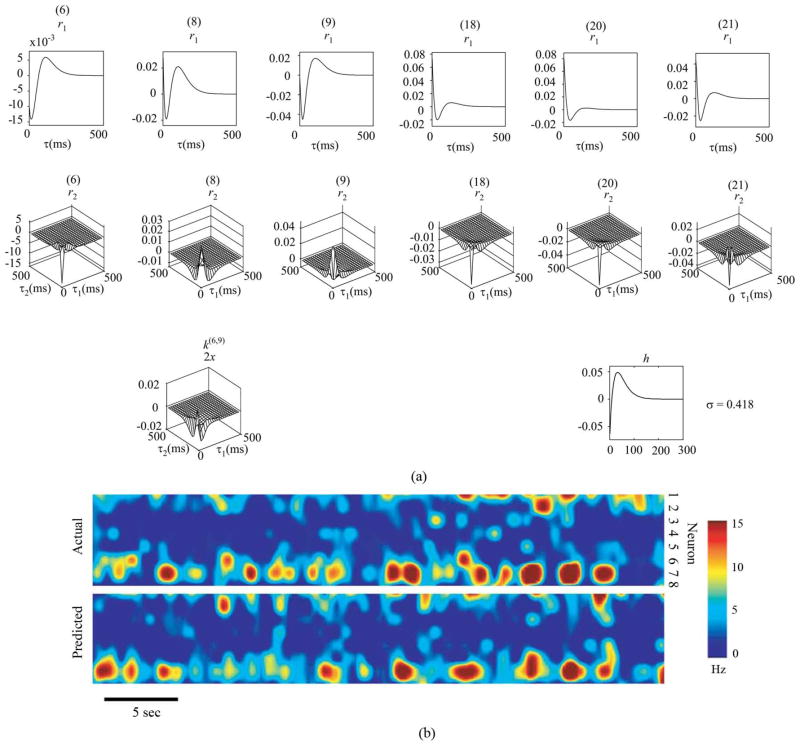

As described in the Methods section, each MIMO model consists of a series of independently estimated MISO models. Fig. 5 illustrates a 24-input MISO model (i.e., an sGLVM) estimated with the forward stepwise model selection method. The feedback kernel was found to be significant and was therefore included in the model. Among all 24 inputs, six significant inputs were selected, with six first-order kernels and six second-order self-kernels. Among the 15 possible cross-kernels for the six selected inputs, one second-order cross-kernel, for inputs No. 6 and No. 9, was selected. Without model selection, the total number of model coefficients to be estimated is 2704 (L = 3): one for the zeroth-order kernel, 72 for the first-order kernels, 144 for the second-order self-kernels, 2484 for the second-order cross-kernels, and 3 for the feedback kernel. With the model selection procedure, the total number of coefficients is reduced to 67. In addition to this substantial reduction in model complexity, the selected model achieves the maximal out-of-sample likelihood among the candidate models evaluated by the model selection and cross-validation procedures. For easier interpretation, the first-order and second-order response functions (r1 and r2) are shown in Fig. 5. These functions quantitatively describe how the synaptic potential is influenced by a single spike and by pairs of spikes from a single input neuron or from pairs of input neurons.
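The coefficient counts quoted above can be reproduced from (7) and (8), under our reading that the self-kernel coefficients are indexed with j2 ≤ j1 and that the feedback kernel also uses L = 3 basis functions; with those assumptions both totals come out exactly. The helper `n_coefficients` is hypothetical.

```python
from math import comb

def n_coefficients(n_inputs, n_self, n_cross, L=3, feedback=True):
    """Count Laguerre coefficients in a second-order GLVM, following (7)-(8).

    n_inputs : inputs with a first-order kernel
    n_self   : inputs with a second-order self-kernel
    n_cross  : input pairs with a cross-kernel
    """
    first = n_inputs * L                  # c_1^(n)(j)
    self_k = n_self * L * (L + 1) // 2    # c_2s^(n)(j1, j2) with j2 <= j1
    cross = n_cross * L * L               # c_2x^(n1,n2)(j1, j2)
    fb = L if feedback else 0             # c_h(j)
    return 1 + first + self_k + cross + fb   # the leading 1 is c_0

full = n_coefficients(24, 24, comb(24, 2))   # 1 + 72 + 144 + 2484 + 3
sparse = n_coefficients(6, 6, 1)             # 1 + 18 + 36 + 9 + 3
print(full, sparse)                          # 2704 67
```

The cross-kernel term dominates the full model (276 input pairs × 9 coefficients each), which is why selecting inputs and pairs prunes the model so sharply.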

Fig. 5.

MISO model and MIMO prediction. (A) 24-input MISO model of hippocampal CA3-CA1. r1 are the single-pulse response functions; k2s are the paired-pulse response functions for the same input neurons; k2x are the cross-kernels for pairs of neurons. This particular MISO model has six r1, six k2s, and one k2x. (B) Comparison of actual and predicted spatio-temporal patterns of CA1 spikes. Top: actual spatio-temporal pattern; bottom: spatio-temporal pattern predicted by a MIMO model.

All individually estimated and validated MISO models are concatenated to form the MIMO model of the hippocampal CA3-CA1 pathway. Fig. 5(B) compares the actual spatio-temporal pattern of CA1 spikes with the spatio-temporal pattern predicted by the MIMO model. This specific MIMO model has eight outputs. Despite differences in fine detail (mostly due to the stochastic nature of the system), the MIMO model replicates the salient features of the actual CA1 spatio-temporal pattern. We found this to be true for the majority of MIMO models generated from these data.

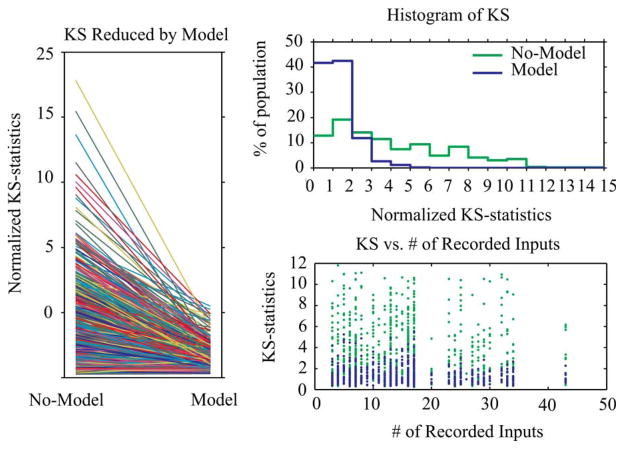

B. Statistical Summary of Modeling Results

We analyzed 62 MIMO datasets from 62 animals, comprising 826 CA3 units and 848 CA1 units (i.e., 848 independent MISO models). Model performance is summarized in Fig. 6. Normalized KS statistics (i.e., the maximal distance between the KS plot and the 45° diagonal line, divided by the distance between the 95% confidence bound and the 45° diagonal line) of the zeroth-order model (No-Model; control condition) and of the optimized MISO model (Model) are plotted and compared. The normalized KS statistics are significantly reduced by the MISO model in virtually all instances (Fig. 6, left). Their distributions are illustrated in the histograms (Fig. 6, right-top). Without the MISO model (No-Model, green line), the normalized KS statistics are spread fairly uniformly over a wide range of the x-axis; with the MISO model (Model, blue line), the distribution is markedly skewed toward small values. Of all 848 MISO models, 42% have a normalized KS statistic smaller than one, indicating that their KS plots lie within the 95% confidence bounds; a further 43% have a normalized KS statistic between one and two, meaning that their KS plots fall outside the 95% confidence bounds but nonetheless remain close to the 45° diagonal line.
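The normalization and the two summary categories can be sketched as follows, assuming the large-sample 95% KS bound 1.36/√n, where n is the number of rescaled intervals (output spikes). The helper names are hypothetical.

```python
import math

def normalized_ks(D, n_spikes):
    """KS distance divided by the 95% bound 1.36 / sqrt(n_spikes)."""
    return D / (1.36 / math.sqrt(n_spikes))

def summarize(stats):
    """Fractions of models within the 95% bounds (< 1) and between 1 and 2."""
    n = len(stats)
    within = sum(s < 1 for s in stats) / n
    near = sum(1 <= s < 2 for s in stats) / n
    return within, near
```

Normalizing by the bound makes models with very different spike counts comparable: a value below one means the KS plot stays inside the 95% band regardless of how many spikes the output neuron fired.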

Fig. 6.

Summary of the MIMO modeling results. Left: Normalized KS-statistics are reduced by the MISO models. Right top: Comparison of distributions of normalized KS-statistics with and without MISO models. Right bottom: Distributions of normalized KS-statistics with various numbers of recorded CA3 units in the datasets.

Since CA1 spike trains are predicted from CA3 spike trains in our approach, the accuracy of CA1 prediction depends on the richness of the information contained in the CA3 recordings, e.g., the number of CA3 units recorded. We further analyzed the relation between model performance and the CA3 recordings by plotting the normalized KS statistics against the number of CA3 units in each dataset (Fig. 6, right-bottom). The normalized KS statistics of No-Model and Model are shown as green and blue dots, respectively; each blue dot represents one MISO model, and each green dot represents one zeroth-order model. The MIMO datasets fall roughly into two large groups, with smaller and larger numbers of CA3 units, respectively. The normalized KS statistics are markedly smaller in the group with more CA3 units, indicating a positive correlation between model performance and the number of CA3 units.

C. The Hippocampal MIMO Model as a Cognitive Prosthesis

The ultimate test of our hippocampal MIMO model is in the context of a cognitive prosthesis: can the model run online and in real time to provide the spatio-temporal codes for the correct memories needed to perform the delayed nonmatch-to-sample (DNMS) task when the biological hippocampus is impaired? We tested this possibility as follows. First, even when well-trained animals perform the DNMS task, the “strength” of the spatio-temporal code in CA1 varies from trial to trial [27]. Deadwyler and Hampson have found that, in implanted control animals, the strength of the CA1 code (spatio-temporal pattern) during the response to the sample stimulus (the “sample response,” or SR) is highly predictive of the response in the nonmatch phase of the same trial: a strong code during the SR predicts a high probability of a correct, nonmatch response, and a weak code predicts a high probability of an error, i.e., a match response. We have found that the MIMO model can accurately predict strong and weak codes from CA3 cell population input, and can do so online and in real time.

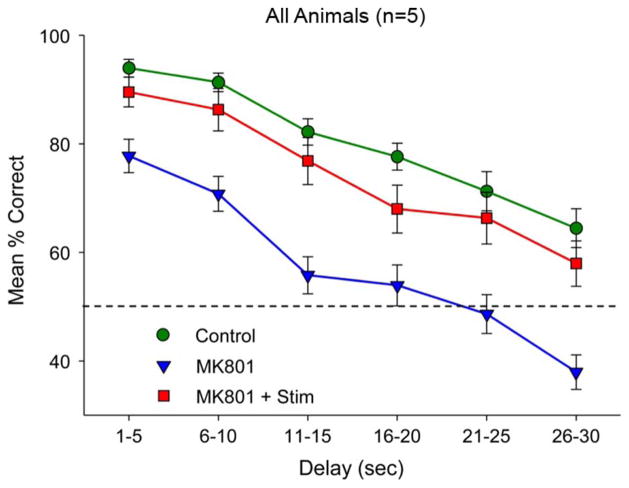

A group of animals performed the DNMS task while the MIMO model identified strong CA1 codes, which were saved and stored by computer. During this control period, a mini-pump implanted into the hippocampus continuously infused saline during the session [28]. The resulting forgetting curve (Fig. 7, green line) shows that animals successfully maintained memory of the “sample” stimulus for intervals as long as 30 s, the longest delay period tested. When MK801, which blocks the glutamatergic NMDA channel, was infused instead of saline during testing, hippocampal pyramidal cell activity was severely disrupted, primarily in CA1 but also in the CA3 subfield. MK801 altered the temporal pattern of pyramidal cell activity and suppressed both spontaneous and stimulus-evoked responses. Thus, hippocampal pyramidal cells remained active and excitable, but hippocampal output was reduced and took on an altered, abnormal spatio-temporal distribution of activity when driven by the conditioned stimuli. In the presence of the NMDA channel blocker, rats performing the DNMS task still showed short-term memory, maintaining response rates of 70%–80% for delay intervals shorter than 10 s; for delays longer than 10 s, however, animals responded at chance levels, indicating the absence of long-term memory (Fig. 7, blue line).

Fig. 7.

Cognitive prosthesis reversal of MK801-induced impairment of hippocampal memory encoding using electrical stimulation of CA1 electrodes with MIMO model-predicted spatio-temporal pulse train patterns. Intra-hippocampal infusion of the glutamatergic NMDA channel blocker MK801 (37.5 μg/h; 1.5 mg/ml, 0.25 μl/h) delivered for 14 days suppressed MIMO-derived ensemble firing in CA1 and CA3. The CA1 spatio-temporal patterns used for recovering memory loss were “strong” patterns derived from previous DNMS trials (see text), and were delivered bilaterally to the same CA1 locations when the sample response occurred to the sample stimulus. Bottom: Mean % correct (±SEM) performance (n = 5 animals) summed over all conditions, including vehicle infusion (control) without stimulation, MK801 infusion (MK801) without stimulation, and MK801 infusion with the CA1 stimulation (substitute) patterns (MK801 + Stim).

While continuing the infusion of MK801, we electrically stimulated through the same electrodes used for recording, with a temporal pattern (for pulse width and amplitude, see [35]) that mimicked the “strong code” highly correlated with successful nonmatch performance during control conditions. In other words, while the NMDA channel blocker prevented the hippocampus from endogenously generating the spatio-temporal code necessary for long-term memory of the sample conditioned stimulus, we supplied that code exogenously in an attempt to create a long-term memory representation sufficient to support DNMS behavior. This could be done only as well as the implanted electrode array allowed; we certainly could not replicate either the spatial or the temporal structure of the long-term memory code at a biological scale. The spatio-temporal code used was specific to each sample stimulus and to each animal. The results were unambiguous: the exogenously supplied spatio-temporal code for sample stimuli increased percent correct responding almost to control levels (Fig. 7, red line). This effect was not simply a result of electrical stimulation per se, i.e., of nonspecifically increasing the excitability of hippocampal CA1 neurons: stimulation with 1) fixed frequencies chosen to approximate the total number of pulses delivered during MIMO-predicted spatio-temporal patterned stimulation, or 2) random inter-pulse intervals, again approximating that total number of pulses, did not change behavioral responding (data not shown).
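The logic of the two control conditions can be illustrated with a hypothetical helper that, given the pulse times of a model-predicted pattern, builds a constant-frequency train and a random-interval train with the same number of pulses in the same window. Evenly spaced pulses and uniformly random pulse placement are our assumptions; the actual control protocols and stimulation parameters are described in [35].

```python
import numpy as np

def matched_controls(pattern_times, window, rng):
    """Build two control pulse trains matched in pulse count to a pattern.

    pattern_times : pulse times (s) of a model-predicted stimulation pattern
    window        : duration (s) of the stimulation epoch
    rng           : numpy random Generator
    Returns (fixed, random): constant-frequency and random-interval trains.
    """
    n = len(pattern_times)
    fixed = (np.arange(n) + 0.5) * window / n            # constant rate n / window
    random = np.sort(rng.uniform(0.0, window, size=n))   # random inter-pulse intervals
    return fixed, random
```

Both controls deliver the same number of pulses as the patterned stimulation, so any behavioral difference can be attributed to the spatio-temporal structure of the pattern rather than to the amount of stimulation.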
As shown in a companion paper [35], electrical stimulation using the same strategy in control animals substantially strengthens endogenously generated long-term memory: percent correct responding rises above control levels, reaching 100% or nearly 100% at delay intervals much longer than those at which control animals performed above chance. Overall, this test of the hippocampal MIMO model as a neural prosthesis was consistent with our broader proposal of a biomimetic memory system, based on spatio-temporal codes, that substitutes for damaged hippocampus.

D. Hardware Implementation

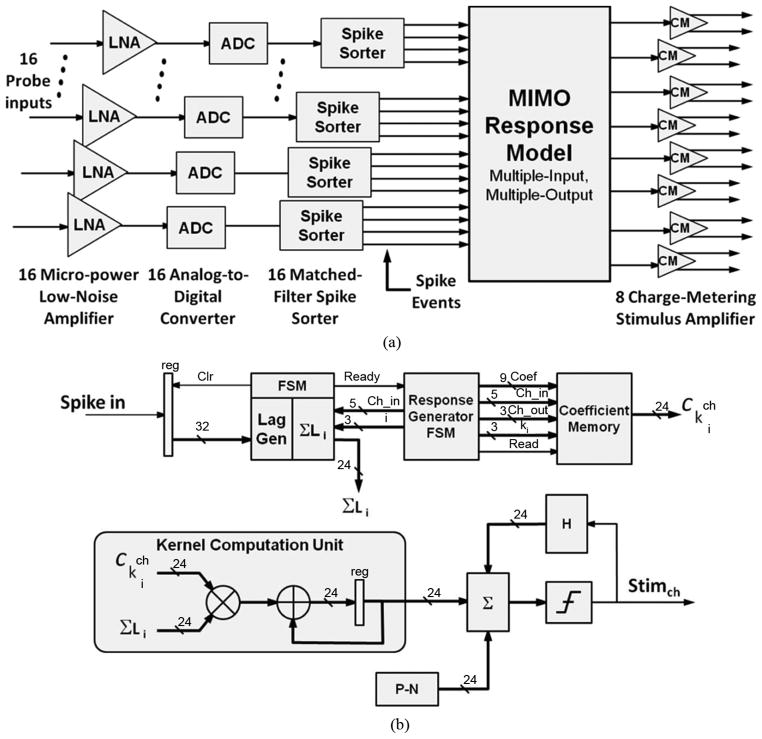

Given that the hippocampal MIMO model, even at this early stage of development, can effectively substitute for the hippocampus in encoding the limited number of memories that underlie DNMS and DMS performance [35], we have begun examining hardware implementations of the model and of the supporting functions that allow it to interact with the brain. These include: 1) the ability to sense the signaling patterns of individual neurons; 2) the ability to distinguish the signals from each of multiple neurons and to track and process these signals in time; 3) the ability to process spatio-temporal patterns using MIMO models implemented in hardware; and 4) the ability to return model predictions to the healthy part of the tissue in the form of electrical stimulation.

We present here a mixed-mode system-on-a-chip (SoC) ASIC designed as a forerunner of a neural prosthesis for repairing damage to the hippocampus. This device, the first in a series, was developed to detect, amplify, and analyze input spiking activity, compute an appropriate response in biologically realistic time, and return stimulus signals to the subject. Several key features push the envelope for implantable biomimetic processors capable of bi-directional communication with the brain: 1) device functions are parameterized, so responses can be tailored to individual subjects and adjusted during operation; 2) the initial processing path includes spike sorting; 3) the on-board model provides up to third-order nonlinear input/output transformations for up to 16 channels of data, with programmable model parameters; 4) the design incorporates micropower, low-noise amplifiers; 5) pulse output to the tissue passes through a charge-metering circuit that requires no blocking capacitors; and 6) the device reports data from multiple points in the processing chain for diagnosis and adjustment.

1) Device Organization

Major Functional Units

Fig. 8(a) shows the processing path and the major elements of the prosthetic device. The analog front-end, consisting of 16 micropower low-noise amplifiers (MPLNA, labeled LNA in Fig. 1) and 16 analog-to-digital converters (ADC), and a 16-channel spike sorter are implemented in parallel: 16 input electrodes implanted in the hippocampus deliver neural signals for amplification and digitization. The digitized signals are then classified by 16 matched-filter spike sorters into spike-event channels, in which each event is represented by a single bit. Outputs (responses to the spike events) are computed by a single MIMO model processor, which delivers up to 32 channels of output to a charge-metering stimulus amplifier (CM). At present, probe technology limits the device to eight differential outputs. Further details on the performance of the analog building blocks, and comparisons with other state-of-the-art designs, can be found in [29]–[31].
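Because each sorted event is a single bit, one sample period of spike activity across all 16 channels fits in a 16-bit word. The packing below is a hypothetical illustration of that representation; the bit order and the function names are assumptions, not the chip's actual data format.

```python
N_CHANNELS = 16

def pack_events(event_bits):
    """Pack one time step of 16 single-bit spike events into a 16-bit word.
    Hypothetical bit order: channel 0 -> bit 0, ..., channel 15 -> bit 15."""
    if len(event_bits) != N_CHANNELS:
        raise ValueError(f"expected {N_CHANNELS} channels, got {len(event_bits)}")
    word = 0
    for ch, bit in enumerate(event_bits):
        if bit:
            word |= 1 << ch
    return word

def unpack_events(word):
    """Recover the per-channel event bits from a packed word."""
    return [(word >> ch) & 1 for ch in range(N_CHANNELS)]

# Spikes sorted onto channels 0, 3, and 15 during this sample period.
events = [0] * N_CHANNELS
for ch in (0, 3, 15):
    events[ch] = 1
word = pack_events(events)
print(f"{word:016b}")
```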

Fig. 8.

VLSI design of the hippocampal prosthesis. (A) Functional block diagram of the hippocampal prosthesis, showing the parallel front-end signal processing for each probe. This architecture simplifies processing, and the separate channels that produce spike events make the design more robust and reliable. (B) Block diagram of the MIMO model computational logic, showing the finite-state machine that controls the Laguerre generator (Lag Gen) and the response generator, and the interface to the coefficient memory. The simplified kernel computation and the final stimulation output are shown in the lower half of the figure.

Analog Front-End

Low-Noise Amplifier

Neural signals are processed by micropower, low-noise amplifiers [29]. These are five-stage circuits that deliver 30 dB of gain and provide multiple auto-zeroing points to suppress flicker (1/f) noise. The auto-zero stages also provide band-pass filtering that limits the output bandwidth to 400–4000 Hz. As with all analog building blocks on this SoC, and unlike many conventional designs, this low-noise amplifier requires no external components.
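As a quick worked conversion, the stated 30 dB gain corresponds to a voltage ratio of 10^(30/20), roughly 31.6. The 100 μV spike amplitude used below is a hypothetical illustrative value, not a measurement from this work.

```python
def db_to_voltage_gain(db):
    """Convert a voltage gain in decibels to a ratio: G = 10 ** (dB / 20)."""
    return 10 ** (db / 20)

gain = db_to_voltage_gain(30.0)   # the amplifier's stated 30 dB
spike_uv = 100.0                  # hypothetical extracellular spike amplitude (uV)
out_mv = spike_uv * gain / 1000.0
print(f"gain = {gain:.2f} V/V, spike at LNA output = {out_mv:.2f} mV")
```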

Analog-to-Digital Converter

The ADC [29] is a hybrid design combining a charge-redistribution successive-approximation register (SAR) converter with a dual-slope integrator. The dual-slope stage is used to limit the geometric growth of the SAR capacitor array. ADC resolution is user-selectable at 6, 8, 10, or 12 bits, allowing a trade-off between resolution and speed; the design can operate at up to 220 kSa/s. We chose to operate the ADC at 20 kSa/s with 12 bits of resolution. As reported in [30], this ADC is comparable to most other ADCs in the literature by the traditional figure of merit (FOM), but its footprint is two orders of magnitude smaller.
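The SAR half of the hybrid performs a binary search, one comparison per bit, which is why resolution trades against conversion speed. In a conventional binary-weighted charge-redistribution DAC the capacitor array grows as roughly 2^N unit capacitors for N bits; that is the geometric growth the dual-slope stage is described as limiting. The sketch below is an idealized SAR only: it omits the dual-slope stage, noise, and capacitor mismatch, and the function name is illustrative.

```python
def sar_convert(v_in, v_ref, n_bits):
    """Idealized SAR conversion: a binary search from MSB to LSB.

    Each cycle tentatively sets the next bit and keeps it only if the DAC
    level for the trial code does not exceed the input. The result equals
    floor(v_in / v_ref * 2**n_bits). No dual-slope stage, noise, or mismatch.
    """
    if not 0.0 <= v_in < v_ref:
        raise ValueError("input outside converter range [0, v_ref)")
    code = 0
    for bit in reversed(range(n_bits)):
        trial = code | (1 << bit)
        if trial * v_ref / (1 << n_bits) <= v_in:
            code = trial
    return code

fs = 20_000  # sample rate chosen in the text (Sa/s)
print(sar_convert(0.3, 1.0, 12), 1e6 / fs, "us per sample")
```

At the chosen 20 kSa/s the sample period is 50 μs and the Nyquist frequency is 10 kHz, comfortably above the 4 kHz upper band edge set by the amplifiers.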

Spike Detection and Sorting

Current high-density probes have electrodes that are relatively large (25–200 μm) compared with neuron cell bodies (10–25 μm in diameter). In addition, the probes sit in a volume of densely packed neurons. As a result, each electrode may record signals generated by as many as four neurons, whose action potentials are conducted through the extracellular electrolyte. These signals must therefore be classified, or “sorted,” to identify which neuron produced each recorded spike. We designed the spike sorter to differentiate signals generated by up to four neurons.

Input Band-Pass Filters

Before the input signals are processed to detect and classify spiking events, two digital IIR filters again set the sorter input data bandwidth to 400–4000 Hz.
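The text specifies only that two digital IIR filters set a 400–4000 Hz passband. The sketch below assumes, for illustration, one first-order high-pass and one first-order low-pass section (RC discretization) at the 20 kSa/s sample rate; the filter order and topology actually used on the chip are not stated here.

```python
import math

def lowpass(x, fc, fs):
    """First-order IIR low-pass (RC discretization): y[n] = y[n-1] + a*(x[n] - y[n-1])."""
    rc = 1.0 / (2 * math.pi * fc)
    dt = 1.0 / fs
    a = dt / (rc + dt)
    out, y = [], 0.0
    for v in x:
        y += a * (v - y)
        out.append(y)
    return out

def highpass(x, fc, fs):
    """First-order IIR high-pass (RC discretization): y[n] = b*(y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2 * math.pi * fc)
    dt = 1.0 / fs
    b = rc / (rc + dt)
    out, y, x_prev = [], 0.0, 0.0
    for v in x:
        y = b * (y + v - x_prev)
        x_prev = v
        out.append(y)
    return out

def bandpass(x, f_lo=400.0, f_hi=4000.0, fs=20_000.0):
    """Cascade of one high-pass and one low-pass section (400-4000 Hz at 20 kSa/s)."""
    return lowpass(highpass(x, f_lo, fs), f_hi, fs)

def steady_peak(signal):
    """Peak magnitude over the second half, after start-up transients have died out."""
    return max(abs(v) for v in signal[len(signal) // 2:])

fs = 20_000.0
t = [n / fs for n in range(8000)]
in_band = bandpass([math.sin(2 * math.pi * 1000 * s) for s in t])  # 1 kHz: passed
hum = bandpass([math.sin(2 * math.pi * 50 * s) for s in t])        # 50 Hz: rejected
print(f"1 kHz peak: {steady_peak(in_band):.2f}, 50 Hz peak: {steady_peak(hum):.2f}")
```

First-order sections roll off slowly, so a real design would likely use steeper filters; the point here is only the cascade structure that bounds the sorter's input band.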

Matched-Filter Sorter

Because the matched filters must be synchronized with the digitized data, the input data are first compared with a user-defined threshold to start the finite-state machine (FSM) that controls the sorting process. The threshold also prevents false event detections on background noise. Supra-threshold signals are applied to matched filters for spike-event detection and sorting. The matched filters convolve the input with a set of time-reversed canonical waveform components determined in advance by principal component analysis (PCA) of a large database of spike profiles. The convolution projects the input signals onto the PCA components, and the results are represented as vectors in 3-D space. The signs of the inner products between each projection vector and the user-programmed decision-plane normal vectors form a 3-bit address into a spike-type memory, whose outputs are the spike-channel assignments. This design reduces the hardware needed for an otherwise complex processing step.
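The sorting path above can be sketched in a few lines of NumPy. This is a minimal software analogue under stated assumptions: the window is already aligned to the threshold crossing, there are three PCA components (from the 3-D projection) and three decision-plane normals (one per address bit), and the toy components and lookup-table labels are hypothetical.

```python
import numpy as np

def sort_spike(window, components, normals, spike_table, threshold):
    """Classify one threshold-triggered spike window.

    window:      1-D array of W samples aligned to the threshold crossing
    components:  (3, W) array, the canonical PCA waveform components
    normals:     (3, 3) array, one decision-plane normal per row
    spike_table: 8-entry lookup (the "spike-type memory") indexed by a 3-bit address
    threshold:   user-defined detection level
    """
    if np.max(np.abs(window)) < threshold:
        return None  # no crossing: the sorting FSM is never started
    # Correlating with each component equals convolving with its time-reversed
    # copy at the aligned lag; this projects the window into 3-D PCA space.
    projection = components @ window
    # One bit per decision plane: which side of the plane the projection lies on.
    bits = (normals @ projection) >= 0.0
    address = int(bits[0]) | (int(bits[1]) << 1) | (int(bits[2]) << 2)
    return spike_table[address]

# Toy example with W = 4 samples and trivially simple (hypothetical) components.
components = np.array([[1.0, 0.0, 0.0, 0.0],
                       [0.0, 1.0, 0.0, 0.0],
                       [0.0, 0.0, 1.0, 0.0]])
normals = np.eye(3)
spike_table = ["noise", "unit A", "unit B", "unit C",
               "unit D", "noise", "noise", "noise"]
label = sort_spike(np.array([2.0, -1.0, -1.0, 0.0]), components, normals,
                   spike_table, threshold=1.0)
print(label)
```

The eight-entry table leaves room for up to four units plus "noise" codes, matching the stated goal of separating up to four neurons per electrode.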

MIMO Model Computation

The MIMO model is based on a series expansion of first-, second-, and third-order Volterra kernels using Laguerre basis functions. Laguerre functions were chosen for two reasons: 1) they have damped, oscillatory impulse responses, giving a more compact basis than exponentials; and 2) they can be computed digitally by a simple recursion:

$$
\begin{aligned}
b_0(\tau) &= \sqrt{\alpha}\, b_0(\tau-1), \qquad b_0(0) = \sqrt{1-\alpha},\\
b_j(\tau) &= \sqrt{\alpha}\, b_j(\tau-1) + \sqrt{\alpha}\, b_{j-1}(\tau) - b_{j-1}(\tau-1), \qquad j \ge 1,
\end{aligned}
$$

where j is the order of the Laguerre basis function, τ is the time step, α (0 < α < 1) is the Laguerre parameter, and all b_j(−1) = 0.
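The following is a minimal Python sketch of the standard discrete-time Laguerre recursion, assuming it is the recursion intended here (0 < α < 1, values before τ = 0 taken as zero). The second function shows how a spike train drives the filter bank, which is the role the Laguerre filters play in the hardware. The function names are illustrative.

```python
import math

def laguerre_basis(alpha, n_basis, n_lags):
    """Discrete Laguerre functions b_j(tau) for j < n_basis, tau < n_lags.

    b_0(tau) = sqrt(alpha) * b_0(tau - 1),  b_0(0) = sqrt(1 - alpha)
    b_j(tau) = sqrt(alpha) * b_j(tau - 1) + sqrt(alpha) * b_{j-1}(tau) - b_{j-1}(tau - 1)
    with all values at tau = -1 taken as zero.
    """
    sa = math.sqrt(alpha)
    b = [[0.0] * n_lags for _ in range(n_basis)]
    for tau in range(n_lags):
        b[0][tau] = math.sqrt(1.0 - alpha) if tau == 0 else sa * b[0][tau - 1]
        for j in range(1, n_basis):
            same_prev = b[j][tau - 1] if tau > 0 else 0.0
            lower_prev = b[j - 1][tau - 1] if tau > 0 else 0.0
            b[j][tau] = sa * same_prev + sa * b[j - 1][tau] - lower_prev
    return b

def laguerre_outputs(spikes, basis):
    """Filter a 0/1 spike train with each basis function:
    v_j(t) = sum over tau of b_j(tau) * x(t - tau)."""
    n_lags = len(basis[0])
    return [[sum(b_j[tau] * spikes[t - tau] for tau in range(min(n_lags, t + 1)))
             for t in range(len(spikes))]
            for b_j in basis]

basis = laguerre_basis(alpha=0.5, n_basis=3, n_lags=200)
energies = [sum(x * x for x in b_j) for b_j in basis]
print([round(e, 6) for e in energies])
```

Because the functions are orthonormal, each printed energy should be close to 1. A larger α makes the basis decay more slowly, so fewer functions can span a longer memory window.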

The Laguerre expansion of the Volterra kernels is done as in (7). In essence, the neural information that the MIMO model processes is contained in the intervals between pairs and triplets of spike events occurring on the same channel, and in the intervals between pairs of spike events occurring on different channels. It is worth noting that the neural architecture (the active connections between neurons) is represented by nonzero terms in the expansion; pairs of channels with no interaction or dependency have trivial coefficients, which greatly reduces the memory required to compute the model. At present, the MIMO model is required to deliver results every 2 ms to enable direct comparison with the software models used in the animal experiments, which sets the operating frequency at approximately 8 MHz. The hardware is capable of higher speeds; for example, it can produce results as often as every sample period (50 μs). The MIMO processor uses a 24-bit fixed-point ALU.
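To make the term structure concrete, here is a sketch of the deterministic kernel sum for one output, with only nonzero coefficients stored so that non-interacting channel pairs cost nothing. This is an illustration under assumptions, not equation (7) itself. It includes first-order terms, second-order self- and cross-terms, and third-order self-terms, as described above. The dictionary layout, names, and coefficient values are hypothetical. The stochastic spike-generation stage mentioned in the Discussion is omitted.

```python
def mimo_output(v, c0, first, self2, cross2, self3):
    """Deterministic kernel sum for one output at one time step.

    v[n][j]  : current output of Laguerre filter j on input channel n
    first    : {(n, j): c}                first-order terms
    self2    : {(n, j1, j2): c}           second-order, same channel
    cross2   : {(n1, n2, j1, j2): c}      second-order, channel pair n1 < n2
    self3    : {(n, j1, j2, j3): c}       third-order, same channel
    Only nonzero coefficients are stored, so non-interacting channel pairs
    cost neither memory nor multiply-accumulate operations.
    """
    u = c0
    for (n, j), c in first.items():
        u += c * v[n][j]
    for (n, j1, j2), c in self2.items():
        u += c * v[n][j1] * v[n][j2]
    for (n1, n2, j1, j2), c in cross2.items():
        u += c * v[n1][j1] * v[n2][j2]
    for (n, j1, j2, j3), c in self3.items():
        u += c * v[n][j1] * v[n][j2] * v[n][j3]
    return u

# Two inputs, two Laguerre functions each; all coefficient values are made up.
v = [[1.0, 0.5],
     [2.0, 0.0]]
u = mimo_output(
    v,
    c0=0.1,
    first={(0, 0): 1.0, (1, 0): 0.5},
    self2={(0, 0, 1): 2.0},
    cross2={(0, 1, 0, 0): -0.25},
    self3={(1, 0, 0, 0): 0.1},
)
print(round(u, 6))
```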

Stimulation Outputs

Conventional stimulation circuits use a dc blocking capacitor to prevent residual charge from being left in tissue. In future prostheses with hundreds of output channels, the size and weight of these components will become a problem. To avoid external components, a novel stimulation amplifier [31] that eliminates discrete coupling capacitors has been designed for the MIMO model outputs. This “charge-metering” approach uses a dual-slope integrator to measure the charge delivered during the anodic phase of the stimulation waveform and ensures that an equal charge is recovered during the cathodic phase.
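In a dual-slope scheme, the integrator ramps up while the anodic current flows and then ramps down at a known rate. The ramp-down time is proportional to the stored charge. The sketch below is an idealized discrete-time model of that idea, not the circuit of [31]. It assumes a fixed cathodic current, detects the zero crossing at sample resolution, and uses hypothetical current values. Integer units (μA × μs = pC) keep the bookkeeping exact.

```python
def charge_metered_pulse(anodic_ua, dt_us, cathodic_ua):
    """Idealized discrete-time model of charge metering for one biphasic pulse.

    anodic_ua:   anodic-phase current samples in microamps (may vary with the load)
    dt_us:       sample interval in microseconds
    cathodic_ua: magnitude of the fixed recovery current in microamps
    Units: uA * us = pC, so integers keep the bookkeeping exact.
    Returns (anodic charge in pC, cathodic duration in samples, residual pC).
    """
    integrator = 0
    for i in anodic_ua:          # ramp up: integrate the delivered charge
        integrator += i * dt_us
    delivered = integrator
    steps = 0
    while integrator > 0:        # ramp down at a known rate until the zero crossing
        integrator -= cathodic_ua * dt_us
        steps += 1
    return delivered, steps, integrator

# Hypothetical anodic current that sags as the electrode-tissue interface charges.
delivered, steps, residual = charge_metered_pulse([100, 90, 80, 70], 1, 50)
print(delivered, steps, residual)
```

Because the cathodic phase is timed from the measured anodic charge rather than from a preset duration, the balance holds even when the anodic current varies with the load. The only residual is less than one sample's worth of recovery charge.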

2) Physical Layout

Analog Circuit Design

The physical layouts of the input amplifiers, ADC, and output stimulation amplifiers are described in [29]–[31].

Digital Circuit Design

Fig. 8(b) shows a block diagram of the MIMO engine, which makes up the bulk of the device logic. Spike events provide impulse excitations to three orders of Laguerre filters maintained for each input. Volterra-kernel processing consists primarily of coefficient look-up and multiply-accumulate operations on the Laguerre values, which are updated every millisecond. All digital logic on the chip was described in a mix of Verilog and VHDL, enabling full-chip, at-speed evaluation on an FPGA platform before committing to VLSI. A 180-nm CMOS process easily supports the computation, which, owing to parallelism in the processing hardware, requires a clock of only 8 MHz. No special effort has yet been made to reduce power consumption through clock gating or other circuit techniques.
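The coefficient look-up and multiply-accumulate step can be illustrated in fixed point. The sketch below assumes a hypothetical Q12 number format and a saturating signed 24-bit accumulator. The text states only that the ALU is 24-bit fixed point, so the Q format and the saturation behavior are assumptions. The last lines work out the clock budget implied by the stated 8 MHz clock.

```python
ACC_BITS = 24
ACC_MAX = (1 << (ACC_BITS - 1)) - 1     # largest signed 24-bit value
ACC_MIN = -(1 << (ACC_BITS - 1))        # smallest signed 24-bit value
FRAC_BITS = 12                          # hypothetical Q-format (12 fractional bits)

def to_fixed(x):
    """Quantize a real number to the hypothetical Q12 format."""
    return int(round(x * (1 << FRAC_BITS)))

def saturate(x):
    """Clamp to the signed 24-bit accumulator range instead of wrapping."""
    return max(ACC_MIN, min(ACC_MAX, x))

def mac(coeffs, values):
    """Sum of coefficient * Laguerre-value products in fixed point."""
    acc = 0
    for c, v in zip(coeffs, values):
        product = (c * v) >> FRAC_BITS  # Q12 * Q12 = Q24; shift back to Q12 (floor)
        acc = saturate(acc + product)
    return acc

# Clock budget at the stated 8 MHz.
CLOCK_HZ = 8_000_000
cycles_per_update = CLOCK_HZ * 2 // 1000          # one result every 2 ms
cycles_per_sample = CLOCK_HZ * 50 // 1_000_000    # one result every 50 us sample
print(cycles_per_update, cycles_per_sample)
```

At 8 MHz, a 2 ms update interval leaves 16,000 clock cycles per result, and the 50 μs sample period leaves 400. The per-sample case is feasible only because the processing hardware is parallel.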

Layout

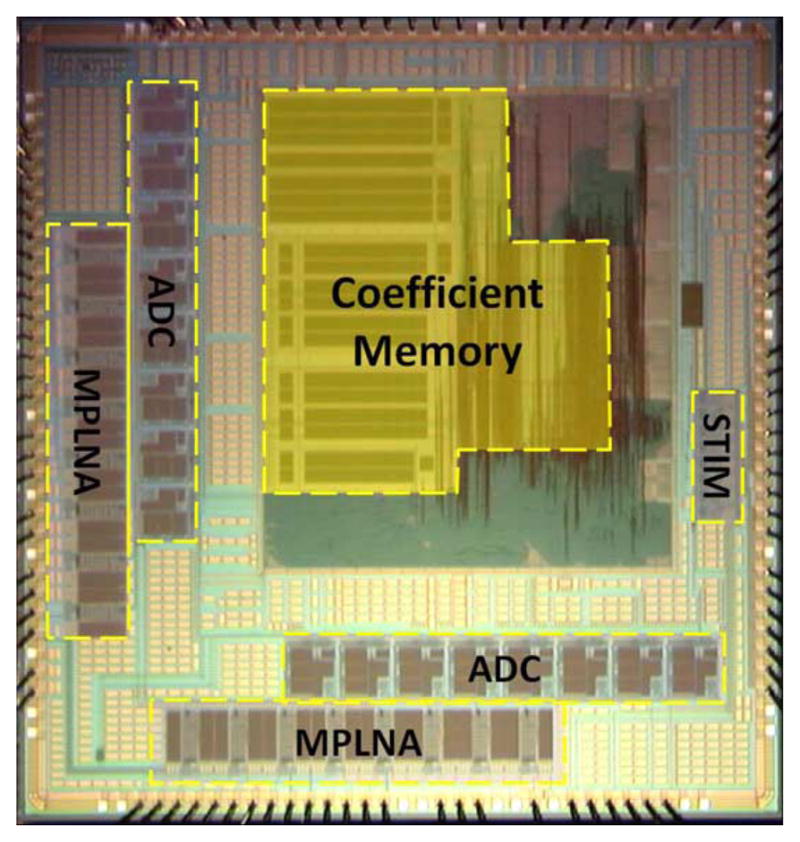

We use SWEPT (size, weight, efficiency, power, throughput) as a primary design driver for this type of embedded system. A cost-performance study revealed that extremely dense CMOS technologies (65 nm and smaller) are size- and power-efficient but not area-cost-efficient for mixed-signal designs. We therefore elected to implement the MIMO device in 180-nm bulk (logic process) CMOS, which supports dense logic for computation and control without undue cost for the large transistors and passive components needed by analog circuits. Fig. 9 shows the layout of the MIMO device. Because the minimum die size is limited by the I/O pad frame, considerable area is devoted to metal “area-fill” (the unlabeled portions of the layout) required by the manufacturer’s process rules. These regions will be used in subsequent devices for wireless telemetry and for additional input and output channels. The largest component of the digital area is the memory that stores the Volterra kernel coefficient arrays, which is plainly visible in Fig. 9. Recent work by the modeling members of our team has substantially reduced the storage required for kernel coefficients (see Sections III-A and III-B). Future devices will require only 6% of the area presently allocated for memory, allowing more channels to be computed per input sample period.
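Why coefficient memory dominates, and why it matters as channel counts grow, can be seen by counting terms. The sketch counts the unpruned coefficients for one output, using the term types described for the model: a constant, first-order terms, second-order self- and cross-terms, and third-order self-terms. The number of Laguerre functions per input (3) is a hypothetical choice. The count does not attempt to reproduce the 6% figure, which depends on the pruning developed in Sections III-A and III-B.

```python
from math import comb

def coefficients_per_output(n_inputs, n_basis):
    """Count the coefficients of one output's Laguerre-expanded kernels, unpruned.

    Terms follow the text: a constant; first order per input; second-order
    self-terms (pairs on one channel, j1 <= j2); second-order cross-terms
    (each channel pair, all j1, j2); third-order self-terms (j1 <= j2 <= j3).
    """
    first = n_inputs * n_basis
    self2 = n_inputs * comb(n_basis + 1, 2)
    cross2 = comb(n_inputs, 2) * n_basis ** 2
    self3 = n_inputs * comb(n_basis + 2, 3)
    return 1 + first + self2 + cross2 + self3

L = 3  # hypothetical number of Laguerre functions per input
for n in (16, 100):
    per_output = coefficients_per_output(n, L)
    print(n, per_output, n * per_output)  # per output, and for n outputs
```

The cross-terms grow with the square of the number of inputs and already dominate at 16 channels. This is why pruning coefficients for non-interacting channel pairs saves so much memory, and why scaling to hundreds of channels raises computational concerns.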

Fig. 9.

Die photo of the Hippocampal Prosthesis VLSI fabricated in National Semiconductor Corporation’s 180-nm CMOS process with labels on the major functional elements MPLNA (Micro-Power Low-Noise Amplifier) and ADC, the Coefficient Memory and the STIM (Charge Metering Stimulation Outputs). The unlabeled areas surrounding these functional elements are “metal fill” to ensure uniformity of processing and are available if needed for additional circuitry.

IV. Discussion

One of the first conclusions of the present series of studies is that these data support our initial claim that information is represented in the hippocampus, and transmitted between hippocampal neurons, using spatio-temporal codes. Although we have yet to investigate specific codes for individual objects or events, it is clear that the two levers that the rats had to associate with either “Sample” or “Non-Sample” in the first phase of each trial were associated with different spatio-temporal codes. It was also notable that the same lever, when it had to be associated with “Sample” and with “Non-Match” on the same trial, was associated with different spatio-temporal codes: the very same stimulus was coded differently because of its memory-related context. One of our next steps will be to train animals on an array of different stimuli and then examine the spatio-temporal codes for each. We also will train animals on more complex stimuli and then present “degraded” versions of the conditioned stimuli during test trials to see which features, if any, of the hippocampal representations are altered. This will give us a better understanding of how stimulus features map onto spatio-temporal codes, although we do not expect the relationship to be simple.

We consider a strong interpretation in favor of temporal coding, rather than rate coding, to be consistent with what else is known about the biophysical and neurotransmitter properties, i.e., the signal processing characteristics, of the nervous system. The complexity of the timed step and holding protocols that are the essence of studying conductances under voltage clamp points to the importance of precise sequences of temporal intervals for activating specific conductances in neurons. This sensitivity not just to individual temporal intervals but to particular sequences of intervals is also apparent in the variety of receptor kinetics across neurotransmitter systems, and in the resulting complexity of receptor states and state-transition paths. Temporal coding, and the spatio-temporal coding that emerges from population representations, is a fundamental property of the nervous system.

Nonlinearities are likewise fundamental to synaptic and neuronal function and, in fact, act to emphasize temporal coding by facilitating or suppressing neuronal responsiveness to particular combinations of inter-spike interval input patterns. It is at least in part for this reason that nonlinear modeling is an essential strategy for characterizing neural function, particularly in the context of cognitive prostheses for central brain regions. For the reasons outlined above for temporal patterns, virtually all input–output relations in neurobiology are nonlinear; in spike-train input systems, many input–output relations are also of high order, i.e., their differential expression depends on multiple spikes. In this sense, nonlinear modeling can be seen as an essential step in identifying temporal codes. Linear modeling methods may suffice for differentiating among small numbers of memory patterns, but nonlinear characterizations will be critical for differentiating among larger memory sets.

While the MIMO modeling methodology we have developed and improved upon [16]–[18] remains to be tested in a wider variety of conditions, to date it appears strong in its ability to capture key features of the spatio-temporal dynamics of two functionally connected populations of neurons. (Although strong cross-correlations were required for neuron pairs to be included in the MIMO analysis, we cannot yet claim that all of those neuron pairs are synaptically connected; resolving this complex issue must await a more complete and rigorous procedure for discriminating between “functional” and “synaptic” connectivity.) Applying the present method to the described data sets, however, is a rigorous test of the current MIMO methodology for the following reasons. The selection procedures used by Deadwyler and Hampson restrict the cell samples almost exclusively to pyramidal neurons. Hippocampal pyramidal cells are known to fire at very low rates in behaving animals (averages of less than 5 spikes/s are common), so relatively few CA3 and CA1 firings are available to train the model. In addition, the model is stochastic, introducing variability into every trial during both training and prediction. Nevertheless, the majority of the models constructed predicted output spatio-temporal patterns highly accurately, even on a single-trial basis.
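The criterion for a "strong" cross-correlation is not specified in this section. As a minimal illustration, the sketch below computes a basic cross-correlogram between a presynaptic (CA3) and a postsynaptic (CA1) spike train. A peak at short positive lags, with CA1 following CA3, would indicate functional coupling, which, as noted above, is not proof of a synaptic connection. The spike times, bin width, and lag window are hypothetical.

```python
def cross_correlogram(ref_ms, target_ms, bin_ms, max_lag_ms):
    """Histogram of target-spike times relative to each reference spike.

    Spike times are in milliseconds. Bin i covers lags
    [-max_lag_ms + i * bin_ms, -max_lag_ms + (i + 1) * bin_ms).
    """
    n_bins = int(round(2 * max_lag_ms / bin_ms))
    counts = [0] * n_bins
    for r in ref_ms:
        for t in target_ms:
            lag = t - r
            if -max_lag_ms <= lag < max_lag_ms:
                idx = min(int((lag + max_lag_ms) // bin_ms), n_bins - 1)
                counts[idx] += 1
    return counts

# Hypothetical CA3 (reference) and CA1 (target) spike times in ms.
ca3 = [10, 50, 200]
ca1 = [12, 52, 80, 203]
counts = cross_correlogram(ca3, ca1, bin_ms=1, max_lag_ms=5)
print(counts)
```

With firing rates below 5 spikes/s, such correlograms accumulate counts slowly. That sparsity is the same data limitation noted above for model training.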

The tests of the model as a prosthesis demonstrated that the models were extracting features of behavioral significance, and that model predictions could be made not only accurately and on a single-trial basis, but also in real time, quickly enough to change behavior. Importantly, the behavioral consequences of the models were also consistent with the established role of the hippocampus in forming new long-term memories: the models not only predicted hippocampal (CA1) output accurately, but that output apparently was used by the rest of the brain to secure a usable long-term, or working, memory. This latter point is a hypothesis that must be tested by examining the electrophysiological activity of CA1 target structures, e.g., the subiculum, before and after implantation of a prosthesis in an animal with a damaged hippocampus. We are now preparing for this very difficult experiment.

The larger issue is the extent to which a prosthesis designed to replace damaged neural tissue can “normalize” activity by electrically stimulating residual tissue in the target neural system. In the experiments reported here, we electrically stimulated hippocampal CA1 neurons at a small number of sites within the total structure, and the behavioral outcome was an increase in percent correct responding to the “sample” stimulus (when the total number of sample stimuli was two). There may be several explanations for why prosthetic activation of the tissue with patterned stimuli reversed the consequences of tissue damage and, in effect, “corrected” the tissue dysfunction, apparently at a systems level, allowing for “normal” behavior. For the DNMS studies reported here, many experimental and modeling studies remain to be completed before one could conclude that electrical stimulation with model-predicted spatio-temporal patterns alters subicular and/or other hippocampal target-system function to “normalize” memory-dependent behavior. Nonetheless, the following should be considered.

We have shown previously using a rat hippocampal slice preparation that the nonlinear dynamics of the intrinsic trisynaptic pathway of the hippocampus (dentate granule cells ⇒ CA3 pyramidal cells ⇒ CA1 pyramidal cells) could be studied and modeled using random impulse train stimulation of excitatory perforant path input, so as to progressively excite dentate ⇒ CA3 ⇒ CA1. After modeling input–output properties of the CA3 region and implementing that model in both FPGA and VLSI, we surgically eliminated the CA3 component of the circuit. With specially designed and fabricated microelectrodes and microstimulators, we “re-connected” dentate granule cell output to the hardware model of CA3, and CA3 pyramidal cell output to the appropriate CA1 input dendritic region. Thus, we effectively replaced the biological CA3 with a “biomimetic” hardware model of its nonlinear dynamics: random impulse train electrical stimulation of perforant path now elicited dentate ⇒ FPGA or VLSI model ⇒ CA1. Not only did the model accurately predict the output of CA3 when driven by input from real hippocampal dentate granule cells, but substituting the CA3 model also normalized the output from CA1. In general, it was not possible to distinguish the output of a biologically intact trisynaptic pathway from a hybrid trisynaptic pathway having a “CA3 prosthesis” [9], [10], [32]. Although the differences between a slice preparation and an intact hippocampus clearly do not justify generalization of the in vitro result just described to an explanation for how model-predicted spatio-temporal patterned stimulation in vivo leads to improved DNMS performance, the slice findings just as clearly provide strong evidence for considering the likelihood of a common mechanism.

Despite these achievements, the current system requires major advances before it can approach a working prosthesis (in rats). One clear limitation is the relatively small number of features extracted from each memory, which depends on the number of recording electrodes. Space does not allow a full treatment of this issue here, but given that the number of CA1 pyramidal cells (approximately 450 000 in one hemisphere of the rat) far exceeds the number of recording sites (16 per hemisphere in the present studies), the latter must be increased substantially if the device is to store a behaviorally useful number of memories. Increasing the number of recording sites can be accommodated relatively easily, but when that number reaches the hundreds, computational demands may impose limitations of their own. Addressing this will require novel improvements in modeling methodology, an area of concern for us even at present. Analogous issues exist for the electrical stimulation used to induce memory states. We have very little knowledge of the space-time distribution of neurons activated by electrical stimulation, an issue that certainly will influence the number of memories a prosthetic system can differentiate. It is the complex relation among memory features, their neuronal representation, and our experimental ability to mimic the spatio-temporal dynamics of that representation that will be the key to maximizing the capacity of cognitive prostheses. Other substantive issues, such as coding both objects/events and their contexts, remain to be addressed.

We remain virtually the only group exploring cognitive prostheses [33], [34], and, as already made clear, our proposed implementation of a prosthesis for central brain tissue many synapses removed from both primary sensory and primary motor systems demands bi-directional communication between prosthesis and brain using biologically based neural coding. We believe that the general lack of understanding of neural coding will impede attempts to develop neural prostheses for cognitive functions of the brain, and that, as prostheses for sensory and motor systems begin to consider the consequences for, or the contributions of, more centrally located brain regions, understanding the fundamental principles of neural coding will become increasingly important. A deeper knowledge of neural coding will provide insights into the informational role of cellular and molecular mechanisms, into representational structures in the brain, and into badly needed bridges between neural and cognitive functions. From this perspective, neural coding represents one of the next “great frontiers” of neuroscience and neural engineering.

Acknowledgments

This work was supported in part by Defense Advanced Research Projects Agency (DARPA) contracts N66601-09-C-2080 to S. A. Deadwyler and N66601-09-C-2081 to T. W. Berger (Prog. Dir: COL G. Ling), and by grants NSF EEC-0310723 to USC (T. W. Berger), NIH/NIBIB Grant P41-EB001978 to the Biomedical Simulations Resource at USC (to V. Z. Marmarelis), and NIH R01DA07625 (to S. A. Deadwyler).

Biographies

Theodore W. Berger (SM’05–F’10) received the Ph.D. degree from Harvard University, Cambridge, MA, in 1976.

He holds the David Packard Chair of Engineering in the Viterbi School of Engineering at the University of Southern California, Los Angeles. He is a Professor of Biomedical Engineering and Neuroscience, and Director of the Center for Neural Engineering. He has published over 200 journal articles and book chapters, and over 100 refereed conference papers.

Dr. Berger has received, among other awards, an NIMH Senior Scientist Award, was elected a Fellow of the American Institute for Medical and Biological Engineering, and was a National Academy of Sciences International Scientist Lecturer.

Dong Song (S’02–M’04) received the B.S. degree in biophysics from the University of Science and Technology of China, Hefei, China, in 1994, and the Ph.D. degree in biomedical engineering from the University of Southern California, Los Angeles, in 2003.

He is a Research Assistant Professor in the Department of Biomedical Engineering, University of Southern California, Los Angeles. His research interests include nonlinear systems analysis, cortical neural prosthesis, electrophysiology of the hippocampus, and development of novel modeling techniques incorporating both parametric and nonparametric modeling methods.

Dr. Song is a member of the American Statistical Association, the Biomedical Engineering Society, and the Society for Neuroscience.

Rosa H. M. Chan (S’08) received the B.Eng. (1st Hon.) degree in automation and computer-aided engineering from the Chinese University of Hong Kong, in 2003. She was later awarded the Croucher Scholarship and Sir Edward Youde Memorial Fellowship for Overseas Studies in 2004. She received M.S. degrees in biomedical engineering, electrical engineering, and aerospace engineering, and the Ph.D. degree in biomedical engineering, in 2011, all from the University of Southern California, Los Angeles.

She is currently an Assistant Professor in the Department of Electronic Engineering at City University of Hong Kong. Her research interests include computational neuroscience and development of neural prosthesis.

Vasilis Z. Marmarelis (M’78–F’95) was born in Mytiline, Greece, on November 16, 1949. He received the Diploma in electrical engineering and mechanical engineering from the National Technical University of Athens, Athens, Greece, in 1972, and the M.S. and Ph.D. degrees in engineering science (information science and bioinformation systems) from the California Institute of Technology, Pasadena, in 1973 and 1976, respectively.

He has published more than 100 papers and book chapters in the areas of system modeling and signal analysis.

He is a Fellow of the American Institute for Medical and Biological Engineering.

Jeff LaCoss (M’89) received the B.S. degree in computer science and engineering from the University of California, Los Angeles, in 1987.

He is at present a Project Leader at the University of Southern California, Information Sciences Institute. His research has focused on digital VLSI systems architecture and design, as well as on the design of high-performance scalable simulators and FPGA-based emulators for configurable heterogeneous processors. His projects have ranged from very-high-performance, scalable embedded sensor processing for real-time applications to very-low-power, high-performance processors in mixed-signal single-chip systems for neural prosthetics.

Mr. LaCoss is a member of the Society for Neuroscience.

Jack Wills received the B.A. degree in mathematics, the M.S. degree in electrical engineering, and the Ph.D. degree in electrical engineering, all from the University of California, Los Angeles, in 1972, 1985, and 1990, respectively. His thesis research applied spectral estimation techniques to time domain electromagnetic simulations.

He is a Senior Researcher in Division 7 at the University of Southern California, Information Sciences Institute, where he has worked since January 1997. His research involves wireless communication and high-speed analog and mixed-signal CMOS circuit design. He is currently working on mixed-signal VLSI design for implantable electronics as part of the National Science Foundation Biomimetic MicroElectronic Systems Engineering Research Center.

Robert E. Hampson (M’09) received the Ph.D. degree in physiology from Wake Forest University, Winston-Salem, NC, in 1988.

He is an Associate Professor at the Department of Physiology and Pharmacology, Wake Forest University School of Medicine, Winston-Salem, NC. His main interests are in learning and memory: in particular deciphering the neural code utilized by the hippocampus and other related structures to encode behavioral events and cognitive decisions. He has published extensively in the areas of cannabinoid effects on behavior and electrophysiology, and the correlation of behavior with multineuron activity patterns, particularly applying linear discriminant analysis to neural data to decipher population encoding and representation.

Sam A. Deadwyler (M’09) is a Professor and former Vice Chair (1989–2005) in the Department of Physiology and Pharmacology, Wake Forest University School of Medicine, Winston-Salem, NC, where he has been since 1978. He has published extensively on neural mechanisms of learning and memory. His current research interests include mechanisms of information encoding in the hippocampus and frontal cortex, including DARPA-funded work to design and implement neural prostheses in the primate brain.

Dr. Deadwyler has been funded continuously by the National Institutes of Health (NIH) since 1974, and received an NIH Senior Research Scientist Award (1987–2008) and a MERIT Award (1990–2000).

John J. Granacki (SM’08) received the B.A. degree from Rutgers University, New Brunswick, NJ, the M.S. degree in physics from Drexel University, Philadelphia, PA, and the Ph.D. degree in electrical engineering from the University of Southern California, Los Angeles, in 1986.

He is the Director of Advanced Systems at the University of Southern California, Information Sciences Institute, and a Research Associate Professor in the Departments of Electrical Engineering and Biomedical Engineering. He also leads the Mixed-Signal Systems on a Chip thrust in the NSF Biomimetic MicroElectronic Systems Engineering Research Center. His research interests include high-performance embedded computing systems, many-core system-on-chip architectures, and biologically inspired computing.

Footnotes

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Contributor Information

Theodore W. Berger, Email: berger@bmsr.usc.edu, Department of Biomedical Engineering, Center for Neural Engineering, Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Dong Song, Email: dsong@usc.edu, Department of Biomedical Engineering, Center for Neural Engineering, Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Rosa H. M. Chan, Email: homchan@usc.edu, Department of Biomedical Engineering, Center for Neural Engineering, Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Vasilis Z. Marmarelis, Email: vzm@bmsrs.usc.edu, Department of Biomedical Engineering and Department of Electrical Engineering, Center for Neural Engineering, Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Jeff LaCoss, Email: jlacoss@isi.edu, Information Sciences Institute (ISI), Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Jack Wills, Email: jackw@isi.edu, Information Sciences Institute (ISI), Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Robert E. Hampson, Email: rhampson@wfubmc.edu, Department of Physiology and Pharmacology, Wake Forest School of Medicine, Winston-Salem, NC 27157 USA.

Sam A. Deadwyler, Email: sdeadwyl@wfubmc.edu, Department of Physiology and Pharmacology, Wake Forest School of Medicine, Winston-Salem, NC 27157 USA.

John J. Granacki, Email: granacki@usc.edu, Departments of Electrical Engineering and Biomedical Engineering and the Information Sciences Institute (ISI), Viterbi School of Engineering, University of Southern California, Los Angeles, CA 90089 USA.

References

1. Berger TW, Orr WB. Hippocampectomy selectively disrupts discrimination reversal conditioning of the rabbit nictitating membrane response. Behav Brain Res. 1983 Apr;8:49–68. doi: 10.1016/0166-4328(83)90171-7.

2. Eichenbaum H, Fagan A, Mathews P, Cohen NJ. Hippocampal system dysfunction and odor discrimination learning in rats: Impairment or facilitation depending on representational demands. Behav Neurosci. 1988 Jun;102:331–9. doi: 10.1037//0735-7044.102.3.331.

3. Milner B. Memory and the medial temporal regions of the brain. In: Pribram KH, Broadbent DE, editors. Biology of Memory. New York: Academic; 1970. pp. 29–50.

4. Squire LR, Zola-Morgan S. The medial temporal lobe memory system. Science. 1991 Sep;253:1380–6. doi: 10.1126/science.1896849.

5. Carey ME. Analysis of wounds incurred by U.S. Army Seventh Corps personnel treated in corps hospitals during Operation Desert Storm. J Trauma. 1996 Mar;40:S165–9. doi: 10.1097/00005373-199603001-00036.

6. Okie S. Traumatic brain injury in the war zone. N Engl J Med. 2005 May;352:2043–7. doi: 10.1056/NEJMp058102.

7. Cope DN. The effectiveness of traumatic brain injury rehabilitation: A review. Brain Inj. 1995 Oct;9:649–70. doi: 10.3109/02699059509008224.

8. Berger TW, Song D, Chan RHM, Marmarelis VZ. The neurobiological basis of cognition: Identification by multi-input, multioutput nonlinear dynamic modeling. Proc IEEE. 2010 Mar;98(3):356–374. doi: 10.1109/JPROC.2009.2038804.

9. Berger TW, Ahuja A, Courellis SH, Deadwyler SA, Erinjippurath G, Gerhardt GA, Gholmieh G, Granacki JJ, Hampson R, Hsiao MC, LaCoss J, Marmarelis VZ, Nasiatka P, Srinivasan V, Song D, Tanguay AR, Wills J. Restoring lost cognitive function. IEEE Eng Med Biol Mag. 2005 Sep-Oct;24(5):30–44. doi: 10.1109/memb.2005.1511498.

10. Berger TW, Ahuja A, Courellis SH, Erinjippurath G, Gholmieh G, Granacki JJ, Hsiao MC, LaCoss J, Marmarelis VZ, Nasiatka P, Srinivasan V, Song D, Tanguay AR, Wills J. Brain-implantable biomimetic electronics as neural prostheses to restore lost cognitive function. In: Akay M, editor. Neuro-Nanotechnology: Artificial Implants and Neural Prostheses. New York: Wiley/IEEE Press; 2007. pp. 309–336.

11. Berger TW, Baudry M, Brinton RD, Liaw JS, Marmarelis VZ, Park AY, Sheu BJ, Tanguay AR. Brain-implantable biomimetic electronics as the next era in neural prosthetics. Proc IEEE. 2001 Jul;89(7):993–1012.

12. Berger TW, Glanzman DL. Toward Replacement Parts for the Brain: Implantable Biomimetic Electronics as the Next Era in Neural Prosthetics. Cambridge, MA: MIT Press; 2005.

13. Yeckel MF, Berger TW. Feedforward excitation of the hippocampus by afferents from the entorhinal cortex: Redefinition of the role of the trisynaptic pathway. Proc Natl Acad Sci USA. 1990 Aug;87:5832–6. doi: 10.1073/pnas.87.15.5832.

14. Yeckel MF, Berger TW. Monosynaptic excitation of hippocampal CA1 pyramidal cells by afferents from the entorhinal cortex. Hippocampus. 1995;5:108–14. doi: 10.1002/hipo.450050204.

15. Song D, Chan RHM, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Physiologically plausible stochastic nonlinear kernel models of spike train to spike train transformation. Proc 28th Annu Int Conf IEEE EMBS. 2006:6129–6132. doi: 10.1109/IEMBS.2006.259253.

16. Song D, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Nonlinear modeling of neural population dynamics for hippocampal prostheses. Neural Netw. 2009 Nov;22:1340–51. doi: 10.1016/j.neunet.2009.05.004.

17. Song D, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Nonlinear dynamic modeling of spike train transformations for hippocampal-cortical prostheses. IEEE Trans Biomed Eng. 2007 Jun;54(6):1053–66. doi: 10.1109/TBME.2007.891948.

18. Song D, Berger TW. Identification of nonlinear dynamics in neural population activity. In: Oweiss KG, editor. Statistical Signal Processing for Neuroscience and Neurotechnology. Boston, MA: McGraw-Hill/Irwin; 2009.

19. Song D, Wang Z, Marmarelis VZ, Berger TW. Parametric and non-parametric modeling of short-term synaptic plasticity. Part II: Experimental study. J Comput Neurosci. 2009 Feb;26:21–37. doi: 10.1007/s10827-008-0098-2.

20. Song D, Marmarelis VZ, Berger TW. Parametric and non-parametric modeling of short-term synaptic plasticity. Part I: Computational study. J Comput Neurosci. 2009 Feb;26:1–19. doi: 10.1007/s10827-008-0097-3.

21. Marmarelis VZ. Identification of nonlinear biological systems using Laguerre expansions of kernels. Ann Biomed Eng. 1993 Nov-Dec;21:573–89. doi: 10.1007/BF02368639.

22. Marmarelis VZ, Berger TW. General methodology for nonlinear modeling of neural systems with Poisson point-process inputs. Math Biosci. 2005;196:1–13. doi: 10.1016/j.mbs.2005.04.002.

23. McCullagh P, Nelder JA. Generalized Linear Models. 2nd ed. Boca Raton, FL: Chapman & Hall/CRC; 1989.

24. Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. J Neurophysiol. 2005 Feb;93:1074–89. doi: 10.1152/jn.00697.2004.

25. Song D, Chan RH, Marmarelis VZ, Hampson RE, Deadwyler SA, Berger TW. Sparse generalized Laguerre-Volterra model of neural population dynamics. Proc IEEE Eng Med Biol Soc Conf. 2009;2009:4555–8. doi: 10.1109/IEMBS.2009.5332719.

26. Brown EN, Barbieri R, Ventura V, Kass RE, Frank LM. The time-rescaling theorem and its application to neural spike train data analysis. Neural Comput. 2002;14:325–46. doi: 10.1162/08997660252741149.

27. Deadwyler SA, Goonawardena AV, Hampson RE. Short-term memory is modulated by the spontaneous release of endocannabinoids: Evidence from hippocampal population codes. Behav Pharmacol. 2007 Sep;18:571–80. doi: 10.1097/FBP.0b013e3282ee2adb.

28. Berger TW, Hampson RE, Song D, Goonawardena AV, Marmarelis VZ, Deadwyler SA. A cortical neural prosthesis for restoring and enhancing memory. J Neural Eng. 2011;8(4):046017. doi: 10.1088/1741-2560/8/4/046017.

29. Chan C-H, Wills J, LaCoss J, Granacki JJ, Choma J. A micropower low-noise auto-zeroing CMOS amplifier for cortical neural prostheses. Proc IEEE Biomed Circuits Syst Conf. 2006:214–217.

30. Fang X, Srinivasan V, Wills J, Granacki JJ, LaCoss J, Choma J. CMOS 12 bits 50 kS/s micropower SAR and dual-slope hybrid ADC. Proc. 52nd IEEE Int. Midwest Symp. Circuits Syst; 2009. pp. 180–183.

31. Fang X, Srinivasan V, Wills J, Granacki JJ, LaCoss J, Choma J. CMOS charge-metering microstimulator for implantable prosthetic device. Proc. 51st IEEE Int. Midwest Symp. Circuits Syst; 2008. pp. 826–829.

32. Hsiao MC, Song D, Berger TW. Control theory-based regulation of hippocampal CA1 nonlinear dynamics. Proc. 30th Annu. Int. IEEE EMBS Conf.; 2008. pp. 5535–5538.

33. Berger TW, Chapin JK, Gerhardt GA, McFarland DJ, Principe JC, Soussou WV, Taylor DM, Tresco PA. Brain-Computer Interfaces: An International Assessment of Research and Development. New York: Springer; 2008.

34. Keiper A. The age of neuroelectronics. New Atlantis. 2006.

35. Hampson RE, Song D, Chan RHM, Sweatt AJ, Riley MR, Gerhardt GA, Shin D, Marmarelis VZ, Berger TW, Deadwyler SA. A nonlinear model for hippocampal cognitive prosthesis: Memory facilitation by hippocampal ensemble stimulation. IEEE Trans Neural Syst Rehabil Eng. 2012 Mar;20(2). doi: 10.1109/TNSRE.2012.2189163.