Abstract

The space of visual signals is high-dimensional and natural visual images have a highly complex statistical structure. While many studies suggest that only a limited number of image statistics are used for perceptual judgments, a full understanding of visual function requires analysis not only of the impact of individual image statistics, but also, how they interact. In natural images, these statistical elements (luminance distributions, correlations of low and high order, edges, occlusions, etc.) are intermixed, and their effects are difficult to disentangle. Thus, there is a need for construction of stimuli in which one or more statistical elements are introduced in a controlled fashion, so that their individual and joint contributions can be analyzed. With this as motivation, we present algorithms to construct synthetic images in which local image statistics—including luminance distributions, pair-wise correlations, and higher-order correlations—are explicitly specified and all other statistics are determined implicitly by maximum-entropy. We then apply this approach to measure the sensitivity of the human visual system to local image statistics and to sample their interactions.

1. INTRODUCTION

Basic visual judgments, such as detection, discrimination, and segmentation, are fundamentally statistical in nature. Because the space of signals that the visual system encounters is very high-dimensional, there is a very wide variety of image statistics that, a priori, could be used to drive these judgments. On the other hand, there is much evidence that only a very limited number of image statistics are, in fact, actually used. For example, in texture discrimination and segmentation, only certain features of the luminance histogram appear perceptually relevant [1] and, with a limited number of exceptions, only pair-wise spatial correlations are used [2–4]. However, these conclusions are based on stimuli in which a single, mathematically-convenient, image statistic is introduced or manipulated. This is in contrast to natural visual stimuli, whose statistical structure is complex [5,6]: the well-known “1/f” correlation structure [7] coexists with highly non-Gaussian luminance statistics [8], as well as many other kinds of statistical features [9–12].

Thus, to understand visual responses to natural images, it is necessary to analyze not only how image statistics are processed individually, but also how they interact. To pursue such an analysis, it is desirable to have stimulus sets in which multiple image statistics—including luminance statistics and spatial correlations—can be introduced in a controlled and independent fashion. The goal of this paper is to present a procedure for doing this and to illustrate its use in delineating the perceptual saliency of these statistics, alone and in combination.

A related motivation for this work arises out of the analysis of receptive fields at the physiologic level. As a consequence of the high-dimensional nature of visual signals, one cannot characterize input-output relationships exhaustively—so it is necessary to sample the space of inputs in some fashion. Generally, the sampling strategies fall into two categories. One category has a primarily mathematical motivation, and relies on stimuli such as sinusoids and white noise because they are convenient for determining the parameters of simple model classes (such as linear transformations or Taylor expansions and their generalizations). The other category is primarily biologically-motivated and focuses on the inputs under which the visual system functions and evolves, i.e., “natural scenes.”

Either approach alone appears to be insufficient. Mathematical models built from stimuli such as white noise provide only a fair account of responses to natural scenes [13]. The obstacle is that mathematically-convenient stimuli rarely sample the kinds of stimulus features that are common in natural scenes, so substantial errors in model structure may be overlooked. The use of naturalistic stimuli avoids that problem, but makes it difficult to achieve a mechanistic understanding. This is because natural scenes have many different kinds of statistical structure (as mentioned above: luminance histograms, correlations of low and high order, edges, occlusions, etc.). Since these elements are entangled in natural scenes, the role(s) that they play in visual processing can be difficult to sort out. As is the case for understanding visual function at a psychophysical level, there is a need for principled stimulus sets in which multiple statistical elements can be independently controlled.

To meet this need, three related issues need to be addressed. First, because the number of image statistics is so large, one must select a subset to focus on. Second, selecting this subset of statistics and specifying their values stops short of specifying the entire stimulus, because of all the other image statistics whose values are unspecified. Thus, it is also necessary to have a principled procedure for choosing the values of the statistics that are not specified explicitly. Finally, one needs a procedure to create images that exemplify these statistics.

In choosing the statistical elements to focus on, two considerations are immediately relevant: which image statistics are informative about natural images and which ones are salient to the visual system. The notion of “efficient coding” [14] suggests that these considerations are likely to be aligned. A recent analysis of natural images [10] supports this view, not only for luminance statistics and pair-wise correlations, but also for multipoint correlations. The latter analysis used binarized natural images; this made it possible to enumerate the distribution of four-point configurations that are present in natural images. A main conclusion of the analysis [10] was that only some kinds of four-point correlations were informative. Moreover, the dichotomy between informative and uninformative configurations matched the dichotomy between four-point correlations that are visually salient and those that are not [4].

Therefore, we focus on the correlations that this previous study [10] identified as both informative and visually salient: the statistics of 2 × 2 arrays of binarized pixels. As we show below, the joint probability distribution that describes the ways that these arrays can be colored is specified by 10 parameters, i.e., 10 local image statistics.

Having selected a subset of image statistics and specified their values, the next step is to address the second issue: choosing the values of all of the image statistics outside of the selected subset. Here our strategy is guided by the goal of analyzing the effect of the specified statistics on visual processing. In view of this goal, it makes sense to choose the other statistics in a manner that adds no further structure. We do this implicitly—by maximizing the entropy of the image ensemble, subject to the constraints of the subset of specified image statistics. Maximizing the entropy of the image ensemble solves the problem of choosing values for all other statistics, since the maximum-entropy distribution is unique.

Because the maximum-entropy criterion represents a principled way to create distributions that are as assumption-free (i.e., as random) as possible given specific constraints [15,16], maximum-entropy methods have been applied in numerous domains, including analysis of neural data [17–19] and image analysis [20]. Maximum-entropy distributions are simple and easy to construct when the constraints are few and simple: an exponential distribution maximizes the entropy of a nonnegative quantity when the mean is constrained, a Gaussian distribution maximizes the entropy when the variance is constrained, and a Markov process maximizes the entropy when sequential correlations are constrained.

When the constraints are more complex, as they are for local image statistics, maximum-entropy distributions are less familiar and explicit construction of them is not necessarily straightforward. The basic problem is that iterative constructions, which are guaranteed to work for one-dimensional processes such as Markov chains, may fail for two-dimensional processes, because the constructions along each dimension may conflict with each other. Below, we use an important result of Pickard [21] to determine when these conflicts occur. In the absence of such conflicts, iterative constructions enable creation of examples of images with the desired statistics (i.e., sampling the maximum-entropy ensemble). In the presence of these conflicts, we develop a set of alternative image-synthesis algorithms that allow us to achieve our goal. The result is a set of procedures for construction of images that have independently specified values of the 10 local image statistics. Finally, we use these stimulus sets in psychophysical studies to demonstrate the selective sensitivity of the visual system to the individual statistics and their interactions.

Although we focus on construction of maximum-entropy binary images given a specified set of statistics for 2 × 2 arrays, most of the strategies we develop are not restricted to this particular case. Therefore, to facilitate extensions of this approach, we describe not only the algorithms themselves, but also their interrelationships and the conditions that allow them to succeed.

2. IMAGE CONSTRUCTION

There are two components of this paper: first, algorithms for the construction of visual stimuli that are specified by a set of image statistics and second, psychophysical studies based on selected examples of these constructions. As mentioned in the Introduction, we focus on the image statistics that describe 2 × 2 blocks of pixels in a binary image. In this section, we show that this is a 10-dimensional space and how to navigate in it. That is, we construct stimuli along the coordinate axes, stimuli on or near the coordinate planes, and in other directions, corresponding to arbitrary natural stimuli. Below (Section 4), we use these stimuli in psychophysical experiments: we measure the sensitivity along the axes of the space and in selected coordinate planes, to provide a glimpse of the ways in which the coordinates interact. The results of the psychophysical experiments are also important to support some of the strategic choices made during stimulus construction.

The basic problem that we wish to solve is the following: given a set of local image statistics, construct images that are as random as possible (i.e., maximum entropy) given these constraints. As described below, the local image statistics we consider are those that refer to the contents of a 2 × 2 array of pixels or of a subset of this array.

We begin this section by setting up a notation and defining the key terms. We then use this notation to refine the statement of the problem and to provide a formal characterization of the solution. The formal characterization, though, does not provide a construction, and a constructive solution is necessary to achieve our goal. We then develop constructive solutions, proceeding incrementally from the simplest case (independent pixels) to correlations along one dimension, to correlations along two dimensions that are specified by a single parameter, to correlations along two dimensions that are specified by pairs of parameters, to more complex correlation structures, including those that arise in natural images.

A. Preliminaries: Ensembles, Images, and Block Probabilities

To make the notion of randomness rigorous, we need to consider image ensembles, rather than individual images. An image ensemble is simply a collection of images, with a probability assigned to each. Within the ensemble, individual images are represented as an array of values aij, where aij is the luminance of the pixel in row i and column j. Arrays are of finite size (so that averages can be simply calculated), but we are only concerned with the limiting behavior as the size of the array grows without bound.

We will only consider binary images: each aij is either 0 or 1, with 0 arbitrarily assigned to represent black and 1 to represent white. We note, though, that many of the constructions below readily generalize to images with multiple gray levels (see, for example, Appendix A).

A “block probability” is the probability that a set of pixels in particular configuration have a given set of values. As a simple example, p(0) is the probability that a pixel value aij is black; is the probability that a 1 × 2 window contains a black pixel on the left and is the probability that an L-shaped region contains three white pixels.

To ensure that the formalisms of image ensembles are relevant to laboratory experiments and real world visual behavior, it is essential that the statistics of local patches of images typify the statistics of the ensemble. To meet this criterion, we require that the ensemble of images has two properties: stationarity and ergodicity. Stationarity formalizes the notion that the statistics of the images are the same in all locations and ergodicity formalizes the notion that the statistics of an individual image typify those of the ensemble. Each of these properties can be expressed in terms of different ways of calculating the block probabilities. For example, one can choose a specific location and sample that location across the ensemble. Or, one can choose a specific image within the ensemble and sample that image at all locations. Stationarity asserts that the first calculation does not depend on the location sampled. Ergodicity asserts that the second calculation yields the same result as the first. Together, these properties ensure that the statistics of local patches typify the statistics of the ensemble.
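As an illustration of the second way of calculating block probabilities (sampling one image at all locations), the following minimal sketch slides a window over a single image and tabulates the relative frequency of each block coloring. The function name `block_probabilities` and the list-of-lists image representation are assumptions made for this example only. For an ergodic ensemble, these single-image frequencies would approach the ensemble block probabilities as the image grows.

```python
from collections import Counter

def block_probabilities(image, height, width):
    """Estimate block probabilities by sliding a height x width window over one image.

    `image` is a list of rows of 0 (black) and 1 (white).  The result maps each
    observed block (a tuple of row tuples) to its relative frequency.
    """
    rows, cols = len(image), len(image[0])
    counts = Counter()
    for i in range(rows - height + 1):
        for j in range(cols - width + 1):
            block = tuple(
                tuple(image[i + di][j + dj] for dj in range(width))
                for di in range(height)
            )
            counts[block] += 1
    total = sum(counts.values())
    return {block: count / total for block, count in counts.items()}

# A small checkerboard: every 1 x 2 window holds one black and one white pixel.
checker = [[(i + j) % 2 for j in range(6)] for i in range(6)]
p_pixels = block_probabilities(checker, 1, 1)
p_pairs = block_probabilities(checker, 1, 2)
```

In this toy image, black and white pixels are equally frequent, and the two mixed 1 × 2 blocks are the only ones that occur.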

B. A Coordinate System to Simplify the Dependencies among Block Probabilities

While at first it might appear that the individual block probabilities are the most natural way to describe local statistics, they have a significant drawback: the property of stationarity (along with the general rules of probability) implies certain interrelationships among them. So our next step is to introduce a coordinate system for the block probabilities that simplifies the task of specifying sets of block probabilities that conform to these constraints.

To see how these constraints arise, assume that we have specified the block probabilities for all colorings of a 2 × 2 array, as . These implicitly specify the probabilities of smaller blocks—for example,

| (1) |

and

| (2) |

Similarly, can be written in terms of the 2 × 2 block probabilities:

| (3) |

Stationarity requires that . Thus, for A = C and B = D, the right-hand sides of Eqs. (2) and (3) must be equal. This in turn means that for each of the four ways of assigning binary values to A and B, there is a linear relationship among eight of the 2 × 2 block probabilities:

| (4) |

Similar relationships among subsets of block probabilities follow from stationarity requirements for the probabilities of 2 × 1 blocks, namely,

| (5) |

and individual pixels, namely,

| (6) |

An additional complication is that these relationships are not independent of each other.

As we next show, we can replace the block probabilities with a coordinate system in which these interdependencies are eliminated. The coordinate system is a transformation of the block probabilities:

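$$\varphi\begin{pmatrix} s_1 & s_2 \\ s_3 & s_4 \end{pmatrix} = \sum_{A_1, A_2, A_3, A_4} p\begin{pmatrix} A_1 & A_2 \\ A_3 & A_4 \end{pmatrix} (-1)^{s_1 A_1 + s_2 A_2 + s_3 A_3 + s_4 A_4} \tag{7}$$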

where s1, s2, s3, and s4 are 0 or 1. Note that the original block probabilities can readily be obtained from the transformed quantities ϕ:

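$$p\begin{pmatrix} A_1 & A_2 \\ A_3 & A_4 \end{pmatrix} = \frac{1}{16} \sum_{s_1, s_2, s_3, s_4} \varphi\begin{pmatrix} s_1 & s_2 \\ s_3 & s_4 \end{pmatrix} (-1)^{s_1 A_1 + s_2 A_2 + s_3 A_3 + s_4 A_4} \tag{8}$$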

This is because the new quantities ϕ are, in essence, the Fourier transforms of the block probabilities, along the four intensity axes A1 through A4. (For additional details on this construction, its further properties, and how it generalizes to images with multiple gray levels, see Appendix A.)
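The transformation and its inverse can be sketched in a few lines. The sketch below assumes that the transform is the sign-weighted sum ϕ(s) = Σ p(A)(−1)^(s1A1 + s2A2 + s3A3 + s4A4) over the 16 colorings, which is the two-level Fourier transform described in the text; the function names and the ordering of each tuple as (upper left, upper right, lower left, lower right) are assumptions made for this example.

```python
from itertools import product

BLOCKS = list(product((0, 1), repeat=4))  # all 16 colorings (or index sets) of a 2 x 2 block

def sign(s, a):
    """(-1) raised to s1*A1 + s2*A2 + s3*A3 + s4*A4."""
    return (-1) ** sum(si * ai for si, ai in zip(s, a))

def to_phi(p):
    """Forward transform: block probabilities -> transformed quantities phi."""
    return {s: sum(p[a] * sign(s, a) for a in BLOCKS) for s in BLOCKS}

def to_block_probs(phi):
    """Inverse transform: phi -> block probabilities (same kernel, divided by 16)."""
    return {a: sum(phi[s] * sign(s, a) for s in BLOCKS) / 16 for a in BLOCKS}

# Example ensemble: every 2 x 2 block has an even number of white pixels,
# and the eight even-parity colorings are equally likely.
p = {a: (1 / 8 if sum(a) % 2 == 0 else 0.0) for a in BLOCKS}
phi = to_phi(p)
recovered = to_block_probs(phi)
```

For this example, only the quantity with all four indices equal to 1 (and the normalization term with all indices 0) is nonzero, and the inverse transform recovers the original block probabilities.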

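The reason that the transformed quantities ϕ simplify the relationships among the block probabilities is the following: setting one of the indices si to 0 in Eq. (7) removes Ai from the exponent and hence produces transformed quantities that sum over the corresponding location Ai. For example, a ϕ whose lower-row indices s3 and s4 are both 0 depends only on the probabilities of the upper 1 × 2 block, and a ϕ whose upper-row indices s1 and s2 are both 0 depends only on the probabilities of the lower 1 × 2 block. So the stationarity constraints [Eq. (4)] due to 1 × 2 block probabilities, namely that the upper and lower 1 × 2 blocks have the same statistics, are compactly expressed in the transformed coordinates as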

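$$\varphi\begin{pmatrix} s_1 & s_2 \\ 0 & 0 \end{pmatrix} = \varphi\begin{pmatrix} 0 & 0 \\ s_1 & s_2 \end{pmatrix} \tag{9}$$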

This is a much more compact form than Eq. (4), which entails eight of the original block probabilities.

In like manner, the stationarity constraints related to the 2 × 1 block probabilities [Eq. (5)] are equivalent to

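$$\varphi\begin{pmatrix} s_1 & 0 \\ s_3 & 0 \end{pmatrix} = \varphi\begin{pmatrix} 0 & s_1 \\ 0 & s_3 \end{pmatrix} \tag{10}$$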

and the stationarity constraints related to individual pixels [Eq. (6)] become

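$$\varphi\begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix} = \varphi\begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix} = \varphi\begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix} = \varphi\begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix} \tag{11}$$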

The 16 2 × 2 block probabilities must of course also sum to 1 and this is equivalent to

$$\varphi\begin{pmatrix} 0 & 0 \\ 0 & 0 \end{pmatrix} = 1 \tag{12}$$

The constraints (9), (10), (11), and (12) are still not independent, but their relationship to each other is much simpler than that of Eq. (4) and its analogs. Specifically, when expressed in terms of the transformed quantities ϕ, the constraints are nested: setting one of the arguments in Eq. (9) or (10) to zero leads to Eq. (11), and setting both to zero leads to Eq. (12). This motivates our final step in setting up the coordinate system: ranking the quantities ϕ according to the number of arguments that are nonzero. The result is 10 independent quantities, as follows:

| (13) |

| (14) |

| (15) |

and

| (16) |

(We have introduced minus signs in Eqs. (14) and (16) for consistency with previous work [22,23].)

Note that the expressions for γ, β, and θ involve ϕ’s with zero arguments. (Here and below, an unsubscripted β or θ denotes any one of the β’s or θ’s.) Thus, when expressed in terms of block probabilities, γ, β, and θ can be calculated from smaller blocks [as can be seen formally from Eq. (7)]. For example,

| (17) |

| (18) |

and

$$\gamma = p(1) - p(0) \tag{19}$$

In sum, the linear transformation (7) replaces the 16 interdependent block probabilities by 10 independent quantities, {γ, β−, β|, β\, β/, θ⌟, θ⌞, θ⌜, θ⌝, α}, which we denote collectively by ϕi (i ∈ {1, …, 10}). (The reverse transformation, from the ϕi to the block probabilities according to Eq. (8), is provided in Table 1.) Each of these ranges from −1 to 1 and has a simple interpretation. γ captures the overall luminance bias of the image: γ = 1 means that all pixels are white and γ = −1 means that all pixels are black. The β’s capture the pair-wise statistics: β = 1 means that every pixel matches its nearest neighbor (in the direction indicated by the subscript) and β = −1 means that every pixel mismatches it. The θ’s capture the statistics of triplets of pixels arranged in an L: θ = 1 means that all such L-shapes contain either three white pixels or one white pixel and two black ones; θ = −1 means that all such L-shapes contain either no white pixels or two white pixels and one black one. α captures the statistics of quadruplets of pixels in a 2 × 2 block: α = 1 means that an even number of them are white and α = −1 means that an odd number are white. For an image in which all pixels are independently assigned to black and white, with equal probability, all of these coordinates are 0.

Table 1.

Conversion between Block Probabilities and Coordinatesa

| 1 × 1 blocks | |

| 1 × 2 blocks | |

| 2 × 2 blocks | |

|

|

Each kind of coordinate thus corresponds to a “glider”—for γ, a single pixel; for β, a pair of pixels that share an edge or corner; for θ, a triplet of pixels in an L, and for α, four pixels in a 2 × 2 block. The value of the coordinate compares the fraction of positions in which the glider contains an even number of white pixels to the fraction of positions in which the glider contains an odd number of white pixels. Specifically, for an image R, the value of a coordinate ϕi can be expressed as

$$\varphi_i(R) = \frac{n_+(R, i) - n_-(R, i)}{n(R, i)} \tag{20}$$

where n+(R, i) denotes the number of placements of the glider for ϕi that contain an even number of white pixels, n−(R, i) denotes the number of placements that contain an odd number of white pixels, and n(R, i) = n+(R, i) + n−(R, i) denotes the total number of placements. We call n+(R, i) and n−(R, i) the “parity counts.” In sum, the gamut of each coordinate ϕi is from +1 to −1: a value of +1 indicates that all glider placements contain an even number of white pixels (n+(R, i) = n(R, i)) and a value of −1 indicates that all placements contain an odd number of white pixels (n−(R, i) = n(R, i)).
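The parity-count description translates directly into a short routine. The following is a minimal sketch, assuming that an image is a list of rows of 0s and 1s and that a glider is given as a list of (row, column) offsets with nonnegative entries; the name `parity_coordinate` and the glider constants are chosen for this example only. It returns the even-minus-odd fraction, so for the coordinates that were defined with a minus sign (γ and the θ’s) the sign of the result would need to be flipped.

```python
def parity_coordinate(image, glider):
    """Return (n_plus - n_minus) / n for one glider on one binary image.

    n_plus counts glider placements with an even number of white (1) pixels,
    n_minus counts placements with an odd number, and n is their total.
    """
    rows, cols = len(image), len(image[0])
    height = max(r for r, _ in glider) + 1
    width = max(c for _, c in glider) + 1
    n_plus = n_minus = 0
    for i in range(rows - height + 1):
        for j in range(cols - width + 1):
            whites = sum(image[i + r][j + c] for r, c in glider)
            if whites % 2 == 0:
                n_plus += 1
            else:
                n_minus += 1
    return (n_plus - n_minus) / (n_plus + n_minus)

PIXEL = [(0, 0)]
HORIZONTAL_PAIR = [(0, 0), (0, 1)]
BLOCK_2X2 = [(0, 0), (0, 1), (1, 0), (1, 1)]

checker = [[(i + j) % 2 for j in range(4)] for i in range(4)]
phi_pixel = parity_coordinate(checker, PIXEL)            # equal numbers of black and white
phi_pair = parity_coordinate(checker, HORIZONTAL_PAIR)   # every pair holds one white pixel
phi_block = parity_coordinate(checker, BLOCK_2X2)        # every block holds two white pixels
```

For the checkerboard, the single-pixel value is 0, the horizontal-pair value is −1 (all neighbors mismatch), and the 2 × 2 value is +1 (all blocks have even parity).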

We mention that there are bounds on certain linear combinations of the coordinates because block probabilities and their sums must be nonnegative. Some examples are: the expressions for and (Table 1) together imply that

| (21) |

the expressions for and similar quantities together imply that

| (22) |

and the expressions for and similar quantities together imply that

| (23) |

C. Formal Solution and Overview of Approach

Our aim is to construct images in which one or more of these coordinates are assigned a value and all other image statistics are chosen so that the ensemble is as random as possible. In principle, this consists of two steps: first, determination of the image ensemble whose entropy is maximum, given the constraints of the coordinates ϕi and, second, unbiased sampling of images within this ensemble. The first step has a formal solution that follows readily from general properties of maximum-entropy distributions. However, the formal solution is not a constructive one: that is, while it determines the unique maximum-entropy distribution, it does not show how to create it. The formal solution therefore does not directly address the second step, that of choosing typical images from within this distribution. Therefore, we need to develop special-purpose constructive algorithms to sample the distribution and show that the samples they construct correspond to the distribution specified by the formal solution.

The formal solution is a specification of the probability p(R) of encountering a large block R in an image drawn at random from the ensemble (or, equivalently, in a random location within a single typical image). These probabilities are to be determined so that the entropy

$$H = -\sum_R p(R) \log p(R) \tag{24}$$

is maximized, subject to the block-probability constraints for one or more of the coordinates ϕi. The constraints refer to the expected value of a coordinate ϕi(R), which is itself an average over all glider placements on R:

$$\langle \varphi_i \rangle = \sum_R p(R)\, \varphi_i(R) \tag{25}$$

In view of Eq. (20), this can be written as

$$\langle \varphi_i \rangle = \sum_R p(R)\, \frac{n_+(R, i) - n_-(R, i)}{n(R, i)} \tag{26}$$

Note that n(R, i), which is the number of ways that the glider corresponding to ϕi can be placed on the block R, depends only on the shape of the glider and of R, but is independent of how R is colored. Thus, n(R, i) is constant for all terms in the above sum.

We use a standard approach to maximize the entropy [Eq. (24)] under the constraints of Eq. (26), namely, Lagrange multipliers. To apply the Lagrange multiplier method, we introduce a multiplier λi for each constraint [Eq. (26)], as well as a multiplier λ0 for the normalization ΣRp(R) = 1. We then maximize

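$$Q = -\sum_R p(R) \log p(R) + \lambda_0 \sum_R p(R) + \sum_i \lambda_i \sum_R p(R)\, \frac{n_+(R, i) - n_-(R, i)}{n(R, i)} \tag{27}$$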

by setting ∂Q/∂p(S) = 0 and considering each of the p(S)’s to be independent. This yields equations for the p(R)’s in terms of the Lagrange multipliers λi, which then need to be solved to satisfy the constraints of Eq. (26) and the normalization constraint. Importantly, the maximizing distribution p(R) is guaranteed to be unique, since the constraints [Eq. (26)] are linear and entropy is a strictly concave function. That is, there cannot be two separate local maxima: if there were, then a mixture of them would satisfy the same constraints and would necessarily have even higher entropy.

To carry out the maximization, we calculate ∂Q/∂p(S) from Eq. (27) and set it to 0:

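$$\frac{\partial Q}{\partial p(S)} = -\log p(S) - 1 + \lambda_0 + \sum_i \lambda_i\, \frac{n_+(S, i) - n_-(S, i)}{n(S, i)} \tag{28}$$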

Setting this to 0 yields a formal solution:

$$p(S) = \exp\left( \mu_0 + \sum_i \mu_i \left[ n_+(S, i) - n_-(S, i) \right] \right) \tag{29}$$

where we have used μ0 = λ0 − 1 and μi = λi/n(S, i). (The latter is justified since n(S, i), the number of placements of the glider for ϕi, depends only on i and the size of the region S and not on its contents.)

As is typical of maximum-entropy problems, we can eliminate the multiplier μ0 by enforcing the normalization constraint:

$$p(S) = \frac{Z(S)}{Z} \tag{30}$$

where

$$Z(S) = \exp\left( \sum_i \mu_i \left[ n_+(S, i) - n_-(S, i) \right] \right) \tag{31}$$

and

$$Z = \sum_S Z(S) \tag{32}$$

Z corresponds to the “partition function” that is central to the maximum-entropy problems that arise in statistical mechanics. As in statistical mechanics, the partition function provides for a concise formal representation of the constraints. Combining Eqs. (32), (31), and (26) yields

$$\langle \varphi_i \rangle = \frac{1}{n(S, i)}\, \frac{\partial \log Z}{\partial \mu_i} \tag{33}$$

We note that Eq. (29) [or Eqs. (30) and (31)] has an intuitive interpretation that extends the tie-in to statistical mechanics. Since n+(S, i) and n−(S, i) count the placements of the glider for ϕi that contain an even or an odd number of white pixels, we can view the Lagrange multiplier μi as a kind of interaction energy within each placement of the glider. We calculate Z(S) by inspecting each glider placement, one by one, and accumulating counts for n+(S, i) and n−(S, i). If the number of white pixels in the glider is even, n+(S, i) is incremented, which in turn changes the probability of the configuration S by a factor of exp(μi). If the number of white pixels is odd, n−(S, i) is incremented and the probability is changed by the reciprocal of that factor.
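For a very small region, the formal solution can be evaluated by brute force, which makes the role of the multiplier concrete. The sketch below is illustrative only: it assumes a single constrained coordinate, a weight exp(μ[n+ − n−]) for each coloring as described above, and hypothetical function names. It enumerates all colorings of a 2 × 3 region, normalizes by the partition function, and computes the expected coordinate. For the horizontal-pair glider, the pair parities along a row can be chosen independently, so the expected coordinate works out to tanh μ; this closed form is specific to that glider and should not be assumed for gliders that overlap in two dimensions.

```python
from itertools import product
from math import exp, tanh

def parity_difference(region, glider):
    """n_plus - n_minus over all placements of the glider inside the region."""
    rows, cols = len(region), len(region[0])
    max_r = max(r for r, _ in glider)
    max_c = max(c for _, c in glider)
    diff = 0
    for i in range(rows - max_r):
        for j in range(cols - max_c):
            whites = sum(region[i + r][j + c] for r, c in glider)
            diff += 1 if whites % 2 == 0 else -1
    return diff

def maxent_ensemble(rows, cols, glider, mu):
    """List of (coloring, probability) pairs with probability Z(S) / Z."""
    weighted = []
    for flat in product((0, 1), repeat=rows * cols):
        region = [flat[r * cols:(r + 1) * cols] for r in range(rows)]
        weighted.append((region, exp(mu * parity_difference(region, glider))))
    z = sum(weight for _, weight in weighted)  # partition function
    return [(region, weight / z) for region, weight in weighted]

def expected_coordinate(rows, cols, glider, mu):
    """Ensemble average of (n_plus - n_minus) / n."""
    placements = (rows - max(r for r, _ in glider)) * (cols - max(c for _, c in glider))
    ensemble = maxent_ensemble(rows, cols, glider, mu)
    return sum(p * parity_difference(region, glider) for region, p in ensemble) / placements

pair = [(0, 0), (0, 1)]  # horizontal 1 x 2 glider
value = expected_coordinate(2, 3, pair, mu=0.5)
closed_form = tanh(0.5)
```

Because the number of colorings grows as 2 to the power of the number of pixels, this enumeration is only a check on the formalism, not a practical way to construct images.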

Although Eq. (30) provides an expression for the block probabilities of the unique maximum-entropy ensemble, it is not a constructive solution. The reason is that the Lagrange multipliers μi are as yet unknown. Finding them requires solving the constraint equations for the counts n+(S, i) and n−(S, i) [Eq. (26) or, equivalently, Eq. (33)], which are nonlinear. If this can be done, then the image ensemble is explicitly specified via Eq. (30) and we can then choose random samples from within it. In some cases (Section 2.4–Section 2.7), the direct approach works; in other cases, another strategy appears necessary (Section 2.8).

D. Ensembles Specified by a Bias on the Number of White and Black Pixels

We consider first the simplest case, in which there is only a single constraint and it is a constraint on γ, the bias between the number of white and black pixels. Since no correlations between pixels are constrained, the solution to the maximum-entropy problem is well known: an image ensemble in which each pixel is independently colored (an independent, identically-distributed (IID) distribution). The probabilities of white and black pixels are determined by p(1) − p(0) = γ [Eq. (19)] and the normalization constraint p(1) + p(0) = 1. Nevertheless, it is helpful to carry out this simple example in detail, as it illustrates how the above formalism works in a situation in which the constraint equations can be solved. This will also indicate how elements of this IID solution can be generalized.

We begin by calculating the partition function, Eq. (32). Here, there is only one multiplier μ1. Substituting Eq. (31) into Eq. (32) yields

$$Z = \sum_S \exp\left( \mu_1 \left[ n_+(S, 1) - n_-(S, 1) \right] \right) \tag{34}$$

This is a sum over all possible colorings S. For each coloring S, n+(S, 1) is the number of black pixels (i.e., 1-blocks containing an even number of white pixels) and n−(S, 1) is the number of white pixels (i.e., 1-blocks containing an odd number of white pixels). Since each term of the sum [Eq. (34)] depends only on the number of pixels of each color and not their arrangement, we can evaluate the sum by grouping the configurations S according to the number of black and white pixels (n+ and n−, with n+ + n− = n) that they contain. The number of such configurations is given by the binomial coefficient n!/(n+! n−!). With this regrouping, we find

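$$Z = \sum_{n_+ = 0}^{n} \binom{n}{n_+} \left( e^{\mu_1} \right)^{n_+} \left( e^{-\mu_1} \right)^{n - n_+} \tag{35}$$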

Applying the binomial theorem yields a simple expression for the partition function:

$$Z = \left( e^{\mu_1} + e^{-\mu_1} \right)^{n} \tag{36}$$

Equation (33) now yields the relationship between the unknown Lagrange multiplier μ1 and the constrained value of ϕ1, namely −γ:

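$$-\gamma = \frac{1}{n}\, \frac{\partial \log Z}{\partial \mu_1} = \frac{e^{\mu_1} - e^{-\mu_1}}{e^{\mu_1} + e^{-\mu_1}} = \tanh \mu_1 \tag{37}$$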

The Lagrange multiplier μ1 is thus given by

$$\mu_1 = \frac{1}{2} \log \frac{1 - \gamma}{1 + \gamma} \tag{38}$$

so the partition function [Eq. (36)] is equal to

$$Z = \left( \frac{2}{\sqrt{1 - \gamma^2}} \right)^{n} \tag{39}$$

We can now obtain an expression for the probability of any configuration. We start with Eq. (30), p(S) = Z(S)/Z, and substitute the value of μ1 determined by Eq. (38) into Eq. (31) for Z(S). This yields

$$Z(S) = \left( \frac{1 - \gamma}{1 + \gamma} \right)^{\left[ n_+(S, 1) - n_-(S, 1) \right] / 2} \tag{40}$$

We then use Eq. (39) for the partition function Z:

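$$p(S) = \left( \frac{\sqrt{1 - \gamma^2}}{2} \right)^{n} \left( \frac{1 - \gamma}{1 + \gamma} \right)^{\left[ n_+(S, 1) - n_-(S, 1) \right] / 2} \tag{41}$$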

Making use of the relationship n = n+(S, 1) + n−(S, 1) leads to the desired result, which has a simple and symmetric form:

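$$p(S) = \left( \frac{1 - \gamma}{2} \right)^{n_+(S, 1)} \left( \frac{1 + \gamma}{2} \right)^{n_-(S, 1)} \tag{42}$$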

Equation (42) thus defines the maximum-entropy ensemble constrained by the specified value of γ. The result is not surprising and corresponds to an IID process: if a configuration S has n+(S, 1) black pixels and n−(S, 1) white pixels, then its probability is a product of n+(S, 1) copies of the probability of a black pixel, (1 − γ)/2, and n−(S, 1) copies of the probability of a white pixel, (1 + γ)/2.
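The chain of substitutions above can be checked numerically. The following sketch assumes the exponential form Z(S) = exp(μ1[n+ − n−]), the binomial sum Z = (exp(μ1) + exp(−μ1))^n, and the multiplier μ1 = atanh(−γ) (equivalently, half the logarithm of (1 − γ)/(1 + γ)); the function names are hypothetical. It compares Z(S)/Z with the product of independent pixel probabilities for every coloring of four pixels.

```python
from itertools import product
from math import atanh, exp, isclose

def maxent_probability(coloring, gamma):
    """p(S) = Z(S) / Z with the multiplier that enforces a luminance bias gamma."""
    mu = atanh(-gamma)                # multiplier for the single-pixel glider
    n_plus = coloring.count(0)        # black pixels: zero (even) white pixels
    n_minus = coloring.count(1)       # white pixels: one (odd) white pixel
    z_s = exp(mu * (n_plus - n_minus))
    z = (exp(mu) + exp(-mu)) ** len(coloring)
    return z_s / z

def iid_probability(coloring, gamma):
    """Product of independent pixel probabilities (1 - gamma)/2 and (1 + gamma)/2."""
    return ((1 - gamma) / 2) ** coloring.count(0) * ((1 + gamma) / 2) ** coloring.count(1)

gamma = 0.3
agree = all(
    isclose(maxent_probability(s, gamma), iid_probability(s, gamma))
    for s in product((0, 1), repeat=4)
)
```

Here `agree` evaluates to `True`, consistent with the statement that the single-constraint maximum-entropy ensemble is an IID process.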

There are two important aspects of this analysis that are crucial for the more complex cases that we consider below. First, we note that Eq. (42) does more than just define the probability of a configuration S: it also provides a way of generating samples that have the desired probability distribution. Specifically, it indicates that each pixel’s color can be assigned independently. Each pixel is considered in turn and it is colored white with probability (1 + γ)/2 and black with probability (1 − γ)/2. This construction, rather than the explicit value of p(S), is our goal. For other kinds of textures, we will be able to sample the distribution, but we may not be able to write an explicit expression for the probability of any given configuration. The latter requires explicit summation of the partition function [Eq. (32)], as well as solution of the constraint equations, Eq. (33).

The second point is that even though we only specified one coordinate (in this case, ϕ1 = −γ), the maximum-entropy construction determined the values of the other coordinates, and these values turn out to be nonzero. This is readily seen from Eq. (42): the probability of a two-pixel block S with two white pixels is ((1 + γ)/2)²; with one white pixel, (1 + γ)(1 − γ)/4; and with no white pixels, ((1 − γ)/2)². From these, it follows [e.g., from Eq. (18)] that β = γ². Similar calculations show that θ = γ³ and α = γ⁴. That is, the trajectories specified by maximum entropy, here (β, θ, α) = (γ², γ³, γ⁴), are curved with respect to the coordinate axes, a phenomenon that is characteristic of “information geometry” [24]. Near the origin (i.e., near γ = 0), this curvature is small and the maximum-entropy trajectories approximate the coordinate axes. As our psychophysical data will show, this mild curvature does not interfere with measurement of meaningful thresholds along the coordinate axes.
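The power-law relationships among the coordinates of an IID ensemble can be verified by direct enumeration. The sketch below assumes independent pixels that are white with probability (1 + γ)/2, computes the expected even-minus-odd parity for a glider of k pixels, and applies the sign convention in which coordinates for gliders with an odd number of pixels carry a minus sign. The function name is hypothetical.

```python
from itertools import product

def iid_parity_expectation(gamma, k):
    """Expected value of (-1)**(number of white pixels) for k independent pixels."""
    p_white = (1 + gamma) / 2
    total = 0.0
    for colors in product((0, 1), repeat=k):
        prob = 1.0
        for c in colors:
            prob *= p_white if c == 1 else 1 - p_white
        total += prob * (-1) ** sum(colors)
    return total

gamma = 0.5
beta = iid_parity_expectation(gamma, 2)     # pair glider: no sign change
theta = -iid_parity_expectation(gamma, 3)   # L-shaped glider: minus sign convention
alpha = iid_parity_expectation(gamma, 4)    # 2 x 2 glider: no sign change
```

With γ = 0.5, the enumeration gives β = 0.25, θ = 0.125, and α = 0.0625, i.e., γ², γ³, and γ⁴.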

E. Ensembles Specified by One Parameter

The above analysis immediately puts us in a position to construct maximum-entropy ensembles specified by a single-parameter constraint on the expected value of a coordinate ϕi, for parameters other than ϕ1 = −γ. The main point is that we can construct samples S of these ensembles in a pixel-by-pixel fashion. This will lead to expressions for the probability of a sample p(S) that are similar to Eq. (42). As is the case for ϕ1 = −γ, this is equivalent to an expression of the form of Eq. (29), which demonstrates that it is indeed maximum-entropy.

To begin, we note that each of the coordinates ϕi corresponds to a “glider”, i.e., a configuration of pixels that are relevant to the calculation of n+(S, i) and n−(S, i) [see comments following Eq. (33)]. We now assign colors to each pixel in S in sequence: top row to bottom row and left to right. The first pixel, a11, is assigned to white or black with equal probability. As each subsequent pixel is considered, we determine whether it is the last pixel to be assigned within a glider. For example, in the case of the glider for β− (a 1 × 2 block), the second pixel completes a glider (at positions a11 and a12), as does each subsequent pixel within each row. In the case of the glider for α (a 2 × 2 block), the entire first row does not complete any gliders, but the second and subsequent pixels in the later rows do so.

The assignment of a color depends on whether the pixel completes a glider. If it does not, it is randomly assigned to white or black, each with probability 0.5. If it does complete a glider, we need to choose whether to give it a color that makes the total number of white pixels within the glider even or odd. We do the former with probability (1 + ϕi)/2 and the latter with probability (1 − ϕi)/2, where ϕi here denotes the specified value of the coordinate. The average number of gliders that contribute to n+(S, i) is thus n(S, i)(1 + ϕi)/2 and the average number of gliders that contribute to n−(S, i) is n(S, i)(1 − ϕi)/2, where, as above, n(S, i) is the number of times that the glider can be placed entirely within the region S.

We now verify that this construction has the required properties. The first requirement is that the expected value of the coordinate ϕi is, in fact, the specified value (i.e., that the construction satisfies the constraint). This is straightforward and follows from the biased assignment of colors that is invoked when a pixel completes a glider:

| (43) |

The other requirement is that p(S) is of the form of Eq. (29), which means that it is maximum-entropy. To show this, we begin by noting that, according to the above construction, the assignments of pixels to colors were made in one of three ways. Some of the pixels were assigned to white or black with equal probability, since these pixels did not complete a glider. For a region S of size n, the number of such pixels is ninit = n − n(S, i), since n(S, i) is the number of pixels at which a glider was completed. After these initial pixels were assigned, the others (the ones that completed gliders) were assigned in a biased fashion. There were n+(S, i) such assignments, each with probability (1 + ϕi)/2, that resulted in an even number of white pixels within a glider and there were n−(S, i) assignments, each with probability (1 − ϕi)/2, that resulted in an odd number of white pixels within a glider. Thus,

$$p(S) = \left(\frac{1}{2}\right)^{n_{\mathrm{init}}} \left(\frac{1+\phi_i}{2}\right)^{n_+(S,i)} \left(\frac{1-\phi_i}{2}\right)^{n_-(S,i)} \tag{44}$$

analogous to Eq. (42). Using the relationship n(S, i) = n+(S, i) + n−(S, i), this is seen to be equivalent to

| (45) |

Since n(S, i), the number of pixels at which a glider was completed, is independent of the way in which S is colored, Eq. (45) is in the form of Eq. (29). Thus, the construction is indeed maximum-entropy and satisfies the coordinate constraints.

Equation (44) or, equivalently, Eq. (45), summarizes how the analysis in the previous section for ϕ1 extends to each of the other coordinates. For these other coordinates, whose gliders are larger than a single pixel, there is an initial step in which ninit = n − n(S, i) pixels are colored at random. Following this, the colors of the remaining n − ninit = n+(S, i) + n−(S, i) pixels are assigned to make the parity of the white pixels within the glider either even or odd, with a probability ratio of (1 + ϕi) : (1 − ϕi). Note that while this choice (even versus odd) is independent at each pixel, the resulting color (white or black) depends on the previous assignments. Thus, the exponents n+(S, i) and n−(S, i) tally the choices of even and odd, not the colors themselves.

For reference, we restate the results of the previous section in a form that applies to all coordinates. The generic form of the partition function [Eq. (35)] is

| (46) |

which, after applying the binomial theorem, becomes

| (47) |

The generic form of Eq. (38) for the Lagrange multiplier μi is

| (48) |

so the partition function [Eq. (47)] can be rewritten as

| (49) |

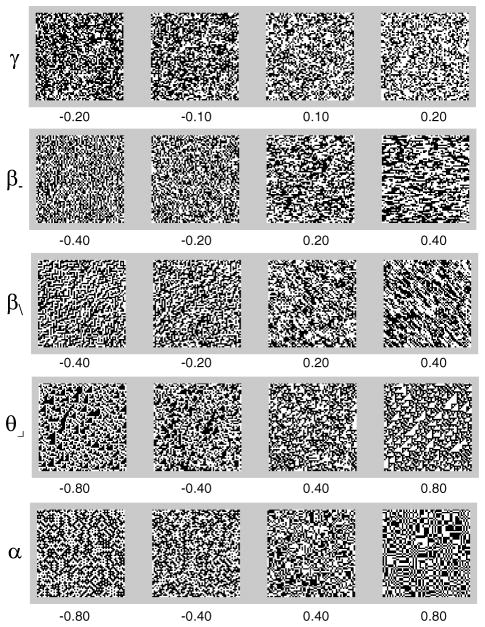

Figure 1 shows examples of images generated according to this construction, for each of the coordinates. As can be seen from these examples, each coordinate ϕi leads to a different kind of structure. All are visually salient, but to different degrees—an informal observation that we will quantify below (Section 4).

Fig. 1.

The image-statistic coordinate axes. Each patch is a typical sample of an image ensemble in which the indicated statistic is set to a nonzero value and higher-order statistics are determined by maximum entropy.

Interestingly, these differences in salience are solely due to the processing limitations of the visual system: from the point of view of an ideal observer (limited only by the statistics of sampling of images), the alterations in image statistics associated with movement along each of the coordinates are equally salient. This property of the coordinates ϕi is demonstrated in Appendix B.

Finally, we mention that specification of a nonzero value for one coordinate can lead to nonzero values for the others, as is the case for ϕ1 = −γ. This happens for β− or β|: in these cases, α = β². This can be seen by calculating the 2 × 2 block probabilities from Eq. (44) and then calculating α from Eq. (7).
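The relation between α and β can also be checked directly. The short derivation below is a sketch that assumes each coordinate of an even-sized glider equals the expected product of ±1 pixel signs over that glider, which is the same as the probability of even parity minus the probability of odd parity. The labels s_a, s_b (upper row) and s_c, s_d (lower row) for the pixel signs of a 2 × 2 block are introduced here for illustration only.

```latex
\alpha = \langle s_a s_b s_c s_d \rangle
       = \langle s_a s_b \rangle \, \langle s_c s_d \rangle
       = \beta_-^{2}
```

The factorization holds because, for the β− glider, the construction introduces dependence only within a row, so the two rows of a 2 × 2 block are independent. The same argument applies to β| with rows and columns interchanged.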

F. Ensembles Specified by Two Parameters, along One Dimension

The next sections consider the construction of maximum-entropy ensembles specified by two parameters, say ϕi and ϕj. We begin with the simplest case, in which the gliders associated with these parameters lie within a single spatial dimension (i.e., a row, a column, or a diagonal). For definiteness, we focus on γ paired with β−, but the analysis applies equally well to γ paired with one of the other β’s.

Since the coordinates γ and β− only refer to correlations within rows, the rows of the maximum-entropy ensemble must be independent. It therefore suffices to provide an algorithm to generate a row of the image and then to apply this algorithm separately to each row. To create each row, we use a recursive procedure, a Markov process, to define each pixel assignment. We show that probabilities of the resulting image samples are consistent with Eq. (29), which confirms the maximum-entropy property. A Markov process is a natural strategy for a maximum-entropy construction, since each state of a Markov process depends only on the previous one.

The Markov property, along with the 1 × 2 block probabilities, specifies the probabilities of all 1 × k blocks. To see this, we first determine the probabilities of the 1 × 3 blocks:

$$p(A_1 A_2 A_3) = p(A_1 A_2)\,p(A_3 \mid A_2) = \frac{p(A_1 A_2)\,p(A_2 A_3)}{p(A_2)} \tag{50}$$

Here, the first equality expresses the Markov property (that the assignment of A3 depends only on the state of A2 and not on A1) and the second equality follows from the fact that the joint probability p(X, Y) is related to the conditional probability p(Y|X) by p(X, Y) = p(X)p(Y|X).

Equation (50), applied recursively, specifies the probabilities of all 1 × k blocks:

$$p(A_1 A_2 \cdots A_k) = \frac{\prod_{j=1}^{k-1} p(A_j A_{j+1})}{\prod_{j=2}^{k-1} p(A_j)} \tag{51}$$

The numerator is the product of all 1 × 2 block probabilities contained within the 1 × k block; the denominator is the product of all the singleton probabilities, excluding both ends. We note that the 1 × 2 block and singleton probabilities are known, since they are determined from the constraints ϕ1 = −γ and ϕ2 = β− via Eq. (8) (also, see Table 1).
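The row-by-row Markov construction can be sketched in Python as follows. This is an illustration under explicit assumptions rather than the authors' code: it assumes γ is the mean pixel sign and β− is the mean product of the signs of horizontally adjacent pixels (sign +1 for white, −1 for black), and the function names are hypothetical. The second function evaluates the probability of a 1 × k block as a product of pair probabilities divided by the interior singleton probabilities.

```python
import numpy as np

def pair_probabilities(gamma, beta):
    """1 x 2 block probabilities p[a, b] (0 = black, 1 = white).

    Assumes gamma = <s> and beta = <s_a s_b>, with s = +1 for white, -1 for black.
    """
    s = np.array([-1.0, 1.0])
    p = 0.25 * (1 + gamma * (s[:, None] + s[None, :]) + beta * s[:, None] * s[None, :])
    if np.any(p < 0):
        raise ValueError("(gamma, beta) is outside the range of valid probabilities")
    return p

def block_probability(block, gamma, beta):
    """Probability of a 1 x k block: pair probabilities over interior singletons."""
    p2 = pair_probabilities(gamma, beta)
    p1 = p2.sum(axis=1)  # singleton probabilities
    numerator = np.prod([p2[a, b] for a, b in zip(block[:-1], block[1:])])
    denominator = np.prod([p1[a] for a in block[1:-1]])
    return numerator / denominator

def sample_row(k, gamma, beta, rng):
    """Draw one row of k pixels; each pixel depends only on its left neighbor."""
    p2 = pair_probabilities(gamma, beta)
    p1 = p2.sum(axis=1)
    row = np.empty(k, dtype=np.int8)
    row[0] = rng.random() < p1[1]
    for j in range(1, k):
        prev = int(row[j - 1])
        # Conditional probability of white given the previous pixel.
        row[j] = rng.random() < p2[prev, 1] / p1[prev]
    return row

rng = np.random.default_rng(1)
row = sample_row(50000, 0.2, 0.3, rng)
signs = 2.0 * row - 1.0
print(signs.mean(), (signs[:-1] * signs[1:]).mean())  # estimates of gamma and beta
```

An image is obtained by calling `sample_row` independently for each row, since the rows of this ensemble are independent.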

To show that the probabilities of image samples generated in this fashion are consistent with Eq. (29), we proceed as follows. The probability of an image S is a product of terms such as Eq. (51), one for each row. For each of the four kinds of 1 × 2 blocks, the corresponding block probability will occur as a factor in the numerator every time that this block appears in S. A similar reasoning applies to the denominator. Thus,

$$p(S) = \frac{\prod_{A,B} p(AB)^{n_{AB}}}{\prod_{A} p(A)^{n_A}} \tag{52}$$

where nAB counts the number of AB blocks in S and nA counts the number of A-singletons in the interiors of the rows of S. To show that these probabilities are consistent with Eq. (29), the key step is to relate the block counts nAB and nA in the above equation to the parity counts n+(S, i) and n−(S, i). These count the occurrences of even- and odd-parity 1 × 2 blocks (n±(S, 2)) and the black and white singletons (n±(S, 1)). The relationship between the block counts and the parity counts follows from the transformation between the block probabilities and coordinates [Eq. (7) and Table 1]. For example,

| (53) |

where pX (S) indicates the probability of an X block in S and ϕ(S) indicates the transformed coordinate ϕ evaluated from the block probabilities within S. Note that Eq. (53) neglects “end effects”, but this is justified because in the large-k limit, the interior pixels are far more numerous than the edge pixels and consequently dominate the product [Eq. (52)].

Equation (53) and its analogs for the other blocks, along with Eq. (20), lead to the desired relationships between the block counts and the parity counts n±(S, i):

| (54) |

Substitution of Eqs. (54) into Eq. (52) yields an equation of the form Eq. (29), confirming the maximum-entropy property of the construction.

G. Ensembles Specified by Two Parameters, along Two Dimensions: Pickard Case

Next we continue the construction of ensembles specified by a pair of constraints, but now consider the case when these constraints correspond to gliders that involve both spatial dimensions. The natural approach is to attempt to extend the Markov construction to two dimensions. However, it is not clear whether this approach will work, as the correlations in the two dimensions may interact. As we will see, the extension works in most cases (the cases in which the “Pickard conditions” [21] hold), but not in all. We first discuss these cases and then handle each of the “non-Pickard” cases separately.

Extending the Markov construction to two dimensions consists of two stages: creating 2 × k blocks by a Markov process and then assembling these blocks by a second Markov process. The rationale for creating the rows in pairs (i.e., 2 × k blocks rather than 1 × k blocks) is that the second row is needed to allow for correlations in the vertical dimension.

To determine the feasibility of this approach, we begin with the row process and calculate the probability of a 2 × 3 block, as a Markov process on 2 × 2 blocks with one column of overlap:

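$$p\begin{pmatrix} A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \end{pmatrix} = \frac{p\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix} p\begin{pmatrix} A_{12} & A_{13} \\ A_{22} & A_{23} \end{pmatrix}}{p\begin{pmatrix} A_{12} \\ A_{22} \end{pmatrix}} \tag{55}$$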

That is, the probability of a 2 × 3 block is the product of the probabilities of the 2 × 2 blocks it contains, divided by the probability of the 2 × 1 block at their intersection. Once we have created a 2 × k row by a Markov process, we can consider assembling these rows via a second Markov process. At the very least, we need to assemble two 2 × 3 rows to form a 3 × 3 block:

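$$p\begin{pmatrix} A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \\ A_{31} & A_{32} & A_{33} \end{pmatrix} = \frac{p\begin{pmatrix} A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \end{pmatrix} p\begin{pmatrix} A_{21} & A_{22} & A_{23} \\ A_{31} & A_{32} & A_{33} \end{pmatrix}}{p\begin{pmatrix} A_{21} & A_{22} & A_{23} \end{pmatrix}} \tag{56}$$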

Here, the denominator, the probability of the 1 × 3 block intersection, is determined by “marginalizing” Eq. (55), i.e., summing over the possible states of the pixels A1k that are in the previously determined row:

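$$p\begin{pmatrix} A_{21} & A_{22} & A_{23} \end{pmatrix} = \sum_{A_{11}, A_{12}, A_{13}} p\begin{pmatrix} A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \end{pmatrix} \tag{57}$$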

Equations (56) and (57) reveal an issue that did not arise in the one-dimensional case: we need to verify that the probabilities of the lower-row 2 × 2 subblocks of Eq. (56) are consistent with the probabilities of the upper-row 2 × 2 blocks that we started with. Here, the Markov processes along the two dimensions may interact, via the 1 × 3 block probabilities of Eq. (57), so stability of the 2 × 2 block probabilities across rows is not guaranteed. For the one-dimensional case (Section 2.F), this issue did not arise, since each row was created independently.

1. Pickard Conditions

Pickard [21] identified conditions on the block probabilities that not only guarantee the above stability, but also something much stronger: that the two-dimensional Markov construction samples a maximum-entropy distribution, constrained by the 2 × 2 block probabilities (see also [25]). We state the Pickard conditions and then discuss how they fulfill the above need.

One set of Pickard conditions is that

| (58) |

This condition may be mirrored in either the horizontal or vertical axis to obtain an alternative set of conditions [not equivalent to Eq. (58)]:

| (59) |

As Pickard showed (see also [25]), if a set of block probabilities satisfies both halves of Eq. (58), or both halves of Eq. (59), then the Markov algorithm will generate maximum-entropy samples. Pickard [21] stated the conditions in terms of conditional probabilities, e.g.,

| (60) |

This form emphasizes that the Pickard conditions effectively factorize the way that the two dimensions interact; Eq. (60) is readily seen to be equivalent to Eq. (58) above according to the rules of conditional probabilities.

While we do not reproduce Pickard’s proof that either set of conditions guarantees that the construction is maximum-entropy, we carry out a simpler calculation that provides an intuition for why this is true: we show how the Pickard conditions are related to the stability of the 2 × 2 block probabilities. Additionally (and this will be useful in situations when the Pickard conditions do not hold), the calculation shows that half of a Pickard condition simplifies the expression for 1 × 3 (or 3 × 1) blocks. In particular, we will show that the first half of either Eq. (58) or Eq. (59) simplifies the expression for 1 × 3 block probabilities, when the Markov process is run from left to right and top to bottom. This, in turn, allows us to show that the 2 × 2 block probabilities are stable for a left-to-right, top-to-bottom process. Consequently, when both halves of a Pickard condition hold, the 2 × 2 block probabilities are stable when the two-dimensional Markov process is run from left to right and then from top to bottom, or from right to left and then from bottom to top. Intuitively, this stability and reversibility are the reasons that the Markov algorithm is a maximum-entropy construction that satisfies the desired constraints (see [21] and [25] for background).

To show that the first half of the Pickard condition simplifies the expression for the 1 × 3 block probabilities, we calculate as follows:

| (61) |

where the first equality is from Eq. (57), the second equality follows from the first half of the Pickard condition [Eq. (58)], and the final equality follows by marginalizing over X.

This simplified expression for 1 × 3 block probabilities, along with Eqs. (55) and (56), combine to provide an expression for the 3 × 3 block probabilities, which we need to determine whether the 2 × 2 probabilities are stable:

| (62) |

To show that the 2 × 2 probabilities are stable, we show that the distribution of 2 × 2 blocks in the interior of the image is identical to their distribution in the rows and columns that have already been generated. We carry this out by marginalizing over the states of the pixels in the first row and first column of the 3 × 3 block on the left-hand side of Eq. (62) and then using the first half of the Pickard condition [Eq. (58)] to simplify the resulting expression:

| (63) |

This analysis applies, by symmetry, to the other halves of the Pickard conditions. For example, if only the second half of the second Pickard condition, Eq. (59), held, then the result [Eq. (63)] would still be valid for left-to-right and top-to-bottom processes, but the expression [Eq. (61)] for 1 × 3 blocks would need to be replaced by a similar expression for 3 × 1 blocks.

2. Pickard Conditions in Transformed Coordinates

To see how the Pickard conditions apply to our setting, we transform them into the coordinates ϕi. We begin with the first half of the condition [Eq. (58)]. The first step is to transform the block probabilities into the coordinates ϕi, via Eq. (8) and Eqs. (14) through (16):

| (64) |

When the above expressions are substituted into the first half of the Pickard condition [Eq. (58)], most terms cancel. The ones that do not cancel have a common factor (−1)^(B+C); removing this leads to

| (65) |

This equation must hold both for D = 0 and for D = 1. This means that the terms not involving D must be equal, as must the terms that are multiplied by (−1)^D. The terms not involving D yield

| (66) |

and the terms multiplied by (−1)^D yield

| (67) |

Because of symmetry, the transformed version of the second half of the Pickard condition [Eq. (58)] can be obtained from Eqs. (66) and (67) by replacing θ⌟ with θ⌜:

| (68) |

| (69) |

Thus, it follows that if either half of the Pickard condition holds, then the other half is equivalent to θ⌜ = θ⌟.

Note that the Pickard conditions are nonlinear in the coordinates. Geometrically, this means that the coordinates that satisfy either or both halves of a Pickard condition lie in a curved subset within the coordinate space.

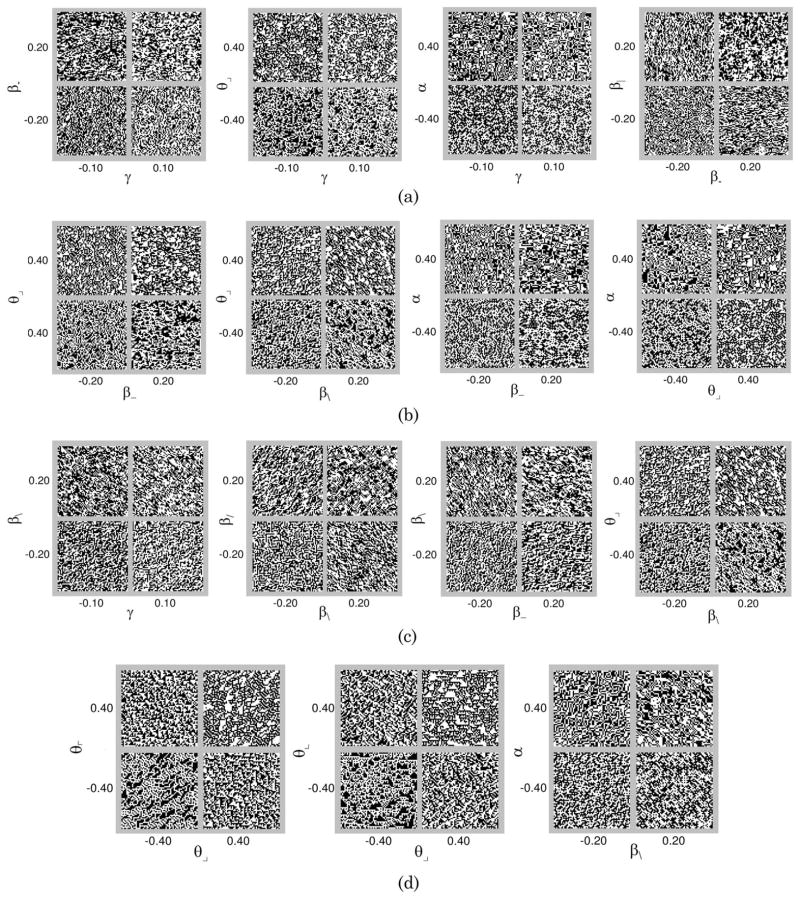

3. Coordinate Pairs, Case-by-Case

Pickard’s result [21] means that the two-dimensional Markov procedure is valid within the curved subsets specified by the Pickard conditions, Eqs. (66) and (67) or Eqs. (68) and (69). Thus, to determine the coordinate pairs for which we can use this procedure to sample the maximum-entropy ensemble, we need to relate the various coordinate planes (ϕi, ϕj) to the Pickard conditions. We begin with some general comments and then consider the planes in a case-by-case fashion below. The full analysis is summarized in Table 2 and samples of images are shown in Figure 2.

Table 2.

| Coordinate pair and {multiplicity} | Method | γ | β− | β\| | β\ | β/ | θ⌟ | θ⌞ | θ⌜ | θ⌝ | α |
|---|---|---|---|---|---|---|---|---|---|---|---|
| (γ, β−){2} | 1DM (P2) | γ | β− | γ² | γ² | γ² | γβ− | γβ− | γβ− | γβ− | (β−)² |
| (γ, β\){2} | 1DM (P1) | γ | γ² | γ² | β\ | γ² | γ³ | γβ\ | γ³ | γβ\ | γ²β\ |
| (γ, θ⌟){4} | 2DM (P1) | γ | γ² | γ² | γ² | γ² | θ⌟ | γ³ | γ³ | γ³ | γθ⌟ |
| (γ, α){1} | 2DM (P2) | γ | γ² | γ² | γ² | γ² | γ³ | γ³ | γ³ | γ³ | α |
| (β−, β\|){1} | 2DM (P2) | 0 | β− | β\| | β−β\| | β−β\| | 0 | 0 | 0 | 0 | r1(β−, β\|) |
| (β−, β\){4} | 2DM (P1) | 0 | β− | 0 | β\ | 0 | 0 | 0 | 0 | 0 | r2(β−, β\) |
| (β\, β/){1} | 2DMO | 0 | 0 | 0 | β\ | β/ | 0 | 0 | 0 | 0 | β\β/ |
| (β−, θ⌟){8} | 2DM (P1) | 0 | β− | 0 | 0 | 0 | θ⌟ | 0 | 0 | 0 | r3(β−, θ⌟) |
| (β/, θ⌟){4} | 2DM (P1) | 0 | 0 | 0 | 0 | β/ | θ⌟ | 0 | 0 | 0 | 0 |
| (β\, θ⌟){4} | 2DMT-DA | 0 | 0 | 0 | β\* | 0 | θ⌟* | 0 | β\θ⌟* | 0 | 0 |
| (β−, α){2} | 2DM (P2) | 0 | β− | 0 | 0 | 0 | 0 | 0 | 0 | 0 | α |
| (β\, α){2} | 2DM (P1) | 0 | 0 | 0 | β\ | 0 | 0 | 0 | 0 | 0 | α |
| (θ⌟, θ⌜){2} | 2DM (P1) | 0 | 0 | 0 | 0 | 0 | θ⌟ | 0 | θ⌜ | 0 | 0 |
| (θ⌟, θ⌞){4} | 2DM-DA | 0 | 0 | 0 | 0 | 0 | θ⌟ | θ⌞ | 0 | 0 | 0 |
| (θ⌟, α){4} | 2DM (P1) | 0 | 0 | 0 | 0 | 0 | θ⌟ | 0 | 0 | 0 | α |

Each row gives the method and the value of every coordinate for one class of coordinate pairs. The row headed by each pair of coordinates indicates the subspaces in which simple maximum-entropy ensembles may be sampled by specific algorithms. Algorithms are designated as follows: 1DM: one-dimensional Markov process; 2DM: two-dimensional Markov process; P1: one set of Pickard conditions [Eq. (58) or Eq. (59)] holds; P2: both sets of Pickard conditions hold; 2DMO: two-dimensional Markov process on oblique axes; 2DMT: two-dimensional Markov process on a tee-shaped glider. For this algorithm, * denotes that the parameter values obtained are highly accurate approximations, but not exact (see Appendix C, Section C2). DA: donut algorithm. r1(β−, β|), r2(β−, β\), and r3(β−, θ⌟) denote the roots of specific cubic polynomials (see Appendix C, Section C3).

Because of symmetry, the 45 pairs that can be drawn from the 10 coordinates {γ, β−, β|, β\, β/, θ⌟, θ⌞, θ⌜, θ⌝, α} constitute 15 unique classes; only one member of each class is listed. The number in braces next to each coordinate pair indicates the total number of pairs in that class. For example, (β\, θ⌟){4} indicates the four pairs (β\, θ⌟), (β\, θ⌜), (β/, θ⌞), and (β/, θ⌝).

Fig. 2.

The image-statistic coordinate planes. Each patch is a typical sample of an image ensemble in which the indicated pair of statistics is set to a nonzero value and the rest are determined according to Table 2.

In many of the coordinate planes, the Pickard conditions hold. This is because within a coordinate plane, two of the texture coordinates are nonzero, and the remaining 8 coordinates are zero. The Pickard conditions involve subsets of the six coordinates {γ, β−, β|, β/, θ⌟, θ⌜} and {γ, β−, β|, β\, θ⌞, θ⌝}, so if at least one of these subsets is entirely contained in the eight coordinates that are orthogonal to the plane of interest, the Pickard conditions will hold. For these coordinate pairs, a two-dimensional Markov process creates images that are specified by the coordinate pair of interest and are otherwise maximum-entropy.

Among the coordinate planes that are not strictly within the Pickard subset, many are closely approximated by it, i.e., they are tangent to the Pickard subset at the origin. In these cases, our goal of creating images that probe the effects of a pair of coordinates is, in fact, best served by working within this curved subset. The curved subset is a more natural choice than the coordinate plane itself, because of the natural curvature of the space. The natural curvature can be seen by focusing on single texture coordinates. Specifically (as mentioned above), the maximum-entropy ensembles specified by the coordinate γ, the IID images, do not lie on the coordinate axis itself, but rather form a curved trajectory, (β, θ, α) = (γ², γ³, γ⁴). A further justification for the use of a curved trajectory is that in these cases, the deviation between the curved set and the planes is well below perceptual threshold (see Section 3.B). After considering the coordinate pairs whose planes are tangent to the Pickard subset, we will handle the remaining few pairs, whose planes are not close to the Pickard subset, via another approach, in Section 2.H.

We now turn to the individual coordinate pairs; Table 2 provides a comprehensive summary. (Since there are 10 coordinates, there are a total of 45 coordinate pairs, but because of symmetries among the β’s and θ’s, only 15 coordinate pairs need to be considered explicitly.)

To begin, the coordinate pairs (γ, β−) and (γ, β\) have already been handled as one-dimensional Markov processes, so each of them necessarily satisfies a Pickard condition. Note that even in this simple case, it is natural to work in a curved subset, in which the unspecified coordinates are given nonzero values (e.g., the unspecified β’s are set equal to γ²). These are the unique choices for which the one-dimensional Markov processes are independent of each other (thus guaranteeing maximum entropy) and, for the β’s and θ’s, they are the unique choices for which the Pickard conditions hold.

For (γ, θ) and (γ, α), the second coordinate corresponds to a glider that occupies more than one row or column, so the one-dimensional Markov construction is not applicable. But here, the Pickard conditions hold, with the appropriate choices for the β’s and the unspecified θ’s. These choices also guarantee independence of the pixels within the unspecified gliders. The choice of α is unconstrained by the Pickard conditions, so, for (γ, θ), its value is chosen to achieve maximum entropy within the 2 × 2 block. α = γθ⌟ achieves this because it means that the pixel not contained in the θ⌟-glider is independent of the ones constrained by θ⌟. (The procedure of Appendix C, Section C3, confirms that this choice for α maximizes the entropy.)

(β|, β−) and (β|, β\) also satisfy the Pickard conditions. A zero value for γ and the θ’s is the maximum-entropy choice by the following symmetry argument, based on contrast-inversion. Since contrast-inversion negates the value of γ and θ, the entropy of an ensemble with nonzero values of these parameters could always be further increased by mixing it with its contrast-inverse. Consequently, when maximum-entropy is achieved, these parameters must have a zero value. The choice of α, however, is less straightforward, since any value is consistent with the Pickard conditions and with the above symmetry argument. Appendix C, Section C3 details how it is chosen as a function of the β’s to maximize entropy.

(β\, β/) is the first coordinate pair that does not satisfy either Pickard condition, even for limitingly small values of the coordinates. However, we can handle this case by noting that the gliders corresponding to both coordinates only induce correlations between pixels Aij for which i + j has the same parity. That is, these gliders only induce correlations within the two diagonal sublattices (corresponding to the “red” and “black” pixels of an ordinary checkerboard) and not between them. Since these sublattices are independent, we can construct the image on each of them separately. The latter construction is straightforward: within each diagonal sublattice, (β\, β/) behaves like (β|, β−). In sum, the (β\, β/) case is equivalent to the (β|, β−) case on each of two independent sublattices: the sublattice for which i + j is even and the sublattice for which i + j is odd.

The (β, θ) cases depend on how the gliders relate. For a horizontal or vertical β (e.g., (β−, θ⌟)), a Pickard condition holds and α is chosen to maximize entropy (Appendix C, Section C3). For a diagonal β that is contained within the θ glider (e.g., (β/, θ⌟)), the Pickard conditions hold as well and the choice of α = 0 corresponds to independence of the fourth pixel in a 2 × 2 block and hence, maximum-entropy. Finally, for a diagonal β that is not contained within the θ glider (e.g., (β\, θ⌟)), the Pickard conditions do not hold; this case will be treated in the next section (and in detail in Appendix C, Section C1 and Section C2).

The (β, α) cases are straightforward: they all satisfy at least one Pickard condition and symmetry requires that the maximum-entropy choices of the other parameters are zero.
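As an illustration of a case in which the Pickard conditions hold, the following Python sketch generates a (β−, α) image by a two-dimensional raster-scan Markov process. It is a sketch under stated assumptions, not the authors' implementation: it assumes that, when the Pickard conditions hold, each interior pixel can be drawn from its conditional distribution given the three previously assigned pixels of its 2 × 2 block; it assumes β− and α are mean products of ±1 pixel signs over their gliders; and all function names are hypothetical.

```python
import numpy as np

def block_probs_beta_alpha(beta, alpha):
    """2 x 2 block probabilities p[a, b, c, d] with only beta_- and alpha nonzero.

    Pixels: a b (upper row), c d (lower row); 0 = black, 1 = white.
    """
    s = np.array([-1.0, 1.0])
    sa, sb, sc, sd = np.meshgrid(s, s, s, s, indexing="ij")
    p = (1 + beta * (sa * sb + sc * sd) + alpha * sa * sb * sc * sd) / 16
    if np.any(p < 0):
        raise ValueError("coordinates are outside the range of valid probabilities")
    return p

def sample_2d_markov(shape, p4, rng):
    """Raster-scan sampler driven by 2 x 2 block probabilities (Pickard case assumed)."""
    rows, cols = shape
    img = np.empty(shape, dtype=np.int8)
    p_row = p4.sum(axis=(2, 3))  # horizontal pair p(a, b)
    p_col = p4.sum(axis=(1, 3))  # vertical pair p(a, c)
    p_one = p_row.sum(axis=1)    # singleton p(a)
    img[0, 0] = rng.random() < p_one[1]
    for c in range(1, cols):     # first row: one-dimensional Markov process
        a = int(img[0, c - 1])
        img[0, c] = rng.random() < p_row[a, 1] / p_one[a]
    for r in range(1, rows):
        a = int(img[r - 1, 0])   # first column: one-dimensional Markov process
        img[r, 0] = rng.random() < p_col[a, 1] / p_one[a]
        for c in range(1, cols):
            a, b, left = int(img[r - 1, c - 1]), int(img[r - 1, c]), int(img[r, c - 1])
            # Conditional probability of white given the other three pixels of the block.
            img[r, c] = rng.random() < p4[a, b, left, 1] / p4[a, b, left].sum()
    return img

rng = np.random.default_rng(2)
img = sample_2d_markov((150, 150), block_probs_beta_alpha(0.3, 0.4), rng)
signs = 1.0 - 2.0 * img
print((signs[:, :-1] * signs[:, 1:]).mean())  # estimate of beta_-
```

The same sampler accepts any 2 × 2 block probability array, but the resulting images are only expected to reproduce those block probabilities when the Pickard conditions are satisfied.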

The (θ, θ) cases differ, depending on how the gliders relate. When they overlap on a diagonal (e.g., (θ⌟, θ⌜)), a Pickard condition holds and a symmetry argument (discussed in Appendix C, Section C2, in relation to the (β\, θ⌟) case) shows that α = 0 is the maximum-entropy choice. When they overlap along an edge (e.g., (θ⌟, θ⌞)), the Pickard conditions do not hold and we use the method described in the following section.

Finally, the (θ, α) case satisfies both Pickard conditions.

H. Donut Algorithm

We now consider the two coordinate pairs, (β\, θ⌟) and (θ⌟, θ⌞). Because the Pickard conditions do not hold, it is necessary to depart from the Markov construction. The alternative we describe turns out to be much more useful than just providing a solution for these last cases: it also allows for creation of maximum-entropy image ensembles constrained by the 2 × 2 block probabilities derived from an arbitrary image.

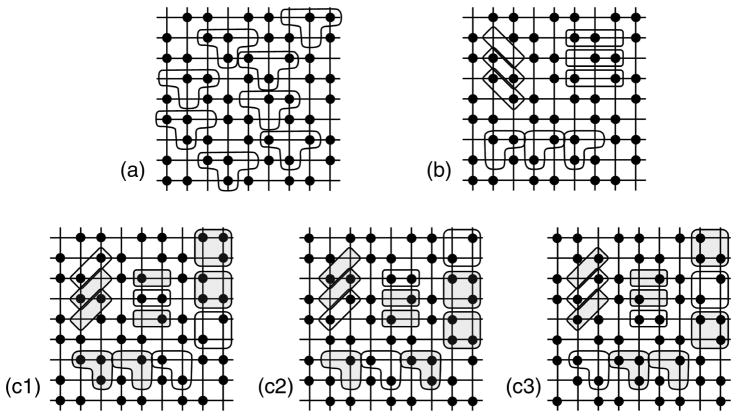

The basic idea is a two-step process. In the first step, we generate an ensemble (or a large example drawn from an ensemble) that satisfies the coordinate constraints, but is not necessarily maximum entropy. That is, we generate an ensemble that satisfies the constraints on 2 × 2 blocks, but may have unnecessary correlations among larger blocks. This step is somewhat ad hoc; we use different constructions for (β\, θ⌟) and (θ⌟, θ⌞), as described below. In the second step, which is generic, we increase the entropy of this ensemble by mixing it, in a way that preserves the constraints.

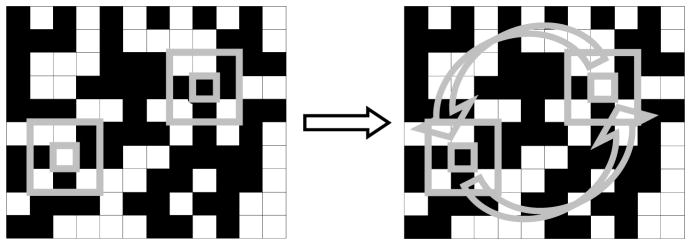

The second step, a procedure for maximizing the entropy by scrambling the pixels in a manner that preserves 2 × 2 block probabilities but not longer-range correlations, is diagrammed in Figure 3. The image is searched to identify two 3 × 3 regions for which the outer 8 pixels match identically. (Since there are 2⁸ possible configurations, many such matches will be present in a typical image of thousands to millions of pixels.) Then, the interiors of two such randomly chosen “donuts” are swapped, say, between locations A and B. This swap necessarily preserves the number of 2 × 2 blocks of each configuration, since the 2 × 2 blocks at location A have now been moved to location B and vice versa. However, longer-range correlations have been reduced, since the pixel at location A now has the longer-range context (e.g., next-nearest neighbors) of B and vice versa. The procedure is then iterated until the statistical properties of the image have stabilized. Further details and background on this algorithm are provided in Appendix D, including comments on the mathematical justification for the algorithm, its relationship to the classical Metropolis algorithm [26], and how it can be implemented efficiently.
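A single donut swap can be sketched in Python as follows. This is a deliberately simple illustration, not the efficient implementation discussed in Appendix D: candidate partners are found by random search, the function names are hypothetical, and the requirement that neither center lies within the other's 3 × 3 region is a guard added for this sketch so that the two borders are unaffected by the swap.

```python
import numpy as np

def border_key(img, r, c):
    """The 8 outer pixels of the 3 x 3 region centered at (r, c), as bytes."""
    patch = img[r - 1:r + 2, c - 1:c + 2].ravel()
    return np.delete(patch, 4).tobytes()

def donut_swap(img, rng, max_tries=5000):
    """Try to swap the centers of two 3 x 3 regions with identical borders (in place)."""
    rows, cols = img.shape
    r1, c1 = rng.integers(1, rows - 1), rng.integers(1, cols - 1)
    key = border_key(img, r1, c1)
    for _ in range(max_tries):
        r2, c2 = rng.integers(1, rows - 1), rng.integers(1, cols - 1)
        if max(abs(r1 - r2), abs(c1 - c2)) < 2:
            continue  # guard (assumption of this sketch): centers must not share a 2 x 2 block
        if img[r1, c1] != img[r2, c2] and border_key(img, r2, c2) == key:
            img[r1, c1], img[r2, c2] = img[r2, c2], img[r1, c1]
            return True
    return False  # no matching partner found for this location

def block_counts(img):
    """Histogram of the 16 possible 2 x 2 block configurations."""
    x = img.astype(int)
    code = 8 * x[:-1, :-1] + 4 * x[:-1, 1:] + 2 * x[1:, :-1] + x[1:, 1:]
    return np.bincount(code.ravel(), minlength=16)

rng = np.random.default_rng(3)
img = rng.integers(0, 2, size=(64, 64)).astype(np.int8)
counts_before = block_counts(img)
n_swaps = sum(donut_swap(img, rng) for _ in range(100))
print(n_swaps, np.array_equal(block_counts(img), counts_before))
```

Swaps between centers of the same color are skipped because they leave the image unchanged. Iterating `donut_swap` many times leaves `block_counts` fixed while mixing the longer-range structure.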

Fig. 3.

Donut construction for maximizing entropy while maintaining 2 × 2 block probabilities. Left: a pair of 3 × 3 regions is identified, for which the outer eight pixels match identically. Right: the interior pixels of the two regions are swapped. This step preserves all 2 × 2 block probabilities. Iterations of this step destroy longer-range correlations.

1. Applying the Donut Algorithm to the Non-Pickard Cases

The simpler of the non-Pickard cases is that in which the coordinates (θ⌟, θ⌞) are specified. With γ, the β’s, and the unspecified θ’s (θ⌝ and θ⌜) set to zero, the second halves of both Pickard conditions [in block probability form, Eqs. (58) and (59)] hold, since all pixels within the θ⌝ and θ⌜ gliders are independent. As shown in Appendix C, Section C1, this ensures that when the block probabilities are used to drive a two-dimensional Markov process, the resulting image retains the same block probabilities. However, this does not mean that the construction is maximum-entropy, since neither Pickard condition holds in its entirety, only one half of each. The problem is nonstationarity: the two gliders θ⌟ and θ⌞ combine to induce correlations of the end pixels within a 1 × 3 block. The reason for this becomes apparent from considering a 2 × 3 block whose first row is (A, B, C) and whose second row is (D, E, F). Say we choose the first row at random, since we would like the β’s to be zero. θ⌟ biases the parity of the pixels (B, D, E) and θ⌞ biases the parity of the pixels (B, E, F). Since these two blocks both contain B and E, the parity biases within each induce a correlation among D and F, the end pixels of the second row of the block, (D, E, F). This happens even if no correlation were present between the end pixels of the first row, (A, B, C). It is unclear how to choose the original row to ensure that subsequent rows have similar and maximally-random statistics.

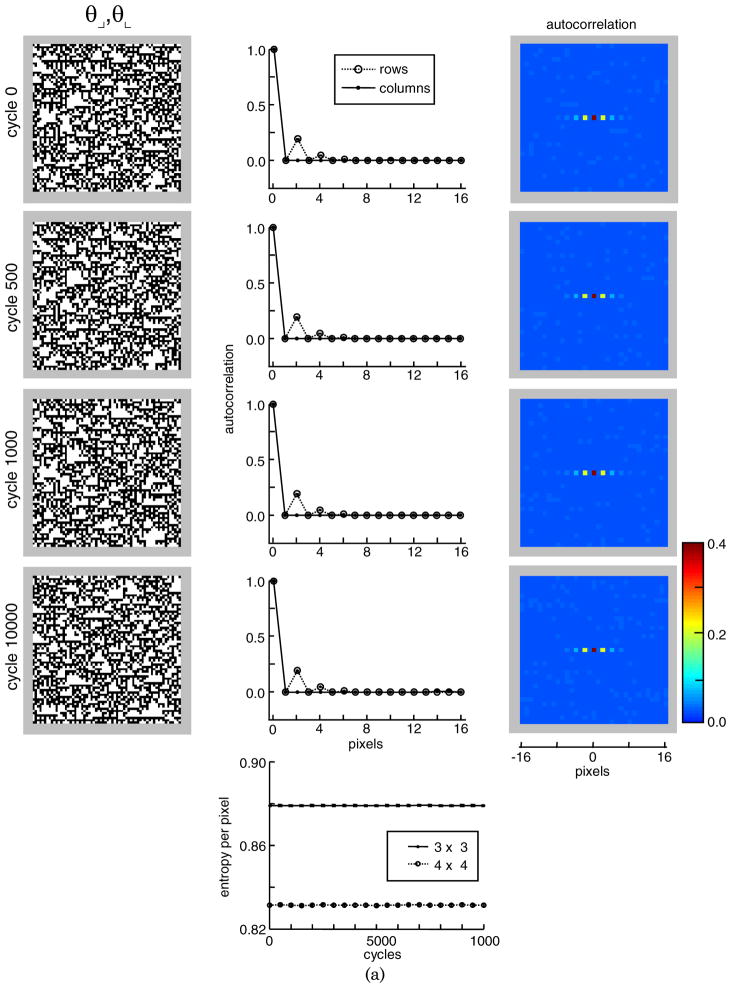

The donut algorithm circumvents this problem. Applying it to the results of the two-dimensional Markov process preserves the 2 × 2 block probabilities and the scrambling process guarantees that a stable, maximum-entropy sample results. In this case—as is seen from Figure 4(a)—there is virtually no change in the entropy that results from the donut procedure or in the visual characteristics of the image samples. So, while the donut procedure is necessary for the construction to be rigorously correct, it appears to have little practical impact.

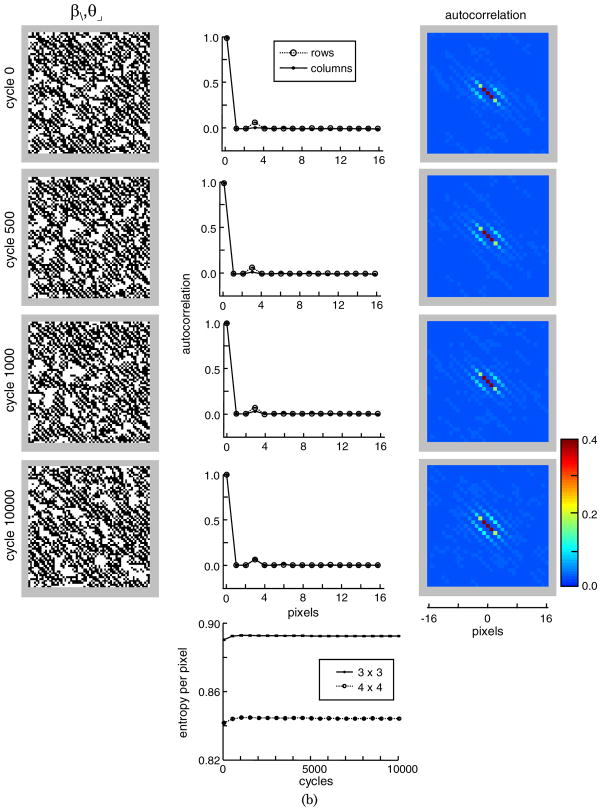

Fig. 4.

(Color online) Construction of images specified by (θ⌟, θ⌞) [panel (a)] and (β\, θ⌟) [panel (b)]. In each case, a starting image is created via an iterative rule [Appendix C, Section C1 for panel (a), Section C2 for panel (b)] and the donut algorithm is applied to ensure maximum entropy. Parameter values are 0.4 in each case. Autocorrelations (along rows, along columns, and in two dimensions) are shown adjacent to each image. The central pixel in the two-dimensional autocorrelogram has a value of 1.0, above the range of the colorbar. Entropy per pixel for 3 × 3 and 4 × 4 blocks is shown in the lower panels. Error bars (most smaller than the symbols) indicate s.e.m. across 16 runs.

For the (β\, θ⌟) case, only one half of one Pickard condition holds and the argument of Appendix C, Section C1, does not apply. Thus it is not readily apparent how to create a starting image with the requisite 2 × 2 block probabilities. Appendix C, Section C2 shows one way to do this, via a Markov process based on a “tee” configuration of pixels. A consequence of this construction is that weak correlations are induced at a horizontal spacing of 3 (i.e., between the end-pixels of a 1 × 4 block), but not in a vertical direction. Since the parameters themselves are symmetric with respect to interchange of horizontal and vertical axes, the presence of horizontal but not vertical correlations must be a violation of maximum-entropy.

As shown in Fig. 4(b), the donut algorithm fixes this: as it proceeds, horizontal correlations spread into the vertical direction, until they are equal in strength. Even though vertical correlations are generated, overall entropy increases, because of the effect of the swapping procedure on high-order correlations and larger blocks. As is the case for the (θ⌟, θ⌞) construction [Figure 4(a)], the changes induced by the donut algorithm have only a minor effect (if any) on the visual appearance of typical images.

2. Other Applications of the Donut Algorithm

Above, the donut algorithm was used to fine-tune a procedure that already generated nearly maximum-entropy samples. However, it is much more widely applicable, because it can be applied independent of the way in which the starting image is created. Thus, it can be applied to create classes of ensembles that are not at all close to those that are created by the Markov procedure. We illustrate this with two examples: first, construction of a maximum-entropy image specified by four texture coordinates and second, construction of a maximum-entropy image constrained by the 2 × 2 block probabilities of a binarized natural image.

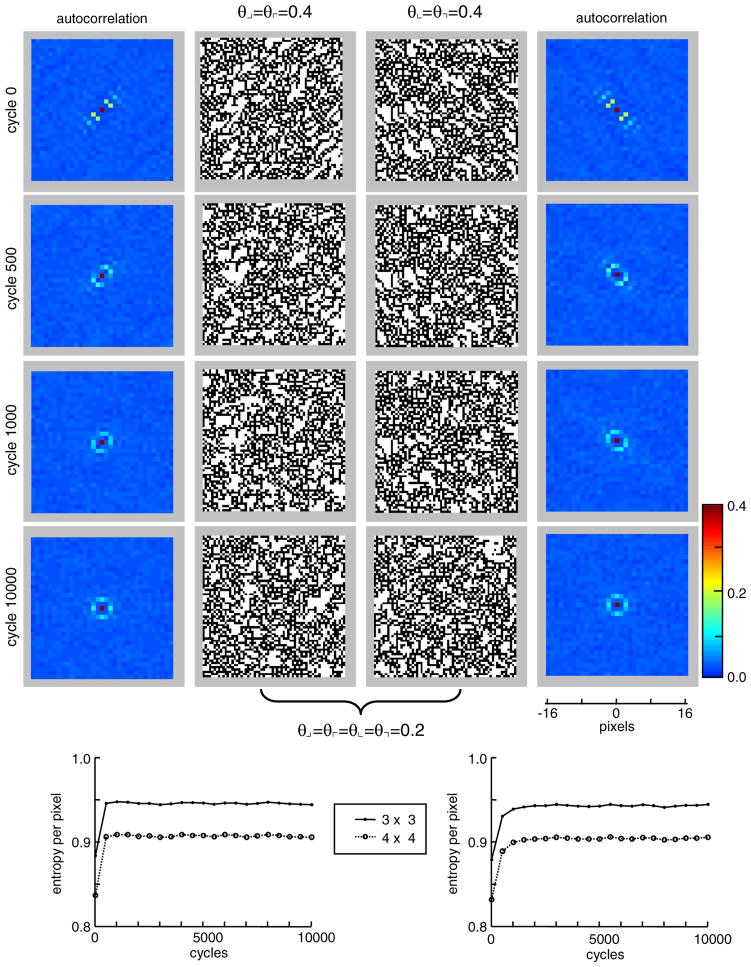

The first example, Fig. 5, is constructed to have a nonzero value of all the θ’s, i.e., θ⌟ = θ⌞ = θ⌝ = θ⌜ = 0.2, and all other texture parameters equal to zero. Here, no Pickard conditions hold and the Markov approach also cannot be applied, because it is unclear how it should be initialized. However, this kind of ensemble is readily generated by mixing together a pair of ensembles, each of which satisfies the Pickard conditions: one with θ⌟ = θ⌜ = 0.4 and one with θ⌞ = θ⌝ = 0.4. Swapping of pixels is carried out by the above donut procedure, with pixels free to swap between the ensembles. Since the swaps preserve the combined 2 × 2 counts, the resulting mixture has the desired block probabilities. In contrast to the examples of Fig. 4, the swapping procedure does have a visual effect: the diagonal structure of the starting images is lost. Not surprisingly, this is accompanied by a much larger change in the entropy (approximately 0.1 bit/pixel) compared to Fig. 4 (<0.01 bit/pixel).
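The parameter values of the mixture follow from a short calculation. The sketch below assumes that each texture coordinate is a linear function of the 2 × 2 block probabilities and that the two starting images contribute equal numbers of blocks; the superscripts (1) and (2) are labels introduced here for the two starting ensembles, and θ stands for any one of the four θ coordinates.

```latex
\theta^{\mathrm{mix}}
  = \tfrac{1}{2}\left(\theta^{(1)} + \theta^{(2)}\right)
  = \tfrac{1}{2}\,(0.4 + 0)
  = 0.2
```

Each θ coordinate is 0.4 in one starting ensemble and 0 in the other, so all four take the value 0.2 in the pooled ensemble, and coordinates that are zero in both remain zero.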

Fig. 5.

(Color online) Synthesis of images with multiple specified parameter values by mixing images with specified parameter pairs. Each cycle of the donut algorithm constructs a pair of images, by mixing the pair created on the previous cycle. The starting pair (cycle 0) consists of an image with θ⌟ = θ⌜ = 0.4 (left) and an image with θ⌞ = θ⌝ = 0.4 (right); the final pair consists of images with θ⌟ = θ⌞ = θ⌝ = θ⌜ = 0.2. All other texture parameters are equal to zero. Autocorrelation functions are shown adjacent to the images. The central pixel in the two-dimensional autocorrelogram has a value of 1.0, above the range of the colorbar. Entropy per pixel for 3 × 3 and 4 × 4 blocks is shown in the lower panels.

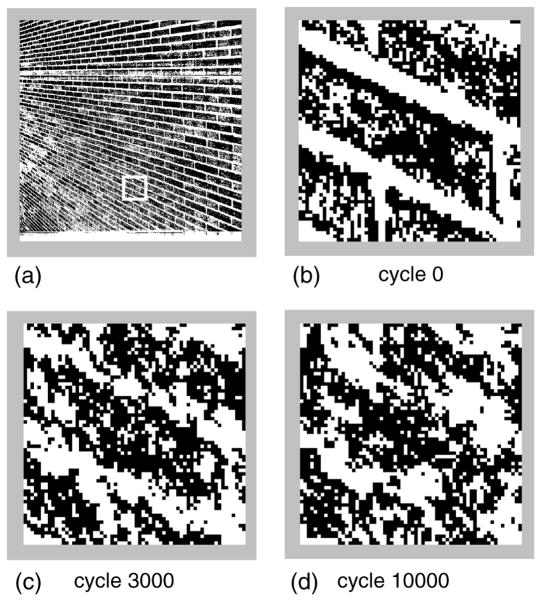

Finally, since the donut algorithm does not depend on the structure of the image, it can be applied to natural images as well. We illustrate this in Fig. 6, starting with a texture from the Brodatz [27] library [Fig. 6(a)]. We first binarize it and detrend it, Fig. 6(b), and then apply the donut algorithm. The result is an image in which the general diagonal correlation structure is preserved, but the multiscale detail (of bricks and mortar) is lost [Fig. 6(c), Fig. 6(d)]. This is just as one would expect from a procedure that explicitly preserves only the short-range correlations, so that the long-range correlations are limited to those that the short-range correlations imply. Put another way, the original image has both a short-range structure (the texture of the bricks and the typical orientation of the brick/mortar interface) and a long-range structure (the regularity of the brickwork lattice). The donut algorithm captures the former, but not the latter, and the resulting image [Fig. 6(d)] shows that the overall slant of the lattice, but not its regularity, is implied by the short-range structure.

Fig. 6.

Application of the donut algorithm to a natural texture. The starting image is a region taken from Brodatz texture 1.4.01 [panel (a)], median-thresholded to create a 64 × 64 binary image (b). (c) and (d): The result of applying the donut algorithm. These images have the same 2 × 2 block probabilities as the starting image [panel (b)]; other statistics are determined by maximum entropy. Even though only short-range correlations are specified, long-range correlations result. Following application of the donut algorithm, the overall oblique slant remains apparent, but the distinction between the two scales (mortar and bricks) is lost.
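The quantities that the donut algorithm holds fixed in this example can be tabulated directly. The following is a minimal sketch, not the authors' code: the function names are hypothetical, blocks are counted at all overlapping positions without wraparound (an assumption), and the detrending step mentioned in the text is omitted.

```python
from collections import Counter
from statistics import median


def median_binarize(gray):
    """Threshold a 2-D list of gray levels at its median (1 = above median)."""
    m = median(v for row in gray for v in row)
    return [[1 if v > m else 0 for v in row] for row in gray]


def block_probs_2x2(img):
    """Relative frequency of each 2 x 2 block in a binary image.

    Keys are (top-left, top-right, bottom-left, bottom-right).
    Assumption: overlapping blocks, no wraparound at the image border.
    """
    counts = Counter()
    for i in range(len(img) - 1):
        for j in range(len(img[0]) - 1):
            block = (img[i][j], img[i][j + 1], img[i + 1][j], img[i + 1][j + 1])
            counts[block] += 1
    total = sum(counts.values())
    return {block: c / total for block, c in counts.items()}
```

Comparing `block_probs_2x2` before and after a resynthesis step is one way to check that only statistics beyond the 2 × 2 neighborhood have changed.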

3. PSYCHOPHYSICAL METHODS

As described above, we established a coordinate system for the local statistics of binary images and developed algorithms that generate visual textures which have specified values of these statistics. Next, we determine visual sensitivity to these statistics, alone and in selected combinations. This section describes the psychophysical methods employed.

Each experiment focuses on one pair of image statistics (i.e., one coordinate pair) and consists of a determination of the salience of each coordinate in isolation and an analysis of how they interact. We use one of two schemes to explore each coordinate pair: a scheme in which we sample five points along each coordinate axis and two points along each of the four diagonals of the coordinate plane or a scheme in which we sample three points in each of 12 directions (including the four coordinate axes). To measure the visual salience of a set of image statistics, we used the texture segmentation paradigm developed by Chubb and coworkers in the study of IID textures [1], described below.

A. Stimuli

The basic stimulus consisted of a 64 × 64 array of pixels, in which a target region (a 16 × 64 rectangle, positioned eight pixels from one of the four edges of the array) is distinguished from the remainder of the array by its statistics. To ensure that the subject responds on the basis of segmentation (rather than, say, a texture gradient), we randomly intermix trials of two types: (a) trials in which the background is random and the target has a nonzero value of one or more image statistics and (b) trials in which the background has the nonzero values and the target is random. The range of values of the image statistics was determined in pilot experiments, to ensure that the experiment included conditions for which performance ranged from near-chance to near-ceiling.
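The stimulus geometry can be sketched as follows. This is an illustration under stated assumptions rather than the experimental code: the function names are hypothetical, the target strip is assumed to run the full length of the array parallel to the nearest edge, and the "random" texture is assumed to be IID with equal probability of each check color.

```python
import random

N = 64         # the array is N x N checks
TARGET_W = 16  # the target is a 16 x 64 strip
OFFSET = 8     # distance of the strip from the nearest edge, in checks


def target_mask(position):
    """N x N boolean mask, True inside the target strip.

    position: 'top', 'bottom', 'left', or 'right'.
    """
    lo = OFFSET if position in ("top", "left") else N - OFFSET - TARGET_W
    band = range(lo, lo + TARGET_W)
    if position in ("top", "bottom"):
        return [[i in band for _ in range(N)] for i in range(N)]
    if position in ("left", "right"):
        return [[j in band for j in range(N)] for _ in range(N)]
    raise ValueError(position)


def compose_stimulus(structured, rng, position, target_is_structured):
    """Combine a structured N x N texture with an IID random texture.

    target_is_structured=True  -> trial type (a): structured target, random background.
    target_is_structured=False -> trial type (b): random target, structured background.
    """
    mask = target_mask(position)
    out = []
    for i in range(N):
        row = []
        for j in range(N):
            use_structured = mask[i][j] == target_is_structured
            row.append(structured[i][j] if use_structured else rng.randint(0, 1))
        out.append(row)
    return out
```

Passing the random number generator explicitly (for example `random.Random(0)`) keeps individual trials reproducible.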

Stimuli were presented on a mean-gray background, followed by a random mask. The display size was 15 × 15 deg (check size, 14 min), contrast was 1.0, and viewing distance was 1 m. Initial studies were carried out on a CRT monitor with a luminance of 57 cd/m², a refresh rate of 75 Hz, and a presentation time of 160 ms, driven by a Cambridge Research VSG2/5 system; later studies were carried out on an LCD monitor with a luminance of 23 cd/m², a refresh rate of 100 Hz, and a presentation time of 120 ms, driven by a Cambridge Research ViSaGe system. Results (including one subject, MC, tested under both conditions) were very similar under these two conditions: for γ, sensitivities (see below) were 0.141 for both setups; for θ⌟, thresholds were 0.730 for the VSG2/5 system versus 0.735 for the ViSaGe; and for α, 0.495 and 0.523 for the VSG2/5 (two measures) versus 0.536 for the ViSaGe.
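As a consistency check on these display parameters, the arithmetic can be written out; this is a worked calculation from the stated values, not an additional measurement.

```latex
64 \times 14' = 896' \approx 14.9^{\circ}, \qquad
160\ \mathrm{ms} \times 75\ \mathrm{Hz} = 12\ \text{frames}, \qquad
120\ \mathrm{ms} \times 100\ \mathrm{Hz} = 12\ \text{frames}
```

The 64-check array at 14 min per check thus spans the stated 15 deg to within rounding, and the two presentation times correspond to the same number of video frames on the two setups.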

B. Subjects

Studies were conducted in six normal subjects (two male, four female), ages 25 to 51. Three subjects (AO, CC, RM) were naïve to the purpose of the experiment. Five subjects (all but DT) were practiced psychophysical observers in tasks involving visual textures. DT had no observing experience prior to the current study. All had visual acuities (corrected if necessary) of 20/20 or better.

C. Procedure

The subject’s task was to identify the position of the target (a four-alternative forced choice texture segregation task). Subjects were told that the target was equally likely to appear in any of four locations (top, right, bottom, left), and were instructed to maintain central fixation on a one-pixel dot, rather than to attempt to scan the stimulus. Auditory feedback for incorrect responses was given during training but not during data collection. After performance stabilized (approximately two hours for a new subject), blocks of the 288 trials described above (with trials presented in randomized order) were presented. We collected data from 15 such blocks (4320 trials per subject), grouped into three or four experimental sessions, yielding 120 to 240 responses for each coordinate pair.

D. Analysis

Measured values of the fraction correct (FC) are fit to Weibull functions via maximum likelihood:

$$\mathrm{FC}(x) = \frac{1}{4} + \frac{3}{4}\left(1 - 2^{-(x/a_r)^{b_r}}\right) \tag{70}$$

Initially, this is carried out separately along each ray r. For the rays along the coordinate axes, x is the coordinate value; for the rays in the oblique directions, x is the distance from the origin. In most cases, the Weibull shape parameter (the exponent $b_r$) was in the range 2.2 to 2.6 for each ray or had confidence limits that included this range. Therefore, we then fit the entire dataset in each coordinate plane by a set of Weibull functions, constrained to share a common exponent b, but allowing for different values of the position parameter $a_r$ along each ray. The fitted value of $a_r$, which is the value of the image statistic that yields performance halfway between floor and ceiling, is taken as a measure of threshold. This measured threshold provides a single point on an isodiscrimination contour (e.g., Fig. 8). Correspondingly, its reciprocal $1/a_r$ measures perceptual sensitivity to changes in the direction of the ray r. Error bars (95% confidence limits) were determined via 200-sample bootstraps.
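The single-ray fit can be sketched as follows. This is a minimal illustration, not the authors' fitting code: it assumes that the Weibull function has the form FC(x) = 1/4 + (3/4)(1 − 2^(−(x/a)^b)), which places the threshold a at a fraction correct of 0.625, and it replaces a numerical optimizer with a coarse grid search. The function names, the grids, and the example counts are all hypothetical.

```python
import math

CHANCE = 0.25  # guessing rate in a four-alternative forced-choice task


def weibull_fc(x, a, b):
    """Fraction correct at stimulus strength x (assumed form of Eq. (70)).

    Rises from 0.25 at x = 0 toward 1.0, and equals 0.625 at x = a.
    """
    return CHANCE + (1.0 - CHANCE) * (1.0 - 2.0 ** (-((x / a) ** b)))


def neg_log_likelihood(a, b, data):
    """Binomial negative log-likelihood, up to an additive constant.

    data: list of (x, n_correct, n_trials) tuples for one ray.
    """
    total = 0.0
    for x, k, n in data:
        # Clip so that log() stays finite when the model predicts 0 or 1.
        p = min(max(weibull_fc(x, a, b), 1e-9), 1.0 - 1e-9)
        total -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total


def fit_weibull(data, a_grid, b_grid):
    """Grid-search maximum-likelihood estimate of (a, b) for one ray."""
    return min(
        ((a, b) for a in a_grid for b in b_grid),
        key=lambda ab: neg_log_likelihood(ab[0], ab[1], data),
    )


# Hypothetical counts for one ray (not data from the paper).
example = [(0.1, 35, 120), (0.2, 52, 120), (0.3, 76, 120),
           (0.4, 98, 120), (0.5, 112, 120)]
a_hat, b_hat = fit_weibull(
    example,
    a_grid=[i / 100 for i in range(5, 101)],
    b_grid=[i / 10 for i in range(10, 41)],
)
```

The shared-exponent fit described in the text would sum `neg_log_likelihood` over all rays in a plane with a single b and one a per ray, and the bootstrap confidence limits would come from refitting resampled trial counts.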

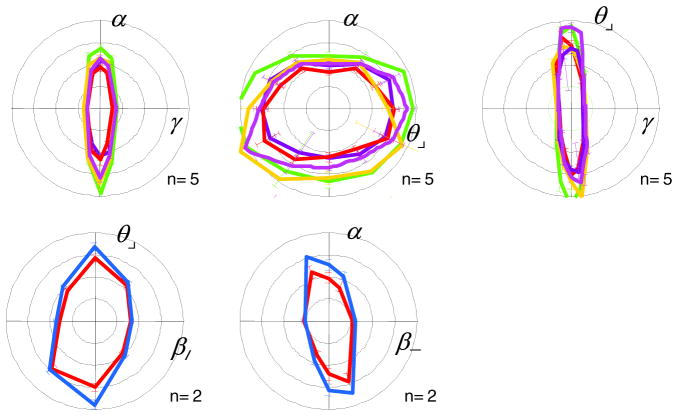

Fig. 8.

Isodiscrimination contours (ICs) in five coordinate planes. The distance of the contour from the origin indicates the threshold $a_r$ for individual image statistics (along the axes) and their mixtures (in oblique directions); threshold is defined by the value required to achieve a fraction correct of 0.625, halfway between chance and perfect [Eq. (70)]. The outermost circle corresponds to a coordinate value of 1.0. Error bars, most no larger than the contour line thickness, are 95% confidence limits. Task and subject key as in Fig. 7. ICs are approximately elliptical, and in some planes, they are tilted.

4. PSYCHOPHYSICAL RESULTS

Here, we measure the salience to the human visual system of the image statistics described above, alone and in combination. Following the work of Chubb et al. [1], salience is operationally defined by the ability of a change in a value of the statistic to support texture segmentation. We first consider changes in individual statistics, and then sample their interactions.

A. Discrimination along Coordinate Axes

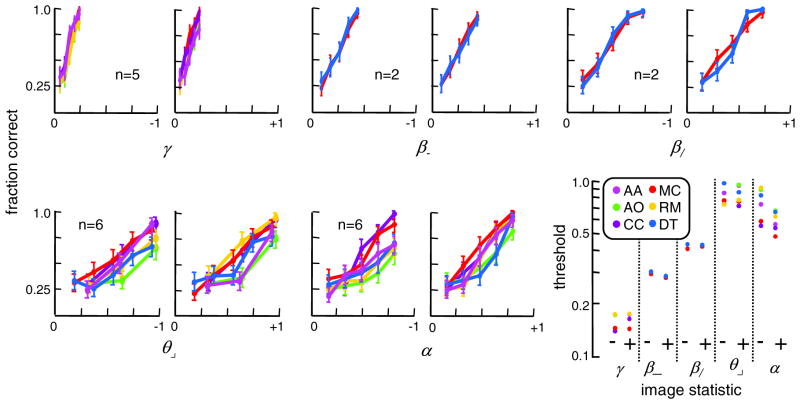

Figure 7 shows psychometric functions for performance in a four-alternative forced-choice segmentation task (see Methods), driven by variations of each of the kinds of texture coordinates.

Fig. 7.

Line graphs: performance in a brief four-alternative forced choice segmentation task for image statistics γ, β−, β/, θ⌟, and α. Chance performance is a fraction correct of 0.25; error bars are 95% confidence limits. Final panel: summary of thresholds $a_r$, obtained by Weibull-function fits to the individual psychometric curves [Eq. (70)]. Consistently across subjects, thresholds for negative and positive variations of each statistic are closely matched and sensitivities across the statistics show systematic differences.

As seen from the individual plots, the variation across observers was quite small, for both the shape and position of the psychometric functions. In terms of the thresholds $a_r$ [corresponding to a fraction correct of 0.625, halfway between chance and perfect, Eq. (70)], there was approximately a 10% scatter for γ, β−, and β/ and a 20% scatter for θ⌟ and α.

Within observers, there was little difference in salience for negative versus positive variations of the coordinates: a 20% difference for α (higher sensitivity for positive variations than for negative ones, p < 0.01, two-tailed paired t-test) and less than a 10% difference (p > 0.1) for the others.

Across all observers, the thresholds for a fraction correct of 0.625 are γ, 0.157 ± 0.006, n = 5; β−, 0.286 ± 0.003, n = 2; β/, 0.415 ± 0.009, n = 2; θ⌟, 0.824 ± 0.047, n = 6; α, 0.648 ± 0.042, n = 6 (mean ± s.e.m., number of subjects). This corresponds to a sensitivity rank-order of γ > β− > β/ > α > θ⌟, consistent across all subjects. While sensitivity to lower-order statistics is generally greater than for higher-order statistics, there is only a fivefold difference between the least-salient and the most-salient statistic and sensitivity is not a monotonic function of the order of the statistic (i.e., salience is greater for the fourth-order statistic α than for the third-order statistic θ⌟). Thus, the human observer is sensitive to statistics of all orders and the high-order statistics are not simply a sequence of progressively smaller corrections.
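The rank order and the roughly fivefold range can be recomputed from the thresholds listed above, using sensitivity = 1/threshold. In this sketch the dictionary keys are ASCII labels introduced here for the coordinates, and 0.157 is taken as the γ threshold, as the stated rank order implies.

```python
# Mean thresholds at a fraction correct of 0.625, as listed in the text.
thresholds = {
    "gamma": 0.157,
    "beta_cardinal": 0.286,  # beta along rows (or columns)
    "beta_diagonal": 0.415,
    "theta": 0.824,
    "alpha": 0.648,
}

# Sensitivity is the reciprocal of threshold.
sensitivities = {name: 1.0 / a for name, a in thresholds.items()}

# Most sensitive first.
rank_order = sorted(sensitivities, key=sensitivities.get, reverse=True)

# Ratio of the largest to the smallest sensitivity.
sensitivity_range = max(sensitivities.values()) / min(sensitivities.values())
```

Here `rank_order` places `alpha` ahead of `theta`, which is the non-monotonic step noted in the text, and `sensitivity_range` is the ratio of the θ threshold to the γ threshold.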

The relative sensitivities to the parameters are relevant to the logic of choosing coordinate axes, rather than maximum-entropy loci, when these entities differ. For example (as mentioned above at the end of Section 2.D.), the coordinate axis corresponding to γ (namely, all other parameters set to zero) is not the same as the maximum-entropy locus corresponding to γ [namely, (β, θ, α) = (γ², γ³, γ⁴)]. For measuring the salience of γ, this distinction is moot: even when γ is twice threshold (e.g., γ = 0.3) and performance is at ceiling, the other parameter values are far below their thresholds: (β, θ, α) = (0.09, 0.027, 0.0081). However, when measuring the threshold for α, the distinction is critical: thresholds are at approximately α = 0.5. Had we attempted to measure this along a maximum-entropy trajectory, we would have chosen γ = α^(1/4) (rather than γ = 0), which would have been markedly suprathreshold. This generalizes: for measuring the sensitivity to a high-order parameter, low-order parameters must be set to zero so that they don’t contribute to detection, but for measuring the sensitivity to a low-order parameter, this choice (set-to-zero versus maximum-entropy) is moot.
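The two numerical claims in this argument can be checked with a few lines. The sketch assumes the maximum-entropy locus stated in the text, (β, θ, α) = (γ², γ³, γ⁴); the function names are hypothetical, and the γ threshold of 0.157 is the across-subject mean reported above.

```python
GAMMA_THRESHOLD = 0.157  # mean threshold for gamma reported in the text


def max_entropy_locus(gamma):
    """(beta, theta, alpha) implied by gamma on its maximum-entropy locus."""
    return gamma ** 2, gamma ** 3, gamma ** 4


def gamma_for_alpha_on_locus(alpha):
    """Value of gamma that the same locus would require for a given alpha."""
    return alpha ** 0.25


# Case 1: gamma at about twice threshold; the implied higher-order values are small.
beta, theta, alpha = max_entropy_locus(0.3)

# Case 2: alpha near its threshold of about 0.5; the implied gamma is large.
gamma_needed = gamma_for_alpha_on_locus(0.5)
multiples_of_threshold = gamma_needed / GAMMA_THRESHOLD
```

In the second case the required γ is about 0.84, several times its own threshold, so a measurement along the maximum-entropy trajectory would be dominated by γ rather than by α.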

B. Discrimination in Selected Planes

To determine how image statistics interact at the level of perception, we measured segmentation thresholds along oblique directions in coordinate planes. As indicated in Table 2, there are 15 unique kinds of planes, once the symmetries of the coordinates are taken into account. Here we focus on five of these planes ((γ, α), (θ⌟, α), (γ, θ⌟), (β/, θ⌟), (β−, α)); this suffices to identify several kinds of behavior and suggest what is likely to be generic. (The unspecified coordinates are set to zero or chosen by maximum entropy as discussed above; see Table 2.)