Abstract

Community-based participatory research (CBPR) has been widely used in public health research over the last decade as an approach to developing culturally centered interventions and collaborative research processes in which communities are directly involved in constructing and implementing these interventions and in other applications of findings. Little is known, however, about CBPR pathways of change and how these academic–community collaborations may contribute to successful outcomes. A new health CBPR conceptual model (Wallerstein N, Oetzel JG, Duran B et al. CBPR: What predicts outcomes? In: Minkler M, Wallerstein N (eds). Community-Based Participatory Research for Health, 2nd edn. San Francisco, CA: Jossey-Bass, 2008) proposes relationships among four components: context; group dynamics; the extent of community-centeredness in the intervention and/or research design; and outcomes, that is, the impact of these participatory processes on CBPR system change and health outcomes. This article seeks to identify, through a comprehensive literature review, instruments and measures that relate to these distinct components of the CBPR model and to present them in an organized and indexed format for researcher use. Specifically, 273 articles were identified in a review of CBPR (and related) literature from 2002 to 2008. Based on this review and on recommendations from a national advisory board, 46 CBPR instruments were identified, and each was reviewed and coded using the CBPR logic model. The 46 instruments yielded 224 individual measures of characteristics in the CBPR model. While this study does not investigate the quality of the instruments, it does provide information about reliability and validity for specific measures. Group dynamics had the largest number of identified measures, while context and CBPR system and health outcomes had the fewest. Consistent with other summaries of instruments, such as Granner and Sharpe’s inventory (Granner ML, Sharpe PA. Evaluating community coalition characteristics and functioning: a summary of measurement tools. Health Educ Res 2004; 19: 514–32), validity and reliability information were often lacking: one or both were available for only 65 of the 224 measures. This summary of measures provides a starting point for new and continuing partnerships seeking to evaluate their progress.

Introduction

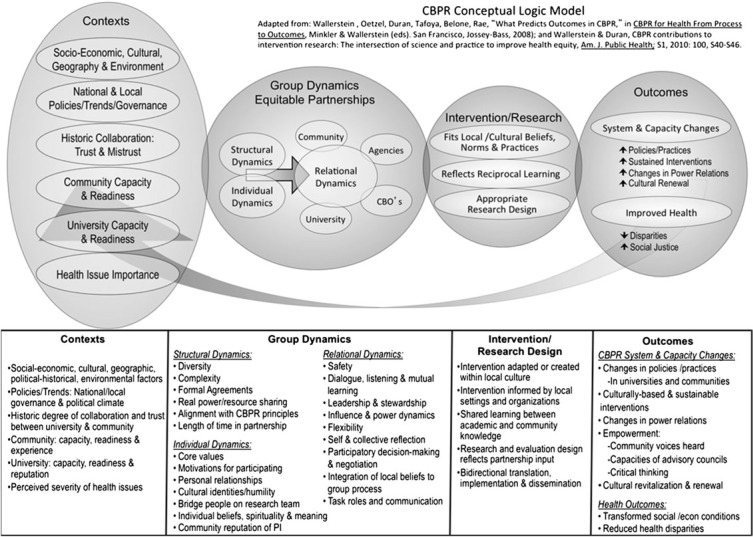

The use of community-based participatory research (CBPR) to address health disparities in under-represented communities has been widely regarded as a promising practice [1–4]. A central tenet of CBPR is a collaborative effort between community partners and research institutions to engage in research that benefits the community. Even though this research approach has been increasingly and extensively used, gaps remain in understanding how CBPR processes work and how partnership success is evaluated. A challenge to understanding the impact of CBPR partnerships and participation in health research, whether epidemiology, intervention or policy research, is the paucity of empirically tested and validated conceptual models of CBPR. In addition, there is no comprehensive database in which practitioners and researchers can locate instruments constructed for evaluating partnerships. In coordination with a national advisory board of academic and community experts, Wallerstein et al. [1] proposed a CBPR logic model (see Fig. 1) that identifies and dissects the primary components describing how CBPR may be implemented across diverse settings, studies and populations. The model contains four major components: context, group dynamics, interventions and outcomes. The model also identifies a number of specific characteristics (i.e. concepts) within each of the four primary components. Our manuscript uses this model to organize a matrix of the instruments and measures we located through a comprehensive literature search. For clarity, we use the term instrument to refer to a complete tool developed by a single author or author team, and the term measure to refer to a specific part of an instrument, which may relate to multiple characteristics of the model. Thus, a single instrument may contain multiple measures.
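The instrument/measure distinction is a one-to-many relationship in which each measure is coded to a component and a characteristic of the logic model. The minimal Python sketch below shows one way a matrix entry could be represented; the class names, field names and the example instrument are hypothetical and are not taken from the authors' matrix.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Measure:
    """A coded part of an instrument (e.g. a subscale or a set of items)."""
    label: str
    component: str       # logic-model component, e.g. "Context"
    characteristic: str  # characteristic within that component


@dataclass
class Instrument:
    """A complete tool developed by a single author or author team."""
    name: str
    measures: list[Measure] = field(default_factory=list)

    def characteristics(self) -> set[tuple[str, str]]:
        """Distinct (component, characteristic) pairs covered by this instrument."""
        return {(m.component, m.characteristic) for m in self.measures}


# Hypothetical instrument: one tool, several measures, several characteristics.
survey = Instrument(
    name="Hypothetical partnership survey",
    measures=[
        Measure("Decision-making subscale", "Group dynamics",
                "Participatory decision making and negotiation"),
        Measure("Trust items", "Context", "Historical context of collaboration"),
        Measure("Capacity items", "Outcomes", "Empowerment and community capacity"),
    ],
)
print(len(survey.measures), len(survey.characteristics()))  # 3 3
```

Keeping measures as separate records is what allows a single instrument to appear under several characteristics of the model.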

Fig. 1.

CBPR model.

Through a pilot study funded by the National Center for Minority Health and Health Disparities in 2006 and administered through the Native American Research Centers for Health (NARCH), the Universities of New Mexico and Washington launched a 3-year national CBPR research project to lay the groundwork for studying the complexity of how CBPR processes influence or predict outcomes. The national advisory board provided overall guidance and direction for the pilot study. A major focus of the pilot study was the development of a conceptual logic model that represents the state of CBPR research, provides an evaluation framework for partnership effectiveness and collective reflection among partners, and indicates further directions for research on what constitutes successful CBPR.

To develop the model, the group used multiple methods in an iterative process, including a comprehensive literature review of public health and social science databases replicating and expanding the Agency for Healthcare Research and Quality (AHRQ) 2004 study [4]; an internet survey with 96 CBPR project participants, which pilot-tested components and characteristics uncovered in the literature review; reflection and input by 25 national academic and community CBPR experts and ongoing analysis by our research team [1]. The result is a synthesized model, which represents a consensus of core CBPR components including contexts, group dynamic processes (i.e. individual, relational and structural), intervention/research design and outcomes (see Fig. 1) and which can also evolve with adaptations to various partnerships.

With this synthesized conceptual model, the team then revisited the articles identified in the literature review to compile existing instruments that measure the identified CBPR components and characteristics. We also identified the characteristics in the model with few or no assessment instruments/measures. Though the conceptual model represents a step forward in providing a logic framework based on empirical research and expert practice, research is still needed to test its validity with different partnerships and to assess which components and characteristics may need to be adapted for evaluation in specific contexts and populations. Key to furthering our understanding of how CBPR works in diverse settings is identifying instruments for the distinct ‘processes’ and ‘outcomes’ in order to empirically test the pathways of change. This manuscript reviews the existing instruments and measures as framed by the conceptual logic model. The goal was to provide a matrix of available instruments/measures for CBPR researchers and practitioners who want to evaluate their own partnerships’ success, and to point to the research still needed to establish the quality and usefulness of these instruments and measures for assessing the best and emerging partnering processes that contribute to outcomes across populations and projects.

Materials and methods

In order to identify the variety of instruments used for characteristics in the CBPR logic model, we searched for the terms listed in the CBPR model and for related terms. This section describes the date range, information sources, eligibility criteria, data coding process and data items.

Date range of literature search

To assess the range of measurement instruments pertaining to the components and characteristics of the CBPR model, we reviewed the literature that informed the CBPR conceptual model. The search procedures are detailed elsewhere [1]; a brief overview is presented here. In 2004, the AHRQ published results from a commissioned study to determine the existing evidence on the conduct and evaluation of CBPR. According to that report, 3 March 2003 was the last date of its systematic search [4]. To overlap with and build on the AHRQ work, our literature search covered publications from 2002 through 2008. The 2008 end date was chosen to leave sufficient time for coding instruments, constructing the matrices and analyzing the data. Some instruments outside this date range are included in the matrices if they were cited and used by authors identified in the present search or if they were recommended by the national advisory board.

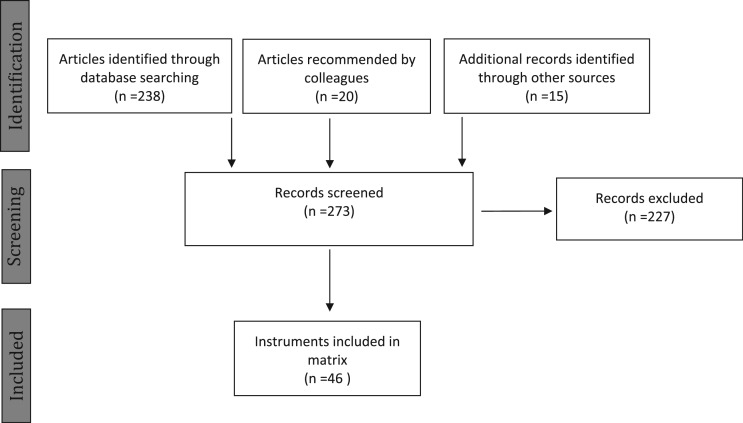

Information sources and search

The search was conducted in PubMed, SciSearch and SocioFile using the following terms, paralleling the earlier AHRQ study: CBPR, participatory research, action research, participatory action research, participatory evaluation, community driven research, action science, collaborative inquiry, empowerment and evaluation. To deepen the understanding of participation from the perspective of the macro- and micro-forces of power and relationships, the Business Source Premier database was searched for full-text articles with the following terms: effectiveness, process, structure, communication, participation, satisfaction, roles, leadership, outcomes, climate and voice. A third search was conducted in PsycINFO and Communication and Mass Media Complete using the same terms (with the modifiers group, organization and team for each key word), excluding research on (i) therapy groups, (ii) computer-mediated communication and (iii) educational groups (research on teaching group dynamics). The databases yielded the following distribution: 45 PubMed, 85 SciSearch/SocioFile, 21 Business Source Premier and 87 PsycINFO/Communication and Mass Media Complete. Additional survey instruments and articles were identified through a Google search of key CBPR terms, contributions from our national advisory board of CBPR experts (detailed in [1]) and our cross-indexing of a key inventory of coalition measures [5], which included some instruments that predated our search window. The final pool of full-text articles, tools and web sites consisted of 273 items: 238 from the databases, 20 recommended by advisory board colleagues and 15 from other sources (Fig. 2).
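The source counts reported above can be checked with simple arithmetic. The Python sketch below only restates the figures given in the text; the dictionary labels are ours.

```python
# Records retrieved from each database search, as reported in the text.
database_hits = {
    "PubMed": 45,
    "SciSearch/SocioFile": 85,
    "Business Source Premier": 21,
    "PsycINFO/Communication and Mass Media Complete": 87,
}
# Records added outside the database searches.
other_hits = {"Advisory board recommendations": 20, "Other sources": 15}

from_databases = sum(database_hits.values())
final_pool = from_databases + sum(other_hits.values())
print(from_databases, final_pool)  # 238 273
```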

Fig. 2.

Description of method for comprehensive review of literature.

Eligibility criteria

The 273 articles were reviewed to identify instruments measuring the distinct ‘processes’ and/or ‘outcomes’ of the proposed CBPR model. Articles were included in this step of the study if they met the following criteria: (i) collaboration was discussed, (ii) participation was mentioned, (iii) characteristics of the participatory processes and/or outcomes were identified and (iv) the article included an instrument or referenced an instrument from another source. Articles identified from multiple sources were recorded once and attributed to the first source from which they were identified.
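The four inclusion criteria and the first-source rule for duplicates can be expressed as a small screening routine. This is a hypothetical Python sketch: the record fields, and the use of a normalized title as the duplicate key, are assumptions made for illustration, since the text does not say how duplicates were matched.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A retrieved article (field names are illustrative, not from the study)."""
    title: str
    source: str                       # database or channel that returned it
    discusses_collaboration: bool     # criterion (i)
    mentions_participation: bool      # criterion (ii)
    identifies_characteristics: bool  # criterion (iii): processes and/or outcomes
    has_instrument: bool              # criterion (iv): includes or cites an instrument


def meets_criteria(r: Record) -> bool:
    """An article is eligible only if all four criteria hold."""
    return (r.discusses_collaboration and r.mentions_participation
            and r.identifies_characteristics and r.has_instrument)


def screen(records: list[Record]) -> list[Record]:
    """Record each article once, under its first source, and keep eligible ones.

    Assumes `records` is in retrieval order; duplicates are matched on a
    normalized title, which is a hypothetical matching rule.
    """
    seen: set[str] = set()
    eligible: list[Record] = []
    for r in records:
        key = " ".join(r.title.lower().split())
        if key in seen:
            continue  # later copies are dropped; the first source is kept
        seen.add(key)
        if meets_criteria(r):
            eligible.append(r)
    return eligible


records = [
    Record("Article A", "PubMed", True, True, True, True),
    Record("article  A", "Advisory board", True, True, True, True),  # duplicate
    Record("Article B", "PsycINFO", True, True, True, False),        # no instrument
]
print([(r.title, r.source) for r in screen(records)])
# [('Article A', 'PubMed')]
```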

Articles were examined for instruments and measures that reflected the model characteristics. For example, within context, articles were reviewed for instruments/measures of community capacity, health issues, national/local policies/trends and political governance, historical context of collaboration and organizational capacity. The final 46 unique studies comprised 21 articles or reports and 25 community projects. Each instrument contains multiple measures, for a total of 224. All studies are quantitative except Pluye et al. [6], who adapted the 1993 scales of Goodman, McLeroy, Steckler and Hoyle [7] and ultimately converted their qualitative data for quantitative analysis; we therefore included this article in the final pool. References for all studies are included with the matrix. All instruments presented in this review are published and publicly available (Fig. 2).

Data coding process

The instruments were placed into the matrix using content analysis and by asking two questions: (i) ‘What is the overall component?’ and (ii) ‘What is the specific characteristic within the component?’ Two graduate students completed the coding under the direction of a faculty member of the research team. First, the faculty member trained the students to recognize the components and characteristics in instruments, using a codebook of definitions for each characteristic, and then to code the instruments accordingly. Second, the students practiced coding several instruments together to increase inter-coder agreement. Third, they each coded the same 10% of the instruments to establish inter-coder reliability. Finally, they coded the remaining instruments independently. When the appropriate category was difficult to determine, the coders worked with one other member of the research team to decide on the best category. Inter-coder reliability for the 10% overlap was 0.90 (Cohen’s kappa).
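Cohen’s kappa corrects the observed proportion of agreement p_o for the agreement expected by chance p_e, as κ = (p_o − p_e)/(1 − p_e), where p_e is computed from each coder’s category frequencies. A minimal Python sketch follows; the two code lists are invented for illustration and do not reproduce the study’s data.

```python
from __future__ import annotations

from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who each assign one category per unit."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    n = len(coder_a)
    # Observed agreement: share of units given the same category by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: products of the coders' marginal proportions, summed.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical component codes for ten overlap units
# (C = context, G = group dynamics, O = outcomes).
coder_1 = "C C G G G G G O O O".split()
coder_2 = "C C G G G G O O O G".split()
print(round(cohens_kappa(coder_1, coder_2), 2))  # 0.68
```

Here the coders agree on 8 of 10 units (p_o = 0.80), chance agreement is p_e = 0.38, so κ ≈ 0.68; identical code lists give κ = 1.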

Data items

Moher et al. [8] identified 27 items (the PRISMA statement) that should be reported in systematic reviews. Although our goal was a comprehensive review of the state of measurement science that could serve as a starting place, we were able to apply most of the PRISMA criteria in our methods and final matrix. Many of these instruments do not yet have outcome data, nor have they been evaluated for application to a cross-section of CBPR partnerships. The matrix therefore organizes the data according to the components of the logic model and their characteristics. For each entry, the matrix lists the instrument or measure, the citation of the original source, a secondary source if the original was outside the date range, the original concept as described in the instrument or measure, the original item number or location of the items, the population for which the instrument was originally created, and reliability and validity information when available. Instruments obtained as secondary sources from journal articles do not list the original item number because the original instrument was not consulted. Because most of the instruments have not been evaluated, there is no within-study bias to address; however, we have included key information about the data we located.

Many instruments were not classified as a whole. In these cases, subscales or even a few items became the coding unit. The goal was to provide the most accurate and thorough inventory possible of measures related to characteristics in the CBPR model. Thus, our concern was not with retaining the intention of the original instrument but with documenting measures related to characteristics in this specific CBPR model (so some instruments are listed under multiple categories).

Results

The coding of the final 46 instruments led to three major categorical tables in the matrix: supplementary Table 1, context; supplementary Table 2, group dynamics; and supplementary Table 3, outcomes (Supplementary data are available at Health Education Research online). Each categorical table was then divided into sub-tables listing the measures related to each characteristic in the CBPR model. Finally, we report reliability and validity information and the population for which each instrument was originally created. For each table, we report the number of instruments that contain measures for each characteristic in the CBPR model. We identified a total of 224 measures of CBPR characteristics in the 46 instruments. Table I summarizes the number and percentage of measures under each component of the CBPR logic model.

Table I.

Table of results showing the final percentages of measures for characteristics identified in the CBPR model

| Component | Characteristic | N | % |
|---|---|---|---|
| Context | Community capacity | 12 | 5.4 |
| | Health issues | 5 | 2.2 |
| | Historical context of collaboration | 4 | 1.8 |
| | National/local policies and political governance | 2 | <1 |
| | Organizational capacity | 5 | 2.2 |
| | Total | 28 | 12.5 |
| Group dynamics | Relational | | |
| | Participatory decision making and negotiation | 24 | 10.7 |
| | Dialogue and mutual learning | 22 | 9.8 |
| | Leadership and stewardship | 19 | 8.5 |
| | Task communication and action | 18 | 8.0 |
| | Self and collective reflection | 12 | 5.4 |
| | Influence and power | 7 | 3.1 |
| | Flexibility | 2 | <1 |
| | Conflict | 3 | 1.3 |
| | Structural | | |
| | Complexity | 16 | 7.1 |
| | Agreements | 9 | 4.0 |
| | Diversity | 7 | 3.1 |
| | Length of time in partnership | 7 | 3.1 |
| | Power and resource sharing | 3 | 1.3 |
| | Individual | | |
| | Congruence of core values | 1 | <1 |
| | Individual beliefs | 11 | 4.9 |
| | Community reputation of principal investigator | 1 | <1 |
| | Total | 162 | 72.3 |
| Outcomes | Empowerment and community capacity | 22 | 9.8 |
| | Change in practice or policy | 10 | 4.5 |
| | Unintended consequences | 1 | <1 |
| | Health outcomes | 1 | <1 |
| | Total | 34 | 15.2 |

The denominator for these percentages is 224, the total number of measures identified.
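Assuming each percentage in Table I is the count divided by 224 and rounded to one decimal place (with values below 1% shown as ‘<1’), the table can be regenerated from the counts. The Python sketch below is illustrative; the nested-dictionary layout is ours, and the group dynamics subgroups are flattened into one dictionary.

```python
TOTAL_MEASURES = 224  # denominator stated under Table I

# Measure counts per characteristic, transcribed from Table I.
counts = {
    "Context": {
        "Community capacity": 12,
        "Health issues": 5,
        "Historical context of collaboration": 4,
        "National/local policies and political governance": 2,
        "Organizational capacity": 5,
    },
    "Group dynamics": {
        "Participatory decision making and negotiation": 24,
        "Dialogue and mutual learning": 22,
        "Leadership and stewardship": 19,
        "Task communication and action": 18,
        "Self and collective reflection": 12,
        "Influence and power": 7,
        "Flexibility": 2,
        "Conflict": 3,
        "Complexity": 16,
        "Agreements": 9,
        "Diversity": 7,
        "Length of time in partnership": 7,
        "Power and resource sharing": 3,
        "Congruence of core values": 1,
        "Individual beliefs": 11,
        "Community reputation of principal investigator": 1,
    },
    "Outcomes": {
        "Empowerment and community capacity": 22,
        "Change in practice or policy": 10,
        "Unintended consequences": 1,
        "Health outcomes": 1,
    },
}


def pct(n: int, denom: int = TOTAL_MEASURES) -> str:
    """Share of all measures, one decimal place, '<1' below 1%."""
    p = 100 * n / denom
    return "<1" if p < 1 else f"{p:.1f}"


for component, chars in counts.items():
    subtotal = sum(chars.values())
    print(f"{component}: {subtotal} measures ({pct(subtotal)}%)")

# The component subtotals should account for every measure.
assert sum(sum(c.values()) for c in counts.values()) == TOTAL_MEASURES
```

This prints 28 (12.5%), 162 (72.3%) and 34 (15.2%) for context, group dynamics and outcomes.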

Supplementary Table 1 (Supplementary data are available at Health Education Research online), Context, contains 28 measures. Most were related to community capacity, organizational capacity, health issues and historical context of collaboration. The remaining subcategory, national/local policies and political governance, received little attention in the literature, with only two measures identified. This review captured no measures specific to the socio-economic, cultural, geographic, political-historical and environmental factors that make up the first context characteristic listed in the model, although we recognize that many demographic questionnaires can capture this information.

Supplementary Table 2 (Supplementary data are available at Health Education Research online), Group dynamics, represented the greatest proportion of measures and includes three subgroups: (i) relational, (ii) structural and (iii) individual. In the relational category, measures were identified for eight characteristics. Those with the most measures were (i) participatory decision making and negotiation, (ii) dialogue and mutual learning, (iii) leadership and stewardship, (iv) task communication and action, (v) self and collective reflection and (vi) influence and power. Flexibility had the fewest measures (two) among the relational characteristics presented in the model. Conflict was not originally identified in the logic model as a separate relational characteristic but was added based on information that emerged during coding. No measures were identified for integration of local beliefs into the group process or for safety. For structural dynamics, complexity was identified most frequently in the literature, followed by agreements, diversity and length of time in partnership; power and resource sharing was identified in three measures. Under individual dynamics, the model lists seven characteristics, but only three had associated measures: congruence of core values, individual beliefs and community reputation of the principal investigator.

Outcomes, supplementary Table 3 (Supplementary data are available at Health Education Research online), includes measures for four characteristics: empowerment and community capacity (22 measures, which include the community capacity measures also listed under context); change in practice or policy (10); unintended consequences (1), a new characteristic added based on information that emerged during coding; and health outcomes (1). No measures were found for changes in power relations, culturally based effectiveness or cultural revitalization and renewal.

Of the 46 instruments used to evaluate CBPR, few reported analyses of the reliability and validity of the measures described. (Additional sources, including guidebooks and other literature that did not meet the inclusion criteria for the final matrix but may contain useful information, are available from the authors.) Of the 224 individual measures re-organized according to the CBPR logic model, only 54 had reliability information and only 31 had any information related to validity; 65 measures had one or both. Most validity reports relied on information from the earlier measures that the authors had adapted, while several used expert opinion or exploratory factor analysis. The matrix demonstrates that the reliable and valid measures available for CBPR are disproportionately focused on group dynamics and relationship processes, with relatively few reliable and valid instruments measuring context or intermediate system and capacity change outcomes.
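If the three counts refer to the same pool of 224 measures, the principle of inclusion–exclusion gives the number of measures that reported both kinds of evidence. Writing R and V for the sets of measures with reliability and with validity information:

```latex
\begin{aligned}
|R \cap V| &= |R| + |V| - |R \cup V| = 54 + 31 - 65 = 20,\\
\frac{|R \cup V|}{224} &= \frac{65}{224} \approx 0.29 .
\end{aligned}
```

Under this reading, 20 measures reported both reliability and validity information, and fewer than three in ten measures reported either.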

Discussion

The purpose of this comprehensive review of literature was to identify instruments and measures of characteristics in Wallerstein et al.’s [1] CBPR logic model. While the logic model has offered a consensus framework for CBPR, the necessary next step was to identify measures that can enhance our understanding of how community partners and academic–community partnerships contribute to changes in intermediate outcomes, such as culturally centered interventions, new practices and policy changes, which in turn have been increasingly recognized as leading to improved health outcomes and equity [2–4, 9].

The literature review identified 273 articles related to CBPR, which contained 46 unique instruments with items measuring some of the characteristics of the CBPR logic model. We then used the CBPR logic model to categorize the 224 measures within these 46 instruments; the model also guided the interpretation and categorization of the instrument items.

While the model does not contain a unified change theory, theories at different levels of the socio-ecologic framework are embedded within the characteristics. The group dynamics measures available to CBPR, for example, have clearly benefited from the previously established and extensive coalition and organizational team literature [5, 10]. Many of these can be used directly in a study of CBPR. The literature on context measures was much more limited, except for results related to community capacity (first as part of context and then as an outcome). The existence of community capacity measures is likely due to the health education field’s long-standing interest in community capacity and its role in community change (see, for example, Goodman et al. [11]) and to the newer interest in implementation science and in the capacity of communities to adapt and adopt interventions and develop strategies to improve their own health. In a comprehensive review of the implementation literature, Fixsen et al. [12] suggest that evidence-based interventions may have certain core elements that can be translated to multiple settings (e.g. principles and best-practice components/characteristics), but that implementation success itself depends on context and on the flexibility to incorporate cultural and community knowledge and evidence. That is, strategies for implementing interventions need to be based on, and adapted within, the existing capacities and cultures of the organizations and communities in each setting [5]. A recent commentary on how CBPR can support intervention and implementation research reinforces the importance of drawing on context to enhance the external validity and potential sustainability of programs once research funding ends [13].

Although the number of measures for context was small, context is an important component that should be researched further. The CBPR logic model suggests that differences in context significantly influence processes, which form the core operational features of CBPR partnerships [4, 9]. Differences such as levels of historical trust between partners, or a community’s capacity to advocate for policy change or integrate new programs, matter for partnership success. Though capacity has been addressed more often in existing measures (largely from the coalition literature), a major gap is contextual measures of the research capacity of communities and of the capacity of universities and other research institutions to engage respectfully with communities and carry out CBPR processes.

The lack of measures related to the outcomes of CBPR is not surprising. The majority of CBPR literature to date has focused on case studies describing CBPR partnerships, research design and description of interventions, with articles reporting on how to create and maintain research partnerships, on methods that have been collaboratively adapted and adopted with community partners and on building trust among stakeholders. Impacts and outcomes attributable to CBPR have been less documented [14, 15]. However, as the field has matured, CBPR has begun to recognize and document the range of outcomes that can be attributed to CBPR partnerships and interventions. Case studies have increasingly documented policy and capacity changes, though links to health outcomes remain a challenge [1, 4, 14, 16]. Part of the challenge in linking CBPR to any sort of outcomes is the lack of valid instruments for documenting CBPR system and capacity outcomes and social justice outcomes, as well as the ongoing challenge of attributing change to any single effort (even if it fully encompasses the policy or intervention issue) within dynamic community contexts. These challenges will need to be met head on to advance the science of CBPR.

Currently, we are in the second year of a NARCH V National Institutes of Health (NIH) grant (2009–2013) to further explore how contexts and partnering processes affect interventions, research designs and intermediate system, capacity and health outcomes. Based on a partnership among the National Congress of American Indians Policy Research Center, the University of Washington Indigenous Wellness Research Institute and the University of New Mexico Center for Participatory Research, this project is investigating the facilitators of and barriers to success in health and health-related CBPR policy and intervention research in underserved communities. This will be achieved through a nationwide cross-site evaluation of CBPR projects that assesses variability in partnership processes under varying contexts and conditions, which may be associated with system changes, new policies and other capacity-related outcomes. Plans currently include surveys of up to 318 sites, identified through NIH, Centers for Disease Control and Prevention and other databases, and six to eight in-depth case studies. The study is using many of the items identified here and constructing others to assess characteristics for which measures are lacking. A variable matrix of the instrument items has been constructed and linked to a web-based interactive version of the CBPR logic model (The interactive web-based matrix can be accessed at the following URL: http://hsc.unm.edu/som/fcm/cpr/cbprmodel/Variables/CBPRInteractiveModel/interactivemodel.shtml).

In sum, the database of instruments and measures presented here shows what is currently available in the literature to advance the art and science of CBPR and demonstrates the need for continued development of CBPR measures. In addition to the need for measures of context and outcomes in CBPR, more work is needed to assess the reliability and validity of the measures. Overall, only 65 of the 224 measures (about 29%) had information on either reliability or validity. Further, most measures with this information provided only minimal evidence of validity. Future CBPR assessment tools should address validity, including face and content validity, and may consider factorial, construct (concurrent and discriminant) and possibly predictive validity [15, 17, 18]. Instruments constructed for CBPR purposes often do not address these validity issues because of the small sample sizes involved and their use with single partnerships.

Future research could establish the validity of the measures through two approaches. First, measures could be tested on teams in clinical practice settings, agencies, not-for-profit organizations and inter-organizational coalitions or other alliances. While these partnerships differ from CBPR partnerships, they would provide a reasonable comparison group for at least establishing measurement validity. Second, CBPR researchers would benefit from pooling databases to obtain a sample large enough to test the validity of measures. Advancing the capacity to study CBPR will depend on strengthening the quality of the measures being used.

This review of literature has some limitations. First, the search parameters yielded a large number of articles but relatively few instruments (N = 46). Although we used a number of databases, ‘word of mouth’ solicitations and a systematic search procedure, some instruments were likely missed or are still in development. Second, the matrix reflects our interpretations of the measures rather than the instruments’ original intent. While we demonstrated consistent coding of instruments, we recognize that other researchers may assign certain instruments to different categories. Additionally, some researchers may find that measures we included are not effective measures of the characteristics. Rather than judging the quality of the instruments, we chose to provide as much information as possible about reliability, validity and population so that researchers can make their own judgments. Judging quality was also difficult because so few instruments report evidence of measurement validity. In general, we recommend that researchers consider instruments with sufficient evidence of internal consistency (e.g. Cronbach’s alpha of 0.70 or above) and of concurrent and factorial validity. Beyond validity and reliability data, we suggest two instruments for consideration based on their high face validity and in-depth use within their respective populations: Schulz et al. [9] and Kenney and Sofaer [19]. These instruments were carefully crafted with their respective partners and thus hold promise for future use, although the validity and reliability of these measures will need to be assessed.
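The internal-consistency threshold mentioned above refers to Cronbach’s alpha, which for a scale of k items is α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), where σᵢ² is the variance of item i and σ²_total the variance of the summed scale score. A minimal Python sketch using only the standard library follows; it shows the textbook formula, not a procedure from this review, and the response data are hypothetical.

```python
from __future__ import annotations

from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for one scale.

    Rows are respondents and columns are the scale's items. Population
    variances are used throughout; the n vs. n - 1 choice cancels in the ratio.
    """
    if not responses:
        raise ValueError("need at least one respondent")
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least two items and equal-length rows")
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 ratings from four partners on a three-item scale.
ratings = [
    [4, 4, 5],
    [3, 3, 4],
    [5, 4, 5],
    [2, 3, 2],
]
alpha = cronbach_alpha(ratings)
print(round(alpha, 2), alpha >= 0.70)  # 0.9 True
```

With only four respondents this is purely a worked illustration; as the text notes, small samples are exactly why many CBPR instruments lack such evidence.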

An additional limitation may be the difficulty of replicating our search process, because we incorporated articles other than those identified through our database search terms (see ‘outside search dates’ column in supplementary Tables). Although the database search itself can be replicated, we also chose to incorporate later recommendations from CBPR experts and other sources, recognizing that this is an evolving field that will require further research. We applied the same inclusion criteria to these other sources, however, and believe that these instruments accurately represent the balance of process and outcome measurement tools.

The focus of this article has been on measures. However, we recognize that CBPR change cannot be fully captured by quantitative instruments alone. Interviews, focus groups and other qualitative approaches enable partners to share their perceptions of how and why outcomes have changed, including enhanced community capacity or new organizational practices or policies, or how CBPR processes have transformed contexts for collaborative research; these qualitative approaches are a critical and necessary complement for enhancing the science [2]. The opportunity for community partners to reflect on their participation in the CBPR research (using the conceptual model as an evaluation and collective reflection tool) also privileges community voice regarding which characteristics may have been most salient to a particular project’s success. As community partners critically examine their lived realities in a dialogical encounter with researchers, a contextual and specific ‘face validity’ can emerge, which can only add to our knowledge of how CBPR research processes can be generalized across communities.

In conclusion, this literature review and categorization of instruments produced a large compendium of research tools for those interested in CBPR. A key aspect of advancing the study of CBPR is having adequate research tools and access to the full range of tools available. This matrix provides a state-of-the-art list of research instruments, current to 2008, and should serve as a useful resource for academic and community researchers and practitioners of CBPR.

Funding

The project described was supported in part by Award #U26IHS300009/03 from the National Institute on Minority Health and Health Disparities and by the NARCH V #1 S06 GM08714301. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Minority Health and Health Disparities, the Native American Research Centers for Health, or the National Institutes of Health.

Supplementary Material

Acknowledgments

We express our appreciation to the National Advisory Board of the CBPR Research and Evaluation Community of Practice: Margarita Alegria, Magdalena Avila, Elizabeth Baker, Beverly Becenti-Pigman, Charlotte Chung, Eugenia Eng, Shelley Frazier, Ella Greene-Morton, Lyndon Haviland, Jeffrey Henderson, Sarah Hicks, Barbara Israel, Loretta Jones, Michele Kelley, Paul Koegel, Laurie Lachance, Diane Martin, Marjorie Mau, Meredith Minkler, Naeema Muhammad, Lynn Palmanteer-Holder, Tassy Parker, Cynthia Pearson, Victoria Sanchez, Amy Schulz, Lauro Silva, Edison Trickett, Jesus Ramirez-Valles, Kenneth Wells, Earnestine Willis and Kalvin White. Finally, we thank the community members who so generously answered all the questions asked of them.

Conflict of interest statement

None declared.

References

- 1. Wallerstein N, Oetzel JG, Duran B, et al. CBPR: What predicts outcomes? In: Minkler M, Wallerstein N, editors. Community-Based Participatory Research for Health: From Process to Outcomes. 2nd edn. San Francisco, CA: John Wiley & Co.; 2008.

- 2. Israel B, Schulz A, Eng E, et al., editors. Methods in Community-Based Participatory Research. San Francisco, CA: Jossey-Bass; 2005.

- 3. Minkler M, Wallerstein N, editors. Community-Based Participatory Research for Health: From Process to Outcomes. 2nd edn. San Francisco, CA: John Wiley & Co.; 2008.

- 4. Viswanathan M, Ammerman A, Eng E, et al. Community-Based Participatory Research: Assessing the Evidence. Evidence Report/Technology Assessment No. 99 (Prepared by RTI–University of North Carolina Evidence-based Practice Center under Contract No. 290-02-0016) AHRQ Publication 04-E022-2. Rockville, MD: Agency for Healthcare Research and Quality; 2004.

- 5. Granner ML, Sharpe PA. Evaluating community coalition characteristics and functioning: a summary of measurement tools. Health Educ Res. 2004;19:514–32. doi: 10.1093/her/cyg056.

- 6. Pluye P, Potvin L, Denis JL. Making public health programs last: conceptualizing sustainability. Eval Program Plann. 2004;27:121–33.

- 7. Goodman RM, McLeroy KR, Steckler AB, Hoyle RH. Development of level of institutionalization scales for health promotion programs. Health Educ Q. 1993;20:161–78.

- 8. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA Statement. PLoS Med. 2009;6:1–6. doi: 10.1371/journal.pmed.1000097.

- 9. Schulz A, Israel BA, Lantz P. Instrument for evaluating dimensions of group dynamics within community-based participatory research partnerships. Eval Program Plann. 2003;26:249–62.

- 10. Hohmann A, Shear M. Community-based intervention research: Coping with the noise of real life in study design. Am J Psychiatry. 2002;159:201–7. doi: 10.1176/appi.ajp.159.2.201.

- 11. Goodman RM, Speers MA, McLeroy K, et al. Identifying and defining the dimensions of community capacity to provide a basis for measurement. Health Educ Behav. 1998;25:258–78. doi: 10.1177/109019819802500303.

- 12. Fixsen DL, Naoom SF, Blase KA, et al. Implementation Research: A Synthesis of the Literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231); 2005.

- 13. Wallerstein N, Duran B. Participatory research contributions to intervention research: The intersection of science and practice to improve health equity. Am J Public Health. 2010;100:S40–46. doi: 10.2105/AJPH.2009.184036.

- 14. Cargo M, Mercer SL. The value and challenges of participatory research: Strengthening its practices. Annu Rev Public Health. 2008;29:325–50. doi: 10.1146/annurev.publhealth.29.091307.083824.

- 15. Schaufeli WB, van Dierendonck D. The construct validity of two burnout measures. J Organ Behav. 1993;14:631–47.

- 16. Minkler M, Breckwich Vasquez VA, Tajik M, et al. Promoting environmental justice policy through Community Based Participatory Research: The role of community and partnership capacity. Health Educ Behav. 2008;35:119–37. doi: 10.1177/1090198106287692.

- 17. Netemeyer RG, Bearden WO, Sharma S. Scaling Procedures: Issues and Applications. Thousand Oaks, CA: Sage; 2003.

- 18. Spector PE. Summated Rating Scale Construction. Newbury Park, CA: Sage; 1992.

- 19. Kenney E, Sofaer S, editors. Coalition Self-Assessment Survey. B.C. School of Public Affairs. New York, NY: City University of New York; 2002.
