Abstract

Evidence-based methods for helping consumers, such as counties, implement practices successfully are lacking in the field of implementation science. To fill this gap, the Community Development Teams (CDT) approach was developed to help counties build peer networks focused on problem solving and resource sharing, thereby increasing their likelihood of successful implementation. The CDT is an interactive, solution-focused approach that shares many elements of the Interactive Systems Framework (ISF) for Dissemination and Implementation. An ongoing randomized implementation trial of Multidimensional Treatment Foster Care (MTFC) was designed to test the hypothesis that such interactive implementation methods are more successful than standard individualized implementation methods at helping counties achieve successful and sustainable MTFC programs. The potential benefit of these interactive methods is examined with the Stages of Implementation Completion measure, developed for this study, at different stages of the implementation process, ranging from initial engagement to program competency.

Keywords: Community Development Teams, Implementation, Scale-up

Introduction

According to current estimates, evidence-based practices (EBPs) are implemented in only 10% of agencies within child public service systems such as child welfare, juvenile justice, and mental health (Hoagwood and Olin 2002). Progressive agencies that implement one or more EBPs are therefore the exception rather than the rule. Further, agencies that have been open to adopting an EBP once are more likely to do so again (Aarons et al. 2011; Rogers 1995). This creates a needs-innovation paradox. Agencies that appreciate EBPs use them when appropriate, but the agencies with the greatest need for new technologies are the least likely to innovate by adopting an EBP. The resulting gap could have negative implications for public health outcomes and for child public service systems. To narrow this gap, evidence-based strategies are needed to increase the success rate of implementing new practices in existing systems. The effectiveness of these strategies should also be rigorously evaluated so that a generalizable knowledge base can begin to accrue in the field of implementation science.

Community Development Team

As described in this article, the Community Development Team (CDT; Sosna and Marsenich 2006) is an implementation strategy established by the California Institute of Mental Health (CIMH). It has been used in California for the past 10 years. Some traditional implementation strategies rely on the adopter to engage with the purveyor and receive instruction in how to implement the practice. The CDT model, by contrast, is collaborative. It relies heavily on building peer-to-peer networks of adopters who problem-solve implementation barriers together, with the assistance of their CDT facilitator. The CDT strategy is currently being rigorously evaluated in a randomized controlled trial (described in this article) conducted across 51 counties in California and Ohio. Overall, the CDT model is intended to achieve three interrelated goals: (1) increase the pace at which EBPs become routinely available through the public mental health system; (2) promote the sustainable, model-adherent implementation of EBPs; and (3) improve outcomes for children and adults (Sosna and Marsenich 2006). This paper examines the CDT in relation to the Interactive Systems Framework for Dissemination and Implementation (ISF; Wandersman et al. 2008). The ISF is a heuristic model for dissemination and implementation that specifies three systems thought to be critical for building the infrastructure that bridges science and practice: (1) the Synthesis and Translation System; (2) the Support System; and (3) the Delivery System. The ISF is described in full elsewhere (Wandersman et al. 2008) and is the focus of this special issue. The goals of the CDT are consistent with the ISF's aim of coordinating these three systems to increase the use of EBPs and improve public health outcomes.

The CDTs operate through 6 multi-county peer-to-peer development team meetings, augmented by technical assistance tailored to each county. Key stakeholders from multiple levels in each county (e.g., system leaders, agency directors, practitioners, consumers) participate in the CDT intervention. As shown in Table 1, and similar to the ISF, the goals of the intervention target three phases: Pre-implementation, Implementation, and Sustainability. The Prevention Synthesis and Translation System of the ISF aims to distill information about new technologies and prepare consumers to implement them. In the same way, the Pre-implementation phase is designed to engage, prepare, and train a diverse group of stakeholders from participating counties to consider implementing an evidence-based practice. During these initial CDT meetings, anticipated and perceived barriers are raised, problem-solved, and addressed. Counties hear from one another which strategies have and have not been successful, and the CDT facilitator maps these experiences onto the needs of the current evidence-based practice. Furthermore, the CDT facilitators develop strong relationships with each of the practice developers. As a result, concerns raised about a particular practice (e.g., program size, cultural adaptations) can be problem-solved with the developers if needed. The Implementation phase parallels the Prevention Support System of the ISF, which aims to support those on the ground who are putting the practice into place. Its goal is to help sites run model-adherent programs staffed by skilled practitioners and administrators.
The CDT facilitators hold monthly peer-to-peer and individual calls with administrators and program managers to understand their ongoing needs as their programs are initiated. They provide support when needed (e.g., additional training if requested, help developing referral streams). Likewise, the Prevention Delivery System of the ISF, which targets the delivery of programs in the real world, maps onto the Sustainability phase of the CDT, which focuses on promoting autonomous, model-adherent programs. For example, the CDT facilitators host an annual symposium that brings incoming and sustaining programs together to share experiences and receive advanced training. At the symposium, participants receive updates on new adaptations that have been made for some counties. They also learn strategies that have helped other programs but may not have come up in their own peer-to-peer network. As shown in Table 1, multiple CDT activities conducted across different levels of involvement are necessary for successful movement through all three implementation phases. This process involves more than the delivery of skills training or technical support by the CDT. It also requires the equally important assessment of the consumer's needs and of the context in which the program is being implemented.

Table 1.

CDT phases, processes, and activities

| Phase | Goal | Typical process | Typical activity |
|---|---|---|---|
| Pre-implementation | Engagement | Needs-benefit analysis; Fidelity focus; Procedural skills development | Site-specific correspondence and conference call |
| Pre-implementation | Implementation planning | Needs-benefit analysis; Planning; Fidelity focus; Procedural skills development; Peer-to-peer exchange and support | Community Development Team meeting; Titrated technical assistance, as needed |
| Pre-implementation | Clinical training | Procedural skills development; Peer-to-peer exchange and support | Community Development Team meeting |
| Implementation | Model adherence | Monitoring and support; Fidelity focus; Technical investigation and problem solving; Procedural skills development; Peer-to-peer exchange and support | CDT conference calls; Prompted listserv; Site-specific correspondence and calls; CDT practice developer conference calls; Titrated technical assistance |
| Implementation | Practitioner competence | Monitoring and support; Fidelity focus; Technical investigation and problem solving; Procedural skills development; Peer-to-peer exchange and support | CDT meeting; CDT conference calls; Prompted listserv; Site-specific conference calls; Fidelity monitoring and outcome evaluation templates; CIMH and practice developer conference calls; Titrated technical assistance |
| Sustainability | Autonomous site | Monitoring and support; Fidelity focus; Procedural skills development; Peer-to-peer exchange and support | CDT meeting; CDT conference calls; Prompted listserv; Site-specific conference calls; CDT organization and practice developer calls; Titrated technical assistance |

The development of bi-directional partnerships between researchers and communities is a primary goal of both the ISF and the CDT. Bi-directional partnerships have been identified as a key component of translational research (Aarons et al. 2010). The ISF recognizes the importance of engaging diverse stakeholders who work in complementary systems, including users at multiple levels (e.g., system leaders, practitioners). The CDT model embraces the ISF core principles centered on establishing bi-directional partnerships between practitioners and researchers. It also maps well onto the Getting To Outcomes (GTO) logic model, a 10-step process that helps communities achieve accountability for positive outcomes across planning, implementation, evaluation, and sustainability (Wandersman et al. 2000).

Traditionally, research-to-practice models are used for the development and dissemination of EBPs, whereas the ISF is a community-centered model for implementation (Wandersman et al. 2008). The current paper describes a merging of these two approaches. A community-centered model for implementation (i.e., Community Development Teams) is applied to an EBP developed through a research-to-practice model (i.e., Multidimensional Treatment Foster Care [MTFC]; Chamberlain 1998). MTFC (described below) was the first EBP implemented using the CDT model. Subsequently, CDTs have been used to disseminate and implement numerous child- and family-based EBPs, including Functional Family Therapy (Alexander et al. 2000), Aggression Replacement Training (Goldstein and Glick 1994), Multisystemic Therapy (Henggeler et al. 1998), and others (Sosna and Marsenich 2006). Because CDTs involve working with a cohort of counties or sites that implement a practice in concert, practice developers must be willing to adapt their training protocols from working with an individual agency to working with a group of agencies.

CDT Background

When CIMH built the CDT model, it already maintained well-established relationships with the California State Department of Mental Health, county mental health authorities, child welfare and juvenile probation agencies, and family/consumer organizations. Because CIMH also serves as a state training and technical assistance center, integrating these roles and relationships formed the foundation for sponsoring and conducting the CDT model.

Each CDT focuses on the implementation of a single EBP and is staffed by two CIMH consultants who serve as facilitators. Facilitators are selected for their previous training, their knowledge of the California child service system, and their ability to establish credible relationships with key stakeholders. The three phases of the CDT approach map well onto the goals of the ISF Support System. Interactions between CDT facilitators and counties help answer the key questions that arise before and during implementation. As described previously, the CDT Pre-implementation phase emphasizes proactive technical assistance. Facilitators anticipate counties' needs based on ongoing feedback and discussion during peer-to-peer meetings and on knowledge of the landscape in which the EBP will be implemented. In addition, the CDT structure requires facilitators to be cognizant of the organizational structure and climate of the agency providing the EBP and to help the practice fit well within that system. The CDT facilitators anticipate what a community will need to implement the EBP successfully. They catalyze the process in two ways. First, they orient site leadership toward fidelity (both the need for it and its importance). Second, they help make adjustments within both the organization and the practice (with the developers' approval) to increase the likelihood that the program will succeed. The facilitators also help community leaders and potential implementing agencies understand and develop the procedural skills they will need to launch the site-specific EBP. Although these skills vary, they often involve adopting previously unused protocols, such as EBP-specific data collection methods for monitoring fidelity. They can also involve changing practitioner behavior and availability (e.g., adding capacity for practitioners to be “on call”).
The CDT Implementation phase emphasizes developing practitioner competence and model adherence. This involves adapting the training protocols developed for the specific EBP so that they can be delivered in a group format (to CDT counties) rather than individually to single agencies. In the CDT model, a unique partnership exists between the CDT facilitators, who are experts on implementation, and the EBP developer or trainer, who is an expert on the specific EBP. Through this relationship, the CDT facilitator can bring concerns or problems that particular programs are experiencing to the developers. Together they work out solutions that assist the program while maintaining adherence to the principles of the practice. Finally, during the CDT Sustainability phase, the emphasis shifts to monitoring and supporting the maintenance of a model-adherent program through titrated technical assistance and peer support activities.

CDT Core Processes

During the three phases of the CDT intervention, seven core processes are enacted to facilitate the successful adoption, implementation, and sustainability of an EBP:

(1) Needs-benefit analysis, designed to overcome risk hesitancy and promote enthusiasm for adopting and implementing the practice with fidelity.

(2) Planning, designed to help sites overcome implementation barriers specific to the particular practice and to its fit (or lack thereof) with their established operating system.

(3) Ongoing monitoring and support, designed to promote each site's individual advancement and motivate persistent effort.

(4) Fidelity framing, designed to frame recommendations about the site's administrative and programmatic practices in terms of practice fidelity in order to prevent drift.

(5) Technical investigation and problem solving, designed to distinguish actual from perceived implementation barriers and to investigate potential solutions to actual barriers through CIMH's established relationships with state agencies and policy makers.

(6) Procedural skills development, designed to provide guidance and technical assistance on the managerial and human resource skills the site needs to implement the practice.

(7) Peer-to-peer exchange and support, designed to promote engagement, commitment, and the sharing of concrete strategies for overcoming barriers to implementation and sustainability, and to reduce risk hesitancy.

These core processes occur during the 6 CDT meetings, group conference calls between CDT facilitators and members of the CDT cohort, site-specific telephone calls, and conference calls between CDT facilitators and developers. They also occur through ongoing fidelity monitoring, prompted listserv interactions, and titrated technical assistance to the sites.

CDT Networking

What distinguishes the CDT intervention from other implementation strategies is its cornerstone of peer-to-peer networking and problem solving, which maps onto the ISF's focus on communication and interaction. At critical points in the process, local constituents exchange ideas with peers who share similar social networks (e.g., a county director or agency administrator). These exchanges aim to increase intra-county team building and support for collaboration between county systems. This networking strategy supports planning, team building, and cooperative problem solving within county teams. It sets the stage for proactive constructive cultures (Verbeke et al. 1998) and positive psychological and organizational climates (Glisson and Hemmelgarn 1998).

Description of MTFC

The ISF seeks better outcomes by bridging research and practice in prevention, treatment, and education. Similarly, MTFC seeks to bring evidence-based intervention strategies into the juvenile justice and child welfare systems. Its aims are to achieve consistently better outcomes for youth with serious behavioral problems and to prevent future problems and further contact with these systems. MTFC began as a community-based alternative to congregate care placement for children and adolescents with severe emotional and behavioral problems (Chamberlain 2003). It was developed in 1983 in response to a request for proposals from the State of Oregon's juvenile justice system for community-based alternatives to incarceration and to placing adolescents in residential/group care. Since then, a number of randomized trials have tested the efficacy and effectiveness of MTFC. Publication of these studies drew national attention and led to MTFC's designation as a cost-effective alternative to institutional and residential care. The Office of Juvenile Justice and Delinquency Prevention selected MTFC as 1 of 10 evidence-based National Blueprints Programs (Elliott 1998). It was also selected as 1 of 9 National Exemplary Safe, Disciplined, and Drug-Free Schools model programs. It was highlighted in two U.S. Surgeon General reports (U.S. Department of Health and Human Services 2000a, b) and was designated an exemplary program by the Center for Substance Abuse Prevention (Chamberlain 1998; Chamberlain et al. 2007; Leve et al. 2005).

Training

Similar to the ISF, the MTFC model places a strong emphasis on training. A standardized training protocol is used across all MTFC sites regardless of the strategy used to implement the practice (i.e., the protocol is the same whether or not a program participates in a CDT). All staff participate in an intensive 5-day training program in which the clinical interventions and cross-system collaborations are described in detail. The training involves observation of model-adherent behaviors (through videotapes and live observation) and practice through role-plays. Once the program is initiated, as indicated by readiness to place a child, program staff participate in weekly telephone clinical consultation with an MTFC expert. This expert has viewed videotapes of their foster parent and clinical meetings and has monitored data on case progress. This intensive training and quality assurance protocol creates a continual feedback loop between those delivering the MTFC intervention and the expert consultants. This rigorous monitoring of programs “on the ground” is intended to ensure that sites operate in a manner similar to the randomized clinical trials. Doing so maximizes the likelihood of achieving the outcomes obtained in those trials. Similar strategies have been shown empirically to improve therapist behavior and youth outcomes in other EBPs (Schoenwald et al. 2004).

In MTFC, intensive training, support, and monitoring are used at every level of the practice. Youth are placed in community foster homes where foster parents are intensively trained, supervised, and supported to provide positive adult support and mentoring, close supervision, and consistent limit setting. Unlike many foster parent training programs, MTFC foster parent training focuses on behavior management strategies and tools that research has shown to be effective (Chamberlain 1998). MTFC placements typically last 6–9 months. They involve coordinated interventions in the home, with peers, in educational settings, and with the child's or adolescent's birthparents, adoptive family, or other long-term placement resource. Specific service components vary with the child's age and developmental level. They include daily behavior management in the foster home and school that emphasizes reinforcement of normative behavior and strengths, family and individual therapy, social skills training, academic support, and case management. Each youth's treatment plan is coordinated and monitored by a program supervisor.

Enhancing Parenting Skills Via Foster Parent Groups

Foster parents meet weekly (for 90 min) in small groups (7–10 foster parents) with a program supervisor for the duration of the child's foster placement. During these meetings, foster parents receive support and instruction and are encouraged to share experiences with positive parenting strategies. The program supervisor coaches the foster parents to reinforce positive and normative child behaviors consistently and regularly. To do so, foster parents use incentives such as small rewards or points for complying with routine expectations (e.g., getting up on time, doing household chores, and attending classes). Challenges that foster parents experience with youth behaviors are discussed openly, and strategies to address them are developed as a group. In these efforts, foster parents are treated as valuable members of the clinical team rather than as clients.

Support and Training for Aftercare Family Via Family Therapy

In parallel with the foster parent groups, a family therapist works with the birthparents, relatives, or other long-term aftercare resources to improve their reinforcement, relational, supervision, and limit-setting skills and behaviors. These caregivers are taught to use the same incentive or point systems employed in the foster home to provide positive feedback and brief consequences for problem behavior. The family therapist coaches the parents to practice and adhere to these methods to help recalibrate the parents' executive role in the family. Regular home visits are scheduled throughout the youth's foster care placement so that aftercare parents can practice the skills with the support and feedback of the family therapist before the youth returns home.

Youth Strength Building Via Individual Therapy

Each youth is assigned an individual therapist who helps the youth identify and build on strengths and assets, find solutions to problems at school, and develop adaptive relationships with foster parents and aftercare parents. Topics for individual therapy are selected based on data from the daily point system in the foster home, at school, and during home visits. The individual therapist motivates and encourages the adolescent to find and practice alternatives to problem behaviors and negative emotions that appear to be undermining the adolescent's short- and long-term goals.

Social Skills Coaching

A skills coach is assigned to each youth to help the youth generalize newly developing skills to community settings and peer interactions. The skills coach is typically a recent college graduate who helps the youth identify and participate in community activities of interest. The skills coach also addresses the development of specific social skills through practice and feedback in real-world settings. The skills coach works with the program supervisor to identify specific behavioral targets and role-play options for reacting and behaving in both hypothetical situations and real-world settings.

Academic Support

Youth attend public schools. The foster parents and program supervisor work together to carefully monitor youth adjustment in the classroom and with peers and to build an individualized network of services that support academic and social success.

Coordinated Services Via the Program Supervisor

The program supervisor directs, coordinates, and monitors all services to the youth and family. (S)he conducts the weekly foster parent group meetings and oversees the daily behavior management charts for both the home and school settings. The program supervisor also conducts a weekly meeting with clinical staff (the family and individual therapists and skills coaches) to formulate the treatment plan and supervise their efforts. The program supervisor monitors the youth's gains and foster parent stress levels through the Parent Daily Report telephone interview (PDR; Chamberlain and Reid 1987). In this interview, foster parents report the occurrence or nonoccurrence of specific behaviors within the past 24 h and the level of stress they experienced as a result. In addition, specific point gains and losses are recorded. The PDR data are used to monitor the balance between the foster parent's use of encouragement/reinforcement and discipline. Because MTFC is a strength-focused model, the program supervisor monitors and encourages the use of reinforcement to promote behavioral change.
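The PDR summaries described above can be sketched as a simple calculation. This is a minimal Python illustration, not the published PDR scoring procedure. The `DailyReport` record, its fields, the yes/no stress flag, and the `reinforcement_share` summary are all hypothetical. Here `reinforcement_share` means point gains as a share of all point transactions. Together they show one way a supervisor might track daily behavior counts and the reinforcement/discipline balance.

```python
from dataclasses import dataclass


@dataclass
class DailyReport:
    """Hypothetical record of one Parent Daily Report (PDR) call.

    occurred:      target behavior -> True if it occurred in the past 24 h
    stressful:     occurring behaviors the foster parent rated as stressful
    points_gained: points awarded that day (reinforcement)
    points_lost:   points removed that day (discipline)
    """
    occurred: dict[str, bool]
    stressful: set[str]
    points_gained: int
    points_lost: int


def behavior_count(report: DailyReport) -> int:
    """Number of target behaviors reported as occurring in the past 24 h."""
    return sum(report.occurred.values())


def stress_count(report: DailyReport) -> int:
    """Number of occurring behaviors that the foster parent found stressful."""
    return sum(1 for name, hit in report.occurred.items()
               if hit and name in report.stressful)


def reinforcement_share(reports: list[DailyReport]) -> float:
    """Hypothetical summary: point gains as a share of all point transactions.

    Values near 1.0 indicate a reinforcement-heavy balance; returns 0.0
    when no points were recorded at all.
    """
    gained = sum(r.points_gained for r in reports)
    lost = sum(r.points_lost for r in reports)
    total = gained + lost
    return gained / total if total else 0.0


# Two hypothetical days for one youth (made-up behaviors and points).
days = [
    DailyReport({"arguing": True, "lying": False, "fighting": True},
                {"fighting"}, points_gained=12, points_lost=3),
    DailyReport({"arguing": False, "lying": False, "fighting": False},
                set(), points_gained=15, points_lost=0),
]
print([behavior_count(r) for r in days])    # [2, 0]
print(stress_count(days[0]))                # 1
print(round(reinforcement_share(days), 2))  # 0.9
```

Aggregating gains and losses over several days, rather than averaging daily ratios, keeps a single low-activity day from dominating the balance. Whether MTFC programs summarize PDR points this way is an assumption.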

Context of the Current Study

In 2002, CIMH obtained foundation funding to promote the implementation of EBPs in the child welfare and juvenile justice systems in California. CIMH hoped to use the funds to address a problem highlighted in a recent state report that criticized the use of group care placements to meet the needs of high-risk children and adolescents. The report (Marsenich 2002) discussed California's increasing reliance on group care placements for the neediest youth, the lack of evidence on the effectiveness of group care, and the escalating expenses associated with congregate care. Over the next 5 years, MTFC was implemented in 10 counties using the CDT approach. It soon became apparent, however, that the needs-innovation paradox was in full effect. The counties that implemented successfully had more resources and more experience implementing EBPs. In other words, the rich got richer. Smaller or less-resourced counties did not elect to participate, showed some interest and then withdrew, or indicated a desire to participate but an inability to do so.

Subsequently, an application was submitted to the National Institute of Mental Health (NIMH) to study two methods of MTFC program implementation in non-early-adopting California counties: CDT and Individualized Implementation (IND). The two methods were compared in a randomized controlled trial, with randomization at the county level. Participation in the CDT condition involved implementing MTFC in concert with 6–7 other counties, including participation in the seven core processes described previously. Participation in the IND (control) condition involved delivery of the standard MTFC clinical training and consultation package to individual counties. This package has been delivered in more than 70 sites in the U.S. and Europe (see www.mtfc.com). Counties in the IND condition participated in a readiness process consisting of six planning calls and an on-site stakeholder meeting prior to implementation. In both conditions, the county's MTFC program staff received 5 days of clinical training and weekly supervision from an MTFC expert for 18 months.

Method

Overview of the Study Design

In the described randomized trial, the primary research goal is to determine whether participation in the CDT model improves rates of MTFC program adoption, implementation, and fidelity in 51 counties (56 sites) in California and Ohio. In comparing the CDT with standard individualized (IND) implementation, four primary outcomes are examined: (1) the proportion of counties that adopt MTFC; (2) the stage of implementation that counties attain (i.e., how far they progress); (3) the fidelity of implementation, including model adherence and practitioner competence; and (4) the sustainability of the MTFC program over time. Because the trial is ongoing, differences between implementation strategies are not yet available. However, preliminary trends are discussed, and a tool for measuring implementation progress is described.

Study Population, Sampling, and Randomization

Prior to this study, the California Institute of Mental Health extended a general invitation to all California counties to receive training in MTFC. At that time, 9 of the 58 counties elected to participate; these early-adopting counties were excluded from the current study. In addition, 8 other counties were excluded because they had a low “need” for MTFC. Low need was defined as fewer than 6 entries into group care (i.e., the target population for the MTFC model), measured on two snapshot days in the 2004 calendar year (the latest year for which data were available at the start of the study). The remaining California counties were targeted for recruitment into the study, as were multiple sites in LA County.

Randomization occurred at two levels: study condition (CDT or IND) and timeframe (cohort 1, 2, or 3). LA sites were excluded from randomization because a class action lawsuit placed them automatically into the CIMH CDT condition. Eligible counties were matched on background variables (e.g., size, number of children in poverty, use of Medicaid, and per capita and group home placement rate) to form three equivalent groups. These three matched groups were then randomly assigned to three sequential cohorts with start-up timelines staggered at yearly intervals. This staggered timing addressed grant resource constraints on implementation. Finally, within each yearly cohort, counties were randomly assigned to the CDT or IND condition. These random assignments generated six replicate groups of counties, three assigned to CDT and three assigned to IND. As described elsewhere, randomization was successful (Chamberlain et al. 2008). Three years into the study, the project was extended to Ohio counties using a similar randomization strategy. The aim was to increase the sample size of implementing counties so that study hypotheses could be tested. All eligible Ohio counties underwent randomization, but study resource limitations meant that only the 12 counties randomized to the first cohort were recruited. Of these, 11 consented to participate.
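The two-level assignment described above can be sketched in Python. The steps are: form matched groups, randomly order them into cohorts, and then randomly split each cohort between conditions. The article does not specify how the matching was done, so this sketch uses an assumed matched-block scheme. Each county is summarized by a single hypothetical composite background score. Counties are ranked by that score, taken three at a time, and dealt at random into the three groups. The function names, county names, scores, and seed are made up for illustration.

```python
import random


def form_matched_groups(scores: dict[str, float], n_groups: int,
                        rng: random.Random) -> list[list[str]]:
    """Split counties into n_groups roughly equivalent groups.

    Assumption: each county has one composite background score. Counties are
    ranked by score, taken in consecutive blocks of n_groups, and each block
    is dealt out at random, one county per group (matched-block scheme).
    """
    ranked = sorted(scores, key=scores.get)
    groups: list[list[str]] = [[] for _ in range(n_groups)]
    for start in range(0, len(ranked), n_groups):
        block = ranked[start:start + n_groups]
        slots = rng.sample(range(n_groups), len(block))
        for county, slot in zip(block, slots):
            groups[slot].append(county)
    return groups


def randomize(scores: dict[str, float], n_cohorts: int = 3,
              seed: int = 0) -> dict[str, tuple[int, str]]:
    """Return county -> (cohort number, condition) for the two-level design."""
    rng = random.Random(seed)  # arbitrary seed, for reproducibility only
    groups = form_matched_groups(scores, n_cohorts, rng)
    rng.shuffle(groups)  # random order of matched groups = cohorts 1, 2, 3
    assignment: dict[str, tuple[int, str]] = {}
    for cohort, members in enumerate(groups, start=1):
        order = members[:]
        rng.shuffle(order)  # random CDT/IND split within the cohort
        half = len(order) // 2
        for i, county in enumerate(order):
            assignment[county] = (cohort, "CDT" if i < half else "IND")
    return assignment


# Hypothetical eligible counties (LA sites excluded) with made-up scores.
scores = dict(zip([f"County {c}" for c in "ABCDEFGHIJKL"],
                  [0.3, 1.2, -0.5, 0.8, -1.1, 0.0, 2.0, -0.2, 0.6, -0.9, 1.5, 0.1]))
plan = randomize(scores)
for county, (cohort, arm) in sorted(plan.items()):
    print(county, cohort, arm)
```

With 12 counties, each cohort receives 4 counties, split 2 CDT and 2 IND. This yields the six replicate groups (three CDT, three IND) described above. With an odd-sized cohort, this sketch places the extra county in IND, which is an arbitrary choice.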

Participating counties in all cohorts and in both conditions received all of the standard consulting and technical assistance typically offered to sites implementing MTFC. Half of the counties also received CDT intervention services, including the activities listed in Table 1 across the three phases of the CDT.

The Stages of Implementation Completion

To measure progress (or lack thereof) in the implementation process, the Stages of Implementation Completion (SIC; Chamberlain et al. 2011) scale was developed to evaluate completion of the stages of implementation in both the CDT and IND conditions. Like the three phases of implementation described previously (i.e., Pre-implementation, Implementation, and Sustainability), the SIC stages map onto the ISF and rely on interactions among developers, system leaders, and practitioners. For example, the first three stages involve system leaders in activities such as agreeing to consider implementation of the practice (Stage 1), completing a feasibility questionnaire (Stage 2), and reviewing a funding plan (Stage 3). In this study, the system leader completes these activities through interactions with the IND purveyor or the CDT facilitator, depending on randomization condition. Counties participating in the CDT complete the activities with their team and receive hands-on support from their facilitator. Counties in the IND condition complete the activities on their own and then review them with the IND purveyor. The measure includes the eight stages shown in Table 2, which track progress through the three phases of implementation:

Pre-implementation: engagement (Stage 1); consideration of feasibility (Stage 2); and readiness planning (Stage 3).

Implementation: staff hiring and training (Stage 4); adherence monitoring measures in place (Stage 5); commencement of services and consultation with content experts (Stage 6); and ongoing services, consultation, and fidelity monitoring (Stage 7).

Sustainability: attaining competency (Stage 8).

Table 2.

MTFC Activities Within the 8 SIC Stages, Implementation Phases, and Agents Involved

| Stage | Phase | Activity | Agent involved |
|---|---|---|---|
| Stage 1: engagement | PI | Date site is informed services/program available (not scored) | System leader |
| | | Date of interest indicated | |
| | | Date agreed to consider implementation | |
| Stage 2: consideration of feasibility | PI | Date of first county response to first planning contact | System leader, agency |
| | | Date of first CDT meeting/IND Feasibility Assessment | |
| | | Date feasibility questionnaire completed | |
| Stage 3: readiness planning | PI | Date of cost/funding plan review | System leader, agency |
| | | Date of staff sequence, timeline, hire plan review | |
| | | Date of Foster Parent recruitment review | |
| | | Date of referral criteria review | |
| | | Date of communication plan review | |
| | | Date of CDT Meeting #2/IND Stakeholder meeting | |
| | | Date written implementation plan complete | |
| | | Date MTFC Service Provider Selected | |
| Stage 4: staff hired and trained | I | Date agency checklist completed | Agency, practitioner |
| | | Date 1st staff hired | |
| | | Date program supervisor trained | |
| | | Date clinical training held | |
| | | Date foster parent training held | |
| | | Date expert consultant assigned to site | |
| Stage 5: adherence monitoring processes in place | I | Date parent daily report training held (fidelity measure) | Practitioner, child/family |
| | | Date of 1st program admin. call | |
| Stage 6: services and consultation begin | I | Date of first placement | Practitioner, child/family |
| | | Date of first consult call | |
| | | Date of first clinical meeting video received | |
| | | Date of first foster parent meeting video received | |
| Stage 7: ongoing services, consultation, fidelity monitoring and feedback | I | Dates of site visits (3) | Practitioner, child/family |
| | | Date of implementation review (3) | |
| | | Date of final program assessment | |
| Stage 8: competency | S | Date of certification application | System leader, agency, practitioner |
| | | Date certified | |

PI pre-implementation, I implementation, S sustainability

As shown in Table 2, the measure incorporates the actions of a diverse group of stakeholders whose involvement is relevant at different stages. For example, at SIC Stage 1 (engagement), decision makers/system leaders often play a key role in initial decisions to adopt an EBP, but by the time the practice is delivered to clients (SIC Stage 6), the key agent of implementation shifts to the service provider. As such, the SIC can be used to measure the involvement of diverse stakeholders in the implementation process, as specified in the ISF. As described next, there is evidence from data collected using the SIC that system leaders influence the ultimate success of program start-up (Saldana et al. 2011), suggesting that each of these stages and the associated key players are significant to the successful implementation of programs.

The eight SIC stages were designed so that they could potentially be applied to other EBPs. In each stage, some activities are expected to be universal to all EBPs (e.g., staff are trained), while other activities within each stage are intended to be flexible in order to reflect the unique components of the specific practice being studied. In the current study, the SIC includes steps that have been identified as essential to the successful adoption, implementation, and sustainability of MTFC. Like most EBPs, MTFC follows a manualized protocol that includes numerous organizational and planning tasks and specific intervention strategies. The mapping of the MTFC implementation activities onto the eight stages is shown in Table 2.

Scoring of the SIC

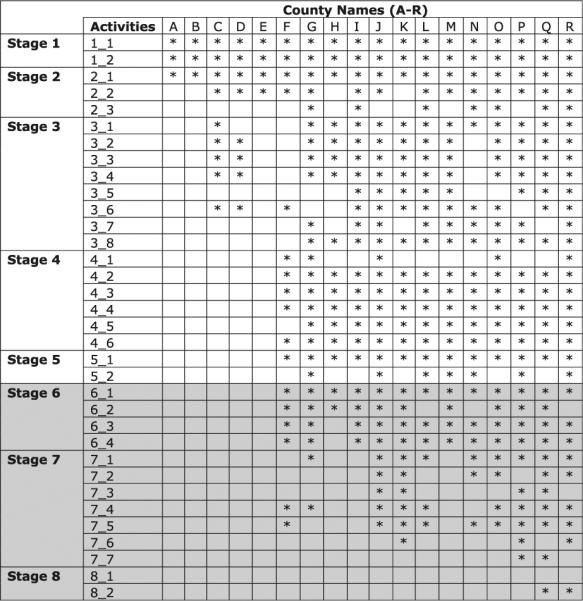

Activities in each stage are ordered according to their logical progression. A stage is considered complete either at the last activity the site completes in that stage or at completion of the stage's final activity. After collecting data on 56 sites in the current trial, variations in the order in which counties move through each stage have been noted. For example, as shown in Fig. 1, activities are occasionally skipped entirely, and in some instances activities in a later stage precede completion of those in an earlier one (i.e., overlapping). This finding is consistent with the notion that implementation is a recursive, non-linear process with well-defined stages that affect each other in complex ways (Blasé et al. 2010; Fixsen and Blase 2009). In the current trial, the effects of skipping activities and the optimal time frames for stage completion are being examined relative to two primary outcomes: (1) if and when services to children and families began (i.e., the time to the first MTFC placement), and (2) if and when program competency is achieved (MTFC certification). As described under Psychometrics, progress has been made toward answering the first question.
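The two irregular patterns just described, skipped activities and overlapping stages, can be flagged mechanically from dated activity records. The sketch below assumes a hypothetical record layout (stage number mapped to ordered `(activity, date)` pairs, with `None` for an undated activity); the function names are illustrative and not part of the SIC protocol. As the Fig. 1 caption notes, a missing date in an ongoing trial may reflect right censoring rather than a genuine skip, so this sketch over-counts skips for censored sites.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple

# Hypothetical layout: stage number -> activities in their logical order,
# each paired with its completion date (None if no date was logged).
Record = Dict[int, List[Tuple[str, Optional[date]]]]


def skipped_activities(record: Record) -> Dict[int, List[str]]:
    """Activities with no logged date, per stage.

    Caveat: a missing date may mean "no opportunity yet" (right censoring).
    """
    return {
        stage: [name for name, when in acts if when is None]
        for stage, acts in record.items()
    }


def overlapping_stages(record: Record) -> List[Tuple[int, int]]:
    """Consecutive stage pairs where some activity in the later stage
    is dated before the last completed activity of the earlier stage."""
    pairs = []
    stages = sorted(record)
    for earlier, later in zip(stages, stages[1:]):
        done_earlier = [d for _, d in record[earlier] if d is not None]
        done_later = [d for _, d in record[later] if d is not None]
        if done_earlier and done_later and min(done_later) < max(done_earlier):
            pairs.append((earlier, later))
    return pairs
```

For a county whose Stage 3 funding review (say, 20 March) predates its Stage 2 feasibility questionnaire (say, 15 April), `overlapping_stages` reports the pair `(2, 3)`.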

Fig. 1.

Sample of county activity as rated on the SIC. These processes are not necessarily linear, and counties are not required to complete every activity. The figure includes a random sample of the 56 sites participating in the study. The shaded region indicates stages that are right censored; that is, because of the ongoing nature of this trial, some counties have not yet progressed far enough in time to complete all of the listed activities. Missing data might therefore reflect “no opportunity” rather than skipping the activity.

The SIC not only identifies completion of implementation activities, but also describes the process by which they are completed. In other words, this measure identifies both the “what” and the “how” of the implementation process in a manner that maps well onto the ISF (Wandersman et al. 2008). Three scores are derived from the SIC: (1) the number of stages completed, (2) the time spent in each stage, and (3) the proportion of activities completed in each stage. The time spent in each stage is the difference between the date of the first activity and the date of the last activity completed by a site (i.e., skipped activities are not included in the time calculation). For sites that discontinue implementation at any point in the process, the discontinue date is logged in the furthest stage the site had entered, and the time spent in that final stage is calculated from the date of its earliest activity to the date of discontinuance. If the observation period ends before a stage is complete and the site has not discontinued implementation, the observation is treated as right censored, just as it would be in a standard time-to-event or survival analysis (Kalbfleisch and Prentice 2002).
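The per-stage scoring rules above (duration from first to last completed activity, discontinuation, and right censoring) can be expressed as a small function. This is a minimal sketch, not the SIC coding protocol: the names `StageScore` and `score_stage`, and the caller-supplied `complete`, `discontinued_on`, and `observation_end` inputs, are hypothetical stand-ins for decisions the written protocol defines precisely. A censored stage is assigned the time observed so far, as in a standard survival analysis.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class StageScore:
    duration_days: Optional[int]  # None when no activity in the stage was dated
    proportion: float             # completed activities / possible activities
    censored: bool                # True if still in progress when observation ended


def score_stage(
    dates: List[Optional[date]],
    complete: bool,
    discontinued_on: Optional[date] = None,
    observation_end: Optional[date] = None,
) -> StageScore:
    """Score one stage for one site; `dates` has one entry per possible activity."""
    done = [d for d in dates if d is not None]  # skipped activities are ignored
    proportion = len(done) / len(dates) if dates else 0.0
    if not done:
        return StageScore(None, proportion, censored=False)
    start = min(done)
    if discontinued_on is not None:
        # Discontinued in this (furthest) stage: earliest activity to discontinue date.
        return StageScore((discontinued_on - start).days, proportion, censored=False)
    if not complete and observation_end is not None:
        # Still in progress when observation ended: right censored.
        return StageScore((observation_end - start).days, proportion, censored=True)
    # Completed stage: first to last completed activity.
    return StageScore((max(done) - start).days, proportion, censored=False)
```

For a three-activity stage completed on 5 January and 1 March 2009 with the middle activity skipped, the sketch gives a duration of 55 days and a proportion of 2/3. The remaining SIC score, the number of stages completed, is then simply a count of stages flagged complete.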

The proportion of activities completed is calculated as the number of activities completed divided by the number of possible activities in each stage. Coding procedures for the activities and the stages are specified in a written protocol, and all codes are double-entered to assess inter-rater reliability. For the current trial, SIC codes are provided by the research team based on information entered into the database by both CDT and IND purveyors; however, the SIC was developed so that any interested party could monitor progress through the implementation process, and it is therefore designed not to be time-intensive to complete.
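The text states that SIC codes are double-entered to assess inter-rater reliability but does not name the agreement statistic. A minimal sketch, assuming simple percent agreement on the coded activity dates (a chance-corrected index such as Cohen's kappa would be an alternative), might compare two independent entries as follows; the activity identifiers and function names are hypothetical.

```python
from datetime import date
from typing import Dict, List, Optional

# Hypothetical layout: activity identifier -> coded completion date (None if not done).
Codes = Dict[str, Optional[date]]


def percent_agreement(coder_a: Codes, coder_b: Codes) -> float:
    """Share of activities on which two independent entries match exactly.

    An activity missing from one entry is treated as coded None there.
    """
    keys = set(coder_a) | set(coder_b)
    if not keys:
        return 1.0
    matches = sum(coder_a.get(k) == coder_b.get(k) for k in keys)
    return matches / len(keys)


def discrepancies(coder_a: Codes, coder_b: Codes) -> List[str]:
    """Activity identifiers whose two entries disagree, for reconciliation."""
    keys = set(coder_a) | set(coder_b)
    return sorted(k for k in keys if coder_a.get(k) != coder_b.get(k))
```

Listing the discrepant activities, rather than reporting only the agreement rate, supports reconciling the two entries against the source information.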

Psychometrics

Although a thorough psychometric evaluation of the SIC is still underway and depends on completion of the trial because of right censoring (i.e., not enough counties have yet had the opportunity to progress through all eight stages), preliminary analyses suggest reliability and predictive validity of the measure. Agglomerative hierarchical clustering procedures based on the SIC grouped counties into three distinct clusters, defined by duration and proportion of activities completed, that mapped onto observed county behavior (i.e., face validity). A Cox proportional hazards survival model was then fitted with days to first placement (i.e., program start-up; Stage 6) as the time-to-event outcome. Both the proportion of activities completed and the duration of time spent in the first three stages significantly predicted successful start-up of services (Saldana et al. 2011). This result suggests that stakeholder behavior (i.e., that of those involved in the first three stages) predicts program start-up.
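Fitting the Cox model itself requires a statistics package, so the sketch below swaps in a simpler, different technique: a Kaplan-Meier estimate of the share of counties that have not yet reached first placement. It is not the analysis reported by Saldana et al. (2011); it only illustrates how right-censored counties remain in the risk set until their censoring time. The function name and inputs are hypothetical.

```python
from typing import List, Tuple


def kaplan_meier(times: List[float], started: List[bool]) -> List[Tuple[float, float]]:
    """Kaplan-Meier estimate S(t) at each distinct start-up time.

    times[i]   -- days to first placement, or to censoring if none yet
    started[i] -- True if the county reached first placement, False if censored
    S(t) is the estimated share of counties that have NOT started by day t.
    """
    if len(times) != len(started):
        raise ValueError("times and started must have the same length")
    data = sorted(zip(times, started))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events = 0
        leaving = 0
        # Group all observations tied at day t; censored ones still count as at risk at t.
        while i < len(data) and data[i][0] == t:
            events += int(data[i][1])
            leaving += 1
            i += 1
        if events:
            survival *= 1 - events / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve
```

With five hypothetical counties observed for 100, 200, 200, 300, and 400 days, where the second and fifth are censored, the estimate steps down to about 0.8, 0.6, and 0.3 at days 100, 200, and 300. A censored county contributes to the denominator up to its censoring time instead of being dropped or treated as a failure.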

Utility of the SIC in Comparing Implementation Strategies

The primary question addressed in the current trial is whether participation in the CDT enhances implementation success. That is, do counties randomly assigned to the interactive CDT condition begin delivering MTFC program services more quickly (start-up), deliver the services with greater quality as assessed by MTFC fidelity measures, and sustain their MTFC programs longer than counties receiving the standard IND implementation? At this point in the trial, all counties have been enrolled and are proceeding through the implementation process, and it is still too soon to determine whether participation in the CDT has positive effects. Nevertheless, as discussed in the next section on preliminary results, the SIC appears to be a useful measure for describing implementation progress and will likely prove beneficial in distinguishing differences between the two conditions.

Preliminary Results

Figure 1 illustrates the progress of a random sample of counties as delineated on the SIC. Because the study is ongoing, progression through the stages continues, and at this point few counties have had the opportunity to reach the final stages. This “lack of opportunity” (i.e., right censoring) is illustrated by the shaded region on the figure. In other words, some counties may lack data in Stages 6–8 not because they chose not to complete the activities, but because they have not yet reached the point at which completing those activities is appropriate. Figure 1 includes data for some but not all of the participating counties, to illustrate the different patterns of implementation behavior that counties might display. Although most counties have censored observations for some of the later stages, the data from the initial stages are more complete. As can be seen, the most successful counties have also been those that completed the majority of activities. For example, county R completed the majority of implementation activities in each stage and achieved competency in Stage 8. In contrast, county F progressed far in the process despite skipping multiple activities, but then discontinued and ceased operating before achieving competency. Similarly, county H began skipping activities early in the implementation process and discontinued shortly after program start-up. This illustration highlights the potential importance of thoroughly completing the recommended implementation activities.

Table 3 provides the average length of time spent per stage and the average proportion of activities completed per stage across all 56 sites. As can be seen, the average completion rate varies across stages; for example, on average only 55 % of Stage 2 activities are completed. In addition, the number of sites in each stage shows a large drop from Stage 2 to Stage 3 (from 51 to 28 sites). Again, the shaded region indicates stages that not all counties have had the opportunity to complete at the time of this writing. Taken together, Fig. 1 and Table 3 illustrate how several counties ceased progress after Stage 2 (consideration of feasibility) or Stage 3 (readiness planning), but that, by and large, counties that progressed to Stage 4 continued in the implementation process. This suggests that once stakeholders complete the pre-implementation phase (i.e., Stages 1–3) and move on to Stage 4, where staff are hired, the likelihood of continuing is strong. Moreover, once sites reach Stage 4, a high proportion of activities are completed on average. Therefore, an important future analysis will examine CDT strategies in Stages 1–3 and whether their interactive nature contributed to stakeholder behavior.

Table 3.

Average length of time spent in, proportion of activities completed, and the number of sites that advance to and are thus included in each stage

| Stage | Average stage duration (days) | Activities completed (%) | No. of sites in stage |
|---|---|---|---|
| 1 | 1.85 | 93 | 56 of 56 counties |
| 2 | 175 | 55 | 51 of 56 counties |
| 3 | 216 | 71 | 28 of 56 counties |
| 4 | 195 | 91 | 22 of 56 counties |
| 5 | 81 | 84 | 22 of 56 counties |
| 6 | 153 | 98 | 22 of 56 counties |
| 7 | 324 | 61 | 18 of 56 counties |
| 8 | 70 | 83 | 3 of 56 counties |

The shaded region indicates stages with right-censored data from counties that have not yet had the opportunity to complete all activities.
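The drop-off pattern discussed above can be quantified from the last column of Table 3 by dividing the number of sites counted in each stage by the number counted in the preceding stage. This is a descriptive sketch only; the dictionary and function names are hypothetical. Because later stages are right censored, the low Stage 7 to Stage 8 ratio mostly reflects counties that have not yet had the opportunity to reach Stage 8, not drop-out.

```python
from typing import Dict, Tuple

# Number of sites counted in each SIC stage, copied from Table 3 (N = 56 sites).
SITES_IN_STAGE: Dict[int, int] = {1: 56, 2: 51, 3: 28, 4: 22, 5: 22, 6: 22, 7: 18, 8: 3}


def stage_transition_ratios(counts: Dict[int, int]) -> Dict[Tuple[int, int], float]:
    """Ratio of sites counted in each stage to sites counted in the previous stage."""
    stages = sorted(counts)
    return {(a, b): counts[b] / counts[a] for a, b in zip(stages, stages[1:])}


if __name__ == "__main__":
    for (a, b), ratio in stage_transition_ratios(SITES_IN_STAGE).items():
        print(f"Stage {a} -> {b}: {ratio:.0%}")
```

Roughly 55 % of the sites in Stage 2 are also counted in Stage 3, whereas all 22 sites that entered Stage 4 are also counted in Stages 5 and 6, which is consistent with the observation that counties reaching Stage 4 tend to continue.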

Nevertheless, Fig. 1 and Table 3 clearly show variability among sites in the number of activities completed in each stage. Wang et al. (2010) reported county-level predictors of early engagement. A key finding from that study, which examined the role of county demographic variables, was that system leaders appeared to be most influenced in Stage 1 (engagement) by their county's objective need for an alternative to group home placements. They were also more likely to consider implementing MTFC in counties with positive organizational climates, as measured with standardized organizational measures. These outcomes suggest that the SIC might assess and define the behaviors of stakeholders during the phases of implementation in meaningful ways.

Discussion

The Community Development Team (CDT) model was developed to help counties and agencies navigate the complex stages of implementation. The model grew out of the California Institute of Mental Health's efforts to promote and support evidence-based services in publicly run mental health programs. The development of the CDT model drew on lessons from past failed implementation efforts. As the CDT manual describes: “It appeared that awareness of, and interest in, an evidence-based practice (including detailed information on how to contact practice-specific developers for training and technical assistance), even when based on compelling information about the merits of the practice from credible and respected sources, did not result in adoption of new practices by the vast majority of participants” (Sosna and Marsenich 2006).

In this study, the CDT was one condition of a two-armed trial evaluating the effectiveness of implementation strategies for MTFC. In its own right, MTFC incorporates many elements of the ISF by focusing on close training, monitoring, and quality assurance in order to achieve positive outcomes for youth. The CDT model extends this mapping onto the ISF even further by providing additional support and technical assistance, and by ensuring that the model is delivered in a manner that addresses the needs of the consumers (at both the agency and client levels) through well-coordinated interactions among providers, stakeholders, and developers. One concrete example of this interactive framework occurred early in the current trial. Rural counties reported to their CDT facilitators that they were interested in adopting MTFC but were unable to support the capacity of a typical MTFC program. With the assistance of the CDT facilitator, the rural counties discussed this barrier with the developers and created a plan that worked within their resources while maintaining the infrastructure needed to meet the requirements of an MTFC program. Although the rural strategy was subsequently offered to counties in both conditions, this adaptation would not have occurred without the ongoing peer-to-peer problem solving with a CDT facilitator.

The SIC as a Measure to Compare Implementation Strategies

The Stages of Implementation Completion (SIC) measure was developed in an attempt to define and quantify the movement toward successful implementation (or lack thereof) of MTFC by county participants at multiple child public service system levels. Such a tool was necessary in order to evaluate the CDT against implementation services “as usual” in assisting counties toward successful program start-up and sustainability.

In each county, multiple levels of participants provide data to the study at different phases of the implementation process. This methodology is consistent with the ISF notion that achieving and maintaining widespread, sustainable implementation of evidence-based programs requires a framework that integrates implementation activities across multiple levels (political, policy, organizational, practitioner) within a wide ecological context, rather than focusing solely on the practitioner's clinical competence in implementing a given evidence-based protocol. Toward this end, the SIC shows promise as a tool to assess and define implementation behavior. Such tools are necessary for evaluating the success of different implementation strategies such as the CDT. Further, the SIC could prove beneficial in empirically validating frameworks, such as the ISF, that posit the importance of multi-agent interactions and behavior. Of note, to use the SIC to compare implementation strategies, activities had to be standardized across conditions. How the activities were accomplished, however, is not well captured by the SIC, and it is of great interest for understanding how the procedures of one strategy differ from another. For example, the written implementation plan (Stage 3) is completed as a group activity by CDT participants but individually by those receiving IND. Future analyses will examine whether the process (rather than simply whether an activity was completed) matters for implementation. Previous analyses showing that duration (i.e., how long it takes to complete a stage) significantly predicts successful program start-up (Saldana et al. 2011) suggest that it will. That is, counties that complete activities as a group often complete multiple activities in a single meeting (over a day or two), decreasing stage duration, whereas those that complete them individually tend to extend their progress over time. The implication is that interactive implementation is more efficient and potentially more successful.

Conclusion

As has been discussed in this paper, the CDT model incorporates many of the goals and specific features of the ISF support system. The overlap between these models, which were conceptualized and developed within entirely separate disciplines (public mental health for the CDT and prevention science for the ISF), speaks to their potential utility for advancing evidence-based work in the young field of implementation science. As highlighted in the ISF support system, the CDT model relies heavily on interactions among developers, technical support staff, key stakeholders, and service providers, each providing essential information regarding the potential for successful implementation. By including each of these perspectives in the SIC assessment, the current study provides a unique opportunity to empirically evaluate whether the elements of the ISF, as exemplified through the CDT, are indeed beneficial in moving communities toward Getting To Outcomes. If the CDT model demonstrates positive outcomes for implementation success, this study will help to support the ISF as a framework for bridging the chasm between research and practice.

Acknowledgments

This work was supported by the National Institute of Mental Health R01MH076158 and the National Institute on Drug Abuse KDA021603.

Footnotes

Disclaimer: Chamberlain is a partner in Treatment Foster Care Consultants Inc, a company that provides consultation to systems and agencies wishing to implement MTFC.

References

- Aarons GA, Covert J, Skriner LC, Green A, Marto D, Garland AF, et al. The eye of the beholder: Youths and parents differ on what matters in mental health services. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37:459–467. doi: 10.1007/s10488-010-0276-1.

- Aarons GA, Hurlbert M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7.

- Alexander JF, Pugh C, Parsons BV, Sexton TL. Functional family therapy. In: Elliott DS, editor. Blueprints for violence prevention (Book 3). 2nd ed. Center for the Study and Prevention of Violence, Institute of Behavioral Science, University of Colorado; Boulder, CO: 2000.

- Blasé KA, Fixsen DL, Duda MA, Metz AJ, Naoom SF, Van Dyke MK. Implementation challenges and successes: Some big ideas. Presented at the Blueprints for Violence Prevention Conference; San Antonio, TX. 2010.

- Chamberlain P. Treatment foster care. U.S. Department of Justice; Washington, DC: 1998. (Family strengthening series). OJJDP Bulletin NCJ 1734211.

- Chamberlain P. The Oregon multidimensional treatment foster care model: Features, outcomes, and progress in dissemination. Moving evidence-based treatments from the laboratory into clinical practice. In: Schoenwald S, Henggeler S, editors. Cognitive and Behavioral Practice. Vol. 10. 2003. pp. 303–312. doi: 10.1016/S1077-7229(03)80048-2.

- Chamberlain P, Brown CH, Saldana L. Observational measure of implementation progress: The stages of implementation completion (SIC). Manuscript submitted for publication. 2011. doi: 10.1186/1748-5908-6-116.

- Chamberlain P, Brown CH, Saldana L, Reid J, Wang W, Marsenich L, et al. Engaging and recruiting counties in an experiment on implementing evidence-based practice in California. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35:250–260. doi: 10.1007/s10488-008-0167-x.

- Chamberlain P, Leve LD, DeGarmo DS. Multidimensional Treatment Foster Care for girls in the juvenile justice system: 2-year follow-up of a randomized clinical trial. Journal of Consulting and Clinical Psychology. 2007;75:187–193. doi: 10.1037/0022-006X.75.1.187.

- Chamberlain P, Reid JB. Parent observation and report of child symptoms. Behavioral Assessment. 1987;9:97–109.

- Elliott DS, editor. Blueprints for violence prevention. Institute of Behavioral Science, Regents of the University of Colorado; Boulder, CO: 1998.

- Fixsen D, Blase K. NIRN Implementation Brief, 1. The University of North Carolina; Chapel Hill: 2009. Implementation: The missing link between research and practice.

- Glisson C, Hemmelgarn A. The effects of organizational climate and interorganizational coordination on the quality and outcomes of children's service systems. Child Abuse and Neglect. 1998;22:401–421. doi: 10.1016/s0145-2134(98)00005-2.

- Goldstein AP, Glick B. Aggression replacement training: Curriculum and evaluation. Simulation & Gaming. 1994;25:9–26. doi: 10.1177/1046878194251003.

- Henggeler SW, Schoenwald SK, Borduin CM, Rowland MD, Cunningham PB. Multisystemic treatment of antisocial behavior in children and adolescents. Guilford; New York: 1998.

- Hoagwood K, Olin S. The NIMH blueprint for change report: Research priorities in child and adolescent mental health. Journal of the American Academy of Child and Adolescent Psychiatry. 2002;41:760–767. doi: 10.1097/00004583-200207000-00006.

- Kalbfleisch JD, Prentice RL. The statistical analysis of failure time data. 2nd ed. Wiley; New York: 2002.

- Leve LD, Chamberlain P, Reid JB. Intervention outcomes for girls referred from juvenile justice: Effects on delinquency. Journal of Consulting and Clinical Psychology. 2005;73:1181–1185. doi: 10.1037/0022-006X.73.6.1181.

- Marsenich L. Evidence-based practices in mental health services for foster youth. California Institute for Mental Health; Sacramento, CA: 2002.

- Rogers EM. Diffusion of innovations. 4th ed. Free Press; New York: 1995.

- Saldana L, Chamberlain P, Wang W, Hendricks Brown C. Predicting Program Start-Up using the Stages of Implementation Measure. Administration and Policy in Mental Health and Mental Health Services Research. 2011. doi: 10.1007/s10488-011-0363-y.

- Schoenwald SK, Sheidow AJ, Letourneau EJ. Toward effective quality assurance in evidence-based practice: Links between expert consultation, therapist fidelity, and child outcomes. Journal of Clinical Child & Adolescent Psychology. 2004;33:94–104. doi: 10.1207/S15374424JCCP3301_10.

- Sosna T, Marsenich L. Community Development Team model: Supporting the model adherent implementation of programs and practices. California Institute for Mental Health Publication; Sacramento, CA: 2006.

- U. S. Department of Health and Human Services. Children and mental health. In: Mental health: A report of the Surgeon General (DHHS Publication No. DSL 2000–0134–P). U. S. Government Printing Office; Washington, DC: 2000a.

- U. S. Department of Health and Human Services. Prevention of violence. In: Mental health: A report of the Surgeon General (DHHS Publication No. DSL 2000–0134–P). U. S. Government Printing Office; Washington, DC: 2000b.

- Verbeke W, Volgering M, Hessels M. Exploring the conceptual expansion within the field of organizational behaviour: Organizational climate and organizational culture. Journal of Management Studies. 1998;35:303–329.

- Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, et al. Bridging the gap between prevention research and practice: The interactive systems framework for dissemination and implementation. American Journal of Community Psychology. 2008;41:171–181. doi: 10.1007/s10464-008-9174-z.

- Wandersman A, Imm P, Chinman M, Kaftarian S. Getting to outcomes: A results-based approach to accountability. Evaluation and Program Planning. 2000;23:389–395. doi: 10.1016/S0149-7189(00)00028-8.

- Wang W, Saldana L, Brown CH, Chamberlain P. Factors that influenced county system leaders to implement an evidence-based program: A baseline survey within a randomized controlled trial. Implementation Science. 2010;5. doi: 10.1186/1748-5908-5-72.