Summary

Current views on multisensory motion integration assume separate substrates where visual motion perceptually dominates tactile motion [1, 2]. However, recent neuroimaging findings demonstrate strong activation of visual motion processing areas by tactile stimuli [3–6], implying a potentially bidirectional relationship. To test the relationship between visual and tactile motion processing, we examined the transfer of motion aftereffects. In the well-known visual motion aftereffect, adapting to visual motion in one direction causes a subsequently presented stationary stimulus to be perceived as moving in the opposite direction [7, 8]. The existence of motion aftereffects in the tactile domain was debated [9–11], though robust tactile motion aftereffects have recently been demonstrated [12, 13]. By using a motion adaptation paradigm, we found that repeated exposure to visual motion in a given direction produced a tactile motion aftereffect, the illusion of motion in the opponent direction across the finger pad. We also observed that repeated exposure to tactile motion induces a visual motion aftereffect, biasing the perceived direction of counterphase gratings. These crossmodal aftereffects, operating both from vision to touch and from touch to vision, present strong behavioral evidence that the processing of visual and tactile motion relies on shared representations that dynamically impact modality-specific perception.

Results

Experiment 1: Vision to Touch

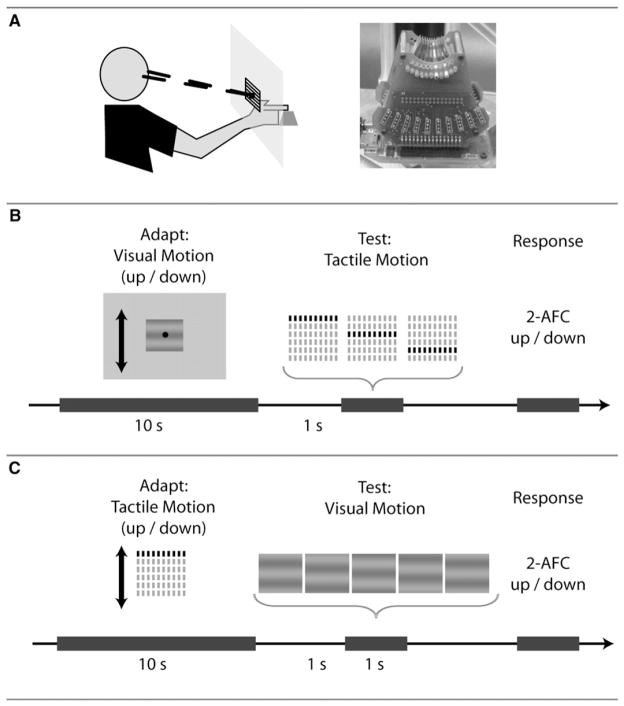

In the first experiment, we asked whether adaptation to visual motion could induce a tactile motion aftereffect. Observers placed their right index finger (distal phalangeal pad) on a tactile stimulation apparatus 35 cm behind and 35 cm below the fixation point on the monitor. The fingertip was positioned in the foveal visual field, with the index finger pointing “up” in a retinotopic projection. This setup approximated the best three-dimensional correspondence between the point of regard and the fingertip (Figure 1A). In this configuration, motion distal (outward) on the fingertip was matched to motion upward on the screen; if tactile motion along the fingertip were visible, it would induce upward motion in the observer’s visual field.

Figure 1. Experimental Set Up and Methods.

(A) Left: Observers were positioned with their right index finger placed on the tactile stimulation apparatus 35 cm behind and 35 cm below the fixation point on the monitor. Right: Image of the STReSS2 distributed tactile stimulator, composed of 60 piezoelectric actuators forming a 6 × 10 array with spatial resolution of 1.8 by 1.2 mm.

(B) Experiment 1 Trial Design. Observers were presented with 10 s of visual motion (up or down) followed by a 1 s blank interval and a brief tactile motion stimulus consisting of three successive rows of stimulation. The interstimulus onset (ISO) between the tactile rows was varied to measure the effect of the visual motion adaptation on the tactile test stimulus. Observers had to respond in a 2-alternative forced choice task whether the tactile test stimulus moved up or down.

(C) Experiment 2 Trial Design. Observers were first presented with ~10 s of tactile motion sweeps over the finger pad, either upward or downward. After a 1 s pause, a visual motion stimulus was presented, consisting of 5 frames each presented for 200 ms. The phase between the gratings (phase jump) at each frame was varied to measure the effect of the tactile motion stimulus on the visual test stimulus. Observers had to respond in a 2-alternative forced choice task whether the visual test stimulus moved up or down.

On each trial, a drifting visual grating was presented for 10 s to the foveal visual field (subtending 1.9 × 2.3 degrees, 10% Michelson contrast, spatial frequency = 1.05 cpd, temporal frequency = 2 Hz). After a period of visual motion adaptation, the visual display blanked, and 1 s later, the tactile test stimulus was delivered, which consisted of a brief motion sweep across the finger pad parallel to the visual grating orientation. To deliver tactile sweeps, we used the STReSS2 tactile stimulator [14] comprising an array of 6 × 10 piezoelectric tactors (Figure 1A, schematically depicted in Figure 1B; see Experimental Procedures). Observers reported whether this tactile motion stimulus was perceived as moving upward or downward. The tactile motion stimulus varied in upward or downward motion speed by adjusting the time between three successively activated rows. Each row activated for 100 ms, with a 33.3 Hz vibration frequency to target rapidly adapting mechanoreceptor populations [12].

If aftereffects transfer from vision to touch, then adapting to visual motion upward would cause the unbiased tactile stimulus (0 ms interstimulus onset) to be perceived as downward. Thus, upward tactile motion would be required to “null” the motion aftereffect. The point of subjective equality (PSE) was reached when sufficient upward nulling motion was delivered so that the tactile stimulus was reported equally “upward” and “downward.” We quantified the magnitude of the induced aftereffect as the difference in the PSE after upward versus downward motion adaptation. This method allows a measure of the magnitude of the aftereffect quantified in terms of physical rather than subjective properties.
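As a minimal sketch of this measure, the aftereffect magnitude for each observer can be computed as the PSE after upward adaptation minus the PSE after downward adaptation, and the group effect can be summarized with a paired t statistic on those differences. The PSE values below are invented placeholders, not data from this study, and the function names are illustrative only.

```python
import math
import statistics

def aftereffect_magnitudes(pse_up, pse_down):
    """Per-observer aftereffect: PSE after upward minus PSE after downward adaptation."""
    return [u - d for u, d in zip(pse_up, pse_down)]

def paired_t(differences):
    """Paired t statistic (one-sample t on the differences) and its degrees of freedom."""
    n = len(differences)
    mean_diff = statistics.mean(differences)
    sem = statistics.stdev(differences) / math.sqrt(n)
    return mean_diff / sem, n - 1

# Hypothetical PSEs (ms ISO) for 8 observers; NOT the values measured in this study.
pse_up = [6.0, 4.5, 7.0, 5.0, 3.5, 6.5, 4.0, 5.5]
pse_down = [-3.0, -1.5, -4.0, -2.0, -0.5, -3.5, -1.0, -2.5]

shifts = aftereffect_magnitudes(pse_up, pse_down)
t_value, df = paired_t(shifts)
print(f"mean shift = {statistics.mean(shifts):.2f} ms, t({df}) = {t_value:.2f}")
```

With eight observers the statistic has 7 degrees of freedom, which matches the t(7) values reported in the Results.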

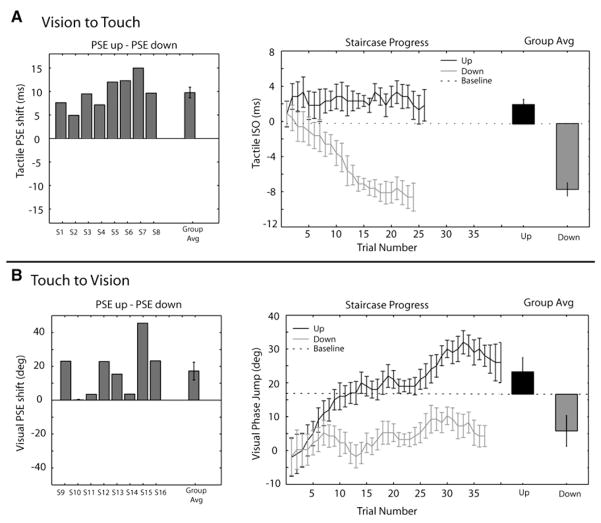

Adapting to upward versus downward visual motion significantly shifted the point of subjective equality of the tactile motion stimulus (Experiment 1). In Figure 2A, the right panel shows the average PSE estimation progress across subjects. Visual motion adaptation upward (gray line) caused the tactile stimulus to be perceived as moving downward, leading to an upward-shifted tactile PSE. Similarly, visual motion downward (black line) caused the tactile motion to be perceived as upward, leading to a downward tactile PSE. If there was no effect of visual motion adaptation on subsequent tactile motion judgments, these two staircases would not diverge. A significant difference in the point of subjective equality after upward adaptation compared to downward adaptation was observed (t(7) = 8.96, p < 0.001). The difference between the PSE after upward versus downward motion adaptation is plotted for each subject and the group (Figure 2A, left). The effect was observed in the predicted direction for all eight subjects (sign test: p < 0.01), demonstrating that visual motion adaptation can induce a tactile motion aftereffect. These data support the predicted crossmodal impact of visual motion stimulation on tactile motion discrimination.
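The sign test reported above can be checked with a short exact binomial calculation. This is a sketch under the assumption of a 50/50 null hypothesis; the text does not state whether the test was one- or two-sided, and the function name is illustrative.

```python
from math import comb

def sign_test_p(n_predicted, n_total):
    """Two-sided exact sign test p-value under a 50/50 null hypothesis."""
    k = max(n_predicted, n_total - n_predicted)
    # Probability of k or more observers falling in the majority direction.
    tail = sum(comb(n_total, i) for i in range(k, n_total + 1)) / 2 ** n_total
    return min(1.0, 2 * tail)

p = sign_test_p(8, 8)
print(p)  # 0.0078125
```

With all eight of eight observers in the predicted direction, the two-sided value is 2/256 and the one-sided value is 1/256, so either reading is consistent with the reported p < 0.01.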

Figure 2. Results of Experiments 1 and 2.

(A) Experiment 1 Results. Left: The point of subjective equality (PSE) was measured for each subject and condition, and the magnitude of the aftereffect was quantified as the difference in tactile PSE between adapting to upward and downward visual motion. The PSE shift is shown for each subject and the group average. Right: The average threshold estimation staircase across observers is plotted for upward (gray) and downward (black) visual motion-adaptation conditions. The y axis shows the tactile test stimulus setting, measured by the interstimulus onset (ISO) between the tactile bars. Positive values indicate upward tactile motion and negative values indicate downward tactile motion. Baseline PSE (dotted line) for the tactile stimulus without prior visual motion is also shown (see Supplemental Data). Adjacent to the average staircase data, the group average upward and downward component PSE shifts are plotted relative to baseline.

(B) Experiment 2 Results. Left: The point of subjective equality (PSE) was measured for each subject and condition, and the magnitude of the aftereffect was quantified as the difference in visual PSE between adapting to upward and downward tactile motion. The PSE shift is shown for each subject and the group average. Right: Average threshold estimation staircase shown for tactile adaptation upward (gray) and downward (black). The y axis shows the phase jump in degrees from 180, where 0 degrees is a perfect counterphase grating, positive degree jumps indicate upward visual motion, and negative degree phase jumps indicate downward visual motion. Baseline PSE (dotted line) for the visual stimulus without prior tactile motion is also shown (see Supplemental Data). Adjacent to the average staircase data, the group average upward and downward component PSE shifts are plotted relative to baseline. All error bars reflect within-subject standard error [39].

Next, we assessed the reliability of this effect within observers. Here, staircases for the two adaptation conditions can be said to significantly diverge if the distribution of the staircase reversals are significantly different from each other. This measure is strict, because the staircases for both conditions began at the same seed, and thus reversals that happen early in a trial are likely to be of similar magnitude. Despite use of this conservative metric, all eight subjects showed a significant difference between the reversals in the upward and downward adaptation condition (unpaired t tests, p’s < 0.05).
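A within-observer comparison of this kind could be sketched as follows. The text says only that unpaired t tests were used, so the pooled-variance (Student) form here is an assumption, and the reversal values are invented for illustration.

```python
import math
import statistics

def unpaired_t(a, b):
    """Student's two-sample t statistic (pooled variance) and degrees of freedom."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.mean(a) - statistics.mean(b)) / se, na + nb - 2

# Hypothetical staircase reversals (ms ISO) for ONE observer; not data from this study.
up_reversals = [5, 3, 7, 5, 9, 7, 5, 7]
down_reversals = [-3, -1, -5, -3, -1, -3, -5, -3]

t_value, df = unpaired_t(up_reversals, down_reversals)
print(f"t({df}) = {t_value:.2f}")
```

Because both staircases start from the same seed, early reversals in the two conditions tend to be similar, which pulls the two distributions together and makes this comparison conservative.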

The magnitude of the aftereffect induced by each direction of motion adaptation can be assessed by examining the shift in the PSE after adaptation relative to the baseline point of subjective equality (no prior visual adaptation). The downward-induced motion aftereffect differed significantly from the baseline PSE, and the upward-induced aftereffect differed marginally (upward adaptation: t(7) = 2.33, p = 0.052; downward adaptation: t(7) = 6.63, p < 0.001). Further, the magnitudes of these component aftereffects were significantly different (t(7) = 2.61, p < 0.05). There was a stronger tactile motion aftereffect after downward visual motion adaptation than after upward visual motion adaptation. Further work is required to understand whether this asymmetry arises from the postural configuration of the experimental setup or from more intrinsic properties of the system, such as anisotropies showing an overrepresentation of downward direction [15, 16].

Experiment 2: Touch to Vision

In the second experiment, we asked whether adaptation to tactile motion could induce a visual motion aftereffect. Pilot studies were conducted to ensure that tactile motion aftereffects could be reliably induced with the adapting parameters (see Supplemental Data available online). In each trial, observers were presented with ~10 s of tactile motion sweeps (30 ms/row for 6 rows, followed by a 150 ms gap, 33.3 Hz vibration frequency). One second after the cessation of the tactile motion adaptation stimulus, a low-contrast visual counterphase flickering grating was presented for 1 s, and observers reported the direction of visual motion (see Figure 1C). This visual motion stimulus varied in motion speed by adjusting the phase jump between successive frames of the grating. A phase jump of 180° (a standard unbiased counterphase flickering grating) is denoted as a 0° motion bias, with positive numbers arbitrarily indicating upward motion in the stimulus and negative numbers indicating downward motion in the stimulus. As before, the point of subjective equality for the visual motion stimulus was estimated, and we quantified the effect as the shift in PSE after adapting to upward versus downward tactile motion.
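The relation between motion bias and phase jump can be illustrated with a small sketch that lists the grating phase on each of the five test frames. The function name is hypothetical, and which direction of phase advance corresponds to "upward" on the display is not specified here, so the sign convention is an assumption.

```python
def frame_phases(motion_bias_deg, n_frames=5):
    """Grating phase (degrees, modulo 360) on each test frame.

    The phase jump between successive frames is 180 + motion_bias_deg, so a
    bias of 0 yields a pure counterphase grating with no net motion energy.
    """
    phase_jump = 180.0 + motion_bias_deg
    return [(k * phase_jump) % 360.0 for k in range(n_frames)]

print(frame_phases(0))  # [0.0, 180.0, 0.0, 180.0, 0.0]
print(frame_phases(6))  # [0.0, 186.0, 12.0, 198.0, 24.0]
```

At zero bias the grating simply alternates between two phases; a nonzero bias makes the pattern creep by that many degrees on each frame beyond the 180° flip, which introduces a net direction.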

Adapting to tactile motion caused a visual motion aftereffect, shifting the point of subjective equality for the visual flickering gratings in opponent directions. The right panel of Figure 2B shows the average PSE estimation for the visual counterphase gratings after upward tactile motion adaptation (gray line) and downward tactile motion adaptation (black line), compared to the baseline point of subjective equality measured with no prior adaptation (dotted line). If there was no effect of tactile motion adaptation on subsequent visual motion processing, the staircase progress for both conditions would not significantly diverge over time. However, we see a significant shift in the point of subjective equality after upward versus downward tactile motion exposure (t(7) = 3.25, p < 0.05). To quantify this effect, we again measured the difference in the PSE after upward versus downward motion and plot this difference score for all observers and the group average in Figure 2B (left). After exposure to tactile motion in one direction, visual motion bias in the same direction was required to null the motion aftereffect in all eight observers.

This crossmodal aftereffect was observed relative to an underlying net bias to perceive foveal low-contrast counterphase flickering gratings with downward motion. The point of subjective equality for perceiving the direction of low-contrast counterphase flickering gratings (with no prior motion adaptation) is not centered around the stimulus with 180° phase jump between successive frames. In fact, this physically unbiased stimulus is predominantly perceived as motion downward [17]. All observers showed a bias to perceive this visual stimulus as downward, both in the present experiment and in a supporting experiment conducted to verify this bias with a method of constant stimuli paradigm (see Supplemental Data). These data reveal an intrinsic pre-existing bias to see downward motion in a highly ambiguous vertical stimulus. Importantly, in the present investigation of crossmodal aftereffects, we see motion aftereffects relative to this bias within visual motion perception—this baseline does not affect our measurements of the shift in PSE observed between upward and downward motion adaptation.

To assess the reliability of this motion aftereffect within observers, we again examined the distributions of staircase reversals for upward and downward adaptation conditions. In 5 of the 8 subjects, there was a significant difference between the reversals in the upward and downward adaptation condition (unpaired t tests, p’s < 0.05). In all eight subjects, the average difference between upward and downward motion adaptation conditions was observed in the expected direction (see Figure 2B, left, sign test: p < 0.01).

The magnitude of the aftereffect induced by each direction of motion was further assessed by comparing the PSE after adaptation in each direction to the baseline PSE. Here, neither the upward nor the downward motion aftereffect component was significantly different from baseline (upward: t(7) = 1.13, p > 0.1; downward: t(7) = 1.60, p > 0.1). Because of the visual baseline bias, any early staircase reversals might obscure the relative differences between these conditions. To address this possibility systematically, we conducted a supplemental analysis to calculate the PSEs with only later reversals (see Supplemental Data). This analysis showed that the effect of downward adaptation relative to baseline was significant over a range of PSE calculation methods, while the upward component approached significance. Further, when the magnitudes of each component aftereffect were averaged with all reversals, the PSE shift was significantly different from the baseline (t(7) = 3.25, p < 0.05). Importantly, if there was no impact of touch on vision, there would be no difference between upward and downward adaptation; however, the staircases shown in Figure 2B reliably diverge.

Discussion

These results demonstrate that visual motion processing and tactile motion processing are not isolated, but instead are susceptible to adaptation in the other modality. In Experiment 1, adaptation to a visual drifting grating led to a tactile motion aftereffect, the perception of tactile motion across the finger in the opposite direction to the visual motion. In Experiment 2, the reciprocal crossmodal interaction was observed: adaptation to repeated tactile motion across the finger tip led to a motion aftereffect in the visual modality, biasing the direction of ambiguous counterphase gratings. These crossmodal motion aftereffects, measured as a shift in the point of subjective equality after upward versus downward adaptation, were observed in all individual subjects and in the group average for both modalities. Within individual subjects, a significant difference in the mean reversal values was observed for all subjects in the visual-to-tactile illusion and in the majority of subjects (5 of 8) in the tactile-to-visual illusion.

There are several key implications of these findings. First, by using an adaptation paradigm, crossmodal aftereffects indicate that the perceptual experience of one modality can be influenced by a previously presented stimulus in another modality. Second, aftereffects are taken as evidence that there are adaptive filters tuned to specific stimulus properties [8], where the adapted state of those filters determines how subsequently presented stimuli will be processed. Here, the neural processing of tactile motion depends on substrates influenced by adaptation of vision. Similarly, processing of visual motion depends on substrates adapted by tactile motion. Thus, the transfer of these aftereffects between modalities directly supports the view that at some point in the motion processing streams, these two modalities have partially overlapping substrates. Third, these data show that at least some shared representations between touch and vision are directionally specific, and as such depend on correspondence between directional motion in the visual and tactile domain. Finally, although most studies examining visual and haptic motion find only that vision influences touch [1, 2], the present results demonstrate that touch can impact the perception of visual motion. This finding suggests that bidirectional crossmodal interactions may be more prevalent than currently believed.

Implications for Neural Architecture

The current psychophysical results cannot reveal the site of shared neural representations underlying these crossmodal interactions in motion processing. However, one prominent candidate is the V5/MT+ complex. Area hMT+ is well known for its visual motion selectivity (see [18] for a review). Recent studies have now shown tactile activation of visual MT+/V5 with tactile motion stimuli [3, 4], in blind participants [5], and even during the presentation of vibrotactile stimuli that do not overtly translate in space and time across the skin [6]. Interestingly, area hMT+ is deactivated by auditory motion in sighted participants [19], and thus does not follow the same pattern as tactile and visual motion in this area. More generally, activations of other visual areas by tactile and auditory stimuli [20–27] suggest that sensory input does not necessarily proceed independently through unisensory processing streams before being combined in multisensory zones ([28, 29]; see also [30]).

Unimodal motion aftereffects are thought to originate not from adaptation in one area (e.g., V1) but from adaptation at many stages of processing both in early sensory areas and in higher level areas (e.g., MT+ complex, superior temporal sulcus, intraparietal sulcus) [8]. In this model of hierarchical adaptation, as the stimulus is processed along the visual stream, more and more general properties of the stimulus are adapted. Each level of processing can be probed with different test stimuli. For example, after the same visual motion adaptation, a subsequent static grating will lead to retinotopic aftereffects, whereas a subsequent dynamic grating will generate aftereffects in a wider range of retinotopic locations [8, 31, 32]. In the present studies, the tactile test stimulus can be thought of as a further probe of the adapted state induced by the same visual motion adaptation. The extent to which processing of the tactile test stimulus relies on the adapted circuits from the previous visual stimulus determines the properties of aftereffects that can be observed.

Implications for Multisensory Integration

The use of adaptation is an important paradigm for understanding multisensory representation, because the stimuli are not delivered concurrently. This removes concerns about how attention is divided among competing sensory stimuli. Further, it demonstrates that processing sensory input from one modality in isolation can be influenced by adaptation from another modality. Kitagawa and Ichihara employed motion aftereffects to examine motion processing between vision and audition ([33], see also [34]). Interestingly, they found that adapting to visual motion induced an auditory motion aftereffect; however, the influence of auditory motion adaptation on vision was not observed. Most previous studies on multisensory motion use simultaneous stimulus presentation and have demonstrated that vision can influence tactile and auditory motion judgments (e.g., [35–37], see [2] for a review). However, the influence of tactile stimulation on the perception of visual motion is not often examined, and when studied, touch is typically reported to be incapable of biasing vision (e.g., [2]). Based on this body of evidence, some current views on motion processing hold that vision uniformly “dominates” motion judgments in both the auditory and the tactile modalities [2]. However, in contrast to the vision-dominant view, the present results demonstrate that touch can impact the perception of visual motion.

The precise arrangement of stimulus factors was likely crucial to the induction of the illusions reported here and provides an explanation for the divergence of these findings from previous studies. To probe the visual aftereffect after tactile adaptation (Experiment 2), we chose a visual stimulus that was of low contrast, dynamic, and counterphase flickering. This dynamic test stimulus has two clear perceptual interpretations as either upward or downward, and we believe that this bistability makes it more likely to be susceptible to tactile motion adaptation than, e.g., a static visual grating. Indeed, in Culham et al., high-level motion adaptation from attentive tracking induced aftereffects only when probed with a similar dynamic test stimulus and did not arise with a static test stimulus [38]. We suggest that tactile motion was able to influence visual motion processing in our study because of our choice of the visual stimulus used to probe the adapted state of the neural system.

Conclusion

Here we show that motion adaptation in the visual modality causes an illusion of tactile motion in the opposite direction. Further, we show the reciprocal interaction, in which tactile motion adaptation leads to a visual motion aftereffect. The transfer of these aftereffects directly supports the view that motion signals from visual and tactile input pathways are represented with partially overlapping neural substrates. Finally, these data generally support the emerging view that sensory processing, even at relatively “early” levels in neural pathways, can show robust crossmodal interactions leading to perceptual consequences [28, 29].

Experimental Procedures

Participants

Sixteen observers with normal or corrected-to-normal vision participated (8 in Experiment 1 and 8 in Experiment 2). All gave informed consent approved by the Committee On the Use of Humans as Experimental Subjects and all procedures conformed to the principles expressed in the Declaration of Helsinki.

Apparatus

Tactile stimuli were applied with the STReSS2 distributed tactile stimulator [14]. The active area of the stimulator was 10.6 × 11.3 mm, composed of 60 piezoelectric actuators forming a 6 × 10 array with spatial resolution of 1.8 by 1.2 mm. Each actuator can be independently driven to tangentially deform the skin with a maximum force of 0.15 N and a free deflection of 0.1 mm. A 33.3 Hz vibrotactile mode was employed. All actuators were controlled by custom-developed software running on the Xenomai real-time system. For terminology purposes only, a tactile “bar” of stimulation refers to one row of 10 stimulation sites, and tactile “sweeps” refer to successive rows being stimulated over time.

General Procedure

Observers underwent training, baseline measurements, and adaptation blocks. Training consisted of sweeps across the finger to familiarize participants with the tactile stimulator. Specific training for each experiment was also performed (see Supplemental Data). Three baseline staircases were completed, in which no adaptation preceded the judgment of the visual or tactile test stimuli. Next, staircases were presented for each condition, blocked, with the order counterbalanced across subjects. Instructions were to attend to the stimulation during the adaptation period and to report whether the subsequent test stimulus moved up or down.

Experiment 1

The adapting visual grating subtended 1.9 × 2.3 degrees, with 10% Michelson contrast, a spatial frequency of 1.05 cycles per degree, and a temporal frequency of 2 Hz, updated at a 60 Hz monitor refresh rate and presented for 10 s. A black fixation dot remained on the screen through the adaptation period. The tactile test stimulus consisted of three tactile bars (first row, third row, fifth row), played for 100 ms each, with a frequency of 33.3 Hz. The timing between the bar onsets (interstimulus onset, ISO) varied according to the staircase.
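The timing of the three-bar tactile test stimulus can be sketched as a simple schedule. The row indices and the 100 ms bar duration come from the description above; the mapping of positive ISO to ascending row order (taken here as "upward") is an assumption made for illustration, as are the function and constant names.

```python
BAR_DURATION_MS = 100   # each bar is played for 100 ms
TEST_ROWS = (1, 3, 5)   # first, third, and fifth rows of the array

def tactile_test_schedule(iso_ms):
    """Return (row, onset_ms, offset_ms) for the three bars of the test stimulus.

    Assumption: a positive ISO plays rows in ascending order and a negative ISO
    plays them in descending order; an ISO of 0 starts all three bars together.
    """
    rows = TEST_ROWS if iso_ms >= 0 else TEST_ROWS[::-1]
    step = abs(iso_ms)
    return [(row, i * step, i * step + BAR_DURATION_MS) for i, row in enumerate(rows)]

print(tactile_test_schedule(3))   # [(1, 0, 100), (3, 3, 103), (5, 6, 106)]
print(tactile_test_schedule(-3))  # [(5, 0, 100), (3, 3, 103), (1, 6, 106)]
```

Because the ISO values used by the staircase are only a few milliseconds while each bar lasts 100 ms, the three bars overlap almost entirely in time, and direction is carried by the small onset asynchrony.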

Experiment 2

For the adapting tactile motion sweeps, each row was vibrated for 30 ms, for a total sweep time of 180 ms followed by 150 ms pause. This was repeated 28 times for 9.24 s of adaptation. Deflection frequency was 33.3 Hz. A black fixation dot remained on the screen through the adaptation period. The visual test stimulus used the same parameters as in the previous experiment with the contrast reduced to 1%. The test stimulus was 1 s in duration, consisting of 5 frames of a grating presented for 200 ms each, where the phase offset between successive frames was varied according to the staircase progress (see [38]).
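The adaptation timing stated above can be verified with a few lines of arithmetic. The constants are taken from the text; the helper function and its name are illustrative only.

```python
ROW_MS = 30     # vibration time per row
N_ROWS = 6      # rows per sweep
GAP_MS = 150    # pause after each sweep
N_SWEEPS = 28   # number of repetitions

sweep_ms = ROW_MS * N_ROWS             # 180 ms per sweep
cycle_ms = sweep_ms + GAP_MS           # 330 ms per sweep plus pause
total_s = N_SWEEPS * cycle_ms / 1000   # 9.24 s of adaptation

def row_onsets_ms(sweep_index):
    """Onset of each row within a given sweep (0-based), in ms from adaptation start."""
    start = sweep_index * cycle_ms
    return [start + r * ROW_MS for r in range(N_ROWS)]

print(sweep_ms, cycle_ms, total_s)
print(row_onsets_ms(1))  # [330, 360, 390, 420, 450, 480]
```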

Staircase Procedure

For each condition, one staircase was conducted and conditions were run in a blocked design with order counterbalanced across subjects. Each block proceeded for 20 reversals in the staircase up to a maximum of 40 trials. Breaks were given after 20 trials. The point of subjective equality (PSE) was calculated by discarding the first two reversals and averaging the remaining reversals. The interstimulus onset (ISO) between the three tactile bars or the phase jump between successive frames of the counterphase grating was controlled by the staircase. The tactile staircase seed was always 1 ms ISO, with steps of 2 ms following a 1-up 1-down staircase procedure. In the visual staircases, the seed was 2 degrees phase-jump, with a 4 degree step size.
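The staircase logic described above can be sketched as follows. This is a simplified illustration, not the software used in the study: the observer is replaced by a deterministic stand-in function, and all names are hypothetical.

```python
def run_staircase(respond_up, seed, step, max_reversals=20, max_trials=40):
    """1-up 1-down staircase: step down after an "up" report, up after a "down" report.

    respond_up(level) -> bool stands in for the observer's response.
    Returns the stimulus levels at which the response direction reversed.
    """
    level, last, reversals = seed, None, []
    for _ in range(max_trials):
        said_up = respond_up(level)
        if last is not None and said_up != last:
            reversals.append(level)
            if len(reversals) == max_reversals:
                break
        last = said_up
        level += -step if said_up else step
    return reversals

def pse_from_reversals(reversals, n_discard=2):
    """Average the reversal levels after discarding the first n_discard."""
    kept = reversals[n_discard:]
    if not kept:
        raise ValueError("not enough reversals to estimate the PSE")
    return sum(kept) / len(kept)

def ideal_observer(level, true_pse=4):
    """Noise-free stand-in observer: reports "up" whenever level exceeds its PSE."""
    return level > true_pse

# Tactile staircase parameters from the text: seed 1 ms ISO, 2 ms steps.
reversals = run_staircase(ideal_observer, seed=1, step=2)
print(len(reversals), pse_from_reversals(reversals))  # 20 4.0
```

With a noise-free observer the staircase oscillates between the two levels that bracket the true PSE, so the average of the retained reversals recovers it; a real observer's responses are noisy, and the reversals scatter more widely around the PSE.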

Acknowledgments

This work was made possible with the support of the McGovern Institute for Brain Research and the Mitsui Foundation to C.I.M., the NDSEG to T.K., the NSERC of Canada through a Discovery Grant to V.H., and the McGill University Eric L. Adler Fellowship to Q.W. The authors declare they have no competing interests.

Footnotes

Supplemental Data include Supplemental Experimental Procedures and five figures and can be found with this article online at http://www.cell.com/current-biology/supplemental/S0960-9822(09)00886-0.

References

- 1. Soto-Faraco S, Kingstone A. Multisensory integration of dynamic information. In: Calvert G, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. Cambridge, MA: The MIT Press; 2004. pp. 49–68.

- 2. Soto-Faraco S, Spence C, Lloyd D, Kingstone A. Moving multisensory research along: Motion perception across sensory modalities. Curr Dir Psychol Sci. 2004;13:29–32.

- 3. Hagen MC, Franzen O, McGlone F, Essick G, Dancer C, Pardo JV. Tactile motion activates the human middle temporal/V5 (MT/V5) complex. Eur J Neurosci. 2002;16:957–964. doi: 10.1046/j.1460-9568.2002.02139.x.

- 4. Blake R, Sobel KV, James TW. Neural synergy between kinetic vision and touch. Psychol Sci. 2004;15:397–402. doi: 10.1111/j.0956-7976.2004.00691.x.

- 5. Ricciardi E, Vanello N, Sani L, Gentili C, Scilingo EP, Landini L, Guazzelli M, Bicchi A, Haxby JV, Pietrini P. The effect of visual experience on the development of functional architecture in hMT+. Cereb Cortex. 2007;17:2933–2939. doi: 10.1093/cercor/bhm018.

- 6. Beauchamp MS, Yaser NE, Kishan N, Ro T. Human MST but not MT responds to tactile stimulation. J Neurosci. 2007;27:8261–8267. doi: 10.1523/JNEUROSCI.0754-07.2007.

- 7. Wohlgemuth A. On the aftereffect of seen movement. Br J Psychol. 1911;1:1–117.

- 8. Mather G, Verstraten F, Anstis SM. The Motion Aftereffect: A Modern Perspective. Cambridge, MA: The MIT Press; 1998.

- 9. Thalman WA. The after-effect of movement in the sense of touch. Am J Psychol. 1922;33:268–276.

- 10. Hollins M, Favorov O. The tactile movement aftereffect. Somatosens Mot Res. 1994;11:153–162. doi: 10.3109/08990229409028868.

- 11. Lerner EA, Craig JC. The prevalence of tactile motion aftereffects. Somatosens Mot Res. 2002;19:24–29. doi: 10.1080/0899022012011309.

- 12. Watanabe J, Hayashi S, Kajimoto H, Tachi S, Nishida S. Tactile motion aftereffects produced by appropriate presentation for mechanoreceptors. Exp Brain Res. 2007;180:577–582. doi: 10.1007/s00221-007-0979-z.

- 13. Carter O, Konkle T, Wang Q, Hayward V, Moore CI. Tactile rivalry demonstrated with an ambiguous apparent-motion quartet. Curr Biol. 2008;18:1050–1054. doi: 10.1016/j.cub.2008.06.027.

- 14. Wang Q, Hayward V. Compact, portable, modular, high-performance, distributed tactile transducer device based on lateral skin deformation. 14th Symposium on haptic interfaces for virtual environment and teleoperator systems IEEE VR; 2006. pp. 67–72.

- 15. Van Essen DC, Newsome WT, Maunsell JH. The visual field representation in striate cortex of the macaque monkey: Asymmetries, anisotropies, and individual variability. Vision Res. 1984;24:429–448. doi: 10.1016/0042-6989(84)90041-5.

- 16. Ribot J, Tanaka S, O’Hashi K, Ajima A. Anisotropy in the representation of direction preferences in cat area 18. Eur J Neurosci. 2008;27:2773–2780. doi: 10.1111/j.1460-9568.2008.06219.x.

- 17. Ohtani Y, Ejima Y. Anisotropy for direction discrimination in a two-frame apparent motion display. Vision Res. 1997;37:765–767. doi: 10.1016/s0042-6989(96)00242-8.

- 18. Born RT, Bradley DC. Structure and function of visual area MT. Annu Rev Neurosci. 2005;28:157–189. doi: 10.1146/annurev.neuro.26.041002.131052.

- 19. Lewis JW, Beauchamp MS, DeYoe EA. A comparison of visual and auditory motion processing in human cerebral cortex. Cereb Cortex. 2000;10:873–888. doi: 10.1093/cercor/10.9.873.

- 20. Macaluso E, Frith CD, Driver J. Modulation of human visual cortex by crossmodal spatial attention. Science. 2000;289:1206–1208. doi: 10.1126/science.289.5482.1206.

- 21. Merabet LB, Swisher JD, McMains SA, Halko MA, Amedi A, Pascual-Leone A, Somers DC. Combined activation and deactivation of visual cortex during tactile sensory processing. J Neurophysiol. 2007;97:1633–1641. doi: 10.1152/jn.00806.2006.

- 22. Beauchamp MS. See me, hear me, touch me: Multisensory integration in lateral occipital-temporal cortex. Curr Opin Neurobiol. 2005;15:145–153. doi: 10.1016/j.conb.2005.03.011.

- 23. Prather SC, Votaw JR, Sathian K. Task-specific recruitment of dorsal and ventral visual areas during tactile perception. Neuropsychologia. 2004;42:1079–1087. doi: 10.1016/j.neuropsychologia.2003.12.013.

- 24. Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WH, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: Object-based representation in the human ventral pathway. Proc Natl Acad Sci USA. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- 25. Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- 26. Macaluso E, Frith CD, Driver J. Crossmodal spatial influences of touch on extrastriate visual areas take current gaze direction into account. Neuron. 2002;34:647–658. doi: 10.1016/s0896-6273(02)00678-5.

- 27. Zangaladze A, Epstein CM, Grafton ST, Sathian K. Involvement of visual cortex in tactile discrimination of orientation. Nature. 1999;401:587–590. doi: 10.1038/44139.

- 28. Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- 29. Stein BE, Stanford TR. Multisensory integration: Current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- 30. Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- 31. Nishida S, Sato T. Motion aftereffect with flickering test patterns reveals higher stages of motion processing. Vision Res. 1995;35:477–490. doi: 10.1016/0042-6989(94)00144-b.

- 32. Nishida S, Ashida H. A hierarchical structure of motion system revealed by interocular transfer of flicker motion aftereffects. Vision Res. 2000;40:265–278. doi: 10.1016/s0042-6989(99)00176-5.

- 33. Kitagawa N, Ichihara W. Hearing visual motion in depth. Nature. 2002;416:172–174. doi: 10.1038/416172a.

- 34. Valjamae A, Soto-Faraco S. Filling-in visual motion with sounds. Acta Psychol (Amst). 2008;129:249–254. doi: 10.1016/j.actpsy.2008.08.004.

- 35. Bensmaia SJ, Killebrew JH, Craig JC. Influence of visual motion on tactile motion perception. J Neurophysiol. 2006;96:1625–1637. doi: 10.1152/jn.00192.2006.

- 36. Craig JC. Visual motion interferes with tactile motion perception. Percept. 2006;35:351–367. doi: 10.1068/p5334.

- 37. James TW, Blake R. Perceiving object motion using vision and touch. Cogn Affect Behav Neurosci. 2004;4:201–207. doi: 10.3758/cabn.4.2.201.

- 38. Culham JC, Verstraten FA, Ashida H, Cavanagh P. Independent aftereffects of attention and motion. Neuron. 2000;28:607–615. doi: 10.1016/s0896-6273(00)00137-9.

- 39. Loftus GR, Masson MEJ. Using confidence intervals in within-subject designs. Psychon Bull Rev. 1994;1:476–490. doi: 10.3758/BF03210951.