Abstract

What progress prevention research has made comes through strategic partnerships with the communities and institutions that host this research, as well as with professional and practice networks that facilitate the diffusion of knowledge about prevention. We discuss partnership issues related to the design, analysis, and implementation of prevention research, and especially how questions about rigorous designs, including random assignment, get resolved through a partnership among community stakeholders, institutions, and researchers. These partnerships shape not only study design but also the data that can be collected and how results and new methods are disseminated. We also examine a second type of partnership, aimed at improving the implementation of effective prevention programs in practice. We draw on social network analysis to study partnership formation and function. The experience of the Prevention Science and Methodology Group, which is itself a networked partnership between scientists and methodologists, is highlighted.

Keywords: Prevention science, Implementation science, Social networks, Community-based participatory research

Introduction

The last two decades of scientific research have identified a wide range of interventions that show clear benefit in preventing drug and alcohol abuse, conduct disorder and delinquency, and internalizing behaviors (O’Connell et al. 2009). Testing these programs required the development of an expanded set of biostatistical and psychometric methods for measuring, modeling, and testing prevention programs. Indeed, a major reason that prevention research evolved so quickly is that rigorous research designs and models were developed hand in hand with prevention researchers so that theories and programs could be tested and refined empirically (Kellam et al. 1999; Howe et al. 2002; Kellam and Langevin 2003).

One network that has been active in developing, disseminating, and applying these new measures, models, and testing for prevention is the Prevention Science and Methodology Group (PSMG), which has been funded for 24 years by the National Institute of Mental Health (NIMH) and the National Institute on Drug Abuse (NIDA). PSMG was constituted early on as a methods development group in service of prevention science, and it evolved into a much broader partnership between prevention scientists and methodologists across the United States to address pressing methodologic challenges in prevention science and, more recently, in prevention implementation research. PSMG has collaborated on the design and analysis of dozens of preventive trials, and its contributory role in prevention science was recently highlighted in a National Academies report on prevention (O’Connell et al. 2009).

In this paper, we begin by discussing partnerships for methods development that were formed in the first phase of prevention science, which involved efficacy and effectiveness trials, and then turn to partnerships emerging around the implementation of effective programs. We discuss how interdisciplinary partnerships led to the use of innovative randomized trial designs for prevention, and how advanced analytic methods moved from esoteric technical journals into common practice. For the second phase, we describe how the recent drive to improve the practice of prevention through science is forming new partnerships that will guide the next generation of methods in prevention implementation. Because other papers in this volume focus exclusively on the community-scientist partnership, we concentrate on the role that partnerships play in the development, refinement, and dissemination of methods for prevention research. In keeping with our background as methodologists and behavioral intervention scientists, we consider partnership formation and function to be critical to the progress of prevention research and practice, and we use social network analysis to understand partnerships. We also use social network analysis to describe our own PSMG network and to examine how closely one of our partners in implementation research, the National Prevention Network, is connected with members of the research community. Finally, we present how modeling partnerships through agent-based models can generate hypotheses about improving the implementation process for prevention.

Partnerships in the Conduct of Prevention Effectiveness Research

Modern prevention science developed rapidly from the 1980s onward, building on a long history of longitudinal risk research that identified potential targets for intervention; it then relied on NIH and other federal research agencies to support rigorous randomized experiments of preventive interventions with long-term follow-up (Kellam et al. 1999). Refinements to both prevention theory and interventions followed from rigorous experimental tests under optimal conditions (efficacy trials) and under more realistic community conditions (effectiveness trials) (Flay 1986). The scientific studies for the prevention of mental health problems and drug abuse differed in important ways from preventive trials in other fields such as heart disease (MRFIT Research Group 1982). In particular, most mental health and drug abuse prevention programs were delivered in naturally occurring groups, such as classrooms, schools, or communities (Szapocznik et al. 1997), rather than administered individually. Group-based randomized trials (Murray 1998) allowed researchers to modify social environments to affect behavior, and repeated measures in longitudinal follow-up allowed them to examine impact on developmental course.

Scientific advances in prevention occurred rapidly because three elements bonded into a generally accepted scientific paradigm for prevention (Kuhn 1996) focused on preventive interventions for emotional, mental, and behavioral problems. First, broad socio-behavioral theories, such as social learning theory, ecodevelopmental theory, and life course social field theory, provided frameworks for identifying relevant modifiable risk factors that could serve as potential prevention targets, and for identifying intervention strategies. More specific theories, such as Patterson and Reid’s coercion theory for antisocial behavior (Patterson et al. 1992) and social influence and persuasive communication theory (McGuire 1964), then led to clearly defined preventive intervention strategies (e.g., resistance training for drug abuse). Second, there was general acceptance in the scientific community that the randomized preventive trial should be the primary causal inference tool for testing both the proximal and distal impact of a prevention program in defined populations. While debate continues about the limits of, and alternatives to, randomized preventive trials for this first phase of prevention science (West et al. 2008), the randomized preventive trial remains dominant (Brown and Liao 1999) because it allows both theory and practice to be tested and refined (Kellam and Rebok 1992). Third was a recognition that communities, advocates, and organizations, including school districts and health systems, had to be active partners in prevention research. For the most part, communities and organizations were quite open to research that was aligned with their own priorities and concerns (e.g., school success). The strength-based targets of many prevention programs, including improved pro-social behavior in school by the child, improved parent–child communication, and improved classroom management by the teacher, fit well with the main missions and values of these institutions (Kellam and Langevin 2003). The potential for these preventive interventions to benefit behaviors of direct interest to the host institutions greatly facilitated acceptance of testing them in their communities (Kellam 2000). Thus the mutual self-interests of prevention scientists, methodologists, and community or organizational leaders made them natural partners in conducting experiments in prevention (Kellam and Langevin 2003).

These partnerships among behavioral scientists, communities and organizations, and methodologists shared a need to learn which prevention programs work, for whom, and for how long (Kellam et al. 1999; Kellam and Langevin 2003). The utility of these partnerships depended on the quality of interactions among the parties. Thus methodologists were expected to develop prevention trial designs that both met the scientific need for rigor and satisfied the community’s needs to accomplish its missions and to protect individuals and community institutions from harm (Brown et al. 2006, 2007). Prevention scientists served as technical experts, helping communities and organizations meet their own goals with the best available evidence (Kellam 2000). Community leaders provided oversight and access to populations and data sources, which enabled scientists to conduct prevention research. The use of randomized preventive trials paved the way for more accurate theories of etiology and intervention, including predicting which interventions would have iatrogenic effects (Dishion et al. 1996, 1999; Szapocznik and Prado 2007), as well as for new methodology to support complex trials (Brown 2003; Brown et al. 2008b, 2009).

This cooperation among communities, organizations, and researchers shared some characteristics of successful partnerships designed from a Community Based Participatory Research (CBPR) perspective. Although prevention research was not often conducted under the CBPR banner, it is useful to note common features. CBPR is an interdisciplinary research methodology in which scientists and members of a community collaborate as equal partners in the development, conduct, and dissemination of research that is relevant to the community. In CBPR, partners learn from each other and respect each other’s areas of expertise. The principles of CBPR (Israel 2005) involve a long-term commitment to sustainability that builds on strengths and resources in the community; an equitable partnership; co-learning and capacity building among all partners; and dissemination of results to all partners. CBPR enhances researchers’ ability to obtain more valid, higher-quality results; helps bridge gaps in understanding, trust, and knowledge between academic institutions and the community; and can yield more complete results by taking into account the full context: environment, culture, and identity. CBPR also allows for the empowerment of, and equal control by, people who have historically been disenfranchised. Many of these principles apply to our own perspective on partnerships for prevention research.

Mutual Self-Interest in Establishing and Maintaining Partnerships

The essential element of an effective partnership involving prevention scientists, community leaders and organizations, and methodologists is mutual self-interest (Kellam 2000). Kellam argues that an integral step in developing partnerships lies in establishing trust and negotiating mutually acceptable research goals in a way that meets the priorities and interests of all parties involved. All can benefit from such partnerships. In collaborating with methodologists, the scientist gains increased sophistication in designs and analyses, which leads to a greater ability to answer complex prevention research questions. The methodologist gains from collaborating with prevention scientists and community leaders because his or her intellectual contributions depend on the availability of high-quality datasets for evaluating different classes of models, designs, and computational strategies. Also, broad dissemination of new methods requires explicit examples that use these methods. The community gains by having effective prevention programs that meet its needs. Community activists and organizations also benefit from research when positive findings serve to legitimize a social policy initiative or help save a successful program from budget cuts. Once a partnership has been established, each party can access the others’ ‘insider’ knowledge that would otherwise be unattainable. For example, in planning our first Baltimore trial, the school district told researchers which schools would soon be closed, before releasing this information to the public. Because longitudinal follow-up was required, these schools were immediately excluded. Sharing this information was an indication of the school district’s trust in the research team. Partnerships can also help identify and resolve factors that would affect the research. For example, low integration of recent immigrants into a community may require special engagement strategies directed toward this group. In the long run, partnerships involving scientists, community leaders and organizations, and methodologists can benefit all parties.

It is easier to recognize these potential gains from this tripartite partnership than to realize them. Whether the gains are realized and sustained depends on the composition and nature of the partnership, on trust and respect, and on how and by whom priorities are set, along with clear definitions of authority and oversight (Kellam 2000). Below we discuss how partnerships affected the design of two effectiveness trials involving PSMG: one initiated by researchers and one by community advocates.

The Role of Partnership in Designing Effectiveness Preventive Trials

As our first example, we discuss the development of a classroom-based randomized trial in Baltimore. Until that trial (Dolan et al. 1993), few researchers randomized at the classroom level within a school because of concerns about leakage of the active intervention into control classes. The precursor to PSMG, which partnered in this design, began as a spin-off methods grant to support the Johns Hopkins Epidemiologic Prevention Center, begun by the second author (SGK) in 1985. This NIMH/NIDA-funded center in turn supported the first-generation Baltimore Prevention Program trial, one of the first effectiveness trials aimed at early risk for conduct disorder, drug and alcohol abuse, and delinquency (Kellam et al. 1999). Because this first-generation trial broke new ground in testing two interventions’ effects on the course of early risk factors, in its classroom-level randomization, and in its long-term follow-up (Kellam and Rebok 1992; Kellam et al. 1994), we anticipated very challenging methodologic problems in both the conduct of the trial and its analysis. In the first 5 years of funding, the methods grant was closely tied to this first trial in Baltimore.

A critical early step involved developing a research design for the two preventive interventions run concurrently in first and second grades. The design of this trial (Dolan et al. 1993; Brown et al. 2008b; Kellam et al. 2008), as well as the process of building trust and forming an ongoing partnership structure (Kellam 2000), has been described previously. Here we describe how the unique methodologic aspects of the design developed out of the existing partnership of scientists, the school district, and methodologists. In a retreat involving all the partners, it became clear that the first-grade classroom was the most important “unit of intervention.” That is, the success or failure of behavioral change in the youth depended critically on what went on at the level of the classroom (e.g., how well the teacher delivered the curriculum or managed her classroom). Because of this, power was most strongly affected by the assignment of interventions to classrooms, and the methodologists therefore recommended random assignment of intervention at the classroom level (i.e., blocking at the level of the school) and ensuring that classrooms were comparable. Through developing trust and sharing concerns about what was necessary from all viewpoints, random assignment of teachers and balancing of children were enthusiastically accepted by the school district. But this process took a year or more while the details of the aims and design were addressed. We all recognized that keeping the intervention condition from leaking into “control” classrooms within the same school would require an alternative support structure for those teachers. First, the “control” label itself sounded like “guinea-pigging” and lacked dignity from the school district and community perspectives, so we all agreed that the control schools, classrooms, and students would be labeled “standard” schools, classrooms, and children. Second, we collectively concluded that standard-setting teachers needed to receive the same level of attention as intervention teachers, to protect the trial from bias due to attention differences. Standard settings received the same amount of training, and standard-setting teachers across schools were brought together in groups, as were those in the new intervention conditions. This established similar social network structures across interventions. The school administration fully supported these plans as a result of developing a responsive partnership.
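To make the blocking idea concrete, the sketch below randomizes classrooms to intervention or standard conditions separately within each school, so that every school with two or more classrooms contributes to both arms. It is a minimal Python illustration, not the procedure actually used in Baltimore (which also involved two different interventions); the function name `assign_classrooms`, the school and classroom IDs, and the even-split rule are all assumptions made for the example.

```python
import random


def assign_classrooms(schools, seed=None):
    """Randomize classrooms to 'intervention' or 'standard' within each school.

    `schools` maps a hypothetical school ID to a list of classroom IDs.
    Each school is a block: its classrooms are shuffled and split as evenly
    as possible, so a school with 2+ classrooms contributes to both arms.
    """
    rng = random.Random(seed)
    assignment = {}
    for classrooms in schools.values():
        shuffled = list(classrooms)
        rng.shuffle(shuffled)
        n_intervention = len(shuffled) // 2
        # With an odd number of classrooms, a coin flip decides which arm
        # receives the extra classroom.
        if len(shuffled) % 2 == 1 and rng.random() < 0.5:
            n_intervention += 1
        for i, room in enumerate(shuffled):
            assignment[room] = "intervention" if i < n_intervention else "standard"
    return assignment


if __name__ == "__main__":
    # Hypothetical schools with two and three first-grade classrooms.
    example = {"school_A": ["A1", "A2"], "school_B": ["B1", "B2", "B3"]}
    for room, arm in sorted(assign_classrooms(example, seed=1).items()):
        print(room, arm)
```

Because randomization happens inside each school, school-level differences (neighborhood, principal, building) are balanced across arms by design rather than left to chance.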

Both scientists and methodologists also believed that much greater precision could be achieved if the two or three classrooms within a school, which were to be assigned randomly to active intervention or standard setting, were comparable at the start. This was later demonstrated through detailed statistical modeling (Brown and Liao 1999). It became clear that such comparability of classes within schools was not the norm; indeed, many elementary schools practice ability tracking into classrooms on the premise that homogeneous classrooms are easier to teach. The partnership explored the changes needed for classrooms to become comparable within schools. Comparable classrooms offered potential benefits for teachers as well as students. If a teacher were assigned a disproportionate number of aggressive/disruptive children, her teaching task would be much more difficult, and other students assigned to that class would receive less instruction. By making classrooms comparable in the number of aggressive/disruptive students, no student would be unfairly exposed to a classroom environment less conducive to learning. In the Baltimore schools participating in the trial, principals and teachers supported dropping traditional tracking of students by ability. The researchers held community meetings with parents as part of the ongoing partnership process, and once parents realized that this was a fair process, there was no resistance to the plan. In this first trial, classrooms were made comparable at baseline by assigning children to classes stratified by kindergarten experience and behavior grades, yielding an excellent degree of balance across classrooms within schools (Kellam et al. 2008). In later trials, we adopted full random allocation of students to classrooms (Ialongo et al. 1999; Poduska et al. 2009). Random allocation of children to classrooms was more rigorous than merely balancing children, but because of the ongoing partnership it raised no additional concerns from the school district or parents.
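The balancing step described above can be sketched as a stratified allocation: children are grouped by their stratifying characteristics, shuffled within each stratum, and dealt in rotation across the classrooms of one school. This is a hypothetical Python illustration under simple assumptions (a yes/no kindergarten indicator and a categorical behavior rating define the strata); it is not the assignment algorithm used in the trial, and the names `balanced_class_lists`, `attended_kindergarten`, and `behavior_rating` are invented for the example.

```python
import random
from collections import defaultdict


def balanced_class_lists(children, n_classes, seed=None):
    """Allocate one school's children to n_classes, spreading each stratum evenly.

    `children` is a list of (child_id, attended_kindergarten, behavior_rating)
    tuples; both stratifiers are hypothetical stand-ins for the kindergarten
    experience and behavior grades described in the text.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for child_id, attended_kindergarten, behavior_rating in children:
        strata[(attended_kindergarten, behavior_rating)].append(child_id)

    classes = [[] for _ in range(n_classes)]
    next_class = 0
    # Visit strata in a fixed order; shuffle within each stratum, then deal
    # children round-robin. The rotation carries over between strata, so
    # overall class sizes also stay within one child of each other.
    for key in sorted(strata):
        members = strata[key]
        rng.shuffle(members)
        for child_id in members:
            classes[next_class].append(child_id)
            next_class = (next_class + 1) % n_classes
    return classes


if __name__ == "__main__":
    # Hypothetical roster of 30 children for a school with 3 classrooms.
    roster = [(f"c{i}", i % 2 == 0, "high" if i % 3 == 0 else "low") for i in range(30)]
    for number, cls in enumerate(balanced_class_lists(roster, 3, seed=7), start=1):
        print(number, len(cls))
```

Because the dealing rotation continues across strata, class sizes differ by at most one child, and so does each stratum's count across classes. Dropping the stratification, that is, shuffling all of a school's children together and dealing them out, would give a simple fully random allocation with near-equal class sizes, in the spirit of the approach adopted in later trials.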

We now discuss the design of a second trial, this one initiated by community advocates who brought together a research team, in contrast to the previous example, where researchers sought funding to support a prevention program that met community and institutional needs. There are many situations where a community’s leaders drive the choice of which prevention programs are used in their community (Jasuja et al. 2005), and, as we will see in our case, the study design may need to adapt accordingly. Positioned at the right time, methodologists and prevention scientists can often form partnerships that lead to a carefully conducted evaluation of effectiveness. A case in point is the Georgia Gatekeeper Suicide Prevention Trial, in which an innovative randomized trial design was developed to meet both institutional and scientific needs (Brown et al. 2006, 2007; Wyman et al. 2008). The impetus for this research came directly from community advocates, the Georgia Suicide Prevention Action Network (SPAN-GA), who secured seed funding from Georgia’s legislature to implement a gatekeeper training program called QPR in a large school district in Georgia. This school district had decided to train all schools in QPR, even though that intervention, like all other gatekeeper suicide prevention programs at the time, had never been evaluated for effectiveness. The director of SPAN-GA co-chaired a meeting in the school district with the first author to organize technical assistance in maximizing the scientific output of this program in the district’s secondary schools. A premier group of suicide prevention researchers attended this meeting, and NIMH staff were available by phone. It soon became apparent that a rigorous trial could be designed to assess program effectiveness in a way that would also meet the needs of the community advocates and the district. Both the district and the advocates were highly optimistic about the value of this program and wanted it available in all the schools, making a standard control group design impossible. Collectively, we decided to use a wait-listed design in which half of the 32 schools were assigned to receive training immediately and the other half were trained afterward. With advice from NIMH, we submitted an application and obtained quick funding through NIH’s RAPID mechanism. The superintendent of the district was one of the grant’s co-Investigators, indicating a strong partnership.

We knew we could improve on this wait-listed design, so after funding we proposed and investigated the statistical properties of a dynamic wait-listed design, or “roll-out” trial, in which schools are randomly assigned to one of a number of different training times rather than to a single time point (Brown et al. 2006, 2009). The advantages of this design included higher statistical power, less vulnerability to exogenous factors, and greater efficiency in scheduling and training staff in the schools (Brown et al. 2006). With approval from the school district, our Data Safety and Monitoring Board, and NIMH, we shifted midway from the standard wait-listed design to this more powerful dynamic wait-listed design.
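The difference between the two designs can be sketched as a single randomization function: with two cohorts it reproduces the standard wait-listed design (half of the schools trained immediately, half later), and with more cohorts it gives a dynamic wait-listed, or roll-out, schedule. This Python sketch assumes equal-sized cohorts and hypothetical school IDs; it does not reproduce the actual number of training periods or the power calculations of Brown et al. (2006, 2009), and the names `rollout_schedule` and `trained_by` are invented for illustration.

```python
import random


def rollout_schedule(school_ids, n_cohorts, seed=None):
    """Randomly assign each school to a training cohort numbered 1..n_cohorts.

    n_cohorts=2 corresponds to a standard wait-listed design; larger values
    give a dynamic wait-listed (roll-out) design. Cohort sizes differ by at
    most one school.
    """
    rng = random.Random(seed)
    shuffled = list(school_ids)
    rng.shuffle(shuffled)
    # Deal the shuffled schools into cohorts in rotation.
    return {school: i % n_cohorts + 1 for i, school in enumerate(shuffled)}


def trained_by(schedule, period):
    """Schools whose cohort has received training by the given period."""
    return {school for school, cohort in schedule.items() if cohort <= period}


if __name__ == "__main__":
    # 32 schools, as in the Georgia trial; the IDs are hypothetical.
    schools = [f"school{i:02d}" for i in range(32)]
    rollout = rollout_schedule(schools, n_cohorts=4, seed=2024)
    for period in range(1, 5):
        print(period, len(trained_by(rollout, period)), "schools trained")
```

At any period, schools whose cohort has not yet come up serve as the wait-listed comparison for those already trained, so with more cohorts the trained versus untrained comparison is made repeatedly over time rather than once.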

Partnerships Between Scientists and Methodologists Around Dissemination of Methods

As prevention science has advanced, so too have the methods in three areas (O’Connell et al. 2009). First, prevention now has a wide range of methods for measuring risk and protective factors and processes, outcomes, and mediators. Second, the field now has a range of methods for modeling of intervention impact, mediation and moderation. Third, there is a broad array of methods for testing these interventions in carefully constructed trials.

In addition to these general areas, other supports for researchers have been developed, including calculations of sample size and power, missing data tools, and model checking procedures. Also, there are highly flexible growth modeling and multilevel programs that can be applied to prevention (Gibbons et al. 1988; Muthén et al. 2002; Masyn 2003). Moreover, because of its prominence in causal inference, mediation modeling has been the focus of considerable work that has yielded dramatic advances. While all these methods have added to our ability to test prevention hypotheses, several have had a major impact on the practice of statistics. Below, we describe how partnerships between scientists and methodologists facilitated the dissemination of one important method, generalized estimating equations.

Early in our work with biostatisticians at Johns Hopkins, a major methodologic tool, Generalized Estimating Equations (GEE), was developed for the analysis of longitudinal data (Liang and Zeger 1986; Zeger et al. 1988) through PSMG support and applied to prevention research (Brown 1993). GEE provided a very general way to account for the correlation of observations across time and also for clustering into classrooms, schools, and other group settings. Until GEE was developed, longitudinal analyses were primarily limited to continuous observations, for which the assumption of multivariate normality provided a direct expression of correlations across time. For normally distributed data, the effects of clustering into classrooms and schools could be handled through multilevel (Bryk and Raudenbush 1987), random or mixed effects (Laird and Ware 1982; Gibbons et al. 1988), or two-level latent variable models (Muthén 1997). Specialized methods for handling clustering with binary (Gibbons and Hedeker 1997), time-to-event (Hedeker et al. 2000), or other types of non-normal data were a decade away. GEE methods provided accurate confidence intervals and tests for longitudinal and clustered data even when the correlation structure was unknown. GEE thus became a convenient method for conducting complex longitudinal analyses.
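To illustrate why GEE handles clustering without a fully specified correlation model, the sketch below fits the simplest case: a linear model with an identity link and an independence working correlation, for an outcome regressed on a single covariate (for example, a classroom-level intervention indicator). The point estimates solve the estimating equations and so coincide with ordinary least squares; clustering enters only through the robust "sandwich" variance, which sums score contributions within each cluster. This pure-Python sketch omits iterative estimation of non-independence working correlations, non-identity links, and the small-sample corrections found in package implementations (for example, SAS PROC GENMOD with a REPEATED statement, or the GEE class in Python's statsmodels); the function name `gee_independence` and the data layout are assumptions.

```python
from collections import defaultdict
from math import sqrt


def _matmul2(p, q):
    """Multiply two 2x2 matrices stored as nested tuples."""
    return tuple(
        tuple(sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def gee_independence(data):
    """Fit y = b0 + b1*x by GEE (identity link, independence working correlation).

    `data` is a list of (cluster_id, x, y) rows, e.g. students in classrooms.
    Returns ((b0, b1), (robust_se_b0, robust_se_b1)).
    """
    # Bread: A = sum of X_i X_i^T with X_i = (1, x); also accumulate X^T y.
    a00 = a01 = a11 = 0.0
    xty0 = xty1 = 0.0
    for _, x, y in data:
        a00 += 1.0
        a01 += x
        a11 += x * x
        xty0 += y
        xty1 += x * y
    det = a00 * a11 - a01 * a01
    a_inv = ((a11 / det, -a01 / det), (-a01 / det, a00 / det))
    # Solve the estimating equations sum_i X_i (y_i - X_i^T beta) = 0.
    b0 = a_inv[0][0] * xty0 + a_inv[0][1] * xty1
    b1 = a_inv[1][0] * xty0 + a_inv[1][1] * xty1

    # Meat: sum over clusters of U_c U_c^T, where U_c sums X_i * residual_i.
    scores = defaultdict(lambda: [0.0, 0.0])
    for cluster, x, y in data:
        resid = y - (b0 + b1 * x)
        scores[cluster][0] += resid
        scores[cluster][1] += x * resid
    m00 = sum(u0 * u0 for u0, _ in scores.values())
    m01 = sum(u0 * u1 for u0, u1 in scores.values())
    m11 = sum(u1 * u1 for _, u1 in scores.values())

    # Sandwich variance: A^{-1} M A^{-1}.
    v = _matmul2(_matmul2(a_inv, ((m00, m01), (m01, m11))), a_inv)
    return (b0, b1), (sqrt(v[0][0]), sqrt(v[1][1]))


if __name__ == "__main__":
    # Hypothetical data: two students in each of four classrooms.
    rows = [("c1", 0, 1.0), ("c1", 0, 2.0), ("c2", 0, 3.0), ("c2", 0, 4.0),
            ("c3", 1, 5.0), ("c3", 1, 6.0), ("c4", 1, 7.0), ("c4", 1, 8.0)]
    (b0, b1), (se0, se1) = gee_independence(rows)
    print(f"intervention effect {b1:.2f} (robust SE {se1:.2f})")
    # prints: intervention effect 4.00 (robust SE 1.00)
```

The design choice worth noting is that the working correlation affects only efficiency: even if observations within a classroom are strongly correlated, the sandwich variance remains consistent as the number of clusters grows, which is the property that made GEE convenient for classroom- and school-clustered trials.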

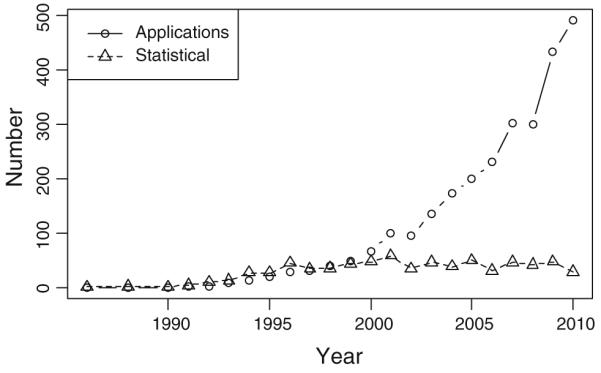

GEE was originally published in technical statistical journals (Liang and Zeger 1986; Zeger et al. 1988). Ordinarily, publications in such journals have relatively limited potential for dissemination to the scientific community because they are read by a small audience, but this story is different. We can follow the diffusion of GEE more clearly by examining publications in scientific as well as methodological journals. Figure 1 shows publications that cite GEE from 1986 to 2010. As shown by the lower curve, there was a modest growth in methodological publications from its invention to 1995, followed by a plateau with roughly 50 methodological citations per year. Up until 1995 the knowledge of this method rested mostly in the hands of methodologists. From 1995 on, however, there has been an exponential growth in the citation of GEE in the applied literature with this method continuing to be used across diverse scientific fields.

Fig. 1.

Number of statistical and application publications referencing GEE by year

GEE is now available as a computational procedure in major statistical packages (Horton and Lipsitz 1999), greatly expanding its use. One of the fields that used GEE early on was prevention science. Because of the close partnerships between methodologists and scientists, knowledge of this method transferred quickly to practice. Prevention researchers learned about GEE through their respective PSMG members, through PSMG’s meetings with NIMH and NIDA prevention centers and through the Society for Prevention Research. Thus the partnerships existing through the social networks of PSMG members accelerated the dissemination and adoption of this method.

Partnerships Between Methodologists and Statistical Software Developers

From the mid-1990s there has been considerable growth in the development of statistical models that take into account the complex person, context, and time interactions that characterize prevention research (Brown et al. 2008a, b). It was rare for biostatisticians to provide fully functioning programs to carry out the methods they published; “proof of concept” programs were the norm (Laird and Ware 1982). Such programs produced accurate inferences in restricted problems but generally ignored important factors such as the handling of missing data. In the early 1990s, no statistical program was available to solve all the computational issues of longitudinal, multilevel data simultaneously. To tackle this challenge, the director of PSMG (CHB) entered into a methodologic collaboration with the fourth author (BOM), an expert in growth modeling and computing, who has continued to serve as a co-Investigator to PSMG. PSMG also developed an informal partnership with the developer of Mplus (LM) so that important new methods could be integrated quickly into a statistical package usable by the field (Muthén and Muthén 2007). The relationship between PSMG and Mplus was founded on mutual self-interest in providing the scientific field with innovative methods. Mplus was funded by the National Institute on Alcohol Abuse and Alcoholism through a Small Business Innovation Research grant to provide sophisticated computational software for the field, a task that requires a great deal of technical expertise and that would not have been financially or technically viable for PSMG under its R01 funding. PSMG, on the other hand, has the opportunity to develop and test new methods in collaboration with ongoing research studies, and these innovations continue to be presented and critiqued in open forum discussions by PSMG members.

The lines of communication and collaboration between PSMG and Mplus solve complementary problems that neither could have solved alone. One illustration involved a novel method that PSMG developed for analyzing dyadic behavior observation data (Dagne et al. 2002). Our initial method was implemented as specialized code in S-Plus, but when we presented it to our colleagues in PSMG, it was clear that the same analyses could almost, but not quite, be accomplished in the current version of Mplus. The developer of Mplus decided to modify the program in the next release so that such analyses could be conducted efficiently. We also received permission from the journal publisher to post the publication on the Mplus website, with the result that the paper was downloaded over 1,000 times within a year, a much wider reach than standard publication channels typically provide.

PSMG as a Social Network for Knowledge Transfer around Methods

In January 2009, we conducted a PSMG member survey to monitor and improve how PSMG functioned as a collaborative network for prevention science. Each member was asked to report, for every other PSMG member, which of four types of collaboration they had had together.

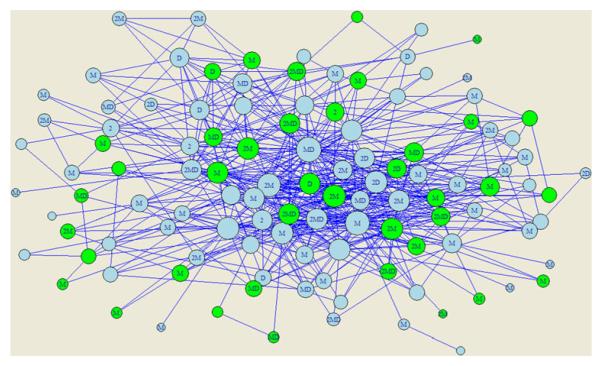

In keeping with our methodological interests, and to guide our strategic planning, we conducted a network analysis of PSMG. Our view is that the topology, or social network structure, of PSMG has a great deal to do with its ability to generate new ideas and methods and to transfer them to the field (Valente 1995, 2003, 2010; Valente and Davis 1999). Figure 2 shows 572 scientific connections, represented by lines, among the 113 PSMG members, based on collaborative publications, grants, scientific presentations, and mentoring. A connection indicates any of these four collaborations between two members. The number of connections each person has is represented by the size of his or her circle, and the colors and letters within the circle represent characteristics of PSMG members’ scientific backgrounds. Green circles correspond to the 41 (36%) early stage PSMG investigators. M represents a methodologist (67%); D represents having received specialized training in the drug abuse field (27%); and 2 represents a PSMG-2 member. PSMG-2 evolved spontaneously from PSMG as an early career sub-network that provided support and feedback specific to its members.

Fig. 2.

Social network for prevention science and methodology group

Figure 2 shows the large number of interconnected professional collaborations among PSMG members, as well as the central position that some members hold. There are no sub-networks with few ties to other members. The following network characteristics were computed using StOCNET (version 1.80). The density of the network, the proportion of all possible pairs of members who have collaborated, is 0.089. The average degree, or number of collaborators per member, is 10.035, with a range from 1 to 56 and a standard deviation of 9.651. Heterogeneity of the network is 0.348, indicating moderate variation in the distribution of collaborations. The network has 1,219 transitive triads and 6,820 intransitive triads. This yields a transitivity measure of 0.211, indicating that substantial maturation in the collaborative structure is still possible, since in the majority of situations in which one person collaborated with two others, those two others did not collaborate with one another. In summary, the PSMG network is dense and centralized; transitivity is moderate, perhaps because of the large number of early stage investigators. Networks such as this support rapid transfer of knowledge throughout their membership because of the large number of connections and the few relatively isolated individuals. The network includes a large number of early stage investigators and its own self-forming PSMG-2 sub-network, which is well integrated within PSMG. One structural characteristic of highly centralized, transitive networks is that they can become insular, with relatively few new ideas penetrating the network unless they are introduced by central members. Because we asked PSMG members only about their collaborations with other PSMG members, it is likely that there are many collaborations outside PSMG that can be tapped to bring in new ideas.
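The descriptive statistics reported above can be illustrated on a toy network. The sketch below computes density (ties divided by possible pairs), the mean and population standard deviation of degree, and transitivity as the share of connected triples (two ties sharing a member) that are closed into a triangle. These are common textbook definitions; StOCNET's triad counts and its heterogeneity index may be defined differently, so the function is not expected to reproduce the PSMG values exactly. The function name `network_summary` and the example edge list are hypothetical.

```python
from itertools import combinations
from statistics import mean, pstdev


def network_summary(edges, nodes=()):
    """Summarize an undirected, unweighted collaboration network.

    `edges` is an iterable of (member, member) pairs; `nodes` optionally lists
    members with no ties so that they count toward density and degree.
    """
    neighbors = {node: set() for node in nodes}
    for a, b in edges:
        if a == b:
            continue  # a self-tie is not a collaboration
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    n = len(neighbors)
    degrees = [len(nb) for nb in neighbors.values()]
    n_ties = sum(degrees) // 2  # each tie is counted once from each end

    # For each member, examine every pair of their collaborators: the triple
    # is closed if those two collaborators also have a tie with each other.
    closed = unclosed = 0
    for nb in neighbors.values():
        for u, v in combinations(nb, 2):
            if v in neighbors[u]:
                closed += 1
            else:
                unclosed += 1

    return {
        "density": 2 * n_ties / (n * (n - 1)),
        "mean_degree": mean(degrees),
        "sd_degree": pstdev(degrees),
        "transitivity": closed / (closed + unclosed) if closed + unclosed else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical network: a triangle A-B-C plus D tied only to C.
    ties = [("A", "B"), ("B", "C"), ("A", "C"), ("C", "D")]
    print(network_summary(ties))
```

For the toy network, density is 4/6, the mean degree is 2, and transitivity is 3/5 = 0.6: of the five connected triples, the three centered on A, B, and C within the triangle are closed, while the two through C that include D are open. A low value of this ratio is what signals that many collaborators-of-collaborators have not yet worked together.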

Partnerships for Prevention Implementation Research

We now turn to implementation research, which involves “the use of strategies to adopt and integrate evidence-based health interventions and change practice patterns within specific settings” (Chambers 2008). A major new scientific challenge for prevention is understanding the processes that facilitate or impede quality implementation of programs demonstrated to have positive effects. To date, few of these successful programs have been implemented in communities, and the scientific community needs new methodological approaches to understand implementation processes. Unlike the first phase of prevention research, which was based on efficacy and effectiveness trials, this new phase of implementation research is still at an early stage and has no single accepted paradigm to guide the scientific work. We already expect that translating the last two decades of prevention research into practice will be difficult, as evidenced by the small proportion of “evidence-based” prevention programs now being used in practice (Kelly et al. 2000; Hallfors and Godette 2002; Hallfors et al. 2007). The partnerships that led to effectiveness trials have for the most part not translated into wide-scale adoption, and new partnerships are needed. Indeed, Kuhn’s reference to “advanced awareness of difficulties” in a field of shifting scientific paradigms is relevant to the current stage of implementation research.

There should be sufficient mutual self-interest to form and sustain partnerships around prevention implementation. Prevention scientists have been given a clear indication by NIH that research on implementation is a priority for translating science into practice. Communities are fundamentally interested in addressing critical problems such as drug abuse, mental disorders, and HIV, and implementation of evidence-based programs developed from research can be a major strategy for addressing these problems. Methodologists and computer scientists have now begun to address the novel design, measurement, modeling and testing problems in this young field (Wang et al. 2010; Landsverk et al. (Accepted for publication), 2011).

Factors Unique to Implementation That Affect Partnership

The young field of implementation research is still undergoing considerable change. It is therefore premature to provide a full conceptual model around the formation and functioning of partnerships at this time. However, sufficient experience is available to identify major areas affecting implementation partnerships. In this section we present four factors that distinguish implementation from effectiveness research and illustrate how they affect partner selection and their involvement in design and modeling. These factors relate to different scientific questions, the larger geographic coverage required for implementation, the types of data that are needed, and costs and other resource requirements that must be borne by communities and organizations.

Partnership Issues Related to Scientific Questions for Implementation

Implementation research can be framed in four broad phases: pre-adoption, adoption, implementation with fidelity, and sustainability or moving to scale [Landsverk et al. (Accepted for publication); Aarons et al. (2011)]. Each phase has distinct research questions. Questions about which factors lead to the adoption of particular programs have no counterpart in effectiveness research, which begins with communities that have already agreed to implement. A scientific study of adoption requires a substantial number of eligible communities in order to assess the rate of adoption. Thus an important focus for a partnership supporting program implementation is to build connections to large collections or networks of communities and institutions, so that the factors leading to differential adoption can be studied. Rather than dealing with a single school district or service agency, as in effectiveness research, implementation research would typically involve organizations at regional, state, or national levels, as these have the potential to effect large-scale implementation.

Implementation Partnerships with Statewide Representation

It is hard to imagine an effectiveness trial being aimed at an entire state, but this can happen in implementation studies. To illustrate work at the state level, we briefly discuss the CAL-40 randomized implementation trial. It tests whether Multidimensional Treatment Foster Care, an evidence-based foster care program, can be implemented more efficiently using an implementation strategy called Community Development Team (CDT) than with the existing standard implementation strategy. This trial randomizes counties to one of these two implementation strategies and to one of three yearly cohorts, so that implementation can be studied in a small number of counties at a time. Trial details are described in this special issue (Chamberlain et al. 2011) and elsewhere (Brown et al. 2008a; Chamberlain et al. 2008, 2010a, b). One partner is the California Institute of Mental Health (CiMH), which serves as an implementation “broker” or “intermediary” (Emshoff 2008) for counties assigned to CDT, promoting planning and organizational capacity through facilitated peer-to-peer exchanges. CiMH was especially important in maintaining the consistency of the trial design through extremely challenging financial conditions at the state and county government levels.
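The two-way random assignment described above, by implementation condition and by yearly cohort, can be sketched as follows. This is a hypothetical simplification. It uses simple balanced randomization of county labels across the six condition-by-cohort cells. The actual CAL-40 allocation procedure is described in the cited trial papers and may involve matching or stratification that is not modeled here.

```python
import random


def assign_conditions_and_cohorts(counties, conditions=("CDT", "Standard"),
                                  n_cohorts=3, seed=None):
    """Randomly assign each county to an implementation condition and a cohort.

    Counties are shuffled and then dealt round-robin across the
    condition-by-cohort cells, so cell sizes differ by at most one.
    Returns {county: (condition, cohort)} with cohorts numbered from 1.
    """
    rng = random.Random(seed)
    order = list(counties)
    rng.shuffle(order)
    cells = [(condition, cohort)
             for cohort in range(1, n_cohorts + 1)
             for condition in conditions]
    return {county: cells[k % len(cells)] for k, county in enumerate(order)}


# Hypothetical county labels; a fixed seed makes the allocation reproducible and auditable.
allocation = assign_conditions_and_cohorts([f"county_{i:02d}" for i in range(40)], seed=2024)
```

With 40 counties, this dealing scheme places 20 counties in each condition, with cohorts of 14, 14, and 12.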

In terms of the trial design, neither the concept of randomization nor the fair assignment to the CDT or standard condition caused concern for the counties. The assignment of counties to timeframe cohorts, however, did cause some concern. Disruptions in funding levels and in disbursements from the state to the counties have made county decision makers increasingly cautious about authorizing implementation of new programs. At the time of initial recruitment, 9 (23%) of the 40 counties that agreed to participate expressed problems or concerns with the timeframe to which they had been assigned, and an additional four counties (10%) declined to participate altogether. Of the 9 counties that agreed to participate but objected to the timeline, two requested to “go early,” before their assigned cohort start-up date, and 7 wanted to delay. To accommodate counties that were unable to participate at their randomly chosen time, the design protocol was adapted. The adaptation used study resources efficiently and maintained each county’s assigned condition while remaining sensitive to the real-world limitations faced by the counties. To fill vacancies while maintaining the original intervention assignment, counties within each condition in Cohorts 2 and 3 were randomly permuted to determine the order in which they would be offered the opportunity to move up in timing (e.g., to fill a CDT vacancy in Cohort 1, CDT-assigned counties in Cohort 2 were randomly ordered). This permutation was conducted by the research staff, and the selected county was not revealed to the field staff until the county decided whether to accept or decline the opportunity to “go early.” This precaution was taken to ensure that field staff did not influence a county’s decision to change its timing. The design continues to maintain equivalent comparison groups.
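The vacancy-filling rule above can be sketched as follows. Following the example in the text, the sketch assumes that eligible counties are those in the same condition and the immediately following cohort. Whether later cohorts were also drawn on is not specified here. The `accepts` callable is a hypothetical stand-in for each county’s decision and is not part of any trial software.

```python
import random


def vacancy_offer_order(assignments, condition, vacant_cohort, seed=None):
    """Randomly order the counties eligible to fill a vacant slot.

    assignments maps county -> (condition, cohort). Eligible counties share the
    vacated condition and are assigned to the next cohort, so filling the slot
    changes a county's timing but never its implementation condition.
    In the trial, this order was held by research staff, not field staff.
    """
    rng = random.Random(seed)
    candidates = sorted(county for county, (cond, cohort) in assignments.items()
                        if cond == condition and cohort == vacant_cohort + 1)
    rng.shuffle(candidates)
    return candidates


def fill_vacancy(assignments, condition, vacant_cohort, accepts, seed=None):
    """Offer the slot in the permuted order; the first county to accept moves up.

    accepts is a placeholder callable (county -> bool) for each county's decision.
    Updates assignments in place and returns the county that moved, or None.
    """
    for county in vacancy_offer_order(assignments, condition, vacant_cohort, seed):
        if accepts(county):
            assignments[county] = (condition, vacant_cohort)
            return county
    return None


# Hypothetical example: a CDT county has withdrawn from Cohort 1.
assignments = {"B": ("CDT", 2), "C": ("CDT", 2), "D": ("Standard", 2), "E": ("CDT", 3)}
moved = fill_vacancy(assignments, "CDT", 1, accepts=lambda county: county == "C", seed=0)
```

Only counties "B" and "C" are eligible, because "D" is in the other condition and "E" is two cohorts later. "C" moves to Cohort 1 whatever the permuted order turns out to be.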

Implementation Partnerships with National Representation

In this next phase of implementation methods work, we recently received funding from NIDA for a P30 Center of Excellence focused on Prevention Implementation Methodology (Ce-PIM), directed by the first author. Through Ce-PIM we have reached out to one of the organizations that shapes the national prevention agenda, the National Prevention Network (NPN). NPN is an organization of state alcohol and other drug abuse prevention representatives and a component of the National Association of State Alcohol/Drug Abuse Directors (NASADAD). NPN works to ensure the provision of effective alcohol, tobacco, and other drug abuse prevention services in each state and oversees the expenditure of state block grants and other discretionary funds. To reduce the incidence and prevalence of alcohol, tobacco, and other drug problems, NPN recognizes the importance of partnering with a broad range of federal, state, and local government agencies and private entities. One of NPN’s goals is to advance the national prevention research agenda, recognizing the critical need to work with the research community to advance the dissemination of effective prevention policy and practice.

NPN is strategically positioned to influence policy and implementation practices. With access to both national-level policy makers and local coalitions and programs, NPN members inform policy makers about the needs of practitioners and encourage local communities to implement effective programs. NPN members have forged relationships with program purveyors, local communities, and other state agencies to coordinate prevention service delivery in each state and jurisdiction. Using federal grants that promote evidence-based prevention, states have formed state-level coalitions that include programs and agencies with an interest in prevention. Through such coalitions, NPN encourages the use of evidence-based programs and policies. NPN’s unique position in transmitting federal and state prevention policy, and in providing funding to communities in the states and territories, makes its members extremely valuable and effective partners in implementation research. For these reasons, NPN is a key partner in articulating and refining methodology for prevention implementation research.

To examine how closely NPN members were tied to researchers, NPN conducted a social network survey. Fifty percent of its 56 members responded. The vast majority (82%) said it was important to use programs that had been rigorously tested by researchers, and just as many indicated that these research-based interventions would need to be adapted to fit the needs of communities in their respective states or territories. All responding members frequently consulted with community leaders and non-governmental organizations about prevention programs, but they had fewer affiliations with researchers. Fewer than half (46%) indicated that they communicated with researchers in choosing and sustaining prevention programs, and just one-third consulted or partnered with researchers on the adoption or implementation of prevention programs. Similarly, just one-third of NPN members consulted with other members about the adoption and implementation of prevention programs. Among those who consulted with other NPN members, the average number of members consulted was 5, a small proportion of the network’s membership. There appears to be an important need to connect NPN members more closely to researchers and to increase consultation among members.
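The consultation figures above can be expressed as simple summaries of a directed “who consults whom” network. The sketch below is a hypothetical illustration with made-up member IDs. It assumes that the relevant denominator for “small proportion” is the number of other members in the full network, not only the survey respondents.

```python
def consultation_summary(respondents, consults, network_size):
    """Summarize who consults whom within a membership network.

    respondents: IDs of members who answered the survey.
    consults: maps a respondent ID to the set of other members they consulted
              (respondents who consulted no one may be omitted).
    network_size: total membership, including non-respondents.
    Returns (share of respondents who consulted anyone,
             mean number consulted among those who did,
             that mean as a fraction of the other members they could consult).
    """
    counts = [len(consults.get(r, ())) for r in respondents]
    consulters = [c for c in counts if c > 0]
    share_consulting = len(consulters) / len(respondents) if respondents else 0.0
    mean_consulted = sum(consulters) / len(consulters) if consulters else 0.0
    reach = mean_consulted / (network_size - 1) if network_size > 1 else 0.0
    return share_consulting, mean_consulted, reach


# Hypothetical toy data: three respondents in an 11-member network.
share, mean_n, reach = consultation_summary(
    ["r1", "r2", "r3"], {"r1": {"m4", "m5"}, "r2": set()}, network_size=11)
```

Applied to the reported figures, a mean of 5 consulted members out of 55 possible colleagues corresponds to a reach of about 0.09.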

New Methodologies to Tackle Different Problems in Implementation Research

Another difference between effectiveness and implementation research is that research funds typically support the former, whereas community and governmental service funding must support implementation. This means that in implementation studies, communities and organizations must address the viability of programs from financial and other resource perspectives. In all efficacy research and much effectiveness research, these issues are typically less pressing because research funds are available for fidelity monitoring, process evaluation, and outcome evaluation. The researcher’s priority of demonstrating impact sometimes runs directly counter to the need for programs that are cost efficient. It is therefore critical that a partnership supporting prevention implementation address the issues of cost, benefit, and resources that are essential to the survival of the organization. This may mean a major redesign of the intervention or the design of a new, cost-effective monitoring system for adherence checking. A partnership for implementation is likely to need methodological experts in cost-effectiveness and computer science to model the behavior of alternative redesigns.

As an example of this methodological challenge in implementation, we consider the planned extension of research on the Familias Unidas family-based preventive intervention for adolescent drug abuse and sexual risk behavior (Coatsworth et al. 2002; Pantin et al. 2009; Prado et al. 2009). This program is currently funded by NIDA in an effectiveness trial in which school counselors are trained to deliver Familias Unidas to groups of parents. To evaluate program adherence, all sessions are videotaped, coded by hand for adherence, and used in supervision. These considerable costs are all borne by the effectiveness research project. However, in a planned larger-scale follow-up implementation study, a new monitoring and feedback system would need to be housed in the school district. Sustaining such a system would require that adherence data be collected and coded in a manner that is both cost-effective and valid.

We believe the answer to this problem lies in a methodological development that uses system science tools [Mabry et al. 2008; Landsverk et al. (Accepted for publication)]. In particular, we propose a two-stage semiautomated system for coding program adherence. The first stage would use computational methods that include image-based gesture recognition in video (Ivanov and Bobick 2000; Inoue et al. 2010), supervised learning from text and video (Li and Ogihara 2005; Li et al. 2008; Inoue et al. 2010), and semiautomated coding of dialogues with computational linguistics techniques. Human coders would be needed only when the certainty of an automated rating is low. The system is therefore intended to reduce human coding effort substantially and thereby provide a much less expensive fidelity monitoring system.
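The routing step implied above, which accepts confident automated ratings and sends uncertain ones to human coders, can be sketched as follows. This is a hypothetical sketch. The gesture-recognition and text classifiers are not shown; each session segment is assumed to arrive with an automated adherence rating and a certainty score. The 0.8 threshold is an arbitrary placeholder that would need to be calibrated against hand-coded data.

```python
from dataclasses import dataclass


@dataclass
class SegmentRating:
    segment_id: str
    adherence: float   # automated (first-stage) adherence rating
    certainty: float   # model's certainty in that rating, between 0 and 1


def route_ratings(ratings, min_certainty=0.8):
    """Split automated ratings into those accepted as-is and those sent to human coders."""
    accepted = [r for r in ratings if r.certainty >= min_certainty]
    to_human = [r for r in ratings if r.certainty < min_certainty]
    return accepted, to_human


def human_coding_share(ratings, min_certainty=0.8):
    """Fraction of session segments that still require (costly) human coding."""
    _, to_human = route_ratings(ratings, min_certainty)
    return len(to_human) / len(ratings) if ratings else 0.0


# Hypothetical ratings for four videotaped session segments.
ratings = [
    SegmentRating("s1", adherence=0.9, certainty=0.95),
    SegmentRating("s2", adherence=0.4, certainty=0.55),
    SegmentRating("s3", adherence=0.7, certainty=0.85),
    SegmentRating("s4", adherence=0.2, certainty=0.60),
]
accepted, to_human = route_ratings(ratings)
```

Raising the threshold sends more segments to human coders, which trades cost against validity. That trade-off is exactly the one a school-based monitoring system would need to tune.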

Another distinction between effectiveness and implementation research relates to access to the data being collected. Implementation research concentrates on the systems that support the delivery of a prevention program, not solely on the program’s target population. Thus more emphasis must be placed on obtaining system-level data on performance and process within and between organizations. Collecting or using existing system-level data, where staff jobs may be at stake, requires permission and oversight from stakeholders other than those invested in and concerned about youth behavior. In analyzing the contact logs of the CAL-40 study mentioned previously, we have minimized the risk of misusing such data by replacing any identifying names with codes to ensure confidentiality.
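Replacing identifying names with codes, as done for the CAL-40 contact logs, can be sketched as follows. The function and the log text are hypothetical and do not describe the study’s actual procedure. The sketch assumes that the list of names to be coded is known in advance. It keeps the name-to-code key separate from the coded text so that the key can be stored under restricted access.

```python
import re


def pseudonymize(entries, names):
    """Replace each listed name in free-text log entries with a stable code.

    Returns (coded_entries, codebook). The name -> code codebook should be
    stored separately from the coded data, with restricted access.
    """
    # Codes follow sorted name order for reproducibility; a random assignment
    # would avoid leaking alphabetical information.
    codebook = {name: f"P{i:03d}" for i, name in enumerate(sorted(set(names)), start=1)}
    if not codebook:
        return list(entries), codebook
    # Try longer names first so "Maria Lopez" is coded before "Maria";
    # word boundaries keep "Ann" from matching inside "Annual".
    alternatives = "|".join(re.escape(n) for n in sorted(codebook, key=len, reverse=True))
    pattern = re.compile(r"\b(?:" + alternatives + r")\b")
    coded = [pattern.sub(lambda m: codebook[m.group(0)], entry) for entry in entries]
    return coded, codebook


# Hypothetical contact-log entry.
coded, codebook = pseudonymize(
    ["Called Maria Lopez; John Smith to follow up with Maria."],
    ["Maria Lopez", "John Smith", "Maria"],
)
```

Here the entry becomes "Called P003; P001 to follow up with P002." Because the same person always receives the same code, contact patterns can still be analyzed.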

Discussion

We have described how methodological choices for the conduct of prevention research involve the integrated requirements of communities and institutions as well as researchers. The expertise of methodologists in designing experimental and non-experimental studies, choosing measures, and fitting models to data is a necessary ingredient for the conduct of prevention research. However, these methodological decisions are not driven solely by statistical issues such as sample size. First, close communication between the prevention scientist and the methodologist is necessary, because the design, measures, and models must address research questions shared by communities, practitioners, and scientists. Second, we have seen that dissemination of new methods is accelerated when there is a strong network of research scientists and methodologists such as PSMG. The involvement of communities and institutions in considering what designs are feasible, manageable, and appropriate, and what data should be made available, is also essential for all stages of prevention research. Thus, a third factor that drives methodological decisions involves the needs of the communities and institutions that house these prevention programs. A fourth factor involves the roles of local, state, and federal agencies and policy makers who set the prevention agenda, provide technical support and funding to local communities, and monitor program implementation. The role of NPN and similar groups in representing the prevention interests of states and territories is essential in framing the research agenda around prevention implementation, and their concern about whether research-based programs meet their communities’ needs must be addressed. NPN has important connections with prevention researchers and local communities that can potentially increase the adoption, effective implementation, and sustainability of prevention programs.

Partnerships offer opportunities for change. Communities and institutions naturally feel discomfort whenever researchers suggest changes in their normal practices. Sensitive issues can arise from study designs that disrupt customary procedures such as assigning children to classrooms, from the delivery of new services or programs, from access to system-level data, or from the introduction of evaluation monitoring and feedback systems. All of these involve potential risks for community members, with comparatively less risk for the researchers themselves. For example, finding a prevention program to be ineffective for student achievement may mean fewer publications for the researchers, whereas low student achievement may lead to a superintendent being fired or a school being closed. Similarly, an improperly implemented trial design may be considered a career setback for a methodologist, but a lack of clearly interpretable findings may lead frustrated communities and organizations to disconnect from researchers.

There has been, and continues to be, resistance to the use of traditional randomized trials in community settings. Reasons for this resistance include a distrust of science brought about by unethical research conducted on minority and disadvantaged communities, and suspicion of scientific studies in which outsiders assign one’s own children to a control or intervention condition. We agree that the traditional randomized trial sometimes fails to meet the needs of scientists, communities, and policy makers, and thus we have continued to expand the boundaries of randomized trial design by involving community stakeholders.

This paper has provided a number of partnership illustrations but comparatively little data other than the network characteristics of PSMG and NPN and the dissemination of the GEE method in the published literature. The qualitative aspects of partnership are certainly critical, but we also contend that mixed methods, which combine qualitative and quantitative approaches, would be highly useful both in describing and comparing partnership models and in testing interventions. In particular, mixed-methods use of social network analysis can help identify how individual positions and network topologies affect implementation (Palinkas et al. 2011). Given the importance of partnerships in the conduct of prevention research, we believe the first step is to measure these partnerships as they evolve over time. For example, suppose we had information on the degree of connectedness between state prevention agencies and prevention researchers: whether there was no relationship, a weak or one-directional relationship, or a strong and bidirectional one. A strategy could then be selected that would build new relationships, strengthen existing ones, or activate them.
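The strategy selection described above can be sketched as a simple rule over directed tie strengths. This is a hypothetical illustration. Tie strength is assumed to be a survey-reported value between 0 and 1 in each direction. The 0.5 cutoff and the agency and researcher labels are placeholders, not measures used in this work.

```python
STRATEGY = {
    "none": "build a new relationship",
    "weak_or_one_way": "strengthen the existing relationship",
    "strong_two_way": "activate the existing relationship",
}


def classify_tie(out_strength, in_strength, strong_cutoff=0.5):
    """Classify a directed pair of tie strengths (0 = no tie) into three levels."""
    if out_strength <= 0 and in_strength <= 0:
        return "none"
    if out_strength >= strong_cutoff and in_strength >= strong_cutoff:
        return "strong_two_way"
    return "weak_or_one_way"


def plan_strategies(agencies, researchers, strength, strong_cutoff=0.5):
    """Suggest a strategy for every agency-researcher pair.

    strength maps (sender, receiver) -> reported tie strength in [0, 1];
    pairs that are not listed are treated as having no tie.
    """
    plan = {}
    for agency in agencies:
        for researcher in researchers:
            level = classify_tie(strength.get((agency, researcher), 0.0),
                                 strength.get((researcher, agency), 0.0),
                                 strong_cutoff)
            plan[(agency, researcher)] = STRATEGY[level]
    return plan


# Hypothetical ties between three state agencies and one research group.
strength = {("state_A", "lab_1"): 0.9, ("lab_1", "state_A"): 0.7, ("state_B", "lab_1"): 0.2}
plan = plan_strategies(["state_A", "state_B", "state_C"], ["lab_1"], strength)
```

Repeating this classification at several time points would show whether a partnership-building strategy is moving pairs from “none” toward “strong_two_way.”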

Social network analyses can be used to study the role of partnerships in adopting evidence-based interventions. Valente and colleagues have studied how the network structures of community coalitions influence the effectiveness of an intervention designed to accelerate the adoption of evidence-based substance abuse prevention programs (Valente and Davis 1999; Valente et al. 2007, 2008; Valente 2010). Inter-organizational relations are believed to affect health service delivery and are useful for creating community capacity. Network analysis can also be used to develop implementation strategies (Valente 2005).

Two network indices with the most potential for representing a coalition’s structure are density and centralization (Valente 2010). Valente’s study of community–research partnerships hypothesized that adoption of evidence-based practices would be greater among dense coalitions than among sparse ones, and greater among centralized coalitions than among decentralized ones. The results did not support the density hypothesis: the social network intervention significantly decreased the density of coalition networks, which in turn increased adoption of evidence-based practices. While optimal community network structures for the adoption of prevention programs are unknown, it appears that lower-density networks may be more efficient for organizing evidence-based prevention in communities (Valente 2003). Less dense communities may have weak ties to other organizations that provide access to resources and power, which can be mobilized to adopt evidence-based practices. Greater density may signify that connections are directed within the group, with limited external pathways for information and behaviors, thus reinforcing the use of existing rather than new strategies. A dense coalition may be unable to mobilize the resources it needs to adopt evidence-based prevention programs. These results suggest that coalitions should balance cohesion with connections to outside agencies and resources.

There is still important methodological work to accomplish regarding partnerships in implementation, starting with social network analysis. Traditional social network measures, such as those we reported for PSMG above, are relevant to partnerships but may need further specificity. First, the emphasis should be on dynamic rather than single-time-point social networks. Changes in connections between individuals, groups, or organizations are essential for both the adoption and the sustainability of a partnership, so network data from a single time point are insufficient. Second, people and organizations within a partnership play different roles and have different stature and levels of political power, factors that traditional social network analysis ignores. Thus a partnership could show strong relationships between researchers and some community leaders, and so appear highly collaborative, even though none of these relationships involves politically powerful members. Just as important as a partnership’s composition is the question of which parties are not at the table. Social network analyses ignore actors who have no ties to those in the network, yet the absence of such ties may determine a partnership’s success. Third, social networks measure pairwise (dyadic) relationships, but partnerships depend on group functioning. Methods that address higher order relations, such as hypergraphs, may be needed to characterize partnership relationships. Finally, we recommend using computer simulations to study the complex, interactional processes involved in partnerships, as these can generate new hypotheses that can later be tested empirically (Epstein 2007). Agent-based models appear to be particularly well suited for simulation studies that can address unique aspects of communities and organizations (Axelrod 1997; Agar 2005; Railsback et al. 2006; Miller and Page 2007; Heath et al. 2009; Ormerod and Rosewell 2009).
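The point about higher order relations can be made concrete with a small hypothetical example. A hypergraph records each working group as a single set of members (a hyperedge). Collapsing those groups into pairwise ties, as a conventional social network does, can make genuinely different partnership structures look identical. The group labels below are placeholders.

```python
from itertools import combinations


def pairwise_projection(groups):
    """Collapse group memberships (hyperedges) into the set of two-person ties."""
    ties = set()
    for group in groups:
        for a, b in combinations(sorted(group), 2):
            ties.add((a, b))
    return ties


# A three-party working group that meets together...
joint = [{"agency", "coalition", "researcher"}]
# ...versus three separate two-party collaborations among the same actors.
separate = [{"agency", "coalition"}, {"agency", "researcher"}, {"coalition", "researcher"}]

# The pairwise (graph) view cannot tell these apart; the hypergraph view can.
same_graph = pairwise_projection(joint) == pairwise_projection(separate)
different_hypergraph = {frozenset(g) for g in joint} != {frozenset(g) for g in separate}
```

Both structures yield the same three pairwise ties, so only the hyperedge representation preserves whether the three parties actually function as one group.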

There are competing theories about how best to establish and sustain partnerships for the different stages of prevention research, perhaps the most divergent being the “top down” and “bottom up” approaches, which start from different basic premises (O’Connell et al. 2009). The “top down” approach is guided by the belief that programs that have been well researched and found effective through rigorous evaluations are, if implemented with precision, likely to lead to effective prevention in new communities as well. Such a strategy is embedded in the Communities That Care (CTC) model, which now has empirical evidence of impact on adolescent risk behaviors from a community-based randomized trial (Hawkins et al. 1998, 2008; Hawkins 1999; Arthur et al. 2010). In CTC, community leaders decide on their own prevention priorities and on which evidence-based programs should be used in their community, while the researcher provides technical expertise on risk factors, intervention science, and implementation. Communities are led to adopt evidence-based programs based on their values and goals. The “bottom up” approach, typified by CBPR, is guided by the belief that communities and other stakeholders themselves are in the best position to make decisions about prevention strategies. For example, communities may feel that a cultural adaptation of an intervention, or a new intervention, is required to meet their specialized needs (Guerra and Knox 2008). In this bottom-up model, researchers are often invited into the community to provide expert technical support and advice at key points. A full CBPR approach may result in the use of a program that is not on an evidence-based list, whereas this does not happen with CTC. The top-down and bottom-up approaches have important implications for when and how partnerships are formed and for the respective roles of different stakeholders.
For example, a top-down approach that primarily funds researchers can sometimes result in a greater imbalance of power than a bottom-up approach. But there are examples of viable and sustainable partnerships under both models. CTC, a top-down approach, supports the development of a powerful, decision-making community coalition that defines its own prevention priorities and is guided to make its own choices from a menu of programs with proven track records. The Baltimore studies (Kellam 2000) described earlier represent a hybrid collaborative model, and there the guiding principles of trust, respect, and power sharing are virtually identical to the CBPR principles described earlier. In long-term partnerships, power, requests, and rewards may favor one party over another at one point in time, but power can then be rebalanced or reversed later. This dynamic nature of partnerships is a caution against reifying descriptions of implementation approaches to the point of caricature.

Regarding the use of rigorous designs including randomization, PSMG’s experience is that both the research-initiated Baltimore first-grade classroom trial and the advocacy/institution-initiated Georgia suicide prevention study succeeded in meeting community as well as science needs in community-engaged research. CBPR can be used with any research design, from epidemiologic observational studies to clinical trials (Buchanan et al. 2007). But it clearly has a tradition that focuses on qualitative research, and the traditional randomized trial is not initially viewed as a common design. Indeed, we generally agree that in the implementation phase a pure control group serves neither community needs nor research needs. In testing new implementation strategies, which are by definition directed toward the organizations and community structures housing prevention programs, completely withholding an intervention from a group is counterproductive. We do believe, however, that simple roll-out trials, in which communities or settings are randomized to when they receive an intervention, are highly valuable for this next stage of research. Finally, we note that lessons learned from our own experience with partnerships through PSMG can be useful in forming other networks and partnerships. Funded methodology centers, such as the Penn State Methodology Center and Ce-PIM, make strategic decisions about expanding partnerships with key stakeholders. These bring in a modest number of new participants, often scientists from diverse disciplines who can readily partner with methodologists. Early career methodologists can often use key scientific consultants and scientific advisors on their methodology grants to form broader partnerships that will expand the relevance of their methods.
Community members and advocates who represent underserved populations may be appropriate partners as well; their roles can be justified by addressing important outcomes of immediate relevance, such as “patient-oriented outcomes.” While PSMG and other networks are open by invitation only, there are opportunities to form or join other networks composed of relatively homogeneous groups of methodologists. A recent example is the newly formed Interest Group in Statistics in Mental Health Research of the American Statistical Association, which is currently limited to statisticians. However, a broader partnership could come from forming a cross-cutting mission targeted to a particular set of prevention problems that require new methods.
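The simple roll-out trials recommended above, in which communities are randomized to when they receive an intervention, can be sketched as a randomized start schedule in the style of a stepped-wedge design. This is a hypothetical sketch, not the design of any study described here. It assumes equal-sized groups of communities starting at each step and a baseline period in which no community has started. Once a community starts, it keeps the intervention.

```python
import random


def rollout_schedule(communities, n_steps, seed=None):
    """Randomly assign communities to the step at which they start the intervention.

    Returns (start, exposure). start maps community -> start step in 1..n_steps,
    with group sizes differing by at most one. exposure[c][t] is 1 if community c
    receives the intervention in period t (t = 0..n_steps), where period 0 is a
    baseline in which no community has started.
    """
    rng = random.Random(seed)
    order = list(communities)
    rng.shuffle(order)
    start = {c: 1 + k % n_steps for k, c in enumerate(order)}
    exposure = {c: [1 if t >= start[c] else 0 for t in range(n_steps + 1)] for c in order}
    return start, exposure


# Hypothetical example: six communities rolled out over three steps.
start, exposure = rollout_schedule([f"community_{i}" for i in range(6)], n_steps=3, seed=7)
```

Every community eventually receives the intervention. The randomized timing still yields concurrent comparisons between communities that have and have not yet started.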

The structure of such methodologic partnerships has a great deal to do with the dissemination of methods to the field. An illustration is the differential application of missing data techniques in two scientific fields. In the prevention field there has been quick adoption of efficient methods for handling missing data in longitudinal studies: full information maximum likelihood and multiple imputation are available in many statistical packages and have been adopted readily. This contrasts with pharmacologic treatment trials in psychiatry, where statistically inferior techniques, such as “last observation carried forward” (Siddique et al. 2008), continue to be used. Changing these analytical practices will likely require a new type of partnership between methodologists and psychiatric treatment researchers.
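Why “last observation carried forward” is problematic can be seen in a small hypothetical example. When participants are changing over time, carrying a dropout’s last value forward freezes that participant at an earlier state and biases later-wave estimates. The sketch shows only the LOCF mechanics on made-up scores. It does not implement full information maximum likelihood or multiple imputation, which require a model for the missing data (typically assuming the data are missing at random).

```python
def locf(values):
    """Last observation carried forward: fill each missing value (None)
    with the most recent observed value; leading gaps stay None."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


# Hypothetical symptom scores over four waves; both participants truly improve
# from 20 to 8, but the second drops out after wave 2.
observed = [[20, 16, 12, 8], [20, 16, None, None]]
true_final_mean = 8.0
locf_final = [locf(p)[-1] for p in observed]          # [8, 16]
locf_final_mean = sum(locf_final) / len(locf_final)   # 12.0
bias = locf_final_mean - true_final_mean              # 4.0: improvement is understated
```

Here LOCF reports a final-wave mean of 12 rather than 8, understating improvement simply because one participant stopped being observed.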

More challenging is building truly heterogeneous networks or partnerships that deliberately integrate the shared interests of diverse communities, including combinations of advocates and community leaders, intervention providers and local or regional organizations, governmental agencies, and research scientists and methodologists working on the complex problems that face our families and communities. There are multiple ways to build and sustain such workgroups. One approach is to broaden the mission of community and institutional advisory boards for existing research projects as the research expands and unfolds; this broadening of mission can lead to a more stable partnership. A second involves strategic support to sustain cross-discipline or cross-organizational committees that have been formed for specific purposes. For example, members of the Institute of Medicine’s committee that wrote the recent prevention report (O’Connell et al. 2009) have extended their dissemination efforts to engage key federal, state, and practitioner communities around the broad implementation and coordination of prevention services, with continuing support from SAMHSA and other organizations. Third, methodologists can serve on federal and state advisory committees and in professional prevention organizations such as the Society for Prevention Research and the National Prevention Network.

There are also opportunities for community or practice coalitions and program purveyors to work more closely with research methodologists, especially program evaluators whose responsibility is to examine factors leading to successful community implementations. Because of the recent emphasis on labeling programs “evidence-based,” some communities are actively seeking partnerships with evaluators and methodologists so that their programs can qualify for that label, and prevention methodologists are often drawn to work that addresses social justice, prevention, and health promotion. These community initiatives provide new opportunities for finding the right mix of self-interests and strategic steps that can lead to rewarding collaborations at both the personal and professional levels.

Acknowledgments

We thank our colleagues in the Prevention Science and Methodology Group for many comments and improvements in this presentation. Also, we thank the many community, organization, and policy leaders with whom we have partnered in our previous and continuing research. We acknowledge funding support for this work through joint support from the National Institute of Mental Health (NIMH) and the National Institute on Drug Abuse (R01-MH040859), from NIMH (R01-MH076158), and from NIDA (P30-DA027828).

Contributor Information

C. Hendricks Brown, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

Sheppard G. Kellam, Johns Hopkins University, Baltimore, MD, USA.

Sheila Kaupert, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

Bengt O. Muthén, University of California Los Angeles, Los Angeles, CA, USA.

Wei Wang, University of South Florida, Tampa, FL, USA.

Linda K. Muthén, Department of Product Development, Muthén & Muthén, Los Angeles, CA, USA.

Patricia Chamberlain, Center for Research to Practice, Eugene, OR, USA.

Craig L. PoVey, Division of Substance Abuse and Mental Health, Salt Lake City, UT, USA.

Rick Cady, Department of Human Services Oregon, Addiction and Mental Health Division, Salem, OR, USA.

Thomas W. Valente, University of Southern California, Los Angeles, CA, USA.

Mitsunori Ogihara, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

Guillermo J. Prado, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

Hilda M. Pantin, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

Carlos G. Gallo, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

José Szapocznik, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

Sara J. Czaja, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

John W. McManus, Prevention Science Methodology Group, Center for Family Studies, Department of Epidemiology and Public Health, University of Miami Miller School of Medicine, 1425 NW 10th Avenue, Third Floor, Miami, FL 33136, USA.

References

- Aarons GA, Hurlburt M, et al. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- Agar M. Agents in living color: Towards emic agent-based models. Journal of Artificial Societies and Social Simulation. 2005;8(1). http://jasss.soc.surrey.ac.uk/8/1/4.html.

- Arthur MW, Hawkins JD, et al. Implementation of the Communities That Care prevention system by coalitions in the Community Youth Development Study. Journal of Community Psychology. 2010;38(2):245–258. doi: 10.1002/jcop.20362.

- Axelrod RM. The complexity of cooperation: Agent-based models of competition and collaboration. Princeton University Press; Princeton, NJ: 1997.

- Brown CH. Statistical methods for preventive trials in mental health. Statistics in Medicine. 1993;12(3-4):289–300. doi: 10.1002/sim.4780120312.

- Brown CH. Design principles and their application in preventive field trials. In: Bukoski WJ, Sloboda Z, editors. Handbook of drug abuse prevention: Theory, science, and practice. Kluwer Academic/Plenum Press; New York: 2003. pp. 523–540.

- Brown CH, Costigan T, et al. Data analytic frameworks: Analysis of variance, latent growth, and hierarchical models. In: Nezu A, Nezu C, editors. Evidence-based outcome research: A practical guide to conducting randomized clinical trials for psychosocial interventions. Oxford University Press; New York: 2008. pp. 285–313.

- Brown CH, Liao J. Principles for designing randomized preventive trials in mental health: An emerging developmental epidemiology paradigm. American Journal of Community Psychology. 1999;27(5):673–710. doi: 10.1023/A:1022142021441.

- Brown CH, Ten Have TR, et al. Adaptive designs for randomized trials in public health. Annual Review of Public Health. 2009;30:1–25. doi: 10.1146/annurev.publhealth.031308.100223.

- Brown CH, Wang W, et al. Methods for testing theory and evaluating impact in randomized field trials: Intent-to-treat analyses for integrating the perspectives of person, place, and time. Drug and Alcohol Dependence. 2008b;95(Suppl 1):S74–S104. doi: 10.1016/j.drugalcdep.2007.11.013.

- Brown CH, Wyman PA, et al. Dynamic wait-listed designs for randomized trials: New designs for prevention of youth suicide. Clinical Trials. 2006;3(3):259–271. doi: 10.1191/1740774506cn152oa.

- Brown CH, Wyman PA, et al. The role of randomized trials in testing interventions for the prevention of youth suicide. International Review of Psychiatry. 2007;19(6):617–631. doi: 10.1080/09540260701797779.

- Bryk AS, Raudenbush SW. Application of hierarchical linear models to assessing change. Psychological Bulletin. 1987;101(1):147–158.

- Buchanan DR, Miller FG, et al. Ethical issues in community-based participatory research: Balancing rigorous research with community participation in community intervention studies. Progress in Community Health Partnerships: Research, Education, and Action. 2007;1(2):153–160. doi: 10.1353/cpr.2007.0006.

- Chamberlain P, Roberts R, et al. Three collaborative models for scaling up evidence-based practices. 2011. doi: 10.1007/s10488-011-0349-9.

- Chamberlain P, Brown CH, et al. Engaging and recruiting counties in an experiment on implementing evidence-based practice in California. Administration and Policy in Mental Health. 2008;35(4):250–260. doi: 10.1007/s10488-008-0167-x.

- Chamberlain P, Saldana L, et al. Implementation of MTFC in California: A randomized trial of an evidence-based practice. In: Roberts-DeGennaro M, Fogel S, editors. Using evidence to inform practice for community and organizational change. Lyceum Books, Inc; Chicago: 2010a. pp. 218–234.

- Chamberlain P, Saldana L, et al. Implementation of multidimensional treatment foster care in California: A randomized trial of an evidence-based practice. In: Roberts-DeGennaro M, Fogel S, editors. Empirically supported interventions for community and organizational change. Lyceum Books, Inc; Chicago: 2010b.

- Chambers DA. Advancing the science of implementation: A workshop summary. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35(1-2):3–10. doi: 10.1007/s10488-007-0146-7.

- Coatsworth J, Pantin H, et al. Familias Unidas: A family-centered ecodevelopmental intervention to reduce risk for problem behavior among Hispanic adolescents. Clinical Child and Family Psychology Review. 2002;5(2):113–132. doi: 10.1023/a:1015420503275.

- Dagne GA, Howe GW, et al. Hierarchical modeling of sequential behavioral data: An empirical Bayesian approach. Psychological Methods. 2002;7(2):262–280. doi: 10.1037/1082-989x.7.2.262.

- Dishion TJ, McCord J, et al. When interventions harm: Peer groups and problem behavior. American Psychologist. 1999;54(9):755–764. doi: 10.1037//0003-066x.54.9.755.

- Dishion TJ, Spracklen KM, et al. Deviancy training in male adolescent friendships. Behavior Therapy. 1996;27(3):373–390.

- Dolan LJ, Kellam SG, et al. The short-term impact of two classroom-based preventive interventions on aggressive and shy behaviors and poor achievement. Journal of Applied Developmental Psychology. 1993;14(3):317–345.

- Emshoff J. Researchers, practitioners, and funders: Using the framework to get us on the same page. American Journal of Community Psychology. 2008;41(3):393–403. doi: 10.1007/s10464-008-9168-x.

- Epstein JM. Generative social science: Studies in agent-based computational modeling. Princeton University Press; Princeton, NJ: 2007.

- Flay BR. Efficacy and effectiveness trials (and other phases of research) in the development of health promotion programs. Preventive Medicine. 1986;15(5):451–474. doi: 10.1016/0091-7435(86)90024-1.

- Gibbons RD, Hedeker D. Random effects probit and logistic regression models for three-level data. Biometrics. 1997;53(4):1527–1537.

- Gibbons RD, Hedeker D, et al. Random regression models: A comprehensive approach to the analysis of longitudinal psychiatric data. Psychopharmacology Bulletin. 1988;24(3):438–443.

- Guerra N, Knox L. How culture impacts the dissemination and implementation of innovation: A case study of the Families and Schools Together Program (FAST) for preventing violence with immigrant Latino youth. American Journal of Community Psychology. 2008;41(3):304–313. doi: 10.1007/s10464-008-9161-4.

- Hallfors D, Godette D. Will the 'Principles of Effectiveness' improve prevention practice? Early findings from a diffusion study. Health Education Research. 2002;17(4):461–470. doi: 10.1093/her/17.4.461.

- Hallfors D, Pankratz M, et al. Does federal policy support the use of scientific evidence in school-based prevention programs? Prevention Science. 2007;8(1):75–81. doi: 10.1007/s11121-006-0058-x.

- Hawkins JD. Preventing crime and violence through Communities That Care. European Journal on Criminal Policy and Research. 1999;7(4):443–458.

- Hawkins JD, Arthur MW, et al. Community interventions to reduce risks and enhance protection against antisocial behavior. In: Stoff DW, Breiling J, Maser JD, editors. Handbook of antisocial behaviors. Wiley; New York: 1998. pp. 365–374.

- Hawkins JD, Catalano RF, et al. Testing Communities That Care: The rationale, design and behavioral baseline equivalence of the Community Youth Development Study. Prevention Science. 2008;9(3):178–190. doi: 10.1007/s11121-008-0092-y.

- Heath B, Hill R, et al. A survey of agent-based modeling practices (January 1998 to July 2008). Journal of Artificial Societies and Social Simulation. 2009;12(4):A143–A177.

- Hedeker D, Siddiqui O, et al. Random-effects regression analysis of correlated grouped-time survival data. Statistical Methods in Medical Research. 2000;9(2):161–179. doi: 10.1177/096228020000900206.

- Horton NJ, Lipsitz SR. Review of software to fit Generalized Estimating Equation (GEE) regression models. American Statistician. 1999;53:160–169.

- Howe GW, Reiss D, et al. Can prevention trials test theories of etiology? Development and Psychopathology. 2002;14:673–693. doi: 10.1017/s0954579402004029.

- Ialongo NS, Werthamer L, et al. Proximal impact of two first-grade preventive interventions on the early risk behaviors for later substance abuse, depression, and antisocial behavior. American Journal of Community Psychology. 1999;27(5):599–641. doi: 10.1023/A:1022137920532.

- Inoue M, Ogihara M, et al. Utility of gestural cues in indexing semantic miscommunication. 5th International Conference on Future Information Technology (FutureTech 2010); 2010.

- Ivanov YA, Bobick AF. Recognition of visual activities and interactions by stochastic parsing. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2000;22(8):852–872.

- Jasuja GK, Chou CP, et al. Using structural characteristics of community coalitions to predict progress in adopting evidence-based prevention programs. Evaluation and Program Planning. 2005;28:173–184.