SUMMARY

The basic, still unanswered question about visual object representation is this: what specific information is encoded by neural signals? Theorists have long predicted that neurons would encode medial axis or skeletal object shape, yet recent studies reveal instead neural coding of boundary or surface shape. Here, we addressed this theoretical/experimental disconnect, using adaptive shape sampling to demonstrate for the first time explicit coding of medial axis shape in high-level object cortex (macaque monkey inferotemporal cortex or IT). Our metric shape analyses revealed a coding continuum, along which most neurons represent a configuration of both medial axis and surface components. Thus IT response functions embody a rich basis set for simultaneously representing skeletal and external shape of complex objects. This would be especially useful for representing biological shapes, which are often characterized by both complex, articulated skeletal structure and specific surface features.

INTRODUCTION

Object perception in humans and other primates depends on extensive neural processing in the ventral pathway of visual cortex (Ungerleider and Mishkin, 1982; Felleman and Van Essen, 1991; Kourtzi and Connor, 2011). Recent studies of ventral pathway processing support the longstanding theory that objects are represented as spatial configurations of their component parts (Tsunoda et al., 2001; Pasupathy and Connor, 2002; Brincat and Connor, 2004; Yamane et al., 2008). Theorists have often predicted that parts-based representation would depend on skeletal shape, which is defined geometrically for each part by the axis of radial symmetry, or medial axis (Blum, 1973; Marr and Nishihara, 1978; Biederman, 1987; Burbeck and Pizer, 1995; Leyton, 2001; Kimia, 2003). Axial representation has strong advantages for efficient, invariant shape coding, especially for biological shapes, and has been used extensively in computer vision (Arcelli, Cordella and Levialdi, 1981; Pizer, Oliver and Bloomberg, 1987; Leymarie and Levine, 1992; Rom and Medioni, 1993; Ogniewicz, 1993; Zhu and Yuille, 1996; Zhu, 1999; Siddiqi et al., 1999; Pizer et al., 2003; Giblin and Kimia, 2003; Sebastian, Klein and Kimia, 2004; Shokoufandeh et al., 2006; Feldman and Singh, 2006; Demirci, Shokoufandeh and Dickinson, 2009). A number of psychophysical results indicate a special role for medial axis structure in human object perception (Johansson, 1973; Kovacs and Julesz, 1994; Siddiqi, Tresness and Kimia, 1996; Wang and Burbeck, 1998; Siddiqi et al., 2001).

At the neural level, there is evidence for late-phase medial axis signals in primary visual cortex (Lee et al., 1998), but there has been no demonstration of explicit medial axis representation in the ventral pathway. While object responses have been extensively studied in ventral pathway areas V4 and IT (Gross, Rocha-Miranda and Bender, 1972; Fujita et al., 1992; Gallant, Braun and Van Essen, 1993; Kobatake and Tanaka, 1994; Janssen, Vogels and Orban, 2000; Rollenhagen and Olson, 2000; Tsunoda et al., 2001; Baker, Behrmann and Olson, 2002; Hung et al., 2005; Leopold, Bondar and Giese, 2006; Tsao et al., 2006; Freiwald, Tsao and Livingstone, 2009; Freiwald and Tsao, 2010), there has been no way to distinguish whether they are driven specifically by internal medial axis shape. In fact, studies have consistently shown that ventral pathway neurons represent external boundary shape fragments, either 2D contours or 3D surfaces, which require less computation to derive from visual images (Pasupathy and Connor, 1999; Pasupathy and Connor, 2001; Brincat and Connor, 2004; Yamane et al., 2008; Carlson et al., 2011).

Here, we addressed this theoretical/experimental gap by testing for medial axis coding directly and comparing medial axis and surface coding. We studied 111 visually responsive neurons recorded from central and anterior IT cortex (13-19 mm anterior to the inter-aural line) in two awake, fixating monkeys. We used adaptive shape sampling algorithms (Yamane et al., 2008; Carlson et al., 2011) for efficient exploration of neural responses in the medial axis and surface domains. We used metric shape analyses to characterize neural tuning in both domains. We found that many IT neurons explicitly encode medial axis information, consistently responding to configurations of 1–12 axial components. We found that this configural medial axis tuning exists on a continuum with surface tuning, and that most cells are tuned for shape configurations combining both axial and surface elements.

RESULTS

Sampling shape responses in the axial and surface domains

We used an adaptive stimulus strategy guided by online neural response feedback. Compared to random or systematic sampling, adaptive sampling makes it possible to study much larger domains of more complex shapes, by focusing sampling on the most relevant regions within those larger domains. To optimize sampling in both the axial and surface domains, it was necessary to use two different adaptive paradigms simultaneously. This is because complex surface shape and complex axial shape are geometrically exclusive. Elaborate skeletal shape is only perceptible if surfaces are shrunk around the medial axes, limiting surface complexity on a visible scale. Conversely, elaborate surface shape requires surface expansion, which eliminates and/or obscures complex skeletal structure.

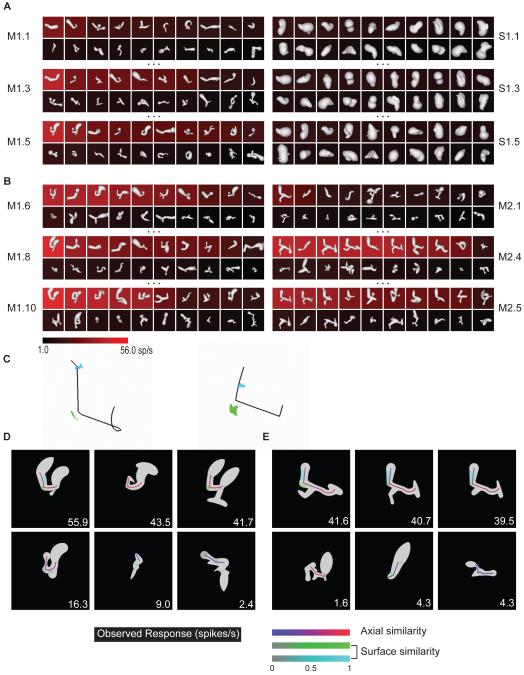

An example of the medial axis adaptive sampling paradigm is shown in the left column of Figure 1A. The first generation of medial axis stimuli (M1.1) comprised 20 randomly constructed shapes with 2–8 axial components that varied in orientation, curvature, connectivity, and radius (see Experimental Procedures and Figure S1A for stimulus generation details). These shapes were presented on a computer screen for 750 ms each, in random order, at the center of gaze while the monkey performed a fixation task. 3D shape was conveyed by shading cues combined with binocular disparity. The background color for each shape represents the average response (across 5 repetitions, see scale bar at bottom) of a single neuron recorded from anterior IT.

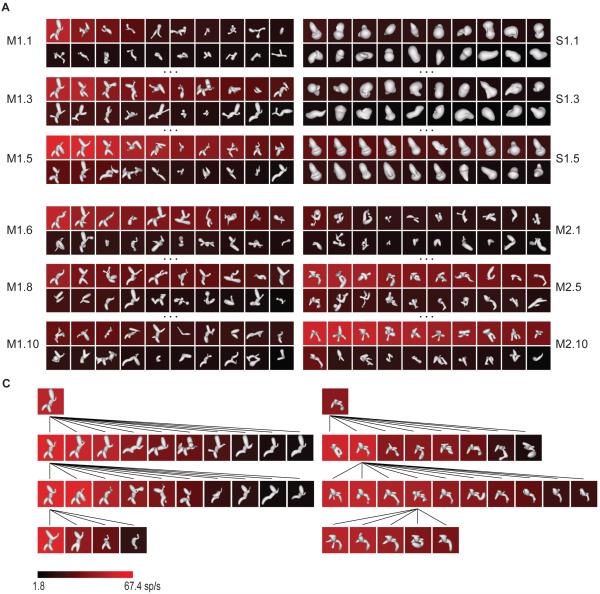

Figure 1.

Adaptive shape sampling example. See text for details. (A) 1st, 3rd, and 5th generations of a medial axis lineage (left, M1.1, M1.3, M1.5) and a surface shape lineage (right, S1.1, S1.3, S1.5). Stimuli are ordered within each generation by average response strength. Average response rate is indicated by background color (see scale bar). (B) 6th, 8th, and 10th generations of the original medial axis lineage (left, M1.6, M1.8, M1.10) and 1st, 5th, and 10th generations of a second, independent medial axis lineage (right, M2.1, M2.5, M2.10). (C) Partial family trees exemplifying shape evolution within the first (left) and second (right) medial axis lineages.

The first generation of surface stimuli used to study this same neuron (Figure 1A, right column, S1.1) comprised 20 random shapes constructed by deforming an ellipsoidal mesh with multiple protrusions and indentations (see Experimental Procedures and Figure S1B for stimulus generation details). This construction method produces much greater surface complexity coupled with relatively simple axial structure. These shapes were presented in the same manner, randomly interleaved with the axial stimuli.

Subsequent stimulus generations in both the axial and surface lineages comprised partially morphed descendants of ancestor stimuli from previous generations. A variety of random morphing procedures were applied in both domains (Figure S1). Selection of ancestor stimuli from previous generations was probabilistically weighted toward higher responses. This extended sampling toward higher response regions of shape space and promoted more even sampling across the response range (compare first generations M1.1, S1.1 with fifth generations M1.5, S1.5, and see Figure S1C).
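The response-weighted choice of ancestors can be sketched as weighted random sampling. This is a minimal illustration only, not the procedure given in Experimental Procedures: the text states just that selection was probabilistically weighted toward higher responses, so the weighting rule used here (response minus the minimum response, plus a small floor so that low-response stimuli are still occasionally chosen) and the helper name `select_ancestors` are assumptions. The morphing step applied to each selected ancestor is not shown.

```python
import random

def select_ancestors(responses, n_offspring, rng=None):
    """Return indices of ancestor stimuli, weighted toward higher responses.

    responses: average firing rate for each candidate ancestor stimulus.
    Assumed weighting: (response - minimum response) + a floor equal to 5% of
    the response range, so no stimulus has zero probability of selection.
    """
    rng = rng or random.Random()
    lowest = min(responses)
    floor = 0.05 * ((max(responses) - lowest) or 1.0)
    weights = [r - lowest + floor for r in responses]
    # Sampling with replacement: a strong ancestor may yield several descendants.
    return rng.choices(range(len(responses)), weights=weights, k=n_offspring)

# Example: choose ancestors for a 20-stimulus generation from five candidates.
parents = select_ancestors([2.0, 5.5, 30.1, 12.4, 0.8], 20, random.Random(0))
```

Weighting by response, rather than keeping only the top stimuli, is one way to bias sampling toward high-response regions while still drawing some descendants from the middle and low end of the range.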

After five generations of both axial and surface stimuli, we initiated another lineage in the domain that produced higher maximum responses (based on a Wilcoxon rank-sum test of the top ten responses in each domain). In this case, we initiated a new axial lineage, beginning with a new generation of randomly constructed axial shapes (Figure 1B, M2.1). This allowed us to test models in the highest response domain based on correlation between independent lineages. The new lineage evolved in parallel with the original lineage, and the procedure was terminated after obtaining 10 generations in the original medial axis lineage and 10 in the new medial axis lineage, for a total of 400 medial axis stimuli and 100 surface stimuli. Figure 1C illustrates the evolution of shapes in both axial lineages with partial family trees. Both lineages succeeded in sampling across the neuron’s entire firing rate range (Figure S1C). This neuron and others presented below exemplify how the axial shape algorithm could generate stimuli with the complexity of natural objects like bipedal and quadrupedal animal shapes.

Medial axis shape tuning

In previous studies, we have characterized complex shape tuning with linear/nonlinear models fitted using search algorithms (Brincat and Connor, 2004, 2006; Yamane et al., 2008). A drawback of this approach is the large number of free parameters required to quantify complex shape and the consequent dangers of overfitting and instability. Here, we avoided this problem by leveraging the shape information in high response stimuli that evolved in each experiment. We searched these stimuli for shape templates that could significantly predict response levels within and across lineages. The predicted response to a given shape was based on its geometric similarity to the shape template model.

To verify convergent evolution between lineages, we tested whether a template model derived from one lineage (the “source lineage”) could significantly predict responses in the other, independent lineage (the “test lineage”). We found candidate medial axis templates by first decomposing each shape in the source lineage into all possible connected substructures, ranging from single axis components to the entire shape (e.g. Figure 2A). The template that turned out to be optimal for this neuron is shown at the top. For this template (and for each candidate template drawn from this and other high response shapes), we first tested predictive power in the source lineage itself (Figure 2B). The predicted response to each shape was a linear function of the geometric similarity (Figure 2, color scale; see Experimental Procedures and Figure S2) of its closest matching substructure to the template. We searched for templates with the highest correlation between predicted responses (similarity values) and observed responses (Figure 2B, inset numbers) across all shapes in the source lineage. We identified 10 candidate templates (all with high correlations but also constrained to be geometrically dissimilar) from the source lineage and then tested each of these for its predictive power in the test lineage, again by measuring correlation between predicted responses (template similarities) and observed responses (Figure 2C). We selected the template with the greatest predictive power (highest correlation) in the test lineage. We performed the same procedure with either lineage as the source of template models, for a total of 20 candidate templates. In this case, the optimum template produced a highly significant cross-lineage correlation between predicted and observed responses of 0.33 (p < 0.00002, corrected for 20 comparisons), showing that comparable medial axis structure evolved in the two independent lineages.
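The cross-lineage test amounts to a correlation search over candidate templates. The sketch below makes several assumptions: each shape is represented as a list of its connected substructures, and `similarity` is a hypothetical stand-in for the geometric similarity measure described in Experimental Procedures and Figure S2. Because the predicted response is a linear function of template similarity, ranking candidates by the correlation between raw similarity and observed response selects the same template as ranking by predicted response (for a positive slope).

```python
import math

def pearson_r(x, y):
    """Pearson correlation; returns 0.0 if either input has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def template_prediction(substructures, template, similarity):
    """Similarity of the shape's closest-matching substructure to the template."""
    return max(similarity(sub, template) for sub in substructures)

def best_cross_lineage_template(candidates, test_shapes, test_rates, similarity):
    """Return (template, r) for the candidate that best predicts the test lineage."""
    best = None
    for template in candidates:
        predicted = [template_prediction(subs, template, similarity) for subs in test_shapes]
        r = pearson_r(predicted, test_rates)
        if best is None or r > best[1]:
            best = (template, r)
    return best

# Toy usage: substructures are feature tuples; similarity is a hypothetical Gaussian.
sim = lambda s, t: math.exp(-sum((a - b) ** 2 for a, b in zip(s, t)))
candidates = [(0.0, 0.0), (1.0, 1.0)]
test_shapes = [[(1.0, 1.0), (5.0, 5.0)], [(0.0, 0.0)], [(1.1, 0.9)], [(3.0, 3.0)]]
rates = [40.0, 5.0, 35.0, 8.0]
template, r = best_cross_lineage_template(candidates, test_shapes, rates, sim)
```

In the experiment, the candidates would be the 10 geometrically dissimilar high-correlation templates from the source lineage, and the resulting p value would then be corrected for the 20 candidates tested across both source lineages.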

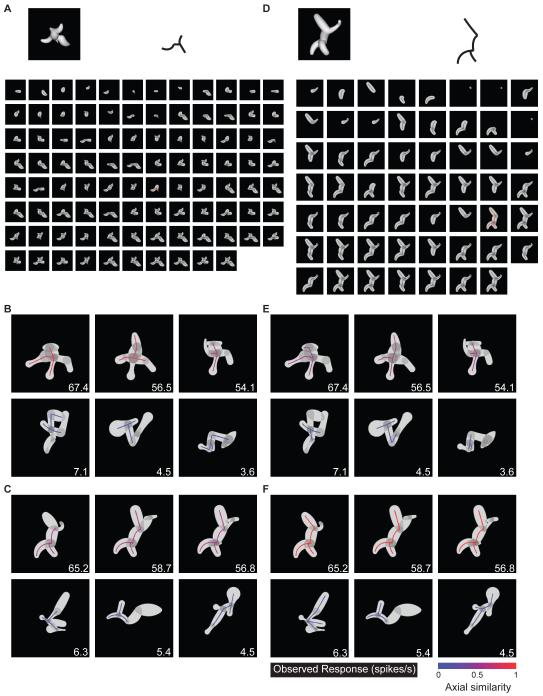

Figure 2.

Medial axis template model example (for Figure 1 neuron). (A) Optimum template, in this case from second lineage (outline, upper right). The source stimulus is shown (upper left) along with its complete set of substructures. (B) Highest similarity substructures (colored outlines) for three example high response stimuli (top row) and three low response stimuli (bottom row) from the same (second) lineage. Average observed response rates are indicated by inset numbers, template similarity values are indicated by color (see scale bar). (C) Highest similarity substructures for high response (top row) and low response (bottom row) stimuli from the first lineage. (D–F) Optimum template based on both lineages, presented as in A–C.

While the above procedure served to confirm convergent evolution across lineages, a more accurate template model can be obtained by simultaneously constraining the selection process with both lineages. This was accomplished by measuring correlation between predicted responses (template similarities) and observed responses across the entire dataset. For this neuron, constraining with both lineages produced a closely related template (Figure 2D) with a comparable pattern of similarity values (Figure 2E,F). The significance of models constrained by both lineages was confirmed with a two-stage cross-validation procedure, in which both model selection and final goodness of fit were based on testing against independent stimulus sets (see Experimental Procedures). The average cross-validation correlation for this neuron was 0.59 (p < 0.05).

Combined medial axis and surface shape tuning

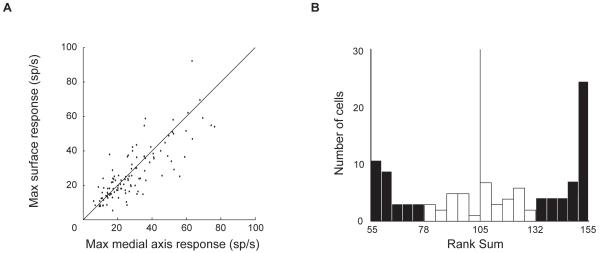

We found clear evidence for both medial axis and surface shape tuning in our neural sample. Maximum response rates in the two domains were comparable (Figure 3A), and there were no clusters of neurons with much higher axial shape responses (lower right corner) or much higher surface shape responses (upper left corner). Moreover, we observed no anatomical clustering of axial or surface tuning (Figure S3). The rank sum test of the 10 highest response rates in each domain identified 40 neurons with significantly (p < 0.05) stronger responses to medial axis stimuli and 29 neurons with significantly stronger responses to surface stimuli (Figure 3B). All 66 neurons above the midpoint of the rank sum statistic range (105) were studied with a second medial axis lineage.
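The rank sum statistic quoted here can be computed directly from the ten highest responses in each domain. With ten values per domain, the medial axis ranks sum to between 55 (all ten lower than every surface response) and 155 (all ten higher), so the midpoint is 10 × 21 / 2 = 105. The sketch assumes tied values receive average ranks; `rank_sum_top_ten` is a hypothetical helper name.

```python
def rank_sum_top_ten(axial_rates, surface_rates, k=10):
    """Wilcoxon rank sum W of the top-k axial responses within the pooled top-k sets.

    Ranks run from 1 (lowest) to 2k (highest) over the 2k pooled values,
    ties receive average ranks, and W is the sum of the axial ranks.
    For k = 10, W ranges from 55 to 155 with midpoint 105.
    """
    top_axial = sorted(axial_rates, reverse=True)[:k]
    top_surface = sorted(surface_rates, reverse=True)[:k]
    pooled = sorted(top_axial + top_surface)
    rank_of = {}
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        i = j + 1
    return sum(rank_of[v] for v in top_axial)
```

For example, a neuron whose ten best axial responses all exceed its ten best surface responses gives W = 155, and equal top-ten sets give W = 105; values above 105 correspond to the neurons assigned a second medial axis lineage.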

Figure 3.

Response strength comparison for medial axis and surface stimuli. (A) Scatter plot of mean response across top ten stimuli in each domain (n=111). (B) Histogram of Wilcoxon rank sum statistics testing whether responses to top ten medial axis stimuli were higher than responses to top ten surface stimuli. Filled bars indicate significantly (p < 0.05) higher medial axis responses (right, n = 40) or higher surface responses (left, n = 29).

Even among the 66 neurons studied with a second medial axis lineage, our analyses showed examples of weak medial axis tuning and strong surface shape tuning. For the cell depicted in Figure 4, maximum responses in the two domains were similar (Figure 4A), although the rank sum test dictated a second lineage in the medial axis domain (Figure 4B). The optimum medial axis template identified from a single source lineage produced low, non-significant correlation (0.19, p > 0.05, corrected) between predicted and observed response rates in the test lineage. In contrast, the optimum surface shape template model identified from a single source lineage produced higher, significant correlation (0.34, p < 0.05, corrected) in the test lineage. The optimum surface template was identified using a similarity-based search analogous to the medial axis analysis. Surface templates comprised 1-6 surface fragments, characterized in terms of their object-relative positions, surface normal orientations, and principal surface curvatures, as in our previous study of 3D surface shape representation (Yamane et al., 2008; see Experimental Procedures and Figure S3). As in that study, we found here that cross-prediction between lineages peaked at the 2-fragment complexity level, so we present 2-fragment models in the analyses below. For this neuron, the optimum template constrained by both lineages (Figure 4C) was a configuration of surface fragments (cyan and green) positioned below and to the left of object center (cross). This template produced high similarity values for high response stimuli and low similarity values for low response stimuli in both lineages (Figure 4D,E). The average cross-validation correlation for templates constrained by both lineages was 0.41 (p < 0.05).

Figure 4.

Surface tuning example. (A, B) Selected generations from two medial axis lineages and one surface lineage. Details as in Figure 1. (C) Optimum surface template model (based on correlation with response rates in both lineages), projected onto the template source stimulus (left) and schematized (right) as a combination of two surface fragment icons (green, cyan) positioned relative to object center (cross). The acute convex point (green) is enlarged for visibility. (D) Surface template similarity (see scale bar) for three example high response stimuli (top row) and three low response stimuli (bottom row) from the first medial axis lineage. (E) Surface template similarity for high and low response stimuli from the second medial axis lineage.

We tested the hypothesis that some IT neurons are tuned for both medial axis and surface shape by fitting composite models based on optimum templates in both domains. (These models were fit to the two medial axis lineages used to test 66 neurons, not to the surface lineages for these neurons.) For the example cell depicted in Figure 5, maximum responses were much higher in the medial axis domain (Figure 5A), and comparable axial structure emerged in a second medial axis lineage (Figure 5B). However, composite models based on optimum axial and surface templates (Figure 5C) revealed that this neuron was also sensitive to surface shape. The composite model expressed the predicted response R as a linear/nonlinear combination of axial and surface tuning:

$$R = (1 - x)\left[a\,S_m + (1 - a)\,S_s\right] + x\,S_m S_s$$

where Sm is the axial similarity score, Ss is the surface similarity score, a is the fitted relative weight for the linear axial term, (1–a) is the weight for the surface term, x is the fitted relative weight for the nonlinear product term, and (1–x) is the combined weight for the linear terms. In this case, the optimum composite model selected from a single source lineage (Figure 5C, left) produced a significant (p < 0.05, corrected) correlation (0.49) between predicted and observed responses in the test lineage. The optimum composite model constrained by both lineages (Figure 5C, right) was associated with an average cross-validation correlation of 0.55 (p < 0.05, corrected). Both models were characterized by a U-shaped medial axis template, with a surface template describing the left elbow and left limb. The model constrained by both lineages was evenly balanced between axial tuning (a = 0.46) and surface tuning (1–a = 0.54), with a substantial nonlinear weight (x = 0.37). Correspondingly, high response stimuli in both lineages (Figure 5D,E, top rows) had strong similarity to both templates, while stimuli with strong similarity to only the axial template or only the surface template elicited weak responses (bottom rows).
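The composite model defined above, R = (1 − x)[a·Sm + (1 − a)·Ss] + x·Sm·Ss, can be evaluated and fitted as in the sketch below. The fitting method shown (an exhaustive grid search over a and x in [0, 1], maximizing the correlation between predicted and observed rates) is an assumption for illustration; this section does not specify how the weights were fitted. Because correlation ignores overall scale and offset, the sketch recovers only the relative weights, which is all the model defines.

```python
import math

def pearson_r(x, y):
    """Pearson correlation; returns 0.0 if either input has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def composite_response(s_m, s_s, a, x):
    """(1 - x) * [a * S_m + (1 - a) * S_s] + x * S_m * S_s."""
    linear = a * s_m + (1 - a) * s_s   # additive axial/surface term
    return (1 - x) * linear + x * s_m * s_s   # plus nonlinear product term

def fit_composite(s_m_values, s_s_values, rates, steps=101):
    """Assumed fitting procedure: grid search over (a, x), maximizing Pearson r."""
    grid = [i / (steps - 1) for i in range(steps)]
    best_a, best_x, best_r = None, None, -math.inf
    for a in grid:
        for x in grid:
            pred = [composite_response(m, s, a, x) for m, s in zip(s_m_values, s_s_values)]
            r = pearson_r(pred, rates)
            if r > best_r:
                best_a, best_x, best_r = a, x, r
    return best_a, best_x, best_r

# Synthetic check: rates generated with a = 0.46, x = 0.37 (the Figure 5 values).
s_m = [0.1, 0.9, 0.5, 0.8, 0.2, 0.7, 0.3, 0.95, 0.05, 0.6]
s_s = [0.2, 0.8, 0.1, 0.3, 0.9, 0.6, 0.4, 0.85, 0.7, 0.05]
rates = [10 + 50 * composite_response(m, s, 0.46, 0.37) for m, s in zip(s_m, s_s)]
a_fit, x_fit, r_fit = fit_composite(s_m, s_s, rates)
```

The product term is what lets the model express the Figure 5 pattern: a stimulus similar to only one template contributes little through Sm·Ss, so strong responses require similarity to both.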

Figure 5.

Combined medial axis / surface tuning example. (A, B) Selected generations from two medial axis lineages and one surface lineage. Details as in Figure 1. (C) Optimum combined templates based on a single lineage (left) and based on both lineages (right). In each case, the black outline represents the medial axis template and the green and cyan surfaces represent the surface template. (D) Template similarity values (see scale bars) for example high (top row) and low (bottom row) response stimuli from the first medial axis lineage. (E) Template similarity values for example stimuli from the second medial axis lineage.

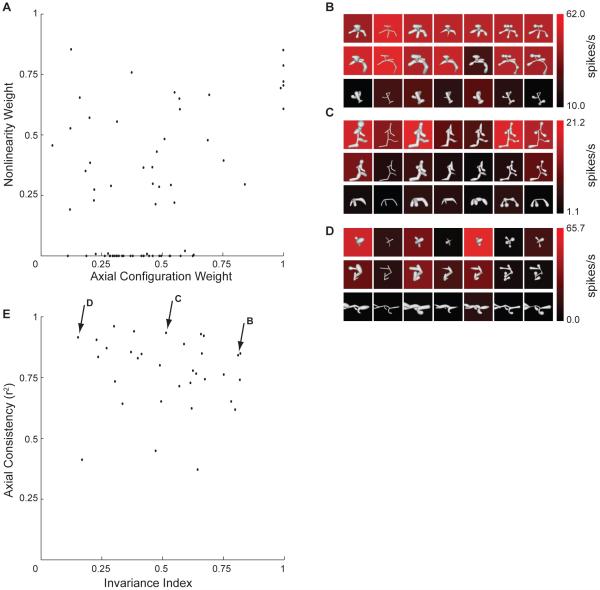

Figure 6 shows the distribution of linear and nonlinear weights across composite models fit to the 66 neurons studied with two medial axis lineages. The axial tuning weight (a), which represents how linear (additive) tuning is balanced between axial similarity and surface similarity, is plotted on the horizontal axis. Thus, points toward the right reflect stronger linear tuning for axial similarity, while points toward the left reflect stronger linear tuning for surface similarity. The nonlinear tuning weight (x) is plotted on the vertical axis. Thus, points toward the bottom represent mainly linear, additive tuning based on axial and/or surface similarity. Points near the top represent mainly nonlinear tuning, i.e. responsiveness only to combined axial and surface similarity, expressed by the product term in the model. The distribution of model weights in this space was broad and continuous. There were few cases of exclusive tuning for surface shape (lower left corner) and no cases of exclusive tuning for axial shape (lower right corner). There were many models (along the very bottom of the plot) characterized by purely additive (linear) tuning for axial and surface shape. There were other models (higher on the vertical axis) characterized by strong nonlinear selectivity for composite axial/surface structure. In most cases, composite models showed significant correlation between predicted and observed response rates. In tests of cross-lineage prediction, 48/66 models were significant at a corrected threshold of p < 0.05 (Figure S5A). For models constrained by both lineages, 59/66 cross-validation correlations were significant at a threshold of p < 0.005 (Figure S5B).

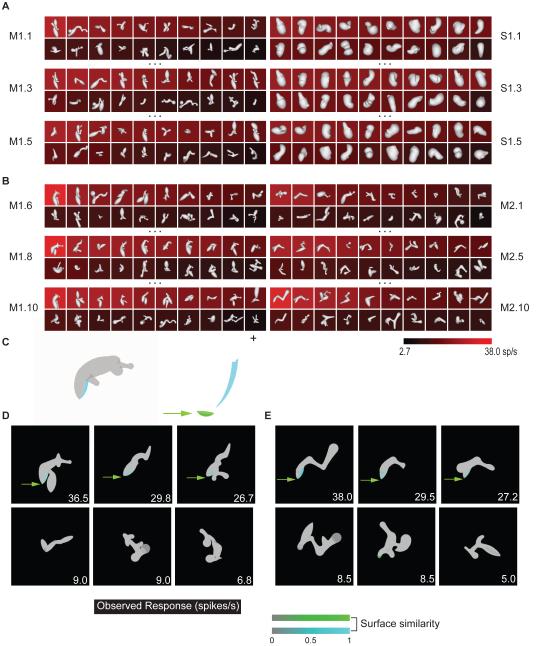

Figure 6.

(A) Distribution of axial weights (horizontal axis) and nonlinear weights (vertical axis) in the composite medial axis / surface tuning models. (B–D) Example tests of axial tuning consistency. These tests were based on one high (top rows), one medium (middle rows), and one low (bottom rows) response stimulus drawn from the adaptive tests. The original stimuli (left column) were morphed with six different radius profiles (columns 2–7) to alter surface shape while maintaining medial axis shape. (E) Scatter plot of response invariance (horizontal axis) vs. axial tuning consistency (vertical axis). Our index of invariance is explained in Experimental Procedures. Consistency was defined as the fraction of variance explained by the first component of a singular value decomposition model, which measures the separability of axial and surface tuning.

For 33 of these neurons, we further explored the relationship between axial and surface tuning with an additional test (Figures 6B–D) based on one high response medial axis stimulus, one intermediate response stimulus, and one low response stimulus. Medial axis structure was preserved while surface shape was substantially altered. For some neurons, responses to a given medial axis structure remained largely consistent across surface alterations (Figure 6B). In contrast, most neurons showed strong sensitivity to surface alterations (Figures 6C,D). The distribution of surface sensitivity (as measured by invariance to surface changes; Figure 6E, horizontal axis) was continuous. Even for neurons with substantial surface sensitivity (toward the left of the plot), tuning for medial axis structure remained consistent (as measured by correlation between axial tuning patterns across the different surface conditions; Figure 6E, vertical axis).
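The consistency index defined in the Figure 6 caption (fraction of variance explained by the first component of a singular value decomposition) can be computed from a response matrix with one row per medial axis stimulus (high, medium, low) and one column per surface condition (original plus six radius profiles). This is a minimal sketch, assuming the SVD is applied to the raw, non-mean-subtracted matrix of average firing rates; `axial_tuning_consistency` is a hypothetical name. A perfectly separable (rank-one) matrix, in which surface changes only rescale the axial tuning pattern, scores 1.

```python
import numpy as np

def axial_tuning_consistency(responses):
    """Fraction of variance captured by the first SVD component.

    responses: 2D array, rows = medial axis stimuli, columns = surface conditions.
    """
    r = np.asarray(responses, dtype=float)
    s = np.linalg.svd(r, compute_uv=False)  # singular values, descending
    return float(s[0] ** 2 / np.sum(s ** 2))

# Separable example: the same axial ranking (30 > 15 > 3) scaled by each surface condition.
separable = np.outer([30.0, 15.0, 3.0], [1.0, 0.8, 0.5, 0.9, 0.6, 0.7, 0.4])
# Non-separable example: each axial stimulus is preferred under a different surface condition.
mixed = np.full((3, 7), 2.0)
mixed[0, 0] = mixed[1, 1] = mixed[2, 2] = 30.0
```

Under this index, a neuron can be highly sensitive to surface changes (low invariance) and still score near 1, because its ranking of the three medial axis structures is preserved across surface conditions.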

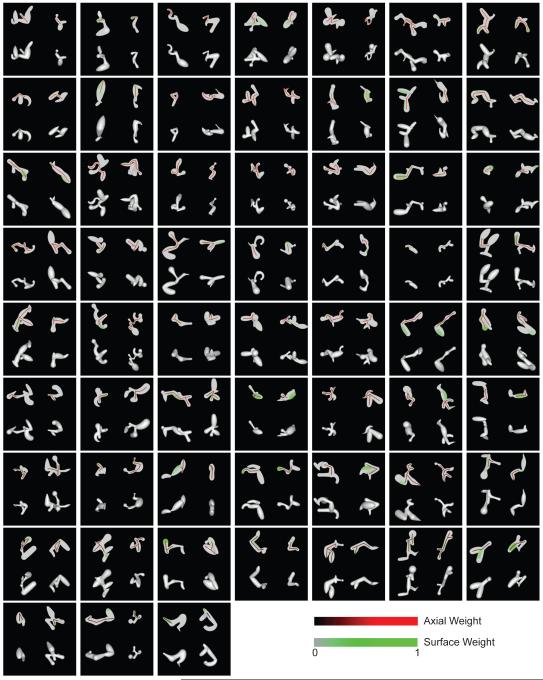

The full set of 59 significant composite models (constrained by both lineages) is depicted in Figure 7. In each case, the model is projected onto one high response stimulus from each of the two medial axis lineages (left and right), with the original shaded stimuli shown below. We identified a wide array of medial axis tuning configurations, ranging from 1–12 components, and including single and double Y/T junctions. In most cases (48/59), the surface templates were at least partially associated with the same object fragments described by the medial axis templates. Surface configuration tuning also varied widely, and this was substantiated by surface models identified for the 45 neurons studied with two surface lineages (Figure S6).

Figure 7.

Composite medial axis / surface tuning models. Models constrained by both lineages are shown for 59/66 neurons with significant cross-validation (r test, p < 0.005). For each neuron, the optimum model is projected onto a high response stimulus from the first medial axis lineage (left column) and a high response stimulus from the second lineage (right column). The model projections are shown in the top row, and the original shaded stimuli are shown in the bottom row. Medial axis and surface template similarity values are indicated by color (see scale bars). Models are arranged by decreasing medial axis weight from upper left to lower right.

It is important to note that, while these tuning templates were often complex, they did not define the entire global structure of high response stimuli. In fact, high response stimuli varied widely in global shape, both within and between stimulus lineages (Figs. 1, 4, 5, 7, 8). Thus, individual IT neurons do not appear to represent global shape, at least in the domain of novel, abstract objects studied here. Rather, novel objects must be represented by the ensemble activity of IT neurons encoding their constituent substructures.

Figure 8.

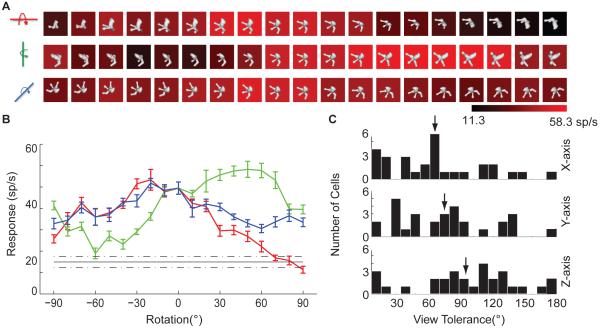

3D rotation test. (A) Example results, for the Figure 1 neuron. Average response level (see scale bar) was measured for versions of the highest response stimulus from the adaptive sampling procedure rotated across a 180° range in 10° increments around the x (top), y (middle), and z (bottom) axes. (B) Same data as in (A), for rotation around the x (red), y (green), and z (blue) axes (error bars indicate +/- s.e.m.), compared to average response across all stimuli in the main experiment (black, dashed lines indicate +/- s.e.m.). (C) Distribution of tolerances for rotation around the x (top), y (middle), and z (bottom) axes. Tolerance was defined as the width in degrees of the range over which responses to the highest response stimulus remained significantly (t test, p < 0.05) greater than the average response to random stimuli tested as part of the adaptive sampling experiment.

Specificity of tuning for 3D shape and 3D object orientation

Object shape in three dimensions is inferred from 2D image features, including shading and 2D occlusion boundary contours (Koenderink, 1984). Many IT neurons appear to encode inferred 3D object shape (Janssen, Vogels and Orban, 2000a,b), rather than low-level image features, since IT shape tuning remains consistent across dramatic changes in 2D shading patterns (produced by altered lighting direction) and is strongly diminished or abolished by removing depth cues (Yamane et al., 2008). Here, for a subset of neurons in our sample, we tested specificity of 3D shape tuning in an additional way, by measuring responses across a range of 3D object rotations, which preserve 3D shape while altering the 2D image.

For 29 neurons that remained isolated long enough for extended testing, we selected the highest response stimulus identified in the adaptive sampling lineages. We identified a roughly optimal orientation of this stimulus by measuring responses to 22 orientations produced by 45° increment rotations around the x, y, and z axes. We used the highest response orientation (typically the original version) as the basis for finer tests of x, y, and z rotation tolerance across 180° ranges centered on this optimum orientation (Figure 8). The example shown here is the same neuron presented in Figure 1. Consistent with previous studies (Logothetis, Pauls, and Poggio, 1995; Logothetis and Pauls, 1995), responses of this neuron were tolerant to a wide range of 3D rotations (Figure 8A,B). We quantified tolerance as the orientation range over which responses remained significantly (t test, p < 0.05) higher than the average response to random 3D shapes (black line, Figure 8B) generated during adaptive sampling (typically 148 shapes). In this case the tolerance ranges were 150, 140, and 180° for rotation about the x, y, and z axes respectively. Many neurons exhibited broad tolerance (Figure 8C), especially for in-plane z-axis rotation (mean = 93.4°), but also for 3D rotation about the x (61.7°) and y (70.7°) axes.
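The tolerance measure can be sketched as follows. The sketch assumes that significance has already been determined at each tested orientation (by the t test against the average response to random adaptive-sampling stimuli) and that tolerance is the angular distance between the extreme orientations of the contiguous significant run containing the optimum. Under these assumptions, a neuron significant across the full ±90° range in 10° steps scores 180°, as for z-axis rotation in the example above. `rotation_tolerance` is a hypothetical name.

```python
def rotation_tolerance(angles, significant, optimum=0):
    """Width in degrees of the contiguous significant range that includes the optimum.

    angles: sorted orientations tested, e.g. -90..90 in 10 degree steps.
    significant: booleans, True where the response exceeded the random-stimulus
    average (assumed to come from a per-orientation t test, p < 0.05).
    """
    idx = angles.index(optimum)
    if not significant[idx]:
        return 0
    lo = idx
    while lo > 0 and significant[lo - 1]:
        lo -= 1
    hi = idx
    while hi < len(angles) - 1 and significant[hi + 1]:
        hi += 1
    return angles[hi] - angles[lo]

angles = list(range(-90, 91, 10))          # 19 orientations spanning 180 degrees
mask = [-70 <= a <= 80 for a in angles]    # significant from -70 to +80 degrees
width = rotation_tolerance(angles, mask)   # 150 degrees under this definition
```

Restricting the count to the run containing the optimum prevents an isolated significant response at a distant orientation from inflating the tolerance estimate.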

These broad tolerance values show that tuning for 3D shape remains consistent across substantial changes in the underlying 2D image. To quantify this, we used the composite 3D shape model derived for each neuron in the main experiment to predict responses to the 56 stimuli in the rotation experiment. The correlation between predicted and observed responses for this example neuron was 0.62. In contrast, correlations produced by standard 2D models based on contour shape and Gabor decomposition (Supplemental Experimental Procedures) were substantially lower (0.19 and 0.37, respectively). The average correlation for 3D shape models was 0.46 (compared to 0.11 for 2D contour models and 0.25 for Gabor decomposition models; see Figure S7). These results further substantiate the specificity of IT tuning for inferred 3D shape as opposed to 2D image features.

DISCUSSION

We used adaptive stimulus sampling (Figure 1) and metric shape analysis (Figure 2) to show that higher-level visual cortex represents objects in terms of their medial axis structures. We found that IT neurons are tuned for medial axis substructures comprising 1–12 components. We also found that most IT neurons are simultaneously tuned for medial axis and surface shape (Figure 7). In both domains, representation is fragmentary, i.e. IT neurons do not encode global shape (Figs. 1, 4, 5, 7, 8). Our results indicate that objects are represented in terms of constituent substructures defined by both axial and surface characteristics.

Our findings confirm longstanding theoretical predictions that the brain encodes natural objects in terms of medial axis structure (Blum, 1973; Marr and Nishihara, 1978; Biederman, 1987; Burbeck and Pizer, 1995; Leyton, 2001; Kimia, 2003). The theoretical appeal of medial axis representation is abstraction of complex shapes down to a small number of descriptive signals. Medial axis description is particularly efficient for capturing biological shapes (Blum, 1973; Pizer et al., 2003), especially when adjusted for prior probabilities through Bayesian estimation (Feldman and Singh, 2006). Medial axis components essentially sweep out volumes along trajectories (the medial axes) (Binford, 1971), thus recapitulating biological growth processes (Leyton, 2001). Medial axis descriptions efficiently capture postural changes of articulated structures, making them useful for both biological motion analysis and posture-invariant recognition (Johansson, 1973; Kovacs, Feher and Julesz, 1998; Sebastian, Klein and Kimia, 2004; Siddiqi et al., 1999).

These theoretical considerations are buttressed by psychophysical studies demonstrating the perceptual relevance of axial structure. Perception of both contrast and position is more acute at axial locations within two-dimensional shapes (Kovacs and Julesz, 1994; Wang and Burbeck, 1998). Human observers partition shapes into components defined by their axial form (Siddiqi, Tresness and Kimia, 1996). Object discrimination performance can be predicted in terms of medial axis structure (Siddiqi et al., 2001).

Our findings help explain a previous observation of late medial axis signals in primary visual cortex (V1) (Lee et al., 1998). Early V1 responses to texture-defined bars (<100 ms following stimulus onset) peaked only at the texture boundaries defining either side of the bar. But late responses (>100 ms) showed distinct peaks at the medial axis of the bar, as far away as 2° of visual angle from the physical boundary. Based on timing, the authors interpreted this phenomenon as a result of feedback from IT representations of larger scale shape. Our results demonstrate that IT is indeed a potential source for such medial axis feedback signals.

A salient aspect of our results is simultaneous tuning for axial and surface structure. Our previous results have demonstrated the prevalence of 3D surface shape tuning in IT (Yamane et al., 2008). Complex shape coding in terms of surface structure has strong theoretical foundations (Nakayama and Shimojo, 1992; Grossberg and Howe, 2003; Cao and Grossberg, 2005; Grossberg and Yazdanbakhsh, 2005), and surfaces dominate perceptual organization (He and Nakayama, 1992; He and Nakayama, 1994; Nakayama, He and Shimojo, 1995). Since any given medial axis configuration is compatible with a wide range of surrounding surfaces (Figure 6B–D), surface information is critical for complete shape representation. In fact, theorists have posited the existence of volumetric shape primitives, including “geons” (Biederman, 1987) and generalized cones (Binford, 1971; Marr and Nishihara, 1978), defined by both medial axis shape and the volume swept out along the axis. Many of our template models embody surface information superimposed on medial axis structures, and thus would meet this definition of volumetric primitive coding.

Combined representation of skeletal and surface structure is particularly relevant for encoding biological shapes. The basic human form, for example, is characterized not only by a specific axial configuration of limbs but also by the broad convex surface curvature of the head. Composite axial/surface tuning in high-level visual cortex could provide an efficient, flexible basis for representing such biological shapes and encoding the many postural configurations they can adopt. Thus, our results may be relevant to recent studies of anatomical and functional specialization for biological shape representation. Anatomical segregation of visual processing for biological object categories was originally established by fMRI studies of face and body representation in the human brain (Kanwisher, McDermott and Chun, 1997; Downing et al., 2001). Homologous categorical organization in old-world monkeys (Tsao et al., 2003; Moeller, Freiwald and Tsao, 2008) has made it possible to study processing of biological shapes at the level of individual neurons. This work has confirmed the specialization of face modules for face representation (Tsao et al., 2006), and has begun to distinguish which structural and abstract properties of faces are processed at different levels of the face module system (Freiwald and Tsao, 2010). In particular, neurons in the monkey "middle" face module exhibit tuning for partial configurations of facial features, comparable to the tuning for partial configurations of abstract surface and axial features we describe here (Freiwald, Tsao and Livingstone, 2009). These modules are so small that they require fMRI-based targeting for neural recording experiments, so it is unlikely that we sampled extensively from them. However, IT as a whole shows strong evidence of sensitivity to biological categories (Kiani et al., 2007; Kriegeskorte et al., 2008), no doubt reflecting the prevalence and ecological importance of biological shapes in our world. The representation of axial/surface configurations we describe here could provide a structural basis for IT sensitivity to biological categories. Of course, IT represents many other kinds of information about objects, e.g., color (Conway, Moeller and Tsao, 2007; Koida and Komatsu, 2007; Banno et al., 2011), that would not entail tuning for axial or surface structure.

EXPERIMENTAL PROCEDURES

Behavioral Task and Stimulus Presentation

Two head-restrained rhesus monkeys (Macaca mulatta), a 7.2-kg male and a 5.3-kg female, were trained to maintain fixation within 1° (radius) of a 0.1°-diameter spot for 4 seconds to obtain a juice reward. Eye position was monitored with an infrared eye tracker (ISCAN). 3D shape stimuli were rendered with shading and binocular disparity cues using OpenGL. Separate left- and right-eye images were presented via mirrors to convey binocular disparity depth cues. Binocular fusion was verified with a random dot stereogram search task. In each trial, four randomly selected stimuli were flashed one at a time for 750 ms each, with inter-stimulus intervals of 250 ms. All animal procedures were approved by the Johns Hopkins Animal Care and Use Committee and conformed to US National Institutes of Health and US Department of Agriculture guidelines.

Electrophysiological recording

The electrical activity of well-isolated single neurons was recorded with epoxy-coated tungsten electrodes (Microprobe or FHC). We studied 111 neurons from the central/anterior lower bank of the superior temporal sulcus and the lateral convexity of the inferior temporal gyrus (13–19 mm anterior to the interaural line). IT cortex was identified on the basis of structural magnetic resonance images and of the sequence of sulci and neural response characteristics observed while lowering the electrode.

Stimulus construction and morphing

Medial axis stimuli were constructed by randomly connecting 2–8 axial components end-to-end or end-to-side. Each component had a random length, curvature, and radius profile. The radius profile was defined by three random radius values, at the two ends and at the midpoint of the medial axis, and a quadratic function was used to interpolate a smooth profile between these values along the axis. Smooth surface junctions between components were created by interpolation and Gaussian smoothing. During the adaptive stimulus procedure, medial axis stimuli were morphed by randomly adding, subtracting, or replacing axial components, and by changing the length, orientation, curvature, and radius profile of individual components (see Figure S1A).
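
A minimal Python sketch of a single axial component, assuming a planar circular-arc axis (constant curvature) and control radii placed at normalized arc length 0, 0.5 and 1. The function names, parameter ranges and the positive-radius clamp are hypothetical; the text does not say how curvature was parameterized or whether axes were curved in 3D.

```python
import numpy as np

def arc_axis(length, curvature, n_points=50):
    """Sample a planar medial axis of constant curvature (hypothetical helper).

    Returns an (n_points, 2) array; zero curvature gives a straight segment.
    """
    s = np.linspace(0.0, length, n_points)  # arc-length samples
    if abs(curvature) < 1e-12:
        return np.column_stack([s, np.zeros_like(s)])
    theta = curvature * s  # tangent angle grows linearly with arc length
    return np.column_stack([np.sin(theta) / curvature, (1.0 - np.cos(theta)) / curvature])

def radius_profile(r_start, r_mid, r_end, n_points=50, r_min=1e-3):
    """Quadratic radius profile through radii at both ends and the midpoint.

    t is normalized arc length along the axis (0 = start, 1 = end).
    """
    t = np.linspace(0.0, 1.0, n_points)
    # Three points determine a unique quadratic, so this fit interpolates them exactly.
    coeffs = np.polyfit([0.0, 0.5, 1.0], [r_start, r_mid, r_end], deg=2)
    # Assumption: clamp so the parabola never yields a non-positive radius between control points.
    return t, np.maximum(np.polyval(coeffs, t), r_min)

rng = np.random.default_rng(0)
axis = arc_axis(length=rng.uniform(1.0, 3.0), curvature=rng.uniform(-1.0, 1.0))
t, r = radius_profile(*rng.uniform(0.1, 0.5, size=3))
```

Sweeping a circular cross-section of radius r(t) along the axis would give the component's surface; joining components and smoothing the junctions are not shown.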

Each surface stimulus was constructed as an ellipsoidal, polar grid of Non-uniform rational B-splines (NURBS). The latitudinal cross-sections of this grid were assigned random radii, orientations and positions, with constraints on overall size and against self-intersection (Yamane et al., 2008). Local modulations of surface amplitude were defined by sweeping Gaussian profiles along random Bezier curves defined on the surface. During the adaptive stimulus procedure, surface stimuli were morphed by randomly altering the radii, orientations, and positions of the latitudinal cross-sections defining the ellipsoidal mesh or the Bezier curves and Gaussian profiles defining surface amplitude modulations (see Figure S1B).
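
One way to picture a surface amplitude modulation is as a ridge or groove that follows a curve drawn in the surface's (u, v) parameter space. The sketch below assumes a cubic Bézier curve, measures distance in parameter space rather than along the surface, and ignores the longitudinal seam of the polar grid; none of these details are given in the text, and all names are hypothetical. The returned value would be added along the surface normal of the NURBS mesh.

```python
import numpy as np

def cubic_bezier(ctrl, n=200):
    """Sample a cubic Bezier curve from a (4, 2) array of control points."""
    t = np.linspace(0.0, 1.0, n)[:, None]
    p0, p1, p2, p3 = ctrl
    return (1 - t) ** 3 * p0 + 3 * (1 - t) ** 2 * t * p1 + 3 * (1 - t) * t ** 2 * p2 + t ** 3 * p3

def swept_gaussian(u, v, ctrl, amplitude, sigma):
    """Displacement from sweeping a Gaussian cross-section along a Bezier curve.

    u, v: arrays of surface parameter coordinates (same shape).
    Positive amplitude gives a ridge that follows the curve, negative a groove.
    """
    curve = cubic_bezier(np.asarray(ctrl, dtype=float))
    pts = np.stack([u.ravel(), v.ravel()], axis=1)
    # Distance from every surface sample to its nearest sampled curve point.
    d = np.min(np.linalg.norm(pts[:, None, :] - curve[None, :, :], axis=2), axis=1)
    return (amplitude * np.exp(-d ** 2 / (2 * sigma ** 2))).reshape(u.shape)

U, V = np.meshgrid(np.linspace(0, 1, 64), np.linspace(0, 1, 64))
ctrl = [[0.1, 0.2], [0.4, 0.9], [0.6, 0.1], [0.9, 0.8]]
dr = swept_gaussian(U, V, ctrl, amplitude=0.1, sigma=0.05)
```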

Adaptive stimulus procedure

Each neuron was tested with independent lineages of medial axis and surface stimuli (see Figure 1). The first generation of each lineage comprised 20 randomly constructed stimuli. Each subsequent generation included 16 randomly morphed descendants of ancestor stimuli randomly selected from previous generations: 4 from the 90–100% range of the maximum response, 3 from the 70–90% range, 3 from the 50–70% range, 3 from the 30–50% range, and 3 from the 0–30% range. Each subsequent generation also included 4 new, randomly constructed stimuli. This distribution ensured that the adaptive procedure sampled across a wide domain including the peak, shoulders, and boundaries of the neuron's tuning range (see Figure S1C).
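
The ancestor-selection rule can be sketched as follows, assuming non-negative responses, bins expressed as fractions of the maximum response observed so far in the lineage, ancestors drawn with replacement, and empty bins contributing no ancestors. These choices and the function name are illustrative only; the morphing step itself is not shown.

```python
import numpy as np

# (lower, upper, count) bins as fractions of the maximum response so far,
# following the distribution described in the text (16 morphed descendants).
ANCESTOR_BINS = [(0.9, 1.0, 4), (0.7, 0.9, 3), (0.5, 0.7, 3), (0.3, 0.5, 3), (0.0, 0.3, 3)]
N_NEW_RANDOM = 4  # newly constructed stimuli per generation

def select_ancestors(responses, rng):
    """Return indices of previously tested stimuli to morph into the next generation.

    responses: 1D array of responses to all stimuli tested so far in this lineage.
    """
    frac = responses / responses.max()
    chosen = []
    for lo, hi, count in ANCESTOR_BINS:
        # Half-open bins, except the top bin, which also includes the maximum itself.
        in_bin = (frac >= lo) & ((frac < hi) | (hi == 1.0))
        pool = np.flatnonzero(in_bin)
        if pool.size == 0:
            continue  # assumption: an empty bin simply contributes no ancestors
        # Assumption: sampling with replacement, so one ancestor may yield several descendants.
        chosen.extend(rng.choice(pool, size=count, replace=True))
    return np.array(chosen)
```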

After 5 generations of medial axis and surface stimuli (100 stimuli in each lineage), a Wilcoxon rank sum test applied to the 10 highest responses in each domain was used to determine which produced higher responses. For whichever domain produced higher responses, the original lineage was continued for 5 more generations and a second lineage in the same domain was initiated and tested through 5–10 generations. This protocol allowed us to compare responses across domains (based on the first 5 generations) but also provided a second, independent lineage to constrain and cross-validate tuning models in the higher-response domain. The total number of stimuli used to test each neuron ranged from 400–500, comprising 128–148 randomly generated stimuli and 272–352 adaptively modified stimuli.
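
The stimulus totals follow directly from the generation sizes in the adaptive procedure: 20 stimuli per generation, of which 4 are newly constructed after the first generation. A short arithmetic check, assuming the lower-response domain stops at 5 generations, the original higher-response lineage runs for 10, and the second higher-response lineage runs for 5 or 10 (the rank-sum comparison itself could be run with `scipy.stats.ranksums`, not shown):

```python
def lineage_counts(n_generations, first_gen=20, per_gen=20, new_random_per_gen=4):
    """Return (total, random, adaptive) stimulus counts for one lineage."""
    total = first_gen + per_gen * (n_generations - 1)
    random_count = first_gen + new_random_per_gen * (n_generations - 1)
    return total, random_count, total - random_count

def neuron_budget(second_lineage_generations):
    """Totals for one neuron: 5 generations in the lower-response domain,
    10 in the original higher-response lineage, plus a second lineage
    in the higher-response domain."""
    parts = [lineage_counts(5), lineage_counts(10), lineage_counts(second_lineage_generations)]
    return tuple(sum(p[k] for p in parts) for k in range(3))

for g in (5, 10):
    print(g, neuron_budget(g))
# 5 (400, 128, 272)
# 10 (500, 148, 352)
```

Both extremes reproduce the quoted ranges of 400–500 total, 128–148 random, and 272–352 adaptively modified stimuli.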

Medial axis template models

For each candidate medial axis template, geometric similarity to a given shape was based on the closest matching substructure within that shape. This matching substructure was required to have the same axial topology (pattern of connected components). Most stimuli had one of four topologies: linear, Y/T-junction, X-junction, or two Y/T-junctions. The candidate template and the potentially matching substructure were densely sampled at points along each component. Points from the template and the matching substructure were compared for similarity of 3D position (relative to object center) and 3D orientation. The final similarity score was based on the product of these differences, averaged across points (see Supplemental Experimental Procedures and Figure S2).
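
A hedged sketch of the point-wise comparison. It assumes the template and the candidate substructure have already been sampled into corresponding points, and it converts each position and orientation difference into a Gaussian similarity before taking the product; that conversion, the treatment of axis orientation as sign-agnostic, and the tuning widths are all assumptions (the exact form is in the Supplemental Experimental Procedures).

```python
import numpy as np

def axial_similarity(tmpl_pos, tmpl_dir, match_pos, match_dir,
                     sigma_pos=0.5, sigma_ang=np.pi / 6):
    """Similarity between a medial axis template and one matched substructure.

    *_pos: (n, 3) sampled axis points, relative to object center.
    *_dir: (n, 3) unit tangent vectors at those points.
    Points are assumed to be in one-to-one correspondence.
    """
    pos_err = np.linalg.norm(tmpl_pos - match_pos, axis=1)
    cos_ang = np.clip(np.sum(tmpl_dir * match_dir, axis=1), -1.0, 1.0)
    ang_err = np.arccos(np.abs(cos_ang))  # assumption: axis orientation ignores direction sign
    # Hypothetical Gaussian conversion of each difference; multiply per point, then average.
    per_point = (np.exp(-pos_err ** 2 / (2 * sigma_pos ** 2))
                 * np.exp(-ang_err ** 2 / (2 * sigma_ang ** 2)))
    return float(per_point.mean())

def best_match(tmpl_pos, tmpl_dir, candidates):
    """Score a template against every same-topology substructure; keep the closest."""
    return max(axial_similarity(tmpl_pos, tmpl_dir, p, d) for p, d in candidates)
```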

Surface template models

We decomposed all shape stimuli into surface fragments with approximately constant surface curvatures and surface normal orientations (Yamane et al., 2008). Surface template models were configurations of 1–6 surface fragments. For a given shape, we measured similarity of the closest matching surface fragment configuration within that shape, based on 3D positions, surface normal orientations, and principal surface curvatures of the component fragments (see Supplemental Experimental Procedures and Figure S3). We tested all possible surface template models derived from the 30 highest response stimuli and selected the model with the highest correlation between similarity and neural response across all stimuli. In these analyses, as in our previous study (Yamane et al., 2008), highest correlations were obtained with 2-fragment models on average, and the results reported here are based on these models.
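
The surface-template search can be sketched as below. Each fragment is represented as a (position, unit normal, principal curvatures) tuple; the Gaussian tuning widths, the product over fragments, and the exhaustive matching over ordered fragment subsets are assumptions for illustration. Candidate 2-fragment templates could be enumerated from the 30 highest-response stimuli with `itertools.combinations`.

```python
import itertools
import numpy as np

def fragment_similarity(f, g, s_pos=0.5, s_ang=np.pi / 4, s_curv=0.5):
    """Gaussian similarity between two fragments (pos (3,), normal (3,), curv (2,))."""
    d_pos = np.linalg.norm(f[0] - g[0])
    d_ang = np.arccos(np.clip(np.dot(f[1], g[1]), -1.0, 1.0))
    d_curv = np.linalg.norm(f[2] - g[2])
    return np.exp(-d_pos ** 2 / (2 * s_pos ** 2) - d_ang ** 2 / (2 * s_ang ** 2)
                  - d_curv ** 2 / (2 * s_curv ** 2))

def template_similarity(template, shape):
    """Best match of a multi-fragment template to any same-size fragment set in a shape."""
    best = 0.0
    for combo in itertools.permutations(shape, len(template)):
        score = np.prod([fragment_similarity(t, s) for t, s in zip(template, combo)])
        best = max(best, score)
    return best

def select_template(candidates, shapes, responses):
    """Pick the candidate whose similarity scores correlate best with the responses."""
    best_r, best_t = -np.inf, None
    for t in candidates:
        sims = np.array([template_similarity(t, s) for s in shapes])
        r = np.corrcoef(sims, responses)[0, 1]
        if r > best_r:  # NaN (constant similarity) never wins
            best_r, best_t = r, t
    return best_t, best_r
```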

Composite template models

Composite models were generated by testing all combinations of the 10 highest correlation medial axis templates and the 10 highest correlation surface templates, and in each case fitting the following model by maximizing the correlation between CompositeSimilarity and response rate, using the MATLAB function lsqcurvefit:

$$\mathrm{CompositeSimilarity} = (1 - x)\left[a\,S_m + (1 - a)\,S_s\right] + x\,S_m S_s$$

where Sm is the axial similarity score for a given stimulus, Ss is the surface similarity score, a is the fitted relative weight for the linear axial term, (1–a) is the weight for the surface term, x is the fitted relative weight for the nonlinear product term, and (1–x) is the combined weight for the linear terms. The values of a and x were constrained to a range of 0–1.
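
Reading the weight definitions above, the composite model is (1 − x)[a·Sm + (1 − a)·Ss] + x·Sm·Ss. The Python sketch below fits it, but instead of MATLAB's lsqcurvefit (a bounded least-squares solver) it swaps in an exhaustive grid search over the constrained range 0 ≤ a, x ≤ 1 that directly maximizes Pearson correlation with the responses. The grid resolution, function names, and synthetic data are assumptions.

```python
import numpy as np

def composite_similarity(s_m, s_s, a, x):
    """(1 - x) * [a*Sm + (1 - a)*Ss] + x * Sm*Ss, evaluated elementwise over stimuli."""
    return (1 - x) * (a * s_m + (1 - a) * s_s) + x * s_m * s_s

def fit_composite(s_m, s_s, responses, n_grid=21):
    """Grid search over a, x in [0, 1] for the highest Pearson correlation.

    Returns (r, a, x). Substitutes a grid search for a least-squares solver.
    """
    best = (-np.inf, None, None)
    grid = np.linspace(0.0, 1.0, n_grid)
    for a in grid:
        for x in grid:
            pred = composite_similarity(s_m, s_s, a, x)
            r = np.corrcoef(pred, responses)[0, 1]
            if r > best[0]:  # NaN (constant prediction) never wins
                best = (r, a, x)
    return best

rng = np.random.default_rng(0)
s_m, s_s = rng.uniform(size=200), rng.uniform(size=200)
responses = composite_similarity(s_m, s_s, a=0.3, x=0.4)  # synthetic, noise-free
r, a_hat, x_hat = fit_composite(s_m, s_s, responses)
```

Because the three coefficients (1 − x)a, (1 − x)(1 − a) and x always sum to 1, maximizing correlation still pins down a unique (a, x) for generic data, even though correlation ignores overall scale.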

Statistical Verification of model fits

To measure cross-prediction of composite models between lineages, for each source lineage we examined all 100 combinations of the 10 axial templates and 10 surface templates showing the highest predictive power in the source lineage. Each of these 100 combinations was tested by measuring the correlation between predicted and observed responses in the independent test lineage. Given two source lineages, a total of 200 candidate models were tested. A significance threshold of p < 0.05, corrected for 200 comparisons, required an actual threshold of p < 0.00025 (Figure S5A).
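
The corrected threshold matches a Bonferroni correction over all candidate models (the text does not name the method, but the numbers agree): 10 × 10 template combinations from each of two source lineages.

$$p_{\mathrm{corrected}} = \frac{\alpha}{m} = \frac{0.05}{2 \times 10 \times 10} = \frac{0.05}{200} = 2.5 \times 10^{-4}$$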

For the models generated from the overall dataset comprising both lineages, we used a two-stage cross-validation procedure at a significance threshold of p < 0.005 (Figure S5B). This more stringent threshold was chosen because the more inclusive source dataset could generate more accurate models, and because it is closer to the strict corrected threshold (p < 0.00025) used in the cross-lineage prediction test described above. In the “outer loop” of this procedure, we held out a random 20% of stimuli (from the combined, two-lineage dataset) for final model testing. This was done five times, as is standard in 5-fold, 20% holdout cross-validation. In the “inner loop,” we again held out 20% of the remaining stimuli for testing the response prediction performance of candidate model templates, which were drawn only from stimuli remaining after both holdouts. This inner loop was also iterated five times (within each iteration of the outer loop). We selected the template with the best response prediction performance on the inner-loop holdout stimuli, then measured the performance of this template model on the outer-loop holdout stimuli. Thus, model selection and final model testing were based on independent data. The values reported for examples in the main text, and shown in the Figure S5B distribution, are averages across the five outer-loop results for each neuron. In applying this procedure to the composite model, each inner-loop test of a candidate model required fitting two variables to define the relative weights of the axial, surface, and product terms. Since this fitting was based solely on the inner-loop holdout stimuli, the final test on the outer-loop holdout stimuli was not subject to over-fitting.
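
The two-stage procedure can be sketched as nested 5-fold cross-validation. In this sketch each candidate template is identified with a stimulus, and `sim[i, j]` is a hypothetical precomputed similarity of stimulus i to the template derived from stimulus j. Folds are disjoint shuffled splits, the best template is pooled across the five inner iterations, and the composite-weight fitting step is omitted; all of these are assumptions.

```python
import numpy as np

def _corr(a, b):
    """Pearson correlation between two 1D arrays."""
    return np.corrcoef(a, b)[0, 1]

def nested_cv(sim, responses, n_folds=5, seed=0):
    """Mean outer-holdout correlation of templates selected on inner holdouts."""
    rng = np.random.default_rng(seed)
    outer_folds = np.array_split(rng.permutation(len(responses)), n_folds)
    outer_scores = []
    for k, outer_test in enumerate(outer_folds):
        remaining = np.concatenate([f for i, f in enumerate(outer_folds) if i != k])
        inner_folds = np.array_split(rng.permutation(remaining), n_folds)
        best_r, best_template = -np.inf, None
        for m, inner_test in enumerate(inner_folds):
            # Candidate templates come only from stimuli outside both holdouts.
            train = np.concatenate([f for i, f in enumerate(inner_folds) if i != m])
            for j in train:
                r = _corr(sim[inner_test, j], responses[inner_test])
                if r > best_r:
                    best_r, best_template = r, j
        # Final test of the selected template on stimuli never used for selection.
        outer_scores.append(_corr(sim[outer_test, best_template], responses[outer_test]))
    return float(np.mean(outer_scores))
```

Usage sketch: with `sim` built from Gaussian distances between 1D stimulus features and `responses = sim[:, 50]`, the selected templates lie at or near stimulus 50 and the returned score is close to 1.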

Response invariance and axial tuning consistency

As a measure of response invariance across surface shape changes (Figure 6E, horizontal axis), we first normalized and sorted responses across the 7 stimulus conditions, and then calculated:

where Ri is the normalized response to the ith stimulus and Ki is the rank order (from 1 to 7) of the ith stimulus. Inv was rescaled from its range of 1–140 to a range of 0–1. Larger values indicate greater response invariance across the surface shape changes.

Our measure of axial tuning consistency (Figure 6E, vertical axis) was the fraction of variance explained by the first component of a singular value decomposition of the 3×7 response matrix (Figure 6B).
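
The axial tuning consistency measure reduces to a ratio of squared singular values. The sketch assumes rows index the 3 axial configurations and columns the 7 surface variants, and that the matrix is not mean-centered before the decomposition; the text specifies neither. If a neuron's preference among axial configurations is the same for every surface variant, the matrix is rank 1 (each column is a scaled copy of one axial tuning profile) and the measure equals 1.

```python
import numpy as np

def axial_tuning_consistency(responses):
    """Fraction of variance captured by the first singular component.

    responses: (3, 7) matrix; assumed rows = axial configurations,
    columns = surface variants. No mean-centering is applied (assumption).
    """
    s = np.linalg.svd(np.asarray(responses, dtype=float), compute_uv=False)
    return float(s[0] ** 2 / np.sum(s ** 2))

separable = np.outer([1.0, 2.0, 3.0], [1.0, 1.0, 2.0, 3.0, 1.0, 0.5, 2.0])
consistency = axial_tuning_consistency(separable)  # rank 1, so consistency is 1 up to rounding
```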

Supplementary Material

HIGHLIGHTS

First demonstration of medial axis shape coding in ventral pathway

IT neurons represent configurations of 1–12 axial components

Most IT neurons represent both medial axis and surface shape information

ACKNOWLEDGMENTS

We thank Zhihong Wang, William Nash, William Quinlan, Lei Hao, and Virginia Weeks for technical assistance. This work was supported by NIH Grant #EY016711 and NSF Grant #0941463.

SUPPLEMENTAL INFORMATION

Supplemental information includes seven figures and Supplemental Experimental Procedures and can be found with this article online at

REFERENCES

- Arcelli C, Cordella L, Levialdi S. From local maxima to connected skeletons. IEEE Transactions On Pattern Analysis and Machine Intelligence. 1981;3:134–143. doi: 10.1109/tpami.1981.4767071.

- Baker CI, Behrmann M, Olson CR. Impact of learning on representation of parts and wholes in monkey inferotemporal cortex. Nat. Neurosci. 2002;5:1210–1216. doi: 10.1038/nn960.

- Banno T, Ichinohe N, Rockland KS, Komatsu H. Reciprocal connectivity of identified color-processing modules in the monkey inferior temporal cortex. Cereb. Cortex. 2011;21:1295–1310. doi: 10.1093/cercor/bhq211.

- Biederman I. Recognition-by-components: a theory of human image understanding. Psychol. Rev. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Binford TO. Visual perception by computer; Paper presented at IEEE Systems Science and Cybernetics Conference; Miami, FL. 1971.

- Blum H. Biological shape and visual science (Part I). Journal of Theoretical Biology. 1973;38:205–287. doi: 10.1016/0022-5193(73)90175-6.

- Brincat SL, Connor CE. Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nat. Neurosci. 2004;7:880–886. doi: 10.1038/nn1278.

- Brincat SL, Connor CE. Dynamic shape synthesis in posterior inferotemporal cortex. Neuron. 2006;49:17–24. doi: 10.1016/j.neuron.2005.11.026.

- Burbeck C, Pizer S. Object representation by cores - identifying and representing primitive spatial regions. Vision Res. 1995;35:1917–1930. doi: 10.1016/0042-6989(94)00286-u.

- Cao Y, Grossberg S. A laminar cortical model of stereopsis and 3D surface perception: closure and da Vinci stereopsis. Spat Vis. 2005;18:515–578. doi: 10.1163/156856805774406756.

- Carlson ET, Rasquinha RJ, Zhang K, Connor CE. A Sparse Object Coding Scheme in Area V4. Curr. Biol. 2011;21:288–293. doi: 10.1016/j.cub.2011.01.013.

- Conway BR, Moeller S, Tsao DY. Specialized color modules in macaque extrastriate cortex. Neuron. 2007;56:560–573. doi: 10.1016/j.neuron.2007.10.008.

- Demirci MF, Shokoufandeh A, Keselman Y, Bretzner L, Dickinson SJ. Object Recognition as Many-to-Many Feature Matching. International Journal of Computer Vision. 2006;69:203–222.

- Demirci F, Shokoufandeh A, Dickinson S. Skeletal shape abstraction from examples. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2009;31:944–952. doi: 10.1109/TPAMI.2008.267.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Feldman J, Singh M. Bayesian estimation of the shape skeleton. Proc. Natl. Acad. Sci. USA. 2006;103:18014–18019. doi: 10.1073/pnas.0608811103.

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Freiwald WA, Tsao DY, Livingstone MS. A face feature space in the macaque temporal lobe. Nat. Neurosci. 2009;12:1187–1196. doi: 10.1038/nn.2363.

- Freiwald WA, Tsao DY. Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science. 2010;330:845–851. doi: 10.1126/science.1194908.

- Fujita I, Tanaka K, Ito M, Cheng K. Columns for visual features of objects in monkey inferotemporal cortex. Nature. 1992;360:343–346. doi: 10.1038/360343a0.

- Gallant JL, Braun J, Van Essen DC. Selectivity for polar, hyperbolic, and Cartesian gratings in macaque visual cortex. Science. 1993;259:100–103. doi: 10.1126/science.8418487.

- Giblin P, Kimia B. On the local form and transitions of symmetry sets, medial axes, and shocks. International Journal of Computer Vision. 2003;54:143–156.

- Gross CG, Rocha-Miranda CE, Bender DB. Visual properties of neurons in inferotemporal cortex of the Macaque. J. Neurophysiol. 1972;35:96–111. doi: 10.1152/jn.1972.35.1.96.

- Grossberg S. Laminar cortical dynamics of visual form perception. Neural Netw. 2003;16:925–931. doi: 10.1016/S0893-6080(03)00097-2.

- Grossberg S, Yazdanbakhsh A. Laminar cortical dynamics of 3D surface perception: stratification, transparency, and neon color spreading. Vision Res. 2005;45:1725–1743. doi: 10.1016/j.visres.2005.01.006.

- He ZJ, Nakayama K. Surfaces versus features in visual search. Nature. 1992;359:231–233. doi: 10.1038/359231a0.

- He ZJ, Nakayama K. Apparent motion determined by surface layout not by disparity or three-dimensional distance. Nature. 1994;367:173–175. doi: 10.1038/367173a0.

- Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast readout of object identity from macaque inferior temporal cortex. Science. 2005;310:863–866. doi: 10.1126/science.1117593.

- Janssen P, Vogels R, Orban GA. Three-dimensional shape coding in inferior temporal cortex. Neuron. 2000a;27:385–397. doi: 10.1016/s0896-6273(00)00045-3.

- Janssen P, Vogels R, Orban GA. Selectivity for 3D shape that reveals distinct areas within macaque inferior temporal cortex. Science. 2000b;288:2054–2056. doi: 10.1126/science.288.5473.2054.

- Johansson G. Visual perception of biological motion and a model for its analysis. Perception & Psychophysics. 1973;14:201–211.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kiani R, Esteky H, Mirpour K, Tanaka K. Object category structure in response patterns of neuronal population in monkey inferior temporal cortex. J. Neurophysiol. 2007;97:4296–4309. doi: 10.1152/jn.00024.2007.

- Kimia BB. On the role of medial geometry in human vision. J. Physiol. Paris. 2003;97:155–190. doi: 10.1016/j.jphysparis.2003.09.003.

- Kobatake E, Tanaka K. Neuronal selectivities to complex object features in the ventral visual pathway of the macaque cerebral cortex. J. Neurophysiol. 1994;71:856–867. doi: 10.1152/jn.1994.71.3.856.

- Koenderink JJ. What does the occluding contour tell us about solid shape? Perception. 1984;13:321–330. doi: 10.1068/p130321.

- Koida K, Komatsu H. Effects of task demands on the responses of color-selective neurons in the inferior temporal cortex. Nat Neurosci. 2007;10:108–116. doi: 10.1038/nn1823.

- Kourtzi Z, Connor CE. Neural representations for object perception: structure, category, and adaptive coding. Annu. Rev. Neurosci. 2011;34:45–67. doi: 10.1146/annurev-neuro-060909-153218.

- Kovács I, Julesz B. Perceptual sensitivity maps within globally defined visual shapes. Nature. 1994;370:644–646. doi: 10.1038/370644a0.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini PA. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron. 2008;60:1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- Lee TS, Mumford D, Romero R, Lamme VA. The role of the primary visual cortex in higher level vision. Vision Res. 1998;38:2429–2454. doi: 10.1016/s0042-6989(97)00464-1.

- Leopold DA, Bondar IV, Giese MA. Norm-based face encoding by single neurons in the monkey inferotemporal cortex. Nature. 2006;442:572–575. doi: 10.1038/nature04951.

- Leymarie FF, Levine MD. Simulating the Grassfire Transform Using an Active Contour Model. IEEE Trans. Pattern Anal. Mach. Intell. 1992;14:56–75.

- Leyton M. A Generative Theory of Shape. Springer-Verlag; Berlin: 2001.

- Logothetis NK, Pauls J, Poggio T. Shape representation in the inferior temporal cortex of monkeys. Curr. Biol. 1995;5:552–563. doi: 10.1016/s0960-9822(95)00108-4.

- Logothetis NK, Pauls J. Psychophysical and physiological evidence for viewer-centered object representations in the primate. Cereb. Cortex. 1995;5:270–288. doi: 10.1093/cercor/5.3.270.

- Marr D, Nishihara HK. Representation and recognition of the spatial organization of three-dimensional shapes. Proc. R. Soc. Lond. B Biol. Sci. 1978;200:269–294. doi: 10.1098/rspb.1978.0020.

- Moeller S, Freiwald WA, Tsao DY. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science. 2008;320:1355–1359. doi: 10.1126/science.1157436.

- Nakayama K, He ZJ, Shimojo S. Visual surface representation: a critical link between lower-level and higher level vision. In: Kosslyn SM, Osherson DN, editors. Visual Cognition. MIT Press; Cambridge, MA: 1995. pp. 1–70.

- Ogniewicz R, Ilg M. Voronoi Skeletons: Theory and Applications; Proc. Conf. on Computer Vision and Pattern Recognition; 1992. pp. 63–69.

- Pasupathy A, Connor CE. Responses to contour features in macaque area V4. J. Neurophysiol. 1999;82:2490–2502. doi: 10.1152/jn.1999.82.5.2490.

- Pasupathy A, Connor CE. Shape representation in area V4: position-specific tuning for boundary conformation. J. Neurophysiol. 2001;86:2505–2519. doi: 10.1152/jn.2001.86.5.2505.

- Pasupathy A, Connor CE. Population coding of shape in area V4. Nat. Neurosci. 2002;5:1332–1338. doi: 10.1038/nn972.

- Pizer SM, Oliver WR, Bloomberg SH. Hierarchical Shape Description Via the Multiresolution Symmetric Axis Transform. IEEE Trans. Pattern Anal. Mach. Intell. 1987;9:505–511. doi: 10.1109/tpami.1987.4767938.

- Pizer SM, Fletcher PT, Joshi SC, Thall A, Chen JZ, Fridman Y, Fritsch DS, Gash AG, Glotzer JM, Jiroutek MR, Lu C, Muller KE, Tracton G, Yushkevich PA, Chaney EL. Deformable M-Reps for 3D Medical Image Segmentation. International Journal of Computer Vision. 2003;55:85–106. doi: 10.1023/a:1026313132218.

- Rollenhagen JE, Olson CR. Mirror-image confusion in single neurons of the macaque inferotemporal cortex. Science. 2000;287:1506–1508. doi: 10.1126/science.287.5457.1506.

- Rom H, Medioni GG. Hierarchical Decomposition and Axial Shape Description. IEEE Trans. Pattern Anal. Mach. Intell. 1993;15:973–981.

- Sebastian TB, Klein PN, Kimia BB. Recognition of Shapes by Editing Shock Graphs; IEEE International Conference on Computer Vision; 2001. pp. 755–762.

- Sebastian TB, Klein PN, Kimia BB. Recognition of Shapes by Editing Their Shock Graphs. IEEE Trans. Pattern Anal. Mach. Intell. 2004;26:550–571. doi: 10.1109/TPAMI.2004.1273924.

- Siddiqi K, Tresness KJ, Kimia BB. Parts of visual form: psychophysical aspects. Perception. 1996;25:399–424. doi: 10.1068/p250399.

- Siddiqi K, Shokoufandeh A, Dickinson SJ, Zucker SW. Shock Graphs and Shape Matching. International Journal of Computer Vision. 1999;35:13–32.

- Siddiqi K, Kimia BB, Tannenbaum A, Zucker SW. On the psychophysics of the shape triangle. Vision Res. 2001;41:1153–1178. doi: 10.1016/s0042-6989(00)00274-1.

- Tsao DY, Freiwald WA, Knutsen TA, Mandeville JB, Tootell RBH. Faces and objects in the macaque cerebral cortex. Nat. Neurosci. 2003;6:989–995. doi: 10.1038/nn1111.

- Tsao DY, Freiwald WA, Tootell RBH, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- Tsunoda K, Yamane Y, Nishizaki M, Tanifuji M. Complex objects are represented in macaque inferotemporal cortex by the combination of feature columns. Nat. Neurosci. 2001;4:832–838. doi: 10.1038/90547.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of Visual Behaviour. MIT Press; Cambridge, MA: 1982. pp. 549–586.

- Wang X, Burbeck CA. Scaled medial axis representation: evidence from position discrimination task. Vision Res. 1998;38:1947–1959. doi: 10.1016/s0042-6989(97)00299-x.

- Yamane Y, Carlson ET, Bowman KC, Wang Z, Connor CE. A neural code for three-dimensional object shape in macaque inferotemporal cortex. Nat. Neurosci. 2008;11:1352–1360. doi: 10.1038/nn.2202.

- Zhu SC, Yuille AL. FORMS: A Flexible Object Recognition and Modeling System. International Journal of Computer Vision. 1996;20:187–212.

- Zhu SC. Stochastic Jump-Diffusion Process for Computing Medial Axes in Markov Random Fields. IEEE Trans. Pattern Anal. Mach. Intell. 1999;21:1158–1169.
