Abstract

Management continuity, operationally defined as “the extent to which services delivered by different providers are timely and complementary such that care is experienced as connected and coherent,” is a core attribute of primary healthcare. Continuity, as experienced by the patient, is the result of good care coordination or integration.

Objective:

To provide insight into how well management continuity is measured in validated coordination or integration subscales of primary healthcare instruments.

Method:

Relevant subscales from the Primary Care Assessment Survey (PCAS), the Primary Care Assessment Tool – Short Form (PCAT-S), the Components of Primary Care Index (CPCI) and the Veterans Affairs National Outpatient Customer Satisfaction Survey (VANOCSS) were administered to 432 adult respondents who had at least one healthcare contact with a provider other than their family physician in the previous 12 months. Subscales were examined descriptively and by correlation, factor and item response theory analyses. Because the VANOCSS elicits coordination problems and is scored dichotomously, we used logistic regression to examine how the evaluative subscales relate to reported problems.

Results:

Most responses to the PCAS, PCAT-S and CPCI subscales were positive, yet 83% of respondents reported having one or more problems on the VANOCSS Overall Coordination subscale and 41% on the VANOCSS Specialist Access subscale. Exploratory factor analysis suggests two distinct factors. The first (eigenvalue=6.98) is coordination actions by the primary care physician in transitioning patient care to other providers (PCAS Integration subscale and most of the PCAT-S Coordination subscale). The second (eigenvalue=1.20) is efforts by the primary care physician to create coherence between different visits both within and outside the regular doctor's office (CPCI Coordination subscale). The PCAS Integration subscale was most strongly associated with lower likelihood of problems reported on the VANOCSS subscales.

Conclusion:

Ratings of management continuity correspond only modestly to reporting of coordination problems, possibly because they rate only the primary care physician, whereas patients experience problems across the entire system. The subscales were developed as measures of integration and provider coordination and do not capture the patient's experience of connectedness and coherence.


Continuity of care, often invoked as a characteristic of good care delivery, has different meanings in different healthcare disciplines, but all recognize three dimensions: relational, informational and management continuity (Haggerty et al. 2003).

Background

Conceptualizing management continuity

Management continuity as a characteristic of care was proposed in 2001 in an endeavour to clarify and harmonize the different meanings of continuity of care (Reid et al. 2002). Our consensus process with primary healthcare (PHC) experts across Canada (Haggerty et al. 2007) defined management continuity as “the extent to which services delivered by different providers are timely and complementary such that care is experienced as connected and coherent.” There was unanimous agreement that, while management continuity is not specific to PHC, it is an essential PHC attribute. In disease management and nursing care, continuity refers to the linking of care provided by different providers, a notion recognized in family medicine and general practice as coordination. However, coordination refers to exchanges and collaboration between providers, much of which is invisible to patients. We propose that patients experience coordination as management continuity (Reid et al. 2002; Shortell et al. 1996).

PHC reform has targeted increased service integration and multidisciplinary coordination. Program evaluators therefore need sound evidence to guide their selection of tools that measure management continuity.

Evaluating management continuity

Management continuity can be evaluated from the patient or provider perspective. The reference to services being “experienced as connected and coherent” clearly pertains to the patient's perspective, while providers may be best placed to assess timeliness and complementarity of services.

Various validated instruments that evaluate PHC from the user's perspective contain subscales addressing coordination of care, which we mapped to management continuity. From the candidate instruments, we selected six that are in the public domain and that we believe to be most relevant for Canada. In this paper, we compare the equivalence of management continuity subscales from four instruments and determine whether they appear to be measuring the same construct. Where analysis suggested more than one factor or construct, we also examine how items capture different elements of the operational definition. Finally, we examine how well individual items perform in measuring the constructs that emerge across instruments. This analysis provides insight into how well different subscales fit the construct of management continuity according to our operational definition and offers guidance to evaluators in selecting appropriate measures.

Method

The method is described in detail elsewhere in this special issue of the journal (Haggerty, Burge et al. 2011). Briefly: six instruments that evaluate PHC from the patient's perspective were administered to 645 healthcare users balanced by English/French language, rural/urban location, low/high level of education and poor/average/excellent overall PHC experience.

Measure description

There were five relevant subscales in four instruments. They are described briefly here in the order in which they appeared in the questionnaire. Note the slight differences in reference points and eligible respondents.

The Primary Care Assessment Survey (PCAS) (Safran et al. 1998) has a six-item Integration subscale that asks patients to rate on a six-point Likert scale (“1=very poor” to “6=excellent”) different aspects of “times their doctor recommended they see a different doctor for a specific health problem”; they are to be answered only by those whose “doctor ever recommended … a different doctor for a specific health problem” (emphasis ours).

The Primary Care Assessment Tool – Short Form, adult (PCAT-S) (Shi et al. 2001) has a four-item Coordination subscale that elicits responses on a four-point Likert scale (“definitely not” to “definitely”) to questions about the primary care provider's coordination behaviours; the subscale was completed only by those who “ever had a visit to any kind of specialist or special service.”

The Components of Primary Care Index (CPCI) (Flocke 1997) has an eight-item Coordination of Care subscale that uses a six-point semantic differential response scale (poles of “1=strongly disagree” and “6=strongly agree”) to elicit agreement with various statements about the “regular doctor”; it was completed by all respondents whether or not they had seen other providers.

Finally, the Veterans Affairs National Outpatient Customer Satisfaction Survey (VANOCSS) (Borowsky et al. 2002), informed by the Picker Institute health surveys (Gerteis et al. 1993), has two relevant subscales. Both elicit the frequency of difficulties encountered using Likert-type response options but are scored dichotomously. The six-item Overall Coordination subscale pertains to “all the healthcare providers your regular doctor has recommended you see” and was answered only by respondents who had seen more than one provider in the last 12 months. The four-item Specialty Access subscale refers to access to and care from specialists; we indicated that it should be answered only by those who saw a specialist in the last 12 months.

Because the provider reference and eligible respondents were not the same for each subscale, we defined a common subgroup on which to analyze instruments: respondents who had seen more than one provider in the previous 12 months. Additionally, these instruments used two different measurement approaches. The PCAS, PCAT-S and CPCI are evaluative subscales based on the classic approach in which indicator items reflect an unobserved (latent) variable. Operationally, each subscale is expressed as a continuous score by averaging the values of individual items, so that high scores indicate the best management continuity. In contrast, the VANOCSS subscales use a benchmarking approach, in which the implicit performance target is to minimize the proportion of patients who experience any problems in healthcare delivery. The items are scored dichotomously, and the subscale is represented either dichotomously (presence of any problem) or as the sum of problems encountered (e.g., 0 to 6 problems with coordination). A score of 0 (zero) represents the best management continuity.
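The two scoring approaches can be sketched in Python as below. This is a minimal illustration, not the instruments' official scoring code: it assumes that the 0-to-10 normalization used later in Table 2 is a linear rescaling of the item mean, (mean − min) / (max − min) × 10, which is consistent with the reported quartiles but is our assumption, and that VANOCSS responses have already been dichotomized into problem/no problem. The function names and the example respondent are hypothetical.

```python
from statistics import mean

def normalized_score(items, scale_min, scale_max):
    """Evaluative subscale (PCAS, PCAT-S, CPCI): average the item values and
    rescale linearly to 0-10, so that 10 = best management continuity.
    Assumes all items are answered and coded in the positive direction
    (reverse-worded items such as CP_coo8 must be reversed first)."""
    m = mean(items)
    return (m - scale_min) / (scale_max - scale_min) * 10

def problem_count(problem_flags):
    """Benchmarking subscale (VANOCSS): each item is already dichotomized
    (True = problem reported); the subscale is the number of problems,
    where 0 = best management continuity."""
    return sum(1 for flag in problem_flags if flag)

def any_problem(problem_flags):
    """Dichotomous version of a VANOCSS subscale: presence of any problem."""
    return problem_count(problem_flags) > 0

# Hypothetical respondent: six PCAS Integration items on the 1-6 scale.
pcas = [5, 5, 4, 4, 5, 5]
print(round(normalized_score(pcas, 1, 6), 2))    # mean 4.667 -> 7.33
# Hypothetical VANOCSS Overall Coordination flags (six items).
flags = [True, False, False, False, True, False]
print(problem_count(flags), any_problem(flags))  # 2 True
```

The key design difference is the direction of "good": a high normalized score is best on the evaluative subscales, whereas zero problems is best on the VANOCSS.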

Analytic strategy

We largely applied the same analytic strategy that we used for all the PHC attributes (Santor et al. 2011), but the different scoring approach of the VANOCSS required some modification. For correlations and factor analysis, we treated each VANOCSS subscale as a single item representing the sum of reported problems. We also explored factor analysis with items left in their original frequency response options but found that the recommended dichotomous scoring performed better.

Finally, to account for the reporting versus evaluative approaches to measurement, we used binary logistic regression modelling to examine whether, in separate models, scores of the PCAS, PCAT-S and CPCI subscales predicted the presence of any reported problems with the VANOCSS Overall Coordination and Specialist Access subscales. We also explored the associations using ordinal regression models (number of problems) to determine whether there were ordinal effects.
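As an illustration of this kind of model, the NumPy sketch below fits a binary logistic regression by iteratively reweighted least squares (Newton-Raphson) on simulated data, not study data. The predictor is entered as a deficit (10 − normalized score), so that exp(β) is directly the odds ratio per unit decrease in the subscale score. The function name and simulation settings are assumptions for illustration; in practice a statistical package would normally be used.

```python
import numpy as np

def fit_logistic(x, y, n_iter=25):
    """Binary logistic regression of y (0/1) on a single predictor x,
    fitted by iteratively reweighted least squares (Newton-Raphson).
    Returns (intercept, slope) on the log-odds scale."""
    X = np.column_stack([np.ones_like(x), x])
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))   # fitted probabilities
        w = p * (1.0 - p)                       # IRLS weights
        grad = X.T @ (y - p)                    # score vector
        hess = X.T @ (X * w[:, None])           # information matrix
        step = np.linalg.solve(hess, grad)
        beta = beta + step
        if np.max(np.abs(step)) < 1e-10:
            break
    return beta

# Simulated (hypothetical) data: normalized subscale scores on 0-10 and a
# "true" odds ratio of 2.2 per one-unit decrease in the score.
rng = np.random.default_rng(0)
score = rng.uniform(0, 10, size=2000)
deficit = 10 - score                  # +1 deficit = one unit lower score
log_odds = -3.0 + np.log(2.2) * deficit
any_problem = rng.binomial(1, 1 / (1 + np.exp(-log_odds)))

b0, b1 = fit_logistic(deficit, any_problem.astype(float))
print(f"OR per unit decrease: {np.exp(b1):.2f}")
```

Coding the predictor as a deficit avoids having to invert the odds ratio afterwards; with the score itself as predictor, the OR per unit decrease would be exp(−β).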

Results

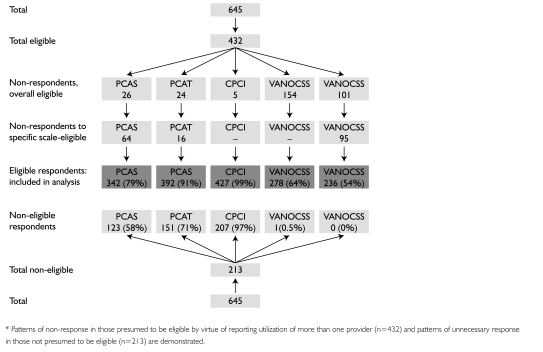

Of the 645 respondents to the overall survey, 432 reported having seen a provider other than their family physician, constituting our analytic subgroup for management continuity. As shown in Figure 1, however, some of our analytic subgroup were not eligible for a specific subscale (e.g., did not see another doctor or specialist) and, appropriately, did not respond. Others, though eligible, either did not respond (VANOCSS) or were excluded from subscale analysis because of missing values (n=179/432, 41.4%). Those excluded were more likely to have a regular clinic rather than a specific physician and appeared to be in slightly better health. The low number of responses to the VANOCSS Overall Coordination and Specialist Access subscales is likely due to respondent fatigue, as these were placed last in the questionnaire. In the analytic subgroup, 66.2% reported having a chronic health problem and 39.9% a disability limiting daily activities. Respondents had an average of 7.6 (SD=7.9) primary care visits in the last 12 months; 74% had seen a specialist.

FIGURE 1.

Flow chart of responses to various subscales (shown in order of appearance in questionnaire)*

Comparative descriptive statistics

Table 1 summarizes the item content and behaviour in the five subscales; the detailed content and distributions are available online at http://www.longwoods.com/content/22638. No item has more than 5% missing values, though items asking about the doctor's communication with specialists (PCAS, CPCI) or involvement in care given by others (PCAS, CPCI) were at the limit of acceptable rates of missing values. Respondents mostly endorsed positive assessments of management continuity, except on the VANOCSS items, where up to 59% reported a problem on Overall Coordination items and up to 20% on Access to Specialists items. All items in the PCAS, PCAT-S and VANOCSS Overall Coordination subscales, and the majority in the other subscales, discriminate adequately between different levels of the subscale score, as indicated by a discrimination parameter a>1.
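To illustrate what the discrimination parameter a expresses, the sketch below uses the two-parameter logistic (2PL) item response model for a dichotomous item, P(θ) = 1 / (1 + exp(−a(θ − b))). This is a simplification: polytomous items such as those in the PCAS, PCAT-S and CPCI would typically be modelled with a graded-response extension, and the threshold b = 0 used here is hypothetical. The slope of the curve at θ = b equals a/4, so an item with a = 4.89 separates respondents just below and just above its threshold far more sharply than one with a = 0.32.

```python
import math

def p_2pl(theta, a, b):
    """Probability of endorsing a dichotomous item (for VANOCSS items,
    e.g., of not reporting a problem) under the 2PL model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def slope_at(theta, a, b, h=1e-5):
    """Numerical slope of the item characteristic curve at theta."""
    return (p_2pl(theta + h, a, b) - p_2pl(theta - h, a, b)) / (2 * h)

# Discrimination values taken from Table 1; b = 0 is a hypothetical threshold.
for label, a in [("CP_coo8 (a=0.32)", 0.32), ("PS_i4 (a=4.89)", 4.89)]:
    lo, hi = p_2pl(-0.5, a, 0.0), p_2pl(0.5, a, 0.0)
    print(f"{label}: P(-0.5)={lo:.2f}  P(+0.5)={hi:.2f}  "
          f"slope at b={slope_at(0.0, a, 0.0):.3f}")
```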

TABLE 1.

Summary of management continuity subscale content and distribution of item responses. (Detailed distribution available at http://www.longwoods.com/content/22638).

| Subscale and Item Description | Response Scale | Missing Values (range) | Overall Modal Response | Item Discriminability (range) | Comments on Distribution |
|---|---|---|---|---|---|
| PCAS Integration (6 items). When your doctor recommends a different doctor for a specific problem, rate: help deciding who to see; help in getting an appointment; involvement of doctor while being treated by a specialist; communication with specialists; help understanding what others said; quality of specialist. | Likert evaluative, 1=very poor to 6=excellent | 1%–4% | 4–5 (good to very good) | 1.85 (quality) to 4.89 (communication) | Only 5%–11% in the two most negative categories |
| PCAT-S Coordination (4 items). Likelihood that primary care provider: discussed alternatives for places to seek care; helped make the appointment; wrote information about the reason for the visit; talked about what happened at the visit. | Likert evaluative, 1=definitely not to 4=definitely | 2%–3% (true missing); 2%–6% not sure | 4 (definitely) | 1.23 (alternatives) to 2.33 (information) | Over 52% of responses in the most positive category; few missing values (true missing or not sure) |
| CPCI Coordination of Care (8 items). Agreement with statements about regular doctor. Positive statements (this doctor): knows when I'm due for a check-up; coordinates all care; keeps track; follows up on a problem; follows up on visits to other providers; helps interpret tests or visits; communicates with other providers. Negative statement (this doctor): does not always know about care received at other places. | Semantic differential opinion, 1=strongly disagree to 6=strongly agree | 1%–5% (true missing); 0%–4% not sure | 6 (strongly agree) | 0.32 (negative statement) to 4.14 (keeping track) | Mostly positive responses (22%–62% in the most positive category); “help interpret tests” seems to have a U-shaped distribution |
| VANOCSS Overall Coordination of Care (6 items). Frequency of different providers: being familiar with recent medical history; not knowing about tests or their results; not knowing about changes in your treatment. Frequency of patient: being confused because of different information; knowing next steps; knowing who to ask for questions about care. | 3- to 4-point frequency categories scored as problem/no problem | 0%–3% | n/a | 1.44 (next steps) to 2.81 (tests) | Two items with 59% presence of a problem (being familiar with recent medical history and next steps); others had 26%–40% presence of a problem |
| VANOCSS Access to Specialists (4 items). Frequency of issues getting care from specialists: access when needed; difficulty with getting an appointment; given information about who to see and why; specialists had information needed from medical record. | 3- to 4-point frequency categories scored as problem/no problem | 1%–2% | n/a | 0.42 (appointment) to 2.68 (information needed) | 11%–20% reporting presence of a problem |

TABLE 1.

Distribution of values on the items in subscales mapped to management continuity among the 432 respondents who saw more than one provider

| Item Code | Item Statement | Missing % (n) | Percentage (Number) by Response Option | | | | | | Item Discrimination¹ |
|---|---|---|---|---|---|---|---|---|---|
| Primary Care Assessment Survey (PCAS): Integration | Thinking about the times your doctor has recommended you see a different doctor for a specific health problem… | | Very poor | Poor | Fair | Good | Very good | Excellent | |
| PS_i1 | How would you rate the help your regular doctor gave you in deciding who to see for specialty care? | 1 (4) | 2 (6) | 3 (9) | 8 (28) | 26 (87) | 34 (117) | 26 (89) | 2.30 (0.20) |
| PS_i2 | How would you rate the help your regular doctor gave you in getting an appointment for specialty care you needed? | 2 (6) | 2 (8) | 4 (13) | 9 (31) | 24 (82) | 32 (109) | 27 (91) | 2.35 (0.21) |
| PS_i3 | How would you rate regular doctor's involvement in your care when you were being treated by a specialist or were hospitalized? | 4 (13) | 1 (3) | 10 (33) | 12 (39) | 31 (104) | 26 (87) | 18 (61) | 3.68 (0.30) |
| PS_i4 | How would you rate regular doctor's communication with specialists or other doctors who saw you? | 4 (15) | 2 (8) | 7 (22) | 14 (46) | 32 (109) | 25 (84) | 17 (56) | 4.89 (0.42) |
| PS_i5 | How would you rate the help your regular doctor gave you in understanding what the specialist or other doctor said about you? | 4 (12) | 3 (10) | 4 (13) | 15 (52) | 28 (95) | 27 (91) | 20 (67) | 4.06 (0.35) |
| PS_i6 | How would you rate the quality of specialists or other doctors your regular doctor sent you to? | 1 (4) | 2 (5) | 2 (6) | 8 (27) | 23 (77) | 38 (130) | 27 (91) | 1.85 (0.19) |
| Primary Care Assessment Tool (PCAT-S): Coordination | Thinking about visit to specialist or specialized service… | | Definitely not | Probably not | Probably | Definitely | Not sure / Don't remember | | |
| PT_c1 | Did your Primary Care Provider discuss with you different places you could have gone to get help with that problem? | 2 (9) | 10 (40) | 9 (36) | 23 (89) | 52 (203) | 4 (16) | | 1.23 (0.17) |
| PT_c2 | Did your Primary Care Provider or someone working with your Primary Care Provider help you make the appointment for that visit? | 3 (12) | 10 (40) | 6 (23) | 16 (61) | 64 (251) | 2 (6) | | 2.07 (0.26) |
| PT_c3 | Did your Primary Care Provider write down any information for the specialist about the reason for the visit? | 3 (11) | 7 (29) | 8 (33) | 22 (86) | 54 (212) | 6 (22) | | 2.33 (0.24) |
| PT_c4 | After you went to the specialist or special service, did your Primary Care Provider talk with you about what happened at the visit? | 2 (9) | 14 (56) | 8 (33) | 19 (76) | 53 (208) | 3 (11) | | 2.23 (0.25) |
| Components of Primary Care Index (CPCI): Coordination of Care | | | Strongly disagree | 2 | 3 | 4 | 5 | Strongly agree | |
| CP_coo1 | This doctor knows when I'm due for a check-up. | 2 (10) | 11 (46) | 11 (49) | 14 (59) | 12 (52) | 17 (74) | 33 (142) | 2.35 (0.21) |
| CP_coo2 | I want one doctor to coordinate all of the healthcare I receive. | 3 (11) | 3 (11) | 3 (14) | 4 (15) | 9 (37) | 18 (78) | 62 (266) | 1.13 (0.17) |
| CP_coo3 | This doctor keeps track of all my healthcare. | 1 (6) | 4 (19) | 7 (29) | 7 (29) | 12 (51) | 22 (93) | 48 (205) | 4.14 (0.36) |
| CP_coo4 | This doctor always follows up on a problem I've had, either at the next visit or by phone. | 3 (13) | 7 (31) | 9 (38) | 8 (33) | 12 (51) | 21 (91) | 41 (175) | 3.91 (0.30) |
| CP_coo5 | This doctor always follows up on my visits to other healthcare providers. | 3 (11) | 6 (27) | 8 (34) | 12 (53) | 15 (63) | 20 (86) | 37 (158) | 3.25 (0.26) |
| CP_coo6 | This doctor helps me interpret my lab tests, X-rays or visits to other doctors. | 2 (9) | 22 (94) | 15 (66) | 13 (56) | 11 (46) | 15 (66) | 22 (95) | 0.43 (0.13) |
| CP_coo7 | This doctor communicates with the other health providers I see. | 5 (21) | 10 (42) | 13 (55) | 16 (71) | 16 (71) | 17 (75) | 23 (97) | 1.62 (0.16) |
| CP_coo8 | This doctor does not always know about care I have received at other places.² | 5 (22) | 11 (46) | 13 (58) | 17 (72) | 13 (55) | 20 (86) | 22 (93) | 0.32 (0.11) |
| Veterans Affairs National Outpatient Customer Satisfaction Survey (VANOCSS): Overall Coordination of Care³ | | | Problem | No problem | | | | | |
| VA_cco1 | Were the providers who cared for you always familiar with your most recent medical history? | 0 (1) | 59 (165) | 40 (112) | | | | | 1.67 (0.26)⁴ |
| VA_cco2 | Were there times when one of your providers did not know about tests you had or their results? | 1 (3) | 40 (111) | 59 (164) | | | | | 2.81 (0.46) |
| VA_cco3 | Were there times when one of your providers did not know about changes in your treatment that another provider recommended? | 2 (5) | 26 (73) | 72 (200) | | | | | 1.90 (0.35) |
| VA_cco4 | Were there times when you were confused because different providers told you different things? | 1 (3) | 28 (78) | 71 (197) | | | | | 1.90 (0.30) |
| VA_cco5 | Did you always know what the next step in your care would be? | 3 (7) | 59 (163) | 39 (108) | | | | | 1.44 (0.26) |
| VA_cco6 | Did you know who to ask when you had questions about your health care? | 2 (5) | 37 (102) | 62 (171) | | | | | 1.48 (0.28) |
| Veterans Affairs National Outpatient Customer Satisfaction Survey (VANOCSS): Access to Specialists⁵ | | | Problem | No problem | | | | | |
| VA_spa1 | How often did you get to see specialists when you thought you needed to? | 2 (5) | 12 (29) | 86 (202) | | | | | 1.26 (0.36)⁴ |
| VA_spa2 | How often did you have difficulty making appointments with specialists you wanted to see? | 1 (3) | 19 (44) | 80 (189) | | | | | 0.42 (0.23) |
| VA_spa3 | How often were you given enough information about why you were to see your specialists? | 1 (2) | 11 (25) | 89 (209) | | | | | 2.48 (0.62) |
| VA_spa4 | How often did your specialists have the information they needed from your medical records? | 1 (3) | 20 (48) | 78 (185) | | | | | 2.68 (0.56) |

¹ Items were assessed against the construct of the original scale. Values >1 are considered to be discriminating.

² Values were reversed for this reverse-worded item: only 11% (46) strongly agreed that the doctor did not know about care received at other places.

³ Descriptive statistics based on 279 respondents (subscale placed second-to-last in questionnaire).

⁴ Items are scored dichotomously; these are not Likert scales.

⁵ Descriptive statistics based on 236 respondents (subscale placed last in questionnaire).

Table 2 presents the descriptive statistics for each subscale, with PCAS, PCAT-S and CPCI subscale mean scores normalized to a 0-to-10 metric to permit comparison. As expected, those three subscales are skewed towards positive assessments, with the PCAT-S demonstrating the most extreme skewing. In contrast, 83% of respondents reported at least one problem on the VANOCSS Overall Coordination subscale and 41% on Specialist Access. Among the respondents in the top quartile of management continuity according to the PCAS, PCAT-S and CPCI subscales, 61%, 76% and 74%, respectively, reported one or more problems on the VANOCSS Overall Coordination subscale, and 34%, 37% and 44%, respectively, on the VANOCSS Specialist Access subscale.

TABLE 2.

Mean and distributional scores for management continuity subscales, showing normalized mean scores and number of problems (n=432)

| Subscale (Scale Range) | Cronbach's Alpha | Mean | SD | Minimum Observed | Q1 (25%) | Q2 (50%) | Q3 (75%) |
|---|---|---|---|---|---|---|---|
| Normalized mean scores | | | | | | | |
| PCAS Integration normalized | .90 | 6.99 | 1.97 | 0 | 5.67 | 7.33 | 8.33 |
| PCAT-S Coordination normalized | .73 | 7.61 | 2.52 | 0 | 6.67 | 8.33 | 10.00 |
| CPCI Coordination of Care normalized | .79 | 6.65 | 2.04 | 0 | 5.25 | 6.75 | 8.00 |
| Number of problems | | | | | | | |
| VANOCSS Coordination of Care (Overall): Number of problems (0 to 6) | .74 | 2.50 | 1.90 | 0 | 1.00 | 2.00 | 4.00 |
| VANOCSS Specialty Provider Access: Number of problems (0 to 4) | .54 | 0.60 | 0.90 | 0 | 0 | 0 | 1.00 |
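Table 2 reports Cronbach's alpha for each subscale. For readers who want to reproduce such values on their own data, a minimal NumPy sketch of the standard formula, α = k/(k − 1) × (1 − Σσ²ᵢ / σ²ₜ), is shown below, where k is the number of items, σ²ᵢ the variance of item i and σ²ₜ the variance of the summed score. The response matrix is invented for illustration, and the function assumes complete data (no missing values).

```python
import numpy as np

def cronbach_alpha(responses):
    """Cronbach's alpha for an (n_respondents x k_items) array with no missing values."""
    x = np.asarray(responses, dtype=float)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1)        # variance of each item
    total_var = x.sum(axis=1).var(ddof=1)   # variance of the summed score
    return k / (k - 1) * (1 - item_var.sum() / total_var)

# Invented responses from five people to a four-item subscale scored 1-4.
demo = [[4, 4, 3, 4],
        [3, 3, 3, 2],
        [4, 3, 4, 4],
        [2, 2, 1, 2],
        [3, 4, 3, 3]]
print(round(cronbach_alpha(demo), 2))
```

For the VANOCSS subscales the same formula applies to the 0/1 problem indicators.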

Table 3 presents the Pearson correlations between the management continuity subscales. The PCAS, PCAT-S and CPCI subscales correlate highly with one another (r=.54 to .62) and negatively, but only modestly, with the number of problems reported on the VANOCSS subscales (r=–.19 to –.39). The five subscales correlate strongly with other subscales for relational continuity (mean, .40; range, .28 to .68) and interpersonal communication (mean, .42; range, .30 to .63). The VANOCSS subscales correlated weakly (and negatively) with all other subscales, underlining the difference in measurement approach.

TABLE 3.

Partial Pearson correlation coefficients between subscales for management continuity and subscales evaluating other attributes of primary healthcare.* Only statistically significant correlations are shown (at p<.05). Sample sizes: n=246 and n=132.

| | PCAS: Integration | PCAT-S: Coordination | CPCI: Coordination of Care | VANOCSS: Coordination of Care (Overall), number of problems | VANOCSS: Specialty Access, number of problems |
|---|---|---|---|---|---|
| PCAS Integration | 1.00 | 0.55 | 0.62 | –0.39 | –0.20 |
| PCAT-S Coordination | 0.55 | 1.00 | 0.54 | –0.19 | –0.22 |
| CPCI Coordination of care | 0.62 | 0.54 | 1.00 | –0.34 | –0.27 |
| VANOCSS Coordination of Care (Overall), number of problems | –0.39 | –0.19 | –0.34 | 1.00 | 0.34 |
| VANOCSS Specialty Access, number of problems | –0.20 | –0.22 | –0.27 | 0.34 | 1.00 |

*Controlling for language, education and geographic location.
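Table 3 reports partial correlations controlling for language, education and geographic location. One common way to compute a partial correlation, sketched below in NumPy, is to regress each subscale score on the covariates and correlate the residuals. This illustrates the general technique only and is not necessarily the routine used in the study; the data and variable names are hypothetical.

```python
import numpy as np

def partial_corr(x, y, covariates):
    """Pearson correlation between x and y after removing the linear effect
    of the covariates (n x p array) from both, via least-squares residuals."""
    Z = np.column_stack([np.ones(len(x)), covariates])   # add intercept
    rx = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    ry = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return np.corrcoef(rx, ry)[0, 1]

# Hypothetical data: two subscale scores that share a dependence on a binary
# covariate (e.g., questionnaire language) but are otherwise unrelated.
rng = np.random.default_rng(1)
language = rng.integers(0, 2, size=500).astype(float)
score_a = 2.0 * language + rng.normal(size=500)
score_b = 2.0 * language + rng.normal(size=500)
print(round(np.corrcoef(score_a, score_b)[0, 1], 2))                # raw correlation
print(round(partial_corr(score_a, score_b, language[:, None]), 2))   # near zero
```

The example shows why partialling matters: a shared covariate alone can produce a sizeable raw correlation that largely disappears once the covariate is controlled.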

Do all items measure a single attribute?

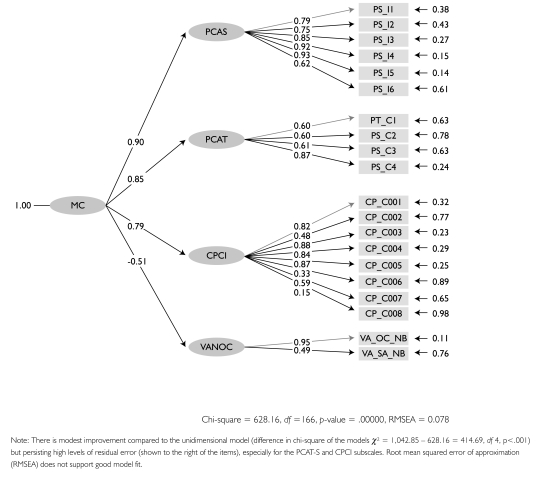

In exploratory factor analysis, most items loaded well (>.4) on a single factor. The VANOCSS subscales were entered as single items summing the number of problems encountered and had negative loadings. Items with low factor loadings were those with low discriminability values (help interpreting tests and doctor knows about other care in the CPCI; difficulty making appointments with specialists in VANOCSS Specialty Access). However, in confirmatory factor analysis the goodness of fit of the one-dimensional model was barely adequate: the root mean square error of approximation (RMSEA=.128) was considerably higher than the .05 criterion for good model fit, although the comparative fit index (CFI) of .92 was above the .90 criterion. When subscale items were associated with their parent subscales, which were in turn associated with a latent variable presumed to be continuity (Figure 2), fit statistics improved significantly over the one-dimensional model: chi-square difference = 1,042.85 – 628.16 = 414.69, df 4, p<.001; RMSEA=.078; CFI=.97. This finding suggests that items reflect a common underlying construct for subscales in all instruments.
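The chi-square difference test reported above can be checked with a few lines of Python. For an even number of degrees of freedom the chi-square survival function has a closed form; for df = 4 it is P(χ² > x) = e^(−x/2)(1 + x/2), so no statistics library is needed. The chi-square values and df are those reported in the text; the function name is illustrative.

```python
import math

def chi2_sf_df4(x):
    """Upper-tail probability of a chi-square variable with 4 degrees of freedom."""
    return math.exp(-x / 2) * (1 + x / 2)

chi2_one_factor = 1042.85    # one-dimensional model
chi2_second_order = 628.16   # items -> parent subscales -> continuity
delta = chi2_one_factor - chi2_second_order
p_value = chi2_sf_df4(delta)
print(f"chi-square difference = {delta:.2f}, df = 4, p = {p_value:.1e}")
```

As a sanity check, the function returns approximately .05 at 9.49, the familiar critical value for df = 4.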

FIGURE 2.

Parameter estimations for structural equation model showing loadings of items on parent scales (first-order variables), which in turn load on management continuity (second-order latent variable)

How do underlying factors fit with operational definition?

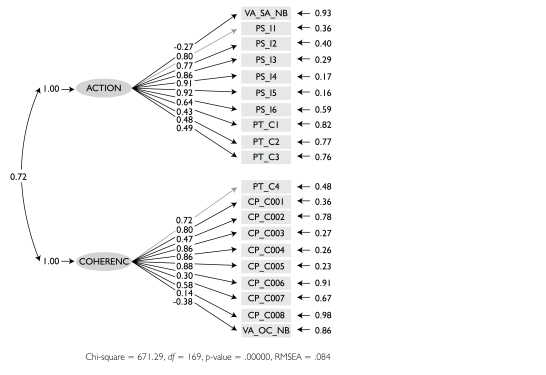

Exploratory factor analysis suggests two underlying factors (again, with the VANOCSS subscales entered as single items). The first (eigenvalue=6.73) seems to capture observed behaviours of the primary care physician related to the transition of patient care to other providers, or coordination actions. It includes all items in the PCAS Integration subscale and the VANOCSS Overall Coordination and VANOCSS Specialist Access subscales, plus two items in the PCAT-S Coordination subscale (“help with appointment” and “write information for specialist,” factor loading only .35). The second factor (eigenvalue=1.58) suggests provider efforts to produce coherence between visits within and outside the regular doctor's office. All but two items on the CPCI Coordination subscale load on this factor, as well as two of the PCAT-S Coordination items (“discuss places to get help” and “what happened at visit”). Two CPCI items do not load on any factor. One may reflect a slightly different construct (“want one doctor to coordinate”), and the other is problematic owing to reverse scoring (“doctor sometimes not aware of care”).
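Eigenvalues such as those reported above come from the item correlation matrix, and a common (though not the only) rule for deciding how many factors to retain is the Kaiser criterion of eigenvalue > 1. The NumPy sketch below applies it to a hypothetical six-item correlation matrix built from two blocks of three correlated items; it illustrates the criterion only and does not reproduce the study's factor extraction or rotation.

```python
import numpy as np

def kaiser_count(corr):
    """Eigenvalues of a correlation matrix (largest first) and the number > 1."""
    eigenvalues = np.linalg.eigvalsh(np.asarray(corr, dtype=float))[::-1]
    return eigenvalues, int(np.sum(eigenvalues > 1.0))

# Hypothetical correlations: items 1-3 (say, "coordination actions") correlate
# .5 with each other, items 4-6 (say, "coherence") likewise, blocks correlate .2.
block = np.full((3, 3), 0.5) + 0.5 * np.eye(3)
cross = np.full((3, 3), 0.2)
corr = np.block([[block, cross], [cross, block]])

eigenvalues, n_factors = kaiser_count(corr)
print(np.round(eigenvalues, 2), n_factors)
```

Here the two leading eigenvalues exceed 1 and the remaining four fall well below it, the same qualitative pattern as the two-factor solution described above.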

We examined the fit of individual items in the VANOCSS subscales in both their original and dichotomously coded forms. They mostly load on coordination action, except for “difficulty making appointments with specialists you wanted to see,” an item that does not load with any others and probably does not fit the construct of management continuity.

The structural equation, two-factor model with items grouped by coordination actions and coherence is shown in Figure 3.

FIGURE 3.

Structural equation model showing loadings of items on sub-dimensions of coordination action and experienced coherence, shown to be correlated (curved lines)

Does worse management continuity predict reported problems?

Logistic regression modelling shows a statistically significantly higher likelihood of reporting a problem on the VANOCSS Overall Coordination subscale for every unit decrease in the score on the PCAS, PCAT-S and CPCI subscales (Table 4). Normalizing the scores to a 0-to-10 metric allows the magnitude of effects to be compared. The largest effect is for the PCAS Integration subscale: each unit decrease in its normalized score is associated with 2.2 times higher odds of problems on Overall Coordination (OR=2.2), and the model explains 29% of the variance in Overall Coordination (Nagelkerke's R²=.29). On the non-normalized score, going from 5 to 4 (very good to good) is associated with 4.8 times higher odds of reporting problems. For Specialist Access, the effects are statistically significant only for the PCAS, and they are modest. The logistic models for the CPCI show poor goodness of fit.
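The normalized and raw odds ratios in Table 4 are two expressions of the same fitted slope. Assuming the normalization is a linear rescaling of the item mean to 0–10 (our assumption, consistent with Table 2), one raw unit on a 1-to-6 subscale equals 10/5 = 2 normalized units, so OR_raw = OR_norm^(10/(max − min)). The short check below reproduces the raw PCAS value from the normalized one; small discrepancies for other subscales are expected because the published ORs are rounded. The function name is illustrative.

```python
def raw_or_from_normalized(or_normalized, scale_min, scale_max):
    """Convert an odds ratio per unit of a 0-10 normalized score into an odds
    ratio per unit of the original response scale (linear rescaling assumed)."""
    normalized_units_per_raw_unit = 10 / (scale_max - scale_min)
    return or_normalized ** normalized_units_per_raw_unit

# PCAS Integration (1-6 scale), VANOCSS Overall Coordination: OR_norm = 2.2.
print(round(raw_or_from_normalized(2.2, 1, 6), 2))   # 4.84, reported as 4.8
# PCAT-S Coordination (1-4 scale): OR_norm reported as 1.3 (rounded).
print(round(raw_or_from_normalized(1.3, 1, 4), 2))
```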

TABLE 4.

Results of logistic regression models examining the likelihood of any problem reported on the VANOCSS Overall Coordination and Specialty Access subscales, with PCAS, PCAT-S and CPCI subscale scores. Odds ratios show the likelihood of reporting any problem associated with each unit decrease in the assessment of management continuity.*

| Subscale | OR, normalized score (95% CI) | OR, raw score (95% CI) | Explained variance (Nagelkerke's R2)** | Goodness of fit (Hosmer-Lemeshow p-value)*** |
|---|---|---|---|---|
| VANOCSS Overall Coordination | | | | |
| PCAS Integration | 2.2 (1.6, 3.0) | 4.8 (2.6, 9.1) | .29 | .11 |
| PCAT-S Coordination | 1.3 (1.0, 1.7) | 2.6 (1.1, 6.3) | .06 | .93 |
| CPCI Coordination of Care | 1.4 (1.1, 1.8) | 2.0 (1.3, 3.2) | .08 | .05 |
| VANOCSS Specialty Provider Access | | | | |
| PCAS Integration | 1.4 (1.1, 1.6) | 1.8 (1.2, 2.7) | .09 | .09 |
| PCAT-S Coordination | 1.1 (0.9, 1.3) | 1.4 (0.8, 2.4) | .01 | .15 |
| CPCI Coordination of Care | 1.1 (0.9, 1.4) | 1.3 (0.9, 1.8) | .01 | .03 |

*Each OR was calculated in a separate regression model. Normalized OR refers to subscale scores normalized to a 0-to-10 range.

**Nagelkerke's R2 is a pseudo-R2, interpreted as the proportion of outcome variance explained by the variable in the model.

***Hosmer-Lemeshow test of model goodness of fit; p-values <.05 indicate poor fit.
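To make the two fit statistics in Table 4 concrete, the sketch below fits a one-predictor logistic regression by Newton-Raphson, then computes Nagelkerke's R2 (the Cox-Snell R2 divided by its maximum attainable value) and a Hosmer-Lemeshow statistic over ten groups of predicted risk. The data are simulated and hypothetical; grouping rules and tie handling differ between statistical packages, so this is an illustration of the definitions, not a reproduction of the study's software output.

```python
import math
import numpy as np


def fit_logistic(x: np.ndarray, y: np.ndarray, iters: int = 25) -> np.ndarray:
    """Single-predictor logistic regression by Newton-Raphson; returns [intercept, slope]."""
    X = np.column_stack([np.ones_like(x), x])
    beta = np.zeros(2)
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (y - p)
        hess = X.T @ (X * (p * (1.0 - p))[:, None])
        beta = beta + np.linalg.solve(hess, grad)
    return beta


def nagelkerke_r2(y: np.ndarray, p: np.ndarray) -> float:
    """Cox-Snell R2 rescaled by its maximum so that it can reach 1."""
    n = len(y)
    ll_model = float(np.sum(y * np.log(p) + (1 - y) * np.log(1 - p)))
    p0 = float(y.mean())
    ll_null = n * (p0 * math.log(p0) + (1 - p0) * math.log(1 - p0))
    cox_snell = 1.0 - math.exp(2.0 / n * (ll_null - ll_model))
    max_cox_snell = 1.0 - math.exp(2.0 / n * ll_null)
    return cox_snell / max_cox_snell


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail chi-square probability; this closed form holds for even df only."""
    if df % 2:
        raise ValueError("closed form requires an even number of degrees of freedom")
    half = x / 2.0
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


def hosmer_lemeshow(y: np.ndarray, p: np.ndarray, groups: int = 10) -> tuple[float, float]:
    """HL statistic over groups of sorted predicted risk, with df = groups - 2."""
    order = np.argsort(p)
    stat = 0.0
    for idx in np.array_split(order, groups):
        observed, expected, n_g = y[idx].sum(), p[idx].sum(), len(idx)
        mean_p = expected / n_g
        stat += (observed - expected) ** 2 / (n_g * mean_p * (1.0 - mean_p))
    return stat, chi2_sf_even_df(stat, groups - 2)


# Hypothetical data: lower normalized scores make a reported problem more likely.
rng = np.random.default_rng(1)
score = rng.uniform(0, 10, size=432)
true_p = 1.0 / (1.0 + np.exp(-(2.0 - 0.5 * score)))
problem = (rng.uniform(size=432) < true_p).astype(float)

b0, b1 = fit_logistic(score, problem)
p_hat = 1.0 / (1.0 + np.exp(-(b0 + b1 * score)))
r2 = nagelkerke_r2(problem, p_hat)
hl_stat, hl_p = hosmer_lemeshow(problem, p_hat)
print(f"OR per unit decrease: {math.exp(-b1):.2f}")
print(f"Nagelkerke R2: {r2:.2f}")
print(f"Hosmer-Lemeshow: stat={hl_stat:.2f}, p={hl_p:.3f}")
```

A small Hosmer-Lemeshow p-value signals that observed and predicted problem rates diverge across risk groups, which is the sense in which the CPCI models fit poorly.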

Individual item performance

Parametric item response theory analysis shows that, because responses to the PCAS, PCAT-S and CPCI subscales are skewed toward positive assessments, these subscales discriminate poorly between different degrees of above-average continuity, although they discriminate well at below-average levels. The VANOCSS subscales, in contrast, discriminate better at above-average levels of continuity.
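The link between item location and where a scale discriminates can be seen in the item information function. The sketch below uses the two-parameter logistic (2PL) model for a dichotomous item, a simplification chosen for illustration; the study's polytomous items may have been analyzed with a different parametric model. Item information is a^2 P(1 - P) and peaks at the item location b, so an item that most respondents rate positively (low b) is informative mainly below average. Parameter values are hypothetical.

```python
import numpy as np


def item_information_2pl(theta: np.ndarray, a: float, b: float) -> np.ndarray:
    """Fisher information of a 2PL item: a^2 * P * (1 - P), where
    P = 1 / (1 + exp(-a * (theta - b)))."""
    p = 1.0 / (1.0 + np.exp(-a * (theta - b)))
    return a ** 2 * p * (1.0 - p)


theta = np.linspace(-3, 3, 601)   # latent continuity, in standard-deviation units
# Hypothetical items with equal discrimination a = 1.8:
easy = item_information_2pl(theta, a=1.8, b=-1.5)   # most respondents answer positively
hard = item_information_2pl(theta, a=1.8, b=1.0)    # located above average

print("Peak information at theta =", theta[np.argmax(easy)], "and", theta[np.argmax(hard)])
```

Summing such curves over a subscale's items gives the test information function; a subscale built from low-location items will show most of its information at below-average levels.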

Discussion and Conclusion

In this study, we found that five validated subscales measuring patient assessments of care coordination relate adequately to a common construct, which we presume to be management continuity. Exploratory factor analysis suggests that two distinct sub-dimensions underlie this pool of items: coordination actions and coherence.

Coordination actions relate to physician behaviours to facilitate transition of patient care to other providers, presumably to achieve timeliness and complementarity of services, though no subscales directly addressed these qualities. The PCAS Integration subscale covers this dimension, as do most of the items of the PCAT-S Coordination subscale. However, providers – not patients – are probably the best source for assessing timeliness and complementarity of services.

The coherence dimension is highly correlated with coordination actions but seems to address the provider's effort, directed at the patient, to link different services and avoid gaps in care. This effort includes sense-making after a series of visits for a specific health condition and planning for future care based on the results of past visits. The CPCI Coordination subscale addresses this factor, which is also captured in the PCAT-S item about talking with the patient about what happened at the specialist visit. However, none of the subscales captures connectedness and coherence as experienced by the patient, though the absence of these qualities can be inferred from the occurrence of problems reported on the VANOCSS Overall Coordination subscale. Granted, the developers set out to measure coordination, not management continuity, but all of them assume that the patient is aware of the provider's coordination efforts. Qualitative studies suggest that patients are often unaware of critical aspects of coordination, such as agreed-upon care plans or information transfers between providers (Gallagher and Hodge 1999; Woodward et al. 2004). Patients presume these elements are in place and can detect only failures or gaps. Thus, they can assess discontinuity more validly than continuity, which suggests that the VANOCSS approach may give a more accurate assessment of management continuity.

In qualitative studies, patients seem to express their experience of good coordination as a sense of security and of being taken care of, rather than as connectedness or smoothness (Burkey et al. 1997; Kai and Crosland 2001; Kroll and Neri 2003). The French term for “continuity of care,” prise-en-charge, captures this notion but seems to have no English equivalent. The term implies the presence of a provider who takes responsibility for ensuring that required care is provided, as case managers do in mental healthcare and patient navigators in cancer care (Wells et al. 2008). Indeed, we observed strong correlations between subscales of management continuity and relational attributes of care: as measures of relational continuity or interpersonal communication increase, the number of coordination problems reported across all providers decreases.

This study has several limitations. The most important is that we analyzed together instruments that use different reference points as well as two distinct approaches to measurement. Not surprisingly, the confirmatory factor analysis shows that the best-fitting model is the one in which items are associated with their own parent instruments. The PCAS, PCAT-S and CPCI subscales focus specifically on the PHC physician and elicit predominantly positive assessments, a feature common to rating scales (Williams et al. 1998). In contrast, the two VANOCSS subscales elicit experienced difficulties across all providers, from which evaluators infer the degree of coordination or specialist access. This measurement approach, used by the Picker Institute (Gerteis et al. 1993), is expressly designed to increase sensitivity to problems in order to guide and monitor improvement efforts.

Nonetheless, the distinct approaches also create a unique opportunity to compare different formats and provide new information on how well assessments of coordination behaviours predict reported problems. Our item response analysis (not shown) suggests that subscales could be used in combination to reliably and validly identify persons with poor management continuity (low scores on the PCAS Integration and more than one problem on the VANOCSS Overall Coordination subscale) or good management continuity (high scores with no problems). We recently developed a measure of management continuity that combines both approaches; it appears to perform well and to predict continuity outcomes (Haggerty, Roberge et al. 2011).
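The combined use suggested above can be written as a simple decision rule. This is a hypothetical sketch: the paper gives no numeric cut-off for a "low" or "high" PCAS Integration score, so `low_cutoff` and `high_cutoff` below are placeholders on an assumed 1-to-6 raw scale, and cases where the two instruments disagree are simply labelled indeterminate.

```python
from typing import Literal

Category = Literal["poor", "good", "indeterminate"]


def classify_management_continuity(pcas_integration: float,
                                   vanocss_problems: int,
                                   low_cutoff: float = 4.0,
                                   high_cutoff: float = 5.0) -> Category:
    """Hypothetical combined rule:
    poor = low PCAS Integration score AND more than one VANOCSS Overall Coordination problem;
    good = high PCAS Integration score AND no reported problems;
    anything else is left indeterminate. Cut-offs are illustrative placeholders."""
    if pcas_integration < low_cutoff and vanocss_problems > 1:
        return "poor"
    if pcas_integration >= high_cutoff and vanocss_problems == 0:
        return "good"
    return "indeterminate"


for score, problems in [(3.0, 2), (5.5, 0), (3.0, 1), (5.5, 2)]:
    print(score, problems, classify_management_continuity(score, problems))
```

Requiring agreement between the two formats trades coverage for confidence: fewer respondents are classified, but each classification rests on both an evaluative rating and reported problems.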

In conclusion, these measures of coordination or management continuity seem to share a single underlying construct but capture our definition of management continuity only partially. Combining the PCAS Integration and VANOCSS Overall Coordination subscales is probably the most accurate way to detect both problematic and good continuity. However, further work is needed to develop measures of how patients experience coordination as management continuity or discontinuity.

Acknowledgements

This research was funded by the Canadian Institutes of Health Research. During the conduct of the study Jeannie L. Haggerty held a Canada Research Chair in Population Impact of Healthcare at the Université de Sherbrooke. The authors wish to thank Beverley Lawson and Christine Beaulieu for conducting the survey in Nova Scotia and Quebec, respectively, and Donna Riley for support in preparing and editing the manuscript.

Contributor Information

Jeannie L. Haggerty, Department of Family Medicine, McGill University, Montreal, QC.

Frederick Burge, Department of Family Medicine, Dalhousie University, Halifax, NS.

Raynald Pineault, Centre de recherche du Centre hospitalier de l'Université de Montréal, Montréal, QC.

Marie-Dominique Beaulieu, Chaire Dr Sadok Besrour en médecine familiale, Centre de recherche du Centre hospitalier de l'Université de Montréal, Montréal, QC.

Fatima Bouharaoui, St. Mary's Research Centre, St. Mary's Hospital Center, Montreal, QC.

Christine Beaulieu, St. Mary's Research Centre, St. Mary's Hospital Center, Montreal, QC.

Darcy A. Santor, School of Psychology, University of Ottawa, Ottawa, ON.

Jean-Frédéric Lévesque, Centre de recherche du Centre hospitalier de l'Université de Montréal, Montréal, QC.

References

- Borowsky S.J., Nelson D.B., Fortney J.C., Hedeen A.N., Bradley J.L., Chapko M.K. 2002. “VA Community-Based Outpatient Clinics: Performance Measures Based on Patient Perceptions of Care.” Medical Care 40(7): 578–86

- Burkey Y., Black M., Reeve H. 1997. “Patients' Views on Their Discharge from Follow-up in Outpatient Clinics: Qualitative Study.” British Medical Journal 315(7116): 1138–41

- Flocke S. 1997. “Measuring Attributes of Primary Care: Development of a New Instrument.” Journal of Family Practice 45(1): 64–74

- Gallagher E.M., Hodge G. 1999. “The Forgotten Stakeholders: Seniors' Values Concerning Their Health Care.” International Journal of Health Care Quality Assurance 12(3): 79–87

- Gerteis M., Edgman-Levitan S., Daley J., Delbanco T.L. 1993. Through the Patient's Eyes: Understanding and Promoting Patient-Centered Care. San Francisco: Jossey-Bass

- Haggerty J.L., Burge F., Beaulieu M.-D., Pineault R., Beaulieu C., Lévesque J.-F., et al. 2011. “Validation of Instruments to Evaluate Primary Healthcare from the Patient Perspective: Overview of the Method.” Healthcare Policy 7 (Special Issue): 31–46

- Haggerty J.L., Burge F., Lévesque J.-F., Gass D., Pineault R., Beaulieu M.-D., et al. 2007. “Operational Definitions of Attributes of Primary Health Care: Consensus Among Canadian Experts.” Annals of Family Medicine 5: 336–44

- Haggerty J.L., Reid R.J., Freeman G.K., Starfield B.H., Adair C.E., McKendry R. 2003. “Continuity of Care: A Multidisciplinary Review.” British Medical Journal 327: 1219–21

- Haggerty J.L., Roberge D., Freeman G.K., et al. 2011. “When Patients Encounter Several Providers: Validation of a Generic Measure of Continuity of Care.” Annals of Family Medicine. (Submitted.)

- Kai J., Crosland A. 2001. “Perspectives of People with Enduring Mental Ill Health from a Community-Based Qualitative Study.” British Journal of General Practice 51: 730–36

- Kroll T., Neri M.T. 2003. “Experiences with Care Co-ordination Among People with Cerebral Palsy, Multiple Sclerosis, or Spinal Cord Injury.” Disability and Rehabilitation 25: 1106–14

- Reid R., Haggerty J., McKendry R. 2002. Defusing the Confusion: Concepts and Measures of Continuity of Care. Ottawa: Canadian Health Services Research Foundation

- Safran D.G., Kosinski M., Tarlov A.R., Rogers W.H., Taira D.A., Lieberman N., Ware J.E. 1998. “The Primary Care Assessment Survey: Tests of Data Quality and Measurement Performance.” Medical Care 36(5): 728–39

- Santor D.A., Haggerty J.L., Lévesque J.-F., Burge F., Beaulieu M.-D., Gass D., et al. 2011. “An Overview of Confirmatory Factor Analysis and Item Response Analysis Applied to Instruments to Evaluate Primary Healthcare.” Healthcare Policy 7 (Special Issue): 79–92

- Shi L., Starfield B., Xu J. 2001. “Validating the Adult Primary Care Assessment Tool.” Journal of Family Practice 50(2): 161–71

- Shortell S.M., Gillies R.R., Anderson D.A., Erickson K.M., Mitchell J.B. 1996. Remaking Health Care in America: Building Organized Delivery Systems. San Francisco: Jossey-Bass

- Wells K.J., Battaglia T.A., Dudley D.J., Garcia R., Greene A., Calhoun E., et al. 2008. “Patient Navigation: State of the Art, or Is It Science?” Cancer 113: 1999–2010

- Williams B., Coyle J., Healy D. 1998. “The Meaning of Patient Satisfaction: An Explanation of High Reported Levels.” Social Science and Medicine 47(9): 1351–59

- Woodward C.A., Abelson J., Tedford S., Hutchison B. 2004. “What Is Important to Continuity in Home Care? Perspectives of Key Stakeholders.” Social Science and Medicine 58: 177–92