The Challenge

Many Residency Review Committees (RRCs) require that residents complete case and procedure logs. For instance, the requirements for general surgery state “All… must enter their operative experience concurrently during each year of the residency in the ACGME [Accreditation Council for Graduate Medical Education] case log system.”1(p15) This is not only a requirement for most procedural-based specialties but also a requirement for other specialties in which program directors and institutional officials must ensure that residents have “a satisfactory patient care experience that includes: sufficient numbers of patients, diversity of diagnoses, and acuity/complexity of the patients.”2(p13) The intent of these requirements is to ensure adequate clinical and procedural experiences for residents before independent practice, with the caveat that completion of x number of cases or procedures does not ensure competence in a given individual.

The purpose of this article is to provide an annual template for program directors and those involved with internal reviews to use when reviewing case logs, as part of an overall program evaluation.

What Is Known

Because completing a prescribed number of cases does not ensure clinical or technical competency in a particular resident, reviewing case logs is part of program evaluation, not resident assessment. The various RRCs have set minimum case or procedure numbers that are intended to ensure most trainees can practice safely and independently. To date, there is little empirical evidence underlying these minimums; they have been determined by panels of experts, such as RRC members. The bottom line is that the current minimums are the best available target, and programs are held to them by their RRC.3

For program evaluations and improvements to be made, the data on which they are made need to be as accurate as possible. Timely data entry is likely to increase accuracy and should be stressed to residents as a professional requirement similar to the timely record-keeping they will maintain in practice. Automating and simplifying data entry as much as possible may also increase accuracy. Rowe and colleagues4 demonstrated that computer-based logging increased compliance and accuracy. The findings from a study of case logs in anesthesiology suggest that, compared with data obtained from an automated record, manual case-log data entry resulted in up to 5% of cases not being captured by at least half of the residents.5 Therefore, efforts to automate data entry may result in more accurate logs and more time for residents to devote to nonclerical matters.

A second consideration in case log accuracy is the pressure felt by programs to meet requirements because programs are judged by that achievement. A recent survey of plastic surgery chief residents suggests that although, on occasion, residents were asked to recode cases to fit a certain requirement (presumably legitimately), none reported being asked to falsify their logs to meet requirements. In that study, most residents felt that their logs exactly or very closely represented their actual experience (S. J. Kasten, MD, MHPE, FACS, oral communication, January 9, 2012, on recent survey's unpublished data).

Common Program Requirements

Program directors must “provide each resident with documented semiannual evaluation of performance with feedback.”6(p3) An important part of that review should be the review of case logs. This allows faculty to comment on a resident's experience to date; more important, the review will ensure that gaps are identified early and that actionable plans are developed during the ensuing 6 months. For programs in which more emphasis is traditionally placed on procedural numbers, more frequent checking is wise. Program directors or chief residents may assess case logs on an ongoing basis, particularly for categories or residents for whom there is a concern about “low numbers.”
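As an illustration of how gaps might be flagged at a semiannual review, the following is a minimal Python sketch. The category names, minimum numbers, and the linear pro-rating by fraction of training completed are all assumptions made for this example; actual minimums come from the relevant RRC, and a program may reasonably expect case volume to accrue unevenly across training years.

```python
# Hypothetical minimums for illustration only; real values come from your RRC.
EXAMPLE_MINIMUMS = {"category_a": 20, "category_b": 10}


def find_gaps(logged, minimums, fraction_of_training_complete):
    """Return categories where the logged count trails a pro-rated target.

    logged: dict mapping category name to number of cases logged so far
    minimums: dict mapping category name to the required minimum
    fraction_of_training_complete: value between 0 and 1 (assumed linear pacing)
    """
    gaps = {}
    for category, minimum in minimums.items():
        expected_by_now = minimum * fraction_of_training_complete
        count = logged.get(category, 0)  # a category never logged counts as 0
        if count < expected_by_now:
            gaps[category] = {
                "logged": count,
                "expected_by_now": expected_by_now,
                "minimum": minimum,
            }
    return gaps


if __name__ == "__main__":
    # A resident halfway through training (made-up counts).
    resident_log = {"category_a": 12, "category_b": 2}
    for category, detail in find_gaps(resident_log, EXAMPLE_MINIMUMS, 0.5).items():
        print(category, detail)
```

A flagged category is a prompt for discussion and planning at the review, not a judgment of the resident's competence.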

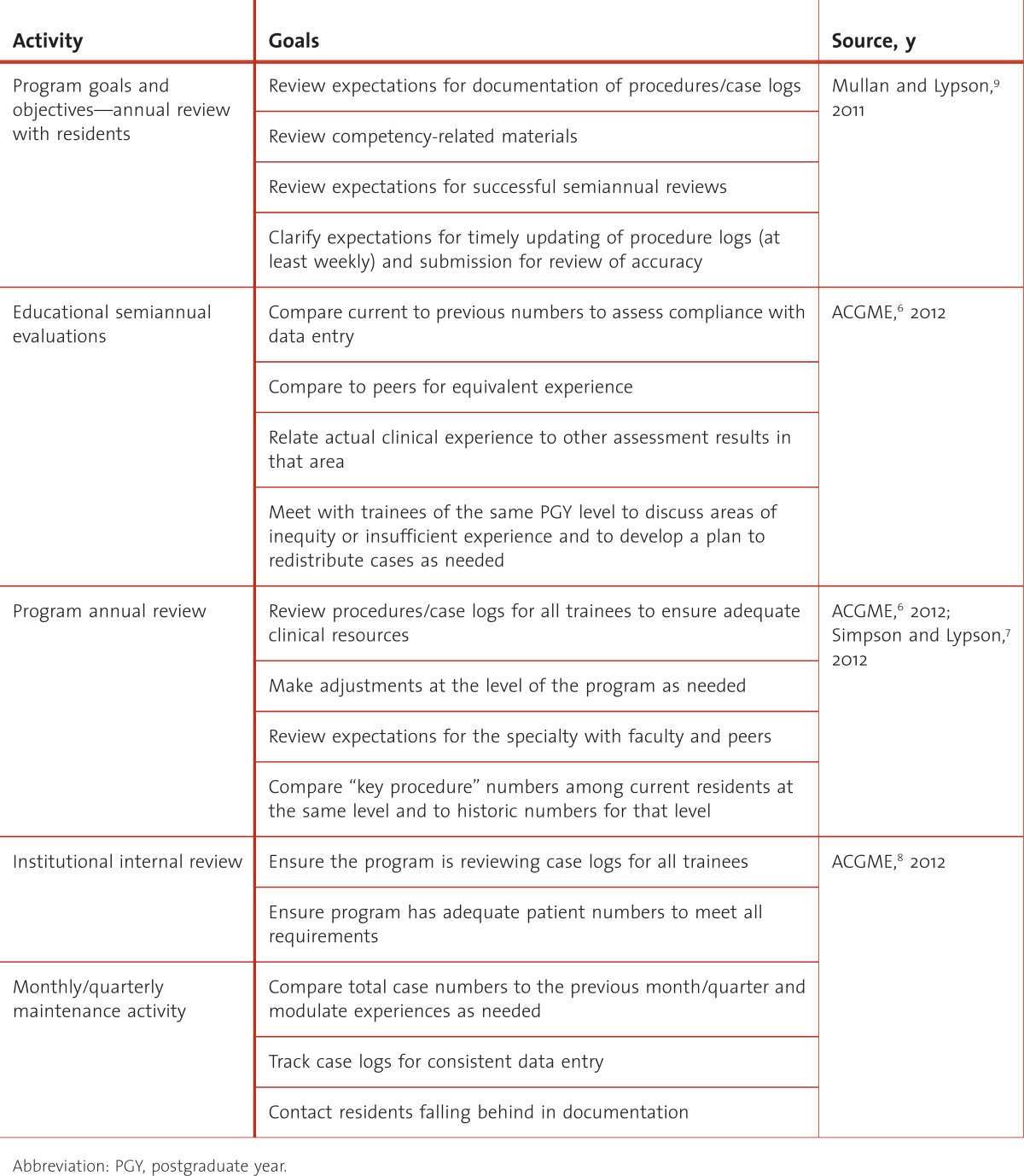

The program should review not only individual trainee logs but also the overall log for the program. This review should be conducted in concert with the annual program review to ensure that the program's goals and objectives, as well as the RRC requirements, are being met.7 An annual review in concert with a midpoint accreditation internal review will highlight issues early and allow for interventions before an RRC site visit8 (TABLE).

TABLE. Program Activities Relating to Case Logs

In addition to reviewing procedure logs, program directors must ensure trainees demonstrate competency in the domain of professionalism. Professionalism includes proper, timely, and accurate documentation. In assessing professionalism, one strategy is to evaluate compliance with logging procedures and cases. However, program directors should develop clear goals and objectives for that documentation before using it for trainee evaluation,9 and they may want to randomly pull a few patient records to verify the accuracy of the case log data.
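The random record pull mentioned above could be done with a few lines of Python. This is only a sketch: the function name, the sample size of 3, and the identifiers are hypothetical, and the log is assumed to be a simple list of case identifiers exported from whatever logging system the program uses.

```python
import random


def sample_for_audit(case_log, k=3, seed=None):
    """Pick up to k distinct log entries at random for chart verification.

    case_log: list of case identifiers (assumed export format)
    k: number of entries to pull; capped at the size of the log
    seed: optional value to make the selection reproducible
    """
    rng = random.Random(seed)
    k = min(k, len(case_log))
    return rng.sample(case_log, k)  # sampling without replacement


if __name__ == "__main__":
    # Made-up identifiers for illustration.
    example_log = [f"case-{i}" for i in range(1, 41)]
    print(sample_for_audit(example_log, k=3))
```

Passing a seed lets a reviewer reproduce the same selection later; leaving it unset gives a fresh draw each time.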

Finally, if a resident is receiving poor marks for a particular clinical problem, case logs can be used to determine whether the resident needs remediation or more clinical experience in that area.

Rip Out Action Items

Program directors must:

Ensure residents and faculty understand the importance of tracking their experiences

Review procedure/case logs at least semiannually at the time of the trainee's formative review

View recording of procedure/case numbers and associated details as a professionalism competency

Review procedure/case logs as a critical aspect of the institutional internal review process

Share case and procedure data with faculty and residents regularly to facilitate plans to resolve inequities between trainees, as needed

What Can You Start TODAY

Have faculty and residents review the requirements for procedures and various case experiences.

Discuss with faculty and residents the importance of recording procedure and case numbers.

Develop professional standards in your own program to ensure compliance with recording by trainees.

If your RRC does not offer guidelines for adequate experience, elicit feedback from faculty and peers.

What Can You Do LONG TERM

Develop a competency approach to assess the quality as well as number of resident procedures.10

Before an RRC visit, the internal review process should examine your logs in detail to ensure a breadth of experience and note any obvious gaps.

At least annually, obtain trainee feedback about their experiences and any gaps in their experiences.

Contact your specialty organization for national procedural and experience guidelines.

Advocate with your specialty organization to ensure that the list of requirements reflects current practice.

Footnotes

All authors are at the University of Michigan Medical School. Steven J. Kasten, MD, MHPE, FACS, is Assistant Professor of Surgery, Program Director of the Integrated Plastic Surgery Residency Program, and Associate Director of the Craniofacial Anomalies Program; Mark E. P. Prince, MD, FRCS(C), is Associate Professor and Program Director of Otolaryngology and Chief of the Division of Head and Neck Surgery; and Monica L. Lypson, MD, MHPE, FACP, is Associate Professor of the Departments of Internal Medicine and Medical Education and Assistant Dean for Graduate Medical Education.

Resources

- 1. [ACGME] Accreditation Council for Graduate Medical Education. ACGME program requirements for graduate medical education in general surgery. Page 15. http://www.acgme.org/acWebsite/downloads/RRC_progReq/440_general_surgery_01012008_u08102008.pdf. Accessed January 6, 2012.

- 2. [ACGME] Accreditation Council for Graduate Medical Education. ACGME program requirements for graduate medical education in pediatrics. http://www.acgme.org/acWebsite/downloads/RRC_progReq/320_pediatrics_07012007.pdf. Accessed January 6, 2012.

- 3. Vassiliou MC, Kaneva PA, Poulose BK, Dunkin BJ, Marks JM, Sadik R, et al. How should we establish the clinical case numbers required to achieve proficiency in flexible endoscopy. Am J Surg. 2010;199(1):121–125. doi: 10.1016/j.amjsurg.2009.10.004.

- 4. Rowe BH, Ryan DT, Mulloy JV. Evaluation of a computer tracking program for resident–patient encounters. Can Fam Physician. 1995;41:2113–20.

- 5. Simpao A, Heitz JW, McNulty SE, Chekemian B, Brenn BR, Epstein RH. The design and implementation of an automated system for logging clinical experiences using an anesthesia information management system [published online ahead of print December 14, 2010]. Anesth Analg. 2011;112(2):422–429. doi: 10.1213/ANE.0b013e3182042e56.

- 6. [ACGME] Accreditation Council for Graduate Medical Education. ACGME common program requirements. http://www.acgme.org/acWebsite/dutyHours/dh_dutyhoursCommonPR07012007.pdf. Accessed January 6, 2012.

- 7. Simpson D, Lypson ML. The year is over, now what? the annual program evaluation. J Grad Med Educ. 2011;3(3):435–437. doi: 10.4300/JGME-D-11-00150.1.

- 8. [ACGME] Accreditation Council for Graduate Medical Education. ACGME institutional requirements. http://www.acgme.org/acWebsite/irc/irc_IRCpr07012007.pdf. Accessed January 6, 2012.

- 9. Mullan PB, Lypson ML. Communicating your program's goals and objectives. J Grad Med Educ. 2011;3(4):574–576. doi: 10.4300/JGME-03-04-31.

- 10. King RW, Schiavone F, Counselman FL, Panacek EA. Patient care competency in emergency medicine graduate medical education: results of a consensus group on patient care. Acad Emerg Med. 2002;9(11):1227–1235. doi: 10.1111/j.1553-2712.2002.tb01582.x. doi: 10.1197/aemj.9.11.1227.