Abstract

We investigated the relevance of linguistic and perceptual factors to sign processing by comparing hearing individuals and deaf signers as they performed a handshape monitoring task, a sign-language analogue to the phoneme-monitoring paradigms used in many spoken-language studies. Each subject saw a series of brief video clips, each of which showed either an ASL sign or a phonologically possible but non-lexical “non-sign,” and responded when the viewed action was formed with a particular handshape. Stimuli varied with respect to the factors of Lexicality, handshape Markedness (Battison, 1978), and Type, defined according to whether the action is performed with one or two hands and for two-handed stimuli, whether or not the action is symmetrical.

Deaf signers performed faster and more accurately than hearing non-signers, and effects related to handshape Markedness and stimulus Type were observed in both groups. However, no effects or interactions related to Lexicality were seen. A further analysis restricted to the deaf group indicated that these results were not dependent upon subjects' age of acquisition of ASL. This work provides new insights into the processes by which the handshape component of sign forms is recognized in a sign language, the role of language experience, and the extent to which these processes may or may not be considered specifically linguistic.

Keywords: ASL, psycholinguistics, markedness, phoneme monitoring

INTRODUCTION

Signed languages used in Deaf communities are naturally occurring human languages. Signed languages like ASL are not universal and, just as there are many different spoken languages, there are also many separate, autonomous signed languages. Despite the differences in language form, signed languages have formal linguistic properties like those found in spoken languages (Stokoe, 1960; Klima & Bellugi, 1979; Sandler & Lillo-Martin, 2006). However, only a few psycholinguistic studies of on-line processing in signed languages exist (for recent reviews of psycholinguistic studies of ASL see Emmorey, 2002; Corina & Knapp, 2006); moreover, only a small subset of these have directly addressed processes involved in lexical and sub-lexical recognition.

Studies of lexical recognition in signed languages have provided evidence for well-known psycholinguistic properties such as lexicality, usage frequency, and semantic and form-based context effects (Corina & Emmorey, 1993; Corina & Hildebrandt, 2002; Mayberry & Witcher, 2005; Dye & Shih, 2006; Carreiras, Gutierrez-Sigu, Baquero & Corina, 2008). However, a fuller explication of the processes by which a gestural-manual linguistic form is mapped to a meaningful lexical representation remains to be determined.

The shape of the hand used to make a sign (henceforth, “handshape”) is a fundamental building block of sign formation. It serves as one of the four main “phonological” parameters along with movement, location (Stokoe, 1960) and orientation (Battison, 1978). Independent sign languages across the world differ considerably in their inventories of handshapes and within sign languages, subtle differences in handshape configuration can factor significantly in the identification of regional dialect or “accents” (e.g. Battison, Markowicz & Woodward, 1975; Bayley, Lucas & Rose, 2002). Thus studies of the perception of handshape in lexical contexts may contribute to our understanding of language-specific strategies used in sign perception. However, a prominent issue in sign language psycholinguistics is to determine whether the processes involved in sign recognition entail specialized linguistic processing or are driven by factors that are common to human action recognition in general. The present experiment investigates perceptual and linguistic factors of sign recognition in the context of a sub-lexical monitoring task.

Phoneme monitoring

Phoneme monitoring experiments are a staple of the spoken-language psycholinguistic literature (for a review see Connine & Titone, 1996). In the past this technique has been instrumental in motivating discussions of the relative importance of autonomous versus interactive processes in language comprehension. Though results are not always consistent, past researchers have generally found faster reaction times for phoneme monitoring in the context of words rather than non-words (e.g., Cutler, Mehler, Norris & Segui, 1987; Eimas, Hornstein & Payton, 1990; Pitt & Samuel, 1995). Such studies were taken as evidence that “top-down” feedback from lexical representations is relevant even in the processing of sub-lexical linguistic elements, as embodied in models such as the TRACE interactive model of word recognition (McClelland & Elman, 1986). However, more recent challenges to such interpretations have come from autonomous models such as the Race Model (Cutler & Norris, 1979) and the MERGE model (Norris, McQueen & Cutler, 2000).

In the current paper, we make use of a similar monitoring task, not to adjudicate between autonomous and interactive models of ASL recognition, but rather as a means to examine the uniformity of meta-linguistic judgments for signs and pseudo-signs by skilled deaf signers and sign-naive hearing subjects. These studies permit insights into whether the processes involved in the sub-lexical monitoring of sign forms are driven by factors that are common to human action recognition in general or entail specialized linguistic processing.

In this study, subjects viewed actions that were either real ASL signs or phonologically possible “non-signs” and were asked to respond as quickly as possible when seeing a sign form (sign or non-sign) articulated with a particular handshape. Based on the results from some spoken-language studies, one might expect to find faster RTs in the ASL sign context than in the non-sign context and additionally, such an effect would only be expected in those subjects able to tell the difference (i.e., the deaf signers and not the hearing non-signers). However there are fundamental structural differences between word forms and sign forms. Classic descriptions of signs have suggested that sign structure exhibits greater simultaneity than word forms, whose phonetic properties unfold across time. For example, distinctive handshape information appears early in sign formation and is often held constant across the duration of the sign. It has been suggested that sign structure, in part, reflects affordances of the visual system—for example, the greater ability of the visual system to monitor parallel streams of information, relative to more temporally constrained auditory systems. If handshape information is available early in perception, one might expect absent or reduced lexicality effects, as lexicality differences are typically not observed for word-initial phonemes (see Pitt & Samuel, 1995 for discussion).

Even in the absence of lexical differences during handshape monitoring, we might expect to see differences at the sub-lexical level emerging from lifelong experience with a signed language. Because handshape is evidently a salient characteristic of the linguistic (sign) signal, perhaps deaf signers are differentially sensitive to this component of sign formation, relative to hearing non-signers.

Although the paradigm used in this study has clear parallels with spoken-language phoneme-monitoring tasks, it should be noted that phonemes and sign parameters like handshape are not necessarily same-level units; in fact, the proper analogue of the phoneme in sign language is not a settled question. Spoken-language studies have found that monitoring RTs are slowest for phonemes, intermediate for syllables, and fastest for words (Foss & Swinney, 1973; Savin & Bever, 1970; Segui, Frauenfelder & Mehler, 1981), but the status of sign parameters like handshape in such a hierarchy is as yet unclear.

In previous work examining handshape phonotactics, researchers have successfully run experiments in which subjects monitor for a handshape in moving signs. In Corina and Hildebrandt (2002), monitoring RTs for handshapes were comparable between sign forms with stable versus changing handshapes. The researchers noted that speech studies have found phoneme monitoring times to be fastest for consonants, intermediate for semi-vowels, and slowest for vowels (Savin & Bever, 1970; van Ooijen et al., 1992; Cutler et al., 1996), but remarked that the interpretation of such results was problematic due to a confound between these items' acoustic differences (e.g. steady-state vowels vs. dynamic consonants) and their function within the language system (i.e. vowels can serve as syllable nuclei, but consonants generally do not). Positing that handshapes have a dual status as potentially dynamic or positional, Corina and Hildebrandt argued that their results provided evidence that RT differences between segment types in general may be due more to physical differences in the signal than to the segments' syllabic status (see Corina & Hildebrandt, 2002, for additional discussion).

With respect to the present study, these data suggest that the recognition of handshapes in moving signs might be driven by physical properties of the handshape to a greater extent than by its linguistic status per se. In addition, it has been noted (Corina, unpublished data) that non-signing hearing subjects are surprisingly good at handshape monitoring tasks in ASL as long as signs are not presented in connected discourse (the monitoring stimuli in the present study are individual sign clips). Here, we extend this methodology to further explore the contribution of linguistic properties of signs such as lexicality, markedness and type. The inclusion of hearing non-signers provides one means for determining whether observed effects reflect general processing mechanisms or specialized linguistic knowledge.

Lexicality

Lexicality as used here refers to whether a word form has a lexical entry in a speaker's mental lexicon. In the psycholinguistic literature, investigations of lexicality have examined how the word form influences a subject's decisions when he or she is asked to recognize or evaluate the lexical status of a true word as opposed to a “non-word” (a form which was made up by the experimenter and has no lexical entry). The assumption is that our ability to recognize a word is aided by its prior mental representation. A common and perhaps unsurprising finding is that the more word-like a non-word stimulus is, the harder it becomes to determine whether the word is a true word or a made-up form. The fact that pseudo-words like “nust” are more difficult to reject than phonotactically impossible word forms like “ntpw” is thought to be due to sub-lexical components of these stimulus forms engendering partial activations of existing mental representations, ultimately leading to more difficult correct rejections (Forster & Chambers, 1973; Forster, 1976; Coltheart, Davelaar, Jonasson & Besner, 1977; Gough & Cosky, 1977).

In the present experiment, the analogues for words and non-words are signs and non-signs, the latter of which are phonotactically possible but non-occurring sign-like forms, which we created by altering one parameter—the handshape—of well-formed existing signs. While several studies have reported lexicality effects in the context of lexical decision experiments for signs, whether such forms would be capable of engendering lexicality effects in the context of a sub-lexical handshape monitoring task which does not require an explicit lexical judgment is unknown. If such effects are found, this could provide support for a processing model of sign language in which handshapes serve as an organizing principle of lexical representations. With respect to the factor of lexicality we entertain three hypotheses.

Hypothesis L1

Faster responses (smaller RTs) should be seen for signs than non-signs, but with lexical effects limited to the ASL users, i.e. the deaf signing subjects.

Two alternative hypotheses are stated below. First, it is possible that the temporal-structural realization of handshape information within a sign (i.e. the early perceptual availability of handshape information in the course of sign formation) may attenuate lexical effects. Even if this is the case, we might still expect subjects familiar with signed language to respond more quickly than non-signers, due to their familiarity with the structure of sign forms. However, it is also possible that sub-lexical monitoring of handshapes will call upon general-purpose rather than language-specific mechanisms. In brief, the relevant hypotheses we will be testing can be stated as follows:

Hypothesis L2

Deaf signers will show no difference in the monitoring of handshapes in the context of signs relative to non-signs, but these subjects will be overall quicker than hearing subjects due to familiarity with the structural properties of sign forms.

Hypothesis L3

Deaf signers and hearing non-signers will not show differences in their patterns of response to signs and non-signs, as general-purpose perceptual mechanisms are evoked in the context of this monitoring task.

Markedness

In this study, we also investigate the putatively linguistic factor of markedness: the handshapes that subjects were monitoring in the various video clips were in some cases phonologically marked and in other cases unmarked.

The notion of markedness in phonological theory dates from the time of the Prague School, in particular the work of Trubetzkoy (1939/1969) and Jakobson (1941/1968). The term is generally used to indicate that the values of a phonological feature or parameter are in some sense patterned asymmetrically, in that one value may be realized with more natural, frequent, or simple forms than those of the other. The particular properties Jakobson (1941/1968) associated with unmarked elements included cross-linguistic frequency, ease of production and acquisition, and resistance to loss in aphasia. Within a specific language, “markedness” is often considered a synchronic property of the grammar; however, extra-linguistic factors such as ease of production and acquisition and resistance to loss in aphasia point to interplay between performance factors and grammaticalization.

Within the literature on sign language phonology, researchers have considered issues of markedness as it relates to handshape, based either on general notions of markedness like those mentioned above, or on others specific to sign language, such as the behavior of the non-dominant hand. Here, as in the case of spoken languages, the question of what constitutes a marked form remains unsettled. Battison (1978) argues for a limited set of unmarked handshapes (B, A, S, C, O, 1 and 5), based on properties such as distinctiveness, frequency in ASL and other sign languages, and weaker restrictions on their occurrence, relative to other handshapes (see also Sandler & Lillo-Martin, 2006; Wilbur, 1987).

In her work on sign handshapes, Ann (2006) cites Willerman (1994), noting that discussion in the wider linguistics literature involving ostensibly related concepts like markedness, frequency of occurrence and ease of articulation has often suffered from circularity (e.g. unmarked items are acquired first because they are easiest; evidence for ease of articulation includes early acquisition). Seeking to avoid this circularity, Ann first postulates a measure of ease of articulation based on various anatomical and physiological factors, and then relates this measure to frequency of occurrence within a Taiwanese Sign Language corpus.

Emmorey and Corina (1990; Experiment 1) hypothesized that because signs with unmarked handshapes are more numerous than those with marked handshapes (Klima & Bellugi, 1979), they will tend to have larger-sized cohorts and hence should be associated with slower response times among signers than marked-handshape signs. Markedness-related effects in the expected direction were seen, but only for non-native signers. The researchers suggested this could be due to a less-efficient lexical recognition process on the part of the non-native signers.

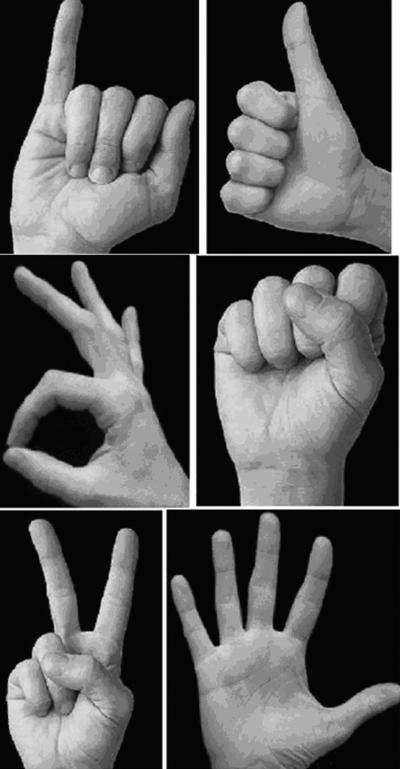

For the purposes of the present study, three of Battison's unmarked handshapes were used (A-thumb,1 S and 5) and were contrasted with three marked handshapes (I, F and V); see Figure 1 for an illustration of these handshapes. Because our task incorporates both signs and non-signs, and can be performed by both users of ASL and sign-naive participants, our results may speak to the general question of the extent to which markedness can be considered a linguistic, as opposed to purely perceptual, property. Two pairs of relevant hypotheses are given below; within each pair, the two hypotheses conflict with each other as stated:

Figure 1.

From top to bottom are the marked handshapes “I,” “F” and “V” (left column), and unmarked handshapes “A-thumb,” “S” and “5” (right column).

Hypothesis M-1a

The processing of marked items is relatively difficult and should therefore be associated with slower RTs in a monitoring task.

Hypothesis M-1b

Marked items stand out more readily and so should be associated with faster RTs in a monitoring task.

Hypothesis M-2a

Markedness should best be considered a linguistic property, so effects related to Markedness should be limited to the deaf group.

Hypothesis M-2b

Markedness is a linguistic manifestation of more general production and/or perceptual constraints, so Markedness effects should be seen in both groups.

Type

In the present study each stimulus was classified according to the factor of Type, where a sign or non-sign is either Type 1 (one-handed, using just the dominant hand), Type 2S (two-handed signs with symmetry in handshape and movement between the hands), or Type 2A (two-handed asymmetrically-formed signs).2 The hypotheses related to Type that we will investigate here, the first two of which are mutually opposed, are as follows:

Hypothesis T1

In single-handed (Type 1) sign forms, the target handshapes are seen most clearly and unambiguously and hence these will yield the fastest RTs.

Hypothesis T2S

Because symmetrical two-handed (Type 2S) sign forms are articulated with the same target handshapes on both hands, they offer additional and unambiguous information to subjects relative to one-handed signs, so will be associated with the fastest RTs.

Hypothesis T2A-1

The inconsistent information present in two-handed asymmetrical (Type 2A) sign forms will result in a slowing of RTs relative to the other two stimulus Types.

It has been noted in earlier work that the behavior of the non-dominant hand in two-handed asymmetrical signs is relatively restricted, such as in the kinds of handshapes or movements it may articulate. It has even been suggested that in such signs, the non-dominant hand serves as a place of articulation (or “base hand”) rather than an articulator in its own right (see Sandler & Lillo-Martin, 2006, for discussion). To the extent that signers are implicitly aware of such phonological/phonotactic constraints involving the non-dominant hand, we may expect them to make use of this knowledge as they carry out the handshape monitoring task. This suggests a fourth hypothesis related to Type:

Hypothesis T2A-2

If a Group by Type interaction is observed, this will be at least partially due to deaf signers being more likely to ignore the non-dominant hand in making their judgments, resulting in less slowing for Type 2A items for deaf signers relative to hearing non-signers.3

METHODS

Subjects

In this experiment, 56 participants took part, of whom 36 were deaf (age range = [18, 59], mean = 23.9, SD = 8.2; 25 female) and 20 were hearing (age range = [18, 28], mean = 20.6, SD = 2.1; 12 female). Five participants, all deaf, were left-handed. Of the deaf subjects, 19 were native signers, having learned ASL from infancy from deaf parents, and 17 were non-native signers (self-reported age of acquisition approximate range = [4, 17], mean = 8.6, SD = 3.3). The deaf subjects were recruited at Gallaudet University, while the majority of the hearing subjects were students at the University of California at Davis. The other hearing subjects were residents of northern California who were recruited through advertisements and word-of-mouth. Subjects were given either course credit or a small fee for participating; all gave informed consent in accordance with established IRB procedures at UC Davis and Gallaudet University.

Stimuli

Each subject viewed a sequence of short video clips showing a person performing an action that could either be an ASL sign or a non-sign. These non-signs were created by changing the handshape of an actual sign in order to produce a phonologically possible but non-lexical sign form. The action shown in each clip was performed by one of two deaf performers, one of whom was male and the other female. For the sake of greater homogeneity among stimuli, all clips were normalized to a length of 1034 ms using a video compression algorithm (Final Cut Pro 5.0.2, Apple Computer, Inc.). The amount of manipulation involved in each case was small enough that the movements within the clips were not noticeably slower or faster than in typical signing actions. The distance from the subject's eyes to the computer screen on which the stimuli were presented was 24 inches.

A total of 180 manual actions (90 signs and 90 non-signs) were chosen to appear in these clips, of which a third were the main object of study in this experiment (“target” clips) and the rest fillers.4 Each video clip was shown once. The set of target clips was designed to be balanced in terms of sign frequency. Handshapes classified by Battison (1978) as unmarked that were used in this study were “S,” “5” and “A-thumb,” while the set of marked handshapes consisted of “F,” “I” and “V.” These handshapes are illustrated in Figure 1, and a complete listing of the target stimuli used in this study can be found in the Appendix. During the task, these six handshapes were only seen in target clips, never in fillers. Filler clips could also show either signs or non-signs, but showed actions and handshapes distinct from those used in the target clips.

Task

The subject was told that he/she would be watching a series of short video clips and that his/her task was to decide as quickly as possible whether or not the action shown in each clip was formed using a particular handshape, and if it was, to respond by pressing a button on a custom-made serial response device (Engineering Solutions Inc.). No special instructions were given regarding two-handed signs or regarding the dominant or non-dominant hands of the performers in the clips. Stimuli were presented and data collected using Presentation software (Neurobehavioral Systems). The experimental task was organized into 12 blocks of 15 clips each, with signs and non-signs for each target handshape presented in different blocks, but the intervening fillers in each block consisted of both signs and non-signs. Ordering of the clips was random within blocks, subject to the condition that no more than two targets could occur consecutively (i.e. without an intervening filler clip). The total ISI (end of one video to start of next video) was about 350 ms. At the start of each block, the target handshape was shown onscreen. Within each block, five clips showed the target handshape and ten clips were fillers. Therefore, to achieve 100% accuracy on this go/no-go task, a subject would have to push the response button five times per block (once for each target clip) and 60 times total. The duration of the entire task (all 180 video clips) for each subject was approximately 8 minutes.
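The block structure described above can be sketched in a few lines of Python. This is only an illustration: the block, target and filler counts come from the task description, but the rejection-sampling approach, the `"T"`/`"F"` labels and the function names are assumptions made here, not a description of how the Presentation script actually ordered the clips.

```python
import random

# Design constants taken from the task description.
N_BLOCKS = 12
TARGETS_PER_BLOCK = 5
FILLERS_PER_BLOCK = 10
MAX_TARGET_RUN = 2  # no more than two targets may occur consecutively


def longest_target_run(order):
    """Length of the longest run of consecutive target clips ("T")."""
    longest = current = 0
    for item in order:
        current = current + 1 if item == "T" else 0
        longest = max(longest, current)
    return longest


def make_block_order(rng):
    """Shuffle one block until the consecutive-target constraint holds.

    Rejection sampling is an assumption of this sketch, not the
    documented procedure.
    """
    order = ["T"] * TARGETS_PER_BLOCK + ["F"] * FILLERS_PER_BLOCK
    while True:
        rng.shuffle(order)
        if longest_target_run(order) <= MAX_TARGET_RUN:
            return list(order)


rng = random.Random(0)  # fixed seed only so the sketch is repeatable
blocks = [make_block_order(rng) for _ in range(N_BLOCKS)]
total_clips = sum(len(block) for block in blocks)
total_targets = sum(block.count("T") for block in blocks)
print(total_clips, total_targets)  # 180 60
```

The totals reproduce the 180 clips and 60 required button presses stated in the task description.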

Before beginning the task, each subject was given a short training exercise, very similar in format to the actual experiment, but with a shorter duration (a total of 20 video clips separated into two blocks, with a different target handshape used in each block). The video clips and target handshapes in the training task were different from those used for the actual experiment.

Data analysis

Accuracy and RT for categorizing target video clips served as dependent measures. The accuracy analyses were carried out on arcsine-transformed values (y = arcsin(sqrt(x)), applied to the initially obtained percentage data expressed as proportions), but for clarity of presentation the summary information given for accuracy has been back-transformed into percentage scores (Wheater & Cook, 2000). Response times were measured from the onset of each video clip, and RT analyses were performed on correct responses only.
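As a minimal illustration of the transformation and its inverse, the following Python sketch converts percentage scores to arcsine units, averages them, and back-transforms the mean to a percentage. The function names and the example scores are hypothetical and are not taken from the study's data.

```python
import math


def arcsine_transform(percent):
    """Map an accuracy percentage (0-100) to y = arcsin(sqrt(x)), x a proportion."""
    proportion = percent / 100.0
    return math.asin(math.sqrt(proportion))


def back_transform(y):
    """Invert the transform: x = sin(y)^2, returned as a percentage."""
    return 100.0 * math.sin(y) ** 2


def back_transformed_mean(percents):
    """Average in arcsine units, then report the mean as a percentage."""
    transformed = [arcsine_transform(p) for p in percents]
    return back_transform(sum(transformed) / len(transformed))


# Hypothetical accuracy scores for three subjects (not study data).
example_scores = [100.0, 95.0, 90.0]
print(back_transformed_mean(example_scores))
```

Because the averaging is done in arcsine units, a back-transformed mean generally differs slightly from the arithmetic mean of the raw percentages.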

RT values were considered to be outliers if they were more than 3 SDs away from the mean among the results for a given subject (over all actions of that action class, i.e. sign or non-sign) or a given action (over all subjects of the same group, i.e. deaf or hearing). Outliers were replaced with the average of two quantities—the subject's average RT for that action class, and the average RT over all subjects of the same group (deaf or hearing) associated with that action. Overall, the proportion of RT values classified as outliers and replaced in this way was approximately 1.5%.
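The outlier rule can be sketched as follows. This is one possible reading of the procedure rather than the authors' actual script: the trial dictionary layout and function names are hypothetical, and the sketch assumes that means and sample standard deviations are computed on the raw data (outliers included) and that an RT is flagged if it fails either the by-subject or the by-item criterion.

```python
from collections import defaultdict
from statistics import mean, stdev


def _summaries(trials, key):
    """Return {key(trial): (mean RT, sample SD)} for trials sharing a key."""
    groups = defaultdict(list)
    for trial in trials:
        groups[key(trial)].append(trial["rt"])
    return {k: (mean(v), stdev(v) if len(v) > 1 else 0.0)
            for k, v in groups.items()}


def replace_outliers(trials, n_sd=3.0):
    """Replace RTs lying more than n_sd SDs from the subject or item mean.

    Each trial is a dict with keys "subject", "group" ("deaf"/"hearing"),
    "item", "action_class" ("sign"/"non-sign") and "rt" (ms); this layout
    is an assumption of the sketch. Returns (cleaned trials, number replaced).
    """
    by_subject = _summaries(trials, lambda t: (t["subject"], t["action_class"]))
    by_item = _summaries(trials, lambda t: (t["item"], t["group"]))
    cleaned, n_replaced = [], 0
    for trial in trials:
        s_mean, s_sd = by_subject[(trial["subject"], trial["action_class"])]
        i_mean, i_sd = by_item[(trial["item"], trial["group"])]
        is_outlier = (abs(trial["rt"] - s_mean) > n_sd * s_sd
                      or abs(trial["rt"] - i_mean) > n_sd * i_sd)
        # Replacement value: average of the subject mean and the item mean.
        rt = (s_mean + i_mean) / 2 if is_outlier else trial["rt"]
        n_replaced += is_outlier
        cleaned.append({**trial, "rt": rt})
    return cleaned, n_replaced
```

The input list is left unmodified, so the proportion of replaced values can be checked against the original data afterwards.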

RESULTS

To evaluate the contributions of the factors of interest in subjects' performance of this monitoring task, we utilized four-way ANOVAs with Group (deaf or hearing) as a between-subject factor, and within-subjects factors of Lexicality (sign or non-sign), Markedness (marked or unmarked) and Type (1, 2S or 2A). We examined reaction time and accuracy by subjects and items, and report significance results in terms of the minF' statistic (Clark, 1973), a conservative measure that seeks to ensure that results are simultaneously generalizable to both new subjects and new items. We then follow up with planned and post-hoc comparisons when such results are germane to the discussion. Overviews of the RT and accuracy results are presented in Tables 1 and 2, respectively. Standard errors for accuracy were uniformly low, presumably due to the overall high accuracy scores, so for simplicity of presentation, SEs are given only for the RT data.
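For readers unfamiliar with minF', the statistic combines the by-subjects F ratio (F1) and the by-items F ratio (F2) using the standard formula from Clark (1973). The Python sketch below shows that calculation. The function name is hypothetical and the example F values and error degrees of freedom are invented for illustration only, since the separate F1 and F2 values are not reported here.

```python
def min_f_prime(f1, df1_error, f2, df2_error):
    """Compute minF' and its denominator degrees of freedom (Clark, 1973).

    f1, df1_error: F ratio and error df from the by-subjects analysis.
    f2, df2_error: F ratio and error df from the by-items analysis.
    The numerator df of minF' equals the effect's treatment df.
    """
    min_f = (f1 * f2) / (f1 + f2)
    df_denominator = (f1 + f2) ** 2 / (f1 ** 2 / df2_error + f2 ** 2 / df1_error)
    return min_f, df_denominator


# Hypothetical inputs, not values from this study.
min_f, df_den = min_f_prime(f1=10.0, df1_error=54, f2=10.0, df2_error=48)
print(round(min_f, 2), round(df_den, 1))
```

Because the denominator degrees of freedom are derived from this formula, they are generally non-integer, as in the minF' values reported in this section. minF' is also always smaller than both F1 and F2, which is what makes it a conservative test.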

Table 1.

Overview of RT means (and standard errors) in ms for the deaf and hearing groups for various conditions of stimulus Lexicality, handshape Markedness, and Type.

| | Unmarked: Type 1 | Unmarked: Type 2S | Unmarked: Type 2A | Unmarked: All Types | Marked: Type 1 | Marked: Type 2S | Marked: Type 2A | Marked: All Types | Overall: Type 1 | Overall: Type 2S | Overall: Type 2A | Overall: All Types |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Deaf | | | | | | | | | | | | |
| Signs | 683 (14.7) | 622 (14.5) | 654 (14.7) | 653 (12.5) | 595 (13.4) | 638 (13.5) | 664 (13.4) | 632 (11.3) | 639 (12.8) | 630 (12.2) | 659 (11.4) | 643 (10.9) |
| Non-signs | 676 (14.4) | 641 (13.6) | 693 (15.4) | 670 (12.6) | 644 (13.1) | 665 (13.5) | 647 (17.3) | 652 (11.3) | 660 (12.1) | 653 (11.9) | 670 (14.0) | 661 (11.2) |
| Overall | 680 (12.6) | 631 (12.7) | 674 (12.9) | 662 (11.8) | 619 (12.0) | 651 (11.6) | 655 (12.7) | 642 (10.4) | 650 (11.5) | 641 (11.0) | 664 (11.4) | 652 (10.6) |
| Hearing | | | | | | | | | | | | |
| Signs | 788 (19.7) | 709 (19.4) | 755 (19.7) | 751 (16.7) | 679 (18.0) | 763 (18.1) | 859 (18.0) | 767 (15.2) | 733 (17.2) | 736 (16.3) | 807 (15.4) | 759 (14.6) |
| Non-signs | 763 (19.4) | 709 (18.3) | 787 (20.7) | 753 (17.0) | 754 (17.6) | 745 (18.1) | 855 (23.2) | 784 (15.2) | 758 (16.3) | 727 (15.9) | 821 (18.8) | 769 (15.1) |
| Overall | 775 (17.0) | 709 (17.1) | 771 (17.3) | 752 (15.9) | 716 (16.1) | 754 (15.6) | 857 (17.0) | 776 (14.0) | 746 (15.5) | 731 (14.8) | 814 (15.3) | 764 (14.2) |
| All | | | | | | | | | | | | |
| Signs | 735 (12.3) | 666 (12.1) | 705 (12.3) | 702 (10.4) | 637 (11.2) | 700 (11.3) | 761 (11.2) | 699 (9.4) | 686 (10.7) | 683 (10.2) | 733 (9.6) | 701 (9.1) |
| Non-signs | 720 (12.1) | 675 (11.4) | 740 (12.9) | 712 (10.6) | 699 (10.9) | 705 (11.3) | 751 (14.4) | 718 (9.5) | 709 (10.1) | 690 (9.9) | 745 (11.7) | 715 (9.4) |
| Overall | 727 (10.6) | 670 (10.6) | 722 (10.8) | 707 (9.9) | 668 (10.0) | 702 (9.7) | 756 (10.6) | 709 (8.7) | 698 (9.6) | 686 (9.2) | 739 (9.5) | 708 (8.9) |

Table 2.

Overview of accuracy means (%) for the deaf and hearing groups for various conditions of stimulus Lexicality, handshape Markedness, and Type.

| | Unmarked: Type 1 | Unmarked: Type 2S | Unmarked: Type 2A | Unmarked: All Types | Marked: Type 1 | Marked: Type 2S | Marked: Type 2A | Marked: All Types | Overall: Type 1 | Overall: Type 2S | Overall: Type 2A | Overall: All Types |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Deaf | | | | | | | | | | | | |
| Signs | 99.8 | 99.6 | 99.8 | 99.7 | 100.0 | 100.0 | 100.0 | 100.0 | 99.9 | 99.9 | 99.9 | 99.9 |
| Non-signs | 98.6 | 99.9 | 99.9 | 99.7 | 99.9 | 100.0 | 100.0 | 100.0 | 99.4 | 100.0 | 100.0 | 99.9 |
| Overall | 99.3 | 99.8 | 99.9 | 99.7 | 100.0 | 100.0 | 100.0 | 100.0 | 99.8 | 99.9 | 100.0 | 99.9 |
| Hearing | | | | | | | | | | | | |
| Signs | 97.3 | 99.9 | 99.8 | 99.4 | 99.8 | 99.6 | 92.7 | 98.4 | 98.9 | 99.8 | 97.6 | 99.0 |
| Non-signs | 99.8 | 99.7 | 98.1 | 99.4 | 98.7 | 98.6 | 96.2 | 98.0 | 99.4 | 99.3 | 97.2 | 98.8 |
| Overall | 98.9 | 99.9 | 99.2 | 99.4 | 99.3 | 99.2 | 94.6 | 98.2 | 99.2 | 99.6 | 97.4 | 98.9 |
| All | | | | | | | | | | | | |
| Signs | 98.9 | 99.8 | 99.8 | 99.6 | 99.9 | 99.9 | 97.9 | 99.6 | 99.6 | 99.9 | 99.1 | 99.6 |
| Non-signs | 99.4 | 99.8 | 99.3 | 99.5 | 99.4 | 99.6 | 99.0 | 99.4 | 99.4 | 99.7 | 99.2 | 99.5 |
| Overall | 99.1 | 99.8 | 99.6 | 99.6 | 99.8 | 99.8 | 98.5 | 99.5 | 99.5 | 99.8 | 99.2 | 99.5 |

In the RT analysis we found a main effect of Group (minF'(1, 74.0)=32.9, p<0.001) and a marginally significant effect of Type (minF'(2, 47.8)=2.91, p=0.064). Also seen were two-way interactions of Group-Markedness (minF'(1, 84.6)=5.49, p<0.05), Group-Type (minF'(2, 69.7)=3.77, p<0.05), and Markedness-Type (minF'(2, 49.3)=3.69, p<0.05), as well as a three-way interaction of Group-Markedness-Type (minF'(2, 73.8)=3.14, p<0.05). No significant main effect of Lexicality (minF'(1, 47.8)=0.47, p=0.50) or Group-Lexicality interaction (minF'(1, 87.8)=0.27, p=0.60) was observed.

In the accuracy analysis, there was a main effect of Group (minF'(1, 94.8)=7.33, p<0.01), a two-way interaction of Group-Markedness (minF'(1, 90.6)=5.26, p<0.05), and a marginally significant interaction of Group-Type (minF'(2, 81.0)=2.42, p=0.095).

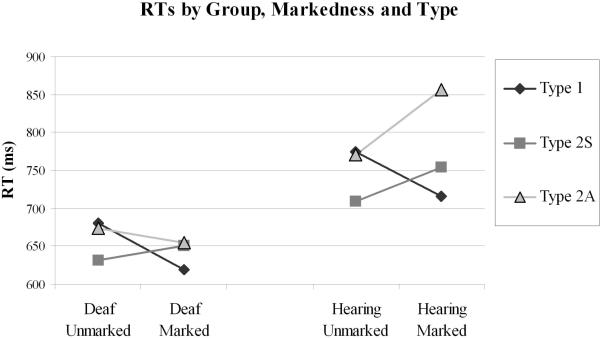

The three-way Group-Markedness-Type interaction for reaction times is illustrated in Figure 2, which allows us to see several important patterns in these data. First, deaf subjects were overall faster than hearing subjects at the detection of handshapes in these dynamic stimuli. The main effects of Group for RT and accuracy are due to the fact that relative to hearing non-signers, deaf signers were faster (652 ms vs. 764 ms for hearing) and more accurate (99.9% vs. 98.9%), which indicates that these data do not exhibit a speed-accuracy trade-off.

Figure 2.

The three-way Group-Markedness-Type interaction for reaction times.

The Group-Markedness interaction in the RT data is due to the fact that the deaf and hearing groups each showed Markedness-based differences in RT, but in the opposite direction, with marked items associated with faster RTs in the deaf group (ΔRT = 19 ms, p<0.01) but slower RTs in the hearing group (ΔRT = 23 ms, p<0.05). This result must however be qualified in light of the interactions with Type discussed below. As with the RT data, no Group-Lexicality interaction was observed in the accuracy data.

Several complex effects of Type and handshape Markedness were also seen. For deaf subjects, robust effects of handshape Markedness are seen in Type 1 stimuli (i.e. one-hand signs and non-signs), whereby marked handshapes were faster to recognize than unmarked handshapes (ΔRT for Type 1 unmarked vs. Type 1 marked = 60.2 ms, p<0.001). Type 2S stimuli showed an opposite-direction effect, which was marginally significant (ΔRT for Type 2S unmarked vs. marked = −19.9 ms, p=0.058). Handshape Markedness effects were not significant in Type 2A forms for the deaf group.

Similar effects were observed in the hearing subjects' data, for whom responses when detecting marked handshapes in one-hand stimuli were also faster than for unmarked handshapes (ΔRT for Type 1 unmarked vs. marked = 58.8 ms, p<0.001). Similar to the deaf subjects' performance, for the hearing group Type 2S stimuli show the opposite pattern, with unmarked handshapes being detected faster than marked handshapes (ΔRT for Type 2S unmarked vs. marked = −44.1 ms, p<0.01). Finally, Type 2A stimuli produce the slowest responses for hearing subjects, for whom unmarked handshapes were more easily detected than marked handshapes (ΔRT for Type 2A unmarked vs. marked = −85.9 ms, p<0.001).

These overall results are generally mirrored in the accuracy data (see Table 2); however, the excellent performance of subjects overall with respect to accuracy constrains strong interpretability of those effects.

Age of Acquisition

There is growing evidence to suggest that non-native signers may differ in the processing of form-based properties of signs (Mayberry & Eichen, 1991; Mayberry & Fischer, 1989; Emmorey, Corina & Bellugi, 1995; Emmorey & Corina, 1990; Corina & Hildebrandt, 2002). In a metalinguistic judgment task, Corina & Hildebrandt (2002) reported that native and non-native signers showed subtle differences in form-based similarity judgments of signs. In that study, deaf native signers rated movement as the most salient property by which to judge form-similarity, while non-native signers were more influenced by handshape similarity. In a recent series of studies of lexical recognition in Spanish Sign Language (Lengua de Signos Española, LSE), native signers seemed less affected by handshape similarity compared to non-native signers (Carreiras et al., 2008). These data indicate that sub-lexical properties of sign languages may be differentially weighted during online sign recognition, and accruing evidence suggests that non-native signers may be more strongly influenced by handshape properties during this process. Because of the demographic breakdown of our deaf subjects, we were able to conduct an analysis which examined whether native signers and non-native signers showed differential effects in the handshape monitoring task.

In order to investigate whether age of exposure influenced the present findings, a four-way ANOVA was run on the deaf subjects' data separately, with Age of Acquisition (AoA: native or non-native) as a between-subjects factor, and within-subjects factors of Lexicality (sign or non-sign), Markedness (marked or unmarked) and Type (1, 2S or 2A). As noted earlier, native signers (n=19) learned ASL from infancy from deaf parents; all others were classified as non-native signers (n=17; self-reported age of acquisition approximate range = [4, 17], mean = 8.6, SD = 3.3). Note that all signers, regardless of age of exposure, used ASL daily and specified that ASL was their preferred form of communication amongst their peers. However, the total number of years of signing was not controlled across our sample. Given the near-ceiling performance of these subjects, accuracy data, which exhibited little variability, were not examined.

The only significant effect in the ANOVA was a Markedness-Type interaction (minF'(2, 54.4)=3.22, p<0.05). This interaction mirrors the one observed in the left side of Figure 2, whereby the deaf subjects showed faster RTs to marked handshapes in Type 1 forms, faster RTs to unmarked handshapes in Type 2S forms and no significant Markedness-related effects for Type 2A forms. There was no main effect of Age of Acquisition and there were no interactions involving Age of Acquisition.
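For readers less familiar with the minF' statistic, the sketch below shows how such a value is conventionally assembled from a by-subjects F1 and a by-items F2, following Clark (1973). It is offered only as an illustration of the formula: the function name `min_f_prime` is hypothetical, and the F values and degrees of freedom in the usage lines are placeholders that are not taken from this study.

```python
def min_f_prime(f1, f2, df_num, df1_den, df2_den):
    """Compute Clark's (1973) min F' and its denominator degrees of freedom.

    f1, f2    -- by-subjects and by-items F ratios for the same effect
    df_num    -- numerator degrees of freedom of the effect
    df1_den   -- denominator degrees of freedom of F1 (by subjects)
    df2_den   -- denominator degrees of freedom of F2 (by items)
    """
    # min F' = F1 * F2 / (F1 + F2)
    min_f = (f1 * f2) / (f1 + f2)
    # Denominator df: (F1 + F2)^2 / (F1^2 / df2_den + F2^2 / df1_den)
    df_den = (f1 + f2) ** 2 / (f1 ** 2 / df2_den + f2 ** 2 / df1_den)
    return min_f, df_num, df_den


# Hypothetical inputs for illustration only (NOT values from the study).
value, df_n, df_d = min_f_prime(f1=8.0, f2=6.0, df_num=2, df1_den=68, df2_den=48)
print(f"minF'({df_n}, {df_d:.1f}) = {value:.2f}")
```

Because minF' can never exceed the smaller of F1 and F2, it is a conservative test, and the denominator degrees of freedom are generally non-integer, as in the value reported above.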

DISCUSSION

The results for the deaf and hearing groups differ substantially, and permit us to draw some conclusions regarding our questions of interest. First, we observed that while there were no pronounced Lexicality effects, deaf signers were overall quicker and more accurate than hearing subjects. This pattern suggests that deaf signers may rely upon their familiarity with the elements of sign forms, such as specific handshapes used in ASL, to speed their judgments. Thus these results provide support for Hypothesis L2: “Deaf signers will show no difference in the monitoring of handshapes in the context of signs relative to non-signs, but deaf signers will be overall quicker than hearing subjects due to familiarity with the structural properties of sign forms.”

However, these data do not allow us to explain the lack of lexicality effects which have been reported in similar spoken language studies. One possible explanation for this may come from Pitt and Samuel's (1995) finding that lexicality effects in a phoneme monitoring task were absent when the target phoneme was located before the uniqueness point of words in which the uniqueness point occurred early in the word. This did not occur when the initial portions of such stimuli were temporally “stretched,” indicating that subjects could make use of lexical information when given enough additional time. Signs, which incorporate more simultaneity and less sequentiality in their structure relative to spoken-language words, may be a sort of analogue of Pitt and Samuel's “early uniqueness point” words.

This is supported by a gating study performed by Emmorey and Corina (1990), who found that signers were able to correctly identify signs after viewing only the initial 240 ms (or about 35%) of the signs, in contrast to Grosjean's (1980) finding that the corresponding figure for spoken-language words is 330 ms (about 83% of the word). Emmorey and Corina (1990) also found that in the perception of signs, location and orientation were the parameters most quickly accessible to subjects (at about 145 ms); these were followed quickly by handshape (30 ms later), then finally by movement. As sign identification is not expected to occur until sometime after this point (at about 240 ms, as just noted), the handshape monitoring task might be seen to function like the early target phoneme condition in Pitt and Samuel's study, resulting in an apparent absence of lexical effects.

Alternatively, the lack of lexicality results in the present study may suggest that handshape information does not act as an organizing principle for the sign lexicon, a possibility that is noted by Thompson, Emmorey and Gollan (2005) to explain findings of their work on the “tip of the fingers” phenomenon in signed language. It is also possible that there may not have been enough statistical power to observe lexical effects in the present case.

A second major finding was the unexpected set of handshape Markedness effects, which often interacted significantly with sign Type (i.e. number of hands and symmetry of handshape and movement within sign forms). For both groups, handshapes that in our experiment were designated as “marked” were identified faster in the one-handed stimuli, while handshapes in two-handed stimuli tended to show an opposite pattern whereby unmarked handshapes were detected faster than marked handshapes. As discussed further below, there were group differences in these patterns that were especially striking for Type 2A stimuli.

Thus depending on the type of stimuli involved, we find support for each of our first two hypotheses for Markedness. Regarding Hypothesis M-1b, “Marked items stand out more readily and so should be associated with faster RTs in a monitoring task,” we find support in the results for one-handed (Type 1) items. In contrast, examination of the effects for two-handed items (i.e. those of Types 2A and 2S) finds results more consistent with Hypothesis M-1a: “The processing of marked items is relatively difficult and should therefore be associated with slower RTs in a monitoring task.”

Several points here are noteworthy. First, given that these Markedness effects held across both signs and non-signs and were observed for both deaf (native and non-native) signers and hearing (sign-naive) subjects, these outcomes speak strongly to an interpretation of Markedness as something other than a purely abstract linguistic formalism. Rather, the data suggest a perceptual basis for these effects. Thus we find support for Hypothesis M-2b: “Markedness is a linguistic manifestation of more general production and/or perceptual constraints, so Markedness effects should be seen in both groups.” Correspondingly, these results argue against the premise that Markedness should best be considered a strictly linguistic property.

Furthermore, the fact that Markedness effects during handshape monitoring changed as a function of whether subjects were watching a one- or two-handed stimulus suggests that perceptual complexity is a factor in these decisions. We speculate that in monitoring a dynamic stimulus where only one manual articulator is relevant, the added complexity of the marked handshape variants makes them easy targets to discriminate. However, in two-handed dynamic stimulus forms, the presence of multiple sources of information may overload the discrimination process, and in these cases the increased handshape complexity reduces discriminability (or increases confusability), leading to slower detection times for marked handshapes.

An interesting related question is whether there could be neighborhood effects disguised as Markedness effects. However, our findings showed that non-signers exhibited effects related to Markedness and Type that were broadly consistent with those of deaf signers, which suggests that physical properties of the stimuli act as a much more important determinant of Markedness.

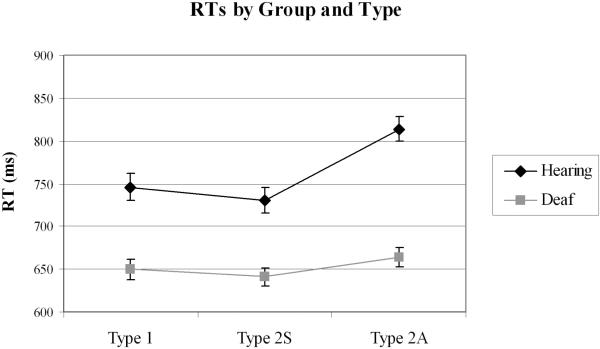

A closer examination of the results from the two-handed forms suggests that not all effects are perceptually driven; rather, some language-specific knowledge may be playing a role in these outcomes. This is especially apparent when considering the Type 2A stimuli. Recall that for these forms, deaf and hearing subjects performed quite differently, with deaf subjects showing a non-significant trend for faster detection of marked forms, and the hearing group overwhelmingly preferring unmarked handshapes. As noted earlier, in signs (and our non-signs) of Type 2A, the non-dominant hand is relatively restricted in its behavior. In some linguistic analyses this hand is considered a body location (i.e. “base hand”): just as the sign SCOTLAND is articulated on the shoulder and the sign FATHER on the head, other signs may be articulated with the dominant hand upon a relatively static, non-moving (albeit configurable) handshape formed on the non-dominant hand (see Sandler & Lillo-Martin, 2006). Though subjects were not overtly informed of these issues, it appears that deaf signers had little trouble disregarding the non-dominant hand in these asymmetrical Type 2A forms, while hearing subjects showed significant slowing. Figure 3 provides an illustration of the significant two-way Group-Type interaction, which clearly illustrates these group differences.

Figure 3.

The interaction of Group and Type in the RT results.

Borrowing terminology from the visual attention literature, we can say that it is as if these Type 2A stimuli had competing “to-be-ignored flankers.” Deaf subjects were not misled by this additional hand information; in fact they appear to treat these Type 2A forms more along the lines of the one-handed Type 1 forms, showing a trend towards speeded reaction times to marked handshapes under this condition. Hearing subjects, unfamiliar with these sign constraints, in contrast showed Markedness preferences that patterned with Type 2S forms (i.e. with RTs to unmarked items faster than to marked items). Finally, it is worth noting that for signers, these effects did not differ on the basis of lexical status, an indication that signers may have been using the same strategies for both signs and non-signs. This may imply that this ability to suppress the irrelevant handshape information is a pre-lexical property, though no doubt experience-dependent.

With respect to the factor of Type, as alluded to above, modest support for each of our four hypotheses has been revealed. In differing contexts of Markedness, we have seen this for both Hypothesis T1, “In single-handed (Type 1) sign forms, the target handshapes are seen most clearly and unambiguously and hence these will yield the fastest RTs,” and Hypothesis T2S, “Because symmetrical two-handed (Type 2S) sign forms are articulated with the same target handshapes on both hands, they offer additional and unambiguous information to subjects relative to one-handed signs, so will be associated with the fastest RTs.”

Regarding Hypothesis T2A-1, “The inconsistent information present in two-handed asymmetrical (Type 2A) sign forms will result in a slowing of RTs relative to the other two stimulus Types,” this pattern is captured in the Group-Type interaction shown in Figure 3. Note that while one-handed and symmetrical two-handed items are associated with relatively fast RTs, items of Type 2A show a different pattern. However, as discussed above, the deaf subjects show less disruption for Type 2A forms than do the hearing subjects.5 This is consistent with Hypothesis T2A-2, “If a Group by Type interaction is observed, this will be at least partially due to deaf signers being more likely to ignore the non-dominant hand in making their judgments, resulting in less slowing for Type 2A items for deaf subjects relative to hearing subjects.”

We have already noted that deaf subjects tended to be more accurate and faster in the detection of handshapes in these dynamic sign forms, relative to hearing subjects. It is noteworthy that these effects held even in the case of non-signs, suggesting that long-term experience with language as expressed in a particular modality may attune the perceptual categories relevant to the processing of articulatory forms distinctive to that modality. However these effects did not differ with respect to age of acquisition (deaf native and deaf non-native signers were equally fast at monitoring for these handshapes), which indicates that this kind of perceptual learning can be attained even with late exposure to sign language.

CONCLUSION

This research serves as an important early step in exploring the degree to which the processes involved in recognition of sub-lexical properties of signs are driven by perceptual factors that are common to human action recognition in general or entail specialized linguistic processing. The data provide evidence that familiarity with a signed language may increase perceivers' facility in identifying sub-lexical components of sign forms, but this facility does not appear to depend on perceivers' age of acquisition of such a language. The lack of significant Lexicality-based effects and interactions may be related to the fact that in the structural composition of sign formation, handshape information is available relatively quickly (Emmorey & Corina, 1990). An alternative hypothesis is that this task does not automatically activate lexical properties of sign forms and may reflect pre-lexical rather than lexical processing.

With respect to perceptual factors, we found evidence that both signing and sign-naive subjects were influenced by the surface physical forms of the signs, as was seen in the generally similar performance of the two groups with respect to stimulus Type, and the tendency for both groups to respond more quickly to marked signs if they were one-handed and to unmarked signs if they were two-handed. The fact that the hearing subjects were sensitive to Markedness-based differences in the stimuli, and patterned like the deaf group with respect to the Markedness-Type interaction, argues against Markedness being a purely linguistic property. Instead, our findings support the contention that Markedness derives from general articulatory and/or perceptual properties of items contained within a linguistic system.

Acknowledgements

This work was supported in part by National Institutes of Health–NIDCD Grant 2ROIDC030991, awarded to David Corina. We would like to thank the study participants, Gallaudet University, and Sarah Hafer and Jeff Greer for serving as sign models.

APPENDIX: LIST OF STIMULI

Following is a listing of the target stimuli used in this study. As is customary in the literature on sign language, glosses of ASL signs are given in all capital letters. Non-signs were formed from signs by substituting a different handshape; for example, the non-sign Tell.F is identical to the sign TELL except that Tell.F uses an “F” handshape instead of a “1” handshape. Of the handshapes used in target items, “S,” “5” and “A-thumb” are considered unmarked, while “F,” “I” and “V” are marked (Battison, 1978). Signs and non-signs are designated as Type 1 (articulated with the dominant hand only), 2S (two-handed, with symmetry in handshape and movement), or 2A (two-handed, with asymmetry between the hands in shape and/or movement).

| SIGNS | Handshape | HS Marked? | Sign Type |
|---|---|---|---|
| ARTIST | I | y | 2A |
| INSURANCE | I | y | 1 |
| ISLAND | I | y | 2A |
| SPAGHETTI | I | y | 2S |
| FURNITURE | F | y | 1 |
| SOON | F | y | 1 |
| VOTE | F | y | 2A |
| FREE | F | y | 2S |
| PREACH | F | y | 1 |
| DETEST | 5 | n | 1 |
| TREE | 5 | n | 1 |
| FINISH | 5 | n | 2S |
| PARENTS | 5 | n | 1 |
| SAVE | V | y | 2A |
| VERB | V | y | 1 |
| VISIT | V | y | 2S |
| READ | V | y | 2A |
| ANY | A-thumb | n | 1 |
| GIRL | A-thumb | n | 1 |
| FAR | A-thumb | n | 1 |
| GAS | A-thumb | n | 2A |
| PAGETURN | A-thumb | n | 2A |
| CAR | S | n | 2S |
| SAFE | S | n | 2S |
| SHOES | S | n | 2S |
| WORK | S | n | 2S |
| CAN | S | n | 1 |

| NON-SIGNS | Handshape | HS Marked? | Sign Type |
|---|---|---|---|
| Candy.I | I | y | 1 |
| Cookie.I | I | y | 2A |
| Order.I | I | y | 2S |
| Nice.I | I | y | 2A |
| No_Way.I | I | y | 2S |
| Bachelor.F | F | y | 1 |
| Black.F | F | y | 1 |
| Tell.F | F | y | 1 |
| Appointment.5 | 5 | n | 2A |
| Face.5 | 5 | n | 1 |
| Orbit.5 | 5 | n | 2A |
| Some.5 | 5 | n | 2A |
| Butter.5 | 5 | n | 2A |
| Class.V | V | y | 2S |
| Dizzy.V | V | y | 1 |
| Pah.V | V | y | 2S |
| Tree.V | V | y | 1 |
| Snob.A | A-thumb | n | 1 |
| See.A | A-thumb | n | 1 |
| Guilty.A | A-thumb | n | 1 |
| Order.A | A-thumb | n | 2S |
| Roommate.A | A-thumb | n | 2S |
| Really.S | S | n | 1 |
| Order.S | S | n | 2S |
| Proof.S | S | n | 2A |
| Skiing.S | S | n | 2S |
| Vomit.S | S | n | 1 |
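The non-sign naming convention and the Markedness classification described at the start of this appendix can be expressed compactly. The following is a minimal sketch, not part of the original study materials: the function name `parse_non_sign` and the returned field names are hypothetical, and the mapping of the suffix “.A” to the A-thumb handshape is inferred from the table above.

```python
# Markedness classes as stated in the appendix text (after Battison, 1978).
UNMARKED = {"S", "5", "A-thumb"}
MARKED = {"F", "I", "V"}

# Assumption inferred from the table: labels ending in ".A" use A-thumb.
SUFFIX_TO_HANDSHAPE = {"A": "A-thumb"}


def parse_non_sign(label):
    """Split a non-sign label such as 'Tell.F' into base sign and handshape."""
    base, suffix = label.rsplit(".", 1)
    handshape = SUFFIX_TO_HANDSHAPE.get(suffix, suffix)
    if handshape not in UNMARKED | MARKED:
        raise ValueError(f"unknown target handshape: {handshape!r}")
    return {
        "base_sign": base.upper(),        # ASL glosses are written in capitals
        "handshape": handshape,           # substituted handshape
        "marked": handshape in MARKED,    # True for F, I and V
    }


print(parse_non_sign("Tell.F"))
print(parse_non_sign("Snob.A"))
```

For example, `parse_non_sign("Tell.F")` identifies TELL as the base sign and F as the substituted, marked handshape.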

Footnotes

The handshape A-thumb (see Figure 1) differs from the handshape A in that it has an extended thumb. Battison (1978) does not specifically mention the A vs. A-thumb distinction, but in a footnote on p. 57, writes: “For the purpose of simplifying the discussion here, this ‘select set of seven handshapes’ includes phonetically distinct variants which do not always contrast at any underlying level of representation,” then as an example gives S as a “permissible variant” of A. Therefore we feel we are on good grounds in considering A-thumb also to be such a variant of A, and therefore to be unmarked to the extent that A is.

Our use of the word “type” should be distinguished from Battison's use of the term in his study of sign language phonotactics. Battison's Types 1, 2 and 3 distinguish three kinds of two-handed signs, which can be loosely described respectively as: symmetrical; asymmetrical but with the same handshape on both hands; and asymmetrical with two different handshapes.

We consider Type to be a lexical property of a given sign, though we acknowledge that everyday performance constraints (e.g. driving while signing) may restructure the form of the sign (see Battison, 1978, for a detailed exposition).

This hypothesis suggests that one aspect of the lexical information deaf signers bring to bear upon sign recognition is information relevant to handedness phonotactic constraints in ASL (cf. Battison, 1978).

Initial testing of the 60 initially-chosen target stimuli showed that a handful of these stood out from the rest in that they were particularly difficult relative to the other stimuli. An independent study in which subjects were asked to perform difficulty judgments on the stimuli substantiated these differences; as a result, three non-signs and three signs associated with particularly low mean accuracy scores in the initial testing were dropped. Subjects who participated in the difficulty judgment study were not included as subjects in the main study.

After completing the experiment, when asked about their responses to Type 2A stimuli many deaf subjects said that they had ignored the non-dominant hand altogether. Hearing non-signers did not in general comment on this point.

REFERENCES

- Ann J. Frequency of occurrence and ease of articulation of sign language handshapes: The Taiwanese example. Gallaudet University Press; Washington, D.C.: 2006.

- Baker S, Idsardi W, Golinkoff R, Petitto I. The perception of handshapes in American Sign Language. Memory & Cognition. 2005;33:887–904. doi: 10.3758/bf03193083.

- Battison R. Lexical borrowing in American Sign Language. Linstok Press; Silver Spring, MD: 1978.

- Battison R, Markowicz H, Woodward JC. A good rule of thumb: Variable phonology in American Sign Language. In: Fasold RW, Shuy R, editors. Analyzing variation in language. Georgetown University Press; Washington, DC: 1975.

- Bayley R, Lucas C, Rose M. Phonological variation in American Sign Language: The case of 1 handshape. Language Variation and Change. 2002:19–53.

- Carreiras M, Gutierrez-Sigu E, Baquero S, Corina DP. Lexical processing in Spanish Sign Language. Journal of Memory and Language. 2008;58:100–122.

- Clark HH. The language-as-fixed-effect fallacy. Journal of Verbal Learning and Verbal Behaviour. 1973;12:335–359.

- Coltheart M, Davelaar E, Jonasson JF, Besner D. Access to the internal lexicon. In: Dornic S, editor. Attention and performance. Vol. VI. Erlbaum; Hillsdale, NJ: 1977. pp. 535–555.

- Connine CM, Titone D. Phoneme monitoring. Language and Cognitive Processes. 1996;11:635–64.

- Corina DP, Emmorey K. Lexical priming in American Sign Language. 34th annual meeting of the Psychonomics Society; Washington, DC. Nov, 1993. 1993.

- Corina DP, Hildebrandt U. Psycholinguistic investigations of phonological structure in ASL. In: Meier RP, Cormier K, et al., editors. Modality and structure in signed and spoken language. Cambridge University Press; New York: 2002. pp. 88–111.

- Corina DP, Knapp H. Sign language processing and the mirror neuron system. Cortex. 2006;42:4, 529–539. doi: 10.1016/s0010-9452(08)70393-9.

- Cutler A, Mehler J, Norris D, Segui J. Phoneme identification and the lexicon. Cognitive Psychology. 1987;19:141–177.

- Cutler A, Norris D. Monitoring sentence comprehension. In: Cooper WE, Walker ETC, editors. Language processing: Psycholinguistic studies presented to Merrill Garrett. Erlbaum; Hillsdale, NJ: 1979. pp. 113–134.

- Cutler A, van Ooijen B, Norris D, Sánchez-Casas R. Speeded detection of vowels: A cross-linguistic study. Perception and Psychophysics. 1996;58:807–822. doi: 10.3758/bf03205485.

- Dye MWG, Shih S. Phonological priming in British Sign Language. In: Goldstein LM, Whalen DH, Best CT, editors. Papers in laboratory of phonology. Vol. 8. Mouton de Gruyter; Berlin: 2006.

- Eimas PD, Hornstein SB, Payton P. Attention and the role of dual codes in phoneme monitoring. Journal of Memory and Language. 1990;29:160–180.

- Emmorey K. Language, cognition, and the brain: Insights from sign language research. Lawrence Erlbaum and Associates; Mahwah, NJ: 2002.

- Emmorey K, Corina D. Lexical recognition in sign language: Effects of phonetic structure and morphology. Perceptual & Motor Skills. 1990;71:1227–1252. doi: 10.2466/pms.1990.71.3f.1227.

- Emmorey K, Corina D, Bellugi U. Differential processing of topographic and referential functional of space. In: Emmorey K, Reilly J, editors. Language, gesture and space. Lawrence Erlbaum Associates; Mahwah, NJ: 1995. pp. 43–62.

- Emmorey K, McCullough S, Brentari D. Categorical perception in American Sign Language. Language & Cognitive Processes. 2003;18:21–45.

- Forster KI. Accessing the mental lexicon. In: Wales RJ, Walker EW, editors. New approaches to language mechanisms. North-Holland; Amsterdam: 1976. pp. 257–287.

- Forster KI, Chambers SM. Lexical access and naming time. Journal of Verbal Learning and Verbal Behavior. 1973;12:627–635.

- Foss D, Swinney D. On the psychological reality of the phoneme: Perception, identification and consciousness. Journal of Verbal Learning and Verbal Behavior. 1973;12:246–257.

- Goldinger SD, Luce PA, Pisoni DB, Marcario JK. Form-based priming in spoken word recognition: The roles of competition and bias. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1992;18:1211–1238. doi: 10.1037//0278-7393.18.6.1211.

- Gough PB, Cosky MJ. One second of reading again. In: Castellan NJ Jr., Pisoni DB, Potts GR, editors. Cognitive Theory, II. Erlbaum; Hillsdale, N.J.: 1977.

- Gow DW, Segawa JA. Articulatory mediation of speech perception: A causal analysis of multi-modal imaging data. Cognition. 2009;110:222–236. doi: 10.1016/j.cognition.2008.11.011.

- Gow DW, Segawa JA, Ahlfors SP, Lin FH. Lexical influences on speech perception: A Granger causality analysis of MEG and EEG source estimates. Neuroimage. 2008;43:614–623. doi: 10.1016/j.neuroimage.2008.07.027.

- Grosjean F. Spoken word recognition processes and the gating paradigm. Perception and Psychophysics. 1980;28:267–283. doi: 10.3758/bf03204386.

- Hildebrandt UC, Corina DP. Phonological similarity in American Sign Language. Language and Cognitive Processes. 2002;17:593–612.

- Jakobson R. Child language, aphasia and phonological universals. Mouton; The Hague: 1941/1968.

- Klima ES, Bellugi U. The signs of language. Harvard University Press; Cambridge, MA: 1979.

- Mayberry RI, Witcher P. Age of acquisition effects on lexical access in ASL: Evidence for the psychological reality of phonological processing in sign language. 30th Boston University Conference on Language Development. 2005.

- McClelland JL, Elman JL. The TRACE model of speech perception. Cognitive Psychology. 1986;18:1–86. doi: 10.1016/0010-0285(86)90015-0.

- Monsell S, Hirsh KW. Competitor priming in spoken word recognition. Journal of Experimental Psychology: Learning, Memory and Cognition. 1998;24:1495–1520. doi: 10.1037//0278-7393.24.6.1495.

- Morford JP, Carlson ML. Sign perception and recognition in non-native signers of ASL. forthcoming. doi: 10.1080/15475441.2011.543393.

- Norris D, McQueen JM, Cutler A. Merging information in speech recognition: Feedback is never necessary. Behavioral and Brain Sciences. 2000;23:299–370. doi: 10.1017/s0140525x00003241.

- Pitt MA, Samuel AG. An empirical and meta-analytic evaluation of the phoneme identification task. Journal of Experimental Psychology: Human Perception and Performance. 1993;19:699–725. doi: 10.1037//0096-1523.19.4.699.

- Pitt MA, Samuel AG. Lexical and sublexical feedback in auditory word recognition. Cognitive Psychology. 1995;29:149–188. doi: 10.1006/cogp.1995.1014.

- Radeau M, Morais J, Segui J. Phonological priming between monosyllabic spoken words. Journal of Experimental Psychology: Human Perception & Performance. 1995;21:1297–1311.

- Sandler W, Lillo-Martin D. Sign Language and Linguistic Universals. Cambridge University Press; Cambridge, UK: 2006.

- Savin HB, Bever TG. The nonperceptual reality of the phoneme. Journal of Verbal Learning and Verbal Behavior. 1970;9:295–302.

- Segui J, Frauenfelder U, Mehler J. Phoneme monitoring, syllable monitoring and lexical access. British Journal of Psychology. 1981;72:471–477.

- Slowiaczek LM, Hamburger M. Prelexical facilitation and lexical interference in auditory word recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1992;18:1239–1250. doi: 10.1037//0278-7393.18.6.1239.

- Slowiaczek LM, Pisoni DB. Effects of phonological similarity on priming in auditory lexical decision. Memory & Cognition. 1986;14:230–237. doi: 10.3758/bf03197698.

- Stokoe W. Studies in Linguistics, Occasional Papers. Vol. 8. Linstok Press; Silver Spring, MD: 1960. Sign language structure: An outline of the visual communication systems of the American Deaf.

- Thompson RL, Emmorey K, Gollan TH. “Tip of the fingers” experiences by deaf signers: Insights into the organization of sign-based lexicon. Psychological Science. 2005;16:856–860. doi: 10.1111/j.1467-9280.2005.01626.x.

- Trubetzkoy N. Principles of phonology (translation of Grundzüge der Phonologie) University of California Press; Berkeley and Los Angeles: 1939/1969.

- van Ooijen B, Cutler A, Norris D. Detection of vowels and consonants with minimal acoustic variation. Speech Communication. 1992;11:101–108.

- Vitevitch MS. Influence of onset density on spoken word recognition. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:270–278. doi: 10.1037//0096-1523.28.2.270.

- Warren RM. Perceptual restoration of missing speech sounds. Science. 1970;167:392–393. doi: 10.1126/science.167.3917.392.

- Wheater CP, Cook PA. Using statistics to understand the environment. Routledge; London: 2000.

- Wilbur R. American Sign Language: Linguistic and applied dimensions. College Hill; Boston: 1987.

- Willerman R. The phonetics of pronouns: Articulatory bases of markedness. Academic dissertation, University of Texas; Austin: 1994.