Abstract

Health information exchange (HIE) is an avenue to improving patient care and an important priority under the Meaningful Use requirements. However, we know very little about how HIE systems are used. Understanding how healthcare professionals actually use HIE systems will provide practical insights for system evaluation, help guide system improvement, and help organizations assess performance. We developed a novel way of describing professionals’ HIE usage using the log files from an operational HIE-facilitating organization whose system employed a webpage-style interface. The number, types, and variety of screens viewed served to cluster all sessions into five categories of HIE usage: minimal usage, repetitive searching, clinical information, mixed information, and demographic information. This method reduced 1,661 different patterns to five recognizable groups for analysis. Overall, most users engaged with the system in a minimal fashion. In terms of user characteristics, minimal usage was highest among physicians, and clinical information usage was highest among nurses. Usage also differed by organization, with repetitive searching most common in settings with scheduled encounters and uncommon in the faster-paced emergency department. Lastly, usage varied by the timing of the patient encounter. Within a single HIE system, discernible types of user behavior existed and varied across jobs, organizations, and time. This approach relies on objective data, can be replicated, and reveals substantial variation in user behavior that simple measures of adoption or any access would obscure. It can help leaders and evaluators assess their own and other organizations.

Keywords: health information technology, information seeking behavior, workplace, evaluation

Introduction

The federal government, industry leaders, and healthcare reformers have clearly identified and supported health information exchange (HIE) as one of the key health information technologies that will transform the healthcare system. As stated by the President’s Council of Advisors on Science and Technology, “Data exchange and aggregation are central to realizing the potential benefits of health IT” [1]. In addition to government endorsement, organizations such as the Healthcare Information & Management Systems Society [2] and the American Health Information Management Association [3] support HIE efforts, objectives, and technologies. Most importantly, the information exchange requirements for electronic health records (EHR) in the “Meaningful Use” criteria virtually require the adoption and implementation of HIE [4, 5].

HIE has the potential to improve healthcare along multiple dimensions because the efficient exchange of patient information is critical to nearly all job types and roles within health service organizations. For example, more information at the point of care may fill knowledge gaps and lead to better decisions by providers [6]. Likewise, access to diagnostic tests and laboratory reports from other organizations may decrease duplicative testing, thereby saving time and money [7]. Further, information sharing among disparate providers may improve the coordination of patient care [8]. Lastly, patient records aggregated across organizational boundaries can help public health agencies monitor the health of their communities [9].

Despite the promised benefits and the wide applicability of HIE to the work of many health organizations and professionals, we know very little about how HIE systems are used. In general, the evidence indicates that most organizations engage in low levels of HIE system usage [10–15]. Unfortunately, previous research has primarily measured HIE system use as whether or not the system was accessed [16]. As a result, prior work lacks an empirical examination of actual HIE system usage and of how usage varies according to user roles and workplace.

Rationale

We argue that a better understanding of HIE system usage has practical importance from evaluation, improvement, and policy perspectives. First, to evaluate HIE and other health information technology efforts, health organization administrators and researchers often consider individual user acceptance or usage as indicators of successful implementation [16–19]. Individual usage is one metric for monitoring the progress and extent of HIE implementation. However, a simple binary (yes/no) measure of usage fails to describe when users engage with the system, what types of information they seek, which features they use, or how usage relates to job-related tasks [20]. The literature on EHR usage already indicates that users do not interact with systems in a uniform fashion [21, 22]. A more descriptive approach to measuring HIE usage would benefit implementation monitoring and ongoing evaluation by stratifying users into more informative categories that can be targeted for additional training, support, and/or encouragement.

Second, a better understanding of HIE system usage provides guidance for system improvement. Given the complex nature of healthcare and the diverse settings where HIE systems are used (e.g., hospitals, emergency departments, medical groups, insurers, laboratories, public health agencies, and even long-term care organizations), users of HIE systems have different information needs [14, 15, 23–25]. In addition, HIE systems are used by a broader set of users than many other clinical or administrative information systems. Because access to previously unavailable patient information is expected to improve medical decision making, discussions of HIE system impact frequently focus on physicians as HIE users [6]. However, reports on existing HIE systems suggest a broader user base with a diverse set of information needs. Potential HIE information users include nurses, registration clerks, social workers, office managers, executives, and public health professionals [9, 13, 15, 23, 26]. Furthermore, recent analyses by Johnson and colleagues [27] demonstrate that HIE system access can vary by job type and location. More descriptive measures of HIE system usage will identify opportunities for system improvements, such as interface redesign and content tailoring, to meet the needs of a diverse set of users.

Lastly, policy makers and organizational leaders may benefit significantly from a better understanding of HIE system use. Historically, organizations have struggled to establish the return on investment for their HIE efforts [28] and/or the organizational performance gains from investments in health information technology [29]. Furthermore, given significant political expectations, policy makers must measure and evaluate the return on taxpayer investment. Organizational benefits do not accrue automatically from buying information systems or technology, but from actually using them [30]. Examining HIE system usage in detail and by organizational type may help measure and understand the link between system adoption and organizational performance.

This analysis applies a novel characterization of HIE system usage derived from system user log files. It also provides insight into HIE system usage by job type and by place of work, two dimensions that are only beginning to be understood.

Methods

Data source

For this study, we analyzed the system user logs obtained from the Integrated Care Collaboration (ICC) of Central Texas for the period from January 1, 2006, to June 30, 2009. ICC facilitates health information exchange among organizations that serve the medically indigent population of Central Texas [13, 31, 32]. Area safety-net providers (i.e., multi-hospital systems, public and private clinics, governmental agencies operating federally qualified health centers, and public health agencies) contribute patient-level clinical and demographic data to I-Care, the ICC’s master patient index and clinical data repository. This proprietary, web-based, centralized database exists independently of each participating organization’s clinical data repository. ICC members vary in their use of electronic records, both by location and over time. For example, the Federally Qualified Health Centers and hospitals are longstanding EHR users, whereas several outpatient clinics were recent EHR adopters or used only practice management systems. In the I-Care database, ICC compiles each patient record by matching the I-Care master patient index with other patient identifiers (e.g., name, date of birth, address) and through periodic manual review by ICC system administrators.

Authorized users at participating healthcare organizations access the database via a secure web interface. Users view and query patient records in I-Care through a series of specialized webpages (“screens”). I-Care includes 10 different screens covering patient demographics, recent utilization history, prescribed medications, and other topic areas (see Table 1 for a listing of the screens and the percentage of user sessions that included each screen). Some screens summarize information from multiple sources in tables. Other screens provide detailed records of particular encounters or medications. During the study period, the organization and display of information within I-Care did not follow a particular standard, nor could the system generate a Continuity of Care Document (CCD). To comply with the Health Insurance Portability and Accountability Act of 1996 (HIPAA), I-Care generates electronic logs documenting the date and time of usage, the patient records viewed, and the screens viewed. To focus our examination on healthcare workers as end users, we excluded ICC and other database administrators from the sample.

Table 1.

Description of available I-Care system screens used for pattern classification

| Screen type | Screen name & description | Label | % of sessions (n=105,705)* |
|---|---|---|---|
| Administrative | Authorization report – consent for HIE inclusion | AH | 0.5% |
| Administrative | Funding report – payor history | FR | 5.1% |
| Administrative | Face sheet – demographic summary | FS | 5.9% |
| Administrative | Patient profile – demographics & contact information | PP | 12.0% |
| Clinical | Medication report – detail on single medication | MD | 0.1% |
| Clinical | Encounter detail – detailed record of encounter | ED | 2.2% |
| Clinical | Medication tab – prescribed medications | MT | 14.4% |
| Clinical | Encounter tab – table of most recent encounters | ET | 95.8% |
| Navigational | Miscellaneous report selection | MR | 2.0% |
| Navigational | Select patient – patient record search page | SP | 100.0% |

*Percentage of all user sessions that included the screen. Because a session can include several screens, the percentages do not sum to 100%.

Measuring system usage

We defined a unique user session as all system viewing activity (i.e., screens accessed) by a given user for a given patient on a given date. The sample included 105,705 unique user sessions. For each session, we refer to the sequence of I-Care screens accessed as a usage pattern; the sample included 1,661 different usage patterns. To understand usage variation across the user base, we applied an existing framework to classify each pattern according to its length, breadth, and information category [33].
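The session definition above amounts to grouping audit-log rows by user, patient, and calendar date, then ordering each group’s screens in time. The Python sketch below illustrates that step under stated assumptions: the `LogRecord` fields (`user_id`, `patient_id`, `timestamp`, `screen`) are a hypothetical schema, since the structure of the I-Care log is not described here, and the helper names are illustrative rather than part of the study’s workflow.

```python
from collections import defaultdict
from datetime import datetime
from typing import NamedTuple


class LogRecord(NamedTuple):
    """One audit-log row (hypothetical schema, not the actual I-Care log)."""
    user_id: str
    patient_id: str
    timestamp: datetime
    screen: str  # two-letter screen label from Table 1, e.g. "SP", "ET"


def build_sessions(records):
    """Group log rows into sessions keyed by (user, patient, calendar date).

    Returns a dict mapping each session key to its usage pattern: the tuple
    of screens viewed in that session, ordered by timestamp.
    """
    grouped = defaultdict(list)
    for rec in records:
        key = (rec.user_id, rec.patient_id, rec.timestamp.date())
        grouped[key].append(rec)
    return {
        key: tuple(r.screen for r in sorted(rows, key=lambda r: r.timestamp))
        for key, rows in grouped.items()
    }


def distinct_patterns(sessions):
    """Number of different usage patterns across all sessions."""
    return len(set(sessions.values()))
```

Applied to the full log, `len(build_sessions(...))` would correspond to the session count and `distinct_patterns(...)` to the number of different usage patterns, provided the hypothetical schema matches the real log.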

The usage pattern length was the total number of screens viewed in a session. We defined usage pattern breadth as the number of different screens viewed during a session. To distinguish between these two measures, consider the following two patterns: 1) Select Patient + Encounter Tab + Select Patient + Encounter Tab and 2) Select Patient + Encounter Tab + Medication Tab + Funding Report. Both patterns have the same length (four screens). However, their breadth differs, because the second pattern includes a greater variety of screens (four versus two). Lastly, the authors, along with three health services researchers and two members of the ICC staff, classified each system screen as clinical, administrative, or navigational depending on the type of information it contained (see Table 1). The inter-rater reliability for categorizing screens was 0.77, and final agreement was obtained by consensus.
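The two measures, together with the screen-to-category mapping from Table 1, can be expressed in a few lines. This is a minimal sketch: the dictionary simply transcribes Table 1, while the function names are hypothetical.

```python
# Information category of each screen label, transcribed from Table 1.
SCREEN_CATEGORY = {
    "AH": "administrative", "FR": "administrative",
    "FS": "administrative", "PP": "administrative",
    "MD": "clinical", "ED": "clinical", "MT": "clinical", "ET": "clinical",
    "MR": "navigational", "SP": "navigational",
}


def pattern_length(pattern):
    """Total number of screens viewed in a session, counting repeat views."""
    return len(pattern)


def pattern_breadth(pattern):
    """Number of different screens viewed in a session."""
    return len(set(pattern))


def categories_viewed(pattern):
    """Set of information categories (Table 1) touched by a session."""
    return {SCREEN_CATEGORY[screen] for screen in pattern}
```

For the two example patterns in the text, `pattern_length` returns 4 for both, while `pattern_breadth` returns 2 and 4, respectively.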

We used hierarchical cluster analysis with single linkage to suggest pattern groupings based on length, breadth, and information category. We visually inspected the resulting groupings using dendrograms. Within the identified clusters, we looked for consistency of sequencing to arrive at categories of usage. We identified five usage categories, summarized in Table 2. In minimal use sessions (61.8% of all sessions), users accessed only two screens: the select patient (i.e., patient search) screen and the most recent encounters summary screen. These two screens serve as the “gateway” to most other screens. In the repetitive search category (11.2%), users accessed the same two screens as in minimal use but then cycled repeatedly between the search screen and the encounter summary screen within a single session. Beyond these two initial screens, 11.6% of sessions viewed only clinical information screens (clinical usage). We labeled patterns that viewed both clinical and demographic screens as mixed information usage (11.3%). The final category included sessions that viewed only demographic information (4.2%). We could not classify 35 user sessions (0.03%) and excluded them as too few for meaningful analysis.
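To make the single-linkage step concrete, the sketch below clusters usage patterns on a small feature vector. It is an illustration, not the authors’ procedure: the feature encoding (length, breadth, and counts of distinct clinical and administrative screens) and the cut height are assumptions, since the text does not specify how information category entered the distance calculation. It relies on the fact that cutting a single-linkage dendrogram at height h yields the connected components of the graph that links all pairs of points within distance h, so a union-find pass is sufficient.

```python
import math
from itertools import combinations

CLINICAL = {"MD", "ED", "MT", "ET"}        # per Table 1
ADMINISTRATIVE = {"AH", "FR", "FS", "PP"}  # per Table 1


def features(pattern):
    """Hypothetical feature vector: length, breadth, and the number of
    distinct clinical and administrative screens viewed."""
    distinct = set(pattern)
    return (
        len(pattern),
        len(distinct),
        len(distinct & CLINICAL),
        len(distinct & ADMINISTRATIVE),
    )


def single_linkage_clusters(patterns, cut_height):
    """Flat clusters from a single-linkage dendrogram cut at `cut_height`.

    Equivalent to the connected components of the graph joining every pair
    of patterns whose Euclidean feature distance is <= cut_height.
    """
    parent = list(range(len(patterns)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    vectors = [features(p) for p in patterns]
    for i, j in combinations(range(len(patterns)), 2):
        if math.dist(vectors[i], vectors[j]) <= cut_height:
            parent[find(i)] = find(j)

    clusters = {}
    for idx, pattern in enumerate(patterns):
        clusters.setdefault(find(idx), []).append(pattern)
    return list(clusters.values())
```

Lowering `cut_height` yields more, smaller clusters. Single linkage is prone to chaining dissimilar patterns together through intermediate ones, which is one reason the visual check of dendrograms and of within-cluster sequencing described above remains useful. SciPy’s `scipy.cluster.hierarchy.linkage(X, method="single")` followed by `fcluster(Z, t, criterion="distance")` is an established alternative that also produces the dendrogram.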

Table 2.

I-Care usage categories

| Usage category | Description | Characteristic pattern(s) | Frequency, % (n) |
|---|---|---|---|
| Clinical | Only viewed screens with clinical information | SP-ET-MT; SP-ET-ED-ED-ED… | 11.6% (12,258) |
| Demographic | Only viewed screens with demographic information | SP-PP; SP-PP-FR | 4.2% (4,388) |
| Minimal usage | Only viewed the screen showing a table of most recent encounters | SP-ET | 61.8% (65,286) |
| Mixed information | Viewed both clinical and demographic information | SP-PP-ET; SP-ET-MR-FR | 11.3% (11,887) |
| Repetitive search | Cycled repetitively between patient search page and table of most recent encounters | SP-ET-SP-ET; SP-ET-SP-ET-SP-ET… | 11.2% (11,851) |
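Once categories exist, new sessions can be labeled without re-running the cluster analysis. The sketch below restates the Table 2 descriptions as explicit rules; these rules are hypothetical (the study derived its categories by clustering plus inspection), and the cutoff that more than one visit to the search page means repetitive searching is an assumption.

```python
# Screen groupings transcribed from Table 1.
CLINICAL = {"MD", "ED", "MT", "ET"}
ADMINISTRATIVE = {"AH", "FR", "FS", "PP"}


def classify_session(pattern):
    """Assign a usage pattern (sequence of screen labels) to a Table 2 category.

    Hypothetical rules that restate the Table 2 descriptions; they are not
    the procedure used in the study.
    """
    screens = set(pattern)
    clinical = screens & CLINICAL
    administrative = screens & ADMINISTRATIVE

    if clinical and administrative:
        return "mixed information"
    if administrative:
        return "demographic"
    if clinical - {"ET"}:
        # Clinical screens beyond the gateway encounter table, no demographics.
        return "clinical"
    if screens == {"SP", "ET"}:
        # Only the two gateway screens; returning to search more than once
        # is treated as repetitive searching (assumed cutoff).
        return "repetitive search" if pattern.count("SP") > 1 else "minimal usage"
    # For example, a lone search page or navigation without a content screen.
    return "unclassified"
```

Applied to the characteristic patterns in Table 2, these rules reproduce the listed category for each example; how closely they would match the study’s assignment of all sessions is untested.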

Other Measures

The 297 users in our sample self-reported 113 unique job titles. We collapsed these job titles into six categories: administration (59.3% of users), nurse (6.4% of users), pharmacy (1.4% of users), physician (11.8% of users), public health (6.4% of users), and social services (14.8% of users). For ambiguous titles, we contacted informants in the organization for clarification. We were able to associate 95.1% of user sessions with a job category. Next, we grouped users’ workplaces into the following broad categories based on services offered and organizational structure: ambulatory care (9.2% of users), emergency department (18.1% of users), children’s emergency department (3.2% of users), hospital (53.0% of users), public health agency (8.3% of users), and mental health agency (8.3% of users). Because of small cell counts, we combined the public health and mental health categories into a single category; local government operates both of the participating organizations in these categories, and the distribution of usage types was consistent between the two groups.

Lastly, we considered how the timing of system usage corresponded to a patient encounter. Because authorized users can access the I-Care system at any time, the user log is effectively independent of patient encounters. Using the unique identifier from the master patient index and the dates of service encounters, we calculated for each session the number of days between system usage and the patient’s most recent healthcare encounter at the user’s place of work. Our sample, however, includes both scheduled encounters, such as ambulatory care visits, and unscheduled encounters, such as emergency department visits. To account for usage in preparation for a scheduled visit, we treated usage by ambulatory care providers on the day before an encounter as same-day usage. We grouped the number of days between the patient encounter and system usage into six categories: same day, within a week, within a month, within a year, longer than a year, and no encounter recorded at that service location.
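The timing measure can be sketched as follows. The bin edges (7, 30, and 365 days) are assumptions about where “within a week,” “within a month,” and “within a year” end, and treating a session that precedes every recorded encounter (other than the ambulatory next-day case) as having no associated encounter is likewise a hypothetical choice; the function names are illustrative.

```python
from datetime import date, timedelta


def days_to_encounter(usage_day, encounter_days, ambulatory=False):
    """Days from the patient's most recent encounter at the user's workplace
    to the session date, or None if no such encounter is recorded.

    usage_day: `date` of the session.
    encounter_days: collection of `date` values for that patient at the
    user's workplace (hypothetical input format).
    For ambulatory care, an encounter on the day after the session counts as
    same-day usage, following the rule described in the text.
    """
    if ambulatory and usage_day + timedelta(days=1) in encounter_days:
        return 0
    earlier = [d for d in encounter_days if d <= usage_day]
    if not earlier:
        # Assumption: a session before any recorded encounter is treated as
        # unassociated with an encounter.
        return None
    return (usage_day - max(earlier)).days


def timing_category(days):
    """Bin a day gap into the six timing categories (cutoffs are assumed)."""
    if days is None:
        return "no encounter recorded"
    if days == 0:
        return "same day"
    if days <= 7:
        return "within a week"
    if days <= 30:
        return "within a month"
    if days <= 365:
        return "within a year"
    return "longer than a year"
```

For example, a session on May 10 for a patient last seen at the same location on May 1 falls 9 days after the encounter and lands in the “within a month” category under these assumed cutoffs.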

Analysis

We described user sequences by pattern length (total number of screens viewed) and pattern breadth (number of different screens viewed) using the sequence analysis commands in Stata [34]. We described user job categories, workplace categories, and timing-of-usage categories using frequencies and percentages of sessions. We used cross tabulation to compare usage categories with A) job categories, B) workplace categories, and C) timing-of-usage categories, and we evaluated associations between usage categories and these variables using the Pearson χ² test of independence.
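For readers reproducing the cross tabulations outside Stata, the Pearson χ² statistic for an r × c table sums, over all cells, the squared difference between each observed count and the count expected under independence, (row total × column total) / grand total, divided by that expected count. A minimal, dependency-free Python sketch (the function name is hypothetical):

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c
    contingency table given as a list of rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for r, row in enumerate(table):
        for c, observed in enumerate(row):
            # Expected count if row and column variables were independent.
            expected = row_totals[r] * col_totals[c] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(col_totals) - 1)
    return statistic, dof
```

A p-value can then be obtained from the χ² survival function, for example `scipy.stats.chi2.sf(statistic, dof)`. SciPy’s `scipy.stats.chi2_contingency` performs the whole test, but by default it applies Yates’ continuity correction when there is only one degree of freedom.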

Results

Session description

Despite the large variation in usage patterns, users accessed the system in a minimal fashion in more than 6 out of 10 sessions. The mean pattern length was 2.89 screens, and the median was two screens; in fact, 65.7% of all user sessions had a pattern length of only two screens. Pattern length ranged from a single screen to 83 screens. In terms of pattern breadth, users viewed a mean of 2.38 different screens. The median pattern breadth was two screens, and most user sessions (77.0%) included only two different screens. The narrowest pattern included a single screen, and the broadest included all 10 different screens.

System usage by job category, work location, and usage timing

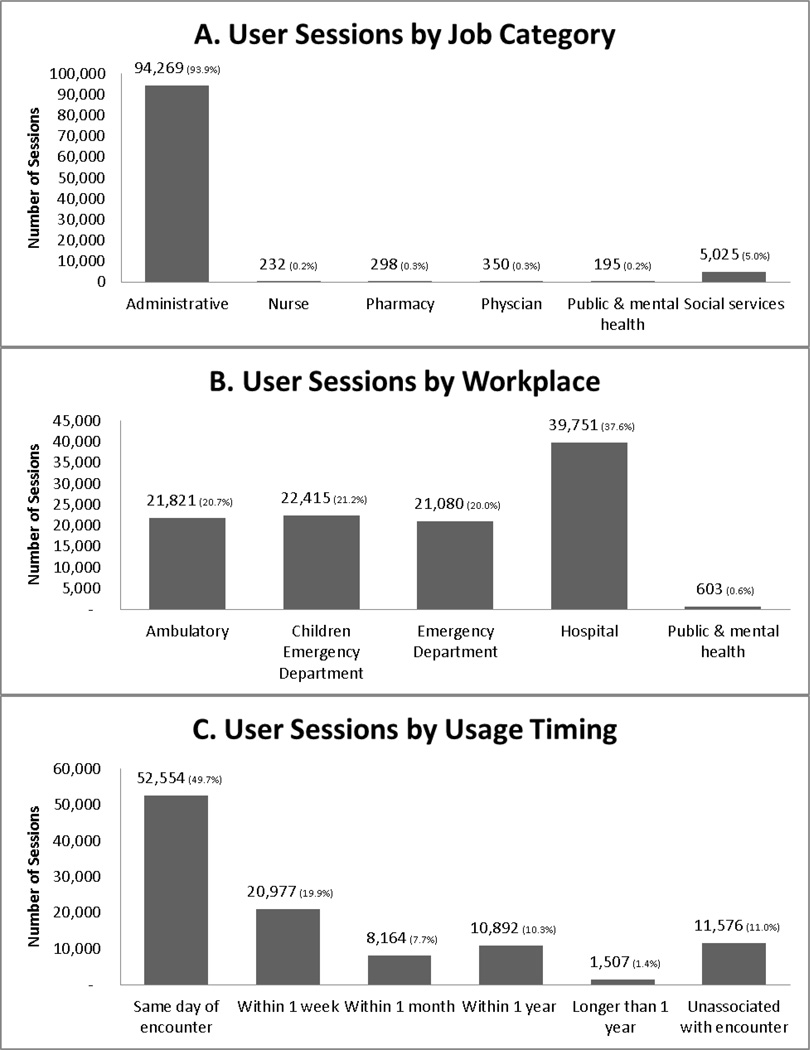

Figure 1 displays the percentage of user sessions by job category, workplace, and usage timing. Users in administrative (93.9%) and social services (5.0%) job categories accounted for most sessions; each of the other job categories accounted for less than 1% of sessions. The median number of sessions per user was 2, but the number ranged from 1 to more than 10,000 sessions. Only 20% of users accessed the system more than 100 times. Users in hospitals accounted for the largest share of sessions (37.6%), while users in ambulatory care (20.7%), children’s emergency departments (21.2%), and emergency departments (20.0%) had roughly equivalent shares. Users in public health or mental health organizations accessed I-Care much less frequently (0.6%) (see Figure 1B). Approximately half of the user sessions (49.7%) occurred on the same day as the patient encounter, whereas 11.0% of sessions had no corresponding patient encounter (see Figure 1C).

Figure 1.

Frequency of user sessions by job, workplace, and usage timing.

Associations with usage types

Table 3 displays the cross tabulation of usage category by job category. Based on the χ² test of independence, job category is associated with type of usage (p<0.001). To facilitate comparison of usage habits across job types, Table 3 reports row percentages, computed within each job category independently of the other job categories. The minimal usage pattern was the most common for all users except nurses and pharmacy staff. Most pharmacy sessions involved mixed usage (83.9%). Nurses most often sought mixed information (48.3%), and the share of nurse sessions involving clinical usage (18.5%) was higher than in any other job category. More than three out of four physician sessions followed the minimal pattern (78.3%). Also of note, physicians rarely engaged in repetitive searching (4.3%), and only a single physician session involved a demographic usage pattern.

Table 3.

Cross tabulation of usage category and job category

| Job category | Minimal usage, n (row %) | Repetitive usage, n (row %) | Mixed information, n (row %) | Demographic, n (row %) | Clinical, n (row %) |
|---|---|---|---|---|---|
| Administration | 59,264 (62.3%) | 9,528 (10.1%) | 9,708 (10.3%) | 4,336 (4.6%) | 11,433 (12.2%) |
| Nurse | 58 (25.0%) | 12 (5.2%) | 112 (48.3%) | 7 (3.0%) | 43 (18.5%) |
| Pharmacy | 18 (6.0%) | 2 (0.7%) | 247 (83.9%) | 4 (1.3%) | 27 (9.1%) |
| Physician | 274 (78.3%) | 15 (4.3%) | 33 (9.4%) | 1 (0.3%) | 27 (7.7%) |
| Public health & mental health | 109 (55.9%) | 19 (9.7%) | 38 (19.5%) | 2 (1.0%) | 27 (13.9%) |
| Social services | 2,657 (52.9%) | 686 (13.7%) | 1,016 (20.2%) | 12 (0.2%) | 654 (13.0%) |

Usage category and job category are statistically associated (Pearson χ² test of independence, p<0.001).
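The row percentages in Table 3 are each count divided by its row total. The short sketch below (function name hypothetical) makes that arithmetic explicit and can be used to check a row of the table.

```python
def row_percentages(counts, decimals=1):
    """Convert each row of counts to percentages of that row's own total."""
    result = []
    for row in counts:
        total = sum(row)
        result.append([round(100 * n / total, decimals) for n in row])
    return result
```

Applied to the nurse row of Table 3 (58, 12, 112, 7, 43; row total 232), it returns 25.0, 5.2, 48.3, 3.0, and 18.5, matching the reported row percentages.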

Organizations also varied in HIE system use (χ² test of independence, p<0.001). Several key differences in usage types appear in Table 4. First, repetitive searching was most common in the two settings where scheduled encounters occur: the hospital (14.2%) and ambulatory care (15.7%). In the faster-paced, unscheduled emergency department setting, users rarely engaged in repetitive searching for patients (4.4%). Second, in most organizations, the most common usage pattern obtained only the minimal amount of information from the HIE system. Furthermore, because the repetitive search pattern yields no screens beyond those of the minimal pattern, most sessions saw only a summary of recent encounters. Third, the highest proportions of clinical usage occurred in the emergency department (31.6%) and in public health & mental health agencies (15.8%). The reliance on clinical information in the emergency department makes sense, particularly in light of its low need for demographic information. Fourth, outside the hospital setting, purely demographic information usage was uncommon. Lastly, the most diverse usage occurred in public health and mental health agencies, probably reflecting their need for clinical information as well as for contacting patients for follow-up and assessment. Similarly, the higher levels of mixed and demographic usage in hospitals might arise because those workplaces house case managers and social workers.

Table 4.

Cross tabulation of usage category and workplace.

| Work location | Minimal usage, n (row %) | Repetitive usage, n (row %) | Mixed information, n (row %) | Demographic, n (row %) | Clinical, n (row %) |
|---|---|---|---|---|---|
| Ambulatory care | 16,656 (76.3%) | 3,419 (15.7%) | 1,570 (7.2%) | 30 (0.1%) | 146 (0.7%) |
| Children’s emergency dept. | 17,699 (78.9%) | 1,713 (7.6%) | 144 (0.6%) | 3 (0.1%) | 2,856 (12.7%) |
| Emergency department | 10,279 (48.7%) | 920 (4.4%) | 2,664 (12.6%) | 562 (2.7%) | 6,655 (31.6%) |
| Hospital | 20,432 (51.4%) | 5,773 (14.2%) | 7,288 (18.3%) | 3,752 (9.4%) | 2,506 (6.3%) |
| Public health & mental health | 220 (36.5%) | 26 (4.3%) | 221 (36.7%) | 41 (6.8%) | 95 (15.8%) |

Usage category and workplace are statistically associated (Pearson χ² test of independence, p<0.001).

Table 5 shows that usage patterns differed significantly (p<0.001) according to the timing of system usage. The most notable differences appear when contrasting sessions that occurred on the same day as the patient’s encounter with sessions that occurred after it. For example, clinical usage was more common on the same day as the patient encounter (16.9% of same-day sessions, versus at most 10.7% in any other timing category). In contrast, mixed information usage was more frequent after the patient encounter.

Table 5.

Cross tabulation of usage category and timing of usage.

| Timing of session | Minimal usage, n (row %) | Repetitive usage, n (row %) | Mixed information, n (row %) | Demographic, n (row %) | Clinical, n (row %) |
|---|---|---|---|---|---|
| Same day as encounter | 31,535 (60.0%) | 5,852 (11.1%) | 3,827 (7.3%) | 2,481 (4.7%) | 8,859 (16.9%) |
| Within 1 week | 13,698 (65.3%) | 2,805 (13.4%) | 3,216 (15.3%) | 270 (1.3%) | 988 (4.7%) |
| Within 1 month | 4,449 (54.5%) | 1,053 (12.9%) | 1,793 (22.0%) | 285 (3.5%) | 584 (7.2%) |
| Within 1 year | 6,817 (62.6%) | 1,244 (11.4%) | 1,457 (13.4%) | 488 (4.5%) | 886 (8.1%) |
| Longer than 1 year | 858 (56.9%) | 182 (12.1%) | 187 (12.4%) | 119 (7.9%) | 161 (10.7%) |
| Unassociated with an encounter | 7,929 (68.5%) | 715 (6.2%) | 1,407 (12.2%) | 745 (6.4%) | 780 (6.7%) |

Usage category and timing of usage are statistically associated (Pearson χ² test of independence, p<0.001).

Discussion

Within a single HIE system, different and discernible types of user behavior existed. These usage categories ranged from minimal system interaction to detailed and complex patterns of screen views that targeted certain types of information. The complexity and variety of these usage categories suggest that researchers who employ simple measures such as access or acceptance obscure substantial variation in user behavior. Furthermore, these categories of usage varied by type of user and workplace setting. Physicians and those working in the children’s emergency department were more likely to interact minimally with the system, whereas nurses, public health workers, and pharmacists often sought demographic and clinical information within a session. These findings point toward multiple avenues for improving HIE and other health information systems.

First, these results make the case for prioritizing the display of information in the HIE system so that it is quickly available to users. The most comprehensive way to match the HIE system to user viewing behavior would be an information display tailored to each user instead of a uniform interface for all system users. For example, “portal-like” user interfaces, such as those popularized by websites like Yahoo!, might provide more efficient information retrieval for healthcare workers. However, creating custom information displays, or even some simpler form of screen reorganization, for every individual user of an HIE system might be beyond the technological or financial capabilities of regional health information organizations (RHIOs) or even software vendors. Additionally, customized views may increase the time technical staff must dedicate to supporting end users. Instead of full customization, the results of this study suggest simpler ways to improve information provision. For example, creating default views based on general job types or work locations offers a feasible way to match users’ information needs to how they access HIE information; the variety of usage types across broad job categories supports this approach. In addition, the vast majority of sessions included very few screens, which underscores the importance of the first screens users view. Minimal usage could reflect job requirements, such as administrative employees completing intake forms, or time constraints, as in the case of physicians. If resources permit only limited system redesign, designers should prioritize the content of the first screens users view, based on a clear understanding of users’ information needs.
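One low-cost way to seed the job-based default views suggested above is to rank screens by how often each job category already opens them. The sketch below is hypothetical: it assumes sessions are available as (job category, pattern) pairs, ignores the search page because every session passes through it, and counts a screen at most once per session. It only orders existing screens; it cannot reveal information needs that the current interface fails to meet.

```python
from collections import Counter, defaultdict


def default_screen_order(sessions, top_n=3, skip=("SP",)):
    """Rank screens by how many sessions in each job category viewed them.

    sessions: iterable of (job_category, pattern) pairs (assumed format).
    Returns job_category -> up to top_n screen labels, most-viewed first.
    Screens listed in `skip` (by default the search page) are ignored.
    """
    views = defaultdict(Counter)
    for job, pattern in sessions:
        # Count each screen once per session, regardless of repeat views.
        views[job].update(set(pattern) - set(skip))
    return {
        job: [screen for screen, _ in counter.most_common(top_n)]
        for job, counter in views.items()
    }
```

Ties in view counts are broken arbitrarily here, so in practice a designer would review the ranking rather than apply it mechanically.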

Furthermore, these basic approaches to customization should be achievable with the current technical standards for information exchange, the Continuity of Care Document (CCD) and the Continuity of Care Record (CCR) [35]. As interoperable extracts from an EHR system, CCDs and CCRs can be viewed and displayed in a variety of systems and formats [36, 37]. Therefore, their specific data elements should be amenable to customized viewing according to end-user characteristics. In this single system, we identified variation in viewing patterns and in the general types of content accessed by users and organizations. The required elements and content areas of CCDs and CCRs are even broader [38], suggesting similar, if not greater, potential for individualized usage patterns and information priorities. Problematically, however, evidence indicates that only a small fraction of HIE-facilitating organizations can support these types of data [39].

Second, our results underscore the value of building a high-quality master patient index and record locator into an HIE system. About 11% of sessions involved repetitive searching. These repetitions represent a greater investment of time and cognitive effort by the user. Beyond administrative job titles and settings with scheduled encounters, users made this investment less often. For HIE systems, accurate record linkage, record de-duplication, and improved search algorithms might help users find the right record faster and reduce repeated searches.

Third, we recommend developing new tools to analyze system user logs. User logs provide the mandatory audit trail to ensure patient privacy and HIPAA compliance. Understanding and categorizing usage behavior can supplement this application by helping identify inappropriate uses of the system. Individuals who use the system very differently from their peers, or on dates after health care visits, may represent potential privacy threats. Recently, Malin and colleagues [40] proposed and evaluated a more formal methodology for using electronic log files for these very purposes in EHR systems. This analysis, however, demonstrates that log files are useful beyond liability protection. Simple, targeted analytic tools or queries (read-only, to protect the audit trail) provided by software vendors or database administrators could leverage these data for quality management and improvement. Very low levels of deep system usage, such as the clinical or demographic usage categories, might indicate a training opportunity: users may not be aware of the relevant information available by using the system in more than a minimal fashion. Likewise, HIE is anticipated to yield many benefits in terms of safety and quality; looking at how HIE systems are actually used can help refine expectations and suggest reasonable evaluation measures.
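As an example of the kind of simple, read-only query described above, the sketch below flags users whose mix of usage categories differs markedly from that of their job-category peers. It is a hypothetical screening heuristic, not the method of Malin and colleagues: it measures total variation distance between category distributions, pools each job category’s sessions (including the user’s own) as the peer baseline, and uses an arbitrary threshold. A flagged user would warrant review, not a conclusion of misuse.

```python
from collections import Counter

# Usage-category labels from Table 2.
CATEGORIES = ("minimal usage", "repetitive search", "mixed information",
              "demographic", "clinical")


def category_shares(labels):
    """Fraction of sessions in each usage category (assumes labels is non-empty)."""
    counts = Counter(labels)
    total = len(labels)
    return {c: counts[c] / total for c in CATEGORIES}


def total_variation(p, q):
    """Total variation distance between two category distributions (0 to 1)."""
    return 0.5 * sum(abs(p[c] - q[c]) for c in CATEGORIES)


def flag_outlying_users(sessions_by_user, job_of_user, threshold=0.5):
    """Return users whose usage mix differs from their job category's pooled mix.

    sessions_by_user: user -> list of usage-category labels (one per session).
    job_of_user: user -> job category.
    threshold: arbitrary cutoff on total variation distance (an assumption).
    """
    pooled = {}
    for user, labels in sessions_by_user.items():
        pooled.setdefault(job_of_user[user], []).extend(labels)
    peer_mix = {job: category_shares(labels) for job, labels in pooled.items()}
    return sorted(
        user
        for user, labels in sessions_by_user.items()
        if total_variation(category_shares(labels), peer_mix[job_of_user[user]]) > threshold
    )
```

Because the peer baseline includes the user’s own sessions, a leave-one-out baseline may be preferable for small job categories.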

Fourth, these results provide some guidance for those working to establish information exchange partnerships with other organizations. For example, sharing demographic information beyond what is necessary to identify patients should be a low priority, because demographic-only sessions were the least common type of usage. Clinical information seems to be of greater value, or is at least more often the apparent objective. This is consistent with previous examinations of the types of information users want and get from HIE systems [24, 27]. However, this research goes further by stratifying these information categories by several types of users and healthcare settings.

Lastly, this conceptualization of usage can inform evaluations of other health information systems, most notably EHRs. Our measurement approach did not capture which specific data elements individual clinicians or health professionals sought, only the broad type of content available on a particular screen. EHRs present information in a similar fashion. While many systems allow users to view discrete data elements or single displays built from the same data (e.g., vital signs or recent medications), EHR screens dedicated to previous orders or to history and physical examinations contain multiple pieces of disparate data. Measures of EHR usage will be similar in that they will include views of screens with multiple types of information. Considering EHRs as the base of HIE activities also illustrates how this measurement approach can be expanded. The stand-alone HIE system investigated in this study was read-only. However, with CCD generation according to HL7 standards, or even sending and receiving information via the Direct Project [41], measures of HIE usage within the EHR environment could include additional dimensions: editing versus viewing, pushing data, and the types of organizations with which information is shared.

Limitations

The primary limitation of this analysis is our use of a single centralized HIE system, which limits the generalizability of the findings. For example, other HIE efforts may not have the same breadth of information types and sources as the ICC’s system. The scope of information exchanged by organizations through HIE has ranged from minimal to comprehensive [25, 39, 42]. Likewise, the display of information, navigational menus, and ease of patient searching could produce different usage patterns in other systems. In addition, this system is a stand-alone, independent information system that is not integrated into an organization’s EHR. Moving from a voluntary-use, stand-alone system to an EHR that is a core component of workflows and patient care would change both the number and the types of individuals accessing information made available by HIE.

A second limitation of this study is that we did not capture individual user characteristics and organizational factors that might be important precursors to how individuals use the system. For example, we do not know individual users’ levels of computer skill, their general perceptions of system usability, or the time available to each user to search for patient records. At the organizational level, the characteristics of an organization’s EHR or practice management system may influence usage of this stand-alone system. Furthermore, characteristics of the patient population could influence usage behaviors. For example, staff in an office with a small patient panel may engage only in minimal searching, under the assumption that the patient does not have a record in the system. Alternatively, a small panel could mean that staff have to engage in repetitive searching to ensure the proper patient is located. Using these categories of HIE system usage as dependent variables in quantitative analyses is a logical extension for answering these questions; however, the calls for qualitative methods in HIE investigations [43, 44] may prove more informative, given the highly contextualized and personal nature of information seeking and clinical care.

Conclusion

While this system presented the same information and the same interface to all users, we were able to distinguish five different types of usage, and these usage types varied by job, workplace, and timing. This approach to usage measurement relies on objective data, can be replicated, and does not obscure the substantial variation in user behaviors, as simple measures of adoption or any access do. Notably, not all usage occurred concurrently with a patient encounter. Evaluations of HIE systems should pay attention to users’ contexts and should define at what point in time usage is expected or desirable.

Acknowledgments

This work was supported by Award Number R21CA138605 from the National Cancer Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Cancer Institute or the National Institutes of Health. We would like to thank Dan Brown and Anjum Khurshid at the Integrated Care Collaboration of Central Texas for their assistance with obtaining the data for this study.

Contributor Information

Joshua R Vest, Jiann-Ping Hsu College of Public Health, Georgia Southern University, PO BOX 8015, Statesboro, GA 30460-8015, 912 478 5057, 912 478 0171 (fax), jvest@georgiasouthern.edu.

Jon (Sean) Jasperson, Mays Business School, Texas A&M University, 979-845-7946, jon.jasperson@tamu.edu.

References

- 1. President's Council of Advisors on Science and Technology. Report to the President. Realizing the Full Potential of Health Information Technology to Improve Healthcare for Americans: The Path Forward. Washington, DC: Executive Office of the President; 2010.

- 2. Healthcare Information & Management Systems Society. 2011–2012 Public Policy Principles. Chicago, IL: 2010. [cited Feb 15 2011]. Available from: http://www.himss.org/advocacy/d/PolicyPrinciples2011.pdf.

- 3. American Health Information Management Association. Statement on National Healthcare Information Infrastructure. 2002. [cited Feb 15 2011]. Available from: http://library.ahima.org/xpedio/groups/public/documents/ahima/bok1_013733.hcsp?dDocName=bok1_013733.

- 4. 111th Congress of the United States of America. American Recovery and Reinvestment Act of 2009.

- 5. Department of Health & Human Services. 42 CFR Parts 412, 413, 422 et al. Medicare and Medicaid Programs; Electronic Health Record Incentive Program; Final Rule. Federal Register. 2010;75(144):44314–44588.

- 6. Hripcsak G, Kaushal R, Johnson KB, Ash JS, Bates DW, Block R, et al. The United Hospital Fund meeting on evaluating health information exchange. Journal of Biomedical Informatics. 2007;40(6 Suppl 1):S3–S10. doi: 10.1016/j.jbi.2007.08.002.

- 7. Corrigan J, Greiner A, Erikson S, editors. Institute of Medicine. Fostering Rapid Advances in Health Care: Learning from System Demonstrations. Washington, D.C.: National Academy Press; 2003.

- 8. Branger P, van't Hooft A, van der Wouden HC. Coordinating shared care using electronic data interchange. Medinfo. 1995;8(Pt 2):1669.

- 9. Hessler BJ, Soper P, Bondy J, Hanes P, Davidson A. Assessing the Relationship Between Health Information Exchanges and Public Health Agencies. Journal of Public Health Management and Practice. 2009;15(5):416–424. doi: 10.1097/01.PHH.0000359636.63529.74.

- 10. Overhage J, Deter P, Perkins S, Cordell W, McGoff J, McGrath R. A randomized, controlled trial of clinical information shared from another institution. Ann Emerg Med. 2002;39(1):14–23. doi: 10.1067/mem.2002.120794.

- 11. Wilcox A, Kuperman G, Dorr DA, Hripcsak G, Narus SP, Thornton SN, et al. Architectural strategies and issues with health information exchange. AMIA Annu Symp Proc. 2006:814–818.

- 12. Grossman JM, Bodenheimer TS, McKenzie K. MARKETWATCH: Hospital-Physician Portals: The Role Of Competition In Driving Clinical Data Exchange. Health Affairs. 2006 Nov-Dec;25(6):1629–1636. doi: 10.1377/hlthaff.25.6.1629.

- 13. Vest JR, Zhao H, Jasperson J, Gamm LD, Ohsfeldt RL. Factors motivating and affecting health information exchange usage. Journal of the American Medical Informatics Association. 2011;18(2):143–149. doi: 10.1136/jamia.2010.004812.

- 14. Classen DC, Kanhouwa M, Will D, Casper J, Lewin J, Walker J. The patient safety institute demonstration project: a model for implementing a local health information infrastructure. J Healthc Inf Manag. 2005;19(4):75–86.

- 15. Johnson KB, Gadd C, Aronsky D, Yang K, Tang L, Estrin V, et al. The MidSouth eHealth Alliance: Use and Impact In the First Year. AMIA Annu Symp Proc. 2008:333–337.

- 16. Vest JR, Jasperson J. What should we measure? Conceptualizing usage in health information exchange. Journal of the American Medical Informatics Association. 2010;17(3):302–307. doi: 10.1136/jamia.2009.000471.

- 17. Gadd CS, Ho Y-X, Cala CM, Blakemore D, Chen Q, Frisse ME, et al. User perspectives on the usability of a regional health information exchange. Journal of the American Medical Informatics Association. 2011. In press. doi: 10.1136/amiajnl-2011-000281.

- 18. Labkoff SE, Yasnoff WA. A framework for systematic evaluation of health information infrastructure progress in communities. Journal of Biomedical Informatics. 2007;40(2):100–105. doi: 10.1016/j.jbi.2006.01.002.

- 19. Johnson KB, Gadd C. Playing smallball: Approaches to evaluating pilot health information exchange systems. Journal of Biomedical Informatics. 2007;40(6 Suppl 1):S21–S26. doi: 10.1016/j.jbi.2007.08.006.

- 20. Burton-Jones A, Straub DW. Reconceptualizing system usage: An approach and empirical test. Information Systems Research. 2006;17(3):228–246.

- 21. Hripcsak G, Sengupta S, Wilcox A, Green RA. Emergency department access to a longitudinal medical record. Journal of the American Medical Informatics Association. 2007;14(2):235–238. doi: 10.1197/jamia.M2206.

- 22. Larum H, Ellingsen G, Faxvaag A. Doctors' use of electronic medical records systems in hospitals: cross sectional survey. BMJ. 2001;323(7325):1344–1348. doi: 10.1136/bmj.323.7325.1344.

- 23. Ross SE, Schilling LM, Fernald DH, Davidson AJ, West DR. Health information exchange in small-to-medium sized family medicine practices: Motivators, barriers, and potential facilitators of adoption. International Journal of Medical Informatics. 2010;79(2):123–129. doi: 10.1016/j.ijmedinf.2009.12.001.

- 24. Shapiro JS, Kannry J, Kushniruk AW, Kuperman G; The New York Clinical Information Exchange Clinical Advisory S. Emergency Physicians' Perceptions of Health Information Exchange. Journal of the American Medical Informatics Association. 2007;14(6):700–705. doi: 10.1197/jamia.M2507.

- 25. eHealth Initiative. The State of Health Information Exchange in 2010: Connecting the Nation to Achieve Meaningful Use. Washington, D.C.: 2010.

- 26. Rudin RS, Simon SR, Volk LA, Tripathi M, Bates D. Understanding the Decisions and Values of Stakeholders in Health Information Exchanges: Experiences From Massachusetts. Am J Public Health. 2009;99(5):950–955. doi: 10.2105/AJPH.2008.144873.

- 27. Johnson KB, Unertl KM, Chen Q, Lorenzi NM, Nian H, Bailey J, et al. Health information exchange usage in emergency departments and clinics: the who, what, and why. Journal of the American Medical Informatics Association. 2011;18(5):690–697. doi: 10.1136/amiajnl-2011-000308.

- 28. Vest JR, Gamm LD. Health information exchange: persistent challenges & new strategies. Journal of the American Medical Informatics Association. 2010;17(3):288–294. doi: 10.1136/jamia.2010.003673.

- 29. Smith HL, Bullers WI Jr, Piland NF. Does information technology make a difference in healthcare organization performance? A multiyear study. Hosp Top. 2000;78(2):13–22. doi: 10.1080/00185860009596548.

- 30. Devaraj S, Kohli R. Performance impacts of information technology: is actual usage the missing link? Management Science. 2003;49(3):273–289.

- 31. Vest JR, Gamm L, Ohsfeldt R, Zhao H, Jasperson J. Factors Associated with Health Information Exchange System Usage in a Safety-Net Ambulatory Care Clinic Setting. Journal of Medical Systems. 2011:1–7. doi: 10.1007/s10916-011-9712-3.

- 32. Vest JR. Health information exchange and healthcare utilization. Journal of Medical Systems. 2009;33(3):223–231. doi: 10.1007/s10916-008-9183-3.

- 33. Huang C-Y, Shen Y-C, Chiang I-P, Lin C-S. Characterizing Web users' online information behavior. Journal of the American Society for Information Science and Technology. 2007;58(13):1988–1997.

- 34. Brzinsky-Fay C, Kohler U, Luniak M. Sequence analysis with Stata. The Stata Journal. 2006;6(4):435–460.

- 35. Department of Health & Human Services. 45 CFR Part 170 Health Information Technology: Initial Set of Standards, Implementation Specifications, and Certification Criteria for Electronic Health Record Technology; Interim Final Rule. Federal Register. 2010;75(8):2014–2047.

- 36. Kibbe DC. Unofficial FAQs About the ASTM CCR Standard. Center for Health IT. 2011. [cited Sep 5 2011]. Available from: http://www.centerforhit.org/online/chit/home/project-ctr/astm/unofficialfaq.html.

- 37. Kuperman GJ, Blair JS, Franck RA, Devaraj S, Low AFH; for the NHIN Trial Implementations Core Services Content Working Group. Developing data content specifications for the Nationwide Health Information Network Trial Implementations. Journal of the American Medical Informatics Association. 2010;17(1):6–12. doi: 10.1197/jamia.M3282.

- 38. Healthcare Information Technology Standards Panel. Comparison of CCR/CCD and CDA Documents. American National Standards Institute; 2009. [cited Oct 31 2011]. Available from: http://publicaa.ansi.org/sites/apdl/hitspadmin/Matrices/HITSP_09_N_451.pdf.

- 39. Adler-Milstein J, Bates DW, Jha AK. A Survey of Health Information Exchange Organizations in the United States: Implications for Meaningful Use. Annals of Internal Medicine. 2011;154(10):666–671. doi: 10.7326/0003-4819-154-10-201105170-00006.

- 40. Malin B, Nyemba S, Paulett J. Learning relational policies from electronic health record access logs. Journal of Biomedical Informatics. 2011;44(2):333–342. doi: 10.1016/j.jbi.2011.01.007.

- 41. The Direct Project. The Direct Project Overview. 2010. [cited Dec 8 2010]. Available from: http://wiki.directproject.org/file/view/DirectProjectOverview.pdf.

- 42. Healthcare Information & Management Systems Society. Health Information Exchanges: Similarities and Differences. Chicago, IL: HIMSS HIE Common Practices Survey Results White Paper; 2009.

- 43. Rudin RS. Why clinicians use or don't use health information exchange. Journal of the American Medical Informatics Association. 2011;18(4):529. doi: 10.1136/amiajnl-2011-000288.

- 44. Ash JS, Guappone KP. Qualitative evaluation of health information exchange efforts. Journal of Biomedical Informatics. 2007;40(6 Suppl 1):S33–S39. doi: 10.1016/j.jbi.2007.08.001.