Abstract

We studied activation magnitudes in core, belt, and parabelt auditory cortex in adults with normal hearing (NH) and unilateral hearing loss (UHL) using an interrupted, single-event design and monaural stimulation with random spectrogram sounds. NH participants had one ear blocked and received stimulation on the side matching the intact ear in UHL. The objective was to determine whether the side of deafness affected the lateralization and magnitude of evoked blood oxygen level-dependent responses across different auditory cortical fields (ACFs). Regardless of the ear of stimulation, NH showed larger contralateral responses in several ACFs. With right ear stimulation in UHL, ipsilateral responses were larger than in NH in core and belt ACFs, indicating neuroplasticity in the right hemisphere. With left ear stimulation in UHL, only posterior core ACFs showed larger ipsilateral responses, suggesting that most ACFs in the left hemisphere were more resilient to reduced crossed inputs from a deafferented right ear. Parabelt regions located posterolateral to core and belt auditory cortex showed reduced activation in UHL compared to NH irrespective of the stimulated ear and the lateralization of inputs. Thus, the effects of UHL relative to NH differed by ACF and by the side of deafness.

Keywords: Auditory cortex, Adult unilateral deafness, Single-event BOLD response, Neuroplasticity

1. Introduction

Auditory cortex displays lateralization asymmetries despite binaural inputs. For example, the left hemisphere plays a predominant role in perceiving and producing spoken language (Zatorre and Binder, 2000). Additionally, monaural stimulation normally evokes larger magnitude and shorter latency responses in the contralateral hemisphere (Jäncke et al., 2002; Khosla et al., 2003; Ponton et al., 2001; Vasama and Mäkelä, 1997; Zatorre and Binder, 2000). Each hemisphere also responds preferentially to different parameters of complex acoustic stimulation. Left auditory cortex is generally better at processing temporally complex, rapidly changing sounds characteristic of non-tonal speech, whereas the right is more responsive to the tonal or spectral content of stimuli (Belin et al., 1998; Johnsrude et al., 2000; Obleser et al., 2008; Schönwiesner et al., 2005b; Scott et al., 2000, 2006; Tervaniemi and Hugdahl, 2003; Zatorre and Belin, 2001; Zatorre et al., 2002). Evidence of spectral representation in the left hemisphere (Obleser et al., 2008; Zatorre and Gandour, 2008) indicates that the temporal/spectral lateralization dichotomy is not especially rigid. Despite this caveat, unilateral sensorineural hearing loss (UHL) leads to behavioral deficits that may reflect lateralized processing of different sound parameters. These deficits include increased difficulty understanding speech in noise (Bishop and Eby, 2010; Wie et al., 2010) and poorer sound localization (Abel et al., 1982; Humes et al., 1980).

Prior studies in patients with UHL reported changes in some aspects of auditory cortex asymmetry. In normal hearing, asymmetry involves greater activation in the hemisphere contralateral to the stimulated ear than in the ipsilateral hemisphere. In UHL, activation increased in the hemisphere ipsilateral to the intact ear with less change in the contralateral hemisphere, leading to more balanced activation between hemispheres (Bilecen et al., 2000; Langers et al., 2005; Ponton et al., 2001; Scheffler et al., 1998). These lateralization changes in UHL were attributed to primary auditory cortex based on identifying Heschl’s gyrus as the site of activity (Bilecen et al., 2000; Langers et al., 2005; Scheffler et al., 1998; Tschopp et al., 2000).

Few studies have examined the effect of the side of deafness on cortical lateralization patterns in UHL. Right ear (RE) stimulation evoked equivalent ipsilateral and contralateral activation, whereas left ear (LE) stimulation yielded larger contralateral responses (Hanss et al., 2009; Khosla et al., 2003; Schmithorst et al., 2005). An objective of the current study was to examine hemispheric asymmetries with respect to the stimulated ear in different auditory cortex fields (ACFs).

Initial descriptions of ACFs arose from neurophysiological assessments of tonotopic organization in macaques and other animals. These studies mapped tonotopy with pure tones in core (primary auditory, A1; rostral, R; and rostral–temporal, RT), surrounding belt (rostro-medial, RM; rostro-temporal–medial, RTM; caudo-medial, CM; caudo-lateral, CL; middle-lateral, ML; antero-lateral, AL; and rostro-temporal–lateral, RTL), and lateral parabelt auditory fields (caudal parabelt, CPB; and rostral parabelt, RPB) (Imig et al., 1977; Kaas and Hackett, 2000; Merzenich and Brugge, 1973).

Tonotopic maps obtained in fMRI studies in humans have noted mirror reversals across successive ACFs in core and belt regions (da Costa et al., 2011; Humphries et al., 2010; Striem-Amit et al., 2011; Woods et al., 2009, 2010). Core regions with sharply defined tonotopic organization occupy much, but not all, of Heschl’s gyrus (da Costa et al., 2011; Penhune et al., 1996; Rademacher et al., 1993). As in animals, the core region of auditory cortex has a koniocortex cytoarchitecture and the surrounding cortex shows decreased layer IV thickness (Brodmann, 1909; Galaburda and Sanides, 1980; Morosan et al., 2001; Rademacher et al., 2001; von Economo, 1929). Based on quantitative and objectively defined criteria, these cytoarchitectonic differences along the superior temporal plane and gyrus show five subdivisions: Te1.0, Te1.1, Te1.2, Te2 and Te3 (Morosan et al., 2001; Rademacher et al., 2001).

Woods and colleagues overlaid acoustically activated regions onto average gyral and sulcal landmarks to relate different ACFs to the Te subdivisions (Downer et al., 2011; Woods and Alain, 2009; Woods et al., 2009). They showed that Te1.1 encompasses caudo-medial A1 core and part of the medial belt areas (RM, CM); Te1.0 centers on core ACFs (medial A1 and caudal R); Te1.2 also includes some core ACFs (R rostrally and RT caudally); and Te3 comprises lateral belt ACFs (AL and ML). Additionally, based on myelin boundaries (Glasser and Van Essen, 2011), Te2 partially encompasses caudal parts of core A1 and caudal belt ACFs (CL), and STG/STS-BA22 contains lateral belt (CL) and parabelt ACFs (CPB). A major objective of the current study was to contrast results from each of the Te-defined regions and their associated ACFs between two hearing groups, UHL and normal hearing (NH).

Most prior studies in UHL used simple stimuli such as pure tones (Schmithorst et al., 2005), 1000 Hz pulsed tones or tone bursts (Bilecen et al., 2000; Hanss et al., 2009; Scheffler et al., 1998; Tschopp et al., 2000; Vasama and Mäkelä, 1997), click trains (Khosla et al., 2003; Ponton et al., 2001), and narrowband noise (Langers et al., 2005; Propst et al., 2010). A few used speech in quiet (Firszt et al., 2006; Hanss et al., 2009) or in noise (Propst et al., 2010). While simple stimuli avoid possible confounds due to the linguistic content of speech, they generally suffer from bandwidth-by-duration limitations. All random spectrogram sound (RSS) stimuli employed in the current study had, on average, the same spectral bandwidth and duration, and hence avoid this limitation. Furthermore, all RSS stimuli had, on average, matching intensities across the same spectral region for the same time period, removing intensity, spectral bandwidth, and duration as potential confounding variables. In addition, RSS stimuli allow independent control of spectral complexity (akin to bandwidth) and temporal complexity (akin to duration) (Schönwiesner et al., 2005b). The RSS stimuli therefore probed response differences associated with sounds distinct from speech, music, or pure tones. The current study assessed the temporal/spectral dichotomy hypothesis by comparing auditory cortex activation distributions evoked by monaural RSS (Schönwiesner et al., 2005b) in adults with left or right unilateral deafness and in age-matched adults with normal hearing. A sparse sampling, single-event BOLD design (Amaro and Barker, 2006; Belin et al., 1999) also allowed examination of response time courses to the stimuli.
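The exact RSS synthesis of Schönwiesner et al. (2005b) is not described in this section. As a rough illustration of how spectral and temporal complexity can be varied independently while bandwidth, duration, and overall level stay fixed, the following Python/NumPy sketch builds a hypothetical, simplified RSS: a coarse grid of random levels (spectral cells by temporal cells) modulates log-spaced tones within a fixed band, and the result is RMS-normalized. The function name, frequency range, tone count, sampling rate, and normalization are all assumptions for illustration, not the published stimulus parameters.

```python
import numpy as np


def random_spectrogram_sound(n_spectral, n_temporal, duration=2.0, fs=22050,
                             f_lo=250.0, f_hi=8000.0, n_tones=48,
                             target_rms=0.05, seed=None):
    """Hypothetical, simplified RSS: random levels on an n_spectral x n_temporal
    grid modulate log-spaced tones spanning a fixed band (f_lo..f_hi Hz).

    More spectral cells -> finer spectral detail (spectral complexity);
    more temporal cells -> faster level changes (temporal complexity).
    """
    rng = np.random.default_rng(seed)
    n_samples = int(round(duration * fs))
    t = np.arange(n_samples) / fs
    freqs = np.geomspace(f_lo, f_hi, n_tones)        # same band for every stimulus
    grid = rng.random((n_spectral, n_temporal))      # the random "spectrogram"
    # Assign each tone to a spectral cell and each sample to a temporal cell.
    band_idx = (np.arange(n_tones) * n_spectral) // n_tones
    time_idx = (np.arange(n_samples) * n_temporal) // n_samples
    envelopes = grid[band_idx][:, time_idx]          # shape (n_tones, n_samples)
    phases = rng.uniform(0.0, 2.0 * np.pi, n_tones)
    carriers = np.sin(2.0 * np.pi * freqs[:, None] * t[None, :] + phases[:, None])
    sound = (envelopes * carriers).sum(axis=0)
    # Equalize overall level so intensity does not covary with complexity.
    return sound * (target_rms / np.sqrt(np.mean(sound ** 2)))


low = random_spectrogram_sound(n_spectral=2, n_temporal=4, seed=0)
high = random_spectrogram_sound(n_spectral=16, n_temporal=32, seed=0)
```

Both sounds share the same duration, band, and RMS level and differ only in how finely the random grid is divided. A fuller implementation would likely smooth the piecewise-constant envelopes to avoid clicks at cell boundaries.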

2. Results

2.1. Behavioral performance

Median correct identification was 100% for low and high complexity temporal targets and for high complexity spectral targets, and 75% for low complexity spectral targets. A two-way ANOVA with RSS type as a within-subject factor and group as a between-subject factor found no significant group differences in correct target identification (F = 0.55, df = 3, 184, p = ns), indicating similar performance across groups. There was a significant effect of RSS type (F = 6.37, df = 3, 184, p < 0.0001), reflecting fewer correct responses for low complexity spectral targets. However, none of the three post hoc two-tailed Mann–Whitney tests comparing correctly identified low complexity spectral targets with high complexity spectral targets and with each complexity of temporal targets was significant after Bonferroni correction (0.05/3 = 0.017; p = .03 for each contrast). False positive rates were also low (global median = 0; mean = 1.2), further evidence of high performance accuracy.

2.2. Distribution of significant activation

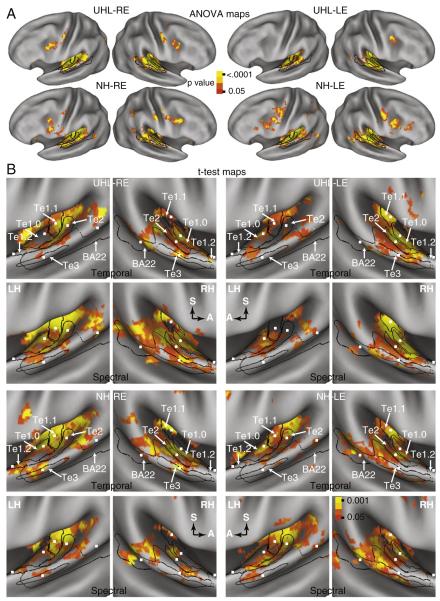

Brain maps resulting from an ANOVA of response magnitudes showed bilateral activation across the superior temporal plane in all subgroups (Fig. 1A, ANOVA maps). The distributions similarly involved core, belt, and parabelt regions of auditory cortex. The extension of activation into parabelt regions was entirely posterolateral, along the superior temporal gyrus.

Fig. 1.

Brain maps based on (A) random effect, multiple comparison corrected ANOVA and (B) t-tests of BOLD response amplitudes with 7 vs. 2 s delays from the onset of an RSS stimulus to the beginning of a subsequent EPI. The view shown for the t-test maps is into the superior temporal plane on the inflated CARET PALS-B12 atlas for the left (LH) and right (RH) hemispheres. A and S labeled arrows in the t-test map panels for spectral RSS indicate anterior and superior directions in each hemisphere. Paired LH and RH panels for the t-test maps show results from one hearing group, RSS stimulus type, and stimulated ear (LE, left and RE, right). Black lines mark the borders of auditory cortex fields: Te1.0, Te1.1, Te1.2, Te2, Te3, and a selected portion of BA22 for the studied STG/STS. The small white squares shown in the t-test maps mark the coordinate centers for the regions of interest in each studied auditory cortex field (see Table 2 for Talairach atlas coordinates). P-value color scale for ANOVA brain maps was .05 to <.0001 and for t-test maps was .05 to .001 after correction for multiple comparisons.

Brain maps of t-tests contrasting BOLD signal magnitudes for RSS presented with a longer (7 s) versus a shorter (2 s) stimulus–EPI delay also showed bilateral activation with monaural stimulation in both hearing groups (Fig. 1B, stimulus–EPI t-test maps). Separate rows in Fig. 1B show results from imaging runs with temporal or spectral RSS stimuli. These distributions primarily occupied the posterior half of the superior temporal plane and stretched from caudomedial to rostrolateral for each RSS type (Fig. 1B). Nearly all Te subdivisions contained significant t-test results in both hearing groups, irrespective of stimulated ear or RSS type. Thus, significant activity occurred in core, belt, and parabelt ACFs. However, significant t-test results were sparse in the Te1.2 ROI (Fig. 1B), so activity in Te1.2 was not analyzed further.
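In a sparse, single-event design each trial samples the BOLD response at one stimulus–EPI delay, so varying that delay across trials traces the response time course. As a hedged illustration of why a 7 s delay yields larger signals than a 2 s delay, the Python sketch below convolves a 2 s stimulus with a double-gamma hemodynamic response function and reads out the predicted amplitude at each delay. The HRF shape (the widely used peak shape 6, undershoot shape 16, ratio 1/6) is an assumption, since this section does not specify an HRF model, and the sketch ignores EPI acquisition time.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density at time t (zero for t <= 0)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (scale ** shape * math.gamma(shape))


def canonical_hrf(t):
    """Double-gamma HRF (assumed parameters): positive lobe with shape 6,
    undershoot with shape 16, undershoot scaled by 1/6."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def bold_at_delay(delay, stim_dur=2.0, dt=0.001):
    """Predicted BOLD amplitude `delay` seconds after the onset of a
    `stim_dur`-second sound: the boxcar-convolved HRF evaluated at one
    time point (midpoint-rule integration over the stimulus)."""
    n_steps = int(round(min(delay, stim_dur) / dt))
    return dt * sum(canonical_hrf(delay - (i + 0.5) * dt) for i in range(n_steps))


predicted = {d: bold_at_delay(d) for d in range(2, 8)}
for d, amplitude in predicted.items():
    print(f"stimulus-EPI delay {d} s: predicted amplitude {amplitude:.3f}")
```

Under these assumptions the predicted response is near zero at 2 s and peaks at about 6 s, in line with the 5 to 7 s delays used for the hearing group contrasts below.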

2.3. Spectral versus temporal RSS

Response magnitudes did not differ within or between groups for runs in which the more numerous non-target trials had low versus high complexity, for either RSS type. Consequently, the analysis of contrasts between spectral and temporal RSS types combined the runs with 44/48 trials of low or high complexity parameters. After Bonferroni correction (0.05/10 = 0.005), post hoc t-tests showed no significant differences between response magnitudes evoked by spectral and temporal RSS in any of the 10 studied ROI (5 ROI per hemisphere) in the NH-RE and UHL-LE subgroups, in 9 ROI in NH-LE, and in 8 ROI in UHL-RE. Responses to spectral RSS were larger than those to temporal RSS in left Te2 and right Te3 for UHL-RE and in left Te2 for NH-LE. Because few ROI distinguished between responses to spectral and temporal RSS, subsequent analyses combined data across RSS types.

2.4. Hearing group contrasts

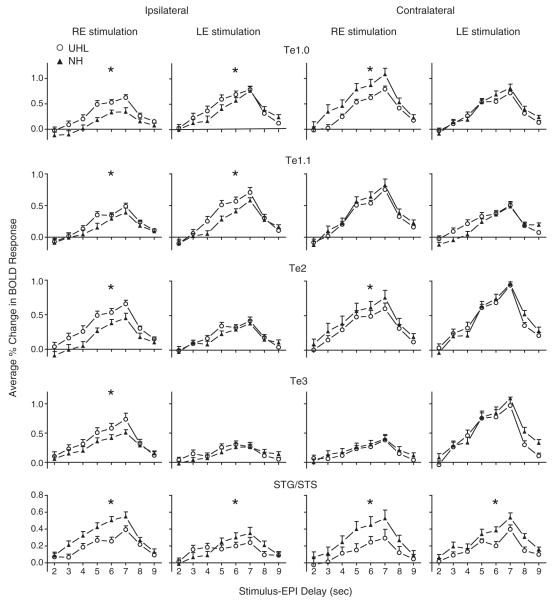

Factors affecting the differences between hearing groups in BOLD signal magnitudes across stimulus–EPI delays of 5 to 7 s included auditory cortex subdivision, stimulated ear, and lateralization of a ROI (Fig. 2). Generally, RE stimulation produced significant differences between responses in UHL compared to NH in more regions than LE stimulation. Ipsilateral to the stimulated ear, magnitudes in Te1.0 and Te1.1 ROI were larger in UHL compared to NH, both with RE and LE stimulation (Fig. 2: rows 1 and 2, columns 1 and 2). (Table 2 provides F and p values for each comparison displayed in Fig. 2. All F-ratio p-values shown in bold font in Tables 2 and 3 were significant after Bonferroni correction of 0.05/10=0.005). In Te2 and Te3, hearing group differences were significant only ipsilateral to RE stimulation (Fig. 2: rows 3 and 4, columns 1 and 2). Contralateral to the stimulated ear, hearing group response differences were more variable in these same four ROI. In Te1.0 and Te2, magnitudes were significantly smaller with RE stimulation in UHL compared to NH (Fig. 2: rows 1 and 3, column 3) but were comparable in Te1.1 and Te3 (Fig. 2: rows 2 and 4, column 3). Response magnitudes in the same four ROI were comparable with LE stimulation in UHL and NH (Fig. 2: rows 1–4, column 4). In parabelt ROI located in STG/STS cortex, magnitudes in UHL were always significantly smaller than in NH irrespective of response lateralization (ipsilateral or contralateral) or stimulated ear (Fig. 2: row 5, columns 1–4).

Fig. 2.

BOLD response comparisons between unilateral hearing loss (UHL, open circle) and normal hearing (NH, filled triangle) groups in different auditory cortex ROI. Plotted at each stimulus–EPI delay is the mean and standard error of the mean for the per cent change in BOLD responses over baseline across participants within a group and ROI. Asterisks denote statistically significant differences between hearing groups (see Table 2 for p-values and Talairach coordinates).

Table 2.

Response differences between UHL and NH in auditory cortex ROI by stimulated ear.

| Region name | Coordinates (x, y, z) | RE stimulation: F (1/23) | RE stimulation: Pr>F | LE stimulation: F (1/23) | LE stimulation: Pr>F |
|---|---|---|---|---|---|
| Te1.0-LH | −47.5, −26, 8 | 62.4 | <.0001 | 8.2 | .005 |
| Te1.0-RH | 47, −23, 11 | 49.9 | <.0001 | 7.0 | .01 |
| Te1.1-LH | −41, −32, 9 | 7.1 | .009 | 35.0 | <.0001 |
| Te1.1-RH | 37, −29, 14 | 12.6 | .0005 | 2.3 | .13 |
| Te2-LH | −45, −35.5, 9 | 19.2 | <.0001 | 5.4 | .02 |
| Te2-RH | 53, −22, 9 | 25.3 | <.0001 | .8 | .38 |
| Te3-LH | −60, −22, −1 | .8 | .39 | 2.3 | .13 |
| Te3-RH | 63, −18, 5 | 29.4 | <.0001 | 1.9 | .17 |
| STG/STS-LH | −60, −46, 13 | 21.1 | <.0001 | 10.5 | .0015 |
| STG/STS-RH | 56, −38, 9 | 42.5 | <.0001 | 24.8 | <.0001 |
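Which Table 2 comparisons pass the Bonferroni threshold, and whether each is ipsilateral or contralateral to the stimulated ear, can be checked mechanically. The Python sketch below is illustrative only: the dictionary layout and helper names are hypothetical, "<.0001" is entered as its upper bound 0.0001, and a reported p of .005 is treated as reaching the 0.005 threshold, consistent with how the text describes Te1.0 with LE stimulation. The same helper gives the 0.05/3 threshold used for the three behavioral post hoc tests.

```python
import math

# Pr>F from Table 2 (UHL vs NH); "<.0001" is entered as its upper bound 0.0001.
# Keys: (ROI, hemisphere); values: (p with RE stimulation, p with LE stimulation).
TABLE2_P = {
    ("Te1.0", "LH"): (0.0001, 0.005),
    ("Te1.0", "RH"): (0.0001, 0.01),
    ("Te1.1", "LH"): (0.009, 0.0001),
    ("Te1.1", "RH"): (0.0005, 0.13),
    ("Te2", "LH"): (0.0001, 0.02),
    ("Te2", "RH"): (0.0001, 0.38),
    ("Te3", "LH"): (0.39, 0.13),
    ("Te3", "RH"): (0.0001, 0.17),
    ("STG/STS", "LH"): (0.0001, 0.0015),
    ("STG/STS", "RH"): (0.0001, 0.0001),
}


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance threshold under Bonferroni correction."""
    return alpha / n_tests


def laterality(ear, hemisphere):
    """'ipsi' when the hemisphere is on the same side as the stimulated ear."""
    return "ipsi" if ear[0] == hemisphere[0] else "contra"


def significant_group_contrasts(table, alpha=0.05, n_tests=10):
    """List (ROI, ear, laterality) for every p at or below the corrected threshold."""
    threshold = bonferroni_alpha(alpha, n_tests)
    hits = []
    for (roi, hemisphere), p_values in table.items():
        for ear, p in zip(("RE", "LE"), p_values):
            # isclose() keeps a reported p of .005 from failing on float rounding
            if p < threshold or math.isclose(p, threshold):
                hits.append((roi, ear, laterality(ear, hemisphere)))
    return hits


hits = significant_group_contrasts(TABLE2_P)
```

This yields twelve significant contrasts: eight ipsilateral (all five right hemisphere ROI with RE stimulation, and Te1.0, Te1.1, and STG/STS with LE stimulation) and four contralateral (Te1.0, Te2, and STG/STS with RE stimulation, and STG/STS with LE stimulation), matching the pattern described for Fig. 2.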

Table 3.

Response differences between ipsilateral and contralateral inputs in NH and UHL.

| Region | X,Y,Z (LH; RH) | UHL, RE stimulation: F | UHL, RE stimulation: Pr>F | UHL, LE stimulation: F | UHL, LE stimulation: Pr>F | NH, RE stimulation: F | NH, RE stimulation: Pr>F | NH, LE stimulation: F | NH, LE stimulation: Pr>F |
|---|---|---|---|---|---|---|---|---|---|
| Te1.0 | −47.5,−26,8; 47,−23,11 | 4.96 | .03 | 7.54 | .007 | 140.8 | <.0001 | 3.14 | .08 |
| Te1.1 | −41,−32,9; 37,−29,14 | 42.8 | <.0001 | 26.9 | <.0001 | 64.7 | <.0001 | 4.2 | .04 |
| Te2 | −45,−35.5,9; 53,−22,9 | .55 | .46 | 117.5 | <.0001 | 7.7 | .006 | 122.6 | <.0001 |
| Te3 | −60,−22,−1; 63,−18,5 | 41.1 | <.0001 | 76.3 | <.0001 | 30.9 | <.0001 | 142.8 | <.0001 |
| STG/STS | −60,−46,13; 56,−38,9 | 2.3 | .13 | 5.38 | .02 | .48 | .49 | 9.68 | .002 |

F ratio df: 1/11 for NH and 1/12 for UHL.

In summary, the Te1 ROI located in core ACFs (A1 and R) generally showed larger ipsilateral response magnitudes in UHL than in NH with either stimulated ear, and smaller or comparable magnitudes contralateral to the stimulated ear. Right ear stimulation evoked larger ipsilateral responses in UHL than in NH in lateral and posterior belt ACFs (AL, ML, and CL). In the caudal parabelt ACF (CPB) associated with the STG/STS ROI, magnitudes in both UHL groups were smaller than in NH irrespective of lateralization and stimulated ear.

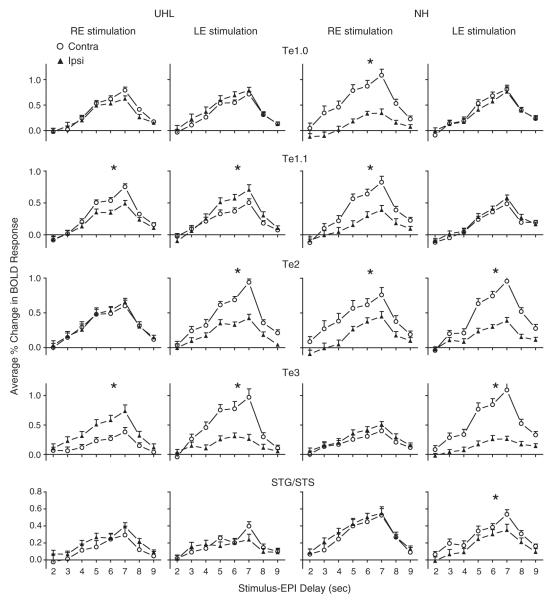

2.5. Ipsilateral versus contralateral activation

Lateralization effects on BOLD signal magnitudes differed between UHL and NH across ROI and stimulated ear (Fig. 3 and Table 3). In UHL, in ROI located in core and adjacent medial belt ACFs, LE stimulation evoked significantly larger ipsilateral magnitudes in Te1.1 and slightly larger ipsilateral magnitudes in Te1.0, whereas RE stimulation evoked significantly larger contralateral magnitudes in Te1.1 and slightly larger contralateral magnitudes in Te1.0. In NH, in the same ROI, LE stimulation produced overlapping ipsilateral and contralateral magnitudes in Te1.0 and Te1.1, whereas RE stimulation produced significantly larger contralateral magnitudes in Te1.0 and Te1.1 (Fig. 3: rows 1 and 2). In UHL, in lateral and caudal belt ACFs, LE stimulation evoked significantly larger contralateral magnitudes in Te2 and Te3, and RE stimulation evoked significantly larger ipsilateral magnitudes in Te3. In NH, in the same lateral and caudal ACFs, LE stimulation evoked significantly larger contralateral magnitudes in Te2 and Te3, whereas RE stimulation evoked significantly larger responses only in Te2. In UHL, neither LE nor RE stimulation produced significant lateralization differences in the caudal parabelt STG/STS ROI. In NH, only LE stimulation evoked significantly larger contralateral magnitudes in the STG/STS ROI.

Fig. 3.

Lateralization comparisons (Contra, open circle; Ipsi, filled triangle) of BOLD responses evoked by each stimulated ear (RE or LE) and in each hearing group (UHL or NH) for five different paired auditory cortex ROI. With RE stimulation, ipsilateral responses were in the right hemisphere and contralateral responses were in the left hemisphere. With LE stimulation, ipsilateral and contralateral responses were, respectively, in the left and right hemispheres. Plotted at each stimulus–EPI delay is the mean and standard error of the mean for the per cent change in BOLD responses over baseline across participants within a group and ROI. Asterisks denote statistically significant differences between ipsilateral and contralateral responses (see Table 3 for p-values).

In summary, significantly larger contralateral responses to a monaurally stimulated ear in NH generally occurred in left hemisphere ROI centered in core and belt ACFs and in right hemisphere belt and parabelt ROI. UHL showed lateralization distinctions that varied by ROI and stimulated ear. Core ACFs associated with the Te1.0 and Te1.1 ROI showed larger contralateral responses with RE stimulation but larger ipsilateral responses with LE stimulation; these differences were significant in Te1.1 and smaller in Te1.0. Lateral and caudal belt ACFs associated with the Te2 and Te3 ROI showed larger contralateral responses with LE stimulation, but Te3 showed larger ipsilateral responses with RE stimulation. In UHL, ipsilateral and contralateral response magnitudes in the parabelt STG/STS ROI were comparable with either stimulated ear and were generally smaller than in NH.

2.6. Duration of deafness effect

Both UHL groups had similar means and ranges of deafness duration (Table 1). Although the RE stimulated UHL group included three participants with deafness durations of less than one year, the distributions of deafness duration did not differ significantly between groups (Mann–Whitney U = 82.5, p = .918). To examine whether deafness duration affected response magnitudes, we reanalyzed the data with a regression of response magnitude on deafness duration in each studied ROI ipsilateral or contralateral to the stimulated ear. No r² value exceeded 0.1, 9 of 12 were below 0.03, and none was significant. Thus, despite the wide range of deafness durations, UHL participants showed comparable alterations in response magnitudes in the assessed ROI regardless of how long they had been deaf.
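Because Table 1 lists each participant's duration of deafness, the group comparison can be recomputed. The Python sketch below counts U directly over all participant pairs and derives a two-sided p value from the normal approximation with a tie correction and no continuity correction; that the original analysis used this particular approximation is an assumption, and the variable and function names are illustrative.

```python
import math

# Duration of deafness (years) per participant, copied from Table 1.
DOD_LE_STIM = [25, 26, 17, 2, 2, 30, 2, 50, 40, 40, 2, 3, 23]
DOD_RE_STIM = [24, 0.2, 2, 3, 0.5, 16, 47, 42, 9, 0.2, 57, 52, 72]


def mann_whitney_u(x, y):
    """U for sample x: pairs with x_i > y_j count 1, ties count 0.5."""
    return sum(1.0 if xi > yj else 0.5 if xi == yj else 0.0
               for xi in x for yj in y)


def two_sided_p(u, n1, n2, pooled):
    """Normal approximation with tie correction, no continuity correction."""
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values()) / (n * (n - 1))
    variance = n1 * n2 / 12 * ((n + 1) - tie_term)
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


n1, n2 = len(DOD_LE_STIM), len(DOD_RE_STIM)
u_le = mann_whitney_u(DOD_LE_STIM, DOD_RE_STIM)
u = min(u_le, n1 * n2 - u_le)  # report the smaller of the two U values
p = two_sided_p(u, n1, n2, DOD_LE_STIM + DOD_RE_STIM)
print(f"U = {u}, p = {p:.3f}")
```

With the Table 1 durations this gives U = 82.5 (86.5 for the other group) and p ≈ .918, the values reported above.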

Table 1.

Demographic data for unilateral deaf participants.

| Partic # | Gender | Age | PTA, deaf ear | PTA, stim ear | AAOPHL (years) | DOD (years) | Etiology |
|---|---|---|---|---|---|---|---|
| LE stim | | | | | | | |
| 1 | M | 25 | 114 | 4 | 0 | 25 | Unknown |
| 2 | F | 26 | 125 | 1 | 0 | 26 | Unknown |
| 3 | M | 27 | 119 | 9 | 10 | 17 | Trauma/MG |
| 4 | M | 33 | 125 | 15 | 31 | 2 | AN |
| 5 | F | 35 | 119 | 5 | 33 | 2 | AN |
| 6 | M | 45 | 115 | 18 | 15 | 30 | Unknown |
| 7 | F | 50 | 125 | 13 | 48 | 2 | AN |
| 8 | F | 50 | 111 | 14 | 0 | 50 | Unknown |
| 9 | F | 53 | 89 | 12 | 13 | 40 | Viral |
| 10 | F | 53 | 125 | 16 | 13 | 40 | Unknown |
| 11 | M | 57 | 125 | 23 | 55 | 2 | Trauma |
| 12 | F | 62 | 84 | 18 | 59 | 3 | Unknown |
| 13 | M | 71 | 118 | 31 | 48 | 23 | AN |
| Mean | | 45.2 | 114.9 | 13.8 | 25 | 20.2 | |
| SEM | | 4.1 | 3.7 | 2.2 | 6 | 4.7 | |
| RE stim | | | | | | | |
| 1 | F | 27 | 116 | 13 | 3 | 24 | LVAS |
| 2 | M | 30 | 123 | 11 | 29 | 0.2 | Unknown |
| 3 | F | 39 | 119 | 0 | 37 | 2 | MG |
| 4 | M | 41 | 119 | 16 | 38 | 3 | AN |
| 5 | M | 43 | 118 | 3 | 43 | 0.5 | Unknown |
| 6 | F | 46 | 116 | 8 | 30 | 16 | Unknown |
| 7 | F | 47 | 108 | 21 | 0 | 47 | Genetic |
| 8 | F | 47 | 125 | 16 | 5 | 42 | MG |
| 9 | F | 55 | 117 | 21 | 46 | 9 | Unknown |
| 10 | M | 57 | 123 | 6 | 57 | 0.2 | AN |
| 11 | F | 60 | 93 | 14 | 3 | 57 | Unknown |
| 12 | F | 70 | 110 | 16 | 18 | 52 | Unknown |
| 13 | F | 72 | 116 | 16 | 0 | 72 | Unknown |
| Mean | | 48.8 | 115.6 | 12.4 | 23.8 | 25 | |
| SEM | | 3.8 | 2.3 | 1.8 | 5.5 | 7.1 | |

PTA, pure tone average thresholds at 500, 1000, and 2000 Hz.

AAOPHL, age at onset of profound hearing loss.

DOD, duration of deafness.

MG, meningitis.

AN, acoustic neuroma.

LVAS, large vestibular aqueduct syndrome.

3. Discussion

3.1. Studied auditory cortex regions

Monaural spectral or temporal RSS stimuli evoked bilateral activity in core, belt, and parabelt ACFs in individuals with UHL and age-matched NH participants. The studied regions included auditory cortex subdivisions defined a priori using surface-based reconstructions of quantitative cytoarchitectonic probabilistic maps and in vivo myelin gradients (Eickhoff et al., 2005; Glasser and Van Essen, 2011; Morosan et al., 2001; Rademacher et al., 2001). Consequently, the Te1 subdivisions (Te1.0, Te1.1, and Te1.2) included core ACFs A1 and R, which mostly, but not entirely, corresponded to a morphological definition of primary auditory cortex based on HG (Penhune et al., 1996; Warrier et al., 2009). The Te2 and Te3 subdivisions, in planum temporale and planum polare, respectively, encompassed caudal and lateral belt ACFs. The analyses therefore related directly to ACFs identified in humans (da Costa et al., 2011; Downer et al., 2011; Humphries et al., 2010; Woods and Alain, 2009; Woods et al., 2009, 2010, 2011) as homologues of auditory cortical regions in primates (Kaas and Hackett, 2000). Boundaries defined by myelin gradients in the STG/STS and within superior aspects of Brodmann area 22 (Glasser and Van Essen, 2011) placed the analyzed parabelt ACF lateral and posterior to the superior temporal plane.

3.2. Enhanced ipsilateral activation in UHL

The current study confirmed reports of strengthened activation in the hemisphere ipsilateral to the intact ear of participants with UHL compared to ipsilateral responses evoked by matching monaural stimulation in NH (Bilecen et al., 2000; Hanss et al., 2009; Khosla et al., 2003; Langers et al., 2005; Ponton et al., 2001; Scheffler et al., 1998; Schmithorst et al., 2005; Tschopp et al., 2000; Vasama and Mäkelä, 1997). All core and belt ACFs located in the Te1 subdivisions showed larger ipsilateral response magnitudes in UHL than in NH (Fig. 2). In belt ACFs within the Te2 and Te3 subdivisions, only RE stimulation in UHL evoked larger ipsilateral (right hemisphere) responses than in NH (Fig. 2). These results are consistent with reports of greater lateralization reorganization in the right hemisphere of individuals with left ear deafness (Hanss et al., 2009; Khosla et al., 2003; Schmithorst et al., 2005). For example, assessment of interhemispheric latencies showed the greatest reduction in hemispheric differences in adults with left ear deafness (Khosla et al., 2003).

One previously offered hypothesis was that different lateralization effects based on deafness side indicated predominance of the left hemisphere for language processing and greater reorganization plasticity in the less dominant right hemisphere (Khosla et al., 2003). Current findings suggest this explanation may primarily apply to core and belt ACFs. The parabelt STG/STS ROI showed reduced activation in UHL compared to NH irrespective of deafness side and lateralization of the inputs.

The observed differences in reorganized lateralization by ACF and affected ear in unilateral deafness were consistent with a hierarchical organization of processing in auditory cortex. Within this hierarchy, core fields generally show greater response distinctions than belt ACFs for sensory parameters such as tonotopic organization, sound intensity, and contralateral inputs, whereas responses in belt ACFs are more prone to the effects of directed attention (Woods et al., 2010). Belt ACFs in planum temporale (Te2) are also involved in the analysis of sounds with complex spectrotemporal structure (Griffiths and Warren, 2002). Additionally, higher-stage processing of speech-like sounds and spectral/temporal dichotomies occurs in parabelt ACFs lateral to HG, extending from STG into STS (Obleser et al., 2008; Schönwiesner et al., 2005b; Scott and Johnsrude, 2003; Scott and Wise, 2004; Zatorre and Belin, 2001; Zatorre et al., 2002). Lateralization changes may therefore have been prominent in core ACFs because their activity more closely reflects sensory inputs relayed directly to cortex from the ventral nucleus of the medial geniculate body. The enhanced ipsilateral activation possibly reflected disinhibition of the roughly 30% of inputs that are uncrossed, following removal or reduction of the normally predominant crossed inputs. Belt and parabelt ACFs might have been more susceptible to unilateral deafferentation because these fields rely more on successive intracortical inputs (Kaas and Hackett, 2000), which might benefit less from the disinhibition that follows loss of the more direct crossed sensory inputs to core fields. Future studies might establish whether the complex sound parameters processed in belt fields differ between the hemispheres (Scott and Johnsrude, 2003).
The effect of UHL in the STG/STS region appeared to be a global reduction in activation, potentially more consequential for auditory functions such as speech recognition in noise or representing sound sequences over short periods of time, as required by the current task (Scott and Johnsrude, 2003).

3.3. Contralateral response asymmetry

Prior studies reported that, in individuals with normal binaural hearing, monaural stimulation evokes larger magnitude and shorter latency responses in the hemisphere contralateral to the stimulated ear (Jäncke et al., 2002; Khosla et al., 2003; Ponton et al., 2001; Vasama and Mäkelä, 1997; Zatorre and Binder, 2000). In NH, current results with RE stimulation confirmed larger contralateral (left hemisphere) responses in all studied ROI except Te3 and STG/STS. With LE stimulation, responses in the Te2, Te3, and parabelt STG/STS ROI were significantly larger in the contralateral right hemisphere.

In UHL, the presence of lateralization asymmetry varied by auditory subdivision and deaf ear. The lateralization differences in the Te1 subdivisions of UHL participants may have been influenced by the hypothesized dominance of the left hemisphere for “cortical processes of spoken language perception and production” (Khosla et al., 2003). Consistent with this, for Te1.1 in UHL we observed larger responses in the left hemisphere both contralateral to RE stimulation and ipsilateral to LE stimulation (Fig. 3). In auditory belt ACFs included in Te2 and Te3, LE stimulation in UHL evoked larger contralateral right hemisphere responses, resembling findings in NH. In the Te3 subdivision of UHL, however, RE stimulation evoked larger ipsilateral responses in the right hemisphere (Fig. 3). These results may indicate greater plasticity in the right hemisphere, which showed larger contralateral and ipsilateral responses in belt ACFs with LE and RE stimulation, respectively. The parabelt STG/STS subdivision showed no contralateral response asymmetry in UHL; in NH, such asymmetry occurred only in the right hemisphere with LE stimulation.

The pattern of contralateral asymmetries, especially in UHL, changed progressively across fields: core Te1 ACFs showed left hemisphere dominance with RE or LE stimulation; belt Te2 and Te3 fields showed greater reorganization in the right hemisphere; and the parabelt ROI showed no contralateral asymmetry. These differences across ACFs might reflect hierarchical processing based on sequential connections outward from core to parabelt fields (Kaas and Hackett, 2000), the decreasingly discrete tonotopic organization from core to parabelt ACFs (Kaas and Hackett, 2000; Woods and Alain, 2009), and the contrast between activation by basic acoustic features in core and responses to more abstract stimulus parameters, with modulation by attention, in surrounding ACFs (Woods et al., 2009, 2011). In parabelt ACFs, deafferentation in UHL may have reduced excitatory cortical responses in some regions, altering contralateral response asymmetry. A corollary notion is that disinhibition enhanced ipsilateral activation relative to responses in NH.

One concern is that the bilateral activation observed in the NH group could partly reflect sound reaching the blocked ear. Although the ear plug and circumaural headphones attenuated sound input to the contralateral ear, they might not have prevented transcranial transmission by bone conduction from the stimulated ear. However, Brännström and Lantz (2010) reported a mean interaural attenuation of 64 dB for this type of conduction of pure tones within the frequency range of the RSS stimulus delivered through circumaural headphones; this attenuation would place the 70 dB SPL RSS at or below audibility for the contralateral ear.
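The audibility argument amounts to a subtraction. Assuming the 64 dB mean interaural attenuation (IA) applies uniformly across the RSS band (individual attenuation varies around this mean), the level reaching the non-test ear is approximately:

$$
L_{\text{contra}} \approx L_{\text{stim}} - \mathrm{IA} = 70\ \text{dB SPL} - 64\ \text{dB} = 6\ \text{dB SPL}
$$

A crossover level this low is consistent with the conclusion that bone-conducted sound would be at or below audibility in the blocked ear.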

3.4. Temporal/Spectral dichotomy and effects of task and study design

In normal hearing individuals, parabelt auditory regions in the superior temporal gyrus/superior temporal sulcus showed a dichotomous left and right hemisphere predominant activation, respectively, for temporally and spectrally modulated sounds (Obleser et al., 2008; Schönwiesner et al., 2005b; Tervaniemi and Hugdahl, 2003; Zatorre and Belin, 2001; Zatorre and Gandour, 2008; Zatorre et al., 2002). The temporal/spectral dichotomy hypothesis was that the left auditory cortex is best at processing fast temporal cues, especially critical for speech, and that the right auditory cortex is best for processing tonal or spectral information. Evidence for this dichotomy arose using a paired-comparison task with specified spectral or temporal parameterization of tones (Zatorre and Belin, 2001), passive listening to 7 s intervals of spectral or temporal RSS with complexity levels matched to prior psychophysical assessments (Schönwiesner et al., 2005b), or ratings of the intelligibility of spoken words degraded by 1 of 25 levels of spectral and/or temporal rate characteristics (Obleser et al., 2008). In each of these studies, participants listened actively or passively to stimulation intervals with a fixed combination of spectral and/or temporal rate complexities. The activity reported in these studies occurred in parabelt ACFs and showed a temporal/spectral dichotomy: greater magnitudes on the left for temporal parameters and on the right for spectral parameters. The affected cortex was mostly anterolateral to the superior temporal plane and, on the left, overlapped with prior reports of temporal lobe areas activated during speech (Scott and Johnsrude, 2003; Scott et al., 2000, 2006). 
For example, using similar RSS stimuli of longer duration in a group of NH listeners, Schönwiesner and colleagues (2005b) showed asymmetric hemispheric activation associated with spectral complexity in left and right HG (Te1 subdivisions) and in the parabelt right anterolateral superior temporal gyrus (AL-STG). Group effects of temporal complexity were found primarily in left AL-STG. Individual effect sizes from the ROIs showed these lateralization distinctions for many, but not all, subjects in AL-STG, whereas individual results in HG were inconsistent and non-significant.

Because the evidence in NH indicated that each hemisphere contributes selectively to perceiving temporally or spectrally modulated acoustic stimuli, we asked whether the side of hearing loss altered these asymmetrical functions within core, belt, or parabelt fields. Several procedural factors in the present study might have prevented finding evidence of a temporal/spectral dichotomy, despite our presenting spectrally and temporally manipulated RSS stimuli similar to those used previously (Schönwiesner et al., 2005b). A major difference was that participants in the present study performed a vigilance task in which they detected a change when a rare target RSS occurred. Detecting a change, however, does not necessarily require knowing whether the change was an increase or a decrease in RSS complexity relative to the non-target RSSs. The short 2 s stimulation intervals needed for the single-event design may also have encouraged simple change detection between non-target and target trials without providing the time or need to characterize the RSS parameters. Because accuracy on this task was at or near ceiling, participants easily detected the rare change condition. There were also no differences in response magnitude between runs whose spectral or temporal stimuli had mostly low or mostly high complexity, indicating that the predominant complexity within a run did not differentially alter BOLD responses. Similarly, responses to spectral and temporal RSS did not differ significantly. Consequently, the RSS stimulus on each trial might have evoked responses comparable to those evoked by the variety of transient sounds used in previous studies of UHL participants. As observed here and reported previously (Bilecen et al., 2000; Khosla et al., 2003; Ponton et al., 2001; Scheffler et al., 1998; Tschopp et al., 2000; Vasama and Mäkelä, 1997), transient acoustic events best activated core and belt ACFs.
In addition, the tasks in prior studies that showed a left/right hemisphere temporal/spectral dichotomy involved prolonged 7 s exposures to a given RSS within an epoch (Schönwiesner et al., 2005a).

Using a silent event design (Amaro and Barker, 2006; Belin et al., 1999; Hall et al., 1999) might also have affected the current findings. We employed an iterative stimulus delay schedule to avoid masking of BOLD responses to RSS stimuli by scanner noise (Gaab et al., 2007; Talavage and Edmister, 2004). In particular, the event design allowed a hemodynamic response function to be determined by piecemeal reassembly of BOLD signals, re-sorting activation by the eight stimulus delay times spaced at 1 s intervals (Amaro and Barker, 2006; Belin et al., 1999; Hall et al., 1999). These constraints dictated brief 2 s RSS stimulation per trial. However, the reconstituted BOLD response time course to the RSS stimuli allowed us to assess whether response dynamics differed between NH and UHL. The time courses of the BOLD hemodynamic responses were similar in all auditory subdivisions irrespective of response lateralization and stimulated ear. Because the hemodynamic functions were equivalent for all recorded BOLD signals, within- and between-group statistical comparisons could reliably use the magnitudes at three stimulus delays (5, 6, and 7 s), the intervals containing the BOLD response peak.
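The piecemeal reassembly can be illustrated by grouping sparse per-trial samples by their stimulus-to-EPI delay and averaging within each delay. This is a simplified sketch: in the study the per-delay magnitudes were estimated with GLM regressors rather than by direct averaging, and the function names and toy values below are hypothetical.

```python
import numpy as np


def reconstruct_time_course(delays_s, responses):
    """Re-sort sparse per-trial BOLD samples by stimulus-to-EPI delay and average them.

    delays_s: one delay (s) per trial; responses: the matching per-trial BOLD values.
    Returns the sorted unique delays and the mean response at each delay.
    """
    delays_s = np.asarray(delays_s)
    responses = np.asarray(responses, dtype=float)
    unique = np.unique(delays_s)
    means = np.array([responses[delays_s == d].mean() for d in unique])
    return unique, means


def peak_window_mean(unique_delays, means, window=(5, 6, 7)):
    """Average the reconstructed response over the delays that bracket the peak."""
    mask = np.isin(unique_delays, window)
    return float(means[mask].mean())


# Toy data only: two samples at 2 s, two at 5 s, one each at 6 s and 7 s.
delays, curve = reconstruct_time_course([2, 2, 5, 5, 6, 7], [0.0, 0.2, 1.0, 1.2, 1.5, 0.9])
print(delays, curve, peak_window_mean(delays, curve))
```

Averaging over the 5 to 7 s window mirrors the choice of peak delays described above; if response shapes differed between groups, a single window would no longer be a fair summary.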

3.5. Summary and conclusions

Differences in response magnitude by lateralization varied across auditory cortical fields and hemispheres in adults with unilateral hearing loss (UHL) versus normal hearing (NH). In between-group contrasts, bilateral core auditory cortical fields (A1 and R) had larger ipsilateral response magnitudes in UHL than in NH with monaural stimulation. In the Te2 and Te3 belt fields, the right hemisphere showed enhanced ipsilateral responses in UHL compared to NH. Left hemisphere contralateral response amplitudes were larger in NH for Te2 but comparable between groups for Te3. In caudal parabelt fields, both hemispheres showed larger contralateral response amplitudes in NH than in UHL.

In within-group contrasts for NH, contralateral response amplitudes were larger in the left hemisphere for core fields and mostly larger in the right hemisphere for belt and parabelt fields. In within-group contrasts for UHL, contralateral response amplitudes were larger in the left hemisphere core, larger in the right hemisphere for the belt fields associated with Te2 and Te3, and comparable to ipsilateral amplitudes in parabelt ACFs. Belt fields showed enhanced ipsilateral responses with RE stimulation that were not evident with LE stimulation. The enhanced ipsilateral responses in right hemisphere Te2 and Te3 ROIs in UHL compared to NH suggested a plasticity effect in this hemisphere. However, with right ear deafness in UHL, left hemisphere ipsilateral responses in Te2 and Te3 were similar in the two groups, suggesting left hemisphere predominance for these belt regions. Previous reports of a similar finding suggested greater resilience of speech functions in the left hemisphere against reduced crossed inputs from a deafferented right ear. The current evidence suggests that this resilience might be a property of higher-level belt fields rather than core ACFs, because core ACFs showed stronger ipsilateral activation in UHL bilaterally. Furthermore, UHL showed bilateral reductions in response magnitude in parabelt fields irrespective of the deaf ear. In NH, nearly all auditory regions showed a contralateral asymmetry in response magnitude; in UHL, few auditory subdivisions exhibited a similar contralateral asymmetry.

4. Experimental procedures

4.1. Participants

All participants provided informed consent in compliance with the Code of Ethics of the World Medical Association (Declaration of Helsinki) and guidelines approved by the Human Studies Committee of Washington University. Individuals with unilateral hearing loss (UHL) retained an intact left or right ear. Pure tone average thresholds (PTAs) across octave frequencies (ANSI, 1989) for the deaf ear in the UHL groups were ≥90 dB HL, except for two participants whose PTAs were 84 and 89 dB HL; the intact ear had PTAs ≤25 dB HL, except for one participant with a PTA of 31 dB HL (Table 1). The cause of deafness in UHL varied, and deafness duration ranged from several months to years (Table 1). Age and gender were matched between UHL subgroups and were comparable to those of the normal hearing (NH) controls, who received stimulation in one ear. There were 26 UHL participants (16 female; mean age = 47 years, SEM 2.8, range 25 to 72) and 24 NH participants (14 female; mean age = 47 years, SEM 2.8, range 25 to 71). Participants with UHL were recruited through the outpatient audiology and otology clinics, and those with NH through the Volunteer for Health program at Washington University School of Medicine. All participants indicated right-handed preference on the Edinburgh Handedness Inventory (Oldfield, 1971); left-handedness was an exclusion criterion.
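The audiometric inclusion rule can be sketched as a pure tone average followed by two threshold checks. The frequency set below is an assumption (the text says only "octave frequencies"), and the helper names are hypothetical.

```python
from statistics import mean

# Hypothetical frequency set: the text specifies only "octave frequencies".
PTA_FREQS_HZ = (500, 1000, 2000, 4000)


def pure_tone_average(thresholds_db_hl, freqs=PTA_FREQS_HZ):
    """Mean audiometric threshold (dB HL) across the chosen octave frequencies."""
    return mean(thresholds_db_hl[f] for f in freqs)


def meets_nominal_uhl_criteria(deaf_ear, intact_ear, deaf_min_db=90.0, intact_max_db=25.0):
    """Nominal UHL rule: deaf-ear PTA >= 90 dB HL and intact-ear PTA <= 25 dB HL."""
    return (pure_tone_average(deaf_ear) >= deaf_min_db
            and pure_tone_average(intact_ear) <= intact_max_db)


# Illustrative thresholds only (dB HL keyed by frequency in Hz).
deaf = {500: 95, 1000: 100, 2000: 90, 4000: 85}
intact = {500: 10, 1000: 15, 2000: 20, 4000: 15}
print(pure_tone_average(deaf), meets_nominal_uhl_criteria(deaf, intact))
```

A strict rule like this would flag the participants with deaf-ear PTAs of 84 and 89 dB HL and the one with an intact-ear PTA of 31 dB HL, so such exceptions have to be handled explicitly.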

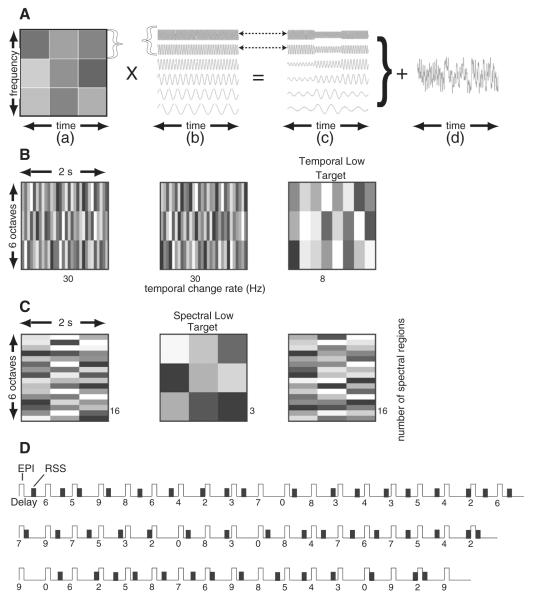

4.2. Auditory stimulation

Stimuli were random spectrogram sounds (RSS): noise-like sounds with no resemblance to speech, pure tones, or music. Creating an RSS involved independent control of temporal or spectral sound parameters. The resulting RSS stimuli all had similar bandwidth, overall intensity, and duration, even though they varied systematically in temporal or spectral complexity (Schönwiesner et al., 2005b; Warrier et al., 2009). An RSS stimulus was constructed by summing pure tones after modulating them by a spectrographic array of random amplitudes (represented by grey-level shades in Figs. 4A–C). A schematic example shows a set of 6 tones¹ (Fig. 4A) modulated by 3 rows of different amplitudes that also changed three times within a fixed interval (Fig. 4Aa). Each horizontal row of amplitudes modulated an equal proportion of the pure tones and thereby grouped the input frequencies into a spectral region (Fig. 4Ab). Using more rows of random amplitudes split the input frequencies into a greater number of spectral bands. Similarly, each vertical column of amplitudes modulated a proportion of the total stimulus interval and thereby set the rate of amplitude change. The numbers of rows and columns in the amplitude spectrogram directly determined RSS spectral and temporal complexity, respectively. Multiple tokens existed for each complexity level because a different random intensity grid was used for each token.
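The construction described above can be sketched in a few lines. This is a minimal illustration, not the authors' stimulus code: it assumes piecewise-constant amplitude steps, random tone phases, contiguous equal-sized tone groups per grid row, and RMS normalization, none of which the text specifies. The function name `make_rss` and its parameters are hypothetical.

```python
import numpy as np


def make_rss(tone_freqs_hz, n_bands, rate_hz, duration_s=2.0, fs=44100, seed=None):
    """Sketch of a random spectrogram sound (RSS).

    Rows of a random amplitude grid modulate contiguous groups of tones (spectral
    complexity); columns set how often the amplitudes change (temporal complexity).
    """
    rng = np.random.default_rng(seed)
    n_samples = int(round(duration_s * fs))
    t = np.arange(n_samples) / fs
    n_cols = max(1, int(round(rate_hz * duration_s)))
    grid = rng.random((n_bands, n_cols))  # random amplitudes in [0, 1)
    # Map each sample to the grid column (time step) it falls in.
    col_index = np.minimum((t * n_cols / duration_s).astype(int), n_cols - 1)
    signal = np.zeros(n_samples)
    bands = np.array_split(np.asarray(tone_freqs_hz, dtype=float), n_bands)
    for row, band_freqs in enumerate(bands):
        envelope = grid[row, col_index]  # piecewise-constant envelope for this band
        phases = rng.uniform(0.0, 2.0 * np.pi, size=band_freqs.size)
        carrier = np.sin(2.0 * np.pi * band_freqs[:, None] * t[None, :]
                         + phases[:, None]).sum(axis=0)
        signal += envelope * carrier
    # Normalize to unit RMS so that different complexities share an overall level.
    return signal / np.sqrt(np.mean(signal ** 2))


# Schematic case in the spirit of Fig. 4A: 6 tones, 3 rows, 3 amplitude changes per second.
tones = np.geomspace(250.0, 16000.0, 6)
rss = make_rss(tones, n_bands=3, rate_hz=3, seed=0)
```

Each band's tones are synthesized as one array, which is fine for a handful of tones; with the 1638 tones used in the study, looping over tones would keep memory use manageable.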

Fig. 4.

Spectral and temporal random spectrogram sounds (RSS) and interrupted iterative stimulus presentation sequence. A. An example of creating an RSS stimulus from a spectrographic grid of 9 random sound intensities (a) and a grouping of 6 pure tones (b). Amplitude-modulated tones resulted from multiplying each group of tones by one row of random amplitudes; the columns set the rate of amplitude change over time, and the rows set the amplitude modulation across the spectrum (c). Summation across the groups of modulated tones produced a single RSS (d). B. Temporal RSS used spectrograms in which the temporal rate reflected the number of columns of different amplitudes per unit time. Higher-complexity sounds had 30 Hz and lower-complexity sounds 8 Hz rates of change. Three tone groups divided the six octaves of tone frequencies into equal numbers of pure tones. C. Spectral RSS used spectrograms containing different subdivisions of the 6 octaves (rows in the spectrogram). Higher spectral complexity sounds had 16 and lower complexity sounds 3 spectral subdivisions. D. A single imaging run contained multiple 11 s volume acquisitions (TR), each beginning with a 2 s echo-planar imaging (EPI) pulse sequence. RSS presentation occurred during the silent period preceding each acquisition, with delays of 2–9 s before the subsequent TR. Each delay time was repeated six times per run in random order.

Participants performed an attention/vigilance odd-ball task that required detecting four randomly occurring target trials whose complexity differed from that of 44 non-target trials. In imaging runs dedicated to temporal rate parameters, the number of spectral regions was constant at 3; in runs involving spectral parameters, the temporal change rate was always 3 Hz. In one run with temporal RSS, targets had temporal rates of 8 Hz and non-targets had rates of 30 Hz (Fig. 4B); in another run, targets had temporal rates of 30 Hz and non-targets had rates of 8 Hz. Thus, in separate runs, the more frequent non-target trials had low or high RSS temporal complexity. Likewise, in runs involving spectral RSS, targets had 3 spectral bands and non-targets 16 (Fig. 4C), or targets had 16 bands and non-targets 3, yielding low- or high-complexity targets, respectively. Before each run, participants practiced distinguishing between three non-target trials and one target trial, using the temporal or spectral parameters and target complexity of the upcoming run. Participants indicated target trials by pressing an optical response key that illuminated an LED in the control room. Responses were recorded manually during each run and tagged as correct detections, misses, or false positives. Of the four imaging runs, two used temporally and two used spectrally modulated RSS, and within each pair one run had low-complexity and the other high-complexity targets.
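The odd-ball bookkeeping for one run (4 targets among 48 trials, then tagging responses as correct, missed, or false positive) can be expressed with set operations. In the study this tagging was done manually; the helpers below are a hypothetical sketch, and drawing target positions uniformly at random is an assumption.

```python
import random


def assign_targets(n_trials=48, n_targets=4, seed=None):
    """Pick which trials carry the rare target RSS (uniform random draw, an assumption)."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(n_trials), n_targets))


def score_run(target_trials, responded_trials):
    """Tag odd-ball performance for one run.

    target_trials: indices of trials containing the target RSS.
    responded_trials: indices of trials on which the response key was pressed.
    """
    targets, responses = set(target_trials), set(responded_trials)
    return {
        "correct": len(targets & responses),         # targets that were detected
        "missed": len(targets - responses),          # targets with no key press
        "false_positive": len(responses - targets),  # key presses on non-targets
    }


print(score_run({3, 17, 29, 41}, {3, 17, 30, 41}))
```

Scoring by trial index assumes each key press can be attributed unambiguously to one trial, which the 11 s trial spacing makes plausible.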

Each 2 s RSS stimulus occurred during a 9 s silent interval in an interrupted single-event design (Belin et al., 1999) with an 11 s volume repetition time (TR). Blood oxygenation level-dependent (BOLD) responses to an RSS stimulus were captured during the 2 s of echo planar imaging (EPI) at the beginning of the next TR. The delay from RSS onset to the beginning of the EPI varied randomly from 2 to 9 s (Fig. 4D), so that different 1 s segments of the full hemodynamic response were imaged. A custom C++ program (Microsoft Corp, Redmond, WA) presented the RSS wave files synchronized to the TRs, with a different wave file for each stimulus delay. A pseudo-randomized sequence of these files presented six repeats of all eight delays per run (Fig. 4D), allowing an average time course to be reconstructed from the iterated BOLD responses.
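The timing constraints above can be checked with a small schedule generator. Given a 2 s EPI at the start of each 11 s TR, a delay of d seconds places the RSS onset 11 − d s into the preceding TR; with d = 2 the sound ends exactly as the next EPI begins. The plain shuffle below stands in for the study's pseudo-randomization, whose constraints are not described, and the interspersed no-stimulus frames are not modeled; all names are hypothetical.

```python
import random

TR_S = 11.0               # volume repetition time (s), from the text
EPI_S = 2.0               # EPI acquisition at the start of each TR (s)
STIM_S = 2.0              # RSS duration (s)
DELAYS_S = range(2, 10)   # onset-to-EPI delays of 2 to 9 s
REPEATS = 6               # repeats of each delay per run


def make_schedule(seed=None):
    """Return (delay_s, onset_s) pairs, one per trial, in shuffled order.

    onset_s is measured from the start of the TR in which the sound plays;
    the response is sampled by the EPI that opens the next TR.
    """
    rng = random.Random(seed)
    delays = [d for d in DELAYS_S for _ in range(REPEATS)]
    rng.shuffle(delays)
    schedule = []
    for d in delays:
        onset = TR_S - d
        # The sound must start after the EPI and end before the next one.
        if onset < EPI_S or onset + STIM_S > TR_S:
            raise ValueError(f"delay {d} s does not fit in the silent gap")
        schedule.append((d, onset))
    return schedule


schedule = make_schedule(seed=0)
```

The longest delay (9 s) puts the onset immediately after the EPI ends, so the eight delays exactly tile the 9 s silent gap.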

Participants heard RSS stimuli in one ear through an MR-compatible headphone system (Resonance Technology, Inc. [RTI], Northridge, CA) that received sounds played through a Roland UA-30 USB audio interface attached to a laptop and connected to a Radio Shack audio mixer. At the start of each test session, the audio mixer input–output levels were calibrated using a 1 kHz sine wave file played through the laptop audio system. Stimuli were delivered at 70 dB SPL² to one ear and muted to the other by adjusting the master gain and the left and right channel gains of the RTI system. An E-A-R soft Fx taper fit earplug (E.A.R. Inc, Boulder, CO) and the cushioned circumaural headphone shell sealed and blocked sound input to the unstimulated ear.

4.3. Image acquisition and preprocessing

A Siemens 3 T TRIO scanner (Erlangen, Germany) with a twelve-element RF head matrix coil acquired whole-brain images. Participants were blindfolded, and room lights were off during task-based runs.

A gradient-recalled EPI sequence (TR 11 s, echo time 27 ms, flip angle 90°) measured BOLD contrast responses across 33 contiguous 4 mm axial slices with 4×4 mm in-plane resolution. Slices were acquired in interleaved odd-even order parallel to the anterior–posterior commissure plane. Each run had 63 frames, 48 of which contained RSS stimuli. Five frames with no stimuli occurred at the beginning and end of a run. Additionally, single frames with no RSS were randomly interspersed (Fig. 4D). The first frame of each run and software-implemented dummy frames at the start of the EPI sequence allowed the magnetization to reach equilibrium.

Structural images included a T1-weighted magnetization-prepared rapid gradient echo (MP-RAGE) sequence acquired across 176 sagittal slices³ and a T2-weighted image acquired across 33 axial slices.⁴ The T2-weighted image aided registration of the EPI to the MP-RAGE images; 12-parameter affine transforms were computed using an average of the first frames of each EPI run (Ojemann et al., 1997).
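A 12-parameter affine transform is a 3×3 linear part (9 parameters) plus a translation (3 parameters), conveniently held as a 4×4 homogeneous matrix. Chaining such matrices, for example assuming a path EPI → T2 → MP-RAGE → atlas, yields one combined matrix, which is what allows all images to be resampled in a single step. The helpers below are a hypothetical sketch, not the registration software used in the study.

```python
import numpy as np


def affine_from_params(linear_3x3, translation_3):
    """Build a 4x4 homogeneous matrix from the 12 affine parameters."""
    m = np.eye(4)
    m[:3, :3] = np.asarray(linear_3x3, dtype=float)  # 9 linear parameters
    m[:3, 3] = np.asarray(translation_3, dtype=float)  # 3 translations
    return m


def compose(*transforms):
    """Compose transforms given in the order they are applied (first to last)."""
    out = np.eye(4)
    for t in transforms:
        out = t @ out
    return out


def apply_affine(transform, xyz):
    """Apply a 4x4 affine to one point or an (n, 3) array of points."""
    xyz = np.atleast_2d(np.asarray(xyz, dtype=float))
    homog = np.hstack([xyz, np.ones((len(xyz), 1))])
    return (homog @ transform.T)[:, :3]


# Toy chain: shift by 1 mm in x, then scale by 2.
shift = affine_from_params(np.eye(3), [1.0, 0.0, 0.0])
scale = affine_from_params(2.0 * np.eye(3), [0.0, 0.0, 0.0])
combined = compose(shift, scale)
print(apply_affine(combined, [1.0, 1.0, 1.0]))
```

Composing first and resampling once avoids accumulating interpolation blur from repeated resampling.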

After correction for head movement within and across slices, preprocessing of the EPI images adjusted for differences in slice acquisition times by sinc interpolation so that all slices were realigned to the start of the first volume. Global differences in signal intensity were adjusted by normalizing each scan so that the global mode of all scans was set to 1000. A single algorithmic step resampled all images into 2 mm isotropic voxels and registered them to a Talairach atlas template (Talairach and Tournoux, 1988). The atlas template was spatially normalized (Lancaster et al., 1995) and created from MP-RAGE images of adults (Buckner et al., 2004) similar in age to the study sample.
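Intensity normalization to a mode of 1000 can be sketched by estimating the mode from a histogram of in-brain intensities and rescaling. The histogram-based mode estimate, the default bin count, and the "nonzero voxels" fallback mask are assumptions; the exact procedure in the study's pipeline is not described.

```python
import numpy as np


def mode_normalize(run, target=1000.0, n_bins=256, mask=None):
    """Scale a run so that the mode of its in-brain intensities equals `target`.

    The mode is approximated by the centre of the most populated histogram bin.
    Without a mask, nonzero voxels are treated as in-brain (an assumption).
    """
    data = np.asarray(run, dtype=float)
    values = data[mask] if mask is not None else data[data > 0]
    counts, edges = np.histogram(values, bins=n_bins)
    peak = np.argmax(counts)
    mode = 0.5 * (edges[peak] + edges[peak + 1])
    return data * (target / mode)


# Toy intensities: most values at 500, plus a spread between 100 and 900.
vals = np.concatenate([np.full(900, 500.0), np.linspace(100.0, 900.0, 100)])
normalized = mode_normalize(vals)
```

The bin width limits precision, so the most common value lands within about one bin width of 1000 rather than exactly on it.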

4.4. Statistical analyses

Before general linear model (GLM) analysis of the BOLD signals, the data were spatially smoothed with a Gaussian filter of 4 mm full width at half maximum (FWHM). The GLM for each run in every participant contained regressors estimating the BOLD signal magnitude per voxel for eight events, corresponding to delays of 2 to 9 s from the onset of an RSS stimulus to the start of the EPI in the next TR. Additional regressors modeled the baseline, linear trend, and low-frequency components (<0.014 Hz) of the BOLD signal in each scan.
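The smoothing kernel and per-run design can be sketched as follows. A Gaussian's standard deviation is FWHM / (2√(2 ln 2)), so a 4 mm FWHM on 2 mm voxels is about 0.85 voxels. For the design matrix, the frame coding (0 for frames without a stimulus), the trend scaling, and the use of discrete cosine regressors for the low-frequency terms are assumptions; this is not the software used in the study.

```python
import numpy as np


def fwhm_to_sigma(fwhm_mm, voxel_mm=1.0):
    """Convert a Gaussian FWHM to its standard deviation, in voxel units."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0))) / voxel_mm


def design_matrix(frame_delays, tr_s=11.0, cutoff_hz=0.014, delays=range(2, 10)):
    """Per-run GLM design for a sparse, delay-coded event design.

    frame_delays: for each frame, the stimulus-to-EPI delay (s) of the RSS that
    preceded it, or 0 for frames without a stimulus.
    Columns: one indicator per delay, baseline, linear trend, and discrete
    cosine regressors with frequencies below `cutoff_hz`.
    """
    frame_delays = np.asarray(frame_delays)
    n = frame_delays.size
    events = [(frame_delays == d).astype(float) for d in delays]
    baseline = np.ones(n)
    trend = np.linspace(-1.0, 1.0, n)
    # Cosine basis k has frequency k / (2 * n * tr); keep those below the cutoff.
    n_cos = int(np.floor(cutoff_hz * 2 * n * tr_s))
    frames = np.arange(n)
    cosines = [np.cos(np.pi * k * (frames + 0.5) / n) for k in range(1, n_cos + 1)]
    return np.column_stack(events + [baseline, trend] + cosines)


# Hypothetical 63-frame run: 5 empty frames, 48 stimuli plus 5 empty frames shuffled, 5 empty frames.
rng = np.random.default_rng(0)
middle = np.array([d for d in range(2, 10) for _ in range(6)] + [0] * 5)
rng.shuffle(middle)
frames = np.concatenate([np.zeros(5, dtype=int), middle, np.zeros(5, dtype=int)])
X = design_matrix(frames)
```

With 63 frames at an 11 s TR, the 0.014 Hz cutoff admits 19 cosine regressors, giving 29 columns in total; ordinary least squares (for example `np.linalg.lstsq`) would then estimate one magnitude per delay.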

A two-part initial analysis examined the distribution of activity to the RSS stimuli in each hearing and stimulated-ear subgroup (UHL-LE, UHL-RE, NH-LE, and NH-RE). First, a separate whole-brain, voxel-level random-effects ANOVA for each subgroup indicated whether activation of auditory cortex was qualitatively similar across groups. In each ANOVA, subjects were the random factor, and the within-group factor was stimulus delay, comparing BOLD magnitudes across all runs for delay events d2 and d7. For the d2 delay, no interval separated the end of a stimulus from the onset of EPI in the following TR, resulting in near-baseline BOLD amplitudes. For the d7 delay, 5 s intervened between the end of a stimulus and the onset of EPI, yielding detectable BOLD signals given an expected hemodynamic delay of ~2 s. The resulting brain maps showed where activation occurred during the stimulus trials. The ANOVA results were corrected for multiple comparisons using a joint z-score/cluster-size threshold of z = 3.5 and 24 face-contiguous voxels, corresponding to a corrected p < 0.05 in a Monte Carlo simulated distribution (Forman et al., 1995). Next, random-effects t-tests in each subgroup evaluated the contrast between BOLD magnitudes for the d7 and d2 delays separately for the two runs of each RSS type (i.e., spectral or temporal parameters).
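The joint z-score/cluster-size correction can be sketched as: threshold the z map, group suprathreshold voxels into face-contiguous clusters (6-neighbour connectivity), and keep only clusters of at least 24 voxels. The thresholds come from the text; the breadth-first labeling and the function name are a hypothetical illustration, and the Monte Carlo derivation of the threshold pair is not reproduced.

```python
from collections import deque

import numpy as np

# The six neighbours that share a face with a voxel in 3D.
FACE_NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_threshold(zmap, z_thresh=3.5, min_size=24):
    """Keep voxels with z > z_thresh lying in face-contiguous clusters of >= min_size voxels."""
    zmap = np.asarray(zmap, dtype=float)
    supra = zmap > z_thresh
    visited = np.zeros_like(supra, dtype=bool)
    keep = np.zeros_like(supra, dtype=bool)
    for seed in zip(*np.nonzero(supra)):
        if visited[seed]:
            continue
        # Breadth-first search over face neighbours collects one cluster.
        cluster, queue = [], deque([seed])
        visited[seed] = True
        while queue:
            v = queue.popleft()
            cluster.append(v)
            for dx, dy, dz in FACE_NEIGHBOURS:
                nb = (v[0] + dx, v[1] + dy, v[2] + dz)
                in_bounds = all(0 <= nb[i] < supra.shape[i] for i in range(3))
                if in_bounds and supra[nb] and not visited[nb]:
                    visited[nb] = True
                    queue.append(nb)
        if len(cluster) >= min_size:
            for v in cluster:
                keep[v] = True
    return keep


# Toy map: a 27-voxel cluster survives, an 8-voxel cluster does not.
z = np.zeros((10, 10, 10))
z[1:4, 1:4, 1:4] = 4.0
z[6:8, 6:8, 6:8] = 5.0
surviving = cluster_threshold(z)
```

Face contiguity is stricter than edge or corner contiguity, so voxels touching only diagonally are counted as separate clusters.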

The second-stage analysis examined contrasts in activity extracted individually from regions of interest (ROIs). In this analysis, regional ANOVAs (PROC GLM, Statistical Analysis System version 9.1.3, SAS Institute, Cary, NC), each with subjects as a random factor, assessed the percent change from baseline in response magnitudes across stimulus delays of 5 to 7 s (events d5 to d7). These magnitudes were extracted from the per-run GLM matrices of each participant. The ANOVAs evaluated differences in BOLD response amplitude within or combined across hearing groups based on several factors: (1) RSS complexity (8 vs. 30 Hz temporal rates, or 3 vs. 16 spectral bands), (2) RSS type (spectral vs. temporal), (3) hearing group (NH vs. UHL), and (4) within each hearing subgroup, lateralization (i.e., whether paired left and right hemisphere responses in homologous ROIs were ipsilateral or contralateral to the stimulated ear). Figs. 2 and 3 show the full time courses obtained from all stimulus-to-EPI delays; these plots show the average percent change in BOLD response across participants within a group and ROI.
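Converting per-delay GLM estimates into the percent-change measure used in the regional ANOVAs can be sketched as 100 × beta / baseline, then averaging over delays 5 to 7 s. Using the run's intercept as the baseline is an assumption, and the beta values below are illustrative only; the SAS ANOVAs themselves are not reproduced.

```python
import numpy as np


def percent_change(event_betas, baseline_beta, delays=range(2, 10), window=(5, 6, 7)):
    """Percent change from baseline per delay regressor, plus its mean over the peak window.

    event_betas: GLM estimates for the delay regressors, in the same order as `delays`.
    baseline_beta: the run's baseline (intercept) estimate.
    """
    pct = 100.0 * np.asarray(event_betas, dtype=float) / baseline_beta
    idx = [list(delays).index(d) for d in window]
    return pct, float(pct[idx].mean())


# Illustrative betas for d2..d9 on a mode-normalized (baseline ~1000) run.
pct, peak = percent_change([0, 2, 5, 10, 12, 8, 4, 1], baseline_beta=1000.0)
```

After mode normalization, the baseline is near 1000, so a beta of 10 corresponds to roughly a 1% signal change.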

Two procedures aided the regional analysis. First, we mapped the brains of all participants into a common surface-based atlas (PALS-B12) that accommodates gross anatomical differences to within 3% distortion (Van Essen, 2005; Van Essen and Dierker, 2007). Second, probabilistic areal borders registered on the PALS-B12 atlas surface (Glasser and Van Essen, 2011) were available for three subareas within auditory cortex, Te1.1, Te1.0, and Te1.2, and for the Te3 belt area located near planum polare (Eickhoff et al., 2005; Morosan et al., 2001; Rademacher et al., 2001). These borders reflected quantitative, observer-independent cytoarchitectonic determination of the probabilistic areal borders for Te1.0, Te1.1, Te1.2, and Te3 in 10 autopsy brains (Morosan et al., 2001). The three Te1 subdivisions exhibit well-developed layer IV granularity (koniocortex) characteristic of core regions in auditory cortex and predominantly occupy the caudomedial to rostrolateral axis of Heschl’s gyrus (HG). Te3 lies along the lateral edge of the superior temporal gyrus within planum polare, rostrolateral to Te1.0 and Te1.2. Recently, Glasser and Van Essen (2011) used volume-based probabilistic maps from the SPM anatomy toolbox (Eickhoff et al., 2005) to register the areal borders of the Te1 and Te3 subdivisions into the CARET PALS-B12 atlas space (Van Essen and Dierker, 2007). The borders represented cytoarchitectonic boundaries that overlapped in 40% of the autopsy cases. We also created borders for the Te2 belt area in planum temporale and nearby BA22 in parabelt auditory cortex using in vivo myelin gradients (Glasser and Van Essen, 2011). Te2 lies within the planum temporale, posterolateral to the Te1.1 subdivision.

The ROIs used in the current study were centered on the center-of-gravity (COG) coordinates of the borders for each region in PALS-B12 atlas space. ROIs were spheres of 5 mm radius centered on the respective COG in auditory cortex.
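The ROI construction described in the two paragraphs above can be sketched in three steps: keep border vertices whose probability meets the 40% overlap level, take their center of gravity, and select voxels whose centers lie within 5 mm of it. Unweighted averaging of vertex coordinates, the 2 mm grid, and all function names are assumptions for illustration.

```python
import numpy as np


def threshold_probability(prob, level=0.4):
    """Boolean mask of vertices whose overlap probability reaches `level`."""
    return np.asarray(prob, dtype=float) >= level


def center_of_gravity(coords_mm, weights=None):
    """Center of gravity of a region's vertex coordinates (optionally weighted)."""
    return np.average(np.asarray(coords_mm, dtype=float), axis=0, weights=weights)


def spherical_roi(center_mm, grid_shape, voxel_mm=2.0, origin_mm=(0.0, 0.0, 0.0), radius_mm=5.0):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm."""
    idx = np.indices(grid_shape).reshape(3, -1).T
    centres = np.asarray(origin_mm, dtype=float) + idx * voxel_mm
    inside = np.linalg.norm(centres - np.asarray(center_mm, dtype=float), axis=1) <= radius_mm
    return inside.reshape(grid_shape)


# Toy region: four vertices that all pass the 40% probability level.
coords = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]])
keep = threshold_probability([0.5, 0.6, 0.45, 0.9])
cog = center_of_gravity(coords[keep])
roi = spherical_roi((10.0, 10.0, 10.0), (11, 11, 11))
```

On a 2 mm grid, a 5 mm sphere centered on a voxel center contains 81 voxels, so each ROI averages over a few dozen to a hundred voxels depending on where the COG falls.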

Acknowledgments

A grant from the National Institute on Deafness and Other Communication Disorders (R01 DC009010) supported this research. The National Institute of Neurological Disorders and Stroke provided additional support for HB and AA (R01 NS37237).

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Deafness and Other Communication Disorders or the National Institute of Neurological Disorders and Stroke.

Footnotes

¹ Fig. 4 shows plots for only six pure tones. In the current study, all RSS stimuli consisted of sums of 1638 pure tones with frequencies spanning a 6-octave bandwidth (250–16,000 Hz). Tones within a spectral region were equally spaced on an ERB frequency scale (Moore, 2007). The number of tones per spectral region depended on the number of spectral regions. For example, an RSS with 4 spectral regions had 4 bands that were amplitude-modulated together, where Bands 1–4 contained, respectively, sinusoids #1–#410 (250–926 Hz), #411–#819 (928–2556 Hz), #820–#1228 (2562–6486 Hz), and #1229–#1638 (6501–16,000 Hz).
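ERB-spaced tone frequencies can be generated by spacing points evenly on an ERB-number scale and converting back to Hz. The sketch assumes the Glasberg and Moore ERB-number formula, 21.4·log10(0.00437·f + 1); with it, tone #410 falls near 926 Hz and tone #1229 near 6501 Hz, close to the values listed above. The function names are hypothetical.

```python
import numpy as np


def erb_number(f_hz):
    """ERB-number scale (assumed Glasberg and Moore form)."""
    return 21.4 * np.log10(0.00437 * np.asarray(f_hz, dtype=float) + 1.0)


def erb_to_hz(erb):
    """Inverse of erb_number."""
    return (10.0 ** (np.asarray(erb, dtype=float) / 21.4) - 1.0) / 0.00437


def erb_spaced_tones(f_lo=250.0, f_hi=16000.0, n=1638):
    """n tone frequencies equally spaced on the ERB-number scale from f_lo to f_hi."""
    return erb_to_hz(np.linspace(erb_number(f_lo), erb_number(f_hi), n))


tones = erb_spaced_tones()
print(round(float(tones[409])), round(float(tones[1228])))  # tones #410 and #1229 (1-based)
```

Equal ERB spacing places more tones at low frequencies than equal-octave spacing would, roughly matching the ear's frequency resolution.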

² Initial calibration of RSS stimulation through the MR sound system involved placing the RTI headphones on a Knowles Electronics Manikin for Acoustic Research (KEMAR) whose right ear was fitted with a Brüel & Kjær (B&K) 4134 microphone and a B&K 2619 microphone preamplifier. A B&K 2231 sound level meter (SLM) measured output signals using slow time weighting, RMS detection, and linear 20 Hz to 20 kHz frequency weighting. A Dell laptop recorded the digitized AC outputs from the SLM through a Roland UA-30 USB audio interface. These outputs were measured and calibrated with Yoshimasa Electronic Inc. Realtime Analyzer software. The RTI master gain and left and right channel gains were set so that all audio signals were at least 10 dB above the measured noise floor of the sound system. The sound system had a bandwidth of approximately 160 Hz to 5 kHz, with a 10 dB/octave roll-off above 5 kHz. The SPLs of the RSS wave files were measured, and individual digital amplitude adjustments then set each RSS wave file to a normalized level that, together with the RTI gain settings, produced an acoustic target level of 70 dB SPL for each RSS stimulus.
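The per-file digital adjustment amounts to a dB difference converted to a linear amplitude factor, 10^(gain/20), since SPL is a pressure (amplitude) measure. The sketch assumes each wave file's level was measured in dB SPL at the manikin and that level scales linearly with sample amplitude; clipping is not checked, and all helper names are hypothetical.

```python
def gain_db_to_target(measured_db_spl, target_db_spl=70.0):
    """dB change needed to move a measured level to the target level."""
    return target_db_spl - measured_db_spl


def amplitude_scale(gain_db):
    """Linear amplitude factor for a gain in dB (20*log10 convention for pressure)."""
    return 10.0 ** (gain_db / 20.0)


def scale_samples(samples, measured_db_spl, target_db_spl=70.0):
    """Rescale wave-file samples so the file plays at the target level."""
    factor = amplitude_scale(gain_db_to_target(measured_db_spl, target_db_spl))
    return [s * factor for s in samples]


def clears_noise_floor(level_db, noise_floor_db, margin_db=10.0):
    """Check the 10 dB margin above the sound system's noise floor."""
    return level_db - noise_floor_db >= margin_db


# A file measured at 76 dB SPL needs -6 dB, i.e. roughly halving its amplitude.
adjusted = scale_samples([1.0, -0.5], measured_db_spl=76.0)
```

Applying the adjustment digitally, with fixed RTI gains, keeps the hardware path identical across stimuli so that only the wave files differ.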

³ TR = 2100 ms; TE = 3.93 ms; flip angle = 7°; inversion time (TI) = 1000 ms; 1×1×1.25 mm voxels.

⁴ TR = 8430 ms; TE = 98 ms; 1.33×1.33×3 mm voxels.

REFERENCES

- Abel SM, Birt D, Mclean JA. Sound localization in hearing-impaired listeners. In: Gatehouse RW, editor. Localization of Sound: Theory and Applications. Amphora Press; Groten, CT: 1982. pp. 207–219. (Vol.) [Google Scholar]

- Amaro E, Jr., Barker GJ. Study design in fMRI: basic principles. Brain Cogn. 2006;60:220–232. doi: 10.1016/j.bandc.2005.11.009. [DOI] [PubMed] [Google Scholar]

- American National Standards Institute . ANSI S3.6-1989. New York: 1989. American National Standards Specifications for Audiometers. [Google Scholar]

- Belin P, Zilbovicius M, Crozier S, Thivard L, Fontaine A, Masure MC, Samson Y. Lateralization of speech and auditory temporal processing. J. Cogn. Neurosci. 1998;10:536–540. doi: 10.1162/089892998562834. [DOI] [PubMed] [Google Scholar]

- Belin P, Zatorre RJ, Hoge R, Evans AC, Pike B. Event-related fMRI of the auditory cortex. Neuroimage. 1999;10:417–429. doi: 10.1006/nimg.1999.0480. [DOI] [PubMed] [Google Scholar]

- Bilecen D, Seifritz E, Radü EW, Schmid N, Wetzel S, Probst R, Scheffler K. Cortical reorganization after acute unilateral hearing loss traced by fMRI. Neurology. 2000;54:765–767. doi: 10.1212/wnl.54.3.765. [DOI] [PubMed] [Google Scholar]

- Bishop CE, Eby TL. The current status of audiologic rehabilitation for profound unilateral sensorineural hearing loss. Laryngoscope. 2010;120:552–556. doi: 10.1002/lary.20735. [DOI] [PubMed] [Google Scholar]

- Brännström K, Lantz J. Interaural attenuation for Sennheiser HDA 200 circumaural earphones. Int. J. Audiol. 2010;49:467–471. doi: 10.3109/14992021003663111. [DOI] [PubMed] [Google Scholar]

- Brodmann K. Vergleichende Lokalisationslehere der Grosshirnrinde. Barth, Leipzig: 1909. [Google Scholar]

- Buckner RL, Head D, Parker J, Fotenos AF, Marcus D, Morris JC, Snyder AZ. A unified approach for morphometric and functional data analysis in young, old, and demented adults using automated atlas-based head size normalization: reliability and validation against manual measurement of total intracranial volume. Neuroimage. 2004;23:724–738. doi: 10.1016/j.neuroimage.2004.06.018. [DOI] [PubMed] [Google Scholar]

- da Costa S, van der Zwaag W, Marques JP, Frackowiak RSJ, Clarke S, Saenz M. Human primary auditory cortex follows the shape of Heschl’s gyrus. J. Neurosci. 2011;31:14067–14075. doi: 10.1523/JNEUROSCI.2000-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Downer J, Herron TJ, Woods DL. Surface mapping of multimodal MRI data within cytoarchitectonically-defined auditory cortical fields in humans. 2011 Neuroscience Meeting Planner; Washington, DC: Society for Neuroscience; 2011. Program No. 171.16. Online. [Google Scholar]

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034. [DOI] [PubMed] [Google Scholar]

- Firszt JB, Ulmer JL, Gaggl W. Differential representation of speech sounds in the human cerebral hemispheres. Anat. Rec. A Discov. Mol. Cell. Evol. Biol. 2006;288:345–357. doi: 10.1002/ar.a.20295. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn. Reson. Med. 1995;33:636–647. doi: 10.1002/mrm.1910330508. [DOI] [PubMed] [Google Scholar]

- Gaab N, Gabrieli JDE, Glover GH. Assessing the influence of scanner background noise on auditory processing. II. An fMRI study comparing auditory processing in the absence and presence of recorded scanner noise using a sparse design. Hum. Brain Mapp. 2007;28:721–732. doi: 10.1002/hbm.20299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galaburda A, Sanides F. Cytoarchitectonic organization of the human auditory cortex. J. Comp. Neurol. 1980;190:597–610. doi: 10.1002/cne.901900312. [DOI] [PubMed] [Google Scholar]

- Glasser MF, Van Essen DC. Mapping human cortical areas in vivo based on myelin content as revealed by T1- and T2-weighted MRI. J. Neurosci. 2011;31:11597–11616. doi: 10.1523/JNEUROSCI.2180-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Griffiths TD, Warren JD. The planum temporale as a computational hub. Trends Neurosci. 2002;25:348–353. doi: 10.1016/s0166-2236(02)02191-4. [DOI] [PubMed] [Google Scholar]

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Gurney EM, Bowtell RW. “Sparse” temporal sampling in auditory fMRI. Hum. Brain Mapp. 1999;7:213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hanss J, Veuillet E, Adjout K, Besle J, Collet L, Thai-Van H. The effect of long-term unilateral deafness on the activation pattern in the auditory cortices of French-native speakers: influence of deafness side. BMC Neurosci. 2009;10:23. doi: 10.1186/1471-2202-10-23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humes LE, Allen SK, Bess FH. Horizontal sound localization skills of unilaterally hearing-impaired children. Audiology. 1980;19:508–518. doi: 10.3109/00206098009070082. [DOI] [PubMed] [Google Scholar]

- Humphries C, Liebenthal E, Binder JR. Tonotopic organization of human auditory cortex. Neuroimage. 2010;50:1202–1211. doi: 10.1016/j.neuroimage.2010.01.046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Imig TJ, Ruggero MA, Kitzes LM, Javel E, Brugge JF. Organization of auditory cortex in the owl monkey (Aotus trivirgatus) J. Comp. Neurol. 1977;171:111–128. doi: 10.1002/cne.901710108. [DOI] [PubMed] [Google Scholar]

- Jäncke L, Wüstenberg T, Schulze K, Heinze HJ. Asymmetric hemodynamic responses of the human auditory cortex to monaural and binaural stimulation. Hear. Res. 2002;170:166–178. doi: 10.1016/s0378-5955(02)00488-4. [DOI] [PubMed] [Google Scholar]

- Johnsrude IS, Penhune VB, Zatorre RJ. Functional specificity in the right human auditory cortex for perceiving pitch direction. Brain. 2000;123:155–163. doi: 10.1093/brain/123.1.155. [DOI] [PubMed] [Google Scholar]

- Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc. Natl. Acad. Sci. U. S. A. 2000;97:11793–11799. doi: 10.1073/pnas.97.22.11793. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Khosla D, Ponton CW, Eggermont JJ, Kwong B, Dort M, Vasama J-P. Differential ear effects of profound unilateral deafness on the adult human central auditory system. JARO. 2003;4:235–249. doi: 10.1007/s10162-002-3014-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lancaster JL, Glass TG, Lankipalli BR, Downs H, Mayberg H, Fox PT. A modality-independent approach to spatial normalization of tomographic images of the human brain. Hum. Brain Mapp. 1995;3:209–223. [Google Scholar]

- Langers DRM, Van Dijk P, Backes WH. Lateralization, connectivity and plasticity in the human central auditory system. Neuroimage. 2005;28:490–499. doi: 10.1016/j.neuroimage.2005.06.024. [DOI] [PubMed] [Google Scholar]

- Merzenich MM, Brugge JF. Representation of the cochlear partition on the superior temporal plane of the Macaque monkey. Brain Res. 1973;50:275–296. doi: 10.1016/0006-8993(73)90731-2. [DOI] [PubMed] [Google Scholar]

- Moore BCJ. Frequency analysis and pitch perception. In: Crocker MJ, editor. Encyclopedia of Acoustics. Volume Three. John Wiley & Sons, Inc; Hoboken, NJ, USA: 2007. [Google Scholar]

- Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K. Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage. 2001;13:684–701. doi: 10.1006/nimg.2000.0715. [DOI] [PubMed] [Google Scholar]

- Obleser J, Eisner F, Kotz SA. Bilateral speech comprehension reflects differential sensitivity to spectral and temporal features. J. Neurosci. 2008;28:8116–8123. doi: 10.1523/JNEUROSCI.1290-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ojemann JG, Akbudak E, Snyder AZ, McKinstry RC, Raichle ME, Conturo TE. Anatomic localization and quantitative analysis of gradient refocused echo-planar fMRI susceptibility artifacts. Neuroimage. 1997;6:156–167. doi: 10.1006/nimg.1997.0289. [DOI] [PubMed] [Google Scholar]

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4. [DOI] [PubMed] [Google Scholar]

- Penhune VB, Zatorre RJ, MacDonald JD, Evans AC. Interhemispheric anatomical differences in human primary auditory cortex: probabilistic mapping and volume measurement from magnetic resonance scans. Cereb. Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661. [DOI] [PubMed] [Google Scholar]

- Ponton CW, Vasama J-P, Tremblay K, Khosla D, Kwong B, Don M. Plasticity in the adult human central auditory system: evidence from late-onset profound unilateral deafness. Hear. Res. 2001;154:32–44. doi: 10.1016/s0378-5955(01)00214-3.

- Propst EJ, Greinwald JH, Schmithorst V. Neuroanatomic differences in children with unilateral sensorineural hearing loss detected using functional magnetic resonance imaging. Arch. Otolaryngol. Head Neck Surg. 2010;136:22–26. doi: 10.1001/archoto.2009.208.

- Rademacher J, Caviness VS, Steinmetz H, Galaburda AM. Topographical variation of the human primary cortices: implications for neuroimaging, brain mapping, and neurobiology. Cereb. Cortex. 1993;3:313–329. doi: 10.1093/cercor/3.4.313.

- Rademacher J, Morosan P, Schormann T, Schleicher A, Werner C, Freund HJ, Zilles K. Probabilistic mapping and volume measurement of human primary auditory cortex. Neuroimage. 2001;13:669–683. doi: 10.1006/nimg.2000.0714.

- Scheffler K, Bilecen D, Schmid N, Tschopp K, Seelig J. Auditory cortical responses in hearing subjects and unilateral deaf patients as detected by functional magnetic resonance imaging. Cereb. Cortex. 1998;8:156–163. doi: 10.1093/cercor/8.2.156.

- Schmithorst VJ, Holland SK, Ret J, Duggins A, Arjmand E, Greinwald J. Cortical reorganization in children with unilateral sensorineural hearing loss. Neuroreport. 2005;16:463–467. doi: 10.1097/00001756-200504040-00009.

- Schönwiesner M, Rübsamen R, von Cramon DY. Spectral and temporal processing in the human auditory cortex—revisited. Ann. NY Acad. Sci. 2005a;1060:89–92. doi: 10.1196/annals.1360.051.

- Schönwiesner M, Rübsamen R, von Cramon DY. Hemispheric asymmetry for spectral and temporal processing in the human antero-lateral auditory belt cortex. Eur. J. Neurosci. 2005b;22:1521–1528. doi: 10.1111/j.1460-9568.2005.04315.x.

- Scott SK, Johnsrude IS. The neuroanatomical and functional organization of speech perception. Trends Neurosci. 2003;26:100–107. doi: 10.1016/S0166-2236(02)00037-1.

- Scott SK, Wise RJS. The functional neuroanatomy of prelexical processing in speech perception. Cognition. 2004;92:13–45. doi: 10.1016/j.cognition.2002.12.002.

- Scott SK, Blank CC, Rosen S, Wise RJS. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- Scott SK, Rosen S, Lang H, Wise RJS. Neural correlates of intelligibility in speech investigated with noise vocoded speech—a positron emission tomography study. J. Acoust. Soc. Am. 2006;120:1075–1083. doi: 10.1121/1.2216725.

- Striem-Amit E, Hertz U, Amedi A. Extensive cochleotopic mapping of human auditory cortical fields obtained with phase-encoding fMRI. PLoS One. 2011;6:1–18. doi: 10.1371/journal.pone.0017832.

- Talairach J, Tournoux P. Coplanar Stereotaxic Atlas of the Human Brain. Thieme Medical; New York: 1988.

- Talavage TM, Edmister WB. Nonlinearity of FMRI responses in human auditory cortex. Hum. Brain Mapp. 2004;22:216–228. doi: 10.1002/hbm.20029.

- Tervaniemi M, Hugdahl K. Lateralization of auditory-cortex functions. Brain Res. Rev. 2003;43:231–246. doi: 10.1016/j.brainresrev.2003.08.004.

- Tschopp K, Schillinger C, Schmid N, Rausch M, Bilecen D, Scheffler K. Evidence of central-auditory compensation in unilateral deaf patients detected by functional MRI [in German]. 2000;79:753–757. doi: 10.1055/s-2000-9136.

- Van Essen DC. A population-average, landmark- and surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–662. doi: 10.1016/j.neuroimage.2005.06.058.

- Van Essen DC, Dierker DL. Surface-based and probabilistic atlases of primate cerebral cortex. Neuron. 2007;56:209–225. doi: 10.1016/j.neuron.2007.10.015.

- Vasama J-P, Mäkelä JP. Auditory cortical responses in humans with profound unilateral sensorineural hearing loss from early childhood. Hear. Res. 1997;104:183–190. doi: 10.1016/s0378-5955(96)00200-6.

- von Economo C. The Cytoarchitectonics of the Human Cerebral Cortex. Oxford University Press; London: 1929.

- Warrier C, Wong P, Penhune V, Zatorre R, Parrish T, Abrams D, Kraus N. Relating structure to function: Heschl’s gyrus and acoustic processing. J. Neurosci. 2009;29:61–69. doi: 10.1523/JNEUROSCI.3489-08.2009.

- Wie OB, Pripp AH, Tvete O. Unilateral deafness in adults: effects on communication and social interaction. Ann. Otol. Rhinol. Laryngol. 2010;119:772–781.

- Woods DL, Alain C. Functional imaging of human auditory cortex. Curr. Opin. Otolaryngol. Head Neck Surg. 2009;17:407–411. doi: 10.1097/MOO.0b013e3283303330.

- Woods DL, Stecker GC, Rinne T, Herron TJ, Cate AD, Yund EW, Liao I, Kang X. Functional maps of human auditory cortex: effects of acoustic features and attention. PLoS One. 2009;4:1–19. doi: 10.1371/journal.pone.0005183.

- Woods DL, Herron TJ, Cate AD, Yund EW, Stecker GC, Rinne T, Kang X. Functional properties of human auditory cortical fields. Front. Syst. Neurosci. 2010;4:1–13. doi: 10.3389/fnsys.2010.00155.

- Woods DL, Herron TJ, Cate AD, Kang X, Yund EW. Phonological processing in human auditory cortical fields. Front. Hum. Neurosci. 2011:1–15. doi: 10.3389/fnhum.2011.00042.

- Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cereb. Cortex. 2001;11:946–953. doi: 10.1093/cercor/11.10.946.

- Zatorre R, Binder J. Functional and structural imaging of the human auditory system. In: Toga AW, Mazziotta JC, editors. Brain Mapping: The Systems. Academic Press; San Diego: 2000. pp. 365–402.

- Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: moving beyond the dichotomies. Phil. Trans. R. Soc. B. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn. Sci. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.