Abstract

Traditional memory research has focused on identifying separate memory systems and exploring different stages of memory processing. This approach has been valuable for establishing a taxonomy of memory systems and characterizing their function, but has been less informative about the nature of stored memory representations. Recent research on visual memory has shifted towards a representation-based emphasis, focusing on the contents of memory, and attempting to determine the format and structure of remembered information. The main thesis of this review will be that one cannot fully understand memory systems or memory processes without also determining the nature of memory representations. Nowhere is this connection more obvious than in research that attempts to measure the capacity of visual memory. We will review research on the capacity of visual working memory and visual long-term memory, highlighting recent work that emphasizes the contents of memory. This focus impacts not only how we estimate the capacity of the system - going beyond quantifying how many items can be remembered, and moving towards structured representations - but how we model memory systems and memory processes.

Tulving (2000) provided a concise, general definition of memory as the “neurocognitive capacity to encode, store, and retrieve information,” and suggested the possibility that there are many separate memory systems that fit this definition. Indeed, one of the primary aims of modern memory research has been to identify these different memory systems (Schacter & Tulving, 1994). This approach has led to an extensive taxonomy of memory systems which are characterized by differences in timing, storage capacity, conscious access, active maintenance, and mechanisms of operation.

Early on, William James (1890) proposed the distinction between primary memory – the information held in the ‘conscious present’ – and secondary memory, which consists of information that is acquired, stored outside of conscious awareness, and then later remembered. This distinction maps directly onto the modern distinction between short-term memory (henceforth working memory) and long-term memory (Atkinson & Shiffrin, 1968; Scoville & Milner, 1957; Waugh & Norman, 1965). The most salient difference between these systems is their capacity: the active, working memory system has an extremely limited capacity of only a few items (Cowan, 2001; Cowan, 2005; Miller, 1956), whereas the passive, long-term memory system can store thousands of items (Brady et al., 2008; Standing, 1973; Voss, 2009) with remarkable fidelity (Brady et al., 2008; Konkle et al., 2010a).

The emphasis on memory systems and memory processes has been quite valuable in shaping cognitive and neural models of memory. In general, this approach aims to characterize memory systems in a way that generalizes over representational content (Schacter & Tulving, 1994). For example, working memory is characterized by a severely limited capacity regardless of whether items are remembered visually or verbally (e.g., Baddeley, 1986), and long-term memory has a very high capacity whether the items remembered are pictures (e.g., Standing, 1973), words (e.g., Shepard, 1967), or associations (e.g., Voss, 2009). However, generalization across content leaves many basic questions unanswered regarding the nature of stored representations: What is the structure and format of those representations?

Research on visual perception takes the opposite approach, attempting to determine what is being represented, and to generalize across processes. For example, early stages of visual representation consist of orientation and spatial frequency features. Vision research has measured the properties of these features, such as their tuning curves and sensitivity (e.g., Blakemore & Campbell, 1969), and shown that these tuning properties are constant across several domains of processing (e.g., from simple detection to visual search).

Thus, the intersection between memory and vision is a particularly interesting domain of research because it concerns both the processes of memory and the nature of the stored representations (Luck & Hollingworth, 2008). Recent research at this intersection has been quite fruitful. For example, working memory research has shown an important item/resolution tradeoff: as the number of items remembered increases, the precision with which each one is remembered decreases, possibly with an upper bound on the number of items that may be stored (Alvarez & Cavanagh, 2004; Zhang & Luck, 2008), or possibly without an upper bound (Bays, Catalao & Husain, 2009; Wilken & Ma, 2004). In long-term memory, it is possible to store thousands of detailed object representations (Brady et al., 2008; Konkle et al., 2010b) but only for meaningful items that connect with stored knowledge (Konkle et al., 2010b; Wiseman & Neisser, 1974).

Here we review recent research in the domains of visual working memory and visual long-term memory, focusing on how models of these memory systems are altered and refined by taking the contents of memory into account.

VISUAL WORKING MEMORY

The working memory system is used to hold information actively in mind, and to manipulate that information to perform a cognitive task (Baddeley, 1986; Baddeley, 2000). While there is a long history of research on verbal working memory and working memory for spatial locations (e.g., Baddeley, 1986), the last 15 years have seen a surge in research on visual working memory, specifically for visual feature information (Luck & Vogel, 1997).

The study of visual working memory has largely focused on the capacity of the system, both because limited capacity is one of the main hallmarks of working memory, and because individual differences in measures of working memory capacity are correlated with differences in fluid intelligence, reading comprehension, and academic achievement (Alloway & Alloway, 2010; Daneman & Carpenter, 1980; Fukuda, Vogel, Mayr & Awh, 2010; Kane, Bleckley, Conway & Engle, 2001). This relationship suggests that working memory may be a core cognitive ability that underlies, and constrains, our ability to process information across cognitive domains. Thus, understanding the capacity of working memory could provide important insight into cognitive function more generally.

In the broader working memory literature, a significant amount of research has focused on characterizing memory limits based on how quickly information can be refreshed (e.g., Baddeley, 1986) or the rate at which information decays (Broadbent, 1958; Baddeley & Scott, 1971). In contrast, research on the capacity of visual working memory has focused on the number of items that can be remembered (Luck & Vogel, 1997; Cowan, 2001). However, several recent advances in models of visual working memory have been driven by a focus on the content of working memory representations rather than how many individual items can be stored.

Here we review research that focuses on working memory representations, including their fidelity, structure, and effects of stored knowledge. While not an exhaustive review of the literature, these examples highlight the fact that working memory representations have a great deal of structure beyond the level of individual items. This structure can be characterized as a hierarchy of properties, from individual features, to individual objects, to across-object ensemble features (spatial context and featural context). Together, the work reviewed here illustrates how a representation-based approach has led to important advances, not just in understanding the nature of stored representations themselves, but also in characterizing working memory capacity and shaping models of visual working memory.

The fidelity of visual working memory

Recent progress in modeling visual working memory has resulted from an emphasis on estimating the fidelity of visual working memory representations. In general, the capacity of any memory system should be characterized both in terms of the number of items that can be stored, and in terms of the fidelity with which each individual item can be stored. Consider the case of a USB-drive that can store exactly 1000 images: the number of images alone is not a complete estimate of this USB-drive’s storage capacity. It is also important to consider the resolution with which those images can be stored: if each image can be stored only with a very low resolution, say 16 × 16 pixels, then the drive has a lower capacity than if it can store the same number of images with a high resolution, say 1024 × 768 pixels. In general, the true capacity of a memory system can be estimated by multiplying the maximum number of items that can be stored by the fidelity with which each individual item can be stored (capacity = quantity × fidelity). For a memory system such as a USB-drive, there is only an information limit on memory storage, so the number of files that can be stored is limited only by the size of those files. Whether visual working memory is best characterized as an information-limited system (Alvarez & Cavanagh, 2004; Wilken & Ma, 2004), or whether it has a pre-determined and fixed item limit (Luck & Vogel, 1997; Zhang & Luck, 2008) is an active topic of debate in the field.
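The USB-drive analogy can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: it treats fidelity as the number of pixels per image, which is an assumption made for this example, and the function name `total_capacity` is hypothetical.

```python
def total_capacity(num_items: int, fidelity_per_item: int) -> int:
    """Capacity as quantity x fidelity.

    Fidelity is measured here as pixels per image (an assumption for
    this example, not a claim about how memory fidelity is measured).
    """
    return num_items * fidelity_per_item


# The two drives from the text: 1000 images each, at different resolutions.
low_res = total_capacity(1000, 16 * 16)
high_res = total_capacity(1000, 1024 * 768)

print(low_res)               # total pixels stored at 16 x 16
print(high_res)              # total pixels stored at 1024 x 768
print(high_res // low_res)   # how many times larger the second capacity is
```

Both drives hold the same number of items, yet their capacities differ by three orders of magnitude, which is why an item count alone underdetermines capacity.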

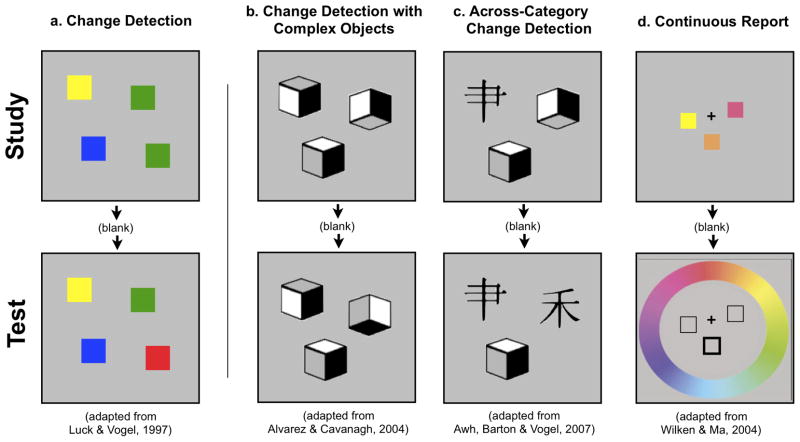

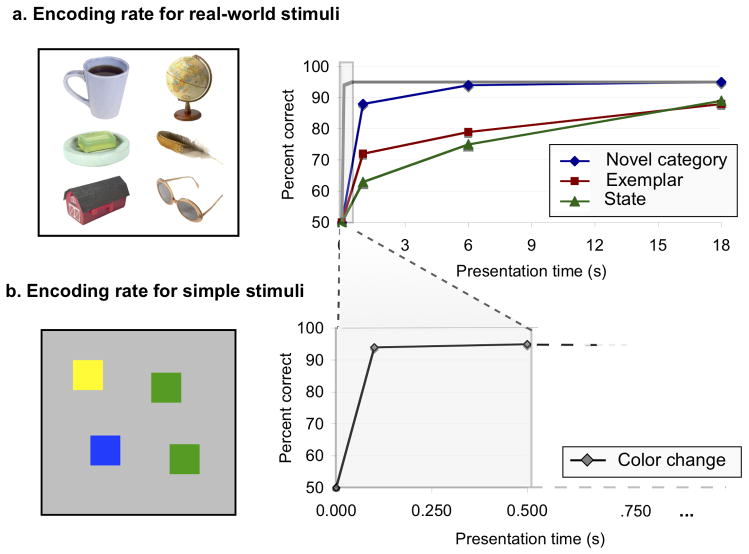

Luck and Vogel’s (1997) landmark study on the capacity of visual working memory spurred the surge in research on visual working memory over the past 15 years. They used a change detection task to estimate working memory capacity for features and conjunctions of features (Figure 1a; see also Pashler, 1988; Phillips, 1974; Vogel, Woodman & Luck, 2001). On each trial, observers saw an array of colored squares and were asked to remember them. The squares disappeared for about one second and then reappeared, either exactly as before or with a single square changed to a categorically different color (e.g., yellow to red). Observers reported whether the display was exactly the same or whether one of the squares had changed.

Figure 1.

Measures of visual working memory fidelity. (a) A change detection task. Observers see the ‘Study’ display, then after a blank must indicate whether the ‘Test’ display is identical to the Study display or whether a single item has changed color. (b) Change detection with complex objects. In this display, the cube changes to another cube (within-category change), requiring high-resolution representations to detect. (c) Change detection with complex objects. In this display, the cube changes to a Chinese character (across-category change), requiring only low-resolution representations to detect. (d) A continuous color report task. Observers see the Study display, and then at test are asked to report the exact color of a single item. This gives a continuous measure of the fidelity of memory.

Luck and Vogel (1997) found that observers were able to accurately detect changes most of the time when there were fewer than 3 or 4 items on the display, but that performance declined steadily as the number of items increased beyond 4. Luck and Vogel (1997) and Cowan (2001) have shown that this pattern of performance is well explained by a model in which a fixed number of objects (3–4) were remembered. Thus, these results are consistent with a “slot model” of visual working memory capacity (see also Cowan, 2005; Rouder, Morey, Cowan, Zwilling, Morey & Pratte, 2008) in which working memory can store a fixed number of items.
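One simple way to see why a fixed item limit predicts this pattern is to compute the expected rate of detecting a change under a slot model. The sketch below is a deliberately simplified illustration: it assumes that a change is always detected when the changed item occupies a slot, and that the observer otherwise guesses "change" with a fixed probability. The default of 4 slots, the 0.5 guess rate, and the function name are assumptions chosen for illustration, not parameters estimated by Luck and Vogel (1997) or Cowan (2001).

```python
def slot_model_hit_rate(set_size: int, slots: int = 4, guess_rate: float = 0.5) -> float:
    """Predicted probability of reporting a change on a change trial.

    Assumes (for illustration) that `slots` items are stored perfectly,
    the remaining items are not stored at all, and the observer guesses
    "change" with probability `guess_rate` when the changed item was
    not stored.
    """
    p_in_memory = min(1.0, slots / set_size)
    return p_in_memory + (1.0 - p_in_memory) * guess_rate


for n in (1, 2, 4, 6, 8, 12):
    print(n, round(slot_model_hit_rate(n), 3))
```

Under these assumptions, predicted performance is at ceiling up to the number of slots and then falls steadily as set size grows, which is the qualitative pattern described above.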

Importantly, this standard change detection paradigm provides little information about how well each individual object was remembered. The change detection paradigm indicates only that items were remembered with sufficient fidelity to distinguish an object’s color from a categorically different color. How much information do observers actually remember about each object?

Several new methods have been used to address this question (see Figure 1b, c, d). First, the change detection task can be modified to vary the amount of information that must be stored by varying the type of changes that can occur. For example, changing from one shade of red to a different, similar shade of red requires a high-resolution representation, whereas a change from red to blue can be detected with a low-resolution representation. Using such changes that require high-resolution representations has proved particularly fruitful for investigating memory capacity for complex objects (Figure 1b, c). Second, estimates of memory precision can be obtained by using a continuous report procedure in which observers are cued to report the features of an item, and then adjust that item to match the remembered properties. Using this method, the fidelity of a simple feature dimension like color can be investigated by having observers report the exact color of a single item (Figure 1d).

Fidelity of storage for complex objects

While early experiments using large changes in a change detection paradigm found evidence for a slot model, in which memory is limited to storing a fixed number of items, subsequent experiments with newer paradigms that focused on the precision of memory representations have suggested an information-limited model. Specifically, Alvarez and Cavanagh (2004) proposed that there is an information limit on working memory, which would predict a tradeoff between the number of items stored and the fidelity with which each item is stored. For example, suppose working memory could store 8 bits of information. It would be possible to store a lot of information about 1 object (1 object × 8 bits/object = 8 bits), or a small amount of information about 4 objects (4 objects × 2 bits/object = 8 bits). To test this hypothesis, Alvarez and Cavanagh varied the amount of information required to remember objects, from categorically different colors (low information load), to perceptually similar 3D cubes (high information load). The results showed that the number of objects that could be remembered with sufficient fidelity to detect the changes depended systematically on the information load per item: the more information that had to be remembered from an individual item, the fewer the total number of items that could be stored with sufficient resolution, consistent with the hypothesis that there is a limit to the total amount of information stored.
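The 8-bit example can be written out as a small calculation. This is a sketch under the idealized assumption that a fixed information budget is divided evenly among the stored objects; the function names and the per-object loads passed to `max_objects` are hypothetical and are not values reported by Alvarez and Cavanagh (2004).

```python
def bits_per_object(total_bits: float, num_objects: int) -> float:
    """Information available per object if the budget is split evenly."""
    return total_bits / num_objects


def max_objects(total_bits: float, bits_required_per_object: float) -> int:
    """Number of objects that can each receive the required information."""
    return int(total_bits // bits_required_per_object)


BUDGET = 8  # the hypothetical 8-bit budget from the text

print(bits_per_object(BUDGET, 1))  # all of the budget on one object
print(bits_per_object(BUDGET, 4))  # budget shared by four objects
print(max_objects(BUDGET, 2))      # low information load per object
print(max_objects(BUDGET, 8))      # high information load per object
```

The second function captures the prediction tested in the experiment: as the information required per object rises, the number of objects that can be stored with sufficient resolution falls.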

This result was not due to an inability to discriminate the more complex shapes, such as 3D cubes: observers could easily detect a change between cubes when only a single cube was remembered, but they could not detect the same change when they tried to remember 4 cubes. This result suggests that encoding additional items reduced the resolution with which each individual item could be remembered, consistent with the idea that there is an information limit on memory. Using the same paradigm but varying the difficulty of the memory test, Awh, Barton and Vogel (2007) found a similar result: with only a single cube in memory, observers could easily detect small changes in the cube’s structure. However, with several cubes in memory, observers were worse at detecting these small changes but maintained the ability to detect larger changes (e.g., changing the cube to a completely different kind of stimulus, like a Chinese character; Figure 1b, c). This suggests that when many cubes are stored, less information is remembered about each cube, and this low-resolution representation is sufficient to make a coarse discrimination (3D cube vs. Chinese character), but not a fine discrimination (3D cube vs. 3D cube). Taken together, these two studies suggest that working memory does not store a fixed number of items with fixed fidelity: the fidelity of items in working memory depends on a flexible resource that is shared among items, such that a single item can be represented with high fidelity, or several items with significantly lower fidelity (see Zosh & Feigenson, 2009 for a similar conclusion with infants).

Fidelity of simple feature dimensions

While the work of Alvarez and Cavanagh (2004) suggests a tradeoff between the number of items stored and the resolution of storage, other research has demonstrated this tradeoff directly by measuring the precision of working memory along continuous feature dimensions (Wilken & Ma, 2004). For example, Wilken and Ma (2004) devised a paradigm in which a set of colors appeared momentarily and then disappeared. After a brief delay, the location of one color was cued, prompting the observer to report the exact color of the cued item by adjusting a continuous color wheel (Figure 1d). Wilken and Ma (2004) found that the accuracy of color reports decreased as the number of items remembered increased, suggesting that memory precision decreased systematically as more items were stored in memory. This result would be predicted by an information-limited system, because high-precision responses contain more information than low-precision responses. In other words, as more items are stored and the precision of representations decreases, the amount of information stored per item decreases.

Wilken and Ma’s (2004) investigations into the precision of working memory appear to support an information-limited model. However, using the same continuous report paradigm and finding similar data, Zhang and Luck (2008) have argued in favor of a slot model of working memory, in which memory stores a fixed number of items with fixed fidelity. To support this hypothesis, they used a mathematical model to partition errors in reported colors into two different classes: those resulting from noisy memory representations, and those resulting from random guesses. Given a particular distribution of errors, this modeling approach yields an estimate of the likelihood that items were remembered, and the fidelity with which they were remembered. Zhang and Luck (2008) found that the proportion of random guesses was low from 1 to 3 items, but that beyond 3 items the rate of random guessing increased. This result is naturally accounted for by a slot model in which a maximum of 3 items can be remembered.
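The two error classes can be expressed as a mixture distribution over response error on the color wheel. The sketch below is a minimal illustration rather than the authors' implementation: it assumes that errors for remembered items follow a von Mises (circular normal) distribution centered on zero and that guesses are uniform around the circle. The function and parameter names (`p_memory`, `kappa`) are chosen here for illustration, and only the Python standard library is used.

```python
import math


def bessel_i0(kappa: float, terms: int = 50) -> float:
    """Modified Bessel function of the first kind, order 0 (power series)."""
    total, term = 0.0, 1.0
    for k in range(terms):
        if k > 0:
            term *= (kappa / 2.0) ** 2 / (k * k)
        total += term
    return total


def von_mises_pdf(error: float, kappa: float) -> float:
    """Density of a zero-centered von Mises distribution (error in radians)."""
    return math.exp(kappa * math.cos(error)) / (2.0 * math.pi * bessel_i0(kappa))


def mixture_pdf(error: float, p_memory: float, kappa: float) -> float:
    """Mixture of noisy-memory responses and uniform random guesses.

    p_memory: assumed probability that the probed item was remembered.
    kappa: concentration of the memory component (higher = more precise).
    """
    uniform = 1.0 / (2.0 * math.pi)
    return p_memory * von_mises_pdf(error, kappa) + (1.0 - p_memory) * uniform


def log_likelihood(errors, p_memory: float, kappa: float) -> float:
    """Log-likelihood of observed errors under given mixture parameters."""
    return sum(math.log(mixture_pdf(e, p_memory, kappa)) for e in errors)


def total_probability(p_memory: float, kappa: float, steps: int = 2000) -> float:
    """Numerically integrate the mixture density over the full circle."""
    width = 2.0 * math.pi / steps
    return sum(mixture_pdf(-math.pi + i * width, p_memory, kappa) * width
               for i in range(steps))


print(total_probability(0.7, 5.0))  # should be very close to 1
```

Fitting such a model means searching for the `p_memory` and `kappa` values that maximize `log_likelihood` for the observed errors; the height of the flat "floor" in the error distribution constrains the guess rate, and the width of the central peak constrains fidelity.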

However, Zhang and Luck (2008) also found that the fidelity of representations decreased from 1 to 3 items (representations became less and less precise). A slot model cannot easily account for this result without additional assumptions. To account for this pattern, Zhang and Luck proposed that working memory has 3 discrete slots. When only one item is remembered, each memory slot stores a separate copy of that one item, and these copies are then averaged together to yield a higher-resolution representation. Critically, this averaging process improves the fidelity of the item representation because each copy has error that is completely independent of the error in other copies, so when they are averaged these sources of error cancel out. When 3 items are remembered, each item occupies a single slot, and without the benefits of averaging multiple copies, each of the items is remembered with a lower resolution (matching the resolution limit of a single slot).
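The averaging argument rests on a standard statistical fact: the mean of n independent, equally noisy copies has a standard deviation that is smaller than that of a single copy by a factor of the square root of n. The sketch below illustrates this under simplifying assumptions that are not taken from Zhang and Luck (2008): noise is treated as Gaussian rather than circular, and the single-slot standard deviation of 20 degrees is an arbitrary illustrative value.

```python
import math
import random
import statistics


def averaged_sd(single_slot_sd: float, copies: int) -> float:
    """Expected SD of the average of `copies` independent noisy copies."""
    return single_slot_sd / math.sqrt(copies)


def simulate_averaged_sd(single_slot_sd: float, copies: int,
                         trials: int = 20000, seed: int = 0) -> float:
    """Estimate the same quantity by simulation (Gaussian noise assumed)."""
    rng = random.Random(seed)
    averages = []
    for _ in range(trials):
        samples = [rng.gauss(0.0, single_slot_sd) for _ in range(copies)]
        averages.append(sum(samples) / copies)
    return statistics.pstdev(averages)


SINGLE_SLOT_SD = 20.0  # hypothetical single-slot noise, in degrees

# One item stored in all 3 slots vs. one item stored in a single slot.
print(averaged_sd(SINGLE_SLOT_SD, 3))
print(averaged_sd(SINGLE_SLOT_SD, 1))
print(simulate_averaged_sd(SINGLE_SLOT_SD, 3))
```

On this account, precision is best when all three slots hold the same item and falls to the single-slot level once each slot holds a different item, after which adding items cannot reduce precision further.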

This version of the slot model was consistent with the data, but only when the number of slots was assumed to be 3. Thus, the decrease in memory precision with increasing number of items stored can be accounted for by re-casting memory slots as 3 quantum units of resources that can be flexibly allocated to at most 3 different items (a set of “discrete fixed-resolution representations”). This account depends critically on the finding that memory fidelity plateaus and remains constant after 3 items, which remains a point of active debate in the literature (e.g., Anderson, Vogel & Awh, 2011; Bays, Catalao & Husain, 2009; Bays & Husain, 2008). In particular, Bays, Catalao and Husain (2009) have proposed that the plateau in memory fidelity beyond 3 items (Zhang & Luck, 2008) is an artifact of an increase in “swap errors” in which the observer accidentally reports the wrong item from the display. However, the extent to which such swaps can account for this plateau is still under active investigation (Anderson, Vogel & Awh, 2011; Bays, Catalao & Husain, 2009).

Conclusion

To summarize, by focusing on the contents of visual working memory, and on the fidelity of representations in particular, there has been significant progress in models of visual working memory and its capacity. At present, there is widespread agreement in the visual working memory literature that visual working memory has an extremely limited capacity and that it can represent 1 item with greater fidelity than 3–4 items. This finding requires the conclusion that working memory is limited by a resource that is shared among the representations of different items (i.e., is information-limited). Some models claim that resource allocation is discrete and quantized into slots (Anderson, Vogel & Awh, 2011; Awh, Barton & Vogel, 2007; Zhang & Luck, 2008), while others claim that resource allocation is continuous (Bays & Husain, 2008; Huang, 2010; Wilken & Ma, 2004), but there is general agreement that working memory is a flexibly-allocated resource of limited capacity.

Research on the fidelity of working memory places important constraints on both continuous and discrete models. If working memory is slot-limited, then those slots must be recast as a flexible resource, all of which can be allocated to a single item to gain precision in its representation, or which can be divided separately among multiple items yielding relatively low-resolution representations of each item. If memory capacity is information-limited, then it is necessary to explain why under some conditions it appears that there is an upper bound on memory storage of 3–4 objects (e.g., Alvarez & Cavanagh, 2004; Awh, Barton & Vogel, 2007; Luck & Vogel, 1997; Zhang & Luck, 2008) and in other conditions it appears that memory is purely information-limited, capable of storing more and more increasingly noisy representations even beyond 3–4 items (e.g., Bays & Husain, 2008; Bays, Catalao & Husain, 2009; Huang, 2010).

The representation of features vs. objects in visual working memory

Any estimate of memory capacity must be expressed with some unit, and what counts as the appropriate unit depends upon how information is represented. Since George Miller’s (1956) seminal paper claiming a limit of 7 +/− 2 chunks as the capacity of working memory, a significant amount of work has attempted to determine the units of storage in working memory. In the domain of verbal memory, for example, debate has flourished about the extent to which working memory capacity is limited by storing a fixed number of chunks vs. time-based decay (Baddeley, 1986; Cowan 2005; Cowan & AuBuchon, 2008). In visual working memory, this debate has focused largely on the issue of whether separate visual features (color, orientation, size) are stored in independent “buffers,” each with their own capacity limitations (e.g., Magnussen, Greenlee & Thomas, 1996), or whether visual working memory operates over integrated object representations (Luck & Vogel, 1997; Vogel, Woodman & Luck, 2001; see Figure 2b).

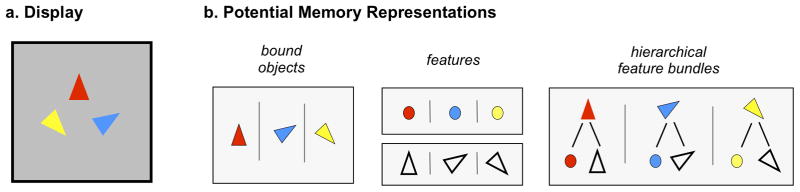

Figure 2.

Possible memory representations for a visual working memory display. (a) A display of oriented and colored items to remember. (b) Potential memory representations for the display in (a). The units of memory do not appear to be integrated bound objects, or completely independent feature representations. Instead, they might be characterized as hierarchical feature-bundles, which have both object-level and feature-level properties.

Luck and Vogel (1997) provided the first evidence that visual working memory representations should be thought of as object-based. They found that observers’ performance on a change detection task was identical whether they had to remember only one feature per object (orientation or color), two features per object (both color and orientation), or even four features per object (color, size, orientation and shape). If memory were limited in terms of the number of features, then remembering more features per object should have a cost. Because there was no cost for remembering more features, Luck and Vogel concluded that objects are the units of visual working memory. In fact, Luck and Vogel (1997) initially provided data demonstrating that observers could remember 3–4 objects even when those objects each contained 2 colors. In other words, observers could only remember 3–4 colors when each color was on a separate object, but they could remember 6–8 colors when those colors were joined into bi-color objects. However, subsequent findings have provided a number of reasons to temper this strong, object-based view of working memory capacity. In particular, recent evidence has suggested that, while there is some benefit to object-based storage, objects are not always encoded in their entirety, and multiple features within an object are encoded with a cost.

Objects are not always encoded in their entirety

A significant body of work has demonstrated that observers do not always encode objects in their entirety. When multiple features of an object appear on distinct object parts, observers are significantly impaired at representing the entire object (Davis & Holmes, 2005; Delvenne & Bruyer, 2004; Delvenne & Bruyer, 2006; Xu, 2002a). For instance, if the color feature appears on one part of an object, and the orientation feature on another part of the object, then observers perform worse when required to remember both features than when trying to remember either feature alone (Xu, 2002a). In addition, observers sometimes encode some features of an object but not others, for example remembering their color but not their shape (Bays, Wu & Husain, 2011; Fougnie & Alvarez, submitted), particularly when only a subset of features is task-relevant (e.g., Droll, Hayhoe, Triesch, & Sullivan, 2005; Triesch, Ballard, Hayhoe & Sullivan, 2003; Woodman & Vogel, 2008). Thus, working memory does not always store integrated object representations.

Costs for encoding multiple features within an object

Furthermore, another body of work has demonstrated that encoding more than one feature of the same object does not always come without cost. Luck and Vogel (1997) provided evidence that observers could remember twice as many colors when those colors were joined into bi-color objects. This result suggested that memory was truly limited by the number of objects that could be stored, and not the number of features. However, this result has not been replicated, and indeed there appears to be a significant cost to remembering two colors on a single object (Olson & Jiang, 2002; Wheeler & Treisman, 2002; Xu, 2002b). In particular, Wheeler and Treisman’s work (2002) suggests that memory is limited to storing a fixed number of colors (3–4) independent of how those colors are organized into bi-color objects. This indicates that working memory capacity is not limited only by the number of objects to-be-remembered; instead, some limits are based on the number of values that can be stored for a particular feature dimension (e.g., only 3–4 colors may be stored).

In addition to limits on the number of values that may be stored within a particular feature dimension, data on the fidelity of representations suggests that even separate visual features from the same object are not stored completely independently. In an elegant design combining elements of the original work of Luck and Vogel (1997) with the newer method of continuous report (Wilken & Ma, 2004), Fougnie, Asplund and Marois (2010) examined observers’ representations of multi-feature objects (oriented triangles of different colors; see Figure 2a). Their results showed that, while there was no cost for remembering multiple features of the same object in a basic change-detection paradigm (as in Luck and Vogel, 1997), this null result was obtained because the paradigm was not sensitive to changes in the fidelity of the representation. In contrast, the continuous report paradigm showed that, even within a single simple object, remembering more features results in significant costs in the fidelity of each feature representation. This provides strong evidence against any theory of visual working memory capacity in which more information can be encoded about an object without cost (e.g., Luck and Vogel, 1997), but at the same time provides evidence against the idea of entirely separate memory capacities for each feature dimension.

Benefits of object-based storage beyond separate buffers

While observers cannot completely represent 3–4 objects independently of their information load, there is a benefit to encoding multiple features from the same object compared to the same number of features on different objects (Fougnie, Asplund and Marois, 2010; Olson & Jiang, 2002; Quinlan & Cohen, 2011). For example, Olson and Jiang showed that it is easier to remember the color and orientation of 2 objects (4 features total), than the color of 2 objects and the orientation of 2 separate objects (still 4 features total). In addition, while Fougnie, Asplund and Marois (2010) showed that there is a cost to remembering more features within an object, they found that there is greater cost to remembering features from different objects. Thus, while remembering multiple features within an object led to decreased fidelity for each feature, remembering multiple features on different objects led to both decreased fidelity and a decreased probability of successfully storing any particular feature (Fougnie, Asplund, & Marois, 2010).

Conclusion

So what is the basic unit of representation in visual working memory? While there are significant benefits to encoding multiple features of the same object compared to multiple features across different objects (e.g., Fougnie, Asplund and Marois, 2010; Olson & Jiang, 2002), visual working memory representations do not seem to be purely object-based. Memory for multi-part objects demonstrates that the relative location of features within an object limits how well those features can be stored (Xu, 2002a), and even within a single simple object, remembering more features results in significant costs in the fidelity of each feature representation (Fougnie, Asplund & Marois, 2010). These results suggest that what counts as the right “unit” in visual working memory is not a fully integrated object representation, or independent feature representations. In fact, no existing model captures all of the relevant data on the storage of objects and features in working memory.

One possibility is that the initial encoding process is object-based (or location-based), but that the “unit” of visual working memory is a hierarchically-structured feature-bundle (Figure 2b): at the top level of an individual “unit” is an integrated object representation, at the bottom level of an individual “unit” are low-level feature representations, with this hierarchy organized in a manner that parallels the hierarchical organization of the visual system. Thus, a hierarchical feature-bundle has the properties of independent feature stores at the lower level, and the properties of integrated objects at a higher level. Because there is some independence between lower-level features, it is possible to modulate the fidelity of features independently, and even to forget features independently. On the other hand, encoding a new hierarchical feature-bundle might come with an “overhead cost” that could explain the object-based benefits on encoding. On this view, remembering any feature from a new object would require instantiating a new hierarchical feature-bundle, which might be more costly than simply encoding new features into an existing bundle.

This proposal for the structure of memory representations is consistent with the full pattern of evidence described above, including the benefit for remembering multiple features from the same objects relative to different objects, and the cost for remembering multiple features from the same object. Moreover, this hierarchical working-memory theory is consistent with evidence showing a specific impairment in object-based working memory when attention is withdrawn from items (e.g., binding failures: Fougnie & Marois, 2009; Wheeler & Treisman, 2002; although this is an area of active debate; see Allen, Baddeley & Hitch, 2006; Baddeley, Allen, & Hitch, 2011; Gajewski & Brockmole, 2006; Johnson, Hollingworth & Luck, 2008; Stevanovski & Jolicoeur, 2011).

Furthermore, there is some direct evidence for separate capacities for feature-based and object-based working memory representations, with studies showing separable priming effects and memory capacities (Hollingworth & Rasmussen, 2010; Wood, 2009; Wood, 2011a). For example, observers may be capable of storing information about visual objects using both a scene-based feature memory (perhaps of a particular view), and also a higher-level visual memory system that is capable of storing view-invariant, 3D object information (Wood, 2011a; Wood, 2009).

It is important to note that our proposed hierarchical feature-bundle model is not compatible with a straightforward item-based or chunk-based model of working memory capacity. A key part of such proposals (e.g., Cowan, 2001; Cowan et al. 2004) is that memory capacity is limited only by the number of chunks encoded, not taking into account the information within the chunks. Consequently, these models are not compatible with evidence showing that there are limits simultaneously at the level of objects and the level of features (e.g., Fougnie et al. 2010). Even if a fixed number of objects or chunks could be stored, this limit would not capture the structure and content of the representations maintained in memory.

Thus far we have considered only the structure of individual items in working memory. Next we review research demonstrating that working memory representations include another level of organization that represents properties that are computed across sets of items.

Interactions between items in visual working memory

In the previous two sections, we discussed the representation of individual items in visual working memory. However, research focusing on contextual effects in memory demonstrates that items are not stored in memory completely independently of one another. In particular, several studies have shown that items are encoded along with spatial context information (the spatial layout of items in the display), and with featural context information (the ensemble statistics of items in the display). These results suggest that visual working memory representations have a great deal of structure beyond the individual item level. Therefore, even a complete model of how individual items are stored in working memory would not be sufficient to characterize the capacity of visual working memory. Instead, the following findings regarding what information is represented, and how representations at the group or ensemble level affect representations at the individual item level, must be taken into account in any complete model of working memory capacity.

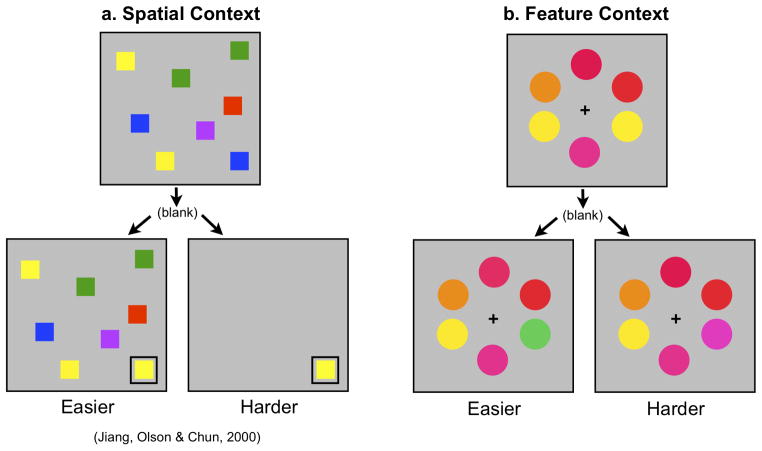

Influences of spatial context

Visual working memory paradigms often require observers to remember not only the featural properties of items (size, color, shape, identity), but also where those items appeared in the display. In these cases, memory for the features of individual items may be dependent on spatial working memory as well (for a review of spatial working memory, see Awh & Jonides, 2001). The most prominent example of this spatial-context dependence is the work of Jiang, Olson and Chun (2000), who demonstrated that changing the spatial context of items in a display impairs change detection. For example, when the task was to detect whether a particular item changed color, performance was worse if the other items in the display did not reappear (Figure 3a), or reappeared with their relative spatial locations changed. This interference suggests that the items were not represented independently of their spatial context (see also Vidal et al., 2005; Olson & Marshuetz, 2005; and Hollingworth, 2006b, for a description of how such binding might work for real-world objects in scenes). This interaction between spatial working memory and visual working memory may be particularly strong when remembering complex shapes, when binding shapes to colors, or when binding colors to locations (Wood, 2011b), but relatively small when remembering colors that do not need to be bound to locations (Wood, 2011b).

Figure 3.

Interactions between items in working memory. (a) Effects of spatial context. It is easier to detect a change to an item when the spatial context is the same in the original display and the test display than when the spatial context is altered, even if the item that may have changed is cued (with a black box). Displays adapted from the stimuli of Jiang, Olson & Chun (2000). (b) Effects of feature context on working memory. It is easier to detect a change to an item when the new color is outside the range of colors present in the original display, even for a change of equal magnitude.

Influence of feature context, or “ensemble statistics”

In addition to spatial context effects on item memory, it is likely that there are feature context effects as well. For instance, even in a display of squares with random colors, some displays will tend to have more “warm colors” on average, whereas others will have more “cool colors” on average, and others still will have no clear across-item structure. This featural context, or “ensemble statistics” (Alvarez, 2011), could influence memory for individual items (e.g., Brady & Alvarez, 2011). For instance, say you remember that the colors were “warm” on average, but the test display contains a green item (Figure 3b). In this case, it is more likely that the green item is a new color, and it would be easier to detect this change than a change of similar magnitude that remained within the range of colors present in the original display.

Given that ensemble information would be useful for remembering individual items, it is important to consider the possibility that these ensemble statistics will influence item memory. Indeed, Brady and Alvarez (2011) have provided evidence suggesting that the representation of ensemble statistics influences the representation of individual items. They found that observers are biased in reporting the size of an individual item by the size of the other items in the same color set, and by the size of all of the items on the particular display. They proposed that this bias reflects the integration of information about the ensemble size of items in the display with information about the size of a particular item. In fact, using an optimal observer model, they showed that observers’ reports were in line with what would be expected by combining information from both ensemble memory representations and memory representations of individual items (Brady & Alvarez, 2011).
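The logic of combining an ensemble representation with an individual item representation can be illustrated with a standard precision-weighted average of two Gaussian sources. This is a minimal sketch under simplifying assumptions, not the model fit by Brady and Alvarez (2011): the function name `combine_item_and_ensemble`, the Gaussian noise assumption, and all numerical values are hypothetical.

```python
def combine_item_and_ensemble(item_obs, item_var, ensemble_mean, ensemble_var):
    """Precision-weighted average of a noisy item memory and an ensemble mean.

    Assumes both sources are Gaussian, with variances item_var and
    ensemble_var. Returns the combined estimate and the weight on the item.
    """
    item_precision = 1.0 / item_var
    ensemble_precision = 1.0 / ensemble_var
    w_item = item_precision / (item_precision + ensemble_precision)
    estimate = w_item * item_obs + (1.0 - w_item) * ensemble_mean
    return estimate, w_item


# Hypothetical sizes (arbitrary units): the remembered item is smaller than
# the average of the other items in the display.
precise_estimate, precise_w = combine_item_and_ensemble(10.0, 1.0, 20.0, 4.0)
noisy_estimate, noisy_w = combine_item_and_ensemble(10.0, 4.0, 20.0, 4.0)
```

In this sketch the reported size always falls between the item memory and the ensemble mean, and the pull toward the ensemble grows as the item memory becomes noisier, which is the qualitative pattern of bias described above.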

These studies leave open the question of how ensemble representations interact with representations of individual items in working memory. The representation of ensemble statistics could take up space in memory that would otherwise be used to represent more information about individual items (as argued, for example, by Feigenson 2008 and Halberda, Sires and Feigenson, 2006), or such ensemble representations could be stored entirely independently of representations of individual items and integrated either at the time of encoding, or at the time of retrieval. For example, ensemble representations could make use of a separate resource from individual item representations, perhaps analogous to the separable representations of real-world objects and real-world scenes (e.g., Greene & Oliva, 2009). Compatible with this view, ensemble representations themselves appear to be hierarchical (Haberman & Whitney, 2011), since observers compute both low-level summary statistics like mean orientation, and also object-level summary statistics like mean emotion of a face (Haberman & Whitney, 2009).

While these important questions remain for future research, the effects of ensemble statistics on individual item memory suggest several intriguing conclusions. First, it appears that visual working memory representations do not consist of independent, individual items. Instead, working memory representations are more structured, and include information at multiple levels of abstraction, from items, to the ensemble statistics of sub-groups, to ensemble statistics across all items, both in spatial and featural dimensions. Second, these levels of representation are not independent: ensemble statistics appear to be integrated with individual item representations. Thus, this structure must be taken into account in order to model and characterize the capacity of visual working memory. Limits on the number of features alone, the number of objects alone, or the number of ensemble representations alone, are not sufficient to explain the capacity of working memory.

Perceptual grouping and dependence between items

Other research has shown that items tend to be influenced by the other items in visual working memory, although such work has not explicitly attempted to distinguish influences due to the storage of individual items and influences from ensemble statistics. For example, Viswanathan, Perl, Visscher, Kahana and Sekuler (2010; using Gabor stimuli) and Lin and Luck (2008; using colored squares) showed improved memory performance when items appear more similar to one another (see also Johnson, Spencer, Luck, & Schöner, 2009). In addition, Huang & Sekuler (2010) have demonstrated that when reporting the remembered spatial frequency of a Gabor patch, observers are biased to report it as more similar to a task-irrelevant stimulus seen on the same trial. It was as if memory for the relevant item was “pulled toward” the features of the irrelevant item.

Cases of explicit perceptual grouping make the non-independence between objects even more clear. For example, Woodman, Vecera and Luck (2003) have shown that perceptual grouping helps determine which objects are likely to be encoded in memory, and Xu and Chun (2007) have shown that such grouping facilitates visual working memory, allowing more shapes to be remembered. In fact, even the original use of the change detection paradigm varied the complexity of relatively structured checkerboard-like stimuli as a proxy for manipulating perceptual grouping in working memory (Phillips, 1974), and subsequent work using similar stimuli has demonstrated that changes which affect the statistical structure of a complex checkerboard-like stimulus are more easily detected (Victor & Conte, 2004). The extent to which such improvements of performance are supported by low-level perceptual grouping – treating multiple individual items as a single unit in memory – versus the extent to which such performance is supported by the representation of ensemble statistics of the display in addition to particular individual items is still an open question. Some work making use of formal models has begun to attempt to distinguish these possibilities, but the interaction between them is likely to be complex (Brady & Tenenbaum, 2010; submitted).

Perceptual Grouping vs. Chunking vs. Hierarchically Structured Memory

What is the relationship between perceptual grouping, chunking, and the hierarchically structured memory model we have described? Perceptual grouping and chunking are both processes by which multiple elements are combined into a single higher-order description. For example, a series of 10 evenly spaced dots could be grouped into a single line, and the letters F, B, and I can be chunked into the familiar acronym FBI (e.g., Cowan, 2001; Cowan et al. 2004). Critically, strong versions of perceptual grouping and chunking models posit that the resulting groups or chunks are the “units” of representation: if one part of the group or chunk is remembered, all components of the group or chunk can be retrieved. Moreover, strong versions of perceptual grouping and chunking models assume that the only limits on memory capacity come from the number of chunks or groups that can be encoded (Cowan, 2001).

Such models can account for some of the results reviewed here. For example, the influence of perceptual grouping on memory capacity (e.g., Xu & Chun, 2007) can be explained by positing a limit on the number of groups that can be remembered, rather than the number of individual objects. However, such models cannot directly account for the presence of memory limits at multiple levels, like the limits on both the number of objects stored and the number of features stored (Fougnie et al. 2010). Moreover, such models assume independence across chunks or groups and thus cannot account for the role of ensemble features in memory for individual items (Brady & Alvarez, 2011). Any model of memory capacity must account for the fact that groups or chunks themselves have sub-structure, that this sub-structure causes limits on capacity, and that we simultaneously represent both information about individual items and ensemble information across items. A hierarchically structured memory model captures these aspects of the data by proposing that information is maintained simultaneously at multiple, interacting levels of representation, and our final memory capacity is a result of limits at all of these levels.

Conclusion

Taken together, these results provide significant evidence that individual items are not represented independent of other items on the same display, and that visual working memory stores information beyond the level of individual items. Put another way, every display has multiple levels of structure, from the level of feature representations to individual items to the level of groups or ensembles, and these levels of structure interact. It is important to note that these levels of structure exist, and vary across trials, even if the display consists of randomly positioned objects that have randomly selected feature values. The visual system efficiently extracts and encodes structure from the spatial and featural information across the visual scene, even when, in the long run over displays, there may not be any consistent regularities. This suggests that any theory of visual working memory that specifies only the representation of individual items or groups cannot be a complete model of visual working memory.

The effects of stored knowledge on visual working memory

Most visual working memory research requires observers to remember meaningless, unrelated items, such as randomly selected colors or shapes. This is done to minimize the role of stored knowledge, and to isolate working memory limitations from long-term memory. However, in the real world, working memory does not operate over meaningless, unrelated items. Observers have stored knowledge about most items in the real world, and this stored knowledge constrains what features and objects we expect to see, and where we expect to see them. The role of such stored knowledge in modulating visual working memory representations has been controversial. In the broader working memory literature, there is clear evidence of the use of stored knowledge to increase the number of items remembered in working memory (Ericsson, Chase & Faloon, 1980; Cowan, Chen & Rouder, 2004). For example, the original experiments on chunking were clear examples of using stored knowledge to recode stimuli into a new format to increase capacity (Miller, 1956) and such results have since been addressed in most models of working memory (e.g., Baddeley, 2000). However, in visual working memory, there has been less work towards understanding how stored knowledge modulates memory representations and the number of items that can be stored in memory.

Biases from stored knowledge

One uncontroversial effect of long-term memory on working memory is that there are biases in working memory resulting from prototypes or previous experience. For example, Huang and Sekuler (2010) have shown that when reporting the spatial frequency of a Gabor patch, observers are influenced by stimuli seen on previous trials, tending to report a frequency that is pulled toward previously seen stimuli (see Spencer & Hund, 2002 for an example from spatial memory). Such biases can be understood as optimal behavior in the presence of noisy memory representations. For example, Huttenlocher et al. (2000) found that observers’ memory for the size of simple shapes is influenced by previous experience with those shapes; observers’ reported sizes are again ‘attracted’ to the sizes they have previously seen. Huttenlocher et al. (2000) model this as graceful errors resulting from a Bayesian updating process -- if you are not quite sure what you’ve seen, it makes sense to incorporate what you expected to see into your judgment of what you did see. In fact, such biases are even observed with real-world stimuli: for example, memory for the size of a real-world object is influenced by our prior expectations about its size (Hemmer & Steyvers, 2009; Konkle & Oliva, 2007). Thus, visual working memory representations do seem to incorporate information from both episodic long-term memory and from stored knowledge.

Stored knowledge effects on memory capacity

While these biases in visual working memory representations are systematic and important, they do not address the question of whether long-term knowledge can be used to store more items in visual working memory. This question has received considerable scrutiny, and in general it has been difficult to find strong evidence of benefits of stored knowledge on working memory capacity. For example, Pashler (1988) found little evidence for familiarity modulating change detection performance. However, other methods have shown promise for the use of long-term knowledge to modulate visual working memory representations. For example, Olsson and Poom (2005) used stimuli that were difficult to categorize or link to previous long-term representations, and found a significantly reduced memory capacity. Likewise, observers seem to perform better at working memory tasks with upright faces (Curby & Gauthier, 2007; Scolari, Vogel & Awh, 2008), familiar objects (see Experiment 2, Alvarez & Cavanagh, 2004), and objects of expertise (Curby et al., 2009) than with other stimulus classes. In addition, children’s capacity for simple colored shapes seems to grow significantly over the course of childhood (Cowan et al., 2005), possibly indicative of their growing visual knowledge base. Further, infants are able to use learned conceptual information to remember more items in a working memory task (Feigenson & Halberda, 2008).

However, several attempts to modulate working memory capacity directly using learning to create new long-term memories showed little effect of learning on working memory. For example, a series of studies has investigated the effects of associative learning on visual working memory capacity (Olson & Jiang, 2004; Olson, Jiang & Moore, 2005), and did not find clear evidence for the use of such learned information to increase working memory storage. For example, one study found evidence that learning did not increase the amount of information remembered, but that it improved memory performance by redirecting attention to the items that were subsequently tested (Olson, Jiang & Moore, 2005). Similarly, studies directly training observers on novel stimuli have found almost no effect of long-term familiarity on change detection performance (e.g., Chen, Eng & Jiang, 2006).

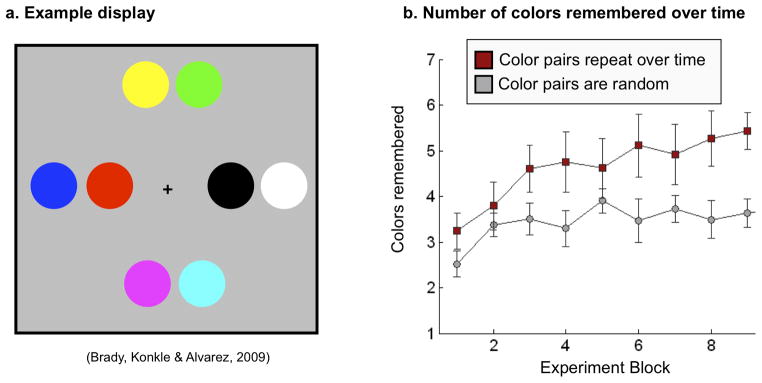

In contrast to this earlier work, Brady, Konkle and Alvarez (2009) have recently shown clear effects of learned knowledge on working memory. In their paradigm, observers were shown standard working memory stimuli in which they had to remember the color of multiple objects (Figure 4a). However, unbeknownst to the observers, some colors often appeared near each other in the display (e.g., red tended to appear next to blue). Observers were able to implicitly learn these regularities, and were also able to use this knowledge to encode the learned items more efficiently in working memory, representing nearly twice as many colors (~5–6) as a group who was shown the same displays without any regularities (Figure 4b). This suggests that statistical learning enabled observers to form compressed, efficient representations of familiar color pairs. Furthermore, using an information-theoretic model, Brady, Konkle and Alvarez (2009) found that observers’ memory for colors was compatible with a model in which observers have a fixed capacity in terms of information (bits), providing a possible avenue for formalizing this kind of learning and compression.
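The idea of a fixed capacity in bits can be illustrated with a small entropy calculation. This is a sketch under stated assumptions rather than the information-theoretic model of Brady, Konkle and Alvarez (2009): the function names, the 9-bit budget, the eight-color palette, the 0.8 partner probability, and the assumption that non-partner colors are equally likely are all hypothetical choices made for this example.

```python
import math


def entropy_bits(probs):
    """Shannon entropy (in bits) of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def colors_within_budget(budget_bits, n_colors=8, p_partner=None):
    """Number of colors a fixed bit budget covers when colors come in pairs.

    p_partner=None: the second color of a pair is uniformly random.
    Otherwise the second color is the learned partner with probability
    p_partner, and the remaining probability is spread evenly over the
    other n_colors - 1 colors (an assumption made for illustration).
    """
    first_bits = math.log2(n_colors)
    if p_partner is None:
        second_bits = math.log2(n_colors)
    else:
        rest = (1.0 - p_partner) / (n_colors - 1)
        second_bits = entropy_bits([p_partner] + [rest] * (n_colors - 1))
    bits_per_pair = first_bits + second_bits
    return 2.0 * budget_bits / bits_per_pair


random_colors = colors_within_budget(9.0)
patterned_colors = colors_within_budget(9.0, p_partner=0.8)
```

With the same bit budget, the predictable second color of each pair costs fewer bits, so more colors fit within the budget in the patterned condition than in the random condition. The size of this gain depends entirely on the assumed values and should not be read as a fit to the reported data.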

Figure 4.

Effects of learned knowledge on visual working memory. (a) Example memory display modeled after Brady, Konkle, & Alvarez (2009). The task was to remember all 8 colors. Memory was probed with a cued-recall test: a single location was cued, and the observer indicated which color appeared at the cued location. (b) Number of colors remembered over time in Brady, Konkle & Alvarez (2009). One group of observers saw certain color pairs more often than others (e.g., yellow and green might occur next to each other 80% of the time), whereas the other group saw completely random color pairs. For the group that saw repeated color pairs, the number of colors remembered increased across blocks, nearly doubling the number remembered by the random group by the end of the session.

It is possible that Brady et al. (2009) found evidence for the use of stored knowledge in working memory coding because their paradigm teaches associations between items, rather than attempting to make the items themselves more familiar. For instance, seeing the same set of colors for hundreds of trials might not improve the encoding of colors or shapes, because the visual coding model used to encode colors and shapes has been built over a lifetime of visual experience that cannot be overcome in the time-course of a single experimental session. However, arbitrary pairings of arbitrary features are unlikely to compete with previously existing associations, and might therefore lead to faster updating of the coding model used to encode information into working memory. Another important aspect of the Brady et al. (2009) study is that the items that co-occurred were always perceptually grouped. It is possible that compression only occurs when the correlated items are perceptually grouped (although learning clearly functions without explicit perceptual grouping, e.g., Orbán, Fiser, Aslin & Lengyel, 2008).

Conclusion

Observers have stored knowledge about most items in the real world, and this stored knowledge constrains what features and objects we expect to see, and where we expect to see them. There is significant evidence that the representation of items in working memory is dependent on this stored knowledge. Thus, items for which we have expertise, like faces, are represented with more fidelity (Curby & Gauthier, 2007; Scolari, Vogel & Awh, 2008), and more individual colors can be represented after statistical regularities between those colors are learned (Brady et al. 2009). In addition, the representations of individual items are biased by past experience (e.g., Huang and Sekuler, 2010; Huttenlocher et al. 2000). Taken together, these results suggest that the representation of even simple items in working memory depends upon our past experience with those items and our stored visual knowledge.

Visual working memory conclusion

A great deal of research on visual working memory has focused on how to characterize the capacity of the system. We have argued that in order to characterize working memory capacity, it is important to take into account both the number of individual items remembered, and the fidelity with which each individual item is remembered. Moreover, it is necessary to specify what the units of working memory storage are, how multiple units in memory interact, and how stored knowledge affects the representation of information in memory. In general we believe theories and models of working memory must be expanded to include memory representations that go beyond the representation of individual items and include hierarchically-structured representations, both at the individual item level (hierarchical feature-bundles), and across individual items. There is considerable evidence that working memory representations are not based on independent items, that working memory also stores ensembles that summarize the spatial and featural information across the display, and further, that there are interactions between working memory and stored knowledge even in simple displays.

Moving beyond individual items towards structured representations certainly complicates any attempt to estimate working memory capacity. The answer to how many items you can hold in visual working memory depends on what kind of items you are trying to remember, how precisely they must be remembered, how they are presented on the display, and your history with those items. Even representations of simple items have structure at multiple levels. Thus, models that wish to accurately account for the full breadth of data and memory phenomena must make use of structured representations, especially as we move beyond colored dot objects probed by their locations towards items with more featural dimensions or towards real-world objects in scenes.

VISUAL LONG-TERM MEMORY

Before discussing the capacity of long-term memory, it is important to make the distinction between visual long-term memory and stored knowledge. By “visual long-term memory,” we refer to the ability to explicitly remember an image that was seen previously, but which has not been continuously held actively in mind. Thus, visual long-term memory is the passive storage and subsequent retrieval of visual episodic information. By “stored knowledge,” we refer to the pre-existing visual representations that underlie our ability to perceive and recognize visual input. For example, when we first see an image, say of a red apple, stored knowledge about the visual form and features of apples in general enables us to recognize the object as such. If we are shown another picture of an apple hours later, visual long-term memory enables us to decide whether this is the exact same apple we saw previously.

While working memory is characterized by its severely limited capacity, long-term memory is characterized by its very large capacity: people can remember thousands of episodes from their lives, dating back to their childhood. However, in the same way that working memory capacity cannot be characterized simply in terms of the number of items stored, the capacity of long-term memory cannot be fully characterized by estimating the number of individual episodes that can be stored. Long-term memory representations are highly structured, consisting of multiple levels of representation from individual items to higher-level conceptual representations. Just as we proposed for working memory, these structured representations should be taken into account, both when quantifying and characterizing the capacity of the system, and when modeling memory processes such as retrieval.

Generally, work in the broader field of long-term memory has not emphasized the nature of stored representations, and has focused instead on identifying different memory systems (e.g., declarative vs. nondeclarative, episodic vs. semantic) and understanding the processing stages of those systems, particularly the encoding and retrieval of information (e.g. Squire, 2004). As is the case in the domain of working memory, theories of long-term memory encoding and retrieval are typically developed independent of what particular information is being stored and what particular features are used to represent stored items. A typical approach is to model memory phenomena that result from manipulations of timing (e.g. primacy and recency, rate of presentation), study procedure (e.g. massed or spaced presentation, the number of re-study events), and content-similarity (e.g. the fan-effect, category-size effect, category-length effect). For example, models of memory retrieval and storage that capture many of these phenomena have been proposed by Brown, Neath, and Chater (2007) and Shiffrin and Steyvers (1997).

Critically, in order to account for the range of performance across these manipulations, such models have postulated a role for some form of ‘psychological similarity’ between items, like how many features they share (e.g., Eysenck, 1979; Nairne, 2006; Rawson & Van Overschelde, 2008; Schmidt, 1985; von Restorff, 1933; see Shiffrin & Steyvers, 1997). For example, the effectiveness of a retrieval cue is based on the extent to which it cues the correct item in memory without cuing competing memories. Put simply, if items share more features, they interfere more in memory, leading to worse memory performance. Thus, in the domain of long-term memory, it is well-known that the nature of the representation, such as the features used in encoding the stimulus, is essential for predicting memory performance.

Clearly the more complete our model of the structure and content of long-term memory representations, the more accurately we will be able to model retrieval processes. Thus, the rich, structured nature of long-term memory representations, and the role of distinctiveness in long-term memory retrieval, pose challenges to quantifying and characterizing the capacity of visual long-term memory.

Here we review recent work that has examined these representation-based issues within the domain of visual long-term memory: What exactly is the content of the representations stored in visual long-term memory? What features of the incoming visual information are critical for facilitating successful memory for those items? By assessing both the quantity and the fidelity of the visual long-term memory representations, we can more accurately quantify the capacity of this visual-episodic-memory system. By measuring the content of visual long-term memory representations, and what forms of psychological similarity cause this information to be forgotten, we can use memory as a probe into the structure of stored knowledge about objects and scenes.

The fidelity of visual long-term memory

Quantifying the number of items observers can remember

In the late 1960s and 1970s, a series of landmark studies demonstrated that people have an extraordinary capacity to remember pictures (Shepard, 1967; Standing, Conezio & Haber, 1970; Standing, 1973). For example, Shepard (1967) showed observers ~600 pictures for 6 seconds each. Afterwards, he tested memory for these images with a two-alternative forced-choice task where participants had to indicate which of two images they had seen (Figure 5a). He found that observers could correctly indicate which picture they had seen almost perfectly (98% correct). In perhaps the most remarkable study of this kind, Standing (1973) showed observers 10,000 color photographs scanned from magazines and other sources, and displayed them one at a time for 5 seconds each. The 10,000 pictures were separated into distinct thematic categories (e.g. cars, animals, single-person, two-people, plants, etc.), and within each category only a few visually distinct exemplars were selected. Standing found that even after several days of studying images, participants could indicate which image they had seen with 83% accuracy. These results demonstrate that people can remember a surprisingly large number of pictures, even hours or days after studying each image just once.

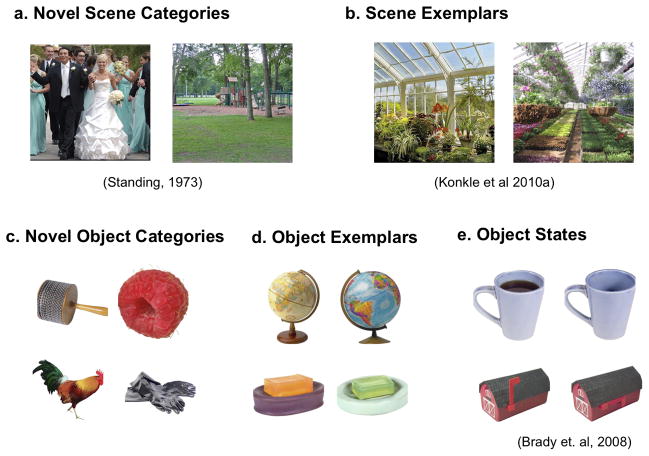

Figure 5.

Explorations of fidelity in visual long-term memory. (a) Examples of scenes from different, novel categories (modeled after Standing, 1973). (b) Exemplars of scenes from the same category, greenhouse garden (as in Konkle et al., 2010a). (c) Objects from different, novel categories, as in Brady et al. (2008). (d) Examples of object exemplars from the same category (globes and soap). (e) Examples of objects with a different state (full vs. empty mug) or different pose (mailbox with flag up vs. down).

By correcting for guessing it is possible to estimate how many images observers must have successfully recognized to achieve a given level of performance. Standing (1973) found that when shown 100 images, observers' performance suggested they remembered 90 of these images; when shown 1,000 images, performance suggested memory for 770; and when shown the full set of 10,000, their performance indicated memory for ~6,600 of the 10,000 images they had been shown. Extrapolating the function relating the number of items presented to the number of items recalled suggested no upper bound on the number of pictures that could be remembered (although see Landauer, 1986 for a possible model of fixed memory capacity in these studies; see also Dudai, 1997). These empirical results and models of memory performance have led many to infer that the number of visual items that can be stored in long-term memory is effectively unlimited, with memory performance depending primarily on how distinctive the information is rather than how many items are to be remembered.
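The guessing correction described above can be made concrete with a short sketch. It assumes the simplest high-threshold model of a forced-choice test: an observer either remembers an item and answers correctly, or does not remember it and guesses among the alternatives at chance. The text does not spell out the exact procedure Standing (1973) used, so the formula and the function names below are illustrative assumptions rather than a reconstruction of his analysis.

```python
def proportion_remembered(accuracy, n_alternatives=2):
    """Invert accuracy = m + (1 - m) / k to recover m.

    m is the proportion of items truly remembered and k is the number of
    response alternatives (assumed model: remember-or-guess, no partial
    information).
    """
    chance = 1.0 / n_alternatives
    if not chance <= accuracy <= 1.0:
        raise ValueError("accuracy must lie between chance and 1.0")
    return (accuracy - chance) / (1.0 - chance)


def items_remembered(n_shown, accuracy, n_alternatives=2):
    """Estimated number of studied items held in memory."""
    return n_shown * proportion_remembered(accuracy, n_alternatives)


def expected_accuracy(n_shown, n_remembered, n_alternatives=2):
    """Forward direction: accuracy implied by a given number of remembered items."""
    m = n_remembered / n_shown
    return m + (1.0 - m) / n_alternatives


# 83% correct on a two-alternative test of 10,000 studied images
print(round(items_remembered(10_000, 0.83)))    # 6600

# Accuracy implied by remembering 90 of 100, or 770 of 1,000, images
print(round(expected_accuracy(100, 90), 3))     # 0.95
print(round(expected_accuracy(1_000, 770), 3))  # 0.885
```

Under this assumed model, 83% accuracy on 10,000 images corresponds to about 6,600 remembered images, which agrees with the estimate quoted above.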

Reasons for suspecting low-fidelity representations

These large-scale memory studies always used items that were as semantically and visually distinct as possible. For example, during the study phase there might be a single wedding scene, a single carnival scene, a single restaurant scene, etc.; then, at test, an observer might see either the original wedding scene or a park (e.g., Figure 5a). Under these conditions, to accurately indicate which of two images was studied, an observer would only need to remember the semantic gist of the images. This led many researchers in the field to assume that visual long-term memory stores relatively impoverished representations of each item, perhaps just a gist-like representation capturing the basic category, event, or meaning of the image along with a few specific details (Chun, 2003; Simons & Levin, 1997; Wolfe, 1998).

Influential studies demonstrating ‘change blindness’ also provided evidence suggesting that people likely store only gist-like representations of images (e.g., Rensink, 2000). Change blindness studies demonstrated that changes to an object part, or even large changes to a surface within a scene, often go undetected if the visual transients are masked (e.g., Rensink et al., 1997; Simons & Levin, 1997). This is true even when memory demands are limited, for example when observers only need to retain information from one scene for a short amount of time before being presented with the altered scene. Together with the large-scale memory studies, change blindness led to the widely accepted idea that memory representations for real-world stimuli are impoverished and lack visual detail (see Hollingworth, 2006a for a review).

Evidence of high-fidelity long-term memory representations

A number of recent studies have overturned the assumption that representations of objects and scenes are sparse and lack detail. Experiments using both change detection paradigms (e.g., Mitroff, Simons & Levin, 2004; review by Simons & Rensink, 2005) and long-term memory tasks (e.g., Hollingworth, 2004; Brady et al., 2008) have demonstrated that visual memory representations often contain significant detail.

For example, a series of studies by Hollingworth and Henderson demonstrated that, after briefly attending to objects within a scene, memory for those objects was more visually detailed than just the category of the object, even after viewing 8–10 other objects (Hollingworth & Henderson, 2002; Hollingworth, 2004; Hollingworth, 2005). In fact, people maintained object details sufficient to distinguish between exemplars (this dumbbell vs. that dumbbell) and viewpoints (an object from this view vs. the same object rotated 90 degrees), with above-chance recognition even when around 400 studied objects intervened between initial presentation and memory test (Hollingworth, 2004), or after a delay of 24 hours (Hollingworth, 2005). These results were the first to demonstrate that observers are capable of storing more than just the semantic category or gist of real-world objects over significant durations and for a relatively large number of items.

To further assess the fidelity of visual long-term memory representations using a large-scale memory paradigm more closely matched to Standing (1973), Brady, Konkle, Alvarez, and Oliva (2008) had observers view 2500 categorically distinct objects, one at a time, for 3 seconds each, over the course of more than 5 hours. At the end of this study session, observers performed a series of 2-alternative-forced-choice tests that probed the fidelity of the memory representations by varying the relationship between the studied target item and the new foil item (Figure 5c-e). In the novel condition, the foil was categorically different from all 2500 studied objects. Success on this type of test required memory only for the semantic category of studied items, as in Standing (1973) and Shepard (1967). In the exemplar condition, the foil was a different exemplar from the same basic category as the target (e.g., if the target was a shoe, the foil would be a different kind of shoe). If only the semantic category of the target object was remembered, observers would fail on this type of test. To choose the right exemplar, observers had to remember specific visual details about the target item. In the state condition, the foil was the same object as the target, except it was in a different state or pose (e.g., if the target was a shoe with the laces tied, the foil could be the same shoe with the laces untied). To choose the target on state trials, observers would have to remember even more specific visual details about the target item.

As expected based on earlier studies, observers performed at 92% accuracy on the novel test, indicating that they had encoded at least the semantic category of thousands of objects. Surprisingly, observers could perform the exemplar and state tests nearly as well (87% and 88%, respectively). For example, observers could confidently report whether a cup of orange juice they had seen was totally full or only mostly full of juice with almost 90% accuracy. It is important to note that observers did not know which of the 2500 studied items would be tested, nor which particular object details had to be remembered for a particular item (e.g., category-level, exemplar-level, or state-level information), indicating that observers were remembering a significant amount of object detail about each item. Thus, visual long-term memory is capable of storing not just thousands of objects, but thousands of detailed object representations.

One important difference between the work of Brady et al. (2008) and Standing (1973) is the complexity of the stimuli. In Brady et al. (2008), observers saw individual objects on a white background, whereas in Standing and Shepard’s seminal studies, observers saw full scenes (magazine clippings). Thus, it is possible that observers can store detailed object representations (as in Brady et al., 2008), but that their memory for natural scenes would contain markedly less detail and would not support exemplar-level discrimination. Recent work has shown this is not the case: Using a paradigm much like that of Brady et al. (2008), Konkle, Brady, Alvarez and Oliva (2010a) demonstrated that thousands of scenes can be remembered with sufficient fidelity to distinguish between different exemplars of the same scene category (e.g., this garden or that garden, see Figure 5b). Furthermore, performance on these scene stimuli was nearly identical to performance with objects (Konkle, Brady, Alvarez & Oliva, 2010a; Konkle, Brady, Alvarez & Oliva, 2010b).

Conclusion

Quantifying the capacity of a memory system requires determining both the number of items that can be stored and the fidelity with which they are stored. The results reviewed here demonstrate that visual long-term memory is capable of storing not just thousands of objects, but thousands of detailed object and scene representations (e.g., Brady et al., 2008; Konkle et al., 2010a). Thus, the capacity of visual long-term memory is greater than assumed based on the work of Standing (1973) and Shepard (1967). However, given that we previously believed the capacity of visual long-term memory was “virtually unbounded,” what is gained by showing the capacity is even greater than we thought? It is certainly informative to know that our intuitions about the fidelity of our visual long-term memory are incorrect: after studying thousands of unique pictures and being tested with very “psychologically similar” foils, we will be much closer to perfect performance than to chance performance. It is also valuable to know that, while in everyday life we may often fail to notice the details of objects or scenes (Rensink, O’Regan & Clark, 1997; Simons & Levin, 1997), this does not imply that our visual long-term memory system cannot encode and retrieve a huge amount of information, including specific visual details. Perhaps most importantly, earlier models assumed that visual long-term memory representations lacked detail and were gist-like and semantic in nature. Discovering that visual long-term memory representations can contain significant object-specific detail challenges this assumption, and suggests that visual episodes leave a more complete memory trace that includes more ‘visual’ or perceptual information.

Effects of Stored Knowledge on Visual Long-Term Memory

Stored knowledge provides a coding model for representing incoming information

Over a lifetime of visual experience, our visual system builds a storehouse of knowledge about the visual world. How does this stored knowledge affect the ability to remember a specific visual episode? In the case of visual working memory, we proposed that stored knowledge provides the coding model used to represent items in working memory. As we learn new information and update our stored knowledge, we update how we encode subsequent information (e.g., remembering more colors after learning regularities in which colors appear together: Brady, Konkle & Alvarez, 2009). We propose that stored knowledge plays the same role in visual long-term memory, providing the coding model used to encode incoming visual information and represent visual episodes.

One way to conceive of this coding model is as a multidimensional feature space, with an axis for each feature dimension. Of course this would be a massive feature space, but for illustration suppose there were a few perceptual feature dimensions (size, shape, and color) and a few conceptual dimensions (living/nonliving, fast/slow). For any new incoming visual input, the visual system would extract information along these existing feature dimensions, creating a memory trace that can be thought of as a point in this multidimensional feature space. Thus, memory for a particular car is represented as a single point in this space (e.g., a race car might be encoded as small, aerodynamic, red, nonliving, and fast). Memory traces for different cars will fall relatively close to each other in this feature space. Memory traces for similar kinds of objects, like tractors, might lie near cars in this feature space, whereas memory traces for different kinds of objects, like cats, might lie far away from cars.
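As a toy illustration of this idea, the sketch below places a handful of memory traces in a five-dimensional feature space and ranks them by Euclidean distance from a probe item. Everything specific in it is an assumption made for illustration: the dimension names, the numeric coordinates, the extra "sedan" item, and the use of Euclidean distance as the similarity measure are not taken from any of the studies reviewed here.

```python
import math

# Hypothetical feature dimensions, each scaled to the range 0..1.
FEATURE_NAMES = ("size", "aerodynamic_shape", "redness", "living", "speed")

# Hypothetical memory traces: one point per remembered object.
MEMORY_TRACES = {
    "race car": (0.3, 0.9, 0.9, 0.0, 1.0),
    "sedan":    (0.4, 0.7, 0.2, 0.0, 0.7),
    "tractor":  (0.7, 0.3, 0.8, 0.0, 0.1),
    "cat":      (0.1, 0.4, 0.1, 1.0, 0.5),
}


def trace_distance(name_a, name_b):
    """Euclidean distance between two stored traces (assumed similarity metric)."""
    return math.dist(MEMORY_TRACES[name_a], MEMORY_TRACES[name_b])


def rank_by_similarity(probe):
    """Return the other stored items ordered from nearest to farthest from the probe."""
    others = [name for name in MEMORY_TRACES if name != probe]
    return sorted(others, key=lambda name: trace_distance(probe, name))


print(rank_by_similarity("race car"))  # ['sedan', 'tractor', 'cat']
```

With these made-up coordinates, another car lies closest to the race car, the tractor is somewhat farther away, and the cat is farthest, mirroring the verbal description of the space.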

Most likely the coding model we derive from our past visual experience is considerably more complex than a single multidimensional space. In higher-level domains like categorization and induction, models of background knowledge based on more structured representations are required to fit human performance (Kemp & Tenenbaum, 2009; Tenenbaum, Griffiths & Kemp, 2006). For example, rather than representing animals in a single multidimensional space, observers seem to have multiple structured representations of animals: some kinds of inference draw on a tree structure expressing animals’ biological relatedness, whereas some inferences draw on a food web expressing which animals are likely to eat which other animals (Kemp & Tenenbaum, 2009).

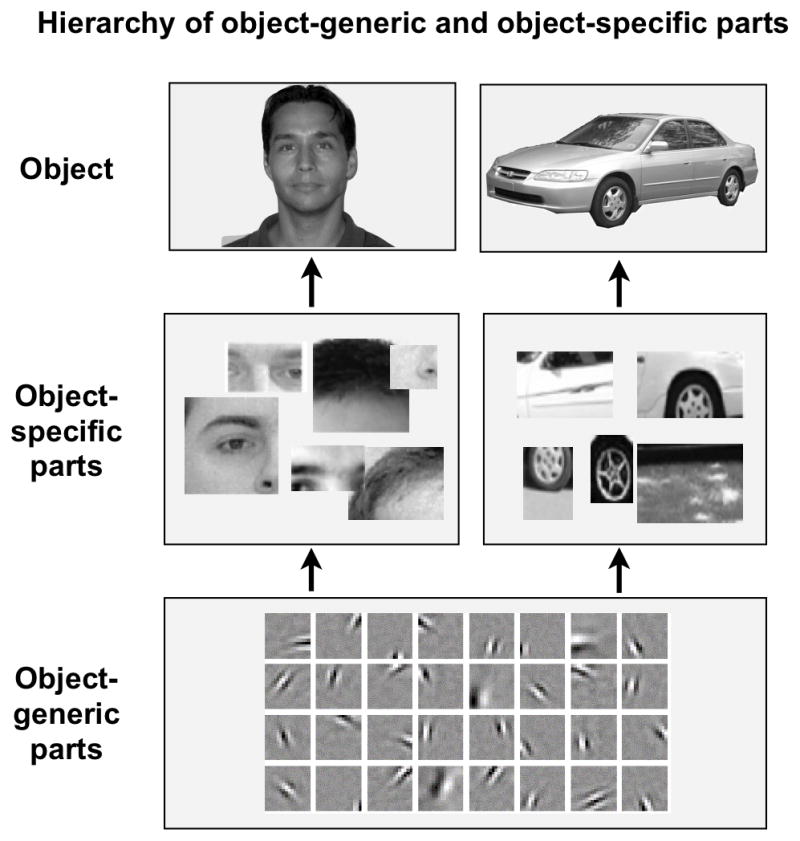

Visual background knowledge is likely to be similarly complex, perhaps based on a hierarchy of features ranging from generic perceptual features like color and orientation, to mid-level features that have some specificity to particular object classes (e.g., Ullman, Vidal-Naquet, & Sali, 2002), to very high-level conceptual features that are entirely object-category specific (e.g., Ullman, 2007) (see Figure 6). In fact, modern models of object recognition propose that stored object knowledge consists of feature hierarchies (e.g., Epshtein & Ullman, 2005; Ommer & Buhmann, 2010; Riesenhuber & Poggio, 1999; Torralba, Murphy, & Freeman, 2004; Ullman, 2007). For example, Ullman (2007) proposed that objects are represented by a hierarchy of image fragments: e.g., small image fragments of car parts combine to make larger car fragments, which further combine to make a car. In this sense, stored knowledge about different object concepts can be cashed out in a hierarchy of visual features, which may be extracted and stored in visual long-term memory.

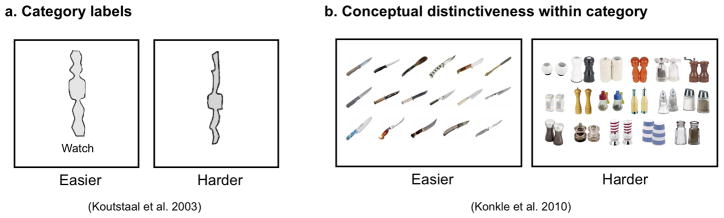

Figure 6.

Hierarchy of visual knowledge, from object-generic parts, to object-specific parts, to whole objects. Gabor patch stimuli adapted from Olshausen & Field (1996). Meaningful object fragments adapted from Ullman (2007).

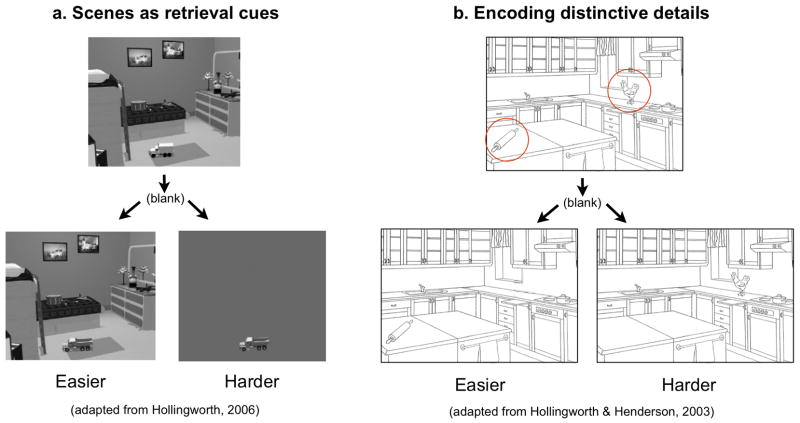

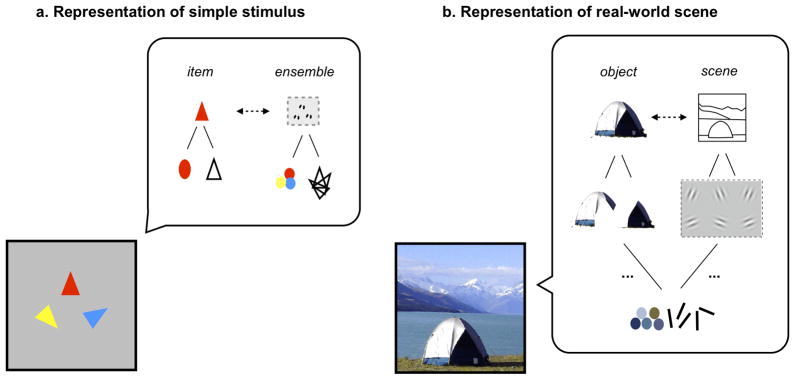

Furthermore, according to these computational models, precisely which features are represented in the hierarchy will depend on the task the system must perform (Ullman, 2007): to recognize a face as a face, the model learns one set of features, but to perform a finer level of categorization (e.g., that this particular face is George Alvarez), larger features and feature combinations have to be learned by the model (see also Schyns & Rodet, 1997). Thus, with increasing knowledge about particular exemplars and subordinate category structure, different kinds of visual features may be created in the visual hierarchy. This could explain why even memory for putatively “visual” information is dependent on conceptual structure, as high-level visual representations themselves are likely shaped by category knowledge.