Abstract

Hidden Markov Models (HMMs) are a commonly used tool for inference of transcription factor (TF) binding sites from DNA sequence data. We exploit the mathematical equivalence between HMMs for TF binding and the “inverse” statistical mechanics of hard rods in a one-dimensional disordered potential to investigate learning in HMMs. We derive analytic expressions for the Fisher information, a commonly employed measure of confidence in learned parameters, in the biologically relevant limit where the density of binding sites is low. We then use techniques from statistical mechanics to derive a scaling principle relating the specificity (binding energy) of a TF to the minimum amount of training data necessary to learn it.

Keywords: Bioinformatics, Hidden Markov Models, One-dimensional statistical mechanics, Fisher information, Machine learning

1 Introduction

Biological organisms control the expression of genes using transcription factor (TF) proteins. TFs bind to regulatory DNA segments (6–20 bp) called binding sites, thereby controlling the expression of nearby genes. An important task in bioinformatics is identifying TF binding sites from DNA sequence data. This poses a non-trivial pattern recognition problem, and many computational and statistical techniques have been developed towards this goal. These algorithms aim to identify new binding sites starting from a known collection of TF binding sites. Many different types of algorithms exist, including Position Weight Matrices (PWMs) [1, 2], biophysics-inspired algorithms [2, 3], Hidden Markov Models (HMMs) [4–6], and information theoretic algorithms [7].

In general, only a limited number of binding sites are known for a given TF. Thus, any algorithm must build a general classifier based on limited training data. This places constraints on the type of algorithms and classifiers that can be used. The end goal of all models is generalization: the ability to correctly categorize new sequences that differ from the training set. This is especially important since the training set comprises only a small fraction of all possible sequences. Most algorithms create an (often probabilistic) model for whether a particular DNA sequence is a binding site. The model contains a set of parameters, θ, that are fit, or learned, from training data.

All algorithms exploit the statistical differences between binding sites and background DNA in order to identify new binding sites. Two distinct factors contribute to how well one can learn θ: the size of the training data set and the specificity of the TF under consideration. Many TFs are highly specific. Namely, they bind strongly only to a small subset of all possible DNA sequences, which are statistically distinct from background DNA. Physically, this means that these TFs have large binding energies for certain sequence motifs (binding sites) and low binding energies for random segments of DNA, i.e. “background” DNA. Other TFs are less specific and often exhibit non-specific binding to random DNA sequences. In this case, the statistical signatures that distinguish binding sites from background DNA are less clear. In general, the more training data one has and the more specific a TF, the easier it is to learn its binding sites.

This raises the natural question: how much data is needed to train an algorithm to learn the binding sites of a TF? In this paper, we explore this question in the context of a widely-used class of bioinformatic methods termed Hidden Markov Models (HMMs). We exploit the mathematical equivalence between HMMs for TF binding and the “inverse” statistical mechanics of hard rods in a one-dimensional disordered potential to derive a scaling principle relating the specificity (binding energy) of a TF to the minimum amount of training data necessary to learn its binding sites. Unlike ordinary statistical mechanics where the goal is to derive statistical properties from a given Hamiltonian, the goal of the “inverse” problem is to learn the Hamiltonian that most likely gave rise to the observed data. Thus, we are led to consider a well-studied physics problem [8]—the statistical mechanics of a one-dimensional gas of hard rods in an arbitrary external potential—from an entirely new perspective.

The paper is organized as follows. We start by reviewing the mapping between HMMs and the statistical mechanics of hard rods. We then introduce the Fisher Information, a commonly employed measure of confidence in learned parameters, and derive an analytic expression for the Fisher information in the dilute binding site limit. We then use this expression to formulate a simple criterion for how much sample data is needed to learn the binding sites of a TF of a given specificity.

2 HMMs for Binding Site Discovery

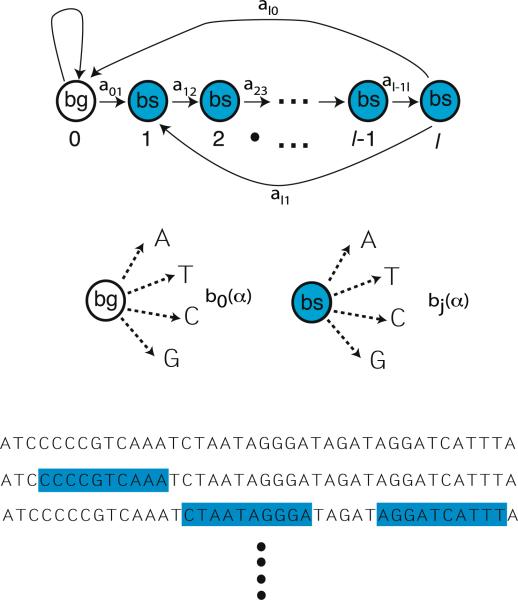

HMMs are powerful tools for analyzing sequential data [9, 10] that have been adapted to binding site discovery [5, 6]. HMMs model a system as a Markov process on internal states that are hidden and cannot be observed directly. Instead, the hidden states can only be inferred indirectly through an observable state-dependent output. In the context of binding site discovery, HMMs serve as generative models for DNA sequences. A DNA sequence is modeled as a mixture of hidden states—background DNA and binding sites—with a hidden state-dependent probability for observing a nucleotide (A, T , C, G) at a given location (see Fig. 1).

Fig. 1.

Hidden Markov Model for binding sites of size l. (Top) There are l + 1 hidden states, with state 0 corresponding to background DNA and state j corresponding to position j = 1 . . . l in a binding site. The HMM is described by a Markov process with transition probabilities given by aij. (Middle) Each state j in an HMM is characterized by an observation symbol probability bj(k), the probability of seeing symbol k = A, T, C, G in state j. (Bottom) A given sequence of DNA is composed of binding sites and background DNA.

For concreteness, consider a TF whose binding sites are of length l. An HMM for discovering the binding sites can be characterized by four distinct elements (see Fig. 1) [10]:

1. l + 1 hidden states, with state 0 corresponding to background DNA and states j = 1 . . . l corresponding to position j of a binding site.
2. 4 observation symbols corresponding to the four observable nucleotides α = A, T, C, G.
3. The transition probabilities $\{a_{ij}\}$ (i, j = 0 . . . l) between the hidden states, which take the particular form shown in Fig. 1 with only $a_{j,j+1}$, $a_{l0}$, and $a_{01}$ non-zero. In addition, for simplicity, we assume that binding sites cannot touch (i.e. $a_{l1} = 0$). The generalization to the case where the last assumption is relaxed is straightforward.
4. The observation symbol probabilities $\{b_j(\alpha)\}$ for seeing a symbol α = A, C, G, T in a hidden state j. Often we will rewrite these probabilities in more transparent notation with $p_\alpha = b_0(\alpha)$, the probability of seeing base α in the background DNA, and $p^j_\alpha = b_j(\alpha)$, the probability of seeing base α at position j in a binding site.

Finally, denote the collection of all parameters of an HMM ($a_{ij}$ and $b_i(\alpha)$) by the symbol θ.

A DNA sequence of length L, $\mathcal{S} = s_1 s_2 \ldots s_L$, is generated by an HMM starting in a hidden state $q_1$ as follows. Starting with i = 1, choose $s_i$ according to $b_{q_i}(s_i)$, then switch to a new hidden state $q_{i+1}$ using the switching probabilities $a_{q_i q_{i+1}}$, and repeat this process until i = L. In this way, one can associate a probability, $P(\mathcal{S}|\theta)$, to each sequence $\mathcal{S}$, corresponding to the probability of generating $\mathcal{S}$ using an HMM with parameters θ. The goal of bioinformatic approaches is to learn the parameters θ from training data and use the result to predict new binding sites. Many specialized algorithms, often termed dynamic programming in the computer science literature, have been developed to this end [9, 10].
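The generative procedure described above can be sketched in a few lines of Python. This is an illustration only, not the authors' code: the function name `sample_hmm`, the array layout (rows of `p_site` are positions in the binding site, columns are the bases A, C, G, T), and the choice to start in the background state are all assumptions. The sketch also assumes that probability conservation fixes the remaining transition probabilities as $a_{00} = 1 - a_{01}$ and $a_{j,j+1} = a_{l0} = 1$.

```python
import numpy as np

def sample_hmm(L, a01, p_bg, p_site, rng):
    """Sample a DNA sequence of length L and its hidden-state path.

    Hidden state 0 is background; states 1..l are positions in a binding site.
    Assumed transitions: a_00 = 1 - a01, a_01 = a01, a_{j,j+1} = 1, a_{l0} = 1.
    """
    bases = "ACGT"
    l = p_site.shape[0]
    states = np.empty(L, dtype=int)
    symbols = []
    q = 0  # assumption: the chain starts in background
    for i in range(L):
        states[i] = q
        # emit a symbol according to b_q
        probs = p_bg if q == 0 else p_site[q - 1]
        symbols.append(bases[rng.choice(4, p=probs)])
        # switch to the next hidden state
        if q == 0:
            q = 1 if rng.random() < a01 else 0
        elif q < l:
            q += 1      # walk through the binding site
        else:
            q = 0       # a_{l1} = 0: a site is always followed by background
    return "".join(symbols), states
```

Calling `sample_hmm(200, 0.05, np.full(4, 0.25), p_site, np.random.default_rng(0))` with a hypothetical `p_site` matrix returns a sequence string together with the hidden path, which is convenient for checking learning procedures against known ground truth.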

2.1 Mapping HMMs to the Statistical Mechanics of Hard Rods

Before discussing the mapping between HMMs and statistical mechanics, we briefly review the physics of a one-dimensional gas of hard rods in a disordered external field [8]. The system consists of hard rods (one-dimensional hard core particles) of length l in a spatially dependent binding energy $E(S_{x_i})$, with $x_i$ the location of the starting site, at an inverse temperature β and a fugacity z (i.e. chemical potential μ = log z). The equilibrium statistical mechanics of the system is determined by the grand canonical partition function obtained by summing over all possible configurations of hard rods obeying the hard-core constraint [8]. In addition, the pressure can be calculated by taking the logarithm of the grand canonical partition function. Since this model is one-dimensional and has only local interactions, many statistical properties can be calculated exactly using Transfer Matrix techniques. Consequently, variations of this simple hard rod model have been used extensively to model the sequence dependence of nucleosome positioning [11, 12].

We now discuss the mapping between HMMs and a gas of hard rods. We start by showing that the observation symbol probabilities bj(α) have a natural interpretation as a binding energy. Consider a DNA sequence S = s1 . . . sl, with l the length of a binding site. Denote the corresponding hidden state at the j-th position of S by qj. It is helpful to represent this sequence by an l by 4 matrix $S_{j\alpha}$, where $S_{j\alpha} = 1$ if base $s_j = \alpha$ and zero otherwise. Denote the probability of generating S from background DNA as $P(S|\{q_j = 0, j = 1 \ldots l\}, \theta) = \prod_j p_{s_j}$, and the probability of generating the same sequence within a binding site as $P(S|\{q_j = j, j = 1 \ldots l\}, \theta) = \prod_j b_j(s_j)$. Note that we can rewrite the ratio of these probabilities as

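$$\frac{P(S|\{q_j = j\}, \theta)}{P(S|\{q_j = 0\}, \theta)} = \prod_{j=1}^{l} \frac{b_j(s_j)}{p_{s_j}} = e^{-E(S)} \tag{1}$$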

where we have defined a “sequence-dependent” binding energy

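$$E(S) = \sum_{j=1}^{l} \sum_{\alpha} \varepsilon_{j\alpha} S_{j\alpha} \equiv \varepsilon \cdot S \tag{2}$$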

with

$$\varepsilon_{j\alpha} = -\log \frac{b_j(\alpha)}{p_\alpha} \tag{3}$$

Notice that the ratio (1) is of a Boltzmann form with a ‘binding energy’ that can be expressed in terms of a Position Weight Matrix (PWM), ε, related to the observation symbol probabilities (3).
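As a small numerical illustration of the Boltzmann form, the sketch below builds a PWM from emission probabilities and evaluates the binding energy of a short sequence. It assumes the sign convention $\varepsilon_{j\alpha} = -\log[b_j(\alpha)/p_\alpha]$ (low energy means strong binding, consistent with the thresholding discussion in Section 2.2); the function names and the base ordering A, C, G, T are hypothetical choices made for the example.

```python
import numpy as np

BASES = "ACGT"  # assumed column ordering of the matrices below

def pwm_from_probs(p_site, p_bg):
    """PWM entries eps[j, alpha] = -log(b_j(alpha) / p_alpha)."""
    return -np.log(p_site / p_bg)

def one_hot(seq):
    """l-by-4 indicator matrix S[j, alpha] = 1 if base j of seq equals alpha."""
    S = np.zeros((len(seq), 4))
    S[np.arange(len(seq)), [BASES.index(c) for c in seq]] = 1.0
    return S

def binding_energy(eps, seq):
    """E(S) = sum_{j, alpha} eps[j, alpha] * S[j, alpha]."""
    return float(np.sum(eps * one_hot(seq)))
```

With these definitions, `np.exp(-binding_energy(eps, seq))` equals the likelihood ratio between the binding-site and background models for `seq`, which is the content of the ratio (1).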

Now consider a sequence $\mathcal{S}$ of length L. In this case, the probability of generating the sequence, $P(\mathcal{S}|\theta)$, is obtained by summing over all possible hidden state configurations. Notice that we can uniquely denote a hidden state configuration by specifying the starting positions within the sequence of all the binding sites, {x1 . . . xn}. The hard-rod constraint means that the only allowed configurations are those where |xu – xv| ≥ l + 1 for all u ≠ v (the extra factor of 1 arises because $a_{l1} = 0$). Consequently, the probability of generating a sequence is given by summing over all possible hidden state configurations

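$$P(\mathcal{S}|\theta) = \sum_{n} \sum_{\{x_1 \ldots x_n\}} P(\mathcal{S}|\{x_1 \ldots x_n\}, \theta)\, P(\{x_1 \ldots x_n\}|\theta) \tag{4}$$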

where P({x1 . . . xn}|θ) is the probability of generating an allowed hidden state configuration, {x1, . . . , xn}, and we have factorized the probability using the fact that in an HMM, transition probabilities are independent of the observed output symbol. Furthermore, the ratio of P({x1 . . . xn}|θ) to the probability of generating a hidden-state configuration with no binding sites, $P(\emptyset|\theta)$, is just

$$\frac{P(\{x_1 \ldots x_n\}|\theta)}{P(\emptyset|\theta)} = z^n \tag{5}$$

with the ‘fugacity’, z, given by

$$z = \frac{a_{01}}{(1 - a_{01})^{l+1}} \tag{6}$$

and μ = log z the chemical potential. Combining (1), (5), and (4) yields

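$$P(\mathcal{S}|\theta) = C(\mathcal{S}, \theta)\, \mathcal{Z}(\mathcal{S}, \theta) \tag{7}$$

with

$$C(\mathcal{S}, \theta) = P(\mathcal{S}|\emptyset, \theta)\, P(\emptyset|\theta) \tag{8}$$

in which ∅ denotes the hidden-state configuration with no binding sites, and

$$\mathcal{Z}(\mathcal{S}, \theta) = \sum_{n} \sum_{\{x_1 \ldots x_n\}} z^n \exp\Big(-\sum_{i=1}^{n} E(x_i)\Big) \tag{9}$$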

where E(xi) is the binding energy, (1), for a sub-sequence of length l starting at position xi of $\mathcal{S}$.

Notice that $\mathcal{Z}$ is the grand canonical partition function for a classical fluid of hard rods in an external potential [8]. The sequence-dependent PWM ε acts as an arbitrary external potential, and the switching rate a01 sets the chemical potential for binding. Thus, up to a multiplicative factor that is independent of the emission probabilities for binding sites, an HMM is mathematically equivalent to a thermodynamic model of hard rods. Importantly, the amount of training data, L, plays the role of system size. Furthermore, the negative log-likelihood, $-\log P(\mathcal{S}|\theta)$, is, up to a factor of L, just the pressure of the gas of hard rods [8]. In what follows, we exploit the relationship between system size and the quantity of training data to use insights from finite-size scaling to better understand how much data one needs to learn small differences. The relationship between HMMs and the statistical mechanics of hard rods is summarized in Table 1.

Table 1.

Relationship between HMMs and the statistical mechanics of hard-rods

| | HMMs | Hard-rods |
|---|---|---|
| L | Size of training data | System size |
| | Nucleotide sequence | Disorder |
| bj(α) | Symbol Probability | Binding Energy |
| aij | Switching rates | Fugacity |
| P(S\|θ) | Probability | Partition Function |
| log P(S\|θ) | Log-likelihood | Pressure |
| [I(θ)]ij | Fisher Information | Correlation Functions |
| | Dynamic Prog. | Transfer Matrices |
| | Expectation Maximization | Variational Methods |
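The correspondence between dynamic programming and transfer matrices can be made concrete with a short recursion for the hard-rod grand canonical partition function. The sketch below is illustrative rather than the authors' implementation: it assumes free boundaries, takes an array `E` of binding energies indexed by rod start position, and sums $z^n e^{-\sum_i E(x_i)}$ over all placements in which consecutive start positions differ by at least l + 1. The brute-force enumerator is included only as a cross-check on small systems; all function names are hypothetical.

```python
import itertools
import numpy as np

def hard_rod_Z(E, z, l):
    """Partition function via a left-to-right recursion (cost linear in L).

    E[x] is the binding energy of a rod starting at site x (0-based),
    for x = 0 .. L - l, so the system has L = len(E) + l - 1 sites.
    """
    w = z * np.exp(-np.asarray(E, dtype=float))
    L = len(w) + l - 1
    Z = np.ones(L + 1)               # Z[i]: partition function of the first i sites
    for i in range(1, L + 1):
        Z[i] = Z[i - 1]              # last site is background
        if i >= l:                   # or a rod covers the last l sites
            x = i - l                # 0-based start of that rod
            # the site just before the rod must be background (no touching)
            Z[i] += w[x] * Z[max(x - 1, 0)]
    return Z[L]

def hard_rod_Z_bruteforce(E, z, l):
    """Same sum by explicit enumeration; exponential cost, for checks only."""
    total = 0.0
    for n in range(len(E) + 1):
        for xs in itertools.combinations(range(len(E)), n):
            if all(b - a >= l + 1 for a, b in zip(xs, xs[1:])):
                total += z ** n * np.exp(-sum(E[x] for x in xs))
    return total
```

The recursion touches each site once, which is the sense in which transfer-matrix methods and dynamic programming are two names for the same computation here.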

2.2 HMMs, Position-Weight Matrices, and Cutoffs

The matrix of parameters, εiα, defined in (3), is often referred to in bioinformatics as the Position Weight Matrix (PWM) [1, 2]. PWMs are the most commonly used bioinformatic method for discovering new binding sites. In PWM-based approaches, sequences, S, whose binding energies, E(S) = ε · S, are below some arbitrary threshold are considered binding sites. This points to a major shortcoming of PWM-based methods, namely the inability to learn a threshold directly from data. A major advantage of HMMs over PWM-only approaches is that HMMs learn both a PWM, ε, and a natural “cutoff” through the chemical potential μ = log z [6]. In terms of the corresponding hard-rod model, the probability, Pbs(S), for a sequence, S, to be a binding site takes the form of a Fermi function,

$$P_{bs}(S) = \frac{1}{1 + e^{E(S) - \mu}} \tag{10}$$

If one makes the reasonable assumption that a sequence S is a binding site if Pbs(S) > 1/2, we see that μ serves as a natural cut-off for binding site energies [6]. Thus, the switching probabilities aij of the HMM can be interpreted as providing a natural cut-off for binding energies through (6). This points to a natural advantage of HMMs over PWM-only approaches: one learns the threshold binding energy for determining whether a sequence is a binding site self-consistently from the data. Thus, though in practice binding sites are dilute in the DNA and hard-rod constraints can often be neglected, it is still beneficial to use the full HMM machinery for binding site discovery.
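The role of μ as a learned cutoff can be illustrated with a minimal sketch. It assumes the standard Fermi-function form $P_{bs}(S) = 1/(1 + e^{E(S) - \mu})$ with μ = log z, which is equivalent to $z e^{-E}/(1 + z e^{-E})$; the function names are hypothetical.

```python
import numpy as np

def p_binding_site(E, z):
    """Fermi function: probability that a sequence with energy E is a site."""
    mu = np.log(z)                   # chemical potential set by the fugacity
    return 1.0 / (1.0 + np.exp(E - mu))

def is_binding_site(E, z):
    """Classify as a binding site when P_bs > 1/2, i.e. when E < mu."""
    return bool(p_binding_site(E, z) > 0.5)
```

Because the decision `P_bs > 1/2` is the same as `E < log z`, a smaller switching rate (smaller z) automatically demands a lower binding energy before a sequence is called a site.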

3 Fisher Information & Learning with Finite Data

3.1 Fisher Information and Error-bars

In general, learning the parameters of an HMM from training data is a difficult task. Commonly, parameters of an HMM are chosen to maximize the likelihood of observed data, $\mathcal{S}$, through Maximum Likelihood Estimation (MLE), i.e. parameters are chosen so that

$$\hat{\theta} = \arg\max_{\theta} P(\mathcal{S}|\theta) \tag{11}$$

Finding the global maximum is an extremely difficult problem. However, one can often find a local maximum in parameter space, $\hat{\theta}$, using Expectation Maximization algorithms such as Baum-Welch [13]. In general, for any finite amount of training data, the learned parameters (even if they are a global maximum) will differ from the “true” parameters θT. The reason for this is that the probabilistic nature of HMMs leads to ‘finite size’ fluctuations so that the training data may not be representative of the data as a whole. These fluctuations are suppressed asymptotically as the training data size approaches infinity. For this reason, it is useful to have a measure of how well the learned parameters describe the data.

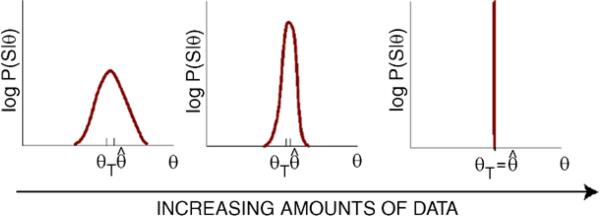

In the remainder of the paper, we assume there is enough training data to ensure that we can consider parameters in the neighborhood of the true parameters. The mapping between HMMs and the statistical mechanics of hard rods allows us to gain insight into the relationship between the amount of training data and the confidence in learned parameters. Recall that the log-likelihood per unit volume, $\log P(\mathcal{S}|\theta)/L$, is analogous to a pressure and the amount of training data is just the system size. From finite-size scaling in statistical mechanics, we know that as L → ∞ the log-likelihood/pressure becomes increasingly peaked around its true value (see Fig. 2). In addition, we can approximate the uncertainty we have about parameters by calculating the curvature of the log-likelihood, $\partial_A \partial_B \log P(\mathcal{S}|\theta)$, around its maximum, where ∂A denotes the derivative with respect to the A-th parameter.

Fig. 2.

The log-likelihood becomes peaked around true parameters with increasing data, analogous to finite-size scaling of pressure times volume (logarithm of the grand canonical partition function) in the corresponding statistical mechanical model

This intuition can be formalized for MLE using the Cramer-Rao bound, which relates the covariance of estimated parameters to the Fisher Information (FI) matrix, I(θ), defined by

| (12) |

where the expectation is taken over data sets generated with parameters θ (see [9] and Appendices A, B and C). An important property of the Fisher information is that it provides a bound for how well one can estimate the parameters of the likelihood function by placing a lower bound on the covariance of the estimated parameters. The Cramer-Rao bound relates the Fisher information to the expected value of an unbiased estimator, $\hat{\theta}$, and the covariance matrix of the estimator through the inequality

| (13) |

For MLE, the Cramer-Rao bound is asymptotically saturated in the limit of infinite data. Thus, we expect the inverse of the Fisher Information to be a good approximation for the covariance of the learned parameters when the amount of training data is large. In the limit of large data, the pressure, or equivalently the log-likelihood per unit length, “self-averages” and we can ignore the expectation value in (12). Thus, to leading order in L, one can approximate the covariance matrix as

| (14) |

in agreement with intuition from finite size scaling. The previous expression provides a way to put error bars on learned parameters. However, in practice we seldom have access to the “true” parameters θ that generated the observed sequences. Instead, we only know the parameters learned from the training data, $\hat{\theta}$. Thus, one often substitutes $\hat{\theta}$, our best guess for the parameters θ, in (14).

3.2 Fisher Information as Correlation Functions

It is worth noting that the expression above, in conjunction with the mapping to the hard-rod model, allows us to calculate error bars directly from data. In particular, we show below that the Fisher information can be interpreted as a correlation function and thus can be calculated using Transfer Matrix techniques. It is helpful to reframe the discussion above in the language of the statistical mechanics of disordered systems. Recall that, up to a normalization constant, $P(\mathcal{S}|\theta)$ in the corresponding hard-rod model is the grand canonical partition function, $\log P(\mathcal{S}|\theta)$ per unit length is the pressure, and the amount of training data, L, is just the size of the statistical mechanical system (see Table 1). When L is large, we expect that the sequence self-averages and the Fisher Information is related to the second derivative of the log-likelihood of the observed data,

| (15) |

Thus, aside from the normalization, the Fisher Information can be calculated from the second derivative of the pressure. From the fluctuation-dissipation theorem, we conclude that the Fisher information can be expressed in terms of connected correlation functions. In particular, each parameter θA (the A-th parameter) has a conjugate operator in the partition function, and the Fisher Information then takes the form

| (16) |

where and

| (17) |

Note that these correlation functions can be calculated directly from the data using Transfer Matrix techniques without resorting to more complicated methods.

In general, the background statistics of the DNA are known and the parameters one wishes to learn are the switching rates, aij , and symbol observation probabilities, bj(α). In practice, it is often more convenient to work with the fugacity, z, rather than the switching rate (see Table 1). The operator conjugate to the fugacity is n, the number of binding sites. Consequently,

| (18) |

Thus, the uncertainty in the switching rates is controlled by the fluctuations in binding site number, as is intuitively expected. One can also derive the conjugate operators for the emission probabilities bj(α) and/or the sequence dependent “binding energies” εjα (see Table 1) via a straightforward calculation (see calculations in sections below).

The expression (16) provides a computationally tractable way to calculate the Fisher Information and, consequently, the covariance matrix of the learned parameters. Not only can we learn the maximum likelihood estimate for parameters, we can also put ‘error bars’ on the MLE. We emphasize that in general, this requires powerful, computationally intensive techniques. However, by exploiting transfer matrix/dynamic programming techniques, the correlation functions (16) can be computed in polynomial time. This result highlights how thinking about HMMs in the language of statistical mechanics can lead to interesting new results.
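The link between curvature and fluctuations can be checked numerically on a small system. The sketch below verifies the standard identity $\partial^2 \log \mathcal{Z} / \partial \mu^2 = \langle n^2 \rangle - \langle n \rangle^2$ for the hard-rod model with μ = log z. For transparency it enumerates configurations by brute force rather than using transfer matrices, so it is only suitable for tiny systems; the function names, the finite-difference step `h`, and the free boundary conditions are assumptions of the example.

```python
import itertools
import numpy as np

def allowed_configs(n_starts, l):
    """All sets of rod start positions with spacing at least l + 1."""
    for n in range(n_starts + 1):
        for xs in itertools.combinations(range(n_starts), n):
            if all(b - a >= l + 1 for a, b in zip(xs, xs[1:])):
                yield xs

def config_weights(E, mu, l):
    """Boltzmann weights exp(mu * n - sum_i E[x_i]) and rod counts n."""
    cfgs = list(allowed_configs(len(E), l))
    w = np.array([np.exp(mu * len(xs) - sum(E[x] for x in xs)) for xs in cfgs])
    n = np.array([len(xs) for xs in cfgs])
    return w, n

def number_variance(E, mu, l):
    """Connected correlation <n^2> - <n>^2 of the number of bound rods."""
    w, n = config_weights(E, mu, l)
    p = w / w.sum()
    return float(p @ n**2 - (p @ n) ** 2)

def curvature_in_mu(E, mu, l, h=1e-3):
    """Central finite-difference estimate of d^2 log Z / d mu^2."""
    log_Z = lambda m: np.log(config_weights(E, m, l)[0].sum())
    return (log_Z(mu + h) - 2 * log_Z(mu) + log_Z(mu - h)) / h**2
```

The two functions agree up to the finite-difference error, illustrating why the uncertainty in the fugacity is governed by fluctuations in the number of binding sites.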

4 Analytic Expression Using a Virial Expansion

In general, calculating the log-likelihood analytically is intractable. However, we can exploit the fact that binding sites are relatively rare in DNA and perform a Virial expansion in the density of binding sites, ρ, or in the HMM language, the switching rate from background to binding site, a01. This is a good approximation in most cases. For example, for the NF-κB TF family, a01 was recently found to be of order 10^−2–10^−4 [6]. Thus, to leading order in ρ, we can ignore exclusion effects due to overlap between binding sites and write the partition function of the hard-rod model as

$$\mathcal{Z} \approx \prod_{\sigma} \left(1 + z\, e^{-E(S_\sigma)}\right) \tag{19}$$

where E(Sσ) is the binding energy, (2), for a hard-rod bound to a sequence, Sσ, of length l starting at position σ on the full DNA sequence $\mathcal{S}$. The corrections due to steric exclusion are higher order in density and thus can be ignored to leading order. Thus, the log-likelihood takes the simple form

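$$\log P(\mathcal{S}|\theta) \approx \sum_{\sigma} \log\left(1 + z\, e^{-E(S_\sigma)}\right) + \log C(\mathcal{S}, \theta) \tag{20}$$

where C(S, θ) is a normalization constant.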

Notice the log-likelihood (20) is a sum over the free-energies of single particles in potentials given by the observed DNA. For long sequences (large L), we expect on average N = La01 binding sites, and L – N background DNA sequences in the sum. In this case, we expect that the single particle energy self-averages and we can replace the sum by the average value of the single-particle free energy in either background DNA or a binding site. In particular, we expect that

| (21) |

where 〈H(S)〉bg and 〈H(S)〉bs are the expectation value of H(S) for sequences S of length l drawn from the background DNA and binding site distributions, respectively.

4.1 Maximum Likelihood Equations via the Virial Expansion

We now derive the Maximum-Likelihood equations (MLE) within the Virial expansion to the log-likelihood (20). Recall from (11) that the Maximum Likelihood estimator is the set of parameters most likely to generate the data. Thus, we can derive MLE by taking the first derivatives of the log-likelihood and setting the expressions to zero. Consider first the MLE for the binding energy matrix εiα. Since C(S, θ) is independent of the binding energy, we focus only on the first term of (20). Define the matrix Siα which is one if position i has base α and zero otherwise. The MLE can be derived by taking the first derivative

| (22) |

where λi are Lagrange multipliers that ensure proper normalization of probabilities. Explicitly taking the derivative, using probability conservation, and noticing that gives

| (23) |

where

$$f_{z,\varepsilon}(S) = \frac{1}{1 + z^{-1} e^{E(S)}} \tag{24}$$

is the Fermi-Dirac distribution function.

We can also derive the MLE corresponding to the fugacity. The fugacity depends explicitly on the normalization constant . Note that in HMMs, and ensures probability conservation. Since z = a01/(1 – a01)l+1, to leading order in a01, naively log . However, choosing this normalization explicitly violates probability conservation in the corresponding HMM because we have truncated the Virial expansion for the log-likelihood at first order and consequently allowed unphysical configurations. Since deriving the MLEs requires probability conservation, we impose by hand that the normalization has the z dependence,

| (25) |

With this normalization, the log-likelihood (20) becomes analogous to that for a mixture model where the sequences Sσ are drawn from background DNA or binding sites. With this choice of C(S, θ) the MLE equations can be calculated in a straightforward manner by taking the derivative of (20) with respect to z (see Appendix B) to get

| (26) |

4.2 Fisher Information via the Virial Expansion

One can also derive analytic expressions for the Fisher information within the Virial expansion. Generally, the background observation probabilities b0(α) = pα are known and the HMM parameters, θ, to be learned are the observation symbol probabilities in binding sites, bj(α), and the switching probability a01. Technically, it is easier to work with the corresponding parameters of the hard-rod model, the binding energies εiα and the fugacity, z. Note that probability conservation and (3) imply that only three of the εiα (α = A, C, G) are independent. A straightforward calculation (see Appendices A, B and C) yields

| (27) |

with

| (28) |

and for i ≠ j,

where, as above, fz,ε(S) is the Fermi-Dirac distribution function

$$f_{z,\varepsilon}(S) = \frac{1}{1 + z^{-1} e^{E(S)}} \tag{29}$$

One also has (see Appendix B)

| (30) |

with

| (31) |

and

| (32) |

with

| (33) |

The expressions (27), (30), and (33) depend only on εiα and thus can be used to calculate the expected error in learned parameters as a function of training data using only the Position Weight Matrix (PWM) of a transcription factor and a rough estimate of the switching probability a01 or equivalently the fugacity z. The explicit dependence on base T reflects the fact that not all the elements of the PWM are independent.

5 Scaling Relation for Learning with Finite Data

An important issue in statistical learning is how much data is needed to learn the parameters of a statistical model. The more statistically similar the binding sites are to background DNA (i.e the smaller the binding energy of a TF), the more data is required to learn the model parameters. The underlying reason for this is that the probabilistic nature of HMMs means that the training data may not be representative of the data as a whole. Intuitively, it is clear that in order to be able to effectively learn model parameters, the training data set should be large enough to ensure that “finite-size” fluctuations resulting from limited data cannot mask the statistical differences between binding sites and background DNA. To address this question, we must consider PWMs learned from strictly random data. As the size of the training set is increased, the finite-size fluctuations are tamed. Our approach is then, in a sense, complementary to looking for rare, high-scoring sequence alignments which become more likely as L increases in random data [14]. Of course, estimations based on random data neglect non-trivial structure of real sequences [15].

5.1 Maximum Likelihood and Jeffreys Priors

Within the Maximum Likelihood framework, the probability that one learns an ML estimator, $\hat{\theta}$, given that the data is generated by parameters θ, can be approximated by a Gaussian whose width is related to the Fisher information using a Jeffreys prior [16],

| (34) |

As expected, the width of the Gaussian is set by the covariance matrix for $\hat{\theta}$, and is related to the second derivative of the log-likelihood through (14). Since the log-likelihood, in analogy with the pressure (times volume) of the corresponding hard-rod gas, is an extensive quantity, an increase in the amount of training data L means a narrower distribution for the learned parameters (see Fig. 2). When L is large, the inverse of the Fisher information is well approximated by the Jacobian of the log-likelihood, (14). In general, the Jacobian is a positive semi-definite, symmetric square matrix of dimension n, with n the number of parameters needed to specify the position weight-matrix and fugacity for a single TF. In most cases, n is large and typically ranges from 24–45, with the exact number equal to three times the length of a binding site.

Label the A-th component of θ by θA. Then, the probability distribution (34) can also be used to derive a distribution for the Mahalanobis distance [17]

| (35) |

The Mahalanobis distance is a scale-invariant measure of how far the learned parameters are from the true parameters θ. Intuitively, it measures distances in units of standard deviations. Furthermore, the Mahalanobis distance scales linearly with the amount of data/system size L since it is proportional to the log-likelihood (i.e. pressure times volume). By changing variables to the eigenvectors of the Jacobian, normalizing by the eigenvalues, and integrating out angular variables, one can show that (34) yields the following distribution for r̂,

$$P(\hat{r}) \propto \hat{r}^{\,n-1} e^{-\hat{r}^2/2} \tag{36}$$

When n is large, we can perform a saddle-point approximation for r̂ around its maximum value,

$$\hat{r}^* = \sqrt{n-1} \approx \sqrt{n} \tag{37}$$

Writing r̂ = r̂* + δr̂, one has

$$P(\delta\hat{r}) \propto e^{-\delta\hat{r}^2} \tag{38}$$

Thus, for large n, in almost all cases the learned parameters will be peaked sharply around a distance, $\hat{r}^* \approx \sqrt{n}$, with a width of order 1. This result is a general property of large-dimensional Gaussians and will be used below.
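This concentration of the radius is easy to see numerically. The sketch below draws n-dimensional Gaussian vectors with unit covariance (which is what the learned parameters look like after rescaling by the eigenvalues) and returns their norms. It is a generic illustration of the large-dimensional Gaussian property, not a simulation of HMM learning; the function name and sample sizes are arbitrary choices.

```python
import numpy as np

def mahalanobis_radii(n, num_samples, rng):
    """Norms of n-dimensional standard Gaussian vectors (unit covariance)."""
    return np.linalg.norm(rng.standard_normal((num_samples, n)), axis=1)

rng = np.random.default_rng(0)
for n in (3, 30, 300):
    r = mahalanobis_radii(n, 20000, rng)
    # the mean grows like sqrt(n) while the spread stays of order 1
    print(n, r.mean(), np.sqrt(n), r.std())
```

For values of n in the range quoted above, the sample mean of the radius sits close to $\sqrt{n}$ while the standard deviation remains of order one, so nearly all learned parameter vectors lie in a thin shell.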

5.2 Scaling Relation for Learning with Finite Data

We now formulate a simple criterion for when there is enough data to learn the binding sites of a TF characterized by a PWM ε. We take as our null hypothesis that the data was generated entirely from background DNA (i.e. the true parameters are ε0 = 0 and z0 = z) and require enough data so that the probability of learning ε be negligible. In other words, we want to make sure that there is enough data so that there is almost no chance of learning ε for data generated entirely from background DNA, ε0 = 0. From (38), we know that for large n, with probability almost 1 due to finite size fluctuations, any learned $\hat{\theta}$ will lie a Mahalanobis distance, $\hat{r}^* \approx \sqrt{n}$, away from the true parameters. Thus, we require enough data so that

| (39) |

with r̃2(ε, z) defined by the first equality. An explicit calculation of the left hand side of (39) yields (see Appendices A, B and C)

| (40) |

where we have defined

| (41) |

Together, (39) and (40) define a criterion for how much data is needed to learn the binding sites of a TF with PWM (binding energy), ε, whose binding sites occur in background DNA with a fugacity z. Notice that (40) contains terms that scale as the square of the energy difference, indicating that it is much easier to learn binding sites with a few large differences than many small differences.

6 Discussion

In this paper, we exploited the mathematical equivalence between HMMs for TF binding and the “inverse” statistical mechanics of hard rods in a one-dimensional disordered potential to investigate learning in HMMs. This allowed us to derive a scaling principle relating the specificity (binding energy) of a TF to the minimum amount of training data necessary to learn its binding sites. Thus, we were led to consider a well-studied physics problem [8], the statistical mechanics of a one-dimensional gas of hard rods in an arbitrary external potential, from an entirely new perspective.

In this paper, we assumed that there was enough data so that we could focus on the neighborhood of a single maximum in the Maximum Likelihood problem. However, in principle, for very small amounts of data, the parameter landscape has the potential to be glassy and possess many local maxima of about equal likelihood. In our experience, however, this does not seem to be the case in practice for most TFs. In the future, it will be interesting to investigate the parameter landscape of HMMs in greater detail to understand when they exhibit glassy behavior.

The work presented here is part of a larger series of works that seeks to use methods from “inverse statistical mechanics” to study biological phenomena [18–21]. Inverse statistical mechanics inverts the usual logic of statistical mechanics where one starts with a microscopic Hamiltonian and calculates statistical properties such as correlation functions. In the inverse problem, the goal is to start from observed correlations and find the Hamiltonian from which they were most likely generated. In the context of binding site discovery, considering the inverse statistical problem allows us to ask and answer new and interesting questions about how much data one needs to learn the binding sites of a TF. In particular, it allows us to calculate error bars for learned parameters directly from data and derive a simple scaling relation between the amount of training data and the specificity of a TF encoded in its PWM.

Our understanding of how the size of the training data affects our ability to learn the parameters in inverse statistical mechanics is still in its infancy. It will be interesting to see if the analogy between finite-size scaling in the thermodynamics of disordered systems and learning in inverse statistical mechanics holds in other systems, or if it is particular to the problem considered here. More generally, it will be interesting to see whether methods from physics and statistical mechanics yield new insights into the large data sets now being generated in biology.

Acknowledgements

We would like to thank Amor Drawid and the Princeton Biophysics Theory group for useful discussion. This work was partially supported by NIH Grants K25GM086909 (to PM) and R01HG03470 (to AMS). DS was partially supported by DARPA grant HR0011-05-1-0057 and NSF grant PHY-0957573. PM would also like to thank the Aspen Center for Physics where part of this work was completed.

Appendix A: Covariance Matrix and Fisher Information

The Fisher information is a commonly employed measure of how well one learns the parameters, θ, of a probabilistic model from training data, $\mathcal{D}$. In our context, $\mathcal{D}$ is the observed DNA sequence and θ are the parameters of the HMM for generating DNA sequences. The Fisher information matrix, $\mathcal{J}_{AB}(\theta)$, is given in terms of the log-likelihood, $\mathcal{L}(\mathcal{D}|\theta) = \log P(\mathcal{D}|\theta)$, by

$$\mathcal{J}_{AB}(\theta) = E_\theta\left[\partial_A \mathcal{L}(\mathcal{D}|\theta)\,\partial_B \mathcal{L}(\mathcal{D}|\theta)\right] \tag{A.1}$$

where Eθ denotes the expectation value averaged over different data sets generated using the parameters θ and ∂A denotes the partial derivative with respect to the A-th component of θ.

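The Fisher information can also be expressed as a second derivative of the log-likelihood function. This follows from differentiating both sides of the normalization condition

$$\sum_{\mathcal{D}} e^{\mathcal{L}(\mathcal{D}|\theta)} = 1 \tag{A.2}$$

with respect to θA and θB, which yields the expression

$$E_\theta\left[\partial_A \partial_B \mathcal{L}(\mathcal{D}|\theta)\right] + E_\theta\left[\partial_A \mathcal{L}(\mathcal{D}|\theta)\,\partial_B \mathcal{L}(\mathcal{D}|\theta)\right] = 0 \tag{A.3}$$

Comparing with (A.1), we see that the Fisher information can also be expressed as

$$\mathcal{J}_{AB}(\theta) = -E_\theta\left[\partial_A \partial_B \mathcal{L}(\mathcal{D}|\theta)\right] \tag{A.4}$$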
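The equivalence between the first-derivative form (A.1) and the second-derivative form (A.4) can be checked exactly on a toy model. The sketch below is not the HMM of the main text: it assumes a single parameter p, the probability of one base in an i.i.d. sequence of n positions, and sums over all possible data sets (all counts k). The function name `fisher_two_ways` and the values of p and n are illustrative choices.

```python
import math

def fisher_two_ways(p, n):
    """Exact Fisher information for n i.i.d. Bernoulli(p) draws, computed two ways.

    The log-likelihood of observing k successes is
    L = k*log(p) + (n - k)*log(1 - p), up to a p-independent constant.
    """
    score_sq = 0.0  # E[(dL/dp)^2], the first-derivative form
    neg_hess = 0.0  # -E[d^2 L/dp^2], the second-derivative form
    for k in range(n + 1):
        prob = math.comb(n, k) * p**k * (1 - p) ** (n - k)
        score = k / p - (n - k) / (1 - p)
        hess = -k / p**2 - (n - k) / (1 - p) ** 2
        score_sq += prob * score**2
        neg_hess -= prob * hess
    return score_sq, neg_hess

score_sq, neg_hess = fisher_two_ways(p=0.3, n=50)
closed_form = 50 / (0.3 * 0.7)  # known result n / (p (1 - p)) for this toy model
print(score_sq, neg_hess, closed_form)
```

Both sums agree with the closed form n/(p(1 − p)) up to floating-point error, which is the content of (A.3) for this toy model.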

An important property of the Fisher information is that it provides a bound for how well one can estimate the parameters of the likelihood function. As discussed in the main text, the parameters of an HMM can be estimated from an observed sequence, $\mathcal{D}$, using a Maximum Likelihood Estimator (MLE), $\hat\theta(\mathcal{D})$, defined as

$$\hat\theta(\mathcal{D}) = \arg\max_{\theta}\, \mathcal{L}(\mathcal{D}|\theta) \tag{A.5}$$

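The Cramér-Rao bound relates the Fisher information to the expected value of the estimator,

$$\psi(\theta) = E_\theta\left[\hat\theta(\mathcal{D})\right] \tag{A.6}$$

and the covariance matrix of the estimator,

$$\mathrm{Cov}_\theta(\hat\theta)_{AB} = E_\theta\left[\left(\hat\theta_A - \psi_A(\theta)\right)\left(\hat\theta_B - \psi_B(\theta)\right)\right]$$

For a multidimensional estimator, the Cramér-Rao bound is given by

$$\mathrm{Cov}_\theta(\hat\theta) \ge \frac{\partial \psi(\theta)}{\partial \theta}\,\mathcal{J}^{-1}(\theta)\,\left(\frac{\partial \psi(\theta)}{\partial \theta}\right)^{T} \tag{A.7}$$

where the inequality is understood in the matrix sense: the difference between the two sides is positive semidefinite.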

To gain intuition, it is worth considering the special case where the estimator is unbiased, $E_\theta[\hat\theta] = \theta$, in which case the Cramér-Rao bound simply reads

$$\mathrm{Cov}_\theta(\hat\theta) \ge \mathcal{J}^{-1}(\theta) \tag{A.8}$$

Thus, the Fisher information gives a fundamental bound on how well one can learn the parameters of our HMM.

For MLEs, the Cramér-Rao bound is asymptotically saturated in the limit of infinite data. Thus, we expect the inverse of the Fisher information to be a good approximation for the covariance of the estimator when the length, L, of the DNA sequences, S, from which we learn parameters is large. In this case,

$$\mathrm{Cov}_\theta(\hat\theta) \approx \mathcal{J}^{-1}(\theta) \tag{A.9}$$

The previous expressions provide a way to put error bars on learned parameters. However, in practice we never have access to the “true” parameters θ that generated the observed sequences. Instead, we only know the parameters learned from the training data, $\hat\theta$. Thus, we make the additional approximation

$$\mathrm{Cov}_\theta(\hat\theta) \approx \mathcal{J}^{-1}(\hat\theta) \tag{A.10}$$
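To make the error-bar recipe concrete, the following sketch compares the empirical variance of an MLE with the inverse Fisher information, and then forms a plug-in error bar from a single data set in the spirit of (A.10). It is a toy model, not the HMM of the main text: it assumes one parameter, the probability `p_true` of one base in an i.i.d. sequence of length L, for which the MLE is the observed frequency and the Fisher information is L/(p(1 − p)). The names, the seed, and the numerical values are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is reproducible
p_true = 0.3                    # assumed "true" parameter of the toy model
n_trials = 20000                # number of independent synthetic data sets

def mle_variance(L):
    """Empirical variance of the MLE p_hat = count / L over many data sets."""
    counts = rng.binomial(L, p_true, size=n_trials)
    return (counts / L).var()

for L in (100, 1000, 10000):
    inv_fisher = p_true * (1 - p_true) / L  # inverse Fisher information
    print(L, mle_variance(L), inv_fisher)

# Plug-in error bar from one data set: evaluate the inverse Fisher
# information at the learned parameter instead of the unknown true one.
L = 1000
p_hat = rng.binomial(L, p_true) / L
error_bar = np.sqrt(p_hat * (1 - p_hat) / L)
print(p_hat, error_bar)
```

In this toy case the estimator is unbiased and the bound holds with equality at every L, so the two printed columns agree up to sampling noise. For the HMM, agreement is expected only when L is large.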

Appendix B: Calculation of Fisher Information using a Virial Expansion

B.1 PWM Dependent Elements

We now calculate the Fisher information for an HMM with binding sites drawn from a single binding site distribution, using the virial expansion. We are interested in the Fisher information for the parameters εiα (the energies in the corresponding Position Weight Matrix). An important complication is that not all the εiα are independent. In particular, we have

| (B.1) |

Thus, there are only three independent parameters at each position in the binding site. Let us choose εiT to depend on the other three energies. Rearranging the equation above, one has that

| (B.2) |

Taking the first derivative of (20) with respect to εiα with α ≠ T yields

| (B.3) |

Taking the second derivative yields

| (B.4) |

We can simplify the expressions further by noting

| (B.5) |

| (B.6) |

Plugging in these expressions into (B.4) yields

| (B.7) |

The Fisher information is obtained in the usual way from

| (B.8) |

B.1.1 Simplified Equations for i = j

When i = j, we can simplify the equations above using the ML equations (23) and noting that SiαSiβ = δαβSiα and SiαSiT = 0. Using the expressions above yields

| (B.9) |

From the MLE (23), we know that

| (B.10) |

Plugging this into the equations above yields

| (B.11) |

This is the operator Aii in the main text.

B.1.2 Simplified Equations for i ≠ j

In this case, we know that

| (B.12) |

This is the operator Aij in the main text.

B.2 Fugacity Dependent Elements

We start by calculating the elements . As before, within the virial expansion

| (B.13) |

Thus, we have

| (B.14) |

Taking the second derivative with respect to εiα yields

| (B.15) |

Furthermore, one has

| (B.16) |

B.3 Relating Expressions to Those in Main Text

The equations in the main text follow by noting that the sum over σ can be replaced by expectation values over sequences Sσ drawn from the binding site distribution and from background DNA. For an arbitrary function, H(S), of a sequence S of length l,

| (B.17) |

| (B.18) |

with N the expected number of binding sites in a sequence of length L, and where 〈H(S)〉bg and 〈H(S)〉bs are the expectation values of H(S) for sequences S of length l drawn from the background DNA and binding site distributions, respectively. Combining (B.18) and (14) with the expressions above yields the equations in the main text.

Appendix C: Derivation of the Scaling Relationship

To derive the scaling relationship, we must calculate the quantity

| (C.1) |

where all the second derivatives are evaluated at ε = 0. Plugging (B.7), (B.15), and (B.16) into the expression above, one has

| (C.2) |

where we have defined

| (C.3) |

with γ = 1, 2 and used the fact that piT = pT when ε = 0. When L is large, we can replace the sum over σ by an expectation value in background DNA,

| (C.4) |

Furthermore,

| (C.5) |

Plugging these expressions into (C.2), noting that the third term averages to zero, and simplifying yields

| (C.6) |

Finally, it is often helpful to define a rescaled version of r2(z, ε) that makes the dependence on L explicit,

| (C.7) |

Contributor Information

Pankaj Mehta, Dept. of Physics, Boston University, Boston, MA, USA pankajm@bu.edu.

David J. Schwab, Dept. of Molecular Biology and Lewis-Sigler Institute, Princeton University, Princeton, NJ, USA dschwab@princeton.edu

Anirvan M. Sengupta, BioMAPS and Dept. of Physics, Rutgers University, Piscataway, NJ, USA anirvans@physics.rutgers.edu

References

- 1. Berg OG, von Hippel P. Trends Biochem. Sci. 1988;13:207. doi: 10.1016/0968-0004(88)90085-0.

- 2. Stormo G, Fields D. Trends Biochem. Sci. 1998;23:109. doi: 10.1016/s0968-0004(98)01187-6.

- 3. Djordjevic M, Sengupta AM, Shraiman BI. Genome Res. 2003;13:2381. doi: 10.1101/gr.1271603.

- 4. Rajewsky N, Vergassola M, Gaul U, Siggia E. BMC Bioinform. 2002;3. doi: 10.1186/1471-2105-3-30.

- 5. Sinha S, van Nimwegen E, Siggia ED. Bioinformatics. 2003;19:292. doi: 10.1093/bioinformatics/btg1040.

- 6. Drawid A, Gupta N, Nagaraj V, Gelinas C, Sengupta A. BMC Bioinform. 2009;10:208. doi: 10.1186/1471-2105-10-208.

- 7. Kinney JB, Tkačik G, Callan CG. Proc. Natl. Acad. Sci. USA. 2007;104:501. doi: 10.1073/pnas.0609908104.

- 8. Percus J. J. Stat. Phys. 1976;15.

- 9. Bishop C. Pattern Recognition and Machine Learning. 2006.

- 10. Rabiner L. Proc. IEEE. 1989;257.

- 11. Schwab DJ, Bruinsma R, Rudnick J, Widom J. Phys. Rev. Lett. 2008;100:228105. doi: 10.1103/PhysRevLett.100.228105.

- 12. Morozov A, Fortney K, Gaykalova DA, Studitsky V, Widom J, Siggia E. 2008. arXiv:0805.4017.

- 13. Baum LE, Petrie T, Soules G, Weiss N. Ann. Math. Stat. 1970;41:164.

- 14. Olsen R, Bundschuh R, Hwa T. Proceedings of the Seventh International Conference on Intelligent Systems for Molecular Biology. 1999. p. 211.

- 15. Tanay A, Siggia E. Genome Biol. 2008;9:37. doi: 10.1186/gb-2008-9-2-r37.

- 16. Jeffreys H. Proc. R. Soc. Lond. Ser. A, Math. Phys. Sci. 1946;186:453. doi: 10.1098/rspa.1946.0056.

- 17. Mahalanobis P. Proc. Natl. Inst. Sci. India. 1936;2:49–55.

- 18. Mora T, Walczak A, Bialek W, Callan CG. Proc. Natl. Acad. Sci. USA. 2010;107:5405. doi: 10.1073/pnas.1001705107.

- 19. Schneidman E, Berry M, Segev R, Bialek W. Nature. 2006;440:1007. doi: 10.1038/nature04701.

- 20. Halabi N, Rivoire O, Leibler S, Ranganathan R. Cell. 2009;138:774. doi: 10.1016/j.cell.2009.07.038.

- 21. Weigt M, White R, Szurmant H, Hoch J, Hwa T. Proc. Natl. Acad. Sci. USA. 2009;106:67. doi: 10.1073/pnas.0805923106.