Abstract

Thirty-five U.S. states and territories have implemented policies requiring insurers to cover patient care costs in the context of cancer clinical trials; however, evidence of the effectiveness of these policies is limited. This study assesses the impact of state insurance mandates on clinical trial accrual among community-based practices participating in the NCI Community Clinical Oncology Program (CCOP), which enrolls approximately one-third of all NCI cancer trial participants. We analyzed CCOP clinical trial enrollment over 17 years in 37 states, 14 of which implemented coverage policies, using fixed effects least squares regression to estimate the effect of state policies on trial accrual among community providers, controlling for state and CCOP differences in capacity to recruit. Of 91 CCOPs active during this time, 28 were directly affected by coverage mandates. Average recruitment per CCOP between 1991 and 2007 was 95.1 participants per year (SD = 55.8). CCOPs in states with a mandate recruited similar numbers of participants compared to states without a mandate. In multivariable analysis, treatment trial accrual among CCOPs in states that had implemented a coverage mandate was not statistically different from accrual among CCOPs in states that did not implement a coverage mandate (β = 2.95, p = 0.681). State mandates did not appear to confer a benefit in terms of CCOP clinical trial accrual. State policies vary in strength, which may have diluted their effect on accrual. Nonetheless, policy mandates alone may not have a meaningful impact on participation in clinical trials in these states.

Keywords: state policy, clinical trial enrollment, Community Clinical Oncology Program

Introduction

Although one third of U.S. adults say they would participate in cancer clinical trials given the opportunity, only 2.5 to 5% of eligible adults are successfully recruited [1-2]. The consequences are serious: low recruitment limits generalizability of trial results, threatens trial completion, and, ultimately, delays the development and availability of potentially beneficial new therapies. Among multiple patient, trial, provider, and health system characteristics associated with low participation [3], one long-cited barrier to enrollment is insurance coverage: Eight to 20% of eligible patients decline to participate in trials because of potential denial of coverage [4-6]. Although study sponsors typically cover the cost of experimental therapy and patients incur treatment costs regardless of trial participation, insurers are thought to refuse coverage for any treatment provided in the context of a clinical trial [7-10]. Consequently, potential participants may not be willing to participate in trials when reimbursement for their care is jeopardized.

Since 1995, advocates have successfully lobbied for policy changes that ensure care coverage, culminating in the inclusion of a national mandate in the Patient Protection and Affordable Care Act, slated for implementation in 2014 [11-12]. Currently, 35 states and territories and the Centers for Medicare & Medicaid Services (CMS) have active policies mandating coverage of non-research costs for covered patients [13]. The impact of these policies on trial participation has not been demonstrated definitively, with studies of federal and state policies showing mixed results [14-18].

Results of state policy studies have been most promising. One single-state, uncontrolled comparison of participation before and after policy enactment showed an increase in trial participants and a simultaneous decrease (to zero) in the number of decliners citing insurance reimbursement fears as the reason for non-participation [17]. Another single-institution study found more frequent insurance denials among eligible participants in a state without an insurance mandate than in two states with mandates [19]. A third early assessment found an increase in accrual among NCI-sponsored phase II (but not phase III) trials in four states that had enacted mandates, compared to states with no such policies [18]. Since these earlier studies, state mandates have more than doubled. However, no studies to date have examined the effects of the mandates on enrollment in the community, where the majority of patients receive their cancer care [20].

The Community Clinical Oncology Program (CCOP) is a network of mostly non-academic hospitals and oncology practices throughout the U.S., funded by the National Cancer Institute (NCI) to provide infrastructure for community-based trial recruitment. Approximately one-third of research participants recruited to NCI trials are recruited by CCOP sites [21-22]. Between 1991 and 2007, 98 CCOPs were funded in 39 states and territories. This study analyzes a 17-year, longitudinal multi-state dataset of NCI-sponsored cancer clinical trial enrollment to examine the effect of state policies mandating clinical trial coverage on treatment trial accrual among community practices responsible for a substantial portion of recruitment to NCI cancer trials.

Methods

This analysis uses state-level fixed effects least squares estimation to determine the impact of state clinical trial coverage mandates on treatment trial accrual among CCOPs, while controlling for multiple time-varying and time-invariant factors associated with trial enrollment. This approach, with fixed effect estimation and examination of a long baseline period, allows for better isolation of the policy effect than traditional methods.
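As a reading aid, the estimating equation implied by this description can be sketched as follows. The notation is an assumption introduced here and does not appear in the original manuscript: $c$ indexes CCOPs, $s$ states, and $t$ calendar years.

$$
Y_{cst} = \beta\,\mathrm{Mandate}_{st} + \mathbf{X}_{cst}'\boldsymbol{\gamma} + \alpha_{s} + \tau_{t} + \varepsilon_{cst}
$$

Here $Y_{cst}$ is annual treatment trial accrual, $\mathrm{Mandate}_{st}$ is the binary policy indicator, $\mathbf{X}_{cst}$ collects the CCOP-level controls, $\alpha_{s}$ is the state fixed effect that absorbs time-invariant state differences, $\tau_{t}$ is a calendar-year effect, and $\varepsilon_{cst}$ is the error term. Under this sketch, $\beta$ is the policy effect of interest.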

Data

The analytic dataset is derived from a secondary, unbalanced panel dataset created to evaluate the performance and sustainability of the NCI CCOP program. The study dataset represents the full population of CCOPs and includes CCOP-level characteristics and state-level factors describing the CCOP environment and associated with CCOP performance. Publicly available state policy information was abstracted from the NCI State Cancer Legislative Database, supplemented with details from the American Cancer Society and published research [10, 13, 23-24]. In 2007, new Institute of Medicine cancer clinical trial restructuring recommendations went into effect, limiting the number of trials offered, so data is restricted to the 17 years of data available prior to 2008. One CCOP, Southeast Cancer Control Consortium, was excluded from analysis because it is made up of oncology practices in multiple states and we were unable to attribute accrual to its individual state-specific components. After this exclusion, 97 unique CCOPs were represented during the years 1991-2007. CCOP enrollment occurred in waves, so 1079 CCOP-year observations were available for analysis.

Measures

CCOPs recruit patients to both cancer treatment and cancer prevention and control protocols. However, state policies mandate coverage of patient care costs and would be unlikely to affect prevention efforts occurring outside the context of the healthcare system [25]. Therefore, this analysis focuses on treatment trial accrual, measured as a count of the number of patients each CCOP recruited to treatment clinical trials each year.

The explanatory variable of interest is a binary variable representing the presence of a cancer clinical trial policy in a state in each year following its implementation. Also included in the consideration of state mandates are any statewide formal voluntary agreements among insurers to cover care in the context of cancer clinical trials. Four states have such agreements: Georgia, Michigan, New Jersey, and Ohio [13]. We used implementation date, rather than passage date, as the date of interest. For policies implemented January 1 through June 30 of any year, we considered the policy implemented in that year. For policies implemented July 1 through December 31, we considered the policy implemented in the next calendar year, providing opportunity for behavior change to take effect.
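The timing rule described above can be illustrated with a minimal Python sketch. The function names are hypothetical and are not taken from the study's Stata code, and the sketch does not handle a mandate that later expires (as occurred in Illinois).

```python
from datetime import date
from typing import Optional


def effective_policy_year(implementation_date: date) -> int:
    """Calendar year in which a mandate is first counted as implemented."""
    # January 1 through June 30: counted in the same calendar year.
    if implementation_date.month <= 6:
        return implementation_date.year
    # July 1 through December 31: counted from the next calendar year.
    return implementation_date.year + 1


def mandate_indicator(observation_year: int,
                      implementation_date: Optional[date]) -> int:
    """Binary exposure: 1 if the mandate is counted as in force that year."""
    if implementation_date is None:  # state never implemented a policy
        return 0
    return int(observation_year >= effective_policy_year(implementation_date))
```

Under this rule, a policy implemented on July 1, 2001 would first be coded as present in the 2002 CCOP-year observation.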

Clinical trial recruitment is expected to change over time for factors unrelated to state policies or the CCOP characteristics included in the model. Thus, a set of dummy variables indicating calendar years is included to capture changes in accrual over time, such as changes in national trial availability and the national Medicare Coverage Determination signed by President Bill Clinton in 2001 and implemented across all states [24], among other global trends.

Other control variables include CCOP characteristics representing the recruiting potential of each organization. We controlled for a program’s participation as a Minority-based CCOP (MBCCOP). MBCCOPs are specially organized programs designed to enhance minority participation in cancer research [26]. While successful in their mission, these CCOPs face distinct recruitment challenges, including both the environments in which they recruit as well as the populations they target, who may be more reluctant to participate in research [26-27].

The remaining control variables account for each CCOP’s size and productive capacity. Each CCOP is made up of multiple hospitals and community oncology practices. The number of sites from which a CCOP draws directly influences its accrual. Likewise, the number of physicians who recruit for each CCOP is an indicator of the size and clinical diversity of the patient base from which a CCOP draws its clinical trial participants [28]. We assessed appropriate functional form of the continuous variables by comparing the adjusted R² of models with alternative forms to the models with the original term. A quadratic form was shown to be the best characterization of the number of physicians accruing patients. A three-knot spline of four equal percentiles was chosen for the number of components.
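One way to read the three-knot spline is as a piecewise-linear spline that splits the component count into four segment variables, one per quartile range. The sketch below assumes that reading; the knot values are hypothetical because the manuscript does not report them.

```python
def linear_spline_segments(x, knots):
    """Split x into piecewise-linear segment variables at ascending knots.

    With three knots this returns four variables whose sum equals x.
    """
    # First segment: the part of x at or below the first knot.
    segments = [min(x, knots[0])]
    # Middle segments: the part of x that falls between consecutive knots.
    for lower, upper in zip(knots, knots[1:]):
        segments.append(max(min(x, upper), lower) - lower)
    # Last segment: the part of x above the final knot.
    segments.append(max(x, knots[-1]) - knots[-1])
    return segments


# Hypothetical knots at 3, 5, and 7 components.
example = linear_spline_segments(6, [3, 5, 7])
```

Because the segments always sum to the original count, the segment means should sum to the mean number of components. The four component means reported in Table 1 (2.67, 1.12, 0.67, 1.02) sum to 5.48, which is consistent with this interpretation.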

Statistical Analysis

We compared pooled ordinary least squares, state fixed effects and random effects models for appropriate model fit. Specification tests, including an F-test of the joint significance of the fixed effects dummy intercepts, a Breusch-Pagan Lagrangian Multiplier test, and the Hausman test, indicated that the fixed effect model was most appropriate [29]. We used complete case analysis to address missing data.
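To illustrate what the state fixed effects estimator does, the following is a minimal sketch of the within (demeaning) transformation with a single regressor. The study's model includes many covariates and was fit in Stata, so this is only an illustration; the function name and the toy data are hypothetical.

```python
from collections import defaultdict


def within_estimator(states, x, y):
    """Slope from a one-regressor state fixed effects (within) regression."""
    # Accumulate per-state sums of x and y and the observation count.
    totals = defaultdict(lambda: [0.0, 0.0, 0])
    for s, xi, yi in zip(states, x, y):
        totals[s][0] += xi
        totals[s][1] += yi
        totals[s][2] += 1
    # Demean x and y within each state, then run OLS on the deviations.
    numerator = denominator = 0.0
    for s, xi, yi in zip(states, x, y):
        sum_x, sum_y, n = totals[s]
        x_dev = xi - sum_x / n
        y_dev = yi - sum_y / n
        numerator += x_dev * y_dev
        denominator += x_dev ** 2
    return numerator / denominator


# Hypothetical toy panel: two states with different baseline accrual,
# each gaining 3 participants once the mandate indicator switches on.
states = ["A", "A", "A", "B", "B", "B"]
mandate = [0, 0, 1, 0, 1, 1]
accrual = [50, 50, 53, 90, 93, 93]
slope = within_estimator(states, mandate, accrual)
```

In the toy data the estimator recovers the within-state change of 3 (up to floating-point error), even though state B's baseline accrual is far higher than state A's. This is the sense in which fixed effects remove time-invariant state differences.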

Sensitivity Analyses

We made several assumptions in specifying our model, which required explicit review and assessment. Several CCOPs recruit participants across state lines, due to either geographic proximity (e.g., the Kansas City, MO site lies on the border of Kansas and Missouri) or contractual arrangement. We reviewed each of these sites to assess whether they potentially recruited patients from states that had discordant policies from the state in which the CCOP was headquartered. For three of the sites, the policies were the same in the state in which the CCOP was headquartered and the state in which they recruited small numbers of patients: for each pair, both states had no cancer trial mandate. For two sites, state policies differed in the two jurisdictions from which participants were potentially drawn.

In addition, pediatric recruitment is known to be quite different from adult recruitment. For example, approximately half of children ages 5 to 9 are recruited to cancer trials compared to 1% of adults ages 75 to 79 [2]. We identified one CCOP that recruited exclusively for pediatric trials.

The requirements and breadth of policies vary among states [24], thus we also assessed a measure of policy strength by assigning a single score to each state, which assigned one point for each phase of trial covered (I-III) and one point for each payer type required to provide coverage, as these aspects should have most directly affected treatment trial accrual.
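The additive score described above can be sketched as follows. This is an assumed reading of the scoring rule: the function name and the payer-type labels are hypothetical, and the manuscript does not list the payer categories it used.

```python
def policy_strength(phases_covered, payer_types_covered):
    """One point per covered trial phase (I-III) plus one per payer type."""
    # Only phases I through III earn points under the rule described above.
    scored_phases = {"I", "II", "III"}
    phase_points = len(scored_phases & set(phases_covered))
    # Each distinct payer type required to provide coverage earns one point.
    payer_points = len(set(payer_types_covered))
    return phase_points + payer_points


# Hypothetical state: covers phases II-IV and binds two payer types.
score = policy_strength({"II", "III", "IV"}, {"private", "medicaid"})
```

In this hypothetical example the score is 4: two points for phases II and III (phase IV is not scored under this reading) and two points for the payer types.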

We also were concerned that clinical trial recruitment in more recent decades may be fundamentally different than that in the 1990s due to changes in healthcare payment [22]. Thus, we conducted a Chow test for structural differences in treatment accrual and a sensitivity analysis restricted to the years 2001 through 2007 to assess whether our model was robust, finding that the two periods were structurally similar and could be pooled. We also thought it important to assess potential bias induced by not controlling for varying levels of uninsured residents among the states.
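For reference, the textbook form of the Chow test statistic is shown below. The manuscript does not state which variant was used or exactly where the sample was split, so the notation here is an assumption: $RSS_{P}$ is the residual sum of squares from the pooled model, $RSS_{1}$ and $RSS_{2}$ are those from the models fit separately to the two periods, $k$ is the number of estimated parameters, and $n_{1}$ and $n_{2}$ are the period sample sizes.

$$
F = \frac{\left(RSS_{P} - (RSS_{1} + RSS_{2})\right)/k}{(RSS_{1} + RSS_{2})/(n_{1} + n_{2} - 2k)}
$$

A small $F$ (failure to reject equality of coefficients across periods) supports pooling the two periods, which is the conclusion reported above.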

Accordingly, we conducted a number of sensitivity analyses, repeating analyses after: 1) removing CCOPs that potentially recruit participants from states with discordant policies; 2) removing CCOPs that recruit only pediatric patients; 3) substituting a policy strength score for the binary indicator of mandate implementation; 4) restricting data to 2001-2007; and 5) including the proportion of uninsured patients in the metropolitan statistical area surrounding each CCOP in this more recent time period.

Two-sided tests of statistical significance were used to assess the significance of regression coefficients. Heteroskedasticity of the model was indicated by the Breusch-Pagan test for heteroskedasticity [29]. Thus, Huber-White cluster robust standard errors are used. All analyses were conducted in Stata 11.2 [30]. The University of North Carolina, Chapel Hill Institutional Review Board deemed the study exempt from review.

Results

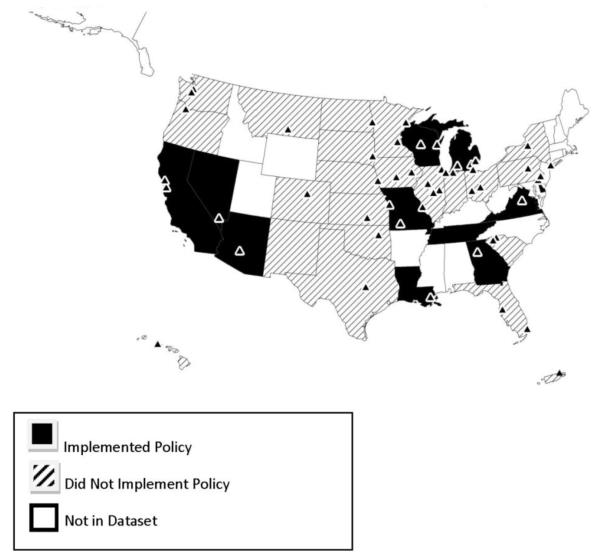

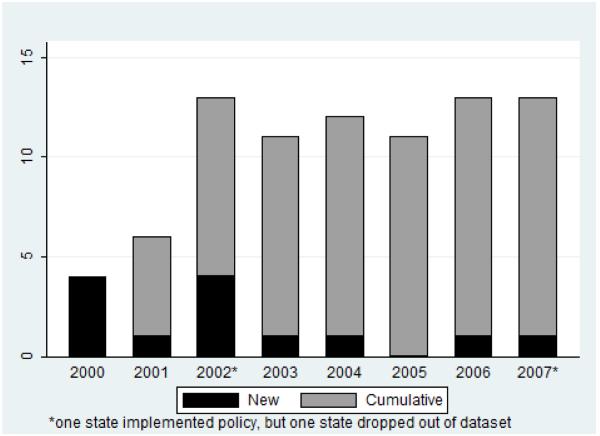

The final sample of 883 observations consisted of 85 CCOPs across 37 states and U.S. territories. Of the 37 states and territories represented, 14 states implemented a policy during the study period; 23 did not. One state (Illinois) allowed its mandate to expire [24] and CCOPs in two states closed (and thus their states dropped from the dataset), one before its state policy was implemented (see Figure 1). In total, 13 states contributed data both before and after policy implementation. Policy implementation occurred between 2000 and 2007. Figure 2 illustrates state policy implementation trends among states with CCOPs. Forty-three CCOPs were in states that implemented policies, and 28 were actively participating when the policy went into effect.

Figure 1. Policy states and CCOPs remaining in final year of study, 2007.

Figure 2. Number of states with policy mandates in study sample.

Table 1 shows descriptive statistics for the full sample and by presence of a state coverage mandate. Mean recruitment overall was 95.1 participants per year, with a range of 0 to 411. CCOPs in states with a mandate recruited fewer participants, although the difference was not statistically significant. Minority CCOP status did not vary by policy status. CCOP-years without mandated coverage (in states without a mandate, or prior to implementation) had significantly fewer participating physicians per CCOP than CCOP-years with a mandate, whereas CCOP-years with a mandate had fewer components per CCOP in the lowest quartile of CCOP components. Sites with missing data had significantly fewer accruals (68.63 vs. 95.08, p<0.001), lower rates of policy exposure (0.01 vs. 0.14, χ² = 14.54, p<0.001), and fewer physicians recruiting patients (9.35 vs. 16.96, p<0.001), though they had similar numbers of components (4.38 vs. 5.48, p=0.13).

Table 1. CCOP-Year Descriptive Characteristics by State Policy Implementation.

| | All CCOP-Years | CCOP-Years in States Without Mandated Coverage | CCOP-Years in States With Mandated Coverage |
|---|---|---|---|
| | Mean (SD) | Mean (SD) | Mean (SD) |
| Treatment Accrual | 95.1 (55.8) | 95.6 (55.2) | 92.3 (58.9) |
| Percent Observations with State Coverage Mandate | 13% | 0% | 100% |
| Percent Observations Per Year | | | |
| 1991 | 6% | 7%** | 0% |
| 1992 | 4% | 5%** | 0% |
| 1993 | 4% | 5%** | 0% |
| 1994 | 6% | 7%** | 0% |
| 1995 | 6% | 7%** | 0% |
| 1996 | 6% | 7%** | 0% |
| 1997 | 6% | 7%** | 0% |
| 1998 | 5% | 6%** | 0% |
| 1999 | 5% | 6%** | 0% |
| 2000 | 6% | 6% | 7% |
| 2001 | 6% | 6% | 8% |
| 2002 | 7% | 5%*** | 14% |
| 2003 | 7% | 6%** | 13% |
| 2004 | 7% | 6%*** | 14% |
| 2005 | 7% | 6%** | 13% |
| 2006 | 7% | 5%*** | 15% |
| 2007 | 6% | 5%*** | 16% |
| Percent Observations Minority-based CCOP | 16% | 16% | 18% |
| Number of MDs Participating | 17.0 (11.0) | 16.6* (10.9) | 18.8 (11.3) |
| Number of MDs Participating Squared¥ | 408.0 (709.0) | 395.0 (728.0) | 479.0 (590.0) |
| Number of Components: 1-25th percentile¥ | 2.67 (0.70) | 2.69* (0.68) | 2.56 (0.81) |
| Number of Components: 26-50th percentile | 1.12 (0.94) | 1.12 (0.94) | 1.12 (0.97) |
| Number of Components: 51-75th percentile | 0.67 (0.90) | 0.68 (0.90) | 0.58 (0.86) |
| Number of Components: 76-100th percentile¥ | 1.02 (3.21) | 1.07*** (3.22) | 0.72 (3.19) |
| Percent Observations Per Region | | | |
| Northeast | 12% | 13%** | 5% |
| South | 24% | 23%* | 31% |
| West | 19% | 18% | 20% |
| Midwest | 44% | 43% | 44% |
| | n=883 CCOP-years | n=749 CCOP-years | n=134 CCOP-years |

Statistical significance reflects comparison of states without a mandate to states with a mandate.

Significance markers: * statistically significant at p<0.05; ** statistically significant at p<0.01; *** statistically significant at p<0.001.

continuous variables compared with t-test assuming equal variances

continuous variables compared with t-test assuming unequal variances

dichotomous variables compared with Pearson’s Chi-square

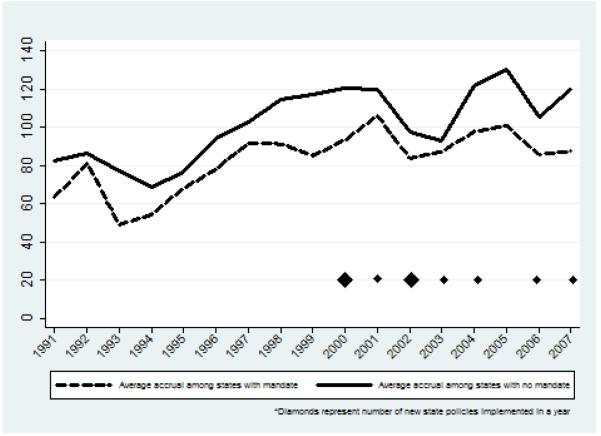

Recruitment fluctuated over time in both policy states and non-policy states (Figure 3). In the early 2000s as mandates were beginning to be implemented, gaps in average accrual between the policy states and non-policy states tightened; however, the effect was similar in size to the smallest gaps in the 1990s and the tightening did not last beyond 2003, even as additional states implemented coverage policies. Of the 13 states with accrual data before and after implementation, there was no significant difference in accrual in the two time periods for nine states. One state had a statistically significant drop in accrual and three states had a statistically significant increase in accrual.

Figure 3. Average treatment trial accrual by year.

Table 2 presents findings from the state fixed effects analysis. After adjusting for accrual trends over time, minority CCOP status, the number of physicians recruiting, the number of components per CCOP, and state characteristics, CCOP treatment trial accrual in states with a coverage mandate was not significantly different than in states with no such mandate.

Table 2. State Fixed Effects Regression Model Results.

| | β | 95% CI |
|---|---|---|
| State Coverage Mandate | 2.95 | −11.48, 17.38 |
| Year (referent 1991) | | |
| 1992 | −4.35 | −10.66, 1.96 |
| 1993 | −12.97** | −21.98, −3.95 |
| 1994 | −14.77** | −23.63, −5.91 |
| 1995 | −14.33** | −23.45, −5.20 |
| 1996 | −4.73 | −16.98, 7.51 |
| 1997 | 6.61 | −4.32, 17.55 |
| 1998 | 13.81* | 1.48, 26.14 |
| 1999 | 10.83 | −3.03, 24.69 |
| 2000 | 13.05 | −0.25, 26.36 |
| 2001 | 16.34* | 2.94, 29.74 |
| 2002 | −4.69 | −17.87, 8.50 |
| 2003 | −7.41 | −20.26, 5.44 |
| 2004 | 16.10* | 1.57, 30.63 |
| 2005 | 25.04** | 8.10, 41.98 |
| 2006 | 1.55 | −10.55, 13.65 |
| 2007 | 4.31 | −9.91, 18.52 |
| Minority-based CCOP | −27.06** | −43.07, −11.06 |
| Number of MDs Participating | 3.11*** | 2.00, 4.23 |
| Number of MDs Squared | −0.02** | −0.04, −0.01 |
| Number of Components: 1-25th percentile | 2.43 | −6.36, 11.22 |
| Number of Components: 26-50th percentile | 0.51 | −5.86, 6.88 |
| Number of Components: 51-75th percentile | 0.78 | −8.41, 9.98 |
| Number of Components: 76-100th percentile | 4.05*** | 1.99, 6.12 |
| Region (referent Midwest) | | |
| Northeast¥ | -- | |
| South¥ | -- | |
| West¥ | -- | |
| Constant | 40.85** | 15.86, 65.84 |
| N | 883 | |

95% Confidence Intervals calculated with Huber-White robust standard errors.

Significance markers: * statistically significant at p<0.05; ** statistically significant at p<0.01; *** statistically significant at p<0.001.

¥ Time-invariant at the state level, not estimated in state fixed effect model.

As expected, minority-based CCOPs were associated with less trial accrual, 27.1 fewer participants per year, all else equal. The marginal effect of the number of physicians recruiting was an additional 2.3 participants recruited per year (95% CI 1.6, 3.0), which was statistically significant. The number of components in a CCOP positively influenced recruitment rates, but only at the highest quartile. Enrollment increased over time (p<0.001), suggesting that other factors that changed over time were important in influencing CCOP accrual.
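The marginal effect of physicians follows from the quadratic specification. As a rough check, assuming the effect is evaluated at the sample mean of 17.0 physicians (the manuscript does not state the evaluation point) and using the rounded coefficients from Table 2, with $M$ denoting the number of physicians (notation introduced here):

$$
\frac{\partial \hat{Y}}{\partial M} = \hat{\beta}_{1} + 2\hat{\beta}_{2}M \approx 3.11 + 2(-0.02)(17.0) = 2.43
$$

This is close to the reported 2.3 and lies within the reported confidence interval. The small gap is plausibly due to rounding of the squared-term coefficient to two decimal places in Table 2. The negative squared term also implies that each additional physician adds slightly less accrual than the one before.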

Findings did not differ meaningfully among the multiple sensitivity analyses examining different sample or model assumptions. Specifically, excluding CCOPs with multi-state or pediatric enrollment, or limiting the study to the period after the Medicare coverage mandate, also produced non-significant point estimates of the effect of state policy. Replacing the explanatory variable of interest with a crude policy strength index and including the proportion of uninsured in the limited time period both yielded a non-significant policy effect as well.

Discussion

In this analysis, state policies mandating cancer clinical trial coverage were not associated with differences in accrual to NCI clinical treatment trials among a diverse national group of community cancer centers. Although a few states saw gains in recruitment after policy implementation, most did not and there was no net effect of state policies.

These findings differ from earlier results, which showed greater accrual in at least some types of trials following state policy implementation [17-18]. By design, our study excluded academic centers and Veterans Administration (VA) sites that may have a different experience [6], and specifically examined accrual in the community setting where the majority of cancer patients receive treatment. Prior research examining academic centers or both community and academic centers showed positive effects [17] or mixed results [18] of such policies. We can think of no reason why insurance mandates would be more effective in academic sites than in community sites. In fact, mandates are more likely to enhance recruitment at community sites: Many mandates allow insurers to deny coverage of patient care costs when they are out of network [8] and because most patients have greater access to the more geographically dispersed community sites, academic medical centers are more likely to be out of network. Insurance mandates should have little or no effect on VA recruitment since the VA serves as both payer and provider.

There are a number of reasons why these policies may not be effective. Insurance concerns may be less of a barrier to recruitment than assumed. Although anecdotal evidence exists in the popular press and from within the ranks of experienced trial administrators [5-6], actual instances may be rare and reflect the exception rather than the rule of insurer practices, though they may generate a disproportionately negative perception among prospective trial participants. Individuals’ refusal to participate due to fear of coverage loss is low, cited by only 8% of refusers [4]. Further, 8% of patients denied coverage actually do participate [6]. From 80% to 85% of claims for treatment in clinical trials are ultimately paid [6, 31]. Additionally, payers’ policies on coverage of trials reportedly have begun favoring coverage since the early 2000s [32]. Trial participation rates increased among the privately insured between 1996 and 2001 [18]. It also may be that the majority of insurers actually do provide coverage, either as a matter of policy or unwittingly. While there is evidence that claims are denied when insurers are told that care is part of a clinical trial [19], it is often difficult to distinguish whether care is part of one and thus, insurers may pay for it, regardless of internal policies [8]. Ultimately, insurance coverage is only one potential barrier to participation in clinical trials. Only about 20% of cancer patients meet eligibility criteria for clinical trials and physicians may not even offer all eligible patients trial participation for a number of reasons [5]. Consequently, coverage concerns may be overshadowed.

This study used more recent data, incorporated more state policies, and made use of longitudinal data and estimation techniques that address selection bias. Our study design included lengthy baseline assessment to capture trends over time and the panel design allowed us to compare both within-state changes in accrual before and after mandate implementation among policy states and differences in accrual between mandate and non-mandate states. Nonetheless, a number of factors may have limited our ability to detect an effect, if there is one, including data availability, competing policies, and policy dilution.

Although we examined data for nearly all CCOPs in existence, spanning 17 years of trial enrollment, the study question mandated a data structure and approach that yielded relatively few observations (CCOP-years) for a multivariable examination. The limited number of observations, with correspondingly limited variance among the key variables, may have been too small to yield a statistically detectable policy effect. Related to this, although selection of variables was driven by theory and practical knowledge, the limited effective sample size obliged us to be parsimonious in variable selection, and there may be other important variables for inclusion. For example, health insurance access theoretically should be a relevant factor; however, in other analyses of these data, correlation between an area’s proportion of uninsured and accrual was low, and it was not a significant predictor of accrual [28]. In this sample, there was no difference in the average proportion uninsured between mandate and non-mandate states. Moreover, sensitivity analysis including this variable in the model showed no difference in the effect of state policy. Because the change in the proportion of uninsured per area measured is quite small from year to year, we believe it essentially is controlled for by the state fixed effect. Future research may overcome these challenges through a multi-level examination including person-level enrollment and additional relevant variables. For example, age is relevant for several reasons, including trial eligibility and age-related non-participation [33].

We cannot be certain that data availability did not limit our ability to detect an effect of state policies. Missing data and misclassification may have biased our results. Four CCOPs with missing data were excluded from the analysis, and some bias might have been introduced since missing data was associated with trial accrual and state policies. However, this would suggest our results overestimate the policy effect size and would not change our conclusions.

Competing policies also may have introduced noise and diluted our ability to detect the effect of state policies. For example, while our study focused on state policies, a series of federal policies were passed over the last decade to facilitate clinical trial participation. In particular, a 2000 executive order (later expanded) required Medicare to reimburse for routine care associated with trial participation [5, 16]. By including a measure for time in our analysis, we adjusted for such effects by controlling for trends that affected both mandate and non-mandate states. However, if this policy was implemented unevenly, as has been reported [8], our ability to control for it through the method would be limited. In our sample, it appears a change occurred after 2001 in both mandate and non-mandate states (Figure 3), limiting concerns that uneven implementation biased results.

Of some concern is that state laws may only affect as little as a third of the population because of federal exemptions for some employers. The Employee Retirement Income Security Act (ERISA) exempts some self-insured employer-sponsored plans from the state mandates, affecting about 67 million Americans [8]. We are unable to assess how distribution of these plans across states affects clinical trial accrual. As long as the distribution of ERISA plans remains constant over time within state, we have effectively controlled for them by including state fixed effects, but any changes in concentrations of self-insured employers in any state may bias the effect of state policies.

Finally, state policies mandating trial coverage vary in scope [24]. All require private insurers to cover trial costs, but some states additionally require certain government sponsored plans to participate [13, 24]. Nine states (18%) require coverage for all four phases of clinical trials, but other states specify that only certain phases of research qualify [24, 34]. The effect of mandated coverage of research-related injuries on trial participation is unknown, but some states additionally require it [24]. In addition, several states have voluntary agreements rather than statutes [8] and it is unknown whether these agreements have similar implementation rates as official policies. This variability among the policies could significantly impact our ability to estimate their effectiveness. We attempted a crude analysis of policy strength. We found no difference in our results. Results are not presented as we have concerns about the scale’s validity. We do not know if the weights given to each dimension are appropriate, nor if the effects of various components of the mandates are additive or multiplicative. Moreover, the mandates include other important coverage requirements such as coverage of research-related injuries [24] and the relative contributions of these requirements are unknown. An index that more appropriately weights all aspects of the provisions, including the relative effect of coverage of research-related injury and trial phases, is needed. Thus, future research should characterize fully the strength of each state’s policy, including identifying incremental changes in patients’ willingness to participate with each coverage stipulation; understanding the effects of seemingly non-complementary provisions; and psychometrically testing a summary index. Other research should further evaluate how dissemination of information about the state mandates, and enforcement of them, additionally contributes to their strength. Dissemination of these policies to potential participants has been shown to be weak [31]. Greater differences may have been evidenced had potential participants been aware of them.

Conclusions

This study highlights many of the challenges to the effectiveness and evaluation of state-level policies, some of which the 2014 federal policy mandate will address. Nonetheless, developing and implementing such policies is resource intensive and represents an opportunity cost. Addressing lower-level factors not easily reached by policy interventions may be more fruitful. Research infrastructure is associated with physician participation in research [35] and on-site physician champions have been shown to sustain high accrual [36-37]. Efforts that directly support physician leaders and the physicians in research-supportive environments who do not participate in research [35] may more efficiently enhance accrual. Recent experience among the CCOP suggests that as stand-alone hospitals evolve into health systems, even greater interest exists to integrate research into the clinical mission, presenting opportunity to increase access to trials. Thus, interventions that support and protect the interests of research participants in the context of this new delivery landscape hold promise and require investigation. Understanding the practical challenges at the individual, provider, or health system level is still necessary to ultimately encourage cancer patients to participate in clinical research. Whether these interventions can be delivered effectively through policy or through other mechanisms remains unanswered.

Although our results do not provide encouragement that state mandates improve community accrual, coverage policies warrant continuing assessment. Continued vigilance and ongoing communication with third party payers are still needed to assure high quality care and an environment that promotes scientific discovery in an efficient manner. Third party coverage of experimental therapies may keep potential participants from joining trials and lure participants off of protocols if their preferred treatment is covered outside the context of clinical trials [5, 38]. Continued efforts to partner with payers to facilitate trial participation will allow patients who want to participate to have the opportunity and payers to have the best evidence-based information upon which to base their coverage policies. Finally, even if these policies do not have an effect on trial accrual, they may serve other purposes such as increasing minority participation in clinical trials [10, 39] and ensuring providers are paid for their efforts and experience less burden and administrative costs, encouraging their continued participation, a necessary part of ensuring future trial availability [8, 40]. These additional potential outcomes are equally important and deserve to be assessed.

Acknowledgements

Charles Belden provided graphical assistance through the creation of CCOP maps. The paper also benefitted from the thoughtful considerations of anonymous peer reviewers.

Funding

This work was supported by the National Cancer Institute at the National Institutes of Health (R25CA116339 and R01CA124402). The sponsor had no role in the design of the study, data collection or analysis, or decision to submit the paper. As an expert in the Community Clinical Oncology Program and clinical trial infrastructure, Dr Minasian participated in the interpretation of results and writing of the manuscript.

Abbreviations

- NCI: National Cancer Institute

- CMS: Centers for Medicare & Medicaid Services

- CCOP: Community Clinical Oncology Program

- MBCCOP: Minority-Based Community Clinical Oncology Program

- OLS: Ordinary Least Squares

- VA: Veterans Administration

- ERISA: Employee Retirement Income Security Act

References

- [1] Comis RL, Miller JD, Aldige CR, Krebs L, Stoval E. Public attitudes toward participation in cancer clinical trials. J Clin Oncol. 2003;21:830–5. doi: 10.1200/JCO.2003.02.105.

- [2] Sateren WB, Trimble EL, Abrams J, Brawley O, Breen N, Ford L, et al. How sociodemographics, presence of oncology specialists, and hospital cancer programs affect accrual to cancer treatment trials. J Clin Oncol. 2002;20:2109–17. doi: 10.1200/JCO.2002.08.056.

- [3] Fayter D, McDaid C, Eastwood A. A systematic review highlights threats to validity in studies of barriers to cancer trial participation. J Clin Epidemiol. 2007;60:990–1001. doi: 10.1016/j.jclinepi.2006.12.013.

- [4] Lara PN, Jr., Higdon R, Lim N, Kwan K, Tanaka M, Lau DH, et al. Prospective evaluation of cancer clinical trial accrual patterns: identifying potential barriers to enrollment. J Clin Oncol. 2001;19:1728–33. doi: 10.1200/JCO.2001.19.6.1728.

- [5] Abernathy AP, Allen Lapointe NM, Wheeler JL, Irvine RJ, Patwardhan M, Matchar D. Horizon Scan: To What Extent Do Changes in Third-Party Payment Affect Clinical Trials and the Evidence Base? In: Center DE-BP, editor. Technology Assessment Report. Agency for Healthcare Research and Quality; Rockville, MD: 2009.

- [6] Klabunde CN, Springer BC, Butler B, White MS, Atkins J. Factors influencing enrollment in clinical trials for cancer treatment. South Med J. 1999;92:1189–93. doi: 10.1097/00007611-199912000-00011.

- [7] United States General Accounting Office. Report to Congressional Requesters. NIH Clinical Trials: Various Factors Affect Patient Participation. General Accounting Office; Washington, D.C.: 1999. p. 34.

- [8] Nass SJ, Moses HL, Mendelsohn J, Committee on Cancer Clinical Trials and the NCI Cooperative Group Program. A National Cancer Clinical Trials System for the 21st Century: Reinvigorating the NCI Cooperative Group Program. The National Academies Press; Washington, D.C.: 2011.

- [9] Mortenson LE. Health care policies affecting the treatment of patients with cancer and cancer research. Cancer. 1994;74:2204–7. doi: 10.1002/1097-0142(19941001)74:7+<2204::aid-cncr2820741736>3.0.co;2-e.

- [10] Baquet CR, Mishra SI, Weinberg AD. A descriptive analysis of state legislation and policy addressing clinical trials participation. J Health Care Poor Underserved. 2009;20:24–39. doi: 10.1353/hpu.0.0156.

- [11] Denicoff AMA, Jeffrey S. NCI Cancer Bulletin. National Cancer Institute; Bethesda, MD: 2010. Targeting Barriers, including Insurance Coverage, to Improve Clinical Trial Participation.

- [12] An Act Entitled the Patient Protection and Affordable Care Act. United States of America; 2010.

- [13] National Cancer Institute. States That Require Health Plans to Cover Patient Care Costs in Clinical Trials. National Cancer Institute; Bethesda, MD: 2009.

- [14] McBride G. More states mandate coverage of clinical trial costs, but does it make a difference? J Natl Cancer Inst. 2003;95:1268–9. doi: 10.1093/jnci/95.17.1268.

- [15] Unger JM, Coltman CA, Jr., Crowley JJ, Hutchins LF, Martino S, Livingston RB, et al. Impact of the year 2000 Medicare policy change on older patient enrollment to cancer clinical trials. J Clin Oncol. 2006;24:141–4. doi: 10.1200/JCO.2005.02.8928.

- [16] Gross CP, Wong N, Dubin JA, Mayne ST, Krumholz HM. Enrollment of older persons in cancer trials after the Medicare reimbursement policy change. Arch Intern Med. 2005;165:1514–20. doi: 10.1001/archinte.165.13.1514.

- [17] Martel CL, Li Y, Beckett L, Chew H, Christensen S, Davies A, et al. An evaluation of barriers to accrual in the era of legislation requiring insurance coverage of cancer clinical trial costs in California. Cancer J. 2004;10:294–300. doi: 10.1097/00130404-200409000-00006.

- [18] Gross CP, Murthy V, Li Y, Kaluzny AD, Krumholz HM. Cancer trial enrollment after state-mandated reimbursement. J Natl Cancer Inst. 2004;96:1063–9. doi: 10.1093/jnci/djh193.

- [19] Klamerus JF, Bruinooge SS, Ye X, Klamerus ML, Damron D, Lansey D, et al. The impact of insurance on access to cancer clinical trials at a comprehensive cancer center. Clin Cancer Res. 2010;16:5997–6003. doi: 10.1158/1078-0432.CCR-10-1451.

- [20] Green LA, Fryer GE, Jr., Yawn BP, Lanier D, Dovey SM. The ecology of medical care revisited. N Engl J Med. 2001;344:2021–5. doi: 10.1056/NEJM200106283442611.

- [21] Kaluzny AD, Warnecke R, Lacey LM, Johnson T, Gillings D, Ozer H. Using a community clinical trials network for treatment, prevention, and control research: assuring access to state-of-the-art cancer care. Cancer Invest. 1995;13:517–25. doi: 10.3109/07357909509024917.

- [22] Carpenter WR, Weiner BJ, Kaluzny AD, Domino ME, Lee SY. The effects of managed care and competition on community-based clinical research. Med Care. 2006;44:671–9. doi: 10.1097/01.mlr.0000220269.65196.72.

- [23] American Cancer Society. Clinical Trials: State Laws About Insurance Coverage. Atlanta: 2011.

- [24] Taylor PL. State payer mandates to cover care in US oncology trials: do science and ethics matter? J Natl Cancer Inst. 2010;102:376–90. doi: 10.1093/jnci/djq028.

- [25] Hillner BE. Barriers to clinical trial enrollment: are state mandates the solution? J Natl Cancer Inst. 2004;96:1048–9. doi: 10.1093/jnci/djh225.

- [26] Kaluzny A, Brawley O, Garson-Angert D, Shaw J, Godley P, Warnecke R, et al. Assuring access to state-of-the-art care for U.S. minority populations: the first 2 years of the Minority-Based Community Clinical Oncology Program. J Natl Cancer Inst. 1993;85:1945–50. doi: 10.1093/jnci/85.23.1945.

- [27] McCaskill-Stevens W, McKinney MM, Whitman CG, Minasian LM. Increasing minority participation in cancer clinical trials: the minority-based community clinical oncology program experience. J Clin Oncol. 2005;23:5247–54. doi: 10.1200/JCO.2005.22.236.

- [28] Carpenter WR, Fortune-Greeley AK, Zullig LL, Lee SY, Weiner BJ. Sustainability and performance of the National Cancer Institute’s Community Clinical Oncology Program. Contemp Clin Trials. 2012;33:46–54. doi: 10.1016/j.cct.2011.09.007.

- [29] Wooldridge JM. Introductory Econometrics: A Modern Approach. Fourth ed. South-Western Cengage Learning; Mason, OH: 2009.

- [30] StataCorp LP. Stata/IC 11.2 for Windows. StataCorp LP; College Station, TX: 2011.

- [31] Kelahan AM. Dissemination of information on legislative mandates and consensus-based programs addressing payment of the costs of routine care in clinical trials through the World Wide Web. Cancer. 2004;100:1238–45. doi: 10.1002/cncr.20066.

- [32] ECRI Institute. Should I Enter a Clinical Trial? In: American Association of Health Plans, editor. A Patient Reference Guide for Adults with a Serious or Life-Threatening Illness: A Report by ECRI Commissioned by AAHP. Plymouth Meeting, PA: American Association of Health Plans; 2002.

- [33] Aaron HJ, Gelband H. Extending Medicare Reimbursement in Clinical Trials. National Academies Press; Washington, D.C.: 2000.

- [34] Lowrey KM, Kline BI, Laestadius L, Arculli Re. State Legislative Efforts to Address Barriers to Racial and Ethnic Minorities’ Participation in Cancer Clinical Trials. American Association for Cancer Research Conference on the Science of Cancer Health Disparities; Carefree, AZ. 2009.

- [35] Klabunde CN, Keating NL, Potosky AL, Ambs A, He Y, Hornbrook MC, et al. A population-based assessment of specialty physician involvement in cancer clinical trials. J Natl Cancer Inst. 2011;103:384–97. doi: 10.1093/jnci/djq549.

- [36] Kumar N, Crocker T, Smith T, Pow-Sang J, Spiess PE, Egan K, et al. Challenges and potential solutions to meeting accrual goals in a Phase II chemoprevention trial for prostate cancer. Contemp Clin Trials. 2012;33:279–85. doi: 10.1016/j.cct.2011.11.004.

- [37] McKinney MM, Warnecke RB, Kaluzny AD. Strategic approaches to cancer control research in NCI-funded research bases. Cancer Detect Prev. 1992;16:329–35.

- [38] Taylor PL. Overseeing innovative therapy without mistaking it for research: a function-based model based on old truths, new capacities, and lessons from stem cells. J Law Med Ethics. 2010;38:286–302. doi: 10.1111/j.1748-720X.2010.00489.x.

- [39] Baquet CR, Ellison GL, Mishra SI. Analysis of Maryland cancer patient participation in National Cancer Institute-supported cancer treatment clinical trials. J Health Care Poor Underserved. 2009;20:120–34. doi: 10.1353/hpu.0.0162.

- [40] Minasian LM, O’Mara AM. Accrual to clinical trials: let’s look at the physicians. J Natl Cancer Inst. 2011;103:357–8. doi: 10.1093/jnci/djr018.