Abstract

Converging findings from behavioral, neurophysiological, and neuroimaging studies suggest an integration-to-boundary mechanism governing decision formation and choice selection. This mechanism is supported by sequential sampling models of choice decisions, which can implement statistically optimal decision strategies for selecting between multiple alternative options on the basis of sensory evidence. This review focuses on recent developments in understanding the evidence boundary, an important component of decision-making raised by experimental findings and models. The article starts by reviewing the neurobiology of perceptual decisions and several influential sequential sampling models, in particular the drift-diffusion model, the Ornstein–Uhlenbeck model and the leaky-competing-accumulator model. In the second part, the article examines how the boundary may affect a model’s dynamics and performance and to what extent it may improve a model’s fits to experimental data. In the third part, the article examines recent findings that support the presence and site of boundaries in the brain. The article considers two questions: (1) whether the boundary is a spontaneous property of neural integrators, or is controlled by dedicated neural circuits; (2) if the boundary is variable, what could be the driving factors behind boundary changes? The review brings together studies using different experimental methods in seeking answers to these questions, highlights psychological and physiological factors that may be associated with the boundary and its changes, and further considers the evidence boundary as a generic mechanism to guide complex behavior.

Keywords: decision, boundary, integration, modeling

Neural Mechanisms of Perceptual Decisions

Making decisions on the basis of sensory information is a frequent and critical element of human lives. Imagine you are driving toward a traffic light in clear weather. You can easily decide to stop or accelerate depending on the color of the traffic light ahead. When driving in foggy weather, however, since the scene is less visible, it is more difficult to distinguish between the red and green light. You may need longer to make the correct decision, and may sometimes even make a mistake.

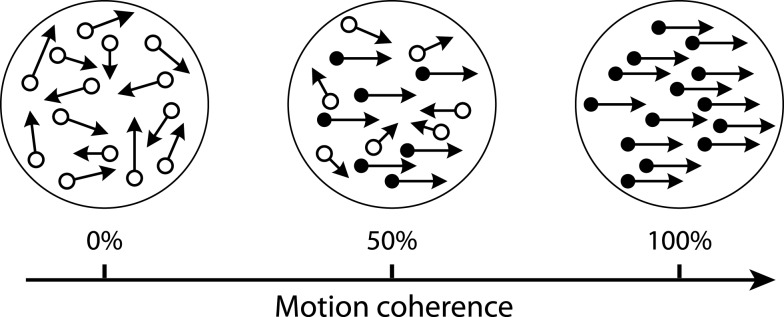

This type of process is often referred to as perceptual decision-making (Newsome et al., 1989; Gold and Shadlen, 2001, 2007; Heekeren et al., 2008), which requires one to discriminate sensory attributes from either stationary or dynamic stimuli – such as an illumination with different colors (Yellott, 1971), a geometric shape with different orientations (Swensson, 1972), or a pixel array with different brightness (Ratcliff and Rouder, 1998) – and map the subjective perception onto multiple alternative responses. Laboratory studies of the decision process often employ one of two forced-choice paradigms. In the time-controlled (TC) paradigm, subjects are required to give their response immediately after a decision time set by the experimenter (Yellott, 1971; Swensson, 1972; Dosher, 1976, 1984). In the information-controlled (IC) paradigm, subjects are allowed to respond freely whenever they feel confident, from which subjects’ response times (RTs) can be measured as a second dependent variable (Luce, 1986). The neural mechanisms of perceptual decisions have been extensively studied using a prototypical random dot motion (RDM) discrimination task (Britten et al., 1993; Shadlen and Newsome, 2001; Roitman and Shadlen, 2002; Palmer et al., 2005; Churchland et al., 2008; Kiani et al., 2008). The RDM stimulus consists of a dynamic field of moving dots, a proportion of which move coherently in one direction, while the other dots move randomly (Figure 1). The task is to decide the direction of coherent motion and respond with an eye movement or a button press. Its difficulty can be manipulated by varying the strength of motion coherence.

Figure 1.

Schematic diagram of the RDM stimulus with different motion coherence levels. In each frame a proportion of the dots (solid dots) are repositioned with fixed spatial offset, indicating the coherent motion direction, and the rest of the dots (open dots) are repositioned randomly. More detailed specification of the stimulus is available in Britten et al. (1992).

Single-unit recordings in trained monkeys performing the RDM task indicate that the formation of perceptual decisions involves distinct neural processes across different brain regions. First, neuronal activity in motion sensitive areas (MT/V5; Maunsell and Van Essen, 1983; Born and Bradley, 2005; Zeki, 2007) is closely related to the statistics of the RDM stimulus (i.e., the motion coherence; Newsome and Pare, 1988; Salzman et al., 1990, 1992; Ditterich et al., 2003), but only weakly correlates with behavioral responses (Britten et al., 1992, 1993, 1996), suggesting that sensory neurons encode noisy, transient, and stimulus dependent evidence to support an alternative (Gold and Shadlen, 2001, 2007). Second, neurons in the lateral intraparietal (LIP) area respond with ramp-like changes, and the rate of change depends on the level of motion coherence (Shadlen and Newsome, 2001; Roitman and Shadlen, 2002). Unlike the MT neurons that respond transiently to visual stimuli, the LIP neurons gradually build up or attenuate their activity even if the visual stimuli remain ambiguous (i.e., 0% coherence). This activity pattern starts shortly after the stimulus onset and terminates before a saccadic response. Importantly, ∼80 ms before a response, there is no obvious variability in firing rates of LIP neurons when responses are made under different motion coherence levels, and neural activity correlates only with the direction of eye movement (i.e., the decision). These findings suggest that LIP neurons integrate sensory evidence up to a decision boundary¹ prior to a response (Mazurek et al., 2003; Huk and Shadlen, 2005; Hanks et al., 2006). Similar activity patterns have also been observed in other brain regions, including frontal eye fields (FEF; Schall, 2002), superior colliculus (SC; Basso and Wurtz, 1998), and dorsolateral prefrontal cortex (DLPFC; Kim and Shadlen, 1999). 
Taken together, these studies suggest a generic integration-to-boundary mechanism manifested in different brain regions for perceptual decisions. That is, certain neuronal populations integrate sensory information over time, and a response is committed to when the accumulated evidence reaches a decision boundary (Schall and Thompson, 1999; Gold and Shadlen, 2001, 2007; Heekeren et al., 2008).

The integration-to-boundary mechanism receives further support from psychological models of choice decisions that have been developed over the last half-century, namely sequential sampling models (Wald, 1947; Lehmann, 1959; Stone, 1960; Link, 1975; Link and Heath, 1975; Townsend and Ashby, 1983; Luce, 1986; Ratcliff and Smith, 2004; Smith and Ratcliff, 2004; Bogacz et al., 2006; Barnard, 2007). Sequential sampling models assume that evidence supporting each alternative is represented by a sequence of noisy observations over time. A process essential to reducing the noise in evidence is to integrate momentary observations over time and make a decision on the basis of the accumulated evidence. The sequential sampling models provide a detailed account of behavioral performance on choice tasks, including RT distributions, response accuracy, and relationships between the two (e.g., the speed–accuracy tradeoff). These models have been widely used as a mechanistic framework for isolating the decision process from sensory inputs or motor outputs.

A key prediction of almost all sequential sampling models is the presence of evidence boundaries, which limit the quantity of evidence available for making a decision. This article reviews recent theoretical and experimental developments in understanding the functions and mechanisms of the evidence boundary. The focus on the boundary mechanisms in general, rather than on particular decision models, is primarily due to its empirical relevance and importance. First, both experimental data and psychological models imply that the evidence boundary does not depend solely on sensory evidence, but can be internally set and controlled by a decision-maker. This unique characteristic of the boundary raises two important questions: (1) how can the evidence boundary influence decision performance? (2) How is the boundary implemented and adapted in neural circuits? Answers to such questions may provide insight into high-level cognitive control that subserves decision-making processes. Second, although the presence of the boundary is consistently supported by the neurophysiological (Mazurek et al., 2003; Huk and Shadlen, 2005; Hanks et al., 2006; Kiani et al., 2008) and neuroimaging (Ploran et al., 2007; Heekeren et al., 2008; Kayser et al., 2010a,b) data, only recently have researchers begun to investigate the function and effects of the evidence boundary. The understanding of its neural mechanisms is still insufficient.

The article is organized as follows: Section “Models of Decision-Making” reviews the decision-making problem and three representative sequential sampling models: the drift-diffusion model (DDM; Ratcliff, 1978), the Ornstein–Uhlenbeck (OU) model (Busemeyer and Townsend, 1993), and the leaky-competing-accumulator (LCA) model (Usher and McClelland, 2001). Section “Theoretical Considerations of Evidence Boundaries” examines the effects of the evidence boundary on the three models. This section discusses how the boundary may affect the models’ dynamics and fits to experimental data, and to what extent the boundary may affect the performance of these models. Sections “Neural Implementation of Decision Boundary” and “Effects of Boundary Changes” review recent experimental findings that reveal possible neural underpinnings of, and behavioral influences on, the decision boundary. Finally, Section “Discussion” offers some concluding remarks.

Models of Decision-Making

The decision problem and the optimal decision-making theories

Perceptual decision-making can be formalized as a problem of statistical inference (Gold and Shadlen, 2001, 2007). Let us consider a decision task with a choice between N (N ≥ 2) alternatives, each supported by a population of sensory neurons exclusively selective to a choice (e.g., motion sensitive neurons in area MT/V5). Stimuli drive the N populations of sensory neurons to generate noisy evidence streams Ii(t) at time t, with mean μi and variance σi² (i = 1, 2, 3, …, N). The goal of the decision process (e.g., reflected in activity of LIP neurons) is to identify which sensory population has the highest mean activity based on the evidence Ii(t). This article mainly considers three representative models under this framework, as a more complete survey on sequential sampling models is available elsewhere (Ratcliff and Smith, 2004; Smith and Ratcliff, 2004; Bogacz et al., 2006).

Statistically optimal strategies exist for solving the decision problem with two alternatives (N = 2), which would achieve the lowest error rates (ER; the probability of making an incorrect choice in a block of trials) and the shortest RT compared with all other decision-making strategies. This optimality criterion can be divided into two sub-criteria (Bogacz et al., 2006): (1) the strategy yielding the lowest ER for any fixed amount of evidence, and (2) the strategy yielding the fastest response for any given ER. The two criteria correspond to the optimal conditions of the TC and IC paradigms, respectively. The optimal strategy for the TC paradigm, i.e., the lowest ER for fixed RT, is provided by the Neyman–Pearson test (NPT; Neyman and Pearson, 1933). The optimal strategy for the IC paradigm, i.e., the fastest RT for a given ER, is provided by the sequential probability ratio test (SPRT; Wald, 1947; Wald and Wolfowitz, 1948; Barnard, 2007). For multiple alternative decision tasks (N > 2), asymptotically optimal strategies are also available for the TC (Mcmillen and Holmes, 2006) and IC paradigms (Dragalin et al., 1999; Dragalin et al., 2000).
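To make the IC-paradigm strategy concrete, the following is a minimal sketch of an SPRT for two alternatives. It assumes, purely for illustration, that each observation is Gaussian with a known common standard deviation and a mean of either `mu_a` or `mu_b`; the function name, the Gaussian assumption, and the use of Wald's approximate thresholds derived from target error rates `alpha` and `beta` are choices made here, not specifications taken from the studies cited above.

```python
import math


def sprt_gaussian(samples, mu_a, mu_b, sigma, alpha=0.05, beta=0.05):
    """Sequential probability ratio test between two Gaussian hypotheses.

    Hypothesis A: samples ~ N(mu_a, sigma^2); hypothesis B: samples ~ N(mu_b, sigma^2).
    Returns (decision, n_used), where decision is "A", "B", or None if the
    samples run out before either threshold is crossed.
    """
    # Wald's approximate thresholds on the accumulated log-likelihood ratio.
    upper = math.log((1.0 - beta) / alpha)
    lower = math.log(beta / (1.0 - alpha))

    llr = 0.0  # accumulated log-likelihood ratio, log p(x|A) - log p(x|B)
    n_used = 0
    for x in samples:
        n_used += 1
        # Log-likelihood ratio of one Gaussian observation (equal variances).
        llr += (mu_a - mu_b) / sigma**2 * (x - 0.5 * (mu_a + mu_b))
        if llr >= upper:
            return "A", n_used
        if llr <= lower:
            return "B", n_used
    return None, n_used
```

Each observation adds an increment to a running total, and the test stops as soon as the total crosses one of two fixed thresholds. This is the same integrate-then-stop structure that the accumulator models reviewed below share.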

Decision strategies that meet the optimal criteria above require linear integration of evidence over time, which, as reviewed below, can be implemented by many accumulator models at different levels of abstraction (the implementation of optimal strategies for multiple alternative decisions requires models with additional complexity to those discussed here, see Bogacz and Gurney, 2007; Zhang and Bogacz, 2010b). Models that can accomplish optimal strategies have been shown to provide better explanations of experimental data than other, non-optimal, models (Ratcliff and Smith, 2004). This leads us to an ecologically motivated assumption that the brain may implement strategies for optimizing the speed and accuracy of decision-making, and hence optimal decision theories may offer a normative benchmark to generate experimental predictions and link behaviors to neural circuits for decision-making (Bogacz, 2007).

The perspective that the brain implements optimal decision-making relies on precise and circumspect definitions of the decision problem and criteria for optimality per se. For the simple decision problem with time-invariant evidence, linear integration is the optimal strategy in the sense of its speed and accuracy (see van Ravenzwaaij et al., 2012 for a discussion on other possible definitions of optimality). For tasks with time-varying signal-to-noise ratio within each trial (Huk and Shadlen, 2005; Tsetsos et al., 2011), linear integration may no longer be optimal. Intuitively, if the statistics and regularities of the time-varying evidence (i.e., when more reliable evidence arrives) are known, a decision strategy that exploits such knowledge and gives greater weight to more reliable evidence would perform better than a linear integration strategy (Papoulis, 1977). Whether humans are biased toward early or late evidence, whether their weights of evidence vary with practice (Brown and Heathcote, 2005b), and whether their decision strategies are flexibly adapted (Brown et al., 2005) are still not fully understood and merit further investigation.

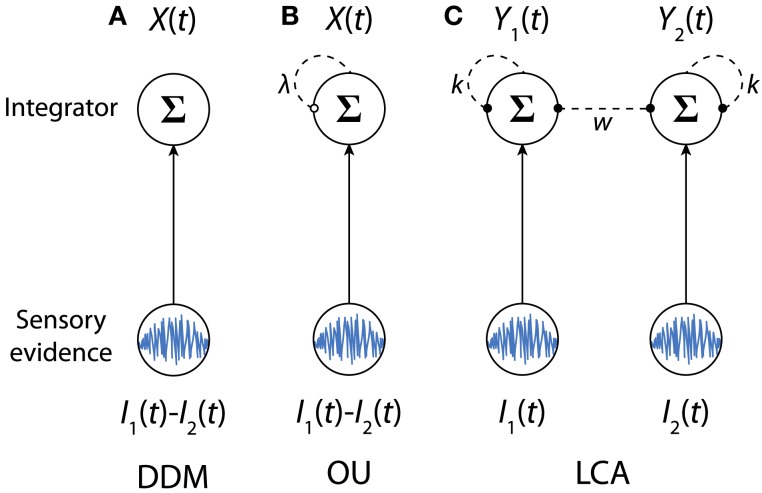

Drift-diffusion model

The DDM was proposed for two-alternative forced-choice (2AFC) tasks (Stone, 1960; Ratcliff, 1978). Mathematically, the DDM can be thought of as a standard Wiener process with external drift (Wiener, 1923), and is equivalent to a continuous limit of the random walk models (Estes, 1955; Laming, 1968; Link, 1975; Link and Heath, 1975; Luce, 1986). The model implies a single integrator that integrates the momentary difference between two sensory streams [I1(t) − I2(t)] supporting two alternatives (Figure 2A). The dynamics of the DDM can be characterized by a stochastic differential equation:

Figure 2.

The sequential sampling models for 2AFC tasks: (A) the DDM, (B) the OU model, (C) the LCA model. Arrows denote excitatory connections. Dashed lines with solid circle end denote inhibitory connections. For the OU model, the dashed line with an open circle end denotes the effect of the growth-decay parameter. For each model, the bottom nodes denote sensory evidence, and the top nodes denote neural integrators. Model parameters are defined in Eqs 1–3.

$$dX(t) = \mu\,dt + \sigma\,dW(t) \qquad (1)$$

Here dX(t) denotes the increment of the accumulated evidence X(t) over a small unit of time dt. The sign of dX(t) implies that the momentary evidence at time t supports the first [dX(t) > 0] or the second [dX(t) < 0] alternative. μ is the drift rate of integration, representing the mean evidence difference (μ1 – μ2) per unit of time. If σ1 = σ2. The magnitude of μ is determined by the quality of the stimulus (the drift rate may also be determined by the allocation of attention, see Schmiedek et al., 2007). For example, for the RDM task, μ would represent the coherence level of the RDM stimulus: a large μ implies high motion coherence and an easy task, while a small μ implies low motion coherence and a high level of difficulty in distinguishing between two coherent motion directions. The second term σdW(t) denotes Gaussian noise with mean 0 and variance σ²dt. The DDM can be applied to either IC or TC paradigms. In the IC paradigm, decision time is unrestricted and two decision boundaries are introduced to indicate termination states (see Boundary Mechanisms). Once X(t) reaches a boundary, a corresponding choice is made. The predicted RT is equal to the duration of the integration, plus a non-decision time, corresponding to other cognitive processes unrelated to evidence integration (e.g., sensory encoding or response execution). For the TC paradigm, which requires subjects to respond at the experimenter-determined decision time Tc, the model selects an alternative by locating the ultimate integrator state X(Tc) and selecting the first alternative if X(Tc) > 0, or the second alternative if X(Tc) < 0.
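The two readout rules can be illustrated with a minimal simulation sketch. It discretizes dX = μ dt + σ dW with a simple Euler-Maruyama step. The function names, the symmetric boundaries at ±`bound`, the zero starting point, the step size `dt`, and the `max_t` cutoff are illustrative assumptions rather than part of the model definition.

```python
import math
import random


def simulate_ddm_ic(mu, sigma, bound, dt=0.001, t0=0.0, rng=None, max_t=10.0):
    """One information-controlled (IC) trial of the DDM.

    Integrates dX = mu*dt + sigma*dW from X(0) = 0 until X reaches +bound
    (choice 1) or -bound (choice 2). Returns (choice, rt), where rt is the
    integration time plus the non-decision time t0. If neither boundary is
    reached within max_t seconds, the choice is reported as 0.
    """
    rng = rng or random.Random()
    noise_sd = sigma * math.sqrt(dt)  # dW has variance dt
    x, t = 0.0, 0.0
    while t < max_t:
        x += mu * dt + rng.gauss(0.0, noise_sd)
        t += dt
        if x >= bound:
            return 1, t + t0
        if x <= -bound:
            return 2, t + t0
    return 0, t + t0


def simulate_ddm_tc(mu, sigma, tc, dt=0.001, rng=None):
    """One time-controlled (TC) trial: integrate for tc seconds, read the sign."""
    rng = rng or random.Random()
    noise_sd = sigma * math.sqrt(dt)
    x = 0.0
    for _ in range(int(round(tc / dt))):
        x += mu * dt + rng.gauss(0.0, noise_sd)
    # X(Tc) > 0 selects the first alternative; an exact tie at 0 is
    # arbitrarily assigned to the second alternative in this sketch.
    return 1 if x > 0 else 2
```

Running many IC trials with a seeded `random.Random` and tabulating the choices and RTs gives simulated accuracy and RT distributions. Raising `bound` slows responses and reduces errors, which is the speed–accuracy tradeoff in this model.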

Several extensions of the DDM have been proposed since its original introduction, allowing model parameters to vary across trials. First, between-trial variability in the starting point of the integrator X(0) was introduced to account for premature sampling (Laming, 1968), which predicts faster errors than correct responses. Second, between-trial variability in the drift rate was introduced to account for slower errors when compared to correct responses (Ratcliff, 1978). These additional sources of parameter variability have been shown to improve fits to experimental data (Ratcliff et al., 1999).

The DDM has been applied to a number of cognitive tasks, including memory retrieval (Ratcliff, 1978), lexical decisions (Ratcliff et al., 2004a; Wagenmakers et al., 2008), letter identification (Ratcliff and Rouder, 2000), and visual discrimination including brightness discrimination (Ratcliff, 2002; Ratcliff et al., 2003b) and the RDM task (Palmer et al., 2005). In all its applications, the model has successfully accounted for response accuracies and RT distributions observed from individual subjects (Ratcliff and Rouder, 1998; Ratcliff and Smith, 2004; Ratcliff and McKoon, 2008). More importantly, the simple DDM without between-trial parameter variability has been shown to implement the statistically optimal strategies for choosing between two alternatives (the NPT and the SPRT) in both TC and IC paradigms (Wald, 1947; Edwards, 1965; Gold and Shadlen, 2001, 2007; Bogacz et al., 2006), and hence the DDM is often used as a benchmark to compare the performance of other decision models. For the extended version of the DDM, previous studies suggest that the DDM with variable drift rate may still be the optimal model in the TC paradigm but the DDM with variable starting point is not optimal compared to other models (Bogacz et al., 2006). However, a strict proof of the optimality of the DDM with between-trial variability is not yet available.

One limitation of the DDM is that it was initially designed for binary choice tasks. Recent studies have attempted to extend the DDM to account for N-alternative forced-choice (NAFC) tasks (N > 2). One approach has been suggested by Niwa and Ditterich (2008). For an RDM task with three alternatives (i.e., three possible motion directions), Niwa and Ditterich (2008) modeled three integrators supporting the three alternatives rather than using a single integrator. The three integrators compete against each other in a race toward a common decision boundary and a response is determined by the winning integrator. Crucially, each integrator not only integrates sensory evidence supporting its preferred choice in a diffusion process, but also receives weighted feed-forward inhibition from evidence supporting the other two alternatives (Ditterich, 2010; see also Mazurek et al., 2003 for a similar approach). Churchland et al. (2008) proposed a slightly different approach for modeling an RDM task with four possible motion directions orthogonal to each other. Their hypothesis was that discriminating between two opposite motion directions (e.g., upper-left and lower-right) is independent of sensory evidence supporting the other two orthogonal directions (e.g., lower-left and upper-right). As a result, any sensory evidence supporting the two alternatives neighboring the true alternative was assumed to have a zero mean. The model nicely predicts a feature of their behavioral data that the probability for choosing the alternative directly opposing the true alternative is higher than that for the two alternatives neighboring the true alternative (Churchland et al., 2008). Leite and Ratcliff (2010) examined a family of models with multiple integrators in NAFC tasks with different numbers of alternatives (N = 2, 3, 4). 
Their results suggest that the models with independent integrators (i.e., no mutual inhibition) and zero to moderate decay produce qualitatively good fits to the RT distributions.

Ornstein–Uhlenbeck model

Similar to the DDM, the OU model has been proposed for 2AFC tasks (Busemeyer and Townsend, 1993), and has been applied to a variety of choice tasks to account for response accuracies and RT distributions (Heath, 1992; Diederich, 1995, 1997; Smith, 1995; Busemeyer, 2002). The OU model is identical to the DDM except that it includes a first-order filter that varies the change rate of an integrator (Busemeyer et al., 2006; Figure 2B). More precisely, the model is equivalent to a one-dimensional OU process (Uhlenbeck and Ornstein, 1930) and its dynamics can be described by the following differential equation:

$$dX(t) = \left[\lambda X(t) + \mu\right]dt + \sigma\,dW(t) \qquad (2)$$

The drift rate μ and the noise term σdW(t) have the same definitions as in Eq. 1 (see “Drift-diffusion model” above). The model contains a linear coefficient λ, a growth-decay parameter. As a result the rate of change of X(t) depends not only on the mean drift rate, but also on the current state of the integrator.

The growth-decay parameter brings some interesting properties to the OU model. First, in the TC paradigm, the response accuracy of the OU model reaches an asymptote for a large decision time Tc. Note that the same prediction can be made from the DDM by introducing variability in drift rate across trials (Ratcliff et al., 1999), and that therefore theoretically the two models can account for behavioral data equally well (but, see Ratcliff and Smith, 2004). However, recent studies suggest that the two models are distinguishable by introducing temporal uncertainty to the stimulus (Huk and Shadlen, 2005; Kiani et al., 2008; Zhou et al., 2009). Second, the value of λ can account for the serial position effects observed in decision-making tasks (Wallsten and Barton, 1982; Busemeyer and Townsend, 1993; Usher and McClelland, 2001). For λ < 0, the linear term λX(t) inhibits the integrator and the evolution of X(t) tends toward a stable attractor −μ/λ. Because evidence presented earlier in a trial decays over time, the choice mainly depends on the evidence later in the trial (a recency effect). In contrast, for λ > 0, the evolution of X(t) is repelled from the unstable fixed point −μ/λ, and the speed of repulsion is proportional to the distance between the current state X(t) and −μ/λ. Therefore, after X(t) has been driven to one side or the other of the fixed point, subsequent evidence has little effect on the final choice due to repulsion (a primacy effect). For λ = 0, the OU model reduces to the DDM and hence implements the optimal decision strategy.
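The attractor and repeller behavior can be made explicit with a short worked derivation. The sketch below assumes the OU dynamics take the standard form dX = (λX + μ)dt + σdW and considers only the mean trajectory ⟨X(t)⟩, i.e., with the noise averaged out; the symbol X* for the fixed point is notation introduced here for convenience.

```latex
\begin{aligned}
\frac{d\langle X(t)\rangle}{dt} &= \lambda \langle X(t)\rangle + \mu ,
\qquad X^{*} = -\frac{\mu}{\lambda} \quad (\lambda \neq 0), \\
\langle X(t)\rangle &= X^{*} + \left[ X(0) - X^{*} \right] e^{\lambda t} .
\end{aligned}
```

For λ < 0 the exponential factor shrinks, so any displacement from X* fades and the influence of early evidence is progressively lost. For λ > 0 the same factor grows, so an early displacement is amplified. Expanding the exponential for small λ gives ⟨X(t)⟩ → X(0) + μt, the mean trajectory of the DDM.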

Leaky-competing-accumulator model

The LCA model was proposed by Usher and McClelland (2001). Unlike the DDM and the OU model which integrate the relative evidence for one alternative compared with another, the LCA model assumes that evidence supporting different alternatives is integrated by separate integrators (Figure 2C). Therefore the LCA model can be naturally extended to account for decision tasks with multiple alternatives (Usher and McClelland, 2004; Mcmillen and Holmes, 2006; Tsetsos et al., 2011). Each integrator in the LCA model is leaky, as accumulated information continuously decays, and receives mutual inhibition from other integrators. For 2AFC tasks, the dynamics of the two integrators Y1(t) and Y2(t) can be described by:

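$$
\begin{aligned}
dY_1(t) &= \left[-kY_1(t) - wY_2(t) + \mu_1\right]dt + \sigma\,dW_1(t),\\
dY_2(t) &= \left[-kY_2(t) - wY_1(t) + \mu_2\right]dt + \sigma\,dW_2(t).
\end{aligned} \qquad (3)
$$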

Here k (k ≥ 0) denotes the rate of decay, and w (w ≥ 0) denotes the weight of mutual inhibition from the other integrator. In the absence of sensory evidence (μ1 = μ2 = 0), the two integrators will converge to zero due to the effect of decay. The additional mutual inhibition means that the integrators are not independent, as each integrator can access the evidence that supports other alternatives. The LCA model can be applied to both IC and TC paradigms. In the IC paradigm, the first integrator that reaches a decision boundary renders its preferred choice. In the TC paradigm, the decision is determined by identifying which integrator has higher activity at a decision time Tc. The model in Eq. 3 is a simplified linear version of the LCA model and the integrators’ values are unconstrained. In their original publication, Usher and McClelland (2001) assumed that the integrators’ states are transformed by using a threshold-linear activation function, which prevents any integrator from having negative values (Brown and Holmes, 2001; Brown et al., 2005). This non-linearity is motivated by the fact that activities of neural integrators can never be negative (see Boundary Mechanisms).
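The difference between the linear and the threshold-linear variants can be illustrated with a minimal simulation sketch of the IC paradigm. It assumes each integrator follows dYi = (−kYi − wYj + μi)dt + σdWi, discretized with an Euler-Maruyama step. The function name, the common boundary `bound`, the zero starting points, and the choice to apply the rectification by clipping each integrator at zero after every step are illustrative assumptions.

```python
import math
import random


def simulate_lca_ic(mu1, mu2, k, w, sigma, bound, dt=0.001,
                    rectify=True, rng=None, max_t=10.0):
    """One information-controlled trial of a two-integrator LCA model.

    Returns (choice, decision_time). choice is 1 or 2 for the integrator
    that first reaches `bound`, or 0 if neither does within max_t seconds.
    With rectify=True, integrator states are clipped at zero after each
    step (threshold-linear); with rectify=False the model is fully linear.
    """
    rng = rng or random.Random()
    noise_sd = sigma * math.sqrt(dt)
    y1, y2, t = 0.0, 0.0, 0.0
    while t < max_t:
        # Both increments are computed from the current states before updating.
        d1 = (-k * y1 - w * y2 + mu1) * dt + rng.gauss(0.0, noise_sd)
        d2 = (-k * y2 - w * y1 + mu2) * dt + rng.gauss(0.0, noise_sd)
        y1 += d1
        y2 += d2
        if rectify:
            y1 = max(y1, 0.0)
            y2 = max(y2, 0.0)
        t += dt
        if y1 >= bound or y2 >= bound:
            return (1 if y1 >= y2 else 2), t
    return 0, t
```

In the linear variant, the losing integrator can become negative, and through the inhibitory term it then boosts the winner. With rectification the loser is held at zero and this boost disappears, so the two variants can produce different decision times for the same parameters.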

The LCA model is closely related to other sequential sampling models. For w = k = 0 (no decay or inhibition), the LCA model is equivalent to a model with independent integrators, which resembles a continuous version of the accumulator or counter models (Pike, 1966; Vickers, 1970). For 2AFC tasks, the LCA model can be reduced to an OU model if both decay and inhibition are large relative to the noise strength σ (Bogacz et al., 2006, 2007). The relative difference between w and k determines the growth-decay parameter λ in the reduced OU model (λ = w − k). That is, if the inhibition is larger than the decay (w > k), the LCA model can be reduced to an OU model with λ > 0. In contrast, if the inhibition is smaller than the decay (w < k), the LCA model can be reduced to the OU model with λ < 0. Therefore, similar to the OU model with λ ≠ 0, the LCA model with unbalanced inhibition and decay (w ≠ k) can account for primacy and recency effects (Usher and McClelland, 2001). For balanced decay and inhibition (w = k), the LCA model can be approximated by the DDM and hence implements the optimal decision strategy.
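The relation λ = w − k can be checked with a short worked derivation. The sketch assumes the linear LCA dynamics dYi = (−kYi − wYj + μi)dt + σdWi with independent noise sources of equal strength, and introduces the difference x = Y1 − Y2 and the sum z = Y1 + Y2 as auxiliary variables; this notation is chosen here for illustration.

```latex
\begin{aligned}
dx(t) &= \left[ (w - k)\,x(t) + (\mu_1 - \mu_2) \right] dt
         + \sigma \left[ dW_1(t) - dW_2(t) \right], \\
dz(t) &= \left[ -(k + w)\,z(t) + (\mu_1 + \mu_2) \right] dt
         + \sigma \left[ dW_1(t) + dW_2(t) \right].
\end{aligned}
```

The difference x follows an OU process with growth-decay parameter λ = w − k and drift μ1 − μ2; its noise term has variance 2σ²dt, i.e., an effective noise strength of √2σ. The sum z relaxes toward (μ1 + μ2)/(k + w) at rate k + w, so when decay and inhibition are large the sum settles quickly and the dynamics relevant to the choice are effectively one-dimensional along x.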

Because the LCA model can mimic the DDM and the OU model within a certain parameter range, the LCA model retains the strength of the simpler models to account for detailed aspects of behavioral data from 2AFC tasks. The LCA model has also been successfully applied to perceptual decision tasks with multiple alternatives (Usher and McClelland, 2001; Tsetsos et al., 2011), and value-based decisions, in which the decisions are settled on subjective preferences, rather than perceptual information (Usher and McClelland, 2004; Usher et al., 2008).

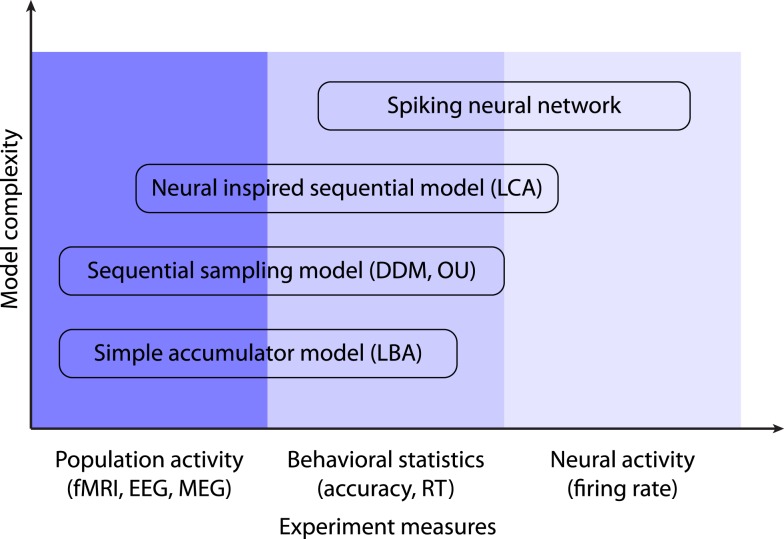

Decision-making models at different levels of complexity

The sequential sampling models do an excellent job of accounting for the variability of responses and RTs in various decision tasks. Over the decades, researchers have tended to extend existing models to account for more systematic effects (e.g., RT differences between correct and error responses) or more biologically realistic constraints (e.g., the mutual inhibition and decay in the LCA model). These attempts led to an increase in model complexity and in the number of model parameters, which, in practice, makes such models difficult to apply to experimental data. There have been several attempts to simplify existing models. For example, Wagenmakers et al. (2007) proposed a simplified version of the DDM by assuming that there is no between-trial variability, and a further simplified DDM proposed by Grasman et al. (2009) additionally assumes the starting point of the integrator is not biased toward any alternative. These simplified models can directly estimate the DDM parameters from analytical solutions without a parameter-fitting procedure.
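As an illustration of such a closed-form approach, the sketch below follows the commonly cited EZ-diffusion equations, which map the proportion of correct responses, the variance of correct RTs, and the mean of correct RTs onto a drift rate, a boundary separation, and a non-decision time. The function name, the argument names, and the conventional scaling parameter s = 0.1 are assumptions of this sketch; the equations also assume an unbiased starting point midway between the boundaries and no between-trial variability.

```python
import math


def ez_diffusion(p_correct, rt_var, rt_mean, s=0.1):
    """Closed-form estimates of (drift rate v, boundary separation a,
    non-decision time t_er) from summary statistics of a 2AFC task.

    p_correct: proportion of correct responses (not 0, 0.5, or 1)
    rt_var:    variance of correct RTs, in seconds squared
    rt_mean:   mean of correct RTs, in seconds
    s:         scaling parameter (within-trial noise), 0.1 by convention
    """
    if not 0.0 < p_correct < 1.0 or p_correct == 0.5:
        raise ValueError("p_correct must lie in (0, 1) and differ from 0.5")
    if rt_var <= 0.0:
        raise ValueError("rt_var must be positive")

    logit = math.log(p_correct / (1.0 - p_correct))
    x = logit * (logit * p_correct**2 - logit * p_correct
                 + p_correct - 0.5) / rt_var
    v = math.copysign(s * x**0.25, p_correct - 0.5)  # drift rate
    a = s**2 * logit / v                             # boundary separation
    y = -v * a / s**2
    mean_decision_time = (a / (2.0 * v)) * (1.0 - math.exp(y)) / (1.0 + math.exp(y))
    t_er = rt_mean - mean_decision_time              # non-decision time
    return v, a, t_er
```

For example, `ez_diffusion(0.802, 0.112, 0.723)` gives a drift rate of about 0.1, a boundary separation of about 0.14, and a non-decision time of about 0.3 s. Accuracies of exactly 0, 0.5, or 1 make the equations undefined, which is why the sketch rejects them rather than applying an edge correction.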

More recently, Brown and Heathcote (2008) proposed a linear ballistic accumulator (LBA) model of choice decisions (see Brown and Heathcote, 2005a for a non-linear version of the model). The LBA model has been applied to many choice tasks including perceptual discrimination (Forstmann et al., 2008, 2010a,b; Ho et al., 2009), absolute identification (Brown and Heathcote, 2008), lexical decisions (Donkin and Heathcote, 2009), and saccadic eye movements (Ludwig et al., 2009; Farrell et al., 2010). Similar to the LCA model, the LBA model assumes each integrator integrates evidence supporting one alternative and hence can be applied to NAFC tasks, but with two major simplifications. First, the integrators are independent (no mutual inhibition) and have no leakage (no decay). Second, the integration process within each trial is linear and deterministic (i.e., ballistic), omitting the within-trial variability in momentary evidence. These two assumptions greatly simplify the model dynamics and hence the LBA model has analytical solutions for RT distributions and response accuracies for NAFC tasks. This is a significant advantage in terms of computational complexity as one can estimate the model parameters without using Monte Carlo simulations. However, the strong assumptions inevitably introduce limitations. Because the integration process is assumed to be linear and deterministic, the LBA model cannot distinguish evidence arriving at different times over a trial, and hence it is not straightforward to apply the LBA model when accounting for primacy and recency effects, or any task paradigms that deliberately introduce temporal uncertainty within a trial (Usher and McClelland, 2001; Huk and Shadlen, 2005; Tsetsos et al., 2011).
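The ballistic character of the LBA model can be illustrated with a minimal single-trial sketch. It assumes the commonly used parameterization in which each integrator starts from a point drawn uniformly from [0, A], rises linearly at a rate drawn from a normal distribution with mean `v` and standard deviation `s`, and the first integrator to reach the boundary `b` determines the response. The function and parameter names, and the convention of treating an integrator with a non-positive rate as never finishing, are illustrative assumptions.

```python
import math
import random


def simulate_lba_trial(mean_drifts, s, A, b, t0=0.0, rng=None):
    """One trial of a linear ballistic accumulator race.

    mean_drifts: mean drift rate for each alternative
    s:           between-trial standard deviation of the drift rates
    A:           upper limit of the uniform start-point distribution
    b:           decision boundary (b >= A)
    Returns (choice, rt), with choices numbered from 1. If no integrator
    has a positive rate on this trial, returns (0, math.inf).
    """
    rng = rng or random.Random()
    best_choice, best_time = 0, math.inf
    for index, v in enumerate(mean_drifts, start=1):
        start = rng.uniform(0.0, A)
        rate = rng.gauss(v, s)
        if rate <= 0.0:
            continue  # this integrator never reaches the boundary
        # No within-trial noise: the finishing time is deterministic
        # once the start point and rate are drawn.
        finish = (b - start) / rate
        if finish < best_time:
            best_choice, best_time = index, finish
    if best_choice == 0:
        return 0, math.inf
    return best_choice, best_time + t0
```

Because the finishing time of each integrator is a closed-form function of two random draws, no step-by-step integration is needed. The same property means the sketch has no way to represent evidence that changes within a trial.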

Decision-making models can be used to isolate decision components (e.g., boundary and drift rates), and the estimated model parameters can then be used to inform the analysis of experimental data collected from different sources, such as fMRI or EEG/MEG signals. This model-based approach provides an invaluable way of linking latent decision processes predicted by the accumulator models with their implementations in large neural populations, and not surprisingly has attracted increasing interest over the last few years (Philiastides et al., 2006; Philiastides and Sajda, 2007; Forstmann et al., 2008, 2010b; Ho et al., 2009; Ratcliff et al., 2009; Kayser et al., 2010a,b; Wenzlaff et al., 2011). It is worth noting that all models can be used for this purpose, although simpler models are often employed because of their lower computational complexity.

However, models at a highly abstract level (e.g., the DDM and the LBA model) are not sufficient to address some more fundamental questions of decision-making, such as the neural mechanism of slow ramping activity in LIP neurons during RDM tasks, or the mechanisms of decay and inhibition in neural integrators. The answers to these questions require more detailed models at the level of single neurons (the LCA model provides a middle ground in neural plausibility between single neuron models and the DDM). Wang (2002) proposed a biophysically based spiking neuron model for perceptual decision-making. For the RDM task with two alternatives, the model assumes two LIP neural populations supporting each alternative. Instead of mutual inhibition in the LCA model, all neurons from different populations project to a common pool of inhibitory neurons, which then inhibits each population via feedback inhibitory connections. Wang (2002) proposed that evidence integration over a long timescale (on the order of several hundred milliseconds to over 1 s), as assumed by most sequential sampling models, could be realistically carried out by neural populations with recurrent excitatory connections mediated by NMDA receptors at a very short timescale (on the order of less than 100 ms). This model has been demonstrated to successfully account for the activity of LIP neurons as well as behavioral performance in the RDM tasks (Wong and Wang, 2006; Wong et al., 2007), and has recently been applied to multiple alternative decision tasks (Furman and Wang, 2008). However, although the biophysical model is important for understanding the neural mechanisms of decision processes, due to the model complexity and the large number of model parameters it could be difficult to use such a specialized model as an exploratory tool for other decision tasks, or to search through the parameter space to fit the model to RT distributions. 
Smith and McKenzie (2011) recently proposed a simplified version of Wang’s (2002) model that overcomes these difficulties. In their minimal recurrent loop model, evidence is represented by Poisson shot noise processes (Smith, 2010) and evidence integration for each alternative is represented by the superposition of Poisson processes, resembling the essential statistical features of the reverberation loops in Wang’s model. The model provides a theoretical account of how diffusion-like evidence integration at an abstract level naturally emerges from the spike densities in the recurrent loops. Further, at a cost of two more free parameters, the minimal recurrent loop model can fit the RT distributions and associated choice accuracies almost as well as the DDM (Smith and McKenzie, 2011), suggesting that the model offers a promising balance between biological plausibility and generality to predict experimental data. In summary, decision models at different levels of complexity could be useful to capture experimental data obtained from different modalities (Figure 3), and empirical researchers should choose an appropriate model that suits their research questions.

Figure 3.

The complexity and generality of the decision-making models. All models are capable of capturing basic behavioral statistics such as the RT and the response accuracy. The simple accumulator models and the sequential sampling models are suitable for describing the aggregate activity of large neural populations (e.g., fMRI or EEG/MEG signals). The most complex model (i.e., the spiking neural network) can be used to account for dynamics of neural circuits.

Theoretical Considerations of Evidence Boundaries

Boundary mechanisms

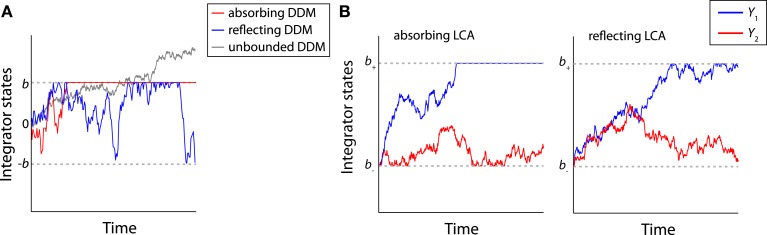

All the sequential sampling models discussed above describe a diffusion-like evidence integration during the decision process (Brown and Holmes, 2001; Brown et al., 2005). However, they need to be bundled with evidence boundaries that constrain accumulation. This section examines evidence boundaries according to two different but not mutually exclusive definitions: (1) evidence boundaries that determine the amount of accumulated evidence required to make a decision (i.e., the decision boundaries), and (2) evidence boundaries that act as barriers to the amount of accumulated evidence (Figure 4A).

Figure 4.

Time course of the integrators of the DDM and LCA model with boundaries. (A) Examples of trajectories of the absorbing (red), reflecting (blue) and unbounded (gray) DDM. Two boundaries (±b) are indicated by the gray dashed lines. (B) Examples of trajectories of the absorbing (left panel) and reflecting (right panel) LCA models. The lower (b−) and upper (b+) boundaries are indicated by the gray dashed lines.

The first type of evidence boundary, hereafter referred to as the absorbing boundary, provides an evidence criterion or threshold for the termination state of an integration process, and assumes a decision is made once accumulated evidence supporting one alternative reaches the boundary. The absorbing boundary is necessary for modeling tasks that require subjects to implement a self-initiated stopping rule (e.g., in the IC paradigm) and hence it has been widely used by many models in the choice RT modeling literature (Ratcliff, 1988, 2006; Gomez et al., 2007).

The second type of evidence boundary introduces biologically inspired constraints that limit the amount of accumulated evidence. Early decision models did not explicitly constrain activity of integrators (Ratcliff, 1978), which raised theoretical and practical concerns about the validity of the models. The theoretical concern is that unconstrained integrators imply a possibility of an unlimited amount of evidence being maintained by the model (Figure 4A). For example, in the TC paradigm, the integrator state of the DDM has infinite mean and variance as Tc approaches infinity (see Eq. 1). For the LCA model, unconstrained integrators further imply the possibility that model activation may become negative due to mutual inhibition. Unlimited or negative activations are undesirable for a biologically plausible model, because neural integrators cannot exceed certain values due to intrinsic limitations of biological neurons, and their activity should also be non-negative. These constraints need to be satisfied before attempting to extend abstract models to qualitatively account for neural firing rate patterns during the decision process (Usher and McClelland, 2001; Ratcliff et al., 2003a; Huk and Shadlen, 2005; Ditterich, 2006; Purcell et al., 2010).

The practical concern is that models with unconstrained integrators may not fit experimental data well. In the TC paradigm, the ER of the DDM with an unconstrained integrator diminishes to zero for a large decision time Tc (without between-trial variability), and hence the model predicts that subjects can achieve arbitrarily small ER even for difficult tasks. Nevertheless, it is known that humans cannot achieve 100% accuracy even for large Tc (Meyer et al., 1988; Usher and McClelland, 2001). Furthermore, negative activation in the LCA model may result in abnormal model predictions. Bogacz et al. (2007) showed that in a multi-alternative decision task, if the inputs to an LCA model favor only a small subset of possible alternatives, integrators favoring irrelevant choices (i.e., those that do not receive inputs) would become negative and send uninformative positive evidence via mutual inhibition to the relevant competing integrators (i.e., those receiving inputs). As a result the LCA model without truncation of negative activation may select inferior alternatives in value-based decisions (Usher and McClelland, 2004; Usher et al., 2008), and provide qualitatively poorer fits to experimental data than the models with non-negative evidence only (Leite and Ratcliff, 2010). The same problem also exists in models with feed-forward inhibitory connections (van Ravenzwaaij et al., 2012).
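
Both concerns can be made explicit with a short worked calculation. The sketch below assumes that Eq. 1 takes the standard constant-drift form with drift μ > 0, noise σ, and initial state x(0) = 0; the symbol Φ (the standard normal cumulative distribution function) is notation introduced here for illustration.

$$
dx = \mu\,dt + \sigma\,dW,\quad x(0)=0
\;\;\Longrightarrow\;\;
x(T_c) \sim \mathcal{N}\!\left(\mu T_c,\; \sigma^{2} T_c\right)
$$

$$
\mathrm{ER}(T_c) = P\!\left[x(T_c) < 0\right]
= \Phi\!\left(-\frac{\mu\sqrt{T_c}}{\sigma}\right)
\;\longrightarrow\; 0 \quad \text{as } T_c \to \infty
$$

Under these assumptions the mean and variance of the integrator state both grow linearly with Tc, and the predicted error rate vanishes for any positive drift, however small, which is the behavior that the empirical asymptote in accuracy contradicts.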

One way to introduce constraints is to transform the integrator state through a non-linear activation function (Brown and Holmes, 2001; Usher and McClelland, 2001; Brown et al., 2005), or to assume a high level of baseline activity to avoid negative activations (van Ravenzwaaij et al., 2012). A simpler approach, without losing the explicit nature and tractability of a linear system and yet offering a good approximation of the non-linear activation functions, is to introduce explicit evidence boundaries to existing models. This type of boundary is hereafter referred to as the reflecting boundary (Diederich, 1995; Bogacz et al., 2007; Zhang et al., 2009; Zhang and Bogacz, 2010a; Smith and McKenzie, 2011). The reflecting boundary only constrains the maximum or minimum amount of evidence that can be represented by an integrator (much as a non-linear activation function provides cutoffs at high or low activations), but unlike the absorbing boundary, reaching a reflecting boundary does not terminate the integration process (Figure 4A).

Both types of boundary mechanisms have been applied to various decision models (Ratcliff, 2006; Bogacz et al., 2007; Zhang et al., 2009; Zhang and Bogacz, 2010a; Tsetsos et al., 2011; van Ravenzwaaij et al., 2012). The decision models with boundaries are hereafter referred to as bounded, and the models without a boundary as unbounded. For the DDM and the OU model, when there is no bias toward either alternative, two symmetric absorbing or reflecting boundaries (±b) can be imposed to limit the integrator’s activity (Figure 4A). For simplicity, the terms absorbing DDM and absorbing OU model are used when the two absorbing boundaries apply to the models, and the reflecting DDM and reflecting OU model when referring to models with two reflecting boundaries. For an LCA model with multiple integrators, if one assumes that integrators cannot have arbitrarily large or negative values, then two boundary conditions need to be applied to each integrator (Figure 4B). First, each integrator requires one lower boundary b− at zero to constrain the minimum activity to be non-negative (Bogacz et al., 2007). This lower boundary needs to be a reflecting boundary, since otherwise the model may not render a decision (i.e., if the lower boundary is absorbing, activities of all integrators could be fixed at the boundary). Second, each integrator requires one upper boundary b+ (b+ > 0) to limit the maximum activity. The upper boundary b+ could be either absorbing or reflecting. The LCA model with an absorbing boundary at b+ is referred to as the absorbing LCA model, and the model with a reflecting boundary at b+ as the reflecting LCA model. Table 1 summarizes the bounded decision models discussed above and their properties.
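
The difference between the two boundary types can be illustrated with a small simulation of the DDM in the TC paradigm. This is a minimal sketch, not the implementation used in the cited studies: it assumes an Euler discretization of a constant-drift diffusion, models the reflecting boundary as simple truncation of the integrator state at ±b, and borrows the parameter values from the Figure 5 caption. The function names and the step size `dt` are illustrative choices.

```python
import random


def simulate_bounded_ddm(boundary, mu=0.71, sigma=1.0, b=0.47, t_c=1.0,
                         dt=0.01, rng=None):
    """Return the integrator state at time t_c for one simulated trial.

    boundary: "absorbing", "reflecting", or None (unbounded).
    """
    if rng is None:
        rng = random.Random()
    x = 0.0
    for _ in range(int(round(t_c / dt))):
        # Euler step of dx = mu*dt + sigma*dW (assumed form of the DDM).
        x += mu * dt + sigma * dt ** 0.5 * rng.gauss(0.0, 1.0)
        if boundary == "absorbing" and abs(x) >= b:
            # Integration terminates; the state stays at the boundary it hit.
            return b if x > 0 else -b
        if boundary == "reflecting":
            # Evidence beyond the boundary is discarded; integration continues.
            x = max(-b, min(b, x))
    return x


def error_rate(boundary, n_trials=2000, seed=1, **params):
    """Fraction of trials whose final state favors the wrong alternative.

    With mu > 0 the correct alternative corresponds to a positive state.
    """
    rng = random.Random(seed)
    errors = sum(simulate_bounded_ddm(boundary, rng=rng, **params) < 0
                 for _ in range(n_trials))
    return errors / n_trials


if __name__ == "__main__":
    for kind in (None, "absorbing", "reflecting"):
        print(kind, error_rate(kind))
```

In the absorbing case the function returns as soon as a boundary is touched, whereas in the reflecting case the loop always runs until Tc, so a choice is only read out at the deadline.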

Table 1.

Properties of the sequential sampling models with and without boundaries.

| Model | Boundary | Primacy | Recency | Optimality | TC paradigm | IC paradigm |
|---|---|---|---|---|---|---|
| DDM | Unbound | – | – | Optimal | ✓ | ✓ |
| | Absorbing | ✓ | – | – | ✓ | ✓ |
| | Reflecting | – | ✓ | – | ✓ | – |
| OU | Unbound | λ > 0 | λ < 0 | λ = 0 | ✓ | ✓ |
| | Absorbing | Various | λ < 0 | λ < 0 | ✓ | ✓ |
| | Reflecting | λ > 0 | Various | λ > 0 | ✓ | – |
| LCA | Unbound | w > k | w < k | k = w | ✓ | ✓ |
| | Lower-bound | w > k | w < k | Unknown | ✓ | ✓ |
| | Absorbing | Unknown | Unknown | w < k | ✓ | ✓ |
| | Reflecting | Unknown | Unknown | w > k | ✓ | – |

The lower-bound LCA model refers to the LCA model that has only a lower reflecting boundary at zero and no upper boundary.

It is worth noting that models with absorbing boundaries provide a unified account for both IC and TC paradigms (Ratcliff and McKoon, 2008), because contact with absorbing boundaries induces a decision. In contrast, models with pure reflecting boundaries require an external criterion to stop (e.g., decision deadline Tc), and hence they apply only to the TC paradigm and cannot account for the IC paradigm. Although the pure reflecting model may be criticized for its lack of generality, it is necessary to consider the models with pure reflecting boundaries alongside models with absorbing boundaries in order to illustrate some complementary properties of the two types of boundary. First, absorbing boundaries, together with the reflecting boundaries, provide a simple solution for primacy and recency effects in different models (see Primacy and Recency Effects). Second, the two types of boundary could characterize different decision strategies in the TC paradigm (Zhang and Bogacz, 2010a). The absorbing boundary implies that subjects make their choice before the response deadline (i.e., once the absorbing boundary is reached) and then withhold their response until the deadline. The reflecting boundary implies that subjects continuously hesitate between the choices even when sufficient evidence is available (i.e., when the reflecting boundary is reached) and may change their decision later. Whether subjects adopt one of the two strategies, or are able to switch between the two (see Tsetsos et al., 2012), would be an interesting question for future research.

Primacy and recency effects

The unbounded DDM integrates evidence independent of the current integrator state (Eq. 1), and hence the model implies that influence of sensory evidence on the final choice does not depend on the timing of its occurrence (i.e., neither primacy nor recency). One recent study suggests that the DDM can account for primacy and recency effects by introducing the two types of boundaries (Zhang et al., 2009). For the absorbing DDM, if a boundary is reached before decision time, the preferred decision is determined and only evidence occurring prior to the boundary hit contributes to the integration process, indicating a primacy effect. For the reflecting DDM, each boundary hit results in a partial loss of evidence, since the integrator does not fully integrate momentary evidence that would otherwise exceed the boundary. As a result, the momentary evidence arriving earlier is partially lost and on average a decision depends to a greater extent on later evidence, indicating a recency effect (Figure 5A). A further study indicates that the primacy/recency effects introduced by the two types of boundaries can coincide and interact with the effects introduced by the growth-decay parameter λ in a bounded OU model (Zhang and Bogacz, 2010a). If the boundary and λ provide the same effect, the joint primacy/recency effect of the bounded OU model is maintained. On the contrary, the joint effect of the bounded OU model is weakened or canceled if λ and the boundary present opposite effects (Figures 5B,C). For example, for λ > 0 (primacy effect), an OU model with absorbing boundaries (also the primacy effect) will also exhibit a strong primacy effect, but an OU model with reflecting boundaries will show a weaker effect. There is as yet no study systematically reporting primacy and recency effects in the bounded LCA model. 
Given the close relationship between the LCA model and the OU model, one may expect that the primacy/recency effects of the bounded LCA model are jointly determined by the type of boundary and the values of the inhibition and decay parameters. Recent studies (Tsetsos et al., 2011, 2012) demonstrate that the LCA model with only a lower reflecting boundary shows a strong primacy effect when the inhibition is large relative to the decay (w > k), and a recency effect when the inhibition is small relative to the decay (w < k), consistent with results obtained from the unbounded LCA model.
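
The primacy and recency effects of the two bounded DDMs can be probed by averaging the momentary evidence over correct trials at each time step, following the procedure described in the Figure 5 caption. The code below is a hedged sketch of that analysis rather than the original simulation code: it assumes an Euler discretization, truncation at ±b for the reflecting boundary, and a reduced number of trials; the function name and the step size are illustrative.

```python
import random


def mean_evidence_on_correct_trials(boundary, mu=0.71, sigma=1.0, b=0.47,
                                    t_c=1.0, dt=0.01, n_trials=3000, seed=0):
    """Average the momentary evidence at each time step over correct trials.

    boundary: "absorbing" or "reflecting". A larger average at a given step
    means that evidence at that time had more influence on the final choice.
    """
    rng = random.Random(seed)
    n_steps = int(round(t_c / dt))
    sums = [0.0] * n_steps
    n_correct = 0
    for _ in range(n_trials):
        x, absorbed = 0.0, False
        evidence = []
        for _ in range(n_steps):
            e = mu * dt + sigma * dt ** 0.5 * rng.gauss(0.0, 1.0)
            evidence.append(e)          # the stimulus continues until t_c
            if absorbed:
                continue                # integrator frozen after a boundary hit
            x += e
            if boundary == "absorbing" and abs(x) >= b:
                absorbed = True
            elif boundary == "reflecting":
                x = max(-b, min(b, x))  # excess evidence is lost
        if x > 0:                       # correct choice, because mu > 0
            n_correct += 1
            for t, e in enumerate(evidence):
                sums[t] += e
    return [s / n_correct for s in sums]


if __name__ == "__main__":
    for kind in ("absorbing", "reflecting"):
        profile = mean_evidence_on_correct_trials(kind)
        q = len(profile) // 4
        print(kind, "early:", sum(profile[:q]) / q, "late:", sum(profile[-q:]) / q)
```

With these settings the absorbing model should weight the first quarter of the trial more heavily than the last quarter, and the reflecting model should show the opposite ordering.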

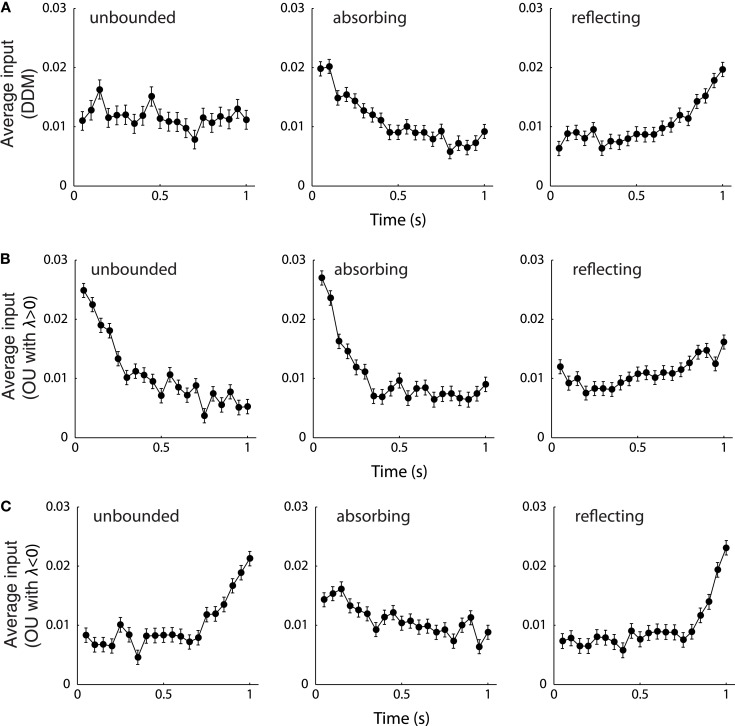

Figure 5.

The primacy and recency effects of the DDM and OU model. (A) The bounded and unbounded DDM. (B) The bounded and unbounded OU models with λ > 0. (C) The bounded and unbounded OU models with λ < 0. All the models were simulated with μ = 0.71 s⁻¹, σ = 1 s⁻¹, b = 0.47, and Tc = 1 s. The growth-decay parameter of the OU models was set to λ = 5.5 (B) and λ = −5.5 (C). In each panel, the model was simulated for 10,000 trials, and the sensory evidence from all correct trials was recorded and averaged. The data points show the means and standard errors of the sensory evidence at every time step. For μ > 0, a larger averaged input indicates that the sensory evidence at that time point has, on average, a larger influence on the final choice, and a smaller averaged input indicates that the choice depends to a lesser extent on the evidence at that time. Figure modified from Zhang and Bogacz (2010a).

This section has shown that primacy and recency effects can be readily produced by evidence boundaries or their interactions with other model parameters. Nevertheless, existing experimental data is insufficient to demonstrate the strength of these effects in the way predicted by the models. An ideal paradigm to systematically investigate and differentiate these effects would be a decision task using time-varying evidence, which favors one alternative early in a trial and another alternative later in a trial. However, the interpretation of results from such an experiment would need to proceed cautiously because of potential confounds. First, if non-stationary stimuli extend for a long period of time (as in the expanded judgment paradigm, see Pietsch and Vickers, 1997), the observed primacy/recency effects may be to some extent associated with additional attention or working memory processes. Second, if non-stationarity in the evidence is apparent to subjects, they may consciously change their decision strategy. Several studies on rapid perceptual decisions avoided these methodological problems by using carefully designed paradigms. Brown and Heathcote (2005b) presented strong prime stimuli for a very short time and used a metacontrast mask to ensure subjects were not consciously aware of the non-stationarity. They showed that early evidence is weighted less in a perceptual decision task (i.e., the integration is leaky), but the leakage quickly decreased with practice. In Usher and McClelland’s (2001) study, primacy/recency effects were tested with fast visual streams of alternating letters lasting for only 256 ms. They randomly mixed shorter trials with non-stationary evidence and longer trials with constant evidence. Such a design encouraged subjects to estimate the entire sequence of the non-stationary evidence, because making decisions on only a fraction of early evidence would result in low performance on longer trials. 
Their results suggest a general recency effect with strong individual differences, although the source of the large between-subject variability has not yet been identified.

Performance of the bounded decision-making models

Several studies have reported significant improvements in model fit by introducing evidence boundaries. Ratcliff (2006) fitted the DDM and the LCA model to data from a categorization task in which subjects were required to decide whether the number of dots on the screen was large or small. The absorbing DDM and absorbing LCA model provided much better fits than the unbounded models, in particular for the TC paradigm with very short or long decision times. Another study showed that for a shape discrimination task (Usher and McClelland, 2001), the behavioral data is better accounted for by the bounded DDM than by the unbounded OU model (Zhang et al., 2009). Leite and Ratcliff (2010) showed that the LCA model with a reflecting boundary at zero produced better fits to the RT distributions than the unbounded model in perceptual decision tasks with different numbers of alternatives. Zhang et al. (2009) observed that for a given set of model parameters, the ER of the absorbing and reflecting DDM are identical at any decision time. Therefore, although the two types of boundary influence the model dynamics, and weight the order of the momentary evidence in different ways, the two bounded DDMs can fit the experimental data from the TC paradigm equally well. A similar equivalence between absorbing and reflecting OU models has also been observed (Zhang and Bogacz, 2010a).

The successful applications of the bounded models prompt us to consider how different types of evidence boundaries may affect the models’ performance. For the IC paradigm, adding lower reflecting boundaries at zero generally decreases mean RT of the LCA model for a given ER, and this change is more significant for decision tasks with multiple alternatives (Bogacz et al., 2007; Leite and Ratcliff, 2010). Increasing the upper boundary in the absorbing LCA model, or the distance between the two boundaries in the absorbing DDM and absorbing OU model, leads to an increase in the mean and variance of RT distributions (Wagenmakers et al., 2005) and a decrease of ER (i.e., trading speed for accuracy, see Fast Boundary Modulation: Speed–Accuracy Tradeoff). For the TC paradigm, the bounded DDM has an asymptotic accuracy as Tc increases, which is consistent with experimental observations (Meyer et al., 1988; Usher and McClelland, 2001). Increasing boundary separation in the bounded DDM monotonically decreases the ER for a given decision time, until the boundary is sufficiently large that the integrator can barely reach the boundary before Tc, and under this condition the bounded DDM is equivalent to the unbounded DDM (Zhang et al., 2009; Leite and Ratcliff, 2010). Interestingly, the relationship between the evidence boundary and the ER is not monotonic in the bounded OU model (Zhang and Bogacz, 2010a). For the OU model with a negative λ value, a finite absorbing boundary yields lower ER than the unbounded OU model. In contrast, a finite reflecting boundary lowers the ER for the OU model with a positive λ value (Figure 6A). Simulation results suggested that as Tc increases, the value of λ that yields the lowest ER decreases for the absorbing OU model and increases for the reflecting OU model (Figure 6B). This relationship can be explained by the joint primacy/recency effects from the boundary and the λ value of the bounded OU model (see Primacy and Recency Effects). 
Recall that the optimal decision strategy, as suggested by the SPRT and NPT, would be to equally weight the momentary evidence received at different time points (i.e., no primacy or recency effects). The bounded OU model approximates the optimal strategy when the primacy/recency effects introduced by the boundary and λ are balanced. That is, the absorbing OU model needs to be coupled with negative λ and the reflecting OU model needs to be coupled with positive λ. The relative strengths of the primacy/recency effects introduced by the boundary and λ values deserve further research.
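
As a reference point for these results, the error rate of the unbounded OU model in the TC paradigm has a closed form, and bounded variants can be explored by simulation. The sketch below assumes the OU dynamics dx = (λx + μ)dt + σdW with x(0) = 0, which is consistent with the sign convention used in this article (λ > 0 for growth, λ < 0 for decay) but is not reproduced from it. The reflecting boundary is modeled as truncation at ±b, and the function names, boundary value, and step size are illustrative.

```python
import math
import random


def ou_unbounded_error_rate(lam, mu=1.0, sigma=1.0, t_c=1.0):
    """Closed-form ER of the unbounded OU model at time t_c."""
    if abs(lam) < 1e-12:
        ratio = (mu / sigma) * math.sqrt(t_c)  # DDM limit (lambda = 0)
    else:
        mean = mu * (math.exp(lam * t_c) - 1.0) / lam
        var = sigma ** 2 * (math.exp(2.0 * lam * t_c) - 1.0) / (2.0 * lam)
        ratio = mean / math.sqrt(var)
    # ER = Phi(-ratio), written with the error function.
    return 0.5 * (1.0 + math.erf(-ratio / math.sqrt(2.0)))


def ou_simulated_error_rate(lam, boundary=None, b=1.0, mu=1.0, sigma=1.0,
                            t_c=1.0, dt=0.01, n_trials=2000, seed=2):
    """Monte Carlo ER; boundary is None, "absorbing", or "reflecting"."""
    rng = random.Random(seed)
    n_steps = int(round(t_c / dt))
    errors = 0
    for _ in range(n_trials):
        x = 0.0
        for _ in range(n_steps):
            noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            x += (lam * x + mu) * dt + noise
            if boundary == "absorbing" and abs(x) >= b:
                break                      # choice fixed at the first crossing
            if boundary == "reflecting":
                x = max(-b, min(b, x))     # state truncated, integration continues
        errors += x < 0                    # mu > 0, so a negative state is an error
    return errors / n_trials


if __name__ == "__main__":
    for lam in (-3.0, 0.0, 3.0):
        print(lam, ou_unbounded_error_rate(lam),
              ou_simulated_error_rate(lam, "absorbing"),
              ou_simulated_error_rate(lam, "reflecting"))
```

Under these assumptions the closed-form error rate of the unbounded model is lowest at λ = 0 and depends only on the magnitude of λ, so any asymmetry between positive and negative λ in the simulated bounded models is attributable to the boundary.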

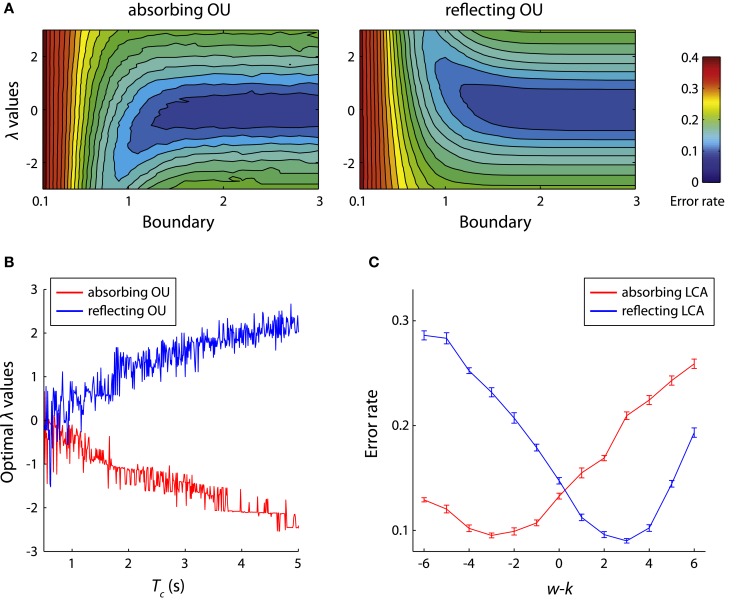

Figure 6.

Performance of the bounded models. (A) The error rates of the absorbing (left) and reflecting (right) OU models in the TC paradigm. The bounded OU models are simulated with the following parameters: λ in (−3, 3) with step 0.1, b in (0.1, 3) with step 0.1, μ = σ = 1 s⁻¹, and Tc = 1 s. The contour plots illustrate the mean error rates of the bounded OU models estimated from 10,000 simulations for each parameter combination. Figure modified from Zhang and Bogacz (2010a). (B) The estimated optimal λ values of the absorbing and reflecting OU models that yield minimum error rate for different Tc varying from 0.5 to 5 s. Figure modified from Zhang and Bogacz (2010a). (C) The error rates of the bounded LCA model. The models were simulated with parameters: μ1 = 5.41 s⁻¹, μ2 = 4 s⁻¹, σ = 1 s⁻¹, b+ = 1.5, b− = 0, and Tc = 3 s. The sum of decay and inhibition was fixed at w + k = 6, while their difference changed from −6 to 6.

The findings from one-dimensional bounded models provide clues to the understanding of performance of the bounded LCA model. Recall that the unbounded LCA model implements the optimal decision strategy when the decay and inhibition are balanced (w = k), i.e., when the LCA model is reduced to the DDM. Bogacz et al. (2007) showed that the balance of decay and inhibition does not optimize the performance of the bounded LCA model in the TC paradigm. Instead, by decreasing inhibition relative to decay (w < k) the absorbing LCA model can achieve lower ER. Conversely, the reflecting LCA model has lower ER when inhibition is larger than decay (w > k; Figure 6C). The symmetric relationship between the absorbing and reflecting LCA models is analogous to that of bounded OU models with positive and negative λ. Therefore it is possible that the bounded LCA model can be reduced to the bounded OU model for certain parameters (cf. van Ravenzwaaij et al., 2012). Bogacz et al. (2007) also suggest that by limiting the integrator states to be non-negative, the absorbing LCA model can approximate the asymptotically optimal decision strategy (Dragalin et al., 1999, 2000) for multiple alternative tasks (Bogacz and Gurney, 2007).
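
The bounded LCA model described above can be sketched in a few lines. The code assumes the commonly used LCA dynamics, in which each integrator receives its input, leaks at rate k, and is inhibited with weight w by the other integrators; the lower boundary at zero and the reflecting upper boundary are modeled as truncation. Parameter defaults follow the Figure 6C caption, while the function names, step size, trial count, and the random tie-breaking rule are illustrative assumptions.

```python
import random


def simulate_bounded_lca(upper, inputs=(5.41, 4.0), w=3.0, k=3.0, sigma=1.0,
                         b_upper=1.5, t_c=3.0, dt=0.01, rng=None):
    """Return the index of the chosen alternative for one TC-paradigm trial.

    upper: "absorbing" or "reflecting" (type of the upper boundary b+).
    The lower boundary b- = 0 is always reflecting.
    """
    if rng is None:
        rng = random.Random()
    n = len(inputs)
    x = [0.0] * n
    for _ in range(int(round(t_c / dt))):
        total = sum(x)
        new_x = []
        for i in range(n):
            # Leak (k) on own activity, inhibition (w) from all other integrators.
            drift = inputs[i] - k * x[i] - w * (total - x[i])
            xi = x[i] + drift * dt + sigma * dt ** 0.5 * rng.gauss(0.0, 1.0)
            xi = max(0.0, xi)              # reflecting lower boundary at zero
            if upper == "reflecting":
                xi = min(b_upper, xi)      # reflecting upper boundary
            new_x.append(xi)
        x = new_x
        if upper == "absorbing" and max(x) >= b_upper:
            break                          # first crossing ends integration
    best = max(x)
    # The most active integrator wins; exact ties are broken at random.
    return rng.choice([i for i in range(n) if x[i] == best])


def lca_error_rate(upper, n_trials=500, seed=3, **params):
    """Fraction of trials on which the alternative with the largest input
    (index 0 under the default inputs) is not chosen."""
    rng = random.Random(seed)
    wrong = sum(simulate_bounded_lca(upper, rng=rng, **params) != 0
                for _ in range(n_trials))
    return wrong / n_trials


if __name__ == "__main__":
    for upper in ("absorbing", "reflecting"):
        for w, k in ((2.0, 4.0), (3.0, 3.0), (4.0, 2.0)):
            print(upper, "w - k =", w - k, lca_error_rate(upper, w=w, k=k))
```

Sweeping w − k while holding w + k = 6, as in the usage lines, mirrors the manipulation described for Figure 6C, although a much larger number of trials would be needed to resolve differences between conditions reliably.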

Neural Implementation of Decision Boundary

How is the decision boundary realized in neural circuits? In the minimal recurrent loop model by Smith and McKenzie (2011), the decision boundary is implemented by an interaction between the recurrent loops and separate decision neurons. The decision neurons receive spiking inputs from the recurrent loops that represent the accumulated evidence. A decision is rendered as soon as the membrane potential of one decision neuron reaches a threshold. This mechanism predicts a causal link between the firing of decision neurons and overt actions. But an important question remains: where in the brain is the decision boundary implemented?

One possibility, the local hypothesis, is that the decision boundary is implemented within neural integrators. Wong and Wang (2006) studied a simplified version of the biologically based model of Wang (2002) by using mean-field theory. Their analysis showed that if neural integrators are mediated by recurrent excitatory connections between spiking neurons, the dynamics of neural integrators may contain multiple stable attractor states, which act as implicit decision boundaries to terminate integration processes. This model successfully accounts for psychophysical data and LIP neural activity in RDM tasks (Wong and Wang, 2006; Wong et al., 2007). However, previous studies using the RDM task or other visual discrimination tasks have identified putative neural integrators in the FEF (Hanes and Schall, 1996; Schall and Thompson, 1999; Schall, 2002), the SC (Basso and Wurtz, 1998; Ratcliff et al., 2003a), and the DLPFC (Kim and Shadlen, 1999; Domenech and Dreher, 2010), which exhibit activity patterns similar to LIP neurons. A recent study showed that the inferior frontal sulcus is also likely to integrate evidence from multiple sensory modalities (Noppeney et al., 2010). Therefore, multiple neural integrators may coexist in different brain regions and may be simultaneously functioning during a decision process, though we do not know whether the neural integrators across different regions are independent or are more likely to interact with each other. If the local hypothesis is correct, it is not yet clear whether observed boundary crossing in one integrator region has a causal role in rendering a decision, or could merely reflect terminal integration in other integrator regions. Further experiments testing the activity of neural integrators in predefined regions under different decision tasks are necessary to confirm this hypothesis.

An alternative possibility, the central hypothesis, proposes that detection of boundary crossing is implemented by a central neural circuit outside integrator regions, rather than being an intrinsic property of neural integrators. This hypothesis predicts that a central circuit is capable of detecting boundary crossing in integrators within different regions. One potential component of the central circuit is the basal ganglia (BG) because of its unique anatomy. First, the two BG input nuclei, the striatum and the subthalamic nucleus, receive direct inputs from multiple cortical regions including the LIP, FEF, and DLPFC (Smith et al., 1998; Hikosaka et al., 2000; Nakano et al., 2000). Second, most BG nuclei are organized in separate somatotopic areas representing different body parts, and each broad somatotopic area is further subdivided into functionally defined parallel channels, based upon specific movements of an individual body part (Alexander et al., 1986, 1990; Parent and Hazrati, 1995). Therefore the BG can access a number of information sources from the cortex and control complex motor responses, which makes the BG an important locus of action selection, reinforcement learning, and motor control (Karabelas and Moschovakis, 1985; Graybiel et al., 1994; Gurney et al., 2001a,b; Frank et al., 2004; Samejima et al., 2005). Lo and Wang (2006) proposed that detection of boundary crossing is implemented through a BG-SC pathway. By default the BG output nuclei send tonic inhibition (Hopkins and Niessen, 1976; Francois et al., 1984; Karabelas and Moschovakis, 1985) to downstream motor areas (e.g., the SC) to suppress any saccadic response. When the activity of a neural integrator (e.g., LIP neurons) is large enough, the striatum inhibits BG output nuclei and hence releases the SC from inhibition. The boundary crossing is then detected by burst neurons (Munoz and Wurtz, 1995) in the SC through an all-or-nothing burst signal. 
Bogacz and Gurney (2007) showed that the BG is necessary for the brain to implement an asymptotically optimal decision strategy for NAFC tasks. Nevertheless, although Lo and Wang (2006) demonstrated that the central hypothesis can be implemented by the BG-SC circuit, the model relies on the unique burst property of the SC neurons to detect boundary crossing, which is primarily associated with eye movements. It is not clear whether the same mechanism can be applied to decision tasks requiring other response modalities (e.g., Ho et al., 2009), or tasks which require subjects to withhold their responses before a response signal (i.e., the TC paradigm).

Taken together, although convincing data exists for the presence of neural integrators in the cortex, current findings are inconclusive regarding the neural implementation of decision boundaries. Part of the difficulty in investigating the boundary mechanism is that decision neurons may exhibit task-modulated ramping activity that is similar to that of neural integrators, if there exist positive feedback connections between the decision neurons and the integrators (Simen, 2012). As a result the two processes may be indistinguishable solely by the observation of ramping activity from neural recording data.

Effects of Boundary Changes

The decision boundary is usually assumed to be under subjective control. On one hand, the decision boundary should be stable with regard to sensory evidence, enabling subjects to respond consistently when faced with similar environments or goals. The stability of the decision boundary is evident from the fact that in both IC and TC versions of the RDM tasks, LIP neurons attain the same level of activity before saccadic responses, independent of motion coherence (Shadlen and Newsome, 2001; Roitman and Shadlen, 2002). On the other hand, the decision boundary may also exhibit a certain degree of flexibility, allowing subjects to tailor their responses on demand, or accounting for changes in some internally driven factors. This section reviews psychological and physiological factors that could be modulated by changes in the decision boundary at different time scales.

Fast boundary modulation: Speed–accuracy tradeoff

The change in decision boundary provides a straightforward account of the speed–accuracy tradeoff (SAT) effect that is often observed in decision-making tasks (Schouten and Bekker, 1967; Wickelgren, 1977; Luce, 1986; Franks et al., 2003; Chittka et al., 2009). For the DDM and the OU model (Figure 7A), decreasing the distance between two decision boundaries reduces the amount of accumulated evidence prior to a decision, leading to fast but error-prone responses. Conversely, increasing the distance between boundaries leads to slow but accurate decisions. For the LCA model or other models that have multiple integrators (e.g., the LBA model), the SAT can be manipulated by changing either the upper boundary (Figure 7B) or the lower baseline activity at the beginning of the trial (Figure 7C) (Bogacz et al., 2010b). Behavioral studies suggest that subjects can effectively trade speed for accuracy when instructed to respond as accurately as possible, or vice versa when instructed to respond as quickly as possible, and the behavioral differences between speed and accuracy instructions can be explained by a change of decision boundaries in the DDM (Palmer et al., 2005; Ratcliff, 2006; Ratcliff and McKoon, 2008). In a similar attempt to study SAT using the LBA model, Forstmann et al. (2008) observed that SAT in the RDM task can be best accounted for by a change in the decision boundary, not by changes of the drift rate or other model parameters. It has been suggested that humans can set the SAT to maximize the reward rate (producing the most correct decisions in a given period of time) by learning the optimal decision boundaries through feedback (Simen et al., 2006, 2009; Bogacz et al., 2010a; Starns and Ratcliff, 2010; Balci et al., 2011). 
Furthermore, impairments in the optimization of the SAT in neuropsychiatric patients with impulsive behaviors, such as attention-deficit hyperactivity disorder, have been associated with maladaptive regulation of the decision boundary in perceptual tasks (Mulder et al., 2010).
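
The tradeoff can be made concrete with the standard first-passage expressions for a constant-drift diffusion with symmetric absorbing boundaries at ±b and an unbiased starting point. The sketch below is illustrative only: the formulas are the textbook results for this idealized model (no between-trial variability, non-decision time excluded), the drift and noise values are borrowed from the Figure 5 caption, and the function names are hypothetical.

```python
import math


def ddm_error_rate(b, mu=0.71, sigma=1.0):
    """ER of an unbiased DDM with absorbing boundaries at +/-b (IC paradigm)."""
    return 1.0 / (1.0 + math.exp(2.0 * mu * b / sigma ** 2))


def ddm_mean_decision_time(b, mu=0.71, sigma=1.0):
    """Mean decision time (s) of the same model, excluding non-decision time."""
    return (b / mu) * math.tanh(mu * b / sigma ** 2)


if __name__ == "__main__":
    # Raising the boundary trades speed for accuracy.
    for b in (0.25, 0.5, 1.0, 2.0):
        print(f"b={b:4.2f}  ER={ddm_error_rate(b):.3f}  "
              f"mean DT={ddm_mean_decision_time(b):.3f} s")
```

Both quantities are monotonic in b, so under these assumptions a single boundary parameter moves the model along the whole speed–accuracy curve: a larger b gives a lower ER at the cost of a longer mean decision time.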

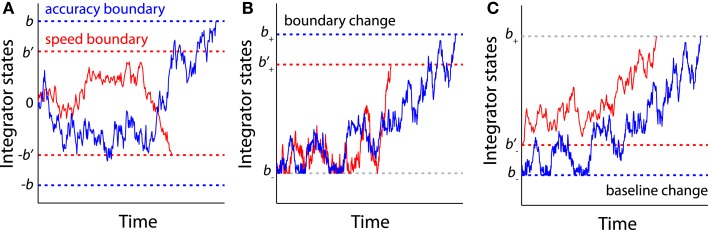

Figure 7.

The sequential sampling models account for SAT. (A) For the models with a single integrator (e.g., the DDM and the OU model), increasing the distance between two boundaries (blue boundaries ±b) leads to slow but accurate decisions, while decreasing the boundary distance (red boundaries ±b’) leads to fast but risky decisions. (B) For the models with multiple integrators (e.g., the LCA model), the SAT can be accounted for by changes in the upper boundary (b+ and b’+). (C) The SAT can also be accounted for by changes in the lower baseline activity (b− and b’−).

Can we consider the SAT as a signature for identifying neural correlates of decision boundaries? Several recent fMRI studies reveal brain regions associated with the SAT, including the SMA, the pre-SMA, the anterior cingulate cortex, the striatum, and the DLPFC (Forstmann et al., 2008; Ivanoff et al., 2008; van Veen et al., 2008; Blumen et al., 2011; van Maanen et al., 2011; for review, see Bogacz et al., 2010b; Figure 8A). Using a model-based fMRI analysis, Forstmann et al. (2008) showed that the extent of response facilitation for the speed condition in the RDM task, as quantified by a decrease of the decision boundary in the LBA model, correlated with BOLD response increase in the pre-SMA and striatum between the speed and the accuracy conditions (Figure 8B). Further studies suggest that the strength of structural connectivity between the two regions predicts the amount of boundary change in individual subjects (Forstmann et al., 2010a, 2011; Figure 8C). These results support the central hypothesis that the BG circuit is involved in controlling the decision boundary (Lo and Wang, 2006; Bogacz et al., 2010b).

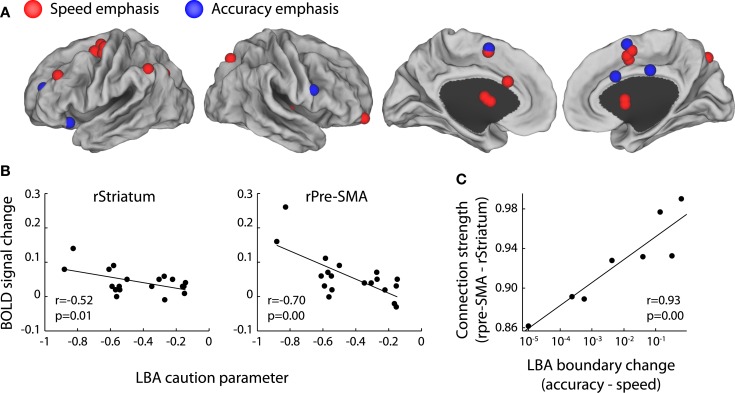

Figure 8.

The neural correlates of the SAT. (A) Brain regions associated with the SAT are projected onto a cortical surface using Caret software (Van Essen et al., 2001). The foci represent the coordinates of the peak voxels reported by four fMRI studies (Forstmann et al., 2008; Ivanoff et al., 2008; van Veen et al., 2008; van Maanen et al., 2011). All the studies manipulated the SAT of perceptual decision tasks by speed emphasis or accuracy emphasis. The red foci illustrate increased BOLD response with speed emphasis and the blue foci illustrate increased BOLD response with accuracy emphasis. (B) In the RDM task, the BOLD response increases in the right pre-SMA and the right striatum in the speed versus the accuracy condition. These BOLD response changes are associated with decreases in the response caution parameter, which is quantified by boundary changes in the LBA model. Figure modified from Forstmann et al. (2008). (C) The strength of structural connections between the pre-SMA and the striatum in individual subjects correlates with the change in the LBA decision boundary between the speed and the accuracy conditions. Figure modified from Forstmann et al. (2010a).

Nevertheless, some concerns remain regarding the causal role of the decision boundary in the SAT. First, an emphasis on speed may be associated with other cognitive processes (Rinkenauer et al., 2004). For example, some studies have proposed that the integration process is coupled with an urgency signal that increases as a function of time (Churchland et al., 2008; Cisek et al., 2009). The urgency signal effectively lowers the decision boundary as time elapses (Ditterich, 2006), and the SAT can be attributed to a change in the strength of the urgency signal. Second, some models predict that the SAT is in fact controlled by the distance between the boundary and the baseline (Figure 7C). Hence emphasizing speed or accuracy may modulate the decision boundary, the baseline, or a combination of the two (Bogacz et al., 2010b; Simen, 2012). In particular, decreasing the decision boundary is equivalent to increasing baseline activations in the LBA model. Recent fMRI studies suggest that the SAT is more likely to modulate baseline activity in the medial frontal cortex (pre-SMA and SMA), as these regions exhibit a greater BOLD response under the speed instruction than under the accuracy instruction. Other studies suggest that the SAT may modulate a decision boundary in the lateral PFC, where the speed instruction is associated with decreased BOLD responses (Ivanoff et al., 2008; Wenzlaff et al., 2011). However, it is possible that the aforementioned cortical areas do not directly change the decision boundary or baseline, but provide a control signal that modulates striatal activity (Bogacz et al., 2010b). In a recent neurophysiological study (Heitz and Schall, 2011), monkeys were trained to trade accuracy for speed in a visual search task. Fitting the behavioral data with the LBA model showed that the speed instruction can be accounted for by a decrease in the decision boundary. 
Interestingly, the speed instruction led to increased baseline activity as well as increased presaccadic activity in the FEF, suggesting that the neural implementation of the SAT likely involves multiple processes, rather than the single boundary or baseline change predicted by psychological models.
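The equivalence between lowering the boundary and raising the baseline in the LBA model, noted above, follows from the fact that each accumulator rises linearly, so only the distance between starting point and boundary matters. The following minimal sketch of a simplified LBA illustrates this; the drift rates, start-point range, and the size of the shift are assumed values for illustration, and the function names are hypothetical rather than taken from any published implementation.

```python
import random
import statistics


def lba_trial(rng, drifts, boundary, baseline=0.0, start_range=0.5, drift_sd=0.3):
    """Simulate one trial of a simplified LBA; return (winning index, decision time)."""
    winner, fastest = None, float("inf")
    for index, mean_drift in enumerate(drifts):
        start = baseline + rng.uniform(0.0, start_range)  # variable starting point
        rate = rng.gauss(mean_drift, drift_sd)            # trial-specific drift rate
        if rate <= 0.0:
            continue  # this accumulator never reaches the boundary
        time_to_boundary = (boundary - start) / rate      # linear, noise-free rise
        if time_to_boundary < fastest:
            winner, fastest = index, time_to_boundary
    return winner, fastest


def simulate(seed, n_trials, **params):
    """Run n_trials with a fixed seed so conditions share the same random draws."""
    rng = random.Random(seed)
    return [lba_trial(rng, **params) for _ in range(n_trials)]


def equivalent(trials_a, trials_b, tol=1e-9):
    """True if two simulations give the same choices and (near-)identical times."""
    return all(ca == cb and (ta == tb or abs(ta - tb) < tol)
               for (ca, ta), (cb, tb) in zip(trials_a, trials_b))


DRIFTS = (1.0, 0.6)  # assumed mean drift rates for the correct and incorrect response
accuracy = simulate(1, 2000, drifts=DRIFTS, boundary=1.0, baseline=0.0)
speed_low_boundary = simulate(1, 2000, drifts=DRIFTS, boundary=0.8, baseline=0.0)
speed_high_baseline = simulate(1, 2000, drifts=DRIFTS, boundary=1.0, baseline=0.2)

print("lower boundary == higher baseline:",
      equivalent(speed_low_boundary, speed_high_baseline))
print("median time, accuracy condition:", statistics.median(t for _, t in accuracy))
print("median time, speed condition:   ", statistics.median(t for _, t in speed_low_boundary))
```

Because the two speed conditions produce the same behavior in this sketch, behavioral fits alone cannot distinguish them, which is why neural measurements are needed to separate boundary changes from baseline changes.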

Slow boundary modulation: Perceptual learning and aging

It is well-known that practice can improve performance in many perceptual tasks, resulting in higher accuracy and shorter RTs (Logan, 1992; Heathcote et al., 2000). Traditional approaches usually quantify learning effects as changes in the mean accuracy or RT. Several recent studies have attempted to decompose component processes mediating perceptual learning by using sequential sampling models. Petrov et al. (2011) fitted the DDM to behavioral data from a fine motion-discrimination task and showed that learning effects across multiple training sessions are mainly associated with an increase in drift rate and a decrease in non-decision time (see also Dutilh et al., 2009). This result is consistent with previous findings that learning facilitates neural representation of task-relevant features by tuning neural selectivity in the sensory areas (Gilbert et al., 2001; Yang and Maunsell, 2004; Kourtzi and DiCarlo, 2006; Raiguel et al., 2006; Kourtzi, 2010; Zhang et al., 2010). Other studies suggest that extensive training also leads to a significant reduction in the boundary distance in the DDM (Ratcliff et al., 2006; Dutilh et al., 2009; Liu and Watanabe, 2011). Using the RDM task, Liu and Watanabe (2011) investigated the learning effect across different days and showed that training without feedback decreases the decision boundary in the DDM and also increases drift rate. Dutilh et al. (2009) proposed that a dual process (changes in both boundary and drift rate) is necessary to account for the noticeable decrease in RT even after the improvement in accuracy saturates during training. The involvement of boundary reduction in perceptual learning is supported by experimental findings that perceptual learning may not only change sensory representation, but also enhance the decision process in intraparietal regions (Law and Gold, 2008; Zhang and Kourtzi, 2010). 
Further research combining a modeling approach with multiple imaging sessions over the course of training may reveal how learning and feedback modulate sensory representation and decision processes during perceptual decisions.

While training may improve the ability of subjects to make faster decisions in perceptual decision tasks and result in a lower decision boundary, one primary finding in aging is that RTs in cognitive tasks increase as people age, and this generalized slowing is sometimes coupled with impairments in accuracy (Cerella, 1985, 1991; Fisk and Warr, 1996; Salthouse, 1996). Recent studies have employed the DDM with behavioral data to identify the effects of aging in a number of choice tasks (Ratcliff et al., 2001, 2003b, 2004b, 2007; Thapar et al., 2003; Spaniol et al., 2006). A consistent observation is that slowing in older adults can be explained by two factors: an increase in the decision boundary and a prolongation of non-decision time. The decision boundary increase in aging suggests that older subjects are more cautious in making decisions compared with younger subjects (Ratcliff et al., 2006; Starns and Ratcliff, 2010). This age-dependent change in the decision boundary may be due to structural limits in pre-SMA and striatal connectivity (Forstmann et al., 2011) or functional impairments in the striatum (Kühn et al., 2011) in the aging brain. These findings are consistent with the central hypothesis that the striatum is involved in modulating decision boundaries.

Discussion

This article has reviewed recent developments that shed light on the effects and mechanisms of evidence boundaries. Theoretically, boundaries shape the dynamics of decision processes in two respects. First, the evidence boundary serves an ecological function by constraining the evidence needed for rendering a decision, since the nervous system cannot process an unlimited amount of information. Second, the evidence boundary serves a mechanistic function by determining the termination of a decision process. The necessity of the evidence boundary is not limited to a specific model, but is a common feature shared by different sequential sampling models and other accumulator models (e.g., the LBA model), independent of the model structures. Empirically, the presence of an evidence boundary is evident from behavioral, neurophysiological and neuroimaging data. Existing findings suggest that evidence boundaries remain stable under changes in the external environment (e.g., sensory information), but may vary systematically with some internal factors (e.g., speed or accuracy emphasis, practice, or aging). Whether acting on their own or interacting with other decision-related processes, boundaries play a crucial role in the formation of decisions. Boundary mechanisms therefore provide a window into the cognitive processes associated with choice behavior.

Despite the increasing number of recent studies examining the evidence boundary, we are still far from a complete picture of its functions and neural implementations. Here I suggest several directions that merit further investigation. First, among decision models that implement the integration-to-boundary mechanism, it is not clear to what extent the effect of a boundary depends on the specific structure of the models. For example, if for a given dataset the DDM predicts a change in the boundary between two experimental conditions, or a correlation between the estimated boundary and cognitive assessment scores (e.g., Ratcliff et al., 2008), would we reach the same conclusion if using the LCA model or the LBA model? van Ravenzwaaij and Oberauer (2009) suggested that boundaries estimated from different sequential sampling models are generally consistent, but do not necessarily correspond with those estimated from the LBA model (cf. Donkin et al., 2011). Such discrepancies between models need to be considered if researchers plan to estimate boundary changes from experimental data, or use estimated model parameters to guide subsequent neuroimaging analysis.

Psychological models conceptualize the evidence boundary as a unitary representation. The neural implementation of evidence boundaries is likely to be more sophisticated and remains to be determined (see Simen et al., 2011; Smith and McKenzie, 2011 for recent attempts to bridge the gap between the two). The existing findings favor the central hypothesis over the local hypothesis, but we do not yet fully understand the causal relationship between the activity of the BG nuclei and the changes of the boundary. Studies discussed in this article suggest that boundary changes can occur at different time scales, ranging from a few seconds during which the SAT can be effectively adapted, to a few days during which it is necessary to modulate the boundary through extensive training and feedback. Hence if a central neural circuit exists for the detection of boundary crossing, this system is likely to be affected by different underlying control signals, but we do not know how and where in the brain the control signals for boundary changes are encoded. A related question is how the evidence boundary may be affected by aging or neurodegenerative diseases. Could these long-term factors alter control signals that modulate the boundary, or directly act upon the neural circuits that implement the boundary? Answering these questions will require researchers to combine established modeling approaches with comprehensive neuroimaging protocols.

Finally, existing findings suggest that the integration-to-boundary process governs a broad range of cognitive tasks (Gold and Shadlen, 2007). An important direction for future research is to investigate the effects of boundaries in choice tasks other than perceptual decisions. One example is interval timing estimation, in which subjects produce or estimate a specific duration (Church and Deluty, 1977; Roberts, 1981; Rakitin et al., 1998; Macar et al., 1999; Allan and Gerhardt, 2001). A variant of the DDM has recently been proposed for interval timing (Simen et al., 2011). The model assumes a single integrator with a variable drift rate representing elapsed time at different durations and a constant decision boundary. The fixed boundary predicted by the model is supported by experimental findings that slow cortical potentials measured in the pre-SMA/SMA, which have been interpreted as a signature of the time accumulation process, show no amplitude difference between different interval times (Elbert et al., 1991; Pfeuty et al., 2005; Kononowicz and van Rijn, 2011; Ng et al., 2011). Another example is voluntary action decisions, which require subjects to make selections between actions that have no differential sensory attributes or action outcomes (Brass and Haggard, 2008; Haggard, 2008; Soon et al., 2008; Andersen and Cui, 2009; Roskies, 2010). Recent studies propose that during the formation of voluntary decisions the intention of selecting each action gradually builds up in independent integrators until the winning integrator reaches the boundary and renders the decision (Zhang et al., 2012). This hypothesis is supported by observations of a progressive rise in the readiness potential and neural activity in the medial prefrontal cortex before conscious awareness of voluntary actions (Libet, 1985; Sirigu et al., 2004; Fried et al., 2011). 
These findings from different types of cognitive tasks suggest that the brain may encode the evidence boundary as a common currency for perceptual information, subjective intention, or individual preference (e.g., Chib et al., 2009; Krajbich et al., 2010) to guide behavioral responses, depending on the context of the task. An intriguing possibility is that evidence boundaries associated with different cognitive tasks may be mediated by the same neural implementation. This generic implementation provides a potential bridge between behavioral and neural data to regulate the formation and initiation of complex behavior.
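As an illustration of how a fixed boundary can serve a task other than perceptual choice, the interval-timing idea described above (a single integrator whose drift rate varies with the target duration while the boundary stays constant) can be sketched as follows. This is a simplified toy version, not the published model: the constant noise level, the time step, and the function name are assumptions made for the example.

```python
import math
import random


def timed_interval(rng, target, boundary=1.0, noise=0.1, dt=0.002, max_time=60.0):
    """Produce one timed interval with a single noisy integrator.

    The boundary is fixed; only the drift rate is adjusted so that the
    integrator reaches the boundary, on average, at the target duration.
    """
    drift = boundary / target  # shorter targets require steeper drift
    x, t = 0.0, 0.0
    while x < boundary and t < max_time:
        x += drift * dt + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        t += dt
    return t


rng = random.Random(0)
for target in (0.5, 1.0, 2.0):
    produced = [timed_interval(rng, target) for _ in range(200)]
    mean_time = sum(produced) / len(produced)
    print(f"target={target:.1f} s  mean produced interval={mean_time:.2f} s")
```

In this sketch the integrator always terminates at the same level regardless of the target duration, which is the property that the slow cortical potential findings cited above are taken to support.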

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments