Abstract

This paper presents a novel method based on a fiducial marker for correction of motion artifacts in 3D, in vivo, optical coherence tomography (OCT) scans of human skin and skin scars. The efficacy of this method was compared against a standard cross-correlation intensity-based registration method. With a fiducial marker adhered to the skin, OCT scans were acquired using two imaging protocols: direct imaging from air into tissue; and imaging through ultrasound gel into tissue, which minimized the refractive index mismatch at the tissue surface. The registration methods were assessed with data from both imaging protocols and showed reduced distortion of skin features due to motion. The fiducial-based method was found to be more accurate and robust, with an average RMS error below 20 µm and success rate above 90%. In contrast, the intensity-based method had an average RMS error ranging from 36 to 45 µm, and a success rate from 50% to 86%. The intensity-based algorithm was found to be particularly confounded by corrugations in the skin. By contrast, tissue features did not affect the fiducial-based method, as the motion correction was based on delineation of the flat fiducial marker. The average computation time for the fiducial-based algorithm was approximately 21 times less than for the intensity-based algorithm.

OCIS codes: (170.4500) Optical coherence tomography, (100.6950) Tomographic image processing, (100.2000) Digital image processing

1. Introduction

Optical coherence tomography (OCT) [1] provides a means to assess skin pathologies non-invasively. Its application in dermatological research and real-time, in situ examination of skin and skin diseases in clinical scenarios has been explored in recent years [2,3]. Skin structures identified using OCT, such as the stratum corneum, epidermis, dermo-epidermal junction, blood vessels, hair follicles, sweat ducts, and sebaceous glands, have been found to show good correlation with histology [1,4]. OCT has been used to detect skin cancer [5], to monitor skin changes caused by UV radiation [6] or aging [7], to examine inflammatory skin diseases [3] and vasculature dilation [3,8], and to assess the efficacy of laser treatment for vascular malformations [4].

Common practice in dermatological application of OCT is the use of a handheld imaging probe for convenient access to different body sites [9–13]. At the same time, there has been growing interest in utilizing three-dimensional OCT (3D-OCT) to aid in visualization and improve clinical assessment. Such 3D-OCT scans are commonly constructed from a series of 2D vertical cross-sectional images (B-scans) acquired by raster scanning across the skin. Typically, such scans are subject to significant motion artifacts, due to cardiac and respiratory motion, and to inadvertent shaking of the handheld probe.

Our experience has been that motion artifacts are minimal within each individual 2D B-scan, but between consecutively acquired B-scans, relative offsets and rotations distort the appearance and size of anatomical features, such as the skin’s surface topology and subsurface blood vessels. The presence of motion artifacts as a source of 3D-OCT image degradation has been reported in previous publications [9,14,15] involving in vivo human skin. Several publications [5,16,17] have also reported that a portion of the clinical skin images was omitted from analysis because of motion artifacts.

Previous efforts in compensating motion artifacts in OCT images have been reported in the fields of ophthalmology [18–21], endoscopy [22,23], and cardiology [24]. Hardware techniques to reduce motion artifacts during in vivo imaging include: decreasing image acquisition time by using a higher scan rate (such as megahertz OCT [25]); avoiding dense sampling; or restricting scanning to a small area of the sample.

An alternative compensation approach adopted in other imaging modalities is post-processing using image registration [26], which is the process of aligning two or more images into spatial correspondence. Manual image registration is highly time-consuming. For a 3D-OCT image, this can involve the manual correction of hundreds or thousands of B-scans. Fully automated image registration methods are necessary in order to incorporate such correction into a clinical workflow. Automation also removes the subjectivity inherent in manual correction.

There are two main categories of image registration methods: intensity-based and feature-based methods. Intensity-based methods align images based on their pixel intensity [27]. Such methods involve applying a geometrical transformation to one image (referred to as the floating image), and calculating the pixel-by-pixel similarity to the other (referred to as the fixed image). The geometrical transformation is iteratively modified to optimize the similarity of the floating and fixed images. This similarity is quantified with a ‘similarity measure’ [27], a single value calculated from the intensity values of pairs of corresponding pixels from the two images. Choice of similarity measure, resampling method during the application of the geometrical transformation, and optimization strategy constitute the main differences between various intensity-based methods. As an example, Zawadzki et al. [20] utilized cross-correlation in an intensity-based registration to align consecutive B-scans in a 3D-OCT image of the eye. Kraus et al. [28], in contrast, utilized the sum of the squared L2 norm as a similarity measure to correct eye motion in multiple, orthogonally acquired volumetric scans.

By contrast, in feature-based methods, the geometrical transformation between two images is calculated by extracting specific tissue structural features. These features are used to establish the correspondence between the two images. Examples of such features include lines, curvature extrema, or contours extracted from each B-scan [29,30]. Antony et al. [21] described a surface segmentation-based registration method to correct for distortions seen along the fast- and slow-scanning axes in 3D-OCT retinal scans.

Feature-based methods may also include the use of a fiducial marker, an object with a well characterized size and shape, to provide features with which to register images. In this paper, we present a fiducial marker specifically designed for OCT imaging of skin. The fiducial is a small, flat metal square with a circular hole through which the skin may be imaged. By affixing the fiducial marker to the skin, the flat surface of the fiducial may be automatically identified and used to correct motion between adjacent B-scans.

In our OCT imaging of skin, two different imaging protocols are used based on whether or not a refractive index (RI)-matching medium is applied. Use of a RI-matching medium has been shown to reduce the impact of a range of morphological and intensity artifacts common in OCT imaging of skin [31]. Application of such media to the skin has also been shown to modify the measured thickness of the epidermis. Thus, the choice of protocol is dependent upon the specific clinical application.

Whilst each imaging protocol is subject to motion artifact, it manifests in different ways. As described in [31], when matching the RI of skin, it is typical to apply a substance such as ultrasound gel and then lightly compress the skin under a glass cover slip, eliminating air from the imaging surface. Because of this contact with the skin, motion artifacts are predominantly perpendicular to the axial direction of the light beam. When imaging directly from air into the skin, no glass cover slip is necessary and motion artifact between B-scans tends to be primarily in the axial direction. In both situations, slight rotations occur between adjacent B-scans.

The goal of the present study was to develop a fully automated motion correction scheme based on the novel use of a fiducial marker, and compare this method against a standard intensity-based registration approach. We have assessed these methods both with and without RI-matching medium. Comparison of the methods was carried out in terms of registration accuracy, robustness and speed, and assessed against a gold-standard manual image registration. This is the first published assessment of such methods for in vivo 3D-OCT scans of human skin.

2. Materials and methods

2.1. Data

The image registration algorithms were assessed on both normal and scarred skin, representing two distinctly different tissue sub-types in terms of surface texture and subsurface structure. The wound healing process in skin results in a broad spectrum of scar types, ranging from normal fine-line scars to various abnormal and problem types that require treatment, such as hypertrophic and keloid scars, and scar contractures [32]. The surface texture of scars ranges from flat and smooth surfaces to raised, irregular surfaces with large corrugations. Unlike normal skin, such scars seldom have a regular pattern of surface furrows and may be highly perfused by blood vessels [33] causing them to appear red or inflamed.

Four subjects with scars and two healthy subjects (four males, two females, mean age: 32 years) were enrolled with prior informed consent. Hair on the skin/scar area to be imaged was trimmed using an electric shaver prior to scanning. Each subject was scanned in 1-3 locations, giving a total of 7 scar and 7 normal skin locations across all subjects. For each of the 14 locations, two 3D-OCT scans were performed, giving a total of 28 data sets. The two scans acquired at each location are illustrated in Figs. 1(c) and 1(d): one with the skin exposed to air and one with a thin layer of ultrasound gel (350 ± 100 µm thick) sandwiched between the glass cover slip and the skin/scar. Each 3D-OCT scan was 6.5 × 6.5 × 3 mm (x × y × z) in size and comprised 1088 × 1088 × 512 pixels. We define the x and y axes to represent the lateral dimensions of the scan, and z to represent the axial depth dimension. Raster scanning was performed with fast-axis B-scans orientated parallel to the xz plane, and slow-axis B-scans parallel to the yz plane. The acquisition time per 3D scan was 2 minutes and 20 seconds.
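The pixel spacing implied by these scan dimensions is a useful reference when errors are later quoted in both microns and pixels. A short Python check, assuming the stated 3 mm axial extent is the nominal (free-space) range, so that the axial spacing in tissue would be smaller by the tissue refractive index:

```python
# Scan extent (mm) and sampling (pixels) per 3D-OCT data set, as stated in the text.
extent_mm = {"x": 6.5, "y": 6.5, "z": 3.0}
n_pixels = {"x": 1088, "y": 1088, "z": 512}

# Pixel spacing in micrometres along each axis (z assumed to be free-space spacing).
spacing_um = {axis: 1000.0 * extent_mm[axis] / n_pixels[axis] for axis in extent_mm}

for axis, s in spacing_um.items():
    print(f"{axis}: {s:.2f} um")  # x: 5.97 um, y: 5.97 um, z: 5.86 um

# A 15 um error therefore corresponds to roughly 2.5 lateral pixels.
print(f"{15.0 / spacing_um['x']:.1f} px")  # 2.5 px
```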

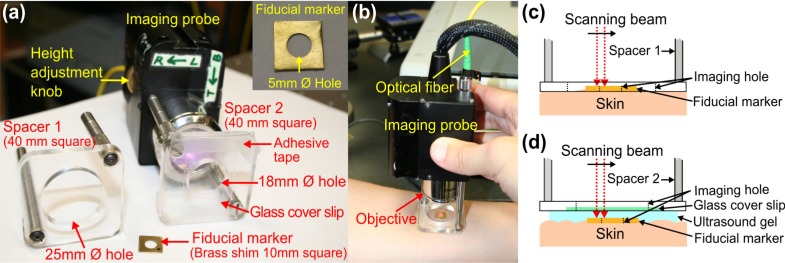

Fig. 1.

Handheld SS-OCT probe and sample spacer setups for in vivo imaging of skin. (a) Photograph of the imaging probe setup showing interchangeable sample spacers. (b) Photograph of the handheld probe in use. (c) Schematic diagram of imaging protocol in air. (d) Schematic diagram of imaging protocol in RI-matching medium (ultrasound gel).

2.2. OCT system

A fiber-based swept-source polarization-sensitive OCT system (PSOCT-1300, Thorlabs, USA) comprising a broadband swept laser source and a Michelson interferometer with balanced detection was used in this study. The laser source has a central wavelength of 1325 nm and the average optical power of the probing beam incident on the skin was 3.2 mW. Data were acquired using the system’s built-in software speckle reduction, which reduced the axial resolution. The resulting FWHM axial resolution was measured to be 20 µm (in free space) and the lateral resolution was measured to be 16 µm. The working distance and numerical aperture of the objective lens are 25.1 mm and 0.056, respectively. The system has an axial scan rate of 16 kHz.

The sample arm was configured as a handheld imaging probe, whereby one of two adjustable sample spacers could be affixed to the probe (Fig. 1) to maintain a constant distance between the objective lens and the skin. Spacer 1 consists of a 25 mm Ø hole to allow scanning of the skin in air (i.e., no RI-matching) whilst the probe is held firmly in contact with the skin. Spacer 2 has a smaller hole (18 mm Ø) and a grooved base in order to attach a glass cover slip (22 × 40 × 0.13 mm), which was used as a window for imaging the skin through a layer of RI-matching medium (ultrasound gel).

2.3. Image acquisition

A small metal fiducial marker (inset of Fig. 1(a)) was adhered securely onto the skin using double-sided adhesive tape. The marker is a brass shim (1 cm square, thickness: 170 µm) with a 5 mm diameter imaging hole. The marker was used to perform the gold-standard manual image registration and facilitate the fully automated feature-based registration algorithm. During image acquisition, the imaging probe was placed in contact with the subject’s skin and a 3D scan was acquired across an area covering the entire hole of the fiducial marker (Fig. 1). To reduce the intensity of specular back reflections to the probe, the axis of the scanning beam was tilted at a small angle (~1°) to the surface normal of the marker and glass cover slip. The OCT beam focus was set at ~200 µm below the skin surface for optimal subsurface imaging.

2.4. Image registration methods

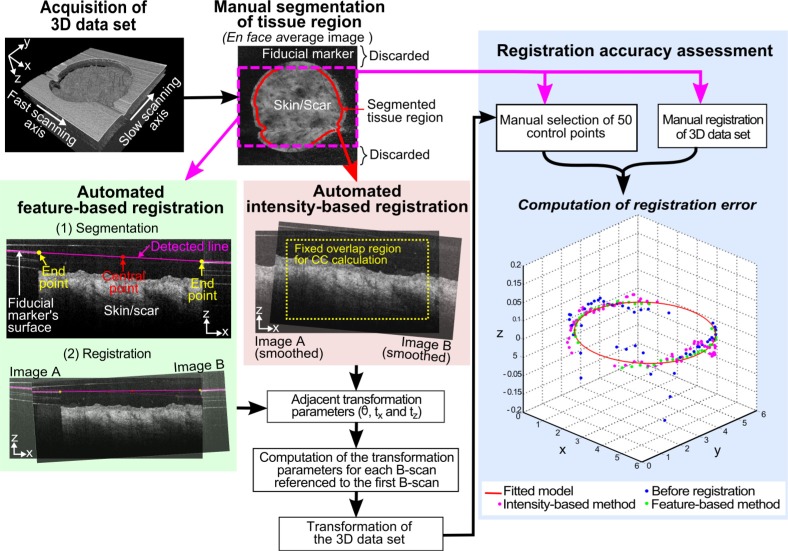

The motion artifact between adjacent fast-axis B-scans was modeled with a rigid transformation consisting of a rotation and a 2D translation. The transformation of each B-scan to the first (reference) B-scan was then computed by concatenating the adjacent transformations, allowing reconstruction of the motion-corrected 3D data set. The two registration approaches we adopted to estimate the adjacent geometrical transformations are detailed in the following sections and illustrated in Fig. 2.
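The concatenation step can be sketched with 3 × 3 homogeneous matrices. This minimal Python/NumPy sketch assumes that each adjacent transform is a rotation in the xz plane followed by a translation, and that `adjacent[k-1]` maps B-scan k onto B-scan k-1 (floating onto fixed). The matrix representation and the function names are illustrative assumptions, not the implementation used in this study. One consequence of chaining is visible directly: an error in any adjacent estimate is carried into every later B-scan.

```python
import numpy as np

def rigid_2d(theta, tx, tz):
    """3x3 homogeneous matrix: rotate by theta (rad) in the xz plane, then translate."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx],
                     [s, c, tz],
                     [0.0, 0.0, 1.0]])

def cumulative_transforms(adjacent):
    """Transforms mapping each B-scan k into the frame of B-scan 0.

    adjacent[k-1] maps B-scan k onto B-scan k-1, so
    T(k -> 0) = T(k-1 -> 0) @ T(k -> k-1).
    """
    total = [np.eye(3)]
    for t in adjacent:
        total.append(total[-1] @ t)
    return total

# Example: three identical steps of +2 px in z accumulate to +6 px for B-scan 3.
chain = cumulative_transforms([rigid_2d(0.0, 0.0, 2.0)] * 3)
print(chain[3][:2, 2])  # [0. 6.]
```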

Fig. 2.

Flow diagram for the comparison of feature-based and intensity-based registration methods.

2.4.1. Feature-based registration using the fiducial marker

The intersection of the fiducial marker with a single B-scan imaging plane appears as a strongly reflecting horizontal line with a large break in the center, corresponding to the surface of the fiducial and the circular imaging area, respectively. Thus, the feature-based method involved two steps: automatic segmentation of the fiducial marker, which appears as two line segments in each B-scan; and registration of these line segments between adjacent B-scans.

Line segmentation used the Hough transform [34], which is a standard image processing algorithm widely used in other imaging modalities [35,36]. Results are shown in Fig. 2, as a magenta line overlay on a B-scan (far left image in the middle row). Prior to applying the Hough transform, 20 pixels at the start of each A-scan were cropped to eliminate the confounding effects caused by autocorrelation noise. As the metal fiducial gave a strong reflection, a threshold (T1) was applied to mask out other features (e.g., skin). The Hough transform was then applied only to the leftmost and rightmost eighths of the image; the central portion, which typically contained skin rather than fiducial, was excluded from the computation.

During execution of the Hough transform, an accumulator space (i.e., a 2D histogram) was created and quantized into an array of (ρ, θ) bins, in which ρ is the perpendicular distance from the line to the origin, and θ is the angle that the perpendicular makes with the x-axis, as defined in [34]. The global maximum of the histogram was identified, and all histogram bins connected to it with a high count (>95% of the global maximum) were tagged. The average (ρ, θ) values of the tagged bins were then calculated, giving the parameters of the line corresponding to the surface of the fiducial marker.
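The segmentation step can be sketched in Python with NumPy and SciPy. This is a minimal sketch under stated assumptions, not the MATLAB implementation used in this study: T1 is interpreted as a fraction of the peak B-scan intensity (the text does not specify the reference level), the θ search is restricted to near-horizontal lines (θ close to π/2, which also avoids the wrap-around of θ), ρ is quantized in 1-pixel bins, and the function name and its parameters are hypothetical.

```python
import numpy as np
from scipy import ndimage

def hough_fiducial_line(bscan, t1=0.98, n_crop=20, strip_frac=1 / 8,
                        max_tilt_deg=10.0, n_theta=401, tag_frac=0.95):
    """Fit the fiducial surface in one B-scan (rows = depth z, columns = lateral x).

    Returns (slope, intercept) of the line z = slope * x + intercept, in pixels.
    """
    h, w = bscan.shape
    # Assumption: T1 is a fraction of the peak intensity below the cropped rows.
    mask = bscan >= t1 * bscan[n_crop:, :].max()
    mask[:n_crop, :] = False                  # discard autocorrelation noise near zero delay
    strip = max(1, int(round(w * strip_frac)))
    mask[:, strip:w - strip] = False          # keep only the left and right strips
    z, x = np.nonzero(mask)
    if x.size == 0:
        raise ValueError("no pixels above threshold T1")

    # Normal angles near pi/2 correspond to near-horizontal lines.
    tilt = np.deg2rad(max_tilt_deg)
    thetas = np.linspace(np.pi / 2 - tilt, np.pi / 2 + tilt, n_theta)
    diag = int(np.ceil(np.hypot(h, w)))
    # rho = x cos(theta) + z sin(theta) for every (pixel, theta) pair.
    rho = np.outer(x, np.cos(thetas)) + np.outer(z, np.sin(thetas))
    rho_idx = np.round(rho).astype(int) + diag          # 1-pixel bins, shifted to be >= 0
    theta_idx = np.broadcast_to(np.arange(n_theta), rho_idx.shape)
    acc = np.zeros((2 * diag + 1, n_theta), dtype=np.int64)
    np.add.at(acc, (rho_idx.ravel(), theta_idx.ravel()), 1)

    # Tag bins above tag_frac of the global maximum that are connected to it.
    peak = np.unravel_index(np.argmax(acc), acc.shape)
    labels, _ = ndimage.label(acc > tag_frac * acc[peak])
    r_bins, t_bins = np.nonzero(labels == labels[peak])
    rho_mean = r_bins.mean() - diag
    theta_mean = thetas[t_bins].mean()

    # x cos(theta) + z sin(theta) = rho  =>  z = -cot(theta) * x + rho / sin(theta)
    slope = -np.cos(theta_mean) / np.sin(theta_mean)
    intercept = rho_mean / np.sin(theta_mean)
    return float(slope), float(intercept)
```

Averaging the tagged bins, rather than taking the single peak bin, gives a sub-bin estimate of (ρ, θ), which matters because the rotations between adjacent B-scans are small.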

The inner edges (i.e., end points) of each line segment (left and right) of the marker were then identified by tracing along the line until the image intensity fell below an empirically chosen threshold value, T2. At conclusion of the calculation, two line segments had been extracted, corresponding to the intersection of the fiducial marker with the B-scan on each side of the image.
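The end-point search can be sketched as a walk along the fitted line. In this minimal Python sketch, T2 is assumed to be a fraction of the peak intensity sampled along the line, the intensity is read from the nearest pixel to the line, and the marker is assumed to reach the left and right image borders; the function name is hypothetical.

```python
import numpy as np

def trace_inner_edges(bscan, slope, intercept, t2=0.60):
    """Trace along z = slope * x + intercept from each image border toward the centre.

    Returns the x indices of the inner end points of the left and right segments.
    """
    h, w = bscan.shape
    xs = np.arange(w)
    zs = np.clip(np.round(slope * xs + intercept).astype(int), 0, h - 1)
    profile = bscan[zs, xs]                 # intensity sampled along the fitted line
    level = t2 * profile.max()              # assumption: T2 is a fraction of the line's peak
    left = 0
    while left + 1 < w and profile[left + 1] >= level:
        left += 1
    right = w - 1
    while right - 1 >= 0 and profile[right - 1] >= level:
        right -= 1
    return left, right
```

For a marker line at depth 50 with a gap between columns 100 and 155, `trace_inner_edges(img, 0.0, 50.0)` returns `(99, 156)`.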

To register adjacent B-scans, the rotation angle θAB between them was determined from Eq. (1):

$$\theta_{AB} = \tan^{-1}\left(\frac{m_A - m_B}{1 + m_A m_B}\right) \qquad (1)$$

where A and B refer to the fixed and floating images, respectively, and mA and mB are the slopes of the detected lines in each pair of adjacent B-scans. The horizontal and vertical translations between B-scans were determined from Eq. (2):

$$t_x = x_A - x_B, \qquad t_z = z_A - z_B \qquad (2)$$

where tx and tz are the horizontal and vertical translations, respectively. (xA, zA) and (xB, zB) are, respectively, the coordinates of the central point of the line segments detected in images A and B.
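Equations (1) and (2) reduce to a few lines of Python. This sketch assumes that Eq. (1) is the standard angle between two lines of slopes mA and mB, tan⁻¹[(mA - mB)/(1 + mA·mB)], and Eq. (2) the difference of the central points. It also assumes one possible reading of "central point": the point on the fitted line midway between the two inner edges. Both function names are hypothetical, and how the rotation centre is chosen when the transform is applied is not addressed here.

```python
import numpy as np

def segment_centre(slope, intercept, left, right):
    """Hypothetical 'central point': midway between the inner edges, on the fitted line."""
    xc = 0.5 * (left + right)
    return xc, slope * xc + intercept

def adjacent_rigid_params(m_a, m_b, centre_a, centre_b):
    """Rotation (rad) and translation (px) relating fixed B-scan A and floating B-scan B."""
    theta_ab = np.arctan((m_a - m_b) / (1.0 + m_a * m_b))   # Eq. (1)
    tx = centre_a[0] - centre_b[0]                           # Eq. (2), horizontal
    tz = centre_a[1] - centre_b[1]                           # Eq. (2), vertical
    return float(theta_ab), float(tx), float(tz)

ca = segment_centre(0.02, 45.0, 99, 156)
cb = segment_centre(0.00, 43.0, 101, 158)
print(adjacent_rigid_params(0.02, 0.0, ca, cb))
```

Because only four end points and two slopes per B-scan enter the calculation, no iteration is needed, which underlies the speed advantage reported below.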

A range of threshold values (T1 and T2) for segmentation were tested, and optimal values were chosen empirically to reduce segmentation error. The choice of thresholds affects the success of the segmentation process (and, thus, of the feature-based registration) through the accuracy with which the fiducial marker’s surface and edges are detected. The robustness of the algorithm over a range of values for T1 and T2 is reported in Section 3 and discussed in Section 4.

2.4.2. Intensity-based registration

For each pair of adjacent B-scans, the intensity-based method searches for an optimal geometrical mapping to transform the floating image onto the fixed image. We use the correlation coefficient as the similarity measure between the images [26,27]. The optimal geometrical transformation will maximize the correlation coefficient between the images, defined as

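$$CC(T) = \frac{\sum_{x_A \in \Omega_{A,B}} \left(A(x_A) - \bar{A}\right)\left(B^T(x_A) - \overline{B^T}\right)}{\sqrt{\sum_{x_A \in \Omega_{A,B}} \left(A(x_A) - \bar{A}\right)^2 \sum_{x_A \in \Omega_{A,B}} \left(B^T(x_A) - \overline{B^T}\right)^2}} \qquad (3)$$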

where A and B denote the fixed and floating images, respectively; $x_A$ is the pixel position in image A; $A(x_A)$ and $B^T(x_A)$ are the pixel intensity values of these images at position $x_A$; $\bar{A}$ is the mean pixel intensity value in image A and $\overline{B^T}$ is the mean of $B^T$, where $B^T$ is image B transformed to the coordinate space of image A; and $\Omega_{A,B}$ is the overlap domain of images A and $B^T$ for a given transformation estimate T.
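The correlation coefficient of Eq. (3) can be written in a few lines of NumPy. In this sketch the overlap domain is passed as a boolean mask, and both means are assumed to be taken over that overlap domain; the function name is illustrative.

```python
import numpy as np

def correlation_coefficient(a, b_t, overlap):
    """Correlation coefficient of fixed image A and transformed floating image B^T,
    evaluated only over the boolean overlap mask Omega_{A,B}."""
    a_vals = a[overlap].astype(float)
    b_vals = b_t[overlap].astype(float)
    a0 = a_vals - a_vals.mean()          # A(x_A) - mean of A
    b0 = b_vals - b_vals.mean()          # B^T(x_A) - mean of B^T
    return float(np.sum(a0 * b0) / np.sqrt(np.sum(a0 ** 2) * np.sum(b0 ** 2)))

rng = np.random.default_rng(0)
a = rng.random((64, 64))
mask = np.zeros_like(a, dtype=bool)
mask[8:-8, 8:-8] = True
print(correlation_coefficient(a, 2.0 * a + 0.1, mask))  # approximately 1.0
```

Because the mean is subtracted and the result normalized, the measure is unchanged by a global gain or offset in intensity between B-scans, unlike a sum of squared differences.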

An iterative search for the optimum transformation at sub-pixel resolution was performed using the pattern search optimization method [37]. The search was initialized with the identity transformation. At each iteration, Hanning-windowed sinc interpolation (half width: 18 µm) was applied to resample the floating image to the coordinate space of the fixed image. A common source of bias in registration algorithms is the failure to account for the varying degree of overlap of the images with each geometrical transformation, which impacts the numerical value of the similarity measure. A fixed number of overlap pixels (within a rectangular window) between both images was used for the correlation coefficient calculation in order to avoid such bias.
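The structure of this search can be sketched in Python with NumPy and SciPy. This is an illustration under explicit substitutions, not the study’s implementation: cubic B-spline interpolation (`scipy.ndimage.affine_transform` with `order=3`) replaces the Hanning-windowed sinc, a simple compass pattern search stands in for the pattern search method of [37], rotation is taken about the image centre, and the step sizes, tolerances, window margin, and function names are hypothetical. The fixed central window keeps the number of overlap pixels constant for every trial transformation.

```python
import numpy as np
from scipy import ndimage

def warp_rigid(img, theta, tx, tz):
    """Rotate img by theta (rad) about its centre, then shift by (tx, tz) pixels."""
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])              # acts on (z, x) index coordinates
    centre = (np.array(img.shape, dtype=float) - 1.0) / 2.0
    shift = np.array([tz, tx], dtype=float)
    # Output pixel o samples the input at rot.T @ (o - centre - shift) + centre.
    offset = centre - rot.T @ (centre + shift)
    return ndimage.affine_transform(img, rot.T, offset=offset, order=3, mode="nearest")

def register_pattern_search(fixed, floating, margin=12,
                            steps=(0.02, 4.0, 4.0), tol=(1e-4, 0.01, 0.01)):
    """Maximise the correlation coefficient over p = (theta, tx, tz) by compass search."""
    win = (slice(margin, -margin), slice(margin, -margin))  # fixed-size overlap window
    a = fixed[win].ravel()
    a = a - a.mean()

    def score(p):
        b = warp_rigid(floating, *p)[win].ravel()
        b = b - b.mean()
        return np.sum(a * b) / np.sqrt(np.sum(a * a) * np.sum(b * b))

    p = np.zeros(3)                                  # start from the identity transformation
    step = np.array(steps, dtype=float)
    tol = np.array(tol, dtype=float)
    best = score(p)
    while np.any(step > tol):
        improved = False
        for i in range(3):
            if step[i] <= tol[i]:
                continue
            for sign in (1.0, -1.0):                 # poll +/- step along each parameter
                trial = p.copy()
                trial[i] += sign * step[i]
                val = score(trial)
                if val > best:
                    p, best, improved = trial, val, True
                    break
        if not improved:
            step /= 2.0                              # refine the pattern
    return p, float(best)
```

Every poll resamples the whole B-scan and recomputes the similarity measure, so the cost per B-scan pair is many full-image interpolations. This is the main reason intensity-based registration is much slower than the feature-based approach.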

To avoid the influence of the fiducial marker on the intensity-based registration, the skin region of each data set was delineated before registration. Cropping of the marker allowed simulation of the situation in which a fully automated intensity-based registration is performed on 3D data sets without the presence of the marker. This delineation was performed manually to avoid bias from segmentation errors.

To reduce the confounding effects of speckle noise on the intensity-based registration algorithm, each fast-axis B-scan was low-pass filtered prior to registration using a 2D anisotropic Gaussian kernel. Several smoothing factors (defined as the ratio of FWHM of the Gaussian smoothing kernel to that of the measured system resolution) were tested and the registration results obtained using the optimum factor are reported.
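The smoothing factor translates into per-axis Gaussian widths as follows. This sketch assumes the kernel FWHM along each axis equals the smoothing factor times the corresponding measured resolution (20 µm axial, 16 µm lateral), converts FWHM to the Gaussian standard deviation with σ = FWHM / (2√(2 ln 2)), and uses pixel spacings of about 5.86 µm (axial) and 5.97 µm (lateral) derived from the scan geometry; the function name is hypothetical.

```python
import numpy as np
from scipy import ndimage

# Gaussian FWHM = 2 * sqrt(2 ln 2) * sigma, so sigma = FWHM * ~0.4247.
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))

def smooth_bscan(bscan, factor=1.0, res_um=(20.0, 16.0), pix_um=(5.86, 5.97)):
    """Anisotropic Gaussian low-pass filter of a B-scan (rows = z, columns = x).

    res_um and pix_um give (axial, lateral) resolution and pixel spacing in um.
    Returns the filtered image and the kernel sigmas in pixels.
    """
    sigma_px = [factor * r * FWHM_TO_SIGMA / p for r, p in zip(res_um, pix_um)]
    return ndimage.gaussian_filter(bscan.astype(float), sigma=sigma_px), sigma_px
```

With a smoothing factor of 1, the kernel standard deviations are roughly 1.45 pixels axially and 1.14 pixels laterally.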

2.5. Technique for comparison of registration accuracy and robustness

Figure 2 illustrates the method used to compare the registration algorithms. A gold-standard manual registration was performed on all 28 data sets by aligning the fiducial marker visible within each B-scan. The accuracy of each registration algorithm was quantified at 50 manually selected control points distributed throughout each data set. These control points were positioned on the edge of the circular imaging field (the inner boundary of the marker). For each registered data set, we calculated the RMS error (in microns) of the distances between each of the 50 geometrically transformed control points and the closest point on a manually fitted model of the inner boundary of the marker.
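The closest-point distance has a closed form if the boundary model is a circle lying in a plane. This sketch assumes exactly that: a planar circle defined by a centre, a plane normal, and a radius (nominally 2500 µm for the 5 mm hole). The study’s manually fitted model may differ, and the function name is hypothetical.

```python
import numpy as np

def rms_to_circle(points, centre, normal, radius):
    """RMS distance from 3D points (N x 3) to the nearest point of a planar circle."""
    p = np.asarray(points, dtype=float) - np.asarray(centre, dtype=float)
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    h = p @ n                                  # out-of-plane component of each point
    in_plane = p - np.outer(h, n)
    r = np.linalg.norm(in_plane, axis=1)       # radial distance within the plane
    d = np.sqrt(h ** 2 + (r - radius) ** 2)    # distance to the closest circle point
    return float(np.sqrt(np.mean(d ** 2)))
```

For 50 control points displaced 10 µm radially outward from a 2500 µm circle, the function returns 10 µm.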

To assess the robustness of each registration algorithm, we calculated the RMS error both before and after its application. Where the RMS error was reduced, the algorithm was deemed successful, whilst an increase in RMS error was recorded as a failure. To assess accuracy, the average RMS error was calculated for data sets for which an algorithm was deemed successful.
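The success and accuracy criteria can be summarized in a short Python function. It assumes the RMS errors before and after registration are available per data set, counts an unchanged error as a failure (the text does not specify ties), and uses the sample standard deviation; the function name is hypothetical.

```python
import numpy as np

def summarise(rms_before, rms_after):
    """Success rate (%) and mean (SD) RMS error over successful registrations."""
    before = np.asarray(rms_before, dtype=float)
    after = np.asarray(rms_after, dtype=float)
    success = after < before          # reduced RMS error => success (ties count as failure here)
    rate = 100.0 * success.mean()
    ok = after[success]
    mean_err = ok.mean() if ok.size else float("nan")
    sd_err = ok.std(ddof=1) if ok.size > 1 else float("nan")   # sample SD (assumed)
    return float(rate), float(mean_err), float(sd_err)
```

With 14 data sets per group, each failure lowers the success rate by about 7 percentage points; for example, 13 successes give 100 × 13/14 ≈ 92.9%.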

Computation times for the registration algorithms were also recorded. The algorithms were implemented in MATLAB (R2009b, MathWorks, Natick, Massachusetts) on an Intel Quad Core i7@3.07GHz computer. The average computation time to register a 3D volume consisting of approximately 750 ± 30 fast-axis B-scans using the feature-based method, including the time required for segmentation, was 2.4 min. This is approximately 21 times faster than the intensity-based method, which required an average of 51 min per registration. Algorithm implementations were not optimized for execution speed.

3. Results

Table 1 shows the success rate and average RMS error obtained for both registration methods, grouped by imaging protocol. We will refer to the data sets collected with these imaging protocols as ‘air-tissue’ and ‘gel-tissue’. The success rate of both algorithms varies with the imaging protocol.

Table 1. Registration accuracy and success rate for air-tissue and gel-tissue data sets.

| Registration method (optimum parameter(s)) | Data sets | Average RMS error, µm (±SD)* | Success rate, % |
|---|---|---|---|
| Feature-based method (T1: 98%; T2: 60%) | Air-tissue (n = 14) | 12 (±3) | 100 |
|  | Gel-tissue (n = 14) | 19 (±8) | 93 |
| Intensity-based method (Smoothing factor: 1) | Air-tissue (n = 14) | 36 (±13) | 50 |
|  | Gel-tissue (n = 14) | 44 (±20) | 86 |

*Note that only successful registrations were used in computing the accuracies (average RMS errors). n: number of data sets.

The feature-based method using the fiducial marker was found to have comparable success rates for air-tissue data sets (100%) and gel-tissue data sets (93%) for T1 and T2 values of 98% and 60%, respectively. A success rate above 90% for air-tissue data sets required a T1 value of greater than 70%. To obtain a similar success rate for gel-tissue data sets, it was necessary to increase T1 to 95% in order to eliminate extraneous horizontal lines from the glass cover slip and artifacts caused by detector saturation. Success of the feature-based registration was robust under a wide range of values for T2. A success rate of over 90% was achieved for 40% < T2 < 70% under both imaging protocols. For T2 lower than 40%, the edges of the hole could not be determined accurately primarily because of noise in the image. For T2 higher than 70%, parts of the marker’s surface became invisible, resulting in inaccurate segmentation. The average RMS error of the feature-based method was found to be approximately the same as the error associated with the manual selection of control points, which was estimated to be 15 ± 3 µm (≈2 to 3 pixels).

The average RMS error for the intensity-based method varied between imaging protocols and, for both protocols, was higher than that of the feature-based method. The intensity-based method gave a higher success rate for the gel-tissue protocol (86%) than for the air-tissue protocol (50%). We found that the intensity-based method had its highest success rate when the image was smoothed with a smoothing factor (defined in Section 2.4.2) equal to 1. When no smoothing was applied, the accuracy was adversely impacted by the presence of interpolation artifacts [38]. When a smoothing factor of 2 or more was applied, blurring of small features and edges reduced the accuracy.

Normal and scarred skin typically have very different surface and subsurface structures. These structures have an influence on the success of the intensity-based method. Table 2 provides the registration accuracy and success rate of this method organized by tissue type. Registration of normal skin data sets resulted in a slightly lower error (38 µm) and higher success rate (79%) compared with scar data sets (45 µm and 57%, respectively). By contrast, tissue features did not affect the feature-based method, as the registration was based on delineation of the fiducial marker.

Table 2. Intensity-based registration accuracy and success rate for normal skin and scar data sets.

| Data sets | Average RMS error, µm (±SD)* | Success rate, % |
|---|---|---|
| Normal skin (n = 14) | 38 (±11) | 79 |
| Scar (n = 14) | 45 (±25) | 57 |

*Note that only successful registrations were used in computing the accuracies (average RMS errors). Results are based on the use of a smoothing factor of 1.

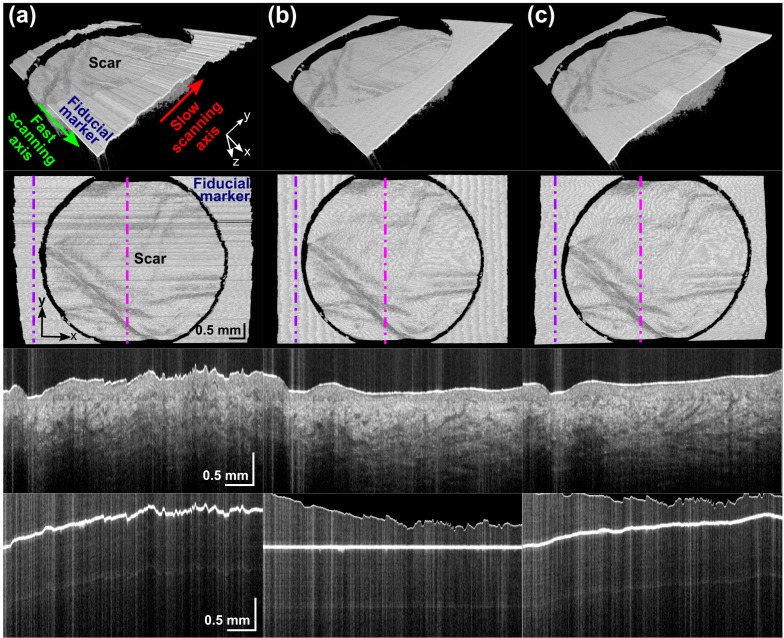

Figures 3 and 4 illustrate typical registration results for air-tissue and gel-tissue data sets, respectively. Figure 3(a) shows the impact of uncorrected motion artifact on an OCT acquisition of scarred skin. The scar surface and subsurface features such as blood vessels are significantly distorted. With air-tissue imaging, these distortions were primarily due to vertical motion. Both feature-based (Fig. 3(b)) and intensity-based (Fig. 3(c)) methods were shown to enhance the visualization and assessment of the scar by reducing the amount of motion artifact. The third row of Fig. 3 shows a representative slow-axis B-scan (yz-plane), the location of which is indicated by the vertical magenta dot-dashed line in the second row of Fig. 3. The bottom row shows a slow-axis B-scan of the fiducial marker (at a location indicated by the purple dot-dashed line in the second row). Errors in the intensity-based registration (right column) manifest as curvature in this otherwise straight line.

Fig. 3.

Typical results for an air-tissue data set: (a) before registration; (b) after feature-based registration; and (c) after intensity-based registration. For each of (a), (b), and (c): 3D solid render side view (Row 1) and top view (Row 2); a slow-axis B-scan across the scar (Row 3); and fiducial marker (Row 4) at positions indicated by the magenta and purple dot-dashed lines, respectively.

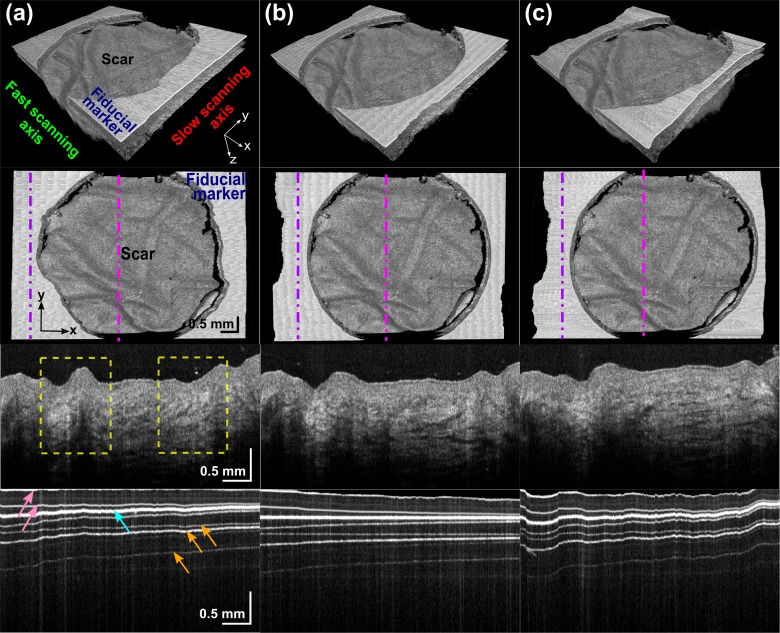

Fig. 4.

Typical results for a gel-tissue data set: (a) before registration; (b) after feature-based registration; and (c) after intensity-based registration. For each of (a), (b), and (c): 3D solid render side view (Row 1) and top view (Row 2); a slow-axis B-scan across the scar (Row 3) and fiducial marker (Row 4) at positions indicated by the magenta and purple dot-dashed lines, respectively. Yellow dashed boxes indicate the distortion of the scar features by motion in horizontal x direction. Arrows in Row 4: cyan – surface of the marker; pink – upper and lower interface of the glass cover slip; and orange – examples of artifacts caused by detector saturation.

Motion artifacts in data sets acquired with the gel-tissue imaging protocol were observed to occur primarily along the x and y axes, as illustrated in Fig. 4(a). The third row of Fig. 4 shows a slow-axis B-scan acquired along the yz-plane, and two examples of motion artifact are delineated by the yellow dashed boxes. Both registration methods were shown to effectively reduce motion artifacts in the gel-tissue data sets. With this imaging protocol, the glass cover slip is apparent, as shown in the fourth row of Fig. 4. The additional horizontal lines at depths below the fiducial marker are artifacts caused by detector saturation. These are unavoidable because the marker produces a very strong back reflection that exceeds the dynamic range of our detector. This signal, as well as its beat terms with the reflecting interfaces of the glass cover slip, is clipped by the finite dynamic range of the digitizer input, resulting in harmonics in the FFT reconstruction. Nonetheless, in the data sets acquired in this study, for which the glass cover slip was tilted to reduce the signal strength of the extraneous lines, the marker’s surface was found to reliably appear as the strongest and widest horizontal line.
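The origin of these harmonics can be illustrated with a toy NumPy simulation, which is an idealization and not a model of this system. The spectral interferogram of a single reflector is taken as a pure cosine in wavenumber, and the digitizer is modeled as hard clipping at half the fringe amplitude. The fringe frequency, record length, and clipping level are arbitrary choices. Symmetric clipping of a cosine adds energy at odd multiples of the fringe frequency, and the FFT maps each of these to a spurious reflector at a multiple of the true path difference.

```python
import numpy as np

n = 4096                                     # samples per wavenumber sweep (arbitrary)
k = np.arange(n)
f0 = 200                                     # fringe frequency in cycles per sweep (arbitrary)
fringe = np.cos(2 * np.pi * f0 * k / n)      # interferogram of a single strong reflector
clipped = np.clip(fringe, -0.5, 0.5)         # finite digitizer input range

spec_raw = np.abs(np.fft.rfft(fringe))
spec_clip = np.abs(np.fft.rfft(clipped))

# Third-harmonic amplitude relative to the fundamental, before and after clipping.
print(spec_raw[3 * f0] / spec_raw[f0], spec_clip[3 * f0] / spec_clip[f0])
```

With these settings, the unclipped fringe has essentially no energy at 3·f0, whereas after clipping the third harmonic reaches roughly 20% of the fundamental, which is enough to produce a clearly visible ghost line.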

4. Discussion

Motion artifact is a source of image distortion that can compromise the clinical utility of in vivo 3D-OCT imaging. The magnitude of the motion artifacts observed in our system was on the order of tens to hundreds of microns between adjacent fast-axis B-scans, with more severe distortions observed on images acquired from areas of the body more affected by involuntary movement, such as the neck, chest, and abdomen.

This study has proposed a novel method based on a fiducial marker for correction of motion artifacts in 3D. This feature-based approach was compared to a standard cross-correlation intensity-based registration method and found to be more accurate (smaller RMS error) and reliable (higher success rate) than the intensity-based method. It was observed that the two imaging protocols assessed were susceptible to different motion artifacts. In general, both registration methods were effective in reducing these artifacts for both imaging protocols, as evidenced by the smooth and continuous skin features observed in the slow-axis B-scan after registration (Figs. 3 and 4).

Potential disadvantages of the feature-based algorithm are the requirement for a fiducial marker to be present within the imaging field and the reduced field of view. The use of a fiducial marker may not be feasible in some clinical situations, such as with fresh wounds. Our experience is that the use of the marker did not present any complications in our clinical scanning conducted during follow-up burn scar assessments on successfully grafted scar tissue.

The success of the feature-based method also depends heavily on the success of the fiducial segmentation process. The most common errors arose in the gel-tissue imaging protocol, in which the glass cover slip resulted in additional horizontal lines in the field of view. Even though the marker in general produces a much stronger signal, in some scans the additional horizontal lines confounded its automatic detection. Optimal choices of threshold parameters T1 and T2 are important in reducing such errors. We note that the algorithm was found to be robust over a wide range of values for T1 (>70% for air-tissue; >95% for gel-tissue data sets) and T2 (40% to 70% for both imaging protocols). Such robustness suggests that the algorithm may be applicable to a wide range of data sets without the need for adjustment of these parameters.

The intensity-based method was found to be adversely influenced by surface furrows and corrugations, often misinterpreting these variations in surface topology as motion artifact and inappropriately attempting to smooth these features. Over an entire 3D-OCT scan, we observed that this effect introduced a bias in the positioning of the set of B-scans, resulting in substantial cumulative misplacement of the final B-scan. This reduced the measured accuracy and success rate of the intensity-based algorithm. The effect was most pronounced in the registration of scar data sets (Table 2), as most of the scars in this study had more significant surface corrugations than were present in normal skin. The distortion caused by this bias was confirmed by the deformation of the fiducial marker, to which the same transformations as the skin were applied. Similar ‘flattening’ artifacts have been reported in the registration of OCT images of the eye [20]. Even at higher OCT acquisition speeds, where motion between B-scans is reduced, this bias would still be present, because it arises from the skin’s surface corrugations rather than from motion.
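A toy Python simulation shows why a small systematic error matters when adjacent transformations are chained. The per-step bias and noise values are hypothetical and were chosen only for illustration; the number of B-scans matches the approximate volume size given in Section 2.5.

```python
import numpy as np

rng = np.random.default_rng(0)
n_bscans = 750      # approximate number of fast-axis B-scans per volume
bias_px = 0.2       # hypothetical systematic error per adjacent registration (pixels)
noise_px = 0.5      # hypothetical random error per adjacent registration (pixels)

steps = bias_px + noise_px * rng.standard_normal(n_bscans - 1)
position_error = np.concatenate([[0.0], np.cumsum(steps)])  # error of each B-scan vs. B-scan 0

# The bias accumulates linearly (~ n * bias); the random part only as ~ sqrt(n) * noise.
print(f"final B-scan offset: {position_error[-1]:.0f} px "
      f"(bias alone: {bias_px * (n_bscans - 1):.0f} px, "
      f"random-walk scale: {noise_px * np.sqrt(n_bscans - 1):.0f} px)")
```

Even a sub-pixel bias therefore dominates the final misplacement over hundreds of B-scans, while unbiased random errors partly cancel.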

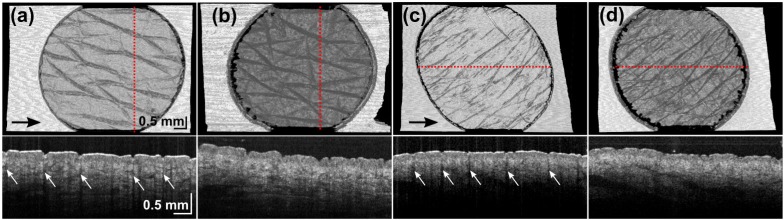

Intensity artifacts, which manifest as low-intensity vertical streaks located specifically under the skin surface furrows [31], partially account for the lower success rate of the intensity-based algorithm with the air-tissue imaging protocol compared with the gel-tissue protocol (50% versus 86%). These artifacts are more significant in the air-tissue protocol (Figs. 5(a), 5(c), Row 2) and are reduced after the application of ultrasound gel (Figs. 5(b), 5(d), Row 2). Intensity-based algorithms implicitly assume a very similar distribution of intensity values between adjacent B-scans, and local intensity artifacts undermine this assumption. Figures 5(a) and 5(c) show the resulting skew of the round hole of the fiducial marker in data sets with major surface furrows oriented diagonally to the fast-scanning axis. Figures 5(b) and 5(d) show the co-located scans acquired with the gel-tissue protocol, which reduced this artifact; the shape of the fiducial hole is better preserved in these images.

Fig. 5.

Intensity-based image registrations on normal skin ((a) and (b)) and scarred skin ((c) and (d)) with an air-tissue ((a) and (c)) and gel-tissue ((b) and (d)) imaging protocol. (a) and (b) are co-located, as are (c) and (d). Upper row: en face surface images. Lower row: OCT B-scans at the location indicated by the red dotted line. Arrows: black, direction of fast-scanning axis; white, intensity artifacts.
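A toy example can show how a shadowing streak beneath a diagonal furrow might bias an intensity-based similarity measure. In this hypothetical sketch, the tissue is laterally uniform and nothing moves; only the low-intensity streak shifts by two columns per B-scan because the furrow runs diagonally to the fast axis. An exhaustive search over integer lateral shifts (using a circular shift, chosen only to avoid edge effects in the toy data) then reports spurious lateral motion that accumulates across B-scans, one plausible mechanism for the skew of the fiducial hole in Figs. 5(a) and 5(c). The streak attenuation factor, the image sizes and all function names are assumptions.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation (Pearson coefficient) of two equally sized images."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / np.sqrt((a * a).sum() * (b * b).sum()))

def bscan_with_streak(streak_x, depth=128, width=64, surface=30, streak_w=4):
    """Laterally uniform synthetic tissue; a furrow at column streak_x shadows the tissue below."""
    z = np.arange(depth)[:, None]
    img = np.where(z >= surface, np.exp(-(z - surface) / 20.0), 0.0) * np.ones((1, width))
    img[surface:, streak_x:streak_x + streak_w] *= 0.2   # hypothetical low-intensity streak
    return img

def best_lateral_shift(ref, mov, max_shift=8):
    """Integer lateral shift of mov that maximizes NCC with ref (circular shift for simplicity)."""
    shifts = list(range(-max_shift, max_shift + 1))
    scores = [ncc(ref, np.roll(mov, s, axis=1)) for s in shifts]
    return shifts[int(np.argmax(scores))]

# No motion: only the streak moves, 2 columns per B-scan, because the furrow is diagonal.
bscans = [bscan_with_streak(10 + 2 * k) for k in range(10)]
lateral = [best_lateral_shift(bscans[k], bscans[k + 1]) for k in range(9)]
skew = np.cumsum(lateral)   # accumulated spurious lateral offset across the volume
```

Each pair is "corrected" by -2 columns, giving an 18-column lateral skew by the last B-scan even though the tissue never moved.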

While failure of the intensity-based algorithm sometimes resulted in subtle corruption of the skin features, we observed that the feature-based algorithm tended to clearly succeed or clearly fail. Such catastrophic failures are more easily detected by manual inspection of the registered data set. While all algorithms will fail in a subset of situations, we consider it desirable in a clinical setting for such failures to be easily detectable.

The motion correction algorithms outlined in this paper do not account for motion along the slow-scanning direction (y-axis). Such motion will result in uneven spacing of the B-scans. The well-defined geometry of the fiducial marker may allow for extensions to account for such motion, by assessing the shape of the flat marker in the en face plane. For example, with the circular marker utilized in this paper, we note that it may be feasible to utilize a circular Hough transform [39] to automatically extract its correct shape.
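The following is a generic circular Hough transform in its textbook voting form, not an implementation from this study. It assumes a binary edge map of the rim of the marker's round hole has already been extracted from an en face image; the function name, the angular sampling and the candidate radius range are illustrative choices. Each edge pixel votes once for every candidate center lying at distance r from it, and the (center, radius) with the most votes is returned.

```python
import numpy as np

def circular_hough(edges, radii, n_angles=180):
    """Return (cx, cy, r) of the best-supported circle in a 2D boolean edge map."""
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    pt = np.repeat(np.arange(xs.size), n_angles)          # index of the voting edge pixel
    best, best_votes = None, -1
    for r in radii:
        # candidate centers at distance r from every edge pixel, for every angle
        cx = np.rint(xs[:, None] - r * np.cos(theta)).astype(int).ravel()
        cy = np.rint(ys[:, None] - r * np.sin(theta)).astype(int).ravel()
        ok = (cx >= 0) & (cx < w) & (cy >= 0) & (cy < h)
        # each edge pixel votes at most once per candidate center
        keys = np.unique((pt[ok] * h + cy[ok]) * w + cx[ok])
        acc = np.bincount(keys % (h * w), minlength=h * w)  # accumulator, flattened (cy, cx)
        idx = int(np.argmax(acc))
        if acc[idx] > best_votes:
            best_votes = int(acc[idx])
            best = (idx % w, idx // w, r)
    return best

# Synthetic en face edge map: rim of a hole of radius 12 px centered at (x=40, y=30).
h, w = 64, 80
edges = np.zeros((h, w), dtype=bool)
phi = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
edges[np.rint(30 + 12 * np.sin(phi)).astype(int), np.rint(40 + 12 * np.cos(phi)).astype(int)] = True
cx, cy, r = circular_hough(edges, radii=range(8, 17))
```

Comparing the recovered circle with the marker's known diameter, or checking how well the rim is described by a circle at all, is one conceivable way to flag uneven B-scan spacing along the slow axis; this remains speculative.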

Computation time of the registration method has important implications for the feasibility of 3D-OCT in on-site clinical examination. The feature-based method was found to be 21 times faster than the intensity-based method because it operates on a small number of points and is not iterative. By contrast, the intensity-based method calculates a similarity measure from all pixel values in each pair of adjacent fast-axis B-scans and optimizes the geometrical transform between each pair iteratively. This large difference in computational cost between the two algorithms is in agreement with comparisons of feature-based and intensity-based registration algorithms undertaken in other imaging modalities [29].
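To make the cost difference concrete, the sketch below shows one way a flat marker can drive a closed-form, non-iterative correction of each B-scan. It is hypothetical and not the published algorithm: it assumes the upper surface of the marker has already been delineated as a few dozen (x, z) points per B-scan, fits a straight line to them by linear least squares (`numpy.polyfit`), and derives the in-plane rotation and axial shift that map that line onto a common reference depth. The function names, the reference depth and the simulated motion are assumptions.

```python
import numpy as np

def marker_line(x, z):
    """Closed-form least-squares fit z = slope * x + intercept to delineated marker points."""
    slope, intercept = np.polyfit(x, z, 1)
    return slope, intercept

def marker_correction(x, z, z_ref):
    """Rotation (about the origin) and axial shift that make the fitted marker line
    horizontal at depth z_ref. Returns (angle in radians, axial shift)."""
    slope, intercept = marker_line(x, z)
    angle = -np.arctan(slope)                 # rotating by this angle levels the line
    dz = z_ref - intercept * np.cos(angle)    # levelled line sits at intercept * cos(angle)
    return angle, dz

def apply_correction(x, z, angle, dz):
    """Apply the rigid in-plane transform to B-scan coordinates (x lateral, z axial)."""
    c, s = np.cos(angle), np.sin(angle)
    return x * c - z * s, x * s + z * c + dz

rng = np.random.default_rng(1)
x = np.arange(0.0, 200.0, 5.0)   # lateral positions of marker-surface points (pixels)
z_ref = 25.0                     # hypothetical reference depth of the marker surface
corrected = []
for k in range(5):
    # simulated bulk motion of this B-scan: a small tilt plus an axial offset
    slope, offset = rng.uniform(-0.05, 0.05), rng.uniform(20.0, 40.0)
    z = slope * x + offset       # marker surface as delineated in this B-scan
    angle, dz = marker_correction(x, z, z_ref)
    corrected.append(apply_correction(x, z, angle, dz)[1])
```

The work per B-scan is a single least-squares fit over the marker points, with no pixel-wise similarity measure and no optimization loop. A flat marker fixes only tilt and axial offset in this sketch; a lateral offset would need another feature, such as the edge of the marker's hole.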

5. Conclusion

We have described a method for motion correction of 3D-OCT scans of skin using a fiducial marker with feature-based image registration. The method was quantitatively compared against a cross-correlation intensity-based registration method, with accuracy assessed against manual motion correction. The methods were tested on a total of 28 data sets acquired using two imaging protocols: from air into tissue, and with ultrasound gel as an optical coupling layer. The feature-based method was shown to be more accurate and reliable, with an average RMS error below 20 µm and a success rate above 90%. The intensity-based method had an average RMS error ranging from 36 to 45 µm and a success rate ranging from 50% to 86%. The performance of both algorithms was found to be influenced by the imaging protocol, and the intensity-based method was additionally affected by the degree of corrugation of the skin surface. Our results show that the use of a fiducial marker can greatly aid motion correction of in vivo 3D-OCT skin scans.

Acknowledgments

The authors gratefully acknowledge the following colleagues who contributed to this study: Rodney W. Kirk, Lixin Chin, Ngie Min Ung, Pei Jun Gong and Timothy R. Hillman. The authors also thank Sharon Rowe at the Telstra Burns Reconstruction and Rehabilitation Unit, Royal Perth Hospital, for her help in patient management. Y. M. Liew is supported by a scholarship from The University of Western Australia. R. A. McLaughlin is supported by funding from the Cancer Council Western Australia, and this study is in part supported by the National Breast Cancer Foundation, Australia.

References and links

1. Welzel J., Lankenau E., Birngruber R., Engelhardt R., “Optical coherence tomography of the human skin,” J. Am. Acad. Dermatol. 37(6), 958–963 (1997). 10.1016/S0190-9622(97)70072-0

2. Gambichler T., Jaedicke V., Terras S., “Optical coherence tomography in dermatology: technical and clinical aspects,” Arch. Dermatol. Res. 303(7), 457–473 (2011). 10.1007/s00403-011-1152-x

3. Mogensen M., Thrane L., Joergensen T. M., Andersen P. E., Jemec G. B. E., “Optical coherence tomography for imaging of skin and skin diseases,” Semin. Cutan. Med. Surg. 28(3), 196–202 (2009). 10.1016/j.sder.2009.07.002

4. Steiner R., Kunzi-Rapp K., Scharffetter-Kochanek K., “Optical coherence tomography: clinical applications in dermatology,” Med. Laser Appl. 18(3), 249–259 (2003). 10.1078/1615-1615-00107

5. Mogensen M., Nürnberg B. M., Forman J. L., Thomsen J. B., Thrane L., Jemec G. B., “In vivo thickness measurement of basal cell carcinoma and actinic keratosis with optical coherence tomography and 20-MHz ultrasound,” Br. J. Dermatol. 160(5), 1026–1033 (2009). 10.1111/j.1365-2133.2008.09003.x

6. Gambichler T., Künzlberger B., Paech V., Kreuter A., Boms S., Bader A., Moussa G., Sand M., Altmeyer P., Hoffmann K., “UVA1 and UVB irradiated skin investigated by optical coherence tomography in vivo: a preliminary study,” Clin. Exp. Dermatol. 30(1), 79–82 (2005). 10.1111/j.1365-2230.2004.01690.x

7. Sakai S., Yamanari M., Miyazawa A., Matsumoto M., Nakagawa N., Sugawara T., Kawabata K., Yatagai T., Yasuno Y., “In vivo three-dimensional birefringence analysis shows collagen differences between young and old photo-aged human skin,” J. Invest. Dermatol. 128(7), 1641–1647 (2008). 10.1038/jid.2008.8

8. Salvini C., Massi D., Cappetti A., Stante M., Cappugi P., Fabbri P., Carli P., “Application of optical coherence tomography in non-invasive characterization of skin vascular lesions,” Skin Res. Technol. 14(1), 89–92 (2008).

9. Coulman S. A., Birchall J. C., Alex A., Pearton M., Hofer B., O’Mahony C., Drexler W., Považay B., “In vivo, in situ imaging of microneedle insertion into the skin of human volunteers using optical coherence tomography,” Pharm. Res. 28(1), 66–81 (2011). 10.1007/s11095-010-0167-x

10. Morsy H., Kamp S., Thrane L., Behrendt N., Saunder B., Zayan H., Elmagid E. A., Jemec G. B., “Optical coherence tomography imaging of psoriasis vulgaris: correlation with histology and disease severity,” Arch. Dermatol. Res. 302(2), 105–111 (2010). 10.1007/s00403-009-1000-4

11. Hinz T., Ehler L. K., Voth H., Fortmeier I., Hoeller T., Hornung T., Schmid-Wendtner M. H., “Assessment of tumor thickness in melanocytic skin lesions: comparison of optical coherence tomography, 20-MHz ultrasound and histopathology,” Dermatology 223(2), 161–168 (2011). 10.1159/000332845

12. Alex A., Povazay B., Hofer B., Popov S., Glittenberg C., Binder S., Drexler W., “Multispectral in vivo three-dimensional optical coherence tomography of human skin,” J. Biomed. Opt. 15(2), 026025 (2010). 10.1117/1.3400665

13. Mogensen M., Morsy H. A., Thrane L., Jemec G. B. E., “Morphology and epidermal thickness of normal skin imaged by optical coherence tomography,” Dermatology 217(1), 14–20 (2008). 10.1159/000118508

14. Enfield J., Jonathan E., Leahy M., “In vivo imaging of the microcirculation of the volar forearm using correlation mapping optical coherence tomography (cmOCT),” Biomed. Opt. Express 2(5), 1184–1193 (2011). 10.1364/BOE.2.001184

15. Zhang E. Z., Povazay B., Laufer J., Alex A., Hofer B., Pedley B., Glittenberg C., Treeby B., Cox B., Beard P., Drexler W., “Multimodal photoacoustic and optical coherence tomography scanner using an all optical detection scheme for 3D morphological skin imaging,” Biomed. Opt. Express 2(8), 2202–2215 (2011). 10.1364/BOE.2.002202

16. Mogensen M., Joergensen T. M., Nürnberg B. M., Morsy H. A., Thomsen J. B., Thrane L., Jemec G. B. E., “Assessment of optical coherence tomography imaging in the diagnosis of non-melanoma skin cancer and benign lesions versus normal skin: observer-blinded evaluation by dermatologists and pathologists,” Dermatol. Surg. 35(6), 965–972 (2009). 10.1111/j.1524-4725.2009.01164.x

17. Forsea A. M., Carstea E. M., Ghervase L., Giurcaneanu C., Pavelescu G., “Clinical application of optical coherence tomography for the imaging of non-melanocytic cutaneous tumors: a pilot multi-modal study,” J. Med. Life 3(4), 381–389 (2010).

18. Ricco S., Chen M., Ishikawa H., Wollstein G., Schuman J., “Correcting motion artifacts in retinal spectral domain optical coherence tomography via image registration,” in Medical Image Computing and Computer-Assisted Intervention—MICCAI 2009 (Springer, 2009), pp. 100–107.

19. Jørgensen T. M., Thomadsen J., Christensen U., Soliman W., Sander B., “Enhancing the signal-to-noise ratio in ophthalmic optical coherence tomography by image registration—method and clinical examples,” J. Biomed. Opt. 12(4), 041208 (2007). 10.1117/1.2772879

20. Zawadzki R. J., Fuller A. R., Choi S. S., Wiley D. F., Hamann B., Werner J. S., “Correction of motion artifacts and scanning beam distortions in 3D ophthalmic optical coherence tomography imaging,” Proc. SPIE 6426, 642607 (2007). 10.1117/12.701524

21. Antony B., Abràmoff M. D., Tang L., Ramdas W. D., Vingerling J. R., Jansonius N. M., Lee K., Kwon Y. H., Sonka M., Garvin M. K., “Automated 3-D method for the correction of axial artifacts in spectral-domain optical coherence tomography images,” Biomed. Opt. Express 2(8), 2403–2416 (2011). 10.1364/BOE.2.002403

22. Kang W., Wang H., Wang Z., Jenkins M. W., Isenberg G. A., Chak A., Rollins A. M., “Motion artifacts associated with in vivo endoscopic OCT images of the esophagus,” Opt. Express 19(21), 20722–20735 (2011). 10.1364/OE.19.020722

23. McLaughlin R. A., Armstrong J. J., Becker S., Walsh J. H., Jain A., Hillman D. R., Eastwood P. R., Sampson D. D., “Respiratory gating of anatomical optical coherence tomography images of the human airway,” Opt. Express 17(8), 6568–6577 (2009). 10.1364/OE.17.006568

24. Jenkins M. W., Chughtai O. Q., Basavanhally A. N., Watanabe M., Rollins A. M., “In vivo gated 4D imaging of the embryonic heart using optical coherence tomography,” J. Biomed. Opt. 12(3), 030505 (2007). 10.1117/1.2747208

25. Klein T., Wieser W., Eigenwillig C. M., Biedermann B. R., Huber R., “Megahertz OCT for ultrawide-field retinal imaging with a 1050 nm Fourier domain mode-locked laser,” Opt. Express 19(4), 3044–3062 (2011). 10.1364/OE.19.003044

26. Hill D. L. G., Batchelor P. G., Holden M., Hawkes D. J., “Medical image registration,” Phys. Med. Biol. 46(3), R1–R45 (2001). 10.1088/0031-9155/46/3/201

27. Penney G. P., Weese J., Little J. A., Desmedt P., Hill D. L. G., Hawkes D. J., “A comparison of similarity measures for use in 2-D-3-D medical image registration,” IEEE Trans. Med. Imaging 17(4), 586–595 (1998). 10.1109/42.730403

28. Kraus M. F., Potsaid B., Mayer M. A., Bock R., Baumann B., Liu J. J., Hornegger J., Fujimoto J. G., “Motion correction in optical coherence tomography volumes on a per A-scan basis using orthogonal scan patterns,” Biomed. Opt. Express 3(6), 1182–1199 (2012). 10.1364/BOE.3.001182

29. McLaughlin R. A., Hipwell J., Hawkes D. J., Noble J. A., Byrne J. V., Cox T. C., “A comparison of a similarity-based and a feature-based 2-D-3-D registration method for neurointerventional use,” IEEE Trans. Med. Imaging 24(8), 1058–1066 (2005). 10.1109/TMI.2005.852067

30. Li Y., Gregori G., Knighton R. W., Lujan B. J., Rosenfeld P. J., “Registration of OCT fundus images with color fundus photographs based on blood vessel ridges,” Opt. Express 19(1), 7–16 (2011). 10.1364/OE.19.000007

31. Liew Y. M., McLaughlin R. A., Wood F. M., Sampson D. D., “Reduction of image artifacts in three-dimensional optical coherence tomography of skin in vivo,” J. Biomed. Opt. 16(11), 116018 (2011). 10.1117/1.3652710

32. Bayat A., McGrouther D. A., Ferguson M. W. J., “Skin scarring,” BMJ 326(7380), 88–92 (2003). 10.1136/bmj.326.7380.88

33. Amadeu T., Braune A., Mandarim-de-Lacerda C., Porto L. C., Desmoulière A., Costa A., “Vascularization pattern in hypertrophic scars and keloids: a stereological analysis,” Pathol. Res. Pract. 199(7), 469–473 (2003). 10.1078/0344-0338-00447

34. Duda R. O., Hart P. E., “Use of the Hough transformation to detect lines and curves in pictures,” Commun. ACM 15(1), 11–15 (1972). 10.1145/361237.361242

35. Golemati S., Stoitsis J., Sifakis E. G., Balkizas T., Nikita K. S., “Using the Hough transform to segment ultrasound images of longitudinal and transverse sections of the carotid artery,” Ultrasound Med. Biol. 33(12), 1918–1932 (2007). 10.1016/j.ultrasmedbio.2007.05.021

36. Zhu Y. M., Cochoff S. M., Sukalac R., “Automatic patient table removal in CT images,” J. Digit. Imaging (2012), 6 pages, online first.

37. Lewis R. M., Torczon V., “Pattern search algorithms for bound constrained minimization,” SIAM J. Optim. 9(4), 1082–1099 (1999). 10.1137/S1052623496300507

38. Rohde G. K., Aldroubi A., Healy D. M. Jr., “Interpolation artifacts in sub-pixel image registration,” IEEE Trans. Image Process. 18(2), 333–345 (2009). 10.1109/TIP.2008.2008081

39. Davies E. R., Machine Vision: Theory, Algorithms, Practicalities, 3rd ed. (Academic, 2005), Chap. 9.