Abstract

A major challenge in the analysis of population genomics data consists of isolating signatures of natural selection from background noise caused by random drift and gene flow. Analyses of massive amounts of data from many related populations require high-performance algorithms to determine the likelihood of different demographic scenarios that could have shaped the observed neutral single nucleotide polymorphism (SNP) allele frequency spectrum. In many areas of applied mathematics, Fourier Transforms and Spectral Methods are firmly established tools to analyze spectra of signals and model their dynamics as solutions of certain Partial Differential Equations (PDEs). When spectral methods are applicable, they have excellent error properties and are the fastest possible in high dimension; see [15]. In this paper we present an explicit numerical solution, using spectral methods, to the forward Kolmogorov equations for a Wright-Fisher process with migration of K populations, influx of mutations, and multiple population splitting events.

1. Introduction

Natural selection is the force that drives the fixation of advantageous phenotypic traits, and represses the increase in frequency of deleterious ones. The growing amount of genome-wide sequence and polymorphism data motivates the development of new tools for the study of natural selection. Distinguishing between genuine selection and the effect of demographic history, such as gene flow and population bottlenecks, on genetic variation presents a major technical challenge. A traditional population genetics approach to the problem focuses on computing neutral allele frequency spectra to infer signatures of natural selection as deviations from neutrality. Diffusion theory provides a set of classical techniques to predict such frequency spectra [8, 21, 4], while the connection between diffusion and the theory of Partial Differential Equations (PDEs) allows for borrowing well-established high-performance algorithms from applied mathematics.

The theory of predicting the frequency spectrum under irreversible mutation was developed by Fisher, Wright and Kimura [6, 22, 13]. In particular Kimura [14] noted that this theory was applicable to many nucleotide positions and introduced the infinite sites model. The joint frequency spectra of neutral alleles can be obtained from the coalescent model [20] or by Monte-Carlo simulations [11]. The analysis in terms of diffusion theory is mathematically simpler and can incorporate natural selection easily [8, 21, 4]. In this paper, we model the demographic history of K different populations that are descended by K − 1 population splitting events from a common ancestral population. The populations evolve with gene exchange under an infinite sites mutation model. We introduce a powerful numerical scheme to solve the associated forward diffusion equations. After introducing a regularized discretization of the problem, we show how spectral methods are applied to compute theoretical Non-Equilibrium Frequency Spectra.

The introduction of spectral methods is usually attributed to [16], although they are based on older precursors, such as finite element methods, and Ritz methods in quantum mechanics [17]. The basic idea consists of using finite truncations of expansions by complete bases of functions to approximate the solutions of a PDE. This truncation allows the transformation of a diffusion PDE into a finite system of Ordinary Differential Equations (ODEs). The motivation to use these methods relies on their excellent error properties, and their high speed. In general, they are the preferred methods when the dimension of the domain is high [15], and the solutions to the PDE are smooth. This is because the number of basis functions that one needs to approximate the solutions of a PDE is much lower than the number of grid-points that one needs in a finite difference scheme, working at the same level of accuracy [7].

As we show in this paper, the convergence of spectral methods depends on the smoothness of the solutions to be approximated. In many situations, solutions to diffusion equations have good analytical properties, and spectral methods can be applied. However, the application of these methods to the problem that interests us here requires a proper discretization of the problem. Influx of mutations, population splitting events and boundary conditions have to be properly regularized before one applies these methods and exploits their high-performance properties.

1.1. Non-Equilibrium Frequency Spectra

The K-dimensional Allele Frequency Spectrum (AFS) summarizes the joint allele frequencies in K populations. We distinguish between the AFS, which consists of the unknown distribution of allele frequencies in K natural populations, and observations of the AFS. Given DNA sequence data from multiple individuals in K populations, the resulting observation of the AFS is a K-dimensional matrix with the allele counts (for a complete discussion on this see [20]). Each entry of the matrix consists of the number of diallelic polymorphisms in which the derived allele was found. In other words, each entry of the AFS matrix is the observed number of derived alleles, ja, found in the corresponding number of samples, na, from population a (1 ≤ a ≤ K).

The full AFS is the real distribution of joint allele frequencies at the time when the samples were collected. If the total number of diploid individuals in the ath population is Na ≫ na, the natural allele frequencies x1 = i1/(2N1), x2 = i2/(2N2), …, xK = iK/(2NK) (with ia the number of copies of the derived allele in the ath population) can be seen as points in the K-dimensional cube [0, 1]^K. Thus, given the frequencies of every diallelic polymorphism (which we index by r), the AFS can be expressed as the probability density function

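$$\phi(x) = \frac{1}{S} \sum_{r=1}^{S} \prod_{a=1}^{K} \delta\left(x_a - x_{a,r}\right) \tag{1.1}$$

Here, S is the total number of diallelic polymorphisms segregating in the K populations, x_{a,r} is the frequency of the rth polymorphism in population a, and δ( ) is the Dirac delta function.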

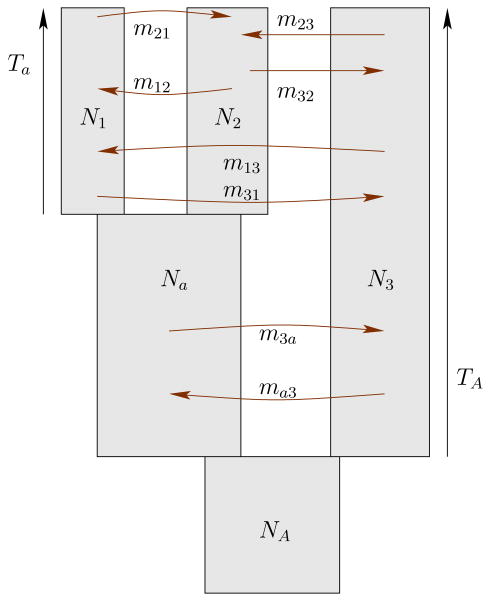

Our goal is to determine this AFS under the infinite sites model. Any demographic scenario in the model is defined through a population tree topology T, such as in Fig. 1, and a set of parameters that specify the effective population sizes Ne,a, splitting times tA, and migration rates mab at different times. Hence, 2Ne,amab is defined as the number of haploid genomes that the population a receives from b per generation. For simplicity, we refer to the set of parameters that specify a unique demographic scenario as Θ.

Figure 1.

A graphical representation of a model for the demographic history of three populations.

Thus, given a population tree topology and a choice of parameters, we will compute theoretical densities of derived joint allele frequencies as functions on [0, 1]^K of the type

$$\phi(x \mid \Theta, T) = \sum_{i_1=0}^{\Lambda-1} \sum_{i_2=0}^{\Lambda-1} \cdots \sum_{i_K=0}^{\Lambda-1} \alpha_{i_1 \cdots i_K}\, R_{i_1}(x_1)\, R_{i_2}(x_2) \cdots R_{i_K}(x_K) \tag{1.2}$$

with Λ a truncation parameter, {Ri(x)} a complete basis of functions on the Hilbert space L2[0, 1] to be defined below, and αi1⋯iK the coefficients associated with the projection of ϕ(x∣Θ, T) onto the basis spanned by {Ri1(x1)Ri2(x2) ⋯ RiK(xK)}. These continuous densities relate to the expectation of an observation of the AFS via standard binomial sampling formulae

| (1.3) |

Using Eq. (1.3) we can compare model and data, for instance, by means of maximum likelihood.
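The binomial sampling step can be illustrated with a minimal one-population sketch. It assumes that each entry of the expected spectrum is the integral over [0, 1] of the binomial probability of observing j derived alleles in n sampled chromosomes, weighted by the density; the function name `expected_afs_1d`, the Gauss-Legendre quadrature, and the omission of any overall factor (such as S) are illustrative choices, not the paper's implementation.

```python
import math
import numpy as np

def expected_afs_1d(density, n, num_nodes=200):
    """Expected weight of each count j = 0..n in a sample of n chromosomes.

    Assumption: entry j is the integral over [0, 1] of
    Binomial(j; n, x) * density(x); overall normalisation is left to the caller.
    """
    # Gauss-Legendre nodes and weights, mapped from [-1, 1] to [0, 1].
    nodes, weights = np.polynomial.legendre.leggauss(num_nodes)
    x = 0.5 * (nodes + 1.0)
    w = 0.5 * weights
    phi = density(x)
    afs = np.empty(n + 1)
    for j in range(n + 1):
        binom_pmf = math.comb(n, j) * x**j * (1.0 - x) ** (n - j)
        afs[j] = np.sum(w * binom_pmf * phi)
    return afs

# A flat density spreads the sample counts evenly over j = 0..n.
uniform_afs = expected_afs_1d(lambda x: np.ones_like(x), n=10)
```

For K populations the same idea would apply with a product of binomial factors, one per population, and a K-dimensional quadrature.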

The major goals of this paper are twofold. First, we present the finite Markov chain and diffusion approximation, associated with the infinite sites model used to compute neutral allele spectra. A special emphasis is made on the boundary conditions and the influx of mutations, because of their potential singular behavior. Second, we introduce spectral methods and show how to transform the diffusion equations into coupled systems of Ordinary Differential Equations (ODEs) that can be integrated numerically. In particular, we introduce a set of techniques to handle population splitting events, mutations and boundary interactions, that protect the numerical setup against Gibbs phenomena.¹ A detailed analysis of the stability of the methods as a function of the model parameters, and the control of the numerical error, are included at the end of the paper.

2. Finite Markov chain model

The evolutionary dynamics of diallelic SNP frequencies in a randomly-mating diploid population can be modeled using a finite Markov chain, with discrete time t representing non-overlapping generations. For simplicity, we consider first one population with N diploid individuals, and later will extend the results to more than one population.

The state of the Markov chain at time t is described by the vector fj(t), with 1 ≤ j ≤ 2N. Each entry, fj(t), consists of the expected number of loci at which the derived state is found on j chromosomes. Therefore, $\sum_{j=1}^{2N-1} f_j(t)$ is the expected number of polymorphic loci segregating in the population at time t, and f2N(t) is the expected number of loci fixed for the derived state. The model assumes that the total number of sites per individual is so large, and the mutation rate per site so low, that whenever a mutation appears, it always does so on a previously homoallelic site [14].

The vector fj(t), is also called the density of states. Under the assumption of free recombination between loci and constant mutation rate, the time evolution of fj(t) under random drift and mutation influx is described by the difference equations

$$f_j(t+1) = \sum_{i=1}^{2N} P(j \mid i)\, f_i(t) + \mu_j \tag{2.1}$$

In its simplest form, one assumes that the alleles in generation t + 1 are derived by sampling with replacement from the alleles in generation t. Therefore, the transition coefficients in the chain Eq. (2.1) are

$$P(j \mid i) = \binom{2N}{j} \left(\frac{i}{2N}\right)^{j} \left(1 - \frac{i}{2N}\right)^{2N-j} \tag{2.2}$$

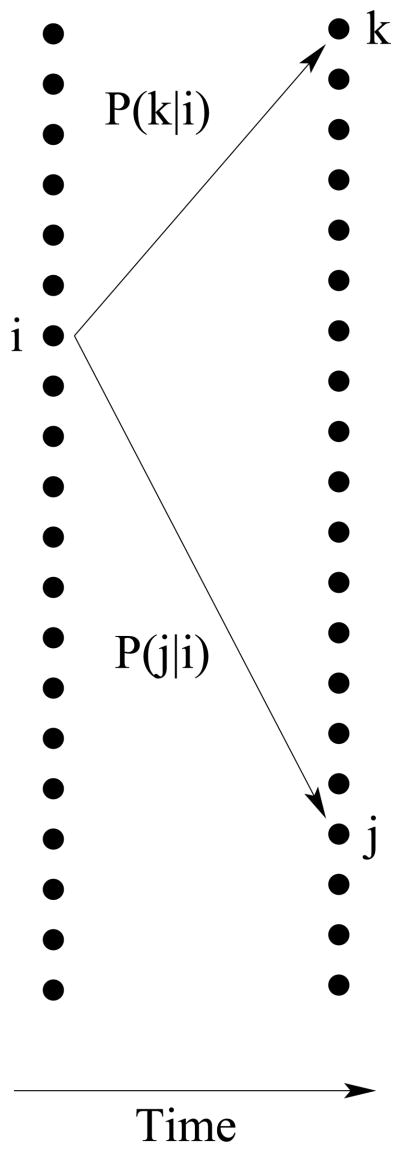

This describes stochastic changes in the state after a discrete generation, Fig. 2. The second term in Eq. (2.1) represents the influx of polymorphisms. Mutations are responsible for the creation of new polymorphisms in the population. The influx of mutations depends on the expected number of sites 2Nν, in which new mutations appear in the population each generation2. If we assume that at each generation, every new mutation is found in just one chromosome, then

Figure 2.

One unit of time transition in a finite Markov chain.

| (2.3) |

for the mutation alone [4]. The term δi,j in Eq. (2.3) is the Kronecker symbol, with δ1,j = 1 if j = 1 and δ1,j = 0 otherwise.
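The chain described above can be sketched in a few lines. This is a minimal illustration, assuming the standard binomial (sampling with replacement) transition probabilities and a constant influx of 2Nν new mutations on a single chromosome; the function names `wright_fisher_matrix` and `step`, and the parameter values, are hypothetical.

```python
import math
import numpy as np

def wright_fisher_matrix(N):
    """P[j, i] = probability of j derived copies next generation given i now."""
    n = 2 * N
    P = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        p = i / n
        for j in range(n + 1):
            P[j, i] = math.comb(n, j) * p**j * (1.0 - p) ** (n - j)
    return P

def step(f, P, N, nu):
    """One generation: drift via P, then influx of 2*N*nu new singletons."""
    f_next = P @ f
    f_next[0] = 0.0          # loci that lost the derived allele are not tracked
    f_next[1] += 2 * N * nu  # new mutations enter on a single chromosome
    return f_next

N, nu = 10, 0.5              # illustrative values only
P = wright_fisher_matrix(N)
f = np.zeros(2 * N + 1)      # index j = number of derived copies, 0..2N
for _ in range(3):
    f = step(f, P, N, nu)
```

The array keeps an entry for j = 0 only for indexing convenience; it is reset every generation, so the tracked states are 1 ≤ j ≤ 2N as in the text.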

2.1. Effective Mutation Densities

In applications of the infinite sites model, one usually finds that the census population size and the effective population size that drives random drift in Eq. (2.2) are not the same [14]. For this reason, we distinguish between Ne, the effective population size that defines the variance of the Wright-Fisher process in Eq. (2.2), and the census population size N that can be used to define the allele frequencies x = i/(2N). Therefore, the smallest frequency, x = 1/(2N), with which new mutations enter populations will be sensitive to small stochastic fluctuations in the census population size, even if the effective population size remains constant. This is important when we take the diffusion limit of Eq. (2.1), and the stochastic process is described by the continuous variable x = j/(2N), rather than the integer j. If we consider a constant census population size, the term Eq. (2.3) in the Markov chain is substituted by

| (2.4) |

in the diffusion limit. However, if the census population size per generation is a stochastic variable distributed as F(N)dN, the diffusion limit of the mutation term will be

| (2.5) |

We expect that μ(x) will have some general properties, independent of the particular characteristics of F(N)dN. For instance, in many realistic scenarios μ(x) will be a function that is nearly zero for frequencies x > x*, with x* = 1/(2Nmin) a very small characteristic frequency associated with the inverse of the minimum census population size.

Other phenomena that might not be properly captured by the simple mutational model in Eqs. (2.3) and (2.4), consist of organisms with partially overlapping generations, and organisms in which mutations in gametes arise from somatic mutations. When an organism has a mating pattern that violates the assumption of non-overlapping generations (e.g. humans), the generation time in the model Eq. (2.2) is interpreted as an average generation time. Hence, during a generation unit, there is time enough for some individuals to be born with new mutations at the beginning of the generation time, and to reproduce themselves by the end of a generation unit. This implies that after one average generation, there can exist new identical mutations in more than one chromosome. Similarly, when the gametes of an organism originate from somatic tissue, they inherit de novo mutations that arose in the soma after multiple cell divisions. If the individuals of this organism can have more than one offspring per generation, one expects to find the same new mutation, in the same site, in more than one chromosome per generation.

Because of these different biological phenomena, we believe that the notion of effective mutation density, μ(x), is a more general way to describe mutations in natural populations. The effective mutation density describes the average frequency distribution of new mutations per generation, in one population, after taking into account the effects due to stochastic changes in census population size, overlapping generations and/or mutations of somatic origin. From a numerical point of view, effective mutation densities are a useful tool to avoid the numerical instabilities associated with polynomial expansions of non-smooth functions (e.g. Dirac deltas) that appear in the standard approaches to mutation influx. As we show later when we discuss the continuous limit of the infinite sites model, different effective mutation densities can yield predictions which are identical to predictions of models based on Eq. (2.4).

2.2. More than one population

Here, we show how to incorporate arbitrarily many populations, and migration flow between them. Generally, for the state in the chain we consider a discrete random variable X⃗ which takes values in the K-dimensional lattice of derived allele frequencies:

| (2.6) |

with K the number of populations, and 0 ≤ ia ≤ 2Na. For simplicity, we use a single index notation, 0 ≤ I ≤ ∏a 2Na, to label the states where the random variable X⃗ takes values. The random variable X⃗ = I jumps to the state X⃗ = J at a discrete generation unit, with prescribed probability P(J∣I). The density of states in this multi-population setup is fI(t), and the difference equations that describe its dynamics are equivalent to Eq. (2.1). The transition matrix P̂ = P(J∣I) incorporates random drift and migration events between populations. New mutations enter each population with an effective mutation vector μ⃗

| (2.7) |

In the standard model, the mutation density is concentrated on the states with a single derived copy in one population, as in Eq. (2.3).

The Markov chain for a Wright-Fisher process for two independent populations is defined by the transition matrix

| (2.8) |

where Bi(j; k, p) stands for the binomial distribution with index k and parameter p. Also, we can introduce migration between populations, by sampling a constant number of alleles nab in population a that become part of the allele pool in population b. Thus, in a model of two populations with migration, one considers the transition matrix

| (2.9) |

In this model the parameter space is given by the effective population sizes Ne,1 and Ne,2, and the scaled migration rates n21 and n12. This process is generalizable to an arbitrary number of populations in a straightforward way.

3. Diffusion approximation

Diffusion approximations to finite Markov chains have a distinguished history in population genetics, dating back to Wright and Fisher. This approach can be used to describe the time evolution of derived allele frequencies in several populations, with relatively large population sizes. This approximation applies when the population sizes Na are large (if Ne > 50, the binomial distribution with index 2Ne can be accurately approximated by the Gaussian distribution used in the diffusion limit) and migration rates are of order 1/Ne.
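The quality of the Gaussian approximation to the binomial can be checked numerically. The sketch below compares the binomial distribution with index 2Ne to the Gaussian density with the same mean and variance, and reports the largest pointwise gap; the function name `binomial_vs_gaussian` and the choice of the maximum absolute difference as a measure are illustrative assumptions.

```python
import math
import numpy as np

def binomial_vs_gaussian(Ne, x):
    """Max absolute gap between Binomial(2*Ne, x) and its Gaussian match."""
    n = 2 * Ne
    j = np.arange(n + 1)
    pmf = np.array([math.comb(n, k) * x**k * (1 - x) ** (n - k) for k in j])
    mean, var = n * x, n * x * (1 - x)
    gauss = np.exp(-((j - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)
    return float(np.max(np.abs(pmf - gauss)))

gap_small = binomial_vs_gaussian(Ne=5, x=0.5)    # small population
gap_large = binomial_vs_gaussian(Ne=50, x=0.5)   # threshold quoted in the text
```

The gap shrinks as Ne grows; for frequencies close to 0 or 1 the binomial becomes skewed and the agreement is expected to be worse than at x = 0.5.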

In the large population size limit, the state space spanned by vectors such as Eq. (2.6) converges to the continuous space [0, 1]^K. The density of states fI(t) on the state space will converge to a continuous density ϕ(x, t) on [0, 1]^K. The time evolution of ϕ(x, t) depends on how the infinitesimal change δX⃗ = X⃗(t + δt) − X⃗(t) is distributed. If the mean of the δX⃗ distribution is M(X⃗, t) and the covariance matrix is C(X⃗, t), the time continuous limit δt → 0+ of the process X⃗(t) is well defined. In the small, but finite, limit of δt the stochastic process obeys the equation

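$$\vec{X}(t + \delta t) = \vec{X}(t) + M(\vec{X}, t)\, \delta t + \sigma(\vec{X}, t)\, \vec{\varepsilon}\, \sqrt{\delta t} \tag{3.1}$$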

where ε⃗ is a standard normal random variable (with zero mean and unit covariance matrix) in ℝK, σ(X⃗, t) is a square root of the covariance matrix C(X⃗, t) = σσT(X⃗, t), and δt is a finite, but very small, time step.

In the diffusion approximation to the discrete Markov chain, the process is described as a time continuous stochastic process governed by Gaussian jumps of prescribed variance and mean. These processes can be denoted using the notation of stochastic differential equations:

$$dX_a = M_a(\vec{X}, t)\, dt + \sum_{b=1}^{K} \sigma_{ab}(\vec{X}, t)\, dW_b \tag{3.2}$$

where dWb is the infinitesimal element of noise given by standard Brownian motion in K-dimensions, and σ is the square root matrix of the covariance matrix C = σσT, [19]. The diffusion generator associated with Eq. (3.2) is

| (3.3) |

Thus, if ϕ(x, t = 0) is the density of allele frequencies at time 0, the time evolution of ϕ(x, t) will be governed by the forward Kolmogorov equation

| (3.4) |

Here, ρ(x, t) is the continuous limit of μj in Eq. (2.1), that describes the net influx of polymorphisms in the population per generation.

3.0.1. Modeling migration flow and random drift

The continuous limit of the Markov chain defined in Eq. (2.9), in the case of K diploid populations and in the weak migration limit, has as associated moments

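$$M_a(x) = \sum_{b=1}^{K} m_{ab}\,(x_b - x_a) \tag{3.5}$$

$$C_{ab}(x) = \delta_{ab}\, \frac{x_a (1 - x_a)}{2 N_{e,a}} \tag{3.6}$$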

with δab the Kronecker delta (δab = 1 if a = b and δab = 0 otherwise). The matrix element mab = nab/(2Na) defines the migration rate from the bth population to the ath population.
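A single stochastic trajectory of this process can be simulated with a small-time-step update of the kind used in Eq. (3.1). The sketch below assumes the standard weak-migration Wright-Fisher moments (mean change Σb mab(xb − xa) and diagonal covariance xa(1 − xa)/(2Ne,a)); the function name `simulate_frequencies`, the clipping at the boundary, and all parameter values are hypothetical.

```python
import numpy as np

def simulate_frequencies(x0, Ne, m, generations, dt=1.0, seed=0):
    """Euler-Maruyama path of allele frequencies in K populations.

    Assumed moments (standard weak-migration Wright-Fisher forms):
      mean       M_a  = sum_b m[a, b] * (x_b - x_a)
      covariance C_aa = x_a * (1 - x_a) / (2 * Ne[a]), zero off-diagonal.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    Ne = np.asarray(Ne, dtype=float)
    m = np.asarray(m, dtype=float)
    steps = int(round(generations / dt))
    for _ in range(steps):
        mean = m @ x - m.sum(axis=1) * x
        sigma = np.sqrt(x * (1.0 - x) / (2.0 * Ne))
        x = x + mean * dt + sigma * rng.standard_normal(x.size) * np.sqrt(dt)
        # Crude boundary handling: clip to [0, 1]. The boundary components
        # discussed in the text require a more careful treatment.
        x = np.clip(x, 0.0, 1.0)
    return x

# Two populations exchanging migrants symmetrically; drift is switched off
# in practice by choosing a very large effective size.
m = np.array([[0.0, 0.01], [0.01, 0.0]])
x_end = simulate_frequencies([0.2, 0.8], Ne=[1e12, 1e12], m=m, generations=500)
```

With drift suppressed, migration alone pulls the two frequencies towards their common average, which is a quick sanity check on the sign convention of the mean term.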

Thus, associated with this process one has the Kolmogorov forward equations

| (3.7) |

Eq. (3.7) describes the time evolution of the frequency spectrum density under random drift and migration events between populations, given an initial density and absorbing boundary conditions (see below). The inhomogeneous term ρ(x, t) models the total incoming/outgoing flow of SNPs per generation into the K-cube which is not due to the diffusion flow, ja = −Maϕ + ∂b(Cabϕ), at the boundary. This total flow depends on mutation events that generate de novo SNPs, inflow from higher dimensional components of the allele density (see below), inflow from migration events from lower dimensional components of the allele density, and the outflow of migration events towards higher dimensional components. If there is no positive influx of SNPs, the density converges to ϕ(x, t) → 0 as t → ∞. In order to understand the probability flow between different components of the density of alleles, we will have to study how the boundary conditions are defined precisely.

3.1. Boundary Conditions

Understanding the boundary conditions in this problem is one of the most challenging tasks. In Kimura's famous solution to the problem of pure random drift in one population, [12], he required the solutions to the diffusion equation to be finite at the boundaries x = 0 and x = 1. This boundary condition is absorbing. The points x = 0 and x = 1 describe states where SNPs reach the fixation of their ancestral or derived states.

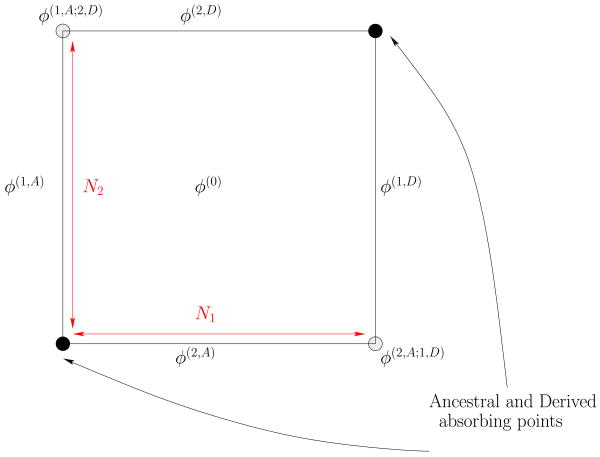

If we consider K populations, the natural generalization of Kimura's boundary conditions can be derived by studying the possible stochastic histories of single diallelic SNPs segregating in the K populations. A SNP which is initially polymorphic in all the K populations can reach the fixation of its derived or ancestral state in one population while still being polymorphic in the remaining K − 1 populations. More generally, a SNP can be polymorphic in K − α populations, while its state can be fixated in the remaining α populations. A convenient way of visualizing this is to look at the geometry of the K-cube of allele frequencies, and the different subdimensional components of its boundary (see examples Fig. 3 and Fig. 4 for the 2-cube and 3-cube). A K-cube's boundary can be decomposed as a set of cubes of lower dimensionality, from (K − 1)-cubes up to 0-cubes or points. The number of boundary components of codimension α, i.e. the number of (K − α)-cubes, contained in the boundary of the K-cube is

Figure 3.

Decomposition of the singular probability density, for two populations, on the two-dimensional bulk and the different subdimensional boundary components.

Figure 4.

Decomposition of the singular probability density, for three populations, on the three dimensional bulk and the different subdimensional boundary components.

| (3.8) |
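The counting of boundary components can be verified by direct enumeration. In the sketch below, a codimension-α component is represented as a tuple of (population, state) pairs, with state "A" or "D"; this representation and the function name `boundary_components` are illustrative assumptions. The enumeration should reproduce the standard count 2^α·C(K, α) of (K − α)-dimensional faces of a K-cube.

```python
from itertools import combinations, product
from math import comb

def boundary_components(K, alpha):
    """All codimension-alpha faces of the K-cube as tuples of (population, state)."""
    faces = []
    for pops in combinations(range(1, K + 1), alpha):
        for states in product("AD", repeat=alpha):
            faces.append(tuple(zip(pops, states)))
    return faces

# Number of faces for two and three populations, keyed by (K, alpha).
counts = {(K, a): len(boundary_components(K, a))
          for K in (2, 3) for a in range(1, K + 1)}
```

For two populations this gives four edges and four corners; for three populations, six faces, twelve edges and eight corners.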

The most important set of boundary components are the (K − 1)-cubes, because any other boundary component can be expressed as the intersection of a finite number of (K − 1)-cubes at the boundary. We identify each of the 2K codimension-one boundary components by the population where the SNPs are not polymorphic, and by the state that is fixated in this population (Derived or Ancestral). For example, the component (i, A) is defined as the set of points in the K-cube that obeys the equation xi = 0, and the component (i, D) is defined by the equation xi = 1. Therefore, any codimension α boundary component can be expressed as the intersection

$$(i_1, S_1) \cap (i_2, S_2) \cap \cdots \cap (i_\alpha, S_\alpha) = \left\{ x \in [0,1]^K : x_{i_1} = \delta_{S_1,D},\ x_{i_2} = \delta_{S_2,D},\ \ldots,\ x_{i_\alpha} = \delta_{S_\alpha,D} \right\} \tag{3.9}$$

with iα ≠ iβ when α ≠ β, δS,D = 1 for the derived state S = D, and δS,D = 0 for the ancestral state S = A.

To each boundary component of codimension α we associate a (K − α)-dimensional density of derived allele frequencies that are polymorphic only in the corresponding K − α populations, while being fixated in the other α populations. In this way, ϕ(0) denotes the bulk probability density, {ϕ(i,Si)} (with the state Si being either ancestral Si = A or derived Si = D) are the 2K codimension-one densities, {ϕ(i,Si;j,Sj)}i≠j the codimension 2 densities, etc. This decomposition is illustrated in the case of 2 and 3 populations in Fig. 3 and Fig. 4.

In this notation, we write the density of derived alleles segregating on K populations as the generalized probability function

| (3.10) |

with δ(·) the Dirac delta-function. The points (1, A) ∩ (2, A) ∩ ⋯ (K, A) and (1, D) ∩ (2, D) ∩ ⋯ (K, D) are the universal fixation boundaries, and they do not contribute to the total density of alleles in Eq. (3.10). It is useful to write the probability density ϕ(x, t) using such decomposition, because despite being a singular generalized function, each boundary component ϕ(i,S1;j,S2,…) (x, t) will be, most of the time, a regular analytic function.

The dynamics of the boundary components ϕ(i1,S1;i2,S2,…) (x, t) are governed by diffusion equations, with an inhomogeneous term, of the type

| (3.11) |

with ρ(i1,S1;i2,S2,…)(x, t) the net incoming/outgoing flow into the boundary component (i1, S1) ∩ (i2, S2) ∩ …. The ρ term can be interpreted as an interaction term between different boundary components.

More precisely, ρ(i1,S1;i2,S2;…)(x, t) consists of four terms

| (3.12) |

ρmut(x, t) is the influx of spontaneous mutations (only present in codimension K − 1), ρdrift(x, t) consists of the boundary inflow from codimension α − 1 components that have (i1, S1) ∩ (i2, S2) ∩ … ∩ (iα, Sα) as a boundary component, ρinm(x, t) represents the incoming flow due to migration events from lower dimensional boundary components, and ρoutm(x, t) is the outflow due to migration events from (i1, S1) ∩ (i2, S2) ∩ … (iα, Sα) towards higher dimensional components that have (i1, S1) ∩ (i2, S2) ∩ … (iα, Sα) as a boundary component.

We can write in a more precise way each term in ρ(i1,S1;i2,S2,…)(x, t), as follows:

- ρmut:

| (3.13) |

with δα,K−1 = 1 if α = K − 1, δα,K−1 = 0 if α ≠ K − 1, and μ(xa) the mutation density (in the classical theory, μ(xa) = δ(xa − 1/(2Ne,a))).

- ρdrift: Assuming that the first derivatives of ϕ(x, t) are finite at the boundary,

| (3.14) |

where the sum over jα is over all components of codimension α − 1 that have (i1, S1) ∩ (i2, S2) ∩ … ∩ (iα, Sα) as a boundary component. δSjα,A is 1 when Sjα = A and 0 when Sjα = D; similarly δSjα,D is 1 when Sjα = D and 0 when Sjα = A. The sum over a and b is over all populations that are not j1, j2, …, jα.

- ρinm: Here, and throughout, cα is a shorthand for the boundary component (i1, S1; i2, S2; …; iα, Sα). The term ρinm represents the total incoming flow due to migration events of SNPs that are contained in densities located at boundary components of cα. If ℬd(cα) is the set of boundary components of cα with fixed codimension d (α < d ≤ K), then ρinm can be written as the sum of contributions from all boundary components in ℬd(cα), for all codimensions d = α + 1, α + 2, …, K, and for all possible migration events from elements q in ℬd(cα) to cα:

| (3.15) |

Here, Γ(q → cα) is the set of all possible migration events from SNPs in ϕ(q) to ϕ(cα), p(e) denotes the probability of occurrence of the migration event e, and the remaining frequency term denotes the expected frequency, in the ith population, of a SNP that enters cα after the event e. We provide below a more precise description of Eq. (3.15), such as a description of the event space Γ(q → cα), the corresponding probabilities of occurrence and expected frequencies.

- ρoutm: Denotes the outflow of SNPs due to migration events to higher dimensional boundary components. In other words, ρoutm measures the rate of loss of SNPs in ϕ(cα), because of migration flow towards boundary components of codimension d < α, that have cα as a boundary component. Let I∂q,cα be a discrete function that returns 1 when cα is a boundary component of q, and zero when it is not. Thus,

| (3.16) |

To compute Eq. (3.15) and Eq. (3.16), the use of approximations is unavoidable. In principle, one could use the transition probabilities of the finite Markov chain to estimate the probabilities of different migration events and their expected allele frequencies. However, there is a simpler approximation, which is consistent with the weak migration limit in which the diffusion equation is derived.

This approximation follows from the observation that at the boundary xa = δS,D, the strength of random drift along the population a vanishes (xa(1 − xa) = 0), and hence, the infinitesimal change in xa obeys a deterministic equation:

$$dx_a = \sum_{b} m_{ab}\,(x_b - x_a)\, dt \tag{3.17}$$

Eq. (3.17) implies that a migration event from several populations b, to a target population a, can push the frequency xa of a SNP out of the boundary where it was initially fixated (xa = δS,D).

Therefore, given a K-cube, a boundary component cα (of codimension α), and a boundary component q (of codimension β > α) of cα, we say that there will exist migration flow from q to cα, if and only if

| (3.18) |

| (3.19) |

where denote the allele frequencies of SNPs which are fixated at the boundary components cα and q, are the populations at cα whose allele frequencies are polymorphic, and the frequencies xb are defined at the boundary component q (which means that xb is polymorphic as long as b > β, and is δSb,D otherwise). It is important to realize that xb can be 0 or 1 at q, and a migration event to cα can still bring alleles of the opposite state that is fixated in the target population.

In this approximation, Γ(q → cα) consists of a single element, and p(e) can be zero or one. If Eq. (3.18) and Eq. (3.19) are satisfied, the migration event in Γ(q → cα) has probability p(e) = 1, and the expected frequencies are

| (3.20) |

If Eq. (3.18) and Eq. (3.19) are not satisfied, p(e) = 0, and we say that there is no migration flow from q to cα.

3.2. Effective Mutation Densities

Given a constant spontaneous mutation rate in the species under study, of u “base substitutions per site and per generation,” and expected number of sites ν = L × u where new mutations appear in the population each generation, the total number of de novo mutant sites that appear in the population a, every generation, is 2Ne,aν. We can model this constant influx of mutations by adding a Dirac delta term

| (3.21) |

to the K diffusion equations that govern the ancestral components of codimension K − 1 ϕ(i1A)∩(i2A)∩⋯(iK−1A)(x, t). However, as we discussed above, more generally we work with an effective mutation density

| (3.22) |

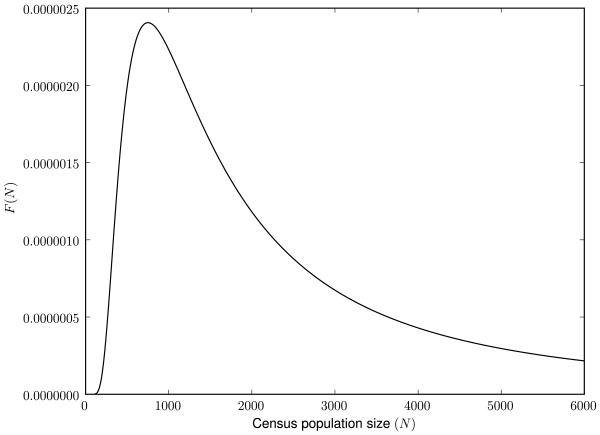

As a particular example of an effective mutation density, we consider a stochastic census population size, which is a random variable distributed as

| (3.23) |

This distribution avoids extremely small populations by an exponential tail, while large population sizes are distributed as ∼ N−2, as shown in Fig. 5. In this model, we keep constant the effective population size Ne that defines the variance of random drift in Eq. (3.6). Thus, the mutation density will be

Figure 5.

A model for a stochastic census population size, with exponential decay in the small population size limit, a quadratic decay ∼ N−2 in the large population size limit, and a population peak at N = 1000.

| (3.24) |

We can integrate Eq. (3.24) exactly, by making the change of variables y = 1/(2M), dM = −dy/(2y²):

| (3.25) |

3.3. Population splitting events

So far we have studied how the allele frequency density changes as a function of time while the number of populations K remains constant. When two populations split, the diffusion jumps to dimension K + 1, and the corresponding density will obey the time evolution defined by Eq. (3.7) for K + 1 populations, with different population sizes and migration parameters. The initial density ϕK+1(x, xK+1, t) in the K + 1 diffusion problem is determined from the density ϕK(x, t), before the populations split. Therefore, if population K + 1 was formed by Nf,a migrant founders from the ath population, then

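$$\phi_{K+1}(x, x_{K+1}, t) = \phi_K(x, t)\, \sqrt{\frac{N_{f,a}}{\pi\, x_a (1 - x_a)}}\, \exp\left( -\frac{N_{f,a}\,(x_{K+1} - x_a)^2}{x_a (1 - x_a)} \right) \tag{3.26}$$

This formula is derived by considering the binomial sampling of 2Nf,a chromosomes from population a, and using the Gaussian approximation for the binomial distribution with 2Nf,a degrees of freedom and parameter xa, which has mean xa and variance xa(1 − xa)/(2Nf,a) in frequency units. In the limit Nf,a → ∞, Eq. (3.26) simplifies to

$$\phi_{K+1}(x, x_{K+1}, t) = \phi_K(x, t)\, \delta(x_{K+1} - x_a) \tag{3.27}$$

with δ(x) the Dirac delta. Additionally, if the new population is formed by migrants from two populations merging, with a proportion f from population a and 1 − f from population b, then

$$\phi_{K+1}(x, x_{K+1}, t) = \phi_K(x, t)\, \delta\big(x_{K+1} - f x_a - (1 - f)\, x_b\big) \tag{3.28}$$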
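The finite-founder splitting rule can be sketched on a grid for a single parental population. The code assumes that, conditional on the parental frequency x, the frequency in the new population is Gaussian with mean x and variance x(1 − x)/(2Nf), as described above; the function name `split_density`, the grid, and the parental density are hypothetical.

```python
import numpy as np

def split_density(phi, x, Nf):
    """Joint density after a split founded by Nf diploid migrants.

    Assumption: the new population's frequency y is Gaussian around the
    parental frequency x with variance x * (1 - x) / (2 * Nf).
    Returns an array of shape (len(x), len(x)) indexed as [x, y].
    """
    var = x * (1.0 - x) / (2.0 * Nf)
    y = x[None, :]
    kernel = np.exp(-((y - x[:, None]) ** 2) / (2.0 * var[:, None]))
    kernel /= np.sqrt(2.0 * np.pi * var[:, None])
    return phi[:, None] * kernel

x = np.linspace(0.001, 0.999, 999)     # interior grid, avoids zero variance
phi = 6.0 * x * (1.0 - x)              # hypothetical smooth parental density
joint = split_density(phi, x, Nf=100)
dy = x[1] - x[0]
marginal = joint.sum(axis=1) * dy      # integrate out the new population
```

Away from the boundaries, integrating out the new population recovers the parental density. Close to x = 0 or x = 1 part of the Gaussian mass falls outside [0, 1], which is one reason the boundary components need separate treatment.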

In the diffusion framework, one can also deal with populations that go extinct or become completely isolated. More precisely, if we remove the ath population, the initial density in the K − 1 dimensional problem will be

$$\phi_{K-1}(\tilde{x}, t) = \int_0^1 \phi_K(x, t)\, dx_a \tag{3.29}$$

with x̃ denoting the vector x̃ = (x1, x2, …, xa−1, xa+1, … xK).

4. Solution to the diffusion equations using spectral methods

The idea behind spectral methods consists of borrowing analytical methods from the theory of Hilbert spaces to transform a partial differential equation, such as Eq. (3.7), into an ordinary differential equation that can be integrated numerically using, for instance, a Runge-Kutta method.

In general, the problems in which we are interested are mixed initial-boundary value problems of the form

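$$\frac{\partial \phi(x, t)}{\partial t} = L_{FP}(x, t)\, \phi(x, t) + \rho(x, t), \qquad x \in D,\ t \geq 0 \tag{4.1}$$

$$B(x)\, \phi(x, t) = 0, \qquad x \in \partial D,\ t > 0 \tag{4.2}$$

$$\phi(x, 0) = \phi_0(x), \qquad x \in D \tag{4.3}$$

where D = [0, 1]^K is the frequency spectrum domain with boundary ∂D, LFP(x, t) is a linear differential operator also known as the Fokker-Planck operator, ρ(x, t) is a function, B(x) is the linear boundary operator that defines the boundary condition, and ϕ0(x) is the initial density. In this paper, we are interested in the particular set of PDEs defined in Eq. (3.7), although we sometimes keep the notation of Eq. (4.1) as a shorthand.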

We assume that ϕ(x, t) is for all t an element of a Hilbert space ℋ of square integrable functions, and associated L2-product 〈 , 〉L2. Furthermore, we assume that all functions in ℋ satisfy the boundary conditions imposed by Eq. (4.2). In spectral methods we consider a complete orthogonal basis of functions for ℋ, that we denote by , which obeys

| (4.4) |

with hi a function of i that depends on the particular choice of basis functions. One then approximates ϕ(x, t) as the truncated expansion

| (4.5) |

Similarly, one approximates the PDE in Eq. (4.1) by projecting it onto the finite dimensional basis , as

| (4.6) |

By ℋΛ we denote the finite dimensional space spanned by the truncated basis, and by 𝒫Λ the corresponding projector ℋ → ℋΛ. Expressing the Fokker-Planck operator and the inhomogeneous term in this basis, we can re-write the ODE in Eq. (4.6) using just modal variables as

| (4.7) |

One can solve Eq. (4.7) by discretizing the time variable t, and using a standard numerical method to integrate ODEs. Therefore, the spectral solution to the diffusion PDE is expressed in the form of a truncated expansion, like Eq. (4.5), and has coefficients determined by the integral of Eq. (4.7).
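As an illustration of this last step, the sketch below integrates a small linear system of modal coefficients with a classical fourth-order Runge-Kutta scheme. It assumes the modal system has the form dα/dt = ωα + ρ with time-independent ω and ρ; the matrix and vector used here are toy values, not quantities from the model.

```python
import math

def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def rhs(omega, rho, alpha):
    # Right-hand side of the assumed modal system: omega * alpha + rho.
    return [w + r for w, r in zip(matvec(omega, alpha), rho)]

def rk4_step(omega, rho, alpha, dt):
    def shifted(k, scale):
        return [a + scale * ki for a, ki in zip(alpha, k)]
    k1 = rhs(omega, rho, alpha)
    k2 = rhs(omega, rho, shifted(k1, 0.5 * dt))
    k3 = rhs(omega, rho, shifted(k2, 0.5 * dt))
    k4 = rhs(omega, rho, shifted(k3, dt))
    return [a + dt / 6.0 * (p + 2.0 * q + 2.0 * r + s)
            for a, p, q, r, s in zip(alpha, k1, k2, k3, k4)]

def integrate(omega, rho, alpha0, t_end, n_steps):
    alpha = list(alpha0)
    dt = t_end / n_steps
    for _ in range(n_steps):
        alpha = rk4_step(omega, rho, alpha, dt)
    return alpha

# Toy example: two decoupled, decaying modes with no source term.
omega = [[-1.0, 0.0], [0.0, -4.0]]
rho = [0.0, 0.0]
alpha_final = integrate(omega, rho, [1.0, 1.0], t_end=1.0, n_steps=100)
print(alpha_final, [math.exp(-1.0), math.exp(-4.0)])
```

For a diagonal ω the exact solution is a pure exponential decay of each mode, which gives a simple check on the time step.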

There are many different ways to construct sequences of approximating spaces ℋΛ that converge to ℋ in the limit Λ → ∞, when the domain is the K-cube. Here, we follow other authors' preferred choice [7], and choose the basis of Chebyshev polynomials of the first kind. In the following section we introduce Chebyshev expansions and show why they are a preferred choice.

4.1. Approximation of functions by Chebyshev expansions

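Let {Ti(x)} be the basis of Chebyshev polynomials of the first kind. They are the set of eigenfunctions that solve the singular Sturm-Liouville problem

$$\frac{d}{dx}\left(\sqrt{1-x^2}\,\frac{dT_i(x)}{dx}\right) + \frac{i^2}{\sqrt{1-x^2}}\,T_i(x) = 0, \tag{4.8}$$

with i = 0, 1, …, ∞, and −1 ≤ x ≤ 1. The Ti(x) are orthogonal under the L2-product with weight function 1/√(1 − x²):

$$\int_{-1}^{1} T_i(x)\,T_j(x)\,\frac{dx}{\sqrt{1-x^2}} = \frac{\pi}{2}\,c_i\,\delta_{ij}, \tag{4.9}$$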

where c0 = 2 and ci>0 = 1. This basis of polynomials is a natural basis for the approximation of functions on a finite interval because the associated Gauss-Chebyshev quadrature formulae have an exact closed form, the evaluation of the polynomials is very efficient, and the convergence properties of the Chebyshev expansions are excellent [7].

The Chebyshev polynomials of the first kind can be evaluated by using trigonometric functions, because of the identity Ti(x) = cos(i arccos(x)). The derivatives of the basis functions can be computed by utilizing the recursion

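$$2\,T_i(x) = \frac{1}{i+1}\,\frac{dT_{i+1}(x)}{dx} - \frac{1}{i-1}\,\frac{dT_{i-1}(x)}{dx}, \qquad i \ge 2, \tag{4.10}$$

to express the derivative as

$$\frac{dT_i(x)}{dx} = 2i \sum_{\substack{j=0 \\ i+j\ \mathrm{odd}}}^{i-1} \frac{1}{c_j}\,T_j(x). \tag{4.11}$$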
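A short numerical sketch of these two ingredients follows. It evaluates T_i through the trigonometric identity and computes the first derivative from a standard closed-form sum over lower-order polynomials (with c_0 = 2 and c_j = 1 otherwise), which is assumed here and may differ in form from the expression used in the text. Function names are illustrative.

```python
import math

def cheb_t(i, x):
    # T_i(x) = cos(i * arccos(x)) for -1 <= x <= 1.
    return math.cos(i * math.acos(x))

def cheb_t_prime(i, x):
    # T_i'(x) = 2 i * sum of T_j(x) / c_j over j < i with i + j odd.
    total = 0.0
    for j in range(i - 1, -1, -2):
        c_j = 2.0 if j == 0 else 1.0
        total += cheb_t(j, x) / c_j
    return 2.0 * i * total

def finite_difference(i, x, h=1e-6):
    return (cheb_t(i, x + h) - cheb_t(i, x - h)) / (2.0 * h)

for degree in (1, 2, 3, 7):
    print(degree, cheb_t_prime(degree, 0.3), finite_difference(degree, 0.3))
```

The comparison with a centered finite difference is only a sanity check; in a spectral code the closed form is what one would tabulate.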

Similar formulae can be found for higher derivatives. The coefficients in the expansion

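$$f(x) = \sum_{i=0}^{\infty} a_i\,T_i(x) \tag{4.12}$$

can be calculated by using the orthogonality relations of the basis functions

$$a_i = \frac{2}{\pi c_i}\int_{-1}^{1} f(x)\,T_i(x)\,\frac{dx}{\sqrt{1-x^2}}. \tag{4.13}$$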

However, a direct evaluation of the continuous inner product, Eq. (4.13), can be a source of considerable problems, as in the case of the Fourier series. The classical solution lies in the introduction of a Gauss quadrature of the form

| (4.14) |

If f(x) is smooth enough, the finite sum over Q grid points in Eq. (4.14) will converge faster than O(Q⁻¹) to the exact formula [15]. As Eq. (4.14) is equivalent to a discrete Fourier cosine transform, general results on the convergence of cosine transforms apply also to this problem. One can see this relationship by considering the change of variables x = cos y:

$$a_i = \frac{2}{\pi c_i}\int_{0}^{\pi} f(\cos y)\,\cos(i y)\,dy, \tag{4.15}$$

and choosing Q equally spaced abscissas in the interval 0 ≤ y ≤ π,

| (4.16) |
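The quadrature can be sketched in a few lines. The example below assumes the standard Gauss-Chebyshev abscissas y_k = π(2k − 1)/(2Q), k = 1, …, Q, which are equally spaced in y; the exact node convention of Eq. (4.16) may differ. The function name is illustrative.

```python
import math

def cheb_coefficients(f, n_modes, n_nodes):
    """Approximate the Chebyshev coefficients of f on [-1, 1] by a
    discrete cosine sum over equally spaced angles in (0, pi)."""
    angles = [math.pi * (2 * k - 1) / (2 * n_nodes) for k in range(1, n_nodes + 1)]
    values = [f(math.cos(y)) for y in angles]
    coeffs = []
    for i in range(n_modes):
        c_i = 2.0 if i == 0 else 1.0
        total = sum(v * math.cos(i * y) for v, y in zip(values, angles))
        coeffs.append(2.0 * total / (n_nodes * c_i))
    return coeffs

# f = T_2 + 0.5 * T_1, so the coefficients should be (0, 0.5, 1, 0, ...).
coeffs = cheb_coefficients(lambda x: 2.0 * x * x - 1.0 + 0.5 * x, n_modes=5, n_nodes=16)
print(coeffs)
```

For polynomial inputs of low degree the sum is exact up to rounding, which makes such test functions convenient for validating an implementation.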

In order to study the convergence properties of the Chebyshev expansions Eq. (4.12), we exploit the rich analytical structure of the Chebyshev polynomials. By using the identity Eq. (4.8), one can re-write Eq. (4.13) as

| (4.17) |

If f(x) is C1([−1, 1]) (i.e., if its first derivative is continuous), we can integrate Eq. (4.17) by parts twice to obtain

| (4.18) |

We can repeat this process as many times as f(x) is differentiable; thus, if f(x) ∈ C2q−1([−1, 1]) then

| (4.19) |

If we use the truncation error

| (4.20) |

as a measure of convergence of the Chebyshev expansion, we may estimate its asymptotic behavior by calculating the rate of decrease of ai. But as we showed in Eq. (4.19), $|a_i| \le c(q)\, i^{-2q}$, for some constant c(q), if f(x) ∈ C2q−1([−1, 1]). Therefore, for large Λ the error decreases as a power law

| (4.21) |

and if the function is infinitely differentiable (q = ∞), the corresponding Chebyshev series expansion will converge faster than any power of 1/Λ.
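The contrast between algebraic and faster-than-algebraic decay is easy to observe numerically. The sketch below compares the Chebyshev coefficients of an infinitely differentiable function, exp(x), with those of a function that is only continuous, |x|. The quadrature nodes and the helper name are assumptions made for illustration.

```python
import math

def cheb_coeffs(f, n_modes, n_nodes=256):
    # Gauss-Chebyshev quadrature written as a discrete cosine sum.
    angles = [math.pi * (2 * k - 1) / (2 * n_nodes) for k in range(1, n_nodes + 1)]
    values = [f(math.cos(y)) for y in angles]
    out = []
    for i in range(n_modes):
        c_i = 2.0 if i == 0 else 1.0
        out.append(2.0 * sum(v * math.cos(i * y) for v, y in zip(values, angles))
                   / (n_nodes * c_i))
    return out

smooth = cheb_coeffs(math.exp, 16)   # infinitely differentiable
kinked = cheb_coeffs(abs, 16)        # continuous, with a kink at x = 0

for i in (2, 6, 10, 14):
    print(i, abs(smooth[i]), abs(kinked[i]))
```

The coefficients of exp(x) fall below rounding error within a few modes, while those of |x| decay only like the inverse square of the mode index.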

In the applications of this paper we will work with re-scaled Chebyshev polynomials. As the Allele Frequency Spectrum is defined on the interval [0, 1], or direct products of it, we re-scale the Chebyshev polynomials to obtain an orthonormal basis on [0, 1]. More precisely, the basis that we use is with x ∈ [0, 1], , , L2-product:

| (4.22) |

and orthonormality relations,

| (4.23) |

4.1.1. High-dimensional domains and spectral approximations of functional spaces

The joint site frequency spectrum of K populations can be defined as a density on [0, 1]K. A natural basis of functions on the Hilbert space , comes from the tensor product of one dimensional functions. More particularly, we consider the tensor product of Chebyshev polynomials

| (4.24) |

because . Therefore, any square integrable function F(x) under the L2-product

| (4.25) |

can be approximated as multi-dimensional Chebyshev expansion

| (4.26) |

The truncation parameters Λ1, Λ2, … can be fixed depending on the analytical properties of the set of functions that one wants to approximate and their corresponding truncation errors. There is always a trade-off between the accuracy of the approximation (the higher the Λ, the more accurate the approximation) and the speed of the algorithm (the lower the Λ, the faster the algorithm); therefore, choosing different values of Λi for different populations can yield more efficient implementations. Here, for simplicity of notation, we use a single truncation parameter Λ = Λ1 = ⋯ = ΛK.

4.2. Diffusion Operators in Modal Variables

In order to approximate the PDEs defined in Eq. (3.7) by a system of ODEs in the modal Chebyshev variables such as Eq. (4.7), we need to project the Fokker-Planck operator in the Chebyshev basis spanned by Eq. (4.24). Later on we will show how to deal with the influx of mutations specified by the Dirac deltas.

A direct projection of the Fokker-Planck operator onto the Chebyshev basis spanned by Eq. (4.24) would require storing the coefficients in a huge matrix with $\Lambda^{2K}$ matrix elements. Fortunately, the Fokker-Planck operator in our problem is very simple, and its non-trivial information can be stored in just four sparse Λ × Λ matrices. First, we need the random drift matrix

| (4.27) |

and then, the three matrices needed to reconstruct the migration term

| (4.28) |

| (4.29) |

| (4.30) |

The matrix elements in Eqs. (4.27), (4.28), (4.29) and (4.30) can be quickly determined by means of the Gauss-Chebyshev quadrature defined in Eq. (4.14). Due to the properties of the Chebyshev polynomials many matrix elements vanish. More particularly, Dij and Jij are upper triangular matrices (i.e., Dij = Jij = 0 if i > j), Hij = 0 if i ≥ j, and Gij = 0 if i > j + 1 or i < j − 1. Thus, the total number of non-trivial matrix elements that we need to compute, for a given Λ, is only O(Λ²). This is much smaller than the default number of matrix elements (i.e., $\Lambda^{2K}$).

Finally, the $\Lambda^K \times \Lambda^K$ matrix elements of the corresponding ω matrix in Eq. (4.7) can be easily recovered from the tensor product structure of the $\Lambda^K$-dimensional vector space that defines the Chebyshev expansion (as in Eq. (4.26)). Thus,

| (4.31) |

with δij = 1, if i = j, and δij = 0 if i ≠ j.
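The practical benefit of the tensor product structure is that a term acting on a single population never has to be stored as a full matrix. The sketch below, for K = 2 and toy matrices that are not the operators of the model, applies a small matrix to the first index of a coefficient array and checks the result against the explicit Kronecker product with the identity. All names are illustrative.

```python
def apply_to_first_index(mat, coeffs):
    """Action of (mat ⊗ I) on coeffs[i][j]: only the first index is contracted."""
    n_rows, n_cols = len(coeffs), len(coeffs[0])
    return [[sum(mat[i][k] * coeffs[k][j] for k in range(n_rows))
             for j in range(n_cols)] for i in range(len(mat))]

def kron_with_identity(mat, size):
    """Explicit (mat ⊗ I_size), using the flattened index i * size + j."""
    n = len(mat)
    big = [[0.0] * (n * size) for _ in range(n * size)]
    for i in range(n):
        for k in range(n):
            for j in range(size):
                big[i * size + j][k * size + j] = mat[i][k]
    return big

def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

d = [[1.0, 2.0], [0.0, 3.0]]                 # toy upper triangular matrix
alpha = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # toy coefficients alpha[i][j]

fast = apply_to_first_index(d, alpha)
slow = matvec(kron_with_identity(d, 3), [a for row in alpha for a in row])
print(fast)
print(slow)
```

The first route touches only the small matrix, whereas the second builds a matrix whose size grows as the square of the total number of modes.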

4.3. Influx of Mutations

The inhomogeneous terms in Eq. (3.7) that model the influx of mutations are given by effective mutation densities. As we show in the appendices, a model of mutations given by an exponential distribution will give the same results, up to an exponentially small deviation, as a standard model with a Dirac delta. The motivation for using smooth effective mutation densities is that they are better approximated by truncated Chebyshev expansions. As we briefly explained in the review of Chebyshev polynomials and their truncated expansions, the convergence of a truncated expansion depends strongly on the analytical properties of the function to be approximated. As Dirac deltas are not smooth functions, their truncated Chebyshev expansions have poor convergence properties. This is related to the problem of Gibbs phenomena, and we will give a more detailed account of its origin below (see Sources of error and limits of numerical methods).

In this paper, we only consider a positive influx of mutations in boundary components of dimension one. In order to approximate the behavior under a mutation term given by a Dirac delta, an effective mutation density μ(x) has to satisfy the following:

The truncation error is bounded below the established parameter, ε, for the control of error; i.e., ‖μ(x) − 𝒫Λμ(x)‖L2 < ε, with 𝒫Λμ(x) the truncated Chebyshev expansion of μ(x).

The expected frequency of the mass mutation-function is $\int_0^1 x\,\mu(x)\,dx = 1/(2N_e)$.

The mutation-function is nearly zero for relatively large frequencies (e.g., x > 0.05), and it is as peaked as possible near x = 1/(2Ne).

While the first and third qualitative requirements are straightforward, the second numerical condition is not. One can interpret this requirement as equivalent to fixing the neutral fixation rate to be u, because the probability that an allele at frequency x = p reaches fixation at x = 1 is p. Thus, the average number of new mutants that reach fixation per generation is $2N_e u \times \int_0^1 x\,\mu(x)\,dx = u$. This constraint can also be derived by studying the properties of the equilibrium density associated with this stochastic process. At equilibrium, the density ϕe(x) of derived alleles obeys

| (4.32) |

with ψ(x) = x(1 − x)ϕe(x). Therefore, the expected frequency of the mass mutation-function can be computed as

| (4.33) |

Using the identity one can rewrite Eq. (4.33) as

| (4.34) |

On the other hand, the probability flux associated with the equilibrium density of alleles at the boundary x = 1 is . We use this to write the expected frequency of the mutation density as:

| (4.35) |

In neutral evolution the probability flux at the boundary x = 1 equals the fixation rate, which satisfies j(1) = u. Therefore, Eq. (4.35) has to satisfy

| (4.36) |

which is what we wanted to show.
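As a worked illustration of the constraint, consider a density of the form μ(x) = c exp(−κx) on [0, 1]. The sketch below assumes that the condition reads ∫ x μ(x) dx = 1/(2Ne), as suggested by the fixation-rate argument, and solves it for c in closed form; whether this constant coincides with the normalization adopted elsewhere in the text is not verified here. The function names are hypothetical.

```python
import math

def exponential_density_constant(kappa, n_e):
    # Closed form: the integral of x * exp(-kappa x) over [0, 1]
    # equals (1 - (1 + kappa) exp(-kappa)) / kappa^2.
    first_moment = (1.0 - (1.0 + kappa) * math.exp(-kappa)) / kappa ** 2
    return 1.0 / (2.0 * n_e * first_moment)

def simpson(f, a, b, n_intervals):
    # Composite Simpson rule; n_intervals must be even.
    h = (b - a) / n_intervals
    total = f(a) + f(b)
    for k in range(1, n_intervals):
        total += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return total * h / 3.0

kappa, n_e = 40.0, 10000
c = exponential_density_constant(kappa, n_e)
check = simpson(lambda x: x * c * math.exp(-kappa * x), 0.0, 1.0, 20000)
print(c, check * 2 * n_e)
```

For large κ the constant approaches κ²/(2Ne), since the boundary term exp(−κ) becomes negligible.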

Numerical experiments show that for a large class of functions μ(x), and in the frequency range x > x*, the associated solutions to the different diffusion problems are identical (up to a very small deviation) to the standard model with a Dirac delta. x* is a very small frequency that depends on the choice of μ(x), and generally can be made arbitrarily small. It is in the region of the frequency space with 0 ≤ x ≤ x*, where the behavior of the different diffusion problems can deviate most.

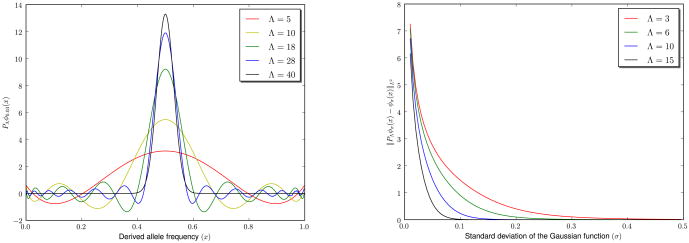

The truncation error in the Chebyshev expansion depends on the smoothness of the function, and the choice of truncation parameter (see Fig. 6 for an example). For the effective mutation density μ(x), we use the exponential function

Figure 6.

On the left, we show the plot of five different truncated Chebyshev expansions for a Gaussian peaked at x = 0.5 and σ = 0.03. On the right, we show the truncation error of different Chebyshev expansions (with Λ = 3, 6, 10 and 15) of a family of Gaussian functions peaked at x = 0.5 and parametrized by the standard deviation 0.01 ≤ σ ≤ 0.5.

| (4.37) |

where the values for κ(Λ, ε) ≫ 1 are determined by saturating the bound on error: ‖μ(x) −

Λμ(x)‖L2 < ε.

Λμ(x)‖L2 < ε.
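The procedure of saturating the error bound can be sketched as a scan over candidate values of κ. The example below makes several assumptions that are not taken from the text: the error is measured relative to the norm of the function, in the Chebyshev-weighted L2 norm on [0, 1], and a long reference expansion (120 modes) stands in for the exact function. All names and candidate values are illustrative.

```python
import math

def cheb_coeffs_unit_interval(f, n_modes, n_nodes):
    angles = [math.pi * (2 * k - 1) / (2 * n_nodes) for k in range(1, n_nodes + 1)]
    # Chebyshev nodes mapped from [-1, 1] to [0, 1].
    values = [f(0.5 * (1.0 + math.cos(y))) for y in angles]
    out = []
    for i in range(n_modes):
        c_i = 2.0 if i == 0 else 1.0
        out.append(2.0 * sum(v * math.cos(i * y) for v, y in zip(values, angles))
                   / (n_nodes * c_i))
    return out

def relative_truncation_error(kappa, n_keep, n_modes=120, n_nodes=240):
    coeffs = cheb_coeffs_unit_interval(lambda x: math.exp(-kappa * x), n_modes, n_nodes)
    weights = [2.0 if i == 0 else 1.0 for i in range(n_modes)]
    total = sum(w * a * a for w, a in zip(weights, coeffs))
    tail = sum(w * a * a for w, a in zip(weights[n_keep:], coeffs[n_keep:]))
    return math.sqrt(tail / total)

def largest_kappa(n_keep, eps, candidates):
    # Largest candidate whose truncated expansion stays within the tolerance.
    admissible = [k for k in candidates if relative_truncation_error(k, n_keep) < eps]
    return max(admissible) if admissible else None

print(relative_truncation_error(10.0, 20), relative_truncation_error(40.0, 20))
print(largest_kappa(20, 1e-3, [10.0, 20.0, 40.0, 80.0]))
```

The error grows with κ at fixed Λ and falls with Λ at fixed κ, so the admissible κ is set by the truncation parameter and the tolerance together.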

4.3.1. Comparison of different mutation models at equilibrium

We derive in the Appendix A the associated equilibrium distributions of derived alleles. For a model with a mutation density given by a Dirac delta, one finds the equilibrium density

| (4.38) |

with θ(y) the Heaviside step function (θ(y) = 0 for y < 0, θ(y) = 1/2 for y = 0, and θ(y) = 1 for y > 0). Which in the region x > 1/(2Ne) simplifies to

| (4.39) |

In the case of μ2(x) = c exp(−κx), the corresponding equilibrium density is

| (4.40) |

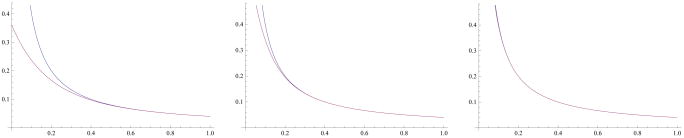

Therefore, a pairwise comparison of both equilibrium densities shows that the deviation between the two models when x > x* = κ⁻¹ is exponentially small once equilibrium is reached (see Fig. 7). We can show that the same is true away from equilibrium.

Figure 7.

Three comparisons of the equilibrium densities associated with the exponential mutation density (blue) for several values of κ vs. the equilibrium density associated with the Dirac delta mutational model (red). For illustrative purposes, the population size used was N = 10,000 and the spontaneous mutation rate is u = 10⁻⁶. On the left the equilibrium density associated with the exponential distribution with κ = 10 is shown, in the middle κ = 20, and on the right κ = 40. For a truncation parameter Λ = 20, one can choose mutation densities with κ up to 43, while keeping the truncation error below sensible limits.

4.3.2. Non-equilibrium dynamics with effective mutation densities

Here, we show that the non-equilibrium dynamics of a diffusion system under an exponentially distributed mutation influx is the same (up to an exponentially small deviation) as that of a system where mutations enter the population through the standard Dirac delta δ(x − 1/(2Ne)), as long as the allele frequencies are larger than a certain minimum frequency x*. Below x* the dynamics will be sensitive to differences in the mutation densities.

Let φ(x) be an arbitrary initial density of alleles. Let ϕ1(x, t) be the solution to the diffusion equations under pure random drift and a mutation influx given by δ(x − 1/(2Ne)). ϕ2(x, t) is the solution of the diffusion equations under pure random drift and mutation influx given by the exponential effective mutation density Eq. (4.37). In Appendix B, we prove the following identity in the large t limit

| (4.41) |

with ϕ1(x, 0) = ϕ2(x, 0) = φ, and κ ≤ 2Ne. If we normalize Eq. (4.41) by

the normalized deviation of ϕ2(x, t) from ϕ1(x, t) is, for large t,

| (4.42) |

We can also show, by applying the Minkowski inequality to Eq. (B.10) in Appendix B, that

| (4.43) |

for all t > 0. This means that the deviation is bounded by O(κ⁻¹) for all t, and therefore the non-equilibrium dynamics of ϕ1(x, t) and ϕ2(x, t) are identical in the large κ limit.

As |ϕ1(x, 0) − ϕ2(x, 0)| = 0 at time zero and the deviation of ϕ2(x, t) from ϕ1(x, t) attains equilibrium in the large t limit, we can study the frequency dependence of such deviation by looking at the equilibrium

| (4.44) |

Here, the $O(e^{-\kappa})$ term exactly cancels the singularity at x = 1 and the deviation decays exponentially as a function of the frequency. This shows that for frequencies x > x* = κ⁻¹ ≥ 1/(2Ne) the dynamics of a model with mutation influx given by a Dirac delta is the same, up to an exponentially small deviation, as the non-equilibrium dynamics of a model with exponential mutation density.

4.4. Branching-off of populations

Modeling a population splitting event also involves the use of Dirac deltas, as in Eq. (3.27), or peaked functions such as Eq. (3.26), whose truncated Chebyshev expansions may present bad convergence properties. These Gibbs-like phenomena can be dealt with in a similar way as we did with the mutation term of Eq. (3.7).

We implemented two different solutions to this problem and both solutions yielded similar results. First, we constructed a smoothed approximation of the Dirac delta by using Gaussian functions:

| (4.45) |

with

| (4.46) |

and σ(xa) as a standard deviation which is chosen as small as possible while preserving the bound on error, ‖δ̃(xa − x) − 𝒫Λδ̃(xa − x)‖L2[0≤x≤1] < ε, for any value of xa ∈ [0, 1]. In order to map the δ̃-function in Eq. (4.45) to a truncated Chebyshev expansion,

| (4.47) |

one has to perform a Gauss-Chebyshev quadrature in 2 dimensions, 0 ≤ xa ≤ 1, 0 ≤ xK + 1 ≤ 1:

| (4.48) |

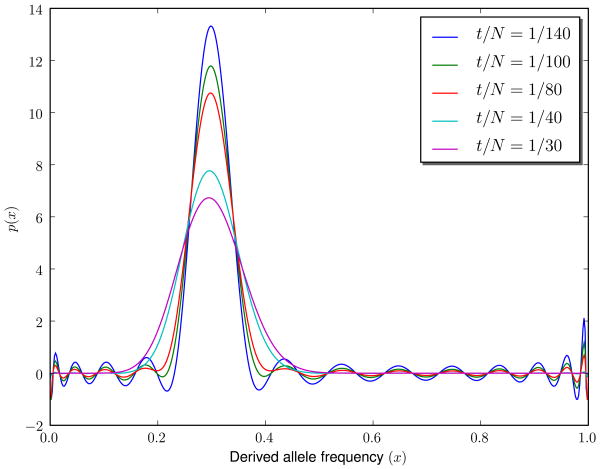

The second approach exploits the analytical behavior of diffusion under pure random drift (i.e., with no migration). By Kimura's solution to the diffusion PDE in terms of the Gegenbauer polynomials (see [12]), we know that the time evolution of the 1-d density is

| (4.49) |

with r = 1 − 2p, z = 1 − 2x and ϕ(x, 0) = δ(x − p). Thus, in the exact solution to the diffusion equation, the time evolution of the coefficients of degree i in the Gegenbauer expansion is described by the term exp(−(i + 1)(i + 2)t/4N). This means that diffusion smooths out the Dirac delta at initial time. Fig. 8 represents the evolution of the density at different times.

Figure 8.

Diffusion under pure random drift acts by smoothing out the initial density at t = 0. Here we show numerical solutions to the diffusion equations with Λ = 28, at 5 different times, with initial condition ϕ(x, t = 0) = δ(x − 0.3). As time passes, the numerical solution approaches the exact solution more quickly, and the Gibbs phenomena disappear.
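The smoothing effect can be quantified directly from the decay factor exp(−(i + 1)(i + 2)t/4N) quoted above. The sketch below tabulates the damping of each mode and the time needed to suppress a given mode by a chosen factor; the function names and the numerical values are illustrative.

```python
import math

def mode_damping(i, t, n_pop):
    """Factor by which the degree-i mode has decayed after t generations."""
    return math.exp(-(i + 1) * (i + 2) * t / (4.0 * n_pop))

def time_to_damp(i, factor, n_pop):
    """Generations needed for the degree-i mode to shrink to `factor` of its size."""
    return -4.0 * n_pop * math.log(factor) / ((i + 1) * (i + 2))

n_pop = 10000
for i in (0, 5, 10, 20):
    print(i, mode_damping(i, 100.0, n_pop), time_to_damp(i, 1e-3, n_pop))
```

High-degree modes, which carry the sharp features of the initial delta, are damped first, and the required time scales linearly with the population size.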

Thus, we can use diffusion under pure random drift to smooth out the density introduced after the population splitting event. Let ϕK (x, t) be the density before the splitting and let a be the population from which population K + 1 is founded. We initially consider the density

| (4.50) |

The associated Chebyshev expansion 𝒫ΛϕK+1(x, xK+1, t) will present Gibbs phenomena. However, by diffusing for a short time τ under pure random drift

| (4.51) |

(with Sa = SK+1 = W, Sb = V for K + 1 ≠ b ≠ a, and V ≫ W), ϕK+1(x, xK+1, τ) becomes tractable under Chebyshev expansions. In other words, by choosing τ such that the error bound is satisfied

we obtain a smooth density after the population splitting event which can follow the regular diffusion with migration prescribed in the problem, and approximate accurately the branching-off event. In some limits this approximation can fail, though we leave the corresponding analysis for the next section.

Here, we do not consider the numerical solution to the problem of splitting with admixture, although we are confident that it should be possible to solve along similar arguments.

4.5. Sources of error and limits of numerical methods

There are two major sources of error in these numerical methods. First, the solution of the diffusion equation is itself a time-continuous approximation to the time evolution of a probability density evolving under a discrete Markov chain. Hence, whenever the diffusion approximation fails, its numerical implementation will also fail. Secondly, a numerical solution by means of spectral expansions involves an approximation of the infinite dimensional space of functions on a domain by a finite dimensional space generated by bases of orthonormal functions under certain L2-product. As we show below, under a broad set of conditions the numerical solution will converge accurately to the exact solution; otherwise, the numerical solution can fail to approximate the exact solution. A third source of error appears because one has to discretize time in order to integrate the high-dimensional ODE that approximates the PDE. Fortunately, this source of error can be ignored because the diffusion generators yield a stable time evolution.

We summarize below the main conditions that have to be satisfied in order to obtain high-quality numerical solutions to the PDEs studied in this work.

4.5.1. Limits of diffusion equations

In the diffusion approximation to a Markov chain, the transition probability is approximated by a Gaussian distribution [5]. Here, we review the derivation of the diffusion equation as the continuous limit of a Markov chain, in order to emphasize the assumptions made and determine the limits of this approximation.

Given a Markov process defined by a discrete state space 𝒮, transition matrices p(I∣J), initial value K ∈ 𝒮 and discrete time τ = 0, 1, …, the probability that the state will be at I at time τ is f(I∣K, τ), where f(I∣K, τ) obeys the recurrence relation

| (4.52) |

In the diffusion approximation one considers a sequence of discrete state spaces {𝒮λ}λ∈ℤ+ such that in the limit λ → ∞ the state space 𝒮∞ converges to a smooth manifold (in practical applications, a compact domain D ⊂ ℝK).

In this paper, we take 𝒮λ to be [0, λ]K, and 𝒮∞ ∼ [0, 1]K. Therefore, the state variables can be re-scaled as Ka/λ = xa, with a = 1, …, K and Ka ∈ [0, λ]. Similarly, we introduce the time variable t = τ/λ. In the large λ limit, the transition probability for the change of the state from time τ/λ to time (τ + 1)/λ is governed by a distribution with moments

| (4.53) |

| (4.54) |

| (4.55) |

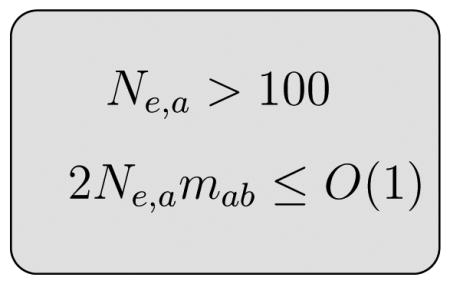

In this continuous limit, the equation that describes the time evolution of the Markov chain in Eq. (4.52) can be written as a forward Kolmogorov equation if we neglect terms of order O(1/λ²). However, if Ma(x) is proportional to λ, the O(1/λ²) terms in Eq. (4.53) cannot be neglected and the diffusion approximation will not be valid. As in this paper we take λ = 2Ne, and Ma(x) proportional to the migration rates mab, if the migration rates obey 2Ne,amab ≤ O(1) the diffusion approximation will be valid. Indeed, computer experiments show that the numerical solutions become unstable and yield incorrect results if this bound is violated. This limit precisely defines when two populations can be considered the same [9]. Therefore, in cases when 2Ne,amab ≫ O(1), we can consider populations a and b as two parts of the same population. Another assumption in the diffusion approximation is that a binomial distribution with 2Ne,a degrees of freedom can be approximated by a Gaussian distribution. This will be a valid approximation as long as Ne,a is large enough. Numerical experiments show that the approximation is accurate if Ne,a > 100; otherwise, effects due to the finiteness of the Markov chain cannot be neglected and the approximation will fail.
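The order counting behind this argument can be illustrated with a single generation of neutral Wright-Fisher sampling. The sketch below assumes pure binomial sampling of 2Ne chromosomes with no migration or mutation, and computes the first three central moments of the change in frequency exactly; it is not a reconstruction of the moment equations of the text. Function names are illustrative.

```python
import math

def binomial_pmf(n, k, p):
    log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
               + k * math.log(p) + (n - k) * math.log(1.0 - p))
    return math.exp(log_pmf)

def frequency_change_moments(n_e, x):
    """Exact first, second and third moments of the one-generation
    change in frequency when 2 * n_e chromosomes are resampled."""
    n = 2 * n_e
    moments = [0.0, 0.0, 0.0]
    for k in range(n + 1):
        weight = binomial_pmf(n, k, x)
        dx = k / n - x
        for order in range(3):
            moments[order] += weight * dx ** (order + 1)
    return moments

for n_e in (50, 100, 1000):
    mean, variance, third = frequency_change_moments(n_e, 0.3)
    print(n_e, mean, variance, third)
```

The variance scales as 1/(2Ne) while the third moment scales as 1/(2Ne)², which is the kind of term that the diffusion limit discards.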


4.5.2. Limits of spectral expansions

Spectral methods, as with any numerical scheme for solving PDEs, require several assumptions about the behavior of the solution of the PDE. The most important one is that one can approximate the solution as a series of smooth basis functions,

| (4.56) |

In other words, the projection of the solution 𝒫Λϕ(x, t) is assumed to approximate ϕ(x, t) well in some appropriate norm for sufficiently large Λ. As one has to choose finite values for Λ, Eq. (4.56) will sometimes fail to approximate correctly the solution of the PDE.

In the applications of this paper, the basis of functions that we use consists of the Chebyshev polynomials of the first kind. Below we provide bound estimates for the truncation error ‖𝒫Λϕ(x, t) − ϕ(x, t)‖L2[−1,1]K, to understand the quality of the approximate solutions for different values of Λ (see also [7, 1] for different choices of basis functions).

More precisely, as the L2 inner product and norm in the Chebyshev problem are:

| (4.57) |

and

| (4.58) |

the terms in the expansion Eq. (4.56) can be computed by performing inner products

| (4.59) |

with c0 = 2 and cj = 1 (j > 0). A consequence of the orthogonality of the basis functions is that the squared truncation error admits a simple formulation in terms of the coefficients in the expansion:

| (4.60) |

Thus, the truncation error depends only on the decay of the higher modes |αi1, i2, …, iK| in the expansion. On the other hand, the decay of these higher modes depends on the analytical properties of ϕ(x, t) itself. For instance, if $\phi(x,t) \in C^{2q_1-1,\,2q_2-1,\,\ldots,\,2q_K-1}([-1,1]^K)$, i.e. if

| (4.61) |

we can integrate by parts Eq. (4.59), as we did in Eq. (4.18), to write the decay of each mode as

| (4.62) |

Eq. (4.62) implies that the truncation error is directly related to the smoothness of ϕ(x, t): it follows that we can bound the truncation error as a function of Λ:

| (4.63) |

Another convenient measure of smoothness is the Sobolev norm:

| (4.64) |

in terms of the Sobolev norm, the truncation error is bounded as

| (4.65) |

A corollary of Eq. (4.65) is that if ϕ(x, t) is smooth, 𝒫Λϕ(x, t) converges to ϕ(x, t) more rapidly than any finite power of Λ⁻¹. This is indeed the basic property that has given spectral methods their name.

In the absence of influx of polymorphisms in the populations, the time evolution of the density obeys pure diffusion, and therefore |αi1,i2,…iK(t)| → 0 when t → ∞, as follows from Eq. (4.49). This means that diffusion acts as a smoothing operator on the initial density. Empirically, we find that in the presence of influx of polymorphisms the density can also be approximated by spectral expansions and the truncation error remains low.

After two populations split and the K-dimensional diffusion becomes a K + 1 dimensional process, the K + 1 dimensional density becomes a distribution concentrated in the linear subspace of [−1, 1]K+1 defined by xa = xa+1 (with a and a + 1 labeling the two daughter populations that just split). Such density has an infinite Sobolev norm and cannot be represented as a finite sum of polynomials. Fortunately, the diffusion generator acts on the density by smoothing it out and by bringing the density to a density with finite Sobolev norm. The main variables involved in this process are: the time difference between the current splitting event and the next one, TA+1 − TA, and the effective population sizes Ne,a and Ne,a+1 of the daughter populations. Therefore, depending on the choice of the truncation parameter Λ, a minimum diffusion time tm(Ne,a, Ne,a+1, Λ) will be necessary to bring the truncation error within desired limits ‖𝒫Λϕ(x, tm) − ϕ(x, tm)‖L2 ≤ ε. Here, ε is the control parameter on numerical error. Therefore, the bigger the largest effective population size of the two daughter populations, the bigger will be such minimum diffusion time. If the time difference between the current splitting event and the next one is bigger than

| (4.66) |

(where C(Λ) is a function that can be computed numerically), the resulting numerical error will stay below the desired limits. As our model aims to reproduce the real SNP Allele Frequency Spectrum density there should exist low error approximations of such density (that we denote as γ̂(x)) in terms of polynomial expansions. Otherwise, the methods here presented will fail to solve the problem. This can only happen if γ̂(x) is so rugged, i.e. the corresponding Sobolev norm is so high, that the largest finite choice for Λ that we can implement in our computer-code is not large enough to approximate accurately γ̂(x):

| (4.67) |

If Eq. (4.67) holds, it is likely that two or more populations are so closely related that we can treat them as a single population. If we reduce the dimensionality of the problem in this way (by only incorporating differentiated populations), the correlations will disappear and the Sobolev norm of γ̂′(x) will be such that we will be able to find a sensible parameter Λ to approximate γ̂′(x) as a truncated Chebyshev expansion.

5. Conclusion

In this paper we have introduced a forward diffusion model of the joint allele frequency spectra, and a numerical method to solve the associated PDEs. Our approach is inspired by recent work in which similar models were proposed [21, 4, 8]. Analogously, our methods are quite general and can accommodate selection coefficients and time dependent effective population sizes.

The major novelties of the model here presented with respect to previous work are:

The introduction of spectral methods/finite elements in the context of forward diffusion equations and infinite sites models. Traditionally, these techniques yield better results than finite differences schemes when the dimension of the domain is high (i.e., when the final number of populations is high), and the solutions are smooth. A comparison of our implementation using spectral methods, and previous implementations using finite differences [8], will be the matter of future work.

A set of boundary conditions that deals with the possibility that some polymorphisms reach fixation in some populations while remaining polymorphic in other populations. When the differences in effective population sizes between different populations are large, this phenomenon can become very important. Here, we have introduced a solution to address this possible scenario. Previous work imposed zero flux at the boundaries [8], and hence avoided the fixation of polymorphisms in some populations while remaining polymorphic in the rest.

The introduction of effective mutation densities, which generalize previous models for the influx of mutations [4]. We have emphasized how different ways to inject mutations at very low frequencies converge to the same solution for larger frequencies.

The non-equilibrium theory of Allele Frequency Spectra is of primary importance to analyze population genomics data. Although it does not make use of information about haplotype structure or linkage disequilibrium, the analysis of AFS allows the study of demographic history and the inference of natural selection. In this work, we have extended the diffusion theory of the multi-population AFS to accommodate spectral methods, a general framework for the influx of mutations, and non-trivial boundary interactions.

Acknowledgments

We thank Rasmus Nielsen, Anirvan Sengupta, Simon Gravel and Vitor Sousa for helpful conversations. This work was partially supported by the National Institutes of Health (grant number R00HG004515-02 to K. C. and grant number R01GM078204 to J. H.).

Appendix A. Comparison of mutational models at equilibrium

In this appendix we compute the equilibrium densities associated with Wright-Fisher processes with mutation. Two types of mutation processes are considered, both modeled by a mutation density. The first mutation density is a Dirac delta, while the second one is an exponential distribution.

As the diffusion equation that describes the time evolution of the density of alleles for diallelic SNPs is

| (A.1) |

the equilibrium density ϕe(x) satisfies ∂ϕe(x)/∂t = 0. By using instead the function ψ(x) = x(1 − x)ϕe(x), the associated second-order ordinary differential equation becomes

| (A.2) |

As Eq. (A.2) is only defined for x > 0, we can use Laplace transforms to solve the equation. Let

$$\tilde{\mu}(s) = \int_0^{\infty} e^{-sx}\,\mu(x)\,dx \tag{A.3}$$

be the Laplace transform associated with the mutation density μ(x), and

$$\int_0^{\infty} e^{-sx}\,\psi''(x)\,dx = s^{2}\,\tilde{\psi}(s) - s\,\psi(0) - \psi'(0) \tag{A.4}$$

the Laplace transform associated with ψ″(x), with ψ(0) and ψ′(0) integration constants. Therefore, in the s domain, ψ̃(s) is

| (A.5) |

and by performing the inverse Laplace transform we obtain the solution to the equilibrium density

| (A.6) |
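As a sketch of the Laplace-transform step, write the right-hand side of Eq. (A.2) generically as −g(x). Here g is a placeholder introduced only for this note (it is not the paper's notation) and stands for the mutation source term together with whatever constant prefactor Eq. (A.2) carries. Under that assumption, the transform and its inversion read:

```latex
\begin{aligned}
\psi''(x) &= -g(x), \\
s^{2}\,\tilde{\psi}(s) - s\,\psi(0) - \psi'(0) &= -\tilde{g}(s), \\
\tilde{\psi}(s) &= \frac{\psi(0)}{s} + \frac{\psi'(0)}{s^{2}} - \frac{\tilde{g}(s)}{s^{2}}, \\
\psi(x) &= \psi(0) + \psi'(0)\,x - \int_{0}^{x} (x - y)\,g(y)\,dy .
\end{aligned}
```

The last line follows from the convolution theorem, since the inverse transform of 1/s² is x. For a point source g(x) = g₀ δ(x − x₀), the integral reduces to g₀ (x − x₀) θ(x − x₀), which is how a step function enters the solution.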

We fix the integration constants, ψ(0) and ψ′(0), by requiring ϕe(x) to be finite at x = 1, and the probability flow at x = 1 to be equal to u,

| (A.7) |

As an example, we can evaluate Eq. (A.6) exactly for μ1(x) = δ(x − 1/(2Ne)) and μ2(x) = c exp(−κx). For the Dirac delta, the Laplace transform is

$$\tilde{\mu}_1(s) = e^{-s/(2N_e)} \tag{A.8}$$

If we compute the corresponding inverse Laplace transform in Eq. (A.6), and fix the integration constants as explained above, we find the equilibrium density

| (A.9) |

with θ(y) the Heaviside step function (θ(y) = 0 for y < 0, θ(y) = 1/2 for y = 0, and θ(y) = 1 for y > 0). If x > 1/(2Ne), Eq. (A.9) simplifies to

| (A.10) |

In the case of μ2(x) = c exp(−κx), the Laplace transform is

$$\tilde{\mu}_2(s) = \frac{c}{s + \kappa} \tag{A.11}$$

and the corresponding equilibrium density, after integrating Eq. (A.6), is

| (A.12) |

which in the large κ limit, and for x ≫ 1/κ, converges exponentially quickly to

| (A.13) |

In the limit x → 0, ϕe(x) is finite only iff , which is the normalization choice made in Eq. (4.37), and the only one satisfying

| (A.14) |

This shows how a mutation model defined by a certain class of smooth mutation densities reaches the same equilibrium density, up to a small deviation, as the standard model with a Dirac delta.

Appendix B. Comparison of mutational models at non-equilibrium

In this appendix we compare the non-equilibrium dynamics of models with a mutation influx described by exponential distributions, with models that consider a standard Dirac delta.

More specifically, we prove that if ϕ1(x, t) is the solution to an infinite sites model PDE, with absorbing boundaries,

| (B.1) |

and ϕ2(x, t) is the solution to the same model, but with an exponential mutation density

| (B.2) |

then, the deviation of ϕ2(x, t) with respect to ϕ1(x, t), as a function of time and for any initial condition ϕ2(x, t = 0) = ϕ1(x, t = 0) = φ(x), is, in the large t limit,

| (B.3) |

Here, | · | is the absolute value, and O(e^{−κ}) are terms that decay exponentially as a function of κ, which can be neglected in the large κ limit.

As the total number of SNPs that are polymorphic in one population depends on the population size and the mutation rate, it is convenient to normalize the deviation Eq. (B.3) by . In this normalization we have

| (B.4) |

To prove Eq. (B.3), we first describe the solutions to Eq. (B.1) and Eq. (B.2). Both equations consist of a homogeneous term and an inhomogeneous contribution given by the mutation density. As they are linear equations, the solution to the PDE is the sum of a homogeneous and an inhomogeneous term

| (B.5) |

satisfying

| (B.6) |

Hence, the only time-independent term that solves Eq. (B.6) is the equilibrium density Eq. (A.9), and obeys a standard diffusion equation with no mutation density, and with initial condition . If denotes the Fokker-Planck operator acting on ,

we can write the solution to Eq. (B.6) in the following compact form

| (B.7) |

Here, exp (tℒ) is the time-dependent action of the diffusion operator on the initial density while preserving the absorbing boundary conditions. This operator can be diagonalized in the basis of Gegenbauer polynomials on L²([0, 1]); see [12]. The corresponding eigenvalues of exp (tℒ) are exp(−(i + 1)(i + 2)t/(4Ne)), with i = 0, 1, 2, ….
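The eigenvalue statement can be checked with exact rational arithmetic. The sketch below rests on two assumptions that this appendix does not spell out: that the drift operator has the standard form ℒf = (1/(4Ne)) d²/dx²[x(1 − x)f], and that the relevant Gegenbauer polynomials are C_i^{(3/2)}(1 − 2x). All function names are hypothetical helpers written for this illustration.

```python
from fractions import Fraction

# Polynomials are coefficient lists in x, lowest degree first.

def poly_mul(p, q):
    """Product of two polynomials."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def poly_add(p, q):
    """Sum of two polynomials, padding the shorter one with zeros."""
    n = max(len(p), len(q))
    p = p + [Fraction(0)] * (n - len(p))
    q = q + [Fraction(0)] * (n - len(q))
    return [a + b for a, b in zip(p, q)]

def poly_scale(p, c):
    """Multiply a polynomial by a constant."""
    return [c * a for a in p]

def poly_deriv(p):
    """First derivative of a polynomial."""
    return [k * a for k, a in enumerate(p)][1:] or [Fraction(0)]

def gegenbauer_3_2(n_max):
    """C_n^{(3/2)}(z) with z = 1 - 2x, for n = 0..n_max, via the
    three-term recurrence n*C_n = (2n+1)*z*C_{n-1} - (n+1)*C_{n-2}."""
    z = [Fraction(1), Fraction(-2)]
    polys = [[Fraction(1)], poly_scale(z, Fraction(3))]
    for n in range(2, n_max + 1):
        term1 = poly_scale(poly_mul(z, polys[n - 1]), Fraction(2 * n + 1, n))
        term2 = poly_scale(polys[n - 2], Fraction(-(n + 1), n))
        polys.append(poly_add(term1, term2))
    return polys[: n_max + 1]

def scaled_drift_operator(f):
    """Return 4*Ne*L[f] = d^2/dx^2 [x(1-x) f(x)] (assumed operator form)."""
    weight = [Fraction(0), Fraction(1), Fraction(-1)]
    return poly_deriv(poly_deriv(poly_mul(weight, f)))

def decay_rate_factors(n_max):
    """Verify 4*Ne*L[C_i] = -(i+1)(i+2)*C_i and return (i+1)(i+2)/4,
    i.e. the decay rates in units of 1/Ne."""
    factors = []
    for i, f in enumerate(gegenbauer_3_2(n_max)):
        expected = poly_scale(f, Fraction(-(i + 1) * (i + 2)))
        assert scaled_drift_operator(f) == expected
        factors.append(Fraction((i + 1) * (i + 2), 4))
    return factors

if __name__ == "__main__":
    # First three rates in units of 1/Ne: 1/2, 3/2, 3
    print([str(f) for f in decay_rate_factors(2)])
```

Under these assumptions the first three decay rates are 1/(2Ne), 3/(2Ne) and 3/Ne, so for t ≫ Ne only the i = 0 mode contributes appreciably.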

We can solve Eq. (B.2) in a similar way, by using the decomposition

| (B.8) |

In this case, is the equilibrium density associated with the exponential mutation density, as defined in Eq. (A.12). The term evolves under pure random drift, with no mutation influx, and initial condition :

| (B.9) |

By subtracting Eq. (B.9) from Eq. (B.7), we can describe the time evolution of the deviation as

| (B.10) |

which is independent of the initial condition φ(x).

One can show that is non-negative on [0, 1] if κ ≤ 2Ne. This can be seen more clearly by computing in the large κ limit

| (B.11) |

| (B.12) |

The terms of order e^{−κ} in Eq. (B.12) exactly cancel the divergence at x = 1. Therefore, the action of the diffusion operator on , will preserve the non-negativity of the density

| (B.13) |

Because of this inequality, the absolute value , is the same as , and we can evaluate exactly the integral

| (B.14) |

by expanding in the eigenbasis of exp (tℒ). This basis is orthogonal under the L²-product defined by the weight x(1 − x), and the constant function on [0, 1] corresponds to the i = 0 eigenfunction, which has the slowest decay rate, 1/(2Ne). In this way we can interpret the right-hand side of Eq. (B.14) as a projection on this eigenfunction, and evaluate the integral exactly.

The eigenbasis of exp (tℒ) is defined by the Gegenbauer polynomials. As an example, the first three Gegenbauer polynomials on [0, 1], orthonormal under the L²-product with weight x(1 − x), are

| (B.15) |

| (B.16) |

| (B.17) |
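The normalization of the first three basis functions can be reproduced with exact arithmetic. The sketch below assumes the unnormalized polynomials 1, 1 − 2x and 1 − 5x + 5x², which are proportional to C_i^{(3/2)}(1 − 2x) for i = 0, 1, 2. The overall signs and the helper names are choices made for this illustration and may differ from the convention of Eqs. (B.15)-(B.17).

```python
from fractions import Fraction
from math import sqrt

# Polynomials are coefficient lists in x, lowest degree first.
WEIGHT = [Fraction(0), Fraction(1), Fraction(-1)]  # x(1 - x)

# Assumed unnormalized basis (sign convention is a choice of this sketch).
BASIS = [
    [Fraction(1)],                             # 1
    [Fraction(1), Fraction(-2)],               # 1 - 2x
    [Fraction(1), Fraction(-5), Fraction(5)],  # 1 - 5x + 5x^2
]

def poly_mul(p, q):
    """Product of two polynomials."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def integrate_unit_interval(p):
    """Exact integral of a polynomial over [0, 1]."""
    return sum(a / (k + 1) for k, a in enumerate(p))

def weighted_inner(p, q):
    """L^2 inner product on [0, 1] with weight x(1 - x)."""
    return integrate_unit_interval(poly_mul(WEIGHT, poly_mul(p, q)))

def gram_matrix(basis):
    """Matrix of all pairwise weighted inner products."""
    return [[weighted_inner(p, q) for q in basis] for p in basis]

def normalization_constants(basis):
    """Factors that rescale each polynomial to unit weighted norm."""
    return [1 / sqrt(weighted_inner(p, p)) for p in basis]

if __name__ == "__main__":
    for row in gram_matrix(BASIS):
        print([str(entry) for entry in row])
    print(normalization_constants(BASIS))
```

The Gram matrix is diagonal with entries 1/6, 1/30 and 1/84, so the orthonormal functions are √6, √30 (1 − 2x) and √84 (1 − 5x + 5x²), up to sign.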

The corresponding eigenvalues of exp (tℒ) are exp(−t/(2Ne)), exp(−3t/(2Ne)), and exp(−3t/Ne). Thus, Eq. (B.14) is the same as

| (B.18) |

and

| (B.19) |

As for all x ∈ [0, 1] and for t ≫ Ne, we lastly compute Eq. (B.3), as

| (B.20) |

which is

| (B.21) |

as we wanted to show.

Footnotes

Gibbs phenomena are numerical instabilities that arise when the error between a function and its truncated polynomial approximation is large.

The expected number of sites, 2Nν, relates to the expected number of mutations per base, 2Nu, through the total length L of the genomic sequence under study in units of base pairs: ν = u × L. In an abuse of notation, this paper sometimes does not distinguish between ν and u, treating them as the same quantity expressed in different units.

One can work either with the basis of functions {Ti(x)} on x ∈ [−1, 1], or with the re-scaled basis {Ri(x)} defined on x ∈ [0, 1], by performing a simple scale transformation.

Contributor Information

Sergio Lukić, Email: lukic@biology.rutgers.edu, Department of Genetics and BioMaPS Institute, Rutgers University, Piscataway NJ 08854, USA.

Jody Hey, Department of Genetics, Rutgers University, Piscataway NJ 08854, USA.

Kevin Chen, Department of Genetics and BioMaPS Institute, Rutgers University, Piscataway NJ 08854, USA.

References

- 1. Canuto C, Hussaini MY, Quarteroni A, Zang TA. Spectral Methods in Fluid Dynamics. Springer Series in Computational Physics. Springer; 1988.

- 2. Crow JF, Kimura M. Some genetic problems in natural populations. Proc Third Berkeley Symp on Math Statist and Prob. 1956;4:1–22.

- 3. Crow JF, Kimura M. An introduction to population genetics theory. Harper & Row Publishers; 1970.

- 4. Evans SN, Shvets Y, Slatkin M. Non-equilibrium theory of the allele frequency spectrum. Theoretical Population Biology. 2007;71(1):109–119. doi: 10.1016/j.tpb.2006.06.005.

- 5. Ewens WJ. Mathematical Population Genetics. I. Theoretical Introduction. Interdisciplinary Applied Mathematics, Vol. 27. Springer; 2004.

- 6. Fisher RA. The distribution of gene ratios for rare mutations. Proc Royal Soc Edinburgh. 1930;50:205–220.

- 7. Gottlieb D, Orszag SA. Numerical analysis of spectral methods: Theory and Applications. Society for Industrial and Applied Mathematics; 1977.

- 8. Gutenkunst RN, Hernandez RD, Williamson SH, Bustamante CD. Inferring the Joint Demographic History of Multiple Populations from Multidimensional SNP Frequency Data. PLoS Genet. 2009;5(10):e1000695. doi: 10.1371/journal.pgen.1000695.

- 9. Hey J. Isolation with migration models for more than two populations. Mol Biol Evol. 2010;27(4):905–920. doi: 10.1093/molbev/msp296.

- 10. Hey J, Nielsen R. Multilocus Methods for Estimating Population Sizes, Migration Rates and Divergence Time, With Applications to the Divergence of Drosophila pseudoobscura and D. persimilis. Genetics. 2004;167:747–760. doi: 10.1534/genetics.103.024182.

- 11. Hudson RR. Generating samples under a Wright-Fisher neutral model of genetic variation. Bioinformatics. 2002;18:337–338. doi: 10.1093/bioinformatics/18.2.337.

- 12. Kimura M. Solution of a process of random genetic drift with a continuous model. Proc Nat Acad Sci. 1955;41:144–150. doi: 10.1073/pnas.41.3.144.

- 13. Kimura M. Diffusion models in population genetics. Journal of Applied Probability. 1964;1:177–232.

- 14. Kimura M. The number of heterozygous nucleotide sites maintained in a finite population due to steady flux of mutations. Genetics. 1969;61:893–903. doi: 10.1093/genetics/61.4.893.

- 15. Press WH, Teukolsky SA, Vetterling WT, Flannery BP. Numerical Recipes: The Art of Scientific Computing. 3rd ed. Cambridge University Press; 2007.

- 16. Orszag SA. Numerical Methods for the Simulation of Turbulence. Phys Fluids Supp II. 1969;12:250–257.

- 17. Ritz W. Über eine neue Methode zur Lösung gewisser Variationsprobleme der mathematischen Physik. Journal für die Reine und Angewandte Mathematik. 1909;135:1–61.

- 18. Sawyer SA, Hartl DL. Population genetics of polymorphisms and divergence. Genetics. 1992;132(4):1161–1176. doi: 10.1093/genetics/132.4.1161.

- 19. Shreve SE. Stochastic Calculus II. Continuous-Time Models. Springer; 2004.

- 20. Wakeley J, Hey J. Estimating ancestral population parameters. Genetics. 1997;145:847–855. doi: 10.1093/genetics/145.3.847.

- 21. Williamson SH, Hernandez R, Fledel-Alon A, Zhu L, Nielsen R, et al. Simultaneous inference of selection and population growth from patterns of variation in the human genome. Proc Nat Acad Sci USA. 2005;102:7882–7887. doi: 10.1073/pnas.0502300102.

- 22. Wright S. Evolution in Mendelian populations. Genetics. 1931;16:97–159. doi: 10.1093/genetics/16.2.97.