Abstract

A major barrier to actualizing the public health impact potential of screening, brief intervention, and referral to treatment (SBIRT) is the suboptimal development and implementation of evidence-based training curricula for healthcare providers. As part of a federal grant to develop and implement SBIRT training in medical residency programs, we assessed 95 internal medicine residents before they received SBIRT training to identify self-reported characteristics and behaviors that would inform curriculum development. Residents’ confidence in their SBIRT skills significantly predicted SBIRT practice. Lack of experience dealing with alcohol or drug problems and discomfort in dealing with these issues were significantly associated with low confidence. To target these barriers, we revised our SBIRT curriculum to increase resident confidence in their skills and developed an innovative SBIRT Proficiency Checklist and Feedback Protocol for skills practice observations. Qualitative feedback suggests that, despite the discomfort residents experience in being observed, a proficiency checklist and feedback protocol appear to boost learner confidence.

Keywords: SBIRT, curriculum, observation, feedback, confidence, graduate medical education

INTRODUCTION

To encourage health care professionals to identify at-risk populations and intervene early, the Substance Abuse and Mental Health Services Administration (SAMHSA) has funded several graduate medical programs in the United States to develop and implement training programs that teach resident physicians how to provide evidence-based screening, brief intervention, and referral to specialty treatment (SBIRT) for patients who either have, or are at risk for, substance use disorders. At San Francisco General Hospital (SFGH), we developed an intensive SBIRT curriculum for PGY2 and PGY3 residents enrolled in the Health Equities: Academics and Advocacy Training (HEAAT) program of the Health and Society Pathway of the University of California San Francisco’s Internal Medicine Residency Program. We report here on the evidence-based development and implementation of, and learner response to, an SBIRT Proficiency Checklist and Feedback Protocol designed to address residents’ perceived barriers to conducting SBIRT and their confidence in their SBIRT skills.

Providing learners with opportunities for observation with feedback has been a longstanding recommendation in the medical education literature (1, 2) and has been widely studied, with generally positive effects on learner and patient outcomes (3, 4). Implementation of observation and feedback is a requirement of the Accreditation Council for Graduate Medical Education (5). However, despite the importance of this construct in graduate medical education (GME), little evaluation has been directed towards this topic in the area of SBIRT. Some studies with positive intervention effects cite providing learners with practice opportunities. Fewer studies describe observation and feedback, and none describe the use of a standardized feedback tool or procedures for providing feedback.

Of the few SBIRT curriculum evaluation studies available, the inclusion of observation with feedback opportunities is variable. Some studies with positive intervention effects provide learners with practice opportunities but do not describe whether or how observation or feedback was provided following skills practice (6, 7). Others have found positive effects of feedback when provided following role-playing with simulated patients (SP; 8, 9). However, neither of these studies described the use of a standardized feedback tool or procedures for providing feedback. Wilk et al. (10) also found positive differences in SBIRT behaviors from observed SP encounters followed by preceptor feedback that used the NIAAA Clinician Guide to provide structure. Conversely, Roche et al. (11) and Walsh et al. (12) compared traditional didactic and demonstration curricula with feedback-enhanced versions and found no significant differences between groups. Again, neither study described a standardized feedback tool or the feedback protocol that was implemented.

Because these studies do not provide details regarding the format or structure of the observation or feedback procedures, including the methods by which observations were rated and the style in which feedback was provided, it is difficult to interpret the variability in effects or to infer how such practices might be successfully implemented or evaluated in GME settings and training sites.

CURRICULAR METHODS

Setting

Site

SFGH is the primary provider of safety-net health care in the City and County of San Francisco. It serves some 100,000 inpatient, outpatient, and emergency patients a year; the patient population is 29% Hispanic, 25% White, 21% African American, and 20% Asian. Over 80% either receive publicly funded health insurance or are uninsured, and 8% are homeless. The population served by SFGH is disproportionately affected by HIV/AIDS, advanced disease, endemic violence, mental illness, and alcohol and drug disorders. Of the four campuses where UCSF internal medicine residents train, SFGH is the epicenter for substance use issues and education.

Residency Program

The current report describes a curriculum implemented with residents in the HEAAT track of UCSF’s Internal Medicine Residency Program. Residents in the HEAAT track include all of the primary care medicine residents based at SFGH, together with residents in the categorical and Parnassus/Mt. Zion-based primary care program who elect to participate in this program based on their interest in working with underserved populations. HEAAT residents meet one-half day per week during their elective and ambulatory block months in their PGY2 and PGY3 years (12 months total) to participate in a structured curriculum that focuses on the social, economic, and political factors that are important determinants of the health of vulnerable populations.

We developed and implemented a longitudinal 7-week intensive curriculum for HEAAT residents in 2009–10 that was based largely on survey feedback from residency program directors and chief residents, reviews of the literature on addiction and medical education, and resources from federally funded statewide SBIRT grantees. Trainers included UCSF faculty from the Departments of Medicine, Family and Community Medicine, and Psychiatry, a motivational interviewing (MI) expert from the University of Virginia, and an addiction psychiatrist from the San Francisco Department of Public Health. In seven consecutive Friday morning seminars during a two-month ambulatory block, the curriculum covered the epidemiology and neurobiology of addiction, the rationale and evidence base for SBIRT, MI principles and skills, office-based pharmacotherapy of alcohol and opioid use disorders, chronic pain management, cultural competence, and systems-based issues affecting residents’ SBIRT practice experience. Teaching methods comprised didactic lectures, small group discussion, written narrative reflections about encounters with substance-using patients, meeting patients in recovery, and skills demonstration and practice.

In addition, residents completed a narrative reflection exercise after a half-day site visit to one of two specialty programs where patients are often referred after a hospital admission or from an outpatient clinic. The Treatment Access Program (TAP) is an assessment, referral, and placement unit of the San Francisco Department of Public Health, which directly assesses clients who self-refer or are referred by various providers throughout the City to community-based programs. Residents visiting TAP learn about local substance use treatment resources and observe initial evaluations, program linkages, and ongoing patient support. At the Outpatient Buprenorphine Induction Clinic (OBIC), opioid-dependent patients are evaluated for buprenorphine treatment, induced and stabilized on the medication, counseled, and transitioned to a community provider. Residents visiting OBIC learn about office-based buprenorphine treatment and which patients are appropriate for buprenorphine versus methadone treatment. At both sites, residents have the opportunity to meet patients and observe initial patient evaluations.

METHODS

To assess learner characteristics and to tailor the curriculum to the needs of the residents, we collected anonymous baseline data using paper or online surveys from UCSF internal medicine residents who had not been exposed to the SBIRT curriculum. These surveys were administered in the month prior to the initiation of the HEAAT curriculum. We sought to assess self-reported use of SBIRT behaviors with patients, as well as skills confidence and perceived barriers to engaging in SBIRT behaviors.

We administered a modified version of the Boston Primary Care Survey (13) in which residents were asked how often they engaged in a variety of alcohol SBIRT behaviors on a 5-point Likert scale ranging from 1 (never) to 5 (always). Nine specific SBIRT behaviors (including asking patients whether they drink alcohol and advising safe drinking limits) were summed to form an SBIRT composite variable. We measured residents’ confidence in performing seven SBIRT behaviors using a composite of seven Likert-scale items ranging from 1 (not very confident) to 5 (extremely confident) and measured 12 perceived barriers to SBIRT implementation on another Likert scale ranging from 1 (not a barrier) to 5 (very major barrier). Survey participants received a $20 gift card for their participation. In addition, we collected and examined qualitative data submitted by HEAAT residents following their participation in the SBIRT curriculum at SFGH. All procedures were approved by the UCSF Committee on Human Research, and we emphasized that recruitment and participation would not affect participants’ residency evaluations.
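The scoring described above can be sketched in a few lines of Python. This is only an illustration, not the authors' scoring code: the function names and the example responses are hypothetical, and the confidence composite is assumed to be the mean of the seven items because Table 1 reports an "Average Confidence" value.

```python
from statistics import mean


def _check_likert(items, expected_count):
    """Validate that we have the expected number of 1-5 Likert responses."""
    if len(items) != expected_count:
        raise ValueError(f"expected {expected_count} items, got {len(items)}")
    if any(not 1 <= value <= 5 for value in items):
        raise ValueError("Likert responses must be between 1 and 5")


def sbirt_composite(behavior_items):
    """Sum the nine SBIRT behavior items (1 = never ... 5 = always)."""
    _check_likert(behavior_items, 9)
    return sum(behavior_items)


def confidence_score(confidence_items):
    """Average the seven confidence items (assumed scoring, see lead-in)."""
    _check_likert(confidence_items, 7)
    return mean(confidence_items)


# Hypothetical responses from a single resident (not study data)
example_behaviors = [5, 5, 3, 3, 4, 4, 4, 3, 2]
example_confidence = [4, 4, 3, 4, 3, 4, 3]

composite = sbirt_composite(example_behaviors)
avg_confidence = confidence_score(example_confidence)
```

Validating the item count and range before scoring keeps a miskeyed survey response from silently distorting a composite.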

Analysis

We calculated descriptive statistics for all survey variables and used linear regression to determine which barriers were the best predictors of low confidence among residents. Using the qualitative comments from the narratives, discussions, rating checklists, and debriefs about residents' SBIRT interactions, we extracted all resident comments regarding observation with feedback and categorized them for themes.
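As a rough illustration of the regression step, the sketch below fits an ordinary least squares model with two barrier ratings predicting confidence. It is a simplified stand-in, not the analysis actually run: the study assessed twelve barriers and presumably used a statistics package, whereas this closed-form version handles exactly two predictors. The function name and all data values are made up.

```python
from statistics import mean


def fit_two_predictors(x1, x2, y):
    """Ordinary least squares for y = b0 + b1*x1 + b2*x2.

    Returns (b0, b1, b2, r_squared).
    """
    m1, m2, my = mean(x1), mean(x2), mean(y)
    # Centered sums of squares and cross-products
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    syy = sum((c - my) ** 2 for c in y)
    det = s11 * s22 - s12 ** 2
    if det == 0:
        raise ValueError("predictors are perfectly collinear")
    # Solve the 2x2 normal equations in closed form
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    # Proportion of variance in y explained by the two predictors
    r_squared = (b1 * s1y + b2 * s2y) / syy
    return b0, b1, b2, r_squared


# Made-up barrier ratings (1-5) and a confidence score constructed
# to fall as the barriers rise; none of this is study data.
lack_of_experience = [1, 2, 3, 4, 5, 2]
discomfort = [1, 1, 2, 3, 4, 4]
confidence = [5 - 0.5 * a - 0.25 * b
              for a, b in zip(lack_of_experience, discomfort)]

b0, b1, b2, r2 = fit_two_predictors(lack_of_experience, discomfort, confidence)
```

Negative slopes for both barriers would correspond to the pattern sought here: higher perceived barriers going with lower confidence.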

RESULTS

Quantitative Analysis of Learner Characteristics

The quantitative survey was completed by 95 out of 113 residents, resulting in an 84% response rate. Most participants were PGY1s (N=69; 72.6%), with equal numbers of PGY2s (N=13; 13.7%) and PGY3s (N=13; 13.7%). Mean age was 28 years (SD = 2). Female (N=52; 54.7%) and male (N=43; 45.3%) residents were fairly equally represented. The sample included White (N=54; 56.8%), Asian/Pacific Islander (N=22; 23.2%), Black (N=5; 5.3%), Hispanic (N=2; 2.1%), and mixed or other (N=10; 10.5%) race groups.

Use of SBIRT behaviors, confidence, and perceived barriers among the sample are shown in Table 1. The most highly endorsed SBIRT behaviors were “asking patients whether they drink” and “asking the amount they drink,” with most residents reporting usually or always engaging in these practices. Most residents reported only rarely or sometimes “using a formal screening tool” and “treating them yourself without specialty consultation or referral.” Average confidence in conducting the various components of SBIRT was moderate (M=3.56, SD=.73), and “lack of experience in dealing with alcohol or drug problems” (M=2.80, SD=.83) and “discomfort in dealing with these issues” (M=1.75, SD=.89) were reported as moderate barriers to screening and/or treating patients with alcohol or drug problems. Confidence did not differ by year of residency (F(2, 92) = 1.94, p = .15).

Table 1.

Resident Baseline Characteristics

| | Range | Mean | SD |
|---|---|---|---|
| SBIRT BEHAVIORS | | | |
| In new patients, how often do you*: | | | |
| Ask whether they drink | 4–5 | 4.79 | .41 |
| Ask amount they drink | 3–5 | 4.67 | .54 |
| Use a formal screening tool | 1–5 | 2.72 | .87 |
| In patients who drink: | | | |
| Advise safe drinking limits | 1–5 | 3.01 | .83 |
| In patients who drink excessively: | | | |
| Ask about health problems related to alcohol | 1–5 | 3.70 | .83 |
| Counsel them about alcohol problems | 2–5 | 3.76 | .75 |
| In alcohol dependent patients: | | | |
| Discuss treatment | 2–5 | 3.70 | .78 |
| Refer them for treatment | 1–5 | 3.20 | .84 |
| Treat them yourself | 1–4 | 2.14 | .88 |
| SBIRT Sum | 29–58 | 38.20 | 8.27 |
| SBIRT PERCEPTIONS | | | |
| Average Confidence** | 2.28–4.86 | 3.56 | .73 |
| Barrier: Lack of Experience*** | 1–5 | 2.80 | .83 |
| Barrier: Discomfort*** | 1–4 | 1.75 | .89 |

*1=never, 2=rarely, 3=sometimes, 4=usually, 5=always

**1=not confident to 5=very confident

***1=not a barrier, 2=minor barrier, 3=moderate barrier, 4=major barrier, 5=very major barrier

Resident confidence significantly predicted self-reported SBIRT practice (β=.24, t(94) = 2.41, p < .01). Correlational analyses revealed that confidence was significantly related to several specific SBIRT behaviors, including using a formal alcohol screening tool (r=.22, p<.05), counseling patients about alcohol use (r=.247, p<.05), and referring patients to treatment (r=.48, p<.001). To determine what factors may be responsible for low confidence in the resident sample, we examined the relationship between confidence and perceived barriers. Among the twelve assessed barriers, only discomfort and lack of experience significantly predicted confidence, together accounting for 15.9% of the variance in this variable, F(2, 93) = 8.78, p < .001. Both lack of experience dealing with alcohol or drug problems (r=−.343, p<.001) and discomfort in dealing with substance issues (r=−.36, p<.001) were negatively related to confidence. When we controlled for the effects of lack of experience and discomfort, the relationship between confidence and SBIRT behaviors was no longer significant.
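As a quick consistency check on the reported model fit, the overall F statistic of a regression can be recovered from the proportion of variance explained using the standard identity F = (R²/k) / ((1 − R²)/df_residual). The sketch below applies it to the figures quoted above (15.9% of variance, two predictors, 93 residual degrees of freedom). The function name is hypothetical.

```python
def f_from_r_squared(r_squared, n_predictors, df_residual):
    """Overall regression F statistic implied by R-squared."""
    if not 0 <= r_squared < 1:
        raise ValueError("r_squared must be in [0, 1)")
    explained = r_squared / n_predictors          # mean square share, model
    unexplained = (1 - r_squared) / df_residual   # mean square share, residual
    return explained / unexplained


f_value = f_from_r_squared(0.159, 2, 93)
print(round(f_value, 2))  # 8.79
```

The result is within rounding distance of the reported F of 8.78, which is what one would expect if the 15.9% figure was itself rounded.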

These interim evaluation findings underscored the critical importance of providing residents with ample opportunities for practice with feedback to increase their comfort and experience dealing with substance use issues. In addition to our findings, other researchers have found that provider confidence is associated with professional satisfaction in caring for patients with substance use problems (13) and is predictive of the provision of SBIRT interventions to patients (14). Provider confidence also has been found to increase after SBIRT training (6). Lastly, substance use and SBIRT curricula targeting attitudes and confidence have been shown to increase both self-reported (15, 16) and objective measurements of provider intervention provision and skillfulness (17).

Based on our and others’ findings, we redesigned our SBIRT curriculum for 2010–11 in two ways. First, we redefined the major learning objective of the curriculum: “to help residents learn practical skills and gain confidence in detecting, diagnosing, and managing patients across a spectrum of substance use disorders.” Second, we increased our emphasis on and time scheduled for case-based role plays during training and experiential learning outside the classroom via site visits and clinic practice with observation. These changes occurred in the core curriculum and in scheduled booster skills practice sessions for ambulatory blocks throughout the remainder of the year. To facilitate the structured evaluation of practice sessions and to guide learners in developing their skills, we created an SBIRT Proficiency Checklist based specifically on the SBIRT Clinician Skills Guide and Pocket Card used in the curriculum. This checklist was used by course trainers and peers to support and guide experiential learning activities throughout the curriculum. To supplement resident observation opportunities, we developed and implemented an SBIRT Learner Feedback Protocol to guide deployment of the SBIRT Proficiency Checklist in a learner-centered and consistent manner. The new curriculum added individual observations of SBIRT-trained housestaff practicing with their own primary care clinic patients and feedback by trained faculty using the SBIRT Proficiency Checklist and Feedback Protocol.

SBIRT Proficiency Checklist Development

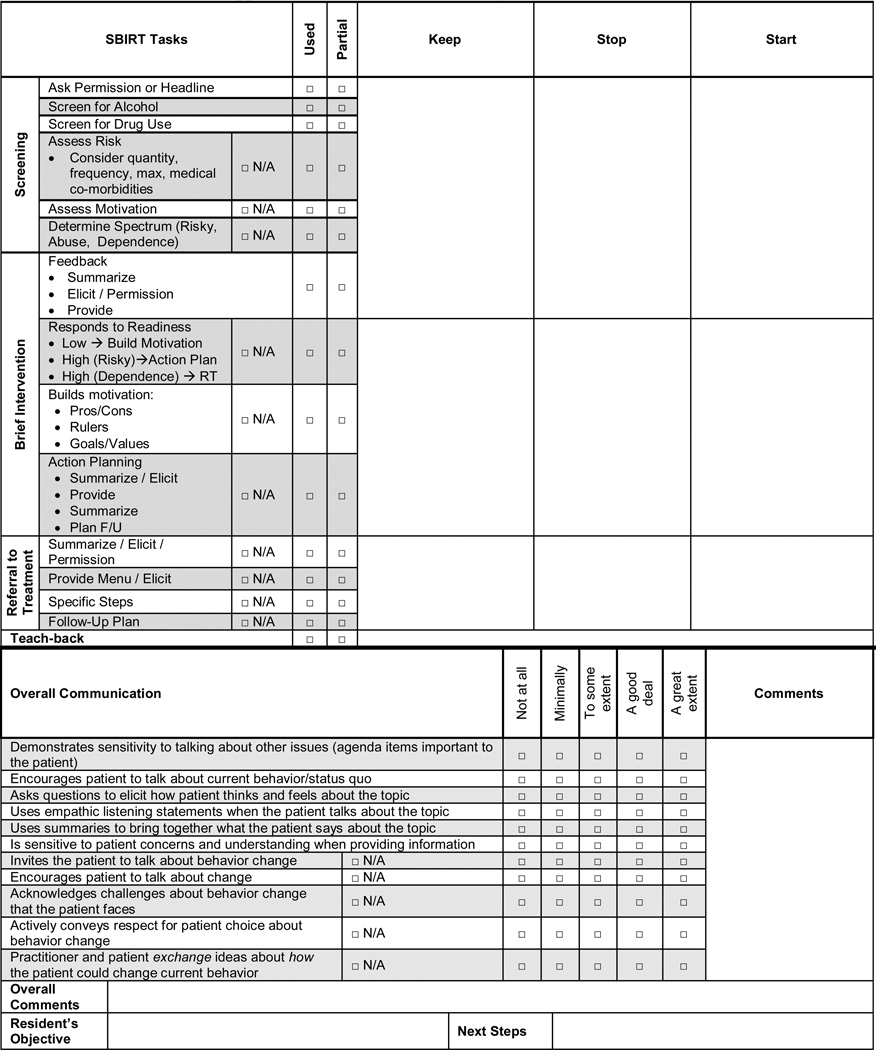

During the first year of the curriculum, we observed that faculty trainers provided highly variable and unstructured feedback based on their own content expertise and communication skills. Based on these observations and the formative quantitative analysis described above, we developed a standardized fidelity checklist to correspond with our SBIRT Clinician Skills Guide and Pocket Card. We used monthly curriculum workgroup meetings and SBIRT faculty skills-based workshops to practice, refine, and standardize the administration of this fidelity instrument. The SBIRT Proficiency Checklist (see Appendix) comprises check-off boxes for specific SBIRT tasks, rating scales for communication style, and columns to record clinician behaviors to "keep, stop, and start" doing. The instrument captures the presence or absence of SBIRT components, proscribed behaviors, appropriate decision making based on patient severity and readiness levels, and interpersonal skillfulness as measured by the Behavior Change Counseling Index (BECCI; 18).

SBIRT Learner Feedback Protocol Development

To aid observation and feedback sessions, we also created an SBIRT Learner Feedback Protocol that is consistent with the learner-centered and MI principles that lie at the heart of the brief intervention skills we train the residents to use with their patients. This process allows for role modeling: the faculty-learner feedback interaction parallels the resident-patient interaction. The steps were adapted from the Motivational Interviewing Assessment: Supervisory Tools for Enhancing Proficiency (MIA:STEP; 19) and include: (1) eliciting the resident’s perception of the patient interaction; (2) reflecting, affirming, and appreciating challenges; (3) providing feedback from the SBIRT Proficiency Checklist, first focusing on strengths (things to “keep”) and then on areas for improvement (things to “stop” or “start”), in a manner that is collaborative in identifying causes for difficulties and strategies for improvement; and (4) eliciting from the resident physician 1–2 areas of focus, followed by discussion and review of techniques, role playing, and the completion of a skill development action plan. The feedback session ends with the preceptor providing a summary with an emphasis on the learner’s strengths, the learner-chosen commitment to change future behavior, and a plan to follow up in the next practice-with-feedback session.
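To make the structure of the instrument concrete, the sketch below models one observation record with the three kinds of content described above (task check-offs, communication style ratings, and "keep, stop, start" notes) and orders feedback with strengths first, as in step 3 of the protocol. It is purely illustrative: the class, field names, task labels, and rating values are hypothetical and are not taken from the actual checklist in the Appendix.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ChecklistObservation:
    """One observed encounter (hypothetical structure, not the real form)."""
    tasks_completed: dict[str, bool]      # check-off boxes for SBIRT tasks
    style_ratings: dict[str, int]         # communication style rating scales
    keep: list[str] = field(default_factory=list)
    stop: list[str] = field(default_factory=list)
    start: list[str] = field(default_factory=list)

    def task_completion_rate(self) -> float:
        """Fraction of checklist tasks observed as completed."""
        if not self.tasks_completed:
            return 0.0
        return sum(self.tasks_completed.values()) / len(self.tasks_completed)

    def feedback_summary(self) -> list[str]:
        """List strengths ('keep') first, then 'stop' and 'start' items."""
        lines = [f"Keep: {item}" for item in self.keep]
        lines += [f"Stop: {item}" for item in self.stop]
        lines += [f"Start: {item}" for item in self.start]
        return lines


# Hypothetical observation; labels and values are invented for illustration
observation = ChecklistObservation(
    tasks_completed={
        "screening": True,
        "brief_intervention": True,
        "referral_to_treatment": False,
    },
    style_ratings={"collaboration": 4},
    keep=["open-ended questions"],
    start=["summarize the plan"],
)
summary = observation.feedback_summary()
```

Keeping the three note columns as separate fields makes it straightforward to present strengths before areas for improvement during the debrief.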

Practice with Feedback Implementation

We implemented the checklist in a formative feedback exercise in which trained faculty observers rated residents' interactions with primary care patients from their continuity clinics. Residents were required to select at least two patients with whom they planned to practice SBIRT that session, and SBIRT faculty trainers observed silently during these patient encounters. The residents were encouraged to complete the medical encounter as they normally would, to integrate SBIRT into the visit, and to receive clinical supervision from the clinic preceptor as usual. At the end of the clinic session or at a later date that same week, the resident and SBIRT observer debriefed and set goals for future practice-based improvement. During the 2010–11 year, we collected SBIRT Proficiency Checklist results for 18 live clinic observations.

Qualitative Evaluation of Curricular Changes

Analysis of 13 post-SBIRT curriculum written narrative reflections and observer notes from debriefing sessions revealed several important themes regarding resident experiences with and responses to observation with feedback. These themes and corresponding illustrative quotes can be seen in Table 2. In our implementation of the new curriculum, we also observed several barriers and facilitators to implementation, which are summarized in Table 3. One specific challenge to observation with feedback using the SBIRT Proficiency Checklist and Feedback Protocol was the high number of negative alcohol and drug screens, which resulted in fewer opportunities for observation and feedback on the brief intervention (BI) and referral to treatment (RT) components of SBIRT. To increase the utility of the session, several SBIRT trainers instructed their residents to demonstrate their brief intervention skills with a different behavioral health issue (e.g., medication adherence, diet and exercise) in the event of a negative alcohol or drug screen. Several PGY3 residents, who had not been trained with the new SBIRT Proficiency Checklist in the prior curriculum year (2009–10), reported feeling overwhelmed by the unfamiliar checklist and indicated that having the opportunity to review the instrument prior to the feedback session would have been helpful. PGY2s had the benefit of being introduced to the checklist during their core SBIRT curriculum in 2010–11 and had more opportunities to be rated and to rate others using the checklist in role plays and other practice opportunities.

Table 3.

Barriers and Facilitators of Curricular Changes

| Curricular Change | Barriers | Facilitators |
|---|---|---|
| Increase in case-based role plays conducted in training | Requires a decrease in didactic time. | High faculty capacity in generating and conducting role play activities, including 3 members of the Motivational Interviewing Network of Trainers. |
| Increase in site visits | Coordination with outside programs such as AA and treatment programs requires administrative support and time. Quality of experience is highly variable and difficult to control. | Community programs had a high level of interest and commitment to teaching residents. A member of the faculty is a leader in the local department of public health, which oversees sites. |
| Implementation of practice with observation and standardized feedback in clinic | Coordination of clinical observation sessions requires both administrative and faculty time. Negative alcohol screens do not allow for feedback on brief intervention. Initial resident discomfort or perceptions of feeling overwhelmed. | Practice sessions for faculty who will be doing clinical observations standardize the quality of observation and feedback. The SBIRT checklist and feedback guide (see Appendix) was helpful in ensuring that clinical observations are evaluated in a standard manner. Giving feedback on BI for other behavior change increased the utility of interactions with negative screens. |

DISCUSSION

In our baseline survey of UCSF internal medicine residents, we discovered a strong relationship between learner confidence and self-reported SBIRT skills practice, and this relationship was explained by the perceived barriers of lack of experience dealing with alcohol or drug problems and discomfort in dealing with these issues (p<.001). These results provided us with a clear signpost to guide curricular development towards increasing experience and reducing discomfort. By providing residents with multiple opportunities for practice and observation with a standardized feedback protocol, we targeted those perceived barriers that were associated with lower confidence in SBIRT skills. In doing so, we tuned our curriculum more finely to our learners’ needs and to the overall goal of increasing SBIRT behaviors in the future.

Our experience has several implications for others considering SBIRT curricular design. First, assessing characteristics of learners at baseline proved to be a valuable tool for tailoring the curriculum to target the specific needs of residents. Second, developing tailored curricula is an iterative process. Anticipating this during planning and putting in place procedures to evaluate learner responses will likely increase the magnitude of improvements at each iteration. Lastly, the exercise of developing a proficiency checklist is valuable in and of itself, both to help ensure alignment within the curriculum and to explicitly address and standardize the practice recommendations that we make to residents. Overall, a body of related research and our initial qualitative data support the effectiveness of performance feedback as a core curriculum component in teaching SBIRT skills.

Observation with feedback is critical to the effective implementation of medical skills, particularly those that involve interpersonal communication (20). Despite this, implementation of feedback in training curricula is insufficient, particularly in the area of addictions (21). As early as 1950, Wiener drew pointed parallels between the critical role of feedback in medical education and rocket science, where reinserting performance information into the system via feedback is a necessary antecedent to the modification of behavior (1). However, unlike rockets, which are designed with their own objective performance evaluation instrumentation (1), health care providers, particularly those with minimal exposure to a given behavioral intervention, are poor estimators of their own skillfulness (20, 22, 23). Arguments for the implementation of observation with feedback also have drawn parallels between interpersonal communication in medical interactions and complex skills from other areas, such as athletic or musical domains. Here providers’ struggles to accurately assess their own performance may be analogous to a golfer practicing with a blindfold on, or a pianist playing while wearing earplugs. Without a feedback loop, performance is unlikely to improve (20).

Feedback has been referred to as the life-blood of learning, especially if it is provided often and in a learner-centered style (24). A learner-responsive curriculum requires data gathering about learner deficiencies, resources, and attitudes, as well as ongoing evaluation of and responsiveness to implementation challenges and successes. What we learned in the development, implementation, and modification of our SBIRT curriculum at SFGH parallels research from the broader field of medical education, where observation with feedback is a well-replicated, essential strategy for enhancing intervention skillfulness. This teaching technique holds great promise for SBIRT curricula. Our experiences developing and implementing an SBIRT Proficiency Checklist and Feedback Protocol with UCSF internal medicine residents suggest that observation with feedback in GME clinical settings is feasible, well received, and valued by the resident physicians. Qualitative feedback from housestaff supports the continued development, implementation, and rigorous evaluation of clinic-based observation with feedback. Continued research is needed to document the impact of observation with feedback on self-reported and objective measures of SBIRT-related provider behavior.

Table 2.

Qualitative Evaluation Themes

| Qualitative Theme | Illustrative Resident Quotes |
|---|---|
| 1) Residents indicated a desire to receive effective training in SBIRT. | |
| 2) Residents had some initial uneasiness regarding observation that dissipated once they experienced the benefits of this approach. | |
| 3) Residents experienced benefits as a result of practice opportunities. | |

Acknowledgment

This work was supported by SAMHSA grant U79 TI020296 (JEH, NR, DC, DC, PJL) and NIH T32DA07250 (JKM).

Appendix SFGH SBIRT Proficiency Checklist


References

1. Ende J. Feedback in clinical medical education. JAMA. 1983;250:777–781.

2. Katz PO. Providing feedback. Gastrointest Endosc Clin N Am. 1995;5:347–355.

3. Overeem K, Faber MJ, Arah OA, Elwyn G, Lombarts KM, Wollersheim HC, Grol RP. Doctor performance assessment in daily practise: does it help doctors or not? A systematic review. Med Educ. 2007;41:1039–1049. doi: 10.1111/j.1365-2923.2007.02897.x.

4. Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians' clinical performance: BEME Guide No. 7. Med Teach. 2006;28:117–128. doi: 10.1080/01421590600622665.

5. Kogan JR, Holmboe ES, Hauer KE. Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 2009;302:1316–1326. doi: 10.1001/jama.2009.1365.

6. Bernstein E, Bernstein J, Feldman J, Fernandez W, Hagan M, Mitchell P, et al. An evidence based alcohol screening, brief intervention and referral to treatment (SBIRT) curriculum for emergency department (ED) providers improves skills and utilization. Subst Abus. 2007;28:79–92. doi: 10.1300/J465v28n04_01.

7. Babor TF, Higgins-Biddle JC, Higgins PS, Gassman RA, Gould BE. Training medical providers to conduct alcohol screening and brief interventions. Subst Abus. 2004;25:17–26. doi: 10.1300/J465v25n01_04.

8. Ockene JK, Wheeler EV, Adams A, Hurley TG, Hebert J. Provider training for patient-centered alcohol counseling in a primary care setting. Arch Intern Med. 1997;157:2334–2341.

9. Adams A, Ockene JK, Wheeler EV, Hurley TG. Alcohol counseling: physicians will do it. J Gen Intern Med. 1998;13:692–698. doi: 10.1046/j.1525-1497.1998.00206.x.

10. Wilk AI, Jensen NM. Investigation of a brief teaching encounter using standardized patients. J Gen Intern Med. 2002;17:356–360. doi: 10.1046/j.1525-1497.2002.10629.x.

11. Roche AM, Stubbs JM, Sanson-Fisher RW, Saunders JB. A controlled trial of educational strategies to teach medical students brief intervention skills for alcohol problems. Prev Med. 1997;26:78–85. doi: 10.1006/pmed.1996.9990.

12. Walsh RA, Sanson-Fisher RW, Low A, Roche AM. Teaching medical students alcohol intervention skills: results of a controlled trial. Med Educ. 1999;33:559–565. doi: 10.1046/j.1365-2923.1999.00378.x.

13. Saitz R, Friedmann PD, Sullivan LM, Winter MR, Lloyd-Travaglini C, Moskowitz MA, Samet JH. Professional satisfaction experienced when caring for substance abusing patients. J Gen Intern Med. 2002;17:373–376. doi: 10.1046/j.1525-1497.2002.10520.x.

14. Geller G, Levine DM, Mamon JA, Moore RD, Bone LR, Stokes EJ. Knowledge, attitudes, and reported practices of medical students and house staff regarding the diagnosis and treatment of alcoholism. JAMA. 1989;261:3115–3120.

15. Alford DP, Bridden C, Jackson AH, Saitz R, Amodeo M, Barnes HN, Samet JH. Promoting substance use education among generalist physicians: an evaluation of the Chief Resident Immersion Training (CRIT) Program. J Gen Intern Med. 2009;24:40–47. doi: 10.1007/s11606-008-0819-2.

16. Karam-Hage M, Nerenberg L, Brower KJ. Modifying residents’ professional attitudes about substance abuse treatment and training. Am J Addict. 2001;10:40–47. doi: 10.1080/105504901750160466.

17. Chossis I, Lane C, Gache P, Michaud PA, Pecoud A, Rollnick S, Daeppen JB. Effect of training on primary care residents’ performance in brief alcohol intervention: A randomized controlled trial. J Gen Intern Med. 2007;22:1144–1149. doi: 10.1007/s11606-007-0240-2.

18. Lane C, Huws-Thomas M, Hood K, Rollnick S, Edwards K, Robling M. Measuring adaptations of motivational interviewing: the development and validation of the behavior change counseling index (BECCI). Patient Educ Couns. 2005;56:166–173. doi: 10.1016/j.pec.2004.01.003.

19. Martino S, Ball SA, Gallon SL, Hall D, Garcia M, Ceperich S, et al. Motivational Interviewing Assessment: Supervisory Tools for Enhancing Proficiency. Salem, OR: Northwest Frontier Addiction Technology Transfer Center, Oregon Health and Science University; 1996.

20. Miller WR, Yahne CE, Moyers TB, Martinez J, Pirratano M. A randomized trial of methods to help clinicians learn motivational interviewing. J Consult Clin Psychol. 2004;72:1050–1062. doi: 10.1037/0022-006X.72.6.1050.

21. Miller WR, Sorensen JL, Selzer JA, Brigham GS. Disseminating evidence-based practices in substance abuse treatment: a review with suggestions. J Subst Abuse Treat. 2006;31:25–39. doi: 10.1016/j.jsat.2006.03.005.

22. Hartzler B, Baer JS, Dunn C, Rosengren DB, Wells EA. What is seen through the looking glass: The impact of training on practitioner self-rating of motivational interviewing skills. Behav Cogn Psychother. 2007;35:431–445.

23. Miller WR, Mount KA. A small study of training in motivational interviewing: does one workshop change clinician and client behavior? Behav Cogn Psychother. 2001;29:457–471.

24. Hudson JN, Bristow DR. Formative assessment can be fun as well as educational. Adv Physiol Educ. 2006 Mar;30:33–37. doi: 10.1152/advan.00040.2005.