Abstract

Twenty-eight 4-month-olds’ and 22 20-year-olds’ attention to object-context relations was investigated using a common eye-movement paradigm. Infants and adults scanned both objects and contexts. Infants showed equivalent preferences for animals and vehicles and for congruent and incongruent object-context relations overall, more fixations of objects in congruent object-context relations, more fixations of contexts in incongruent object-context relations, more fixations of objects than contexts in vehicle scenes, and more fixation shifts in incongruent than congruent vehicle scenes. Adults showed more fixations of congruent than incongruent scenes, vehicles than animals, and objects than contexts, equal fixations of animals and their contexts but more fixations of vehicles than their contexts, and more shifts of fixation when inspecting animals in context than vehicles in context. These findings for location, number, and order of eye movements indicate that object-context relations play a dynamic role in the development and allocation of attention.

Object-Context Relations in Perception and Cognition

Our perceptions of and cognitions about objects always occur in some context, and context informs perception and cognition. Context normally refers to aspects of the internal and external environments that are present during object information processing. Thus, context includes stimulation in which the object is embedded and which may influence or give meaning to how we sense, think about, and remember the object or its components (e.g., the surrounding location, sounds accompanying the object, and the like). In this paper, we are concerned with the role of object-context relations in infant and adult perception.

Research underscores the pervasiveness of external context in perception and cognition across the lifespan. Generically, “context effects” are influences of the environment of an object on perception of the object. Physiologically, the appearance of a face in a fearful context enhances the amplitude of the N170 relative to the same face in a neutral context, so the context in which a face appears influences how it is neurally encoded (Righart & de Gelder, 2006). The classical “configural superiority effect” is observed when the detectability or perceptibility of stimulus segments is affected by a context of surrounding similar or dissimilar segments (Pomerantz, Sager, & Stoever, 1977; Williams & Weisstein, 1978). More broadly, object identification is affected by whether objects appear in likely or unlikely contexts, such as a fire hydrant on top of a mail box instead of on a sidewalk (Biederman, 1981, 1987), and categories are learned differently depending on whether other thematic features related to the category are present (Murphy, 2002). It appears that many sources of information about context (featural, physical, and experiential) contribute to object perception and cognition.

Despite their significance and pervasiveness, the effects of context on the origins of object perception and cognition have received only sparse attention. One contention has been that, in navigating a multidimensional world that is constantly changing, infants monitor the environment and differentiate object-context relations by deploying their attention selectively and flexibly. Hence, some have asserted that “virtually all learning during infancy is … independent of context” (Nadel, Willner, & Kurz, 1985, p. 398). (Certainly, infants can learn to ignore context if, for example, they are habituated to a cue in the presence of multiple contexts; Haaf, Lundy, & Coldren, 1996.) However, empirical research with infants as young as 3 months challenges this position, and it has been counter-claimed that when infants normally monitor objects in their environment, context cues are salient. Colombo, Laurie, Martelli, and Hartig (1984) observed that surrounding segments aid infants’ discrimination of linear and curvilinear segment orientation (the configural superiority effect). Haaf et al. (1996) observed that infants habituate to a focal cue more quickly when its context is constant than when its context varies, so it appears that “infants … attend to and encode background context information while encoding central stimulus cues” (p. 96). Furthermore, Rovee-Collier and colleagues found that infants encode general features of contexts as well as specific details (Butler & Rovee-Collier, 1989; Rovee-Collier & DuFault, 1991). Recognition of visual stimuli is context-sensitive regardless of whether contextual cues are visual or auditory, and recognition failure can result from changes in context (Pescara-Kovach, Fulkerson, & Haaf, 2000). Together, these developmental findings comport with the adult literature showing that objects and their settings are processed interactively (Davenport & Potter, 2004) and indicate that young infants process both object and context information.

Object-Context Congruency

Objects in the natural world normally do not appear in just any context, but objects of certain kinds naturally appear in certain kinds of contexts. In short, objects and contexts tend to co-occur in the world. Several prominent relations characterize visual scenes (Biederman, Mezzanotte, & Rabinowitz, 1982), among them probability, the likelihood that certain objects will be present in certain scenes. Animals are more likely to be found in fields, and vehicles are more likely to be found on streets. This natural object-context congruency constitutes the circumstances in which the visual system and visual cognition evolved phylogenetically and develop ontogenetically. Part of what human adults know about objects, and of what human infants acquire in learning about objects, concerns the typical, probable, expected, or congruent contexts in which objects appear.

Object-context relational congruence is a strong cue in perception and cognition. Objects that violate probability relations in a scene are generally processed more slowly and with more errors (Biederman et al., 1982). In adults, objects are detected more readily, identified faster and more accurately, and named more quickly when they appear in congruent contexts (Biederman, 1981, 1987; Biederman et al., 1982; Boyce & Pollatsek, 1992; Davenport & Potter, 2004; Ganis & Kutas, 2003; Palmer, 1975; Ullman, 1996); congruent object-context relations aid picture recognition, whereas incongruent ones interfere (Antes & Metzger, 1980; Franken & Diamond, 1983; Mathis, 2002); and objects are identified more accurately when primed by semantically congruent scenes than when primed by incongruent ones (Palmer, 1975). Indeed, consistency information is available when a scene is glimpsed (even briefly) and affects the processing of objects and contexts. Objects and contexts are processed interactively, and knowledge of which objects and contexts tend to co-occur influences perception. To the extent that object-context congruency is a factor in object perception and cognition, we hypothesized that infant and adult attention patterns alike would differ for congruent vs. incongruent object-context relations. Specifically, we expected that infants and adults both would dwell on objects more than contexts, especially in object-context congruent scenes, and that, if the target object of a scene determines that scene’s expected object-context relation, then incongruent contexts will violate infants’ expectations and elicit greater looking toward contexts in incongruent scenes than contexts in congruent ones.

Infants are sensitive to and learn from statistical regularities in their environment (Saffran, 2009). For example, by 3 months, infants’ looking preferences correlate with the relative frequency of the stimuli they are more likely to see (Bar-Haim, Ziv, Lamy, & Hodes, 2006; Kelly et al., 2005, 2007). When tested with Middle Eastern, Chinese, African, and Caucasian faces, Caucasian babies growing up in predominantly Caucasian areas of Sheffield, England, preferred looking at Caucasian faces, and Han babies growing up in China with no exposure to non-Chinese faces preferred looking at Chinese faces (Kelly et al., 2005, 2007).

The Present Studies

To explore the role of object-context relations in infant and adult object perception directly, infants and adults were presented with animals and vehicles appearing in object-context relations that were either congruent (e.g., a monkey on a tree log, an SUV in a parking lot) or incongruent (e.g., a monkey in the middle of the street, an SUV in a garden). For congruent object-context scenes, we imported animals into “nature” scenes and vehicles into “residential” scenes; for incongruent object-context scenes, we imported animals into “residential” scenes and vehicles into “nature” scenes. To explore the generality of our findings, we tested infants and adults in each condition with multiple exemplars nested in 2 classes of stimuli, animals and vehicles. To assess perception closely, we recorded infant and adult dwell times and eye movements over objects and contexts. Since the seminal studies of Buswell (1935) and Yarbus (1967), much work has focused on eye movements in natural scene perception, but almost exclusively with adult viewers and object-context congruent scenes. Following Salapatek and Kessen (1966), research in infant eye movements has been renewed by methodological advances. We followed this course.

When we look at a scene, we scan it with a series of fixations directed at different elements of the scene (Parkhurst, Law, & Niebur, 2002; Torralba, Oliva, Castelhano, & Henderson, 2006). The distribution of these fixations is not random (Rayner, 1998). Even if initial fixations are not (necessarily) related to the configuration of a scene, our fixations quickly dwell on the scene’s informative regions (Antes, 1974; Henderson & Hollingworth, 1999; Mackworth & Morandi, 1967). Eye movements are essential because we have to move our eyes to the part of the scene we want to process in detail (Henderson, 2003; Rayner, 1998). Infant as well as adult viewers do this. Of course, human scan paths vary across observers as well as different kinds of scenes. However, by moving our eyes toward certain objects, we reveal what is of interest to us (Ballard, Hayhoe, Pook, & Rao, 1997; Just & Carpenter, 1980). It is generally agreed that perceptual and cognitive processing occur during dwell times (e.g., Just & Carpenter, 1984), and foveated regions of a scene are encoded in greater detail than are peripheral regions (visual acuity attenuates rapidly as distance increases from the fovea; Hochberg, 1978).

Recording the location of infants’ and adults’ eye movements, their number and duration, and their order (as we do here) permits certain kinds of inferences about attention allocation and information processing that are difficult to make with other common methodologies, such as habituation or preferential looking in infancy (Haith, 2004; Lécuyer, Berthereau, Ben Taieb, & Tardif, 2004; Schlesinger & Casey, 2003). The eye-movement methodology we implemented was undertaken to advance our understanding of visual behavior and the processes that underlie it in comparable ways at two points in the lifespan. Our analyses focused on four questions: Are there differences in allocation of attention to objects versus their contexts? If so, do these differences vary as a function of the object-context relation? Do they differ for different categories of stimuli (animal natural kinds versus vehicle designed artifacts)? Do infants and adults show similar or different patterns? The results of this study speak to an array of basic issues in perception and cognition, including the role of context in object perception, holistic versus analytic processing in attention, and the implications of eye movement distributions for object cognition and memory in infancy and maturity.

Infant Study

This work coordinates general experience and object perception with eye-movement analysis in infancy. With respect to the development of eye movements, during the first month of life eye movements are often inaccurate and unreliable (Atkinson, 1992), and infants 1 to 3 months of age who are fixating a stimulus also sometimes show difficulty shifting their gaze (Butcher, Kalverboer, & Geuze, 2000; Hunnius & Geuze, 2004a, b). Hunnius and Geuze (2004a, b) recorded eye movements of infants between the ages of 1.5 and 6.5 months to the naturally moving face of their mother and an abstract stimulus. The way infants scanned these stimuli stabilized around approximately 4 months, and from their 3.5-month session on infants adapted their scanning to characteristics of the stimulus. Frank, Vul, and Johnson (2009) recorded eye movements of 3-, 6-, and 9-month-old infants during free viewing of video clips. Three-month-olds were less consistent in where they looked than older infants. With respect to infants’ perception of objects, Johnson, Slemmer, and Amso (2004) reported that 3-month-olds who tended to direct their gaze more often toward relevant parts of an object (specifically, its visible surfaces, points of intersection, and motions) perceived object unity, unlike comparable infants who tended to look more at less informative parts of a stimulus array. With respect to general experience, at birth babies do not look longer at faces from their own ethnic group than at faces from other groups, but by 3 months infants have developed looking preferences correlated with the relative frequency of own-group and other-group faces in their environment (Kelly et al., 2005).

Based on the empirical observations that by approximately 4 months eye movement control has stabilized ontogenetically, eye movements are under greater voluntary control, eye movements are sensitive to stimulus characteristics, eye movements confirm whole object perception, and looking patterns are coming to be shaped by predominant experience, we evaluated eye movements to object-context relations in 4-month-olds.

Methods

Participants

Twenty-eight infants (M age = 126.8 days, SD = 10.3, range = 110-150; 9 females) participated: 14 infants each in congruent object-context and incongruent object-context conditions. An additional 6 infants began the procedure, but they were not included because of fussiness (n = 1) or equipment/measurement failure (initial calibration failure, n = 3; calibration drift, n = 2), a common attrition rate in eye-movement studies with babies (Haith, 2004). All infants were term, healthy at birth and at the time of testing, and represented families of middle to high SES on the Hollingshead (1975) Four-Factor Index of Social Status (Bornstein, Hahn, Suwalsky, & Haynes, 2003).

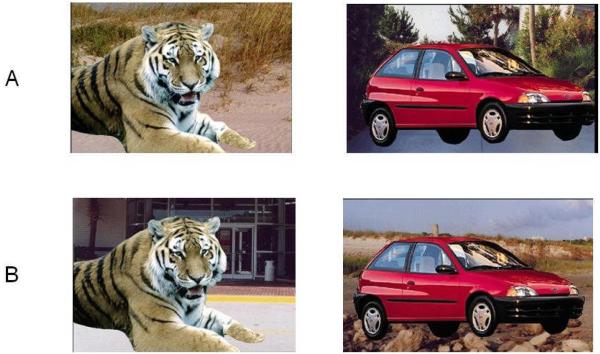

Stimuli

The full stimulus array consisted of 36 full-color digitized images (Table 1; see Figure 1 for examples) divided into 2 sets of stimuli to correspond to 2 conditions: congruent object-context and incongruent object-context. (Our use of the term “congruent” simply refers to the statistically real co-occurrence of particular object kinds with particular context kinds.) Color photographs of 9 animals and 9 vehicles were first obtained in their natural environments, such as fields, forests, or lakesides (“nature” scenes) and streets, driveways, or parking lots (“residential” scenes) and subsequently digitally manipulated in one of two ways. Target objects were first extracted from their contexts using a computer graphics software package and then imported into new contexts. To create congruent scenes, animals were placed in “nature” scenes other than where they were originally photographed and vehicles placed in other “residential” scenes. See Figure 1A. To create incongruent scenes, animals were placed in “residential” scenes and vehicles in “nature” scenes. See Figure 1B. Thus, both congruent and incongruent object-context scenes were equally manipulated.

Table 1.

Object-context pairings for the images

| Object | Congruent Object-Context | Incongruent Object-Context |
|---|---|---|
| Animals | | |
| Bear | Green field with trees in distance | Street, trees in distance |
| Bird | Alongside lake | Road, trees in distance |
| Cow | Garden with grass, flowers, and stones | Driveway, house in distance |
| Elk | Green and brown field with live oak trees | Parking lot, wood and brick wall in distance |
| Horse | Snow-covered forest | Parking lot, building in distance |
| Monkey | Arid field, placed on boulders | Road, yellow center line visible |
| Sheep | Green hillside (steppes) | Road, sidewalk visible |
| Squirrel | Green field with flowers | Driveway, wall in distance |
| Tiger | Sandy surface with beach grasses | Parking lot, store in distance |
| Vehicles | | |
| Coupe | Parking lot, wood and brick wall in distance | Green and brown field with live oak trees |
| Delivery truck | Parking lot, store in distance | Green field with flowers |
| Hatchback | Street, trees in distance | Arid field, placed on boulders |
| Motorcycle | Road, yellow center line visible | Green hillside (steppes) |
| Pickup truck | Parking lot, building in distance | Sandy surface with beach grasses |
| Sports Utility Vehicle | Driveway, house in distance | Garden with grass, flowers, and stones |
| Sports Car | Driveway, wall in distance | Snow-covered forest |
| Utility Golf Cart | Road, sidewalk visible | Alongside lake |
| VW Bug | Road, trees in distance | Green field with trees in distance |

Figure 1.

Example stimuli used for (A) congruent object-context and (B) incongruent object-context conditions.

Images in whole subtended 23° high by 29° wide on average, and objects were 19° high by 26° wide on average; these are typical parameters in natural scene eye-movement studies (see Foulsham, Barton, Kingstone, Dewhurst, & Underwood, 2009; Frank et al., 2009; Johnson et al., 2004; Hunnius & Geuze, 2004a, b). The stimulus images varied modestly in size, but did not differ significantly between categories (animals: M = 140.0 cm², SD = 39.0; vehicles: M = 148.4 cm², SD = 18.1), t(16) = 0.59, ns, and were identical between conditions (M = 144.2 cm², SD = 29.8, for both congruent and incongruent scenes). Because the objects and contexts varied in size over individual stimuli, the number of looks at the object on each trial was weighted by the proportion of the image taken by the object in that scene, and the number of looks at the context was weighted by the remaining proportion of that scene. Analyses were conducted with weighted values. Stimulus images were uniform in mean power spectral density across conditions at both low (0.03-4.95 cy/cm), F(1, 32) = 0.01, ns, and high (11.55-16.50 cy/cm), F(1, 32) = 0.75, ns, spatial frequency bands. In a series of exacting examinations, Mannen, Ruddock, and Wooding (1995, 1996, 1997) showed that viewers look in the same locations across normal scenes as they do in the same scenes after high-pass or low-pass filtering.

Materials and apparatus

An Applied Science Laboratories Model 504 eye-tracking system was used to record infants’ eye movements using infrared corneal reflection relative to coordinates on the stimulus plane continuously at 60 Hz. This system has high precision: Spatial error between true gaze position and computed measurement is less than 1° (Applied Science Laboratories, 2001). An Ascension Technologies electromagnetic motion tracker corrected camera angles for spontaneous head movements that exceeded the frame limits of optical tracking. The motion-tracking sensor was attached to an infant head band. Signals from the motion tracker were integrated with the eye camera control unit to guide the camera’s pan/tilt motors when corneal reflections were lost. Eye movement recording was synchronized with stimulus presentation using GazeTracker software that was running on a second microprocessor. Stimuli were presented on a 21- by 29-cm Panasonic video monitor.

Procedures

Infants were randomly assigned to one of the 2 experimental conditions. They were seated in an infant chair, and parents were asked not to interact with them during testing. Infants sat 90 cm from the monitor on which the stimuli were displayed, positioned between the monitor (in front) and the transmission component of the head movement tracking system (to the rear). The eye camera was located beneath the stimulus monitor in the same depth plane as the stimulus monitor’s screen. The eye tracking system was calibrated for each infant individually by presenting a rotating red 1.3° plus sign in the upper-left and lower-right corners of an otherwise uniform white field. When infants were judged to be fixating the targets, the known locations of those targets were mapped onto the corneal reflections for each infant using ASL calibration procedures.

Each infant saw all 18 stimuli from the assigned condition, 9 animals and 9 vehicles, in one of two random orders with the constraint that no more than two images from the same category appeared consecutively. Because each scene was shown only a single time, infants could not be biased by previous exposures to the scene or influenced by having seen a given object in multiple contexts or a given context with different objects. Between trials, a uniform field of 16 black 2.5° plus elements on a white background maintained infant attention toward the stimulus screen without systematically biasing fixation toward any particular region of the display. On each trial the stimulus was presented by a key press when the infant was judged to be looking toward the attention-getting display. Stimuli were presented for 10 s each, a common temporal parameter in eye-movement studies of natural scenes (see Rayner et al., 2007).
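The ordering constraint described above (no more than two images from the same category in a row) can be illustrated with a short sketch. This is only an illustration under stated assumptions, not the procedure actually used to build the two presentation orders: the function names, the rejection-sampling approach, and the seed are all hypothetical, and the stimulus labels are shorthand for the objects in Table 1.

```python
import random

def max_run_length(labels):
    """Return the length of the longest run of identical consecutive labels."""
    longest = 0
    run = 0
    previous = object()  # sentinel that never equals a real label
    for label in labels:
        run = run + 1 if label == previous else 1
        previous = label
        longest = max(longest, run)
    return longest

def constrained_order(stimuli, max_run=2, seed=None):
    """Shuffle (name, category) pairs until no category repeats more than
    max_run times in a row (simple rejection sampling; an assumed method)."""
    rng = random.Random(seed)
    order = list(stimuli)
    while True:
        rng.shuffle(order)
        if max_run_length([category for _, category in order]) <= max_run:
            return order

# Shorthand labels for the 9 animals and 9 vehicles (hypothetical identifiers).
ANIMALS = ["bear", "bird", "cow", "elk", "horse",
           "monkey", "sheep", "squirrel", "tiger"]
VEHICLES = ["coupe", "delivery truck", "hatchback", "motorcycle", "pickup truck",
            "suv", "sports car", "golf cart", "vw bug"]

stimuli = [(name, "animal") for name in ANIMALS] + \
          [(name, "vehicle") for name in VEHICLES]
order = constrained_order(stimuli, seed=1)
```

Rejection sampling is adequate here because a reasonable fraction of random shuffles of 9 + 9 items already satisfies the constraint, so only a handful of attempts is typically needed.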

Data coding and analysis

For each trial, fixations of 200 ms or more were plotted directly on the stimulus image using the GazeTracker software package. All fixations meeting this criterion were coded independently of saccade length and speed. Drift in fixation position was analyzed and corrected using the software’s purpose-designed algorithms. Adult viewers can theoretically obtain the gist of a scene from exposures as brief as 40 to 100 ms, but to process a scene normally adult viewers need to see the scene for at least 150 to 200 ms (Antes, Penland, & Metzger, 1981; Biederman, 1972; Biederman et al., 1973; Castelhano & Henderson, 2008; Intraub, 1981; Loftus, Nelson, & Kallman, 1983; Potter, 1975; Rayner, Smith, Malcolm, & Henderson, 2009; Rousselet, Joubert, & Fabre-Thorpe, 2005; Salvucci & Goldberg, 2000; Schyns & Oliva, 1994; Thorpe, Fize, & Marlot, 1996). Average adult dwell times in fixating natural scenes fall between 280 and 330 ms (Rayner, 1998; Rayner et al., 2007). For 3.5- to 4.5-month infants viewing “abstract” stimuli (not faces), median dwell times average 700 to 1000 ms (Hunnius & Geuze, 2004a, b). For these reasons, infancy researchers have often used a minimum of 200 ms as a dwell time threshold (Gredebäck & von Hofsten, 2004). Notably, fixation durations for individuals tend to be fairly stable across different tasks (Castelhano & Henderson, 2008; Rayner et al., 2007).
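As a rough illustration of the duration criterion, the sketch below keeps only fixations of at least 200 ms and shows how many consecutive 60 Hz samples that threshold corresponds to. The `Fixation` record and the function names are hypothetical; the actual fixation detection was performed by the GazeTracker software, whose internal algorithm is not reproduced here.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE_HZ = 60      # recording rate reported for the eye tracker
MIN_FIXATION_MS = 200    # minimum dwell time used as the fixation criterion

@dataclass
class Fixation:
    """Hypothetical fixation record: gaze position (deg) and duration (ms)."""
    x_deg: float
    y_deg: float
    duration_ms: float

def min_samples(min_ms=MIN_FIXATION_MS, rate_hz=SAMPLE_RATE_HZ):
    """Number of consecutive samples needed to span the minimum duration."""
    return math.ceil(min_ms * rate_hz / 1000)

def keep_fixations(fixations, min_ms=MIN_FIXATION_MS):
    """Retain only fixations that meet the duration criterion."""
    return [f for f in fixations if f.duration_ms >= min_ms]

candidates = [Fixation(3.0, 4.0, 150.0),
              Fixation(5.5, 2.0, 200.0),
              Fixation(9.0, 7.5, 820.0)]
kept = keep_fixations(candidates)
print(min_samples())   # 12 samples at 60 Hz
print(len(kept))       # 2 fixations survive the 200 ms criterion
```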

Fixations were plotted on the stimulus images with guide lines drawn 1° in radius around each fixation point. Plots were coded by visual inspection by coders uninformed as to the conditions, parameters, and hypotheses of the experiment. We coded two areas of interest (AOI). Fixations were classified as “object” if their corresponding guide lines fell on the object without spanning the object’s outer boundary. Fixations were classified as “context” if their corresponding guide lines fell in any remaining portion of the structured scene lying beyond 1° from the object boundary. We chose a 1° criterion with several considerations in mind. The fovea covers approximately a 1° field of view (i.e., a 1° angle with its vertex at the eye extending outward into space). Although the density of foveal receptors is not uniform, it is sufficiently high over an approximately 1° area that we normally obtain a clear view of an object in that area. Outside the fovea, acuity ranges from 15 to 50% of that of the fovea (Hochberg, 1978), and qualitatively different information is acquired from the region ≤1.5° around fixation than from any region further from fixation (Nelson & Loftus, 1980). (Inconveniently for eye movement study, it is not possible to tell where within an approximately 1° area a participant is looking; Rayner, 1998.) In addition, the resolution of the eye-tracker is not sufficient to differentiate more finely between looking at the context just beyond the boundary of an object, at the boundary itself, and at the object on the near side of the boundary. For these reasons, many infancy researchers have measured eye movement dwell times with a precision of 1° (as we did here). For example, Johnson et al. (2004; see also Frank et al., 2009) drew AOIs about 1° of visual angle on all sides of their stimuli, and Gredebäck, Theuring, Hauf, and Kenward (2008) estimated spatial accuracy to 1° error.

There is no definitive evidence that infants assign object boundaries to regions that adults perceive as figure vs. ground. Needham (1998) examined infants’ ability to segregate objects at shared boundaries and observed differences between 5 and 8 months in the features that infants detected and used in boundary assignment. Furthermore, boundary detection in infancy did not appear to be all-or-none and underwent development. Boundaries per se might be particularly compelling, not because they are part of the object or the context, but because they constitute locations of visual interest involving changes in contrast, luminance, and so forth. Thus, infants’ assignment of boundaries is equivocal. For all these reasons, we omitted boundaries from our object vs. context analyses. Traversals off the monitor were not coded and not included in any analyses either.
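The AOI rule described above can be summarized in a small sketch. Coding in the study was done by visual inspection of plotted fixations, so the code below is only a schematic restatement under assumptions: the object is approximated by an axis-aligned rectangle (real objects have irregular outlines), the function names are hypothetical, and fixations lying exactly 1° from the boundary are assigned to "object" or "context" by convention.

```python
import math

def signed_distance_to_rect(x, y, left, bottom, right, top):
    """Signed distance (deg) from a point to a rectangle's boundary:
    negative inside the rectangle, positive outside."""
    dx = max(left - x, 0.0, x - right)
    dy = max(bottom - y, 0.0, y - top)
    if dx > 0.0 or dy > 0.0:
        return math.hypot(dx, dy)                       # outside the object
    return -min(x - left, right - x, y - bottom, top - y)  # inside the object

def classify_fixation(signed_distance_deg, radius_deg=1.0):
    """Classify a fixation from its signed distance to the object boundary.

    'object'   : the 1-deg guide circle lies on the object without crossing
                 the boundary
    'context'  : the fixation lies at least 1 deg beyond the boundary
    'boundary' : anything in between; excluded from the analyses
    """
    if signed_distance_deg <= -radius_deg:
        return "object"
    if signed_distance_deg >= radius_deg:
        return "context"
    return "boundary"

# Hypothetical object occupying a 10 x 10 deg region of the image.
rect = (0.0, 0.0, 10.0, 10.0)
for point in [(5.0, 5.0), (9.5, 5.0), (10.5, 5.0), (13.0, 5.0)]:
    distance = signed_distance_to_rect(*point, *rect)
    print(point, classify_fixation(distance))
```

The three-way outcome makes the exclusion of boundary fixations explicit: only "object" and "context" labels would enter the object vs. context comparisons.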

Overall, coding parameters conformed to a set of conservative criteria for location and duration of infant looking. Fifteen percent of the sessions were coded by a second observer, and ratings coincided on 97% of the individual trials.

Preliminary Analyses

Most infants remained attentive and engaged throughout the task, but some became fussy and inattentive before completing all trials. On average, infants contributed 14 trials of usable data (range = 9-18); the number of usable trials did not differ between conditions, t(26) = 1.41, ns. Fixations are summarized both by their frequency and by their duration. Because these two measures correlated highly (object fixations r = .98, context fixations r = .95), and parallel analyses of frequencies and durations revealed the same pattern of findings, only numbers of fixations are reported. Preliminary analyses for outliers (Fox, 1997) were conducted, but none was found. There were no significant effects of gender or of stimulus order; subsequent analyses collapsed across these factors.

Results

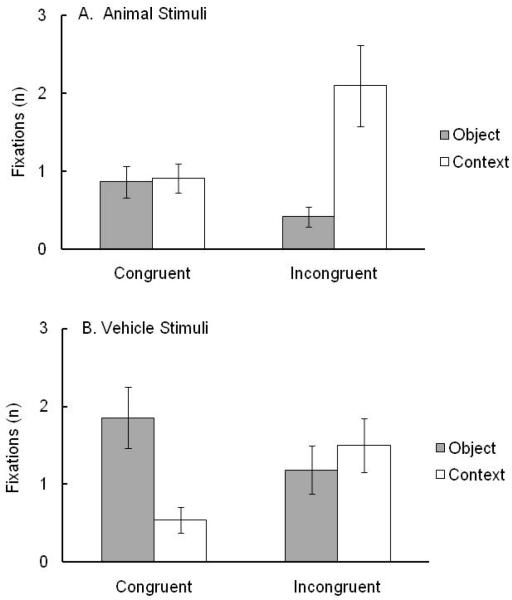

Infants’ weighted mean numbers of fixations (Figure 2) were analyzed in a 2 × 2 × 2 ANOVA with condition (congruent object-context, incongruent object-context) as a between-infants factor and category (animal, vehicle) and AOI (object, context) as within-infant factors. No main effects were significant: condition, F(1, 26) = 0.54, ns; category, F(1, 26) = 3.88, ns; and AOI, F(1, 26) = 0.77, ns. There were, however, two significant interactions involving AOI. First, AOI interacted with condition, F(1, 26) = 15.50, p = .001, ηp² = .37. To explore this interaction, tests for simple effects examined AOI differences separately for each condition. Results revealed more fixations to objects than contexts in the congruent condition (M = 1.36, SD = 1.09, and M = 0.73, SD = 0.59, respectively), F(1, 13) = 4.67, p = .05, ηp² = .26, and more fixations of contexts than objects in the incongruent condition (M = 1.80, SD = 1.56, and M = 0.80, SD = 0.80, respectively), F(1, 13) = 11.64, p = .005, ηp² = .47. Second, AOI interacted with category, F(1, 26) = 28.45, p < .001, ηp² = .52. To explore this interaction, tests for simple effects examined AOI differences separately for each category. Results revealed more fixations of objects than contexts for vehicle scenes (M = 3.05, SD = 2.67, and M = 1.85, SD = 2.09, respectively), F(1, 27) = 4.63, p = .041, ηp² = .15. No difference in fixations was found for animal scenes (M = 1.89, SD = 2.04, and M = 2.17, SD = 2.27, for objects and contexts, respectively), F(1, 27) = 0.32, ns. No other effects were significant.
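For readers who want to relate the reported effect sizes to the F ratios, partial eta squared can be recovered from an F statistic and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to values reported in this section; the function name is hypothetical, and this is offered as a reader's check rather than as the computation the analysis software performed.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# AOI x condition interaction, F(1, 26) = 15.50
print(round(partial_eta_squared(15.50, 1, 26), 2))  # 0.37
# AOI x category interaction, F(1, 26) = 28.45
print(round(partial_eta_squared(28.45, 1, 26), 2))  # 0.52
# Simple effect of AOI in the congruent condition, F(1, 13) = 4.67
print(round(partial_eta_squared(4.67, 1, 13), 2))   # 0.26
```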

Figure 2.

Weighted mean numbers of infant fixations (± SEM) on the object and context as a function of object category and object-context relation: congruent object-context and incongruent object-context conditions for animals and vehicles.

In addition to the number of fixations, the pattern of fixations was examined by coding each instance in which fixation shifted from one AOI to the other (i.e., from object to context or the reverse). This analysis of performance permits a direct examination of infants’ relational processing of objects and contexts. Fixations were relatively stable by AOI, with the mean number of such shifts being < 1 per trial. Shift frequency was analyzed in a 2 × 2 ANOVA with condition as a between-infants factor and category as a within-infant factor. There were no main effects of condition or category, but a significant interaction emerged between condition and category, F(1, 26) = 6.52, p = .017, ηp² = .20. To explore this interaction, tests for simple effects examined condition differences separately for each category. Results revealed more fixation shifts with incongruent stimuli (M = 1.17, SD = 1.18) than with congruent stimuli (M = 0.45, SD = 0.53) when infants looked at vehicles, F(1, 13) = 4.55, p = .05, ηp² = .26, but no difference when they looked at animals (M = 0.62, SD = 0.54, for congruent; M = 0.61, SD = 0.75, for incongruent), F(1, 13) = 2.24, ns.
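The shift measure amounts to counting object-to-context and context-to-object transitions in the ordered sequence of coded fixations on a trial. A minimal sketch follows; the function name and the labels are hypothetical, and it assumes that fixations excluded from the AOI analyses (e.g., those on the object boundary) are dropped before transitions are counted, which the text does not state explicitly.

```python
def count_shifts(aoi_sequence):
    """Count transitions between 'object' and 'context' in a trial's ordered
    fixation labels. Labels other than these two are dropped first (an
    assumption about how excluded fixations were handled)."""
    coded = [aoi for aoi in aoi_sequence if aoi in ("object", "context")]
    return sum(1 for first, second in zip(coded, coded[1:]) if first != second)

trial = ["object", "object", "context", "object"]
print(count_shifts(trial))  # 2 shifts: object->context, context->object
```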

A final analysis was conducted to examine infant performance in line with more conventional preferential-looking procedures. Total looking time was calculated by summing over all fixations for each trial (both object and context). Mean overall looking times did not differ between categories (animals: M = 5.01 s, SD = 2.00; vehicles: M = 4.58 s, SD = 1.50), F(1, 26) = 1.00, ns, or between conditions (congruent: M = 4.78 s, SD = 1.20; incongruent: M = 4.80 s, SD = 1.49), F(1, 26) = 0.01, ns. Thus, infants did not demonstrate an overall preference for stimuli of one category or condition over those of the others.

Summary

For infants, the results showed (a) equivalent looking-time preferences for animals and vehicles and for congruent and incongruent object-context relations overall, (b) more fixations of objects in congruent object-context relations contrasting with more fixations of contexts in incongruent object-context relations, (c) more fixations of objects than contexts in vehicle scenes, and (d) more fixation shifts for incongruent than congruent vehicle scenes.

Adult Study

Methods

Participants

Twenty-two undergraduates (M = 20.05 years, SD = 1.13; 17 females) were paid $10 for their participation in a single experimental session. All participants had normal or corrected-to-normal vision.

Materials and apparatus

The same stimuli presented to infants were presented to adults. Images in whole subtended 29° high by 36° wide on average; objects were 18° high by 27° wide on average.

As with infants, eye movements were collected using the ASL Model 504 system with a motion-tracking sensor. The calibration stimulus consisted of nine 1°-diameter red dots in a 3 × 3 grid displayed against a black screen. The dots were placed 12° from each other. Between trials, a 6°-diameter round smiley face was displayed in the middle of the screen.

Procedure

Participants were seated 85 cm from the stimulus monitor. They were told they would have to answer questions about the images at the end of the session. These instructions were designed to motivate participants to pay attention during the session.

Following a 9-point calibration, participants were shown all 18 images in each condition (congruent object-context; incongruent object-context) intermixed in a unique random order for each participant (determined by GazeTracker) for a total of 36 trials. The smiley face was shown for 1 s in the middle of the stimulus monitor before each stimulus to center the participant’s fixation on the screen. Each stimulus was shown for 15 s.

Data coding and analyses

Adult fixations were coded in the same manner as for infants; reliability for 10% of the trials was 98%. On average, adults contributed 34 trials of usable data (range = 26-36). As with infants, mean numbers of fixations were weighted by the proportion taken by the object in the scene for fixations of the object and the remaining proportion of the scene for fixations of the context. As with infants, fixation frequency and duration were correlated (object fixations r = .98, context fixations r = .97); thus, only numbers of fixations are reported. Preliminary analyses identified no outliers (Fox, 1997) and no effect for gender.
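
The decision to report only numbers of fixations rests on the strong correlation between fixation frequency and duration. A minimal sketch of a Pearson correlation is given below; the function name and the example values are hypothetical and are not the study's data.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-participant values (NOT data from the study):
# mean number of object fixations and mean summed fixation duration (s).
fixation_counts = [2, 4, 5, 7, 9]
fixation_durations = [0.6, 1.1, 1.6, 2.0, 2.8]

if __name__ == "__main__":
    print(round(pearson_r(fixation_counts, fixation_durations), 2))
```

When two measures correlate this highly, analyses of either one would be expected to yield closely similar patterns, which is the rationale for reporting a single measure.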

Results

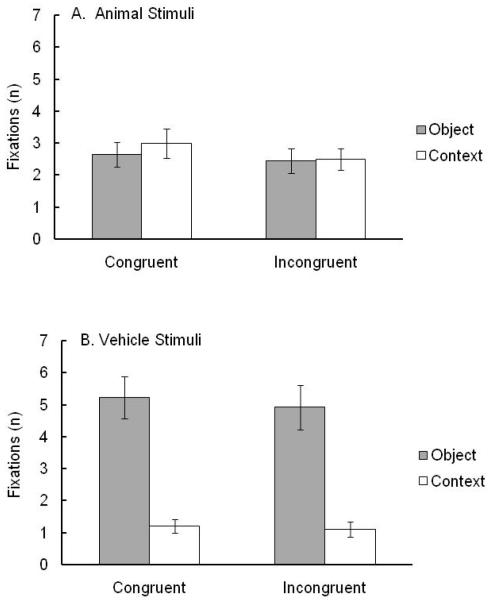

Adults’ weighted mean numbers of fixations (Figure 3) were analyzed in a 2 × 2 × 2 ANOVA with condition (congruent object-context; incongruent object-context), category (animal, vehicle), and AOI (object, context) as within-subject factors. A main effect emerged for condition, F(1, 21) = 10.21, p = .004, ηp² = .46, with more fixations to congruent (M = 3.02, SD = 1.69) than incongruent (M = 2.74, SD = 1.64) scenes. A main effect emerged for category, F(1, 21) = 14.98, p = .001, ηp² = .42, with more fixations to vehicles (M = 3.11, SD = 1.83) than to animals (M = 2.65, SD = 1.50). A main effect emerged for AOI, F(1, 21) = 18.28, p < .001, ηp² = .47, with more fixations on objects (M = 3.81, SD = 2.40) than on contexts (M = 1.95, SD = 1.35). The main effects for category and AOI were subsumed by a significant Category by AOI interaction, F(1, 21) = 79.24, p < .001, ηp² = .79. To explore this interaction, tests for simple effects examined AOI differences separately for each category. Results revealed more fixations on objects than contexts for vehicle scenes (M = 5.08, SD = 3.08, and M = 1.16, SD = .99, respectively), F(1, 21) = 46.08, p < .001, ηp² = .69. No difference in fixations was found for animal scenes (M = 2.55, SD = 1.75, and M = 2.75, SD = 1.76, objects and contexts, respectively), F(1, 21) = .27, ns.

Figure 3.

Weighted mean numbers of adult fixations (± SEM) on the object and context as a function of object category and object-context relation: congruent object-context and incongruent object-context conditions for animals and vehicles.
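
As a reading aid for the effect sizes in the adult fixation analysis, partial eta squared can be recovered from an F ratio and its degrees of freedom as F · df_effect / (F · df_effect + df_error). The sketch below applies that identity to the F values reported in the text; the function and dictionary names are illustrative. Recomputed values round to the reported ones for the category, AOI, interaction, and vehicle simple effects; for the condition effect the identity gives about .33 rather than the reported .46, which may reflect a transcription difference in the source.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported in the text for the adult fixation ANOVA.
REPORTED_EFFECTS = {
    "condition": (10.21, 1, 21),
    "category": (14.98, 1, 21),
    "AOI": (18.28, 1, 21),
    "category x AOI": (79.24, 1, 21),
    "vehicle simple effect": (46.08, 1, 21),
}

if __name__ == "__main__":
    for name, (f_value, df1, df2) in REPORTED_EFFECTS.items():
        print(f"{name}: {partial_eta_squared(f_value, df1, df2):.2f}")
```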

Gaze shift frequency was analyzed in a 2 × 2 ANOVA with condition and category as within-subject factors. A main effect emerged for category, F(1, 21) = 12.38, p = .002, ηp² = .37, with more switches for animals (M = 2.26, SD = 1.49) than vehicles (M = 1.76, SD = 1.27). No other main effects or interactions were found.
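
One way to operationalize gaze shift frequency is sketched below. It assumes that a shift is counted whenever two consecutive fixations fall in different AOIs (object vs. context); this definition and the function name are assumptions made for illustration, and the sequence shown is hypothetical.

```python
def count_gaze_shifts(aoi_sequence):
    """Count transitions between different AOIs in an ordered fixation sequence."""
    return sum(
        1
        for previous, current in zip(aoi_sequence, aoi_sequence[1:])
        if previous != current
    )


# Hypothetical ordered fixation sequence for one trial (NOT data from the study).
trial_fixations = ["object", "object", "context", "object", "context", "context"]

if __name__ == "__main__":
    print(count_gaze_shifts(trial_fixations))
```

Repeated fixations within the same AOI do not add to the count, so the measure reflects the order of eye movements rather than their number.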

Summary

For adults, the results showed (a) more fixations of congruent than incongruent scenes, (b) more fixations of vehicles than animals, (c) more fixations of objects than contexts, (d) equal fixations of animals and their contexts but more fixations of vehicles than their contexts, and (e) more shifts of fixation when inspecting animal scenes than vehicle scenes.

General Discussion

The four questions explored in these studies addressed whether differences exist in infant and adult attention allocation to objects and their contexts, whether and how they vary as a function of the object-context relation, whether and how they vary for different categories of stimuli (animals versus vehicles), and whether and how they vary for infants and adults. Analyses of the mean numbers and durations of infants’ and adults’ fixations converged and revealed some similar and some different patterns. When inspecting object-context scenes, infants and adults alike looked at both objects and their contexts. Moreover, infants and adults explored animals and their contexts equally, but looked at vehicles more than their contexts. Performance differed between age groups, however, in relation to scene components by condition. Infants looked more at objects than contexts when viewing congruent object-context scenes, and they looked more at contexts than objects when viewing incongruent object-context scenes. By contrast, adult viewing patterns were better characterized by clear main effects: Adults looked more at congruent than incongruent scenes, vehicles than animals, and objects than contexts, as has been found before (Christianson, Loftus, Hoffman, & Loftus, 1991; G. R. Loftus & Mackworth, 1978).

Infants appear to fixate important or interesting objects in object-context congruent scenes. Given the same fixed amount of available inspection time and the same stimuli, infants’ patterns of looking at object-context incongruent conditions differed: Infants explored contexts more than objects and they shifted their gaze between object and context more (at least for vehicle scenes). When infants find objects in their naturally occurring contexts, which presumably they do in the normal course of events, they look at both but pay more attention to target objects. Even with a conservative measure, one that did not include short (< 200 ms) looks or looks at boundaries, we found that in naturally occurring conditions infants regard objects more than their contexts. Infants also treated object-context relations for two categories of stimuli (animals and vehicles) similarly.

Not unexpectedly, adult looking was more straightforward than that of infants. It would appear that more mature eye-movement patterns are more discrete, focusing on more regular relations (congruent > incongruent) and more central sources of information (objects > contexts). In considering these developmental differences, we hasten to note limitations of the comparison: Ours is a cross-sectional (not longitudinal) design, and infant and adult procedures differed (e.g., between- vs. within-subject designs, durations of scene exposures, and so forth).

Nevertheless, the different patterns of infant and adult eye movements are developmentally sensible. The adult data follow significant main effects of both condition and AOI. The AOI effect shows that adults look more often at objects than contexts, whereas infants did not. This result is consistent with a general expectation that development is accompanied by better extraction of objects from their contexts, supporting better selective attention to them. Additionally, the main effect of condition in adults – more frequent looks in congruent than incongruent scenes – suggests that adults are slower to shift fixations between incongruent regions. Perhaps more knowledge about normative object-context relations guides adults to spend more time attempting to verify scene contents, and to shift fixation less frequently, with incongruent scenes. Thus, adults do not show the infant condition × AOI interaction because their looks are more bound to objects by their more mature and automatic extraction capability and by a slow-down from top-down verification of incongruent content. Infants and adults alike showed sensitivity to object-context relations, but demonstrated it in different ways ascribable to developmental differences in visual analysis, attention, and scene knowledge.

Adults look more at objects than contexts, and young infants already show a processing advantage for objects when objects (even designed artifacts) appear in congruent object-context relations, those in which the visual system and visual cognition evolved phylogenetically and develop ontogenetically. Perhaps the congruent object-context advantage we found in infants is an example of an experience-expectant effect. Experience-expectant processes are common to all members of a species and presumably evolved as neural preparation for incorporating general information from the environment efficiently and satisfactorily (Greenough, Black, & Wallace, 1987, 1993). Our environment is characterized by statistical regularities that can be learned and predicted under many circumstances (e.g., when we see a person, it is highly probable that s/he has a face). Infants are exposed to environmental regularities and must learn them to plan appropriate action. Regularities occur in space or time or both, and it is acknowledged that infants learn many kinds of statistically defined associations and sequences (e.g., Kirkham, Slemmer, & Johnson, 2002; Saffran, 2009). Indeed, infants are believed to be good statistical learners (e.g., Saffran, 2009), and, just as they appear to be skilled at extracting correlations among attributes (Quinn, Johnson, Mareschal, Rakison, & Younger, 2000), they could be both fast and accurate at extracting regular and prominent co-occurrences such as object-context relations in congruent scenes. For example, infants in the age range we studied see an image that does not contain the complete outline of a square qua a square because the perceptual system expects forms (Kavšek, 2002, 2009). These expectations are in part built into the anatomical and functional organization of the visual pathways; and they are derived in part from common experience. 
At birth, babies do not look longer at faces from their own ethnic group than at faces from other groups (e.g., Kelly et al., 2005). By just 3 months, however, they have developed looking preferences correlated with the relative frequency of own-group vs. other-group faces in their environment (Bar-Haim et al., 2006; Kelly et al., 2005, 2007). Similarly, by 3 to 4 months, infants being reared primarily by mothers are able to discriminate among individual female faces, but not among individual male faces, and look longer at unfamiliar female than unfamiliar male faces (Quinn, Yahr, Kuhn, Slater, & Pascalis, 2002). In a parallel way, infants being reared primarily by fathers look longer at unfamiliar male faces than at unfamiliar female faces. Thus, very early in infancy, object processing is tuned to the probable characteristics of the visual environment, much as speech processing attunes to the probable properties of the ambient language environment (Werker, Maurer, & Yoshida, 2009). The tuning is expressed as a sensitivity to objects in congruent object-context relations, a sensitivity that biases attention away from the unfamiliar or less salient or important stimuli.

Could object-context congruency cue infant attention in a similar way? Are infants anatomically and physiologically equipped to take advantage of experience-expectant statistical regularities in their environment? Perhaps so. In adults, three cortical areas have been identified that mediate visual object-context processing, including the parahippocampal cortex, the retrosplenial complex, and the medial prefrontal cortex. These regions are selectively activated when participants view objects with strong contextual associations compared with objects with weak contextual associations (see Aminoff, Gronau, & Bar, 2007; Bar, 2004; Bar & Aminoff, 2003). The hippocampal system is activated during the expression of memory across changes in temporal or physical contexts (Brown & Aggleton, 2001; Eichenbaum, Otto, & Cohen, 1994). Damage to the hippocampus interferes with the ability to remember the context in which an object occurred (Rogan, Leon, Perez, & Kandel, 2005), and cells in the hippocampus fire selectively to a variety of images of a single stimulus even when appearing in different contexts (Quiroga, Reddy, Kreiman, Koch, & Fried, 2005). Our infant findings provide behavioral evidence consistent with a notion of early maturation of this hippocampal system. Cell formation appears to take place in the first half of gestation in all hippocampal structures, and even the dentate gyrus shows cell formation in the first year of life (Seress, 2001). Thus, hippocampal circuitry develops rapidly and is functional by mid-gestation (Khazipov et al., 2001), and in humans it is more mature at birth than was previously believed (Alvarado & Bachevalier, 2000; Seress, 2001).

Information about scenes is abstracted throughout the time course of viewing a scene. Our results demonstrate that infants’ eye movements reflect on-line processing of visual scenes. The eye-movement methodology revealed that some objects were attended to more than others as determined by whether they appeared in congruent or incongruent object-context relations, thereby showing that infants respond flexibly to different object-context relations, depending on the combinations they saw. However, in infants the object-context congruency of scenes apparently channels cognitive resources to objects, whereas incongruencies excite more attention to contexts. Extending from our findings, it is likely that as human infants encounter naturally occurring scenes, they eventually pay more attention to objects than to their contexts, and so they may also learn about objects as well as objects’ typical contexts. When 6-month-old infants are presented in a habituation-recognition task with figures occurring in the same background, as opposed to different backgrounds, they recognize figures presented with the same background faster. Thus, a familiar background facilitates object recognition, whereas an unfamiliar background hampers recognition (Haaf, Fulkerson, Pescara-Kovach, & Jablonski, 2000). A familiar (congruent) background facilitates attention to, and encoding of, figures, whereas a novel (incongruent) background can interfere, diverting attention away from target objects, resulting in enhanced inspection of the context. Infants’ attention allocation may have cognitive consequences. In adults, memory for objects in a scene is related to number of fixations: More fixations yield higher recognition scores (Christianson et al., 1991; G. R. Loftus, 1972, 1983). 
Natural contextual associations also facilitate the recognition of other objects in the environment by providing predictions about the kinds of objects that are likely to be found in specific contexts (Bar & Ullman, 1996; Biederman et al., 1982; Davenport & Potter, 2004; Palmer, 1975), and they direct attentional resources to selected items in the environment (Chun & Nakayama, 2000; Neider & Zelinsky, 2006). It appears that context exerts an effect on object processing (Boyce & Pollatsek, 1992) and vice-versa.

The patterns of eye movements we observed also speak to holistic versus analytic attentional processes in infancy. As they appear in the everyday world to infants, objects may be independently represented but associated with their contexts, or objects and contexts may form blended representations. In other words, object and context could be perceived and encoded as a holistic stimulus compound, or they could be perceived and encoded as independent components. Insofar as infants treated congruent vs. incongruent object-context conditions differently, they give evidence that they process objects (animals and vehicles) and contexts in terms of components – object and context – rather than as holistic compounds. Similarly, Haaf et al. (1996) found that 6-month-olds habituated to a target object more slowly when its background context varied from trial to trial than when a single context was present, and so concluded that infants perceived object and context as separate. Our eye-movement data further indicate that infants process objects and their contexts interactively, and not in isolation, and, moreover, that knowledge of which objects and contexts tend to co-occur influences infant object perception. Scene processing does not appear to proceed in parallel and separate channels, but interactively integrates object and context to facilitate object information processing, and uses object identity to promote understanding of context and context to promote understanding of objects (Bar, 2004).

The results from the current study raise new questions in the area of object-context relations in infant perception and cognition. First, objects in natural scenes are accompanied by other objects (with greater or lesser probabilities) and appear in certain contexts (with greater or lesser probabilities). So, learning about the natural world implies learning these additional object-object and object-context regularities. Experiences in the world thus shape predictions and set expectations about other objects in scenes and their setting as well as their configuration. Being carried into the kitchen raises expectations, not only about future milk delivery, but about a refrigerator, stove, and favorite cup. These soon predictable properties of the environment in turn facilitate perception, and in particular object and context recognition, and might sensitize the visual system or visual cognition to certain representations that then become easier to recognize. Thus, an object can activate a context frame or a set of frames (Davenport & Potter, 2004), and a frame can activate an object or a set of objects (Bar & Ullman, 1996). We know where objects are likely to be found, and may use this knowledge to help identify objects we see. Objects that appear in semantically congruent contexts are recognized faster (Ganis & Kutas, 2003) and more accurately (Davenport & Potter, 2004) than objects in semantically incongruent contexts.

Second, as foveated regions of a scene are typically encoded in greater detail than peripheral regions (Smith et al., 2002), and memory for a scene is related to the fixations it receives (Christianson et al., 1991; G. R. Loftus, 1972), there are likely to be functional consequences of infants’ spending more time looking at objects in congruent object-context relations (as they naturally and more frequently appear in the world) than in novel incongruent ones. In other terms (Lavie, 1995), the load in processing non-target information (context) helps to determine the degree of processing target information (object). Active attention and learning tend to result in deeper processing (Nachman, Stern, & Best, 1986). On such a depth-of-processing argument, infants should learn more about objects when they appear in congruent than incongruent object-context relations. Perhaps, then, infants also remember more about objects that appear in congruent (than incongruent) contexts. Available evidence supports such a prediction. For example, retention is generally improved when the contexts of encoding and retrieval are similar, a phenomenon called “reinstatement” or “context-dependent” memory (e.g., Smith, 1982; Tulving & Thomson, 1973). Retrieval of focal information is typically better when that information is retrieved in the same context in which it was learned than when retrieved in a different context. Context is encoded along with to-be-remembered object information, and context serves as a retrieval cue for object information. Consequently, object information memory is facilitated by the presence, during retrieval, of the context that was present during learning (Haaf et al., 1996).

Third, the vast majority of work in infancy has been conducted using traditional looking-time measures, which afford information about overall preferences and information processing, but which do not allow determination of which aspects of stimuli infants actually look at; eye-movement technology does (e.g., Gallay, Baudouin, Durand, Lemoine, & Lécuyer, 2006; Johnson et al., 2004). Of course, the relation between what an individual is looking at and what the individual is mentally processing is not straightforward. The “eye–mind” approach acknowledges these limitations (Irwin, 2003; Viviani, 1990) and is attended by methodological caveats (Inhoff & Radach, 1998). Nonetheless, over complex stimuli, such as natural scenes, it is efficient and common to move our eyes (Schlingensiepen, Campbell, Legge, & Walker, 1986), and investigations of relations between eye movements and cognitive processes have been productive in many domains, such as selective attention, visual search, reading comprehension, and visual working memory (e.g., Hayhoe & Ballard, 2005; Liversedge & Findlay, 2000; Rayner, 1998). Peering more deeply into infants’ eyes will illuminate early perception and cognition as well.

Acknowledgments

We thank K. Billington, M. Hochstein, S. Jones, A. Laban, L. May, A. Starr, M. Stevenson, and T. Taylor. This research was supported by the Intramural Research Program of the NIH, NICHD.

References

- Alvarado MC, Bachevalier J. Revisiting the maturation of medial temporal lobe memory functions in primates. Learning and Memory. 2000;7:244–256. doi: 10.1101/lm.35100.

- Aminoff E, Gronau N, Bar M. The parahippocampal cortex mediates spatial and nonspatial associations. Cerebral Cortex. 2007;17:1493–1503. doi: 10.1093/cercor/bhl078.

- Antes JR. The time course of picture viewing. Journal of Experimental Psychology. 1974;103:62–70. doi: 10.1037/h0036799.

- Antes JR, Metzger RL. Influences of picture context on object recognition. Acta Psychologica. 1980;44:21–30.

- Antes JR, Penland JG, Metzger RL. Processing global information in briefly presented scenes. Psychological Research. 1981;43:277–292. doi: 10.1007/BF00308452.

- Applied Science Laboratories. Eye Tracking System Instruction Manual. Model 504 Pan/Tilt Optics. Bedford, MA: 2001.

- Atkinson J. Early visual development: Differential functioning of parvocellular and magnocellular pathways. Eye. 1992;6:129–135. doi: 10.1038/eye.1992.28.

- Ballard DH, Hayhoe MM, Pook PK, Rao RPN. Deictic codes for the embodiment of cognition. Behavioral and Brain Sciences. 1997;20:723–767. doi: 10.1017/s0140525x97001611.

- Bar M. Visual objects in context. Nature Reviews Neuroscience. 2004;5:617–629. doi: 10.1038/nrn1476.

- Bar M, Aminoff E. Cortical analysis of visual context. Neuron. 2003;38:347–358. doi: 10.1016/s0896-6273(03)00167-3.

- Bar M, Ullman S. Spatial context in recognition. Perception. 1996;25:343–352. doi: 10.1068/p250343.

- Bar-Haim Y, Ziv T, Lamy D, Hodes R. Nature and nurture in own-race face processing. Psychological Science. 2006;17:159–163. doi: 10.1111/j.1467-9280.2006.01679.x.

- Biederman I. Perceiving real world scenes. Science. 1972;177:77–80. doi: 10.1126/science.177.4043.77.

- Biederman I. On the semantics of a glance at a scene. In: Kubovy M, Pomerantz J, editors. Perceptual organization. Erlbaum; Hillsdale, NJ: 1981. pp. 213–253.

- Biederman I. Recognition-by-components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Biederman I, Mezzanotte RJ, Rabinowitz JC. Scene perception: Detecting and judging objects undergoing violation. Cognitive Psychology. 1982;14:143–177. doi: 10.1016/0010-0285(82)90007-x.

- Bornstein MH, Hahn C-S, Suwalsky JTD, Haynes OM. Socioeconomic status, parenting, and child development: The Hollingshead Four-Factor Index of Social Status and the Socioeconomic Index of Occupations. In: Bornstein MH, Bradley RH, editors. Socioeconomic status, parenting, and child development. Erlbaum; Mahwah, NJ: 2003. pp. 29–82.

- Boyce SJ, Pollatsek A. Identification of objects in scenes: The role of scene background in object naming. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1992;18:531–543. doi: 10.1037//0278-7393.18.3.531.

- Brown MW, Aggleton JP. Recognition memory: What are the roles of the perirhinal cortex and hippocampus? Nature Reviews Neuroscience. 2001;2:51–61. doi: 10.1038/35049064.

- Buswell GT. How people look at pictures. University of Chicago Press; Chicago: 1935.

- Butcher PR, Kalverboer AF, Geuze RH. Infants’ shifts of gaze from a central to a peripheral stimulus: A longitudinal study of development between 6 and 26 weeks. Infant Behavior and Development. 2000;23:3–21.

- Butler J, Rovee-Collier C. Contextual gating of memory retrieval. Developmental Psychobiology. 1989;22:533–552. doi: 10.1002/dev.420220602.

- Castelhano MS, Henderson JM. Stable individual differences across images in human saccadic eye movements. Canadian Journal of Experimental Psychology. 2008;62:1–14. doi: 10.1037/1196-1961.62.1.1.

- Christianson SA, Loftus EF, Hoffman H, Loftus GR. Eye fixations and memory for emotional events. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1991;17:693–701. doi: 10.1037//0278-7393.17.4.693.

- Chun M, Nakayama K. On the functional role of implicit memory for the adaptive deployment of attention across scenes. Visual Cognition. 2000;7:65–81.

- Colombo J, Laurie C, Martelli T, Hartig B. Stimulus context and infant orientation discrimination. Journal of Experimental Child Psychology. 1984;37:576–586. doi: 10.1016/0022-0965(84)90077-8.

- Davenport JL, Potter MC. Scene consistency in object and background perception. Psychological Science. 2004;15:559–564. doi: 10.1111/j.0956-7976.2004.00719.x.

- Eichenbaum H, Otto T, Cohen NJ. Two functional components of the hippocampal memory system. Behavioral and Brain Sciences. 1994;17:449–518.

- Foulsham T, Barton JJS, Kingstone A, Dewhurst R, Underwood G. Fixation and saliency during search of natural scenes: The case of visual agnosia. Neuropsychologia. 2009;47:1994–2003. doi: 10.1016/j.neuropsychologia.2009.03.013.

- Fox J. Applied regression analysis, linear models, and related methods. Sage; Thousand Oaks, CA: 1997.

- Frank MC, Vul E, Johnson SP. Development of infants’ attention to faces during the first year. Cognition. 2009;110:160–170. doi: 10.1016/j.cognition.2008.11.010.

- Franken RE, Diamond DC. Picture recognition memory in children: Effects of homogeneous versus heterogeneous classes of pictures. Perceptual and Motor Skills. 1983;57:749–750. doi: 10.2466/pms.1983.57.3.749.

- Gallay M, Baudouin J, Durand K, Lemoine C, Lécuyer R. Qualitative differences in the exploration of upright and upside-down faces in four-month-old infants: An eye-movement study. Child Development. 2006;77:984–996. doi: 10.1111/j.1467-8624.2006.00914.x.

- Ganis G, Kutas M. An electrophysiological study of scene effects on object identification. Cognitive Brain Research. 2003;16:123–144. doi: 10.1016/s0926-6410(02)00244-6.

- Gredebäck G, Theuring C, Hauf P, Kenward B. The microstructure of infants’ gaze as they view adult shifts in overt attention. Infancy. 2008;13:533–543.

- Gredebäck G, von Hofsten C. Infants’ evolving representations of object motion during occlusion: A longitudinal study of 6- to 12-month-old infants. Infancy. 2004;6:165–184. doi: 10.1207/s15327078in0602_2.

- Greenough W, Black J, Wallace C. Experience and brain development. Child Development. 1987;58:539–559.

- Greenough W, Black J, Wallace C. Experience and brain development. In: Johnson M, editor. Brain development and cognition. Blackwell; Oxford: 1993. pp. 319–322.

- Haaf RA, Fulkerson AL, Pescara-Kovach L, Jablonski B. The attention-getting function of stimulus context during habituation: The long and short of looking time. Presentation at the International Conference on Infant Studies; Brighton, England. 2000.

- Haaf RA, Lundy BL, Coldren JT. Attention, recognition, and the effects of stimulus context in 6-month-old infants. Infant Behavior and Development. 1996;19:93–106.

- Haith MM. Progress and standardization in eye movement work with human infants. Infancy. 2004;6:257–265. doi: 10.1207/s15327078in0602_6.

- Hayhoe M, Ballard D. Eye movements in natural behavior. Trends in Cognitive Sciences. 2005;9:188–194. doi: 10.1016/j.tics.2005.02.009.

- Henderson JM. Human gaze control during real-world scene perception. Trends in Cognitive Sciences. 2003;7:498–504. doi: 10.1016/j.tics.2003.09.006.

- Henderson JM, Hollingworth A. High-level scene perception. Annual Review of Psychology. 1999;50:243–271. doi: 10.1146/annurev.psych.50.1.243.

- Hochberg J. Perception. 2nd ed. Prentice Hall; Englewood Cliffs, NJ: 1978.

- Hunnius S, Geuze RH. Gaze shifting in infancy: A longitudinal study using dynamic faces and abstract stimuli. Infant Behavior & Development. 2004a;27:397–416. doi: 10.1207/s15327078in0602_5.

- Hunnius S, Geuze RH. Developmental changes in visual scanning of dynamic faces and abstract stimuli in infants: A longitudinal study. Infancy. 2004b;6:231–255. doi: 10.1207/s15327078in0602_5.

- Inhoff AW, Radach R. Definition and computation of oculomotor measures in the study of cognitive processes. In: Underwood G, editor. Eye guidance in reading and scene perception. Elsevier; Oxford, England: 1998. pp. 29–54.

- Intraub H. Rapid conceptual identification of sequentially presented pictures. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1981;10:115–125. doi: 10.1037//0278-7393.10.1.115.

- Irwin JR. Parent and non-parent perception of the multimodal infant cry. Infancy. 2003;4:503–516.

- Johnson SP, Slemmer JA, Amso D. Where infants look determines how they see: Eye movements and object perception performance in 3-month-olds. Infancy. 2004;6:185–201. doi: 10.1207/s15327078in0602_3.

- Just MA, Carpenter PA. A theory of reading: From eye fixations to comprehension. Psychological Review. 1980;87:329–354.

- Just MA, Carpenter PA. Using eye fixations to study reading comprehension. In: Kieras DE, Just MA, editors. New Methods in Reading Comprehension Research. Erlbaum; Hillsdale, NJ: 1984. pp. 151–182.

- Kavšek MJ. The perception of static subjective contours in infancy. Child Development. 2002;73:331–344. doi: 10.1111/1467-8624.00410.

- Kavšek MJ. The perception of subjective contours and neon color spreading figures in young infants. Attention, Perception, & Psychophysics. 2009;71:412–420. doi: 10.3758/APP.71.2.412.

- Kelly D, Liu S, Ge L, Quinn P, Slater A, Lee K, Liu Q, Pascalis O. Cross-race preferences for same-race faces extend beyond the African versus Caucasian contrast in 3-month-old infants. Infancy. 2007;11:87–95. doi: 10.1080/15250000709336871. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kelly DJ, Quinn PC, Slater AM, Lee K, Gibson A, Smith M, Ge L, Pascalis O. Three-month-olds, but not newborns, prefer own-race faces. Developmental Science. 2005;8:F31–F36. doi: 10.1111/j.1467-7687.2005.0434a.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Khazipov R, Esclapez M, Caillard O, Bernard C, Khalilov I, Tyzio R. Early development of neuronal activity in the primate hippocampus in utero. Journal of Neuroscience. 2001;21:9770–9781. doi: 10.1523/JNEUROSCI.21-24-09770.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kirkham NZ, Slemmer JA, Johnson SP. Visual statistical learning in infancy: Evidence for a domain general learning mechanism. Cognition. 2002;83:B35–B42. doi: 10.1016/s0010-0277(02)00004-5.

- Lavie N. Perceptual load as a necessary condition for selective attention. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:451–468. doi: 10.1037//0096-1523.21.3.451.

- Lécuyer R, Berthereau S, Ben Taieb A, Tardif N. Location of a missing object and detection of its absence by infants: Contribution of an eye-tracking system to the understanding of infants’ strategies. Infant and Child Development. 2004;13:287–300.

- Liversedge SP, Findlay JM. Saccadic eye movements and cognition. Trends in Cognitive Sciences. 2000;4:6–14. doi: 10.1016/s1364-6613(99)01418-7.

- Loftus GR. Eye fixations and recognition memory for pictures. Cognitive Psychology. 1972;3:525–551.

- Loftus GR. Eye fixations on text and scenes. In: Rayner K, editor. Eye movements in reading: Perceptual and language processes. Academic Press; New York: 1983. pp. 359–376.

- Loftus GR, Mackworth NH. Cognitive determinants of fixation location during picture viewing. Journal of Experimental Psychology: Human Perception and Performance. 1978;4:565–572. doi: 10.1037//0096-1523.4.4.565.

- Loftus GR, Nelson WW, Kallman HJ. Differential acquisition rates for different types of information from pictures. Quarterly Journal of Experimental Psychology. 1983;35A:187–198. doi: 10.1080/14640748308402124.

- Mackworth NH, Morandi AJ. The gaze selects informative details within pictures. Perception and Psychophysics. 1967;2:547–552.

- Mannan SK, Ruddock KH, Wooding DS. Automatic control of saccadic eye movements made in visual inspection of briefly presented 2-D images. Spatial Vision. 1995;9:363–386. doi: 10.1163/156856895x00052.

- Mannan SK, Ruddock KH, Wooding DS. The relationship between the locations of spatial features and those of fixation made during visual examination of briefly presented images. Spatial Vision. 1996;10:165–188. doi: 10.1163/156856896x00123.

- Mannan SK, Ruddock KH, Wooding DS. Fixation patterns made during brief examination of two-dimensional images. Perception. 1997;26:1059–1072. doi: 10.1068/p261059.

- Mathis KM. Semantic interference from objects both in and out of a scene context. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:171–182. doi: 10.1037/0278-7393.28.1.171.

- Murphy GL. The big book of concepts. MIT Press; Cambridge, MA: 2002. Anti-summary and conclusions; pp. 477–498.

- Nachman P, Stern D, Best C. Affective reactions to stimuli and infants’ preferences for novelty and familiarity. Journal of the American Academy of Child Psychiatry. 1986;25:801–804. doi: 10.1016/s0002-7138(09)60198-9.

- Nadel L, Willner J, Kurz EM. Cognitive maps and environmental context. In: Balsam PD, Tomie A, editors. Context and learning. Erlbaum; Hillsdale, NJ: 1985. pp. 385–406.

- Needham A. Infants’ use of featural information in the segregation of stationary objects. Infant Behavior and Development. 1998;21:47–76.

- Neider MB, Zelinsky GJ. Scene context guides eye movements during visual search. Vision Research. 2006;46:614–621. doi: 10.1016/j.visres.2005.08.025.

- Nelson WW, Loftus GR. The functional visual field during picture viewing. Journal of Experimental Psychology: Human Learning and Memory. 1980;6:391–399.

- Palmer SE. The effects of contextual scenes on the identification of objects. Memory & Cognition. 1975;3:519–526. doi: 10.3758/BF03197524.

- Parkhurst D, Law K, Niebur E. Modeling the role of salience in the allocation of overt visual attention. Vision Research. 2002;42:107–123. doi: 10.1016/s0042-6989(01)00250-4.

- Pescara-Kovach L, Fulkerson AL, Haaf RA. Do you hear what I hear? Auditory context, attention and recognition in six-month-old infants. Infant Behavior and Development. 2000;23:119–123.

- Pomerantz J, Sager L, Stoever J. Perceptions of wholes and their component parts: Some configural superiority effects. Journal of Experimental Psychology: Human Perception and Performance. 1977;3:422–435.

- Potter MC. Meaning in visual search. Science. 1975;187:965–966. doi: 10.1126/science.1145183.

- Quinn PC, Johnson MH, Mareschal D, Rakison DH, Younger BA. Understanding early categorization: One process or two? Infancy. 2000;1:111–122. doi: 10.1207/S15327078IN0101_10.

- Quinn PC, Yahr J, Kuhn A, Slater AM, Pascalis O. Representation of the gender of human faces by infants: a preference for female. Perception. 2002;31:1109–1121. doi: 10.1068/p3331.

- Quiroga RQ, Reddy L, Kreiman G, Koch C, Fried I. Invariant visual representation by single neurons in the human brain. Nature. 2005;435:1102–1107. doi: 10.1038/nature03687.

- Rayner K. Eye movements in reading and information processing: 20 years of research. Psychological Bulletin. 1998;124:372–422. doi: 10.1037/0033-2909.124.3.372.

- Rayner K, Smith TJ, Malcolm GL, Henderson JM. Eye movements and visual encoding during scene perception. Psychological Science. 2009;20:6–10. doi: 10.1111/j.1467-9280.2008.02243.x.

- Righart R, de Gelder B. Context influences early perceptual analysis of faces—an electrophysiological study. Cerebral Cortex. 2006;16:1249–1257. doi: 10.1093/cercor/bhj066.

- Rogan MT, Leon KS, Perez DL, Kandel ER. Distinct neural signatures for safety and danger in the amygdala and striatum of the mouse. Neuron. 2005;46:309–320. doi: 10.1016/j.neuron.2005.02.017.

- Rousselet GA, Joubert OR, Fabre-Thorpe M. How long to get to the “gist” of real-world natural scenes? Visual Cognition. 2005;12:852–877.

- Rovee-Collier C, DuFault D. Multiple contexts and memory retrieval at three months. Developmental Psychobiology. 1991;24:39–49. doi: 10.1002/dev.420240104.

- Saffran JR. What can statistical learning tell us about infant learning? In: Woodward A, Needham A, editors. Learning and the infant mind. Oxford University Press; New York, NY: 2009. pp. 29–46.

- Salapatek P, Kessen W. Visual scanning of triangles by the human newborn. Journal of Experimental Child Psychology. 1966;3:155–167. doi: 10.1016/0022-0965(66)90090-7.

- Salvucci DD, Goldberg JH. Identifying fixations and saccades in eye-tracking protocols. In: Duchowski AT, editor. Proceedings of the eye tracking research and applications symposium. ACM Press; New York: 2000. pp. 71–78.

- Schlesinger M, Casey P. Where infants look when impossible things happen: Simulating and testing a gaze-direction model. Connection Science. 2003;15:271–280.

- Schyns P, Oliva A. From blobs to boundary edges: Evidence for time- and spatial-scale-dependent scene recognition. Psychological Science. 1994;5:195–200.

- Schlingensiepen KH, Campbell FW, Legge GE, Walker TD. The importance of eye movements in the analysis of simple patterns. Vision Research. 1986;26:1111–1117. doi: 10.1016/0042-6989(86)90045-3.

- Seress L. Morphological changes of the human hippocampal formation from midgestation to early childhood. In: Nelson CA, Luciana M, editors. Handbook of developmental cognitive neuroscience. The MIT Press; Cambridge, MA: 2001. pp. 45–58.

- Smith SM. Enhancement of recall using multiple environmental contexts during learning. Memory & Cognition. 1982;10:405–412. doi: 10.3758/bf03197642.

- Thorpe SJ, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–522. doi: 10.1038/381520a0.

- Torralba A, Oliva A, Castelhano MS, Henderson JM. Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychological Review. 2006;113:766–786. doi: 10.1037/0033-295X.113.4.766.

- Tulving E, Thomson DM. Encoding specificity and retrieval processes in episodic memory. Psychological Review. 1973;80:352–373.

- Ullman S. High-level vision. MIT Press; Cambridge, MA: 1996.

- Viviani P. Eye movements in visual search: cognitive, perceptual and motor control aspects. Reviews in Oculomotor Research. 1990;4:353–393.

- Werker JF, Maurer DM, Yoshida KA. Perception. In: Bornstein MH, editor. The handbook of cultural developmental science. Part 1. Domains of development across cultures. Taylor & Francis Group; New York: 2009. pp. 89–125.

- Williams A, Weisstein N. Line segments are perceived better in coherent context than alone: An object-line effect in visual perception. Memory & Cognition. 1978;6:85–90. doi: 10.3758/bf03197432.

- Yarbus AL. Eye movements during perception of complex objects. In: Riggs LA, editor. Eye movements and vision. Plenum; New York: 1967. pp. 171–196.