Summary

For modern evidence-based medicine, decisions on disease prevention or management strategies are often guided by a risk index system. For each individual, the system uses his/her baseline information to estimate the risk of experiencing a future disease-related clinical event. Such a risk scoring scheme is usually derived from an overly simplified parametric model. To validate a model-based procedure, one may perform a standard global evaluation via, for instance, a receiver operating characteristic analysis. In this article, we propose a method to calibrate the risk index system at a subject level. Specifically, we develop point and interval estimation procedures for t-year mortality rates conditional on the estimated parametric risk score. The proposals are illustrated with a dataset from a large clinical trial with post-myocardial infarction patients.

Some key words: Cardiovascular diseases, Cox model, Nonparametric functional estimation, Risk index, ROC analysis, Survival analysis

1. Introduction

The choice of disease prevention or management strategy for an individual from a population of interest is often made based on his/her risk of experiencing a certain clinical event during a specific follow-up time period. Subjects classified as high risk may be recommended for intensive prevention or therapy. The risk is usually estimated via a parametric or semiparametric regression model using baseline risk factors. For example, Eagle et al. (2004) created a simple bedside risk index system to predict six-month post-discharge mortality for patients hospitalized due to acute coronary syndrome. A proportional hazards model (Cox, 1972) was utilized to fit the data on post-discharge follow-up mortality and all potential predictors taken at admission as well as during hospitalization. The six-month post-discharge mortality rate is then estimated with the fitted model for each future individual patient.

An empirical model is merely an approximation to a true model, and it is crucial to assess its performance for appropriate clinical decision making. In addition to checking the fitted model with routine goodness-of-fit techniques, the model-based scoring system is often evaluated or validated globally based on specific summary measures, such as the receiver operating characteristic curve (Pepe et al., 2004; Wang et al., 2006; Ware, 2006; Cook, 2007), expected Brier score (Gerds & Schumacher, 2006) or the net reclassification index (Pencina et al., 2008). For example, Uno et al. (2007) developed procedures for evaluating the accuracy of empirical parametric models in predicting t-year survival with respect to various global accuracy measures. For the aforementioned post-discharge mortality example, the investigators used an independent cohort to validate their system with an overall c-statistic. However, even if the risk index system is acceptable using a global average measure, for the group of patients with the same model-based risk score, the corresponding parametric risk estimator may not approximate its true mortality rate well. Consequently, clinicians may be misguided in choosing therapies for treating these individuals.

In this paper, we propose a procedure for calibrating the parametric risk estimates. Specifically, under a general survival analysis setting, a consistent estimation procedure is proposed for t-year mortality rates of patients grouped by a parametric risk score. Furthermore, we provide pointwise and simultaneous confidence intervals for such risks over a range of estimated risk scores. These interval estimates quantify the precision of our consistent point estimator. Moreover, the upper or lower bounds of the intervals provide valuable information for complex cost-benefit decision making. Pointwise confidence interval estimates of the true average risk may be of interest to individual patients with a specific risk score. Simultaneous interval estimates can be used to identify subgroups with differential risks and to assist in selecting target populations for appropriate interventions. Our proposal is also useful for calibrating a risk index system when applied to populations different from the study population. For example, the Framingham Risk Score (Anderson et al., 1991) for predicting the risk of coronary heart disease was developed based on a U.S. population. Its applicability to other populations has been extensively investigated in recent years (D’Agostino et al., 2001; Brindle et al., 2003; Zhang et al., 2005). For a new population, our procedure can be used to assess the true risk of coronary heart disease for subjects with any given Framingham Risk Score.

2. A consistent estimator for the mean risk of subjects with the same model-based risk score

Consider a subject randomly drawn from the study population. Let T̃ be the time to the occurrence of a specific event since baseline and U be the corresponding set of risk factors/markers ascertained at baseline; some marker values may be collected repeatedly over time. In this paper, we assume that markers are measured at a well-defined time zero. Discussions on the setting where time zero is not well defined are given in §5. Now, assume that T̃ has a continuous distribution given U. Also, let 𝒫(u) = pr(T̃ < t0 | U = u). The event time T̃ may be censored by a random variable C, which is assumed to be independent of T̃ and U. For T̃, one can only observe T = min(T̃, C) and δ = I (T̃ ⩽ C), where I(·) is the indicator function. Our data {(Ti, δi, Ui), i = 1, . . ., n} consist of n independent copies of (T, δ, U).
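As a small illustration of the data structure just described, the following sketch builds the observed pair (T, δ) from a latent event time and a censoring time. The function name `observe` and the numerical values are hypothetical and serve only to make the definitions concrete.

```python
import numpy as np

def observe(event_time, censor_time):
    """Return (T, delta) with T = min(T~, C) and delta = I(T~ <= C)."""
    event_time = np.asarray(event_time, dtype=float)
    censor_time = np.asarray(censor_time, dtype=float)
    T = np.minimum(event_time, censor_time)
    delta = (event_time <= censor_time).astype(int)
    return T, delta

# Hypothetical latent event times and censoring times for three subjects.
T, delta = observe([2.0, 7.5, 4.0], [5.0, 6.0, 4.0])
```

The second subject is censored at time 6.0, so only a lower bound on the event time is observed.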

One may estimate 𝒫(u) fully nonparametrically. Li & Doss (1995) and Nielsen (1998) considered local linear estimators of the conditional hazard function by smoothing over the q-dimensional U. However, such nonparametric estimates may not behave well when q > 1 and n is not large. A standard, feasible way to reduce the dimension of u is to approximate 𝒫(u) with a working parametric or semiparametric model such as the proportional hazards model:

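$$\mathcal{P}(u) = \operatorname{pr}(\tilde{T} < t_0 \mid U = u) = g\{\log \Lambda(t_0) + \beta^{T} x\}, \tag{1}$$

where g(s) = 1 − exp(−e^s); Λ(·) is the working baseline cumulative hazard function for T̃; x, a p × 1 vector, is a function of u; and β is an unknown vector of regression parameters.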

To obtain an estimate for 𝒫(u) via (1), one may employ the maximum partial likelihood estimator β̂ for β with all mortality information from the data collected up to time t0. That is, β̂ is the maximizer of the log partial likelihood function,

$$\sum_{i=1}^{n} \int_0^{t_0} \Big[\beta^{T} X_i - \log\Big\{\sum_{j=1}^{n} Y_j(s) \exp(\beta^{T} X_j)\Big\}\Big]\, dN_i(s),$$

where Ni(t) = I(Ti ⩽ t)δi and Yi(t) = I(Ti ⩾ t), for i = 1, . . ., n. When the model (1) is correctly specified, β̂ consistently estimates the true value of β. On the other hand, if there is no vector ζ such that pr(T1 > T2 | ζ^T X1 > ζ^T X2, T2 ⩽ t0) = 1, then β̂ converges to a finite constant β0, as n → ∞, even when the model (1) is misspecified (Hjort, 1992). Alternatively, one may use an estimate of β by fitting a global Cox model without truncating at t0, denoted by β̃. When the Cox model fits the data well, β̃ may be more efficient than β̂.

Next, we estimate Λ(t) in (1) with Breslow’s estimator (Kalbfleisch & Prentice, 2002),

$$\hat\Lambda(t) = \sum_{i=1}^{n} \int_0^{t} \frac{dN_i(s)}{\sum_{j=1}^{n} Y_j(s) \exp(\hat\beta^{T} X_j)},$$

which is a step function that only jumps at observed failure times. It follows that a model-based estimate of 𝒫(u) is 𝒫̂(u) = g(γ̂^T x+), where γ̂ = [log{Λ̂(t0)}, β̂^T]^T and x+ = (1, x^T)^T. Following from the convergence of β̂ and arguments given in Hjort (1992), 𝒫̂(u) converges to a deterministic function 𝒫̄(u) in probability, as n → ∞. When (1) is correctly specified, 𝒫̄(u) = 𝒫(u).
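To make the construction of the model-based score concrete, here is a minimal sketch that computes a Breslow-type estimate of the baseline cumulative hazard at t0 and then the score g{log Λ̂(t0) + β̂^T x}. It assumes that β̂ has already been obtained from some Cox fitting routine; the function names `breslow_cumhaz` and `model_risk` and the toy data are hypothetical.

```python
import numpy as np

def breslow_cumhaz(T, delta, X, beta, t0):
    """Breslow-type cumulative baseline hazard at t0 for a given beta."""
    T = np.asarray(T, dtype=float)
    delta = np.asarray(delta, dtype=int)
    X = np.asarray(X, dtype=float)
    risk = np.exp(X @ beta)                      # exp(beta' X_j) for each subject
    total = 0.0
    for i in np.flatnonzero((delta == 1) & (T <= t0)):
        at_risk = T >= T[i]                      # Y_j(T_i) = I(T_j >= T_i)
        total += 1.0 / risk[at_risk].sum()       # one jump per observed failure
    return total

def model_risk(x, beta, cumhaz_t0):
    """Working-model risk g{log Lambda(t0) + beta' x}, g(s) = 1 - exp(-e^s)."""
    s = np.log(cumhaz_t0) + np.asarray(x, dtype=float) @ beta
    return 1.0 - np.exp(-np.exp(s))

# Toy data (hypothetical): with beta = 0 the estimate reduces to Nelson-Aalen.
T = [1.0, 2.0, 3.0]
delta = [1, 0, 1]
X = np.array([[0.5], [1.0], [-0.3]])
beta = np.array([0.0])
L0 = breslow_cumhaz(T, delta, X, beta, t0=3.0)
p = model_risk(X[0], beta, L0)
```

With β = 0 every subject receives the same score, which is a convenient sanity check before plugging in a fitted coefficient vector.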

Although most likely (1) is not the true model, the parametric risk scores {𝒫̂(u)} or {γ̂^T x+} can be used as a risk index system for future populations similar to the study population. Consider a future subject with (T̃, U, X) = (T̃0, U0, X0) and 𝒫̂(U0) = v. When (1) is misspecified, the risk of T̃0 < t0 may be quite different from v. To calibrate the subject-level model-based risk estimate, one needs a consistent estimate for

$$\tau(v; t_0) = \operatorname{pr}\{\tilde{T}_0 < t_0 \mid \hat{\mathcal{P}}(U_0) = v\},$$

the mortality rate among subjects with risk score 𝒫̂(U0) = v. Here, the probability is with respect to T̃0, U0 and {(Ti, δi, Ui), i = 1, . . ., n}.

To estimate τ (v; t0), let Λv (t) = − log{1 − τ (v; t)} be the corresponding cumulative hazard function and we propose a nonparametric kernel Nelson–Aalen estimator for Λv (t) based on {Ti, δi, 𝒫̂(Ui)}, for i = 1, . . ., n. As for the standard one-sample estimation of the cumulative hazard function, we focus on the class of potential estimators which are step functions over t and only jump at the observed failure times with jump sizes ΔΛv (t). First, we consider a local constant estimator by assuming that for v′ in a small neighbourhood of v, ΔΛv′(t) ≈ ΔΛv (t). Specifically, for any given t and v, we obtain ΔΛ̃v(t) as the following minimizer:

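$$\Delta\tilde\Lambda_v(t) = \arg\min_{a} \sum_{i=1}^{n} Y_i(t) K_h(\hat D_{vi}) \{\Delta N_i(t) - a\}^2, \tag{2}$$

where ΔNi(t) = Ni(t) − Ni(t−); D̂vi = ψ{𝒫̂(Ui)} − ψ(v); K(·) is a smooth symmetric density function; Kh(x) = K(x/h)/h; h = O(n^(−ν)) is a bandwidth with 0 < ν < 1/2; and ψ(·) : (0, 1) → (−∞, ∞) is a known increasing smooth function (Wand et al., 1991; Park et al., 1997). Based on (2), we obtain a local constant estimator for Λv(t0) as

$$\tilde\Lambda_v(t_0) = \int_0^{t_0} \Delta\tilde\Lambda_v(t)\, dt = \int_0^{t_0} \frac{\sum_{i=1}^{n} K_h(\hat D_{vi})\, dN_i(t)}{\sum_{i=1}^{n} K_h(\hat D_{vi}) Y_i(t)}.$$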

Here, we adopt the notation ∫ΔNi (t)W(t)dt ≡ ∫ W(t)d Ni (t) as in Nielsen & Tanggaard (2001). When 𝒫̂(·) is deterministic, the limiting distribution of such a nonparametric estimator with a fixed v has been derived by Nielsen & Linton (1995) and Du & Akritas (2002). Here, we investigate the asymptotic properties of Λ̃v (t0) over a set of v at a given time t0 in the presence of the additional variability in 𝒫̂(·).

The above estimator can potentially be improved by considering a local linear approximation to Λv(t) (Fan & Gijbels, 1996; Li & Doss, 1995). That is, for v′ in a small neighbourhood of v, we assume ΔΛv′(t) ≈ ΔΛv(t) + bv(t){ψ(v′) − ψ(v)}. Thus, for any given t and v, we replace a in (2) by a local linear function a + bD̂vi with unknown intercept and slope parameters a and b. The resulting estimator ΔΛ̂v(t) is the intercept of the vector that minimizes

$$\sum_{i=1}^{n} Y_i(t) K_h(\hat D_{vi}) \{\Delta N_i(t) - a - b \hat D_{vi}\}^2$$

with respect to (a, b). The corresponding estimator Λ̂v(t) for Λv(t) is the sum of ΔΛ̂v(·) over all distinct observed death times by t. The resulting estimator for τ (v; t0) is τ̂(v; t0) = 1 −exp{−Λ̂v(t0)}. In Appendix A, we show that under mild regularity conditions, if h = O(n−ν) with 1/5 < ν < 1/2, τ̂(v; t0) is consistent for τ (v; t0), uniformly in v ∈ 𝒥 = [ψ−1(ρl + h), ψ−1(ρr − h)], where (ρl, ρr) is a subset contained in the support of ψ{𝒫̄(U)}.
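The local linear kernel estimator described above can be sketched in a few lines. This is an illustrative implementation under stated assumptions rather than the authors' code: it uses the Epanechnikov kernel and ψ(v) = log{−log(1 − v)}, solves the kernel-weighted least squares problem in closed form at each distinct observed failure time, and falls back to the local constant fit when the local design is degenerate. The function names and the toy data are hypothetical.

```python
import numpy as np

def epanechnikov(x):
    """Epanechnikov kernel K(x) = 0.75 (1 - x^2) on [-1, 1]."""
    return np.where(np.abs(x) <= 1.0, 0.75 * (1.0 - x ** 2), 0.0)

def psi(v):
    """Complementary log-log transform psi(v) = log{-log(1 - v)}."""
    return np.log(-np.log1p(-np.asarray(v, dtype=float)))

def calibrated_risk(v, t0, T, delta, score, h):
    """Local linear estimate of tau(v; t0) = 1 - exp{-Lambda_v(t0)}."""
    T = np.asarray(T, dtype=float)
    delta = np.asarray(delta, dtype=int)
    D = psi(score) - psi(v)                      # distance to v on the psi scale
    K = epanechnikov(D / h) / h                  # K_h(D) = K(D / h) / h
    cumhaz = 0.0
    for t in np.unique(T[(delta == 1) & (T <= t0)]):
        w = K * (T >= t)                         # kernel weight times at-risk indicator
        dN = ((T == t) & (delta == 1)).astype(float)
        S0, S1, S2 = w.sum(), (w * D).sum(), (w * D ** 2).sum()
        R0, R1 = (w * dN).sum(), (w * D * dN).sum()
        if S0 <= 0.0:
            continue                             # no kernel mass at risk: no increment
        det = S0 * S2 - S1 ** 2
        if det > 1e-12:
            a = (S2 * R0 - S1 * R1) / det        # intercept of the weighted LS fit
        else:
            a = R0 / S0                          # degenerate design: local constant fit
        cumhaz += a
    return 1.0 - np.exp(-cumhaz)

# Toy check (hypothetical data): identical scores reduce to Nelson-Aalen.
tau = calibrated_risk(0.1, 3.0, [1.0, 2.0, 3.0], [1, 0, 1], [0.1, 0.1, 0.1], h=0.5)
```

When all subjects share the same score, every D̂vi is zero and the estimator coincides with the one-sample Nelson–Aalen estimator, which gives a simple correctness check. In practice v would range over a grid in 𝒥 and the scores would be the fitted 𝒫̂(Ui).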

3. Pointwise and simultaneous interval estimation procedures for τ (v; t0) over risk score V

For any fixed v ∈ 𝒥, we show in Appendix A that the distribution of

$$(nh)^{1/2}\{\hat\Lambda_v(t_0) - \Lambda_v(t_0)\} \tag{3}$$

can be approximated well by the conditional distribution of a zero-mean normal distribution

| (4) |

given the data for large n and h = O(n−ν) with 1/5 < ν < 1/2, where Ξ = (ξ1, . . ., ξn) are standard normal random variables independent of the data, , Λ̂v (t0, γ) is given in (A3) and γ̂* is given in (A5). A perturbed version of the observed Λ̂v(t0) is Λ̂v(t0, γ̂*) with the same perturbation variables Ξ, which accounts for the extra variability of γ̂. Although asymptotically one only needs the first term of (4) since γ̂ = O(n−1/2), the inclusion of the second term is likely to improve the approximation to the distribution of (3) in finite sample. This perturbation method is similar to the so-called wild bootstrap (Wu, 1986; Härdle, 1990; Mammen, 1992) and has been used successfully for a number of interesting problems in survival analysis (Jin et al., 2001; Park & Wei, 2003; Cai et al., 2005). The distribution of (4) can be easily approximated by generating a large number M of realizations of Ξ.

With the above approximation, for any v ∈ 𝒥, one may obtain a variance estimator of (3), σ̂v²(t0), based on the empirical variance of M realizations from (4). For any given α ∈ (0, 1), a 100(1 − α)% confidence interval for Λv(t0) can be obtained as Λ̂v(t0) ± (nh)^(−1/2) c σ̂v(t0), where c is the 100(1 − α/2)th percentile of the standard normal. The corresponding pointwise 100(1 − α)% confidence interval for τ(v; t0), the mortality rate with score v, is

$$\big[\,1 - \exp\{-\hat\Lambda_v(t_0) + (nh)^{-1/2} c\, \hat\sigma_v(t_0)\},\; 1 - \exp\{-\hat\Lambda_v(t_0) - (nh)^{-1/2} c\, \hat\sigma_v(t_0)\}\,\big].$$
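The back-transformation from the cumulative hazard scale to the risk scale can be sketched as follows. The function `pointwise_ci` is a hypothetical helper; it assumes Λ̂v(t0) and σ̂v(t0) are already available, and it truncates the lower limit of the hazard interval at zero, which is a convenience assumed here rather than a step stated in the text. The input values are made up for illustration.

```python
import math
from statistics import NormalDist

def pointwise_ci(cumhaz_hat, sigma_hat, n, h, alpha=0.05):
    """Map the normal-theory interval for Lambda_v(t0) to tau = 1 - exp(-Lambda)."""
    c = NormalDist().inv_cdf(1.0 - alpha / 2.0)   # standard normal percentile
    half = c * sigma_hat / math.sqrt(n * h)       # (nh)^(-1/2) * c * sigma
    lam_lo = max(cumhaz_hat - half, 0.0)          # assumption: truncate at zero
    lam_hi = cumhaz_hat + half
    return 1.0 - math.exp(-lam_lo), 1.0 - math.exp(-lam_hi)

# Hypothetical inputs, not taken from the VALIANT analysis.
lo, hi = pointwise_ci(cumhaz_hat=0.05, sigma_hat=0.4, n=10000, h=0.2)
```

Because τ = 1 − exp(−Λ) is increasing in Λ, the endpoints of the interval for Λ map directly to the endpoints of the interval for τ.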

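To make inference about the mortality rate over a range of v, one may construct simultaneous confidence intervals for {τ(v; t0), v ∈ 𝒥} by considering a sup-type statistic

$$W = \sup_{v \in \mathcal{J}} \left| \frac{(nh)^{1/2}\{\hat\Lambda_v(t_0) - \Lambda_v(t_0)\}}{\hat\sigma_v(t_0)} \right|. \tag{5}$$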

However, the distribution of (nh)^(1/2){Λ̂v(t0) − Λv(t0)} does not converge as a process in v, as n → ∞. Therefore, we cannot use the standard large sample theory for stochastic processes to approximate the distribution of W. On the other hand, by the strong approximation arguments and extreme value limit theorem (Bickel & Rosenblatt, 1973), we show in Appendix B that a standardized version of W converges in distribution to a proper random variable. In practice, for large n, one can approximate the distribution of W by W*, the supremum of the absolute value of (4) divided by σ̂v(t0), with (4) perturbed by the same set of Ξ for all v ∈ 𝒥. It follows that the 100(1 − α)% simultaneous confidence interval for τ(v; t0) is

$$\big[\,1 - \exp\{-\hat\Lambda_v(t_0) + (nh)^{-1/2} d\, \hat\sigma_v(t_0)\},\; 1 - \exp\{-\hat\Lambda_v(t_0) - (nh)^{-1/2} d\, \hat\sigma_v(t_0)\}\,\big],$$

where the cut-off point d is chosen such that pr(W* < d) ⩾ 1 − α.
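Given M perturbed realizations evaluated on a grid of v values, the standard errors and the cut-off d can be obtained empirically. The sketch below assumes the realizations of (4) are stored in an M × V array; since computing (4) itself requires quantities from the appendices, the example fills the array with independent normal draws purely as a stand-in. The function name `simultaneous_cutoff` is hypothetical.

```python
import numpy as np

def simultaneous_cutoff(perturbed, alpha=0.05):
    """Return (sigma_hat, d) from an (M, V) array of perturbed realizations."""
    perturbed = np.asarray(perturbed, dtype=float)
    M = perturbed.shape[0]
    sigma = perturbed.std(axis=0, ddof=1)                   # empirical sd at each grid point
    w_star = np.max(np.abs(perturbed) / sigma, axis=1)      # sup over the grid, per realization
    k = min(int(np.ceil((1.0 - alpha) * M)), M)
    d = np.sort(w_star)[k - 1]                              # smallest d with pr(W* <= d) >= 1 - alpha
    return sigma, d, w_star

# Stand-in realizations (assumption): M = 500 draws on a grid of 20 values of v.
rng = np.random.default_rng(0)
sigma, d, w_star = simultaneous_cutoff(rng.standard_normal((500, 20)))
```

The same rows of the array must be used for all grid points, mirroring the requirement that (4) be perturbed by the same Ξ for all v ∈ 𝒥. The resulting d exceeds the pointwise normal percentile, which is why simultaneous bands are wider than pointwise intervals.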

As for any nonparametric functional estimation problem, the choice of h for τ̂(v; t0) is crucial for making inferences about τ(v; t0). To incorporate censoring, we propose obtaining an optimal h by minimizing mean integrated squared martingale residuals over time interval (0, t0) through K-fold crossvalidation. Such a procedure has been successfully used for bandwidth selection in Tian et al. (2005). Specifically, we randomly split the data into K disjoint subsets of about equal sizes denoted by {ℐk, k = 1, . . ., K}. For each k, we use all observations not in ℐk to estimate Λv(t) with a given h. Let the resulting estimators be denoted by Λ̂v(k)(t). We then use the observations from ℐk to calculate the sum of integrated squared martingale residuals

| (6) |

Lastly, we sum (6) over k = 1, . . ., K, and then choose hopt as the minimizer of this sum of integrated squared martingale residuals. Since the order of hopt is expected to be n^(−1/5) (Fan & Gijbels, 1995), the bandwidth we use for estimation is h = hopt × n^(−d0) with 0 < d0 < 3/10, such that h = O(n^(−ν)) with 1/5 < ν < 1/2. This ensures that the resulting functional estimator Λ̂v(t0) with the data-dependent smoothing parameter has the above desirable large sample properties.
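The two mechanical steps of this bandwidth procedure, the random K-fold split and the undersmoothing adjustment h = hopt × n^(−d0), can be sketched as follows. The crossvalidation criterion (6) itself is not implemented here; the helper names `kfold_indices` and `undersmooth` are hypothetical.

```python
import numpy as np

def kfold_indices(n, K, seed=0):
    """Randomly split indices 0..n-1 into K disjoint folds of about equal size."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), K)

def undersmooth(h_opt, n, d0):
    """Shrink the crossvalidated bandwidth: h = h_opt * n^(-d0), 0 < d0 < 3/10."""
    if not 0.0 < d0 < 0.3:
        raise ValueError("d0 must lie in (0, 3/10)")
    return h_opt * n ** (-d0)

folds = kfold_indices(23, 10)
# Shrinkage factor for n = 14 088 and d0 = 0.07, the values used in Section 4.1.
factor = undersmooth(1.0, 14088, 0.07)
```

For n = 14 088 and d0 = 0.07 the shrinkage factor is roughly 0.51, so the bandwidth used for inference is about half of the crossvalidated one.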

4. Numerical studies

4.1. Example

We illustrate the new proposal with a dataset from the Valsartan in Acute Myocardial Infarction study (Pfeffer et al., 2003). This large clinical study, often referred to as the VALIANT study, was conducted to evaluate the effect of angiotensin II receptor blocker and angiotensin converting enzyme inhibitors on overall mortality among patients with myocardial infarction complicated by left ventricular systolic dysfunction and/or heart failure. The trial was designed to compare three treatments, angiotensin II receptor blocker valsartan, angiotensin converting enzyme inhibitor captopril and a combination of these two drugs, for treating high-risk patients after myocardial infarction with respect to mortality and morbidity. The study was conducted from 1999 to 2003 with a total of 14 703 patients assigned randomly and equally to the three groups. The median follow-up time was 24.7 months. There are no significant differences among the three groups with respect to the overall mortality and thus we combine all three groups to develop a risk scoring system for mortality.

For simplicity, we used 11 baseline covariates from each study subject considered by Solomon et al. (2005) in our analysis. These 11 predictors are potentially the most significant ones among the risk factors identified by Anavekar et al. (2004) for the overall mortality based on this study. We fitted the survival data with an additive Cox model using these 11 covariates to obtain a parametric risk score for each individual. Specifically, the vector U = X consists of age, X1; Killip class, X2; estimated glomerular filtration rate, X3; history of myocardial infarction, X4; history of congestive heart failure, X5; percutaneous coronary intervention after index myocardial infarction, X6; atrial fibrillation after index myocardial infarction, X7; history of diabetes, X8; history of chronic obstructive pulmonary disease, X9; new left bundle-branch block, X10; and history of angina, X11. Our analysis includes n = 14 088 patients who had complete information on these 11 covariates.

First, suppose that we are interested in predicting the six-month mortality rates of future patients. To this end, we let t0 = 6 months and fitted the survival observations truncated slightly after six months with a Cox model (1) and x = u. The estimated regression coefficient vector β̂ is given in Table 1. These estimates, coupled with the estimated intercept γ̂ = −6.02, create a risk score 𝒫̂(u) for six-month mortality of future patients. To obtain τ̂(v, 6) for a given 𝒫̂(u) = v, we let K (·) be the Epanechnikov kernel, and ψ(v) = log{− log(1 − v)}, which leads to ψ{𝒫̂(U)} = γ̂TX+. The smoothing parameter h = 0.18 was obtained by multiplying hopt by a factor of n−0.07 as defined in §3, with ten-fold crossvalidation. We chose the second and 98th percentiles of the empirical distribution of {γ̂TX+i}i=1,...,n as the boundary points ρl and ρr for interval 𝒥. To approximate the distribution of (3) and W in (5), we used the perturbation-resampling method (4) with M = 500 independent realized standard normal samples Ξ.

Table 1.

Estimates of the regression coefficients, the corresponding standard errors and p-values, multiplied by 100, derived from fitting the Cox model to the VALIANT dataset based on survival time information up to t0 and based on the entire dataset

| | | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 | X10 | X11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| t0 = 6 | Est | 2.8 | 28.9 | −1.2 | 20.6 | 24.2 | −43.3 | 25.2 | 24.1 | 16.1 | 22.0 | 16.4 |
| | SE | 0.3 | 3.2 | 0.2 | 6.6 | 7.2 | 10.7 | 7.2 | 6.1 | 8.8 | 10.8 | 6.2 |
| | p | 0.0 | 0.0 | 0.0 | 0.2 | 0.1 | 0.0 | 0.1 | 0.0 | 7.0 | 4.2 | 0.8 |
| t0 = 24 | Est | 3.0 | 23.7 | −1.0 | 29.5 | 32.7 | −46.3 | 29.6 | 26.8 | 24.3 | 23.1 | 23.5 |
| | SE | 0.2 | 2.3 | 0.1 | 4.7 | 5.1 | 7.7 | 5.2 | 4.4 | 6.2 | 8.0 | 4.5 |
| | p | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.4 | 0.0 |
| Global | Est | 2.7 | 27.3 | −1.1 | 24.1 | 28.3 | −50.6 | 26.3 | 26.4 | 19.6 | 22.7 | 18.2 |
| | SE | 0.3 | 3.0 | 0.2 | 5.6 | 6.8 | 9.3 | 6.2 | 5.2 | 7.6 | 9.5 | 5.8 |
| | p | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.7 | 0.2 |

Est, estimates; SE, standard error; p, p-value; Global, entire dataset.

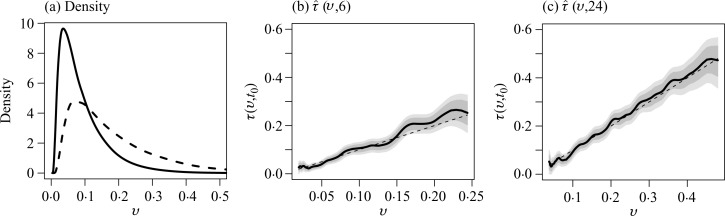

The smoothed density estimate for 𝒫̂(U), shown in Fig. 1(a), provides useful information regarding the relative size of the subgroup of individuals with 𝒫̂(U) = v, where v ∈ 𝒥 = [0.02, 0.24]. In Fig. 1(b), we present the point and interval estimates for τ (v, 6). The estimated risk scores for most patients are less than 0.15. For groups of subjects with low risk scores, their interval estimates tend to be tight. For example, among subjects with 𝒫̂(u) = 0.045, the six-month mortality rate is likely to be between 0.03 and 0.05, based on the 95% point-wise confidence interval. The corresponding simultaneous confidence interval is [0.02, 0.06]. For this subgroup, the Cox model appears to be slightly overestimating the risk with a p-value of 0.08 when testing the difference τ (v, 6) − v = 0. For patients whose risk scores are greater than 0.15, the interval estimates are relatively wide, as expected. For example, the mortality rate among patients with 𝒫̂(u) = 0.17 is estimated as 0.21 with a 95% pointwise confidence interval of [0.18, 0.23] and a simultaneous interval of [0.17, 0.25]. The Cox model underestimates the risk of this subgroup substantially with a pointwise p-value of 0.001 and a familywise adjusted p-value of 0.04 after controlling for the overall Type I error. For comparison, we also obtained the regression coefficient estimates based on the global Cox model without truncation. As shown in Table 1, the estimates are slightly different from the ones obtained based on survival information up to six months only. Under the global model, when the Cox model predicted risk is 0.045, the calibrated risk estimate is 0.034 with 95% pointwise and simultaneous confidence intervals being [0.03,0.04] and [0.02,0.05]. Overall, we find that the global model and the truncated version lead to very similar interval estimates for the present case.

Fig. 1.

Prediction of six-month and 24-month mortality risks based on the 11 risk factors. Panel (a) shows the estimated density function of the model-based risk estimate for t0 = 6 (solid line) and 24 (dashed line) months. Shown also are the point (thick solid curve) estimate of the true risk function τ (v, t0) along with their point-wise (dark shaded region) and simultaneous (light shaded region) confidence intervals for (b) t0 = 6 months; and (c) t0 = 24 months. The dashed line is the 45° reference line.

Next, suppose that we are interested in predicting long-term survival for future patients similar to the VALIANT study population. Here, we let t0 = 24 months and fitted the survival observations slightly truncated at 24 months with a Cox model and x = u. The resulting regression coefficient estimates are also given in Table 1. For this case, the bandwidth h = 0.15 and the range of the estimated risk score v is from 0.04 to 0.48. The smoothed density function estimation of the parametric score is also given in Fig. 1(a). Relatively few patients have scores beyond 0.3. Our point and interval estimates for the true mortality rate are given in Fig. 1(c). For example, for subjects with a risk score of 0.1, their 24-month mortality rate is estimated as 0.09 with 95% pointwise and simultaneous confidence intervals being [0.08, 0.11] and [0.07, 0.12], respectively. For patients whose risk scores are high, as expected, their interval estimates can be quite wide. For example, for subjects with a risk score of 0.3, the true mortality rate is likely to be between 0.26 and 0.37 based on the 95% simultaneous confidence interval. Under the global model, the calibrated risk estimates at risk scores of 0.1 and 0.3 are similar to the above results based on the truncated fitting.

4.2. Simulation studies

We conducted simulation studies to examine the validity of the proposed inference procedures and to compare the performance of the calibrated prediction procedures with that of the prediction based on the Cox model. We first simulated data from a normal mixture model under which the Cox model is misspecified. In particular, we generated all discrete covariates, XD = (X2, X4, X5, X6, X7, X8, X9, X10, X11)^T, based on their empirical distribution from the observed data. Then, for each given value of XD, we generated the corresponding value of XC = (X1, X3), from N(μXD, Σ), where μXD is the empirical mean of XC given XD and Σ is the empirical covariance matrix of XC in the VALIANT dataset. Then, we generated T̃ from

where for (X4, X8) = (0, 0), (0, 1), (1, 0) and (1, 1), we let αX4X8 = 11.5, 8.5, 5.5, 8.5 and σX4X8 = 0.9, 1.5, 1.3, 1.0; X−(48) denotes the covariate vector with (X4, X8) excluded and βX4X8 is obtained from fitting a lognormal model to the subset of VALIANT data with a given value of (X4, X8). The censoring was generated from a Weibull (5, 30) that was obtained by fitting the Weibull model to the VALIANT data. This results in about 77% censoring and overall event rates of 17% by month 10 and 22% by month 24. We considered a moderate sample size of 5000 and a larger sample size of 10 000. For all the simulation studies, we obtained β̂ based on the truncated partial likelihood function at t0. For ease of computation, the bandwidth for constructing the nonparametric estimate was fixed at h = 0.37, 0.38, 0.29 and 0.27 for (i) n = 5000, t0 = 10; (ii) n = 5000, t0 = 24; (iii) n = 10 000, t0 = 10; and (iv) n = 10 000, t0 = 24, respectively. Here, h was chosen as the average of the bandwidths selected based on (6) with d0 = 0.1 from 10 simulated datasets.

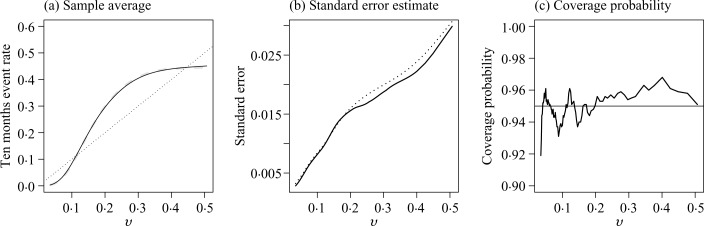

Across all four settings, the nonparametric estimator has negligible bias, the estimated standard errors are close to their empirical counterparts, and the empirical coverage levels of the 95% confidence intervals are close to the nominal level. The coverage levels of the simultaneous confidence band were 93.7%, 94.5%, 93.1% and 94.1%, for settings (i), (ii), (iii) and (iv), respectively. In Fig. 2, we summarize the performance of the point and interval estimates of the proposed nonparametric procedure for n = 5000 and t0 = 10. Under the correct model specification, we would expect that the true risk against the predicted risk from the Cox model is a 45° diagonal line. For the present case, the predicted risk based on the Cox model is severely biased. For example, the event rate at t0 = 10 is 0.31 among the subjects whose predicted risk is 0.20 based on the Cox model. It is interesting that the standard test has very low power in detecting the lack of fit for the misspecified model. For example, the test based on the Schoenfeld residuals has about 15% power when n = 5000 and 25% power when n = 10 000.

Fig. 2.

Performance of the new procedure under a misspecified model with sample size 5000 for t0 = 10: (a) the sample average of τ̂(v, t0) (solid) compared with truth (dashed); (b) the empirical standard error estimate (solid) and the average of the estimated standard errors (dotted); and (c) the empirical coverage of the 95% confidence intervals based on the proposed resampling procedures. The 45° reference line in (a) corresponds to the Cox-model-based risk estimates.

We also conducted simulation studies to evaluate the performance of the proposed procedure under the correct model specification. To this end, we generated the covariates and the censoring time from the same distribution as described above, but generated T̃ from an exponential model with coefficients obtained by fitting the model to the VALIANT data. The proposed estimators also perform well, with little bias for the point estimation and proper coverage levels for the interval estimators. For example, when n = 5000 and t0 = 10, the empirical coverage level ranges from 0.94 to 0.98 for the pointwise confidence intervals and is about 0.96 for the simultaneous confidence interval. Similar patterns were observed for t0 = 24.

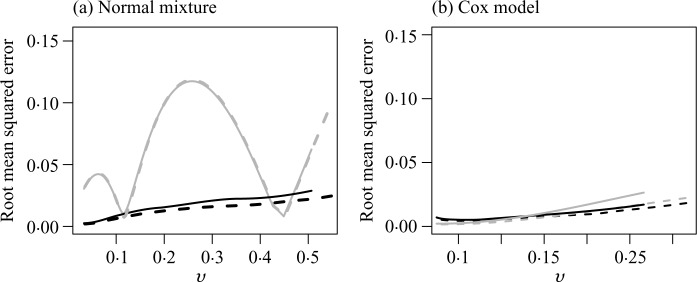

Lastly, we examined the performance of the nonparametric calibrated risk estimates with respect to their true predicted risks conditional on the observed data under correctly, and also incorrectly, specified models. Specifically, for each simulated dataset, we obtained γ̂obs based on the above Cox models to calculate 𝒫̂(U0) for any given covariate U0, and then assigned future subjects to a risk group Ωv = {U0 : 𝒫̂(U0) = v}. A standard Cox model would estimate the event rate for the subgroup Ωv as v. On the other hand, the nonparametric procedure would produce a calibrated estimate τ̂(v; t0) for the event rate among Ωv, while the true event rate conditional on the observed data is τ(v; t0, γ̂obs). In the previous simulation studies, τ(v; t0) = E{τ(v; t0, γ̂obs)} with expectation taken over the observed data. For each dataset, we summarized the performance of the proposed and the parametric risk prediction procedures based on their squared distance from the true event rate. The root mean squared errors of the Cox and calibrated estimates for t0 = 10, denoted rmseCox and rmseCalib, respectively, are shown in Fig. 3. Under the above misspecified normal mixture model, the parametric procedure is severely biased. For instance, when n = 5000, rmseCox can be as high as 0.12 while rmseCalib is below 0.03. Under model misspecification, over 90% of the root mean squared error is due to bias for most of the risk estimates. On the other hand, under the correct specification of the Cox model, the risk estimators from the nonparametric procedure are only slightly inefficient compared to the Cox procedure. For example, at t0 = 10 with a sample size of 5000, (rmseCox, rmseCalib) is (0.003, 0.006) when v = 0.05, is (0.005, 0.008) when v = 0.10 and is (0.017, 0.021) when v = 0.20.
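The statement that most of the error is due to bias rests on the usual decomposition of the mean squared error into squared bias plus variance. A minimal sketch of that bookkeeping, assuming one has the estimate and the corresponding true event rate from each simulated dataset, is given below; the function name `rmse_and_bias_share` and the numbers are hypothetical and are not results from the paper.

```python
import numpy as np

def rmse_and_bias_share(estimates, truths):
    """Return the RMSE and the fraction of the MSE attributable to squared bias."""
    err = np.asarray(estimates, dtype=float) - np.asarray(truths, dtype=float)
    mse = np.mean(err ** 2)                 # equals mean(err)^2 + var(err)
    bias_sq = np.mean(err) ** 2
    return np.sqrt(mse), bias_sq / mse

# Hypothetical estimates from three simulated datasets with true rate 0.30.
rmse, share = rmse_and_bias_share([0.20, 0.18, 0.22], [0.30, 0.30, 0.30])
```

In this made-up example the errors are all of the same sign and similar size, so nearly all of the mean squared error comes from the bias term.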

Fig. 3.

The root mean squared error of the risk estimates for t0 = 10 based on the Cox model and the nonparametric calibrated procedure when the data were generated from (a) a normal mixture; and (b) a Cox model, with n = 5000 (solid) and n = 10 000 (dashed) on both panels.

5. Remarks

Due to the complexity of the disease process and heterogeneity among study subjects, it is unlikely, if not impossible, that a fitted parametric or semiparametric model is the true one. On the other hand, a reasonable approximation to the true model can be quite useful in practice. Generally, the standard goodness-of-fit tests only provide qualitative assessment of the model fitting and may have little power when n is not large. An alternative way to evaluate the overall adequacy of the fitted model is to use a reasonable, physically interpretable distance function between the observed and predicted responses averaged over the entire study population (Cook, 2007; Uno et al., 2007). In this paper, we make an extra effort to calibrate the estimation of the mean risk for subgroups indexed by a parametric score. In our numerical studies, the two-stage procedure had substantially smaller root mean squared error than the purely model-based estimates when the working model was misspecified, and was only slightly less efficient when the model was correctly specified.

While one may obtain β̂ by fitting a global Cox model, the parametric risk score 𝒫̂(U0) = g{log Λ̂(t0) + β̂^T X0} established based on the survival time observations up to t0 is preferable when the primary interest is in estimating the t0-year survival rate and the Cox model does not fit the data well. For such settings, the covariate effects on the t0-year survival rate may vary markedly across t0. Indeed, we often find that certain covariates are essential for predicting short-term survival, but not so for predicting long-term survival. On the other hand, for overall decision making, it may be interesting to construct a unified risk score system, not specific to a time-point t0. Such a global score may be obtained by fitting the full dataset with a Cox model and obtaining β̂ as the maximum partial likelihood estimator. Subsequently, one can group future subjects with β̂^T X0 = u and consistently estimate τu(t) = pr(T̃0 ⩽ t | β̂^T X0 = u) for various values of t. For a finite set of time-points, using techniques similar to those in this article, it is straightforward to obtain the pointwise and simultaneous confidence intervals for such multiple survival probabilities. For example, for patients with a risk score of β̂^T X0 = 1.40 in the aforementioned VALIANT study, the simultaneous confidence region for {τ1.40(6), τ1.40(24)} is [0.03, 0.05] × [0.05, 0.09], for t = 6, 24. For patients with a parametric risk score of β̂^T X0 = 2.87, the corresponding simultaneous confidence region is [0.19, 0.25] × [0.30, 0.38]. A challenging problem is how to construct a simultaneous confidence band for the subject-specific survival function over an entire time interval of interest. We expect that the technique developed in Li & Doss (1995) may be extended to the present case with extra care for the additional variability in 𝒫̂(U).

For the present paper, we assume that there are so-called baseline covariates available for each study patient. Often the baseline is not clearly defined. That is, it may be difficult to define the time zero for the study patient’s follow-up time. Let S denote the study entry time since time zero and let U(S) denote the corresponding covariate vector. For the VALIANT trial, every study participant had a recent nonfatal myocardial infarction with certain complications when entering the trial. For this case, the time zero for the patient’s survival can be reasonably defined as the study entry date, which is approximately the time of occurrence of myocardial infarction. Therefore, S = 0, T̃ represents the survival time since the myocardial infarction and U = U(0) is the covariate level measured near the time of myocardial infarction. The proposed procedure would be valid for predicting the risk of death within t0 since experiencing myocardial infarction for a future population whose distribution of (T̃0, U0) is the same as that of (T̃, U).

In general, S may not be well defined or observable. For example, in AIDS studies with HIV-infected patients, the time zero could be the unknown time of infection and S is the time interval between infection and study entry. For such cases, the observable event time T̃ discussed in this paper can be viewed as the residual life after S. Even when S is unknown, our new proposal is still valid for future subject-specific risk prediction provided that the joint distribution of {T†, S, U(S)} for the study population is the same as that of {T†0, S0, U0(S0)} for the future population, where T† is the event time since time zero, and T̃ = T† − S is the residual life from the entry time. Specifically, one can make inferences about the risk function

Here, the probability is taken over T†0, U0(S0), S0 and the observed data. The quantity τ (v; t0) is the event rate by t0 among the subgroup of patients who were event-free at study entry with 𝒫̂ {U0(S0)} = v.

When U(s) is measured repeatedly over time, the prediction of residual life with longitudinal markers is an interesting yet challenging problem. While this article focuses on the relatively simple objective of predicting survival time with the baseline biomarker value U(0), it is often of interest to predict the residual life repeatedly based on a periodically measured time-dependent biomarker U(s). In such a case, it is desirable to make predictions based on a fitted global model describing the dynamic association between the longitudinal markers and survival time. However, it is generally difficult even to specify a coherent prediction model for such a setting. For example, imposing the commonly used proportional hazards or accelerated failure time model on the relationship between the most recent marker value and residual life over a time span of interest generally leads to an inconsistent model (Jewell & Nielsen, 1993, Theorem 1). See Jewell & Nielsen (1993) and Mammen & Nielsen (2007) for excellent discussions on the prediction of survival times with time-varying markers.

Acknowledgments

The authors are grateful to the editor and the reviewers for their insightful comments on the article. The work was partially supported by grants from the National Institutes of Health, U.S.A.

Appendix

In the Appendix, we use standard notation for the empirical process: ℙn and ℙ represent the expectation with respect to the empirical distribution generated by {(Ti, δi, Xi), i = 1, . . ., n} and the distribution generated by (T, δ, X), respectively. Similarly, 𝔾n = n1/2(ℙn − ℙ). We assume that the covariate X is bounded, h = O(n−ν) with 1/5 < ν < 1/2, K(x) is a symmetric smooth kernel function with a bounded support [−1, 1] and ∫ K̇(x)2dx < ∞, where K̇(x) = dK(x)/dx. For convenience, we use the following notation: Kj (x) = K (x)xj, Kj,h(x) = Kj (x/h)/h and . Unless noted otherwise, suprema are taken over [ψ−1(ρl + h), ψ−1(ρr − h)] for v and over [0, t0] for t.
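As a concrete reading of the kernel notation above, the following sketch codes Kj(x) = K(x)xj and Kj,h(x) = Kj(x/h)/h for one admissible-looking kernel. The Epanechnikov kernel is an assumption made here for illustration (it is symmetric, supported on [−1, 1] and has a square-integrable derivative, but is not differentiable at ±1); the constant `c` and the default `nu` in the bandwidth helper are likewise hypothetical.

```python
import numpy as np

def K(x):
    """Epanechnikov kernel: symmetric, supported on [-1, 1] (an assumed choice)."""
    x = np.asarray(x, dtype=float)
    return np.where(np.abs(x) <= 1.0, 0.75 * (1.0 - x**2), 0.0)

def K_j(x, j):
    """K_j(x) = K(x) * x^j."""
    x = np.asarray(x, dtype=float)
    return K(x) * x**j

def K_jh(x, j, h):
    """K_{j,h}(x) = K_j(x / h) / h."""
    return K_j(np.asarray(x, dtype=float) / h, j) / h

def bandwidth(n, nu=0.3, c=1.0):
    """h = c * n^(-nu) with 1/5 < nu < 1/2; c and the default nu are hypothetical."""
    if not 0.2 < nu < 0.5:
        raise ValueError("nu must lie strictly between 1/5 and 1/2")
    return c * n ** (-nu)
```

With this scaling, K0,h integrates to one for any h > 0 and K1,h integrates to zero by symmetry, which is what makes the first-order bias terms vanish in kernel arguments of this type.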

Appendix A

Asymptotic properties of τ̂(v; t0)

We first derive the asymptotic properties of τ̂(v; t0), for v ∈ [ψ−1(ρl + h), ψ−1(ρr − h)]. Without loss of generality, we choose ψ(x) = log{− log(1 − x)}. Thus, , ψ{𝒫̂(Ui)} = γ̂TX+i, and ψ {𝒫̂(Ui)} − ψ {𝒫̄(Ui)} = Op(n−1/2). Let ṽ = ψ(v), λv(t) be the hazard function of T̃ conditional on , ; ζ(·) be the density function of ; and . In view of τ̂(v; t0) = 1 − exp{−Λ̂v(t)} and the delta method, we next establish the asymptotic properties of Λ̂v(t). Furthermore, we will repeatedly use the fact that

| (A1) |

where {(Ei, Fi)}i=1,...,n are independent and identically distributed realizations of (E, F), satisfying supe0 (|F|s | E = e0) < ∞, for all s > 0.

To derive asymptotic properties of Λ̂v (t0), we first show that there is no additional variability due to γ̂. To this end, we write Λ̂v(t0) = Λ̂v(t0, γ̂) and aim to show that if γ̂ = γ0 + Op(n−1/2),

| (A2) |

where

| (A3) |

; ; Dvi (γ) = ψ{g(γTX+i)} − ψ(v); and . We next show that

Since ,

where is a class of functions indexed by x, t and δ. Furthermore, ℋδ is uniformly bounded by an envelope function of order δ1/2 with respect to the L2 norm. By the maximal inequality (Van der Vaart & Wellner, 1996, Theorem 2.14.2) and |γ̂ − γ0| = Op(n−1/2), we have h−1n−1/2||𝔾n||ℋδ ≲ Op{h−1n−1/2n−1/4 log(n)}. It follows that . Consequently, by (A1),

where and . Next, we note that

where

We next bound these three terms. For the first term, ∊̂1 ⩽ op{(nh)−1/2}ℙn{Kh(γ̂TX+ − ṽ)N(t0)} = op{(nh)−1/2}. For the second term, , which is bounded by Op{h−1(nh)−1/2 log(n)1/2 + h} Op(n−1/2) = op{(nh)−1/2}. For the last term,

Therefore, . Similarly, , and thus (A2) holds. On the other hand, following Li & Doss (1995), we can show that supv |Λ̂v(t0, γ0) − Λv(t0)| → 0, in probability. It follows that supv |Λ̂v(t0) − Λv(t0)| ⩽ supv |Λ̂v(t0, γ̂) − Λ̂v(t0, γ0)| + supv |Λ̂v(t0, γ0) − Λv(t0)| → 0, in probability. This establishes the uniform consistency of Λ̂v(t0).

We next derive the asymptotic distribution of 𝒲̂v(t0) = (nh)1/2{Λ̂v(t0) − Λv(t0)}. From (A2), we have 𝒲̂v (t0) = 𝒲̃v(t0) + op(1), where 𝒲̃v(t0) = (nh)1/2{Λ̂v(t0, γ0) − Λv(t0)}. Noting that n1/2h5/2 = op(1) and the decomposition that

we have

By integration by parts and a maximal inequality for empirical processes, we have

uniformly in ṽ. Therefore,

| (A4) |

For any fixed v or ṽ, by the martingale central limit theorem, , in distribution, as n → ∞, where . It follows that for any fixed v, 𝒲̂v(t) converges in distribution to .
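To make the delta-method step mentioned at the start of this appendix explicit, the display below sketches how a normal limit for 𝒲̂v(t0) carries over to τ̂(v; t0) = 1 − exp{−Λ̂v(t0)}. It assumes the limit is mean-zero normal with variance written σv2(t0), borrowing the symbol used in Appendix B; the quantile z_{1−α/2} and the interval form are introduced here for illustration and need not coincide with the interval used in the article.

```latex
\begin{align*}
(nh)^{1/2}\{\hat\tau(v;t_0) - \tau(v;t_0)\}
  &= (nh)^{1/2}\bigl[\exp\{-\Lambda_v(t_0)\} - \exp\{-\hat\Lambda_v(t_0)\}\bigr] \\
  &= \exp\{-\Lambda_v(t_0)\}\,\hat{\mathcal{W}}_v(t_0) + o_p(1) \\
  &\to N\bigl(0,\ \{1-\tau(v;t_0)\}^2\,\sigma_v^2(t_0)\bigr)
     \quad \text{in distribution.}
\end{align*}
% One possible pointwise interval, built on the cumulative hazard scale
% and mapped back through the monotone transformation 1 - exp(-x):
\[
\Bigl[\,1-\exp\bigl\{-\hat\Lambda_v(t_0) + z_{1-\alpha/2}\,(nh)^{-1/2}\hat\sigma_v(t_0)\bigr\},\;
      1-\exp\bigl\{-\hat\Lambda_v(t_0) - z_{1-\alpha/2}\,(nh)^{-1/2}\hat\sigma_v(t_0)\bigr\}\Bigr]
\]
```

The second line uses a first-order Taylor expansion of exp(−x) around Λv(t0); the remainder is of order (nh)1/2{Λ̂v(t0) − Λv(t0)}2 and is therefore op(1).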

To demonstrate the validity of the resampling variance estimator, we define

| (A5) |

where

and for any vector x, x⊗0= 1, x⊗1 = x and x⊗2= xxT. It is straightforward to show that n1/2(γ̂* − γ̂) conditional on the data and n1/2(γ̂ − γ0) converge to the same limiting distribution. From γ̂* = γ0 + Op(n−1/2) and (A2), (4) = n−1/2h1/2 . Thus the variance estimator for (nh)1/2{Λ̂v(t0) − Λv(t0)} is

which converges to in probability.

Appendix B

Justification for the validity of the confidence band for τ̂(v; t0)

We first justify that, with proper standardization, W = supv |(nh)1/2{Λ̂v(t0) − Λv(t0)}/σ̂v(t0)| converges weakly. It follows from (A4) and the consistency of σ̂v(t0) for σv(t0) that

This, together with the continuity of , implies that

where . It follows from an argument similar to that in Bickel & Rosenblatt (1973) that pr{an(W − dn) < x} → exp{−2 exp(−x)}, where

To justify the validity of the resampling procedure for constructing the confidence band, we consider

Again, since |γ̂* − γ̂| = Op(n−1/2), from (A2), we have

It follows from the same argument as given in Tian et al. (2005) that

in probability as n → ∞. Therefore, the conditional distribution of an(W* − dn) can be used to approximate the distribution of an(W − dn) for large n.
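The way such a resampling approximation is typically turned into a band can be sketched as follows. This is a minimal illustration, assuming that perturbed realizations of the cumulative hazard estimate on a finite grid of v have already been generated (the array `lam_star` is a hypothetical input) and that standard errors here are on the scale of Λ̂v(t0) itself rather than of the (nh)1/2-normalized process.

```python
import numpy as np

def sup_band(lam_hat, se_hat, lam_star, alpha=0.05):
    """Simultaneous band for tau(v; t0) over a grid of v from resampled curves.

    lam_hat  : (m,) estimated cumulative hazards on a grid of v
    se_hat   : (m,) standard error estimates for lam_hat
    lam_star : (B, m) perturbed/resampled versions of lam_hat (hypothetical input)
    """
    lam_hat = np.asarray(lam_hat, dtype=float)
    se_hat = np.asarray(se_hat, dtype=float)
    lam_star = np.asarray(lam_star, dtype=float)

    # Supremum over the grid of the standardized deviation, one value per resample.
    w_star = np.max(np.abs(lam_star - lam_hat) / se_hat, axis=1)
    c = np.quantile(w_star, 1.0 - alpha)  # empirical cutoff for the supremum

    # Band on the cumulative hazard scale, mapped to tau = 1 - exp(-Lambda).
    lower = 1.0 - np.exp(-(lam_hat - c * se_hat))
    upper = 1.0 - np.exp(-(lam_hat + c * se_hat))
    return np.clip(lower, 0.0, 1.0), np.clip(upper, 0.0, 1.0), c
```

Because an and dn are deterministic, taking an empirical quantile of W* directly gives the same cutoff as taking the corresponding quantile of an(W* − dn) and transforming back, which is why the normalizing constants do not appear in the sketch.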

References

- Anavekar N, McMurray J, Velazquez E, Solomon S, Kober L, Rouleau J, White H, Nordlander R, Maggioni A, Dickstein K, Zelenkofske S, Leimberger J, Califf R, Pfeffer M. Relation between renal dysfunction and cardiovascular outcomes after myocardial infarction. New Engl J Med. 2004;351:1285–95. doi: 10.1056/NEJMoa041365.

- Anderson K, Wilson P, Odell P, Kannel W. An updated coronary risk profile: a statement for health professionals. Circulation. 1991;83:356–62. doi: 10.1161/01.cir.83.1.356.

- Bickel PJ, Rosenblatt M. On some global measures of the deviations of density function estimates (Corr: V3 p1370). Ann Statist. 1973;1:1071–95.

- Brindle P, Emberson J, Lampe F, Walker M, Whincup P, Fahey T, Ebrahim S. Predictive accuracy of the Framingham coronary risk score in British men: prospective cohort study. Br Med J. 2003;327:1267. doi: 10.1136/bmj.327.7426.1267.

- Cai T, Tian L, Wei L. Semiparametric Box-Cox power transformation models for censored survival observations. Biometrika. 2005;92:619–32.

- Cook N. Use and misuse of the receiver operating characteristic curve in risk prediction. Circulation. 2007;115:928–35. doi: 10.1161/CIRCULATIONAHA.106.672402.

- Cox D. Regression models and life-tables (with discussion). J. R. Statist. Soc. B. 1972;34:187–220.

- D’Agostino R, Sr, Grundy S, Sullivan L, Wilson P. Validation of the Framingham coronary heart disease prediction scores: results of a multiple ethnic groups investigation. J Am Med Assoc. 2001;286:180–7. doi: 10.1001/jama.286.2.180.

- Du Y, Akritas M. IID representations of the conditional Kaplan–Meier process for arbitrary distributions. Math Meth Statist. 2002;11:152–82.

- Eagle K, Lim M, Dabbous O, Pieper K, Goldberg R, Van De Werf F, Goodman S, Granger C, Steg P, Gore J, Budaj A, Avezum A, Flather MD, Fox KA, GRACE Investigators. A validated prediction model for all forms of acute coronary syndrome: estimating the risk of 6-month postdischarge death in an international registry. J Am Med Assoc. 2004;291:2727–33. doi: 10.1001/jama.291.22.2727.

- Fan J, Gijbels I. Data-driven bandwidth selection in local polynomial regression: variable bandwidth selection and spatial adaptation. J. R. Statist. Soc. B. 1995;57:371–94.

- Fan J, Gijbels I. Local Polynomial Modelling and Its Applications. London: Chapman & Hall; 1996.

- Gerds T, Schumacher M. Consistent estimation of the expected Brier score in general survival models with right-censored event times. Biomet J. 2006;48:1029–40. doi: 10.1002/bimj.200610301.

- Härdle W. Applied Nonparametric Regression. Cambridge UK: Cambridge University Press; 1990.

- Hjort N. On inference in parametric survival data models. Int Statist Rev. 1992;60:355–87.

- Jewell N, Nielsen J. A framework for consistent prediction rules based on markers. Biometrika. 1993;80:153–64.

- Jin Z, Ying Z, Wei L. A simple resampling method by perturbing the minimand. Biometrika. 2001;88:381–90.

- Kalbfleisch JD, Prentice RL. The Statistical Analysis of Failure Time Data. New York: John Wiley & Sons; 2002.

- Li G, Doss H. An approach to nonparametric regression for life history data using local linear fitting. Ann Statist. 1995;23:787–823.

- Mammen E. Bootstrap, wild bootstrap, and asymptotic normality. Prob. Theory Rel. Fields. 1992;93:439–55.

- Mammen E, Nielsen J. A general approach to the predictability issue in survival analysis with applications. Biometrika. 2007;94:873–92.

- Nielsen J. Marker dependent kernel hazard estimation from local linear estimation. Scand Actuar J. 1998;2:113–24.

- Nielsen J, Linton O. Kernel estimation in a nonparametric marker dependent hazard model. Ann Statist. 1995;23:1735–48.

- Nielsen J, Tanggaard C. Boundary and bias correction in kernel hazard estimation. Scand J Statist. 2001;28:675–98.

- Park B, Kim W, Ruppert D, Jones M, Signorini D, Kohn R. Simple transformation techniques for improved non-parametric regression. Scand J Statist. 1997;24:145–63.

- Park Y, Wei L. Estimating subject-specific survival functions under the accelerated failure time model. Biometrika. 2003;90:717–23.

- Pencina M, D’Agostino R, Sr, D’Agostino R, Jr, Vasan R. Evaluating the added predictive ability of a new marker: from area under the ROC curve to reclassification and beyond. Statist Med. 2008;27:157–72. doi: 10.1002/sim.2929.

- Pepe M, Janes H, Longton G, Leisenring W, Newcomb P. Limitations of the odds ratio in gauging the performance of a diagnostic, prognostic, or screening marker. Am J Epidemiol. 2004;159:882–90. doi: 10.1093/aje/kwh101.

- Pfeffer M, McMurray J, Velazquez E, Rouleau J, Køber L, Maggioni A, Solomon S, Swedberg K, Van de Werf F, White H, Leimberger J, Henis M, Edwards S, Zelenkofske S, Sellers M, Califf R, for the Valsartan in Acute Myocardial Infarction Trial Investigators. Valsartan, captopril, or both in myocardial infarction complicated by heart failure, left ventricular dysfunction, or both. New Engl J Med. 2003;349:1893–906. doi: 10.1056/NEJMoa032292.

- Solomon S, Skali H, Anavekar N, Bourgoun M, Barvik S, Ghali JK, Warnica JW, Khrakovskaya M, Arnold JMO, Schwartz Y, Velazquez EJ, Califf RM, McMurray JV, Pfeffer MA. Changes in ventricular size and function in patients treated with valsartan, captopril, or both after myocardial infarction. Circulation. 2005;111:3411–19. doi: 10.1161/CIRCULATIONAHA.104.508093.

- Tian L, Zucker D, Wei L. On the Cox model with time-varying regression coefficients. J Am Statist Assoc. 2005;100:172–83.

- Uno H, Cai T, Tian L, Wei LJ. Evaluating prediction rules for t-year survivors with censored regression models. J Am Statist Assoc. 2007;102:527–37.

- Van der Vaart A, Wellner J. Weak Convergence and Empirical Processes. New York: Springer; 1996.

- Wand M, Marron J, Ruppert D. Transformation in density estimation (with comments). J Am Statist Assoc. 1991;86:343–61.

- Wang T, Gona P, Larson M, Tofler G, Levy D, Newton-Cheh C, Jacques P, Rifai N, Selhub J, Robins S, Benjamin EJ, D’Agostino RB, Vasan RS. Multiple biomarkers for the prediction of first major cardiovascular events and death. New Engl J Med. 2006;355:2631–9. doi: 10.1056/NEJMoa055373.

- Ware J. The limitations of risk factors as prognostic tools. New Engl J Med. 2006;355:2615–7. doi: 10.1056/NEJMp068249.

- Wu C. Jackknife, bootstrap and other resampling methods in regression analysis. Ann Statist. 1986;14:1261–95.

- Zhang X, Attia J, D’Este C, Yu X, Wu X. A risk score predicted coronary heart disease and stroke in a Chinese cohort. J Clin Epidemiol. 2005;58:951–8. doi: 10.1016/j.jclinepi.2005.01.013.