Summary

Phase I clinical studies are experiments in which a new drug is administered to humans to determine the maximum dose that causes toxicity with a target probability. Phase I dose-finding is often formulated as a quantile estimation problem. For studies with a biological endpoint, it is common to define toxicity by dichotomizing the continuous biomarker expression. In this article, we propose a novel variant of the Robbins–Monro stochastic approximation that utilizes the continuous measurements for quantile estimation. The Robbins–Monro method has seldom seen clinical applications, because it does not perform well for quantile estimation with binary data and it works with a continuum of doses that are generally not available in practice. To address these issues, we formulate the dose-finding problem as root-finding for the mean of a continuous variable, for which the stochastic approximation procedure is efficient. To accommodate the use of discrete doses, we introduce the idea of virtual observation that is defined on a continuous dosage range. Our proposed method inherits the convergence properties of the stochastic approximation algorithm and its computational simplicity. Simulations based on real trial data show that our proposed method improves accuracy compared with the continual re-assessment method and produces results robust to model misspecification.

Some key words: Continual re-assessment method, Dichotomized data, Discrete barrier, Heteroscedasticity, Robust estimation, Semiparametric mean-variance relationship

1. Introduction

The early phase clinical development of a new drug typically involves testing several doses of the new drug, with safety as the primary concern. A specific objective is to identify the maximum tolerated dose, defined as the largest dose that causes toxicity with a prespecified probability. Traditionally, the maximum tolerated dose is approached from a low dose according to a 3 + 3 algorithm whereby escalation occurs after cohorts of three nontoxic observations. As this traditional algorithm is criticized for its arbitrary statistical properties, several authors propose novel designs, such as the continual re-assessment method (O’Quigley et al., 1990) and rigorous extension of the 3 + 3 algorithm (Lin & Shih, 2001; Cheung, 2007) to address dose-finding as a quantile estimation problem. These designs, utilizing binary toxicity data for estimation, provide clinicians with viable options for testing drugs in general.

In clinical studies with a biological safety endpoint, the toxicity outcome is often defined by dichotomizing a quantitative biomarker expression. Take for example the Neuroprotection with Statin Therapy for Acute Recovery Trial, NeuSTART, a recent phase I trial of lovastatin in acute ischemic stroke patients conducted at Columbia University (Elkind et al., 2008). In this trial, high dose lovastatin was administered to patients for three days followed by a standard dose for 30 days. A primary safety concern for administering high dose lovastatin is toxicity measured by the elevation in liver enzyme alanine aminotransferase. In NeuSTART, each patient was evaluated up to day 30 after the start of treatment, and toxicity was said to occur if the patient’s post-treatment peak transferase exceeded 123 U/L, i.e. three times the upper normal limit. The objective of the trial was to estimate the dose with toxicity probability closest to 10%. A two-stage continual re-assessment method (Cheung, 2005) was used for dose escalation in 33 subjects at five high-dose levels. Table 1 summarizes the liver function outcomes by dose group in the study: two patients at dose level 3 exhibited elevated liver function, and the trial did not reach the highest dose. According to the continual re-assessment method, dose level 4 was estimated to be the maximum tolerated dose with a 13% toxicity rate. However, an isotonic fit to the continuous transferase measurements suggested a much smaller rate of 4% for dose level 4. Details of the continual re-assessment method and the isotonic fit are described in § 5.

Table 1.

Summary of the liver function data in NeuSTART

| Dose k | Cohort size | Patients with toxicity | log(alt) mean | log(alt) sd | Isotonic estimate | Isotonic sd | Toxicity rate, crm | Toxicity rate, isotonic |
|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 0 | 3.24 | 0.23 | 3.24 | 0.23 | 0.01 | 0.00 |
| 2 | 10 | 0 | 3.25 | 0.42 | 3.25 | 0.42 | 0.03 | 0.00 |
| 3 | 12 | 2 | 3.78 | 0.72 | 3.63 | 0.66 | 0.06 | 0.04 |
| 4 | 8 | 0 | 3.42 | 0.54 | 3.63 | 0.66 | 0.13 | 0.04 |
| 5 | 0 | – | – | – | – | – | 0.24 | – |

alt, peak alanine aminotransferase; crm, continual re-assessment method; sd, standard deviation.

In retrospect, we realized that statistical efficiency might have been lost by using only the dichotomized data, and that the original measurements might be utilized to retain information. In this article, we propose a variant of the Robbins–Monro (1951) stochastic approximation that uses the quantitative measurements for dose-finding in phase I trials. The Robbins–Monro procedure is a stochastic root-finding method, and is a natural method of choice for the quantile estimation objective in dose-finding (Anbar, 1977, 1984). While their paper has generated a voluminous statistical and engineering literature, the stochastic approximation has seldom been considered for use in clinical trials; exceptions are Anbar (1984) and O’Quigley & Chevret (1991). Two considerations render application of the Robbins–Monro procedure unsuited to many phase I trial settings. First, from a statistical viewpoint, the method has been demonstrated to be inferior to parametric procedures such as the maximum likelihood recursion for binary data (Wu, 1985). In our current application where the binary outcome is defined by a continuous measurement, this difficulty can be resolved if we make appropriate use of the original continuous data. Second, the method entails the availability of a continuum of doses. This is neither feasible in practice nor preferred for reporting purposes in publications. In addition, in trials involving combination of treatments, each subsequently higher regimen may involve incrementing doses of different treatments and hence there is no natural scale of dosage. This important point has been noted in O’Quigley & Chevret (1991) and Shen & O’Quigley (2000), both of whom address the problem by rounding the stochastic approximation output to its closest dose at each step. We shall return to this when we introduce a novel concept called virtual observation.

2. Stochastic approximation with virtual observations

2.1. Problem formulation

Consider a trial in which patients are enrolled in small cohorts of size $m$. Let $X_i^*$ and $X_i$ respectively denote the dose assigned to the $i$th cohort and the actual dose given to patients. The assigned dose $X_i^*$ can take on any real number from a conceptual scale that represents an ordering of doses or regimens. Suppose for the moment that $X_i$ can take on any real number and that $X_i = X_i^*$ for all $i$. Let $Y_{ij}$ be the safety measurement of the $j$th patient in the $i$th cohort; the patient is said to experience toxicity if $Y_{ij}$ exceeds some threshold $t_0$. The objective of a dose-finding study is the identification of $\theta$ such that $\pi(\theta) = p$, where $\pi(x) = P(Y_{ij} > t_0 \mid X_i = x)$ is the probability of toxicity at dose $x$.

Assume that Yij = M(Xi) + σ(Xi)∊ij, where the ∊ s are independently distributed according to a common distribution G with E(∊) = 0 and var(∊) = 1, so that M(x) and σ (x), respectively, denote the mean and the standard deviation of the safety outcome at dose x. Both M(x) and σ (x) are unspecified. Under this regression model, the toxicity probability at dose x,

$$\pi(x) = 1 - G\left\{\frac{t_0 - M(x)}{\sigma(x)}\right\}, \tag{1}$$

and the target dose θ can be re-expressed as the solution to the equation f (x) ≡ M(x) + zpσ (x) = t0, where zp is the upper pth percentile of G. For brevity, suppose that π (x) and f (x) are strictly increasing on the relevant dose range so that the root θ exists uniquely. This monotone dose-toxicity assumption is reasonable in phase I trials, although the main results in this article hold under the much weaker assumptions stated in the Appendix. In practice, the actual dose given is confined to a discrete set of K levels, denoted by {1, . . . , K}, with π (1) < ⋯ < π (K) and f (1) < ⋯ < f (K). As such, it is possible that π (k) ≠ p and f (k) ≠ t0 for all k. One may then define the maximum tolerated dose by ν1 = arg mink |π (k) − p| as in O’Quigley et al. (1990). Alternatively, under the current formulation, it is natural to define the maximum tolerated dose by ν2 = arg mink | f (k) − t0|. The objectives ν1 and ν2 represent the closest doses to θ on two different scales, and are not necessarily identical.
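As a numerical illustration of (1) and of the root-finding formulation, the following Python sketch takes G to be standard normal, which is an assumption made only for this example since the model leaves G general. The function names and the value σ = 0.6 are hypothetical. The sketch checks that a dose at which f(x) = t0 has toxicity probability p.

```python
from math import log
from statistics import NormalDist

G = NormalDist()  # assumed noise distribution: standard normal

def toxicity_prob(mean, sd, t0):
    """pi(x) = 1 - G{(t0 - M(x)) / sigma(x)}, as in equation (1)."""
    return 1.0 - G.cdf((t0 - mean) / sd)

def f(mean, sd, p):
    """f(x) = M(x) + z_p * sigma(x), with z_p the upper p-th percentile of G."""
    z_p = G.inv_cdf(1.0 - p)
    return mean + z_p * sd

t0, p = log(123), 0.10
# Hypothetical dose: sd fixed at 0.6 and mean chosen so that f(x) = t0.
sd = 0.6
mean = t0 - G.inv_cdf(1.0 - p) * sd

print(toxicity_prob(mean, sd, t0))   # toxicity probability at the root of f
print(f(mean, sd, p) - t0)           # distance of f from the threshold
```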

Lemma 1. Define ck such that G(ck) = 1 − π (k) for k = 1, . . . , K. (a) Assume π (ν1) ⩾ p. If π(k) < 1 − G{zp + σ (ν1)(zp − cν1)/σ(k)} for all k < ν1, then ν2 = ν1; else, ν2 = ν1 − 1.

(b) Assume π (ν1) ⩽ p. If π (k) > 1 − G{zp + σ (ν1)(zp − cν1)/σ (k)} for all k > ν1, then ν2 = ν1;else, ν2 = ν1 + 1.

Therefore, ν2 = ν1 if π (ν1) = p. Lemma 1 also implies that ν2 = ν1 when the dose-toxicity curve is steep around ν1. In other words, when ν2 ≠ ν1, π (ν1) and π (ν2) will be quite close to each other, thus rendering both objectives similar. For clarity in presentation, we will focus on the estimation of ν1 in accordance with the conventional phase I objective.

2.2. The procedure

Let $U_i = \bar Y_i + [E\{S_i/\sigma(X_i)\}]^{-1} z_p S_i$, where $\bar Y_i$ and $S_i$ are the respective sample mean and standard deviation of the measurements in the $m$ subjects of cohort $i$, so that $E(U_i \mid X_i) = f(X_i)$. Since $S_i/\sigma(X_i)$ is a pivotal quantity that depends on the $\epsilon$s but not on $M$ and $\sigma$, we can derive $E\{S_i/\sigma(X_i)\}$ for any given distribution $G$ either by analytical calculation or by simulation. Define, for the $i$th cohort, a virtual observation $V_i = U_i + \beta(X_i^* - X_i)$ made at the assigned dose $X_i^*$ for some $\beta > 0$. Then the next assigned dose is calculated via a stochastic approximation recursion based on the virtual observation:

$$X_{i+1}^* = X_i^* - (i\beta)^{-1}(V_i - t_0), \tag{2}$$

and the next actual dose is $X_{i+1} = C(X_{i+1}^*)$, where $C(x) = k$ if $l_k \le x < l_{k+1}$ with $l_1 = -\infty$, $l_{K+1} = \infty$, and $l_k = k - 0.5$ for $k = 2, \ldots, K$. That is, $C(x)$ is the rounded value of $x$ if $0.5 \le x < K + 0.5$.
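A single update of the procedure can be sketched in Python as follows. This is a minimal illustration and not the authors' software: the function names are ours, the step size is taken to be 1/(iβ), and the cohort measurements in the usage lines are hypothetical.

```python
import math
from statistics import mean, stdev

def round_to_dose(x, K):
    """C(x): nearest dose level, clamped to the available levels 1, ..., K."""
    return min(K, max(1, math.floor(x + 0.5)))

def next_assigned_dose(x_star, x_actual, y, i, beta, t0, z_p, e_ratio):
    """One step of the virtual observation recursion for cohort i.

    x_star   : assigned (continuous) dose of cohort i
    x_actual : dose actually given, i.e. round_to_dose(x_star, K)
    y        : the m measurements observed in cohort i
    e_ratio  : E{S_i / sigma(X_i)} under the assumed distribution G
    """
    u = mean(y) + z_p * stdev(y) / e_ratio   # U_i
    v = u + beta * (x_star - x_actual)       # virtual observation V_i
    return x_star - (v - t0) / (i * beta)    # next assigned dose

# Hypothetical cohort of m = 3 log-transferase values observed at dose 4.
x_next = next_assigned_dose(4.0, 4, [3.1, 3.4, 3.6], i=5, beta=0.29,
                            t0=math.log(123), z_p=1.282, e_ratio=0.886)
print(x_next, round_to_dose(x_next, K=5))
```

Keeping the continuous assigned dose and the rounded actual dose as separate arguments mirrors the distinction between the two quantities in the recursion.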

There are various ways to initiate the recursion. One could start the first cohort at the lowest dose, i.e. $X_1^* = X_1 = 1$, where $X_i^*$ denotes the assigned dose, or at the prior maximum tolerated dose as in O’Quigley et al. (1990). One may also institute a predetermined escalation sequence until the first sign of toxicity appears. Specifically, let $X_i^* = X_i = x_{0,i}$ for the $i$th cohort, where $x_{0,i}$ is a nondecreasing sequence of dose levels with $x_{0,i} \le x_{0,i+1}$, and switch to recursion (2) once a toxicity is observed. While a two-stage strategy is not necessary for the method’s implementation, it may be practical to dictate the pace of escalation in accordance with the clinician’s preference when there is no toxicity (Cheung, 2005).

Another way to avoid aggressive escalation is by restricting the trial from skipping an untested dose. That is, the next assigned dose would be $\min(X_{i+1}^*, \max_{1 \le n \le i} X_n + 1)$ instead of $X_{i+1}^*$, so that the next actual dose is at most one level above the highest dose tested so far. If this restriction is to be applied, then it should be only to the first few cohorts because the increment diminishes as $i$ increases.

2.3. Discrete barrier: an illustration

As an alternative to the virtual observation recursion (2), we may generate a discretized stochastic approximation of the given dose Xi based on the actual observations Ui by taking the closest dose to the value obtained from a recursion output; that is,

$$X_{i+1} = C\{X_i - (i\beta)^{-1}(U_i - t_0)\}. \tag{3}$$

O’Quigley & Chevret (1991) and Shen & O’Quigley (2000) take a similar discretization approach to accommodate the use of discrete doses in the context of different dose-finding designs. The discretized stochastic approximation (3) is straightforward and at first looks reasonable, but may indefinitely confine the design sequence to a wrong dose. Consider a two-stage design for five dose levels with $t_0 = 4.81$. In the event of no toxicity, i.e. $Y_{ij} \le 4.81$, escalation will proceed according to an initial sequence: $x_{0,1} = 1$, $x_{0,2} = 2$, $x_{0,3} = x_{0,4} = 3$, $x_{0,5} = x_{0,6} = x_{0,7} = 4$ and $x_{0,i} = 5$ for $i \ge 8$. Once the first toxicity is seen, recursion (3) with $\beta = 0.05$ will be used to assign doses. Take a simple instance with negligible variability so that $U_i = f(X_i)$ with $f(1) = 4.20$, $f(2) = 4.67$, $f(3) = 4.80$, $f(4) = 4.93$ and $f(5) = 5.30$; hence, the correct dose is level 3. The outcome sequence will follow the initial design and yield $(X_1, U_1) = (1, 4.20)$, $(X_2, U_2) = (2, 4.67)$, $(X_3, U_3) = (X_4, U_4) = (3, 4.80)$ and $(X_5, U_5) = (4, 4.93)$, after which recursion (3) will come into effect and give $X_6 = C\{4 - (5 \times 0.05)^{-1}(4.93 - 4.81)\} = 4$, and $U_6 = 4.93$. It is easy to see that the remaining patients will receive dose 4. If, instead, we use the virtual observation recursion (2) after the initial design, then the assigned dose is $X_6^* = 3.52$, which gives $X_6 = 4$, $U_6 = 4.93$ and $V_6 = 4.906$, followed by $X_7^* = 3.52 - (6 \times 0.05)^{-1}(4.906 - 4.81) = 3.20$ and $X_7 = 3$. The remaining process will continue at the correct dose 3.
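The noise-free illustration can be replayed with a short Python sketch. It assumes a step size of 1/(iβ), with i the index of the cohort just observed, and treats a toxicity as U exceeding t0, which is valid only because variability is taken to be negligible here. The function and variable names are ours.

```python
import math

F = {1: 4.20, 2: 4.67, 3: 4.80, 4: 4.93, 5: 5.30}  # f(k) from the illustration
T0, BETA, K = 4.81, 0.05, 5
INITIAL = [1, 2, 3, 3, 4, 4, 4]  # x_{0,i}; dose 5 from the eighth cohort on

def C(x):
    """Round to the nearest available dose level."""
    return min(K, max(1, math.floor(x + 0.5)))

def run(virtual, n_cohorts=20):
    """Return the actual doses given under recursion (2) or recursion (3)."""
    doses, x_star, switched = [], None, False
    for i in range(1, n_cohorts + 1):
        if not switched:
            x = INITIAL[i - 1] if i <= len(INITIAL) else K
            x_star = float(x)          # assigned dose equals actual dose
        else:
            x = C(x_star)
        doses.append(x)
        u = F[x]                       # noise-free observation U_i = f(X_i)
        if u > T0:
            switched = True            # first toxicity ends the initial stage
        if switched:
            if virtual:
                v = u + BETA * (x_star - x)            # virtual observation
                x_star = x_star - (v - T0) / (i * BETA)
            else:
                x_star = x - (u - T0) / (i * BETA)     # rounded at next step
    return doses

doses_virtual = run(virtual=True)
doses_discretized = run(virtual=False)
print(doses_virtual)
print(doses_discretized)
```

In this sketch the discretized recursion never leaves dose 4 once it gets there, whereas the virtual observation recursion moves to dose 3 and stays.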

To see how the virtual observation recursion corrects the problem, consider another instance with $f(x) = t_0 + \beta(x - \theta)$, where $\theta$ is an integer, and $U_i$ is observed with noise $Z_i$, i.e. $U_i = f(X_i) + Z_i$. If the $i$th dose $X_i$ is an overdose, namely, $X_i = \theta + \Delta_i$ for some $\Delta_i > 0$, then the update according to (3) is $X_{i+1} = X_i + [C\{(1 - i^{-1})\Delta_i - (i\beta)^{-1}Z_i\} - \Delta_i]$. As $i$ grows, the second term is likely to equal zero and does not contribute to future updates. A discrete barrier is thus built by rounding. The virtual observation recursion (2) updates the assigned dose $X_i^*$ with $X_{i+1}^* = X_i^* - i^{-1}\Delta_i^* - (i\beta)^{-1}Z_i$, where $\Delta_i^* = X_i^* - \theta$, because $V_i = t_0 + \beta(X_i^* - \theta) + Z_i$ in this instance. The second term, which is of the order $O(i^{-1})$, can be carried over to future updates and provides continuation to overcome the discrete barrier.

3. Design properties

The idea of virtual observation is to create an objective function $h(x) = E(V_i \mid X_i^* = x)$, where $X_i^*$ is the assigned dose, with a local slope at the available doses $\{1, \ldots, K\}$. To be precise, $h(x) = f\{C(x)\} + \beta\{x - C(x)\}$ is piecewise continuous with jumps at $\{1.5, \ldots, K - 0.5\}$ and is linearly increasing with slope $\beta$ except at the jumps. Following from the standard results of stochastic approximation (Sacks, 1958; Lai & Robbins, 1979), under the condition that $h(x) = t_0$ has a unique root at $\theta_\beta$, the virtual dose $X_n^*$ generated by (2) is consistent for $\theta_\beta$, and $X_n$ for $C(\theta_\beta)$. Furthermore, since the objective $h(x)$ is equal to the target $f(x)$ on the actual doses, i.e. $h(k) = f(k)$, we hope that the root $\theta_\beta$ will be close to $\theta$, and $C(\theta_\beta)$ to $\nu_1$.

Proposition 1. (a) Assume ν1 = ν2. If Bν1 < β < mink ≠ ν1 Bk, then Xn = ν1 eventually with probability one, where Bk = 2σ (k)|ck − zp|.

(b) Assume ν1 ≠ ν2. If β < mink ≠ ν1 Bk, then pr(Xn = ν1 or ν2 eventually) = 1 and

eventually with probability one.

Supposing cν1 ≈ zp, i.e. Bν1 ≈ 0, and the parameter β is adequately small, Proposition 1 implies that Xn will eventually recommend a neighbouring dose of ν1, if not ν1 itself. The specific choice of β can be aided by the knowledge about π and σ. While these quantities are often unknown prior to the trial, we illustrate in § 4 how β may be determined with only mild prior inputs under a semiparametric mean-variance assumption.

Proposition 1(b) prescribes how far Xn may deviate from θ on the probability scale if β is adequately small. For example, if p = 0.1 and π (ν1) = 0.08, applying Proposition 1(b) gives |π (Xn) − 0.1| ⩽ 0.024 eventually by assuming σ (ν1) = σ (ν2). The maximum deviation is only slightly larger than |π (ν1) − p| = 0.02. The deviation will be bounded above by 0.037 if we conservatively assume σ (ν1) = 1.5σ (ν2), while we would expect σ (ν1)/σ (ν2) ≈ 1 as ν1 and ν2 are neighbours.
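The two deviation figures can be checked numerically. The sketch below assumes standard normal G and that π(ν1) < p, so that ν2 lies above ν1; it uses only the defining property |f(ν2) − t0| ⩽ |f(ν1) − t0| of ν2 together with f(k) = t0 + σ(k)(zp − ck). The helper name is ours.

```python
from statistics import NormalDist

G = NormalDist()  # assumed noise distribution: standard normal

def max_deviation(p, pi_nu1, sd_ratio):
    """Largest |pi(nu2) - p| compatible with |f(nu2) - t0| <= |f(nu1) - t0|.

    sd_ratio is sigma(nu1) / sigma(nu2); pi_nu1 is assumed to be below p.
    """
    z_p = G.inv_cdf(1 - p)
    c_nu1 = G.inv_cdf(1 - pi_nu1)
    # Extreme value of c at nu2: z_p + sigma(nu1)(z_p - c_nu1) / sigma(nu2).
    c_nu2 = z_p + sd_ratio * (z_p - c_nu1)
    return abs((1 - G.cdf(c_nu2)) - p)

dev_equal = max_deviation(0.10, 0.08, 1.0)   # sigma(nu1) = sigma(nu2)
dev_larger = max_deviation(0.10, 0.08, 1.5)  # sigma(nu1) = 1.5 sigma(nu2)
print(dev_equal, dev_larger)
```

Rounded up to three decimals, the two values agree with the bounds 0.024 and 0.037 quoted in the text.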

4. Design calibration

4.1. Consistency

The virtual observation recursion is specified by a tuning parameter $\beta$. Assuming that the condition for consistency in Proposition 1 holds, we can further show that the assigned dose $X_n^*$ is asymptotically normal with mean $\theta_\beta$ and variance $\beta^{-2}\mathrm{var}(V_i \mid X_i^* = \theta_\beta)$. To minimize the asymptotic variance, therefore, we should set $\beta$ at its largest possible value that guarantees consistency. However, since the upper bound of $\beta$ depends on the unknown $\pi(k)$ and $\sigma(k)$, to simplify the calibration process, we restrict our attention to scenarios in which

$$\pi(k) \le p_L < \pi(\nu_1) = p < p_U \le \pi(k') \tag{4}$$

for k < ν1 < k′ and some prespecified limits pL, pU. This class of scenarios consists of toxicity configurations in which there is an unambiguous target with θ = ν1 = ν2 = θβ, and the toxicity probabilities at the other doses lie outside the interval (pL, pU). By not considering scenarios with π (k) ∈ (pL, pU), we are in a sense indifferent to whether such a dose should be selected as the maximum tolerated dose. Thus, the interval (pL, pU) is called the indifference interval (Cheung & Chappell, 2002).

Proposition 2. Assume that $\sigma(k)$ depends on dose $k$ only via $M(k)$ as follows: $s\{\sigma(k)\} = s\{\sigma(\theta)\} + \phi\{M(k) - M(\theta)\}$ for some $\phi \ge 0$ and some smooth function $s(\sigma)$ with $s(\sigma) \ge 0$ and $s'(\sigma) > 0$. Under (4), $X_n$ is consistent for $\theta$ if $0 < \beta/\{2\sigma(\theta)\} < w \equiv \min(z_p - z_p^2/z_L,\ z_p - z_U)$, where $z_L = G^{-1}(1 - p_L)$ and $z_U = G^{-1}(1 - p_U)$.

The consistency upper bound for β depends on the unknown σ (θ). On the other hand, we can often obtain clinical data to estimate the standard deviation σ0 of the safety measurements in a comparable population that is untreated or treated at lower doses. Since σ0 ⩽ σ (θ) by the assumption that variance increases with doses, we may use 2wσ0 as a conservative consistency upper bound for β.
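The conservative bound 2wσ0 is simple to compute. The following Python sketch assumes standard normal G and takes w = min(zp − zp²/zL, zp − zU); the function name is ours, and the inputs σ0 = 0.59, pL = 0.05 and pU = 0.15 are the values used for NeuSTART in § 5.2.

```python
from statistics import NormalDist

def consistency_bound(sigma0, p, p_lower, p_upper, G=NormalDist()):
    """Return (w, 2 * w * sigma0), the conservative upper bound for beta."""
    z_p = G.inv_cdf(1 - p)
    z_L = G.inv_cdf(1 - p_lower)
    z_U = G.inv_cdf(1 - p_upper)
    w = min(z_p - z_p ** 2 / z_L, z_p - z_U)
    return w, 2 * w * sigma0

w, beta_max = consistency_bound(sigma0=0.59, p=0.10, p_lower=0.05, p_upper=0.15)
print(round(w, 3), round(beta_max, 2))
```

A narrower indifference interval gives a smaller w and therefore a smaller admissible β.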

4.2. Robustness

Our proposed method works under a fairly general dose-toxicity relationship as described in Proposition 2, but requires us to specify a distribution $G$. To provide some assurance in an application, it is important to evaluate the method’s robustness against misspecification. Suppose we assume $\epsilon_{ij}$ follows $\tilde G$ instead of the true $G$, and generate recursion (2) based on $\tilde V_i = \bar Y_i + [E_{\tilde G}\{S_i/\sigma(X_i)\}]^{-1}\tilde z_p S_i + \beta\{X_i^* - C(X_i^*)\}$, where $X_i^*$ is the assigned dose, $E_{\tilde G}(\cdot)$ denotes expectation computed under $\tilde G$ and $\tilde G(\tilde z_p) = 1 - p$. Furthermore, let

$$z_p^* = \frac{E_G\{S_i/\sigma(X_i)\}}{E_{\tilde G}\{S_i/\sigma(X_i)\}}\,\tilde z_p$$

and $\tilde h(x) \equiv E_G(\tilde V_i \mid X_i^* = x) = M\{C(x)\} + z_p^*\,\sigma\{C(x)\} + \beta\{x - C(x)\}$. If $\beta$ is chosen such that $\tilde h(x) = t_0$ has a unique solution at $\tilde\theta_\beta$, then it can be shown that the virtual dose $X_n^*$ generated based on the $\tilde V_i$s will converge to $\tilde\theta_\beta$ and $X_n$ to $C(\tilde\theta_\beta)$.

Proposition 3. Define $\tilde\nu = \arg\min_k \tilde B_k$, where $\tilde B_k = 2\sigma(k)|c_k - z_p^*|$. (a) Under (4), $\tilde\nu = \theta$ if $p_L < 1 - G\{z_p^* + \sigma(\theta)|z_p - z_p^*|/\sigma(1)\} \equiv \tilde p_L$ and $p_U > 1 - G\{z_p^* - |z_p - z_p^*|\} \equiv \tilde p_U$.

(b) Assume that the mean-variance relationship in Proposition 2 holds, and that $p_L < \tilde p_L$ and $p_U > \tilde p_U$ under (4). Then, $X_n$ is consistent for $C(\tilde\theta_\beta) = \tilde\nu = \theta$ if $|z_p - z_p^*| < \beta/\{2\sigma(\theta)\} < \tilde w \equiv \min(z_p - z_p z_p^*/z_L,\ z_p^* - z_U)$.

Proposition 2 is a special case of Proposition 3 when $\tilde G = G$. Generally, Proposition 3 states that the false objective $\tilde\theta_\beta$ will coincide with the true $\theta$ under a steep dose-toxicity curve, where steepness is quantified by $\tilde p_L$ and $\tilde p_U$. For example, Table 2 displays the key robustness quantities for recursion (2) with standard normal $\tilde G$, $m = 3$ and $p = 0.1$ evaluated under the logistic, $t_5$, and Gumbel distributions standardized so that they have mean zero and variance one; the logistic distribution has location 0 and scale 0.55; the noise under $t_5$ is generated as a $t$-variate with 5 degrees of freedom multiplied by 0.77; the distribution function for the Gumbel distribution is $G(z) = \exp[-\exp\{(-0.45 - z)/0.78\}]$. To evaluate $\tilde p_L$ we set $\sigma(1) = 0.25\sigma(\theta)$. Table 2 shows that $\tilde p_L$ and $\tilde p_U$ are within 0.05 of the target $p$, suggesting mild conditions on the dose-toxicity curve for robust estimation of $\theta$. In addition, $|z_p - z_p^*|$ is generally small, and is much smaller than $\tilde w$, when computed with $p_L = 0.05$, $p_U = 0.15$. We thus anticipate that a wide range of $\beta$ will satisfy the consistency conditions under misspecification of the model.

Table 2.

Robustness analysis of the virtual observation recursion with standard normal G̃, m = 3 and p = 0.1

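| G | $z_p$ | $E_G\{S_i/\sigma(X_i)\}$ | $z_p^*$ | $\tilde p_L$ | $\tilde p_U$ | $\lvert z_p - z_p^* \rvert$ | $\tilde w$ |
|---|---|---|---|---|---|---|---|
| N (0, 1) | 1.282 | 0.8862 | 1.282 | 0.100 | 0.100 | 0.000 | 0.245 |
| Logistic | 1.211 | 0.8663 | 1.253 | 0.071 | 0.100 | 0.041 | 0.277 |
| t5 | 1.143 | 0.8438 | 1.220 | 0.053 | 0.100 | 0.077 | 0.250 |
| Gumbel | 1.305 | 0.8514 | 1.231 | 0.076 | 0.119 | 0.073 | 0.265 |

$z_p^*$, the assumed percentile $\tilde z_p$ multiplied by $E_G\{S_i/\sigma(X_i)\}/E_{\tilde G}\{S_i/\sigma(X_i)\}$.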
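As a check on how the entries of Table 2 fit together, the Python sketch below recomputes the logistic row. It takes the two expectations 0.8663 and 0.8862 from the table as given rather than deriving them, uses the closed-form quantile function of the standardized logistic distribution, and writes the adjusted percentile as the assumed normal percentile times the ratio of the two expectations. The variable names are ours.

```python
from math import exp, log, pi, sqrt

S = sqrt(3) / pi  # logistic scale giving unit variance (about 0.55)

def quantile(u):
    """G^{-1}(u) for the standardized logistic distribution."""
    return S * log(u / (1 - u))

def survival(z):
    """1 - G(z) for the standardized logistic distribution."""
    return 1 / (1 + exp(z / S))

p, p_L, p_U = 0.10, 0.05, 0.15
z_p, z_L, z_U = quantile(1 - p), quantile(1 - p_L), quantile(1 - p_U)

z_tilde = 1.282                        # percentile under the assumed normal
z_star = z_tilde * 0.8663 / 0.8862     # expectations taken from Table 2
gap = abs(z_p - z_star)

p_tilde_L = survival(z_star + gap / 0.25)   # sigma(1) = 0.25 sigma(theta)
p_tilde_U = survival(z_star - gap)
w_tilde = min(z_p - z_p * z_star / z_L, z_star - z_U)

print(round(z_p, 3), round(z_star, 3), round(gap, 3))
print(round(p_tilde_L, 3), round(p_tilde_U, 3), round(w_tilde, 3))
```

Because the inputs are rounded table entries, the recomputed values agree with the table only to about two or three decimals.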

5. Application to NeuSTART

5.1. Trial design and data

In NeuSTART, we adopted a two-stage continual re-assessment method for dose escalation in 33 subjects at five dose levels using the initial sequence given in § 2.3. Once the first toxicity was seen, the dose-toxicity curve would be updated after an observation was made from the most recent patient, and the next patient would be given the dose with toxicity probability estimated to be closest to p = 0.10. The toxicity probability for dose k was modelled as $d_k^{\psi}$ with d1 = 0.02, d2 = 0.06, d3 = 0.10, d4 = 0.18 and d5 = 0.30, where ψ was a priori lognormal with location 0 and scale 1.34 (O’Quigley & Shen, 1996). Enrolment into the trial began in October 2005 and was completed in August 2007. The first sign of elevated liver function test was seen in the eighth subject who was given dose level 3. The subsequent dose assignments were then model-based.

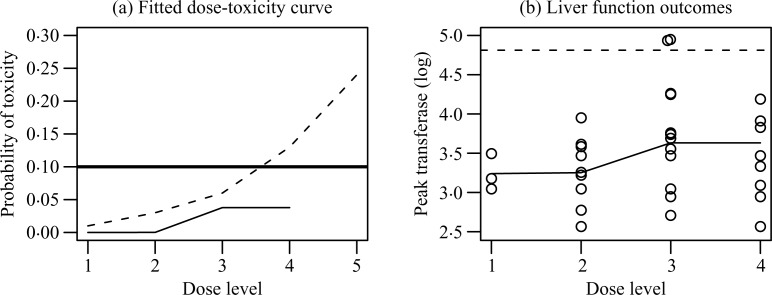

Table 1 reports the sample mean and the standard deviation of peak transferase levels (on log scale) for each dose. Assuming monotone dose-toxicity, we estimate M(k) and σ (k) by pooling data in dose levels 3 and 4, because the sample mean of dose 3 is larger than that of dose 4. Substituting these estimates for the corresponding parameter values in (1) gives an isotonic fit of π (k), which is shown in the last column of Table 1 and plotted in Fig. 1(a). The estimate of π (4) based on the isotonic fit is much lower than the target 10%. In contrast, the continual re-assessment method estimates that π (4) exceeds the target, even though no toxicity was observed at dose level 4. This is indeed a feature of the continual re-assessment method that allows strong influence of observations at lower doses on estimation at the higher doses via parametric extrapolation.
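The pooled estimates for dose levels 3 and 4 can be approximately recovered from the group summaries in Table 1. The Python sketch below assumes that the isotonic standard deviation is the sample standard deviation of the 20 pooled observations and that the noise is standard normal; since the inputs are rounded, the results match the table only approximately. Variable names are ours.

```python
from math import log, sqrt
from statistics import NormalDist

# Table 1 summaries for dose levels 3 and 4: (cohort size, mean, sd) of log(alt).
groups = [(12, 3.78, 0.72), (8, 3.42, 0.54)]

n = sum(size for size, _, _ in groups)
pooled_mean = sum(size * m for size, m, _ in groups) / n

# Total sum of squares about the pooled mean, rebuilt from group summaries:
# within-group part (size - 1) * sd^2 plus between-group part.
ss = sum((size - 1) * s ** 2 + size * (m - pooled_mean) ** 2
         for size, m, s in groups)
pooled_sd = sqrt(ss / (n - 1))

# Plug the pooled estimates into equation (1) with threshold t0 = log(123).
rate = 1 - NormalDist().cdf((log(123) - pooled_mean) / pooled_sd)
print(round(pooled_mean, 2), round(pooled_sd, 2), round(rate, 2))
```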

Fig. 1.

Model fits for the NeuSTART data. (a) Fitted dose-toxicity curve by the continual reassessment method (dashed) and the isotonic fit (solid); the target probability is also indicated (thicker solid line). (b) Liver function outcomes. Each observation is indicated (o), as well as the isotonic fit (solid) and the toxicity threshold (dashed).

Figure 1(b) displays the liver function data by dose, and reveals that the two toxic outcomes were results of peak transferase levels exceeding the threshold t0 = log(123) by a slight margin. Were these measurements to drop a few units, the trial outcomes would have been quite different. Such sensitivity in dichotomized analysis speaks favourably for using the original continuous measurements as the basis of estimation, especially when the data are subject to measurement errors.

The liver function data demonstrate heteroscedasticity in Table 1 and Fig. 1(b). Specifically, the variance increases with dose as the mean increases. Such a monotone mean-variance relationship is typical for biological laboratory values, although the exact form of the relationship is usually hard to estimate. Finally, to identify the noise distribution, we calculate the standardized residual for each observation via centring the transferase measurement by the isotonic estimate of its mean and standardization by its standard deviation estimate. A normal Q–Q-plot of these standardized residuals shows that the noise ∊ij fits well to the standard normal distribution.

5.2. Redesign of NeuSTART

Having checked all the model components, we may consider the virtual observation recursion assuming standard normal noise, so that E{Si /σ (Xi)} = 0.886 and zp = 1.28 for a trial with m = 3 and p = 0.1. To determine β, we approximate σ0 by σ̂0,low = 0.59, which is the 80% confidence lower limit based on the 20 observations in the combined dose levels 3 and 4. Then the upper bound for β is 0.29 according to Proposition 2 if we set pL = 0.05 and pU = 0.15, and it is no greater than 2w̃σ̂0,low under various noise distributions, see Table 2. This estimate is conservative as far as consistency and robustness are concerned, because σ̂0,low tends to underestimate σ0 and hence σ (θ). Also, 0.29/{2σ (θ)} exceeds the gap $|z_p - z_p^*|$ of Proposition 3 under the distributions in Table 2, where the gap is at most 0.077, unless the true σ (θ) ⩾ 1.88, which is very unlikely. Therefore, recursion (2) will be generated based on $V_i = \bar Y_i + 1.446 S_i + 0.29(X_i^* - X_i)$, where $X_i^*$ is the assigned dose.
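The constants in the NeuSTART recursion follow from standard normal theory. The Python sketch below uses the usual closed form for the expected ratio of the sample standard deviation to σ under normality and then forms the coefficient of S; the function name is ours.

```python
from math import gamma, sqrt
from statistics import NormalDist

def expected_s_over_sigma(m):
    """E(S / sigma) for the sample standard deviation of m normal observations."""
    return sqrt(2.0 / (m - 1)) * gamma(m / 2) / gamma((m - 1) / 2)

m, p = 3, 0.10
e_ratio = expected_s_over_sigma(m)       # expected S / sigma for a cohort of 3
z_p = NormalDist().inv_cdf(1 - p)        # upper 10th percentile of N(0, 1)
coef = z_p / e_ratio                     # multiplier of S_i in U_i
print(round(e_ratio, 3), round(z_p, 2), round(coef, 3))
```

For other noise distributions no such closed form is generally available, which is where the simulation route mentioned in § 2.2 would come in.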

5.3. Simulation study

Simulations were run to compare the performance of the virtual observation recursion and the continual re-assessment method. In the simulation, we used the same two-stage strategies for both methods: start at the lowest dose, follow the initial sequence as in § 2.3 and switch to the continual re-assessment method or recursion (2) upon the first toxic outcome. Furthermore, we applied the restriction that no untested level would be skipped in escalation. For the purposes of theoretical comparisons, we also ran simulations using the nonparametric optimal design described in O’Quigley et al. (2002). The design assumed that the binary toxicity outcomes at every test dose were observable for each given patient and used the complete toxicity profile to evaluate the sample toxicity proportion at each dose. It can be readily shown that this design is optimal in that the sample proportion for π (k) achieves the Cramér–Rao lower bound with variance proportional to G(ck){1 − G(ck)}. While the nonparametric optimal design cannot be implemented in practice where each patient is treated at a single dose, the design’s performance can be evaluated in simulations and can be used as a benchmark for efficiency.

In each simulated trial, a true dose-toxicity curve π (k) was first generated from the posterior distribution under the assumed model in the continual re-assessment method, i.e. π (k) = $d_k^{\psi}$ with ψ drawn from the posterior given the NeuSTART data. Continuous outcomes were generated from a normal distribution with mean and standard deviation determined so that, for a given π(k), $\sigma^4(k) = \log\{\alpha + 0.52M(k)\}$ for some α ∈ [−1, 1]. With p = 0.1 and t0 = log(123), the values of σ (θ) represent a wide range of variability from 0.57 to 1. For each generated π (k), we recorded the true ν1; ran the continual re-assessment method, the nonparametric optimal design and the virtual observation recursion with β = 0.15, 0.29 or 0.45 under various values of α; and recorded their recommended doses.

Figure 2(a) plots the proportions of selecting ν1 against σ (θ) in 10 000 generated dose-toxicity curves. Since π (ν1) ≠ 0.1 in general in the simulated configurations, it may be acceptable to select a dose with π (k) ∈ (0.05, 0.15). Figure 2(b) displays the proportions of selecting such an acceptable dose; these proportions were calculated after discarding dose-toxicity curves with π (ν1) ∉ (0.05, 0.15). The virtual observation recursion with β = 0.29 is uniformly better than the continual re-assessment method and exceeds the nonparametric optimal design. Using β = 0.15 leads to inferior performance especially as σ (θ) increases; this is in accordance with the fact that a small β is associated with a large asymptotic variance of the assigned dose $X_n^*$. Using β = 0.45 exhibits a reverse trend where performance improves as σ (θ) increases and is generally better than the continual re-assessment method. This suggests not only that we can use data to form a good choice of β, but also that improvement over the continual re-assessment method can be achieved on a wide range of β.

Fig. 2.

(a) and (c) The proportion of selecting ν1 vs. σ (θ) by the virtual observation recursion assuming normal noise. (b) and (d) The proportion of selecting an acceptable dose by our method. Our method was run with β = 0.15 (dot-dashed), 0.29 (solid), 0.45 (dotted) under normal noise in (a) and (b), and with β = 0.29 and noise generated from the logistic (solid), t5 (dotted) and Gumbel (dot-dashed) distributions in (c) and (d). The selection probabilities of the continual re-assessment method (heavier solid) and the nonparametric optimal design (heavier dashed) are indicated.

The efficiency gain is achieved through the use of the continuous data with an additional normality assumption. Figures 2(c) and 2(d) display the operating characteristics of the virtual observation recursion with β = 0.29 under G other than normal. The accuracies of the method are quite comparable and remain superior to the continual re-assessment method under model misspecification, thus offering assurance of robustness in our application.

6. Discussion

In many dose-finding studies, the experimental drug is available only at a discrete set of doses. This is an important feature that distinguishes the phase I dose-finding literature from the large literature of stochastic approximation and its well-studied descendants, although both deal with quantile estimation. Shen & O’Quigley (1996, 2000) point out the difficulty in the theoretical investigation of dose-finding methods due to the discrete barrier. In this article, we introduce the idea of virtual observations to bridge the gap between stochastic approximation for continuous dosage ranges and practical situations in phase I trials. On a technical note, if we apply (2) by replacing β with some other value 0 < b < 2β, then, assuming consistency, the sequence of assigned doses $X_n^*$ will be asymptotically normal with mean θβ and variance $\{b(2\beta - b)\}^{-1}\mathrm{var}(V_i \mid X_i^* = \theta_\beta)$. This leads to the well-established fact that b = β is the optimal choice that achieves the minimum asymptotic variance. Thus, by creating an objective function h(x) using virtual observation, we set up a root-finding problem with a known slope β around the root. This is a nice design feature that makes the application of stochastic approximation stable and reliable in small-sample settings.

A specific contribution of this article is the use of continuous data in phase I trials, in which the safety outcomes are typically dichotomized. We demonstrate via simulation based on the NeuSTART data that our procedure does better than the continual re-assessment method and the nonparametric optimal design. At first glance, it appears inconceivable that any method can improve on the Cramér–Rao lower bound. A closer look reveals that this is to be expected, as we set out to retrieve information through the use of the original continuous data. Precisely, an efficient estimator for π (k) based on Yij s observed at dose k has asymptotic variance proportional to $a_k^{\mathrm T}\Sigma a_k$, where $a_k = \{G'(c_k), c_k G'(c_k)\}^{\mathrm T}$ and Σ is the inverse of the information matrix for {M(k), σ (k)} with diagonals $I_{11} = \int \{G''(z)/G'(z)\}^2\,dG(z)$, $I_{22} = \int \{zG''(z)/G'(z) + 1\}^2\,dG(z)$, and off-diagonals $I_{12} = I_{21} = \int z\{G''(z)/G'(z)\}^2\,dG(z)$; see Lehmann (1983). For standard normal G, for instance, the ratio of $a_k^{\mathrm T}\Sigma a_k$ to the Cramér–Rao lower bound for π (k) is $\{G'(c_k)\}^2(1 + c_k^2/2)[G(c_k)\{1 - G(c_k)\}]^{-1}$, and attains a maximum 0.663 when π (k) = 0.21 or 0.79, and converges to 0 as π (k) approaches 0 or 1. With a target p = 0.10, this variance ratio at θ is 0.623. In other words, 33 continuous measurements observed at θ contain about the same amount of information as 33/0.623 ≈ 53 dichotomized outcomes. To verify this intuition, we ran the nonparametric optimal design with a sample size of 53 under the simulation set-up in § 5.3 and it was uniformly superior to the virtual observation recursion with 33 subjects: the proportion of selecting ν1 was 0.58 and that of selecting an acceptable dose was 0.75. This might be because the optimal design had observations from all patients at the true ν1 but the recursion did not. The efficiency advantage should be considered in light of the computational ease and operational transparency of the stochastic approximation. First, dose assignment for the next cohort can be obtained by a hand calculator using (2). Second, the update rule (2) is intuitive and easy to explain: de-escalation occurs if Vi is large because of either a large sample mean Ȳi or a large variance observed in the current cohort. As shown in Fig. 1(b), an increase in drug level raises not only the mean level of the liver function test but also its variability. For biological measurements, the drug impact on the variance is usually larger than that on the mean. Therefore, it is sensible for a dose-finding method to curb its escalation pace in the presence of increased variability.
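The variance ratio for standard normal G can be evaluated directly. In that case the information matrix for the mean and standard deviation is diagonal with entries 1 and 2, so the ratio reduces to the density squared times (1 + c²/2), divided by π(1 − π). The Python sketch below uses this reduction; the function name is ours.

```python
from statistics import NormalDist

G = NormalDist()  # standard normal noise

def variance_ratio(pi_k):
    """Variance of the efficient continuous-data estimator of pi(k),
    relative to the binomial variance pi(k){1 - pi(k)}."""
    c = G.inv_cdf(1 - pi_k)                      # c_k with G(c_k) = 1 - pi(k)
    return G.pdf(c) ** 2 * (1 + c ** 2 / 2) / (pi_k * (1 - pi_k))

ratio_at_target = variance_ratio(0.10)
equivalent_binary_n = 33 / ratio_at_target      # binary outcomes matching 33
print(round(ratio_at_target, 3), round(variance_ratio(0.21), 3))
print(round(equivalent_binary_n))
```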

Acknowledgments

This work was supported by grants from the U.S. National Institute of Neurological Disorders and Stroke, part of the National Institutes of Health.

Appendix

Technical details

In this appendix, we first state and discuss the conditions on π (x), f (x) and h(x) required by the lemma and the propositions. Recall that ν1 = arg mink |π (k) − p|.

Condition 1. The functions π (x) and f (x) are assumed to be weakly monotone in x such that (a) π (k′) < π (ν1 − 1) < π (ν1) < π (ν1 + 1) < π (k), (b) f (k′) < f (ν1 − 1) < f (ν1) < f (ν1 + 1) < f (k), (c) π (k′) < π (ν1 − 1) < p < π (ν1 + 1) < π (k) and (d) f (k′) < f (ν1 − 1) < t0 < f (ν1 + 1) < f (k) for all k′ < ν1 − 1 and k > ν1 + 1.

To account for scenarios with ν1 = 1 or ν1 = K, define π(0) = 0, π(K + 1) = 1, f(0) = −∞ and f(K + 1) = ∞. Condition 1 does not require π and f to be monotone for doses below ν1 − 1 and above ν1 + 1 as long as doses below (above) ν1 are less (more) toxic than the target level. It is easy to see that strict monotonicity satisfies Condition 1. Condition 1(c) is an easy consequence of Condition 1(a) and the definition of ν1, and Condition 1(d) follows from Condition 1(b): because f(k) = M(k) + ckσ(k) + σ(k)(zp − ck) = t0 + σ(k)(zp − ck), π(ν1 − 1) < p implies f(ν1 − 1) < t0, and π(ν1 + 1) > p implies f(ν1 + 1) > t0.

Condition 2. One and only one of the following statements is true:

(a) The equation h(x) = t0 has a unique root, denoted by θβ, not among the jump points {l2, . . . , lK}.

(b) The root of h(x) = t0 does not exist.

(c) The equation h(x) = t0 has multiple roots, with the smallest root denoted by θ′ and the largest root by θ″. Both θ′ and θ″ are not among the jump points.

We require that the roots (θβ, θ′ and θ″) are not among the jump points so as to satisfy the Lipschitz condition around the roots. For example, Condition 2(c) guarantees that there exist K′ > 0 and K″ > 0 such that |h(x) − h(θ′)| ⩽ K′|x − θ′| and |h(x) − h(θ″)| ⩽ K″|x − θ″| for all x.

Proof of Lemma 1. For Lemma 1(a), suppose that π (ν1) ⩾ p. Because f (k) = t0 + σ (k)(zp − ck), π(ν1) ⩾ p implies f (ν1) ⩾ t0. Thus, by Condition 1(d) and definition of ν2, we know that ν2 = ν1 or ν1 − 1. More precisely, ν1 = ν2 if and only if f (ν1) − t0 < t0 − f (k) if and only if σ (ν1)(zp − cν1) < σ(k)(ck − zp) if and only if ck > zp + σ (ν1)(zp − cν1)/σ(k) for all k < ν1, giving the desired result. The proof of Lemma 1(b) is analogous.
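
The equivalence in Lemma 1(a) can be illustrated with a small numerical check. The Python sketch below is a minimal example under stated assumptions: G is taken to be standard normal, π(k) = 1 − G(ck) with ck = {t0 − M(k)}/σ(k), and ν2 is taken to be the dose minimizing |f(k) − t0|, which is how the proof appears to use it. The function name `lemma_1a_check` and all dose-level values are hypothetical.

```python
from statistics import NormalDist

G = NormalDist()  # assumption: standard normal G


def lemma_1a_check(means, sds, t0, p):
    """Return (nu1, nu2, criterion) with doses labelled 1..K."""
    z_p = G.inv_cdf(1.0 - p)                          # upper p-quantile of G
    c = [(t0 - m) / s for m, s in zip(means, sds)]    # c_k = {t0 - M(k)} / sigma(k)
    pi = [1.0 - G.cdf(ck) for ck in c]                # assumed pi(k) = 1 - G(c_k)
    f = [m + z_p * s for m, s in zip(means, sds)]     # f(k) = M(k) + z_p * sigma(k)
    doses = range(len(means))
    nu1 = min(doses, key=lambda k: abs(pi[k] - p))    # nu1 = arg min |pi(k) - p|
    nu2 = min(doses, key=lambda k: abs(f[k] - t0))    # assumed definition of nu2
    if pi[nu1] < p:
        raise ValueError("Lemma 1(a) covers the case pi(nu1) >= p only")
    # Criterion from the proof: c_k > z_p + sigma(nu1)(z_p - c_nu1)/sigma(k), all k < nu1.
    criterion = all(
        c[k] > z_p + sds[nu1] * (z_p - c[nu1]) / sds[k] for k in range(nu1)
    )
    return nu1 + 1, nu2 + 1, criterion


# Hypothetical five-dose scenario with mean and variability both increasing in dose.
result = lemma_1a_check(
    means=[1.0, 1.5, 2.2, 2.6, 3.3],
    sds=[0.5, 0.6, 0.7, 0.9, 1.1],
    t0=3.0,
    p=0.10,
)
print(result)
```

In this scenario the criterion holds and the two targets coincide at dose 3. When a dose below ν1 has a much smaller standard deviation than ν1 itself, the criterion can fail and ν2 can fall on ν1 − 1 instead.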

Proof of Proposition 1(a). First, the assumption β > 2σ (ν1)|cν1 − zp| implies that h(θβ) = t0 for some θβ with C(θβ) = ν1. When π (ν1) = p, h(ν1) = f (ν1) = t0 and hence θβ = ν1. When π (ν1) > p, we have h(ν1) = f (ν1) > t0 and h(ν1 − 0.5) = h(ν1) − 0.5β = t0 + σ (ν1)(zp − cν1) − 0.5β < t0; the last inequality holds by the assumption. By continuity, there exists θβ ∈ (ν1 − 0.5,ν1) so that h(θβ) = t0 and C(θβ) = ν1. Similar arguments can be made for the case π (ν1) < p.

Second, the assumption β < mink ≠ ν1 2σ(k)|ck − zp| precludes the scenarios where h(x) = t0 has multiple roots, because the assumption implies h(k′ + 0.5) = t0 + σ (k′)(zp − ck′) + 0.5β < t0 for all k′ < ν1 and h(k − 0.5) = t0 + σ (k)(zp − ck) − 0.5β > t0 for all k > ν1.

Consequently, h(x) = t0 has a unique root at θβ. Standard convergence results for stochastic approximation give X̂n → θβ with probability one, and Xn = C(X̂n) → C(θβ) = ν1 by the Lipschitz assumption; cf. Condition 2.
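
The role of the two bounds on β can be seen by locating the roots of h(x) = t0 directly. The sketch below assumes, as the evaluations in the proof suggest, that h(x) = f(k) + β(x − k) for x in [k − 0.5, k + 0.5), and uses the identity f(k) − t0 = σ(k)(zp − ck) to write the bounds as 2|f(k) − t0|. The function names and the values of f(k) are hypothetical, and ν1 is taken as given rather than computed from π.

```python
def roots_of_h(f, t0, beta):
    """Roots of h(x) = t0 when h(x) = f(k) + beta * (x - k) on [k - 0.5, k + 0.5)."""
    roots = []
    for k, fk in enumerate(f, start=1):
        offset = (t0 - fk) / beta      # solves f(k) + beta * offset = t0
        if -0.5 <= offset < 0.5:       # the root must lie in dose k's segment
            roots.append(k + offset)
    return roots


def beta_window(f, t0, nu1):
    """Bounds on beta assumed in Proposition 1(a), expressed through f(k) - t0."""
    lower = 2.0 * abs(f[nu1 - 1] - t0)
    upper = min(2.0 * abs(fk - t0) for k, fk in enumerate(f, start=1) if k != nu1)
    return lower, upper


f = [1.64, 2.27, 3.10, 3.75, 4.71]   # hypothetical f(k) for doses 1..5
t0, nu1 = 3.0, 3

lower, upper = beta_window(f, t0, nu1)
print(f"admissible beta: ({lower:.2f}, {upper:.2f})")
for beta in (0.1, 0.5, 2.0):         # below, inside and above the window
    print(beta, roots_of_h(f, t0, beta))
```

With these values, a β inside the window yields a single root that rounds to dose 3, a β below the window yields no root, and a β above it yields several roots, which correspond to the three cases of Condition 2.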

Proof of Proposition 1(b). When π(ν1) > p, ν2 = ν1 − 1 by Lemma 1(a) and the assumption ν1 ≠ ν2. Furthermore, we have h(ν1) − t0 > t0 − h(ν2) > 0.5β; the first inequality holds by definition of ν2, the second by the assumption β < mink ≠ ν1 2σ(k)|ck − zp|. As a result, the root of h(x) = t0 does not exist with h(ν2 + 0.5) < t0 and h(ν1 − 0.5) > t0. More precisely, we have (x − lν1){h(x) − t0} > 0 for all x ≠ lν1 and h(lν1) > t0 and h(lν1−) < t0, where lν1− indicates a number that is arbitrarily close to lν1 from the left. Define Zi = Vi − h(X̂i) and let ℐi denote the σ-field generated by {X̂1, Z1, . . . , Zi} so that Vi is ℐi-measurable and X̂i is ℐi−1-measurable. Following algebraic steps similar to those of Robbins & Monro (1951), we obtain

Taking conditional expectation on both sides with respect to ℐi−1, we have

for some K″′ > 0. Applying Theorem 1 of Robbins & Siegmund (1971) for nonnegative almost supermartingales, we can show that limi→∞(X̂i − lν1)² exists and almost surely. Also, since (X̂i − lν2){h(X̂i) − t0} > 0, we can conclude that X̂i → lν1 and pr(Xn = ν1 or ν2 eventually) = 1. By definition of ν1, |π(ν1) − p| < |π(ν2) − p|. Thus, for sufficiently large n,

(A1)

The inequality (A1) is due to Lemma 1(a) under the assumptions ν1 ≠ ν2 and π (ν1) > p. The proof is completed by analogous arguments for the case π (ν1) < p.

Proof of Proposition 3(a). First, it is easy to see that σ(k) < σ(θ) < σ(k′) for k < θ < k′ under the assumed mean-variance relationship and Condition 1. Since f(k) = M(k) + zpσ(k), we have s{σ(k)} − s{σ(θ)} = ϕ{M(k) − M(θ)} = ϕ{f(k) − f(θ)} − ϕzp{σ(k) − σ(θ)}, so that f(k) < f(θ) if and only if σ(k) < σ(θ) because s(σ) is increasing. Now, the assumption pL < p̃L implies

because σ (k) < σ (θ). Hence B̃k = 2σ (k)(ck − ) > 2σ (θ)|zp − | = B̃θ for all k < θ. Using similar arguments, we can show pU > p̃U implies B̃k′ > B̃θ for k′ >θ. Thus, ν̃ = θ by definition.

Proof of Proposition 3(b). First, following similar arguments in the proof of Proposition 1(a), we can prove that h̃(x) = t0 has a unique root at θ̃β with C(θ̃β) = ν̃ if B̃ν̃ < β < mink ≠ ν̃ B̃k. Applying standard convergence results of stochastic approximation gives the consistency of Xn for ν̃, which is equal to θ by Proposition 3(a).

Next, because ck ⩽ zU < by the assumption pU < p̃U and σ(θ) ⩽ σ (k) for k >θ, we have 2σ (θ)( − zU) ⩽ 2σ (k)( − ck) = Bk for k >θ. Hence,

(A2)

For the case k < θ, we have ck ⩾ zL > max(zp, ) and σ (k) ⩽ σ (θ). Substituting M(k) = M(θ) + [s{σ (k)} − s{σ (θ)}]/ϕ into ck = {t0 − M(k)}/σ (k) gives

(A3)

for ϕ > 0. Fix M(θ) and σ (θ). For any given ck, we differentiate both sides of (A3) with respect to ϕ and get

for ϕ > 0. Therefore, ∂σ (k)/ ∂ϕ < 0 as long as ck ⩾ 0 which holds for most practical purposes; i.e. it suffices to have zp ⩾ 0, and σ(k) is minimized as ϕ grows large upon which M(k) → M(θ). Specifically, its infimum is achieved by taking the limit ϕ → ∞ in (A3):

Also, since σ (k) = σ (θ) when ϕ = 0, the above expression provides the minimum value of σ (k) over all possible ϕ ⩾ 0 for a given ck. Thus,

This, together with (A2), implies that |zp − | < β/{2σ (θ)} < w̃ suffices for the consistency condition, B̃θ < β < mink ≠ θ B̃k.

References

- Anbar D. The application of stochastic methods to the bioassay problem. J Statist Plan Infer. 1977;1:191–206.

- Anbar D. Stochastic approximation methods and their use in bioassay and phase I clinical trials. Commun Statist. 1984;13:2451–67.

- Cheung YK. Coherence principles in dose-finding studies. Biometrika. 2005;92:863–73.

- Cheung YK. Sequential implementation of stepwise procedures for identifying the maximum tolerated dose. J Am Statist Assoc. 2007;102:1448–61.

- Cheung YK, Chappell R. A simple technique to evaluate model sensitivity in the continual reassessment method. Biometrics. 2002;58:671–4. doi: 10.1111/j.0006-341x.2002.00671.x.

- Elkind MS, Sacco RL, Macarthur RB, Fink DJ, Peerschke E, Andrews H, Neils G, Stillman J, Chong J, Connolly S, Corporan T, Leifer D, Cheung K. The neuroprotection with statin therapy for acute recovery trial (NeuSTART): an adaptive design phase I dose-escalation study of high-dose lovastatin in acute ischemic stroke. Int J Stroke. 2008;3:210–8. doi: 10.1111/j.1747-4949.2008.00200.x.

- Lai TL, Robbins H. Adaptive design and stochastic approximation. Ann Statist. 1979;7:1196–221.

- Lehmann EL. Theory of Point Estimation. New York: Wiley; 1983.

- Lin Y, Shih WJ. Statistical properties of the traditional algorithm-based designs for phase I cancer trials. Biostatistics. 2001;2:203–15. doi: 10.1093/biostatistics/2.2.203.

- O’Quigley J, Chevret S. Methods for dose finding studies in cancer clinical trials: a review and results of a Monte Carlo study. Statist Med. 1991;10:1647–64. doi: 10.1002/sim.4780101104.

- O’Quigley J, Paoletti X, MacCario J. Non-parametric optimal design in dose finding studies. Biostatistics. 2002;3:51–56. doi: 10.1093/biostatistics/3.1.51.

- O’Quigley J, Pepe M, Fisher L. Continual reassessment method: a practical design for phase I clinical trials in cancer. Biometrics. 1990;46:33–48.

- O’Quigley J, Shen LZ. Continual reassessment method: a likelihood approach. Biometrics. 1996;52:673–84.

- Robbins H, Monro S. A stochastic approximation method. Ann Math Statist. 1951;22:400–7.

- Robbins H, Siegmund D. A convergence theorem for non-negative almost supermartingales and some applications. In: Rustagi JS, editor. Optimizing Methods in Statistics. New York: Academic Press; 1971. pp. 233–57.

- Sacks J. Asymptotic distribution of stochastic approximation procedures. Ann Math Statist. 1958;29:373–405.

- Shen LZ, O’Quigley J. Consistency of continual reassessment method under model misspecification. Biometrika. 1996;83:395–405.

- Shen LZ, O’Quigley J. Using a one-parameter model to sequentially estimate the root of a regression function. Comp Statist Data Anal. 2000;34:357–69.

- Wu CFJ. Efficient sequential designs with binary data. J Am Statist Assoc. 1985;80:974–84.