Summary

Treatment switching is a frequent occurrence in clinical trials, where, during the course of the trial, patients who fail on the control treatment may change to the experimental treatment. Analysing the data without accounting for switching yields highly biased and inefficient estimates of the treatment effect. In this paper, we propose a novel class of semiparametric semicompeting risks transition survival models to accommodate treatment switches. Theoretical properties of the proposed model are examined and an efficient expectation-maximization algorithm is derived for obtaining the maximum likelihood estimates. Simulation studies are conducted to demonstrate the superiority of the model compared with the intent-to-treat analysis and other methods proposed in the literature. The proposed method is applied to data from a colorectal cancer clinical trial.

Some key words: Expectation-maximization algorithm, Maximum likelihood estimate, Noncompliance, Panitumumab, Partial switching, Transition model, Treatment switching

1. Introduction

Treatment switching commonly occurs in clinical trials in cancer and other diseases, where patients who fail on the control treatment may begin taking the experimental treatment. This often happens in cancer clinical trials when the control arm consists of a placebo or no treatment. In such trials, patients in the control arm who experience an intermediate event, such as disease progression, may begin taking the experimental treatment as a rescue medication. As discussed in Marcus & Gibbons (2001), an intent-to-treat analysis will lead to attenuated treatment effect estimates, and thus one must model the data in a way that accommodates this switching effect and then appropriately estimate the treatment effect.

In clinical trials, there are many types of switching possibilities. Drop-in refers to situations where control subjects start taking an active treatment. There is also switching due to drop-out, where subjects stop taking the active treatment. Here we focus on the drop-in problem of control subjects switching to the experimental treatment after experiencing an intermediate event.

Methods have been advocated to compensate for the effects of drop-in, assuming an intent-to-treat analysis. This could be a viable approach if the drug effect is believed to be sufficiently large to yield a clinically meaningful intent-to-treat effect. Various methods for sample size adjustment are described by Lachin & Foulkes (1986), Lakatos (1988), Lu & Pajak (2000), Porcher et al. (2002), Jiang et al. (2004) and Barthel et al. (2006). Although these approaches manage the risk of a false-negative error, they may result in a larger than needed sample size and yield an effect estimate of marginal clinical significance that fails to address the drop-in bias, especially when appreciable drop-in occurs nonrandomly. In the presence of drop-in, analysis methods to estimate the treatment’s causal effect that do not respect randomization are potentially confounded. Common examples include an analysis treating the drop-in time as a censoring time, or the exclusion of patients with drop-in. Law & Kaldor (1996) proposed a multiplicative Cox model in which patients are divided into subgroups based on their randomized and observed subsequent therapy. As noted by White (1997), their model is flawed since subgroup membership at a particular time depends on the future, and estimates will tend to be biased under the null. Other approaches that respect the randomization include the use of causal models with counterfactuals (Lunceford et al., 2002; White et al., 2003; London et al., 2010). Related approaches are marginal structural models (Robins et al., 2000). For valid causal inference, these models must account for all confounders that predict drop-in and there must be no censoring bias. Even when these conditions apply, model estimates can become unstable when drop-in is certain among all patients with a specific value of a time-dependent covariate. Examples of applying marginal structural models are provided by Hernán et al. (2000) and Yamaguchi & Ohashi (2004).

When marginal structural models are not applicable, a structural nested model may be used (Yamaguchi & Ohashi, 2004; Greenland et al., 2008). In particular, based on the methods of Robins & Tsiatis (1991), Branson & Whitehead (2002) developed an estimation method for an accelerated failure-time model similar to a structural nested model to estimate the true effect. Their model assumes that the effect of the experimental treatment is the same at randomization in the test arm as at drop-in in the control arm. In addition, their model assumes that patients who receive drop-in therapy are comparable to those who do not, although the authors noted that baseline covariates could be incorporated, and thus their model could include factors that predict drop-in. Shao et al. (2005) extended the Branson & Whitehead (2002) methodology to allow the effect of drop-in to vary with time receiving the drop-in therapy, and also defined a latent hazard rate model with the same features. White (2006) noted that the recensoring procedure of Branson & Whitehead (2002) needs to be modified when the control arm survival time without drop-in depends on the drop-in time; otherwise a bias towards the null results if drop-in patients have a poor prognosis, and away from the null if they have a good prognosis. White (2006) also pointed out that the estimation procedure proposed in Shao et al. (2005) is biased when the drop-in time is prognostic. For the structural nested modelling approaches, such as those of Branson & Whitehead (2002) and Shao et al. (2005), one major concern is that the assumed model for the true survival time does not account for the fact that some subjects experience the intermediate event while others do not. Furthermore, assuming a constant experimental treatment effect between treatment arms may be questionable since the disease course is more advanced among drop-in patients who receive delayed therapy.

In this paper, we tackle this practical problem from a completely different modelling perspective than the aforementioned methods. Instead of modelling the true survival time using either the accelerated failure time model or the proportional hazards model, we model the observed event times using a semiparametric hazards model. To account for the fact that some subjects do not experience the intermediate event, we introduce a mixture model to characterize the progression and nonprogression subpopulation. Furthermore, for the progression population, we separately model the time to the intermediate event and the time from the intermediate event to death. We also include baseline covariates and prognostic covariates in both time-to-event regression models. In this way, we not only account for the heterogeneity at baseline, but also capture the heterogeneity at treatment switching. Finally, our model assumes a parametric switching effect at the time of the intermediate event, which may be different from the baseline treatment effect. The advantages of our model are clear: we model only observed event times which makes it possible to assess model assumptions and check model fit using the observed data; we allow the treatment effect at switching to be completely different from the baseline treatment effect; and the model can handle both baseline covariates and prognostic covariates at switching.

2. Panitumumab study

Our proposed methodology was motivated by the panitumumab colorectal cancer clinical trial conducted by Amgen Inc. (Amado et al., 2008). This clinical trial was an open label, randomized, phase III multicentre study designed to compare the efficacy and safety of panitumumab plus best supportive care versus best supportive care alone in colorectal cancer patients. One objective was to compare the treatment effect on the overall survival time in this subject population.

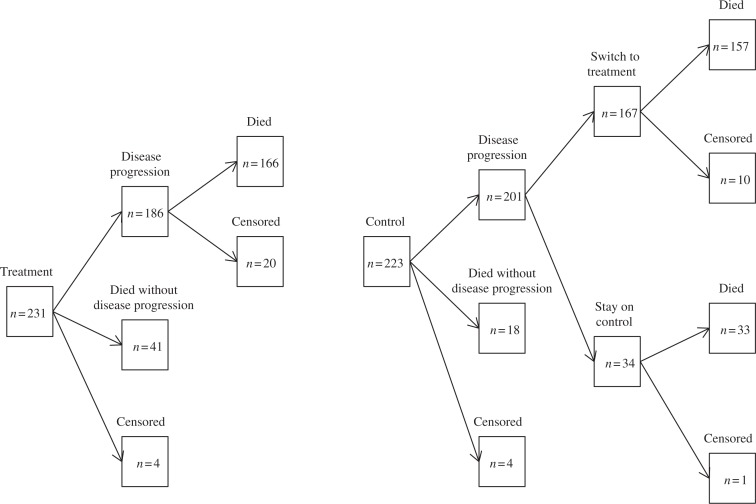

Subjects were randomly assigned to receive treatment or control. Panitumumab was administered until disease progression, inability to tolerate the investigational product, or other reasons for discontinuation. During the study, subjects in the control group who had disease progression at any time were eligible to receive panitumumab at 6 mg/kg administered once every 2 weeks as part of a separate protocol. Figure 1 shows the counts for each group in the follow-up period. Among the 223 patients on the control arm, 201 patients had disease progression, of which 167 switched over to the treatment arm. Due to this substantial switching percentage, this study provides strong motivation for developing new statistical models as well as new methods for estimating the true causal effect of the treatment in the presence of a semicompeting risk.

Fig. 1. Graphical representation of the panitumumab data: total n = 454.

3. Proposed method

3.1. Models and assumptions

In cancer clinical trials, some subjects experience the intermediate event of disease progression while others do not, and the latter are usually treated as censored for that event. To address this issue, we propose a mixed semicompeting risks transition model. We assume that the population consists of two subpopulations: one will eventually develop disease progression before death, whereas the other will never experience disease progression. For the no-progression population, the only event time of interest is the time to death, but for the progression population, both the time to disease progression and the time to death need to be considered.

To introduce our statistical models, we use the following notation: a dichotomous variable U is used to denote the lifetime disease progression status of the subjects, U = 1 if the subject has disease progression before death and 0 otherwise; we let TD denote the time to death for the no-progression subjects with U = 0; for the other subjects with U = 1, we use TU to denote their time to disease progression and let G denote the time from disease progression to death.

The proposed statistical model has three components. The first component models the distribution of the progression status given the baseline covariates X and randomized treatment R:

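$$\mathrm{pr}(U = 1 \mid R, X) = \frac{\exp(\alpha_0 + \alpha_1 R + \alpha_2^{\mathrm T} X)}{1 + \exp(\alpha_0 + \alpha_1 R + \alpha_2^{\mathrm T} X)}, \tag{1}$$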

where R = 1 if the patient is on the experimental treatment arm and 0 otherwise, and the αs are unknown regression coefficients. The second component models the survival distribution for the no-progression population given X and R:

$$h_D(t \mid R, X, U = 0) = h_0(t)\exp(\beta_0 R + \gamma_0^{\mathrm T} X), \tag{2}$$

where hD(t | R, X, U = 0) is the conditional hazard function of TD given the covariates, h0(t) is an unknown baseline hazard function and $(\beta_0, \gamma_0^{\mathrm T})^{\mathrm T}$ are unknown regression coefficients. In the third component, we model the distributions of time to disease progression, TU, and time from disease progression to death, G, in the progression population, given treatment switching or not, by assuming a transition model structure:

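$$\begin{aligned} h_U(t \mid R, X, U = 1) &= h_1(t)\exp(\beta_1 R + \gamma_1^{\mathrm T} X), \\ h_G(t \mid R, Z, U = 1, T_U) &= h_2(t)\exp\{\beta_{21} R + \beta_{22} V(1 - R) + \gamma_2^{\mathrm T}(T_U, Z^{\mathrm T})^{\mathrm T}\}, \end{aligned} \tag{3}$$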

where hU(t | R, X, U = 1) is the conditional hazard function for TU, hG(t | R, Z, U = 1, TU) is the conditional hazard function of G, both h1(t) and h2(t) are unknown baseline hazard functions, and βs and γs are regression coefficients. Here, V indicates the treatment switching and Z, which contains X, reflects covariates collected at baseline and at disease progression, which could be prognostic factors for the switching decision.
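The three model components can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the logistic form for the progression probability is inferred from the success probability quoted in the simulation study, the way TU enters the gap-time linear predictor (as the first element of the covariate vector) is an assumption, and the function names are hypothetical.

```python
import numpy as np

def progression_prob(R, X, alpha0, alpha1, alpha2):
    """pr(U = 1 | R, X), assuming a logistic link for model (1)."""
    lin = alpha0 + alpha1 * R + np.dot(X, alpha2)
    return 1.0 / (1.0 + np.exp(-lin))

def risk_death_no_progression(R, X, beta0, gamma0):
    """Multiplier of the baseline hazard h0(t) in model (2)."""
    return np.exp(beta0 * R + np.dot(X, gamma0))

def risk_progression(R, X, beta1, gamma1):
    """Multiplier of the baseline hazard h1(t) in the first part of model (3)."""
    return np.exp(beta1 * R + np.dot(X, gamma1))

def risk_gap(R, V, TU, Z, beta21, beta22, gamma2):
    """Multiplier of h2(t) in the gap-time part of model (3).

    V * (1 - R) is nonzero only for control-arm subjects who switch.
    Assumption: gamma2 is ordered as (coefficient of TU, coefficients of Z).
    """
    covars = np.concatenate(([TU], np.atleast_1d(Z)))
    return np.exp(beta21 * R + beta22 * V * (1 - R) + np.dot(covars, gamma2))
```

For a control-arm subject with fixed TU and Z, the ratio of `risk_gap` with V = 1 to `risk_gap` with V = 0 equals exp(β22), which is the switching effect described in the text.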

Our models naturally account for the situation that some subjects may or may not experience disease progression for reasons other than ignorable censoring and also include the gap time between disease progression and death, thereby automatically implying that disease progression occurs before death. Because we condition on the disease progression status, our model can be considered as a type of pattern-mixture model. As one reviewer points out, our method can also be viewed as an illness-death model with four states: alive with/without progression, and dead with/without progression (Fix & Neyman, 1951; Sverdrup, 1965), but with a pattern-mixture parameterization (Larson & Dinse, 1985). However, our current hazard models have different interpretations in the survival context. For example, all the models are hazard models for specific survival events, so that all the β parameters give the treatment effects on the risk of these events. Specifically, β21 represents the logarithm of the hazard ratio of treatment post-disease progression while the coefficient of TU gives the effect of the disease progression time on future death. In the second model of (3), since the switching only happens to some subjects on the control arm, V (1 – R) is used in the regression. Furthermore, our model (3) assumes that, for the same subjects, the hazard function after the switching at disease progression would change by a factor of exp(β22), when compared with the case when they had no switching. Obviously, the latter is a structural assumption, which is acceptable in practice.

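Our goal is to compare the survival functions of the death time in the setting where no subject switches treatment. To see how to use the proposed models for achieving this goal, we adopt a counterfactual outcome framework by defining $T^{(a)}$ as the potential survival time when a subject receives treatment a and never changes treatment status and letting $S_a(t) = \mathrm{pr}(T^{(a)} > t)$. Thus, we are interested in comparing S1(t) and S0(t). As in the usual causal framework, we make the following consistency assumption and no unobserved confounder assumption:

Assumption 1. Treatment R is completely randomized and $T_D = T^{(R)}$ if a subject never changes treatment.

Assumption 2. Given (R = 0, Z, TU = s), that is, a subject in the control arm has disease progression at time s and covariates Z, or (R = 1, Z, TU = s), V is independent of the potential outcomes $\{T^{(0)}, T^{(1)}\}$.

Let fX (x) and fZ (z) denote the density functions for X and Z, respectively. Then by the randomization of R, and using the conditional independence of TU and Z stated in Assumption 4 below, we obtain the potential survival function of treatment a, $S_a(t)$, as

$$\begin{aligned} S_a(t) ={}& \int_x \mathrm{pr}(T^{(a)} > t \mid R = a, U = 0, X = x)\,\mathrm{pr}(U = 0 \mid R = a, X = x) f_X(x)\,dx \\ &+ \int_x\int_z\int_s \mathrm{pr}(T^{(a)} > t \mid R = a, U = 1, Z = z, T_U = s)\, f_{T_U}(s \mid R = a, X = x, U = 1) \\ &\qquad\times f_Z(z \mid X = x, R = a, U = 1)\,\mathrm{pr}(U = 1 \mid R = a, X = x) f_X(x)\,ds\,dz\,dx. \end{aligned}$$

From Assumption 2, $\mathrm{pr}(T^{(a)} > t \mid R = a, U = 1, Z, T_U = s) = \mathrm{pr}(T^{(a)} > t \mid R = a, U = 1, V = 0, Z, T_U = s)$. On the other hand, (R = a, U = 0) or (R = a, U = 1, V = 0) implies that the treatment status is never switched, so $T^{(a)}$ can be replaced by the observed TD in the above expression by Assumption 1. Since TD = G + TU for subjects with U = 1, we obtain the survival functions Sa(t) as follows:

$$\begin{aligned} S_a(t) ={}& \int_x \exp\{-H_0(t)e^{\beta_0 a + \gamma_0^{\mathrm T} x}\}\,\mathrm{pr}(U = 0 \mid R = a, X = x) f_X(x)\,dx \\ &+ \int_x\int_z \Big[\exp\{-H_1(t)e^{\beta_1 a + \gamma_1^{\mathrm T} x}\} + \int_0^t \exp\{-H_2(t - s)e^{\beta_{21} a + \gamma_2^{\mathrm T}(s, z^{\mathrm T})^{\mathrm T}}\}\, h_1(s)e^{\beta_1 a + \gamma_1^{\mathrm T} x}\exp\{-H_1(s)e^{\beta_1 a + \gamma_1^{\mathrm T} x}\}\,ds\Big] \\ &\qquad\times f_Z(z \mid X = x, R = a, U = 1)\,\mathrm{pr}(U = 1 \mid R = a, X = x) f_X(x)\,dz\,dx. \end{aligned}$$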

In other words, Sa(t) can be expressed in terms of the parameters in our models (1)–(3) and the distributions of X and Z given (X, U = 1, R). Hence, by inserting the estimates of these parameters into the above expression, we will be able to estimate Sa(t), and thus the causal effect of treatment.

In real applications, there is often some potential bias due to censoring and obtaining the differential prognostic covariates at disease progression. To eliminate such bias, we need the following assumptions:

Assumption 3. The censoring time is independent of TD, G and TU given the observed covariates.

Assumption 4. For progression subjects, TU is independent of Z given R and X.

Assumptions 3 and 4 discard the contribution of the censoring distribution. Assumption 4 is plausible if the part of Z excluding X is collected after disease progression.

3.2. Inference procedure

Let Y denote the observed event time if no disease progression occurs; otherwise, we use W to denote the disease progression time and Y to denote the second event time. Let Δ be the censoring indicator, with Δ = 1 if death is observed and Δ = 0 if the subject is censored. The observed data can be divided into four groups of observations:

Group 1. Subjects are observed to die at time Y and no disease progression has been observed. Clearly, these subjects belong to the first subpopulation with U = 0 and TD = Y. The observed data are (Y, Δ = 1, U = 0, X, R). Thus, the contribution to the likelihood function is $h_0(Y)\exp(\beta_0 R + \gamma_0^{\mathrm T} X)\exp\{-H_0(Y)e^{\beta_0 R + \gamma_0^{\mathrm T} X}\}\,\mathrm{pr}(U = 0 \mid R, X)\, f_X(X \mid R)\,\mathrm{pr}(R)$.

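Group 2. Subjects are observed to have disease progression at W and die at Y. These subjects belong to the second subpopulation (U = 1) and TU = W, TD = Y so G = Y – W. The observed data are (TU, G, Δ = 1, U = 1, V, Z, X, R). Thus, writing $\eta_1 = \beta_1 R + \gamma_1^{\mathrm T} X$ and $\eta_2 = \beta_{21} R + \beta_{22} V(1 - R) + \gamma_2^{\mathrm T}(W, Z^{\mathrm T})^{\mathrm T}$, the contribution to the likelihood function is

$$h_1(W)e^{\eta_1}\exp\{-H_1(W)e^{\eta_1}\}\; h_2(Y - W)e^{\eta_2}\exp\{-H_2(Y - W)e^{\eta_2}\}\;\mathrm{pr}(Z \mid X, R, U = 1)\,\mathrm{pr}(U = 1 \mid R, X)\, f_X(X \mid R)\,\mathrm{pr}(R),$$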

where pr(Z | X, R, U = 1) = pr(Z without X | X, R, U = 1). We will use this notation for all conditional distributions of Z given X thereafter.

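Group 3. Subjects are observed to have disease progression at W and are censored at C. These subjects belong to the second subpopulation with U = 1 and TU = W, TD > C = Y so G > Y – W. The observed data are (TU, G > Y – W, Δ = 0, U = 1, V, Z, X, R). Thus, writing $\eta_1 = \beta_1 R + \gamma_1^{\mathrm T} X$ and $\eta_2 = \beta_{21} R + \beta_{22} V(1 - R) + \gamma_2^{\mathrm T}(W, Z^{\mathrm T})^{\mathrm T}$, the contribution to the likelihood function is

$$h_1(W)e^{\eta_1}\exp\{-H_1(W)e^{\eta_1}\}\;\exp\{-H_2(Y - W)e^{\eta_2}\}\;\mathrm{pr}(Z \mid X, R, U = 1)\,\mathrm{pr}(U = 1 \mid R, X)\, f_X(X \mid R)\,\mathrm{pr}(R).$$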

Group 4. Subjects are only observed to be censored at Y and no disease progression occurs before Y. These subjects may belong to the first subpopulation, U = 0, with TD > Y; or, they may belong to the second subpopulation, U = 1, with TU > Y. The observed data are {UTU + (1 – U)TD > Y, X, R}. Thus, the contribution to the likelihood function is $[\exp\{-H_0(Y)e^{\beta_0 R + \gamma_0^{\mathrm T} X}\}\,\mathrm{pr}(U = 0 \mid R, X) + \exp\{-H_1(Y)e^{\beta_1 R + \gamma_1^{\mathrm T} X}\}\,\mathrm{pr}(U = 1 \mid R, X)]\, f_X(X \mid R)\,\mathrm{pr}(R)$.

For inference, we estimate all the model parameters, including the αs, βs, γs and Hs, via the nonparametric maximum likelihood approach. In this approach, the cumulative baseline hazard functions, H0, H1 and H2, are assumed to be step functions with jumps at the observed event times. To compute the nonparametric maximum likelihood estimates, we use the expectation-maximization algorithm. Specifically, we treat Ui for subject i as potentially missing data; Ui is unobserved only for subjects in Group 4. To estimate the asymptotic covariance matrix of the parameter estimates, we treat all the αs, βs, γs and the jump sizes of the Hs as parameters and use their observed information matrix. In particular, the observed information matrix can be calculated using the Louis formula (Louis, 1982) and its inverse is used as the estimator for the asymptotic covariance matrix.
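Since Ui is missing only in Group 4, the expectation step reduces to computing, for each such subject, the conditional probability of belonging to the progression subpopulation given censoring at Y without progression. A minimal sketch follows, obtained by applying Bayes' rule to the two terms of the Group 4 likelihood contribution; the function and argument names are hypothetical and this is not the authors' implementation.

```python
import numpy as np

def e_step_weight(H0_y, H1_y, lp0, lp1, p1):
    """Posterior probability that U = 1 for a Group 4 subject.

    H0_y, H1_y : current cumulative baseline hazards H0(Y) and H1(Y)
    lp0        : linear predictor beta0 * R + gamma0' X of model (2)
    lp1        : linear predictor beta1 * R + gamma1' X of model (3)
    p1         : current value of pr(U = 1 | R, X) from model (1)
    """
    s0 = np.exp(-H0_y * np.exp(lp0))   # pr(TD > Y | U = 0, R, X)
    s1 = np.exp(-H1_y * np.exp(lp1))   # pr(TU > Y | U = 1, R, X)
    num = s1 * p1
    return num / (s0 * (1.0 - p1) + num)
```

Subjects in Groups 1 to 3 have Ui observed, so their weights are fixed at 0 or 1; the maximization step would then update the regression coefficients and the jump sizes of the Hs using these weights.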

3.3. Prediction of the survival function with partial treatment crossover

To estimate Sa(t), we can estimate each term on the right-hand side of Sa(t), given in § 3.1, using the parameter estimates. Specifically, the estimators are

where in the final expression, δ is the Dirac delta function and Kan is a kernel weight with bandwidth an. Either the delta method or resampling techniques like the bootstrap will be used to derive the confidence band of Ŝa(t). As demonstrated in the simulation studies in § 5, we find, in practice, that the bootstrap is easy to implement and performs well for moderate sample sizes. In addition, our experience also shows that even though kernel estimation of pr(Z | X, R, U = 1) may be biased if the dimension of the Xs is not small, the final estimate of Sa(t) is not that sensitive to the dimension of X due to the averaging operations used in calculating Ŝa(t).

To compare the experimental arm survival function with the control arm survival function without switching, we examine the weighted difference $n\sum_{k=1}^{K}\hat\omega(t_k)\{\hat S_1(t_k) - \hat S_0(t_k)\}^2$, where t1,…, tK are prespecified time-points in [0, τ] and ω̂(tk) is a weight specified at time tk. Useful weight functions ω̂(t) can be based on the class $\hat S_0(t)^{\rho_1}\{1 - \hat S_0(t)\}^{\rho_2}$, where ρ1 and ρ2 are constants in [0, 1] and Ŝ0(t) can also be replaced by Ŝ1(t) or a pooled estimator of the survival functions. Thus, by choosing different ρ1 and ρ2, we can emphasize comparisons at either early or late stages of follow-up. In the subsequent analysis, we consider (ρ1,ρ2) = (0, 1) or (1/2, 1/2). Under the null hypothesis S0(t) = S1(t), according to the asymptotic results to be given later, √n{Ŝ1(t1) – Ŝ0(t1), …, Ŝ1(tK) – Ŝ0(tK)}T → N(0, Σ) in distribution for some covariance matrix Σ. Thus, the test statistic $n\sum_{k=1}^{K}\hat\omega(t_k)\{\hat S_1(t_k) - \hat S_0(t_k)\}^2$ converges in distribution to $\mathcal{Z}^{\mathrm T}\Sigma^{1/2}\mathrm{diag}\{\omega(t_1), \ldots, \omega(t_K)\}\Sigma^{1/2}\mathcal{Z}$, where 𝒵 denotes a multivariate standard normal variate and ω(t) is the limit of ω̂(t). We reject the null hypothesis if the test statistic is larger than the 100(1 – α)th percentile of $\mathcal{Z}^{\mathrm T}\hat\Sigma^{1/2}\mathrm{diag}\{\hat\omega(t_1), \ldots, \hat\omega(t_K)\}\hat\Sigma^{1/2}\mathcal{Z}$, where Σ̂ is a consistent estimator for Σ.
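The test above can be sketched numerically as follows. The statistic is the weighted sum of squared differences whose limit is the quadratic form given in the text, and the critical value is approximated by Monte Carlo draws from N(0, Σ̂). The function names, the number of draws and the seed are illustrative assumptions, and Σ̂ is taken as given rather than derived here.

```python
import numpy as np

def weight(s_ref, rho1, rho2):
    """Weight S(t)^rho1 * {1 - S(t)}^rho2 evaluated at the chosen time-points."""
    s_ref = np.asarray(s_ref, dtype=float)
    return s_ref**rho1 * (1.0 - s_ref)**rho2

def weighted_statistic(n, s1_hat, s0_hat, w):
    """n * sum_k w_k * {S1_hat(t_k) - S0_hat(t_k)}^2."""
    d = np.asarray(s1_hat, dtype=float) - np.asarray(s0_hat, dtype=float)
    return n * np.sum(np.asarray(w) * d**2)

def critical_value(sigma_hat, w, level=0.05, draws=20000, seed=0):
    """Monte Carlo upper-level quantile of D' diag(w) D with D ~ N(0, sigma_hat).

    D has the same law as sigma_hat^{1/2} Z for a standard normal vector Z.
    """
    rng = np.random.default_rng(seed)
    w = np.asarray(w, dtype=float)
    d = rng.multivariate_normal(np.zeros(len(w)), sigma_hat, size=draws)
    return np.quantile(np.sum(w * d**2, axis=1), 1.0 - level)
```

The null hypothesis would be rejected when `weighted_statistic(...)` exceeds `critical_value(...)`. With a single time-point, unit weight and unit variance the quadratic form is chi-squared with one degree of freedom, which gives a quick sanity check of the Monte Carlo step.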

Remark 1. Because of the randomization, pr(X | R = a) = pr(X), and therefore, we can replace the arm-specific estimate of pr(X | R = a) with the empirical distribution of X. However, we observe very little efficiency gain in numerical studies.

4. Asymptotic properties

We establish the asymptotic properties for the parameter estimators and Ŝa(t) using the general nonparametric maximum likelihood theory framed in Zeng & Lin (2010). In addition to Assumptions (1)–(4), we need the following assumptions:

Assumption 5. The true parameter values of the βs, γs and αs, still denoted as $\theta \equiv (\beta_0, \beta_1, \beta_{21}, \beta_{22}, \gamma_0^{\mathrm T}, \gamma_1^{\mathrm T}, \gamma_2^{\mathrm T}, \alpha_0, \alpha_1, \alpha_2^{\mathrm T})^{\mathrm T}$, belong to a bounded set in real Euclidean space. Moreover, the true baseline functions, still denoted as (h0, h1, h2), are continuous and are bounded away from zero in [0, τ], where τ is the study duration.

Assumption 6. If there is some constant vector ν such that νT(1, R, Z) = 0 with probability one, then ν = 0. Additionally, we assume (R, Z) to have bounded support and there exists a continuous component of X such that its coefficient in model (1) is nonzero.

Assumption 7. With probability one, pr(C ⩾ τ | R, Z) > 0 and pr(V = 1 | R = 0, Z, TU) ∈ (μ0, μ1) for some constant 0 < μ0 < μ1 < 1.

Under these conditions, the following theorems give the consistency and asymptotic distribution of the estimators.

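Theorem 1. Under Assumptions (1)–(7), $\|\hat\theta - \theta\| + \sum_{k=0}^{2}\sup_{t \in [0, \tau]}|\hat H_k(t) - H_k(t)| \to 0$, almost surely, where $\hat\theta \equiv (\hat\beta_0, \hat\beta_1, \hat\beta_{21}, \hat\beta_{22}, \hat\gamma_0^{\mathrm T}, \hat\gamma_1^{\mathrm T}, \hat\gamma_2^{\mathrm T}, \hat\alpha_0, \hat\alpha_1, \hat\alpha_2^{\mathrm T})^{\mathrm T}$ and Ĥk(t) is the estimator of Hk(t).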

Theorem 2. Under Assumptions (1)–(7), √n(θ̂ – θ, Ĥ0 – H0, Ĥ1 – H1, Ĥ2 – H2) converges in distribution to a mean zero Gaussian process in the metric space Rd × l∞[0, τ] × l∞[0, τ] × l∞[0, τ], where d is the dimension of θ.

Using the results from Theorem 1 and Theorem 2, we can further obtain the asymptotic distribution of Ŝa(t) as given in the previous section.

Theorem 3. In addition to Assumptions (1)–(7), we assume that , where dx is the dimension of X, K (·) is a symmetric kernel density function with ∫ ys K (y)dy = 0, s = 1, …, (m – 1) with m > d/2, and an satisfies , . Then with probability one, supt∈[0, τ] | Ŝa(t) – Sa(t) | → 0 and, for each fixed t, √n{Ŝa(t) – Sa(t)} converges in distribution to a mean zero normal variate.

5. Simulation studies

5.1. Simulation study I

To examine the small sample performance of the proposed method, we conducted a simulation study by generating data from models (1)–(3). Specifically, the baseline treatment R = 1 for the first half of the subjects and 0 for the other half; two baseline covariates X1 and X2 are independently generated from the uniform distribution on [−1, 1] and a Bernoulli distribution with success probability 0.6, respectively. We then use models (2) and (3) to further generate the event times of interest. The susceptibility status U is Bernoulli with success probability 1/{1+exp(−1.6+1.8R – X1–0.1X2)}. For the no-progression subjects with U = 0, we simulate their death time TD using model (2) with H0(t) = t, β0 = −1 and (γ01, γ02) = (1, 0.2). For the progression subjects, the time to disease progression, TU, is generated from the first hazard model in (3) with H1(t) = t/2, β1 = −0.5 and (γ11, γ12) = (1, 0). To generate the time from disease progression to death for the progression subjects with U = 1, we first generate the prognostic factor Z at disease progression from the uniform distribution on [0, 1]. The assignment to treatment switching, V, in the untreated subjects is Bernoulli with success probability 1/{1+exp(0.5–0.3TU – 0.2X1 – 0.5Z)}, yielding a switching rate of 38.7% in the control arm. Then, the time from disease progression to death, G, follows the second hazard model in (3) with H2(t) = exp(t) – 1, β21 = −0.3, β22 = −0.5, and γ21 = 0.6, γ22 = −0.5, γ23 = 0.5, γ24 = −0.4. Thus, subjects who change treatment status from untreated to treated have their hazard multiplied by exp(−0.5), and the longer the time to disease progression is, the longer the survival time will be. Finally, the censoring time is generated from a uniform distribution on (1, 7) and the study duration is τ = 3. This design yields average proportions for groups 1 to 4 of 23, 41, 21 and 13%.
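The data-generating mechanism described above can be sketched with NumPy by inverting the cumulative hazards: H0(t) = t and H1(t) = t/2 give exponential times, and H2(t) = exp(t) – 1 gives G = log(1 + E/e^{η}) for a unit exponential E and linear predictor η. Two points are assumptions rather than facts from the text: the source does not say which covariate each of γ21, …, γ24 multiplies, so the order (TU, X1, X2, Z) is assumed, and administrative censoring at τ is implemented as min(C, τ). The function name is hypothetical.

```python
import numpy as np

def simulate_study_one(n, tau=3.0, seed=0):
    rng = np.random.default_rng(seed)
    R = np.repeat([1, 0], [n // 2, n - n // 2])        # first half on experimental arm
    X1 = rng.uniform(-1.0, 1.0, n)
    X2 = rng.binomial(1, 0.6, n)
    # Model (1): progression (susceptibility) status
    U = rng.binomial(1, 1.0 / (1.0 + np.exp(-1.6 + 1.8 * R - X1 - 0.1 * X2)))
    E0, E1, E2 = rng.exponential(1.0, (3, n))          # unit exponential draws
    # Model (2): H0(t) = t, so TD = E0 / exp(lp)
    TD = E0 / np.exp(-1.0 * R + 1.0 * X1 + 0.2 * X2)
    # Model (3), progression time: H1(t) = t / 2, so TU = 2 * E1 / exp(lp)
    TU = 2.0 * E1 / np.exp(-0.5 * R + 1.0 * X1 + 0.0 * X2)
    Z = rng.uniform(0.0, 1.0, n)
    p_switch = 1.0 / (1.0 + np.exp(0.5 - 0.3 * TU - 0.2 * X1 - 0.5 * Z))
    V = rng.binomial(1, p_switch) * (1 - R) * U        # only control-arm progressors switch
    # Model (3), gap time: ASSUMED covariate order (TU, X1, X2, Z)
    lp2 = -0.3 * R - 0.5 * V * (1 - R) + 0.6 * TU - 0.5 * X1 + 0.5 * X2 - 0.4 * Z
    G = np.log1p(E2 / np.exp(lp2))                     # inverts H2(t) = exp(t) - 1
    C = np.minimum(rng.uniform(1.0, 7.0, n), tau)      # ASSUMED: censor at min(C, tau)
    death = np.where(U == 1, TU + G, TD)
    progressed = (U == 1) & (TU <= C)                  # progression actually observed
    Y = np.minimum(death, C)
    delta = (death <= C).astype(int)
    group = np.where(U == 0,
                     np.where(delta == 1, 1, 4),
                     np.where(~progressed, 4, np.where(delta == 1, 2, 3)))
    W = np.where(progressed, TU, np.nan)
    V_obs = V * progressed                             # switching seen only after observed progression
    return {"R": R, "X1": X1, "X2": X2, "Z": Z, "W": W, "Y": Y,
            "delta": delta, "V": V_obs, "group": group}
```

Calling `simulate_study_one(400)` returns one replicate; the group labels follow the four observation groups of § 3.2. The realized group proportions and switching rate depend on the assumptions noted above, so they need not reproduce the figures quoted in the text.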

In the simulation study, we consider sample sizes of n = 400 and n = 1000. Bootstrap samples of size 50 are used to construct pointwise 95% confidence intervals for the estimated survival probability. The results from 1000 replicates are given in Table 1. Additionally, we calculate the square root of the mean square error and maximum absolute difference of the estimated survival curve using 200 equally spaced time-points between 0 and the maximum censoring time. In particular, for the methods of intent-to-treat, Branson & Whitehead (2002), Shao et al. (2005), and the proposed model, the square roots of the mean square errors of Ŝ0(t) are 0.062, 0.050, 0.054 and 0.035, respectively; the square root of the mean square errors of Ŝ1(t) are 0.032, 0.028, 0.030 and 0.030, respectively; the maximum absolute differences of Ŝ0(t) are 0.101, 0.067, 0.083 and 0.051, respectively and those of Ŝ1(t) are 0.061, 0.036, 0.051 and 0.049, respectively.
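The two summary measures for an estimated survival curve can be computed on a grid as in the sketch below. It assumes that both the estimated and the true curves are available as vectorized functions of time; how the measures are aggregated over replicates is not specified in the text, so the sketch handles a single curve. The function name is hypothetical.

```python
import numpy as np

def curve_errors(s_hat, s_true, t_max, n_grid=200):
    """Root mean square error and maximum absolute difference between two
    survival curves on n_grid equally spaced points in [0, t_max]."""
    grid = np.linspace(0.0, t_max, n_grid)
    diff = s_hat(grid) - s_true(grid)
    return np.sqrt(np.mean(diff**2)), np.max(np.abs(diff))
```

For example, `curve_errors(s_hat, lambda t: np.exp(-t), 7.0)` would compare an estimate with a unit exponential survival function up to time 7.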

Table 1. Simulation study I

| | | n = 400 | | | | n = 1000 | | | |
|---|---|---|---|---|---|---|---|---|---|
| Parameter | True | EST | SD | ESE | CP% | EST | SD | ESE | CP% |
| Survival model of no-progression population | | | | | | | | | |
| β0 | −1.0 | −1.08 | 0.26 | 0.26 | 94 | −1.04 | 0.16 | 0.16 | 93 |
| γ01 | 1.0 | 0.96 | 0.23 | 0.22 | 94 | 0.97 | 0.13 | 0.13 | 94 |
| γ02 | 0.2 | 0.22 | 0.23 | 0.24 | 94 | 0.20 | 0.14 | 0.14 | 95 |
| Disease progression model of progression population | | | | | | | | | |
| β1 | −0.5 | −0.54 | 0.16 | 0.16 | 94 | −0.52 | 0.10 | 0.10 | 94 |
| γ11 | 1.0 | 1.08 | 0.14 | 0.14 | 91 | 1.04 | 0.09 | 0.08 | 94 |
| γ12 | 0.0 | −0.01 | 0.14 | 0.15 | 94 | 0.00 | 0.09 | 0.09 | 95 |
| Gap time model of progression population | | | | | | | | | |
| β21 | −0.3 | −0.31 | 0.19 | 0.19 | 95 | −0.30 | 0.12 | 0.12 | 95 |
| β22 | −0.5 | −0.50 | 0.20 | 0.20 | 95 | −0.51 | 0.12 | 0.12 | 96 |
| γ21 | 0.6 | 0.61 | 0.18 | 0.18 | 95 | 0.60 | 0.11 | 0.11 | 95 |
| γ22 | −0.5 | −0.51 | 0.16 | 0.16 | 94 | −0.50 | 0.10 | 0.10 | 95 |
| γ23 | 0.5 | 0.51 | 0.17 | 0.17 | 94 | 0.50 | 0.10 | 0.10 | 95 |
| γ24 | −0.4 | −0.41 | 0.27 | 0.28 | 95 | −0.41 | 0.17 | 0.17 | 95 |
| Susceptibility model | | | | | | | | | |
| α0 | 1.6 | 1.66 | 0.26 | 0.26 | 95 | 1.63 | 0.16 | 0.16 | 96 |
| α1 | −1.8 | −1.80 | 0.27 | 0.28 | 94 | −1.79 | 0.17 | 0.16 | 96 |
| α21 | 1.0 | 0.90 | 0.24 | 0.24 | 93 | 0.94 | 0.15 | 0.15 | 95 |
| α22 | 0.1 | 0.11 | 0.26 | 0.27 | 94 | 0.11 | 0.16 | 0.15 | 95 |
| Predicted survival functions in control arm | | | | | | | | | |
| S0(τ/2) | 0.51 | 0.49 | 0.04 | 0.04 | 91 | 0.49 | 0.02 | 0.02 | 92 |
| S0(τ) | 0.17 | 0.18 | 0.03 | 0.03 | 91 | 0.18 | 0.02 | 0.02 | 91 |
| Predicted survival functions in experimental arm | | | | | | | | | |
| S1(τ/2) | 0.63 | 0.61 | 0.03 | 0.03 | 94 | 0.61 | 0.02 | 0.02 | 92 |
| S1(τ) | 0.32 | 0.33 | 0.03 | 0.03 | 93 | 0.33 | 0.02 | 0.02 | 94 |

EST, average of the parameter estimates; SD, sample standard deviation of the estimates; ESE, average of the standard error estimates; CP%, coverage probability of the 95% confidence interval based on a normal approximation.

In addition, we study the power of the test statistics proposed in § 3.3 under the simulation setup discussed above, and obtain the Type I error rate by letting β0 = β1 = β21 = β22 = α1 = 0. In particular, we consider two test statistics, test I with (ρ1,ρ2) = (0, 1) and test II with (ρ1,ρ2) = (0.5,0.5), for sample sizes of n = 400 and n = 1000. When n = 400, the powers are 87.1% for test I, and 85.6% for test II. The power increases to 99.8% for both tests when the sample size increases to 1000. For tests I and II, the Type I error rates are 4.5% and 4.8% when n = 400, respectively; and 5.4% and 4.8% when n = 1000, respectively.

5.2. Simulation study II

In the second simulation study, we use a modified version of the simulation setup in Shao et al. (2005) to compare the proposed model with existing methods for switching, and also to demonstrate the robustness of the proposed model. In particular, the survival time is generated according to the exponential distribution with hazard rate 0.0693 for the control arm and 0.0462 for the experimental treatment arm. The total sample size is n = 600, with 300 subjects in each arm. For both treatment arms, the random censoring time is generated according to the uniform distribution on the interval of 15–20 months, resulting in an overall censoring percentage of 35.4%. The time-to-event, which is the switching time in Shao et al. (2005), is generated from the exponential distribution with a mean of 7.22 months for the control group and 10.82 months for the experimental treatment group. In this paper, we focus on switching only from the control to the treatment arm and let the patients switch at the event time with probability 0.6, yielding a switching rate of 39.0%. After switching, the observed survival time for the switching patients is updated using equations (4) and (11) in Shao et al. (2005) with β = −0.4055, η00 = 0.1, η01 = 0.009 and η10 = η11 = 0.
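The quoted constants are mutually consistent, as a short arithmetic check shows: the log ratio of the two exponential hazards reproduces β = −0.4055, and the hazards correspond to median survival times of about 10 and 15 months. The variable names below are illustrative only.

```python
import math

hazard_control = 0.0693   # exponential hazard, control arm (per month)
hazard_treated = 0.0462   # exponential hazard, experimental arm (per month)

# Log hazard ratio of experimental versus control treatment
log_hazard_ratio = math.log(hazard_treated / hazard_control)

# Median of an exponential distribution is log(2) / hazard
median_control = math.log(2.0) / hazard_control
median_treated = math.log(2.0) / hazard_treated
```

Here `log_hazard_ratio` equals log(2/3), which rounds to −0.4055.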

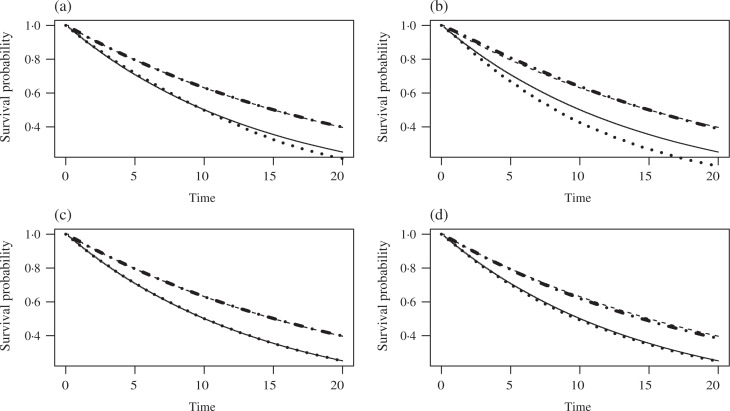

Figure 2 presents the averaged predicted survival curves on [0, 20] for the intent-to-treat Kaplan–Meier, Branson & Whitehead (2002), Shao et al. (2005) and proposed methods using 1000 simulations. The discrepancy among the four approaches lies mainly in the estimation of the control group survival curve. In particular, the method of Shao et al. (2005) and the proposed model yield survival curves with smaller bias. The square roots of the mean square errors and maximum absolute differences are 0.029 and 0.049, respectively, for Shao et al. (2005), and 0.031 and 0.043, respectively, for the proposed approach. On the other hand, bigger biases are observed for the Branson & Whitehead (2002) and the intent-to-treat approaches. The square roots of the mean square errors and maximum absolute differences are, respectively, 0.073 and 0.088 for the Branson & Whitehead (2002) method, and 0.034 and 0.069 for the intent-to-treat approach.

Fig. 2. Average predicted survival curves from simulation study II. S(t | R = a) is the potential survival function for subjects with treatment status a if they have no treatment switching. In each panel, the solid curve is the true survival function in the control arm, the dashed curve is the true survival function in the experimental treatment arm, while the dotted and the dash-dotted curves are, respectively, the estimated survival functions in these two arms. The estimates in the plots are based on (a) intent-to-treat analysis, (b) Branson & Whitehead (2002), (c) Shao et al. (2005) and (d) our method.

6. Analysis of the panitumumab data

We carry out here a detailed analysis of the panitumumab study. It is purely an abstract construct of the semicompeting risk nature of the proposed model to assume the existence of a subpopulation that is subject to disease progression, and thus this condition is not assumed to apply literally to this study. The baseline covariates we consider are initial treatment, age in years at screening, baseline electrocorticography performance status with 0 or 1 versus ⩾ 2, primary tumour diagnosis type with rectal versus colon, gender, and region with three levels consisting of western Europe, eastern and central Europe, and rest of the world. In the panitumumab study, the median age was 62.5 years and the interquartile range of age was (55, 69) years. There were 388 patients with electrocorticography score 0 or 1, 287 were male, 151 had rectal cancer, 352 were from Western Europe, 39 were from Eastern and Central Europe, and 63 were from the rest of the world. The median follow-up time was 189.5 days and the interquartile range of the follow-up time was (93, 334) days. Among those 387 patients who developed disease progression, the median disease progression time is 53 days and the interquartile range is (45, 84) days.

The model for the time of disease progression includes all the baseline covariates. Among the 387 patients who developed disease progression, the median age at the time of disease progression was 62.1 years with interquartile range (55.0, 69.1), and the numbers of patients who had partial response, stable disease and progressive disease were 19, 86 and 282, respectively. There were 348 patients with baseline Eastern Cooperative Oncology Group score 0 or 1, 286 patients had a last Eastern Cooperative Oncology Group score 0 or 1, and 180 patients had grade 2 or above adverse events.

The covariates in the model for the time from disease progression to death include additional prognostic factors for the switching decision. They are progression time, partial response age, best tumour response with partial response or stable disease versus progressive disease according to investigator assessment, last Eastern Cooperative Oncology Group performance status and grade 2 or above adverse events. We include these prognostic factors based on our best knowledge, with assistance from trial clinicians, so that Assumption 2 could be valid. Because of the dependency on the unobserved outcome, Assumption 2 is not testable, and the results could be biased if it is violated.

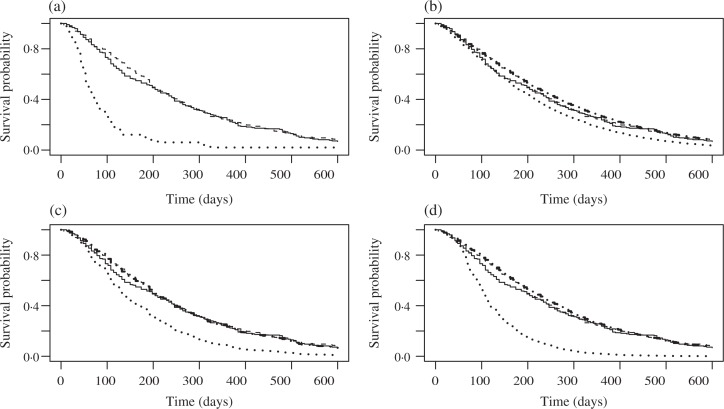

The results from the proposed model are given in Table 2. The survival probability estimates from the intent-to-treat Kaplan–Meier analysis, the no-switching subgroup Kaplan–Meier analysis, the Branson & Whitehead (2002) method, the Shao et al. (2005) method and the proposed method, at the 25th, 50th, 75th and 100th percentiles of time to death, are given in Table 3. The no-switching approach excludes the patients who switched from best supportive care alone to panitumumab plus best supportive care. The p-values for testing the treatment effect using these five approaches are 0.577, <0.001, 0.520, 0.002 and <0.001, respectively. For the Branson & Whitehead (2002) method, 1000 bootstrap samples are used to construct the standard error and p-value because the standard errors calculated from the covariance matrix at convergence are too small to construct valid confidence limits (Branson & Whitehead, 2002). Figure 3 provides the predicted survival curves for the two treatment groups, panitumumab plus best supportive care and best supportive care alone, using the five approaches. The intent-to-treat Kaplan–Meier and Branson & Whitehead (2002) approaches yield small survival differences between the two groups before 200 days and little difference after 200 days since enrolment. On the other hand, the subgroup analysis that excludes switching patients shows large differences between the two arms over the whole follow-up period. We investigated the reason for this discrepancy in the survival curve for the best supportive care alone group and found two key contributing factors: a high switching rate in that group, 167/223 = 75%; and selection bias, that is, patients with longer time-to-event were more likely to switch from best supportive care alone to panitumumab plus best supportive care, with a median time-to-event of 40.5 days for the non-switching patients and 49 days for the switching patients. The Shao et al. (2005) method and the proposed approach both yield large differences between the estimated survival curves of the two arms, with the larger treatment difference obtained by the proposed approach.
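The intent-to-treat and no-switching analyses above both rely on the Kaplan–Meier estimator and differ only in which patients are passed to it: all patients by randomized arm in the first case, and only non-switching patients in the second. The following is a minimal sketch of the product-limit calculation; the function name `kaplan_meier` and the toy data are hypothetical and are not taken from the panitumumab trial.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier product-limit estimate.

    times  : follow-up time for each patient
    events : 1 if death was observed at that time, 0 if censored
    Returns a list of (event_time, survival_probability) pairs,
    one per distinct time at which at least one death occurred.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = 0
        removed = 0
        # Gather every patient whose follow-up ends at time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths > 0:
            survival *= 1.0 - deaths / at_risk
            curve.append((t, survival))
        # Deaths and censored patients both leave the risk set after t.
        at_risk -= removed
    return curve

# Hypothetical toy data: five patients, two of them censored.
toy_times = [2, 3, 3, 5, 8]
toy_events = [1, 1, 0, 1, 0]
toy_curve = kaplan_meier(toy_times, toy_events)
```

Dropping switchers before calling such an estimator changes the risk set itself, which is one way to see why the no-switching subgroup analysis is exposed to the selection bias described above.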

Table 2.

Model estimates for the panitumumab data

| Parameter | EST | SE (×10) | P | Parameter | EST | SE (×10) | P |
|---|---|---|---|---|---|---|---|
| TD Model | | | | TU Model | | | |
| Treatment | −0.464 | 3.47 | 0.182 | Treatment | −1.144 | 1.18 | <0.001 |
| Age | 0.023 | 0.15 | 0.124 | Age | −0.015 | 0.05 | 0.004 |
| bECOG | −0.589 | 2.99 | 0.048 | bECOG | −0.805 | 1.74 | <0.001 |
| Rectal | −0.028 | 3.20 | 0.929 | Rectal | −0.018 | 1.10 | 0.871 |
| Male | −0.288 | 3.05 | 0.345 | Male | −0.054 | 1.09 | 0.622 |
| CenEastEU | −0.188 | 6.27 | 0.764 | CenEastEU | 0.194 | 2.50 | 0.439 |
| WesternEU | 0.181 | 3.99 | 0.650 | WesternEU | −0.068 | 1.60 | 0.672 |
| TG Model | | | | U Model | | | |
| Treatment | −0.784 | 2.14 | <0.001 | Intercept | 1.366 | 9.72 | 0.160 |
| V*(1-Treatment) | −1.383 | 2.09 | <0.001 | Treatment | −1.070 | 3.19 | <0.001 |
| Prog Time | −0.003 | 0.01 | 0.039 | Age | −0.008 | 0.14 | 0.546 |
| PR Age | −0.004 | 0.05 | 0.450 | bECOG | 1.905 | 3.34 | <0.001 |
| BTR PR | −0.226 | 3.45 | 0.512 | Rectal | 0.314 | 3.31 | 0.342 |
| BTR SD | −0.180 | 1.74 | 0.302 | Male | −0.303 | 3.21 | 0.346 |
| bECOG | −0.268 | 1.96 | 0.173 | CenEastEU | 0.078 | 6.23 | 0.901 |
| LECOG | −1.035 | 1.48 | <0.001 | WesternEU | 0.346 | 4.12 | 0.400 |
| AE | 0.295 | 1.16 | 0.011 | | | | |

EST, parameter estimates; SE, standard error of the estimates (×10); P, p-values; bECOG, baseline Eastern Cooperative Oncology Group performance status; CenEastEU, central and eastern Europe; WesternEU, western Europe; Prog Time, progression time; PR, partial response; BTR, best tumour response; SD, stable disease; LECOG, last Eastern Cooperative Oncology Group performance status; AE, adverse event.
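As a reading aid for Table 2, the reported p-values are close to what a two-sided Wald test would give once the tabulated standard errors are divided by 10. The sketch below checks two rows under that assumption; the use of a Wald test is our assumption, since the text does not name the test, and the function name `wald_p_value` is hypothetical.

```python
import math

def wald_p_value(estimate, se_times_10):
    """Two-sided Wald p-value from an estimate and a standard error
    that is reported multiplied by 10, as in Table 2 (assumed test)."""
    se = se_times_10 / 10.0
    z = estimate / se
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2.0))

# Treatment effect in the TD model: EST = -0.464, SE (x10) = 3.47.
p_treatment_td = wald_p_value(-0.464, 3.47)

# Adverse events in the TG model: EST = 0.295, SE (x10) = 1.16.
p_ae_tg = wald_p_value(0.295, 1.16)
```

Both values agree with the tabulated 0.182 and 0.011 up to rounding of the published estimates and standard errors.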

Table 3.

Predicted survival functions for the panitumumab data

| Time (Days) | ITT: BSC | ITT: P+BSC | No Crossover: BSC | No Crossover: P+BSC | IPE: BSC | IPE: P+BSC | Shao Cox: BSC | Shao Cox: P+BSC | TM: BSC | TM: P+BSC |
|---|---|---|---|---|---|---|---|---|---|---|
| 93 | 0.750 | 0.793 | 0.303 | 0.793 | 0.722 | 0.783 | 0.678 | 0.798 | 0.548 | 0.801 |
| 190 | 0.511 | 0.533 | 0.081 | 0.533 | 0.454 | 0.551 | 0.341 | 0.536 | 0.171 | 0.555 |
| 334 | 0.266 | 0.260 | 0.020 | 0.260 | 0.201 | 0.298 | 0.097 | 0.258 | 0.025 | 0.282 |
| 1024 | 0.013 | 0.038 | 0.020 | 0.038 | 0.001 | 0.007 | 0.001 | 0.023 | 0.001 | 0.026 |
| p-value | 0.577 | | <0.001 | | 0.520 | | 0.002 | | <0.001 | |

ITT, intent-to-treat; IPE, Branson & Whitehead (2002); Shao Cox, Shao et al. (2005); TM, proposed method; BSC, best supportive care alone; P+BSC, panitumumab plus best supportive care.

Fig. 3.

Predicted survival curves for the panitumumab data. In each panel, the solid curve is the intent-to-treat survival function in the control arm, the dashed curve is the intent-to-treat survival function in the experimental treatment arm, while the dotted and the dash-dotted curves are, respectively, the estimated survival functions in these two arms. The estimates in the survival curves are based on (a) no switching subgroup analysis using the Kaplan–Meier estimates, (b) the Branson & Whitehead (2002) method, (c) the Shao et al. (2005) method and (d) our method.

7. Extensions

We have conducted simulation studies when data are generated from the proposed model or from the models in Shao et al. (2005). Additional simulation studies may be carried out to further examine the robustness of the proposed method to misspecification of models (1)–(3) or to perform sensitivity analysis on the ignorability assumption of switching selection.

Although the proposed model is developed under partial treatment switching, i.e., not all patients switch treatment, it can be easily extended to the case with complete treatment switching in which all patients on the control arm switch to the experimental arm. Specifically, under complete treatment switching, the components of the proposed model for U, TD and TU remain the same and only the model in (3) for G needs to be modified as follows

as in this case β22 is no longer identifiable. However, under complete treatment switching, the estimator of the predictive survival function needs to be rederived, which is much more challenging than under partial treatment switching. In addition, in the proposed model, we build dependence between TU and G via the transition model. An alternative to the transition model is the frailty model. Under the latter, we have hU(t | R, X, U = 1) = h1(t) exp(β1 R + γ1 X)ω, and hG(t | R, Z, V, U = 1, TU) = h2(t) exp{β21 R + β22V (1 – R) + γ2 Z}ω, where ω is a latent gamma-frailty with mean one and variance θ. Compared with the frailty model, the transition model is much more numerically stable in the implementation of the expectation-maximization algorithm. Finally, the proposed method can also be extended to the case where patients may switch from either treatment arm as discussed in Shao et al. (2005). These extensions, along with comparison between the transition model and the frailty model, are currently under investigation.
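To make the frailty alternative concrete, the two hazards above can be typeset as follows, with a gamma frailty of mean one and variance θ written in shape and rate form. The last display is only a sketch of the complete-switching modification: it assumes that model (3) has the same linear predictor as the frailty hazard for G without ω, so that when every control patient who progresses switches, V(1 − R) reduces to 1 − R, which cannot be separated from the R term and the baseline hazard, and the β22 term is dropped.

```latex
\begin{align*}
h_U(t \mid R, X, U = 1) &= h_1(t)\exp(\beta_1 R + \gamma_1 X)\,\omega, \\
h_G(t \mid R, Z, V, U = 1, T_U) &= h_2(t)\exp\{\beta_{21} R + \beta_{22} V(1 - R) + \gamma_2 Z\}\,\omega, \\
\omega &\sim \mathrm{Gamma}(\text{shape} = \theta^{-1},\ \text{rate} = \theta^{-1}),
  \qquad E(\omega) = 1, \quad \mathrm{var}(\omega) = \theta, \\[4pt]
% Assumed form under complete switching (not taken from the source):
h_G(t \mid R, Z, U = 1, T_U) &= h_2(t)\exp(\beta_{21} R + \gamma_2 Z).
\end{align*}
```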

Acknowledgments

This research work was part of a collaborative effort with Amgen, Inc. and was partly funded by the National Institutes of Health, U.S.A. and by Amgen Inc. We thank the editor, associate editor and the two referees for their constructive comments and suggestions which have greatly improved this paper. The Amgen Research Group consists of, in alphabetical order, Drs Chao-Yin Chen, Eric M. Chi, Thomas Liu, Jean Pan, Steve M. Snapinn, Mike Wolf, Allen Xue and Nan Zhang. The authors do not have a conflict of interest in any portions of this paper.

Appendix

Proof of Theorem 1. Let ln(θ, H1, H2, H3) denote the observed loglikelihood function for (θ, H), and let H{t} = H(t) – H(t−). First, it is easy to see that if Ĥk{t} = ∞, then ln(θ̂, Ĥ1, Ĥ2, Ĥ3) = −∞. Moreover, this also holds if the jump size of Ĥk at the corresponding events is zero. Thus, the jump sizes of Ĥk at the corresponding events are positive and finite, so the derivatives of ln(θ, H1, H2, H3) with respect to each jump size of Ĥk should be zero at (θ̂, Ĥ1, Ĥ2, Ĥ3). This gives

| (A1) |

| (A2) |

| (A3) |

where Gi denotes group i, Ii(A) = I (i ∈ A), , , , and the additional subindex j denotes the expression for the j th subject. In addition, we let ŜD(t) = exp{− Ĥ0(t)eη̂Dj}, ŜU(t) = exp{−Ĥ1(Yi)eη̂Uj} and . Equation (A3) implies Ĥ0{Yi} ⩽ Ii(G1)/Σj∈G1 c0, where c0 is a positive lower bound of eη̂Dj. Since n−1 Σj∈G11 → pr(U = 0, Y ⩽ C) > 0, we obtain . Similarly, equations (A2) and (A3) yield that lim supn Ĥ1(τ) and lim supn Ĥ2(τ) are both finite.

By Helly’s selection theorem, for any subsequence, we can choose a further subsequence such that Ĥk weakly converges to an increasing function for k = 1, 2, 3. Moreover, we can assume θ̂ → θ*. We then show and θ* = θ. To this end, we construct H̃k such that H̃k has jumps at the same events as Ĥk; moreover, the jumps of H̃k are given by the right-hand side of (A1) to (A3) except that the parameters on the right-hand side are set to be the true values. It is straightforward to verify that H̃k converges uniformly to the true function Hk . Furthermore, we can show that d Ĥk/d H̃k converges uniformly to .

Therefore, since ln(θ̂, Ĥ1, Ĥ2, Ĥ3) – ln(θ, Ĥ1, Ĥ2, Ĥ3) ⩾ 0, we take limits on both sides and conclude that the Kullback–Leibler information between (θ*, , , ) and (θ, H1, H2, H3) is nonpositive. This immediately implies that the loglikelihood function at (θ*, , , ) is equal to the loglikelihood function at (θ, H1, H2, H3) with probability one. Thus, this equality holds for all subjects in Groups 1 to 4 as defined in § 3. Comparing the differences of the loglikelihood functions from subjects in Group 2 and Group 3, we have

so by Assumption 6, , , and . Let W = 0, we have

Now in the loglikelihood for subjects in Group 1, we let Y = 0 and obtain

Compare the above equations, so . Since one component of X is continuous and has a nonzero coefficient in α2, the above equation gives and . Finally, after integrating the likelihood equality function for Group 2 for W from 0 to Y, we have

Thus, and , . On the other hand, integrating the likelihood equality function for subjects in Group 1 for Y from 0 to Y gives

so , and .

We have proved that θ̂ → θ and Ĥk converges weakly to Hk . The latter can be further strengthened to uniform convergence in [0, τ ] since Hk is continuous. Therefore, Theorem 1 holds.

Proof of Theorem 2. The proof of Theorem 2 follows the same argument as the proof of Theorem 2 in Zeng & Lin (2010). In particular, their conditions (C.1)–(C.4) and (C.6) hold for our specific models. Their first identifiability condition (C.5) has been verified in the proof of Theorem 1. To complete the proof, it remains to verify their second identifiability condition (C.7). Consider the score function along a submodel Hk + ε∫ fk dHk and θ + εν, where ν = (β0, γ0, β1, γ1, β21, β22, γ2, α0, α1, α2). If this score function is zero with probability one, then we need to show that fk = 0 and ν = 0. For subjects in Group 2, the score equation is

| (A4) |

For subjects in Group 3, we obtain the score equation to be

| (A5) |

The difference between (A4) and (A5) gives f2(G) + ηG = 0, so by Assumption 6, f2 = 0, β21 = 0, β22 = 0 and γ2 = 0.

Using this result and equation (A5), the score equation for subjects in Group 4 becomes

| (A6) |

On the other hand, for subjects in Group 1,

| (A7) |

Then the difference between (A6) and (A7) gives f0(Y) + ηD = 0, which further gives f0 = 0, β0 = 0 and γ0 = 0. As a result, (A7) becomes so α0 = 0, α1 = 0 and α2 = 0. This, combined with equation (A5), further gives f1 = 0, β1 = 0 and γ1 = 0. We have verified condition (C.7) in Zeng & Lin (2010). According to their results, our Theorem 2 holds.

Moreover, from Theorem 3 in Zeng & Lin (2010), we also conclude that the inverse of the observed information is a consistent estimator for the asymptotic covariance.

Proof of Theorem 3. The consistency of Ŝa(t) follows from the consistency of the following terms, , , from Theorem 1. Moreover, we have the fact that, by the kernel approximation,

uniformly in x in the support of X and with probability one. Since Z and (TU, C) are independent given (R, X), the limit on the right-hand side is also equal to pr(G + TU > t | X = x, R = a, U = 1). Thus, Ŝa(t) → Sa(t). Note that Ŝa(t) – Sa(t) can be written as

| (A8) |

The first two terms are Hadamard differentiable with respect to θ̂, Ĥk and the empirical distribution of X given R. Therefore, by the functional delta method, these terms can be approximated as for some bounded functions fk (t) and g(x), where F̂ (X | R) is the empirical distribution function of X given R. To complete the proof of Theorem 3, we only need to show that the last term in equation (A8) is asymptotically normal.

Denote Q as subjects in Group 2 and Group 3 and use Pn to denote the empirical measure. The last term of (A8) can be reorganized as

| (A9) |

In (A9), we can apply the functional central limit result in Theorem 2.11.23 of van der Vaart & Wellner (1996) to show that the first two terms of (A9) converge in distribution to a Gaussian process with a factor n^{1/2}. From the kernel approximation, the last term is , and therefore, is op(n^{−1/2}). Combining the above results, we conclude that Theorem 3 holds.

References

- Amado RG, Wolf M, Peeters M, Cutsem EV, Siena S, Freeman DJ, Juan T, Sikorski R, Suggs S, Radinsky R, et al. Wild-type KRAS is required for panitumumab efficacy in patients with metastatic colorectal cancer. J Clin Oncol. 2008;26:1626–34. doi: 10.1200/JCO.2007.14.7116.

- Barthel FMS, Babiker A, Royston P, Parmar MKB. Evaluation of sample size and power for multi-arm survival trials allowing for non-uniform accrual, non-proportional hazards, loss to follow-up and cross-over. Statist Med. 2006;25:2521–42. doi: 10.1002/sim.2517.

- Branson M, Whitehead J. Estimating a treatment effect in survival studies in which patients switch treatment. Statist Med. 2002;21:2449–63. doi: 10.1002/sim.1219.

- Fix E, Neyman J. A simple stochastic model of recovery, relapse, death and loss of patients. Hum Biol. 1951;23:205–41.

- Greenland S, Lanes S, Jara M. Estimating effects from randomized trials with discontinuations: the need for intent-to-treat design and g-estimation. Clin. Trials. 2008;5:5–13. doi: 10.1177/1740774507087703.

- Hernán MÁ, Brumback B, Robins JM. Marginal structural models to estimate the causal effect of zidovudine on the survival of HIV-positive men. Epidemiology. 2000;11:561–70. doi: 10.1097/00001648-200009000-00012.

- Jiang Q, Snapinn SM, Iglewicz B. Calculation of sample size in endpoint trials: the impact of informative noncompliance. Biometrics. 2004;60:800–6. doi: 10.1111/j.0006-341X.2004.00231.x.

- Lachin JM, Foulkes MA. Evaluation of sample size and power for analyses of survival with allowance for nonuniform patient entry, losses to follow-up, noncompliance, and stratification. Biometrics. 1986;42:507–19.

- Lakatos E. Sample sizes based on the log-rank statistic in complex clinical trials. Biometrics. 1988;44:229–41.

- Larson G, Dinse G. A mixture model for the regression analysis of competing risks data. Appl Statist. 1985;34:201–11.

- Law MG, Kaldor JM. Survival analyses of randomized trials adjusting for patients who switch treatments. Statist Med. 1996;15:2069–76. doi: 10.1002/(SICI)1097-0258(19961015)15:19<2069::AID-SIM347>3.0.CO;2-V.

- London WB, Frantz CN, Campbell LA, Seeger RC, Brumback BA, Cohn SL, Matthay KK, Castleberry RP, Diller L. Phase II randomized comparison of topotecan plus cyclophosphamide versus topotecan alone in children with recurrent or refractory neuroblastoma: a children’s oncology group study. J Clin Oncol. 2010;28:3808–15. doi: 10.1200/JCO.2009.27.5016.

- Louis TA. Finding the observed information matrix when using the EM algorithm. J. R. Statist. Soc. B. 1982;44:226–33.

- Lu J, Pajak TF. Statistical power for a long-term survival trial with a time-dependent treatment effect. Contr. Clin. Trials. 2000;21:561–73. doi: 10.1016/s0197-2456(00)00108-2.

- Lunceford JK, Davidian M, Tsiatis AA. Estimation of survival distributions of treatment policies in two-stage randomization designs in clinical trials. Biometrics. 2002;58:48–57. doi: 10.1111/j.0006-341x.2002.00048.x.

- Marcus SM, Gibbons RD. Estimating the efficacy of receiving treatment in randomized clinical trials with noncompliance. Health Serv Outcomes Res Methodol. 2001;2:247–58.

- Porcher R, Lévy V, Chevret S. Sample size correction for treatment crossovers in randomized clinical trials with a survival endpoint. Contr. Clin. Trials. 2002;23:650–61. doi: 10.1016/s0197-2456(02)00239-8.

- Robins JM, Tsiatis AA. Correcting for non-compliance in randomized trials using rank preserving structural failure time models. Commun. Statist. A. 1991;20:2609–31.

- Robins JM, Hernán MÁ, Brumback B. Marginal structural models and causal inference in epidemiology. Epidemiology. 2000;11:550–60. doi: 10.1097/00001648-200009000-00011.

- Shao J, Chang M, Chow S-C. Statistical inference for cancer trials with treatment switching. Statist Med. 2005;24:1783–90. doi: 10.1002/sim.2128.

- Sverdrup E. Estimates and test procedures in connection with stochastic models for deaths, recoveries and transfers between different states of health. Skand Aktuar. 1965;52:185–211.

- van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. New York: Springer; 1996.

- White IR. Editorial on survival analyses of randomized trials adjusting for patients who switch treatments by M. G. Law and J. M. Kaldor. Statist Med. 1997;16:2619–25. doi: 10.1002/(sici)1097-0258(19971130)16:22<2619::aid-sim699>3.0.co;2-x.

- White IR. Letter to the editor. Estimating treatment effects in randomized trials with treatment switching. Statist Med. 2006;25:1619–22. doi: 10.1002/sim.2453.

- White IR, Carpenter J, Pocock SJ, Henderson RA. Adjusting treatment comparisons to account for non-randomized interventions: an example from an angina trial. Statist Med. 2003;22:781–93. doi: 10.1002/sim.1369.

- Yamaguchi T, Ohashi Y. Adjusting for differential proportions of second-line treatment in cancer clinical trials. Part II: an application in a clinical trial of unresectable non-small-cell lung cancer. Statist Med. 2004;23:2005–22. doi: 10.1002/sim.1817.

- Zeng D, Lin DY. A general asymptotic theory for maximum likelihood estimation in semiparametric regression models with censored data. Statist. Sinica. 2010;20:871–910.