Abstract

In many cases, neurons process information carried by the precise timings of spikes. Here we show how neurons can learn to generate specific temporally precise output spikes in response to input patterns of spikes having precise timings, thus processing and memorizing information that is entirely temporally coded, both as input and as output. We introduce two new supervised learning rules for spiking neurons with temporal coding of information (chronotrons), one that provides high memory capacity (E-learning), and one that has a higher biological plausibility (I-learning). With I-learning, the neuron learns to fire the target spike trains through synaptic changes that are proportional to the synaptic currents at the timings of real and target output spikes. We study these learning rules in computer simulations where we train integrate-and-fire neurons. Both learning rules allow neurons to fire at the desired timings, with sub-millisecond precision. We show how chronotrons can learn to classify their inputs, by firing identical, temporally precise spike trains for different inputs belonging to the same class. When the input is noisy, the classification also leads to noise reduction. We compute lower bounds for the memory capacity of chronotrons and explore the influence of various parameters on chronotrons' performance. The chronotrons can model neurons that encode information in the time of the first spike relative to the onset of salient stimuli or neurons in oscillatory networks that encode information in the phases of spikes relative to the background oscillation. Our results show that firing one spike per cycle optimizes memory capacity in neurons encoding information in the phase of firing relative to a background rhythm.

Introduction

There is increasing evidence that information is represented in the brain through the precise timing of spikes (temporally coded), not only through the neural firing rate [1]–[3]. For example, temporally structured multicell spiking patterns, organized into frames, were observed in hippocampus and cortex, and were associated to memory traces [4], [5]. In the olfactory bulb, spike latencies represent sensory input strength and identity [6], [7]. In the visual cortex, the relative spike timings of quasi-synchronized neurons, firing in sequences shorter than one cycle of beta/gamma oscillation, represent stimulus properties, and the information they carry grows with the oscillation strength [8]. The coding of information in the phases of spikes relative to a background oscillation has been observed in many brain regions, including the visual and prefrontal cortices and the hippocampus [9]–[14].

Learning in neural networks that represent information through a firing rate code has been thoroughly studied [15]; however, we have lacked efficient, theory-supported learning rules for spiking neurons with temporal coding of information. The tempotron, a model of a spiking neuron endowed with a specific learning rule, has shown how a neuron can give a binary response to information encoded in the precise timings of the afferent spikes [16]–[18]. But the tempotron's output represents information through the existence or the lack of an output spike during a predefined period. The timing of the tempotron's output spikes is arbitrary and does not carry information. Because of this change in the representation of information, a tempotron cannot be an information-carrying input for another tempotron. By contrast, the ReSuMe learning rule [19], [20] allows supervised learning of spiking neural codes where the output is also temporally coded, but this rule, as we will show, has a much lower memory capacity than the E-learning rule introduced here.

Here we present two new supervised learning rules for spiking neurons, which allow such neurons to process information that is encoded, for both input and output, in the precise timings of spikes. We show how single neurons can perform classification of input spike patterns into multiple categories, using a temporal coding of information with sub-millisecond precision. The E-learning rule that we introduce here is analytically derived, with approximations, and has a high memory capacity. The I-learning rule is heuristic, but is more biologically plausible, because synaptic changes depend directly on the synaptic currents at the timings (actual and target) of the postsynaptic spikes.

We first describe our results, by illustrating the chronotron problem, introducing our new learning rules, and describing their performance and their memory capacity. We then compare our results with previous ones. After a discussion of our results, the methods used for analytical derivations and computer simulations are presented in detail at the end of the paper.

Results

Understanding and illustrating the chronotron problem

We consider the problem of training a spiking neuron by changing its parameters, such that, for a given input, its output is as close as possible to some given target spike train (for how the target spike train may be provided in the brain, see the Discussion section). Multiple such input–output associations must be performed with a single set of neural parameters. Information is represented in both the input and the output through the precise timings of spikes. We call a neuron that solves this problem a chronotron.

In order to solve the chronotron problem, appropriate learning rules should be defined. Here we focus on learning rules that change the synaptic efficacies of the neuron, although other neural parameters can also be trained.

Our analysis uses the Spike Response Model (SRM) of spiking neurons, which reproduces with high accuracy the dynamics of the complex Hodgkin-Huxley neural model while being amenable to analytical treatment [21]. The integrate-and-fire neuron is a particular case of the SRM.

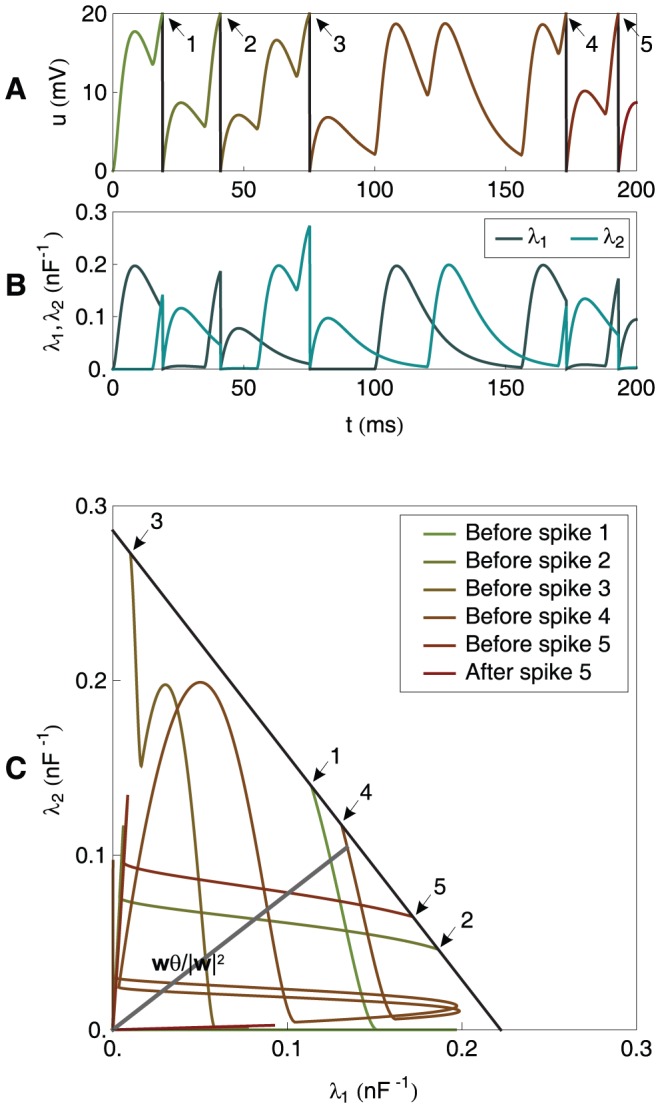

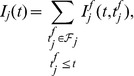

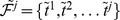

The considered neuron receives inputs through multiple synapses indexed by  , and the incoming spikes received through each of these synapses during the considered trial are indexed by

, and the incoming spikes received through each of these synapses during the considered trial are indexed by  according to their temporal order. We consider that the arrival of the

according to their temporal order. We consider that the arrival of the  -th presynaptic spike on the synapse

-th presynaptic spike on the synapse  of a neuron at the moment

of a neuron at the moment  leads to a postsynaptic potential (PSP) whose value as a function of the time

leads to a postsynaptic potential (PSP) whose value as a function of the time  is the product of synaptic efficacy

is the product of synaptic efficacy  and a normalized kernel

and a normalized kernel  , i.e.

, i.e.  (Methods). We consider here that synaptic changes are applied on a time scale that is much slower than the time scale of the variation of the PSPs and than the length of the considered trial, or that, in simulations, synaptic changes are applied at the end of one or more trials grouped in batches of information processing within which synaptic efficacies are held constant. Thus, the synaptic efficacies

(Methods). We consider here that synaptic changes are applied on a time scale that is much slower than the time scale of the variation of the PSPs and than the length of the considered trial, or that, in simulations, synaptic changes are applied at the end of one or more trials grouped in batches of information processing within which synaptic efficacies are held constant. Thus, the synaptic efficacies  can be considered effectively constant during a trial, but can change across trials. We denote as

can be considered effectively constant during a trial, but can change across trials. We denote as  the total normalized PSP resulting from the contribution of past presynaptic spikes coming through the synapse

the total normalized PSP resulting from the contribution of past presynaptic spikes coming through the synapse  ,

,

|

(1) |

The membrane potential  of the neuron is determined by the integration of the PSPs generated by all presynaptic spikes, and also by a term

of the neuron is determined by the integration of the PSPs generated by all presynaptic spikes, and also by a term  that models the refractoriness caused by the last spike fired by the studied neuron:

that models the refractoriness caused by the last spike fired by the studied neuron:

| (2) |

When the membrane potential reaches the firing threshold  , a spike is fired and the membrane potential is reset to the reset potential

, a spike is fired and the membrane potential is reset to the reset potential  .

.

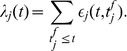

The chronotron problem can be illustrated graphically by considering a space having the same number of dimensions  as the number of afferent synapses of the neuron. In this space, the

as the number of afferent synapses of the neuron. In this space, the  synaptic efficacies

synaptic efficacies  define a vector

define a vector  and the normalized PSPs

and the normalized PSPs  define a vector

define a vector  . The vector

. The vector  moves around this space, in time, according to the dynamics of the PSPs, while

moves around this space, in time, according to the dynamics of the PSPs, while  changes on much larger timescales than

changes on much larger timescales than  . The neuron fires when

. The neuron fires when  touches a hyperplane that is perpendicular on

touches a hyperplane that is perpendicular on  and at a distance

and at a distance  of the origin (Methods). After firing, the PSPs are reset to 0 and thus the trajectories of

of the origin (Methods). After firing, the PSPs are reset to 0 and thus the trajectories of  always start from the origin. This is illustrated in Figs. 1 and 2 for a neuron with 2 synapses and in Fig. 3 for a neuron with 3 synapses. The chronotron problem can be understood as the problem of setting the spike-generating hyperplane, by changing

always start from the origin. This is illustrated in Figs. 1 and 2 for a neuron with 2 synapses and in Fig. 3 for a neuron with 3 synapses. The chronotron problem can be understood as the problem of setting the spike-generating hyperplane, by changing  , such that it intersects the trajectory of

, such that it intersects the trajectory of  at exactly those timings when we want spikes to be fired. This problem is very similar to the problem that needs to be solved in reservoir computing [22]–[24], where the state of a high-dimensional dynamical system, such as our vector

at exactly those timings when we want spikes to be fired. This problem is very similar to the problem that needs to be solved in reservoir computing [22]–[24], where the state of a high-dimensional dynamical system, such as our vector  , is processed by a (usually) linear discriminator such that the switch between output states (the crossing of the hyperplane defined by the linear discriminator) happens at desired moments of time.

, is processed by a (usually) linear discriminator such that the switch between output states (the crossing of the hyperplane defined by the linear discriminator) happens at desired moments of time.

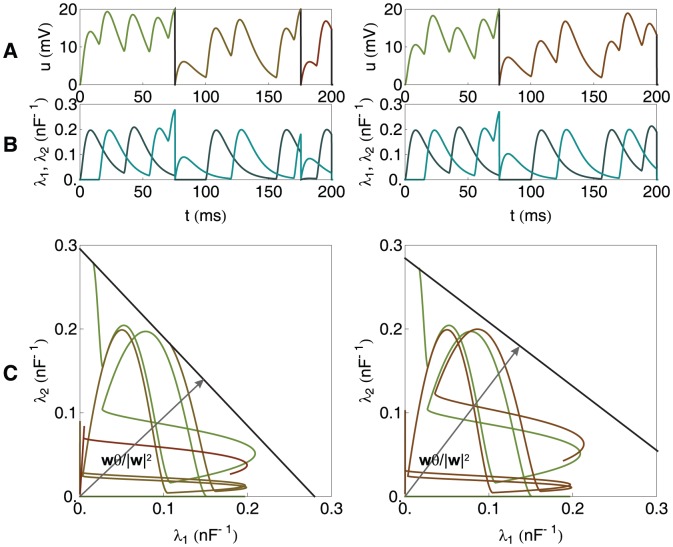

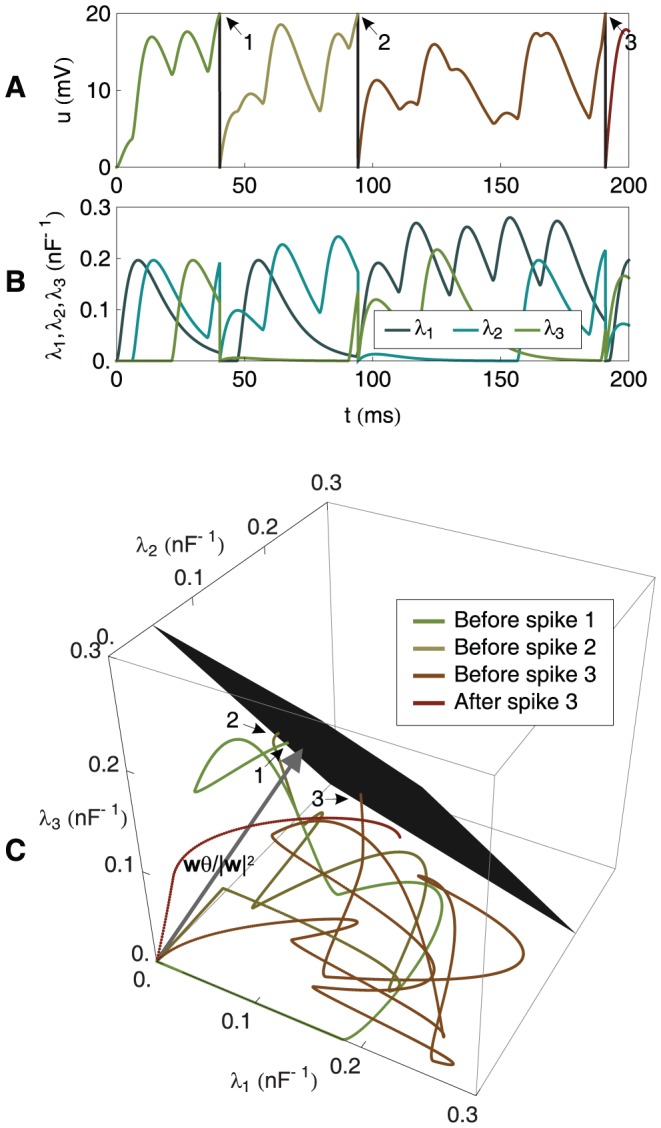

Figure 1. A graphical illustration of the chronotron problem for a neuron with 2 synapses.

(A) The dynamics of the membrane potential  . The numbered arrows indicate the timings when the membrane potential reaches the firing threshold and spikes are fired. (B) The dynamics of the two components of

. The numbered arrows indicate the timings when the membrane potential reaches the firing threshold and spikes are fired. (B) The dynamics of the two components of  . (C) The trajectory of

. (C) The trajectory of  . Spikes are generated when the trajectory reaches the spike-generating hyperplane, which is here a line. The chronotron problem is solved by adjusting the location of the spike-generating hyperplane, through changes in

. Spikes are generated when the trajectory reaches the spike-generating hyperplane, which is here a line. The chronotron problem is solved by adjusting the location of the spike-generating hyperplane, through changes in  , such that the timings of the fired spikes are the target ones. The numbered arrows indicate the generation of spikes at the times when the spike-generating line is reached. The neuron has

, such that the timings of the fired spikes are the target ones. The numbered arrows indicate the generation of spikes at the times when the spike-generating line is reached. The neuron has  .

.

Figure 2. A graphical illustration of the chronotron problem for a neuron with 2 synapses (continued).

As in Fig. 1, but for other values of  , resulted through the application of E-learning, starting from the situation in Fig. 1, and having as a target the generation of one spike at 75 ms. Left: during learning. Right: after learning converged.

, resulted through the application of E-learning, starting from the situation in Fig. 1, and having as a target the generation of one spike at 75 ms. Left: during learning. Right: after learning converged.

Figure 3. A graphical illustration of the chronotron problem for a neuron with 3 synapses.

(A) The dynamics of the membrane potential  . The numbered arrows indicate the timings when the membrane potential reaches the firing threshold and spikes are fired. (B) The dynamics of the three components of

. The numbered arrows indicate the timings when the membrane potential reaches the firing threshold and spikes are fired. (B) The dynamics of the three components of  . (C) The trajectory of

. (C) The trajectory of  . Spikes are generated when the trajectory reaches the spike-generating hyperplane, which is here the black plane. The numbered arrows indicate the generation of spikes at the timings when the spike-generating hyperplane is reached. The neuron has

. Spikes are generated when the trajectory reaches the spike-generating hyperplane, which is here the black plane. The numbered arrows indicate the generation of spikes at the timings when the spike-generating hyperplane is reached. The neuron has  .

.

Similar optimization problems can usually be solved by defining an error function and then changing the parameters to be optimized, through methods like gradient descent, which minimize this error function. The differences between the actual spike train fired by the neuron for a particular input and, respectively, the target spike train can be measured using spike train metrics such as the Victor & Purpura (VP) distance [25]. The VP distance is defined as the minimum cost for transforming one spike train into the other by creating, removing or moving spikes [25]. However, one cannot derive an efficient learning rule using directly this distance, because the terms corresponding to spikes that should be created or removed are constant and do not reflect how creating or removing these spikes depends on the plastic parameters. In order to solve this issue, we used a new error function, which is a modification of the VP distance.

E-learning

The VP distance is the sum of the costs assigned to either insertion of spikes, removal of spikes or shifting the timing of spikes. The cost of adding or deleting a single spike is set to 1, while the cost of shifting a spike by an amount  is

is  , where

, where  is a positive time constant that is a parameter of the metric. Instead of constant cost terms for the independent spikes that have to be created or removed, our error function changes the VP distance by including terms that depend on the value of the membrane potential of the neuron at the timings of these spikes. This allows these terms to be differentiated piecewisely with respect to the plastic parameters (Methods).

is a positive time constant that is a parameter of the metric. Instead of constant cost terms for the independent spikes that have to be created or removed, our error function changes the VP distance by including terms that depend on the value of the membrane potential of the neuron at the timings of these spikes. This allows these terms to be differentiated piecewisely with respect to the plastic parameters (Methods).

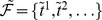

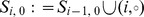

For a given input, the trained neuron fires at the moments  , where

, where  represents the index of the spike in the spike train. The ordered set of the spikes in the spike train fired by the neuron is

represents the index of the spike in the spike train. The ordered set of the spikes in the spike train fired by the neuron is  . The target spike train that the neuron should fire for that input is

. The target spike train that the neuron should fire for that input is  . In a transformation of minimal cost, according to the VP metric, of the actual spike train

. In a transformation of minimal cost, according to the VP metric, of the actual spike train  into the target one

into the target one  , the operations involved are the following: removal of spikes (that are not previously moved); insertion of spikes (at their target timings, so that they are not moved after insertion); and shifting of spikes toward their target timings. We denote as

, the operations involved are the following: removal of spikes (that are not previously moved); insertion of spikes (at their target timings, so that they are not moved after insertion); and shifting of spikes toward their target timings. We denote as  the subset of

the subset of  that represents the spikes that should be eliminated; and as

that represents the spikes that should be eliminated; and as  the subset of

the subset of  that represents the timings of target spikes at which new spikes should be inserted into

that represents the timings of target spikes at which new spikes should be inserted into  . We call the spikes in

. We call the spikes in  and

and  independent. The spikes in the actual spike train that are not eliminated,

independent. The spikes in the actual spike train that are not eliminated,  , are in a one-to-one correspondence with the spikes in the target spike train for which a correspondent is not inserted,

, are in a one-to-one correspondence with the spikes in the target spike train for which a correspondent is not inserted,  , and they should be moved towards their targets. We say that the spikes in correspondent pairs from

, and they should be moved towards their targets. We say that the spikes in correspondent pairs from  and

and  are linked or paired to their correspondent (match). The existing algorithm that computes the VP distance between two given spike trains [25] can be extended in order to also compute the sets

are linked or paired to their correspondent (match). The existing algorithm that computes the VP distance between two given spike trains [25] can be extended in order to also compute the sets  ,

,  and their complements (Methods).

and their complements (Methods).

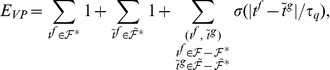

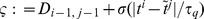

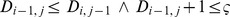

E-learning aims to minimize the following error function:

|

(3) |

where  is a positive parameter. The first sum runs over the independent actual spikes, the second sum runs over the independent target spikes, and the last sum runs over all unique pairs of matching spikes. Because the creation and deletion of spikes and changes in their classification in either

is a positive parameter. The first sum runs over the independent actual spikes, the second sum runs over the independent target spikes, and the last sum runs over all unique pairs of matching spikes. Because the creation and deletion of spikes and changes in their classification in either  or

or  lead to discontinuous changes of

lead to discontinuous changes of  (Fig. 4 B), gradient descent can only be ensured piecewisely. The synaptic changes that aim to minimize the error function through piecewise gradient descent are

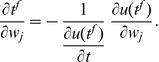

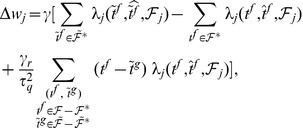

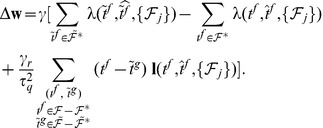

(Fig. 4 B), gradient descent can only be ensured piecewisely. The synaptic changes that aim to minimize the error function through piecewise gradient descent are  . By performing the derivation and after some approximations (Methods), we get the E-learning rule:

. By performing the derivation and after some approximations (Methods), we get the E-learning rule:

|

(4) |

where  is the learning rate, a positive parameter, and

is the learning rate, a positive parameter, and  another positive parameter.

another positive parameter.

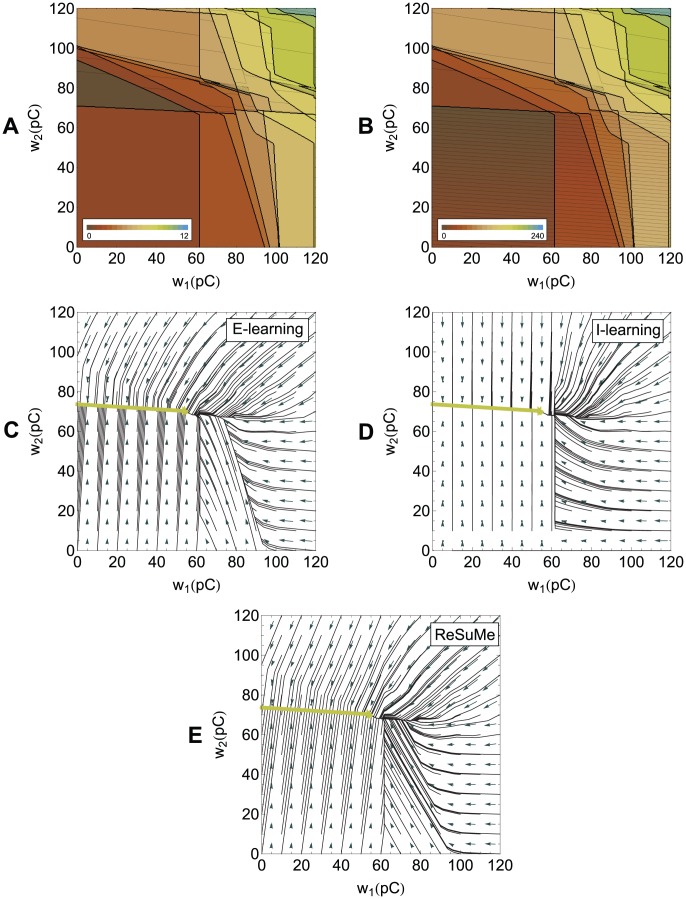

Figure 4. The error landscape for a neuron with two synapses and the descent on this landscape during learning.

The neuron receives several input spikes on each synapse, the same as in Figs. 1 and 2, and has to fire one spike at a predefined target timing, the same as in Fig. 2. (A), (B) A contour plot of the VP and E distances between the actual spike train and the target spike train as a function of the values of the synaptic efficacies. The thick lines correspond to discontinuities of the distances. (A) VP distance. (B) E distance. (C), (D), (E) The dynamics of the synaptic efficacies according to the learning rules. The black lines represent actual trajectories of the synaptic efficacies. The vectors represent synaptic changes. The green line corresponds to the values of the synaptic efficacies for which the output corresponds to the target spike train. (C) E-learning. (D) I-learning. (E) ReSuMe.

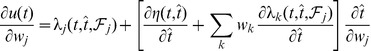

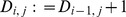

E-learning works by modifying each synaptic efficacy  by terms that depend on the normalized PSP

by terms that depend on the normalized PSP  . For all target spikes that the neuron should fire, for which a spike should be created, each synaptic efficacy should be increased with a term proportional to

. For all target spikes that the neuron should fire, for which a spike should be created, each synaptic efficacy should be increased with a term proportional to  at the moments of these target spikes. For all output spikes that should be eliminated, each synaptic efficacy needs to be decreased with a term proportional to the value of

at the moments of these target spikes. For all output spikes that should be eliminated, each synaptic efficacy needs to be decreased with a term proportional to the value of  at the moments of these spikes. For all actual spikes that are close to their target positions and should be moved towards them, each synaptic efficacy needs to change with a term proportional to the value of

at the moments of these spikes. For all actual spikes that are close to their target positions and should be moved towards them, each synaptic efficacy needs to change with a term proportional to the value of  at the moments of the actual spikes, multiplied by the temporal difference between actual and target spikes. Fig. 5 illustrates the learning rule.

at the moments of the actual spikes, multiplied by the temporal difference between actual and target spikes. Fig. 5 illustrates the learning rule.

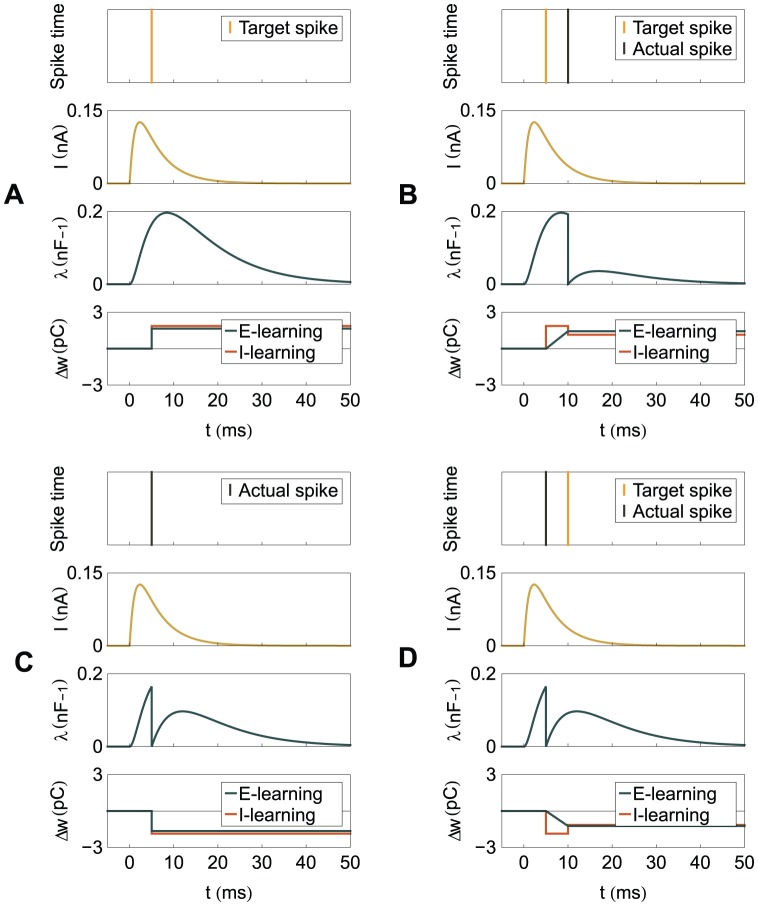

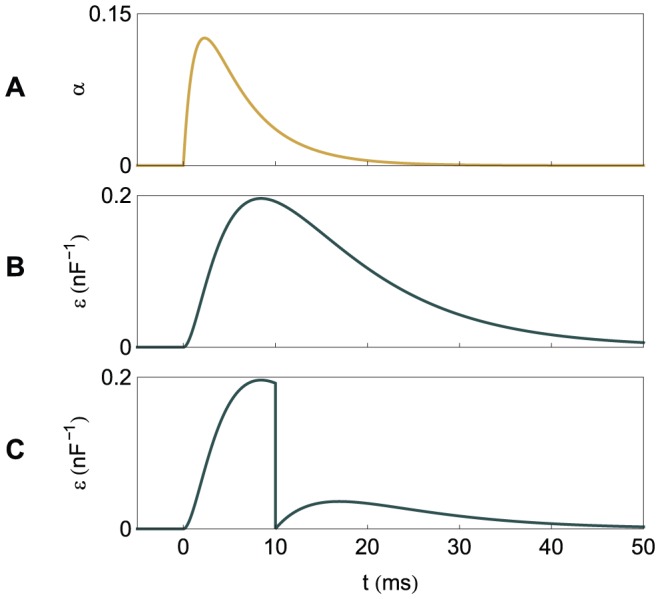

Figure 5. A graphical illustration of the plastic changes implied by the learning rules.

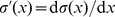

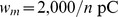

The graphs show the spike timings and, for one synapse, the dynamics of the synaptic current  , the normalized PSP

, the normalized PSP  and the synaptic changes

and the synaptic changes  implied by the two learning rules. It is considered that one input spike arrives at this synapse at

implied by the two learning rules. It is considered that one input spike arrives at this synapse at  . The synaptic changes are shown to be localized temporally along the events that cause them; the actual application of the synaptic changes can be delayed with respect to these events. (A) One independent target spike and no actual spike. (B) A pair of matching target and actual spikes, the actual one following the target one. (C) One independent actual spike and no target spike. (D) A pair of matching target and actual spikes, the target one following the actual one.

. The synaptic changes are shown to be localized temporally along the events that cause them; the actual application of the synaptic changes can be delayed with respect to these events. (A) One independent target spike and no actual spike. (B) A pair of matching target and actual spikes, the actual one following the target one. (C) One independent actual spike and no target spike. (D) A pair of matching target and actual spikes, the target one following the actual one.

The E-learning rule is appropriate for both excitatory and inhibitory synapses. If we consider that the excitatory synapses have a positive synaptic efficacy  and the inhibitory synapses have a negative one, the learning rule in the form presented above can be applied to both cases. Without an extra bounding of the synaptic efficacies, E-learning will transform an excitatory synapse into an inhibitory one or viceversa, as needed for performing the task.

and the inhibitory synapses have a negative one, the learning rule in the form presented above can be applied to both cases. Without an extra bounding of the synaptic efficacies, E-learning will transform an excitatory synapse into an inhibitory one or viceversa, as needed for performing the task.

E-learning aims to minimize the error function by performing piecewise gradient descent. The inherent discontinuities introduced in the error function by creation or removal of spikes or by creation or breaking of matching pairs of actual and target spikes may possibly lead to both increases and decreases of the error function. However, the terms that reflect in the error function spikes that should be created or removed ensure that the membrane potential is increased or, respectively decreased at the corresponding timings, such that the number of spikes becomes the desired one and the actual spikes are close to the target ones. Because the learning rule uses approximations, it is possible that gradient descent is not ensured, not even piecewisely. Thus, the optimality of E-learning cannot be guaranteed analytically. However, as the simulations have shown, E-learning is more efficient for chronotron training than the other existing learning rules, having a much higher memory capacity.

It is possible to devise a continuous error function that is then properly derivable, yielding a proper gradient descent that can be guaranteed analytically. However, the continuous error function would be much more complex than the current one, yielding a complex learning rule. This would encumber an intuitive understanding of the learning rule, as it is possible with E-learning. A learning rule based on a continuous error function will be presented elsewhere.

I-learning

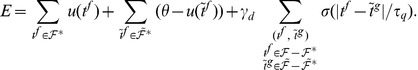

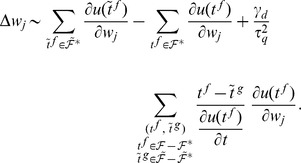

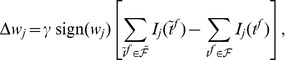

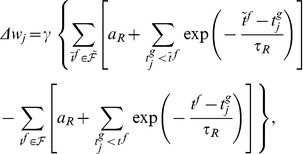

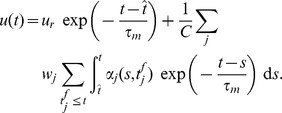

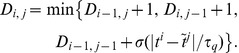

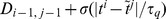

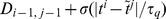

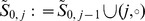

The second learning rule that we developed is heuristic and is inspired by both the E-learning rule and the existing ReSuMe learning rule [19], [20] (Methods). The I-learning rule is defined by

|

(5) |

where  is the learning rate, a positive parameter, and

is the learning rate, a positive parameter, and  is the synaptic current on the synapse

is the synaptic current on the synapse  . In our simulations, synaptic currents were modeled as a difference between two exponentials (Methods).

. In our simulations, synaptic currents were modeled as a difference between two exponentials (Methods).

As in ReSuMe, actual and target output spikes lead to synaptic changes of equal amplitude but of opposite signs, such that when the actual spike train corresponds to the target one the terms cancel out and synapses become stable. In ReSuMe, synaptic changes depend exponentially on the relative timings of pairs of pre- and postsynaptic spikes, as in some models of spike-timing-dependent plasticity. In contrast, here we consider that synaptic changes depend on the value of the synaptic current at the timings of spikes. This learning rule is thus biologically-plausible, since it depends on quantities that are locally available to the synapse. Target spikes determine synaptic potentiation, while actual spikes lead to synaptic depression. We call this synaptic current-dependent rule I-learning (Fig. 5).

Because  is proportional to

is proportional to  , synaptic changes under I-learning converge to zero when

, synaptic changes under I-learning converge to zero when  approaches zero, for small

approaches zero, for small  . Thus, the I-learning rule does not allow an excitatory synapse to become inhibitory or viceversa. This corresponds to how neurons in the brain release neurotransmitters that lead, for a particular presynaptic neuron, to either excitation or inhibition of postsynaptic neurons having potentials not far from the resting potentials (Dale's principle) [26].

. Thus, the I-learning rule does not allow an excitatory synapse to become inhibitory or viceversa. This corresponds to how neurons in the brain release neurotransmitters that lead, for a particular presynaptic neuron, to either excitation or inhibition of postsynaptic neurons having potentials not far from the resting potentials (Dale's principle) [26].

In E-learning, synaptic changes caused by activity within a trial can be computed only at the end of the trial, because one needs the actual spikes fired during the entire trial under study in order to compute which spikes are independent and which are linked, although one can imagine approximate algorithms for matching the spike trains, which would also work online. In I-learning, synaptic changes can also be applied online, which is more biologically relevant.

Performance of the learning rules

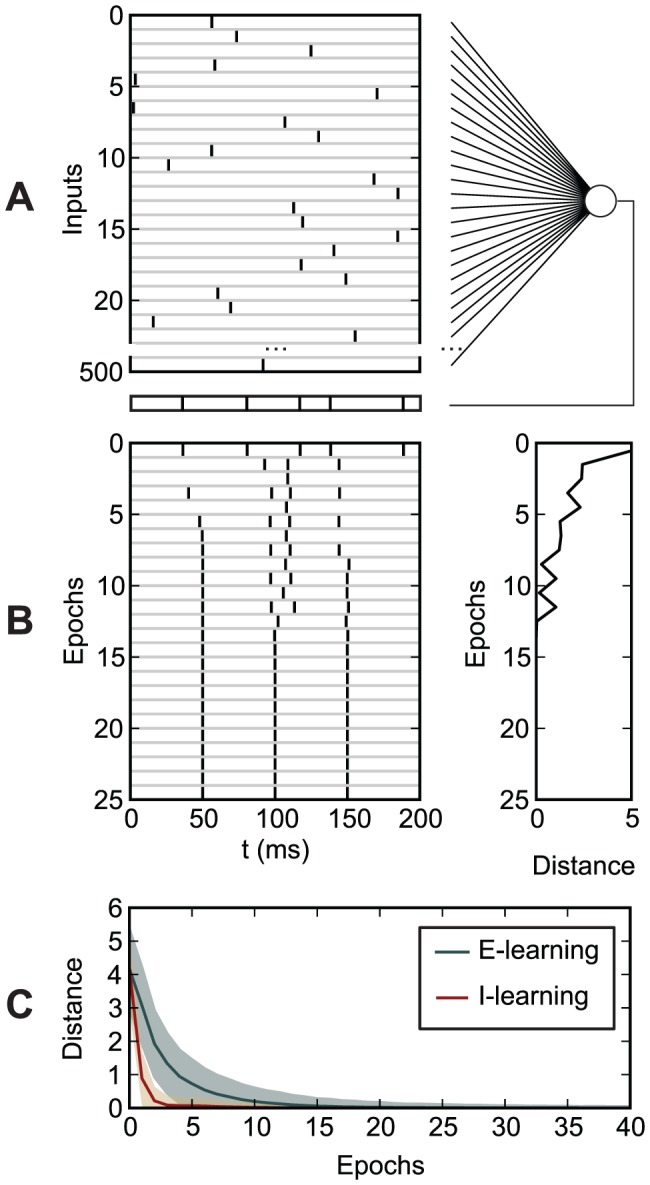

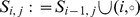

We have studied these rules in computer simulations involving integrate-and-fire neurons. Both learning rules allow a neuron to perform temporally-accurate input-output mappings. Fig. 6 illustrates learning of a mapping between one input pattern (the spike trains coming through all input synapses) and one output spike train consisting of three spikes. The learning rules perform a descent in the landscape defined by the VP or E distance (Fig. 4).

Figure 6. Learning of a mapping between one input pattern and one output spike train.

The trained neuron receives inputs from 500 neurons. The spike trains received from these neurons form the input pattern. Each input spike train consists of one spike within the 200 ms of a trial, generated at a random timing having an uniform distribution within the trial. The target output spike train consists of spikes at 50, 100 and 150 ms. (A) Part of the input pattern and the output spike train of the trained neuron, corresponding to this input, before learning. Only some of the 500 input spike trains are illustrated. (B) The synaptic efficacies change according to E-learning, such that the trained neuron's output reproduces the target spike train. Left: The output spike train during learning. Right: The VP distance between the actual and the target output spike train, during learning. The target output is reproduced after less than 15 epochs (presentations of the input pattern). (C) The VP distance between the actual and the target output spike train during learning, for E-learning and I-learning: averages and standard deviations over 10,000 realizations of the same experiment. Each realization uses different, random input spike trains and initial values of the synaptic efficacies.

We studied next setups where the chronotron had to memorize multiple input-output associations. Both the input and the output encoded information in the precise spike timings: both input and output spike trains consisted of one spike per trial and the timing of this spike represented the information (time-to-first-spike coding or latency coding). The length of spike patterns (and of one simulation trial) was 200 ms. The latency of a spike with respect to the beginning of a trial can correspond to the phase of a spike with respect to a background oscillation, modeling a phase-of-firing encoding of information, and multiple trials can correspond to multiple periods of the oscillation. This could model experimentally-observed situations where phase locking of spikes relative to a theta rhythm is associated to encoding and memorizing of information [6], [9], [10], [13], [14].

Fig. 7 illustrates learning of a mapping between 10 different input patterns and one output spike train consisting of one spike at the middle of the trial interval. The neuron learns to perform this mapping, for all 10 input patterns, using the same set of synaptic efficacies. For example, for E-learning, in 99.9% of 10,000 realizations, the neuron was able to fire the correct number of spikes (one spike) and the spike had an average timing difference of less than 0.03 ms with respect to the timing of the target spike, after about 8 minutes of learning (simulated time; 241 learning epochs). In 95% of realizations, the average timing error was less than 1 ms after 1.6 minutes of learning (48 learning epochs). Learning worked even when the inputs were jittered, i.e. at each trial, input spikes were displaced around the reference timing according to a gaussian distribution. For example, in the same conditions as before but with an input jittered with a 5 ms amplitude, in more than 95% of the realizations, the neuron fired one spike with an average timing error of less than 2 ms, after about 8 minutes of learning (225 epochs). A 5 ms gaussian jitter amplitude corresponds to a 3.99 ms average timing displacement of the input spikes (Methods), so, in this case, the mapping also led to noise reduction, by doubling the precision of spike timing.

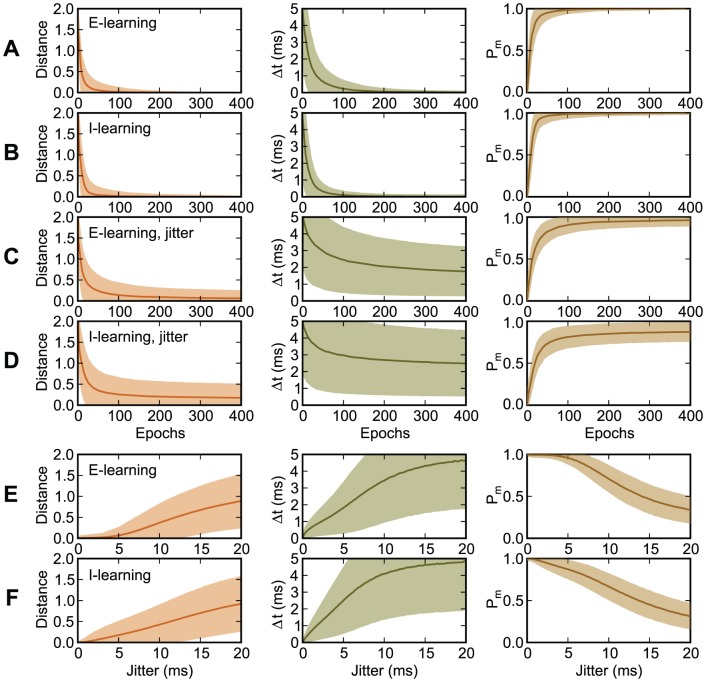

Figure 7. Learning of a mapping between 10 input patterns, with and without jitter, and one output spike train.

Left: The VP distance between the actual and the target output spike train. Center: The timing difference  between matching spikes and the target spikes. Right: The probability

between matching spikes and the target spikes. Right: The probability  that the fired spikes matched the target ones. The graphs represent averages and standard deviations over input patterns and over 10,000 realizations. (A)–(D): Evolution during learning, as a function of the learning epoch. (A), (B): No jitter. (C), (D): A gaussian jitter with an amplitude of 5 ms is added to each presentation of the input patterns. (E), (F): Values after 400 learning epochs, as a function of the amplitude of the input jitter. (A), (C), (E): E-learning. (B), (D), (F): I-learning. The inputs and the trial length are as in Fig. 6. The target output spike train consists of one spike at 100 ms.

that the fired spikes matched the target ones. The graphs represent averages and standard deviations over input patterns and over 10,000 realizations. (A)–(D): Evolution during learning, as a function of the learning epoch. (A), (B): No jitter. (C), (D): A gaussian jitter with an amplitude of 5 ms is added to each presentation of the input patterns. (E), (F): Values after 400 learning epochs, as a function of the amplitude of the input jitter. (A), (C), (E): E-learning. (B), (D), (F): I-learning. The inputs and the trial length are as in Fig. 6. The target output spike train consists of one spike at 100 ms.

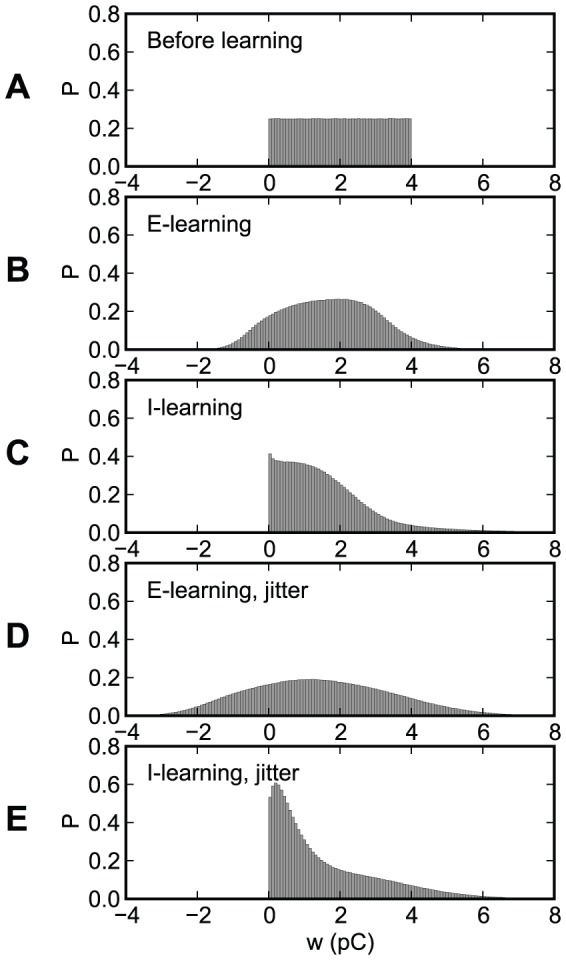

Fig. 8 presents the distribution of the synaptic efficacies, before and after learning, for the experiments presented in Fig. 7. This distribution has been computed over the 10,000 realizations of the experiments. With I-learning, all synapses stay excitatory, like they were generated initially, although a significant fraction of them become close to zero, after learning. E-learning allows synapses to change sign. When the input is subject to jitter, the synaptic distributions after learning become broader than in the case of no jitter.

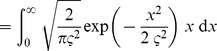

Figure 8. The distribution of the synaptic efficacies, before and after learning, for the experiments presented in Fig. 7 .

(A) Before learning. (B)–(E) After 400 learning epochs. (B), (D) E-learning. (C), (E) I-learning. (B), (C) No jitter. (D), (E) A gaussian jitter with an amplitude of 5 ms is applied to the inputs.

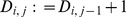

Memory capacity of the chronotron

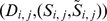

The chronotron is able to perform generic classification tasks, where  input patterns must be classified into

input patterns must be classified into  categories through hetero-association. For all the different input patterns in one category, the chronotron must fire the same output spike train, using the same set of synaptic efficacies. In our simulations, equal number of patterns were randomly assigned to each category.

categories through hetero-association. For all the different input patterns in one category, the chronotron must fire the same output spike train, using the same set of synaptic efficacies. In our simulations, equal number of patterns were randomly assigned to each category.

The ability of neurons to memorize mappings corresponding to classification tasks increases with the number of input synapses  . The ratio

. The ratio  (the number of input patterns memorized per input synapse) represents the load imposed by the task on the neuron. A characteristic of the neuron's ability to learn is the maximum load for which the mappings are performed correctly [16], which we call the capacity

(the number of input patterns memorized per input synapse) represents the load imposed by the task on the neuron. A characteristic of the neuron's ability to learn is the maximum load for which the mappings are performed correctly [16], which we call the capacity  of the neuron. We considered that the chronotron had a correct output when target spikes were reproduced with a 1 ms precision, which corresponds to the lower end of the 0.15–5 ms range of the precision of spikes observed in several areas of the brain [27]–[34]. In our setup, in both input and target output spike trains there was one spike per trial and information was encoded in the spike latencies. Except where specified, the input spike trains consisted, for each of the

of the neuron. We considered that the chronotron had a correct output when target spikes were reproduced with a 1 ms precision, which corresponds to the lower end of the 0.15–5 ms range of the precision of spikes observed in several areas of the brain [27]–[34]. In our setup, in both input and target output spike trains there was one spike per trial and information was encoded in the spike latencies. Except where specified, the input spike trains consisted, for each of the  synapses, of one spike generated at a random timing, distributed uniformly, and the target spike train for each category

synapses, of one spike generated at a random timing, distributed uniformly, and the target spike train for each category  consisted of one spike at

consisted of one spike at  (Methods). Fig. 9 illustrates the performance of the chronotron in simulations where inputs were classified into

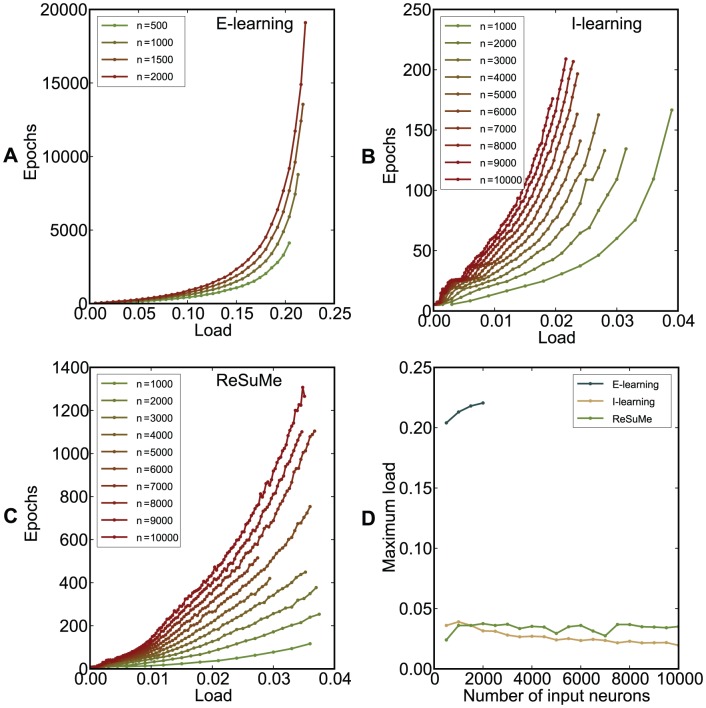

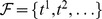

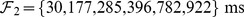

(Methods). Fig. 9 illustrates the performance of the chronotron in simulations where inputs were classified into  categories. For the particular studied setup, both I-learning and ReSuMe led to a capacity between 0.02 and 0.04, while E-learning led to a capacity up to

categories. For the particular studied setup, both I-learning and ReSuMe led to a capacity between 0.02 and 0.04, while E-learning led to a capacity up to  patterns per synapse.

patterns per synapse.

Figure 9. The performance of the chronotron learning rules for a classification problem.

The input patterns are classified into 3 classes. (A)–(C) The average minimum number of epochs required for correct learning is displayed as a function of the load  , for various values of the number of input synapses

, for various values of the number of input synapses  . Note the scale differences. (A) E-learning. (B) I-learning. (C) ReSuMe. (D) The maximum load for which correct learning can be achieved (the capacity

. Note the scale differences. (A) E-learning. (B) I-learning. (C) ReSuMe. (D) The maximum load for which correct learning can be achieved (the capacity  ), as a function of

), as a function of  . E-learning has a much better performance than I-learning or ReSuMe. For E-learning, simulations for higher

. E-learning has a much better performance than I-learning or ReSuMe. For E-learning, simulations for higher  were not performed because of the high computational cost, due to the high capacity resulted through this learning rule. Averages were computed over 500 realizations with different, random initial conditions.

were not performed because of the high computational cost, due to the high capacity resulted through this learning rule. Averages were computed over 500 realizations with different, random initial conditions.

The load and the capacity have been used to characterize neurons with binary outputs, which memorize one bit of information for every pattern. The chronotron can classify inputs in more than one category, and for  categories it memorizes

categories it memorizes  bits of information for every input pattern. Therefore, a better measure for the chronotron's learning ability is the information load

bits of information for every input pattern. Therefore, a better measure for the chronotron's learning ability is the information load  and the corresponding information capacity

and the corresponding information capacity  , equal to the maximum information load. The number of categories into which a chronotron with latency coding of information classifies its inputs is limited only by the temporal precision of the output spike. For example, if this temporal precision is 1 ms, with the particular setup presented here, the chronotron can encode up to about

, equal to the maximum information load. The number of categories into which a chronotron with latency coding of information classifies its inputs is limited only by the temporal precision of the output spike. For example, if this temporal precision is 1 ms, with the particular setup presented here, the chronotron can encode up to about  categories (Methods). When information is encoded in the spike latencies, the simulations showed that the chronotron's capacity does not depend on the number of categories

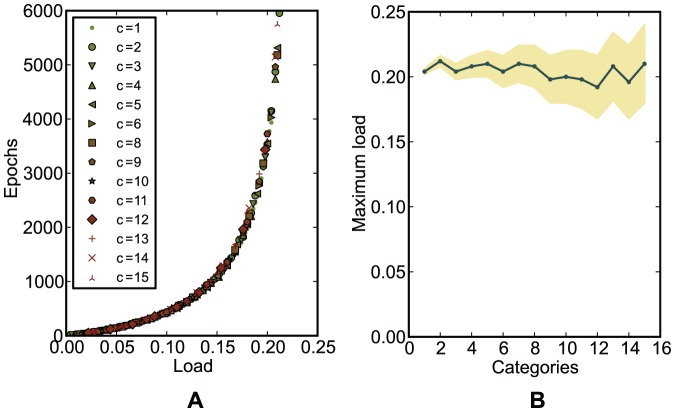

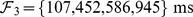

categories (Methods). When information is encoded in the spike latencies, the simulations showed that the chronotron's capacity does not depend on the number of categories  (Fig. 10). The maximum information capacity of the chronotron, for E-learning and the particular setup that we used, can be then computed as

(Fig. 10). The maximum information capacity of the chronotron, for E-learning and the particular setup that we used, can be then computed as  bits per synapse (Methods). Extrapolating, this means that a chronotron with about 10,000 input synapses would be able to fire a spike at the correct timing, with a 1 ms precision, among up to 80 possible ones, for about 2,200 different, random input patterns, and thus to memorize about 13.9 kilobits of information. The information capacity of the perceptron is 2 bits per synapse and the one of the tempotron is around 3 bits per synapse [16]. However, if more than two input categories have to be discriminated, the chronotron has the advantage of being able to carry computations that need multiple perceptrons or tempotrons to be performed, being thus more efficient. Unlike the tempotron, the chronotron uses the same coding of information for both inputs and outputs and is therefore able to interact with other chronotrons.

bits per synapse (Methods). Extrapolating, this means that a chronotron with about 10,000 input synapses would be able to fire a spike at the correct timing, with a 1 ms precision, among up to 80 possible ones, for about 2,200 different, random input patterns, and thus to memorize about 13.9 kilobits of information. The information capacity of the perceptron is 2 bits per synapse and the one of the tempotron is around 3 bits per synapse [16]. However, if more than two input categories have to be discriminated, the chronotron has the advantage of being able to carry computations that need multiple perceptrons or tempotrons to be performed, being thus more efficient. Unlike the tempotron, the chronotron uses the same coding of information for both inputs and outputs and is therefore able to interact with other chronotrons.

Figure 10. The dependence on the number of categories of the performance of E-learning for a classification problem.

of the performance of E-learning for a classification problem.

(A) The average minimum number of epochs required for correct learning, as a function of the load  , for various numbers of categories

, for various numbers of categories  . Regardless of

. Regardless of  , the points fall on the same curve. (B) The maximum load for which correct learning is achieved (the capacity

, the points fall on the same curve. (B) The maximum load for which correct learning is achieved (the capacity  ), as a function of the number of categories

), as a function of the number of categories  . The shaded area represents the uncertainty due to the fact that the load can vary only discretely, in steps of

. The shaded area represents the uncertainty due to the fact that the load can vary only discretely, in steps of  , for a particular

, for a particular  . The capacity is approximately constant for all

. The capacity is approximately constant for all  .

.

The capacities computed here for the chronotron are lower bounds, since it might be possible to develop learning rules which are more efficient than E-learning and to devise setups with more efficient encoding of information.

Dependence on the setup parameters

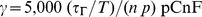

In our setups, information was represented in the precise timings of spikes relative to the beginning of trials of constant duration. If trials correspond to periods of a background oscillation, the timing of spikes corresponds to the phase relative to this oscillation. Simulations performed in this framework have shown that chronotrons have the best efficacy when both input and output spike trains consist of one spike per trial (period). Setups where inputs or outputs consisted of more than one spike, or where some of the inputs fired no spikes, had suboptimal performance in terms of learning speed and memory capacity (Figs. 11, 12, and 13). However, learning was still possible under all of these conditions, unless the input pattern included too few spikes (less than about 100, for our setup).

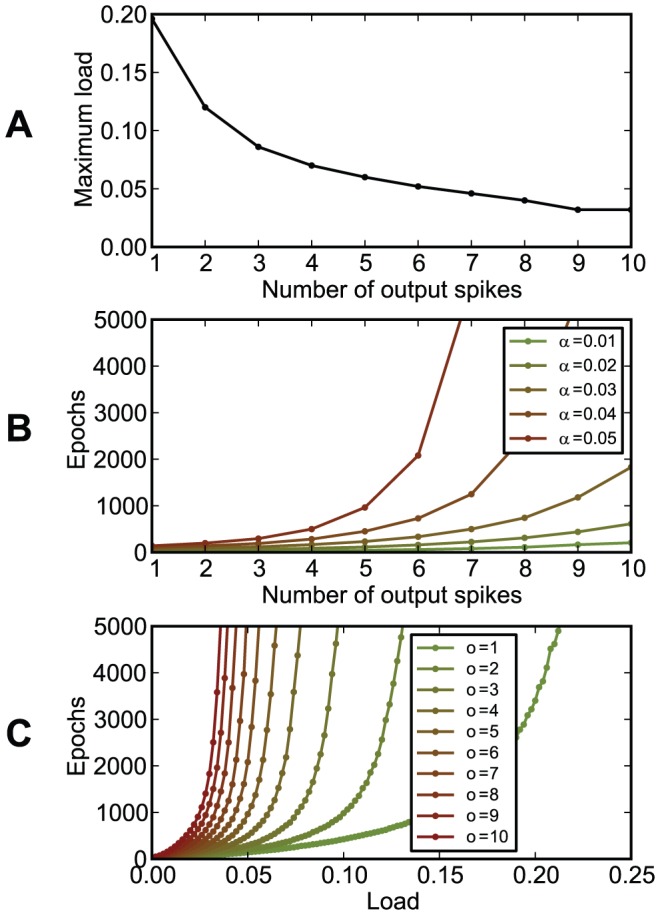

Figure 11. The dependence of chronotron performance on the number of output spikes per trial.

The neuron had to learn to have the same output for all inputs, using E-learning. The output consisted of  output spikes, placed at

output spikes, placed at  , for

, for  . (A) The maximum load (the capacity

. (A) The maximum load (the capacity  ) as a function of the number of output spikes

) as a function of the number of output spikes  . (B) The number of learning epochs required for correct learning as a function of the number of output spikes

. (B) The number of learning epochs required for correct learning as a function of the number of output spikes  , for various loads

, for various loads  . (C) The number of learning epochs required for correct learning as a function of load, for various numbers of output spikes

. (C) The number of learning epochs required for correct learning as a function of load, for various numbers of output spikes  . Best performance was achieved for a single output spike per trial.

. Best performance was achieved for a single output spike per trial.

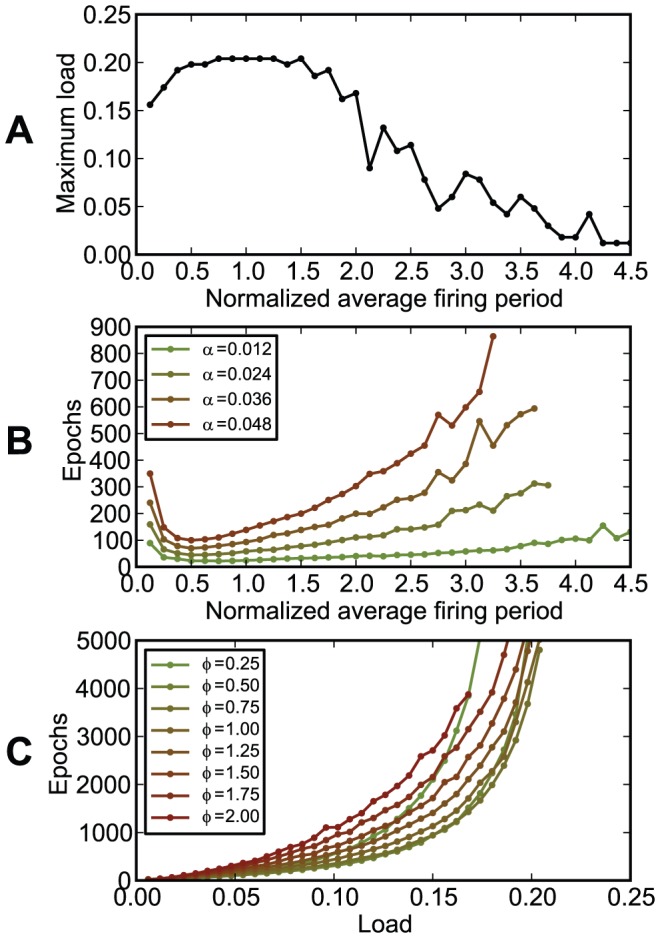

Figure 12. The dependence of chronotron performance on the firing rate of the inputs.

The inputs were generated using a Gamma process having a normalized average period (the average period over the trial length)  (Methods). (A) The maximum load (the capacity

(Methods). (A) The maximum load (the capacity  ) as a function of the normalized average period

) as a function of the normalized average period  . (B) The number of learning epochs required for correct learning as a function of the normalized average period

. (B) The number of learning epochs required for correct learning as a function of the normalized average period  , for various loads

, for various loads  . (C) The number of learning epochs required for correct learning as a function of load

. (C) The number of learning epochs required for correct learning as a function of load  , for various values of the normalized average period

, for various values of the normalized average period  . Best capacity was achieved for values of

. Best capacity was achieved for values of  around 1, i.e. a single input spike per trial, for each synapse, on average, while fastest learning was achieved for

around 1, i.e. a single input spike per trial, for each synapse, on average, while fastest learning was achieved for  around 0.5.

around 0.5.

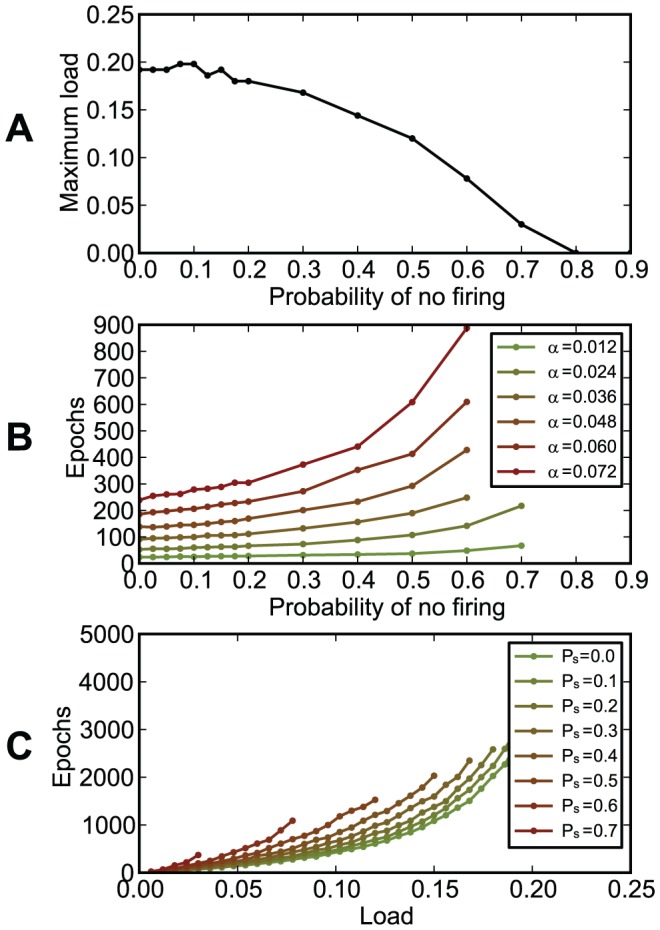

Figure 13. The dependence of chronotron performance on the probability that input synapses receive no spikes.

that input synapses receive no spikes.

At the beginning of the experiment, each input spike train was set up as either one spike generated at a random timing or, with a probability  , of no spikes. Input patterns did not change during learning. (A) The maximum load (the capacity

, of no spikes. Input patterns did not change during learning. (A) The maximum load (the capacity  ) as a function of the no firing probability

) as a function of the no firing probability  . (B) The number of learning epochs required for correct learning as a function of the no firing probability

. (B) The number of learning epochs required for correct learning as a function of the no firing probability  , for various loads

, for various loads  . (C) The number of learning epochs required for correct learning as a function of load

. (C) The number of learning epochs required for correct learning as a function of load  , for various values of the no firing probability

, for various values of the no firing probability  . Best capacity was achieved for values of

. Best capacity was achieved for values of  less or equal to 0.1, while fastest learning was achieved when there was no input with no spikes. For large

less or equal to 0.1, while fastest learning was achieved when there was no input with no spikes. For large  there are not enough input spikes to drive the neuron and, as expected, performance drops.

there are not enough input spikes to drive the neuron and, as expected, performance drops.

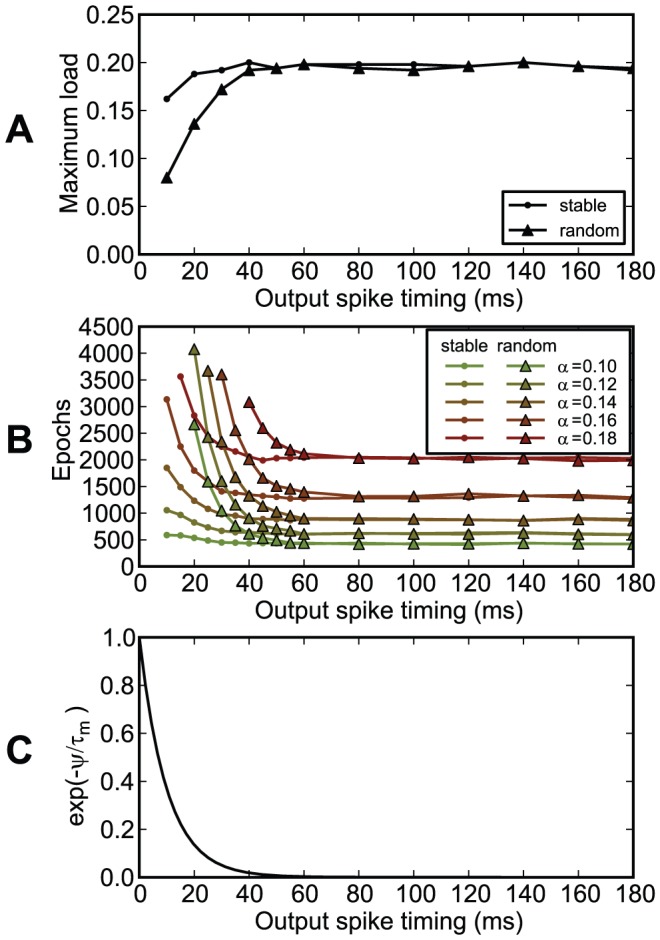

Chronotron's efficacy was not affected by the initial state of their membrane potential at the beginning of trials if target spike times were set at a delay relative to the beginning of the trial of more than about 4 times the time constant of the membrane potential's exponential decay (Fig. 14).

Figure 14. The dependence of chronotron performance on the timing of the output spike and on the initial state of the membrane potential.

The neuron had to learn to have the same output for all inputs. The output was one spike at a given timing  . At the beginning of each trial, the membrane potential

. At the beginning of each trial, the membrane potential  was either set to

was either set to  , as in the other experiments (stable initial state), or was generated randomly, with a uniform distribution, between 0 and

, as in the other experiments (stable initial state), or was generated randomly, with a uniform distribution, between 0 and  (random initial state). (A) The maximum load (the capacity

(random initial state). (A) The maximum load (the capacity  ) as a function of the timing of the output spike

) as a function of the timing of the output spike  . (B) The number of learning epochs required for correct learning as a function of the timing of the output spike

. (B) The number of learning epochs required for correct learning as a function of the timing of the output spike  , for various loads

, for various loads  . (C)

. (C)  , as a reference for comparing the effect on learning of the initial conditions, as a function of the timing of the output spike

, as a reference for comparing the effect on learning of the initial conditions, as a function of the timing of the output spike  . For this setup, the capacity and the learning time for reaching the correct output, for stable initial state, does not depend on

. For this setup, the capacity and the learning time for reaching the correct output, for stable initial state, does not depend on  if it is larger than about 40 ms. Because of the exponential decay of the membrane potential of the chronotron with a time constant

if it is larger than about 40 ms. Because of the exponential decay of the membrane potential of the chronotron with a time constant  , the effect of the random initial state of the membrane potential on the chronotron's performance, as a function of the output spike timing

, the effect of the random initial state of the membrane potential on the chronotron's performance, as a function of the output spike timing  , becomes insignificant at about

, becomes insignificant at about  , similarly to

, similarly to  , as

, as  .

.

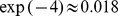

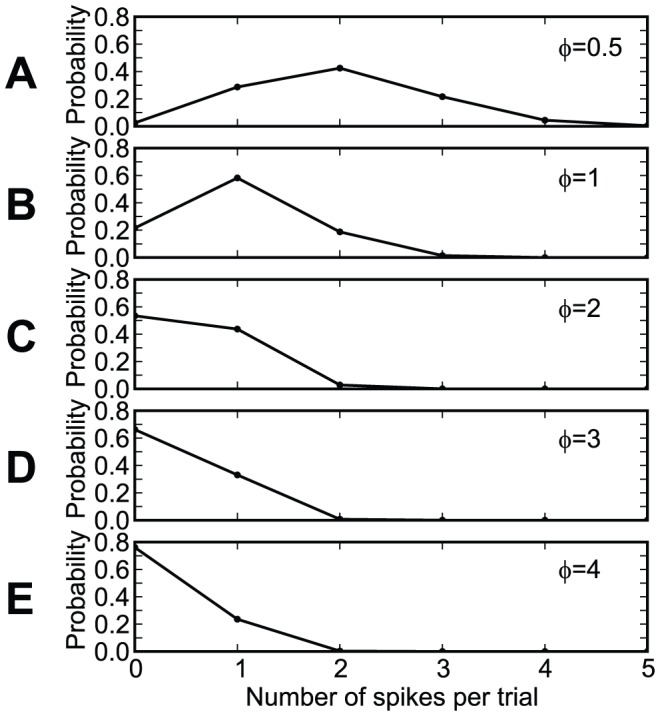

In our setup, the chronotron had an optimal memory capacity if the trial length (the oscillation period) was about 8–10 times larger than the membrane time constant (Fig. 15). Since typical neurons in the brain have membrane time constants between 8 and 100 ms [35]–[40], this would correspond to oscillation periods between 64 and 1000 ms (frequencies between 1 and about 16 Hz), an interval that covers the theta rhythm.

Figure 15. The dependence of chronotron performance on trial length .

.

(A) The maximum load (the capacity  ) as a function of the trial length

) as a function of the trial length  . (B) The number of learning epochs required for correct learning as a function of the trial length

. (B) The number of learning epochs required for correct learning as a function of the trial length  , for various loads

, for various loads  . (C) The number of learning epochs required for correct learning as a function of load

. (C) The number of learning epochs required for correct learning as a function of load  , for various values of the trial length

, for various values of the trial length  . Best performance was achieved for

. Best performance was achieved for  ms (the relevant parameter is

ms (the relevant parameter is  ,

,  ).

).

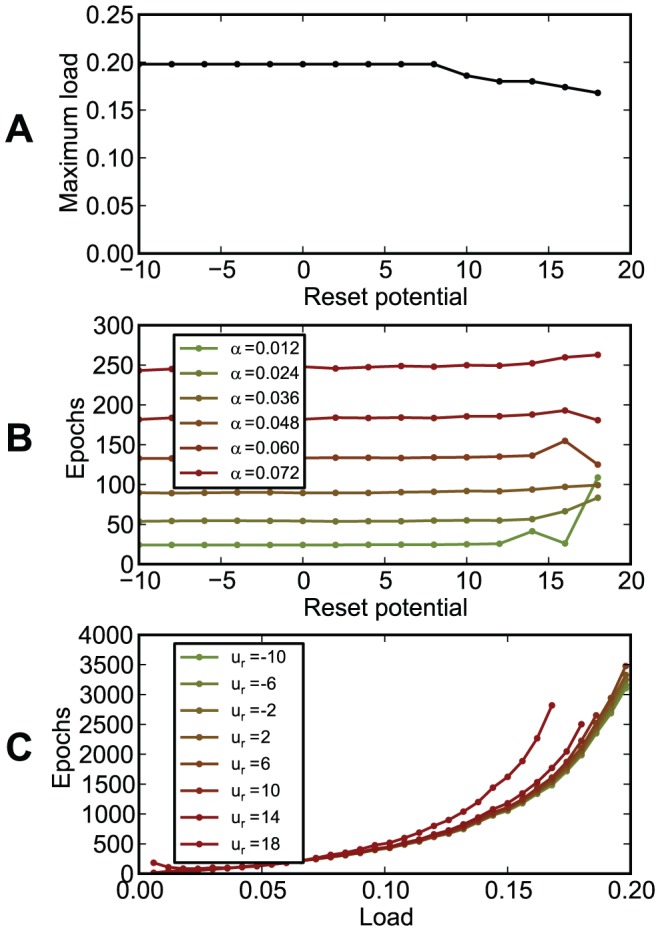

The chronotron's performance did not depend on the reset potential if it was lower than half of the firing threshold  and declined slowly for higher reset potentials, which are, however, artificially high (Fig. 16).

and declined slowly for higher reset potentials, which are, however, artificially high (Fig. 16).

Figure 16. The dependence of chronotron performance on the reset potential .

.

(A) The maximum load (the capacity  ) as a function of the reset potential

) as a function of the reset potential  . (B) The number of learning epochs required for correct learning as a function of the reset potential

. (B) The number of learning epochs required for correct learning as a function of the reset potential  , for various loads

, for various loads  . (C) The number of learning epochs required for correct learning as a function of load

. (C) The number of learning epochs required for correct learning as a function of load  , for various values of the reset potential

, for various values of the reset potential  . The performance does not depend on the reset potential if it is lower than half of the firing threshold,

. The performance does not depend on the reset potential if it is lower than half of the firing threshold,  mV.

mV.

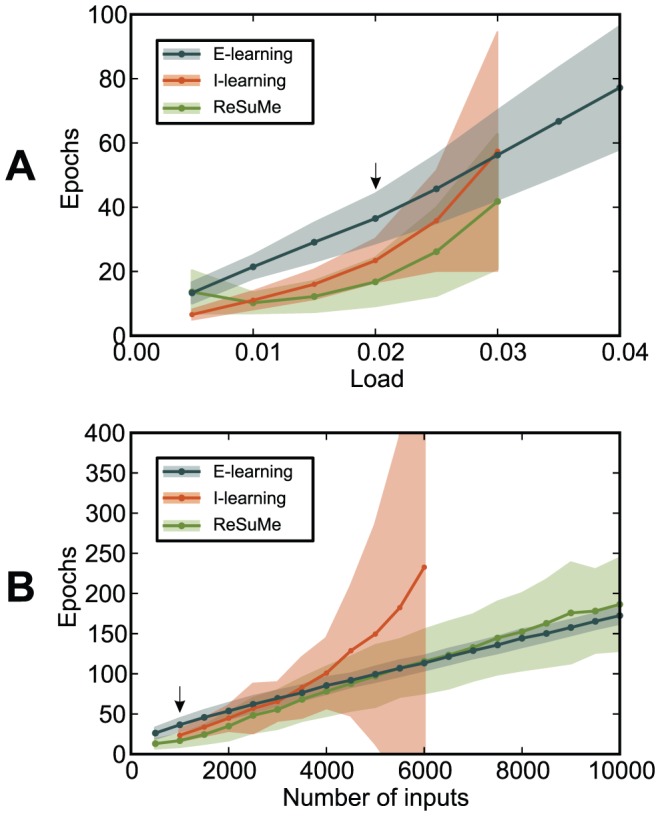

In Fig. 17, parameters were optimized to lead to the minimum average number of learning epochs needed for correct learning for a setup with a relatively low load,  . For the setup that was optimized and for the optimal parameters, ReSuMe had the fastest learning (16.75

. For the setup that was optimized and for the optimal parameters, ReSuMe had the fastest learning (16.75 7.43 epochs), followed by I-learning (23.39

7.43 epochs), followed by I-learning (23.39 6.87 epochs) and E-learning (36.48

6.87 epochs) and E-learning (36.48 7.61 epochs). However, the advantages of the first two learning rules over E-learning disappeared for setups with higher loads or higher number of input synapses than the optimized setup, when the other parameters were kept the same.

7.61 epochs). However, the advantages of the first two learning rules over E-learning disappeared for setups with higher loads or higher number of input synapses than the optimized setup, when the other parameters were kept the same.

Figure 17. The performance of learning rules when their parameters were optimized for fast learning for ,

,  (

( ).

).

(A) The number of learning epochs required for correct learning as a function of the load  , for

, for  . Correct learning was not achieved for I-learning and ReSuMe for

. Correct learning was not achieved for I-learning and ReSuMe for  larger than 0.03. (B) The number of learning epochs required for correct learning as a function of the number of input synapses

larger than 0.03. (B) The number of learning epochs required for correct learning as a function of the number of input synapses  . Correct learning was not achieved for I-learning for

. Correct learning was not achieved for I-learning for  nor

nor  larger than 6,000. Averages and standard deviations over 500 realizations. The arrows indicate the conditions for which the parameters were optimized.

larger than 6,000. Averages and standard deviations over 500 realizations. The arrows indicate the conditions for which the parameters were optimized.

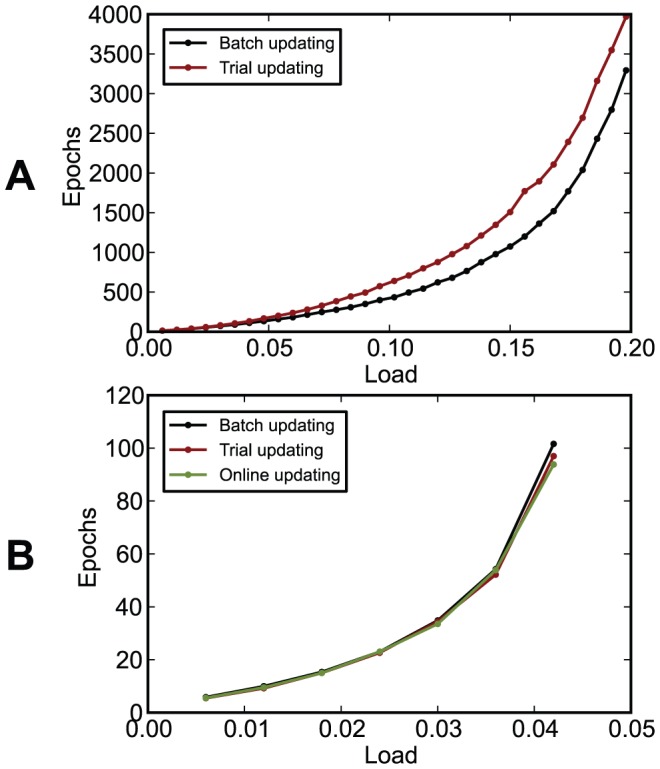

In our simulations, the synaptic changes defined by the learning rules were accumulated and were applied to the synapses at the end of each batch consisting of  trials (presentations of the

trials (presentations of the  input patterns) [41], [42]. Simulations of E-learning where synaptic changes were applied at the end of each trial required a slightly higher number of epochs for correct learning, but led to the same memory capacity (Fig. 18 A). Simulations of I-learning where synaptic changes were applied either at the end of each trial or online, triggered by postsynaptic spikes (as in Fig. 5) did not lead to results significantly different than simulations with batch updating of the synapses (Fig. 18 B).

input patterns) [41], [42]. Simulations of E-learning where synaptic changes were applied at the end of each trial required a slightly higher number of epochs for correct learning, but led to the same memory capacity (Fig. 18 A). Simulations of I-learning where synaptic changes were applied either at the end of each trial or online, triggered by postsynaptic spikes (as in Fig. 5) did not lead to results significantly different than simulations with batch updating of the synapses (Fig. 18 B).

Figure 18. The dependence of chronotron performance on when synapses are updated during simulations.

The number of learning epochs required for correct learning as a function of the load  , for various methods of applying the synaptic changes according to the learning rules: batch updating (synapses are changed at the end of each batch of

, for various methods of applying the synaptic changes according to the learning rules: batch updating (synapses are changed at the end of each batch of  trials, each one corresponding to one of the input patterns); trial updating (synapses are changed at the end of each trial); online updating (synapses are changed after each target or actual postsynaptic spike — for I-learning only). (A) E-learning. (B) I-learning.

trials, each one corresponding to one of the input patterns); trial updating (synapses are changed at the end of each trial); online updating (synapses are changed after each target or actual postsynaptic spike — for I-learning only). (A) E-learning. (B) I-learning.

Comparison to other results

The first supervised learning method for spiking neurons was SpikeProp [43], [44], a method inspired by the backpropagation algorithm used for training classical neural networks. SpikeProp works by minimizing the difference between the timing of an output spike and the desired timing. The first versions of the learning method required a feedforward network and that each neuron in the network fires only once during a trial. Later versions [45]–[51] extended the method for including a momentum term; adapting the synaptic delays, time constants and neurons' thresholds during learning; for networks where the input (but not the output) neurons fire more than once per trial; for recurrent networks; and for improving learning speed under certain assumptions. However, the method is designed for adjusting just the timing of a single (first) spike per output neuron and assumes that the synapses are such that each output neuron fires at least one spike for the given inputs. The method is not suitable for adjusting the number of output spikes nor for training a neuron to fire given output spike patterns that extend beyond the first spike.

Carnell and Richardson [52] devised a method for modifying the synaptic weights such as the weighted sum of the presynaptic spike trains (in an algebraic representation) converge to a desired one. If the neuron model is such that the firing of the postsynaptic neuron is close to this weighted sum, then the method allows the supervised learning of a target output spike train. The method is quite original and general, but ignores the details of the dynamics of the postsynaptic potential and of the neuronal membrane.

Pfister et al. [53] have derived supervised learning rules for probabilistic neurons. The learning method is based on gradient ascent in the space of synaptic efficacies, which maximizes the likelihood of having a trained neuron firing at the desired moments. Because of the probabilistic framework, the learning rules do not involve the actual timing of the output spikes, but the probability of having a particular output spike train given a particular input spike train. Calculating such a probability while taking into account the reset of the membrane potential after the spikes of the output neuron is computationally challenging and not biologically plausible.

Legenstein and colleagues [54], [55] have studied a supervised, biologically-inspired learning method for spiking neurons that works by clamping neurons to the desired output and applying spike timing–dependent plasticity (STDP) to the afferent synapses of the trained neurons. Under certain conditions, after learning, the neurons yield the desired output even after the teaching signal is removed. The effectiveness of this learning method has been proved analytically only for Poisson input spike trains, and there are worst case scenarios where the method fails, but simulations have shown that the method is effective in more general conditions. The method works only when synapses have hard bounds, by driving synaptic efficacies toward these bounds. Thus, the output patterns that this method can learn are restricted to those that can be generated by synapses that have either minimum (zero) or maximum efficacy. A similar rule can be used for supervised learning of patterns by networks [56], but not by single neurons.

The tempotron [16] implements supervised learning for a particular task where an output neuron either fires one spike or does not fire during a predetermined time interval, when presented with an input spike pattern that encodes information in the precise spike timings. The approach assumes that after the neuron emits a spike in response to a input pattern all other incoming spikes have no effect at all on the neuron (are shunted), which is artificial. The timing of the output spike cannot be controlled with this method, and thus the output of a tempotron cannot be used as an information-carrying input for another tempotron. The tempotron has a binary response and therefore its output cannot distinguish between more than two input categories. Although it is claimed that it is biologically plausible, the tempotron learning rule requires information that is nonlocal in time, needing to monitor the maximum of the output, and information that is not available to the neuron, such as the maximum of the membrane potential that would have been reached if the neuron would have not fired. We have shown that the tempotron is equivalent to a particularization of the ReSuMe learning rule [17]. A learning rule by Urbanczik and Senn [18] improves the original tempotron learning rule but is still focused on the artificial tempotron setup.

Barak and Tsodyks [57] have developed learning rules that increase the variance of the input current evoked by a set of learned patterns relative to that obtained from random background patterns. The trained neuron then has a larger firing rate when presented with one of the learned patterns, as compared to when presented with a typical background pattern. The learning rules are quite complex, with low biological plausibility. They allow a neuron to recognize input patterns of precisely timed spikes, but the timing of the output spikes is not controlled by these rules. The memory capacity computed for these learning rules, for just the recognition of patterns, is an order of magnitude smaller than the maximum memory capacity we obtained for mapping memorized patterns to specific outputs (Methods). Other complex setups for recognizing spike patterns were also developed [58]–[61].

A few other supervised learning methods for spiking neurons or neural networks also exist but work only for some specific cases, such as neurons receiving oscillatory inhibition [62], population-temporal coding [63], theta neurons [64], [65], neurons with very large membrane decay time constants and constant interspike intervals for the inputs [66], networks with time to first spike coding for classification through plasticity of synaptic delays [67], neurons having two presynaptic and one postsynaptic spikes per learning cycle [68], specific configurations, composed of several modules, of the trained network [69].

ReSuMe [19], [20], [70]–[74] is a general supervised learning method for spiking neurons that allows learning of arbitrary output spike trains. It is the only existing learning rule that is comparable to the ones introduced here. This learning rule has been conjectured by analogy to the Widrow-Hoff rule for analog neurons. Simulations have shown that not all the terms of the conjectured learning rule are needed for learning [74]. To date, it has been shown analytically that ReSuMe will converge to an optimal solution only for the case of one input spike and one target output spike [70]. We have shown here (Fig. 9) that E-learning leads to a much higher memory capacity than ReSuMe. The higher performance of E-learning can be attributed to the analytical derivation of the E-learning rule, although the derivation included approximations that preclude analytical guarantees on the optimality of E-learning.

I-learning is quite similar to ReSuMe. As in ReSuMe, in I-learning actual and target postsynaptic spikes lead to synaptic changes of opposite signs, such that when the actual spike train corresponds to the target one the terms cancel out and synapses become stable, and thus the basic mechanism is identical. In contrast to the typical form of ReSuMe, where synaptic changes depend exponentially on pairs of pre- and postsynaptic spikes, as in some models of spike-timing-dependent plasticity, in I-learning synaptic changes depend on the value of the synaptic current. In the case that synaptic currents are exponentials, I-learning would be identical to a form of ReSuMe where the non-Hebbian terms are set to zero. Variants of ReSuMe where the exponentials have been replaced by other types of functions, including differences between two exponentials (double-exponentials) like in our model of I-learning, have been previously studied [74] but these double-exponentials have not been previously associated to the synaptic currents. ReSuMe is typically presented as using exponential functions and non-zero non-Hebbian terms [20]; the lack of these in I-learning makes it distinct from ReSuMe. Because the rising part of the double-exponentials is deleterious to learning [74] and because I-learning does not allow synapses to change sign, unlike ReSuMe, I-learning has, in most cases, a lower performance than ReSuMe (Figs. 9, 17). However, unlike in the experiment with double-exponentials in [74], where, additionally to the terms that we used in I-learning some anti-Hebbian terms have been used and a lack of convergence has been observed, in our experiments I-learning converged well to the target output (Fig. 6, 7). Real synaptic currents do involve a non-zero rising time and thus using double-exponentials in modeling currents is biologically relevant.

For a review of supervised learning methods for spiking neural networks, see [75].

Discussion

We have shown that, through appropriate learning methods, spiking neurons are able to process and memorize information that is encoded in the precise timing of spikes. We presented two new spike-timing-based learning rules, E-learning and I-learning, which allow neurons to fire specific spike trains in response to specific input patterns of spike timings, by modifying accordingly their synaptic efficacies. E-learning leads to high memory capacity, while I-learning is more biologically plausible.

There obviously are input-output mappings that are mechanistically impossible to be performed by a spiking neuron. For example, when there is no input, the neuron obviously cannot fire. A sufficient number of input spikes that arrive uncorrelated on each of its synapses leads to a wide range of outputs that the neuron is able to map to these inputs, by adjusting the synaptic efficacies. But if the neuron has to perform several different input-output mappings with the same set of synaptic efficacies, the various mappings constrain each other through the synaptic efficacies. These constraints lead to the mechanistical impossibility that the neuron performs new input-output mappings beyond the current ones, and thus to a finite memory capacity of the neuron. We computed lower bounds of the memory capacity of a spiking neuron with temporal coding of information and studied how this depends on various parameters of the setup.

The chronotron can model situations where information is coded in the time of the first spike relative to the onset of salient stimuli [2], or situations where information is coded in the phase of spiking relative to a background oscillation. Some of the results presented here underline the role of oscillations for temporal information processing. First, oscillations segment time into frames (periods), offering a reference for temporal encoding of information in spike latencies (phases) [76]. Second, in the parts of the cycles where neurons are globally inhibited or global excitation is low, oscillations ensure that neurons are reset such that they are able to process independently the inputs corresponding to different frames (periods). If this reset is such that it allows the chronotron to get into the resting state or another baseline state (the chronotron is inhibited or does not receive significant input for a duration of about 4–6 times the membrane time constant or more), then the absolute latencies, relative to the oscillation period, of the input spikes do not matter for the chronotron, but just the relative timings of spikes in the input pattern. The output spikes of the chronotron would then encode information in their relative timings with respect to the input spikes. The relevance of oscillations to temporal coding is consistent to the results of Havenith et al. [8] where the information carried by neurons in the visual cortex through their relative firing times was found to increase considerably with the oscillation strength. It has also been shown that the hippocampal theta rhythm is necessary for learning by rats of the Morris water maze [77] and that it enhances learning in eyeblink classical conditioning in rabbits [78], [79]. Oscillations also enhance the temporal precision of action potentials [80]. Although the parameters of most of our simulations correspond to a theta rhythm, the simulations' results remain the same when all temporal parameters are rescaled, and thus the results are also relevant for neurons that are subject to a gamma rhythm or other oscillations.

In the brain, when the spike phase encodes information relative to a background oscillation, the neurons fire no more than one spike per cycle in some, but not all, experiments [4], [6], [8]–[10], [14]. Our results showed that firing one spike per cycle is optimal for processing and memorization of phase-of-firing temporally encoded information by spiking neurons. In many cases, neurons in the brain skip oscillation cycles, which implies that the neurons that skip cycles do not participate in all input patterns received by postsynaptic neurons. This means that postsynaptic chronotrons will have an effective number of inputs lower than the real one, which would reduce the memory capacity as compared to the case when all input neurons fire one spike per cycle. However, this does not preclude the possibility that chronotrons process and memorize information in oscillatory networks where neurons skip cycles or where neurons fire more than one spike per cycle.

I-learning implies that synaptic changes are proportional to the corresponding synaptic currents, which are quantities that are locally available to the synapse. Postsynaptic spikes lead to synaptic depression similar to anti-Hebbian spike timing-dependent plasticity (STDP) [81]–[89], while the timings of target postsynaptic spikes trigger potentiation. The depression and potentiation should balance each other when actual spikes occur at the target timings. The target timings could be indicated by spikes coming from other, teacher neurons, through special teaching synapses [90]. The firing of these teacher neurons should lead to heterosynaptic associative changes [91]–[93] according to the I-learning rule and should not have a significant impact on the trained neuron's potential [90]. The potentiation generated through such a mechanism should be balanced by anti-Hebbian STDP when the trained neuron reproduces the firing of the teacher neuron. In this case, the trained neuron's firing should then become increasingly correlated to the one of the teacher neuron, eventually mimicking its firing with a lag corresponding to the delay of the arrival of the teaching spikes. If the trained neuron learns from several teacher neurons, it should learn to fire when either one of the teacher neurons fires, acting thus as a kind of multiplexer. If the trained neuron does not need to reproduce the entire activity of teaching neurons, but just the one during salient events, teaching could be modulated by a neuromodulator. Neuromodulation of supervised learning could be similar to the control of induction of associative plasticity in Purkinje cells through targeted modulation of instructive climbing fiber synapses [94] or the neuromodulation of STDP [95]–[102]. Just as STDP [103]–[105] or its neuromodulation [106], [107] were predicted theoretically in advance of experimental verification, future experiments may find plasticity mechanisms similar to I-learning in the brain. For example, such mechanisms might be responsible for neural synchronization that modulates neural interactions [108], such as the synchronization of thalamic neurons needed for driving the cortex through weak synapses [109]; for encoding of information through synchronization [110]; or for the fine temporal tuning of excitation relative to inhibition that contributes to stimulus selectivity in rat somatosensory cortex [111].

Besides the particular supervised learning rules introduced here, other learning mechanisms, such as reinforcement learning, or developmental mechanisms selected through evolution, could lead to a chronotron-like processing of temporally-coded information.

Many computational applications of the presented learning rules are possible. For example, the supervised learning rules presented here could be used to train readout neurons of liquid state machines, for which perceptrons or spiking neurons with rate coding of outputs were previously used [23]. Using spiking neurons with temporal coding as readouts for liquid state machines makes their information representation compatible to the one of spiking neurons in the liquid, thus allowing the outputs of the readouts to be fed back into the liquid. Such feedback significantly improves the computing power of liquid state machines [112], allowing the development of better models of information processing in the brain. Another possible application is the decoding of neural signals. Less efficient learning rules than the ones presented here have been already applied successfully, and with better results than alternative methods, to train simulated spiking neural networks to extract arm movement direction and hand orientation intent from the timing of spike trains recorded from monkeys [113]. These are just a few examples of the potential uses of the learning rules presented here. These rules open the way to a plethora of future experiments that will explore how information encoded in the precise timing of spikes can be processed and memorized. This should lead to a better understanding of the information-processing features of neurons in the brain.

Methods

The neural model

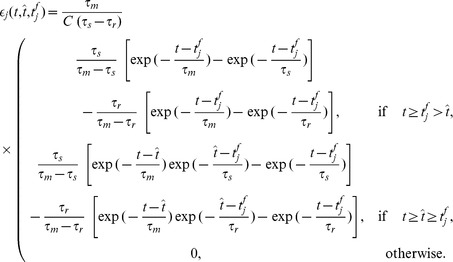

Our analysis uses the Spike Response Model (SRM) of spiking neurons, which reproduces with high accuracy the dynamics of the complex Hodgkin-Huxley neural model while being amenable to analytical treatment [21], [114]. For this model, the dynamics of the membrane potential  of a neuron as a function of the time

of a neuron as a function of the time  is given by

is given by

| (6) |

where  is a kernel that represents the refractoriness caused by the last spike of the neuron;

is a kernel that represents the refractoriness caused by the last spike of the neuron;  is the last time the neuron fired before

is the last time the neuron fired before  ; the first sum runs over all synapses

; the first sum runs over all synapses  afferent to the considered neuron;

afferent to the considered neuron;  is the synaptic efficacy of the synapse

is the synaptic efficacy of the synapse  ; the second sum runs over the set of the timings when spikes coming through synapse