Abstract

Objectives

The purpose of the current study was to examine the relation between speech intelligibility and prosody production in children who use cochlear implants.

Methods

The Beginner's Intelligibility Test (BIT) and Prosodic Utterance Production (PUP) task were administered to 15 children who use cochlear implants and 10 children with normal hearing. Adult listeners with normal hearing judged the intelligibility of the words in the BIT sentences, identified the PUP sentences as one of four grammatical or emotional moods (i.e., declarative, interrogative, happy, or sad), and rated the PUP sentences according to how well they thought the child conveyed the designated mood.

Results

Percent correct scores were higher for intelligibility than for prosody and higher for children with normal hearing than for children with cochlear implants. Declarative sentences were most readily identified and received the highest ratings by adult listeners; interrogative sentences were least readily identified and received the lowest ratings. Correlations between intelligibility and mood identification and rating scores were negative for every measure except declarative ratings, and none reached statistical significance.

Discussion

The findings suggest that the development of speech intelligibility progresses ahead of prosody in both children with cochlear implants and children with normal hearing; however, children with normal hearing still perform better than children with cochlear implants on measures of intelligibility and prosody even after accounting for hearing age. Problems with interrogative intonation may be related to more general restrictions on rising intonation, and the correlation results indicate that intelligibility and sentence intonation may be relatively dissociated at these ages.

Keywords: pediatric cochlear implantation, speech intelligibility, prosody, intonation

1. Introduction

Cochlear implants are primarily aids to sound perception, but in both adults and children, they can also aid in the production of spoken language. The speech and spoken language of children with cochlear implants have been examined at several structural levels, including the articulatory, the phonological, and the morphological. For communication, however, overall speech intelligibility is the gold standard for assessing the benefit of cochlear implantation for the production of speech, because it addresses directly the communicative function of language. Speech intelligibility involves the transmission and reception of linguistic information and meaning, or, as Kent, Weismer, Kent, and Rosenbek (1989) define it, “the degree to which the speaker’s intended message is recovered by the listener.” Speaking to the importance of measuring intelligibility, Subtelney (1977) proposes that “intelligibility is considered the most practical single index to apply in assessing competence in oral communication.”

Previous research in clinical and nonclinical populations has identified segmental and suprasegmental production factors that may be associated with overall speech intelligibility. An obvious potential factor is the articulation accuracy of consonants and vowels. De Bodt, Huici, and Van De Heyning (2002) report that articulation was the strongest contributor to intelligibility in a study of English-speaking dysarthria patients. However, although articulation may be a major factor for intelligibility, it is not equivalent to intelligibility. De Bodt et al. (2002) cite several other contributing factors, such as voice quality, nasality, and prosody, and Peterson and Marquardt (1981) note that “‘Articulation’ and ‘intelligibility’ are related, but they are not identical. If a speaker distorts the sound element but does so in a consistent manner, her speech may be easily intelligible because of the predictability of the errors” (p. 59). Weismer and Martin (1992) describe an extensive literature reporting moderate negative correlations between intelligibility and segmental errors in persons with hearing loss, including omissions of word-initial phonemes (Hudgins & Numbers, 1942; Levitt, Stromberg, Smith, & Gold, 1980); voicing and consonant cluster errors (Hudgins & Numbers, 1942); manner substitutions for consonants and substitution of non-English segments (Levitt et al., 1980); and vocalic errors (Smith, 1975). Suprasegmental and prosodic factors have also been implicated in the degree of speech intelligibility (Parkhurst & Levitt, 1978; Smith, 1975). Factors cited by Weismer and Martin (1992) that potentially affect the intelligibility of persons with hearing loss include rhythm (Hudgins & Numbers, 1942), segment and pause durations (Monsen, 1974), stress, fundamental frequency (Stevens, Nickerson, & Rollins, 1983), fundamental frequency contours (McGarr & Osberger, 1978), intonation, and voice quality. Thus, speech intelligibility may be affected not only by segmental characteristics but also by suprasegmental and prosodic characteristics.

Prosody is the melody and rhythm of spoken language. Operationally, prosody can be defined as “the suprasegmental features of speech that are conveyed by the parameters of fundamental frequency, intensity, and duration”; such suprasegmental features include stress, intonation, tone, and duration (Kent and Kim, 2008). How children acquire target-appropriate prosodic structure is important because it plays a role in many aspects of linguistic function, from lexical stress to grammatical structure to emotional affect; it is therefore important for the transmission of meaning and thus for intelligibility. Infants and children with normal hearing are sensitive to prosody in language (motherese: Fernald, 1985; foot structure: Jusczyk, Houston, & Newsome, 1999; Thiessen & Saffran, 2003; phrase boundaries: Hirsch-Pasek et al., 1987; meter: Mehler et al., 1988; Jusczyk et al., 1992). Typically developing children also exhibit cross-linguistic prosodic patterns in their speech productions. For example, 18- to 24-month-old children tend to omit unstressed syllables from their utterances (e.g., “banana” becomes “nana”). By the age of 2 to 3 years, children begin to master phrasal stress, boundary cues, and meter in their production of speech (e.g., Klein, 1984; Clark, Gelman, & Lane, 1985; Snow, 1994). Finally, by the age of 5 years, children are capable of reproducing intonation (Koike & Asp, 1981; Loeb & Allen, 1993).

Control over prosodic aspects of language such as stress and intonation can be problematic for children with hearing loss. In a study of intonation in children with hearing loss, O’Halpin (2001) cites various factors that may underlie problems with intonation: respiratory problems resulting in fewer syllables per breath unit (Forner and Hixon, 1977; Osberger and McGarr, 1982); problems coordinating respiratory and laryngeal muscles resulting in atypical pausing and lack of gradual decline in fundamental frequency toward the ends of sentences (Osberger and McGarr, 1982); problems with phoneme shortening or lengthening resulting in lack of differentiation of stressed and unstressed syllables (La Bruna Murphy, McGarr, & Bell-Berti, 1990). Furthermore, because constructs such as stress correspond to multiple physical parameters (e.g., duration, intensity, fundamental frequency), implementation by children with hearing loss may not correspond exactly to ambient implementation, even if there is a perception of apparent correctness. O’Halpin cautions against remediation that targets only single parameters without consideration of remaining parameters, as this may change a child’s phonological system in undesired directions.

There have been no comprehensive investigations into prosody production in children with cochlear implants, although several studies have investigated specific areas of prosody production in this population. Lenden and Flipsen (2007) examined prosody and voice characteristics in 6 children aged 3–6 years with 1–3 years of cochlear implant experience using the Prosody–Voice Screening Profile (PVSP; Shriberg, Kwiatkowski, & Rasmussen, 1990), which was used to assess phrasing, rate, stress, loudness, pitch, laryngeal quality, and resonance quality in a sample of conversational speech. In their 6 children, Lenden and Flipsen found substantial problems with stress and resonance quality; some problems with rate, loudness, and laryngeal quality; and no consistent problems with phrasing or pitch. Also using conversational and narrative speech, Lyxell et al. (2009) examined prosody production in 34 children aged 5–13 years who had received cochlear implants between 1 and 10 years of age. Various prosodic characteristics were examined at both the word level (vowel length, tonal word accent, stress) and the phrase level (questions, stress). Results indicated that children with cochlear implants had lower scores on measures of prosody production at both the word level and the phrase level than the children with normal hearing.

Using a nonword repetition task, Dillon, Cleary, and colleagues (Carter, Dillon, & Pisoni, 2002; Cleary, Dillon, & Pisoni, 2002; Dillon, Burkholder, Cleary, & Pisoni, 2004) found that 7- to 9-year-old children with 3–7 years of cochlear implant experience in English-speaking environments produced segmental characteristics more poorly than suprasegmental characteristics, such as the number of syllables and the placement of primary stress; in these studies, 64% of imitations contained the correct number of syllables, and 61% contained correct primary stress placement. As a comparison, Gathercole, Willis, Baddeley, and Emslie (1994) reported that children with normal hearing typically perform near ceiling on such nonword repetition tasks. Similar measures of segmental correctness, number of syllables, and stress placement were applied by Ibertsson, Willstedt-Svensson, Radeborg, and Sahlén (2008) to 13 children aged 5–9 years with 1–6 years of cochlear implant use in a Swedish-speaking environment. Consistent with the findings for children in English-speaking environments, the children in the Swedish study showed higher accuracy for suprasegmental imitation than for segmental imitation. Also consistent with Carter et al. (2002), these children displayed reductions in segmental accuracy as syllable length of the nonwords increased.

Intonation in the speech of children with cochlear implants has been addressed in several studies. Peng, Tomblin, Spencer, and Hurtig (2007) examined the production of rising speech intonation associated with English interrogatives annually in 24 prelingually deafened children aged 9–18 years who had used cochlear implants for up to 10 years. Recordings of the sentence “Are you ready?” were submitted to perceptual judgments by adults with normal hearing in both an intonation identification task and a rating task, as well as to acoustic analyses of fundamental frequency, intensity, and duration. Results indicated that the children had not mastered the use of rising intonation, although performance increased up to approximately 7 years of device use. Acoustic results were consistent with the perceptual results. The overall finding of nonmastery reiterated results from earlier studies with children who used older implant technology and processing strategies (Osberger, Miyamoto et al., 1991; Osberger, Robbins et al., 1991; Tobey et al., 1991; Tobey & Hasenstab, 1991).

In fact, previous research has indicated that even young children with normal hearing have difficulty producing rising intonation (particularly sentence-final rising intonation) and by extension, with interrogative intonation. In Snow (1998), preschool-aged children with normal hearing produced spontaneous speech elicited in semistructured play activity and imitative productions of four types of intonation (“intonation groups”) defined along three parameters: tone (falling, rising), position (final, nonfinal), and type (declarative, interrogative, imperative, vocative). Acoustic analyses of both types of speech demonstrated that children experienced more difficulty producing final rising tones than final falling tones.

Loeb and Allen (1993), like Snow, studied intonation imitation in 3- and 5-year-old children. Children were asked to imitate declarative and interrogative sentences, as well as sentences spoken in a monotone fashion. Analysis of results indicated that differences in overall intonation imitative abilities between 3- and 5-year-old children were largely due to differences in the ability to imitate interrogative intonation. Koike and Asp (1981) described a three-part 25-item suprasegmental test eliciting imitative productions of the nonsense syllable /ma/ in various rhythmic and intonational patterns. Results from a group of 3-year-old children and a group of 5-year-old children indicated that the 5-year-old children produced both the falling and the rising intonation patterns correctly 100% of the time. The 3-year-old children, by contrast, produced the falling intonation pattern correctly 90% of the time but the rising pattern only 50% of the time.

How does intonation production compare in children with cochlear implants and children with normal hearing? Peng, Tomblin, & Turner (2008) examined intonation in 7- to 20-year-old children with 5–17 years of cochlear implant experience and children with normal hearing. Children were asked to produce sentences with declarative syntax using both declarative and interrogative intonation. These sentences were recorded and played for adult listeners with normal hearing who judged the productions in a two-alternative forced-choice (question vs. statement) task of accuracy and a contour appropriateness task using a rating scale (1–5). Results indicated that mean accuracy for the children with cochlear implants (74%) was significantly lower than for the children with normal hearing (97%). Similarly, the mean appropriateness score for the children with cochlear implants (3.06) was significantly lower than for children with normal hearing (4.52).

Snow and Ertmer (2009) studied the development of intonation in a longitudinal study of 6 children who received a cochlear implant between the ages of 10–36 months. Spontaneous speech samples were collected 2 months prior to implant activation and monthly for 6 months after activation. Acoustic measurements were made on the nuclei of individual syllables to determine accent range (the difference between fundamental frequency minimum and maximum) and nucleus duration. Results indicated developmental stages for intonation that were similar to those of children with normal hearing, but the children with cochlear implants showed an interaction between chronological age at device activation and duration of cochlear implant use. Specifically, after 2 months of implant use, older children evidenced a more advanced stage of intonation development than younger children. This result indicates that simple maturation plays a role in the development of intonation apart from the effects of auditory experience.

In addition to grammatical characteristics, prosody is also employed to convey emotional and affective information. Although no one has yet investigated production of vocal emotion by children with cochlear implants, House (1994), Pereira (2000), and Hopyan-Misakyan, Gordon, Dennis, and Papsin (2008) have shown difficulties in emotion perception tasks by adult users of cochlear implants.

Chin, Tsai, and Gao (2003) found that 2- to 7-year-old children with normal hearing produced more intelligible speech than 2- to 11-year-old children with 6 months to 5.5 years of cochlear implant experience, even when controlling for chronological age and hearing experience. Speech intelligibility of the children with cochlear implants may be affected by their relatively poorer production of suprasegmental and prosodic characteristics of speech as outlined in the above studies. Although a relatively large amount of research has examined relations between intelligibility and prosody in adults with hearing loss, little specific information is available for children who use cochlear implants. Thus, the current study examines the relation between intelligibility and prosody in the speech of prelingually deafened children who use cochlear implants. We administered two speech production tasks to measure speech intelligibility and prosody production in terms of emotional and grammatical mood in children with normal hearing and prelingually deafened children who have used a cochlear implant for at least 3 years.

2. Methods

2.1. Participants

Fifteen children from English-speaking homes who used cochlear implants (10 males and 5 females) served as participants. Hearing loss was identified at birth in 13 subjects and at 4 and 6 months of age in two subjects. The etiologies of hearing loss were unknown (8 subjects), genetic (3 subjects), auditory neuropathy (2 subjects), meningitis (1 subject), and ototoxicity (1 subject). The mean age at amplification fitting was 1.42 years (SD = 0.82, range = 0.50 to 2.29 years). The mean age at cochlear implant stimulation was 1.82 years (SD = 0.85, range = 0.69 to 3.37 years). The mean chronological age for the children with cochlear implants at time of testing was 8.31 years (SD = 1.33, range = 6.00 to 10.33 years). The mean hearing age for the children with cochlear implants was 6.58 years (SD = 1.57, range = 3.33 to 9.00 years). The children with implants were recruited through our database. They were selected because they had consecutive, upcoming follow-up appointments and were all at least 3 years of age at the time of testing. Pre-implantation scores on the cognitive domain of the Developmental Assessment of Young Children (DAYC; Voress & Maddox, 1998) were available for 13 of the 15 subjects with cochlear implants and revealed a mean standard score of 98.31 (SD = 12.33, range = 75 to 113). Although three of the participants received scores below the average standard score range (90–110), the children’s cognitive scores on the DAYC were not significantly correlated with any of the test measures used in the current study (r = −0.08 to 0.43). The participants with cochlear implants completed both the Beginner’s Intelligibility Test (BIT; Osberger, Robbins, Todd, & Riley, 1994) and the Prosodic Utterance Production (PUP; Bergeson & Chin, 2008) task.

Ten children with normal hearing (5 males and 5 females), as reported by their parents, also served as participants. The mean age for these children was 8.50 years (SD = 3.38, range = 4.00 to 14.08 years). They were recruited by means of an electronic mailing list located on the campus of Indiana University–Purdue University Indianapolis. All of the children were from English-speaking homes in central Indiana and did not have any known cognitive or other developmental delay. All of the children in the study were at least 3 years of age at the time of testing and completed both the BIT and PUP task.

Forty-four adults (17 males and 27 females) served as listener judges. The mean age for the listener judges was 25.93 years (SD = 6.93, range = 19 to 48 years). Listener judges were recruited by means of an electronic mailing list located on the Indiana University–Purdue University Indianapolis campus. The listener judges all spoke American English as a native language, had normal hearing and speech, and had little or no experience with the speech of the deaf.

2.2. Materials

The Beginner’s Intelligibility Test (BIT; Osberger et al., 1994) is a live-voice, sentence imitation test of speech intelligibility developed for use with children who use cochlear implants. The BIT consists of four separate lists, and each list consists of 10 single sentences. The words used in the test were familiar to children and were no more than two syllables long. Each sentence was syntactically simple and contained between two and six words. Each list of 10 sentences contained 37 to 40 words.

The Prosodic Utterance Production (PUP) task (Bergeson & Chin, 2008) is a sentence imitation test utilizing recorded voice stimuli. It consists of 60 single sentences, with each sentence conveying one of four grammatical or emotional moods. There were 15 declarative sentences, 15 interrogative sentences, 15 happy sentences, and 15 sad sentences. Additionally, the sentences were classified as being either semantically neutral or semantically non-neutral. Semantically non-neutral sentences consist of words that can evoke a particular emotion, as in (1), whereas semantically neutral sentences consist of words that do not, as in (2).

(1) My soccer team won the game. (happy) OR

I fell off the swing. (sad)

(2) The cup is on the table. OR

His coat was red.

There were 20 semantically neutral sentences and 40 semantically non-neutral sentences. Because this PUP task was part of a larger study examining prosody, the children recorded all 60 sentences. This also offered the advantage of giving the children the benefit of hearing the semantically neutral interrogative sentences (e.g., “His coat was red?”) in the context of the rising intonation of the semantically non-neutral interrogative sentences (e.g., “What is your favorite color?”). However, only the 20 semantically neutral sentences were used for the current study. The words used in this test were familiar to children, and each sentence was syntactically simple.

2.3. Procedures

Informed consent was obtained, and children and listener judges were paid for their participation. All study protocols, including recruitment of human subjects and collection of data, were approved by the relevant Institutional Review Board for Indiana University-Purdue University Indianapolis and Clarian Health Partners (now Indiana University Health).

During a session, both the BIT and PUP tasks were administered to the children. For each sentence on the BIT list used in a session, the examiner provided a live-voice model for the child, who was instructed to repeat the sentence. For each sentence of the PUP test, the examiner played recorded model sentences through a speaker attached to a computer for the child, who was instructed to repeat the sentence and convey the mood assigned to that sentence, as demonstrated by the model. The entire session was recorded using a Marantz PMD670 solid state recorder, which directly digitized the signal to a compact flash card.

Using CoolEdit 2000 (Syntrillium Software Corporation; Phoenix, AZ), the session recording was edited to isolate the child’s production of each sentence by removing all other material, such as the examiner’s prompt and extraneous noises. A separate file was created for each of the 10 BIT sentences and for each of the 20 sentences of the PUP test.

Stimulus files for a listening session using the BIT sentences were created by combining the 10 sentences with a set of prerecorded listener prompts and silent periods. Stimulus files for a listening session using the PUP sentences were created by combining the 20 sentences with a set of prerecorded listener prompts and silent periods. For the rating (RT) task, the PUP sentences in the listener file were grouped by mood (e.g., 5 declarative sentences, followed by 5 interrogative sentences, then 5 happy sentences, and finally 5 sad sentences). For the identification (ID) task, the PUP sentences in the listener file were organized in a set, random order. The schema for a sentence presentation was the same for all types of listener files, as in (3).

(3) Listener prompt: Number X, ready

Child: [Sentence X]

Silence: 2s

Listener prompt: Number X again, ready

Child: [Sentence X]

Silence: 4s

Each sentence X was presented twice, and the final 4s silent period was then followed by a prompt for Sentence X+1, and so on. After each listener file was created, the volume of the file was equalized using the program Adobe Soundbooth CS5 (Adobe Systems, Inc.; San Jose, California) so that the intensity of the child’s sentences matched the intensity of the listener prompts.

Each listener file was played to a panel of three adult listener judges. This part of the experiment was conducted in a sound-attenuated booth. A speaker was located on top of a table within the sound booth. A Macintosh computer running iTunes software, which was used to play the stimulus files for the listener judges, was located on a table inside the sound booth. The computer screen was facing the sound booth window, and the experimenter could view the computer screen from the outside of the sound booth. The computer allowed the experimenter to start and stop the sound stimuli.

Each panel heard up to four BIT lists, and no listener judge heard a BIT list more than once or a child producing more than one list. Each panel also heard stimuli from the PUP lists, but no listener judge heard a child producing more than one list. For the BIT listener files, listener judges were instructed to transcribe on paper what they heard the child say using traditional orthography and were also instructed to make their best guess if they were not sure about a word or words. For the ID task using the PUP sentences, the listener judges were instructed to identify each sentence using one of four moods (declarative, interrogative, happy, or sad) and to make their best guess if they were unsure. The declarative sentence was described as a “neutral sentence” and the interrogative sentence was described as a “question” to the listener judges. For the RT task using the PUP sentences, the listener judges were instructed to rate the sentences according to how well they thought the child conveyed the mood assigned to that sentence, on a scale of 1 to 7 (1 = worst, 7 = best).

For each of the three BIT transcriptions, the percent of correctly transcribed words was calculated. A BIT score was then derived as the mean percent correctly transcribed word score, calculated across the three listeners. For the ID task, the number of moods identified correctly was calculated for each of the three listeners. An ID score was then derived as the total number correct, calculated across the three listeners (number correct out of 60). For the RT task, the mean rating for each mood was calculated for each listener judge. Then, an RT score for each mood was determined by calculating the mean rating across the three listeners. Finally, a total RT score was calculated by taking the sum of the mean ratings from the three listener judges for each sentence (maximum total rating score of 140).
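
The scoring rules described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the data layout, and all example values are hypothetical and are not taken from the study's materials or data.

```python
from statistics import mean

def bit_score(words_correct, words_in_list):
    """Mean percent of words correctly transcribed, across listeners."""
    return mean(100.0 * c / words_in_list for c in words_correct)

def id_score(correct_per_listener):
    """Total moods identified correctly, summed across listeners."""
    return sum(correct_per_listener)

def rt_scores(ratings):
    """ratings: one dict per listener, mapping mood -> list of 1-7 ratings.

    Returns (mean rating per mood across listeners, total RT score), where
    the total sums, over sentences, the mean rating across listeners.
    """
    moods = list(ratings[0])
    per_mood = {m: mean(mean(r[m]) for r in ratings) for m in moods}
    total = sum(
        mean(r[m][i] for r in ratings)
        for m in moods
        for i in range(len(ratings[0][m]))
    )
    return per_mood, total

# Hypothetical data: three listeners, one 38-word BIT list, 20 PUP sentences.
print(bit_score([30, 32, 34], 38))
print(id_score([15, 16, 17]), "of 60")
moods = ("declarative", "interrogative", "happy", "sad")
ceiling = [{m: [7] * 5 for m in moods}] * 3
print(rt_scores(ceiling)[1])  # ceiling case: 20 sentences x 7 = 140
```

The ceiling case shows where the stated maxima come from: 20 sentences heard by 3 listeners give 60 identification judgments, and 20 sentences rated at most 7 give a maximum total rating of 140.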

3. Results

3.1. Adult listeners’ identification of intelligibility and prosodic mood

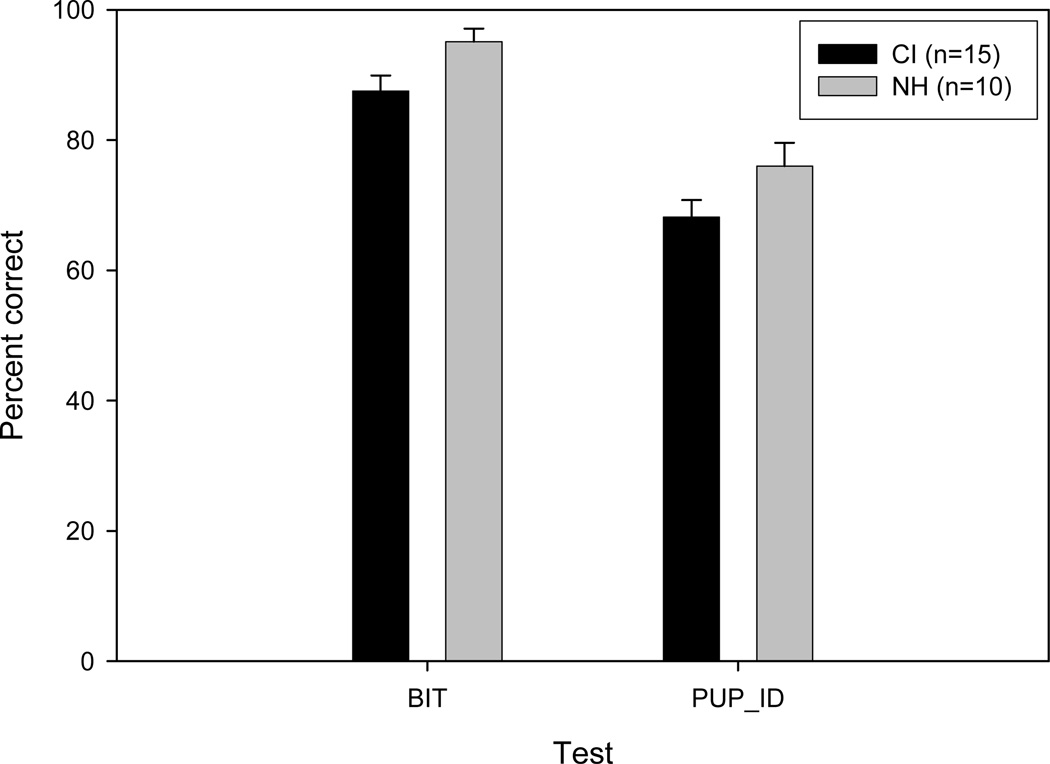

Figure 1 shows the intelligibility (BIT) and prosodic mood (PUP_ID) percent correct scores for the children with cochlear implants (CI) and children with normal hearing (NH). We ran a mixed-effects Analysis of Covariance with Test (BIT, PUP_ID) as the within-subjects variable, Hearing Status (CI, NH) as the between-subjects variable, and Hearing Age (duration of implant use for children with cochlear implants, chronological age for children with normal hearing) as the continuous covariate. (Note: we also completed all analyses using Chronological Age for both groups of children as the covariate. Because we found the same pattern of results, we include here only the results for Hearing Age.) We found significant main effects of Test (F(1, 22) = 8.09, p = .009, ηp² = .27), Hearing Status (F(1, 22) = 7.43, p = .012, ηp² = .25), and Hearing Age (F(1, 22) = 9.16, p = .006, ηp² = .29). Percent correct scores were higher for the BIT than for the PUP_ID for all children, and scores were generally higher for children with normal hearing than for children with cochlear implants. Finally, scores were better for children with more hearing experience. There were no significant interactions between any of these variables.

Figure 1.

Intelligibility (BIT) and prosodic mood identification (PUP_ID) scores
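
As a reading aid, the partial eta squared effect sizes reported for the main effects can be recovered from each F statistic and its degrees of freedom, using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below is not the authors' analysis code, and the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values for the three main effects reported above, each with df = (1, 22).
reported = [("Test", 8.09), ("Hearing Status", 7.43), ("Hearing Age", 9.16)]
for label, f_value in reported:
    print(f"{label}: {partial_eta_squared(f_value, 1, 22):.2f}")
```

Rounded to two decimals, the three results are .27, .25, and .29, which agree with the effect sizes given in the text.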

Table 1 shows the confusion matrix of identification errors adult listeners made across the four PUP_ID moods. Listeners were most accurate in identifying the Declarative sentences (84.4% correct). They were also reasonably accurate in identifying the Sad (80.7%) and Happy (70.7%) sentences, confusing them most often with Declarative sentences. Identification performance was quite poor for the Interrogative sentences (34.8%), with listeners confusing them with Declarative and even Happy sentences.

Table 1.

Adult listener identification errors across the four moods on the PUP_ID test

| Listener Answer | Correct: H | Correct: S | Correct: I | Correct: D |
|---|---|---|---|---|
| H | 70.7 | 1.1 | 14.8 | 6.7 |
| S | 1.9 | 80.7 | 6.7 | 7.4 |
| I | 4.8 | 3.7 | 34.8 | 1.5 |
| D | 22.6 | 14.4 | 43.7 | 84.4 |

Note: H = Happy, S = Sad, I = Interrogative, D = Declarative
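
The confusion matrix can be checked and summarized with a short script. The values below are copied from Table 1 (rows are listener answers, columns are correct answers, in percent); the variable and function names are hypothetical.

```python
MOODS = ["H", "S", "I", "D"]

# TABLE_1[listener_answer][correct_answer] = percent of responses.
TABLE_1 = {
    "H": {"H": 70.7, "S": 1.1, "I": 14.8, "D": 6.7},
    "S": {"H": 1.9, "S": 80.7, "I": 6.7, "D": 7.4},
    "I": {"H": 4.8, "S": 3.7, "I": 34.8, "D": 1.5},
    "D": {"H": 22.6, "S": 14.4, "I": 43.7, "D": 84.4},
}

def responses_for(correct):
    """Distribution of listener answers for one intended mood."""
    return {answer: TABLE_1[answer][correct] for answer in MOODS}

def top_confusion(correct):
    """Most frequent wrong answer for one intended mood."""
    errors = {a: p for a, p in responses_for(correct).items() if a != correct}
    return max(errors, key=errors.get)

for mood in MOODS:
    column = responses_for(mood)
    print(mood, f"correct={column[mood]:.1f}%", f"top confusion={top_confusion(mood)}")
```

Each column sums to 100% within rounding. For intended Interrogative sentences, the Declarative response (43.7%) was more frequent than the correct Interrogative response (34.8%).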

3.2. Adult listeners’ ratings of prosodic mood

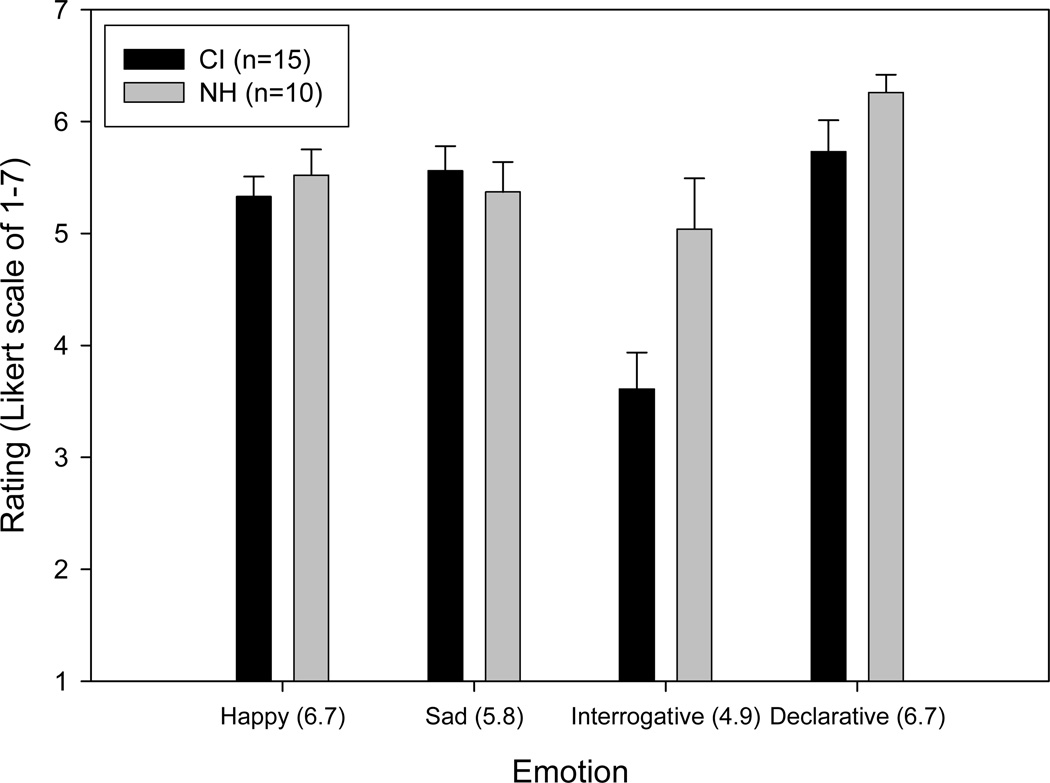

Figure 2 shows the rating scores (on a scale of 1–7) for the four mood categories (declarative, interrogative, happy, sad) on the PUP test across children with cochlear implants and children with normal hearing. We ran a mixed-effects Analysis of Covariance with Mood (declarative, interrogative, happy, sad) as the within-subjects variable, Hearing Status (CI, NH) as the between-subjects variable, and Hearing Age (duration of implant use for children with cochlear implants, chronological age for children with normal hearing) as the continuous covariate. We found that the main effect of Mood approached statistical significance, F(3, 66) = 2.40, p = .075, ηp² = .10. Children’s renditions of Declarative prosody received the highest ratings by the adult listeners, renditions of Interrogative prosody received the lowest ratings, and the Happy and Sad prosody renditions received intermediate ratings. We also found a statistically significant interaction between Mood and Hearing Status, F(3, 66) = 3.48, p = .021, ηp² = .14.

Figure 2.

Prosodic mood rating scores across the two groups of children, with the adult model’s rating scores in parentheses

We ran a series of follow-up independent samples t-tests to compare listeners’ ratings of the two groups of children across each of the four prosodic mood categories. The only significant difference between the two groups of children was for ratings of the Interrogative prosody, with normal-hearing children’s renditions rated as higher (M = 5.04, SD = 1.43) than renditions of children with implants (M = 3.61, SD = 1.26), t(23) = 2.63, p = .015, Cohen’s d = 1.06.
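
The Interrogative comparison can be approximated from the published summary statistics alone. The sketch below assumes a pooled-variance (Student) independent samples t-test, which is consistent with the reported 23 degrees of freedom for groups of 10 and 15 children; it is not the authors' analysis code, and the function name is hypothetical.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t for two independent groups, from means, SDs, and ns."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df

# Interrogative ratings: NH (n = 10) versus CI (n = 15), as reported above.
t, df = pooled_t(5.04, 1.43, 10, 3.61, 1.26, 15)
print(f"t({df}) = {t:.2f}")
```

Because the inputs are rounded to two decimals, the recomputed statistic matches the reported t(23) = 2.63 only approximately.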

We also ran a series of one-sample t-tests to determine whether listeners’ ratings of children’s renditions of the four prosodic mood categories differed from ratings of the adult model’s renditions. For Happy prosody, children’s productions received significantly lower ratings (NH: M = 5.52, SD = .73; CI: M = 5.33, SD = .69) than the model’s productions (6.70), NH: t(9) = 5.13, p = .001, Cohen’s d = 2.29; CI: t(14) = 7.67, p < .001, Cohen’s d = 2.81. For Sad prosody, there were no significant differences between children’s and the model’s (5.80) productions. For Interrogative prosody, only the implanted children’s productions received significantly lower ratings (M = 3.61, SD = 1.26) than the model’s productions (4.90), t(14) = 3.95, p = .001, Cohen’s d = 1.45. Finally, for Declarative prosody, children’s productions received significantly lower ratings (NH: M = 6.26, SD = .50; CI: M = 5.73, SD = 1.09) than the model’s productions (6.70), NH: t(9) = 2.79, p = .021, Cohen’s d = 1.24; CI: t(14) = 5.73, p = .004, Cohen’s d = 1.26.
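
A one-sample t statistic can likewise be approximated from the reported group mean, standard deviation, and sample size, with the adult model's rating as the reference value. This is a minimal sketch with a hypothetical function name, shown for the Happy prosody comparison; small differences from the published values are expected because the inputs are rounded.

```python
import math

def one_sample_t(group_mean, group_sd, n, reference):
    """t statistic for a group mean against a fixed reference value."""
    return (group_mean - reference) / (group_sd / math.sqrt(n)), n - 1

# Happy prosody: children's ratings versus the adult model's rating of 6.70.
for label, m, sd, n in [("NH", 5.52, 0.73, 10), ("CI", 5.33, 0.69, 15)]:
    t, df = one_sample_t(m, sd, n, 6.70)
    print(f"{label}: t({df}) = {t:.2f}")
```

The sign is negative because the children's mean ratings fall below the model's rating; the text reports the magnitudes.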

3.3. Relations between intelligibility and prosodic mood production

To determine the potential relations between intelligibility and prosodic mood production we ran a series of partial correlations, controlling for children’s hearing experience (duration of implant use for children with cochlear implants; chronological age for children with normal hearing). Table 2 shows the correlations between identification and rating scores on the BIT and PUP tests across children with cochlear implants and children with normal hearing. Contrary to our expectations, intelligibility scores on the BIT were negatively correlated with mood identification and rating scores on the PUP (with the exception of rating scores for the Declarative mood category) for both groups of children, although these correlations did not reach statistical significance.
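
A first-order partial correlation of the kind described here can be sketched as follows, using the standard formula that removes the shared association with one control variable. The function names and the example scores are hypothetical and are not the study's data; the associated t statistic uses df = n − 3, which gives 12 for 15 children and 7 for 10 children.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(x, y, z):
    """Correlation between x and y, controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz**2) * (1 - ryz**2))

def t_from_r(r, df):
    """t statistic for a (partial) correlation with df degrees of freedom."""
    return r * math.sqrt(df / (1 - r**2))

# Hypothetical scores for six children (not the study's data).
bit = [82, 90, 75, 95, 88, 70]
pup_id = [60, 55, 70, 50, 58, 72]
hearing_age = [5, 7, 4, 8, 6, 3]
r = partial_corr(bit, pup_id, hearing_age)
print(f"partial r = {r:.2f}, t({len(bit) - 3}) = {t_from_r(r, len(bit) - 3):.2f}")
```

Applied to a reported coefficient, `t_from_r(-0.65, 7)` gives a t of about −2.26, which falls short of the two-tailed critical value of 2.365 for 7 degrees of freedom at the .05 level.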

Table 2.

Partial correlations for BIT and PUP tests across two groups of children

| | PUP_ID | PUP_RT (All) | Happy rating | Sad rating | Interrogative rating | Declarative rating |
|---|---|---|---|---|---|---|
| Children with cochlear implants (df = 12) | | | | | | |
| BIT | −.45 | −.30 | −.46 | −.18 | −.24 | .01 |
| PUP_ID | | .85 ** | .83 ** | .69 ** | .39 | .45 |
| PUP_RT | | | .83 ** | .86 ** | .34 | .70 ** |
| Happy rating | | | | .82 ** | .07 | .56 * |
| Sad rating | | | | | −.10 | .77 ** |
| Interrogative rating | | | | | | −.32 |
| Children with normal hearing (df = 7) | | | | | | |
| BIT | −.65 | −.58 | −.55 | −.29 | −.53 | .35 |
| PUP_ID | | .72 * | .70 * | .32 | .58 | −.16 |
| PUP_RT | | | .68 * | .41 | .85 ** | .06 |
| Happy rating | | | | .29 | .28 | .12 |
| Sad rating | | | | | .18 | −.59 |
| Interrogative rating | | | | | | −.07 |

Note: Partial correlations with Hearing Age as a control variable; * p < .05; ** p < .01
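The significance markers in Table 2 can be checked by converting each partial correlation to a t statistic, t = r · sqrt(df / (1 − r²)), and comparing it with the critical value for the stated degrees of freedom. The sketch below assumes two-tailed tests (the paper does not say) and hard-codes critical values from a standard t table for the two df values in the table. The function name is hypothetical.

```python
import math

# Two-tailed critical t values from a standard t table: df -> (alpha .05, alpha .01).
CRITICAL_T = {7: (2.365, 3.499), 12: (2.179, 3.055)}

def significance_marker(r, df):
    """Return '**', '*', or '' for a correlation r with the given df."""
    t_stat = abs(r) * math.sqrt(df / (1 - r**2))
    crit_05, crit_01 = CRITICAL_T[df]
    if t_stat >= crit_01:
        return "**"
    if t_stat >= crit_05:
        return "*"
    return ""

# A few entries from Table 2 as (r, df) pairs.
for r, df in [(-0.45, 12), (0.56, 12), (0.69, 12), (-0.65, 7), (0.72, 7), (0.85, 7)]:
    print(f"r = {r:+.2f}, df = {df}: '{significance_marker(r, df)}'")
```

Under these assumptions, even a correlation as large in magnitude as r = −.65 falls short of the .05 threshold when df = 7, which is in line with the nonsignificant BIT correlations reported for the small samples in this study.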

The mood identification, overall rating, and Happy rating scores on the PUP were significantly and positively correlated for both groups of children. For children with cochlear implants, the mood identification, overall rating scores, and the individual rating scores were all significantly correlated, with the exception of correlations involving the Interrogative rating scores and, in two instances, the Declarative rating scores (PUP_ID × Declarative; Interrogative × Declarative). For children with normal hearing, the only additional significant correlation was between the overall rating and Interrogative rating scores. The pattern of results for the PUP test highlights the particular challenge of producing Interrogative prosody as compared to Happy, Sad, and Declarative prosody for children with cochlear implants.

4. Discussion

The goal of this study was to examine the relation between intelligibility and prosody in the speech of prelingually deafened children who use cochlear implants. Our results revealed that all children received significantly higher percent correct scores on the intelligibility task than on the prosody identification task. Furthermore, we found that children with normal hearing generally performed better on both the intelligibility and prosody identification tasks than children with cochlear implants. This result is consistent with previous studies. For example, using the same BIT sentences as in the current study, Chin et al. (2003) showed that children with normal hearing were significantly more intelligible than children with cochlear implants when controlling for chronological age and duration of auditory experience. Lyxell et al. (2009) further demonstrated that prosody production by children with normal hearing was significantly better than that of children with cochlear implants. As expected, we also observed that, in general, children with more hearing experience scored better on the BIT and PUP_ID.

On the PUP rating task, all children received the highest ratings on productions of the declarative mood and the lowest ratings on productions of the interrogative mood. This finding is not surprising: previous studies provide evidence that maturation influences the development of intonation apart from any effects of auditory experience, which may account for the similar pattern in both groups of children. For instance, Peng et al. (2007) showed that prelingually deafened children with cochlear implants had not completely mastered the use of rising intonation of the interrogative mood, although their performance improved over 7 years of device use. Although this pattern held for both groups, children with normal hearing were still rated significantly higher than children with cochlear implants on renditions of the interrogative prosody. Peng et al. (2008) similarly demonstrated that children with normal hearing were judged by adult listeners to have produced more accurate and appropriate renditions of the interrogative intonation than children with cochlear implants. The relatively poorer performance for children with cochlear implants as compared to children with normal hearing is likely related to poorer perception of intonation (Peng et al., 2008). When compared to ratings of the adult model’s renditions of the four prosodic mood categories, children’s ratings were significantly lower on productions of the happy prosody and declarative prosody but not significantly different on productions of the sad prosody. For the interrogative prosody, only the renditions of children with cochlear implants received significantly lower ratings than the model’s renditions. Taken together, these findings suggest that children with cochlear implants have the most difficulty producing the interrogative prosody. However, these results also indicate that children with cochlear implants have at least some prosodic capabilities.

In the current study, interrogative intonation was tested using “rising declaratives” (sentences with declarative word order but interrogative intonation). In English, such constructions are similar to polar interrogatives in that they elicit yes/no responses and have rising intonation but are somewhat different in their pragmatics (see Trinh & Crnič, 2011). Because of the declarative word order of such constructions (i.e., no subject-auxiliary inversion), they are interpretable as interrogatives solely by their intonation. Previous research has indicated that children (with normal hearing and development) have problems with rising intonation (particularly sentence-final rising intonation) and, by extension, with interrogative intonation. Snow (1998) found that children experienced more difficulty producing final rising tones than final falling tones. Specifically, when children’s final tone patterns did not match the adult model, it was usually the falling tone substituting for the rising tone. When children correctly imitated falling tones, duration and pitch range also matched the model; with rising tones, however, durations were longer and pitch ranges were narrower than the model’s. Snow suggested a reciprocal relation between tonal direction and utterance position whereby final falling and nonfinal rising are unmarked, and final rising and nonfinal falling are marked. Markedness in this case is physiologically based, in that unmarked constructions require relatively little effort and marked constructions relatively more effort.

Loeb and Allen (1993), who asked 3- and 5-year-old children to imitate declarative and interrogative sentences, as well as sentences spoken in a monotone fashion, found that differences in overall intonation production between these two age groups were largely due to differences in the ability to imitate interrogative intonation. Note that Loeb and Allen used rising declaratives to elicit interrogative intonation, as in the current study. Loeb and Allen consider several reasons for this difference. One possibility is that at certain ages, children may trade off syntactic cues and prosodic cues; in this instance, children may require syntactic cues (e.g., subject-auxiliary inversion) to guide their production of interrogative (final rising) intonation. On the other hand, there is also evidence that apart from grammatical functions of prosody, rising intonation may be more difficult than falling intonation. For example, Koike and Asp (1981) found that 5-year-old children produced falling and rising intonational patterns on the nonsense syllable /ma/ correctly 100% of the time. In contrast, 3-year-old children produced the falling intonation pattern correctly 90% of the time but the rising pattern only 50% of the time. These results bring us back to the observations of Snow (1998) and the physiologically-based, rather than linguistically-based, markedness of final rising patterns.

Koike and Asp’s (1981) results are also consistent with other results from the children examined in the current study. As reported by Bergeson, Kuhns, Chin, and Simpson (2009), these children imitated an adult model’s prolonged [a] more accurately with a falling intonation than with a rising intonation. Bergeson et al. and Koike and Asp both additionally report that prolonged syllables ([a] and [ma], respectively) were more accurately produced with rising-falling intonation (i.e., final falling) than with falling-rising (i.e., final rising) intonation, consistent with Snow’s observations regarding marked and unmarked intonational constructs.

There is thus evidence that interrogative intonation, relative to declarative intonation, is problematic not only for children with cochlear implants but also for children with normal hearing. Evidence available from the literature suggests that the bases of these difficulties may be both linguistic and nonlinguistic. One reason may be reticence to apply interrogative intonation to sentences with declarative word order (e.g., Loeb and Allen, 1993). Unlike in Loeb and Allen (1993), however, the interrogative sentences in the current study were embedded in a group of sentences with both interrogative intonation and interrogative word order. We included both types of interrogative sentences so that (a) the children would feel more comfortable with the task and (b) the adult listeners would not be influenced by the interrogative word order in their judgments. A second reason for children’s difficulty with interrogative intonation is the physiologically-based markedness of final rising contours (e.g., Koike and Asp, 1981; Snow, 1998). Given this evidence, it is not surprising that rating scores were highest for declarative sentences (with target falling intonation) and lowest for interrogative sentences (with target rising intonation), for both children with cochlear implants and children with normal hearing.

Contrary to expectations, there was no significant correlation between intelligibility scores on the BIT and either identification or rating scores on the PUP for all moods and all children, except declaratives for the children with cochlear implants. Expectations that intonation and intelligibility would be correlated stemmed from such notions as prosodic bootstrapping (Gleitman & Wanner, 1982; Gleitman et al., 1988) and reports in the literature asserting relations between prosody and intelligibility (see Ramig, 1992; Weismer & Martin, 1992). In fact, however, prosodic bootstrapping may be irrelevant to the specific speech production tasks used in the current study, and results concerning the relation between prosody and intelligibility can be equivocal. Specifically, although prosody may provide children with evidence for ascertaining specific structures in the language they are learning (particularly morphological and syntactic structure), it may not provide sufficient information about the phonological detail necessary for producing intelligible speech. That is, to a large extent, productive intelligibility depends on the accurate production of the phonetic segments that form an utterance, and this may not be directly related to prosodic accuracy, specifically intonational accuracy.

Furthermore, intonation has several linguistic and paralinguistic functions and can be used to convey both grammatical mood (e.g., declarative vs. interrogative in the current study) and affect (e.g., happiness and sadness in the current study). As discussed above, the characteristic English intonational patterns for declarative and interrogative sentences appear not to develop at the same rate, and there is evidence that intonation to convey affect is not associated with other aspects of language in acquisition (Wells and Peppé, 2003). Specifically, although intonation may convey both grammatical mood and affect, the two may not be closely associated in acquisition, and both may not be closely associated with segmental factors affecting intelligibility. Nevertheless, there is evidence that children with recent cochlear implant technology perceive a 0.5 semitone pitch change across two tones rising in pitch (Vongpaisal, Trehub, & Schellenberg, 2006). It is possible that these children will develop better prosody production over time as well. Future studies with children whose cochlear implant technology optimizes pitch encoding could reveal whether prosody production and intelligibility continue to be dissociated even with better sentence intonation production abilities.

It is important to mention several limitations of the study. First, the sample sizes for both groups of children are relatively small (n = 15 for children with cochlear implants; n = 10 for children with normal hearing) and did not include equal proportions of boys and girls. Analyses with these small sample sizes and imbalanced gender proportions should be interpreted conservatively. Second, the composition of the BIT and PUP sentences was different, as mentioned previously. To provide stronger support for our findings, the BIT and PUP lists should ideally contain the same sentences. Finally, varying degrees of attention and cooperation among the younger children during sentence recording sessions may have affected the quality of the sentence recordings, which in turn could have influenced the responses of the listener judges.

The present study is among the first to examine the relation between intelligibility and prosody in children who use cochlear implants. Further research is needed to delineate the development of the relationship between speech intelligibility and prosody and to address the limitations of the current study. Future work should involve testing of larger sample sizes of children with cochlear implants and children with normal hearing, as well as additional adult listener judges, replication of the study using the same sentences for the BIT and PUP tasks, and investigation of the acoustic components of sentence production and their relation to speech intelligibility and prosody.

Highlights.

We examined speech intelligibility and prosody in children with cochlear implants.

We compared children with implants and children with normal hearing.

Both groups performed better on intelligibility than prosody.

Children with normal hearing did better on both measures than children with implants.

Intelligibility and prosody production appear to be dissociated at these ages.

Learning Outcomes.

As a result of this activity, readers will be able to understand and describe (1) methods for measuring speech intelligibility and prosody production in children with cochlear implants and children with normal hearing, (2) the differences between children with normal hearing and children with cochlear implants on measures of speech intelligibility and prosody production, and (3) the relations between speech intelligibility and prosody production in children with cochlear implants and children with normal hearing.

Acknowledgments

This research was supported in part by a National Institutes of Health research grant to Indiana University (R01DC000423) and an Indiana University–Purdue University Signature Center grant to the Department of Otolaryngology–Head and Neck Surgery, Indiana University School of Medicine. We are grateful to Zafar Sayed for assistance with measurement and analysis; to Richard Miyamoto, Shirley Henning, and Bethany Colson for their help in conducting the study; and especially to the children and their families who participated in the study.

Role of the Funding Source

The authors declare the following funding sources for the research reported in this paper: (1) a research grant from the (U.S.) National Institutes of Health to Indiana University (R01DC000423), and (2) an Indiana University–Purdue University Signature Center grant to the Department of Otolaryngology–Head and Neck Surgery, Indiana University School of Medicine. The authors further declare that neither funding source played a role in or placed restrictions on the study design; the collection, analysis, and interpretation of data; the writing of the report; or the decision to submit the paper for publication.

Appendix A

CEU Questions for “Speech Intelligibility and Prosody Production in Children with Cochlear Implants”

1. The Beginner’s Intelligibility Test (BIT) and Prosodic Utterance Production (PUP) task are

   a. Picture-naming tasks
   b. Spontaneous speech samples
   c. Sentence imitation tasks
   d. Cloze tests
   e. Acoustic measures

2. Children’s prosody production was assessed by adult listeners using

   a. both an identification task and a rating task
   b. only a rating task
   c. an identification and a transcription task
   d. only an identification task
   e. a transcription task

3. T-tests of listeners’ ratings of children’s productions showed a significant difference between children with cochlear implants and children with normal hearing for

   a. Only Declarative intonation
   b. Only Interrogative intonation
   c. Happy and Sad intonation
   d. Declarative and Interrogative intonation
   e. All intonations

4. Problems with the correct production of interrogative intonation may be related generally to

   a. Problems with falling intonation
   b. Problems with vowel perception
   c. Problems with lexical retrieval
   d. Problems with rising intonation
   e. Problems with consonant production

5. The correlation between speech intelligibility and prosody production was

   a. Positive and significant
   b. Negative and significant
   c. Positive but not significant
   d. Negative but not significant
   e. Indeterminate

Key: 1: c; 2: a; 3: b; 4: d; 5: d

Disclosure Statement

The authors declare that they have no proprietary, financial, professional, or other personal interest of any nature or kind in any product, service, and/or company that could be construed as influencing the position presented in, or the review of, the manuscript entitled “Speech intelligibility and prosody production in children with cochlear implants.”

Assurance of Protection of Human Subjects in Research

The authors declare that all research procedures used in the study reported in the manuscript entitled “Speech intelligibility and prosody production in children with cochlear implants” were approved by the Institutional Review Board of Indiana University–Purdue University Indianapolis and Clarian Health Partners (now Indiana University Health).

Contributor Information

Steven B. Chin, Email: schin@iupui.edu.

Tonya R. Bergeson, Email: tbergeso@iupui.edu.

Jennifer Phan, Email: jphan@iupui.edu.

References

- Bergeson TR, Chin SB. Prosodic utterance production. Manuscript, Indiana University School of Medicine; 2008.

- Bergeson TR, Kuhns MJ, Chin SB, Simpson A. Production of vocal prosody and song in children with cochlear implants. Paper presented at the 2009 Biennial Meeting of the Society for Music Perception and Cognition; Indianapolis, Indiana. 2009.

- Carter AK, Dillon CM, Pisoni DB. Imitation of nonwords by hearing impaired children with cochlear implants: suprasegmental analyses. Clinical Linguistics & Phonetics. 2002;16:619–638. doi: 10.1080/02699200021000034958.

- Chin SB, Tsai PL, Gao S. Connected speech intelligibility of children with cochlear implants and children with normal hearing. American Journal of Speech-Language Pathology. 2003;12:440–451. doi: 10.1044/1058-0360(2003/090).

- Clark E, Gelman S, Lane N. Compound nouns and category structure in young children. Child Development. 1985;56:84–94.

- Cleary M, Dillon C, Pisoni DB. Imitation of nonwords by deaf children after cochlear implantation: preliminary findings. Annals of Otology, Rhinology, & Laryngology. 2002;111(5, Pt. 2):91–96. doi: 10.1177/00034894021110s519.

- De Bodt MS, Huici MEHD, Van De Heyning PH. Intelligibility as a linear combination of dimensions in dysarthric speech. Journal of Communication Disorders. 2002;35:283–292. doi: 10.1016/s0021-9924(02)00065-5.

- Dillon CM, Burkholder RA, Cleary M, Pisoni DB. Nonword repetition by children with cochlear implants: accuracy ratings from normal-hearing listeners. Journal of Speech, Language, and Hearing Research. 2004;47:1103–1116. doi: 10.1044/1092-4388(2004/082).

- Fernald A. Four-month-olds prefer to listen to motherese. Infant Behavior and Development. 1985;8:181–195.

- Forner LL, Hixon TJ. Respiratory kinematics in profoundly hearing-impaired speakers. Journal of Speech and Hearing Research. 1977;20:373–408. doi: 10.1044/jshr.2002.373.

- Gathercole SE, Willis CS, Baddeley AD, Emslie H. The Children’s Test of Nonword Repetition: A test of phonological working memory. Memory. 1994;2:103–127. doi: 10.1080/09658219408258940.

- Gleitman L, Gleitman H, Landau B, Wanner E. Where learning begins: initial representations for language learning. In: Newmeyer FJ, editor. Linguistics: The Cambridge Survey, Vol. 3: Language: Psychological and biological aspects. New York: Cambridge University Press; 1988. pp. 150–193.

- Gleitman L, Wanner E. Language acquisition: the state of the state of the art. In: Wanner E, Gleitman L, editors. Language acquisition: The state of the art. Cambridge, UK: Cambridge University Press; 1982. pp. 3–48.

- Hirsch-Pasek K, Kemler Nelson DG, Jusczyk PW, Wright Cassidy K, Druss B, Kennedy L. Clauses are perceptual units for prelinguistic infants. Cognition. 1987;26:269–286. doi: 10.1016/s0010-0277(87)80002-1.

- Hopyan-Misakyan TM, Gordon KA, Dennis M, Papsin BC. Recognition of affective speech prosody and facial affect in deaf children with unilateral right cochlear implants. Child Neuropsychology. 2009;15:136–146. doi: 10.1080/09297040802403682.

- House D. Perception and production of mood in speech by cochlear implant users. Proceedings of the International Conference on Spoken Language Processing; 1994. pp. 2051–2054.

- Hudgins SV, Numbers FC. An investigation of the intelligibility of the speech of the deaf. Genetic Psychology Monographs. 1942;25:289–392.

- Ibertsson T, Willstedt-Svensson U, Radeborg K, Sahlén B. A methodological contribution to the assessment of nonword repetition–a comparison between children with specific language impairment and hearing-impaired children with hearing aids or cochlear implants. Logopedics Phoniatrics Vocology. 2009;33:168–178. doi: 10.1080/14015430801945299.

- Jusczyk PW, Hirsch-Pasek K, Kemler Nelson D, Kennedy L, Woodward A, Piwoz J. Perception of acoustic correlates of major phrasal units by young infants. Cognitive Psychology. 1992;24:252–293. doi: 10.1016/0010-0285(92)90009-q.

- Jusczyk PW, Houston DM, Newsome M. The beginnings of word segmentation in English-learning infants. Cognitive Psychology. 1999;39:159–207. doi: 10.1006/cogp.1999.0716.

- Kent RD, Kim Y. Acoustic analysis of speech. In: Ball MJ, Perkins MR, Müller N, Howard S, editors. The handbook of clinical linguistics. Malden MA: Blackwell; 2008. pp. 360–380.

- Kent RD, Weismer G, Kent JF, Rosenbek JC. Toward phonetic intelligibility testing in dysarthria. Journal of Speech and Hearing Disorders. 1989;54:482–499. doi: 10.1044/jshd.5404.482.

- Klein HB. Learning to stress: A case study. Journal of Child Language. 1984;11:375–390. doi: 10.1017/s0305000900005821.

- Koike KJM, Asp CW. Tennessee Test of Rhythm and Intonation Patterns. Journal of Speech and Hearing Disorders. 1981;46:81–86. doi: 10.1044/jshd.4601.81.

- La Bruna Murphy A, McGarr NS, Bell-Berti F. Acoustic analysis of stress contrasts produced by hearing-impaired children. The Volta Review. 1990;92:80–91.

- Lenden JM, Flipsen P Jr. Prosody and voice characteristics of children with cochlear implants. Journal of Communication Disorders. 2007;40:66–81. doi: 10.1016/j.jcomdis.2006.04.004.

- Levitt H, Stromberg H, Smith CR, Gold T. The structure of segmental errors in the speech of deaf children. Journal of Communication Disorders. 1980;13:419–442. doi: 10.1016/0021-9924(80)90043-x.

- Loeb DF, Allen GD. Preschoolers’ imitation of intonation contours. Journal of Speech and Hearing Research. 1993;36:4–13. doi: 10.1044/jshr.3601.04.

- Lyxell B, Wass M, Sahlén B, Samuelsson C, Asker-Árnason L, Ibertsson T, Mäki-Torkko E, Larsby B, Hällgren M. Cognitive development, reading and prosodic skills in children with cochlear implants. Scandinavian Journal of Psychology. 2009;50:463–474. doi: 10.1111/j.1467-9450.2009.00754.x.

- McGarr NS, Osberger MJ. Pitch deviancy and intelligibility of deaf speech. Journal of Communication Disorders. 1978;11:237–247. doi: 10.1016/0021-9924(78)90016-3.

- Mehler J, Jusczyk PW, Lambertz G, Halsted N, Bertoncini J, Amiel-Tison C. A precursor of language acquisition in young infants. Cognition. 1988;29:143–178. doi: 10.1016/0010-0277(88)90035-2.

- Monsen RB. Durational aspects of vowel production in the speech of deaf children. Journal of Speech and Hearing Research. 1974;17:386–398. doi: 10.1044/jshr.1703.386.

- O’Halpin R. Intonation issues in the speech of hearing impaired children: analysis, transcription and remediation. Clinical Linguistics & Phonetics. 2001;15:529–550.

- Osberger MJ, McGarr NS. Speech production characteristics of the hearing impaired. In: Lass N, editor. Speech and language: advances in basic research and practice, Vol. 8. New York: Academic Press; 1982. pp. 221–283.

- Osberger MJ, Miyamoto RT, Zimmerman-Phillips S, Kemink JL, Stroer BS, Firszt JB, Novak MA. Independent evaluation of the speech perception abilities of children with the Nucleus 22-channel cochlear implant system. Ear and Hearing. 1991;12(Supplement):66S–80S. doi: 10.1097/00003446-199108001-00009.

- Osberger MJ, Robbins AM, Miyamoto RT, Berry SW, Myres WA, Kessler KA, Pope ML. Speech perception abilities of children with cochlear implants, tactile aids, or hearing aids. The American Journal of Otology. 1991;12(Supplement):105S–115S.

- Osberger MJ, Robbins AM, Todd SL, Riley AI. Speech intelligibility of children with cochlear implants. Volta Review. 1994;96(5):169–180.

- Parkhurst BG, Levitt H. The effect of selected prosodic errors on the intelligibility of deaf speech. Journal of Communication Disorders. 1978;11:249–256. doi: 10.1016/0021-9924(78)90017-5.

- Peng S-C, Tomblin JB, Spencer LJ, Hurtig RR. Imitative production of rising speech intonation in pediatric cochlear implant recipients. Journal of Speech, Language, and Hearing Research. 2007;50:1210–1227. doi: 10.1044/1092-4388(2007/085).

- Peng S-C, Tomblin JB, Turner CW. Production and perception of speech intonation in pediatric cochlear implant recipients and individuals with normal hearing. Ear and Hearing. 2008;29:336–351. doi: 10.1097/AUD.0b013e318168d94d.

- Pereira C. The perception of vocal affect by cochlear implantees. In: Waltzman SB, Cohen NL, editors. Cochlear implants. New York: Thieme Medical; 2000. pp. 343–345.

- Peterson HA, Marquardt TP. Appraisal and diagnosis of speech and language disorders. Third ed. Englewood Cliffs NJ: Prentice-Hall; 1994.

- Ramig LO. The role of phonation in speech intelligibility: a review and preliminary data from patients with Parkinson’s disease. In: Kent RD, editor. Intelligibility in speech disorders: Theory, measurement and management. Amsterdam: John Benjamins; 1992. pp. 119–155.

- Shriberg LD, Kwiatkowski J, Rasmussen C. The Prosody-Voice Screening Profile. Tucson, AZ: Communication Skill Builders; 1990.

- Smith CR. Residual hearing and speech production in deaf children. Journal of Speech and Hearing Research. 1975;18:795–811. doi: 10.1044/jshr.1804.795.

- Snow D. Children’s imitations of intonation contours: are rising tones more difficult than falling tones? Journal of Speech, Language, and Hearing Research. 1998;41:576–587. doi: 10.1044/jslhr.4103.576.

- Snow D, Ertmer D. The development of intonation in young children with cochlear implants: a preliminary study of the influence of age at implantation and length of implant experience. Clinical Linguistics & Phonetics. 2009;23:665–679. doi: 10.1080/02699200903026555.

- Snow DP. Phrase-final lengthening and intonation in early child speech. Journal of Speech and Hearing Research. 1994;37:831–840. doi: 10.1044/jshr.3704.831.

- Subtelney JD. Assessment of speech with implications for training. In: Bess FH, editor. Childhood deafness: Causation, assessment, and management. New York: Grune & Stratton; 1977. pp. 183–194.

- Stevens KN, Nickerson RS, Rollins AM. Suprasegmental and postural aspects of speech production and their effect on articulatory skills and intelligibility. In: Hochberg I, Levitt H, Osberger MJ, editors. Speech of the hearing-impaired: Research, training and personnel preparation. Baltimore MD: University Park Press; 1983. pp. 35–51.

- Thiessen ED, Saffran JR. When cues collide: Use of statistical and stress cues to word boundaries by 7-and 9-month-old infants. Developmental Psychology. 2003;39:706–716. doi: 10.1037/0012-1649.39.4.706.

- Tobey EA, Angelette S, Murchison C, Nicosia J, Sprague S, Staller S, Brimacombe JA, Beiter AL. Speech production performance in children with multichannel cochlear implants. The American Journal of Otology. 1991;12(Supplement):165S–173S.

- Tobey EA, Hasenstab MS. Effects of a Nucleus multichannel cochlear implant upon speech production in children. Ear and Hearing. 1991;12(Supplement 4):48S–54S. doi: 10.1097/00003446-199108001-00007.

- Trinh T, Crnič L. On the rise and fall of declaratives. In: Reich I, et al., editors. Proceedings of Sinn und Bedeutung. Vol. 15. Saarbrücken, Germany: Universaar-Saarland University Press; 2011. pp. 1–15.

- Vongpaisal T, Trehub SE, Schellenberg EG. Song recognition by children and adolescents with cochlear implants. Journal of Speech, Language, and Hearing Research. 2006;49:1091–1103. doi: 10.1044/1092-4388(2006/078).

- Voress JK, Maddox T. Developmental assessment of young children. San Antonio, TX: Pearson; 1998.

- Weismer G, Martin RE. Acoustic and perceptual approaches to the study of intelligibility. In: Kent RD, editor. Intelligibility in speech disorders: Theory, measurement and management. Amsterdam: John Benjamins; 1992. pp. 67–118.

- Wells B, Peppé S. Intonation abilities of children with speech and language impairment. Journal of Speech, Language, and Hearing Research. 2003;46:5–20. doi: 10.1044/1092-4388(2003/001).