Abstract

In the effort to promote more continuous and quantitative assessment of surgical proficiency, there is an increased need to define and establish common surgical metrics. Furthermore, as various pressures such as limited duty hours and access to educational resources, including materials and expertise, place increased demands on training, the value of quantitative automated assessment becomes increasingly apparent. We present our methods to establish common surgical metrics within the otology and neurotology community and our initial efforts in the subsequent transfer of these metrics into objective automated assessments provided via a simulation environment.

1. Introduction

Although medical and minimally invasive treatments have advanced tremendously over the years, surgical intervention is still required to alleviate many health conditions. As an example, disorders of the temporal bone affect millions of patients in the United States [1], and many require surgical intervention for resolution. To gain surgical proficiency, trainees must comprehend the complex anatomy and pathology of the temporal bone and integrate this knowledge with refined microsurgical technical skills. This proficiency requires hours of deliberate practice and considerable clinical experience. Surgical training requires at least 5 years under current methods at a cost of approximately $80,000 per year per resident [2]. Conventional temporal bone laboratories, with related equipment, cost over a million dollars to construct and are expensive to maintain [3].

Several factors contribute to inefficiencies in this training and adversely impact the overall cost of healthcare. These factors include limits on duty hours, which restrict teaching interactions between residents and faculty; reduced access to resources such as cadaveric specimens; and exposure to hazardous materials that endanger health, including pathogens present in cadaveric specimens (e.g., hepatitis B and C, prion-derived illness [4], and HIV) and formalin [5].

In order to mitigate some of these barriers, many look to simulation technologies. However, in order to provide adequate transfer to clinical delivery, proficiency metrics must be established and translated into automated systems for quantitative assessments. Unfortunately, many surgical specialties suffer from a lack of objective assessment of surgical skills. Having inadequate metrics prevents uniform formative feedback during training and also quantitative measurement of professional technical proficiency. If these metrics can be resolved and quantified, they can be translated into automated assessment tools.

2. Background

Metric Definition

Most metrics have been developed locally and have not been extended in a global or systematic fashion [6][7][8][9]. We conducted an online survey of members of the American Neurotology Society and American Otological Society [10] to formulate a cross-institutional set of metrics based on previous examples that have been validated by a large number of experts. We have established a dedicated consortium of 11 institutions that will focus on the iterative improvement of these metrics as applied to otologic surgery. Early involvement of the expert community is essential for robust development, and ultimately, acceptance into a standardized, uniform curriculum using simulation.

To facilitate the translation of objective measures into the simulation, an algorithm is developed for each metric. Each algorithm is defined in terms of measurable objective quantities that can be evaluated by an expert or a computer program. This is a refinement of the work of Sewell [6] and provides a more comprehensive and flexible approach.

Automated Assessment

While other otologic simulator systems have integrated some aspects of metric-driven feedback, none have used a complete set of expert-validated metrics integrated into the simulation to produce an overall numerical score representing a thorough analysis of performance. These metrics can also provide a means for metric-driven automated instruction. In our approach, experts provide data in the form of virtually drilled temporal bones to construct composite scores for use in determining expert variance of technique. Sample temporal bones, rated to be equivalent in difficulty, are provided to experts and residents through the simulator, both for setting standards (through experts) and for grading the performance of the residents. Through the use of virtual bones, we can ensure that each expert performs on the same data set.

Sewell implemented a basic scripting language as a way of adding variance to the experience, such as a triggered response to a specific action [6]. Whereas that system used a markup language to define state transitions, we employ a more expressive scripting language that allows faculty experts to create new behaviors based on user input.

3. Methods & Materials

Metrics Definition

Before any valid, reliable and practical assessment of technical skill can be performed, a valid, reliable and practical set of metrics must be developed. In order to objectively assess surgical competence, a survey was deployed to canvass expert opinion regarding key grading criteria for performing a complete mastoidectomy with a facial recess approach [10]. Members of the American Neurotology Society and American Otological Society rated the importance of assessment criteria. The criteria ranked in importance above 70% comprise the starting point for deliberation by the proposed consortium of experts. A list of the top 25 criteria is available below (See Table 1).

Table 1.

| Criteria to be included in the new cross-institutional scale (ranked by > 70% of participants as "very important" or "important") | % participants that ranked criterion as "very important" or "important" | Type of Assessment for Quantification of the metric |
|---|---|---|
| 1. Maintains visibility (of tool) while removing bone | 100% | Contextual/Procedural |
| 2. Selects appropriate burr type and size | 98.4% | Contextual |
| 3. Antrum entered | 98.4% | % of structure (volume violated) |
| 4. No violation of facial nerve sheath | 98.3% | Proximity - % of volume structure violated |
| 5. Sigmoid sinus is not entered | 96.7% | % of volume of structure violated |
| 6. Identifies tympanic segment of the facial nerve | 96.6% | % correlated with expert removal Procedural |
| 7. Does not drill on ossicle | 91.8% | Proximity - % of volume of structure violated |
| 8. Firm, low, good hand position and grip on drill | 91.8% | Procedural |
| 9. Does not use excessive drill force near critical structures | 91.8% | Procedural Proximity |
| 10. Identifies the chorda tympani or stump | 91.7% | % correlated with expert removal Procedural |
| 11. Drills in best direction (clear understanding of cutting edge) | 90.2% | Procedural/Contextual |
| 12. Canal wall up (EAC) | 89.8% | % correlated with expert removal |
| 13. Identifies the facial nerve at the cochleariform process | 88.1% | % correlated with expert removal |
| 14. Appropriate depth of cavity (Cortex) | 88% | % correlated with expert removal |
| 15. Drills with broad strokes | 86.9% | Procedural/Contextual Technique |
| 16. No holes in EAC | 86.4% | % of volume of structure violated |
| 17. Complete saucerization (Cortex) | 83.3% | % correlated with expert removal |
| 18. Posterior canal wall thinned | 81.4% | % of volume of structure violated |
| 19. Facial recess completely exposed (overlying bone sufficiently thinned so nerve can be seen, located and safely avoided) | 80.4% | % correlated with expert removal |
| 20. Identifies the facial nerve at the external genu | 79.3% | % correlated with expert removal Procedural |
| 21. Low frequency of drill "jumps" (jumps defined as drilling further than 1 cm from previous spot) | 77% | Procedural (localization of tool) |
| 22. No holes in tegmen | 73.8% | % of volume of structure violated |
| 23. Use of diamond burr within 2 mm of facial nerve | 73.3% | Proximity/Localization Procedural |
| 24. No cells remain on sinodural angle | 72.5% | % correlated with expert removal |
| 25. Sinodural angle sharply defined | 71.2% | % correlated with expert removal |

Categories of Metrics:

Contextual = Contingent on Sequence (Steps)

Technique = Speed, force, angle, etc.

Localization = Position of tool

Proximity = Distance of tool to structure(s)

Violation = % of structure (volume) removed

Identified = % correlated with expert removal
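
Criterion 21 in Table 1 defines a drill "jump" as drilling further than 1 cm from the previous spot, which lends itself to a direct localization check. The following is a minimal sketch of such a count, assuming the simulator can export an ordered list of drilling contact positions in centimetres; the function name `count_jumps` and the sample path are hypothetical and are not part of the system described here.

```python
import math

# Threshold taken from criterion 21: a jump is a move of more than 1 cm.
JUMP_THRESHOLD_CM = 1.0


def count_jumps(positions_cm, threshold_cm=JUMP_THRESHOLD_CM):
    """Count consecutive drilling contacts that lie more than threshold_cm apart."""
    jumps = 0
    for prev, curr in zip(positions_cm, positions_cm[1:]):
        if math.dist(prev, curr) > threshold_cm:
            jumps += 1
    return jumps


# Hypothetical sequence of (x, y, z) contact positions in centimetres.
path = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (1.5, 0.0, 0.0), (1.6, 0.1, 0.0)]
print(count_jumps(path))
```

How a raw count would map onto a "low frequency" grade (for example, jumps per minute against an expert baseline) is left open here, since the source does not specify it.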

Categorization of Metrics

The criteria identified by expert surgeons to evaluate mastoidectomy performance, although different from each other, can be grouped into broad categories. It is no surprise, therefore, that different methods should be employed to efficiently and accurately compute scores based on these criteria. Violation criteria such as “does not drill the facial nerve” and “does not drill on ossicle” can be computed using a simple occupancy check based on a segmented volume. More complicated criteria require more complicated algorithms. Metrics based explicitly on the motion of the drill itself, rather than the position of the drill (such as “Drills with broad strokes”), can be accurately analyzed using the framework detailed by Sewell [6]. In our previous work [13], we presented a method to give an automated score for the complicated metrics that do not have clear representations in either the hand-motion analysis framework or direct voxel comparison. These include “Appropriate depth of cavity”, “Complete saucerization”, and others.

By comparing matching regions of voxels between high-quality expert products, i.e., drilled virtual bones, and student volumes using various distance metrics, we obtain a feature vector that can be used in a range of clustering and classification algorithms (See Figure 1). Our preliminary results employing this method have found it even more powerful than hand-motion analysis for some metrics, obtaining kappa scores above 0.6 when comparing expertly and automatically graded scores for the metrics “Complete Saucerization” and “Antrum Entered”.
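
To make the agreement figure concrete, the sketch below computes a kappa score between expert and automated grades. It assumes the unweighted form of Cohen's kappa, which the text does not specify, and the grade lists are invented for illustration only; they are not data from this study.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of categorical grades."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must grade the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same grade by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal frequencies per grade.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[g] * freq_b[g] for g in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical grade throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical pass (1) / fail (0) grades for ten drilled virtual bones.
expert = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
automated = [1, 1, 0, 0, 1, 0, 0, 1, 0, 1]
print(round(cohen_kappa(expert, automated), 3))
```

In this invented example the two graders agree on eight of ten bones while chance agreement is 0.5, giving a kappa of 0.6.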

Figure 1.

(LEFT) Image of feature vectors used in assessment.

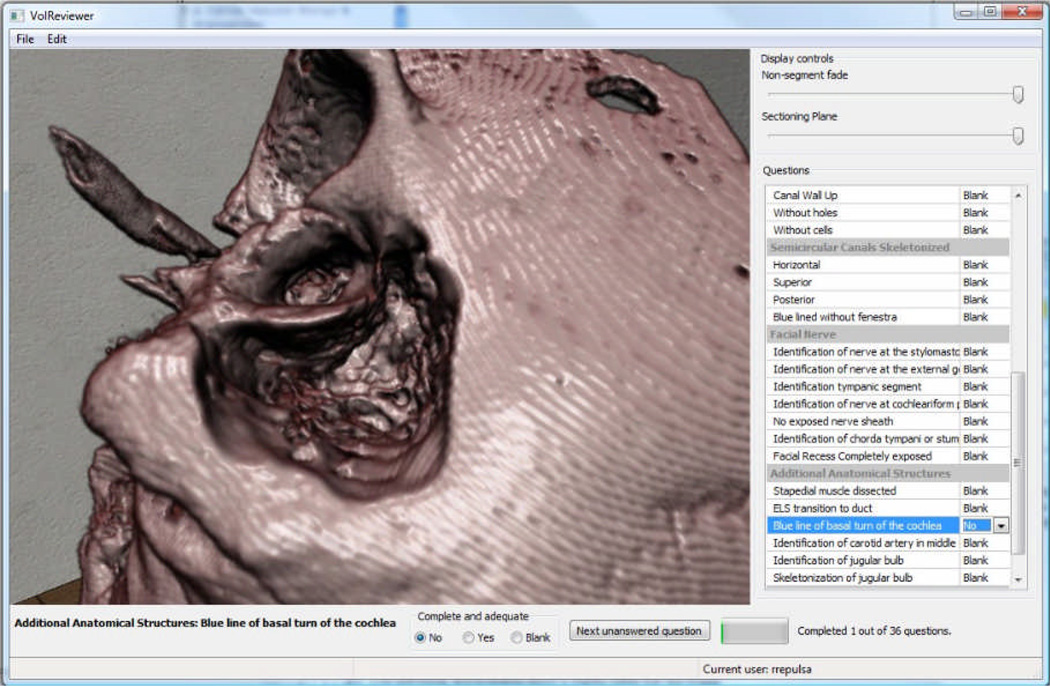

We have also developed visualization tools to compare the performance of different users in the simulation. Visual analysis of these data has the potential to give our experts valuable insight into which types of errors are most common while learning these surgical procedures. Future formative sessions with experts will provide feedback on the utility of these implementations and suggestions for improvement. Finally, experts will use the virtual system, VolReviewer (see Figure 2), to evaluate the resultant dissections performed by the residents. This program provides an interactive method for experts to assess virtually drilled bones using the expert-defined metrics.

Figure 2.

Image from VolReviewer tool.

4. Results

By using machine learning techniques, we can assign scores to the final products of a surgical simulation. Given a set of final products already graded by an expert, we can extract features from the volumes and construct a decision tree [13]. This method allows for scoring on metrics that are not easily defined in terms of strict structural boundaries. For scores based on violation of segmented structures, this technique will not perform well compared to the direct voxel-based check. However, it does allow scoring to be performed for procedures, or parts of procedures, that do not rely on violation for traditional scoring.
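
As a rough illustration of learning a grading rule from expert-graded examples, the sketch below fits only a depth-one decision tree (a single split chosen by Gini impurity), which is far simpler than the method reported in [13]. The feature values, the grade labels, and the names `fit_stump` and `predict` are all hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def majority(labels):
    """Most frequent label in a non-empty list."""
    return max(set(labels), key=labels.count)


def fit_stump(features, grades):
    """Find the single feature/threshold split with the lowest weighted impurity."""
    best = None
    n = len(grades)
    for j in range(len(features[0])):
        values = sorted(set(row[j] for row in features))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0  # candidate threshold between neighbouring values
            left = [g for row, g in zip(features, grades) if row[j] <= t]
            right = [g for row, g in zip(features, grades) if row[j] > t]
            impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or impurity < best[0]:
                best = (impurity, j, t, majority(left), majority(right))
    if best is None:
        raise ValueError("need at least two distinct values in some feature")
    return best[1:]  # (feature index, threshold, left label, right label)


def predict(stump, row):
    j, t, left_label, right_label = stump
    return left_label if row[j] <= t else right_label


# Hypothetical feature vectors (e.g. distances to expert volumes) with expert grades.
X = [[0.10, 0.80], [0.15, 0.20], [0.20, 0.60],
     [0.70, 0.30], [0.80, 0.70], [0.90, 0.10]]
y = ["pass", "pass", "pass", "fail", "fail", "fail"]
stump = fit_stump(X, y)
print(predict(stump, [0.12, 0.50]), predict(stump, [0.85, 0.50]))
```

A full decision tree would apply the same split search recursively to each side; the stump is shown only because it keeps the idea visible in a few lines.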

By integrating automated evaluation into the system, it is possible to deliver more continuous and quantified performance measurements to the user. Descriptions of metrics as defined by experts must be translated into quantifiable values. For example, violation of a critical structure can be quantified as the percentage of the structure's tagged voxels incorrectly removed by the user. Some contextual metrics will require time and positional information. Sewell [6] has shown that hand-motion analysis using Hidden Markov Models can be useful in classifying whether a user is a novice or an expert. We integrate Sewell’s hand-motion analysis with session-capturing software developed by our group [11]. However, hand-motion analysis has limitations in terms of long-term learning [12]. We are conducting statistical analysis of the final products of students as compared with those of experts.
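
The violation measure described above reduces to a set intersection over voxel coordinates. The sketch below assumes that the segmented structure and the drilled region are each available as collections of integer voxel coordinates; the function name `percent_violated` and the sample coordinates are hypothetical.

```python
def percent_violated(structure_voxels, removed_voxels):
    """Percentage of a structure's tagged voxels that the user removed."""
    structure = set(structure_voxels)
    if not structure:
        raise ValueError("structure has no tagged voxels")
    violated = structure & set(removed_voxels)
    return 100.0 * len(violated) / len(structure)


# Hypothetical voxel coordinates tagged as facial nerve in the segmented volume.
facial_nerve = {(4, 5, 6), (4, 5, 7), (4, 6, 6), (4, 6, 7)}
# Hypothetical voxels removed by the user during the session.
drilled = {(1, 1, 1), (4, 5, 6), (9, 9, 9)}
print(percent_violated(facial_nerve, drilled))  # 25.0
```

For violation criteria such as "No violation of facial nerve sheath", any value above zero would indicate an error; a tolerance, if one is wanted, is a grading decision the source leaves to the experts.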

5. Conclusions

We have presented our methods for developing consortium-based metrics of surgical proficiency and for implementing them in automated assessment tools within a computer simulation. The integration of more standardized metrics, translated into automated assessment, will provide avenues for continuous and quantified assessment of surgical proficiency.

Acknowledgements

This research was supported through funding from R01 DC011321091A1 through the National Institute on Deafness and Other Communication Disorders (NIDCD) of the National Institutes of Health.

References

- 1.NIDCD. 2010 see: http://www.nidcd.nih.gov/health/statistics/hearing.asp.

- 2.Williams TE, Santiani B, Thomas A, Ellison EC. The Impending Shortage and the Estimated Cost of Training the Future Surgical Workforce. Ann. Surg. 2009 Aug 27;:590–597. doi: 10.1097/SLA.0b013e3181b6c90b.

- 3.Welling DB. Personal Communication, 2/14/2010. Recently outfitted complete temporal bone laboratory with 12 stations with instructor station, approximately $2 million.

- 4.Scott A, De R, Sadek SAA, Garrido MC, Oath MRC, Courtney-Harris RG. Temporal bone dissection: a possible route for prion transmission? J Laryn. & Oto. 2001;115(5):374–375. doi: 10.1258/0022215011907901. Royal Society of Medicine Press.

- 5.NIOSH. 2010 http://www.cdc.gov/niosh/npg/npgd0294.html.

- 6.Sewell C, Morris D, Blevins NH, Agrawal S, Dutta S, Barbagli F, Salisbury K. In: Validating Metrics for a Mastoidectomy Simulator. Proc. MMVR15. Westwood JD, et al., editors. Amsterdam: IOS Press; 2007. pp. 421–426.

- 7.Zirkle M, Taplin MA, Anthony R, Dubrowski A. Objective Assessment of Temporal Bone Drilling Skills. Annals of Otology, Rhinology, & Laryngology. 2007;116(11):793–798. doi: 10.1177/000348940711601101.

- 8.Butler NN, Wiet GJ. Reliability of the Welling Scale (WS1) for rating temporal bone dissection performance. Laryngoscope. 2007 Oct;117(10):1803–1808. doi: 10.1097/MLG.0b013e31811edd7a.

- 9.Laeeq K, Bhatti NI, Carey JP, Della Santina CC, Limb CJ, Niparko JK, Minor LM, Francis HW. Pilot Testing of an Assessment Tool for Competency in Mastoidectomy. The Laryngoscope. 2009 Dec;119:2402–2410. doi: 10.1002/lary.20678.

- 10.Wan D, Wiet GJ, Welling DB, Kerwin T, Stredney D. Creating a Cross-Institutional Grading Scale for Temporal Bone Dissection. Laryngoscope. 2010 doi: 10.1002/lary.20957. (Accepted for Print.)

- 11.Kerwin T, Shen HW, Stredney D. Moller T, et al., editors. "Capture and Review of Interactive Volumetric Manipulations for Surgical Training", Volume Graphics. 2006;106 http://www.cse.ohio-state.edu/~kerwin/vg06/capture2006-final.pdf.

- 12.Porte MC, Xeroulis G, Reznick RK, Dubrowski A. Verbal feedback from an expert is more effective than self-accessed feedback about motion efficiency in learning new surgical skills. American Journal of Surgery. 2007;193(1):105–110. doi: 10.1016/j.amjsurg.2006.03.016.

- 13.Kerwin T, Wiet G, Stredney D, Shen HW. Automatic scoring of virtual mastoidectomies using expert examples. Int J Comput Assist Radiol Surg. 2011 May 3; doi: 10.1007/s11548-011-0566-4. [Epub ahead of print]