Abstract

Background

Effective strategies for implementing best practices in low- and middle-income countries are needed. The WHO Reproductive Health Library (RHL) is an annually updated electronic publication containing Cochrane systematic reviews, commentaries and practical recommendations on how to implement evidence-based practices. We are conducting a trial to evaluate the improvement in obstetric practices using an active dissemination strategy to promote uptake of RHL recommendations.

Methods

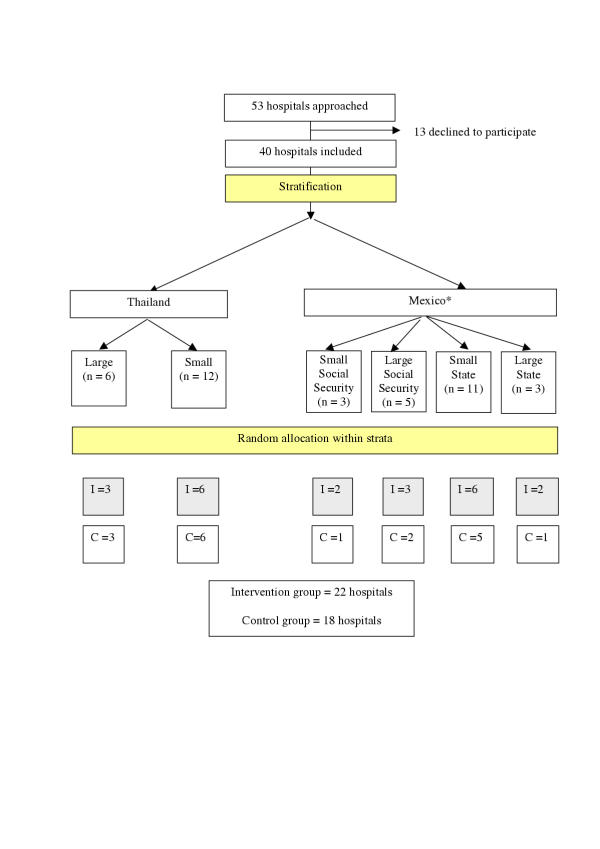

A cluster randomized trial to improve obstetric practices is being conducted in 40 hospitals in Mexico and Thailand. The trial uses stratified random allocation based on country, size and type of hospital. The core intervention consists of three interactive workshops delivered over a period of six months. The main outcome measures are changes in clinical practices recommended in RHL, measured approximately a year after the first workshop.

Results

The design and implementation of a complex intervention using a cluster randomized trial design are presented.

Conclusion

Designing the intervention, choosing outcome variables and implementing the protocol in two diverse settings have been time-consuming and challenging. We hope that sharing this experience will help others planning similar projects and improve our ability to implement change.

Background

There is an increasing recognition of the information access and management problems of health care workers. [1] Resolving a clinical problem begins with a search for a valid systematic review or practice guideline as the most efficient method of deciding on the best patient care. [2] Systematic reviews of randomized controlled trials are widely acknowledged as providing the gold standard evidence base to support decision-making for further research, policy and practice. Systematic reviews prepared by the Cochrane Collaboration are published electronically and have the additional advantage of being updated, as new evidence becomes available.

The World Health Organization has published The WHO Reproductive Health Library (RHL) since 1997 in an effort to provide access to the most reliable and up-to-date information to health care workers in developing countries. [3] This annually updated database includes predominantly Cochrane systematic reviews that are relevant to reproductive health problems faced in low-income countries together with commentaries written by experts familiar with under-resourced settings, short practical guidance documents and implementation aids (such as educational videos) to facilitate the implementation of recommended practices. RHL is published in English and Spanish with new topics added and updated topics revised on an annual basis. The sixth issue of RHL published in 2003 contains 79 Cochrane reviews.

Initiatives such as RHL should address some of the information access and management difficulties faced by physicians. However, they are unlikely, by themselves, to lead to significant improvements in performance, because health care workers have limited time to read reviews and guidelines and many changes in behaviour require organisational changes. There is evidence that passive dissemination of information is unlikely to lead to improvements in health care performance. [4] Active interventions, with components ranging from simple reminders given to clinicians to complex multi-faceted interventions, have been tested in diverse settings to improve clinical practices. While no single intervention works under all conditions, most interventions may yield modest to moderate improvements in some settings. [5] Interventions with several components (multi-faceted) seem to have a higher chance of improving practices because more barriers can be addressed and overcome with different components. [6]

The objective of the study is to evaluate the improvement in obstetric practices using an active dissemination strategy to promote uptake of recommendations in The WHO Reproductive Health Library (RHL). We hypothesized that equipping health care workers with the skills and knowledge to use systematic reviews will improve their practices.

Study settings

Maternity units were eligible to participate if the hospital had a sufficient number of deliveries (> 1000 deliveries/year) to enable measurement of the outcomes and was not directly associated with a university or other academic/research department. In Mexico, all state and social security hospitals in the Mexico City municipal area were approached. Twenty-two of the 34 eligible hospitals approached participated. In Thailand, all hospitals in the Northeast province except one agreed to participate in the trial (n = 18). About two-fifths of Thailand's population lives in the Northeast province (Figure 1).

Figure 1.

Flow chart of the trial design and random allocation. (* In Mexico, one hospital in the large social security stratum withdrew after random allocation because of building renovation in the maternity ward. Another hospital, which had consented to participate and belonged to the small state stratum, was then randomized. I = Intervention, C = Control.)

The characteristics of the hospitals in the two countries are quite different (Table 1). In Mexico, the hospitals are all urban and cosmopolitan, and doctors provide most of the care. There are two hospital systems: the state hospital system and the social security system. The social security system has two components, one providing health care to formal-sector workers and their families and the other to government workers and their families. We thought that hospitals in the two systems (state federal hospitals and social security hospitals) might differ somewhat in their characteristics and in their response to the intervention, and we stratified accordingly. In Thailand, the hospitals are in small towns and midwives usually provide the routine obstetric care. In general, Thai hospitals have fewer doctors and lack both written guidelines on the management of specific obstetric problems and well-defined continued medical education programmes.

Table 1.

Hospital strata and characteristics

| Country | Stratum | Type / size | Number of hospitals | Mean number of deliveries per year per hospital (range) | Number of hospitals with guidelines available | Number of hospitals with continued education programme present |
| --- | --- | --- | --- | --- | --- | --- |
| Mexico | 1 | Social security / small | 3 | 3334 (2050–4951) | 3 | 3 |
| Mexico | 2 | Social security / large | 5 | 9886 (5964–17670) | 5 | 5 |
| Mexico | 3 | State / small | 11 | 3112 (1307–4458) | 7 | 8 |
| Mexico | 4 | State / large | 3 | 6428 (5019–7244) | 2 | 2 |
| Thailand | 5 | State / small | 12 | 3249 (1500–4000) | 0 | 0 |
| Thailand | 6 | State / large | 6 | 6688 (5200–10000) | 0 | 0 |

Methods

Study design

The study has a stratified cluster randomized trial design. Cluster randomized trials provide both practical and methodological advantages for implementation studies especially when the intervention requires policy changes and the intended effect is at the institutional level. [7] Some RHL recommended practices require organisational change (such as policy of allowing companions to women in labour) and need to be implemented at the hospital (cluster) level. Cluster randomization using hospitals as the unit of allocation reduces contamination between groups. It is easier to deliver the intervention (workshops) to a group at the hospital level (unit) than to selected individuals working within the same unit. Also, by focusing on the whole staff (head of department, consultants, trainees, and midwives) one can utilise group dynamics and peer pressure, which may facilitate the adoption of recommended practices.

Cluster randomized trials, however, are statistically less efficient than individually randomized trials because the responses of individuals within a cluster (intracluster) tend to be more similar than those of individuals in different clusters (intercluster). The required sample size is accordingly larger and the analysis techniques have to be adjusted for the level of association among members of the cluster (intracluster correlation coefficient). [8] However, when inferences are at the cluster level, 'the study could be regarded, at least with respect to adopting an approach to the sample size estimation and analysis, as a standard clinical trial'. [9]
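The loss of efficiency from clustering is commonly summarised by the design effect, 1 + (m − 1) × ICC, for clusters of equal size m. The following is a minimal sketch of that standard formula; the function names and the ICC value of 0.05 are illustrative assumptions and are not taken from the trial.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation for equal-sized clusters: 1 + (m - 1) * ICC."""
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_clusters: int, cluster_size: int, icc: float) -> float:
    """Number of independent observations the clustered sample is worth."""
    return n_clusters * cluster_size / design_effect(cluster_size, icc)


if __name__ == "__main__":
    # Hypothetical illustration: 40 clusters of 100 women, assumed ICC of 0.05.
    print(design_effect(100, 0.05))
    print(effective_sample_size(40, 100, 0.05))
```

With an ICC of zero the design effect is 1 and the clustered sample is worth as much as an individually randomized one; any positive ICC shrinks the effective sample size.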

We collected hospital baseline information on factors likely to be associated with the outcomes such as the number of deliveries per year, number of professional staff relative to size, referral status and the distance to university hospitals. We discussed which of these had to be controlled for in the design, deciding finally that country, type and size of hospital captured most of the variation of these factors.

We then debated whether the design should be a matched-pair design or a stratified design. In the former, two hospitals with similar pre-defined characteristics assumed to be correlated with the outcome are paired and then one hospital of the pair is randomly allocated to the intervention, while the other hospital of the pair receives the control. The stratified design is a generalisation of the matched-pair design in which hospitals are grouped into homogeneous groups or strata according to the pre-defined characteristics, and then they are randomly allocated, within each stratum, to the intervention or the control group. The situations in which one or the other design is better or might be a choice, and which situation prevailed in our study, are described in Table 2.

Table 2.

Situations in which the matched-pair design is (or might be) better and those in which the stratified design is (or might be) better (shading indicates the situations prevailing in our study)

| Matched pairs better | Stratified better |
| --- | --- |
| Large variation between pairs with respect to baseline risk | Within-stratum variation small compared to between-stratum variation |
| High matching correlation | Small matching correlation within strata |
| No individual level analysis desired | Analysis at individual level desired (2) (interactions of interventions with age, gender, medical history) |
| Homogeneity of effect can be assumed across pairs | Heterogeneity of effect across strata possible |
| No drop-outs expected | Individual hospitals may drop out |
| Medium number of clusters (20 to 40, so as to have 10–20 well-matched pairs) | Large number of clusters (perhaps >30 or >40 depending on the number of strata) |
| Calculation of ICC (1) needs special assumptions | Calculation of ICC straightforward |

(1) ICC – intracluster correlation coefficient (2) Only as secondary analysis in our study

We decided to use the stratified design because the initial analysis of hospital characteristics (country, type and size of hospital) indicated that the gain in power achieved by matching would not compensate for the loss of power resulting from the reduction in the degrees of freedom. This is because the pairs based on these variables were not very distinct, and pairing was unlikely to balance potentially important baseline factors between arms any better than the stratified design. For example, with regard to the annual number of deliveries, three of the pairs would be 3000–3000, 3344–3600 and 3600–3743. When the pairs are not clearly distinct, as in our case, pairing results in a low matching correlation, which is a measure of the (in)effectiveness of pairing and can be regarded as the standard Pearson correlation computed over the paired clusters. [8]

In addition, we wished to allow for heterogeneity of the effect across strata (intervention by strata interaction) and for the possibility of hospital dropouts. The number of hospitals (40) was reasonably large. Analysis at individual level was not essential, which would have allowed the choice of the matched-pair design, but analysis at cluster level is also compatible with the stratified design.

Finally, the estimation of the intracluster correlation coefficient (ICC) requires an estimate of the between-cluster variance and of the within-cluster variance. In a matched-pair design, the former is completely confounded with the intervention effect and cannot be estimated without assuming no intervention effect or making other special assumptions. In contrast, the estimation of the ICC is straightforward with the stratified design, and we would contribute ICC estimates for our outcomes, which would be useful for future experiments in this field. [9]
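To illustrate how between-cluster and within-cluster variation combine into an ICC estimate, the sketch below uses the familiar one-way analysis of variance estimator. It is an illustration under simplifying assumptions, not the trial's analysis code: it ignores strata and trial arms, the function name is hypothetical, and the input is a plain list of individual outcomes (for example 0/1 for whether a practice was used) for each hospital.

```python
from statistics import mean


def anova_icc(clusters):
    """One-way ANOVA estimator of the intracluster correlation coefficient.

    clusters: list of lists, one inner list of individual outcomes per cluster.
    """
    k = len(clusters)
    sizes = [len(c) for c in clusters]
    n_total = sum(sizes)
    grand_mean = sum(sum(c) for c in clusters) / n_total
    cluster_means = [mean(c) for c in clusters]

    # Between-cluster and within-cluster sums of squares.
    ss_between = sum(m * (cm - grand_mean) ** 2 for m, cm in zip(sizes, cluster_means))
    ss_within = sum((y - cm) ** 2 for c, cm in zip(clusters, cluster_means) for y in c)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)

    # Adjusted mean cluster size, which allows for unequal cluster sizes.
    m0 = (n_total - sum(m * m for m in sizes) / n_total) / (k - 1)

    return (ms_between - ms_within) / (ms_between + (m0 - 1) * ms_within)
```

In a matched-pair design the between-cluster mean square within a pair also contains the intervention effect, which is why this calculation is not available there without further assumptions.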

After these considerations, we decided on a stratification by country, type of hospital and size, with six strata overall, two in Thailand and four in Mexico (Table 1).

Random allocation

The random allocation sequence was produced centrally by WHO in Geneva, assigning hospitals at random in each stratum shown in Table 1 to one of the two arms (intervention or control), in equal numbers. For each stratum, random permutations were produced using a SAS® random number generator, with the starting number taken independently for each stratum. The allocation was concealed until knowledge of the assignment was required operationally to implement the intervention. Thus, country investigators were informed of the allocation status of the hospitals after collection of baseline data, when the first intervention workshop had to be organized.
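The allocation procedure described above (a random permutation within each stratum, with an independent starting number per stratum) can be sketched as follows. The trial used a SAS random number generator; this Python version is only an illustration, the hospital identifiers and seeds are hypothetical, and the handling of strata with an odd number of hospitals is an assumption, since the text specifies only allocation in equal numbers.

```python
import random


def allocate_within_strata(strata, seeds):
    """Randomly allocate hospitals to intervention (I) or control (C) within strata.

    strata: dict mapping stratum label -> list of hospital identifiers.
    seeds:  dict mapping stratum label -> integer seed (independent per stratum).
    """
    allocation = {}
    for stratum, hospitals in strata.items():
        rng = random.Random(seeds[stratum])  # separate generator for each stratum
        order = list(hospitals)
        rng.shuffle(order)  # random permutation of the hospitals in this stratum
        n_intervention = len(order) // 2
        # Assumption: with an odd stratum size, the extra hospital goes to a
        # randomly chosen arm.
        if len(order) % 2 == 1 and rng.random() < 0.5:
            n_intervention += 1
        for position, hospital in enumerate(order):
            allocation[hospital] = "I" if position < n_intervention else "C"
    return allocation


if __name__ == "__main__":
    # Hypothetical hospital identifiers and seeds, for illustration only.
    example_strata = {"stratum_5": [f"TH{i:02d}" for i in range(1, 13)],
                      "stratum_6": [f"TH{i:02d}" for i in range(13, 19)]}
    example_seeds = {"stratum_5": 2001, "stratum_6": 4711}
    print(allocate_within_strata(example_strata, example_seeds))
```

Keeping the resulting list with a central coordinator and releasing it only when the first workshop must be organized corresponds to the concealment step described in the text.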

One hospital was excluded after randomization but before any intervention was conducted due to the maternity ward renovation which reduced the number of deliveries substantially. Another hospital (that happened to belong to another stratum) was included and randomized separately to replace this hospital.

Sample size / power calculation

Since inferences, and therefore the analysis, are at the cluster level, sample size calculations are based on the cluster as the unit. [8]

The total number of units that could be enrolled within our collaborators' reach was between 36 and 40. To calculate the power we used standard formulae with the hospital as the unit of analysis, as indicated above. We calculated that with 40 hospitals we would have 90% power to detect a decrease in the (end-of-study) rate of use of episiotomy from 70% to 50%, or an increase in the (end-of-study) rate of use of corticosteroids from 20% to 40%, in a one-sided significance test at the 5% level of significance. This calculation was based on the assumption that the variation in the prevalence of a practice across hospitals, measured by the standard deviation, is 20%, equal to the minimum difference to detect. This was arbitrary, since no baseline data were available. We used a one-sided significance test because we thought the intervention could only improve the rate of use of practices (in a direction corresponding to the recommendation). Our estimate is conservative because we assumed a completely randomized (cluster) design (without stratification), which is likely to require a larger sample size.
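The text does not specify which standard formulae were used. As a rough check, the sketch below applies a normal approximation for comparing two means with the hospital as the unit, using the stated inputs (difference of 20 percentage points, between-hospital standard deviation of 20 percentage points, one-sided 5% test). The choice of the normal approximation and the function name are assumptions.

```python
from math import sqrt
from statistics import NormalDist


def power_two_arm_cluster_means(delta, sd, clusters_per_arm, alpha=0.05):
    """Approximate power of a one-sided two-sample comparison of cluster-level means.

    delta: difference in mean practice rates to detect (e.g. 0.20).
    sd: standard deviation of the practice rate across hospitals (e.g. 0.20).
    clusters_per_arm: number of hospitals in each arm.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    standard_error = sd * sqrt(2 / clusters_per_arm)
    return NormalDist().cdf(delta / standard_error - z_alpha)


if __name__ == "__main__":
    for hospitals in (36, 40):
        power = power_two_arm_cluster_means(0.20, 0.20, hospitals // 2)
        print(hospitals, round(power, 3))
```

Under these assumptions the approximate power is about 0.91 with 36 hospitals and about 0.93 with 40, which is consistent with the 90% power quoted above. A calculation based on the t distribution would give slightly lower values.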

We planned to estimate the prevalence of a practice in a hospital based on a minimum of 100 women (cluster size). This number would provide a maximum error of estimation of 10% using a 95% confidence interval. For example, we estimated that about 10% of all women admitted to the labour ward would have preterm babies (less than 37 weeks) and thus be eligible for corticosteroid administration. Therefore, we needed to collect data from 1000 women to have adequate precision for the hospital level estimates. For practical reasons we decided to collect data from 1000 women or for six months, whichever was reached first, in each unit.
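The arithmetic behind these figures can be reproduced with the usual normal-approximation confidence interval for a proportion, taking the worst case of a 50% prevalence. This is a minimal sketch; the function names are illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist


def max_margin_of_error(n, confidence=0.95):
    """Largest half-width of a normal-approximation CI for a proportion (at p = 0.5)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * sqrt(0.25 / n)


def women_to_screen(eligible_needed, eligible_fraction):
    """Women to record so that the expected number eligible for a practice is reached."""
    # The small tolerance guards against floating-point noise before rounding up.
    return ceil(eligible_needed / eligible_fraction - 1e-9)


if __name__ == "__main__":
    print(round(max_margin_of_error(100), 3))  # close to the 10% quoted in the text
    print(women_to_screen(100, 0.10))          # 100 eligible women at 10% eligibility
```

For a practice that applies to only about 10% of women, such as corticosteroids before preterm delivery, 1000 women must be recorded to expect 100 eligible women in a hospital.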

Intervention

We planned a multifaceted intervention addressing perceived barriers to evidence-based practice that included: a meeting with hospital directors before the intervention to obtain their consent and support (to ensure organisational buy-in); provision of the RHL, computers and a printer in an accessible part of the labour ward/department (to ensure easy access to the RHL); identification of a hospital RHL coordinator (to help health care workers to use RHL and to maintain links with the research team in case of problems); RHL information materials (poster, short printouts) (to promote awareness among health care workers); and a series of interactive workshops delivered by a specialist (to teach the principles of evidence-based practice and use of the RHL). In complex intervention trials such as this one it is important to identify all components of the intervention as clearly as possible. We wanted to ensure that the intervention would be feasible in routine care settings and agreed that three workshops spread over a period of six months would be the maximum intensity of intervention that could be delivered in a non-research setting (i.e. under normal circumstances). Each workshop had a different focus. Briefly, the first workshop focused on information about the project, WHO's role, evidence-based decision-making and the description of RHL. The second workshop focused on RHL contents and the third on how to implement change.

Once the intervention was developed, the individuals who would deliver it were identified and trained in a workshop. The aim of the workshop was to ensure that the same standard intervention was followed in both countries, in addition to providing training in the workshop techniques. We felt that the workshops needed to be conducted by obstetric consultants who would be respected by staff. The actual implementation was monitored through workshop checklists. In some large hospitals in Mexico the same workshop was repeated over a period of one or two days to reach staff who were on night duty or were not available for one of the workshops. The consultants were instructed to focus on the use of RHL rather than going into the details of one particular topic. Nevertheless, it was expected that specific topics would be discussed, especially in the second workshop, because of the interactive nature of the workshops.

The control group did not receive any intervention. Those hospitals gave consent to participate in the trial and were told that they would receive the same intervention if the trial was successful. The computers and printers will be given to the control hospitals at the end of the trial regardless of the results.

Co-interventions

Evidence-based medicine was becoming more widely known during the planning phase of the trial (1999–2000). We expected that in the study sites there could be initiatives beyond our control that could influence the adoption of practices. In Thailand, health sector reforms and hospital accreditation programmes are being implemented across the country. In Mexico, there is growing recognition of evidence-based medicine and independent initiatives to improve obstetric practices are becoming more common especially in large cities. We hope to record these developments as much as possible and assess their effects in exploratory post hoc analyses.

The global passive dissemination of RHL to existing subscribers continued during the trial period in both countries. We avoided conducting active initiatives such as training workshops, seminars or presentations at the trial sites during the trial period.

Outcomes

Outcome measurements are taken at baseline (pre-intervention) and at the end of the study (post-intervention) to enable baseline performance to be accounted for during the analysis. Collecting baseline data strengthens the overall design of the study by allowing the evaluation of change in practices over time, assessment of a 'ceiling effect' in selected practices and assessing the imbalance of important prognostic variables between groups.

The end-of-study outcome assessments are made at 10–12 months after the first intervention workshop. This period was chosen arbitrarily but based on the argument that following an intervention delivered in a six-month period, a change should be demonstrable within the following 3–6 months to assess the effectiveness of the intervention.

Field workers not involved in the implementation of the trial collected outcome data. The data collection forms were completed in the postnatal wards, mostly from hospital records. The mothers were consulted if information was missing from the records. As of October 2003 all outcome data collection has been completed and data cleaning/entry are ongoing.

Selection of outcomes

The discussion on the choice of outcomes focused on measurement of knowledge, physician practices and health outcomes. If the practices for which increased utilization is important are known to be cost-effective, then it is not necessary to measure health outcomes when evaluating implementation strategies.[11] Measurement of knowledge on the other hand is not satisfactory because it does not relate to change in behaviour, which is the target of the intervention.

We therefore decided to focus on seven practices for which there is unequivocal evidence for benefit (or harm) based on the Cochrane reviews and the commentaries included in RHL No 4 published in 2001 (available when the intervention was ready to start) (Table 3). These practices are heterogeneous and range from change of a drug (e.g. oxytocin for active management of the third stage of labour rather than another agent) to those that require organisational change within the units (e.g. labour companionship by 'doulas'). Therefore, the potential for change is likely to be different for different practices.[11]

Table 3.

Recommended practices in RHL

| Practice | Desired outcome |
| --- | --- |
| 1. External cephalic version at term | ↓ breech delivery, ↓ caesarean section |
| 2. Social support during labour | ↓ caesarean section, ↑ satisfaction with labour |
| 3. Magnesium sulfate for women with eclampsia | ↓ recurrent convulsions |
| 4. Corticosteroids before preterm delivery | ↓ neonatal death, respiratory distress syndrome |
| 5. Selected episiotomy for nulliparous women | ↓ perineal pain postpartum |
| 6. Active management of the third stage of labour | ↓ postpartum haemorrhage |
| 7. Unrestricted breastfeeding on demand | ↑ exclusive breastfeeding |

Analysis plan

The analysis will be based on the hospital as the unit of analysis. All the units that provide information on outcomes will be included in the analysis as randomized. It is anticipated that for some practices the number of events may be too small to give precise estimates even with the sample size described above (e.g. magnesium sulfate for eclampsia).

Means and standard deviations or mean rates of baseline clinical data, as appropriate, will be calculated by country and arm. The change between the baseline practice rate and the rate at 10–12 months (before-after) will be calculated for each hospital. The mean change will be compared between countries and arms using analysis of variance techniques, perhaps after an arcsine, logit or logarithmic transformation of the proportions, as appropriate. The model for analysis of variance will include terms for countries, strata within countries, arm and significant interactions. Adjusted mean changes and 95% confidence intervals will be derived from an appropriate model.
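The hospital-level change scores and the candidate transformations can be sketched as follows. This is only an illustration of the first step of the analysis: it does not fit the full analysis of variance model with country and stratum terms, the function names are hypothetical, and the clipping constant used to keep the logit finite at rates of 0 or 1 is an assumption.

```python
from math import asin, log, sqrt
from statistics import mean


def arcsine_sqrt(p):
    """Arcsine square-root transformation of a proportion."""
    return asin(sqrt(p))


def logit(p, eps=0.005):
    """Logit transformation; p is clipped to [eps, 1 - eps] (an assumption)."""
    p = min(max(p, eps), 1 - eps)
    return log(p / (1 - p))


def mean_change_by_arm(hospitals, transform=lambda p: p):
    """Mean before-after change per arm, with the hospital as the unit.

    hospitals: iterable of (arm, baseline_rate, end_rate), one tuple per hospital.
    transform: function applied to each rate before differencing.
    """
    changes = {}
    for arm, baseline, end in hospitals:
        changes.setdefault(arm, []).append(transform(end) - transform(baseline))
    return {arm: mean(values) for arm, values in changes.items()}


if __name__ == "__main__":
    # Hypothetical episiotomy rates, for illustration only.
    data = [("I", 0.70, 0.50), ("I", 0.60, 0.50), ("C", 0.70, 0.70)]
    print(mean_change_by_arm(data))
    print(mean_change_by_arm(data, transform=arcsine_sqrt))
```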

However, the analysis of change has the limitation that baseline values are likely to be negatively correlated with change because hospitals with low percentages of a given desirable practice at baseline might improve more than hospitals with high percentages. Analysis of covariance allows the adjustment of the mean rates at 10–12 months by baseline rates and other baseline variables and has generally greater power to detect a treatment effect.[12] Therefore analysis of covariance will be used with covariates at the cluster level to obtain adjusted mean practice rates by arm and compare them. Possible confounders to be included in the analysis of covariance are existing clinical practice guidelines, continued medical education programme and the initial reception of the research team by the hospital staff. Interactions of arm by country and arm by strata will be explored, and if found, stratified analyses will be done. The interaction of arm by baseline will be examined to assess the validity of the analysis based on changes from baseline.
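The idea of adjusting end-of-study rates for baseline rates can be illustrated with the simplest form of analysis of covariance: one cluster-level covariate (the baseline rate), two arms and a common slope. The sketch below is a simplified stand-in for the planned analysis, which would also include strata and other covariates; the function name and data layout are assumptions.

```python
from statistics import mean


def ancova_adjusted_difference(intervention, control):
    """Baseline-adjusted difference in mean end-of-study rates between arms.

    Each argument is a list of (baseline_rate, end_rate) pairs, one per hospital.
    Returns (adjusted_difference, pooled_within_arm_slope).
    """
    def centred_sums(pairs):
        x_bar = mean(x for x, _ in pairs)
        y_bar = mean(y for _, y in pairs)
        s_xy = sum((x - x_bar) * (y - y_bar) for x, y in pairs)
        s_xx = sum((x - x_bar) ** 2 for x, _ in pairs)
        return x_bar, y_bar, s_xy, s_xx

    xi, yi, sxy_i, sxx_i = centred_sums(intervention)
    xc, yc, sxy_c, sxx_c = centred_sums(control)

    # Common slope of end-of-study rate on baseline rate, pooled within arms.
    slope = (sxy_i + sxy_c) / (sxx_i + sxx_c)

    # Raw difference in means, corrected for any baseline imbalance between arms.
    adjusted = (yi - yc) - slope * (xi - xc)
    return adjusted, slope
```

The common-slope assumption is what the planned examination of the arm-by-baseline interaction is meant to check; if the slopes differ between arms, a single adjusted difference is no longer an adequate summary.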

The procedure described above will be applied in the analysis of the seven outcomes described in Table 3. We shall declare the intervention effective if statistical significance at the 5% level is demonstrated for the majority (four or more) of these outcomes. However, we also expect that the intervention 'holds at least a clinically relevant numerical edge over the control for those primary endpoints for which demonstration of statistical significance is not achieved.'[13] It is generally accepted that no adjustment for multiple endpoints is necessary under this scenario. [13]

An exploratory analysis will be considered to compare the practices between the group receiving active dissemination and the group with no intervention controlling for individual level covariates.

Nested studies

We conducted a survey of hospital staff to learn their sources of new information, the time they dedicated to reading and their knowledge of evidence-based medicine. We shall conduct interviews with RHL coordinators at the intervention hospitals and repeat the survey at the end of the trial to get more in-depth information about their experiences. We also plan to look at individual hospitals where certain practices may have been adopted to see if we can identify any context specific issues. We wish to capture the dynamics of the workshops and assess the reactions of the staff to the intervention as well as to the tool.

Each computer has a log file to indicate how many times the program is accessed. We shall analyse the log files as a proxy indicator of RHL use in the intervention hospitals, acknowledging that these may not relate directly to change in behaviour.

Ethical approval and consent

The study was approved by the Scientific and Ethical Review Group of the UNDP/UNFPA/WHO/World Bank Special Programme on Research, Development and Research Training in Human Reproduction (HRP) and by the participating institutions. Responsible officers of the hospitals and the administrative bodies (i.e. Ministry of Health) were approached to give consent to participate (consent at randomization level). We thought that it would be ethically permissible for a designated decision-maker to seek and provide such consent on behalf of his or her constituents. [14] We did not obtain consent from patients because the intervention was not delivered to the patients and the data were collected from the records. The women were approached only for missing information.

Discussion

Although there is a significant body of research on practice change, most studies focus on an intervention to improve one or two pre-defined practices. It is intuitively easier to tailor an intervention to change a single practice because the circumstances surrounding that practice can be addressed more clearly. There is little evidence about the effectiveness of strategies to promote the uptake of multiple evidence-based recommendations using resources such as the RHL in either developed or developing country settings. Furthermore, trials of complex dissemination and implementation interventions are much more heterogeneous (in many ways) than biomedical intervention trials and their results are more difficult to generalise. The majority of these trials have been conducted in North American and European settings and it is difficult to extrapolate the findings to developing countries, where there are likely to be differences in health care delivery, continued education models and access to information at public hospitals. The evidence gap for effective implementation strategies in low- and middle-income countries needs to be addressed.

To our knowledge, this is the first time a comprehensive electronic tool has been used as the core of an educational intervention for health care providers in developing countries. A similar randomized controlled trial (RCT) was conducted by Wyatt et al. in the United Kingdom to improve four obstetric practices recommended in the Cochrane Pregnancy & Childbirth database [15]. The intervention comprised a single visit to the senior obstetrician and midwife, together with the Cochrane database disks and an educational video on one of the practices. There was significant uptake of practices in both the intervention and control groups overall. Ventouse delivery increased (compared to the use of forceps) more in the intervention group but other practices did not follow this pattern. The timing of the trial coincided with increased recognition of the Cochrane Pregnancy and Childbirth Group's work in the U.K. The authors attributed the uptake in the control group in part to this increased visibility.

Complex intervention trials are becoming increasingly important as more emphasis is placed on changing professional behaviour. The challenges faced in the conceptualisation and the eventual design of these projects are different from clinical trials. There is a need for a multidisciplinary team working on the study design and the dynamics of implementing these studies. Clinicians are usually not familiar with the science and the process of behaviour change although they are still needed in the tailoring of the intervention according to the practices. The statistical issues are usually more complex and teams with experience in cluster randomized trials are better placed to design and implement such trials. The selection of a stratified rather than matched pair design and data collection at individual level enabling ICC calculation and secondary analyses were decisions that were taken after discussing the pros and cons of each option. The decision to collect individual data meant that a longer data collection period was necessary.

Campbell et al. presented a framework for the development and evaluation of RCTs of complex interventions [16,17]. They describe several phases of development from theory to the implementation of the trial (theory, modelling, exploratory trial, definitive RCT and long-term implementation), stressing that the phases do not necessarily follow one another. Our trial did not follow this framework. The theory and past experience were available through several systematic reviews in the field. We spent considerable time in the modelling stage. This was necessary because we had to develop the regimen (i.e. dose, duration) using judgement. Following the modelling stage we held a workshop on how to deliver the intervention, including role-plays, for the specialists who were to deliver it. Two specialists in Thailand and one in Mexico conducted all workshops. We then proceeded with the definitive trial. We were aware that the intervention would be delivered slightly differently in the two countries, as indicated above, but thought that the two versions were similar enough. There were some spontaneous add-on activities at the centres. For example, in Thailand, to overcome the language barrier, the staff in some hospitals arranged seminars on specific topics in which an English-speaking member of staff translated the documents on a particular topic and presented them in Thai. We think that differential effects can be expected across hospitals. The additional data collected on workshops and the qualitative analyses will shed more light on the dynamics of change in those particular settings.

Health care delivery systems, the availability of continuing medical education programmes and access to evidence-based health care information are likely to differ between many low-income countries and their industrialized counterparts. The other important aspect of this trial is the attempt to equip health workers with the skills to use evidence-based health care information. Provision of a regularly updated tool containing the best evidence, with information on the relevance of the findings to low-income settings and implementation tools, is an integral part of the implementation strategy. Strengthening the evidence base for implementation interventions in developing countries will contribute to improvements in standards of care.

Competing interests

None declared.

Authors' contributions

All authors are members of the Steering Committee of the trial and participated in the conceptualisation and implementation of the trial. All authors read, commented on and approved the manuscript.

Acknowledgements

Irwin Nazareth moderated the workshop for the specialists who delivered the interventions. Richard Johanson and Jeremy Wyatt shared their experiences from the CCPC trial with the research team. Patricio Sanhueza, Narong Winiyakul and Chompilas Chongsomchai delivered the trial intervention. Robert Pattinson contributed to the trial protocol. Jeremy Grimshaw holds a Canada Research Chair in Health Knowledge Transfer and Uptake.

Contributor Information

A Metin Gülmezoglu, Email: gulmezoglum@who.int.

José Villar, Email: villarj@who.int.

Jeremy Grimshaw, Email: jgrimshaw@ohri.ca.

Gilda Piaggio, Email: piaggiog@who.int.

Pisake Lumbiganon, Email: pisake@kku.ac.th.

Ana Langer, Email: alanger@popcouncil.org.mx.

References

- Haynes RB, Sackett DL, Tugwell P. Problems in handling of clinical research evidence by medical practitioners. Arch Intern Med. 1983;143:1971–1975. doi: 10.1001/archinte.143.10.1971.

- Guyatt GH, Rennie D. Users' guides to the medical literature. JAMA. 1993;270:2096–2097. doi: 10.1001/jama.270.17.2096.

- Gulmezoglu AM, Villar J, Carroli G, Hofmeyr J, Langer A, Schulz K, Guidotti R. WHO is producing a reproductive health library for developing countries. BMJ. 1997;314:1695. doi: 10.1136/bmj.314.7095.1695a.

- Freemantle N, Harvey EL, Wolf F, Grimshaw JM, Grilli R, Bero LA. Printed educational materials: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2002. p. CD000172.

- NHS Centre for Reviews and Dissemination. Getting evidence into practice. Effective Health Care. 1999;5:1–16.

- Hulscher ME, Wensing M, van der Weijden T, Grol R. Interventions to implement prevention in primary care. Cochrane Database Syst Rev. 2001. p. CD000362.

- Eccles M, Grimshaw J, Campbell M, Ramsay C. Research designs for studies evaluating the effectiveness of change and improvement strategies. Quality and Safety in Health Care. 2003;12:47–52. doi: 10.1136/qhc.12.1.47.

- Donner A, Klar N. Design and analysis of cluster randomization trials in health research. Hodder & Stoughton Educational, London. 2000.

- Campbell MK, Grimshaw JM. Cluster randomized trials: time for improvement. BMJ. 1998;317:1171–1172. doi: 10.1136/bmj.317.7167.1171.

- Sculpher M. Evaluating the cost-effectiveness of interventions designed to increase the utilization of evidence-based guidelines. Family Practice. 2000;17:S26–S31. doi: 10.1093/fampra/17.suppl_1.S26.

- Grol R, Dalhuijsen J, Thomas S, Veld C, Rutten G, Mokkink H. Attributes of clinical guidelines that influence use of guidelines in general practice: observational study. BMJ. 1998;317:858–861. doi: 10.1136/bmj.317.7162.858.

- Vickers AJ, Altman DG. Analysing controlled trials with baseline and follow up measurements. BMJ. 2001;323:1123–1124. doi: 10.1136/bmj.323.7321.1123.

- Sankoh AJ, D'Agostino RB, Sr, Huque MF. Efficacy endpoint selection and multiplicity adjustment methods in clinical trials with inherent multiple endpoint issues. Statistics in Medicine. 2003;22:3133–3150. doi: 10.1002/sim.1557.

- Donner A. Some aspects of the design and analysis of cluster randomized trials. Applied Statistics. 1998;47:95–113. doi: 10.1111/1467-9876.00100.

- Wyatt J, Paterson-Brown S, Johanson R, Altman DG, Bradburn MJ, Fisk NM. Randomized trial of educational visits to enhance use of systematic reviews in 25 obstetric units. BMJ. 1998;317:1041–1046. doi: 10.1136/bmj.317.7165.1041.

- Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D, Tyrer P. Framework for design and evaluation of complex interventions to improve health. BMJ. 2000;321:694–696. doi: 10.1136/bmj.321.7262.694.

- Medical Research Council. A framework for development and evaluation of RCTs for complex interventions to improve health. http://www.mrc.ac.uk/pdf-mrc_cpr.pdf (date visited: 21 March 2003).